Abstract

In this paper, we consider the problem of fair statistical inference involving outcome variables. Examples include classification and regression problems, and estimating treatment effects in randomized trials or observational data. The issue of fairness arises in such problems where some covariates or treatments are “sensitive,” in the sense of having potential of creating discrimination. In this paper, we argue that the presence of discrimination can be formalized in a sensible way as the presence of an effect of a sensitive covariate on the outcome along certain causal pathways, a view which generalizes (Pearl 2009). A fair outcome model can then be learned by solving a constrained optimization problem. We discuss a number of complications that arise in classical statistical inference due to this view and provide workarounds based on recent work in causal and semi-parametric inference.

Introduction

As statistical and machine learning models become an increasingly ubiquitous part of our lives, policymakers, regulators, and advocates have expressed concerns about the impact of deploying models that encode potentially harmful and discriminatory biases. Unfortunately, data analysis is based on statistical models that do not, by default, encode human intuitions about fairness and bias. For instance, it is well-known that recidivism is predicted at higher rates among certain minorities in the US (Angwin et al. 2016). To what extent are these predictions discriminatory? What is a sensible framework for thinking about these issues? A growing community is now addressing issues of fairness and transparency in data analysis in part by defining, analyzing, and mitigating harmful effects of algorithmic bias from a variety of perspectives and frameworks (Pedreshi, Ruggieri, and Turini 2008; Feldman et al. 2015; Hardt, Price, and Srebro 2016; Kamiran, Zliobaite, and Calders 2013; Corbett-Davies et al. 2017; Jabbari et al. 2016).

In this paper, we propose to model discrimination based on a “sensitive feature,” such as race or gender, with respect to an outcome as the presence of an effect of the feature on the outcome along certain “disallowed” causal pathways. As a simple example, discussed in (Pearl 2009), job applicants’ gender should not directly influence the hiring decision, but may influence the hiring decision indirectly, via secondary applicant characteristics important for the job, and correlated with gender. We argue that this view captures a number of intuitive properties of discrimination, and generalizes existing formal (Pearl 2009; Zhang, Wu, and Wu 2017) and informal proposals (Bertrand and Mullainathan 2004).

The paper is organized as follows. We first fix our notation and give a brief introduction to causal inference and mediation analysis, which will be necessary to formally define our approach to fair inference. We then discuss representative prior work on fair inference, and enumerate issues these methods may run into. Moving forward, we show that fair inference from finite samples under our definition can be viewed as a certain type of constrained optimization problem. We then discuss a number of complications to the basic framework of fair inference. We illustrate our framework via experiments on real datasets in the experimental section followed by additional discussion and final conclusions.

Notation And Preliminaries

Variables will be denoted by uppercase letters, V, values by lowercase letters, v, and sets by bold letters. A state space of a variable will be denoted by 𝔛V. We will represent datasets by 𝒟 = (Y, X), where Y is the outcome and X is the feature vector. We denote by x_j^i and y^i the ith realization of the jth feature Xj ∈ X and of the outcome Y, respectively. Similarly, x^i is the ith realization of the entire feature vector.

In this paper, we consider probabilistic classification and regression problems with a set of features X and an outcome Y, where a feature S ∈ X is sensitive, in the sense that making inferences on the outcome Y based on S carelessly may result in discrimination. There are many examples of S, Y pairs that have this property. These include hiring discrimination (Y is a hiring decision, and S is gender), or recidivism prediction in parole hearings (where Y is a parole decision, and S is race). Our approach readily generalizes to any outcome based inference task, such as establishing causal effects, although we do not consider these generalizations here in the interests of space.

Causal Inference

In causal inference, in addition to the outcome Y, we distinguish a treatment variable A ∈ X, and sometimes also one or more mediator variables M ∈ X, or M ⊆ X. The primary object of interest in causal inference is the potential outcome variable, Y(a) (Neyman 1923), which represents the outcome if, possibly contrary to fact, A were set to value a. Given a, a′ ∈ 𝔛A, comparison of Y(a′) and Y(a) in expectation: 𝔼[Y(a)] − 𝔼[Y(a′)] would allow us to quantify the average causal effect (ACE) of A on Y. In general, the average causal effect is not computed using the conditional expectation 𝔼[Y|A], since association of A and Y may be spurious or only partly causal.

Causal inference uses assumptions in causal models to link observed data with counterfactual contrasts of interest. When these assumptions allow a counterfactual parameter to be expressed as a functional of the observed data distribution, we say the parameter is identified from the observed data under the causal model. One such assumption, known as consistency, states that the mechanism that determines the value of the outcome does not distinguish the method by which the treatment was assigned, as long as the treatment value assigned was invariant. This is expressed as Y(A) = Y. Here Y(A) reads “the random variable Y, had A been intervened on to whatever value A would have naturally attained.”

Another standard assumption is known as conditional ignorability. This assumption states that conditional on a set of factors C ⊆ X, A is independent of any counterfactual outcome, i.e. Y(a) ⫫ A|C, ∀a ∈ 𝔛A, where (. ⫫ .|.) represents conditional independence. Given these assumptions, we can show that p(Y(a)) = ΣC p(Y|a, C)p(C), known as the adjustment formula, the backdoor formula, or stratification. Intuitively, the set C acts as a set of observed confounders, such that adjusting for their influence suffices to remove all non-causal dependence of A and Y, leaving only the part of the dependence that corresponds to the causal effect. A general characterization of identifiable functionals of causal effects exists (Tian and Pearl 2002; Shpitser and Pearl 2008).

Causal Diagrams

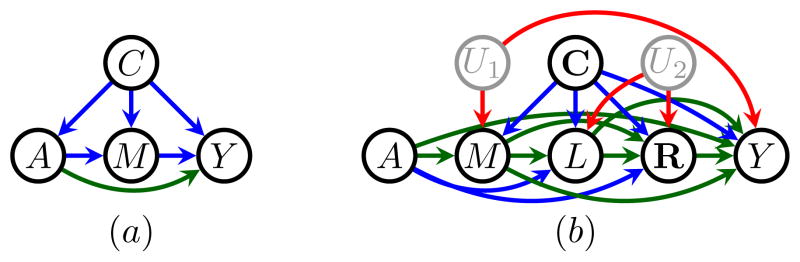

Causal relationships are often represented by graphical causal models (Spirtes, Glymour, and Scheines 2001; Pearl 2009). Such models generalize independence models on directed acyclic graphs, also known as Bayesian networks (Pearl 1988), to also encode conditional independence statements on counterfactual random variables (Richardson and Robins 2013). In such graphs, vertices represent observed random variables, and absence of directed edges represents absence of direct causal relationships. As an example, in Fig. 1(a), C is potentially a direct cause of A, while M mediates a part of the causal influence of A on Y, represented by all directed paths from A to Y.

Figure 1. (a) A causal graph with a single mediator. (b) A causal graph with two mediators, one confounded with the outcome via an unobserved common cause. (c) A causal graph with a single mediator where the natural direct effect is not identified.

Mediation Analysis

A natural step in causal inference is understanding the mechanism by which A influences Y. A simple form of understanding mechanisms is via mediation analysis, where the causal influence of A on Y, as quantified by the ACE, is decomposed into the direct effect, and the indirect effect mediated by a mediator variable M. In typical mediation settings, X is partitioned into a treatment A, a single mediator M, an outcome Y, and a set of baseline factors C = X \ {A, M, Y}.

Mediation is encoded via a counterfactual contrast using a nested potential outcome of the form Y(a, M(a′)), for a, a′ ∈ 𝔛A. Y(a, M(a′)) reads as “the outcome Y if A were set to a, while M were set to whatever value it would have attained had A been set to a′.” An intuitive interpretation for this counterfactual occurs in cases where a treatment can be decomposed into two disjoint parts, one of which acts on Y but not M, and another which acts on M but not Y. For instance, smoking can be decomposed into smoke and nicotine. Then if M is a mediator affected by smoke, but not nicotine (for instance lung cancer), and Y is a composite health outcome, then Y(a, M(a′)) corresponds to the response of Y to an intervention that sets the nicotine exposure (the part of the treatment associated with Y) to what it would be in smokers, and the smoke exposure (the part of the treatment associated with M) to what it would be in non-smokers. An example of such an intervention would be a nicotine patch.

Given Y(a, M(a′)), we define the following effects on the mean difference scale: the natural direct effect (NDE) as 𝔼[Y(a, M(a′))] − 𝔼[Y(a′)], and the natural indirect effect (NIE) as 𝔼[Y(a)] − 𝔼[Y(a, M(a′))] (Robins and Greenland 1992). Intuitively, the NDE compares the mean outcome affected only by the part of the treatment that acts on it, and the mean outcome under placebo treatment. Similarly, the NIE compares the outcome affected by all treatment, and the outcome where the part of the treatment that acts on the mediator is “turned off.” A telescoping sum argument implies that ACE = NDE + NIE.
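The telescoping argument can be written out in one line by adding and subtracting the nested counterfactual mean; the display below uses only the definitions of the ACE, NDE, and NIE given in the text:

```latex
\begin{align*}
\mathrm{ACE} &= \mathbb{E}[Y(a)] - \mathbb{E}[Y(a')] \\
             &= \underbrace{\mathbb{E}[Y(a)] - \mathbb{E}[Y(a, M(a'))]}_{\mathrm{NIE}}
              + \underbrace{\mathbb{E}[Y(a, M(a'))] - \mathbb{E}[Y(a')]}_{\mathrm{NDE}} .
\end{align*}
```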

Aside from consistency, additional assumptions are needed to identify p(Y(a, M(a′))). One such assumption is known as sequential ignorability, and states that conditional on C, counterfactuals Y(a, m) and M(a′) are independent for any a, a′ ∈ 𝔛A, m ∈ 𝔛M. In addition, conditional ignorability for Y acting as the outcome, and A, M acting as a single composite treatment, that is (Y(a, m) ⫫ A, M|C), and conditional ignorability for M acting as the outcome, that is (M(a′) ⫫ A|C), should hold. Under these assumptions, the NDE is identified as the functional known as the mediation formula (Pearl 2011):

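$$\sum_{C, M} \left\{ \mathbb{E}[Y \mid a, M, C] - \mathbb{E}[Y \mid a', M, C] \right\} p(M \mid a', C)\, p(C) \tag{1}$$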

which may be estimated by plug in estimators, or other methods (Tchetgen and Shpitser 2012a).
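As a rough illustration of a plug-in computation, the sketch below evaluates the mediation formula for the NDE, written here as Σ_{c,m} {𝔼[Y|a, m, c] − 𝔼[Y|a′, m, c]} p(m|a′, c) p(c), together with the matching expression for the NIE, on a small discrete model. The function names, the binary coding of A, M, and C, and every number in the toy model are assumptions made for this example only; in practice the conditional models would be estimated from data.

```python
def p_m(m, a, c, p_m1):
    """p(M = m | A = a, C = c) for a binary mediator."""
    return p_m1[(a, c)] if m == 1 else 1.0 - p_m1[(a, c)]


def nde(a, a0, p_c, p_m1, mean_y):
    """Sum over c, m of {E[Y|a,m,c] - E[Y|a0,m,c]} p(m|a0,c) p(c)."""
    return sum(
        (mean_y[(a, m, c)] - mean_y[(a0, m, c)]) * p_m(m, a0, c, p_m1) * pc
        for c, pc in p_c.items()
        for m in (0, 1)
    )


def nie(a, a0, p_c, p_m1, mean_y):
    """Sum over c, m of E[Y|a,m,c] {p(m|a,c) - p(m|a0,c)} p(c)."""
    return sum(
        mean_y[(a, m, c)] * (p_m(m, a, c, p_m1) - p_m(m, a0, c, p_m1)) * pc
        for c, pc in p_c.items()
        for m in (0, 1)
    )


# Hypothetical toy model with binary A, M, C (all numbers are illustrative).
p_c = {0: 0.6, 1: 0.4}                                        # p(C = c)
p_m1 = {(0, 0): 0.2, (1, 0): 0.7, (0, 1): 0.3, (1, 1): 0.8}   # p(M = 1 | a, c)
mean_y = {
    (a, m, c): 1.0 + 2.0 * a + 3.0 * m + 0.5 * c              # E[Y | a, m, c]
    for a in (0, 1) for m in (0, 1) for c in (0, 1)
}

direct = nde(1, 0, p_c, p_m1, mean_y)     # contribution of A -> Y
indirect = nie(1, 0, p_c, p_m1, mean_y)   # contribution of A -> M -> Y
print(direct, indirect, direct + indirect)
```

In this toy model the outcome mean is linear with no interaction, so the direct effect equals the coefficient of A, and the two effects add up to the ACE, as the telescoping argument requires.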

Path-Specific Effects

In general, we may be interested in decomposing the ACE into effects along particular causal pathways. For example in Fig. 1(b), we may wish to decompose the effect of A on Y into the contribution of the path A → W → Y, and the path bundle A → Y and A → M → W → Y. Effects along paths, such as an effect along the path A → W → Y, are known as path-specific effects (Pearl 2001). Just as the NDE and NIE, path-specific effects (PSEs) can be formulated as nested counterfactuals (Shpitser 2013). The general idea is that along the pathways of interest, variables behave as if the treatment variable A were set to the “active value” a, and along other pathways, variables behave as if the treatment variable A were set to the “baseline value” a′, thus “turning the treatment off.” Using this scheme, the path-specific effect of A on Y along the path A → W → Y, on the mean difference scale, can be formulated as

$$\mathbb{E}[Y(a', W(M(a'), a), M(a'))] - \mathbb{E}[Y(a')] \tag{2}$$

Under a more complex set of assumptions found in (Shpitser 2013), the counterfactual mean 𝔼[Y(a′, W(M(a′), a), M(a′))] is identified via the edge g-formula (Shpitser and Tchetgen 2015):

$$\sum_{C, M, W} \mathbb{E}[Y \mid a', W, M, C]\, p(W \mid a, M, C)\, p(M \mid a', C)\, p(C) \tag{3}$$

and may be estimated by plug-in estimators. PSEs such as (2) have been used in the context of observational studies of HIV patients for assessing the role of adherence in determining viral failure outcomes (Miles et al. 2017).

Formalizing Discrimination And Prior Approaches To Fair Inference

We are now ready to discuss prior approaches to fair inference. In discussing the extent to which a particular approach is “fair,” we believe the gold standard is human intuition. That is, we consider an approach inappropriate if it leads to counter-intuitive conclusions in examples. For space reasons, we restrict attention to a representative subset of approaches.

A common class of approaches for fair inference is to quantify fairness via an associative (rather than causal) relationship between the sensitive feature S and the outcome Y. For instance, (Feldman et al. 2015) adopted the 80% rule for comparing selection rates based on sensitive features. This is a guideline (not a legal test) advocated by the Equal Employment Opportunity Commission (EEOC 1979) as a way of suggesting possible discrimination. Rate of selection here is defined as the conditional probability of selection given the sensitive feature, or p(Y|S). (Feldman et al. 2015) proposed methods for removing disparities based on this rule via a link to classification accuracy. A White House report on “equal opportunity by design” (Executive Office of the President May 2016) prompted (Hardt, Price, and Srebro 2016) to propose a fairness criterion, called equalized odds, that ensures that true and false positive rates are equal across all groups. This criterion is also associative.

The issue with these approaches is they do not give intuitive results in cases where the sensitive feature is not randomly assigned (as gender is at conception), but instead exhibits spurious correlations with the outcome via another, possibly unobserved, feature. We illustrate the difficulty with the following hypothetical example. Certain states in the US prohibit discrimination based on past conviction history. Prior convictions are influenced by other variables, such as gender (men have more prior convictions than women, on average). Consider a hypothetical dataset (consisting mostly of people with prior convictions) with two features – prior conviction (C) and gender (G) as well as the hiring outcome (H). The values are coded as follows: male is 1, female is 0, prior conviction and hiring are 1, lack of prior conviction and no hiring is 0. Assume the dataset is drawn from the joint density specified as follows: p(G = 1) = 0.5, and

| G | C | p(H=1 \| G, C) |
|---|---|---|
| 1 | 1 | 0.06 |
| 0 | 1 | 0.01 |
| 1 | 0 | 0.2 |
| 0 | 0 | 0.05 |

| G | p(C=1 \| G) |
|---|---|
| 1 | 0.99 |
| 0 | 0.01 |

That is, gender is randomly assigned at birth, the people in the cohort are very likely to have prior convictions (with men having more), and p(H|C, G) specifies a certain hiring rule for the cohort. For simplicity, we assume no other features of people in the cohort are relevant for either the prior conviction or the hiring decision. It is easy to show that p(H = 1|C = 1) ≈ 0.06 and p(H = 1|C = 0) ≈ 0.05, so the conditional hiring rates are nearly equal across conviction status.

However, intuitively we would consider a hiring rule in this example fair if, in a hypothetical randomized trial that assigned convictions randomly (conviction to the case group, no conviction to the control group), the rule would yield equal hiring probabilities to cases and controls. In our example, this implies comparing counterfactual probabilities p(H(C = 1)) and p(H(C = 0)). Since we posited no other relevant features for assigning C and H than G, these probabilities are identified, via the adjustment formula described earlier, yielding p(H(C = 1)) = 0.035, and p(H(C = 0)) = 0.125. That is, any method relying on associative measures of discrimination will likely conclude no discrimination here, yet the intuitively compelling test of discrimination will reveal a strong preference for hiring people without prior convictions. The large difference between p(H(C = 0)) and p(H|C = 0) has to do with extreme probabilities p(C|G) in our example. Even in less extreme examples, any approach that relies on associative measures of discrimination will be led astray due to failing to properly model sources of confounding for the relationship of the sensitive feature and the outcome. One might imagine that a simple repair in this example would be to also include G as a feature. The reason this does not work in general is that not all features are possible to measure, and in general counterfactual probabilities are complex functions of the observed data, not just conditional densities (Shpitser and Pearl 2006).
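The numbers in this example can be checked with a few lines of arithmetic. The sketch below assumes the table is read as p(C = 1|G = 1) = 0.99 and p(C = 1|G = 0) = 0.01, and contrasts the associational quantity p(H = 1|C = c) with the adjusted quantity p(H(C = c) = 1) = Σ_g p(H = 1|g, c) p(g); the function names are chosen for illustration only.

```python
# Joint density of the hypothetical hiring example (values taken from the text).
p_g = {1: 0.5, 0: 0.5}                      # p(G = g)
p_c1_given_g = {1: 0.99, 0: 0.01}           # p(C = 1 | G = g), assumed reading of the table
p_h1 = {(1, 1): 0.06, (0, 1): 0.01,         # p(H = 1 | G = g, C = c), keyed by (g, c)
        (1, 0): 0.20, (0, 0): 0.05}


def p_c_given_g(c, g):
    """p(C = c | G = g) for binary C."""
    return p_c1_given_g[g] if c == 1 else 1.0 - p_c1_given_g[g]


def hire_rate_observed(c):
    """Associational rate p(H = 1 | C = c), averaging over p(G | C = c)."""
    numerator = sum(p_h1[(g, c)] * p_c_given_g(c, g) * p_g[g] for g in p_g)
    denominator = sum(p_c_given_g(c, g) * p_g[g] for g in p_g)
    return numerator / denominator


def hire_rate_intervened(c):
    """Adjustment formula p(H(C = c) = 1), averaging over the marginal p(G)."""
    return sum(p_h1[(g, c)] * p_g[g] for g in p_g)


for c in (1, 0):
    print(c, round(hire_rate_observed(c), 4), round(hire_rate_intervened(c), 4))
```

The observed rates differ by less than one percentage point, while the adjusted rates differ by a factor of more than three, which is the gap between the associative and the causal reading described above.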

In the example above, it made intuitive sense to think of discrimination as a causal relationship between the sensitive feature and outcome. In other examples, discrimination intuitively entails only a part of the causal relationship. Consider a modification of the hiring example above where potential discrimination is with respect to gender (a variable randomized at conception, which means worries about confounding are no longer relevant). As before, consider binary variables G and H for gender and hiring, and an additional vector C, representing applicant characteristics relevant for the job, of the kind that would appear on the resume. The intuition here is it is legitimate to consider job characteristics in making hiring decisions even if those characteristics are correlated with gender. However, it is not legitimate to consider gender directly. This intuition underscores resume “name-swapping” experiments where identical resumes are sent for review with names switched from a Caucasian sounding name to an African-American sounding name (Bertrand and Mullainathan 2004). In such experiments, name serves as a proxy for race as a direct determinant of the hiring decision.

The definition of discrimination as related to causal pathways is further supported in the legal literature. The following definition of employment discrimination, which appeared in the legal literature (7th Circuit Court 1996), and was cited by (Pearl 2009), makes clear the counterfactual nature of our intuitive conception of discrimination:

The central question in any employment-discrimination case is whether the employer would have taken the same action had the employee been of a different race (age, sex, religion, national origin etc.) and everything else had been the same.

The counterfactual “had the employee been of a different gender” phrase entails considering, for women, the outcome Y had gender been male G = 1, while the “everything else had been the same” phrase entails considering job characteristics under the original gender G = 0. The resulting counterfactual Y(G = 1, C(G = 0)) is precisely the one used in mediation analysis to define natural direct effects.

It is possible to construct examples, discussed further, where some causal paths from a sensitive variable to the outcome are intuitively discriminatory, and others are not. Thus, our view is that discrimination ought to be formalized as the presence of certain path-specific effects. The specific paths which correspond to discrimination are a domain specific issue. For example, physical fitness tests may be appropriate to administer for certain physically demanding jobs, such as construction, but not for white collar jobs, such as accounting. As a result, a path from gender to the result of a test to a hiring decision may or may not be discriminatory, depending on the nature of the job.

Existing work considered similar proposals. Prior work closest to ours appears in (Zhang, Wu, and Wu 2017), where discrimination was also linked to path-specific effects. While we agree with the link, we disagree on three essential points. First, the authors do not appear to do any statistical inference and operate directly on discrete densities. In high dimensional settings, where most practical outcome based inference takes place, statistical modeling becomes necessary, and an approach that avoids it will not scale. In this paper we show how removing certain path-specific effects corresponds to a constrained inference problem on statistical models. As we show later, the constrained optimization problems that arise are non-trivial. Second, the authors propose an ad hoc repair in cases where the path-specific effect is not identifiable. We believe this is a misunderstanding of the concept of non-identifiability. If discrimination is indeed linked to a path-specific effect and this effect is not identifiable (not a function of the observed data), then the problem of removing discrimination is not solvable without more assumptions. In domains such as recidivism prediction, failure today and a better method tomorrow is preferable to an improper correction that preserves discriminatory practice. We discuss more principled approaches to repairing lack of identifiability of discriminatory path-specific effects in later sections. Finally, the authors, while repairing the observed data distribution to be fair, do not modify new instances to be classified in any way. Since new instances are, by definition, drawn from the observed data distribution, which is “unfair,” no guarantees about discrimination when classifying new samples can be made. We discuss this issue further below.

Inference On Outcomes That Minimizes Discriminatory Path-Specific Effects

We now describe our proposal precisely. Assume we are interested in making inferences on outcomes given a joint distribution p(Y, X) either modeled fully via a generative model, or partly via a discriminative model p(Y, X\W|W). In addition, we assume that p(Y, X) is induced by a causal model in the sense of (Pearl 2009; Spirtes, Glymour, and Scheines 2001), that the presence of discrimination based on some sensitive feature A with respect to Y is represented by a PSE, and that this PSE is identified given the causal model as a functional f(p(Y, X)). Finally, we fix upper and lower bounds εl, εu on the PSE, representing the degree of discrimination we are willing to tolerate.

Our proposal is to transform the inference problem on p(Y, X) into an inference problem on another distribution p*(Y, X) which is close, in the Kullback-Leibler (KL) divergence sense, to p(Y, X) while also having the property that the PSE lies within (εl, εu). In some sense, p* represents a hypothetical “fair world” where discrimination is reduced, while p represents our world, where discrimination is present. A special case most relevant in practice is when εl = εu is set to values that remove the PSE entirely. We consider the more general case of bounding the PSE by εl, εu to link with earlier work in (Zhang, Wu, and Wu 2017), and for mathematical convenience. In our framework, any function of p of interest that we wish to make fair, such as the ACE or 𝔼[Y|X], is to be computed from p* instead. That is, just as causal inference is interested in hypothetical worlds representing randomized trials, so is fair inference interested in hypothetical worlds representing fair situations. And just as in causal inference, where it was important to only make inference in the hypothetical world of interest, it is important to make inferences only in the fair world, especially given new instances. This is because new instances are likely drawn from the observed data distribution p, not from the hypothetical “fair distribution” p*. Since p does not ensure discrimination is removed, any guarantees on discrimination removal made in p* will not translate to draws from p for reasons similar to ones described in the covariate shift literature in machine learning – the distribution of the new instance is not the right distribution. We thus map any new instance xi to a sensible version of it that is drawn from p*.

A number of approaches for doing this mapping are possible. In this paper we propose perhaps the simplest conservative approach. That is, we will consider only generative models for p(Y, X \ W|W), and map to “fair” versions of this distribution p*(Y, X \ W|W). This ensures that p*(W) = p(W), and thus the values of W in a new instance can be viewed as drawn from p*(W), that is, from the “fair world.” Since there is no unique way of specifying what values of X \ W the “fair version” of the xi instance would attain, we simply average over these possible values using p*. This amounts to predicting Y using 𝔼*[Y|W = wi], where wi denotes the values of W in xi and the expectation is taken with respect to p*(Y|W).

The choice of variables to include in W, which governs the degree to which our model resembles a generative or a discriminative model is not obvious. More discriminative models with larger W sets allow the use of larger parts of new instances for classification, which yields more information on the outcome. On the other hand, more generative models with smaller W sets will be KL-closer to the true model. To see this, consider two models, one which eliminates the discriminatory PSE by only constraining 𝔼[Y|A, M, C], and one which eliminates the same PSE by constraining both 𝔼[Y|A, M, C] and p(M|A, C). It is clear that the second model will be at least as KL-close to the true model p(Y, X) as the first, and likely closer in general. In this paper we propose a simple approach for choosing W, based on the form of the estimator of the PSE, described further below. We leave the investigation of more principled approaches for selecting W to future work.

A feature of our proposal is that we selectively ignore some known information about a new instance xi, if this information was drawn from a distribution that differs from p*. We believe this is unavoidable in fair inference settings: the entire point is that using the information “as effectively as possible” is discriminatory. We do want to use information as well as possible, but only insofar as we remain in the “fair world”.

Fair Inference From Finite Samples

Given a set of finite samples 𝒟 drawn from p(Y, X), a PSE representing discrimination identified as f(p(Y, X)), a (possibly conditional) likelihood function ℒY,X (𝒟; α) parameterized by α, an estimator g(𝒟) of the PSE, and εl, εu, we approximate p* by solving a constrained maximum likelihood problem

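$$\hat{\alpha} = \arg\max_{\alpha}\ \mathcal{L}_{Y, \mathbf{X}}(\mathcal{D}; \alpha) \quad \text{subject to} \quad \epsilon_l \le g(\mathcal{D}) \le \epsilon_u \tag{4}$$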

Our choice for the set W will be guided by the form of the estimator g(.). Specifically W will contain variables with models not a part of g(.). Since the estimators for the PSE developed within the causal inference literature do not model the baseline factors C, C ⊆ W. In addition, certain estimators also do not use other parts of the model. For example, (5) below does not use p(A|C). For such estimators we also include those variables in W.

We now illustrate the relationship between the choice of W and the choice of g by considering three of the four consistent estimators of the NDE (assuming the model shown in Fig. 1(a) is correct) presented in (Tchetgen and Shpitser 2012b). The first estimator is the MLE plug-in estimator for (1), given by

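$$\frac{1}{N} \sum_{i=1}^{N} \sum_{M} \left\{ \mathbb{E}[Y \mid a, M, c^i] - \mathbb{E}[Y \mid a', M, c^i] \right\} p(M \mid a', c^i) \tag{5}$$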

Since solving (4) using (5) entails constraining 𝔼[Y|A, M, C] and p(M|A, C), classifying a new point entails using 𝔼̃[Y|A, C] = ΣM 𝔼̃[Y|A, M, C]p̃(M|A, C), where 𝔼̃ and p̃ represent constrained models.

The second estimator uses all three models, as follows:

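$$\frac{1}{N} \sum_{i=1}^{N} \left[ \frac{\mathbb{I}(a^i = a)\, p(m^i \mid a', c^i)}{p(a \mid c^i)\, p(m^i \mid a, c^i)} \left\{ y^i - \mathbb{E}[Y \mid a, m^i, c^i] \right\} + \frac{\mathbb{I}(a^i = a')}{p(a' \mid c^i)} \left\{ \mathbb{E}[Y \mid a, m^i, c^i] - \eta(a, a', c^i) \right\} + \eta(a, a', c^i) \right] - \frac{1}{N} \sum_{i=1}^{N} \left[ \frac{\mathbb{I}(a^i = a')}{p(a' \mid c^i)} \left\{ y^i - \eta(a', a', c^i) \right\} + \eta(a', a', c^i) \right] \tag{6}$$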

with η(a, a′, c) ≡ Σm 𝔼[Y|a, m, c] p(m|a′, c). Since the models of A, M, and Y are all constrained with this estimator, predicting Y for a new instance is via 𝔼̃[Y|C]. We discuss the advantages of this estimator in the next section.

The final estimator is based on inverse probability weighting (IPW). The IPW estimator uses the A and M models to estimate the NDE. We can fit the models p(A|C) and p(M|A, C) by MLE, and use the following weighted empirical average as our estimate of the NDE:

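$$\frac{1}{N} \sum_{i=1}^{N} \left\{ \frac{\mathbb{I}(a^i = a)}{p(a \mid c^i)} \cdot \frac{p(m^i \mid a', c^i)}{p(m^i \mid a, c^i)} - \frac{\mathbb{I}(a^i = a')}{p(a' \mid c^i)} \right\} y^i \tag{7}$$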

Since solving the constrained MLE problem using this estimator entails only restricting parameters of A and M models, predicting a new instance ai, mi, ci is done using

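$$\tilde{\mathbb{E}}[Y \mid c^i] = \sum_{A, M} \mathbb{E}[Y \mid A, M, c^i]\, \tilde{p}(M \mid A, c^i)\, \tilde{p}(A \mid c^i) \tag{8}$$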
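To make the weighting scheme concrete, the following sketch computes an IPW estimate of the NDE in its standard form: units with A = a are weighted by p(m|a′, c) / {p(a|c) p(m|a, c)}, units with A = a′ are weighted by 1 / p(a′|c), and the two weighted averages of Y are subtracted. This form is an assumption of the example rather than a transcription of the estimator used in the experiments, and the A and M models are passed in as known functions instead of being fitted by MLE. All names and numbers are hypothetical.

```python
def ipw_nde(rows, p_a, p_m, a=1, a0=0):
    """IPW estimate of E[Y(a, M(a0))] - E[Y(a0)].

    rows: list of (c, a_obs, m_obs, y_obs) tuples
    p_a:  function (a, c) -> p(A = a | C = c)
    p_m:  function (m, a, c) -> p(M = m | A = a, C = c)
    """
    total = 0.0
    for c, a_obs, m_obs, y_obs in rows:
        weight = 0.0
        if a_obs == a:
            # Reweight treated units so their mediator looks as if A = a0.
            weight += p_m(m_obs, a0, c) / (p_a(a, c) * p_m(m_obs, a, c))
        if a_obs == a0:
            # Standard inverse propensity weight for the baseline arm.
            weight -= 1.0 / p_a(a0, c)
        total += weight * y_obs
    return total / len(rows)


# Hypothetical known models for a binary treatment and a binary mediator.
def p_a(a, c):
    p1 = 0.25 + 0.5 * c
    return p1 if a == 1 else 1.0 - p1


def p_m(m, a, c):
    p1 = 0.25 + 0.5 * a
    return p1 if m == 1 else 1.0 - p1


# Idealized sample of 32 rows whose empirical frequencies match
# p(C) p(A | C) p(M | A, C) with p(C = 1) = 0.5; each outcome is set to its
# conditional mean 1 + 2a + 3m + 0.5c so that the check is deterministic.
rows = []
for c in (0, 1):
    for a_val in (0, 1):
        for m_val in (0, 1):
            count = round(32 * 0.5 * p_a(a_val, c) * p_m(m_val, a_val, c))
            y_val = 1.0 + 2.0 * a_val + 3.0 * m_val + 0.5 * c
            rows.extend([(c, a_val, m_val, y_val)] * count)

estimate = ipw_nde(rows, p_a, p_m)
print(estimate)
```

Because the estimate depends on the data only through the A and M models and the observed outcomes, constraining it restricts the parameters of those two models and leaves the outcome model free.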

A sensitive feature may affect the outcome through multiple paths, and paths other than the single-edge path corresponding to the direct effect may be inadmissible. Consider an example where A is gender, and Y is a hiring decision in a construction labor agency. We now consider two mediators of A, the number of children M, and physical strength as measured by an entrance test W. In this setting, it seems inappropriate for the applicant’s gender A to directly influence the hiring decision Y, and equally inappropriate for the number of the applicant’s children to influence the hiring decision, since the consensus is that women should not be penalized in their career for the biological necessity of having to bear children in the family. However, gender also likely influences the applicant’s performance on the entrance test, and imposing certain requirements of strength and fitness is reasonable in a job like construction. The situation is represented by Fig. 1(b), with a hidden common cause of M and Y added since it does not influence the subsequent analysis.

In this case, the PSE that must be minimized for the purposes of making the hiring decision is given by (2), and is identified, given a causal model in Fig. 1(b), by (3). If we use the analogue of (5), we would maximize ℒ(𝒟; α) subject to

| (9) |

being within (εl, εu). This would entail classifying new instances ai, wi, mi, ci using 𝔼̃[Y|A, C]. Our proposal can be generalized in this way to any setting where an identifiable PSE represents discrimination, using the complete theory of identification of PSEs (Shpitser 2013), and plug-in MLE estimators that generalize (9). We consider approaches for non-identifiable PSEs in one of the following sections.

Fair Inference Via Box Constraints

Generally, the optimization problem in (4) involves complex non-linear constraints on the parameter space. However, in certain cases the optimization problem is significantly simpler, and involves box constraints on individual parameters of the likelihood. In such cases, standard optimization software such as the optim function in the R programming language can be used to directly solve (4). We describe two such cases here. First, if we assume a linear regression model for the outcome Y = w0 + waA + wmM + wcC in the causal graph of Fig. 1(a), then the NDE on the mean difference scale, 𝔼[Y(1, M(0))] − 𝔼[Y(0, M(0))], is equal to wa, and (4) simplifies to a box constraint on wa. Note that setting εl = εu = 0 in this case corresponds to simply dropping A from the outcome regression.
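The linear case can be sketched without any optimization library. The example below assumes Gaussian errors, so that maximizing the likelihood of the outcome model reduces to least squares, and uses the fact that the squared-error objective profiled over the other coefficients is a convex quadratic in wa: the constrained optimum is the unconstrained estimate clipped to [εl, εu], followed by a refit of the remaining coefficients. The helper names and the toy data are hypothetical.

```python
def solve_linear_system(matrix, rhs):
    """Solve matrix @ x = rhs by Gaussian elimination with partial pivoting."""
    n = len(rhs)
    aug = [row[:] + [b] for row, b in zip(matrix, rhs)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for k in range(col, n + 1):
                aug[r][k] -= factor * aug[col][k]
    x = [0.0] * n
    for i in reversed(range(n)):
        tail = sum(aug[i][k] * x[k] for k in range(i + 1, n))
        x[i] = (aug[i][n] - tail) / aug[i][i]
    return x


def least_squares(design, y):
    """Ordinary least squares via the normal equations X'X w = X'y."""
    p = len(design[0])
    xtx = [[sum(r[i] * r[j] for r in design) for j in range(p)] for i in range(p)]
    xty = [sum(r[i] * yi for r, yi in zip(design, y)) for i in range(p)]
    return solve_linear_system(xtx, xty)


def fit_with_box_on_wa(a, m, c, y, eps_l, eps_u):
    """Fit Y = w0 + wa*A + wm*M + wc*C subject to eps_l <= wa <= eps_u."""
    full = [[1.0, ai, mi, ci] for ai, mi, ci in zip(a, m, c)]
    w0, wa, wm, wc = least_squares(full, y)
    if eps_l <= wa <= eps_u:
        return w0, wa, wm, wc
    # Clip wa to the nearest bound, then refit the other coefficients.
    wa = min(max(wa, eps_l), eps_u)
    reduced = [[1.0, mi, ci] for mi, ci in zip(m, c)]
    residual = [yi - wa * ai for yi, ai in zip(y, a)]
    w0, wm, wc = least_squares(reduced, residual)
    return w0, wa, wm, wc


# Hypothetical noise-free data on a full 2x2x2 design, with true wa = 2.
a = [0.0, 1.0, 0.0, 1.0, 0.0, 1.0, 0.0, 1.0]
m = [0.0, 0.0, 1.0, 1.0, 0.0, 1.0, 1.0, 0.0]
c = [0.0, 0.0, 0.0, 0.0, 1.0, 1.0, 1.0, 1.0]
y = [1.0 + 2.0 * ai + 3.0 * mi + 0.5 * ci for ai, mi, ci in zip(a, m, c)]

unconstrained = fit_with_box_on_wa(a, m, c, y, -10.0, 10.0)
fair = fit_with_box_on_wa(a, m, c, y, 0.0, 0.0)
print(unconstrained, fair)
```

With εl = εu = 0 the refit step is exactly a regression of Y on M and C alone, which matches the remark that the constraint amounts to dropping A from the outcome regression.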

Second, consider a setting with a binary outcome Y specified with a logistic model, logit(P(Y = 1|A, M, C)) = θ0 + θaA + θmM + θcC, a continuous mediator specified with a linear model, and the NDE (within a given level of C) defined on the odds ratio scale as in (VanderWeele and Vansteelandt 2010):

$$\mathrm{NDE} = \frac{p(Y(1, M(0)) = 1 \mid C)\, /\, p(Y(1, M(0)) = 0 \mid C)}{p(Y(0, M(0)) = 1 \mid C)\, /\, p(Y(0, M(0)) = 0 \mid C)}$$

In this setting, it was shown in (VanderWeele and Vansteelandt 2010) that under certain additional assumptions, the NDE has no dependencies on C, and is approximately equal to exp(θa). Hence, (4) can be expressed using box constraints on θa. Note that the null hypothesis of the absence of discrimination corresponds to the value of 1 of the NDE on the odds ratio scale, and to 0 on the mean difference scale.

Fair Inference With A Regularized Outcome Model

In many applications, the goal of inference is not to approximate the true model itself, but to maximize out of sample prediction performance regardless of what the true model might be. Validation datasets or resampling approaches can be used to assess the performance of such predictive models, with various regularization methods used to make the tradeoff between bias and variance. The difficulty here is that searching for a model Y with good out of sample prediction performance implies the true Y model might be sufficiently complex that it may not lie within the model we consider. This means we cannot use any estimator of the PSE that relies on the Y model. This is because most estimators that rely on the Y model are not consistent if the Y model is misspecified. An inconsistent estimator of the PSE implies we cannot be sure solving the constrained optimization problem will indeed remove discrimination.

The key approach for addressing this is to use estimators that do not rely on the Y model. For the special case of the NDE, one such estimator is the IPW estimator, described earlier and in (Tchetgen and Shpitser 2012b). Another is the estimator in (6), which was shown to be triply robust in (Tchetgen and Shpitser 2012b), meaning it remains consistent in the union model where any two of the three models (of Y, M and A) are specified correctly. With either estimator, predicting a new instance ai, mi, ci entails using 𝔼̃[Y|C]. Since A and M models are assumed to be known, we regularize the model 𝔼[Y|A, M, C] to maximize out of sample predictive performance using 𝔼̃[Y|C]. Using these estimators ensures any regularization of the Y model does not influence the estimate of the NDE, meaning that discrimination remains minimized. We give an example analysis illustrating this approach in the experiment section.

Fairness In Computational Bayesian Methods

Methods for fair inference described so far are fundamentally frequentist in character, in the sense that they assume a particular true parameter value, and constrain parameter fitting so that an estimate of this parameter lies within specified bounds. Here, we do not extend our approach to a fully Bayesian setting, where we would update distributions over causal parameters based on data, and use the resulting posterior distributions for constraining inferences. Instead, we consider how Bayesian methods for estimating conditional densities can be adapted, as a computational tool, to our frequentist approach.

Many Bayesian methods do not compute a posterior distribution explicitly, but instead sample the posterior using Markov chain Monte Carlo approaches (Metropolis et al. 1953). These sampling methods can be used to compute any function of the posterior distribution, including conditional expectations, and can be modified to obey constraints in our problem in a straightforward way. As an example, we consider BART, a popular Bayesian random forest method described in (Chipman, George, and McCulloch 2010). This method constructs a distribution over a forest of regression trees, with a prior that favors small trees, and samples the posterior using a variant of Gibbs sampling, where a new tree is chosen while all others are held fixed. A well known result (Gelfand, Smith, and Lee 1992) states that a Gibbs sampler will generate samples from a constrained posterior directly if it rejects all draws that violate the constraint.
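The rejection idea can be sketched on a toy target rather than on BART itself. The example below runs a Gibbs sampler for a standard bivariate normal with correlation rho and redraws each coordinate from its full conditional until the constraint x1 + x2 ≤ 1 holds. The target distribution, the constraint, and all names are illustrative assumptions and do not reproduce the modified BayesTree code.

```python
import math
import random


def constrained_gibbs(n_draws, rho=0.6, bound=1.0, seed=0):
    """Gibbs sampler for a bivariate normal restricted to x1 + x2 <= bound.

    Each coordinate is drawn from its unconstrained full conditional,
    N(rho * other, 1 - rho**2), and the draw is rejected and repeated
    whenever the resulting state would violate the constraint.
    """
    rng = random.Random(seed)
    sd = math.sqrt(1.0 - rho ** 2)
    x1, x2 = 0.0, 0.0  # feasible starting point for the default bound
    draws = []
    for _ in range(n_draws):
        while True:
            cand = rng.gauss(rho * x2, sd)
            if cand + x2 <= bound:
                x1 = cand
                break
        while True:
            cand = rng.gauss(rho * x1, sd)
            if x1 + cand <= bound:
                x2 = cand
                break
        draws.append((x1, x2))
    return draws


samples = constrained_gibbs(5000)
print("max of x1 + x2:", max(a + b for a, b in samples))
print("mean of x1:", sum(a for a, _ in samples) / len(samples))
```

In the fairness setting the constraint would be a bound on the estimated NDE rather than a linear inequality, so each proposed update would be checked by recomputing that estimate.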

We implemented this simple method by modifying BayesTree, an R package with a C++ backend, and applied the result to the model in Fig. 2(a). The NDE was estimated via a mixed estimation strategy described in (Tchetgen and Shpitser 2012b): A was assumed to be randomly assigned (i.e. no modeling, and hence no constraining, of A was required), and the Y model was fit using constrained BART. The experiment using the resulting constrained outcome model is described in the experimental section.

Figure 2.

Causal graphs for (a) the COMPAS dataset, and (b) the Adult dataset.

Dealing With Non-Identification of the PSE

Suppose our problem entailed the causal model in Fig. 1(b), or Fig. 1(c) where in both cases only the NDE of A on Y is discriminatory. Existing identification results for PSEs (Shpitser 2013) imply that the NDE is not identified in either model. This means estimation of the NDE from observed data is not possible as the NDE is not a function of the observed data distribution in either model.

In such cases, three approaches are possible. In both models, the unobserved confounders U are responsible for the lack of identification. First, if it were possible to obtain data on these variables, or obtain reliable proxies for them, the NDE would become identifiable in both models. If measuring U is not possible, a second alternative is to consider a PSE that is identified, and that includes the paths in the PSE of interest along with other paths. For example, in Fig. 1(b), while the NDE of A on Y, which is the PSE including only the path A → Y, is not identified, the PSE which includes paths A → Y, A → M → Y, and A → M → W → Y, namely (2), is. The first counterfactual in the PSE contrast is identified in Fig. 1(b) by (3), and the second by the adjustment formula.

If we are using the PSE on the mean difference scale, the magnitude of the effect which includes more paths than the PSE we are interested in must be an upper bound on the magnitude of the PSE of interest in order for the bounds we impose to actually limit discrimination. This is only possible if, for instance, all causal influences of A on Y along the paths involved in the PSE have the same sign. In Fig. 1(b), this would mean assuming that if we expect the NDE of A on Y to be negative (due to discrimination), then the effect is also negative along the paths A → M → W → Y, and A → M → Y.
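A small linear example makes the sign condition concrete. In a linear structural model, the effect along a path is the product of its edge coefficients, so the identified multi-path effect is a sum of path products. The coefficients below are invented for illustration, and linearity is an assumption made only to keep the arithmetic transparent; the paths follow the example above (A → Y, A → M → Y, A → M → W → Y).

```python
def path_effects(a_y, a_m, m_y, m_w, w_y):
    """Return (direct, via_M, via_M_W) path effects in a linear model.

    Each path effect is the product of the coefficients on its edges.
    """
    return a_y, a_m * m_y, a_m * m_w * w_y


def bounds_direct(effects):
    """True if |sum of path effects| >= |direct effect|."""
    return abs(sum(effects)) >= abs(effects[0])


# All three paths are negative: the combined effect bounds the direct effect.
same_sign = path_effects(a_y=-0.4, a_m=-0.5, m_y=0.6, m_w=0.5, w_y=0.8)
# The mediated paths are positive and partly cancel the negative direct effect.
mixed_sign = path_effects(a_y=-0.4, a_m=0.5, m_y=0.6, m_w=0.5, w_y=0.8)

print(same_sign, bounds_direct(same_sign))    # combined magnitude about 0.9
print(mixed_sign, bounds_direct(mixed_sign))  # combined magnitude about 0.1
```

In the mixed-sign case, constraining the combined effect to be small would say little about the direct effect, which is why the same-sign assumption is needed.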

If measuring U is impossible, and it is not possible to find an identifiable PSE that includes the paths of interest from A to Y, and serves as a useful upper bound to the PSE of interest, the other alternative is to use bounds derived for non-identifiable PSEs. While finding such bounds is an open problem in general, they were derived in the context of the NDE with a discrete mediator in (Miles et al. 2016).

The issue with non-identification of the PSE was also noted in (Zhang, Wu, and Wu 2017). They proposed to change the causal model, specifically by cutting off some paths from the sensitive variable to the outcome such that the identification criterion in (Shpitser 2013) became satisfied, and the PSE became identified. We disagree with this approach, as we believe it amounts to “redefining success.” If the original causal model truly represents our beliefs about the structure of the problem, and in particular the pathways corresponding to discrimination, then making any sort of inferences in a model modified away from truth no longer tracks reality. We would certainly not expect any kind of repair within a modified model to result in fair inferences in the real world. The workarounds for non-identification we propose aim to stay within the true model, but try to obtain information on the true non-identified PSE, either by non-parametric bounds, or by including other pathways along with the “unfair” pathways.

Experiments

We first illustrate our approach to fair inference via two datasets: the COMPAS dataset (Angwin et al. 2016) and the Adult dataset (Lichman 2013). We also illustrate how a part of the model involving the outcome Y may be regularized without compromising fair inferences if the NDE quantifying discrimination is estimated using methods that are robust to misspecification of the Y model.

The COMPAS Dataset

Correctional Offender Management Profiling for Alternative Sanctions, or COMPAS, is a risk assessment tool, created by the company Northpointe, that is being used across the US to determine whether to release or detain a defendant before his or her trial. Each pretrial defendant receives several COMPAS scores based on factors including but not limited to demographics, criminal history, family history, and social status. Among these scores, we are primarily interested in “Risk of Recidivism”. ProPublica (Angwin et al. 2016) obtained two years' worth of COMPAS scores from the Broward County Sheriff’s Office in Florida, containing scores for over 11000 people who were assessed at the pretrial stage and scored in 2013 and 2014. The COMPAS score for each defendant ranges from 1 to 10, with 10 being the highest risk. Besides the COMPAS score, the data also include records on the defendant’s age, gender, race, prior convictions, and whether or not recidivism occurred in a span of two years. We limited our attention to the cohort consisting of African-Americans and Caucasians.

We are interested in predicting whether a defendant would reoffend using the COMPAS data. For illustration, we assume the use of prior convictions, possibly influenced by race, is fair for determining recidivism. Thus, we defined discrimination as the effect along the direct path from race to the recidivism prediction outcome. The simplified causal graph model for this task is given in Figure 2(a), where A denotes race, prior convictions is the mediator M, demographic information such as age and gender is collected in C, and Y is recidivism. The “disallowed” path in this problem is drawn in green in Figure 2(a). The effect along this path is the NDE. The objective is to learn a fair model for Y, i.e. a model where the NDE is minimized.

We obtained the posterior sample representation of 𝔼[Y|A, M, C] via both regular and constrained BART. Under the unconstrained posterior, the NDE (on the odds ratio scale) was equal to 1.3. This number is interpreted to mean that the odds of recidivism would have been 1.3 times higher had we changed race from Caucasian to African-American. In our experiment we restricted the NDE to lie between 0.95 and 1.05. Using unconstrained BART, our prediction accuracy on the test set was 67.8%; removing race (the treatment) from the outcome model dropped the accuracy to 64.0%; and using constrained BART led to an accuracy of 66.4%. As expected, dropping race, an informative feature, led to a greater decrease in accuracy than simply constraining the outcome model to obey the constraint on the NDE.

In addition to our approach to removing discrimination, we are also interested in assessing the extent to which the existing recidivism classifier used by Northpointe is biased. Unfortunately, we do not have access to the exact model which generated COMPAS scores, since it is proprietary, nor to all the input features used. Instead, we used our dataset to predict a binarized COMPAS score by fitting the model p̃(Y|M, C) using BART. We dropped race, as we know Northpointe’s model does not use that feature. Discrimination, as we defined it, may still be present even if we drop race. To assess discrimination, we estimate the NDE, our measure of discrimination, in the semiparametric model of p(Y, M, A, C), where the only constraint is that p(Y|M, C) is equal to p̃ above. This model corresponds to (our approximation of) the “world” used by Northpointe. Measuring the NDE on the ratio scale using this model yielded 2.1, which is far from 1 (the null effect value). In other words, assuming the defendant is Caucasian, the odds of recidivism for him would be 2.1 times higher had he been, contrary to fact, African-American. Thus, our best guess about Northpointe’s model is that it is severely discriminatory.

The Adult Dataset

The “adult” dataset from the UCI repository has records on 14 attributes, such as demographic information, level of education, and job related variables such as occupation and work class, for 48842 instances, along with their income, recorded as a binary variable denoting whether an individual has income above or below 50k (high vs. low income). The objective is to learn a statistical model that predicts the class of income for a given individual. Suppose banks are interested in using this model to identify reliable candidates for loan application. Raw use of data might construct models that are biased against females, who are perceived to have lower income in general compared to males. The causal model for this dataset is drawn in Figure 2(b). Gender is the sensitive variable in this example, denoted by A in Figure 2(b), and income class is denoted by Y. M denotes the marital status, L denotes the level of education, and R consists of three variables: occupation, hours per week, and work class. The baseline variables, including age and nationality, are collected in C. U1 and U2 capture the unobserved confounders between M, Y and L, R, respectively.

Here, besides the direct effect (A → Y), we would like to remove the effect of gender on income through marital status (A → M → … → Y). The “disallowed” paths are drawn in green in Figure 2(b). The PSE along the green paths is identifiable via the recanting district criterion in (Shpitser 2013), and can be computed by calculating odds ratio or contrast comparison of the counterfactual variable Y (a, M (a), L(a′, M(a)), R(a′, M(a), L(a′, M(a))), C), where a′ is set to a baseline value, a = 1 in one counterfactual, and a = 0 in the other. The counterfactual distribution can be estimated from the following functional: , where V are all observed variables.

If we use logistic regression to model Y and linear regression to model other variables given their past, and compute the PSE on the odds ratio scale, it is straightforward to show that the PSE simplifies to , where denotes the coefficient associated with variable i in modeling the variable j, (VanderWeele and Vansteelandt 2010). Therefore, the constraint in (4) is an easy function to compute, and the resulting constrained optimization problem relatively easy to solve.

The Y model is trained by maximizing the constrained likelihood in (4) using the R package nloptr. We trained two more models for Y, one using the full model with no constraint, and the other by dropping all terms containing the “sensitive variable” A. We only included the “drop A” model in our simulations as a very simple naive approach to the problem that, superficially, might appear to be sensible for removing discrimination. In fact, we believe dropping the sensitive feature from the model is a poor choice in our setting, both because sensitive features are often highly predictive of the outcome in interesting (and politicized) cases, and because dropping the sensitive feature does not in fact remove discrimination (as we defined it)! For performance evaluation on the test set, we should use 𝔼[Y|A, C] in the constrained model, 𝔼[Y|A, M, L, R, C] in the unconstrained model, and 𝔼[Y|M, L, R, C] in the drop-A model.

The PSE in the unconstrained model is 3.16. This means that the odds of having a high income would have been more than 3 times higher for a female had her sex and marital status been the same as if she were male. We solve the constrained problem by restricting the PSE, as estimated by (9), to lie between 0.95 and 1.05. Accuracy is highest in the unconstrained model, at 82%, and lowest in the “drop A” scenario, at 42% (as expected). The constrained model not only improves accuracy to 72% relative to the “drop A” model, but also guarantees fairness, in our sense.

Selecting The Outcome Model To Maximize Out Of Sample Predictive Performance

The search for an outcome model with the best out of sample performance, as is often done in machine learning problems, may result in a model which does not give consistent estimates of the NDE and thus does not guarantee removal of discrimination, if the NDE estimator is not chosen carefully. As discussed in the previous sections, the key approach is to use estimators that do not rely on the Y model being correctly specified, such as the triply robust estimator, and the IPW estimator. Here we demonstrate, via a simple simulation study, that selecting the outcome model to maximize predictive performance does not interfere with solving the constrained optimization problem for removing discrimination as long as we use triply robust or IPW estimators given that A and M models are specified correctly.

We generated 4000 data points using the models shown in (10) and split the data into training and validation sets.

| (10) |

We assume A and M models are correctly specified; A is randomized (like race or gender) and M has a logistic regression model with interaction terms. Using the IPW estimator, which only uses A and M models, we obtain the NDE (on the ratio scale) of 3.01. As expected, the triply robust estimator, which uses A, M, and Y models, gives us the same estimate of NDE, even under a misspecified Y model.
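To make the role of the A and M models concrete, the sketch below computes an IPW estimate of the NDE on simulated data. It assumes the standard mediation weights, 1(A = a) p(M | a′, C) / (p(a | C) p(M | a, C)) for 𝔼[Y(a, M(a′))] and 1(A = a′) / p(a′ | C) for 𝔼[Y(a′)]. The data-generating process is invented and is not model (10), and the effect is reported on the mean difference scale for simplicity.

```python
import math
import random


def expit(z):
    return 1.0 / (1.0 + math.exp(-z))


def p_m(m, a, c):
    """Known mediator model p(M = m | A = a, C = c)."""
    p1 = expit(-0.5 + 1.0 * a + 0.5 * c)
    return p1 if m == 1 else 1.0 - p1


P_A = 0.5  # A is randomized, so p(A = 1 | C) = 0.5


def simulate(n, seed=0):
    rng = random.Random(seed)
    data = []
    for _ in range(n):
        c = rng.randint(0, 1)
        a = 1 if rng.random() < P_A else 0
        m = 1 if rng.random() < p_m(1, a, c) else 0
        # True direct effect of A on Y is 2.0 (no A-M interaction).
        y = 1.0 + 2.0 * a + 1.5 * m + 0.5 * c + rng.gauss(0.0, 1.0)
        data.append((c, a, m, y))
    return data


def ipw_nde(data, a=1, a_ref=0):
    """IPW estimate of E[Y(a, M(a_ref))] - E[Y(a_ref)]; uses no outcome model."""
    n = len(data)
    cross, ref = 0.0, 0.0
    for c, a_i, m, y in data:
        if a_i == a:
            p_a = P_A if a == 1 else 1.0 - P_A
            cross += y * p_m(m, a_ref, c) / (p_a * p_m(m, a, c))
        if a_i == a_ref:
            p_ref = P_A if a_ref == 1 else 1.0 - P_A
            ref += y / p_ref
    return cross / n - ref / n


estimate = ipw_nde(simulate(20000))
print("IPW estimate of the NDE:", estimate)  # true value in this simulation is 2.0
```

Nothing in `ipw_nde` refers to a model for Y, so the estimate is unaffected by how the outcome model is chosen or regularized, provided the A and M models are correct.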

To select the most predictive outcome model, we searched over possible candidate models and performed constrained optimization that restricted the NDE to be within −0.5 and 0.5. We chose the model that led to the smallest rMSE on the test data, where the predictions were done using 𝔼̃[Y|C], with this expectation evaluated by marginalizing over the candidate outcome model 𝔼[Y|A, M, C], and the constrained models for M and A. Our pool of candidate models consisted of linear regression models with different subsets of interaction terms. As expected, when using the triply robust estimator under the correctly specified models for A and M, the NDE for all candidate Y models we considered was almost the same and close to the truth (3.01). Out of the pool of candidate models, the following model was selected to have the smallest rMSE:

where D ⊆ C. Consider, by contrast, what happened when we used an estimator that relied on the Y model being specified correctly. We pick the following (incorrect) Y model:

and compute the NDE using (1), to obtain the value of 2.7.

Performing the constrained optimization using the above model and the estimator in (1) would lead us to the optimal coefficients for the M and Y models that ensure the NDE is within (−0.5, 0.5), as desired. However, since the Y model was incorrect, and (1) was not robust to misspecification of Y, the results cannot be trusted. Indeed, using the constrained coefficients for the M and Y models in the triply robust estimator, which is robust to misspecification of Y, leads to a large NDE of 3.07.

The takeaway here is that the classical machine learning task of model selection to optimize out of sample prediction performance, be it via parameter regularization or other methods, can only ensure fairness if the estimators for the degree of fairness, as quantified by the PSE, do not rely on the model being selected, the models the estimators do rely on are specified correctly, and only the part of the model the estimators do not rely on is selected.

Discussion And Conclusions

In this paper, we considered the problem of fair statistical inference on outcomes, a setting where we wish to minimize discrimination with respect to a particular sensitive feature, such as race or gender. We formalized the presence of discrimination as the presence of a certain path-specific effect (PSE) (Pearl 2001; Shpitser 2013), as defined in mediation analysis, and framed the problem as one where we maximize the likelihood subject to constraints that restrict the magnitude of the PSE. We explored the implications of this view for predicting outcomes out of sample, for cases where the PSE of interest is not identified, and for computational Bayesian methods. We illustrated our approach using experiments on real datasets.

One of the advantages of our approach is it can be readily extended to concepts like affirmative action and “the wage gap” in a way that matches human intuition. To conceptualize affirmative action, we propose to define a set of “valid paths” from A (race/sexual orientation) to Y (admission decision), perhaps paths through academic merit, or extracurriculars, or even the direct path, and solve a constrained optimization problem that increases the PSE along these paths. Here we mean placing a lower bound εl on the PSE away from the value corresponding to “no effect”. Then, we learn p* as the KL-closest distribution to the observed data distribution p that satisfies the constraint on the PSE. Finally, we predict the admission decision of a new instance X in a similar way as the proposal in our paper, by using the information in the new instance X shared between p and p*, and predicting/averaging over other information using p*. We thus “count the causal influence of the sensitive feature on admission via prescribed paths” more highly among disadvantaged minorities. Defining these paths is a domain-specific issue. Increasing the PSE potentially lowers predictive performance, just as decreasing the PSE did in our experiments on reducing discrimination. This makes sense since we are moving away from the PSE implied by the “unfair world” given by the MLE towards something else that we deem more “fair”. A similar definition can be made for “the wage gap”, which we believe should be meaningfully defined as a comparison of the PSE of gender on salary with respect to “inappropriate paths.”

One methodological difficulty with our approach is the need to solve a computationally challenging constrained optimization problem. An alternative would be to reparameterize the observed data likelihood to include the causal parameter corresponding to the discrimination PSE, in the way causal parameters have been added to the likelihood in structural nested mean models (Robins 1999). Under such a reparameterization, minimizing the PSE always corresponds to imposing box constraints on the likelihood. However, this reparameterization is currently an open problem.

Acknowledgments

The research was supported by the grants R01 AI104459-01A1 and R01 AI127271-01A1. We thank James M. Robins, David Sontag and his group, Alexandra Chouldechova, and Shira Mitchell for insightful conversations on fairness issues. We also thank the anonymous reviewers for their comments that greatly improved the manuscript.

Contributor Information

Razieh Nabi, Email: rnabiab1@.jhu.edu.

Ilya Shpitser, Email: ilyas@cs.jhu.edu.

References

- 7th Circuit Court. 70 FEP cases 921. 1996. Carson vs Bethlehem Steel Corp.

- Angwin J, Larson J, Mattu S, Kirchner L. Machine bias. 2016. https://www.propublica.org/article/machine-bias-risk-assessments-in-criminal-sentencing

- Bertrand M, Mullainathan S. Are Emily and Greg more employable than Lakisha and Jamal? A field experiment on labor market discrimination. American Economic Review. 2004;94:991–1013.

- Chipman HA, George EI, McCulloch RE. BART: Bayesian additive regression trees. Annals of Applied Statistics. 2010;4(1):266–298.

- Corbett-Davies S, Pierson E, Feller A, Goel S, Huq A. Algorithmic decision making and the cost of fairness. 2017 arxiv preprint: 1701.08230.

- EEOC. The U.S. Equal Employment Opportunity Commission uniform guidelines on employee selection procedures. 1979.

- Executive Office of the President. Big data: A report on algorithmic systems, opportunity, and civil rights. 2016 May.

- Feldman M, Friedler SA, Moeller J, Scheidegger C, Venkatasubramanian S. Certifying and removing disparate impact. Proceedings of the 21th ACM SIGKDD International Conference on KDD; 2015. pp. 259–268.

- Gelfand AE, Smith AFM, Lee TM. Bayesian analysis of constrained parameter and truncated data problems using Gibbs sampling. Journal of the American Statistical Association. 1992;87(418):523–532.

- Hardt M, Price E, Srebro N. Equality of opportunity in supervised learning. NIPS. 2016:3315–3323.

- Jabbari S, Joseph M, Kearns M, Morgenstern J, Roth A. Fair learning in markovian environments. 2016 arxiv preprint: 1611.03071.

- Kamiran F, Zliobaite I, Calders T. Quantifying explainable discrimination and removing illegal discrimination in automated decision making. Knowledge and information systems. 2013;35(3):613–644.

- Lichman M. UCI machine learning repository. 2013. https://archive.ics.uci.edu/ml/datasets/adult

- Metropolis N, Rosenbluth A, Rosenbluth M, Teller A, Teller E. Equations of state calculations by fast computing machines. Journal of Chemical Physics. 1953;21(6):1087–1092.

- Miles C, Kanki P, Meloni S, Tchetgen ET. On partial identification of the pure direct effect. Journal of Causal Inference. 2016. doi: 10.1515/jci-2016-0004.

- Miles C, Shpitser I, Kanki P, Meloni S, Tchetgen ET. Quantifying an adherence path-specific effect of antiretroviral therapy in the Nigeria PEPFAR program. Journal of the American Statistical Association. 2017. doi: 10.1080/01621459.2017.1295862.

- Neyman J. Sur les applications de la théorie des probabilités aux expériences agricoles: Essai des principes. Excerpts reprinted (1990) in English. Statistical Science. 1923;5:463–472.

- Pearl J. Probabilistic Reasoning in Intelligent Systems. Morgan Kaufmann; San Mateo: 1988.

- Pearl J. Direct and indirect effects. Proceedings of the Seventeenth Conference on Uncertainty in Artificial Intelligence (UAI-01); San Francisco: Morgan Kaufmann; 2001. pp. 411–420.

- Pearl J. Causality: Models, Reasoning, and Inference. 2nd ed. Cambridge University Press; 2009.

- Pearl J. Technical Report R-379. Cognitive Systems Laboratory, UCLA; 2011. The causal mediation formula – a guide to the assessment of pathways and mechanisms.

- Pedreshi D, Ruggieri S, Turini F. Discrimination-aware data mining. Proceedings of the 14th ACM SIGKDD international conference on KDD; 2008. pp. 560–568.

- Richardson TS, Robins JM. Working Paper 128. Center for Statistics and the Social Sciences, Univ. Washington; Seattle, WA: 2013. Single world intervention graphs (SWIGs): A unification of the counterfactual and graphical approaches to causality.

- Robins JM, Greenland S. Identifiability and exchangeability of direct and indirect effects. Epidemiology. 1992;3:143–155. doi: 10.1097/00001648-199203000-00013.

- Robins JM. Statistical Models in Epidemiology: The Environment and Clinical Trials. Springer; 1999. Marginal structural models versus structural nested models as tools for causal inference.

- Shpitser I, Pearl J. Identification of joint interventional distributions in recursive semi-Markovian causal models. Proceedings of the Twenty-First National Conference on Artificial Intelligence (AAAI-06); Palo Alto: AAAI Press; 2006.

- Shpitser I, Pearl J. Complete identification methods for the causal hierarchy. JMLR. 2008;9(Sep):1941–1979.

- Shpitser I, Tchetgen ET. Causal inference with a graphical hierarchy of interventions. Ann Stat. 2015. doi: 10.1214/15-AOS1411.

- Shpitser I. Counterfactual graphical models for longitudinal mediation analysis with unobserved confounding. Cognitive Science (Rumelhart special issue). 2013;37:1011–1035. doi: 10.1111/cogs.12058.

- Spirtes P, Glymour C, Scheines R. Causation, Prediction, and Search. 2nd ed. Springer Verlag; New York: 2001.

- Tchetgen EJT, Shpitser I. Technical Report 129. Department of Biostatistics, HSPH; 2012a. Semiparametric estimation of models for natural direct and indirect effects.

- Tchetgen EJT, Shpitser I. Semiparametric theory for causal mediation analysis: efficiency bounds, multiple robustness, and sensitivity analysis. Ann Stat. 2012b;40(3):1816–1845. doi: 10.1214/12-AOS990.

- Tian J, Pearl J. Technical Report R-290-L. Department of CS, UCLA; 2002. On the identification of causal effects.

- VanderWeele TJ, Vansteelandt S. Odds ratios for mediation analysis for a dichotomous outcome. American Journal of Epidemiology. 2010;172:1339–1348. doi: 10.1093/aje/kwq332.

- Zhang L, Wu Y, Wu X. A causal framework for discovering and removing direct and indirect discrimination. IJCAI. 2017:3929–3935.