ABSTRACT

Unusual patterns of fixation behavior in individuals with autism spectrum disorder during face tasks hint at atypical processing strategies that could contribute to diminished face expertise in this group. Here, we use the Bubbles reverse correlation technique to directly examine face-processing strategies during identity judgments in children with and without autism, and typical adults. Results support a qualitative atypicality in autistic face processing. We identify clear differences not only in the specific features relied upon for face judgments, but also more generally in the extent to which they demonstrate a flexible and adaptive profile of information use.

Face-processing atypicalities are widely observed in individuals diagnosed with autism spectrum disorder. Relative to typically developing individuals, differences and difficulties have been reported in processing of emotional expressions (see Harms, Martin, & Wallace, 2010) and social cues such as eye gaze (Nation & Penny, 2008; Senju & Johnson, 2009). (Note that here and elsewhere we use the preferred, identity-first terminology; Kenny et al., 2015.) Atypicalities are also reported in the discrimination and recognition of face identity (Behrmann et al., 2006; Boucher, Lewis, & Collis, 1998; Croydon, Pimperton, Ewing, Duchaine, & Pellicano, 2014; Gepner, de Gelder, & de Schonen, 1996; Hauck, Fein, Maltby, Waterhouse, & Feinstein, 1998; Klin et al., 1999; Tantam, Monaghan, Nicholson, & Stirling, 1989; Wallace, Coleman, & Bailey, 2008), particularly when tasks involve a memory component or careful processing of the eyes (see Weigelt, Koldewyn, & Kanwisher, 2012). These identity-processing difficulties are rarely as functionally debilitating as the deficits observed in “face blind” individuals with prosopagnosia (Behrmann & Avidan, 2005; Yardley, McDermott, Pisarski, Duchaine, & Nakayama, 2008) but may nevertheless contribute importantly to the social communication and interaction difficulties characteristic of autism (Weigelt et al., 2012).

Researchers have long been interested in the extent to which these atypical outcomes on face tasks might reflect qualitative, as well as quantitative, processing differences in autistic people. Indeed, one of the earliest studies of autistic face perception tackled this question. Langdell (1978) investigated whether children with and without autism differ in their reliance upon different facial features/components during identification judgments of familiar faces: classroom peers. Young autistic children selectively benefited from the lower half of (otherwise masked) faces, in contrast to comparison groups who benefited more from the top half of faces. The author tentatively linked this bias toward information in the lower half of the face with verbal and non-verbal communication difficulties in the condition. Interestingly, older autistic children showed no particular preference for either half; their use of the top and bottom face halves hinted at a combination of the profiles observed in children from the other two groups.

Direct investigations of processing strategies in autism have revealed highly variable visual scan paths during face tasks: initially characterized as “erratic, undirected and disorganised” (Pelphrey et al., 2002, p. 258). Eye-tracking research findings are often inconsistent, particularly for static stimuli (Chevallier et al., 2015). Even the popular notion that autistic individuals look at and rely on the eye region relatively less, and the mouth relatively more, is more consistently supported by behavioral data (e.g., discrimination tasks, Joseph & Tanaka, 2003; Rutherford, Clements, & Sekuler, 2007; Wolf et al., 2008) than eye-tracking evidence (see Falck-Ytter & von Hofsten, 2011; Guillon, Hadjikhani, Baduel, & Rogé, 2014, for recent reviews). Nevertheless, researchers continue to pursue evidence of links between (atypical) patterns of fixation to faces in ASD and face-recognition impairments (Kirchner, Hatri, Heekeren, & Dziobek, 2011; Snow et al., 2011).

This research interest may partly reflect the fact that several conceptualizations of autism give cause to predict atypical processing strategies and information use during face judgments. Atypical looking at the eyes could stem, for example, from active avoidance of direct eye contact in the condition due to emotional arousal associated with this potent signal of social engagement (Kylliäinen & Hietanen, 2006; Tanaka & Sung, 2013). Equally, individual differences in scanning patterns could reflect atypical communicative skills in autism, driving variability in fixations to the eyes (particularly implicated in complex socio-emotional interactions) vs. the mouth (more critical for language and speech-related information) (Falck-Ytter, Fernell, Gillberg, & von Hofsten, 2010; Norbury et al., 2009). Finally, it could indicate an immature processing strategy associated with limited perceptual expertise with these social stimuli and a lack of appreciation of the importance/utility of this region (Itier & Batty, 2009). Irrespective of its origins, such an atypical profile is likely to negatively impact expertise because the eyes constitute a critical cue for face reading (Peterson & Eckstein, 2011).

Eye-tracking studies have unquestionably provided helpful insights into how participants read information from faces (Boraston & Blakemore, 2007). Yet there are limits to the utility of gaze behavior as an independent index of information use and processing strategies. Fixations are just one of a series of processing subcomponents that culminate in a discrete social judgment. They reflect a range of bottom-up and top-down influences, and can vary independently of visual attention (Triesch, Ballard, Hayhoe, & Sullivan, 2003; Turatto, Angrilli, Mazza, Umiltà, & Driver, 2002). An alternative and complementary approach to revealing face-processing strategies and profiles of information use for face judgments is the “Bubbles” reverse correlation technique (Gosselin & Schyns, 2001). This experimental paradigm allows researchers to pinpoint the specific visual information associated with participant outcomes on a categorization task (e.g., accuracy, reaction time, EEG activation). In the context of face-processing tasks, test images are presented to participants for categorization (e.g., identity, emotional expression, gender) with the systematic addition of visual noise across trials: randomly positioned Gaussian apertures or “bubbles” that reveal only subsampled regions of each stimulus on each trial (Gosselin & Schyns, 2001). Upon completion of the task, when the entire stimulus space has been sampled, performance across trials is analyzed to generate classification images that reveal the visual features significantly associated with correct (cf. incorrect) performance, that is, the pixels/cues/features that participants relied upon significantly for their judgments.
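To make the sampling procedure concrete, the following minimal Python sketch builds one bubble mask from randomly positioned Gaussian apertures and uses it to reveal parts of an image. It is an illustration under stated assumptions, not the original implementation: the function names, image size, aperture width (`sigma`), and uniform background value are all hypothetical, and the sketch samples the image plane at a single spatial scale only.

```python
import numpy as np

def bubble_mask(shape, n_bubbles, sigma, rng):
    """Sum of Gaussian apertures at random centres, clipped to the range [0, 1]."""
    height, width = shape
    rows, cols = np.mgrid[0:height, 0:width]
    mask = np.zeros(shape, dtype=float)
    centres_r = rng.integers(0, height, size=n_bubbles)
    centres_c = rng.integers(0, width, size=n_bubbles)
    for r0, c0 in zip(centres_r, centres_c):
        mask += np.exp(-((rows - r0) ** 2 + (cols - c0) ** 2) / (2.0 * sigma ** 2))
    return np.clip(mask, 0.0, 1.0)

def apply_bubbles(image, mask, background=0.5):
    """Show the image where the mask is high and a uniform background elsewhere."""
    return mask * image + (1.0 - mask) * background

rng = np.random.default_rng(0)
face = rng.random((64, 64))  # stand-in for a grayscale face image scaled to [0, 1]
mask = bubble_mask(face.shape, n_bubbles=10, sigma=3.0, rng=rng)
stimulus = apply_bubbles(face, mask)
```

Each trial would draw a fresh mask, so that across many trials every region of the image is revealed on some trials and hidden on others.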

Spezio, Adolphs, Hurley, and Piven (2006) used this technique with autistic adults to reveal face-processing strategies during expression categorization judgments (fear vs. happy). Relative to a group of typical adults of similar cognitive ability, autistic individuals demonstrated equivalent levels of performance accuracy but with a distinctly atypical profile of information use. Classification images indicated that autistic adults relied more upon information from the mouth region and less on information from the eye region than did their typical counterparts. Concurrent eye tracking revealed close alignment between these patterns of information use and fixation behavior in each participant group. That is, the autism group consistently fixated more on the mouth and slightly less on the eyes than did the typical group. This association was interpreted as evidence that atypicalities in social gaze behavior in autism strongly contribute to differences in information use during face tasks.

In a more detailed investigation of participants’ saccade behavior during that original task, Spezio and colleagues (2007) also identified diminished specificity in this gaze to the mouth in autistic adults. Thus, this selective looking to the mouth was observed even when useful information was available in other regions (e.g., the eyes) that could have aided their categorizations. In line with this result, another study that used the same paradigm with a different group of adults confirmed consistent deviation from the pattern of fixation behavior predicted by computational models of stimulus salience and observed in the typical population, that is, in favor of looking at the mouth in the autistic group (Neumann, Spezio, Piven, & Adolphs, 2006). Together, these findings strongly suggest that reliance upon information from the mouth region during expression judgments may constitute a top-down driven information-processing bias in autism.

Only one study has investigated face-processing strategies in children on the autism spectrum using Bubbles. This dearth of research is surprising; researchers are often particularly interested in children because they are presumed to be less likely than adults to have developed compensatory strategies. Song, Kawabe, Hakoda, and Du (2012) investigated information use in school-aged autistic and typical children of similar age and cognitive ability during emotion and identity categorizations. The inclusion of an identity task allowed unique exploration of a judgment that enlists highly specialist processing resources in the typical population (see Schwaninger, Carbon, & Leder, 2003) and is known to be especially challenging for individuals with autism (Robel et al., 2004; Scherf, Behrmann, Minshew, & Luna, 2008; Serra et al., 2003). Results indicated that autistic children’s information use during expression judgments closely resembled the typical children’s profile. On the identity task, however, counter to expectations based on previous eye-tracking research with autistic children and previous Bubbles experiments with autistic adults, children with autism were not less reliant upon information in the eye region than were typical children (Song et al., 2012). Moreover, the key point of difference from the typical children, who consistently relied on both the eye and mouth regions, was that, rather surprisingly, the autistic children relied almost exclusively on the eye region.

This unexpected finding has yet to be replicated, and it remains unclear whether these intriguing results reflect a genuine atypicality in the salience and/or importance of the eye region for identity judgments in children with autism. Song and colleagues substantially adapted the Bubbles experimental paradigm to ensure that it was appropriate for their sample (Song et al., 2012). They kept trial numbers very low (80 per task), used child rather than adult face images in the task (a smiling and a neutral version of two identities) and presented these test images in pairs, rather than individually, to simplify the required participant response (i.e., “which of these faces is IdentityA/Happy?”). It is possible that these modifications contributed to their unexpected findings. For example, the limited number of trials might have prevented adequate sampling of the stimulus space; children’s faces might have been less distinctive than adult faces, which could have distorted participants’ profiles of information use, particularly for identity judgments; and presenting faces in pairs might have encouraged an atypical, low-level feature-matching processing strategy. The current study sought to rule out these possibilities by investigating information use during face recognition judgments in autistic children using a more traditional Bubbles experimental paradigm.

Our Bubbles identity categorization task included as many trials as possible for the participant groups employed (216 trials, based on pilot testing) to ensure optimized sampling of the stimulus space. We also used the traditional individual presentation of test faces for categorization to encourage high-level face processing and probed judgments of both child and adult face stimuli in separate versions of the task (administered on different days). Based on the findings of previous adult Bubbles research and other evidence suggestive of a qualitative autistic atypicality in the use of the eyes and mouth during face tasks, we hypothesized that autistic children would use information from the eye region selectively less than typically developing children for their identity categorizations. Typical adults were included as a secondary comparison group. Their inclusion allowed us to place results from the typical children in a developmental context and to relate findings from this abbreviated Bubbles paradigm to those from more exhaustive investigations of identity-related information use (e.g., Butler, Blais, Gosselin, Bub, & Fiset, 2010; Gosselin & Schyns, 2001; Schyns, Bonnar, & Gosselin, 2002).

Predictions regarding autistic vs. typical participants’ performance profiles for the child and adult faces were less straightforward. Given some previous reports of superior ability with own- relative to other-age faces (Anastasi & Rhodes, 2005; Hills & Lewis, 2011), it seemed possible that we might observe differences in face-processing strategies for these different categories in our typically developing participants. Such own- vs. other-age face differences, however, may be absent (or be present to a lesser extent) for autistic children, who less reliably demonstrate in/out-group processing biases (see Chien, Wang, Chen, Chen, & Chen, 2014; Wilson, Palermo, Burton, & Brock, 2011; Yi et al., 2015).

Method

Ethics statement

This study was approved by the Human Research Ethics Committee at the UCL Institute of Education, University College London. All adult participants, and the parents of all child participants, provided written consent prior to participation in the project. All children also gave verbal assent before taking part.

Participants

Participants were eight cognitively able autistic children (five male), eight typically developing children (three male) and eight typical adults (three male). See Table 1 for detailed descriptive information. The sample size is similar to that of Bubbles studies investigating information use in adults with ASD (e.g., Neumann et al., 2006; Spezio et al., 2006, 2007). The children were recruited from a primary school in London (with autism specialist and mainstream classrooms) and the adults were personal contacts of the researchers. Neither typical group had any personal history of autism spectrum disorder or psychiatric disorders. Parents of the typical children also completed the Social Communication Questionnaire (SCQ, Rutter, Bailey, Lord, & Berument, 2003), which revealed scores well below the cut-off for clinically significant autism symptoms (15; see Table 1).

Table 1.

Descriptive statistics for age, cognitive ability, and autism symptomatology measures.

| Measure | Adults (n = 8): M (SD) | Adults: Range | Autism (n = 8): M (SD) | Autism: Range | Typical (n = 8): M (SD) | Typical: Range | Difference between child groups |
|---|---|---|---|---|---|---|---|
| Age (years) | 33.7 (14.7) | 24–58 | 10.6 (1.2) | 8.9–12.9 | 8.9 (0.8) | 7.0–9.8 | t(14) = 3.27, p < 0.01, d = 1.74 |
| RCPM Nonverbal abilityᵃ | | | 22.9 (3.1) | 16–26 | 21.7 (5.2) | 15–30 | t(14) = 0.52, p = 0.60, d = 0.27 |
| BPVS Verbal abilityᵃ | | | 89.1 (20.2) | 61–117 | 102.9 (10.7) | 83–118 | t(14) = 1.70, p = 0.11, d = 0.90 |
| SCQᵇ Autism symptoms | | | 27.5 (3.5) | 22–34 | 4.6 (2.1) | 1–7 | t(14) = 15.77, p < 0.001, d = 8.42 |
| CFMT-C/CFMT Face processing abilityᶜ | 84.1 (10.8) | 68–100 | 65.0 (19.2) | 38.3–98.3 | 77.5 (7.0) | 66.7–85 | t(14) = 1.73, p = 0.05 (one tailed), d = 0.92 |

ᵃ Non-verbal and verbal cognitive ability were measured with Raven’s Coloured Progressive Matrices (RCPM; Raven, 1998) and the British Picture Vocabulary Scale III (BPVS; Dunn et al., 2009), respectively. ᵇ Higher scores on the SCQ (Social Communication Questionnaire, Lifetime form; Rutter et al., 2003) indicate a greater degree of autism symptomatology. ᶜ Face processing ability measures: adults completed the Cambridge Face Memory Test (Duchaine & Nakayama, 2006); children completed the Cambridge Face Memory Test for Children (Croydon et al., 2014). Scores = accuracy (total percentage correct).

All autistic children had received an independent clinical diagnosis following DSM-IV criteria (American Psychiatric Association, 2000) and scored above 21 on the SCQ (Rutter et al., 2003). This group was significantly older than the typically developing comparison group of children, but otherwise did not differ with regard to verbal ability, as measured by the British Picture Vocabulary Scale (BPVS III, Dunn, Dunn, Styles, & Sewell, 2009), or non-verbal ability (Raven’s Coloured Progressive Matrices; RCPM, Raven, 1998) (see Table 1). They did, however, perform more poorly than the typical group on a standardized measure of face-recognition ability (Cambridge Face Memory Test for Children; CFMT-C, Croydon et al., 2014). Adults would perform at ceiling on these children’s measures, so were assessed only for face-recognition ability (for completeness), using the adult form of the Cambridge Face Memory Test (CFMT, Duchaine & Nakayama, 2006). All participants had normal or corrected-to-normal vision, as reported by themselves and/or their parents.

Stimuli

Stimuli were grayscale photographs of neutral expression faces taken from stimulus databases with standardized pose and lighting conditions (see Figure 2). We used two adult male identities (from Schyns & Oliva, 1999) and two (approximately) 9-year-old male identities (JimStim database, University of Victoria). Hairstyle and feature locations were standardized within each age stimulus set using Adobe Photoshop.

Figure 2.

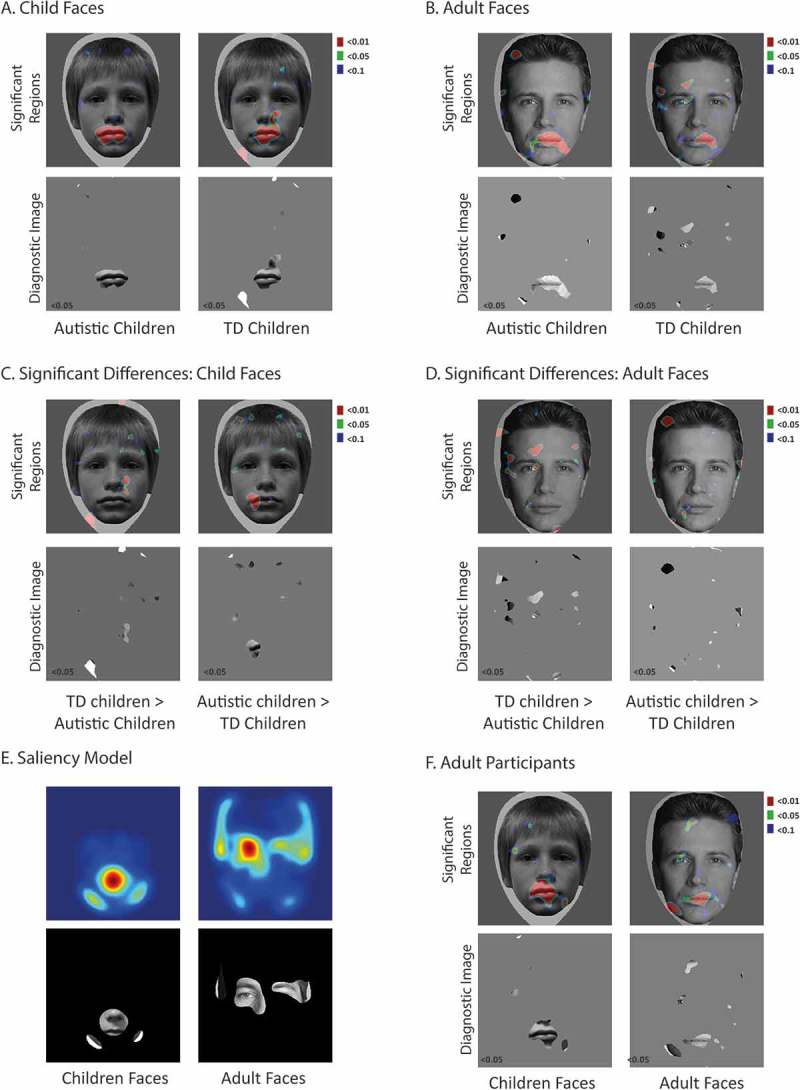

A. Child faces. Top row: The visual information significantly associated with correct categorization performance for each stimulus face age in each participant group, shown on a sample face image (red regions are significant at p < 0.01, green regions at p < 0.05, blue regions at p < 0.1). For the autistic group, the mouth significantly drives correct performance. Second row: Diagnostic images depicting only that information significantly associated with correct performance (p < 0.05). B. As A, for adult face stimuli. C. Child faces. Top row: The visual information that is used significantly more by typical children than autistic children (left column) and more by autistic children than typical children (right column), shown on a sample face stimulus (red regions at p < 0.01, green regions at p < 0.05, blue regions at p < 0.1). Bottom row: Diagnostic images depicting only that information whose use differs significantly between the typical children and autistic children. D. As C, for the adult face images. E. Computationally determined salient visual information available to differentiate the two face identities in each task (red colour indicates greatest salience, blue colour indicates minimal values). A thresholded version applied to a sample stimulus image highlights the corresponding visual information for comparison. F. As A, for the adult participants.

The Puzzle Bubble Game—child and adult versions

In the Puzzle Bubble Game, learned face identities were presented individually for participants to identify with a verbal response or labeled key-press (“Bob or Ted” adult identities, “Guy or Max” child identities). The task was challenging because participants were provided with only subsets of information on a given trial, revealed through pseudo-randomly positioned circularly symmetric Gaussian apertures or “bubbles”. The rest of the image was hidden from view (for full methodological details, see Gosselin & Schyns, 2001). To minimize trial numbers, only the central portion of the stimulus images (including the entire face itself but not the outer dark gray area surrounding the faces) was sampled with bubbles during the experiment. An adaptive staircase algorithm was used to adjust the sampling density (i.e., total number of bubbles) on each trial to target participants’ accuracy at 75% correct (minimum 40 bubbles, maximum 250 bubbles). That is, when performance was low we presented participants with more visual information to guide their judgments, and when performance was high we presented less information. This personalized calibration of bubble numbers ensured that the task was comparably challenging across participant groups. Stimuli were presented on a light gray background at the center of the screen at a viewing distance of approximately 50 cm, subtending 6.5 × 6.5° of visual angle (similar to 5.7 × 5.7° in Gosselin & Schyns, 2001).
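The logic of such a staircase can be sketched as follows. The exact update rule is not specified here, so this example assumes a weighted up/down rule, one common way to converge on a target accuracy: the number of bubbles falls by a small step after a correct response and rises by a proportionally larger step after an error, so that the two balance when accuracy equals the target. The function name, step size, and simulated observer are hypothetical; the 40 and 250 bubble limits, the 125-bubble starting point, and the 216 trials are the values reported for this task.

```python
import random

def update_bubbles(n_bubbles, correct, step=2, target=0.75, lo=40, hi=250):
    """Weighted up/down rule (an assumed rule, not necessarily the one used).

    Steps balance when step * p = up * (1 - p), i.e. when p equals the target.
    """
    up = step * target / (1.0 - target)  # three times the down step at 75%
    n_bubbles = n_bubbles - step if correct else n_bubbles + up
    return int(min(hi, max(lo, round(n_bubbles))))

# Simulate one session with a purely hypothetical observer whose accuracy
# improves as more bubbles are shown.
rng = random.Random(1)
n = 125
for _ in range(216):
    p_correct = min(0.95, 0.5 + n / 400)
    n = update_bubbles(n, rng.random() < p_correct)
```

With these settings a correct response removes 2 bubbles and an error adds 6, and the count can never leave the 40 to 250 range.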

The child and adult versions of the task were identically structured, differing only in the to-be-categorized stimuli. Each game began with a training phase (12 trials), during which participants learned the names of the two test identities and practiced categorizing them. They were encouraged to look carefully at each picture and if they were unsure, to take their “best guess”. In this training phase the identities first appeared intact for an unlimited amount of time (twice each), and then intact for 1000 ms (twice each) and then “with bubbles” for 1000 ms (twice each) to familiarize and prepare participants for the main test trials. Auditory accuracy feedback was provided during this training phase. A minimum 75% level of performance accuracy during this training was required in order to progress to the main task.

The main test trials comprised nine blocks of 24 test trials (216 total). On each trial, a centrally presented test face appeared for 1000 ms, followed by a blank screen until the participant made their response. Between-block breaks provided participants with generic encouragement (e.g., screens saying “keep up the great effort”, odd-numbered blocks) or an engaging task-irrelevant game (even-numbered blocks). In this game (The Puzzle Bubble Challenge) participants identified “bubbled” images of films, TV shows, or geographical locations (category = participant’s choice) with as few added “clues” as possible, which each revealed more visual information to make their task easier.

Procedure

All participants completed both the child and adult face versions of the Puzzle Bubble Game along with our additional measures (children: CFMT-C, BPVS, RCPM; adults: CFMT) during two (children) or one (adults) 30–45 minute session/s. The order of the child and adult face versions was counterbalanced across participants. The computer tasks were run on a 13-inch Samsung Notebook computer. An experimenter sat alongside each participant at all times to monitor engagement and provide one-on-one encouragement.

Results

Participant performance metrics

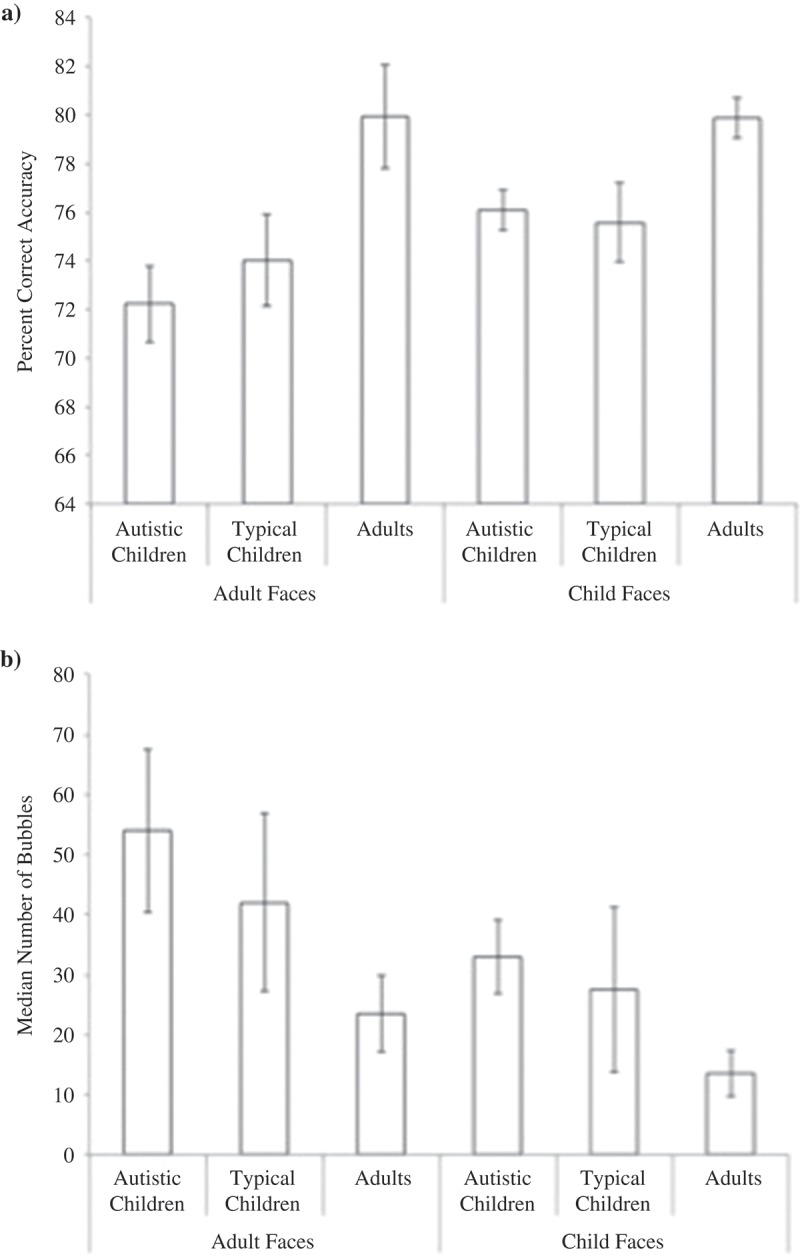

Our behavioral measure of performance accuracy during the categorization task was percentage correct (Figure 1(a)). Despite using a staircase algorithm to calibrate task difficulty and maintain performance at 75%, the use of an unbiased and equivalent “starting point” for all participants (125 bubbles) meant that accuracy was not necessarily matched perfectly across groups within the 216 experimental trials. A 2-way repeated-measures ANOVA investigating the impact of face stimulus age (child, adult) and participant group (autistic children, typical children, adults) on this variable revealed a significant effect only for participant group, F(2,21) = 7.09, p = 0.004, ηp² = 0.40. The effects of stimulus age and the interaction were not significant, Fs < 2.31, ps > 0.13. Importantly, the effect of participant group did not reflect any difference in categorization accuracy between autistic children (M = 74.1, SD = 3.9) and typical children (M = 74.8, SD = 4.8), t(14) = 0.39, p = 0.69. Rather, typical adults performed significantly better (M = 79.9, SD = 4.4) than both child groups (ts > 2.89, ps < 0.01).

Figure 1.

Performance metrics for the child and adult versions of the Puzzle Bubble Task: A) mean percent correct accuracy and B) median number of bubbles (amount of information revealed) to achieve these performance levels. Standard error is indicated.

A second 2-way repeated-measures ANOVA investigated the effects of face stimulus age and participant group on the amount of information (median number of bubbles) that participants required to reach these performance levels (see Figure 1(b)). Once again, there was a significant main effect of participant group, F(2,21) = 4.64, p = 0.02, ηp² = 0.30, but again, this effect did not reflect differences between the two child participant groups. To achieve the comparable levels of categorization accuracy reported above, the autistic children (M = 55.8, SD = 33.0) needed numerically but not significantly more visual information than typically developing children (M = 49.7, SD = 39.5), t(14) = 0.45, p = 0.65. Unsurprisingly, adults required significantly fewer bubbles than both child groups to achieve their superior accuracy levels (M = 22.9, SD = 15.0), ts > 2.49, ps < 0.02. This analysis also identified a main effect of face stimulus age, F(1,21) = 4.64, p = 0.04, ηp² = 0.18. Interestingly, this result did not reflect the child faces (Guy and Max) being more difficult to discriminate than the more mature adult faces (Bob and Ted). Instead, the reverse was true: overall participants needed fewer bubbles to identify the child faces (M = 34.2, SD = 26.8) than the adult faces (M = 51.5, SD = 37.8). There was no interaction with participant group, F(2,21) = 0.76, p = 0.47, ηp² = 0.06.

There was no significant association between either of these performance metrics and participants’ age, verbal ability or non-verbal ability in the autistic or typical child groups (all ps > 0.08, Kendall’s tau_b nonparametric correlations).
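For readers who wish to relate the reported F ratios to their effect sizes, partial eta squared can be recovered from an F ratio and its degrees of freedom using the standard identity ηp² = (F × df_effect) / (F × df_effect + df_error). The short sketch below applies this identity to two of the effects reported above; the function name is illustrative only.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared from an F ratio and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)

# Effect of participant group on accuracy: F(2,21) = 7.09
group_accuracy = partial_eta_squared(7.09, 2, 21)
# Effect of face stimulus age on number of bubbles: F(1,21) = 4.64
stimulus_age_bubbles = partial_eta_squared(4.64, 1, 21)

print(f"{group_accuracy:.2f}")        # 0.40
print(f"{stimulus_age_bubbles:.2f}")  # 0.18
```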

Classification & difference images

We followed standard approaches (e.g., Gosselin & Schyns, 2001) to determine the specific information associated with correct categorization performance in the child and adult stimulus versions of the task. Each trial was sorted as a function of whether the information presented to the participant resulted in a correct or incorrect identity categorization response. Observers tend to be correct if the information necessary to perform a task is available to them and incorrect if this information is missing. Thus, we summed together all of the bubble masks (pixel locations of available visual information) leading to correct categorizations in each case and divided this by the sum of all bubble masks presented during that task. The resulting classification images represented the probability that presenting visual information at each pixel location would lead to a correct response. The classification images were transformed into z-scores using the non-informative normalized hairstyle region at the top of the image space as a baseline. We established those regions that were statistically associated with correct categorization performance by applying a threshold criterion on the z-scores. The information found to be significantly associated with correct categorization performance, termed the diagnostic information, was superimposed in red (p < 0.01), green (p < 0.05), and blue (p < 0.1) on a representative face image to reveal its location (Figure 2(a) and 2(b), Significant Regions). We chose deliberately to include liberal (p < 0.1) as well as the more standard, conservative (p < 0.05, p < 0.01) thresholds when presenting the results to ensure that no important visual features were missed due to not quite reaching these (somewhat arbitrary) criteria. To create the diagnostic image, we displayed the information significantly associated with correct performance (at the p < 0.05 level) on a representative face (Figure 2(a,b), bottom row). These diagnostic images serve to further highlight those regions significantly driving performance in each group. This process was completed separately for the child and adult stimulus versions of the Bubbles task (Figure 2(a) and 2(b), respectively), and separately for autistic children, typical children, and the adult comparison group (Figure 2(f)).
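The computation described above can be sketched in a few lines of Python. This is a simplified illustration on simulated data, not the analysis code used in the study: the function names, the 16 × 16 image size, the binary masks, the simulated observer, and the choice of the top four rows as the baseline region are all hypothetical, and the threshold assumes an uncorrected one-tailed criterion (z > 1.645 for p < 0.05).

```python
import numpy as np

def classification_image(masks, correct):
    """Sum of masks on correct trials divided by the sum of all masks.

    masks: array (n_trials, H, W); correct: boolean array (n_trials,).
    """
    masks = np.asarray(masks, dtype=float)
    correct = np.asarray(correct, dtype=bool)
    total = masks.sum(axis=0)
    correct_sum = masks[correct].sum(axis=0)
    return np.divide(correct_sum, total, out=np.zeros_like(total), where=total > 0)

def zscore_to_baseline(image, baseline):
    """Standardise using the mean and SD of a non-informative baseline region."""
    ref = image[baseline]
    return (image - ref.mean()) / ref.std()

# Simulated observer: more accurate whenever pixel (10, 10) is revealed.
rng = np.random.default_rng(0)
n_trials, size = 2000, 16
masks = (rng.random((n_trials, size, size)) < 0.3).astype(float)
p_correct = np.where(masks[:, 10, 10] > 0, 0.9, 0.5)
correct = rng.random(n_trials) < p_correct

baseline = np.zeros((size, size), dtype=bool)
baseline[:4, :] = True  # stand-in for the hairstyle region at the top of the image

z = zscore_to_baseline(classification_image(masks, correct), baseline)
significant = z > 1.645  # assumed one-tailed p < 0.05, uncorrected
```

In this simulation the pixel that drives the simulated observer's accuracy stands out clearly in the z-scored map, which is the sense in which the classification image recovers the information an observer relies upon.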

To compare information use across autistic and typical children we also computed the difference of the z-scored maps. We used the un-thresholded z-score maps that included all pixel values greater than zero (indicating greater than average association with correct performance) rather than thresholded images, which may produce misleading findings if some features are associated with performance but do not quite pass a significance threshold. These differences were re-normalized to the baseline region and we applied the same probability threshold criteria. Figure 2(c) illustrates the visual information that is used significantly more by typical children than by autistic children (left column), and the reverse (right column), when categorizing child faces, with regions highlighted in red (p < 0.01), green (p < 0.05), and blue (p < 0.1) on a sample face image. Revealing only this significant information (p < 0.05 level) on a sample face provides a clear indication of those facial regions used more by typical children (corner of the nose) and used more by autistic children (left side of the mouth). Figure 2(d) similarly indicates the group differences for the adult faces. Here, typical children make relatively more use of the left-sided eye. There is very little facial information that autistic children use more than their typical peers for these adult faces.
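A minimal sketch of this group comparison is given below. It rests on one reading of the procedure, namely that values at or below zero are set to zero in each group's z-score map before subtraction, and that the difference is then re-standardised against the same baseline region. The function name, map size, baseline rows, and simulated maps are hypothetical.

```python
import numpy as np

def difference_image(z_a, z_b, baseline):
    """Difference of two z maps (positive values only), re-normalised to baseline."""
    diff = np.clip(z_a, 0.0, None) - np.clip(z_b, 0.0, None)
    ref = diff[baseline]
    return (diff - ref.mean()) / ref.std()

# Simulated z maps: group A relies strongly on pixel (10, 10); group B does not.
rng = np.random.default_rng(0)
z_a = rng.standard_normal((16, 16))
z_b = rng.standard_normal((16, 16))
z_a[10, 10] = 8.0
z_b[10, 10] = 0.0

baseline = np.zeros((16, 16), dtype=bool)
baseline[:4, :] = True  # stand-in for the non-informative hairstyle region

a_minus_b = difference_image(z_a, z_b, baseline)
b_minus_a = difference_image(z_b, z_a, baseline)
```

Computing the difference in both directions mirrors the two columns of Figure 2(c) and 2(d): one map for information used more by the first group, and one for the reverse.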

During the child face task, the classification images also revealed some differences in the information used by autistic children compared to the typical children (Figure 2(a)). For example, the autistic children demonstrated a focused reliance upon one particular feature in isolation, whereas typical children drew upon a slightly broader set of face cues. Crucially, the singular, significant point of focus for the children with autism was the mouth region. This finding contrasts directly with the findings of Song and colleagues (Song et al., 2012) but fits well with the widely reported autistic bias to look relatively less at the eyes than typical individuals (Tanaka & Sung, 2013). Given this result, it is tempting to speculate about a possible link between differences or deficits in making use of visual information in the eye region in autism and atypical/impaired identity processing in autism. Yet any such association is invalidated by the parallel mouth-focus also observed in typically developing children, as well as adults (Figure 2(f)). Just like the children with autism, typical children also failed to make significant use of the eye region during their identity categorizations of these child faces. They relied instead upon the mouth and a slightly larger area of the face, encompassing also some of the nose and cheeks.

Looking at the classification images for the adult face stimuli, we observe a different, more traditional profile of information use in typical children and adults (see Figure 2(b,f)). Here, they relied significantly upon information in the eye region (particularly the left side eye) and the mouth region, as reported in Butler et al. (2010), Caldara et al. (2005), and Schyns et al. (2002). Small idiosyncrasies were observed between these two typical groups; for example, adults consistently also used a left side jawline cue. Generally, however, both showed a similar profile of information use for the adult faces, which differed distinctly from that observed for their child categorizations. These results highlight that for typical participants, the most efficient face processing strategy can vary depending on the task and specific stimuli presented (Smith & Merlusca, 2014). It was interesting to note then, that the same was not true here for children with autism. Instead, this group used the same strategy with the adult faces as they had with the child faces. That is, they persisted in their strong reliance upon information in the mouth region. These strategy differences are borne out in the difference images, where the typically developing children are shown to make more use of the eye area for adult faces, and the side of the nose for the child faces, whereas the autistic children consistently focus more on the mouth.

Saliency model

We used the Graph-Based Visual Saliency metric (Harel, Koch, & Perona, 2007) to identify the most salient cues available for the identity discrimination judgment in each face set from the stimulus images. We computed a saliency map of the visual difference between the two identities separately for the two child faces (i.e. child face 1 minus child face 2) and the two adult faces (adult face 1 minus adult face 2). These saliency map images highlight the pixel locations of the most objectively salient regions in our to-be-discriminated test stimuli using metrics based on biologically grounded models of the early primate visual system (Itti & Koch, 2001). Figure 2(e) provides the result of the saliency model for the child and adult face stimuli. Applying a threshold to the saliency metric allows the most salient regions to be visualized on a sample face stimulus to permit direct comparison with the visual information used by participants discriminating the images (Figure 2(e), bottom row).
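The difference-then-threshold logic described above can be illustrated with a minimal sketch. This is not the Graph-Based Visual Saliency algorithm of Harel et al. (2007): as a loudly labeled stand-in, it treats the absolute pixel difference between two images as a crude proxy for salience. The function names, the toy 3 x 3 "images", and the cutoff of half the peak value are all hypothetical choices made for illustration only.

```python
# Illustrative stand-in only: absolute pixel difference is used as a crude
# proxy for salience. This is NOT the GBVS metric used in the study.

def difference_map(face_a, face_b):
    """Absolute per-pixel difference between two equal-sized grayscale images."""
    return [[abs(a - b) for a, b in zip(row_a, row_b)]
            for row_a, row_b in zip(face_a, face_b)]

def threshold_mask(saliency, cutoff):
    """Binary mask: 1 where the map value reaches the cutoff, else 0."""
    return [[1 if value >= cutoff else 0 for value in row] for row in saliency]

# Hypothetical 3 x 3 "faces" (grayscale intensities), not real stimuli.
face_1 = [[10, 10, 10],
          [10, 200, 10],
          [10, 10, 90]]
face_2 = [[10, 10, 10],
          [10, 50, 10],
          [10, 10, 60]]

diff = difference_map(face_1, face_2)       # face 1 minus face 2, unsigned
peak = max(max(row) for row in diff)
mask = threshold_mask(diff, cutoff=0.5 * peak)  # assumed cutoff: half the peak

print(diff)  # [[0, 0, 0], [0, 150, 0], [0, 0, 30]]
print(mask)  # [[0, 0, 0], [0, 1, 0], [0, 0, 0]]
```

The resulting mask plays the role of the thresholded map that is overlaid on a sample face: only locations that differ strongly between the two identities survive the cutoff.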

Importantly, these results indicate that the profile of information use that we observed for the child faces across all three participant groups (focused largely on the lower half of the face) is closely aligned with those features highlighted by the saliency model. Similar alignment of participants’ behavior and test stimulus properties was observed for the adult faces, which proved to be particularly important for interpreting our results. The focus on the left eye observed in the two typical groups (and also reported in other studies) could have been viewed as a product of the lateralization of face processing (Meng, Cherian, Singal, & Sinha, 2012; Rhodes, 1985). Crucially, however, the saliency model results indicate that the left eye also happened to be objectively useful for discriminating between the two adult face identities used in this experiment. Figure 2(e) also indicates that the mouth region was not a particularly salient cue for these particular stimuli. Nevertheless, all three participant groups relied significantly on information in this region (as also found previously by Gosselin & Schyns, 2001; though see Butler et al., 2010). The use of these less-than-salient cues by the typical children and adults suggests that a bias to sub-optimally encode redundant facial features is not unique to autism.

Discussion

The current study examined how autistic children go about the complex process of reading identity information from faces. Our carefully designed developmental adaptation of the Bubbles reverse correlation technique allowed us to identify the cues that autistic children, typically developing children, and adults rely upon during a challenging identity categorization task. Results revealed a consistent, atypical bias in autistic children to focus on the mouth region for these judgments. This bias held irrespective of the to-be-discriminated stimuli, that is, whether they were child or adult faces. This behavioral profile differed markedly from the more flexible profile observed with typical children, who demonstrated a qualitatively similar profile to adults.

The atypical information use we observed in autistic children cannot be explained as the product of baseline differences between our participant groups. It is unlikely, for example, to reflect the increased age of the autistic children relative to the typical children because there was no significant correlation between age and either of our performance metrics during the Bubbles tasks. Our Bubbles design allowed us to equate categorization performance (percent correct accuracy for child and adult faces) and overall processing efficiency (number of bubbles) to highlight this qualitative difference in their face-processing strategy, which could be contributing to difficulties with face perception observed here and elsewhere.

Between-group differences were also observed in the distinct response profiles generated across the child and adult face tasks. Though the stimuli in each version were similarly standardized, visual saliency maps highlighted distinct face regions that were more and less salient for categorizations of the identities in these child and adult face pairs. Specifically, the eyes were confirmed to be particularly discriminative for the adult faces, and the mouth for the child faces. Our classification images signal that typical but not autistic children were sensitive to this variability. Categorization behavior revealed that for the autistic children, the mouth region was always diagnostic, irrespective of the test stimulus. Typical children, however, flexibly modulated their information use across tasks, broadly in line with the features that were most salient. Similarly strategic information use was observed for the adult participants, and has been reported in other adult studies exploring other categorical face decisions (e.g., Schyns et al., 2002).

Autistic children demonstrated a fixed processing strategy for face identity that relied consistently on information in the mouth region. Such an approach fits with several eye-tracking studies that have similarly observed a particular focus on the mouth (Jones, Carr, & Klin, 2008; Klin, Jones, Schultz, Volkmar, & Cohen, 2002) and could reflect several different mechanisms. A bias toward the mouth (often also associated with a bias away from the eyes) could be associated with atypical communication in the condition (Langdell, 1978), an aversion to the socially intimidating eye region (Tanaka & Sung, 2013) or a failure to appreciate the utility of this information (Itier & Batty, 2009). Regardless of its origins, a mouth bias could negatively influence face-processing ability by preventing the exploitation of useful cues in the top half of the face (Peterson & Eckstein, 2011). Certainly, in the current study, performance in the autistic children was poorer than that of their more flexible and strategic comparison group of typical children.

The fixed, particular reliance upon the mouth region that we observed in autistic children contrasts not only with the behavioral profile observed in our typical comparison groups, but also with the results of the only previous investigation of information use in autistic children. Song and colleagues (2012) reported a strong reliance upon the eyes, rather than the mouth, during identity judgments in their Bubbles study. It is difficult to draw strong conclusions about the cause of the discrepancy between these findings because many methodological differences distinguished the two experimental tasks. Our confidence in the current findings is drawn from the extent to which our task closely resembles the classic Bubbles experimental paradigm, for example, with individual presentation of test stimuli. Moreover, our results are consistent with other behavioral and eye-tracking evidence that supports an autistic tendency to fixate upon the mouth region (see Tanaka & Sung, 2013) and with previous Bubbles research conducted with autistic adults, which reported a strong reliance upon the mouth during fear vs. happy judgments (see Spezio et al., 2006, 2007).

It is important to note that the face processing strategies observed in typical children, as well as adults, were also far from (objectively) perfect. Even though both these participant groups seemed to recognize the utility of the information in the eye region when categorizing adult face stimuli, they also continued to draw upon the mouth region. This information was used even though the mouth was not particularly helpful for discriminating between these two particular identities (confirmed by the saliency model). Such a bias to draw information from the mouth is very much in line with other published work on information use during face judgments, which is suggested to be broadly optimized to support face expertise (Smith, Cottrell, Gosselin, & Schyns, 2005). We speculate that across their extensive face experience accumulated from infancy, typical individuals might develop a “default” face processing strategy, which is then flexibly adapted to match stimulus characteristics and task demands but was not wholly recalibrated in the 216 trials of the current study.

The reverse correlation technique provides a highly sophisticated means to pinpoint the specific information significantly driving categorization judgments. There are necessary limits, however, to the ecological validity of such tasks. We consciously avoided overloading our participants by asking them to learn a large set of identities. Yet outside of the experimental context, face identity judgments are clearly more complex than the two-choice categorizations assessed here. Moreover, the flexible profiles of information use observed in typical participants across stimulus categories in the current study confirm that participants’ experience with the test identities can impact upon performance outcomes, including face-processing strategies.

The current study sought to characterize face-processing strategies in autistic children, typical children and adults by elucidating the visual information that drives identity judgments. Our results indicate that autistic children differ from typical children not only in the specific features that they rely upon for these judgments of child and adult faces, but also more generally in the extent to which they demonstrate a flexible and adaptive profile of information use in this domain. These results were striking, even in our small sample of autistic participants, which was comparable to most previous studies in this domain: Spezio et al. (2006) tested nine adults, Spezio et al. (2007) tested eight adults, and Neumann et al. (2006) tested ten adults. We acknowledge that the trial numbers were relatively small in the context of “classical” Bubbles research (e.g., Gosselin & Schyns, 2001) but note that they were not far from the more modest numbers that have led to stable solutions in individual-level analyses associated with EEG studies (e.g., Schyns, Petro, & Smith, 2007, 2009). The profile of information use observed with typical adults converges nicely with solutions obtained in more exhaustive testing sessions and, perhaps most crucially, the total number of trials per participant was considerably higher than in the only other published study conducted with autistic children (see Note 1).

Having identified a clear, potentially developmentally stable qualitative difference in autistic face-processing strategies, an interesting future direction for this research will be to investigate more directly the functional consequences with respect to processing ability. There is a broad consensus that efficient (i.e., in some sense optimized) information use and flexible processing strategies support typical face expertise, but this link is yet to be empirically tested. It is true that evidence of atypical strategic information use in populations with face-reading difficulties is consistent with this notion, for example, in autism spectrum disorder (e.g., current study, also Neumann et al., 2006; Spezio et al., 2006, 2007) and prosopagnosia (e.g., Caldara et al., 2005; Xivry et al., 2008). Still, these groups demonstrate other, potentially influential visuoperceptual and/or social atypicalities, making it an interesting open question whether this association truly holds and/or extends to the typical population. Directly assessing and contrasting profiles of information use in higher- and lower-performing children and adults could highlight the functional consequences of qualitative differences in face-processing strategy. Findings could provide an evidence base for training programs to improve skills in those with clinical and non-clinical difficulties in this domain.

Funding Statement

This research was supported by a grant from the Leverhulme Trust: RPG-2013-019 awarded to MLS, EF, and AKS. EP was supported by a grant from the UK’s Medical Research Council (MR/J013145/1). Research at the Centre for Research in Autism and Education is generously supported by The Clothworkers’ Foundation and Pears Foundation.

Note 1.

In the current study: 8 participants × 216 trials × 2 face sets = 3456, that is, 1728 identity categorization trials with adult faces plus 1728 trials with child faces; cf. Song et al. (2012): 15 participants × 80 trials = 1200 identity categorization trials with child faces only.
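The trial counts in this note can be checked with a few lines of arithmetic. The sketch below assumes 216 trials per participant per face set, which is the reading consistent with the stated totals of 1728 adult-face and 1728 child-face trials; the variable names are illustrative only.

```python
# Trial-count check for Note 1. Assumption: 216 trials per participant
# per face set (child, adult), consistent with the totals quoted in the note.
participants = 8
trials_per_face_set = 216
face_sets = 2  # child faces and adult faces

per_face_set_total = participants * trials_per_face_set       # trials per face set
current_total = per_face_set_total * face_sets                # all trials, this study
current_per_participant = trials_per_face_set * face_sets     # trials per participant

# Song et al. (2012): child faces only.
song_participants = 15
song_trials_per_participant = 80
song_total = song_participants * song_trials_per_participant

print(per_face_set_total, current_total, current_per_participant, song_total)
# 1728 3456 432 1200
```

On this reading, each participant here completed 432 trials in total, compared with 80 per participant in Song et al. (2012).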

Acknowledgments

Thanks to Jim Tanaka for the use of his “JimStim” stimulus set.

References

- American Psychiatric Association (2000). . Washington, DC: Author.

- Anastasi J., & Rhodes M. (2005). An own-age bias in face recognition for children and older adults. , 12, 1043–1047. doi: 10.3758/BF03206441

- Behrmann M., & Avidan G. (2005). Congenital prosopagnosia: Face-blind from birth. , 9, 180–187. doi: 10.1016/j.tics.2005.02.011

- Behrmann M., Avidan G., Leonard G., Kimchi R., Luna B., Humphreys K., & Minshew N. (2006). Configural processing in autism and its relationship to face processing. , 44, 110–129. doi: 10.1016/j.neuropsychologia.2005.04.002

- Boraston Z., & Blakemore S. (2007). The application of eye-tracking technology in the study of autism. , 581, 893–898. doi: 10.1113/jphysiol.2007.133587

- Boucher J., Lewis V., & Collis G. (1998). Familiar face and voice matching and recognition in children with autism. , 39, 171–181. doi: 10.1111/jcpp.1998.39.issue-2

- Butler S., Blais C., Gosselin F., Bub D., & Fiset D. (2010). Recognising famous people. , 72, 1444–1449. doi: 10.3758/APP.72.6.1444

- Caldara R., Schyns P., Mayer E., Smith M., Gosselin F., & Rossion B. (2005). Does prosopagnosia take the eyes out of face representations? Evidence for a defect in representing diagnostic facial information following brain damage. , 17, 1652–1666. doi: 10.1162/089892905774597254

- Chevallier C., Parish-Morris J., McVey A., Rump K., Sasson N., Herrington J., & Schultz R. (2015). Measuring social attention and motivation in autism spectrum disorder using eye-tracking: Stimulus type matters. . doi: 10.1002/aur.1479

- Chien S., Wang L., Chen C., Chen T., & Chen H. (2014). Autistic children do not exhibit an own-race advantage as compared to typically developing children. , 8, 1544–1551. doi: 10.1016/j.rasd.2014.08.005

- Croydon A., Pimperton H., Ewing L., Duchaine B., & Pellicano E. (2014). The Cambridge Face Memory Test for Children (CFMT-C): A new tool for measuring face recognition skills in childhood. , 62, 60–67. doi: 10.1016/j.neuropsychologia.2014.07.008

- Duchaine B., & Nakayama K. (2006). The Cambridge face memory test: Results for neurologically intact individuals and an investigation of its utility using inverted face stimuli and prosopagnosic participants. , 44, 576–585. doi: 10.1016/j.neuropsychologia.2005.07.001

- Dunn L. M., Dunn D. M., Styles B., & Sewell J. (2009). (3rd ed.). London, UK: GL Assessment.

- Falck-Ytter T., Fernell E., Gillberg C., & von Hofsten C. (2010). Face scanning distinguishes social from communication impairments in autism. , 13, 864–875. doi: 10.1111/desc.2010.13.issue-6

- Falck-Ytter T., & von Hofsten C. (2011). How special is social looking in ASD: A review. , 189, 209–222.

- Gepner B., de Gelder B., & de Schonen S. (1996). Face processing in autistics: Evidence for a generalised deficit? , 2, 123–139. doi: 10.1080/09297049608401357

- Gosselin F., & Schyns P. G. (2001). Bubbles: A technique to reveal the use of information in recognition tasks. , 41(17), 2261–2271. doi: 10.1016/S0042-6989(01)00097-9

- Guillon Q., Hadjikhani N., Baduel S., & Rogè B. (2014). Visual social attention in autism spectrum disorder: Insights from eye tracking studies. , 42, 279–297. doi: 10.1016/j.neubiorev.2014.03.013

- Harel J., Koch C., & Perona P. (2007). Graph-Based Visual Saliency. In (pp. 545–552). Cambridge, MA: MIT Press.

- Harms M. B., Martin A., & Wallace G. L. (2010). Facial emotion recognition in autism spectrum disorders: A review of behavioral and neuroimaging studies. , 20(3), 290–322. doi: 10.1007/s11065-010-9138-6

- Hauck M., Fein M., Maltby N., Waterhouse L., & Feinstein C. (1998). Memory for faces in children with autism. , 4, 187–198. doi: 10.1076/chin.4.3.187.3174

- Hills P., & Lewis M. (2011). The own-age face recognition bias in children and adults. , 64, 17–23. doi: 10.1080/17470218.2010.537926

- Itier R., & Batty M. (2009). Neural bases of eye and gaze processing: The core of social cognition. , 33, 843–863. doi: 10.1016/j.neubiorev.2009.02.004

- Itti L., & Koch C. (2001). Computational modelling of visual attention. , 2(3), 194–203. doi: 10.1038/35058500

- Jones W., Carr K., & Klin A. (2008). Absence of preferential looking to the eyes of approaching adults predicts level of social disability in 2-year-old toddlers with autism spectrum disorder. , 65, 946. doi: 10.1001/archpsyc.65.8.946

- Joseph R., & Tanaka J. (2003). Holistic and part-based face recognition in children with autism. , 43, 1–14.

- Kenny L., Hattersley C., Molins B., Buckley C., Povey C., & Pellicano E. (2015). Which terms should be used to describe autism? Perspectives from the UK autism community. , 1–21. doi: 10.1177/1362361315588200

- Kirchner J., Hatri A., Heekeren H., & Dziobek I. (2011). Autistic symptomatology, face processing abilities, and eye fixation patterns. , 41, 158–167. doi: 10.1007/s10803-010-1032-9

- Klin A., Jones W., Schultz R., Volkmar F., & Cohen D. (2002). Visual fixation patterns during viewing of naturalistic social situations as predictors of social competence in individuals with autism. , 59, 809–816. doi: 10.1001/archpsyc.59.9.809

- Klin A., Sparrow S., De Bildt A., Cicchetti D., Cohen D., & Volkmar F. (1999). A normed study of face recognition in autism and related disorders. , 29, 499–508. doi: 10.1023/A:1022299920240

- Kylliäinen A., & Hietanen J. (2006). Skin conductance responses to another person’s gaze in children with autism. , 36, 517–525. doi: 10.1007/s10803-006-0091-4

- Langdell T. (1978). Recognition of faces: An approach to the study of autism. , 19, 255–268. doi: 10.1111/jcpp.1978.19.issue-3

- Meng M., Cherian T., Singal G., & Sinha P. (2012). Lateralisation of face processing in the human brain. , 279, 2052–2061.

- Nation K., & Penny S. (2008). Sensitivity to eye gaze in autism: Is it normal? Is it automatic? Is it social? , 20, 79–97. doi: 10.1017/S0954579408000047

- Neumann D., Spezio M., Piven J., & Adolphs R. (2006). Looking you in the mouth: Abnormal gaze in autism resulting from impaired top-down modulation of visual attention. , 1, 194–202. doi: 10.1093/scan/nsl030

- Norbury C., Brock J., Cragg L., Einav S., Griffiths H., & Nation K. (2009). Eye movements are associated with language competence in autism spectrum disorder. , 50, 834–842. doi: 10.1111/j.1469-7610.2009.02073.x

- Pelphrey K., Sasson N., Reznick S., Paul G., Goldman B., & Piven J. (2002). Visual scanning of faces in autism. , 32, 249–261. doi: 10.1023/A:1016374617369

- Peterson M., & Eckstein M. (2011). Fixating the eyes is an optimal strategy across important face (related) tasks. , 11, 662. doi: 10.1167/11.11.662

- Raven J., Raven J. C., & Court J. H. (1998). . Oxford, UK: Oxford Psychologists Press.

- Rhodes G. (1985). Lateralised processes in face recognition. , 76, 249–271. doi: 10.1111/j.2044-8295.1985.tb01949.x

- Robel L., Ennouri K., Piana H., Vaivre-Douret L., Perier A., Flament M., & Mouren-Siméoni M. (2004). Discrimination of face identities and expressions in children with autism: Same or different? , 13, 227–233. doi: 10.1007/s00787-004-0409-8

- Rutherford M., Clements K., & Sekuler A. (2007). Differences in discrimination of eye and mouth displacement in autism spectrum disorders. , 47, 2099–2110. doi: 10.1016/j.visres.2007.01.029

- Rutter M., Bailey A., Lord C., & Berument S. (2003). . Los Angeles, CA: Western Psychological Services.

- Scherf K., Behrmann M., Minshew N., & Luna B. (2008). Atypical development of face and greeble recognition in autism. , 49, 838–847. doi: 10.1111/jcpp.2008.49.issue-8

- Schwaninger A., Carbon C., & Leder H. (2003). (pp. 81–97). Cambridge, MA: Hogrefe & Huber.

- Schyns P., Bonnar L., & Gosselin F. (2002). Show me the features! Understanding recognition from the use of visual information. , 13, 402–409. doi: 10.1111/1467-9280.00472

- Schyns P., & Oliva A. (1999). Dr Angry and Mr Smile: When categorization flexibly modifies the perception of faces in rapid visual presentations. , 69, 243–265. doi: 10.1016/S0010-0277(98)00069-9

- Schyns P. G., Petro L. S., & Smith M. L. (2007). Dynamics of Visual Information Integration in the Brain for Categorizing Facial Expressions. , 17(18), 1580–1585.

- Schyns P. G., Petro L. S., & Smith M. L. (2009). Transmission of Facial Expressions of Emotion Co-Evolved with Their Efficient Decoding in the Brain: Behavioral and Brain Evidence. , 4(5), e5625.

- Senju A., & Johnson M. (2009). Atypical eye contact in autism: Models, mechanisms and development. , 33, 1204–1214. doi: 10.1016/j.neubiorev.2009.06.001

- Serra M., Althaus M., De Sonneville L., Stant A., Jackson A., & Minderaa R. (2003). Face recognition in children with a pervasive developmental disorder not otherwise specified. , 33, 303–317. doi: 10.1023/A:1024458618172

- Smith M. L., Cottrell G. W., Gosselin F., & Schyns P. G. (2005). Transmitting and decoding facial expressions. , 16(3), 184–189. doi: 10.1111/j.0956-7976.2005.00801.x

- Smith M. L., & Merlusca C. (2014). How task shapes the use of information during facial expression categorisations. , 14, 478–487. doi: 10.1037/a0035588

- Snow J., Ingeholm J., Levy I., Caravella R., Case L., Wallace G., & Martin A. (2011). Impaired visual scanning and memory for faces in high-functioning autism spectrum disorders: Its not just the eyes. , 17, 1021–1029. doi: 10.1017/S1355617711000981

- Song Y., Kawabe T., Hakoda Y., & Du X. (2012). Do the eyes have it? Extraction of identity and positive expression from another’s eyes in autism, proved using “Bubbles”. , 34, 584–590. doi: 10.1016/j.braindev.2011.09.009

- Spezio M., Adolphs R., Hurley R., & Piven J. (2006). Abnormal use of facial information in high-functioning autism. , 37, 929–939. doi: 10.1007/s10803-006-0232-9

- Spezio M., Adolphs R., Hurley R., & Piven J. (2007). Analysis of face gaze in autism using “Bubbles”. , 45, 144–151. doi: 10.1016/j.neuropsychologia.2006.04.027

- Tanaka J., & Sung A. (2013). The “eye avoidance” hypothesis of autism face processing. , 46, 1538–1552. doi: 10.1007/s10803-013-1976-7

- Tantam D., Monaghan L., Nicholson H., & Stirling J. (1989). Autistic children’s ability to interpret faces: A research note. , 30, 623–630. doi: 10.1111/jcpp.1989.30.issue-4

- Triesch J., Ballard D., Hayhoe M., & Sullivan B. (2003). What you see is what you need. , 3, 86–94. doi: 10.1167/3.1.9

- Turatto M., Angrilli A., Mazza V., Umità C., & Driver J. (2002). Looking without seeing the background change: Electrophysiological correlates of change detection versus change blindness. , 84, B1–B10. doi: 10.1016/S0010-0277(02)00016-1

- Wallace G., Coleman M., & Bailey A. (2008). Face and object processing in autism spectrum disorders. , 1, 43–51. doi: 10.1002/aur.7

- Weigelt S., Koldewyn K., & Kanwisher N. (2012). Face identity recognition in autism spectrum disorders: A review of behavioral studies. , 36, 1060–1084. doi: 10.1016/j.neubiorev.2011.12.008

- Wilson C. E., Palermo R., Burton A. M., & Brock J. (2011). Recognition of own- and other-race faces in autism spectrum disorders. , 64, 1939–1954. doi: 10.1080/17470218.2011.603052

- Wolf J., Tanaka J., Klaiman C., Cockburn J., Herlihy L., Brown C., … Schultz R. (2008). Specific impairment of face-processing abilities in children with autism spectrum disorder using the Let’s Face It! Skills Battery. , 1, 329–340. doi: 10.1002/aur.56

- Xivry J. J. O., Ramon M., Lefevre P., & Rossion B. (2008). Reduced fixation on the upper area of personally familiar faces following acquired prosopagnosia. , 2(1), 245–268.

- Yardley L., McDermott L., Pisarski S., Duchaine B., & Nakayama K. (2008). Psychosocial consequences of developmental prosopagnosia: A problem of recognition. , 65, 445–451. doi: 10.1016/j.jpsychores.2008.03.013

- Yi L., Quinn P., Feng C., Li J., Ding H., & Lee K. (2015). Do individuals with autism spectrum disorder process own- and other-race faces differently? , 107, 124–132. doi: 10.1016/j.visres.2014.11.021