Abstract

Background

Patient decision aids (PDAs) are evidence-based tools designed to help patients make specific and deliberated choices among healthcare options. Review papers from the International Patient Decision Aid Standards (IPDAS) Collaboration and the Cochrane systematic review of PDAs have found significant gaps in the reporting of evaluations of PDAs, including poor or limited reporting of PDA content, development methods and delivery. This study sought to develop and reach consensus on reporting guidelines to improve the quality of publications evaluating PDAs.

Methods

An international workgroup, consisting of members from IPDAS Collaboration, followed established methods to develop reporting guidelines for PDA evaluation studies. This paper describes the results from three completed phases: (1) planning, (2) drafting and (3) consensus, which included a modified, two-stage, online international Delphi process. The work was conducted over 2 years with bimonthly conference calls and three in-person meetings. The workgroup used input from these phases to produce a final set of recommended items in the form of a checklist.

Results

The SUNDAE Checklist (Standards for UNiversal reporting of patient Decision Aid Evaluations) includes 26 items recommended for studies reporting evaluations of PDAs. In the two-stage Delphi process, 117/143 (82%) experts from 14 countries completed round 1 and 96/117 (82%) completed round 2. Respondents reached a high level of consensus on the importance of the items and indicated strong willingness to use the items when reporting PDA studies.

Conclusion

The SUNDAE Checklist will help ensure that reports of PDA evaluation studies are understandable, transparent and of high quality. A separate Explanation and Elaboration publication provides additional details to support use of the checklist.

Keywords: shared decision making, patient-centred care, checklists, patient education

Introduction

Patient decision aids (PDAs) are evidence-based interventions designed to help people make informed and deliberated choices among healthcare options.1 2 At a minimum, PDAs provide accurate and unbiased information on options and relevant outcomes, help patients clarify their values and treatment preferences, and provide guidance in steps of decision making and deliberation.3 4

The number and types of PDAs being developed, tested and reported in the literature have expanded considerably. The latest published update of the Cochrane systematic review of PDAs included 105 randomised trials.3 Compared with usual care, using PDAs improves patients’ knowledge of and expectations about healthcare outcomes, reduces decisional conflict, increases decision quality and increases patient participation in decision making.3 The International Patient Decision Aid Standards (IPDAS) Collaboration has synthesised evidence across professional and disciplinary domains to develop quality standards for PDA development. In 2013, the IPDAS Collaboration published 12 papers updating the definitions, evidence and emerging research areas for all quality dimensions.5–16

The evidence base for PDA effectiveness relies on accurate, complete and high-quality reports of evaluation studies. Despite the availability of reporting guidelines for a wide range of methodologies and interventions, investigators involved in the latest update of IPDAS and Cochrane reviews identified pervasive and significant gaps in published PDA evaluation studies. The problems included poor or limited reporting of the PDA content, development methods, delivery and evaluation methods.9 12 17 For example, the description of PDAs was often so limited that Cochrane reviewers had to obtain copies of the PDAs to determine their content and elements (eg, benefits and harms, patient stories, probabilities).18 Further, a systematic review of measures used in PDA evaluations found that the description of the outcome measures used was often inadequate, particularly for the core outcomes related to decision quality and decision-making process outcomes.17

Without comprehensive and clear reporting of the PDA content and evaluation methods, there is limited evidence to understand which PDAs work and in what context. To address these gaps, an international workgroup was formed to develop and reach consensus on a set of reporting guidelines to improve the quality of manuscripts describing PDA evaluation studies.

Methods

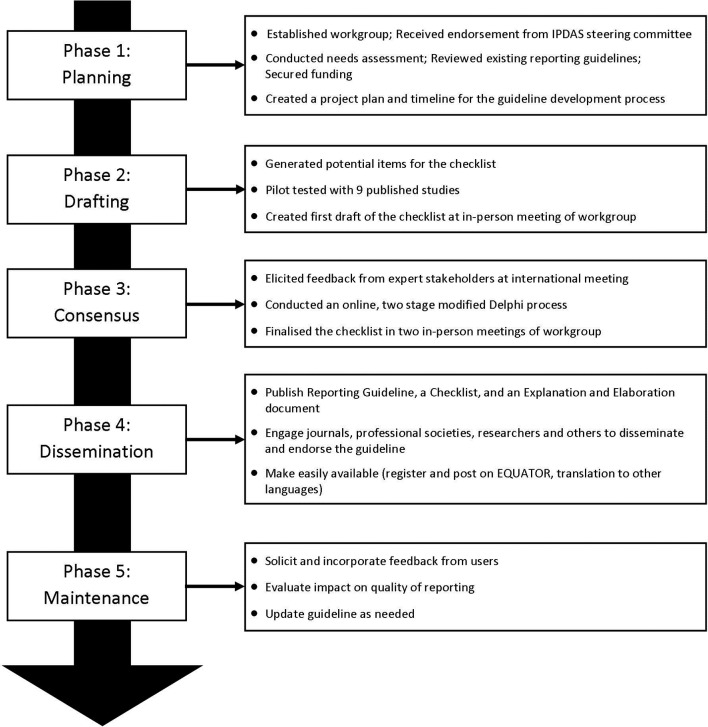

The development of the SUNDAE Checklist, or Standards for UNiversal reporting of patient Decision Aid Evaluations, followed the recommended process for developing and disseminating reporting guidelines put forth by EQUATOR (Enhancing the QUAlity and Transparency Of health Research).19 20 The development process has five phases: (1) planning phase, (2) drafting phase, (3) consensus phase, (4) dissemination phase and (5) maintenance phase (see figure 1). The first three phases are complete and reported in this manuscript. The phases are listed generally in the order in which they were initiated; however, the process was iterative within and between phases rather than purely sequential.

Figure 1.

SUNDAE guideline development process to date. EQUATOR, Enhancing the QUAlity and Transparency Of health Research; IPDAS, International Patient Decision Aid Standards; SUNDAE, Standards for UNiversal reporting of patient Decision Aid Evaluations.

Planning phase

The purpose of this phase was to secure resources, conduct a needs assessment, review existing guidelines, and set a timeline and scope for the project. A reporting guideline workgroup (IPDAS-RG), led by KRS and RT, was set up to facilitate the guideline development. The cochairs received support and endorsement from the IPDAS Steering Committee, and then invited authors from each of the 12 IPDAS chapters to join the workgroup. Fourteen experts in PDA research representing a range of countries and disciplines (medicine, nursing, psychology, decision science and health services research) agreed to participate in the IPDAS-RG. The workgroup communicated through regular conference calls and emails, and held three in-person meetings to enable discussion and debate over the course of the project.

The IPDAS-RG conducted a needs assessment to characterise the scope and range of issues that might be addressed with improved reporting of PDA evaluation studies. Workgroup members examined each of the 12 IPDAS core dimensions, and summarised gaps in current reporting, the significance of the problem, evidence supporting the inclusion of specific items on PDA evaluations and the challenges to full reporting. We grouped gaps into major categories (eg, terminology, decision aid components, research methods) and counted the number of chapters documenting a reporting gap in those categories.

Next, the workgroup reviewed and catalogued existing guidelines to determine whether the gaps could be addressed in full or in part by existing reporting guidelines. Relevant guidelines included CONSORT (CONsolidated Standards of Reporting Trials) and its extensions,21 TREND (Transparent Reporting of Evaluations with Nonrandomized Designs),22 STROBE (Strengthening The Reporting of Observational Studies in Epidemiology),23 TIDieR (Template for Intervention Description and Replication),24 CReDECI (Criteria for Reporting the Development and Evaluation of Complex Interventions in healthcare),25 SQUIRE (Standards for Quality Improvement Reporting Excellence),26 and STaRI (Standards for Reporting Implementation studies of complex interventions).27

The group prioritised issues for inclusion in the guidelines when (1) there was evidence that the item, or limited reporting of the item, affected outcomes and/or interpretation of decision aid evaluation results; (2) inconsistent or limited reporting hindered the conduct of meta-analyses and/or systematic reviews to generate evidence; or (3) reporting was inconsistent or limited despite the item being included in existing reporting guidelines. The workgroup also agreed that the new guidelines would avoid overlap with other guidelines when possible, use language consistent with existing guidelines where items are similar, and focus on reporting studies of PDA evaluation. Although some of the items may be relevant for studies describing development or implementation of PDAs, the focus is on evaluation studies. Once the need for reporting guidelines was clarified, the workgroup submitted proposals to governmental agencies and foundations to secure funding for the work.

Drafting phase

The goal of this phase was to generate, test and refine an initial list of items to be included in the SUNDAE Checklist. As shown in figure 1, this phase had three steps. First, the workgroup used the findings from its needs assessment and review of existing guidelines to draft emerging items. Second, the workgroup came together in the first in-person meeting to review and comment on the items. For each item, the workgroup discussed its importance, relevance, overlap with existing guidelines and the feasibility of reporting the requested information. Two outside experts, the lead authors of the SQUIRE and TIDieR reporting guidelines, also attended the meeting and provided advice on guideline development methodology and process. The meeting resulted in consensus on the scope and purpose of the guideline, a draft of 83 potential items to be included, a clear plan for next steps and allocation of tasks.

Third, IPDAS-RG members pilot-tested the draft of the 83 checklist items on nine published manuscripts of PDA evaluation studies. The selected publications were authored by the workgroup members in order to enable assessment of whether the information was available but not reported, or not available. Each article had two reviewers, the article’s author and an independent reviewer. The reviewers assessed whether and where each proposed item was reported and the ease of finding each item. If items were not present, reviewers assessed whether the information could feasibly be reported in the manuscript. Results of the pilot study were discussed among the workgroup, and revisions were made to the items to improve clarity and feasibility.

Consensus phase

In this phase, we engaged a wide range of experts to review and comment on the draft items for the SUNDAE Checklist. First, we conducted a 90 min workshop at the International Shared Decision Making Conference (July 2015) to test the draft items, explore areas of disagreement and address any gaps. Attendees raised several issues that the IPDAS-RG had also discussed, including the potential overlap with existing guidelines, feasibility of including the information in an evaluation paper, potential overlap with work being done on developing guidelines for PDA development process28 and relationship to IPDAS.

Second, we conducted a two-stage modified Delphi survey online with a wider network of international stakeholders to help prioritise items and build consensus around the checklist.15 17 We recruited expert stakeholders worldwide, including (1) researchers who have published or planned to publish evaluation studies of PDAs, (2) clinicians and administrators who read and appraise PDA studies, (3) patient/consumer advocates, (4) journal editors, (5) funders of PDA research and (6) other reporting guideline developers. We sent invitations through the IPDAS Collaboration group, the Shared Decision Making Facebook page and listserv, and the Society for Medical Decision Making newsletter, and workgroup members also contacted experts directly. We also used a snowball recruitment method to increase the respondent base by asking potential participants to forward the invitation to their colleagues. We sent a link to the survey to anyone who expressed interest. We calculated the response rate for each round by dividing the number of people who completed the survey by the number who were sent the survey link.
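A minimal sketch of the response-rate arithmetic, assuming (as the reported figures 117/143 and 96/117 imply) that the rate is the number of completers divided by the number of people who were sent the survey link. The helper name `response_rate` is hypothetical and is not part of the SUNDAE materials.

```python
def response_rate(completed: int, sent: int) -> float:
    """Percentage of people sent the survey link who completed the survey.

    Hypothetical helper for illustration only.
    """
    if sent <= 0:
        raise ValueError("sent must be a positive count")
    if not 0 <= completed <= sent:
        raise ValueError("completed must lie between 0 and sent")
    return 100 * completed / sent


# Round 1 was sent to 143 people; round 2 only to the 117 round 1 completers.
round1 = response_rate(completed=117, sent=143)
round2 = response_rate(completed=96, sent=117)
print(f"Round 1: {round1:.0f}%, round 2: {round2:.0f}%")  # Round 1: 82%, round 2: 82%
```

Because round 2 was sent only to round 1 completers, its denominator is 117 rather than 143.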

For the Delphi process, draft items were rated on a 9-point scale. The a priori rule of ‘disagreement’ was defined as having 30% or more responses in the lowest tertile (1–3) and 30% or more in the highest tertile (7–9). Because none of the items met the a priori threshold for disagreement, the workgroup flagged items that received less than 80% of importance ratings in the highest tertile (7–9) for review and discussion.29 Open-ended comments were reviewed and used to help interpret scores and revise items. In addition, we considered the evidence that the items impacted outcomes, the importance of the items for meta-analyses and/or systematic reviews and, for items that overlapped with other checklists, the importance of including the items due to misreporting or poor reporting in the existing literature. We considered weighting responses by stakeholder group, but decided not to because the majority of participants (82%) indicated considerable expertise in two or more areas, making it difficult to assign participants to one stakeholder group. We also examined whether responses of the IPDAS-RG workgroup respondents differed from others.
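The two rating rules above can be sketched as follows: the a priori disagreement rule (at least 30% of ratings in 1–3 and at least 30% in 7–9) is checked first, and any remaining item with less than 80% of ratings in 7–9 is flagged for review. The function names and the ten-respondent ratings are hypothetical illustrations, not study data; exact fractions are used so that boundary cases such as exactly 30% are not affected by floating-point rounding.

```python
from fractions import Fraction
from typing import Sequence

# Thresholds as described in the text; the constant names are hypothetical.
DISAGREEMENT_SHARE = Fraction(3, 10)  # >=30% in 1-3 AND >=30% in 7-9
REVIEW_SHARE = Fraction(4, 5)         # items with <80% in 7-9 are flagged


def tertile_shares(ratings: Sequence[int]) -> tuple[Fraction, Fraction]:
    """Exact share of 1-9 ratings in the lowest (1-3) and highest (7-9) tertiles."""
    if not ratings:
        raise ValueError("need at least one rating")
    if any(r < 1 or r > 9 for r in ratings):
        raise ValueError("ratings must lie on the 1-9 scale")
    n = len(ratings)
    low = Fraction(sum(1 for r in ratings if r <= 3), n)
    high = Fraction(sum(1 for r in ratings if r >= 7), n)
    return low, high


def classify_item(ratings: Sequence[int]) -> str:
    """Apply the a priori disagreement rule first, then the 80% review flag."""
    low, high = tertile_shares(ratings)
    if low >= DISAGREEMENT_SHARE and high >= DISAGREEMENT_SHARE:
        return "disagreement"
    if high < REVIEW_SHARE:
        return "flag for review"
    return "not flagged"


# Invented importance ratings from ten hypothetical respondents.
examples = {
    "item A": [8, 9, 7, 9, 8, 7, 9, 8, 6, 9],  # 90% in 7-9
    "item B": [7, 8, 5, 6, 9, 4, 8, 7, 6, 9],  # 60% in 7-9, none in 1-3
    "item C": [1, 2, 3, 8, 9, 7, 2, 8, 5, 9],  # 40% in 1-3, 50% in 7-9
}
for name, ratings in examples.items():
    print(name, classify_item(ratings))
# item A not flagged
# item B flag for review
# item C disagreement
```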

In round 1 of the Delphi, participants rated the importance (1=not at all, 9=extremely) and the clarity of wording (1=not at all, 9=completely) for 51 items. For their ratings, participants were encouraged to consider whether the interpretation, analysis or appraisal of the paper would be adversely affected if the information was not included in the manuscript. We also encouraged participants to provide open-ended comments on each item and at the end of the survey. At the second in-person meeting (March 2016), the IPDAS-RG workgroup reviewed the round 1 data and revised the items for round 2.

In round 2, participants were provided feedback from round 1 and asked to rate the revised 31 items on the same 9-point importance scale and a 9-point essential scale (1=not at all, 9=absolutely). The essential scale was introduced in an attempt to spread responses and differentiate between items that should remain in the checklist and those that might be considered for removal. Open-ended comments were invited. In round 2, the survey also elicited participants’ perceptions of the checklist, including their intention to use the ‘reporting checklist’, with five statements rated on a 5-point scale ranging from 1=strongly agree to 5=strongly disagree. In the final in-person meeting (July 2016), the workgroup reviewed the data from round 2, discussed the ratings and comments on individual items, and finalised the SUNDAE Checklist.

Results

Planning phase

Reporting gaps identified by IPDAS chapters included lack of information about the PDA and its components (n=7 chapters); lack of information about the PDA development process (n=4); lack of information about implementation and sustainability (n=3); lack of clarity of terminology (n=2); and lack of information about measurement properties (n=1).

The review of relevant reporting guidelines found that CONSORT, STROBE and TREND focused primarily on issues that would help readers understand the overall study rationale and design, issues of bias, and results and their implications. TIDieR and CReDECI focused on intervention characteristics, delivery and use. Finally, SQUIRE and STaRI addressed some of these same issues and additionally focused on intervention adaptation, delivery fidelity and potential financial conflicts. None of the existing reporting guidelines addressed how an intervention might be accessed, key features of intervention content and design relevant to the efficacy of decision aids, or a full set of methods to understand how and why the intervention works.

Drafting phase

The workgroup generated a list of potential items and piloted the draft checklist on nine manuscripts. After this pilot, the workgroup removed, reworded and grouped items to reduce the number, and clarified the wording and organisation of items. Workgroup members then developed a brief rationale explaining each item, summarising evidence underlying the need for each item and outlining how accuracy and completeness of reporting support systematic reviews of the evidence for PDAs. The resulting draft checklist had 51 items and was used in round 1 of the Delphi process.

Consensus phase

In the Delphi process, 117/143 (82%) invited participants completed round 1. Round 2 was sent to all those who completed round 1. The majority, 96/117 (82%), completed round 2. Respondents represented 14 different countries, with about half from the USA (table 1). No item met the a priori definition for disagreement in either round. In round 1, 32/51 (63%) items had ≥80% of the importance ratings in the highest tertile (scores of 7–9), 17/51 (33%) items had 60%–79% of ratings in the highest tertile, and 2/51 (4%) items had 50%–59% of ratings in the highest tertile. Participant comments revealed concerns about lack of clarity of some items, the length of the checklist, redundancy among items within the checklist and redundancy of items with other checklists.

Table 1.

Two-stage modified Delphi process participant characteristics*

| Characteristics | Round 1 (n=117)† | Round 2 (n=96)† |
| --- | --- | --- |
| Age (years) | 48±12 | 49±12 |
| Sex | | |
| Female | 76 (65%) | 63 (66%) |
| Male | 39 (33%) | 32 (33%) |
| Missing | 2 (2%) | 1 (1%) |
| Country of residence | | |
| USA | 60 (51%) | 50 (52%) |
| Canada | 20 (17%) | 14 (15%) |
| UK | 17 (14%) | 13 (14%) |
| The Netherlands | 5 (4%) | 5 (5%) |
| Australia, Costa Rica, Denmark, Germany, Italy, Jordan, Romania, Spain, Sweden (<5 each) | 13 (11%) | 12 (12%) |
| Unknown | 2 (2%) | 2 (2%) |
| Expertise (respondents could check more than one) | | |
| Decision aid researcher, author, developer | 86 (74%) | NA |
| Clinician, healthcare administration, decision aid user | 56 (48%) | NA |
| Patient or consumer representative | 4 (3%) | NA |
| Journal editor or funder | 36 (31%) | NA |
| Guideline developer | 9 (8%) | NA |
| Number of publications | | |
| 0–1 | 15 (13%) | 12 (12%) |
| 2–4 | 23 (20%) | 17 (18%) |
| 5+ | 79 (67%) | 67 (70%) |

*Table values are mean±SD for continuous variables and n (column %) for categorical variables.

†Numbers may not sum to total due to missing data, and percentages may not sum to 100% due to rounding.

NA, not available.

The IPDAS-RG workgroup respondents (n=12) had similar scores to others (n=105). For the majority of individual items, 44/51 (86%), the median importance scores were similar (±0.5 points), and as a result we did not separate the workgroup responses from the rest of the respondents. After detailed discussion of each item, the revised checklist had 31 items.
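The workgroup-versus-others comparison can be sketched as counting the items whose two group medians differ by no more than 0.5 points. Treating a difference of exactly 0.5 as "similar" is an assumption, and the function names and ratings below are hypothetical illustrations rather than study data.

```python
from statistics import median
from typing import Mapping, Sequence


def medians_similar(a: Sequence[float], b: Sequence[float], tolerance: float = 0.5) -> bool:
    """True when the two groups' median ratings differ by at most `tolerance` (assumed inclusive)."""
    return abs(median(a) - median(b)) <= tolerance


def count_similar(items: Mapping[str, tuple[Sequence[float], Sequence[float]]],
                  tolerance: float = 0.5) -> tuple[int, int]:
    """Return (number of items with similar medians, total number of items)."""
    similar = sum(medians_similar(wg, others, tolerance) for wg, others in items.values())
    return similar, len(items)


# Invented (workgroup, other respondents) importance ratings for three items.
items = {
    "item A": ([8, 9, 8], [7, 8, 8, 9]),  # medians 8 vs 8.0
    "item B": ([9, 9, 8], [6, 7, 7, 8]),  # medians 9 vs 7.0
    "item C": ([7, 8], [7, 8, 8]),        # medians 7.5 vs 8
}
similar, total = count_similar(items)
print(f"{similar}/{total} items with medians within ±0.5 points")  # 2/3 items ...
```

Applied to the 51 round 1 items, the same count is what the text reports as 44/51.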

The ratings from round 2 indicated even higher consensus as 24/31 (77%) items had ≥80% of importance ratings in the highest tertile (7–9) and 7/31 (23%) had 60%–79% of importance ratings in the highest tertile. There were fewer open-ended comments in round 2. Many comments were positive about the reduced length and clarity of items. Only a few raised concerns about redundancy. Table 2 presents the finalised SUNDAE Checklist with 26 items, and an accompanying glossary is included as an online supplementary appendix.

Table 2.

SUNDAE Checklist

| Section | SUNDAE Checklist for evaluation studies of patient decision aids |
| --- | --- |
| Title/abstract | |
| 1. | Use the term patient decision aid in the abstract to identify the intervention evaluated and, if possible, in the title. |
| 2. | In the abstract, identify the main outcomes used to evaluate the patient decision aid. |
| Introduction | As part of standard introduction (the problem, gaps, purpose): |
| 3. | Describe the decision that is the focus of the patient decision aid. |
| 4. | Describe the intended user(s) of the patient decision aid. |
| 5. | Summarise the need for the patient decision aid under evaluation. |
| 6. | Describe the purpose of the evaluation study with respect to the patient decision aid. |
| Methods | Studies with a comparator should also address items 7–13 for the comparator, if possible. |
| 7. | Briefly describe the development process for the patient decision aid (and any comparator), or cite other documents that describe the process. At a minimum include the following: |
| 8. | Identify the patient decision aid evaluated in the study (and any comparator) by including: |
| 9. | Describe the format(s) of the patient decision aid (and any comparator) (eg, paper, online, video). |
| 10. | List the options presented in the patient decision aid (and any comparator). |
| 11. | Indicate the components in the patient decision aid (and any comparator) including: |
| 12. | Briefly describe the components from item 11 that are included in the patient decision aid (and any comparator) or cite other documents that describe the components. |
| 13. | Describe the delivery of the patient decision aid (and any comparator) including: |
| 14. | Describe any methods used to assess the degree to which the patient decision aid was delivered and used as intended (also known as fidelity). |
| 15. | Describe any methods used to understand how and why the patient decision aid works (also known as process evaluation) or cite other documents that describe the methods. |
| 16. | Identify theories, models or frameworks used to guide the design of the evaluation and selection of study measures. |
| 17. | For all study measures used to assess the impact of the patient decision aid on patients, health professionals, organisation, and health system: |
| 18. | For any instruments used: |
| Results | In addition to standard reporting of results: |
| 19. | Describe the characteristics of the patient, family and carer population(s) (eg, health literacy, numeracy, prior experience with treatment options) that may affect patient decision aid outcomes. |
| 20. | Describe any characteristics of the participating health professionals (eg, relevant training, usual care vs study professional, role in decision-making) that may affect decision aid outcomes. |
| 21. | Report any results on the use of the patient decision aid: |
| 22. | Report relevant results of any analyses conducted to understand how and why the patient decision aid works (also known as process evaluation). |
| 23. | Report any unanticipated positive or negative consequences of the patient decision aid. |
| Discussion | As part of the standard discussion section (summary of key findings, interpretation, limitations and conclusion): |
| 24. | Discuss whether the patient decision aid worked as intended and interpret the results taking into account the specific context of the study including any process evaluation. |
| 25. | Discuss any implications of the results for patient decision aid development, research, implementation, and theory, frameworks or models. |
| Conflict of interest | |
| 26. | All study authors should disclose if they have an interest (professional, financial or intellectual) in any of the options included in the patient decision aid or a financial interest in the decision aid itself. |

For any questions or comments on the SUNDAE Checklist 2017, please email decisions@partners.org.

*These components are needed to meet the definition of a patient decision aid.

SUNDAE, Standards for UNiversal reporting of patient Decision Aid Evaluations.

Online supplementary appendix (glossary): bmjqs-2017-006986supp001.pdf

Willingness to use checklist

Virtually all respondents, 89/91 (98%), agreed that the checklist would improve the reporting of PDA studies, and 88/91 (97%) agreed they would recommend it to colleagues or students. Nearly all indicated high intentions to use it when preparing manuscripts (80/82, 98%), designing studies (75/78, 96%) or supporting peer review of manuscripts (79/84, 94%).

Discussion

Key findings

The reporting guidelines included in the SUNDAE Checklist are the result of a rigorous, iterative development process and are supported by substantial international consensus. While some of the items were included due to a strong evidence base linking their reporting to improved outcomes or reduced reporting bias, others were included to support systematic reviews and meta-analyses in efforts to advance the evidence base. Some items included in other reporting guidelines were adopted because they were specific to PDA evaluation studies and reflected factors likely to impact PDA effectiveness. The final guidelines reflect both evidence-based and expert consensus-based items to improve the reporting of evaluation studies of PDAs.

Purpose and scope

The purpose of SUNDAE is to improve the transparency, quality and completeness of reporting evaluation studies of PDAs. Evaluation studies may include efficacy studies, comparative effectiveness studies or studies testing components of PDAs with a range of study designs. The checklist is not designed for manuscripts where the sole purpose is reporting the development or the implementation of PDAs, nor the results of systematic reviews or meta-analyses of PDA studies. The checklist covers any decision support intervention that meets the definition of PDA adopted by the Cochrane systematic review of PDAs or the IPDAS Collaboration,2 3 including decision aids designed for use during the consultation with the healthcare provider.

Using the SUNDAE guidelines

The SUNDAE guidelines are intended to be used by researchers who are preparing to report a PDA evaluation study. As with other reporting guidelines, researchers may also find it helpful to review the guidelines when designing the study protocol, to ensure that the information needed for the recommended items is collected and can therefore be reported. Researchers are encouraged to review item 11 (table 2), which covers the components of the decision aid, because the intervention should meet the definition of a decision aid used in the Cochrane systematic review.3 The guidelines represent recommendations for reporting, and there may be editorial and other constraints that limit authors’ ability to include all items in a paper. To overcome this challenge, researchers may reference other publications or sources if the relevant material is available elsewhere. Further, researchers are encouraged to consider using supplementary materials to maintain manuscript brevity while providing access to important details (eg, an online table describing key features of the decision aid and how it was developed). The accompanying Explanation and Elaboration (E&E) document provides published examples of how authors have included this information in a parsimonious manner.30

Relationship to other guidelines

Over recent years there has been a major international effort to develop reporting guidelines, and this work has led to the creation of the EQUATOR network.19 20 The SUNDAE Checklist is registered on EQUATOR and was carefully designed to minimise repetition of items that are standard in other reporting checklists (eg, it does not include items that recommend reporting details of main findings/primary outcomes). Therefore, the SUNDAE Checklist is intended to supplement other reporting guidelines, not serve as a stand-alone checklist. Authors are expected to consult other relevant reporting guidelines as needed (eg, CONSORT for randomised controlled trials, TREND for non-randomised trials of behavioural interventions, STROBE for cohort or case–control studies).21–23 Researchers preparing publications evaluating PDAs may also find it helpful to review guidelines for describing complex interventions (eg, TIDieR or CReDECI).24 25

Achieving consensus in multidisciplinary community

Research in PDAs exemplifies interdisciplinary applied science. The development, evaluation and implementation of PDAs draw on theories, knowledge and methods from several academic and professional disciplines, including, but not limited to, medicine, nursing, psychology, decision science, health informatics, implementation science and health services research. The workgroup members came from varied disciplines, as did the Delphi respondents, and the items with the most varied ratings in the Delphi often concerned topics on which disciplinary approaches differ (eg, item 15 on process evaluation and item 16 on the use of theories, frameworks or models). The workgroup is eager to learn from users to continue to refine and advance the items, with a view to future modification.

Strengths and limitations

The key strengths of SUNDAE include the detailed and rigorous development process, the multidisciplinary and international IPDAS-RG workgroup, and the consistently high ratings of items across a large international group of experts. There are some limitations, as with most reporting guidelines. Although there was wide engagement from international researchers in the Delphi process, the majority (80%) were from the USA, Canada and the UK, in part reflecting the leading countries involved to date in the development and evaluation of PDAs. There was limited input from consumers. As with other reporting guidelines, many of the guideline items are expert consensus-based, but this process drew on wide input from stakeholders with appropriate expertise.

Future work

The two remaining phases include (4) dissemination and (5) maintenance (figure 1). As part of the dissemination efforts, the SUNDAE Checklist will be available on multiple websites (including EQUATOR, IPDAS hosted by Ottawa Hospital Research Institute/University of Ottawa, Health Decision Sciences Center hosted by Massachusetts General Hospital, the US Agency for Healthcare Research and Quality, and the UK Health Foundation), and this guideline paper and the accompanying E&E will be open-access publications.

The IPDAS-RG encourages colleagues to use the checklist and to send the corresponding authors feedback on their experience. Those planning evaluation studies of PDAs may find the checklist valuable as they are designing the study (as is the case with other reporting guidelines). Journal editors may consider using the checklist to provide guidance for authors and reviewers. The IPDAS-RG workgroup plans to gather feedback on the feasibility and use of the checklist and will reconvene in 2 years to assess the need for revision and updates.

The SUNDAE Checklist and the guidance in the accompanying E&E document will help to improve reporting of PDA evaluation studies. Clear, comprehensive and consistent reporting of evaluation studies will contribute to the evidence base and advance the collective understanding of the most effective ways to improve the quality of healthcare decisions.

Acknowledgments

We want to acknowledge the support of the IPDAS Steering Committee and chapter participants; the many participants in the Delphi process for their time and invaluable contributions; Greg Ogrinc and Tammy Hoffmann for advice on methodology and development process; and Sarah Ivan for project support.

Footnotes

Contributors: The writing team was led by KRS and RT and included PA and ASH. All authors were involved in the data acquisition, analysis and interpretation of data, and drafting and critical revision of the manuscript. All authors except HV were involved in the study design. Each has provided final approval of the version submitted, and the lead authors KRS and RT are accountable for the accuracy and integrity of the work presented.

Funding: The in-person workgroup meetings were supported through grants from the UK’s Health Foundation (grant # 7444, RT) and the Agency for Healthcare Research and Quality’s small conference grant (1R13HS024250-01, KRS). ASH is funded by the shared decision-making collaborative of the Duncan Family Institute for Cancer Prevention and Risk Assessment at the University of Texas MD Anderson Cancer Center.

Competing interests: KRS received salary support as a scientific advisory board member for the Informed Medical Decisions Foundation, which was part of Healthwise, a not-for-profit organisation that develops patient decision aids, from April 2014 to April 2017. CAL was employed by Healthwise from April 2014 to November 2016. VAS received personal fees from Merck Pharmaceuticals. During the last 36 months, SLS has received funding from the Agency for Healthcare Research and Quality for a scoping review to identify a research agenda on shared decision making and high value care. During this time, she also completed unfunded research or papers on patient decision aid evaluations and developed the reaching for high value care toolkit, a toolkit of evidence briefs and resources on patient-centred high value care for all levels of system leaders. As part of those efforts and efforts on the current manuscripts, SLS has developed a series of research resources on reporting research. She is considering the potential benefits and harms of pursuing intellectual property protection for some of these efforts, but has not initiated these to date.

Provenance and peer review: Not commissioned; externally peer reviewed.

References

- 1. Elwyn G, O’Connor A, Stacey D, et al. Developing a quality criteria framework for patient decision aids: online international Delphi consensus process. BMJ 2006;333:417. doi:10.1136/bmj.38926.629329.AE

- 2. International Patient Decision Aid Standards (IPDAS) Collaboration. In: O’Connor A, Llewellyn-Thomas H, Stacey D, eds. IPDAS Collaboration Background Document. 2005 (accessed 8 Feb 2016).

- 3. Stacey D, Légaré F, Lewis K, et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst Rev 2017;4:CD001431. doi:10.1002/14651858.CD001431.pub5

- 4. Joseph-Williams N, Newcombe R, Politi M, et al. Toward minimum standards for certifying patient decision aids: a modified Delphi consensus process. Med Decis Making 2014;34:699–710. doi:10.1177/0272989X13501721

- 5. Coulter A, Stilwell D, Kryworuchko J, et al. A systematic development process for patient decision aids. BMC Med Inform Decis Mak 2013;13:S2. doi:10.1186/1472-6947-13-S2-S2

- 6. Barry MJ, Chan E, Moulton B, et al. Disclosing conflicts of interest in patient decision aids. BMC Med Inform Decis Mak 2013;13:S3. doi:10.1186/1472-6947-13-S2-S3

- 7. Feldman-Stewart D, O’Brien MA, Clayman ML, et al. Providing information about options in patient decision aids. BMC Med Inform Decis Mak 2013;13:S4. doi:10.1186/1472-6947-13-S2-S4

- 8. Montori VM, LeBlanc A, Buchholz A, et al. Basing information on comprehensive, critically appraised, and up-to-date syntheses of the scientific evidence: a quality dimension of the International Patient Decision Aid Standards. BMC Med Inform Decis Mak 2013;13:S5. doi:10.1186/1472-6947-13-S2-S5

- 9. Abhyankar P, Volk RJ, Blumenthal-Barby J, et al. Balancing the presentation of information and options in patient decision aids: an updated review. BMC Med Inform Decis Mak 2013;13:S6. doi:10.1186/1472-6947-13-S2-S6

- 10. Trevena LJ, Zikmund-Fisher BJ, Edwards A, et al. Presenting quantitative information about decision outcomes: a risk communication primer for patient decision aid developers. BMC Med Inform Decis Mak 2013;13:S7. doi:10.1186/1472-6947-13-S2-S7

- 11. Fagerlin A, Pignone M, Abhyankar P, et al. Clarifying values: an updated review. BMC Med Inform Decis Mak 2013;13:S8. doi:10.1186/1472-6947-13-S2-S8

- 12. Bekker HL, Winterbottom AE, Butow P, et al. Do personal stories make patient decision aids more effective? A critical review of theory and evidence. BMC Med Inform Decis Mak 2013;13:S9. doi:10.1186/1472-6947-13-S2-S9

- 13. McCaffery KJ, Holmes-Rovner M, Smith SK, et al. Addressing health literacy in patient decision aids. BMC Med Inform Decis Mak 2013;13:S10. doi:10.1186/1472-6947-13-S2-S10

- 14. Stacey D, Kryworuchko J, Belkora J, et al. Coaching and guidance with patient decision aids: a review of theoretical and empirical evidence. BMC Med Inform Decis Mak 2013;13:S11. doi:10.1186/1472-6947-13-S2-S11

- 15. Sepucha KR, Borkhoff CM, Lally J, et al. Establishing the effectiveness of patient decision aids: key constructs and measurement instruments. BMC Med Inform Decis Mak 2013;13:S12. doi:10.1186/1472-6947-13-S2-S12

- 16. Hoffman AS, Volk RJ, Saarimaki A, et al. Delivering patient decision aids on the Internet: definitions, theories, current evidence, and emerging research areas. BMC Med Inform Decis Mak 2013;13:S13. doi:10.1186/1472-6947-13-S2-S13

- 17. Sepucha KR, Matlock DD, Wills CE, et al. ‘It’s Valid and Reliable’ Is Not Enough. Med Decis Making 2014;34:560–6. doi:10.1177/0272989X14528381

- 18. Lewis KB, Wood B, Sepucha KR, et al. Quality of reporting of patient decision aids in recent randomized controlled trials: A descriptive synthesis and comparative analysis. Patient Educ Couns 2017;100:1387–93. doi:10.1016/j.pec.2017.02.021

- 19. EQUATOR Network. Enhancing the quality and transparency of health research. http://www.equator-network.org/toolkits/developers/ (accessed 8 Feb 2016).

- 20. Moher D, Schulz KF, Simera I, et al. Guidance for developers of health research reporting guidelines. PLoS Med 2010;7:e1000217. doi:10.1371/journal.pmed.1000217

- 21. Schulz KF. CONSORT 2010 Statement: updated guidelines for reporting parallel group randomized trials. Ann Intern Med 2010;152:726–32. doi:10.7326/0003-4819-152-11-201006010-00232

- 22. Des Jarlais DC, Lyles C, Crepaz N; TREND Group. Improving the reporting quality of nonrandomized evaluations of behavioral and public health interventions: the TREND statement. Am J Public Health 2004;94:361–6. doi:10.2105/AJPH.94.3.361

- 23. von Elm E, Altman DG, Egger M, et al. The Strengthening the Reporting of Observational Studies in Epidemiology (STROBE) statement: guidelines for reporting observational studies. Ann Intern Med 2007;147:573–7. doi:10.7326/0003-4819-147-8-200710160-00010

- 24. Hoffmann TC, Glasziou PP, Boutron I, et al. Better reporting of interventions: template for intervention description and replication (TIDieR) checklist and guide. BMJ 2014;348:g1687. doi:10.1136/bmj.g1687

- 25. Möhler R, Köpke S, Meyer G. Criteria for Reporting the Development and Evaluation of Complex Interventions in healthcare: revised guideline (CReDECI 2). Trials 2015;16:204. doi:10.1186/s13063-015-0709-y

- 26. Ogrinc G, Davies L, Goodman D, et al. SQUIRE 2.0 (Standards for QUality Improvement Reporting Excellence): revised publication guidelines from a detailed consensus process. BMJ Qual Saf 2016;25:986–92. doi:10.1136/bmjqs-2015-004411

- 27. Pinnock H, Epiphaniou E, Sheikh A, et al. Developing standards for reporting implementation studies of complex interventions (StaRI): a systematic review and e-Delphi. Implement Sci 2015;10:42. doi:10.1186/s13012-015-0235-z

- 28. Witteman HO, Dansokho SC, Colquhoun H, et al. User-centered design and the development of patient decision aids: protocol for a systematic review. Syst Rev 2015;4:11. doi:10.1186/2046-4053-4-11

- 29. Hasson F, Keeney S, McKenna H. Research guidelines for the Delphi survey technique. J Adv Nurs 2000;32:1008–15.

- 30. Hoffman AS, Sepucha KR, Abhyankar P, et al. Explanation and elaboration of the Standards for UNiversal reporting of patient Decision Aid Evaluations (SUNDAE) guidelines: examples of reporting SUNDAE items from patient decision aid evaluation literature. BMJ Qual Saf 2018;27:389–412. doi:10.1136/bmjqs-2017-006985

Supplementary Materials

bmjqs-2017-006986supp001.pdf