Abstract

We consider the problem of estimating a signal from noisy circularly-translated versions of itself, called multireference alignment (MRA). One natural approach to MRA is to estimate the shifts of the observations first, and then infer the signal by aligning and averaging the data. In contrast, we consider a method based on estimating the signal directly, using features of the signal that are invariant under translations. Specifically, we estimate the power spectrum and the bispectrum of the signal from the observations. Under mild assumptions, these invariant features contain enough information to infer the signal. In particular, the bispectrum can be used to estimate the Fourier phases. To this end, we propose and analyze a few algorithms. Our main methods consist of non-convex optimization over the smooth manifold of phases. Empirically, in the absence of noise, these non-convex algorithms appear to converge to the target signal from random initialization. The algorithms are also robust to noise. We then suggest three additional methods, based on frequency marching, semidefinite relaxation and integer programming. The first two methods provably recover the phases exactly in the absence of noise. In the high noise level regime, the invariant features approach for MRA results in stable estimation if the number of measurements scales like the cube of the noise variance, which is the information-theoretic rate. Additionally, it requires only one pass over the data, which is important at low signal-to-noise ratio, when the number of observations must be large.

Index Terms: bispectrum, multireference alignment, phase retrieval, non-convex optimization, optimization on manifolds, semidefinite relaxation, phase synchronization, frequency marching, integer programming, cryo-EM

I. Introduction

We consider the problem of estimating a discrete signal from multiple noisy and translated (i.e., circularly shifted) versions of itself, called multireference alignment (MRA). This problem occurs in a variety of applications in biology [1], [2], [3], [4], radar [5], [6], image registration and super-resolution [7], [8], [9], and has been the subject of recent theoretical analysis [10], [11]. The MRA model reads

$$ \xi_j = R_{r_j} x + \varepsilon_j, \qquad j = 1, \ldots, M, \tag{I.1} $$

where εj are i.i.d. normal random vectors with variance σ² and the underlying signal x is in ℝᴺ or in ℂᴺ. The operator Rrj rotates the signal x circularly by rj locations, namely, (Rrjx)[n] = x[n − rj], where indexing is zero-based and considered modulo N (throughout the paper). While both x and the translations {rj} are unknown, we stress that the goal here is merely to estimate x. This estimation is possible only up to an arbitrary translation.

A chief motivation for this work arises from the imaging technique called single particle Cryo-Electron Microscopy (Cryo-EM), which makes it possible to visualize molecules at near-atomic resolution [12], [13]. In Cryo-EM, we aim to estimate a three-dimensional (3D) object from its two-dimensional (2D) noisy projections, taken at unknown viewing directions [14], [15]. While the recovery process typically involves aligning multiple observations in a low signal-to-noise ratio (SNR) regime, the underlying goal is merely to estimate the 3D object. With the unknown shifts playing the role of the unknown viewing directions, MRA can thus be understood as a simplified model for Cryo-EM.

Existing approaches for MRA can be classified into two main categories. The first class of methods aims to estimate the set of translations {rj} first. Given this set, estimating x can be achieved easily by aligning all observations ξj and then averaging to reduce the noise. The second class, which we favor in this paper, consists of methods which aim to estimate the signal directly, without estimating the shifts.

Considering the first class, one intuitive approach to estimating the translations is to fix a template observation, say ξ1, and to estimate the relative translations by cross-correlation. This is called template matching. Specifically, rj is estimated as

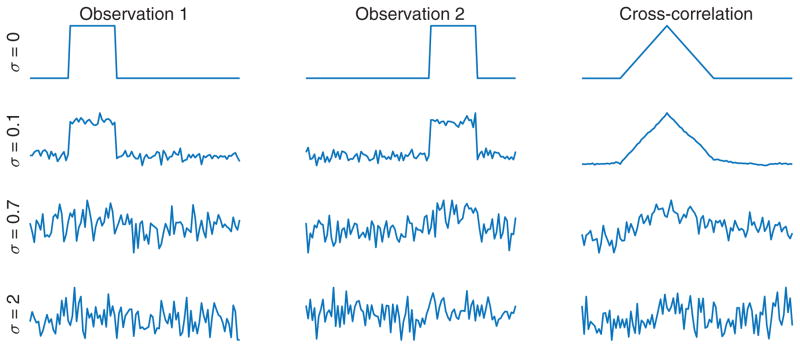

where ℜ{z} and z̄ denote the real part and the conjugate of a complex number z. This approach requires only one pass over the data: for each observation, the best shift can be computed in O(N log N), and the aligned observations can be averaged online. This results in a total computational cost of O(MN log N), see Table I. While this approach is simple and efficient, it necessarily fails below a critical SNR—see Figure I.1 for a representative example.
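The one-pass template-matching procedure can be sketched in NumPy as follows. This is a minimal illustration under our own assumptions, not an implementation from this paper, and the function names `template_match_shift` and `align_and_average` are hypothetical. It evaluates the circular cross-correlation for all N candidate shifts at once with FFTs, which is where the O(N log N) cost per observation comes from, and keeps only a running sum, so memory stays O(N).

```python
import numpy as np


def template_match_shift(template: np.ndarray, obs: np.ndarray) -> int:
    """Return the r maximizing Re{ sum_n obs[n] * conj(template[n - r]) }.

    If obs is the template circularly shifted by r (obs[n] = template[n - r]),
    the maximum is attained at that r.
    """
    # Circular cross-correlation for all shifts at once:
    # c[r] = sum_n obs[n] conj(template[n - r])  <=>  DFT(c) = DFT(obs) * conj(DFT(template))
    c = np.fft.ifft(np.fft.fft(obs) * np.conj(np.fft.fft(template)))
    return int(np.argmax(c.real))


def align_and_average(observations: np.ndarray) -> np.ndarray:
    """Single pass: align every row to the first one, accumulating a running sum."""
    template = observations[0]
    running_sum = np.zeros(observations.shape[1], dtype=np.result_type(observations, float))
    for obs in observations:
        r = template_match_shift(template, obs)
        running_sum += np.roll(obs, -r)  # np.roll(obs, -r)[n] = obs[n + r] undoes the shift
    return running_sum / len(observations)
```

Since `np.roll(x, 7)[n] = x[n − 7]`, calling `template_match_shift(x, np.roll(x, 7))` on a generic signal `x` recovers the shift 7. At low SNR the argmax is dominated by noise, which is the failure mode illustrated in Figure I.1.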

Table I.

Comparison of main MRA approaches. F(N) denotes the complexity of inverting the bispectrum; for instance, F(N) = O(N²) for the FM algorithm. Storage requirements assume streaming computations wherever possible.

| Method | Computational complexity | Storage requirement | Comments |
|---|---|---|---|
| Template alignment | O(MN log N) | O(N) | Fails at moderate SNR (see Figure I.1) |
| Angular synchronization | O(M²N log N) | O(M²) | Fails at low SNR |
| Expectation maximization | O(TMN log N) | O(MN) | Empirically accurate; the number of iterations T grows with the noise level |
| Invariant features (this paper) | O(MN² + F(N)) | O(N²) | Under mild conditions, accurate estimation if M grows as σ⁶ |

Figure I.1.

Alignment of two translated versions of the same signal in the presence of i.i.d. Gaussian noise with various standard deviations σ. The true signal in ℝ¹⁰⁰ is a window of length 22 and height 1. Each row presents two observations and their cross-correlation. Importantly, beyond a certain threshold, noise makes pairwise alignment impossible.

The issue with template matching is that we rely on aligning each observation to only one template: this is error-prone at low SNR. Instead, to derive a more robust estimator, one can look for the most suitable alignment among all pairs of observations. The M² relative shifts thus computed must then be reconciled into a compatible choice of M shifts for the individual observations. This is a discrete version of the angular synchronization problem, see [16], [17], [18], [19], [20], [21]. The computational complexity of aligning all pairs individually is O(M²N log N), while storing the results uses O(M²) memory.

Alternative algorithms for estimating the translations are based on different SDP relaxations [22], [23], iterative template alignment [24], zero phase representations [5] and neural networks [6]. The statistical limits of alignment tasks were derived for a variety of setups and noise models, see for instance [25], [26], [27], [28]. For example, for a continuous, 2D version of the MRA model, it was shown that the Cramér–Rao lower bound (CRLB) for translation estimation is proportional to the noise variance σ² [25]; crucially, it does not improve with M, even if the underlying signal is known. This motivates the second category of MRA methods, in which shifts are not estimated.

Section VI elaborates on expectation maximization (EM), which tries to compute the maximum marginalized likelihood estimator (MMLE) of the signal, where the marginalization is over the shifts. This method acknowledges the difficulty of alignment by working not with estimates of the shifts themselves, but rather with estimates of the probability distributions of the shifts. As a result, EM achieves excellent numerical performance in practice. However, its computational complexity is high and its performance is not understood theoretically.

It has been shown recently that, under the assumption that the shifts are distributed uniformly, the sample complexity of MRA is proportional to σ⁶ in the low SNR regime. In other words, the number of measurements M needs to scale like σ⁶ to retain a constant estimation error [11].

In this work, we propose a framework which achieves this sample complexity by estimating the sought signal x directly using features that are invariant under translations. For instance, the mean of x is invariant under translation and can be estimated easily from the mean of all observations. We further use the power spectrum and the bispectrum of the observations, which are Fourier-transform-based invariants, to estimate the magnitudes and phases of the signal’s Fourier transform, respectively.

For any fixed noise level (which may be arbitrarily large), these features can be estimated accurately provided sufficiently many measurements are available. Hence, our approach can handle any noise level. Besides achieving this sample complexity, the methods we describe have relatively low computational complexity and memory requirements. Indeed, the only operations whose computational cost grows with M are averages over the data. These can be computed on-the-fly and are easily parallelizable. We mention that a recent tensor decomposition algorithm also achieves this estimation rate [29].

Given estimators for the mean and power spectrum of x, estimating the DC component and Fourier magnitudes of x is straightforward. In this paper, we thus focus on the task of recovering the Fourier phases of x from an estimator of its bispectrum, which we call bispectrum inversion. For this task, we propose two non-convex optimization algorithms on the manifold of phases. We also discuss three additional algorithms, based on frequency marching, SDP relaxation and integer programming, which do not require initialization (and hence could be used to initialize the others). The first two of these methods recover the phases exactly in the absence of noise.

Beyond MRA, the bispectrum plays a central role in a variety of signal processing applications. For instance, it is a key tool to separate Gaussian and non-Gaussian processes [30], [31]. It is also used to investigate the cosmic background radiation [32], [33], seismic signal processing [34], image deblurring [35], feature extraction for radar [36], analysis of EEG signals [37], MIMO systems [38] and classification [39] (see also [40], [41], [42], [43], [44] and references therein). In Section III, we review previous works on bispectrum inversion [45], [46], [34]. Reliable algorithms to invert the bispectrum, as studied here, may prove useful in some of these applications.

The paper is organized as follows. Section II discusses the invariant feature approach for MRA. Section III presents the non-convex algorithms on the manifold of phases for bispectrum inversion. Section IV is devoted to additional algorithms that can be used to initialize the non-convex algorithms. Section V analyzes one of the proposed non-convex algorithms. Section VI elaborates on the EM approach for MRA, Section VII presents numerical experiments and Section VIII offers conclusions and perspectives.

Throughout the paper we use the following notation. Vectors x in ℝᴺ or ℂᴺ and y ∈ ℂᴺ denote the underlying signal and its discrete Fourier transform (DFT), respectively. In the sequel, all indices are understood modulo N, namely, in the range 0, …, N − 1. The phase of a complex scalar a, defined as a/|a| if a ≠ 0 and zero otherwise, is denoted by phase(a) or ã. The conjugate-transpose of a vector z is denoted by z*. We use ‘∘’ to denote the Hadamard (entry-wise) product, 𝔼 for expectation, Tr(Z) for the trace and ||Z||F for the Frobenius norm of a matrix Z. We reserve T(z) for circulant matrices determined by their first row z, i.e., T(z)[k1, k2] = z[k2 − k1], and ℋN for the set of Hermitian matrices of size N × N.

II. Multireference Alignment via Invariant Features

We propose to solve the MRA problem directly using features that are invariant under translations. Unlike pairwise alignment, this approach fuses information from all M observations at once, not just from pairs, and it aims to recover only the signal itself, not the translations. The essence of this idea was discussed as a possible extension in [22, Appendix A]. The invariant features can be understood either as auto-correlation functions or through their Fourier transforms. In this work, we use the first three invariants, defined as

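$$ c_1 = \mu_x = \frac{1}{N}\sum_{n=0}^{N-1} x[n], \qquad c_2[n_1] = \sum_{n=0}^{N-1} \overline{x[n]}\, x[n+n_1], \qquad c_3[n_1, n_2] = \sum_{n=0}^{N-1} x[n]\, x[n+n_1]\, \overline{x[n-n_2]}, \tag{II.1} $$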

for n1, n2 = 0, …, N−1. It is clear that c1, c2, c3 are invariant under circular shifts of x. For higher-order invariants based on auto-correlations, see for instance [47].

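The first feature is the mean of the signal, which is the auto-correlation function of order one (i.e., c1 in (II.1)). Since circular shifts do not change the mean, the mean of ξj is distributed as μξj = μx + (1/N) Σn εj[n] ~ 𝒩(μx, σ²/N), and we can estimate μx as

$$ \hat\mu_x = \frac{1}{M}\sum_{j=1}^{M} \mu_{\xi_j} = \frac{1}{MN}\sum_{j=1}^{M}\sum_{n=0}^{N-1} \xi_j[n]. \tag{II.2} $$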

Estimating the signal’s mean supplies only limited information about the signal itself. Thus, we also consider the auto-correlation function of order two (i.e., c2 in (II.1)). Its Fourier transform, the power spectrum, is explicitly defined as

$$ P_x[k] = |y[k]|^2 = y[k]\, \overline{y[k]} $$

for all k, where y is the DFT of x. An alternative way to understand the invariance of the power spectrum under shifts is through the effect of shifts on the DFT of a signal:

$$ \mathrm{DFT}(R_s x)[k] = e^{-2\pi i s k/N}\, y[k], \qquad k = 0, \ldots, N-1. \tag{II.3} $$

Thus, shifts only affect the phases of the DFT, so that PRsx = Px for any shift Rs. Furthermore, owing to the independence of the noise from the signal and from the shift,

$$ \mathbb{E}\{P_{\xi_j}[k]\} = P_x[k] + N\sigma^2, $$

where the second term is the expected power spectrum of the noise εj. Therefore, we estimate the power spectrum of x as:

$$ \hat P_x[k] = \frac{1}{M}\sum_{j=1}^{M} P_{\xi_j}[k] - N\sigma^2. \tag{II.4} $$

It can be shown that P̂x is unbiased and, for large σ, its variance is dominated by a term proportional to σ⁴/M. Hence, P̂x → Px as M → ∞. In particular, accurate estimation of the power spectrum requires M to scale like σ⁴. In the sequel, we assume that σ is known.
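A one-pass sketch of the estimators (II.2) and (II.4) follows, together with the Fourier-magnitude estimate used in Section III. As an illustrative assumption, the noise is real or circularly-symmetric complex with 𝔼|εj[n]|² = σ², so that it contributes Nσ² to each entry of the expected power spectrum; the function name is hypothetical. Only running sums are stored, so memory is O(N) regardless of M.

```python
import numpy as np


def estimate_mean_and_power_spectrum(observations, sigma):
    """Single pass computing mu_hat (II.2), the debiased P_hat (II.4) and |y| estimates.

    Assumes E|eps[n]|^2 = sigma^2, hence E|DFT(eps)[k]|^2 = N * sigma^2.
    """
    M = 0
    sum_mean = 0.0
    sum_power = None
    for xi in observations:                 # any iterable of length-N vectors, e.g. a stream
        xi = np.asarray(xi)
        M += 1
        sum_mean = sum_mean + xi.mean()
        p = np.abs(np.fft.fft(xi)) ** 2     # power spectrum of this observation
        sum_power = p if sum_power is None else sum_power + p
    N = sum_power.shape[0]
    mu_hat = sum_mean / M
    P_hat = sum_power / M - N * sigma**2    # subtract the noise power spectrum
    magnitudes = np.sqrt(np.maximum(P_hat, 0.0))  # |y[k]| ~ sqrt(P_hat[k]), or 0 if negative
    return mu_hat, P_hat, magnitudes
```

Without the correction term, each entry of the averaged power spectrum would be biased upward by Nσ², which dominates Px[k] at low SNR.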

Recovering a signal from its power spectrum is commonly referred to as phase retrieval. This problem has received considerable attention in recent years, see for instance [48], [49], [50], [51], [52], [53], [54]. It is well known that almost no one-dimensional signal can be determined uniquely from its power spectrum. Therefore, we use the power spectrum merely to estimate the signal’s Fourier magnitudes.

Since phase retrieval is in general ill-posed, we use the auto-correlation function of order three (that is, c3 in (II.1)) through its Fourier transform, the bispectrum, to estimate the Fourier phases of the sought signal. The bispectrum is a function of two frequencies k1, k2 = 0, …, N − 1 and is defined as [55]:

$$ B_x[k_1, k_2] = y[k_1]\, \overline{y[k_2]}\, y[k_2 - k_1]. \tag{II.5} $$

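Note that, if y[0] ≠ 0, the power spectrum is explicitly included in the bispectrum since Px[k] = Bx[k, k]/y[0]. The fact that the bispectrum is invariant under shifts can also be deduced from (II.3). Indeed, for any shift Rs,

$$ B_{R_s x}[k_1, k_2] = e^{-2\pi i s k_1/N} y[k_1]\; e^{2\pi i s k_2/N} \overline{y[k_2]}\; e^{-2\pi i s (k_2 - k_1)/N} y[k_2 - k_1] = B_x[k_1, k_2]. $$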

In matrix notation, we express this as

$$ B_x = y y^* \circ T(y), \tag{II.6} $$

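where T(y) is a circulant matrix whose first row is y, that is, T(y)[k1, k2] = y[k2 − k1]. Observe that if x is real, then y[−k] = ȳ[k], so that T(y) and Bx are Hermitian matrices. Simple expectation calculations lead to the conclusion that

$$ \mathbb{E}\{B_{\xi_j}\} = B_x + N^2 \mu_x \sigma^2 A, \tag{II.7} $$

where A = Aℝ or A = Aℂ depending on whether x ∈ ℝᴺ or x ∈ ℂᴺ, and

$$ A_{\mathbb{R}}[k_1, k_2] = \delta[k_1] + \delta[k_2] + \delta[k_1 - k_2], \qquad A_{\mathbb{C}}[k_1, k_2] = \delta[k_1] + \delta[k_1 - k_2], $$

with δ the Kronecker delta (indices modulo N). Since the bias term is proportional to μx, if μx were known we could estimate Bx by averaging B_ξj − N²μxσ²A over all j. This estimator is unbiased and, for large σ, its variance is dominated by a term proportional to σ⁶/M. Therefore, M is required to scale like σ⁶ to ensure accurate estimation. In practice, μx is not known exactly. Thus, we estimate the bispectrum by

$$ \hat B_x = \frac{1}{M}\sum_{j=1}^{M} B_{\xi_j} - N^2 \hat\mu_x \sigma^2 A, \tag{II.8} $$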

which is asymptotically unbiased. For finite M and large σ, the bias induced by the approximation μ̂x ≈ μx is significantly smaller than the standard deviation of (II.8).
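For a real signal and real Gaussian noise, the estimator (II.8) can be sketched as below. The bias pattern Aℝ[k1, k2] = δ[k1] + δ[k2] + δ[k1 − k2] is assumed here; it follows from a direct second-moment computation of the noise terms in the expansion of B_ξj. The function names are hypothetical. As with the power spectrum, only an N × N running sum is kept, so the pass over the data can be streamed.

```python
import numpy as np


def bias_pattern_real(N):
    """A_R[k1, k2] = delta[k1] + delta[k2] + delta[k1 - k2] (assumed real-noise bias pattern)."""
    k = np.arange(N)
    return (k[:, None] == 0).astype(float) + (k[None, :] == 0) + (k[:, None] == k[None, :])


def estimate_bispectrum_real(observations, sigma):
    """One pass: B_hat = mean_j B_{xi_j} - N^2 * mu_hat * sigma^2 * A_R, cf. (II.8)."""
    observations = np.asarray(observations, dtype=float)
    M, N = observations.shape
    k = np.arange(N)
    idx = (k[None, :] - k[:, None]) % N           # T(y)[k1, k2] = y[k2 - k1]
    sum_B = np.zeros((N, N), dtype=complex)
    sum_mean = 0.0
    for xi in observations:
        y = np.fft.fft(xi)
        sum_B += np.outer(y, np.conj(y)) * y[idx]  # B_xi = y y^* o T(y)
        sum_mean += xi.mean()
    mu_hat = sum_mean / M
    return sum_B / M - N**2 * mu_hat * sigma**2 * bias_pattern_real(N)
```

The correction only touches the first row, the first column and the diagonal, which is why it matters mostly for signals with a large mean.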

The bispectrum contains information about the Fourier phases of x because, defining ỹ[k] = phase(y[k]) and B̃x[k1, k2] = phase(Bx[k1, k2]) where phase extracts the phase of a complex number (and returns 0 if that number is 0), we have

$$ \tilde B_x[k_1, k_2] = \tilde y[k_1]\, \overline{\tilde y[k_2]}\, \tilde y[k_2 - k_1]. \tag{II.9} $$

In matrix notation, the normalized bispectrum takes the form B̃x = ỹ ỹ* ∘ T(ỹ).
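The identities (II.5), (II.6) and (II.9) are easy to check numerically. The sketch below (helper names are hypothetical) builds T(y) by index arithmetic, forms Bx = yy* ∘ T(y) with NumPy's FFT convention y[k] = Σn x[n] e^{−2πikn/N}, and checks shift invariance, the Hermitian structure for real signals, the relation Px[k] = Bx[k, k]/y[0], and the factorization of the normalized bispectrum.

```python
import numpy as np


def circulant_T(z):
    """T(z)[k1, k2] = z[k2 - k1], indices modulo N."""
    N = len(z)
    k = np.arange(N)
    return z[(k[None, :] - k[:, None]) % N]


def bispectrum(x):
    """B_x[k1, k2] = y[k1] conj(y[k2]) y[k2 - k1], i.e. B_x = y y^* o T(y)."""
    y = np.fft.fft(x)
    return np.outer(y, np.conj(y)) * circulant_T(y)


def phase(a):
    """Entry-wise a / |a|, with phase(0) = 0."""
    a = np.asarray(a, dtype=complex)
    mag = np.abs(a)
    return np.divide(a, mag, out=np.zeros_like(a), where=mag > 0)


rng = np.random.default_rng(0)
x = rng.standard_normal(9)
B = bispectrum(x)
y = np.fft.fft(x)
yt = phase(y)
print(np.allclose(B, bispectrum(np.roll(x, 4))))                          # shift invariance
print(np.allclose(B, B.conj().T))                                         # Hermitian for real x
print(np.allclose(np.diag(B) / y[0], np.abs(y) ** 2))                     # P_x[k] = B_x[k, k] / y[0]
print(np.allclose(phase(B), np.outer(yt, yt.conj()) * circulant_T(yt)))   # (II.9)
```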

Contrary to the power spectrum, the bispectrum is usually invertible. Indeed, in the absence of noise, the bispectrum determines the sought signal uniquely under moderate conditions:

Proposition II.1

For N ≥ 5, let x ∈ ℂᴺ be a signal whose DFT y obeys y[k] ≠ 0 for k = 1, …, K, possibly also for k = 0, and zero otherwise. Up to integer time shifts, x is determined exactly by its bispectrum provided .

For N ≥ 5, let x ∈ ℝᴺ be a real signal whose DFT y obeys y[k] ≠ 0 for k = 1, …, K and k = N − 1, …, N − K, possibly also for k = 0, and zero otherwise. Up to integer time shifts, x is determined exactly by its bispectrum provided .

Proof

This is a direct corollary of Lemmas V.1 and B.1.

We stress that the bispectrum estimator in (II.8) is generally not a bispectrum itself, since the set of bispectra is not a linear space; hence, B̂x cannot be inverted as such [42]. The algorithms we propose aim to find a stable inverse, in the sense that the recovered signal has a bispectrum which is close to the estimated bispectrum in ℂᴺˣᴺ. Combined, the following propositions argue formally that this can be done in the MRA model. The proofs in Appendix A are constructive.

Proposition II.2 (Stable bispectrum inversion)

There exists an estimator x̂ with the following property. For any signal x in ℝᴺ or ℂᴺ whose DFT is non-vanishing, there exist a precision δ = δ(x) > 0 and a sensitivity L = L(x) < ∞ such that if an estimator B̂x of Bx satisfies ||B̂x − Bx||F ≤ δ, then x̂ = x̂(B̂x) satisfies minr=0…N−1 ||x − Rr x̂||2 ≤ L||B̂x − Bx||F.

Proposition II.3 (Bispectrum estimation)

For any signal x in ℝᴺ or ℂᴺ whose DFT is non-vanishing, for any required precision δ > 0 and for any probability p < 1, there exists a constant C = C(x, p, δ) < ∞ such that, for any noise level σ > 0, if the number of observations M exceeds C·(σ² + σ⁶), the estimator B̂x defined in (II.8) satisfies ||B̂x − Bx||F ≤ δ with probability at least p.

We mention that uniqueness in the continuous setup was considered in [56]. The more general setting of bispectrum over compact groups was considered in [57], [58], [59], [60].

The MRA model here assumes i.i.d. Gaussian noise. However, the estimation is performed by averaging in the bispectrum domain, where the noise affecting individual entries is correlated. Consequently, one may want to use a more robust estimator, such as the median. Yet, computing the median of complex matrices is computationally expensive, while the average can be computed efficiently and on-the-fly, that is, without storing all observations. For Gaussian noise, we have noticed numerically that using the mean or the median for bispectrum estimation leads to comparable estimation errors (experiments not shown). In other noise models, e.g., with outliers, it might be useful to consider the median or the median of means method, see for instance [61].

Algorithm 1.

Outline of the invariant approach for MRA

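| Input: Set of observations ξj, j = 1, …, M according to (I.1) and noise level σ |

| Output: x̂: estimation of x |

| Estimate invariant features: μ̂x by (II.2), P̂x by (II.4) and B̂x by (II.8) |

| Estimate the signal’s DFT: set ŷ[0] = Nμ̂x, the Fourier magnitudes to √(max{P̂x[k], 0}), and the Fourier phases by inverting B̂x (Sections III and IV) |

| Return: x̂: inverse DFT of the estimated y |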

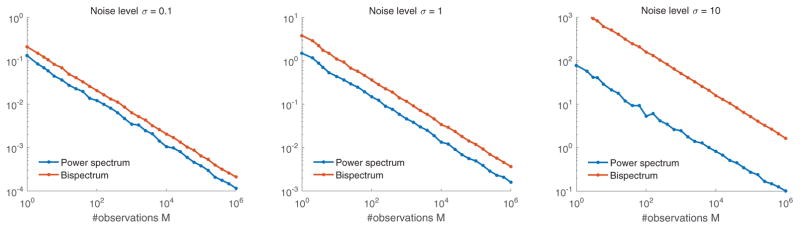

Figure II.1 presents the relative estimation error of the power spectrum and bispectrum as a function of the number of observations M. For the bispectrum, the relative error is computed as

$$ \frac{\|\hat B_x - B_x\|_F}{\|B_x\|_F}, $$

and similarly for the power spectrum. As expected, the slope of all curves is approximately −1/2 in logarithmic scale, implying that the estimation error decreases as 1/√M. The invariant features approach for MRA is summarized in Algorithm 1.

Figure II.1.

Relative error of estimating the power spectrum and bispectrum for different noise levels as a function of the number of observations M. Results are averaged over 10 repetitions for each value of M on a fixed real signal of length N = 41 with i.i.d. normal random entries. The signal-to-noise ratio is then 1/σ². Importantly, the relative error decreases as 1/√M regardless of the noise level.

Consider the case in which the number of samples M may be very large whereas the size of the object is fixed, namely N ≪ M. This case is of interest in many applications, such as cryo-EM [14], [15]. In this regime, the invariant features approach has two important advantages over methods that rely on estimating the translations. First, in the invariant features approach, we average over the M observations (which is computationally cheap), and then apply a more complex algorithm (say, to recover a signal from its bispectrum) whose input size is a function of N but is independent of M. Hence, the overall complexity of this approach can be relatively low. Second, the alignment-based method requires storing all M observations, namely, MN samples, which is unnecessary in the invariant features approach. There, for each observation, we just need to compute its invariants, to be averaged over all observations: this can be done online (in streaming mode) and in parallel.

III. Non-convex Algorithms for bispectrum inversion

After estimating the first Fourier coefficient y[0] as Nμ̂x, our approach for MRA by invariant features consists of two parts: we use the power spectrum to estimate the signal’s Fourier magnitudes and the bispectrum to estimate the phases. The first part is straightforward: |y[k]| can be estimated as √(P̂x[k]) if P̂x[k] ≥ 0, and as 0 otherwise. Hereafter, we focus on estimating the phases of the DFT, ỹ.

In the literature, two main approaches were suggested to invert the discrete bispectrum. The first estimates the frequencies one after the other by exploiting simple algebraic relations [45], [46]. The second estimates the signal through a least-squares solution combined with phase unwrapping [46], [34]. We improve these methods and suggest a few new algorithms, split into two sections. This section is devoted to two new non-convex algorithms based on optimization on the manifold of phases. Both of these algorithms require initialization. While random initialization appears to work well experimentally, for completeness, in the next section we propose three additional algorithms which do not need initialization and hence could be used to initialize the non-convex algorithms.

A. Local non-convex algorithm over the manifold of phases

In this section, similarly to (II.9), we let B̃ denote our estimate of the phases of Bx. Since B̃ ≈ ỹ ỹ* ∘ T(ỹ), one way to model recovery of the Fourier phases ỹ is by means of the non-convex least-squares optimization problem

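$$ \min_{z \in \mathbb{C}^N} \big\| W \circ \big(\tilde B - z z^* \circ T(z)\big) \big\|_F^2 \quad \text{subject to} \quad |z[0]| = \cdots = |z[N-1]| = 1. \tag{III.1} $$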

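The matrix W ∈ ℝᴺˣᴺ is a weight matrix with nonnegative entries. These weights can be used to indicate our confidence in each entry of B̃. Expanding the squared Frobenius norm yields

$$ \|W \circ \tilde B\|_F^2 + \|W \circ (z z^* \circ T(z))\|_F^2 - 2 \langle W \circ \tilde B,\; W \circ (z z^* \circ T(z)) \rangle, $$

where

$$ \langle U, V \rangle = \Re\{\mathrm{Tr}(U^* V)\} \tag{III.2} $$

is the real inner product associated to the Frobenius norm. Under the constraints on z, the first two terms are constant and the inner product term is equivalent to

$$ f(z) = \langle B,\; z z^* \circ T(z) \rangle $$

with

$$ B = W^{(2)} \circ \tilde B, \tag{III.3} $$

where we use the notation W⁽²⁾ := W ∘ W. One possibility is to choose W = √|B̂x|, where the absolute value and the square root are taken entry-wise, so that B = B̂x. Hence, optimization problem (III.1) is equivalent to

$$ \max_{z \in \mathbb{C}^N} f(z) \quad \text{subject to} \quad |z[0]| = \cdots = |z[N-1]| = 1. \tag{III.4} $$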

We can also impose z[0] = phase(μ̂x). If x is real, we have the additional symmetry constraints z[N − k] = z̄[k] for all k.
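To illustrate (III.3) and (III.4), the sketch below evaluates f(z) = ⟨B, zz* ∘ T(z)⟩ with the real inner product (III.2). With noiseless data and the choice W = √|B̂x| (so that B is the bispectrum itself), f is bounded by Σ|B[k1, k2]| when all |z[k]| = 1, this bound is attained at z = ỹ, and f is unchanged when z is modulated as by an integer time shift, which is the content of Lemma III.1 below. The function names are hypothetical.

```python
import numpy as np


def circulant_T(z):
    """T(z)[k1, k2] = z[k2 - k1], indices modulo N."""
    N = len(z)
    k = np.arange(N)
    return z[(k[None, :] - k[:, None]) % N]


def cost_f(z, B):
    """f(z) = <B, z z^* o T(z)> = Re sum_{k1,k2} conj(B[k1,k2]) z[k1] conj(z[k2]) z[k2-k1]."""
    return float(np.real(np.sum(np.conj(B) * np.outer(z, np.conj(z)) * circulant_T(z))))


rng = np.random.default_rng(3)
N = 11
x = rng.standard_normal(N)
y = np.fft.fft(x)
B = np.outer(y, np.conj(y)) * circulant_T(y)        # noiseless bispectrum
y_tilde = y / np.abs(y)
upper = np.abs(B).sum()                              # f(z) <= sum |B| when |z[k]| = 1
shift = np.exp(-2j * np.pi * 3 * np.arange(N) / N)   # DFT effect of shifting x by 3
print(np.isclose(cost_f(y_tilde, B), upper))
print(np.isclose(cost_f(y_tilde * shift, B), cost_f(y_tilde, B)))
```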

Algorithm 2.

Non-convex optimization on phase manifold

Since the cost function f is continuous and the search space is compact, a solution exists. Of course, the solution is not unique, in accordance with the invariance of the bispectrum under integer time-shifts of the underlying discrete signal. This is apparent through the fact that the cost function f is invariant under the corresponding (discrete) transformations of z. This is true independently of the data B̃ and W. The proof is in Appendix C.

Lemma III.1

The cost function f is invariant under transformations of z that correspond to integer time-shifts of the underlying signal.

To solve this non-convex program, we use the Riemannian trust-region method (RTR) [62], whose usage is simplified by the toolbox Manopt [63]. RTR enjoys global convergence to second-order critical points, that is, points which satisfy the first- and second-order necessary optimality conditions [64], and a quadratic local convergence rate. Empirically, in the noiseless case, the algorithm appears to recover the target signal from random initialization: all local minima appear to be global (up to a minor technicality for even N in the real case), and all saddle points have an escape direction, that is, saddles are “strict”. Numerical experiments demonstrate reasonable robustness to noise. This algorithm is summarized in Algorithm 2 and studied in detail in Section V.

B. Iterative phase synchronization algorithm

In this section we present an alternative heuristic to the non-convex algorithm on the manifold of phases. This algorithm is based on iteratively solving the phase synchronization problem. Suppose we have an estimate ŷk−1 of ỹ. If ŷk−1 ≈ ỹ and ỹ is non-vanishing, then this estimate should approximately satisfy the bispectrum relation:

$$ \tilde B \circ \overline{T(\hat y_{k-1})} \approx \tilde y \tilde y^*. $$

The underlying idea is now to push the current estimate towards ỹ by finding a rank-one approximation of B̃ ∘ T̄(ŷk−1) with unit-modulus entries, where T̄(·) denotes the entry-wise conjugate of T(·). This problem can be formulated as:

$$ \max_{z \in \mathbb{C}^N} z^* \big(\tilde B \circ \overline{T(\hat y_{k-1})}\big) z \quad \text{subject to} \quad |z[0]| = \cdots = |z[N-1]| = 1, \tag{III.5} $$

where we treat the matrix B̃ ∘ T̄(ŷk−1) as a constant. This problem is called phase synchronization. Many algorithms have been suggested to solve it; among them are the eigenvector method, SDP relaxation, the projected power method, Riemannian optimization and approximate message passing [16], [20], [17], [18], [19]. Notice that the solution of (III.5) is only defined up to a global phase, namely, if z is optimal, then so is ze^{iϕ} for any angle ϕ. To resolve this ambiguity, we require knowledge of the phase of the mean, ỹ[0] (which is easy to estimate from the data), and we pick the global phase of ŷk such that ŷk[0] = ỹ[0].

The kth iteration of our algorithm thus (tries to) solve the phase synchronization problem with respect to the matrix Mk = B̃ ∘ T̄(ŷk−1), where ŷk−1 is the estimate from the previous iteration. Assuming the signal is real, we also impose at each iteration the conjugate-reflection property, namely that ŷk−1[N − ℓ] is the complex conjugate of ŷk−1[ℓ] for all ℓ, so that Mk is Hermitian. In the numerical experiments in Section VII, we solve (III.5) by the Riemannian trust-region method described in [17]. Empirically, the performance of this algorithm and Algorithm 2 is indistinguishable. The algorithm is summarized in Algorithm 3.

Algorithm 3.

Iterative phase synchronization algorithm

| Input: The normalized bispectrum B̃, initial estimation ŷ0, phase of the mean ỹ[0] |

| Output: ŷ: estimation of ỹ |

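| Set k = 0 |

| while stopping criterion does not trigger do: |

| k ← k + 1; if x is real, impose that ŷk−1[N − ℓ] is the complex conjugate of ŷk−1[ℓ] for all ℓ |

| Mk ← B̃ ∘ conj(T(ŷk−1)), with conj taken entry-wise |

| ŷk ← solution of (III.5) with respect to Mk, with the global phase chosen so that ŷk[0] = ỹ[0] |

| end while |

| Return: ŷ ← ŷk |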

IV. Initialization-free Algorithms

The previous section was devoted to non-convex algorithms to invert the bispectrum. In this section we present three additional algorithms based on frequency marching (FM), SDP relaxation and phase unwrapping. These algorithms do not require initialization and therefore could be used to initialize the non-convex algorithms.

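We prove that FM and the SDP recover the Fourier phases exactly in the absence of noise under the assumption that we can fix ỹ[1]. If the signal has a non-vanishing DFT, ỹ[1] can be estimated from the bispectrum using the identity

$$ \tilde y[1]^N = \prod_{k=0}^{N-1} \tilde B[1, k]. $$

Indeed, by (II.9), ỹ[k + 1] equals ỹ[1] ỹ[k] times the complex conjugate of B̃[1, k + 1]; iterating from k = 0 to N − 1 and using ỹ[N] = ỹ[0] ≠ 0 yields the identity.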

(Any Nth root can be used for ỹ[1], corresponding to the N possible shifts of x.) In any case, we argue that forcing ỹ[1] = 1 is acceptable if N is large. Indeed, recall that a shift by ℓ entries in the space domain is equivalent to modulating the kth Fourier coefficient by e^{−2πiℓk/N}. In particular, the phase ỹ[1] can be multiplied by e^{−2πiℓ/N} for an arbitrary ℓ ∈ ℤ. Thus, for signals of length N ≫ 1, the phase ỹ[1] can be set arbitrarily with only a small error. In the numerical experiments of Section VII, we give the correct value of ỹ[1] to the algorithms in order to assess their best possible behavior.
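The identity for ỹ[1]ᴺ, and the N-fold ambiguity it leaves, can be checked numerically. The sketch below uses a hypothetical helper that returns all N candidate values of ỹ[1] from the first row of B̃, one per integer shift of x.

```python
import numpy as np


def first_phase_candidates(B_tilde):
    """All N-th roots of prod_{k=0}^{N-1} B~[1, k]; one of them equals y~[1]."""
    N = B_tilde.shape[0]
    p = np.prod(B_tilde[1, :])
    base = np.exp(1j * np.angle(p) / N)
    return base * np.exp(2j * np.pi * np.arange(N) / N)


rng = np.random.default_rng(6)
N = 12
x = rng.standard_normal(N)
y = np.fft.fft(x)
y_tilde = y / np.abs(y)
k = np.arange(N)
B_tilde = np.outer(y_tilde, y_tilde.conj()) * y_tilde[(k[None, :] - k[:, None]) % N]
cands = first_phase_candidates(B_tilde)
print(np.min(np.abs(cands - y_tilde[1])))   # ~0: the true phase is among the candidates
```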

We begin by discussing the FM algorithm, a simple propagation method that is exact in the absence of noise. However, its estimates of the low-frequency coefficients are sensitive to noise. Because of its recursive nature, errors in the low frequencies propagate to the high frequencies, resulting in unreliable estimation. The other two algorithms are more computationally demanding but appear more robust.

A. Frequency marching algorithm

The FM algorithm is a simple propagation algorithm in the spirit of [45], [46] that aims to estimate ỹ one frequency at a time. This algorithm has computational complexity O(N²) and it recovers ỹ exactly for both real and complex signals in the absence of noise, assuming ỹ[1] is known.

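Let us denote B̃[k1, k2] = e^{iΨ[k1,k2]} and ỹ[k] = e^{iψ[k]}. Accordingly, we can reformulate (II.9) as

$$ \Psi[k_1, k_2] = \psi[k_1] - \psi[k_2] + \psi[k_2 - k_1] \mod 2\pi, $$

where the modulo is taken over the sum of all three terms. Using this relation, we can start to estimate the missing phases. The first unknown phase, ψ[2], can be estimated by:

$$ \hat\psi[2] = 2\psi[1] - \Psi[1, 2] \mod 2\pi, $$

where ψ̂[2] refers to the estimator of ψ[2] (defined modulo 2π). We can estimate the next phase in the same manner:

$$ \hat\psi[3] = \psi[1] + \hat\psi[2] - \Psi[1, 3] \mod 2\pi. $$

For higher frequencies, we have more measurements to rely on. For the fourth entry, we can now derive two estimators as follows:

$$ \hat\psi^{(1)}[4] = \psi[1] + \hat\psi[3] - \Psi[1, 4] \mod 2\pi $$

and

$$ \hat\psi^{(2)}[4] = 2\hat\psi[2] - \Psi[2, 4] \mod 2\pi. $$

In the noiseless case, it is clear that ψ[4] = ψ̂⁽¹⁾[4] = ψ̂⁽²⁾[4]. In a noisy environment, we can reduce the noise by averaging the two estimators, where averaging is done over the set of phases (namely, over the rotation group SO(2)) as explained in Appendix D. Specifically,

$$ e^{i\hat\psi[4]} = \mathrm{phase}\big(e^{i\hat\psi^{(1)}[4]} + e^{i\hat\psi^{(2)}[4]}\big). $$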

We can iterate this procedure. To estimate phase q, we consider all entries B̃[k, ℓ] such that exactly one of the indices k, ℓ, or ℓ − k is equal to q and all other indices are in 1, …, q − 1, so that all other phases involved have already been estimated. A simple verification shows that only the entries B̃[p, q], p = 1, …, q − 1, have that property. Furthermore, because of symmetries in the bispectrum (V.4), in particular B̃[p, q] = B̃[q − p, q], half of these entries are redundant, so that only the entries B̃[p, q], p = 1, …, ⌊q/2⌋, remain. As a result, estimation of the qth phase relies on averaging over ⌊q/2⌋ equations, as summarized in Algorithm 4. This construction yields the following simple guarantee.

Proposition IV.1

Let B̃ = B̃x be the normalized bispectrum as defined in (II.9) and assume that ỹ[1] is known. If y[k] ≠ 0 for k = 1, …, K, then Algorithm 4 recovers the Fourier phases ỹ[k], k = 1, …, K exactly.

We note in closing that, if the signal x is real, symmetries in the phases ỹ and B̃ can be exploited easily in FM.
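A NumPy sketch of Algorithm 4 for the noiseless setting of Proposition IV.1 follows. It marches over q = 2, …, N − 1, forms the ⌊q/2⌋ estimates ψ̂[p] + ψ̂[q − p] − Ψ[p, q], and averages them on the circle by taking the phase of the sum of the corresponding unit complex numbers (our illustrative choice of phase averaging). It does not exploit the extra symmetries available for real signals, and the function names are hypothetical.

```python
import numpy as np


def frequency_marching(B_tilde, y0, y1):
    """Estimate phases y~[0..N-1] from the normalized bispectrum, given y~[0] and y~[1]."""
    N = B_tilde.shape[0]
    Psi = np.angle(B_tilde)                        # B~[k1, k2] = exp(i Psi[k1, k2])
    psi = np.zeros(N)
    psi[0], psi[1] = np.angle(y0), np.angle(y1)
    for q in range(2, N):
        p = np.arange(1, q // 2 + 1)               # non-redundant entries B~[p, q]
        estimates = psi[p] + psi[q - p] - Psi[p, q]
        psi[q] = np.angle(np.sum(np.exp(1j * estimates)))   # average on the circle
    return np.exp(1j * psi)


def normalized_bispectrum(x):
    """Return (B~, y~) with B~ = y~ y~^* o T(y~) and y~ the phases of DFT(x)."""
    y = np.fft.fft(x)
    yt = y / np.abs(y)
    k = np.arange(len(x))
    return np.outer(yt, yt.conj()) * yt[(k[None, :] - k[:, None]) % len(x)], yt


rng = np.random.default_rng(7)
x = rng.standard_normal(16) + 1j * rng.standard_normal(16)
B_tilde, y_tilde = normalized_bispectrum(x)
y_hat = frequency_marching(B_tilde, y_tilde[0], y_tilde[1])
print(np.max(np.abs(y_hat - y_tilde)))   # at the level of floating-point round-off
```

With noisy data, errors in ψ̂[q] feed into every later frequency through ψ̂[p] and ψ̂[q − p], which is the error propagation discussed at the start of this section.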

B. Semidefinite programming relaxation

In this section we assume that the DFT y is non-vanishing, so that the bispectrum relation can be manipulated as

$$ \tilde y \tilde y^* = \tilde B \circ \overline{T(\tilde y)}, $$

where the overline denotes the entry-wise conjugate. The developments are easily adapted if the signal has zero mean. Similarly to the FM algorithm, we assume that ỹ[0] and ỹ[1] are available. We aim to estimate ỹ by a convex program. As a first step, we decouple the bispectrum equation and write the problem of estimating ỹ as the following non-convex optimization problem:

$$ \min_{z \in \mathbb{C}^N,\ Z \in \mathcal{H}_N} \big\| W \circ \big(\tilde B \circ \overline{T(z)} - Z\big) \big\|_F^2 \quad \text{subject to} \quad Z = z z^*,\ \ z[0] = \tilde y[0],\ \ z[1] = \tilde y[1], \tag{IV.1} $$

where ℋN is the set of Hermitian matrices of size N and W ∈ ℝᴺˣᴺ is a real weight matrix with positive entries. In particular, in the numerical experiments we set W = |B|.

Algorithm 4.

Frequency marching algorithm

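| Input: Normalized bispectrum B̃[k1, k2] = e^{iΨ[k1,k2]}, ỹ[0] and ỹ[1] ≠ 0 |

| Output: ŷ: estimation of ỹ |

| Set ψ̂[0] = arg ỹ[0] and ψ̂[1] = arg ỹ[1] |

| for q = 2, …, N − 1 do: e^{iψ̂[q]} ← phase(Σp=1,…,⌊q/2⌋ e^{i(ψ̂[p] + ψ̂[q−p] − Ψ[p,q])}) |

| Return: ŷ ← e^{iψ̂} |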

In the absence of noise, the minimizers of (IV.1) satisfy the bispectrum equation. However, in general these cannot be computed in polynomial time. In order to make the problem tractable, we relax the non-convex coupling constraint Z = zz* to the convex constraint Z ⪰ zz* (that is, Z − zz* is positive semidefinite). The convex relaxation is then given by

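$$ \min_{z \in \mathbb{C}^N,\ Z \in \mathcal{H}_N} \big\| W \circ \big(\tilde B \circ \overline{T(z)} - Z\big) \big\|_F^2 \quad \text{subject to} \quad Z \succeq z z^*,\ \ z[0] = \tilde y[0],\ \ z[1] = \tilde y[1]. \tag{IV.2} $$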

Upon solving (IV.2), which can be done in polynomial time with interior point methods, the phases ỹ are estimated from phase(z). In practice, we use CVX to solve this problem [65]. The algorithm is summarized in Algorithm 5. We note that problem (IV.2) is not a standard SDP, in that its cost function is nonlinear.

In the noiseless case, the SDP relaxation (IV.2) recovers the missing phases exactly. Interestingly, the proof is not so much based on optimality conditions as it is on an algebraic property of circulant matrices. The proof of the following property is given in Appendix E.

Algorithm 5.

Semidefinite relaxation algorithm

Lemma IV.2

Let û be the DFT of a vector u ∈ ℂ^N obeying $u[-k] = \overline{u[k]}$ for all k (indices modulo N), so that û is real. If u[0] = u[1] = 1 and û is non-negative, then u[k] = 1 for all k.

The following theorem is a direct corollary of Lemma IV.2. The main proof idea is as follows. Consider $u = \tilde{y} \circ \overline{z}$, where (Z, z) is optimal for the SDP. The constraints then ensure u[0] = u[1] = 1. Furthermore, one can see via the Schur complement that the constraints force T(u) to be positive semidefinite. Since the eigenvalues of T(u) are the DFT of u, it follows that û is non-negative. The lemma above therefore applies, so u ≡ 1, or, equivalently, z = ỹ. Details of the proof are in Appendix F.

Theorem IV.3

For a real signal with non-vanishing DFT y, if all weights in W are positive, ỹ[0] and ỹ[1] are known and the objective value of (IV.2) attains 0 (which is the case in the absence of noise), then the SDP has a unique solution given by z = ỹ and Z = zz*.
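The proof sketch of Theorem IV.3 hinges on a standard fact: a circulant matrix is diagonalized by the DFT. As a consequence, a Hermitian circulant T(u) is positive semidefinite exactly when û ≥ 0. The NumPy check below illustrates this under the convention T(u)[k1, k2] = u[(k2 − k1) mod N], which is our assumption. With the transposed convention, the eigenvalues are the same DFT values in permuted order. The helper names are hypothetical.

```python
import numpy as np

def circulant_T(u):
    """Hypothetical helper: circulant matrix with T(u)[k1, k2] = u[(k2 - k1) mod N]."""
    N = len(u)
    k = np.arange(N)
    return u[(k[None, :] - k[:, None]) % N]

def conj_symmetrize(u):
    """Return (u + v)/2 with v[k] = conj(u[-k mod N]); the result obeys u[-k] = conj(u[k])."""
    u_minus = np.roll(u[::-1], 1)                   # u_minus[k] = u[(-k) mod N]
    return 0.5 * (u + np.conj(u_minus))

rng = np.random.default_rng(2)
N = 9
u = conj_symmetrize(rng.standard_normal(N) + 1j * rng.standard_normal(N))
T = circulant_T(u)                                  # Hermitian, thanks to the conjugate symmetry
eig = np.sort(np.linalg.eigvalsh(T))                # real eigenvalues of T(u)
u_hat = np.fft.fft(u)                               # DFT of u: real up to rounding
print(np.allclose(u_hat.imag, 0), np.allclose(eig, np.sort(u_hat.real)))   # True True

# The case u ≡ 1 from Lemma IV.2: T(u) is the all-ones matrix and u_hat = (N, 0, ..., 0) >= 0.
ones = np.ones(N, dtype=complex)
print(np.allclose(np.fft.fft(ones), np.r_[N, np.zeros(N - 1)]))            # True
```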

We close with an important remark about the symmetry-breaking purpose of the constraint z[1] = ỹ[1] in the SDP. The signal x can be recovered only up to integer time shifts, even in the noiseless case. Without this constraint, there are therefore at least N distinct solutions (z, Z) to the SDP. Because the SDP is a convex program, any point in the convex hull of these N points is also a solution. Thus, if the symmetry is not broken, the set of solutions contains many irrelevant points. Furthermore, interior point methods tend to converge to a center of the set of solutions, which in this case is never one of the desired solutions.
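The N-fold ambiguity can be seen concretely. Shifting x circularly by ℓ multiplies ỹ[k] by e^{−2πiℓk/N}. This leaves every entry of B̃ unchanged, and it leaves ỹ[0] unchanged as well, so only z[1] distinguishes the N candidates. A small NumPy sketch follows; the helper name is hypothetical.

```python
import numpy as np

def bispectrum_from_phases(z):
    """Normalized bispectrum B~[k1, k2] = z[k1] conj(z[k2]) z[(k2 - k1) mod N]."""
    N = len(z)
    k = np.arange(N)
    D = (k[None, :] - k[:, None]) % N
    return z[:, None] * np.conj(z)[None, :] * z[D]

rng = np.random.default_rng(3)
N = 7
x = rng.standard_normal(N) + 1j * rng.standard_normal(N)
yt = np.fft.fft(x) / np.abs(np.fft.fft(x))
k = np.arange(N)
# Fourier phases of np.roll(x, l): y~[k] * exp(-2*pi*i*l*k/N), for l = 0, ..., N-1.
candidates = [yt * np.exp(-2j * np.pi * l * k / N) for l in range(N)]
B0 = bispectrum_from_phases(yt)
all_same = all(np.allclose(bispectrum_from_phases(z), B0) for z in candidates)
# z[0] agrees for every candidate; pinning z[1] = y~[1] keeps only l = 0.
keep = [l for l, z in enumerate(candidates) if np.isclose(z[0], yt[0]) and np.isclose(z[1], yt[1])]
print(all_same, keep)                               # True [0]
```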

C. Phase unwrapping by integer programming algorithm

The next algorithm is based on solving an over-determined system of equations involving integers. Let us denote ỹ[k] = e^{iψ[k]} and B̃[k1, k2] = e^{iΨ[k1,k2]}, so that the normalized bispectrum model is given by

$$e^{i\Psi[k_1,k_2]} = e^{i(\psi[k_1] - \psi[k_2] + \psi[k_2 - k_1])}.$$

By taking the logarithm, we get the algebraic relation

$$\Psi[k_1,k_2] + 2\pi\chi[k_1,k_2] = \psi[k_1] - \psi[k_2] + \psi[k_2 - k_1], \qquad \text{(IV.3)}$$

where, as a result of phase wrapping, χ takes on integer values. Let Ψvec and χvec be the column-stacked versions of Ψ and χ, respectively. Then, the model reads

$$\Psi_{\mathrm{vec}} + 2\pi\chi_{\mathrm{vec}} = A\psi, \qquad \text{(IV.4)}$$

where the sparse matrix A ∈ ℝ^{N²×N} encodes the right-hand side of (IV.3). It can be verified that A is of rank N − 1 (see for instance [66]), with null space corresponding to the time-shift-induced ambiguity on the phases (II.3). Note that both the integer vector χvec and the phases ψ are unknown. Given χvec, the phases ψ can be obtained easily by solving

$$\hat{\psi} = \arg\min_{\psi \in \mathbb{R}^N} \left\| A\psi - \Psi_{\mathrm{vec}} - 2\pi\chi_{\mathrm{vec}} \right\|_p \qquad \text{(IV.5)}$$

for some ℓp norm. Any error in estimating χ may cause a large estimation error of ψ in (IV.5). These errors can be thought of as outliers. Hence, we choose to use least unsquared deviations (LUD), p = 1, which is more robust to outliers. The more challenging task is to estimate the integer vector χvec ∈ ℤ^{N²}. To this end, we first eliminate ψ from (IV.4) as follows. Let C ∈ ℝ^{(N² − (N−1))×N²} be a full-rank matrix such that CA = 0, that is, the columns of C^T are in the null space of A^T. Matrix C can be designed by at least two methods. One, suggested in [67], exploits the special structure of A to design a sparse matrix composed of integer values. Another, which we use here, is to take C to have orthonormal rows which form a basis of the kernel of A^T. Numerical experiments (not shown) indicate that the latter approach is more stable. Next, we multiply both sides of (IV.4) from the left by C to get

$$C\Psi_{\mathrm{vec}} + 2\pi C\chi_{\mathrm{vec}} = 0.$$

Therefore, the integer recovery problem can be formulated as

$$\min_{\chi_{\mathrm{vec}} \in \mathbb{Z}^{N^2}} \left\| C\chi_{\mathrm{vec}} + \frac{C\Psi_{\mathrm{vec}}}{2\pi} \right\|_2, \qquad \text{(IV.6)}$$

where we minimize over all integers. Note that CΨvec is a known vector. The problem is then equivalent to finding the vector of the lattice generated by C that is as close as possible to −CΨvec/(2π). This closest-vector problem is known to be NP-hard, so we approximate the solution of (IV.6) with the LLL (Lenstra–Lenstra–Lovász) algorithm, which runs in polynomial time [68]. The LLL algorithm computes a lattice basis, called a reduced basis, which is approximately orthogonal, and it uses the Gram–Schmidt process to assess the quality of the basis. For more details, see [69, Ch. 17].
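The following is a compact textbook floating-point version of the LLL reduction step, with Lovász parameter δ = 3/4. It is written for clarity rather than efficiency and recomputes the Gram–Schmidt data after every update. It treats the rows of its input as basis vectors, whereas in (IV.6) the lattice is generated by the columns of C. It is not the MILES implementation [70] used in the experiments, and it performs only the reduction. An approximate closest-vector solution is then typically computed on the reduced basis, for instance with Babai's nearest-plane method. Function names are hypothetical.

```python
import numpy as np

def gram_schmidt(B):
    """Unnormalized Gram-Schmidt vectors Bs and coefficients mu for the rows of B."""
    n = B.shape[0]
    Bs = np.zeros_like(B)
    mu = np.zeros((n, n))
    for i in range(n):
        Bs[i] = B[i]
        for j in range(i):
            mu[i, j] = B[i] @ Bs[j] / (Bs[j] @ Bs[j])
            Bs[i] = Bs[i] - mu[i, j] * Bs[j]
    return Bs, mu

def lll_reduce(basis, delta=0.75):
    """Textbook LLL reduction of the lattice spanned by the rows of `basis`."""
    B = np.array(basis, dtype=float)
    n = B.shape[0]
    Bs, mu = gram_schmidt(B)
    k = 1
    while k < n:
        # Size reduction: make |mu[k, j]| <= 1/2 for all j < k.
        for j in range(k - 1, -1, -1):
            q = int(np.rint(mu[k, j]))
            if q != 0:
                B[k] = B[k] - q * B[j]
                Bs, mu = gram_schmidt(B)
        # Lovász condition: advance if it holds, otherwise swap b_k and b_{k-1} and step back.
        if Bs[k] @ Bs[k] >= (delta - mu[k, k - 1] ** 2) * (Bs[k - 1] @ Bs[k - 1]):
            k += 1
        else:
            B[[k, k - 1]] = B[[k - 1, k]]
            Bs, mu = gram_schmidt(B)
            k = max(k - 1, 1)
    return B

R = lll_reduce([[1, 1, 1], [-1, 0, 2], [3, 5, 6]])
print(R)                                            # rows (0, 1, 0), (1, 0, 1), (-1, 0, 2)
```

The reduced basis spans the same lattice (|det| = 3 in this example), and its first vector is a shortest lattice vector here.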

We note that (IV.6) is under-determined, as the matrix C is of rank N² − rank(A) = N² − (N − 1). The LLL algorithm works with under-determined systems, but in our case we can reduce the problem to a determined system, since we can fix the first N − 1 entries of χvec to be zero.² Once we have estimated χvec, we solve (IV.5) with p = 1. This approach is summarized in Algorithm 6.
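The integer unknowns χ arise purely from phase wrapping. The sketch below assumes the convention Ψ[k1, k2] + 2πχ[k1, k2] = ψ[k1] − ψ[k2] + ψ[k2 − k1], with Ψ and ψ taken in (−π, π] as returned by `np.angle`. It computes χ for a random complex signal and checks that χ is integer-valued. Because the right-hand side lies strictly between −3π and 3π, χ can only take the values −1, 0 or 1.

```python
import numpy as np

rng = np.random.default_rng(4)
N = 10
x = rng.standard_normal(N) + 1j * rng.standard_normal(N)
psi = np.angle(np.fft.fft(x))                       # Fourier phases psi[k] in (-pi, pi]
yt = np.exp(1j * psi)                               # y~[k] = e^{i psi[k]}
k = np.arange(N)
K1, K2 = np.meshgrid(k, k, indexing="ij")           # K1[k1, k2] = k1, K2[k1, k2] = k2
D = (K2 - K1) % N                                   # D[k1, k2] = (k2 - k1) mod N
Psi = np.angle(yt[K1] * np.conj(yt[K2]) * yt[D])    # wrapped phases of the normalized bispectrum
rhs = psi[K1] - psi[K2] + psi[D]                    # right-hand side of the phase relation
chi = (rhs - Psi) / (2 * np.pi)                     # integer-valued under the assumed convention
print(np.allclose(chi, np.round(chi)))              # True
print(set(np.round(chi).astype(int).ravel()) <= {-1, 0, 1})   # True
```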

Algorithm 6.

Phase unwrapping by integer programming

Input: The normalized bispectrum B̃[k1, k2] = e^{iΨ[k1,k2]}

Output: ŷ, an estimate of ỹ

Return: ŷ ← e^{iψ̂}

V. Analysis of optimization over phases

In this section, we study the non-convex optimization problem (III.4) and give more implementation details to solve it, since numerical experiments identify this as the method of choice for MRA from invariant features among all methods compared. We start by considering the general case of a complex signal x ∈ ℂN and consider the real case in Appendix B. Recall that we aim to maximize

where the inner product is defined by (III.2), W is a real weighting matrix and W^{(2)} := W ∘ W. The optimization problem lives on a manifold, that is, a smooth nonlinear space. Indeed, the smooth cost function f(z) is to be maximized over the set

$$\mathcal{M} = \{ z \in \mathbb{C}^N : |z[k]| = 1 \text{ for } k = 0, \ldots, N-1 \},$$

which is a Cartesian product of N unit circles in the complex plane (a torus). Theory and algorithms for optimization on manifolds can be found in the monograph [71]. We follow this formalism here. Details can also be found in [17], which deals with the similar problem of phase synchronization, using similar techniques. For the numerical experiments below, we use the toolbox Manopt which provides implementations of various optimization algorithms on manifolds [63].

Under mild conditions, the global optima of (III.4) correspond exactly to ỹ up to integer time shifts. This fact is proven in Appendix G.

Lemma V.1

For N ≥ 3, let x ∈ ℂN be a signal whose DFT y is nonzero for frequencies k in {1, …, K}, possibly also for k = 0, and zero otherwise. Up to integer time shifts, x is determined exactly by its bispectrum B provided . Furthermore, the global optima of (III.4) correspond exactly to the relevant phases of y—up to the effects of integer time shifts—provided W[k, ℓ] is positive when B[k, ℓ] ≠ 0.

The problem at hand is

$$\max_{z \in \mathcal{M}} \; f(z). \qquad \text{(V.1)}$$

This is smooth but non-convex, so that in general it is hard to compute the global optimum. We derive first- and second-order necessary optimality conditions. Points which satisfy these conditions are called critical and second-order critical points, respectively. Known algorithms converge to critical points (e.g., Riemannian gradient descent) and even to second-order critical points (e.g., Riemannian trust-regions) regardless of initialization [71], [62], [64]. Empirically, despite non-convexity, the global optimum appears to be computable reliably in favorable noise regimes.

As we proceed to consider optimization algorithms for (III.4), the gradient of f will come into play:

where Madj: ℂ^{N×N} → ℂ^N is the adjoint of M with respect to the inner product 〈·, ·〉. Formally, the adjoint is defined such that, for any z ∈ ℂ^N and X ∈ ℂ^{N×N}, 〈M(z), X〉 = 〈z, Madj(X)〉.

Specifically, in Appendix H we show that

| (V.2) |

where Tk is a circulant matrix with ones in its kth (circular) diagonal and zero otherwise, namely,

| (V.3) |

As it turns out, under the symmetries of the problem at hand, there is no need to evaluate Madj explicitly. Indeed, B̃ obeys

$$\tilde{B}[k_2 - k_1, k_2] = \tilde{B}[k_1, k_2] \quad \text{for all } k_1, k_2. \qquad \text{(V.4)}$$

This property is preserved when B̃ is obtained by averaging bispectra of multiple observations, as in (II.8). Assuming the same symmetry for the real weights W, we find below that Madj(zz*) = M(z)z. See Appendix I.

Lemma V.2

If B̃[k2 − k1, k2] = B̃[k1, k2] and W[k2 − k1, k2] = W[k1, k2] for all k1, k2, then Madj(zz*) = M(z)z for all z ∈ ℂN.

Thus, under the symmetries assumed in Lemma V.2, the gradient of f simplifies and we get a simple expression for the Hessian as well:

| (V.5) |

For unconstrained optimization, the first-order necessary optimality conditions are ∇f(z) = 0. In the presence of the constraint z ∈ ℳ, the conditions are different. Namely, following [71, eq. (3.37)], since ℳ is a submanifold of ℂ^N, the first-order necessary optimality conditions state that the orthogonal projection of the gradient ∇f(z) onto the tangent space to ℳ at z must vanish. The result of this projection is called the Riemannian gradient. Formally, the tangent space is obtained by linearizing (differentiating) the constraints |z[k]|² = 〈z[k], z[k]〉 = 1 for all k, yielding

$$T_z\mathcal{M} = \{ \dot z \in \mathbb{C}^N : \langle \dot z[k], z[k] \rangle = 0 \text{ for } k = 0, \ldots, N-1 \}.$$

Orthogonal projection of u ∈ ℂ^N onto the tangent space Tzℳ can be computed entry-wise by subtracting from each u[k] its component aligned with z[k]. Let Projz: ℂ^N → Tzℳ denote this projection. This operation admits a compact matrix notation as

$$\mathrm{Proj}_z(u) = u - \mathrm{Re}\{\mathrm{ddiag}(u z^*)\}\, z,$$

where ddiag: ℂN×N → ℂN×N sets all non-diagonal entries of a matrix to zero. Equipped with this notion and the expression for ∇f(z) (V.5), it follows that the Riemannian gradient of f at z on ℳ is

with

Lemma V.3

If z ∈ ℳ is optimal for (V.1), then grad f(z) = 0; equivalently, diag(∇f(z)z*) = ∇f(z) ∘ z̄ is real.

Proof

See [72, Rem. 4.2 and Cor. 4.2]. For the equivalence, notice that Projz(u) = 0 if and only if $u[k] = \mathrm{Re}\{u[k]\overline{z[k]}\}\, z[k]$ for all k. Then multiply both sides by $\overline{z[k]}$, using |z[k]| = 1.
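The projection Projz and the equivalence in Lemma V.3 can be checked numerically. The sketch assumes that the inner product (III.2) restricted to ℂ is 〈a, b〉 = Re(a b̄). It implements Projz(u) = u − Re(u ∘ z̄) ∘ z entry-wise. `proj_tangent` is a hypothetical name.

```python
import numpy as np

def proj_tangent(z, u):
    """Proj_z(u) = u - Re(u ∘ conj(z)) ∘ z: entry-wise projection onto T_z M, assuming |z[k]| = 1."""
    return u - np.real(u * np.conj(z)) * z

rng = np.random.default_rng(5)
N = 6
z = np.exp(2j * np.pi * rng.random(N))              # a point on the torus M
u = rng.standard_normal(N) + 1j * rng.standard_normal(N)
v = proj_tangent(z, u)
print(np.allclose(np.real(v * np.conj(z)), 0))      # True: v is tangent at z
print(np.allclose(proj_tangent(z, v), v))           # True: the projection is idempotent
# Lemma V.3: Proj_z(u) = 0 exactly when u ∘ conj(z) is real, e.g. u = r ∘ z with r real.
r = rng.standard_normal(N)
print(np.allclose(proj_tangent(z, r * z), 0))       # True
```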

A point z which satisfies these conditions is called a critical point. Likewise, we can define a notion of Riemannian Hessian as the linear, self-adjoint operator on Tzℳ which captures infinitesimal changes in the Riemannian gradient around z. Without getting into technical details, we follow [71, eq. (5.15)] and define (with D the directional derivative operator):

where DD(z)[ż] is a real, diagonal matrix. Its contribution to the Hessian is zero, since (DD(z)[ż])z vanishes under the projection Projz. Hence,

The Riemannian Hessian intervenes in the second-order necessary optimality conditions as follows.

Lemma V.4

If z ∈ ℳ is optimal for (V.1), then grad f(z) = 0 and Hess f(z) ⪯ 0, that is, for all ż ∈ Tzℳ we have

Proof

See [72, Rem. 4.2 and Cor. 4.2]. In the equality, we used the fact that Projz is self-adjoint and ż ∈ Tzℳ.

A point z which satisfies these conditions is called a second-order critical point. With unit weights, the following lemma shows that, in the noiseless case, second-order critical points z cannot have an arbitrarily bad objective value f(z). This result is weak, however. Empirically, in the noiseless case, local optimization methods consistently converge to global optima, whose value is N², which suggests that all second-order critical points are global optima in this simplified scenario. We do not have a proof of this stronger conjecture. We nevertheless provide the lemma below because it is analogous to [17, Lemma 14], which, in that reference, is a key step toward proving global optimality of second-order critical points.

Lemma V.5

In the absence of noise and with unit weights, a second-order critical point z of (V.1) satisfies

In particular, this implies

Proof

See Appendix J for the proof of the inequality. It follows from two key considerations. First, because z is a critical point, Lemma V.3 indicates that ∇f(z) ∘ z̄ is real. Second, because z is second-order critical, the Riemannian Hessian at z must be negative semidefinite by Lemma V.4. Applying this condition to all tangent directions at z that perturb only one phase at a time yields the desired inequality. The fact that 3f(z) = 〈z, ∇f(z)〉 follows from (V.5).

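One final ingredient needed to optimize f over ℳ is a means of moving from a current iterate z ∈ ℳ to the next by following a tangent vector ż. A simple means of achieving this is through a retraction [71, Def. 4.1.1]. For ℳ, an obvious retraction normalizes each entry:

$$\mathrm{Retr}_z(\dot z)[k] = \frac{z[k] + \dot z[k]}{|z[k] + \dot z[k]|}, \qquad k = 0, \ldots, N-1. \qquad \text{(V.6)}$$

This retraction is well defined, since ż[k] is orthogonal to z[k] and hence |z[k] + ż[k]| ≥ 1. With the formalism of (V.1) and the above derivations, we can now run a local Riemannian optimization algorithm. As an example, the Riemannian gradient ascent algorithm would iterate the following:

$$z^{(t+1)} = \mathrm{Retr}_{z^{(t)}}\!\left(\eta^{(t)}\, \mathrm{grad} f(z^{(t)})\right),$$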

where η(t) > 0 is an appropriately chosen step size and z(0) ∈ ℳ is an initial guess. It is relatively easy to choose the step sizes such that the sequence z(t) converges to critical points regardless of z(0), with a linear local convergence rate [71, §4]. In practice, we prefer to use the Riemannian trust-region method (RTR) [62], whose usage is simplified by the toolbox Manopt [63]. RTR enjoys global convergence to second-order critical points [64] and a quadratic local convergence rate.
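The following is a minimal NumPy sketch of Riemannian gradient ascent on ℳ, built on several assumptions:

- unit weights W = 1;
- the cost f(z) = Re Σ conj(B̃[k1, k2]) z[k1] conj(z[k2]) z[k2 − k1], which is our reading of (III.4) with unit weights and attains its maximum N² at z = ỹ;
- the real inner product 〈a, b〉 = Re(aᴴb);
- a Euclidean gradient derived by hand with Wirtinger calculus, rather than taken from (V.5);
- the entry-wise normalizing retraction and a fixed step size.

The experiments in this paper use RTR in Manopt instead. All function names here are hypothetical.

```python
import numpy as np

def index_grids(N):
    k = np.arange(N)
    K1, K2 = np.meshgrid(k, k, indexing="ij")       # K1[k1, k2] = k1, K2[k1, k2] = k2
    return K1, K2, (K2 - K1) % N                    # third grid: (k2 - k1) mod N

def f(z, Bt):
    """Assumed unit-weight cost: Re sum_{k1,k2} conj(B~[k1,k2]) z[k1] conj(z[k2]) z[k2-k1]."""
    K1, K2, D = index_grids(len(z))
    return np.real(np.sum(np.conj(Bt) * z[K1] * np.conj(z[K2]) * z[D]))

def egrad(z, Bt):
    """Euclidean gradient for <a, b> = Re(a^H b): with f = Re g, grad = dg/dconj(z) + conj(dg/dz)."""
    N = len(z)
    K1, K2, D = index_grids(N)
    Bc = np.conj(Bt)
    a = np.sum(Bc * np.conj(z[K2]) * z[D], axis=1)              # dg/dz through the z[k1] factor
    b = np.zeros(N, dtype=complex)
    np.add.at(b, D.ravel(), (Bc * z[K1] * np.conj(z[K2])).ravel())  # dg/dz through z[k2 - k1]
    c = np.sum(Bc * z[K1] * z[D], axis=0)                       # dg/dconj(z) through conj(z[k2])
    return c + np.conj(a + b)

def rgrad(z, Bt):
    g = egrad(z, Bt)
    return g - np.real(g * np.conj(z)) * z          # Proj_z: remove the component along each z[k]

def retract(z, zdot):
    w = z + zdot
    return w / np.abs(w)                            # entry-wise normalization back to |z[k]| = 1

def riemannian_gradient_ascent(Bt, z0, step=1e-3, iters=500):
    z = z0.copy()
    for _ in range(iters):
        z = retract(z, step * rgrad(z, Bt))
    return z

rng = np.random.default_rng(6)
N = 8
x = rng.standard_normal(N) + 1j * rng.standard_normal(N)
yt = np.fft.fft(x) / np.abs(np.fft.fft(x))
K1, K2, D = index_grids(N)
Bt = yt[K1] * np.conj(yt[K2]) * yt[D]               # noiseless normalized bispectrum
z0 = np.exp(2j * np.pi * rng.random(N))             # random initialization on the torus
z = riemannian_gradient_ascent(Bt, z0)
print(f(z0, Bt), f(z, Bt), N**2)                    # f increases; its maximum value is N**2
```

On noiseless data, one can check that f(ỹ) = N² and that the Riemannian gradient vanishes at ỹ. This sketch does not guarantee that a fixed-step ascent from a random start reaches a global maximizer.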

In this section, the analysis focused on complex signals. For real signals, we can follow the same methodology while taking the symmetry in the Fourier domain into account. This analysis is given in Appendix B.

VI. Expectation maximization

In this section, we detail the expectation maximization algorithm (EM) [73] applied to MRA. As the numerical experiments in Section VII demonstrate, EM achieves excellent accuracy in estimating the signal. However, compared to the invariant features approach proposed in this paper, it is significantly slower and requires many passes over the data (thus excluding online processing).

Let X = [ξ1, …, ξM] be the data matrix of size N × M, following the MRA model (I.1). The maximum marginalized likelihood estimator (MMLE) for the signal x given X is the maximizer of the likelihood function L(x; X) = p(X|x), the probability density of X given x. This density could in principle be evaluated by marginalizing the joint distribution p(X, r|x) over the unknown shifts r ∈ {0, …, N − 1}^M. This, however, is intractable, as it involves summing over N^M terms.

Alternatively, EM approximates the MMLE as follows. Given a current estimate xk of the signal, consider the expected value of the log-likelihood function with respect to the conditional distribution of r given X and xk:

$$Q(x \mid x_k) = \mathbb{E}_{r \mid X, x_k}\left[ \log p(X, r \mid x) \right]. \qquad \text{(VI.1)}$$

This step is called the E-step. Then, iterate by computing the M-step:

$$x_{k+1} = \arg\max_{x} \; Q(x \mid x_k). \qquad \text{(VI.2)}$$

For the MRA model, this can be done in closed form. Indeed, the log-likelihood function follows from the i.i.d. Gaussian noise model:

| (VI.3) |

To take the expectation with respect to r, we need to compute, for each observation j and each shift ℓ, the probability that the shift rj is equal to ℓ, given X and assuming x = xk. This also follows easily from the i.i.d. Gaussian noise model:

| (VI.4) |

These probabilities are normalized so that, for each j, they sum to one over ℓ. This allows us to write Q explicitly:

This is a concave quadratic function of x, with maximizer

| (VI.5) |

In words: given an estimator xk, the next estimator is obtained by averaging all shifted versions of all observations, weighted by the shift probabilities computed in (VI.4). Considering all shifts of all observations would, in principle, induce an iteration complexity of O(MN²). Fortunately, for each observation, the matrix of its shifted versions is circulant, which makes it possible to use the FFT to reduce the overall computational cost to O(MN log N). See the available code for details. We note that Matlab naturally parallelizes the computations over M.
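The following is a vectorized NumPy sketch of one EM iteration. It assumes that each ξj is a circular shift of x plus white Gaussian noise with known σ, and that the prior on the shifts is uniform. Both the E-step (posterior shift probabilities) and the M-step (weighted average of back-shifted observations) are computed with FFT-based circular cross-correlations, which gives O(MN log N) work per iteration. `em_step` is a hypothetical name, and this is not the authors' Matlab code.

```python
import numpy as np

def em_step(X, x, sigma):
    """One EM iteration for MRA (hypothetical helper).

    X: N x M data matrix (one observation per column); x: current real estimate x_k (length N).
    """
    fX = np.fft.fft(X, axis=0)
    # E-step: corr[l, j] = <xi_j, np.roll(x, l)>, a circular cross-correlation computed with FFTs.
    corr = np.fft.ifft(fX * np.conj(np.fft.fft(x))[:, None], axis=0).real
    # The posterior of r_j = l is proportional to exp(corr[l, j] / sigma^2): with a uniform prior,
    # ||xi_j||^2 and ||x||^2 do not depend on l. Subtract the max for numerical stability.
    logw = corr / sigma**2
    logw -= logw.max(axis=0, keepdims=True)
    W = np.exp(logw)
    W /= W.sum(axis=0, keepdims=True)               # each column sums to one over l
    # M-step: x_{k+1}[n] = (1/M) sum_j sum_l W[l, j] xi_j[n + l], again a circular correlation.
    avg = np.fft.ifft(fX * np.conj(np.fft.fft(W, axis=0)), axis=0).real
    return avg.mean(axis=1)
```

In a full run, `em_step` would be applied repeatedly, starting from a random guess, until the relative change between consecutive estimates (up to shifts) falls below a tolerance.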

In practice, we set x0 ~ 𝒩(0, I_N) to be a random guess. Furthermore, for M ≥ 3000, we first execute 3000 batch iterations, where the EM update is computed based on a random sample of 1000 observations (fresh sample at each iteration). This inexpensively transforms the random initialization into a ballpark estimate of the signal. The algorithm then proceeds with full-data iterations until the relative change between two consecutive estimates drops below 10⁻⁵ (in ℓ2-norm, up to shifts).

VII. Numerical Experiments

This section is devoted to numerical experiments examining all proposed algorithms. Code for all algorithms and for reproducing the experiments is available online.³ The experiments were conducted as follows. The true signal x of length N = 41 is a fixed window of height 1 and width 21. With this signal, the signal-to-noise ratio is SNR = ‖x‖²/(Nσ²) = 21/(41σ²). We generated a set of M shifted noisy versions of x according to the MRA model (I.1). Each shift rj was drawn uniformly at random from {0, …, N − 1}, and εj ~ 𝒩(0, σ²I) for all j.

The relative recovery error for a single experiment is defined as the ℓ2 distance between x and the best circularly shifted copy of x̂, divided by ‖x‖2, where x̂ is the estimate of the signal. All results are averaged over 20 repetitions. While we present here results for a specific signal, alternative signal models (e.g., random signals) showed similar numerical behavior.
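The experimental setup can be mirrored with a short NumPy sketch. It includes a window signal, MRA observations with uniformly random circular shifts and white Gaussian noise, the oracle that knows the shifts and averages the noise out, and a relative error computed up to circular shifts. The exact form of the error metric is an assumption here (minimum over circular shifts of the relative ℓ2 error). All helper names are hypothetical.

```python
import numpy as np

def window_signal(N=41, width=21):
    """Window of height 1 and width `width`; its position is irrelevant up to shifts."""
    x = np.zeros(N)
    x[:width] = 1.0
    return x

def generate_mra(x, M, sigma, rng):
    """N x M matrix whose columns are circularly shifted copies of x plus N(0, sigma^2 I) noise."""
    N = len(x)
    shifts = rng.integers(0, N, size=M)             # r_j uniform on {0, ..., N-1}
    clean = np.stack([np.roll(x, r) for r in shifts], axis=1)
    return clean + sigma * rng.standard_normal((N, M)), shifts

def oracle_estimate(X, shifts):
    """Undo the known shifts and average: the Gaussian noise is simply averaged out."""
    return np.mean([np.roll(X[:, j], -r) for j, r in enumerate(shifts)], axis=0)

def relative_error(xhat, x):
    """Assumed metric: min over circular shifts s of ||roll(xhat, s) - x|| / ||x||."""
    return min(np.linalg.norm(np.roll(xhat, s) - x) for s in range(len(x))) / np.linalg.norm(x)

rng = np.random.default_rng(0)
x = window_signal()
X, shifts = generate_mra(x, M=1000, sigma=1.0, rng=rng)
print(relative_error(oracle_estimate(X, shifts), x))   # small, roughly sigma * sqrt(N / M) / ||x||
```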

The following figures compare the recovery errors for all proposed algorithms, with random initialization for those that need initialization. The non-convex algorithm on the manifold of phases, Algorithm 2, runs the Riemannian trust-region method (RTR) [62] using the toolbox Manopt [63]. Algorithm 3 runs 15 iterations with a warm start using the same toolbox. For the phase unwrapping algorithm, Algorithm 6, we use an implementation of LLL available in the MILES package [70]. The SDP is solved with CVX [65]. The EM algorithm is implemented as explained in Section VI. We compared the algorithms with an oracle who knows the random shifts rj and therefore simply averages out the Gaussian noise. Experiments are run on a computer with 30 CPUs available. These CPUs are used to compute the invariants in parallel (with Matlab’s parfor), while the EM algorithm benefits from parallelism to run the many thousands of FFTs it requires efficiently (built-in Matlab). The algorithms that need ỹ[0] and ỹ[1] are given the correct values.

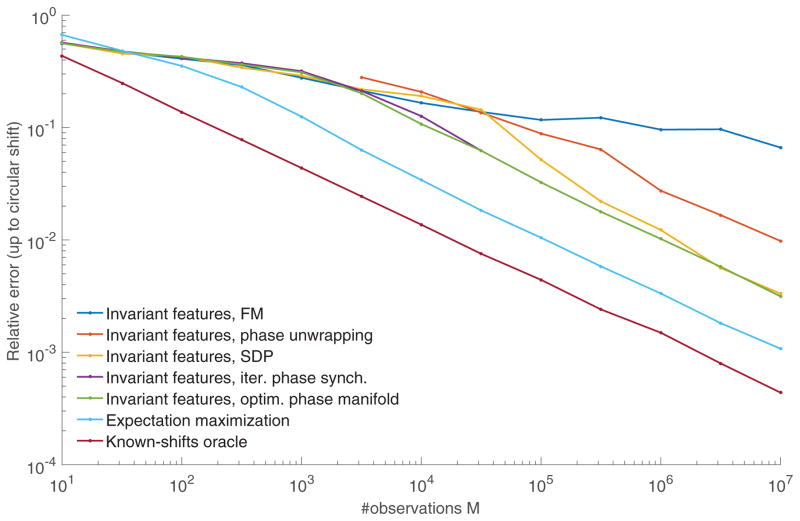

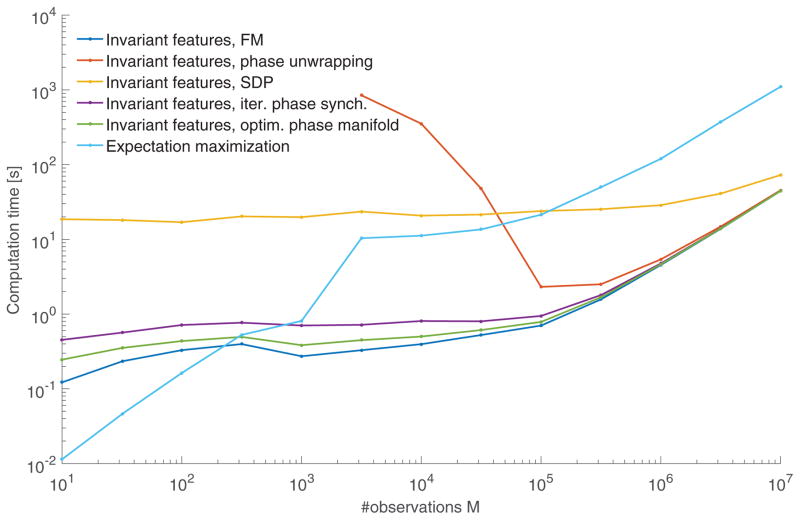

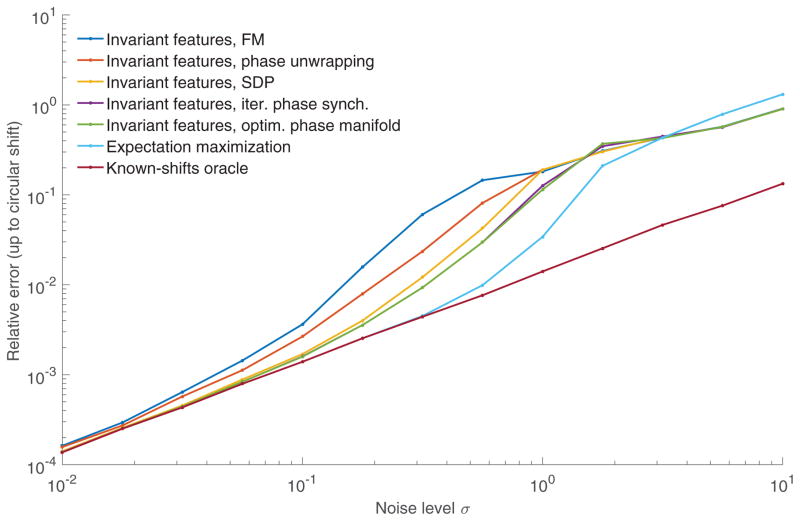

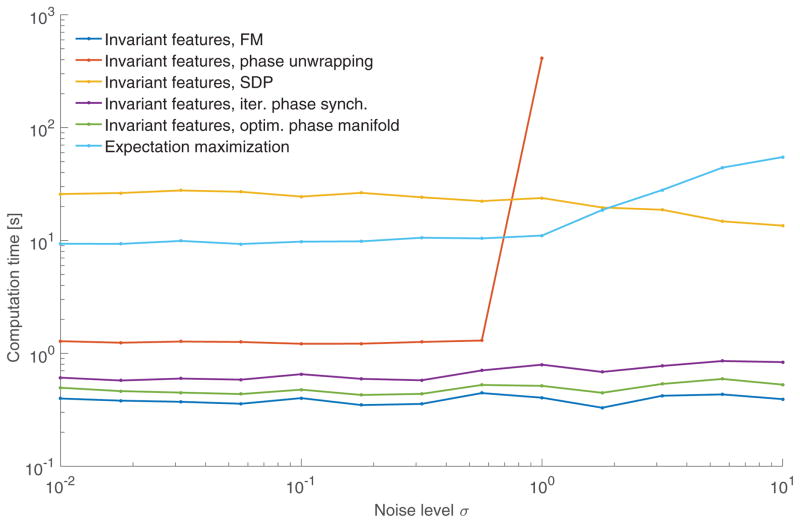

Figures VII.1 and VII.2 present the recovery error and computation time of all algorithms as a function of the number of observations M for fixed noise level σ = 1. Of course, the oracle who knows the shifts of the observations is unbeatable. Algorithms 2 and 3 outperform all other invariant features methods. The inferior performance of the SDP might be explained by the fact that its objective is smooth and non-linear. SDPs with linear or piecewise linear objectives, in contrast, tend to promote “simple” (i.e., low-rank) solutions [74], [75, Remark 6.2]. Additionally, while this is not depicted in the figure, we note that for σ = 0 all methods achieve exact recovery up to machine precision. EM outperforms the best invariant features approaches by a factor of 3, at the cost of being significantly slower: for large M, the best invariant features approaches are faster than EM by a factor of 25. Note, however, that for M up to about 300, EM is faster than the other algorithms. For the invariant features approaches (aside from the SDP), almost all of the time is spent computing the bispectrum estimator, while inverting the bispectrum is relatively cheap.

Figure VII.1.

Relative recovery error for the signal x as a function of the number of observations M for fixed noise level σ = 1. The curves corresponding to the optim. phase manifold (Algorithm 2) and the iter. phase synch. (Algorithm 3) overlap.

Figure VII.2.

Average computation times corresponding to Figure VII.1.

Figures VII.3 and VII.4 show the recovery error and computation time as a function of the noise level σ with M = 10,000 observations. Surprisingly, for high noise level σ ≳ 3, the invariant features algorithms outperform EM.

Figure VII.3.

Relative recovery error for the signal x as a function of the noise level σ with M = 10,000 observations. The curves corresponding to the optim. phase manifold (Algorithm 2) and the iter. phase synch. (Algorithm 3) overlap.

Figure VII.4.

Average computation times corresponding to Figure VII.3.

VIII. Conclusions and perspective

The goal of this paper is twofold. First, we have suggested a new approach for the MRA problem based on features that are invariant under translations. This technique enables us to deal with any noise level as long as we have access to enough measurements; in particular, it achieves the optimal sample complexity of MRA. The invariant features approach has low computational complexity and requires less memory than alternative methods, such as EM. If a highly accurate solution is needed, it can therefore be used to initialize EM.

A main ingredient of the invariant features approach is estimating the signal’s Fourier phases by inverting the bispectrum. Hence, the second goal of this paper is to study algorithms for bispectrum inversion. We have proposed several algorithms for this task. In the presence of noise, the non-convex algorithms on the manifold of phases, namely, Algorithms 2 and 3, perform best. Empirically, these algorithms have a remarkable property: despite their non-convex landscape, they appear to converge to the target signal from random initialization. We provide some analysis for Algorithm 2, but this phenomenon is not yet well understood.

Our chief motivation for this work comes from the more involved problem of cryo-EM. In cryo-EM, a 3D object is estimated from its 2D projections at unknown rotations in a low SNR environment. One line of research for the object recovery is based on first estimating the unknown rotations [76], [77], [78]. However, the rotation estimation is performed in a very noisy environment and therefore might be inaccurate. An interesting question is to examine whether the 3D object can be estimated directly from the acquired data using features that are invariant under the unknown viewing directions [79].

Supplementary Material

Acknowledgments

The authors were partially supported by Award Number R01GM090200 from the NIGMS, FA9550-17-1-0291 from AFOSR, Simons Investigator Award and Simons Collaboration on Algorithms and Geometry from Simons Foundation, and the Moore Foundation Data-Driven Discovery Investigator Award. NB is partially supported by NSF grant DMS-1719558. ZZ is partially supported by National Center for Supercomputing Applications Faculty Fellowship and University of Illinois at Urbana-Champaign College of Engineering Strategic Research Initiative.

The authors are grateful to Afonso Bandeira, Roy Lederman, William Leeb, Nir Sharon and Susannah Shoemaker for many insightful discussions. We also thank the reviewers for their useful comments, and particularly the anonymous reviewer who proposed a simpler proof for Lemma IV.2.

Footnotes

If σ is not known, it can be estimated from the data as

We omit the proof of this property here and only mention that it is based on the derivation in [67].

Contributor Information

Tamir Bendory, Princeton University in PACM and the Mathematics Department.

Nicolas Boumal, Princeton University in PACM and the Mathematics Department.

Chao Ma, Princeton University in PACM and the Mathematics Department.

Zhizhen Zhao, University of Illinois at Urbana-Champaign in the Department of Electrical and Computer Engineering.

Amit Singer, Princeton University in PACM and the Mathematics Department.

References

- 1.Diamond R. On the multiple simultaneous superposition of molecular structures by rigid body transformations. Protein Science. 1992;1(10):1279–1287. doi: 10.1002/pro.5560011006. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 2.Theobald DL, Steindel PA. Optimal simultaneous superpositioning of multiple structures with missing data. Bioinformatics. 2012;28(15):1972–1979. doi: 10.1093/bioinformatics/bts243. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Park W, Midgett CR, Madden DR, Chirikjian GS. A stochastic kinematic model of class averaging in single-particle electron microscopy. The International journal of robotics research. 2011;30(6):730–754. doi: 10.1177/0278364911400220. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 4.Park W, Chirikjian GS. An assembly automation approach to alignment of noncircular projections in electron microscopy. IEEE Transactions on Automation Science and Engineering. 2014;11(3):668–679. [Google Scholar]

- 5.Zwart JP, van der Heiden R, Gelsema S, Groen F. Fast translation invariant classification of HRR range profiles in a zero phase representation. IEE Proceedings-Radar, Sonar and Navigation. 2003;150(6):411–418. [Google Scholar]

- 6.Gil-Pita R, Rosa-Zurera M, Jarabo-Amores P, López-Ferreras F. Using multilayer perceptrons to align high range resolution radar signals. International Conference on Artificial Neural Networks; Springer; 2005. pp. 911–916. [Google Scholar]

- 7.Dryden IL, Mardia KV. Statistical shape analysis. Vol. 4. J. Wiley; Chichester: 1998. [Google Scholar]

- 8.Foroosh H, Zerubia JB, Berthod M. Extension of phase correlation to subpixel registration. IEEE transactions on image processing. 2002;11(3):188–200. doi: 10.1109/83.988953. [DOI] [PubMed] [Google Scholar]

- 9.Robinson D, Farsiu S, Milanfar P. Optimal registration of aliased images using variable projection with applications to super-resolution. The Computer Journal. 2009;52(1):31–42. [Google Scholar]

- 10.Abbe E, Pereira J, Singer A. Sample complexity of the boolean multireference alignment problem,” to appear. The IEEE International Symposium on Information Theory (ISIT); 2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 11.Bandeira A, Rigollet P, Weed J. Optimal rates of estimation for multi-reference alignment. 2017 arXiv preprint arXiv:1702.08546. [Google Scholar]

- 12.Bartesaghi A, Merk A, Banerjee S, Matthies D, Wu X, Milne JL, Subramaniam S. 2.2 Å resolution cryo-EM structure of β-galactosidase in complex with a cell-permeant inhibitor. Science. 2015;348(6239):1147–1151. doi: 10.1126/science.aab1576. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Sirohi D, Chen Z, Sun L, Klose T, Pierson TC, Rossmann MG, Kuhn RJ. The 3.8 Å resolution cryo-EM structure of Zika virus. Science. 2016;352(6284):467–470. doi: 10.1126/science.aaf5316. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Frank J. Three-dimensional electron microscopy of macromolecular assemblies: visualization of biological molecules in their native state. Oxford University Press; 2006. [Google Scholar]

- 15.van Heel M, Gowen B, Matadeen R, Orlova EV, Finn R, Pape T, Cohen D, Stark H, Schmidt R, Schatz M, et al. Single-particle electron cryo-microscopy: towards atomic resolution. Quarterly reviews of biophysics. 2000;33(04):307–369. doi: 10.1017/s0033583500003644. [DOI] [PubMed] [Google Scholar]

- 16.Singer A. Angular synchronization by eigenvectors and semidefinite programming. Applied and computational harmonic analysis. 2011;30(1):20–36. doi: 10.1016/j.acha.2010.02.001. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Boumal N. Nonconvex phase synchronization. SIAM Journal on Optimization. 2016;26(4):2355–2377. [Google Scholar]

- 18.Perry A, Wein AS, Bandeira AS, Moitra A. Message-passing algorithms for synchronization problems over compact groups. 2016 arXiv preprint arXiv:1610.04583. [Google Scholar]

- 19.Chen Y, Candes E. The projected power method: An efficient algorithm for joint alignment from pairwise differences. 2016 arXiv preprint arXiv:1609.05820. [Google Scholar]

- 20.Bandeira AS, Boumal N, Singer A. Tightness of the maximum likelihood semidefinite relaxation for angular synchronization. Mathematical Programming. 2017;163(1):145–167. [Google Scholar]

- 21.Zhong Y, Boumal N. Near-optimal bounds for phase synchronization. 2017 arXiv preprint arXiv:1703.06605. [Google Scholar]

- 22.Bandeira AS, Charikar M, Singer A, Zhu A. Multireference alignment using semidefinite programming. Proceedings of the 5th conference on Innovations in theoretical computer science; ACM; 2014. pp. 459–470. [Google Scholar]

- 23.Chen Y, Guibas L, Huang Q. Near-optimal joint object matching via convex relaxation. Proceedings of the 31st International Conference on Machine Learning (ICML-14); 2014. pp. 100–108. [Google Scholar]

- 24.Kosir P, DeWall R, Mitchell RA. A multiple measurement approach for feature alignment. Aerospace and Electronics Conference, 1995. NAECON 1995., Proceedings of the IEEE 1995 National; IEEE; 1995. pp. 94–101. [Google Scholar]

- 25.Aguerrebere C, Delbracio M, Bartesaghi A, Sapiro G. Fundamental limits in multi-image alignment. IEEE Transactions on Signal Processing. 2016;64(21):5707–5722. [Google Scholar]

- 26.Robinson D, Milanfar P. Fundamental performance limits in image registration. IEEE Transactions on Image Processing. 2004;13(9):1185–1199. doi: 10.1109/tip.2004.832923. [DOI] [PubMed] [Google Scholar]

- 27.Weiss A, Weinstein E. Fundamental limitations in passive time delay estimation–Part I: Narrow-band systems. IEEE Transactions on Acoustics, Speech, and Signal Processing. 1983;31(2):472–486. [Google Scholar]

- 28.Weinstein E, Weiss A. Fundamental limitations in passive time-delay estimation–Part II: Wide-band systems. IEEE transactions on acoustics, speech, and signal processing. 1984;32(5):1064–1078. [Google Scholar]

- 29.Perry A, Weed J, Bandeira A, Rigollet P, Singer A. The sample complexity of multi-reference alignment. 2017 arXiv preprint arXiv:1707.00943. [Google Scholar]

- 30.Brockett PL, Hinich MJ, Patterson D. Bispectral-based tests for the detection of gaussianity and linearity in time series. Journal of the American Statistical Association. 1988;83(403):657–664. [Google Scholar]

- 31.Bartolo N, Komatsu E, Matarrese S, Riotto A. Non-gaussianity from inflation: theory and observations. Physics Reports. 2004;402(3):103–266. [Google Scholar]

- 32.Luo X. The angular bispectrum of the cosmic microwave background. The Astrophysical journal. 1994;427(2):L71–L71. [Google Scholar]

- 33.Wang L, Kamionkowski M. Cosmic microwave background bispectrum and inflation. Physical Review D. 2000;61(6):063504. [Google Scholar]

- 34.Matsuoka T, Ulrych TJ. Phase estimation using the bispectrum. Proceedings of the IEEE. 1984;72(10):1403–1411. [Google Scholar]

- 35.Chang MM, Tekalp AM, Erdem AT. Blur identification using the bispectrum. IEEE transactions on signal processing. 1991;39(10):2323–2325. [Google Scholar]

- 36.Chen T-w, Jin W-d, Li J. Feature extraction using surrounding-line integral bispectrum for radar emitter signal. 2008 IEEE International Joint Conference on Neural Networks (IEEE World Congress on Computational Intelligence); IEEE; 2008. pp. 294–298. [Google Scholar]

- 37.Ning T, Bronzino JD. Bispectral analysis of the rat EEG during various vigilance states. IEEE Transactions on Biomedical Engineering. 1989;36(4):497–499. doi: 10.1109/10.18759. [DOI] [PubMed] [Google Scholar]

- 38.Chen B, Petropulu AP. Frequency domain blind mimo system identification based on second-and higher order statistics. IEEE Transactions on Signal Processing. 2001;49(8):1677–1688. [Google Scholar]

- 39.Zhao Z, Singer A. Rotationally invariant image representation for viewing direction classification in cryo-EM. Journal of structural biology. 2014;186(1):153–166. doi: 10.1016/j.jsb.2014.03.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Mendel JM. Tutorial on higher-order statistics (spectra) in signal processing and system theory: Theoretical results and some applications. Proceedings of the IEEE. 1991;79(3):278–305. [Google Scholar]

- 41.Nikias CL, Raghuveer MR. Bispectrum estimation: A digital signal processing framework. Proceedings of the IEEE. 1987;75(7):869–891. [Google Scholar]

- 42.Marabini R, Carazo JM. Practical issues on invariant image averaging using the bispectrum. Signal processing. 1994;40(2–3):119–128. [Google Scholar]

- 43.Marabini R, Carazo JM. On a new computationally fast image invariant based on bispectral projections. Pattern recognition letters. 1996;17(9):959–967. [Google Scholar]

- 44.Petropulu AP, Pozidis H. Phase reconstruction from bispectrum slices. IEEE Transactions on Signal Processing. 1998;46(2):527–530. [Google Scholar]

- 45.Giannakis GB. Signal reconstruction from multiple correlations: frequency-and time-domain approaches. JOSA A. 1989;6(5):682–697. [Google Scholar]

- 46.Sadler BM, Giannakis GB. Shift-and rotation-invariant object reconstruction using the bispectrum. JOSA A. 1992;9(1):57–69. [Google Scholar]

- 47.Swami A, Giannakis G, Mendel J. Linear modeling of multidimensional non-gaussian processes using cumulants. Multidimensional Systems and Signal Processing. 1990;1(1):11–37. [Google Scholar]

- 48. Fienup JR. Phase retrieval algorithms: a comparison. Applied Optics. 1982;21(15):2758–2769. doi: 10.1364/AO.21.002758.

- 49. Shechtman Y, Eldar YC, Cohen O, Chapman HN, Miao J, Segev M. Phase retrieval with application to optical imaging: a contemporary overview. IEEE Signal Processing Magazine. 2015;32(3):87–109.

- 50. Jaganathan K, Oymak S, Hassibi B. Sparse phase retrieval: Convex algorithms and limitations. 2013 IEEE International Symposium on Information Theory (ISIT); IEEE; 2013. pp. 1022–1026.

- 51. Bendory T, Eldar YC, Boumal N. Non-convex phase retrieval from STFT measurements. IEEE Transactions on Information Theory. 2017.

- 52. Beinert R, Plonka G. Ambiguities in one-dimensional discrete phase retrieval from Fourier magnitudes. Journal of Fourier Analysis and Applications. 2015;21(6):1169–1198.

- 53. Bendory T, Beinert R, Eldar YC. Fourier phase retrieval: Uniqueness and algorithms. 2017. arXiv preprint arXiv:1705.09590.

- 54. Bendory T, Edidin D, Eldar YC. On signal reconstruction from FROG measurements. 2017. arXiv preprint arXiv:1706.08494.

- 55. Tukey JW. The spectral representation and transformation properties of the higher moments of stationary time series. In: Brillinger DR, editor. The Collected Works of John W. Tukey. Vol. 1, ch. 4. Wadsworth; 1984. pp. 165–184.

- 56. Yellott JI, Iverson GJ. Uniqueness properties of higher-order autocorrelation functions. JOSA A. 1992;9(3):388–404.

- 57. Kondor R. A novel set of rotationally and translationally invariant features for images based on the non-commutative bispectrum. 2007. arXiv preprint cs/0701127.

- 58. Kakarala R. Completeness of bispectrum on compact groups. 2009. arXiv preprint arXiv:0902.0196.

- 59. Kakarala R. The bispectrum as a source of phase-sensitive invariants for Fourier descriptors: a group-theoretic approach. Journal of Mathematical Imaging and Vision. 2012;44(3):341–353.

- 60. Kakarala R. Bispectrum on finite groups. 2009 IEEE International Conference on Acoustics, Speech and Signal Processing; IEEE; 2009. pp. 3293–3296.

- 61. Devroye L, Lerasle M, Lugosi G, Oliveira RI, et al. Sub-Gaussian mean estimators. The Annals of Statistics. 2016;44(6):2695–2725.

- 62. Absil PA, Baker CG, Gallivan KA. Trust-region methods on Riemannian manifolds. Foundations of Computational Mathematics. 2007;7(3):303–330.

- 63. Boumal N, Mishra B, Absil P-A, Sepulchre R. Manopt, a Matlab toolbox for optimization on manifolds. Journal of Machine Learning Research. 2014;15:1455–1459.

- 64. Boumal N, Absil P-A, Cartis C. Global rates of convergence for nonconvex optimization on manifolds. 2016. arXiv preprint arXiv:1605.08101.

- 65. Grant M, Boyd S, Ye Y. CVX: Matlab software for disciplined convex programming. 2008.

- 66. Bendory T, Sidorenko P, Eldar YC. On the uniqueness of FROG methods. IEEE Signal Processing Letters. 2017;24(5):722–726.

- 67. Marron J, Sanchez P, Sullivan R. Unwrapping algorithm for least-squares phase recovery from the modulo 2π bispectrum phase. JOSA A. 1990;7(1):14–20.

- 68. Lenstra AK, Lenstra HW, Lovász L. Factoring polynomials with rational coefficients. Mathematische Annalen. 1982;261(4):515–534.

- 69. Galbraith SD. Mathematics of public key cryptography. Cambridge University Press; 2012.

- 70. Chang XW, Zhou T. MILES: Matlab package for solving mixed integer least squares problems. GPS Solutions. 2007;11(4):289–294.

- 71. Absil P-A, Mahony R, Sepulchre R. Optimization algorithms on matrix manifolds. Princeton University Press; 2009.

- 72. Yang WH, Zhang LH, Song R. Optimality conditions for the nonlinear programming problems on Riemannian manifolds. Pacific Journal of Optimization. 2014;10(2):415–434.

- 73. Dempster A, Laird N, Rubin D. Maximum likelihood from incomplete data via the EM algorithm. Journal of the Royal Statistical Society, Series B (Methodological). 1977;39(1):1–38.

- 74. Pataki G. On the rank of extreme matrices in semidefinite programs and the multiplicity of optimal eigenvalues. Mathematics of Operations Research. 1998;23(2):339–358.

- 75. Boumal N. A Riemannian low-rank method for optimization over semidefinite matrices with block-diagonal constraints. 2015. arXiv preprint arXiv:1506.00575v2.

- 76. Singer A, Shkolnisky Y. Three-dimensional structure determination from common lines in cryo-EM by eigenvectors and semidefinite programming. SIAM Journal on Imaging Sciences. 2011;4(2):543–572. doi: 10.1137/090767777.

- 77. Shkolnisky Y, Singer A. Viewing direction estimation in cryo-EM using synchronization. SIAM Journal on Imaging Sciences. 2012;5(3):1088–1110. doi: 10.1137/120863642.

- 78. Wang L, Singer A, Wen Z. Orientation determination of cryo-EM images using least unsquared deviations. SIAM Journal on Imaging Sciences. 2013;6(4):2450–2483. doi: 10.1137/130916436.

- 79. Kam Z. The reconstruction of structure from electron micrographs of randomly oriented particles. Journal of Theoretical Biology. 1980;82(1):15–39. doi: 10.1016/0022-5193(80)90088-0.
