Abstract

Background

Traditional strategies for surveillance of surgical site infections (SSI) have multiple limitations, including delayed and incomplete outbreak detection. Statistical process control (SPC) methods address these deficiencies by combining longitudinal analysis with graphical presentation of data.

Methods

We performed a pilot study within a large network of community hospitals to evaluate performance of SPC methods for detecting SSI outbreaks. We applied conventional Shewhart and exponentially weighted moving average (EWMA) SPC charts to 10 previously investigated SSI outbreaks that occurred from 2003 to 2013. We compared the results of SPC surveillance to the results of traditional SSI surveillance methods. Then, we analysed the performance of modified SPC charts constructed with different outbreak detection rules, EWMA smoothing factors and baseline SSI rate calculations.

Results

Conventional Shewhart and EWMA SPC charts both detected 8 of the 10 SSI outbreaks analysed, in each case prior to the date of traditional detection. Among detected outbreaks, conventional Shewhart chart detection occurred a median of 12 months prior to outbreak onset and 22 months prior to traditional detection. Conventional EWMA chart detection occurred a median of 7 months prior to outbreak onset and 14 months prior to traditional detection. Modified Shewhart and EWMA charts additionally detected several outbreaks earlier than conventional SPC charts. Shewhart and EWMA charts had low false-positive rates when used to analyse separate control hospital SSI data.

Conclusions

Our findings illustrate the potential usefulness and feasibility of real-time SPC surveillance of SSI to rapidly identify outbreaks and improve patient safety. Further study is needed to optimise SPC chart selection and calculation, statistical outbreak detection rules and the process for reacting to signals of potential outbreaks.

INTRODUCTION

Surgical site infections (SSI) are the most common type of healthcare-associated infection (HAI).1–5 Patients who develop SSIs have longer postoperative hospitalisations, increased mortality and higher healthcare-related costs compared with patients without SSIs.6–8 Estimates of annual hospital costs of SSI range from €1.5 billion to €19 billion in Europe9 and from $3 billion to $10 billion in the USA.10

Hospitals currently spend considerable time and resources attempting to optimise SSI prevention measures, but few strategies for prevention of SSI are evidence based.11 Furthermore, no proven or widely accepted algorithm for SSI surveillance exists. In general, traditional SSI surveillance at an individual hospital involves a multistep process: data collection, rate calculation (typically on a quarterly or semiannual basis) and feedback to surgical personnel.12 SSI rates are often compared with previous rates at the same hospital and with external benchmarks, such as those established by the National Healthcare Safety Network (NHSN).13

Traditional approaches for SSI surveillance have several major deficiencies. First, the traditional approach is slow. Traditional statistical methods require aggregation of data over time, which delays analysis until sufficient data accumulate.14 Data accumulation often requires several months because SSI is a low-frequency event.15 Second, analyses based on average SSI rates during predefined and arbitrary time periods are associated with delayed outbreak detection and may fail to detect important SSI outbreaks altogether.16 For example, a signal from a cluster of SSIs that occurs during a single month may be diluted by accrual of additional data collected during subsequent months, prior to the next scheduled analysis. Third, investigators typically perform retrospective SSI analyses only periodically, which may delay detection of important outbreaks that occur between analyses. Finally, the use of external benchmarks, such as SSI rates published by the NHSN, is problematic. The NHSN publishes SSI rates for specific procedure types from aggregate national historical data that may not be applicable to an individual hospital currently experiencing an SSI outbreak. Therefore, in practice, hospital epidemiologists often do not independently detect an outbreak via real-time surveillance but rather are told about a suspected cluster of SSIs by a perceptive surgeon or microbiologist.
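The dilution effect described above can be illustrated with a small worked example. The Python sketch below uses entirely hypothetical numbers (a 1% baseline SSI rate and 100 procedures per month) and the standardised binomial z-score on which Shewhart p charts are based; the helper name `p_chart_z` is ours. A five-SSI cluster that clearly exceeds a 3 SD threshold when analysed monthly falls below it once the cluster month is pooled with two unremarkable months into a quarterly rate.

```python
import math


def p_chart_z(ssi: int, procedures: int, baseline_rate: float) -> float:
    """Standardised p-chart statistic: (observed rate - baseline rate) / binomial SD."""
    observed_rate = ssi / procedures
    binomial_sd = math.sqrt(baseline_rate * (1 - baseline_rate) / procedures)
    return (observed_rate - baseline_rate) / binomial_sd


# Hypothetical hospital: 1% baseline SSI rate and 100 procedures per month.
# Month 1 contains a cluster of 5 SSIs; months 2 and 3 each have 1 SSI.
monthly_z = p_chart_z(5, 100, 0.01)
quarterly_z = p_chart_z(5 + 1 + 1, 300, 0.01)  # same SSIs pooled over a quarter
print(f"monthly z = {monthly_z:.2f}, quarterly z = {quarterly_z:.2f}")
# monthly z = 4.02, quarterly z = 2.32
```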

Statistical process control (SPC) is a statistical approach that addresses several shortcomings of traditional SSI surveillance. SPC combines longitudinal analysis methods with graphical presentation of data to determine in real time whether variation exhibited by a process represents ‘common cause’ natural variation occurring by chance alone or ‘special cause’ unnatural variation occurring due to circumstances not previously inherent in the process.17 When the latter case occurs, the underlying occurrence rate changes (increases or decreases), and the process is said to be inconsistent, or in SPC terminology, ‘out of statistical control.’ Processes that are in control follow stable laws of probability and, therefore, have predictable future events, whereas future events from out-of-control processes are less predictable.

Commonly employed in manufacturing and other industries, SPC methods are increasingly being used as a tool for healthcare improvement.18 Prior healthcare-related analyses have included study of methicillin-resistant Staphylococcus aureus colonisation and infection rates,19 SSI following caesarean section,20 complications following cardiac surgery16 and a nationwide outbreak of Pseudomonas in Norway.21 Investigators in the preceding retrospective Norwegian study concluded that SPC methods would have detected the outbreak 9 weeks earlier than traditional surveillance, thereby potentially greatly decreasing the number of infected patients and associated deaths.

We hypothesised that (1) SPC methods would accurately detect outbreaks of SSI at individual hospitals in our network; (2) SPC detection would occur earlier than detection using traditional surveillance methods; and (3) certain SPC methods and calculation alternatives would perform best for detection of SSI outbreaks.22–24 Thus, we conducted a retrospective pilot study within a large network of community hospitals to determine the feasibility and potential usefulness of various SPC surveillance methods for SSI outbreak detection.

METHODS

Setting

The Duke Infection Control Outreach Network (DICON) is a network of more than 40 community hospitals in the Southeastern United States.25 Community hospitals within DICON receive expert infection control consultation, benchmark data on rates of SSI and other HAIs, detailed data analysis of time-trended surveillance data and educational services.26 Experienced infection preventionists prospectively enter data collected from patients undergoing more than 30 types of surgical procedures into the DICON Surgical Database. This database contains variables, such as type of surgical procedure, hospital, primary surgeon, procedure date and duration, patient American Society of Anesthesiologists classification system score,27 wound class and presence or absence of postoperative SSI, including causative organism. NHSN criteria are used to define and categorise SSIs.28

DICON hospitals detect potential SSI outbreaks by several mechanisms. DICON epidemiologists and infection preventionists perform regular surveillance for key NHSN procedures and compare individual member hospitals’ SSI rates with DICON benchmark rates every 6 months. DICON personnel or staff members at individual hospitals, including hospital epidemiologists, infection preventionists, surgeons, infectious disease or other clinicians, and microbiologists, may also report suspicious SSI clusters. DICON personnel investigate suspected SSI outbreaks and make recommendations, if indicated, that are designed to terminate the outbreak and return SSI rates to acceptable baselines.

Analysis plan

We analysed SSI data from the DICON Surgical Database for all procedures performed from September 2003 to August 2013. A total of 11 142 SSIs complicated the 1 005 286 consecutive procedures performed over this 10-year time period (overall SSI rate=1.1%). Surgical data were included from all 50 hospitals that were DICON members during part or all of the study period and for all 44 procedure types performed during this time span.

We retrospectively reviewed all documented clusters of SSI detected by traditional surveillance measures during the study period. We examined formal outbreak reports and unpublished communication records of these previously detected clusters. These reports typically included procedures and surgeons involved, as well as the initial date of outbreak detection. We classified each prior outbreak as ‘possible’, ‘probable’ or ‘definite’. We classified 12 of 34 clusters as definite outbreaks; the remaining 22 clusters were determined to be probable or possible outbreaks. We excluded two definite outbreaks: one due to missing data and the other because its onset occurred near the beginning of the study period, precluding determination of an adequate pre-outbreak baseline SSI rate.

We applied SPC charts to each of the 10 definite SSI outbreaks with complete data (table 1). SSI rates for the outbreak hospital, associated procedures, and when indicated, particular surgeon, were plotted with monthly resolution. The centre line, representing the baseline SSI rate, was estimated by the mean SSI rate taken from the 12-month period that occurred from 25 to 36 months prior to the beginning of the historically defined outbreak period. We used this 24-month lag when calculating baseline SSI rates in order to decrease the contribution of potentially elevated rates from the immediate pre-outbreak time period to the baseline rate.21 In addition, this approach to baseline estimation allowed us to simulate prospective surveillance for the months approaching outbreak onset. For example, SPC charts prospectively created at the time of outbreak onset with a 12-month moving window baseline and 24-month lag would have the same characteristics as SPC charts used in this study.
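The lagged baseline can be written as a short calculation. The sketch below is a hypothetical helper, not the authors' code, and it assumes monthly SSI and procedure counts stored in chronological lists. It also assumes the baseline is the pooled rate over the window (total SSIs divided by total procedures); the text says only "mean SSI rate", which could instead denote the unweighted mean of the 12 monthly rates.

```python
from typing import Sequence


def lagged_baseline_rate(ssi: Sequence[int], procedures: Sequence[int],
                         onset_index: int, lag: int = 24, window: int = 12) -> float:
    """Pooled SSI rate over the `window` months ending `lag` months before onset.

    Index i - k is the month k months before index i, so lag=24 and window=12
    select months 25-36 before onset (indices onset_index-36 .. onset_index-25).
    """
    start = onset_index - lag - window
    end = onset_index - lag  # slice end is exclusive
    if start < 0:
        raise ValueError("not enough pre-outbreak data for the baseline window")
    total_procedures = sum(procedures[start:end])
    if total_procedures == 0:
        raise ValueError("no procedures in the baseline window")
    return sum(ssi[start:end]) / total_procedures


# Hypothetical 48-month series: 100 procedures per month; only the months
# 25-36 before an outbreak starting at index 40 (indices 4-15) contain SSIs.
procedures = [100] * 48
ssi = [0] * 48
ssi[4:16] = [2] * 12
print(lagged_baseline_rate(ssi, procedures, onset_index=40))  # 0.02
```

Because only the window position depends on the onset month, evaluating the same helper at each successive month (passing that month as `onset_index`) would give the prospective 12-month moving window with a 24-month lag described above.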

Table 1.

Description of 10 surgical site infection outbreaks, Duke Infection Control Outreach Network (DICON), 2003–2013

| Outbreak number | Hospital designation | Outbreak time period | NHSN procedure(s) | Surgeon |
|---|---|---|---|---|
| 1 | A | November 2007 to November 2012 | Hip prosthesis, knee prosthesis | Single surgeon |
| 2 | B | January 2008 to December 2008 | Colon surgery, rectal surgery | All |
| 3 | B | July 2008 to April 2012 | Knee prosthesis | All |
| 4 | C | January 2009 to December 2009 | Orthopaedic procedures* | Single surgeon |
| 5 | D | January 2009 to December 2009 | Caesarean section | All |
| 6 | E | January 2010 to December 2011 | Knee prosthesis | All |
| 7 | F | April 2010 to April 2011 | All procedures | All |
| 8 | G | February 2011 to October 2011 | Spinal fusion | All |
| 9 | H | July 2011 to November 2011 | Laminectomy, spinal fusion | All |
| 10 | I | January 2013 to March 2013 | Liver transplant | All |

*Orthopaedic procedures included hip prosthesis, knee prosthesis, ‘other’ prosthesis, open reduction of fracture and limb amputation surgeries. NHSN, National Healthcare Safety Network.

For each outbreak, we first constructed conventional Shewhart p control charts, based on an underlying binomial sampling distribution for binary SSI data.22 Following standard SPC practice, we set the upper control limit (UCL) and lower control limit (LCL) at ±3 binomial SD from the centre line.17 Any data point above the UCL indicated a likely change in the SSI rate and, thus, a potential outbreak. For convenience, we standardised each chart to plot z-scores, expressing each monthly SSI rate as the number of SD by which it differed from the baseline rate. The standardised charts therefore had a centre line at 0 and control limits of ±3.
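Standardisation makes every monthly point a z-score, so the ±3 limits stay fixed even when the number of procedures varies from month to month. A minimal sketch, assuming lists of monthly SSI and procedure counts and a baseline rate p0 that has already been estimated; the function name and data are hypothetical:

```python
import math
from typing import List, Sequence


def shewhart_p_zscores(ssi: Sequence[int], procedures: Sequence[int],
                       p0: float) -> List[float]:
    """Standardised p chart: z_t = (x_t/n_t - p0) / sqrt(p0 * (1 - p0) / n_t)."""
    z = []
    for x_t, n_t in zip(ssi, procedures):
        sd_t = math.sqrt(p0 * (1 - p0) / n_t)  # binomial SD shrinks as n_t grows
        z.append((x_t / n_t - p0) / sd_t)
    return z


# Hypothetical monthly data with a 2% baseline SSI rate.
ssi = [1, 0, 2, 1, 5, 1]
procedures = [50, 50, 50, 50, 50, 50]
z = shewhart_p_zscores(ssi, procedures, p0=0.02)
signals = [t for t, value in enumerate(z) if value > 3]  # points above the UCL of +3
print(signals)  # [4]
```

Only points above the UCL are flagged, matching the outbreak-detection focus of the charts; with low baseline rates the LCL often corresponds to a negative SSI rate and carries little information.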

We also constructed standardised exponentially weighted moving average (EWMA) control charts for each of the 10 historic outbreaks.29 EWMA control charts average data collected from a process over time, giving increased weight to the most recent observations. EWMA charts are more powerful than Shewhart charts for detecting small or gradual changes in the rate of a process but can be slower to detect large changes.30 By convention, we set the EWMA smoothing factor, λ, to 0.2 and the control limits to ±3 SD of the plotted statistic from the centre line. Any data point above the UCL represented an ‘out-of-control’ signal and indicated a possible outbreak.
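The EWMA recursion and its control limits can be sketched as follows. We assume, as one reasonable reading of "standardised" EWMA charts, that the EWMA is applied to the standardised monthly z-scores from the Shewhart chart, so each input has SD 1 under baseline conditions. The sketch uses the exact time-varying limits; many implementations instead use the asymptotic limit of ±3√(λ/(2−λ)), which equals ±1 for λ=0.2. The function name and data are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def ewma_chart(z: Sequence[float], lam: float = 0.2,
               width: float = 3.0) -> Tuple[List[float], List[float]]:
    """EWMA of standardised monthly z-scores with exact time-varying UCLs.

    E_t = lam * z_t + (1 - lam) * E_{t-1}, starting from the centre line E_0 = 0.
    With unit-SD inputs, SD(E_t) = sqrt(lam / (2 - lam) * (1 - (1 - lam) ** (2 * t))).
    """
    e_t = 0.0
    stats, ucls = [], []
    for t, z_t in enumerate(z, start=1):
        e_t = lam * z_t + (1 - lam) * e_t
        sd_t = math.sqrt(lam / (2 - lam) * (1 - (1 - lam) ** (2 * t)))
        stats.append(e_t)
        ucls.append(width * sd_t)
    return stats, ucls


# Hypothetical sustained, modest rise: no single z-score reaches the Shewhart UCL of 3.
z = [0.0, 0.0, 0.0] + [1.5] * 6
stats, ucls = ewma_chart(z)
first = next((t for t, (e, u) in enumerate(zip(stats, ucls)) if e > u), None)
print(first)  # 7
```

In this example no single month reaches the Shewhart UCL, yet the EWMA statistic crosses its limit in the eighth month (index 7), illustrating why EWMA charts are more sensitive to small, sustained rate increases.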

For each outbreak hospital, we analysed SSI rates and trends from the relevant procedures and time periods, including data collected during the initial outbreak investigations. We used dates of outbreak onset and outbreak termination that local hospital and DICON personnel had documented during their investigations. We defined the date of traditional surveillance outbreak detection as the date that the initial outbreak investigation began, which was nearly always after the date of outbreak onset. We defined the date of SPC outbreak detection from Shewhart and EWMA charts as the date of the first out-of-control signal for each chart type.
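Because detection dates are recorded to the month, the signed offsets reported in the results are simple calendar arithmetic. A minimal sketch with a hypothetical helper name, using the convention that negative values denote detection before outbreak onset:

```python
from typing import Tuple

YearMonth = Tuple[int, int]  # (year, month), with month in 1..12


def months_from_onset(onset: YearMonth, detected: YearMonth) -> int:
    """Signed whole-month offset; negative means detection before onset."""
    return (detected[0] - onset[0]) * 12 + (detected[1] - onset[1])


# Outbreak 1 in table 2: onset November 2007
print(months_from_onset((2007, 11), (2010, 11)))  # traditional detection: 36
print(months_from_onset((2007, 11), (2005, 12)))  # Shewhart detection: -23
```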

We determined performance of Shewhart and EWMA charts by analysing each chart’s ability to detect the specified outbreak (sensitivity) and by comparing the date of SPC detection with both the date of outbreak onset and the date that traditional surveillance methods originally detected the outbreak. We also evaluated specificity of these charts by applying the same conventional Shewhart and EWMA charts to 10 control hospitals. For each outbreak study hospital, we randomly selected one control hospital that did not have a known SSI outbreak for the surgical procedure(s) and outbreak time period analysed at the study hospital. We used the same 12-month baseline time period, control chart formulas and surgical procedure(s) used to analyse the corresponding study hospital outbreak. No potential control hospitals performed liver transplant surgeries; therefore, for the control hospital corresponding to study hospital I (outbreak 10), we substituted colon surgeries, which had similar volume and expected SSI rates. We evaluated all out-of-control SPC signals at each control hospital for the 12 months immediately preceding the time of outbreak onset at the paired study hospital. Epidemiologists subsequently reviewed SSI data associated with SPC signals at control hospitals to further investigate false-positive alerts.

In secondary analysis, we explored numerous calculation alternatives for the Shewhart and EWMA charts.22–24 For example, we varied the outbreak detection rules (eg, requiring two consecutive data points instead of a single point above the UCL to signal a possible outbreak), EWMA smoothing factor and data used to determine baseline SSI rates (eg, using DICON network-wide data instead of individual outbreak-hospital data to calculate baseline rates, or using a moving window21 to iteratively update the baseline over time).

Patient data were deidentified prior to entry into the DICON Surgical Database. Institutional review boards at each author’s organisation declared the study to be exempt research. We maintained the data in Microsoft Access and Microsoft Excel (Microsoft, Redmond, WA), and we analysed the data using SAS V9.4 (SAS Institute).

RESULTS

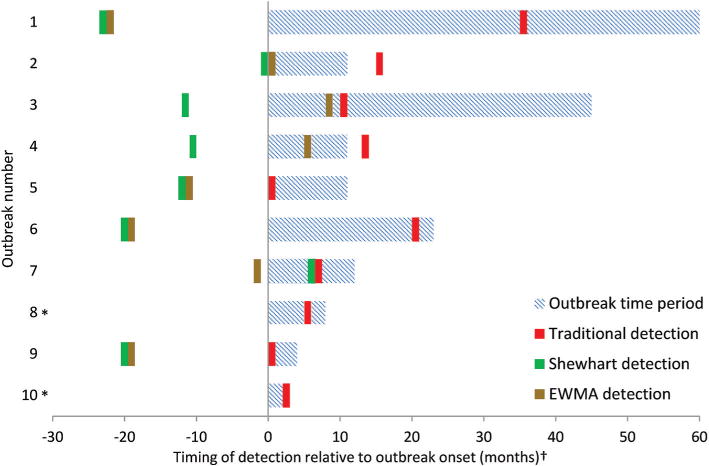

Traditional SSI surveillance methods detected the 10 SSI outbreaks a median of 9 months (IQR: 3, 16 months) after actual outbreak onset (table 2). Traditional surveillance did not detect any outbreak prior to outbreak onset, and three outbreaks were not detected until after the outbreak time period had concluded (figure 1).

Table 2.

Detection of 10 surgical site infection outbreaks by traditional surveillance, Shewhart statistical process control (SPC) and exponentially weighted moving average (EWMA) SPC methods, Duke Infection Control Outreach Network (DICON), 2003–2013

| Outbreak number | Outbreak time period | Traditional surveillance date of detection | Shewhart SPC date of detection | EWMA SPC date of detection | Months from outbreak onset to traditional detection | Months from outbreak onset to Shewhart SPC detection | Months from outbreak onset to EWMA SPC detection |
|---|---|---|---|---|---|---|---|
| 1 | November 2007 to November 2012 | November 2010 | December 2005 | December 2005 | +36 | −23 | −23 |
| 2 | January 2008 to December 2008 | May 2009 | January 2008 | January 2008 | +16 | 0 | 0 |
| 3 | July 2008 to April 2012 | June 2009 | July 2007 | April 2009 | +11 | −12 | +9 |
| 4 | January 2009 to December 2009 | March 2010 | February 2008 | July 2009 | +14 | −11 | +6 |
| 5 | January 2009 to December 2009 | January 2009 | January 2008 | January 2008 | 0 | −12 | −12 |
| 6 | January 2010 to December 2011 | October 2011 | May 2008 | May 2008 | +21 | −20 | −20 |
| 7 | April 2010 to April 2011 | November 2010 | November 2010 | February 2010 | +7 | +7 | −2 |
| 8 | February 2011 to October 2011 | August 2011 | Not detected | Not detected | +6 | – | – |
| 9 | July 2011 to November 2011 | August 2011 | November 2009 | November 2009 | +1 | −20 | −20 |
| 10 | January 2013 to March 2013 | April 2013 | Not detected | Not detected | +3 | – | – |

Negative values indicate detection before outbreak onset; –, not detected.

Figure 1.

Timeline of traditional surveillance, Shewhart statistical process control (SPC), and exponentially weighted moving average (EWMA) SPC detection of 10 historic surgical site infection (SSI) outbreaks. SSI outbreaks occurred in the Duke Infection Control Outreach Network (DICON) from 2003 to 2013. *Outbreaks 8 and 10 were not detected by Shewhart or EWMA SPC charts. †Adjacent bars indicate that detection occurred during the same month.

Conventional Shewhart SPC charts detected 8 of the 10 SSI outbreaks, 6 of which were detected prior to the date of outbreak onset (table 2). Among detected outbreaks, median Shewhart chart detection occurred 12 months (IQR: 6, 20 months) prior to outbreak onset (figure 1). Conventional EWMA SPC charts detected the same eight outbreaks, including five outbreaks prior to outbreak onset. Median EWMA chart detection occurred 7 months (IQR: −3, 20 months) prior to outbreak onset. Two of the outbreaks were not detected by either SPC method.

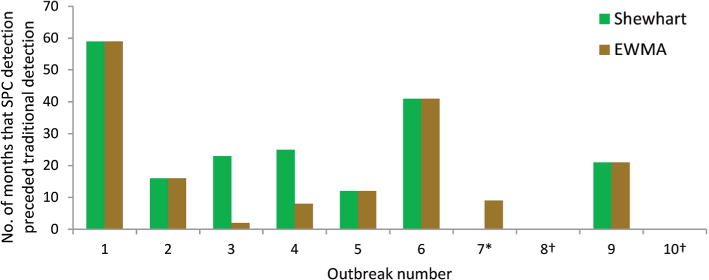

Among detected outbreaks, each Shewhart and EWMA chart detected outbreaks prior to, or, in one case, at the same time as traditional surveillance methods (figure 2). Shewhart detection of the eight recognised outbreaks occurred a median of 22 months (IQR: 14, 33 months) prior to traditional detection. Median EWMA detection of these eight outbreaks occurred 14 months (IQR: 9, 31 months) prior to traditional detection.
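The medians and IQRs above can be recomputed from the month offsets in table 2, taking the lead time as the traditional offset minus the SPC offset. Quartiles of small samples depend on the percentile definition; we assume the empirical definition with averaging at exact ranks (SAS's PCTLDEF=5, the default in PROC UNIVARIATE), which reproduces the reported values. This choice and the helper names are ours rather than the authors'.

```python
import math
from typing import Dict, Optional, Sequence, Tuple


def pctl_def5(values: Sequence[float], p: float) -> float:
    """Empirical percentile with averaging at exact ranks (SAS PCTLDEF=5 style)."""
    if not 0 < p < 1:
        raise ValueError("p must lie strictly between 0 and 1")
    x = sorted(values)
    rank = len(x) * p
    k = round(rank)
    if k >= 1 and abs(rank - k) < 1e-9:  # n*p is a whole number: average neighbours
        return (x[k - 1] + x[k]) / 2
    return float(x[math.floor(rank)])    # otherwise take the next order statistic


def median_iqr(values: Sequence[float]) -> Tuple[float, float, float]:
    """(median, first quartile, third quartile)."""
    return tuple(pctl_def5(values, p) for p in (0.5, 0.25, 0.75))


# (traditional, Shewhart, EWMA) months from onset to detection, from table 2;
# None marks an outbreak that the SPC chart did not detect.
OFFSETS: Dict[int, Tuple[int, Optional[int], Optional[int]]] = {
    1: (36, -23, -23), 2: (16, 0, 0), 3: (11, -12, 9), 4: (14, -11, 6),
    5: (0, -12, -12), 6: (21, -20, -20), 7: (7, 7, -2), 8: (6, None, None),
    9: (1, -20, -20), 10: (3, None, None),
}
rows = list(OFFSETS.values())
traditional = [t for t, _, _ in rows]
shewhart_lead = [t - s for t, s, _ in rows if s is not None]  # months before traditional
ewma_lead = [t - e for t, _, e in rows if e is not None]

print(median_iqr(traditional))    # (9.0, 3.0, 16.0)
print(median_iqr(shewhart_lead))  # (22.0, 14.0, 33.0)
print(median_iqr(ewma_lead))      # (14.0, 8.5, 31.0)
```

The half-month quartiles (8.5 here, and −5.5 months from onset for Shewhart detection) correspond to the whole-month values reported above when rounded away from zero.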

Figure 2.

Early detection of historic surgical site infection (SSI) outbreaks by Shewhart and exponentially weighted moving average (EWMA) statistical process control (SPC) charts in comparison to time of traditional surveillance detection. SSI outbreaks occurred in the Duke Infection Control Outreach Network (DICON) from 2003 to 2013. *The Shewhart SPC chart detected outbreak 7 at the same time as traditional detection. †Outbreaks 8 and 10 were not detected by Shewhart or EWMA SPC charts.

Analysis of the 10 control hospitals with Shewhart charts produced out-of-control signals at three hospitals during the 12 months analysed, giving a specificity of 70% and positive predictive value (PPV) of 73% (data not shown). Each control hospital with an out-of-control signal had only a single data point above the UCL. Control hospital analysis with EWMA charts produced out-of-control signals at only one hospital, giving a specificity of 90% and PPV of 89%. The EWMA chart for this hospital had four data points above the UCL, and this hospital was one of the three control hospitals that also had false-positive alarms when analysed with Shewhart charts. Based on a priori definitions, we considered all SPC signals generated at control hospitals to represent false-positive alarms; however, subsequent epidemiologist review of SSI data associated with these signals revealed that all three control hospitals had important SSI rate increases at the time the out-of-control signals were generated.
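The specificity and PPV figures follow from simple counts. The text does not give the PPV formula; we assume it pools the eight true-positive detections at outbreak hospitals with the false-positive signals at control hospitals, an assumption that reproduces the reported 73% and 89%. The function name is hypothetical.

```python
from typing import Tuple


def specificity_and_ppv(true_pos: int, false_pos: int,
                        n_controls: int) -> Tuple[float, float]:
    """Specificity from control hospitals; PPV pooling outbreak and control signals."""
    true_neg = n_controls - false_pos  # controls without any out-of-control signal
    specificity = true_neg / n_controls
    ppv = true_pos / (true_pos + false_pos)
    return specificity, ppv


for chart, false_pos in (("Shewhart", 3), ("EWMA", 1)):
    spec, ppv = specificity_and_ppv(true_pos=8, false_pos=false_pos, n_controls=10)
    print(f"{chart}: specificity {spec:.0%}, PPV {ppv:.0%}")
# Shewhart: specificity 70%, PPV 73%
# EWMA: specificity 90%, PPV 89%
```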

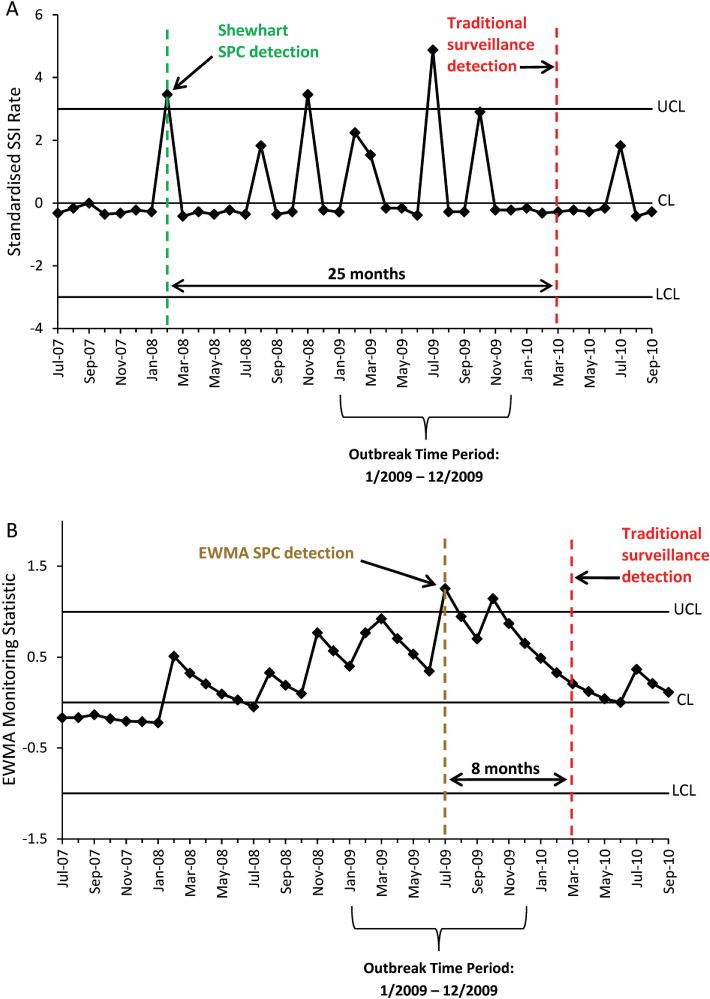

Shewhart and EWMA charts constructed for SSI outbreaks 4 and 7 serve as examples to illustrate SPC chart interpretation (figures 3 and 4). Outbreak 4 occurred at hospital C from January to December 2009. This outbreak involved several orthopaedic procedures, including hip prosthesis, knee prosthesis, ‘other’ prosthesis, open reduction of fracture and limb amputation surgeries performed by a single surgeon. Traditional methods of outbreak detection did not identify the outbreak until March 2010, fourteen months after outbreak onset, at which point the outbreak already had ceased. However, Shewhart detection occurred via the first out-of-control signal above the UCL in February 2008 (figure 3A), and EWMA detection occurred in July 2009 (figure 3B).

Figure 3.

Statistical process control (SPC) charts illustrating detection of a surgical site infection (SSI) outbreak following orthopaedic procedures performed at one hospital by a single surgeon. Shewhart (A) and exponentially weighted moving average (EWMA) (B) SPC detection dates are compared with the date of historic traditional surveillance outbreak detection. CL, centre line; LCL, lower control limit; UCL, upper control limit.

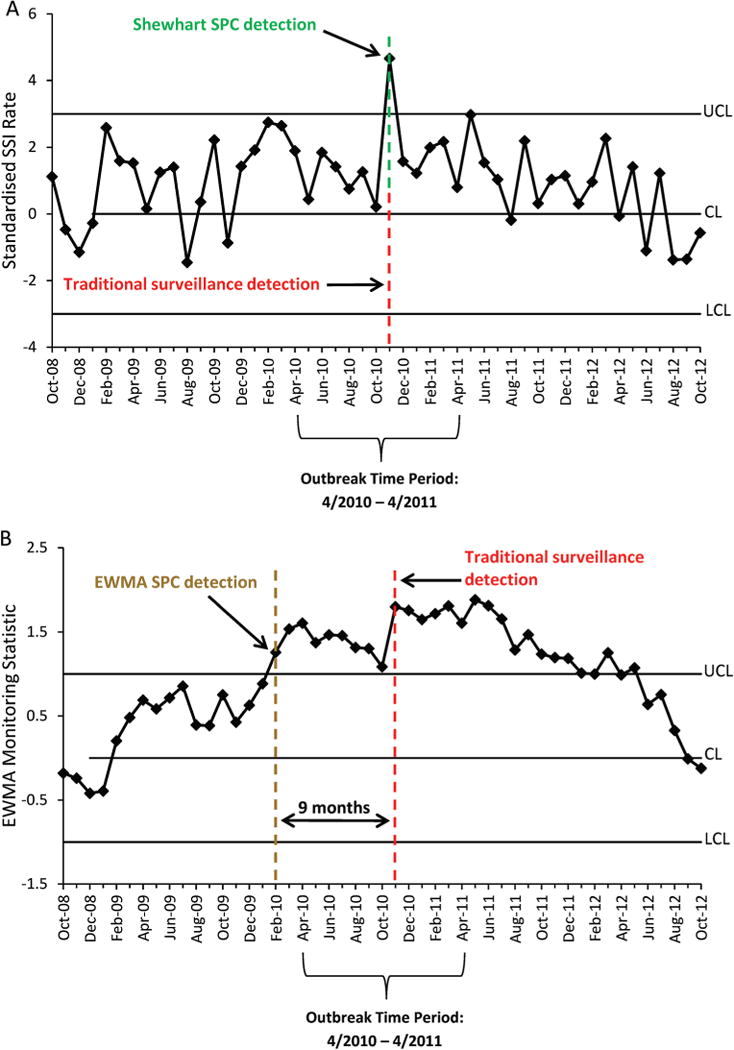

Figure 4.

Statistical process control (SPC) charts illustrating detection of a surgical site infection (SSI) outbreak including all procedures performed at one hospital. Shewhart (A) and exponentially weighted moving average (EWMA) (B) SPC detection dates are compared with the date of historic traditional surveillance outbreak detection. CL, centre line; LCL, lower control limit; UCL, upper control limit.

Outbreak 7 occurred at hospital F from April 2010 to April 2011. The original investigation detected the outbreak in November 2010 and revealed increased cumulative rates of SSI over this time period rather than increased rates for certain procedures or surgeons. The first out-of-control Shewhart signal also occurred in November 2010, seven months after outbreak onset (figure 4A). The EWMA chart, however, had an out-of-control signal in February 2010, two months prior to outbreak onset and 9 months prior to traditional and Shewhart detection (figure 4B).

In secondary analysis, several variations in SPC chart construction and detection rules led to important changes in outbreak detection sensitivity. For example, requiring two consecutive data points above the UCL to define a signal reduced Shewhart detection to only 2 of the 10 outbreaks, whereas EWMA charts detected six outbreaks under this detection rule.
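A run rule of this kind is straightforward to express in code. The sketch below, with a hypothetical function name and z-scores, returns the month that completes the first run of `run` consecutive points above the UCL. Dating a two-point signal at its second point, when the rule could first fire prospectively, is our assumption; the text does not state how such signals were dated.

```python
from typing import Optional, Sequence


def first_signal(z: Sequence[float], ucl: float = 3.0, run: int = 1) -> Optional[int]:
    """Index of the month completing the first run of `run` points above `ucl`."""
    streak = 0
    for i, value in enumerate(z):
        streak = streak + 1 if value > ucl else 0  # reset on any point at or below UCL
        if streak >= run:
            return i
    return None


# Hypothetical monthly z-scores: an isolated spike, then a sustained excursion.
z = [0.4, 3.6, 0.9, 1.2, 3.1, 3.4, 2.0]
print(first_signal(z, run=1))  # 1
print(first_signal(z, run=2))  # 5
```

The isolated spike at index 1 triggers the single-point rule but not the two-point rule, which waits for the sustained excursion at indices 4 and 5; stricter rules trade fewer alarms for missed or delayed detections.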

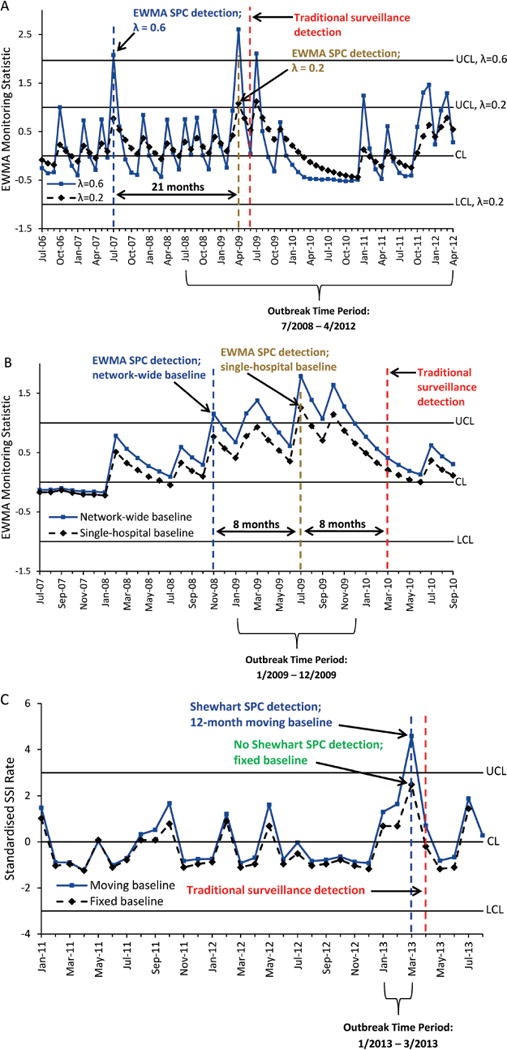

We further illustrate how changes in SPC chart calculations affect outbreak detection by reconsidering three of the outbreaks (figure 5). First, we applied different values for λ, the EWMA smoothing factor, to the outbreaks. For outbreak 3, we used a value for λ of 0.6, instead of 0.2, to give greater weight to the most recent observations. The modified EWMA chart detected the outbreak in July 2007, twenty-one months sooner than the original EWMA chart with λ=0.2 (figure 5A). Second, we analysed charts using baseline data from the entire DICON network of hospitals rather than from the outbreak hospital alone. For outbreak 4, the EWMA chart that used the network-wide baseline SSI rates exhibited an out-of-control signal in November 2008, eight months earlier than the original EWMA chart that used only hospital C data to estimate the baseline (figure 5B). Third, we experimented with a moving SSI baseline rate that changed with incorporation of new data each month. As one example, we used a 3-month lag to exclude the most recent data from the baseline and a moving baseline window of 12 months. The modified Shewhart chart detected outbreak 10 in March 2013, only 2 months after outbreak onset (figure 5C). The EWMA chart with this moving baseline also detected this outbreak in March 2013 (data not shown). In comparison, conventional Shewhart and EWMA charts did not detect this outbreak (table 2).
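A moving baseline recomputes the centre line every month. The sketch below assumes, by analogy with the fixed baseline (where a 24-month lag and 12-month window select months 25–36 before onset), that a 3-month lag and 12-month window select months 4–15 before each plotted month, and that the baseline is a pooled rate. The function name and data are hypothetical.

```python
import math
from typing import List, Optional, Sequence


def moving_baseline_zscores(ssi: Sequence[int], procedures: Sequence[int],
                            lag: int = 3, window: int = 12) -> List[Optional[float]]:
    """Shewhart z-score for each month against a baseline that moves with it.

    For month t the baseline is the pooled SSI rate over indices
    t-(lag+window) .. t-(lag+1), i.e. months 4-15 before t for lag=3, window=12.
    Months without enough history, or with a zero baseline rate, get None.
    """
    z: List[Optional[float]] = []
    for t in range(len(ssi)):
        start, end = t - lag - window, t - lag
        if start < 0:
            z.append(None)
            continue
        n_base = sum(procedures[start:end])
        x_base = sum(ssi[start:end])
        if n_base == 0 or x_base == 0:
            z.append(None)  # baseline undefined or zero: binomial SD would be 0
            continue
        p0 = x_base / n_base
        sd_t = math.sqrt(p0 * (1 - p0) / procedures[t])
        z.append((ssi[t] / procedures[t] - p0) / sd_t)
    return z


# Hypothetical series: 1 SSI per 100 procedures for 18 months, then a jump.
procedures = [100] * 20
ssi = [1] * 18 + [5, 6]
z = moving_baseline_zscores(ssi, procedures)
first = next(t for t, value in enumerate(z) if value is not None and value > 3)
print(first)  # 18
```

Because the window moves forward, months from a prolonged outbreak would eventually enter the baseline and raise the centre line; the lag delays this, so the choice of lag and window length merits further study.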

Figure 5.

Statistical process control (SPC) charts illustrating variations in chart construction and associated changes in detection of surgical site infection (SSI) outbreaks at three hospitals. (A) Exponentially weighted moving average (EWMA) SPC chart with λ=0.2 is compared with EWMA chart with λ=0.6 (outbreak 3). (B) EWMA SPC chart constructed with baseline SSI data from the single outbreak hospital is compared with EWMA chart with baseline SSI data from the Duke Infection Control Outreach Network (DICON) (outbreak 4). (C) Shewhart SPC chart using fixed baseline data from months 25 to 36 prior to outbreak onset is compared with Shewhart SPC chart with 12-month moving baseline window and 3-month lag (outbreak 10). All SPC detection dates are compared with the date of historic traditional surveillance outbreak detection. CL, centre line; LCL, lower control limit; UCL, upper control limit.

DISCUSSION

SPC techniques effectively identified the majority of SSI outbreaks that were previously investigated in our network of community hospitals using traditional surveillance methods over the 10-year study period. Conventional Shewhart and EWMA SPC charts detected 80% of known SSI outbreaks. Simulated SPC detection of these outbreaks usually occurred many months prior to both outbreak onset and original outbreak detection using traditional SSI surveillance measures. In addition, control hospitals had a low rate of false-positive signals. In fact, after further analysis of control hospital SSI data, we discovered that ‘false positive’ signals may have instead represented clinically relevant SSI clusters not detected by traditional surveillance. These results highlight the potential for SPC to facilitate early recognition and termination of outbreaks and to prevent outbreaks altogether by prompt detection of initial out-of-control signals meriting further investigation.

Overall, conventional Shewhart and EWMA charts had similar sensitivity and specificity in outbreak detection, as well as timing of detection; however, performance of these SPC chart types, in particular, timing of outbreak detection, differed notably for certain outbreaks. In addition, SPC performance varied substantially with adjustments to key chart characteristics, including detection rules, weight given to most recent data and baseline SSI rate determinations. For several outbreaks, calculation alternatives provided improved detection compared with conventional Shewhart and EWMA charts. These design considerations should be further investigated in order to optimise the use of SPC in this context.

Only two of the SSI outbreaks (outbreak 8 and outbreak 10) that we studied were not detected by conventional Shewhart or EWMA SPC charts. In these two cases, the 12-month baseline period chosen a priori for all charts (25-36 months prior to documented outbreak onset) coincided with increased SSI rates for the same procedures that later were associated with confirmed outbreaks. Using ‘baseline’ rates that were elevated above true steady-state or expected SSI rates interfered with outbreak detection. These two examples underscore the need for further investigation of how to best define baseline data when using SPC surveillance for HAIs. For example, modified Shewhart and EWMA charts with moving baselines detected outbreak 10 shortly after outbreak onset and prior to traditional surveillance detection (figure 5). The lack of detection of outbreaks 8 and 10 with conventional Shewhart and EWMA charts also suggests that SPC surveillance is best used to augment and improve, rather than replace, traditional surveillance methods. Some outbreaks of SSI, for example, those associated with an unusual pathogen or procedures that have extremely low infection rates, may remain best detected by an astute microbiologist or surgeon.

Our results are concordant with prior studies that demonstrated benefit of SPC surveillance for HAIs, typically for either a single procedure type or pathogen.19–21 In contrast, our study investigated detection of outbreaks associated with many different procedure types or combinations of procedures. SPC charts also detected outbreaks successfully both when we restricted data to a single surgeon with increased SSI rates and, for outbreaks that were not surgeon specific, when we included SSI data for all surgeons at a hospital performing the implicated procedure types. Finally, SPC charts performed well throughout the 10-year study period, both for ‘smouldering’ outbreaks associated with mild or moderate increases in SSI rates, which traditional surveillance often detected late, and for acute-onset outbreaks with transient but steep SSI rate elevations.

A key contribution of this study is its application of SPC methods to a large number of diverse, well-documented SSI outbreaks with discrete dates of outbreak onset and subsequent traditional detection. These data allowed us to directly compare SPC performance with traditional surveillance techniques. Our unique interdisciplinary partnership between physician epidemiologists with SSI surveillance expertise and engineering statisticians with SPC expertise gave us the opportunity to develop and explore new hypotheses regarding SSI surveillance and SPC methodology.

Our findings have important implications for future methods of surveillance of SSI and a broad range of other HAIs. Early termination or prevention of SSI outbreaks could substantially decrease duration of hospitalisation, readmissions, morbidity, mortality and healthcare costs for a large number of surgical patients. Similar SPC methods could augment surveillance of other HAIs, such as Clostridium difficile infections, central line-associated bloodstream infections and catheter-associated urinary tract infections. Improved detection of clusters of these infections could also promote earlier intervention17,18,22 and quality improvement. Use of SPC for prospective HAI surveillance would require resources for data processing, analysis and investigation of out-of-control signals; however, healthcare facilities could mitigate these costs by automating many facets of SPC and using data collected by existing surveillance techniques. In addition, the low false-positive rate observed in this study suggests that SPC surveillance could impose a low investigative burden on health systems.

Based on our pilot data, we believe SPC charts can contribute to earlier detection of increases in SSI. However, further research is needed to determine the best and most efficient use of SPC for SSI surveillance. For example, which chart types and associated calculations will maximise detection while limiting false alarms? What are the best detection rules, and how aggressively should hospital personnel investigate each type and magnitude of out-of-control signal? How should investigators estimate baseline rates, and how should these estimates be updated over time? Finally, how might different SPC charts be used in combination to maximise performance?

Our study has two primary limitations. First, the study was retrospective. The use of well-documented past SSI outbreaks was useful for large-scale analysis of the performance of numerous SPC chart types; however, retrospective analysis limited evaluation of the specificity of out-of-control signals at study hospitals. For example, some signals in this study interpreted as markers of early SSI outbreak detection could instead have represented distinct outbreaks that occurred earlier than the historic outbreaks studied and were not captured by traditional surveillance. Other signals may have been false alarms that coincidentally preceded an actual outbreak. Prospective SPC surveillance of SSI is needed to further evaluate and optimise the specificity of outbreak detection. Broad, prospective, automated surveillance likely will produce some false or mild signals that do not indicate impending outbreaks. However, prospective surveillance may also reveal signals of important SSI increases that escape traditional detection methods altogether, as the control hospital findings illustrate. Therefore, developing protocols for responding in real time to various types and magnitudes of signals will be an important next step. These protocols likely will not recommend immediate investigation of all out-of-control signals; thus, the initial investigation of some true outbreaks may not occur at the time of the first SPC signal. Second, experienced epidemiologists and engineering statisticians constructed and interpreted the SPC charts in this study. Ultimately, we plan to develop an automated tool for early SSI detection that does not require specialised knowledge of epidemiology or SPC to use and interpret.

In summary, results of this pilot study, based on empirical analysis of 10 years of SSI data from a large network of community hospitals, demonstrate the feasibility and usefulness of SPC surveillance of surgical procedures to improve early detection of SSI outbreaks. Early outbreak detection could improve important SSI-related outcomes, such as days of hospitalisation, mortality and cost. SPC surveillance could similarly be applied to any number of other important HAIs. We hypothesise that a subsequent prospective study of conventional and optimised SPC methods will verify the improvements in SSI surveillance predicted by this preliminary study.

Acknowledgments

Funding This work was supported by the National Center for Advancing Translational Sciences of the National Institutes of Health (grant number UL1TR001117), the Transplant Infectious Disease Interdisciplinary Research Training Grant of the National Institutes of Health (grant number 5T32AI100851-02), the National Science Foundation (grant number IIP-1034990) and the Agency for Healthcare Research and Quality (grant number R01 HS 23821-01).

Footnotes

Contributors AWB designed the study, performed the analysis and wrote the first version of the manuscript. SH, JS, II, AOE, AS, NA and JCB constructed and analysed SPC charts. JCB and DJA additionally designed the study, performed the analysis and revised the manuscript. DJS designed the study and revised the manuscript.

Competing interests None declared.

Ethics approval Institutional review board at our organisations.

Provenance and peer review Not commissioned; externally peer reviewed.

Data sharing statement Unpublished data are available upon request.

References

1. Klevens RM, Edwards JR, Richards CL, et al. Estimating health care-associated infections and deaths in U.S. hospitals, 2002. Public Health Rep. 2007;122:160–6. doi: 10.1177/003335490712200205.

2. Magill SS, Edwards JR, Bamberg W, et al. Multistate point-prevalence survey of health care-associated infections. N Engl J Med. 2014;370:1198–208. doi: 10.1056/NEJMoa1306801.

3. Lewis SS, Moehring RW, Chen LF, et al. Assessing the relative burden of hospital-acquired infections in a network of community hospitals. Infect Control Hosp Epidemiol. 2013;34:1229–30. doi: 10.1086/673443.

4. Magill SS, Hellinger W, Cohen J, et al. Prevalence of healthcare-associated infections in acute care hospitals in Jacksonville, Florida. Infect Control Hosp Epidemiol. 2012;33:283–91. doi: 10.1086/664048.

5. Zimlichman E, Henderson D, Tamir O, et al. Health care-associated infections: a meta-analysis of costs and financial impact on the US health care system. JAMA Intern Med. 2013;173:2039–46. doi: 10.1001/jamainternmed.2013.9763.

6. Kirkland KB, Briggs JP, Trivette SL, et al. The impact of surgical-site infections in the 1990s: attributable mortality, excess length of hospitalization, and extra costs. Infect Control Hosp Epidemiol. 1999;20:725–30. doi: 10.1086/501572.

7. Engemann JJ, Carmeli Y, Cosgrove SE, et al. Adverse clinical and economic outcomes attributable to methicillin resistance among patients with Staphylococcus aureus surgical site infection. Clin Infect Dis. 2003;36:592–8. doi: 10.1086/367653.

8. Broex EC, van Asselt AD, Bruggeman CA, et al. Surgical site infections: how high are the costs? J Hosp Infect. 2009;72:193–201. doi: 10.1016/j.jhin.2009.03.020.

9. Leaper DJ, van Goor H, Reilly J, et al. Surgical site infection – a European perspective of incidence and economic burden. Int Wound J. 2004;1:247–73. doi: 10.1111/j.1742-4801.2004.00067.x.

10. Scott RD. The direct medical costs of healthcare-associated infections in US hospitals and the benefits of prevention. Centers for Disease Control and Prevention. http://www.cdc.gov/hai/pdfs/hai/scott_costpaper.pdf (accessed 1 Mar 2015).

11. Anderson DJ, Podgorny K, Berrios-Torres SI, et al. Strategies to prevent surgical site infections in acute care hospitals: 2014 update. Infect Control Hosp Epidemiol. 2014;35:S66–S88. doi: 10.1017/s0899823x00193869.

12. Haley RW, Culver DH, White JW, et al. The efficacy of infection surveillance and control programs in preventing nosocomial infections in US hospitals. Am J Epidemiol. 1985;121:182–205. doi: 10.1093/oxfordjournals.aje.a113990.

13. Edwards JR, Peterson KD, Mu Y, et al. National Healthcare Safety Network (NHSN) report: data summary for 2006 through 2008, issued December 2009. Am J Infect Control. 2009;37:783–805. doi: 10.1016/j.ajic.2009.10.001.

14. Benneyan JC, Lloyd RC, Plsek PE. Statistical process control as a tool for research and healthcare improvement. Qual Saf Health Care. 2003;12:458–64. doi: 10.1136/qhc.12.6.458.

15. Mu Y, Edwards JR, Horan TC, et al. Improving risk-adjusted measures of surgical site infection for the national healthcare safety network. Infect Control Hosp Epidemiol. 2011;32:970–86. doi: 10.1086/662016.

16. Levett JM, Carey RG. Measuring for improvement: from Toyota to thoracic surgery. Ann Thorac Surg. 1999;68:353–8. doi: 10.1016/s0003-4975(99)00547-0.

17. Benneyan JC. Use and interpretation of statistical quality control charts. Int J Qual Health Care. 1998;10:69–73. doi: 10.1093/intqhc/10.1.69.

18. Benneyan JC. Statistical quality control methods in infection control and hospital epidemiology, part I: Introduction and basic theory. Infect Control Hosp Epidemiol. 1998;19:194–214. doi: 10.1086/647795.

19. Curran E, Harper P, Loveday H, et al. Results of a multicentre randomised controlled trial of statistical process control charts and structured diagnostic tools to reduce ward-acquired meticillin-resistant Staphylococcus aureus: the CHART Project. J Hosp Infect. 2008;70:127–35. doi: 10.1016/j.jhin.2008.06.013.

20. Dyrkorn OA, Kristoffersen M, Walberg M. Reducing post-caesarean surgical wound infection rate: an improvement project in a Norwegian maternity clinic. BMJ Qual Saf. 2012;21:206–10. doi: 10.1136/bmjqs-2011-000316.

21. Walberg M, Frøslie KF, Røislien J. Local hospital perspective on a nationwide outbreak of Pseudomonas aeruginosa infection in Norway. Infect Control Hosp Epidemiol. 2008;29:635–41. doi: 10.1086/589332.

22. Benneyan JC. Statistical quality control methods in infection control and hospital epidemiology, Part II: Chart use, statistical properties, and research issues. Infect Control Hosp Epidemiol. 1998;19:265–83.

23. Brown SM, Benneyan JC, Theobald DA, et al. Binary cumulative sums and moving averages in nosocomial infection cluster detection. Emerg Infect Dis. 2002;8:1426–32. doi: 10.3201/eid0812.010514.

24. Benneyan JC, Villapiano A, Katz N, et al. Illustration of a statistical process control approach to regional prescription opioid abuse surveillance. J Addict Med. 2011;5:99–109. doi: 10.1097/ADM.0b013e3181e9632b.

25. Anderson DJ, Miller BA, Chen LF, et al. The network approach for prevention of healthcare-associated infections: long-term effect of participation in the Duke Infection Control Outreach Network. Infect Control Hosp Epidemiol. 2011;32:315–22. doi: 10.1086/658940.

26. Kaye KS, Engemann JJ, Fulmer EM, et al. Favorable impact of an infection control network on nosocomial infection rates in community hospitals. Infect Control Hosp Epidemiol. 2006;27:228–32. doi: 10.1086/500371.

27. Culver DH, Horan TC, Gaynes RP, et al. Surgical wound infection rates by wound class, operative procedure, and patient risk index. National Nosocomial Infections Surveillance System. Am J Med. 1991;91:152S–7. doi: 10.1016/0002-9343(91)90361-z.

28. Centers for Disease Control and Prevention (CDC). Procedure Associated Module: Surgical Site Infection (SSI) Event. http://www.cdc.gov/nhsn/PDFs/pscManual/9pscSSIcurrent.pdf (accessed 15 Feb 2016).

29. Morton AP, Whitby M, McLaws ML, et al. The application of statistical process control charts to the detection and monitoring of hospital-acquired infections. J Qual Clin Pract. 2001;21:112–7. doi: 10.1046/j.1440-1762.2001.00423.x.

30. National Institute of Standards and Technology. NIST/SEMATECH e-Handbook of Statistical Methods. http://www.itl.nist.gov/div898/handbook/mpc/section2/mpc2211.htm (accessed 23 Jul 2015).