Abstract

The national Monitoring the Future (MTF) study examines substance use among adolescents and adults in the United States and has used paper questionnaires since it began in 1975. The current experiment tested three conditions as compared to the standard MTF follow-up protocol (i.e., MTF Control) for the first MTF follow-up survey at ages 19/20 years (i.e., one or two years after high school graduation). The MTF Control group included participants who completed in-school baseline surveys in the 12th grade in 2012–2013 and who were selected to participate in the first follow-up survey in 2014 (n = 2,451). A supplementary sample of participants who completed the 12th grade baseline survey in 2012 or 2013 but were not selected to participate in the main MTF follow-up (n = 4,950) were recruited and randomly assigned to one of three experimental conditions: (1) Mail Push, (2) Web Push, (3) Web Push + E-mail. Results indicated that the overall response rate was lower in Condition 2 compared to MTF Control and to Condition 1; there were no differences between Condition 3 and other conditions. Web response was highest in Condition 3; among web responders, smartphone response was also highest in Condition 3. Subgroup differences also emerged such that, for example, compared to white participants, Hispanics had greater odds of web (versus paper) response and blacks had greater odds of smartphone (versus computer or tablet) response. Item nonresponse was lowest in the Web Push conditions (compared to MTF Control) and on the web survey (compared to paper). Compared to MTF Control, Condition 3 respondents reported higher rates of alcohol use in the past 30 days. The total cost was lowest for Condition 3. Overall, the Condition 3 Web Push + E-mail design is promising. Future research is needed to continue to examine the implications of web and mobile response in large, national surveys.

1. INTRODUCTION

The national Monitoring the Future (MTF) study examines substance use among adolescents and adults in the United States, sampling high school students and following a selected subsample of 12th graders into adulthood (Johnston, O'Malley, Bachman, Schulenberg, and Miech 2015; Miech, Johnston, O'Malley, Bachman, and Schulenberg 2016). MTF longitudinal surveys have been delivered exclusively as paper questionnaires since the initial follow-ups in 1976. As web-based surveys have become more standard, MTF is exploring the possibility of using web-based surveys in the longitudinal portion of the study. The study thereby offers a unique opportunity to examine the effects of contact strategies and response modes on respondent characteristics in a national sample, followed longitudinally.

Potential advantages of web-based strategies include cost-effective data collection, a lower response burden (e.g., using automated skip patterns), improved data quality and reduced measurement error, reduced time between data collection and dissemination (e.g., by eliminating scanning and keyed data entry by study staff), and analysis of paradata and partial data that provides greater insight into the ways participants respond (Couper 1998; Couper and Lyberg 2005; Heerwegh 2003; Stern 2008; Tourangeau, Conrad, and Couper 2013). Web-based surveys also pose potential challenges. Internet response rates tend to be lower than those for mail surveys (Lozar Manfreda, Bosnjak, Berzelak, Haas, and Vehovar 2008), and web surveys can require a longer period of data collection to reach the same response rates as mailed surveys (Holmberg, Lorenc, and Werner 2010). Furthermore, changing the mode of data collection from paper to web in ongoing surveys can lead to differences in findings due to mode differences in measurement, which could be problematic for studies like MTF that investigate period, developmental, and cohort changes.

To capitalize on the potential advantages and to systematically address the challenges, MTF implemented an experimental test to document the response rates and data quality associated with three conditions in comparison to the MTF Control (i.e., existing MTF protocol). A growing body of research has begun to explore mixed-mode surveys using mail and web data collection. These have focused on both concurrent (or choice) designs and sequential designs. Choice designs (sometimes called mail with web option) mail a paper questionnaire to sample persons but give them the option of completing the survey online. Medway and Fulton’s (2012) widely cited meta-analysis of 19 experimental comparisons found that offering a web option was associated with lower response rates than a mail-only design (an overall odds ratio of 0.87). A common argument proffered for the lack of benefit of the concurrent approach is consistent with the “paradox of choice” hypothesis (Schwartz 2004), which states that offering a choice makes the decision more difficult, leading to no decision (i.e., nonresponse). This hypothesis has led researchers to posit that offering only one mode at a time should improve response rates. The “paradox of choice” and the surprising findings from the concurrent mode studies have led researchers to explore sequential mixed-mode designs in which one mode (usually web) is initially offered, followed later by mail.

The research evidence regarding sequential mixed-mode designs is still varied and inconclusive. Several studies contrasting a sequential web-to-mail design with mail-only found lower response rates for the sequential design (Cantor, Brick, Han, and Aponte 2010; Friese, Lee, O’Brien, and Crawford 2010; Israel 2009; Lesser, Newton, and Yang 2010; Messer and Dillman 2011; Newsome, Levin, Brick, Langetieg, Vigil, et al. 2013), while others found the overall rates to be similar (Olson, Smyth, and Wood 2012; Skjåk and Kolsrud 2013). Tests on the American Community Survey found mixed results on sequential web-to-mail approaches, with some protocols achieving higher self-response rates than the mail-only control, and others lower rates (see Matthews, Davis, Tancreto, Zelenak, and Ruiter 2012; Tancreto, Zelenak, Davis, Ruiter, and Matthews 2012).

In studies contrasting sequential with concurrent mixed-mode designs, the results again vary. For example, several studies have found the response rates to be lower for the sequential design than the concurrent design (Friese et al. 2010; Lagerstrøm 2011; Lesser, Newton, and Yang 2010; Smyth, Dillman, Christian, and O’Neill 2010), while others found the sequential approach to yield higher response rates (Tully and Lerman 2013). Still others have found similar response rates between sequential web-to-mail designs and concurrent designs (Bensky, Link, and Shuttles 2010; Biemer, Murphy, Zimmer, Berry, Deng, et al. 2016; Lebrasseur, Morin, Rodrigue, and Taylor 2010; Skjåk and Kolsrud 2013). Despite mixed results on overall response rates, evidence from these studies clearly shows that starting with the web (versus introducing the web later) in a sequential mixed-mode design increases the proportion of web responses.

Accordingly, research has also explored response rate differences between sequential designs that start with mail (i.e., mail push or mail-first sequential designs) and those that start with the web (web push or web-first sequential designs), and between various versions of sequential designs (e.g., varying in how soon to introduce the mail mode) (see Borkan 2010; Holmberg, Lorenc, and Werner 2010; Smyth et al. 2010; Stevenson, Dykema, Kniss, Black, and Moberg 2011). While the results for overall response rates are again mixed, these studies find that the longer the delay before mail is introduced (i.e., the more web is “pushed”), the higher the proportion of responses that come via the web.

Most of the studies reviewed above focus on response rate differences between the different designs. A few explore demographic differences between web and mail respondents. Most of the studies are based on cross-sectional data, so they can only compare differences between respondents and nonrespondents in the different mode mixes on available frame data. Explorations of data quality and substantive differences between the different mixed-mode approaches are also rare.

Further, most of these studies rely on address- or register-based frames containing mailing addresses, so mail is the primary mode of contact. A potential advantage of panel studies is the ability to collect additional contact information (e-mail addresses and telephone numbers) to use in subsequent waves (see Cernat and Lynn 2014). Millar and Dillman (2011) demonstrated the value of additional e-mail contacts in a college student population, and Israel (2013) found a modest advantage of adding e-mail in a customer satisfaction survey. Bandilla, Couper, and Kaczmirek (2012, 2014) examined the value of soliciting e-mail addresses, but used only mailed invitations in a follow-up study of the general population in Germany. While several studies have explored e-mail versus mail invitations (see Bandilla, Couper, and Kaczmirek 2014 for a review), few studies have examined the value of adding e-mail to a mail protocol (what Millar and Dillman 2011 call “e-mail augmentation”).

Another area that is receiving a great deal of research attention in the web survey literature is the device used to complete web surveys (see Couper, Antoun, and Mavletova in press for a review), an area that has received little or no attention in the mixed-mode literature. Increasingly, web respondents are using mobile devices (specifically smartphones) to complete web surveys, and this is particularly true of younger age groups. The extent of mobile device use and its effect on data quality in a mixed-mode context are largely unexplored.

In summary, while there is a large literature on sequential mixed-mode designs involving web and mail, it focuses narrowly on response rate differences, and its findings are still quite mixed. Our paper adds to this growing body of literature in several ways. First, we add to the growing number of studies on sequential mixed-mode designs with the use of a national sample of a population likely to benefit the most from a web-first approach (modal ages 19–20 years) (see Medway and Fulton 2012). Second, we examine the added value of e-mail invitations for those who provided e-mail addresses at baseline. Third, we use characteristics measured at baseline to model the behavioral mode choice. Fourth, given the prevalence of smartphones among this age group, we examine the effect of different mixed-mode strategies on the type of device (smartphone, tablet, or laptop/desktop) used to complete the survey. Finally, we examine important substantive outcomes (substance use) across different experimental conditions.

1.1 Research Aims

The current experimental design tested each of three experimental conditions as compared to the standard MTF follow-up protocol (MTF Control) for the first MTF follow-up survey of high school graduates at modal ages 19–20 years. The standard protocol is to use a mail-only procedure with a phone prompt for nonrespondents. Unique features of these data include a national sample, longitudinal data starting in high school, and a focus on the sensitive issue of substance use. In the current study, we examined the impact of the experimental conditions (i.e., randomly assigned contact strategies) on characteristics of the sample and variables of substantive interest. The three experimental conditions were: (1) Mail Push, (2) Web Push, (3) Web Push + E-mail. The aims were to examine how the conditions differed on: (1) overall response rates, (2) response rates among subgroups (gender, race/ethnicity, parent education, college plans), (3) mode choice (web versus paper response), (4) device choice for web responses (i.e., smartphone, tablet, or laptop/desktop) and potential differences in data quality across devices, (5) item nonresponse (an indicator of data quality), (6) results on main data of interest (i.e., rates of substance use), and (7) estimated cost.

2. METHODS

2.1 Monitoring the Future Main Study

MTF includes U.S. nationally representative samples of 12th grade students (n ≈ 15,000 per year, modal age 18 years) surveyed annually in high schools across the country (Bachman, Johnston, O'Malley, Schulenberg, and Miech 2015). Each year, approximately 2,450 students are randomly selected to participate in the longitudinal portion of the study; half are randomly assigned to begin one year later at modal age 19 years, and the other half to begin two years later at modal age 20 years. The MTF Control group for the current study included participants who completed the in-school baseline survey in the 12th grade in 2012 or 2013 and who were selected to participate in their first follow-up survey in 2014 (n = 2,451). Drug users were oversampled for follow-up; weights were used to adjust for this sampling procedure. Characteristics of the sample, by condition, are described in table 1.
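The weighting adjustment for oversampled drug users mentioned above can be illustrated with a minimal sketch. The records, the relative selection rate, and the function name `weighted_percent` are hypothetical and are not taken from MTF data or documentation; the sketch only assumes that weights are proportional to the inverse of each group's selection rate.

```python
def weighted_percent(values, weights):
    """Weighted percentage of records whose value is 1."""
    total_weight = sum(weights)
    if total_weight <= 0:
        raise ValueError("weights must sum to a positive number")
    weighted_hits = sum(v * w for v, w in zip(values, weights))
    return 100.0 * weighted_hits / total_weight


# Hypothetical follow-up sample: 1 = drug user at baseline, 0 = non-user.
values = [1, 1, 1, 1, 1, 1, 0, 0, 0, 0]

# Assumption: users were selected at three times the rate of non-users,
# so each user is down-weighted by the inverse of that relative rate.
weights = [1.0 / 3.0 if v == 1 else 1.0 for v in values]

unweighted = 100.0 * sum(values) / len(values)
weighted = weighted_percent(values, weights)
print(f"unweighted: {unweighted:.1f}%, weighted: {weighted:.1f}%")
```

In this toy sample the unweighted share of users overstates their share in the underlying population, and the weights pull the estimate back down.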

Table 1.

Baseline (Age 18) Sample Characteristics, by Condition

| | MTF Control | | Condition 1 (Mail Push) | | Condition 2 (Web Push) | | Condition 3 (Web Push + E-mail) | |
|---|---|---|---|---|---|---|---|---|
| | % | SE | % | SE | % | SE | % | SE |
| Class year | | | | | | | | |
| 2012 | 49.75 | 1.07 | 48.88 | 1.26 | 48.78 | 1.26 | 48.78 | 1.26 |
| 2013 | 50.25 | 1.07 | 51.12 | 1.26 | 51.22 | 1.26 | 51.22 | 1.26 |
| Gender | | | | | | | | |
| Male | 47.32 | 1.07 | 48.72 | 1.26 | 48.55 | 1.26 | 48.58 | 1.26 |
| Female | 52.68 | 1.07 | 51.28 | 1.26 | 51.45 | 1.26 | 51.42 | 1.26 |
| Race/ethnicity | | | | | | | | |
| White | 63.04 | 1.05 | 61.27 | 1.25 | 62.27 | 1.24 | 62.98 | 1.23 |
| Black | 9.95 | 0.65 | 10.42 | 0.78 | 10.42 | 0.78 | 10.51 | 0.79 |
| Hispanic | 14.99 | 0.78 | 15.36 | 0.92 | 13.18 | 0.87 | 14.75 | 0.91 |
| Other | 12.02 | 0.71 | 12.95 | 0.86 | 14.12 | 0.89 | 11.76 | 0.82 |
| Parent education | | | | | | | | |
| High school or less | 27.10 | 0.97 | 23.96 | 1.09 | 23.96 | 1.09 | 22.50 | 1.07 |
| Some college/more | 72.90<sup>C1,C2,C3</sup> | 0.97 | 76.04<sup>MTF</sup> | 1.09 | 76.04<sup>MTF</sup> | 1.09 | 77.50<sup>MTF</sup> | 1.07 |
| 4-year college plans | | | | | | | | |
| Not definitely | 39.12 | 1.07 | 34.78 | 1.22 | 36.58 | 1.23 | 33.24 | 1.21 |
| Definitely | 60.88<sup>C1,C3</sup> | 1.07 | 65.22<sup>MTF</sup> | 1.22 | 63.42 | 1.23 | 66.76<sup>MTF</sup> | 1.21 |
| Any lifetime substance use | | | | | | | | |
| Alcohol | 67.61<sup>C3</sup> | 1.04 | 67.43<sup>C3</sup> | 1.22 | 66.20 | 1.23 | 71.64<sup>MTF,C2</sup> | 1.17 |
| Cigarettes | 36.85 | 1.02 | 34.75 | 1.19 | 34.56 | 1.19 | 37.05 | 1.21 |
| Marijuana | 43.14 | 1.06 | 45.28 | 1.26 | 42.72 | 1.25 | 45.64 | 1.26 |
| Other illicit drugs | 23.99<sup>C2</sup> | 0.86 | 23.26 | 1.02 | 20.69<sup>MTF</sup> | 0.97 | 23.40 | 1.03 |

Note.— Total weighted n = 6,492. All comparisons between conditions were nonsignificant, unless otherwise noted. C1 = percentage was significantly different from that in Condition 1. C2 = percentage was significantly different from that in Condition 2. C3 = percentage was significantly different from that in Condition 3. MTF = percentage was significantly different from that in the MTF Control Group (p<0.05).

Survey procedures are described in table 2. In December, newly selected longitudinal participants in MTF (MTF Control) were sent two mailings. The first mailing was the selection letter, which told participants that they were selected for the follow-up study and would be paid $25 for participation. The second mailing was a newsletter containing selected summary results from the study in an informational format, along with a cover letter and a change of address card for the respondent to update contact information. In April, one week before the questionnaire mailing, participants were sent an advance letter alerting them that the survey would arrive soon. The paper questionnaire was then mailed along with a pencil, prepaid return envelope, and check for $25 in the participant’s name. A reminder postcard was sent one week later, and a reminder letter was sent three weeks after that (for those participants who had not yet returned their questionnaire). One week later, nonresponse phone calls were made to all those who had not yet returned a questionnaire. A final mailing about six weeks later included a second copy of the paper questionnaire (for those participants who had not yet returned one).

Table 2.

Experimental Procedures by Condition

| Date | Action<sup>a</sup> | MTF Control | Condition 1: Mail Push | Condition 2: Web Push | Condition 3: Web Push + E-mail |
|---|---|---|---|---|---|
| Dec. 13 | Selection letter | Cp | Cp | C | C |
| Dec. 20 | Newsletter | Cp | Cp | C | C |
| Apr. 3 | Advance letter | Cp | Cp | Cw | Cw, e-mail |
| Apr. 10 | Questionnaire | P, $ | P, $ | W, $ | W, $, e-mail |
| Apr. 17 | Reminder postcard | Cp | Cp | W | W, e-mail |
| May 10 | Reminder letter | Cp | Cp, W<sup>b</sup> | P<sup>c</sup>, W | P<sup>c</sup>, W, e-mail |
| May 18 | Nonresponse phone call | Cp | Cpw | Cpw | Cpw |
| Aug. 27 | Final mailing | P | Cpw, P, W | Cpw, P, W | Cpw, P, W |

Note.— $ = incentive check; C = communication without any mention of questionnaire delivery mode; Cp = communication mentioning the paper questionnaire (not including the actual questionnaire); Cpw = communication mentioning both the paper questionnaire and the web questionnaire; Cw = communication mentioning the web questionnaire instead of the paper questionnaire; E-mail = communication duplicated in an e-mail to those who provided e-mail addresses; P = paper questionnaire; W = web survey log-in information (URL and PIN).

<sup>a</sup> All procedures are communications to the participant based on traditional MTF procedures and are altered minimally in the experimental conditions. All occur via postal mail service except for “e-mail.”

<sup>b</sup> This is the first time Condition 1 respondents are invited to the web questionnaire.

<sup>c</sup> This is the first time Conditions 2 and 3 respondents receive a paper questionnaire.
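As a cross-check on the contact schedule, table 2 can be transcribed into a small data structure and queried for the first date on which each condition receives a given item. This is only an illustrative sketch: the names `SCHEDULE`, `CONDITIONS`, and `first_date` are hypothetical, and the footnote markers from the table are omitted from the codes.

```python
# Contact schedule transcribed from table 2 (footnote markers dropped).
# Codes: P = paper questionnaire, W = web log-in information,
# $ = incentive check, C/Cp/Cw/Cpw = communications, e-mail = e-mailed copy.
CONDITIONS = ["MTF Control", "Condition 1", "Condition 2", "Condition 3"]

SCHEDULE = [
    ("Dec. 13", "Selection letter", [["Cp"], ["Cp"], ["C"], ["C"]]),
    ("Dec. 20", "Newsletter", [["Cp"], ["Cp"], ["C"], ["C"]]),
    ("Apr. 3", "Advance letter", [["Cp"], ["Cp"], ["Cw"], ["Cw", "e-mail"]]),
    ("Apr. 10", "Questionnaire",
     [["P", "$"], ["P", "$"], ["W", "$"], ["W", "$", "e-mail"]]),
    ("Apr. 17", "Reminder postcard", [["Cp"], ["Cp"], ["W"], ["W", "e-mail"]]),
    ("May 10", "Reminder letter",
     [["Cp"], ["Cp", "W"], ["P", "W"], ["P", "W", "e-mail"]]),
    ("May 18", "Nonresponse phone call", [["Cp"], ["Cpw"], ["Cpw"], ["Cpw"]]),
    ("Aug. 27", "Final mailing",
     [["P"], ["Cpw", "P", "W"], ["Cpw", "P", "W"], ["Cpw", "P", "W"]]),
]


def first_date(condition, code):
    """Return the first date on which `condition` receives `code`, else None."""
    idx = CONDITIONS.index(condition)
    for date, _action, cells in SCHEDULE:
        # Exact match on list entries, so "P" does not match "Cp" or "Cpw".
        if code in cells[idx]:
            return date
    return None


for name in CONDITIONS:
    print(name, "| first web:", first_date(name, "W"),
          "| first paper:", first_date(name, "P"))
```

Queried this way, the schedule shows the web log-in first reaching Condition 1 with the May 10 reminder letter, and the paper questionnaire first reaching Conditions 2 and 3 on the same date.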

2.2 Experimental Design

For the experimental conditions, we selected a supplementary sample of participants who completed the MTF baseline survey in 2012 or 2013 but had not been selected to participate in the main MTF follow-up (n = 4,950). Participants were randomly assigned to one of the three experimental conditions. Across conditions, we made minimal changes to survey layout, text of communications, and survey content so as not to confound differences in communication with the survey and invitation modes. The web version of the survey was programmed using DatStat’s Illume software. Procedures are shown in table 2 and described below.
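The random assignment step mentioned above could be sketched as follows. The text does not describe the randomization mechanism or state whether the three groups were of equal size, so the shuffle-and-deal approach, the equal allocation, the seed, and the participant identifiers are all assumptions made for illustration.

```python
import random
from collections import Counter

CONDITION_LABELS = ["Mail Push", "Web Push", "Web Push + E-mail"]


def assign_conditions(participant_ids, labels, seed=0):
    """Shuffle participants, then deal them out to the labels in turn."""
    rng = random.Random(seed)  # fixed seed so the assignment is reproducible
    shuffled = list(participant_ids)
    rng.shuffle(shuffled)
    return {pid: labels[i % len(labels)] for i, pid in enumerate(shuffled)}


# Hypothetical identifiers for the supplementary sample (n = 4,950).
participants = range(1, 4951)
assignment = assign_conditions(participants, CONDITION_LABELS)
group_sizes = Counter(assignment.values())
print(group_sizes)
```

Dealing from a shuffled list gives groups whose sizes differ by at most one, which is one common way to balance arms in a randomized experiment.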

In Condition 1, the Mail Push Condition, participants were sent the selection letter, newsletter, advance letter, paper questionnaire with a check for $25, and reminder postcard. Each mailing mirrored the MTF main study (i.e., MTF Control) and was sent at the same time. The Condition 1 reminder letter reminded participants of the paper questionnaire that had already been sent but also gave them the option to complete the survey online. The letter also included the web survey login information (i.e., survey URL and a personal identification number [PIN]). Nonresponse phone calls to all those who had not yet returned a questionnaire provided information about the paper and web response modes. A final mailing included a paper questionnaire and information about the web survey option.

In Condition 2, the Web Push Condition, participants were sent the selection letter and newsletter that mirrored the MTF Control group, except that language implying a paper survey was removed. Condition 2 participants were then sent an advance letter stating that the next week they would be sent an invitation to complete an online survey. A week later, they were sent web survey login information (i.e., survey URL and PIN) and a check for $25. The reminder postcard was the same as MTF Control except it requested they do the web survey. The reminder letter reminded participants to do the online survey (with URL and PIN), but also gave the option of completing the enclosed paper questionnaire instead. Nonresponse phone calls to all those who had not yet returned a questionnaire provided information about the paper and web response modes. A final mailing included a paper questionnaire and information about the web survey.

Condition 3, the Web Push + E-mail Condition (i.e., what Millar and Dillman 2011 call “e-mail augmentation”), had identical procedures to Condition 2 with the addition of e-mailed versions of the advance letter, web survey login information, reminder postcard, and reminder letter. E-mail addresses (requested at baseline) were available for 77% of participants in Condition 3, and usable e-mail addresses (i.e., where the messages were not returned as undeliverable) were available for 64%. (Thirteen percent provided e-mails at age 18 that were undeliverable at age 19/20; 23% left e-mail address blank.) Participants who responded in 2013 (versus 2012), whose parents attended college (versus did not), and who definitely planned to graduate from a four-year college (versus other) were more likely to provide a usable e-mail address (see Appendix A). Participants who did not provide a usable e-mail address received the same protocol as Condition 2.

2.3 Measures

To address the substantive aims, we used measures from baseline (12th grade, in school surveys) that were available for both respondents and nonrespondents of the experiment. In addition, concurrent characteristics (at ages 19/20 years) were provided by respondents in the experimental data collection.

2.3.1 Baseline Characteristics (12th Grade, Modal Age 18)

Class year, the year in which the participant graduated from high school, was coded as 2012 or 2013. Gender was coded as male or female. Race/Ethnicity was coded as white, black, Hispanic, or other. Parent education was coded based on whether either parent had at least some college education (compared to high school education or less). Four-year college plans were coded based on whether the participant indicated that she/he would “definitely” graduate from a four-year college program, compared to other responses (probably will, probably won't, and definitely won't). Lifetime substance use measures indicated whether the participant had ever used any alcohol, cigarettes, marijuana, or illicit drugs other than marijuana (yes or no).

2.3.2 Concurrent Characteristics (Modal Age 19/20)

College student status was coded as full-time enrollment in a four-year college (yes or no). Living with parents indicated whether the participant lived with their parents in March of 2014 (yes or no). Employment indicated whether the participant reported a full-time job, a part-time job, or two or more different jobs (yes), or no outside job or paid employment, laid-off, or waiting to start a job (no) during the first full week in March 2014. Substance use in the past 30 days indicated whether the participant used alcohol, cigarettes, marijuana, or illicit drugs other than marijuana (yes or no).

2.3.3 Device Type (Modal Age 19/20)

Device type was coded from the DatStat Illume web survey paradata. Smartphones included Android, Windows, and iOS phones. Android tablets and iPads were coded as tablets. Computers included laptop and desktop computers running full-screen browsers.
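The coding rule above can be illustrated with a hedged sketch that classifies a browser user-agent string into the three device categories. The text does not say which paradata fields DatStat Illume records or how the coding was carried out, so the use of user-agent strings, the substring rules, the function name `classify_device`, and the shortened example strings are all assumptions.

```python
def classify_device(user_agent):
    """Classify a user-agent string as 'smartphone', 'tablet', or 'computer'."""
    ua = user_agent.lower()
    # Check iPad first: iPad user agents also contain the token "Mobile".
    if "ipad" in ua:
        return "tablet"
    # Check Windows Phone before Android: some Windows Phone browsers
    # include "Android" in their user agent for compatibility.
    if "iphone" in ua or "windows phone" in ua:
        return "smartphone"
    if "android" in ua:
        # By convention, Android phone browsers include "Mobile";
        # Android tablet browsers omit it.
        return "smartphone" if "mobile" in ua else "tablet"
    return "computer"


# Shortened, hypothetical user-agent strings for illustration only.
examples = {
    "Mozilla/5.0 (iPhone; CPU iPhone OS 7_1 like Mac OS X) Mobile Safari": "smartphone",
    "Mozilla/5.0 (iPad; CPU OS 7_1 like Mac OS X) Mobile Safari": "tablet",
    "Mozilla/5.0 (Linux; Android 4.4; Nexus 5) Chrome Mobile Safari": "smartphone",
    "Mozilla/5.0 (Linux; Android 4.4; Nexus 7) Chrome Safari": "tablet",
    "Mozilla/5.0 (Windows Phone 8.1; Android 4.0; ARM) Mobile": "smartphone",
    "Mozilla/5.0 (Windows NT 6.1; WOW64) Chrome Safari": "computer",
}
for ua, expected in examples.items():
    print(classify_device(ua), "<-", ua)
```

Substring rules of this kind are brittle, so in practice the resulting codes would need spot-checking against known devices.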

3. RESULTS

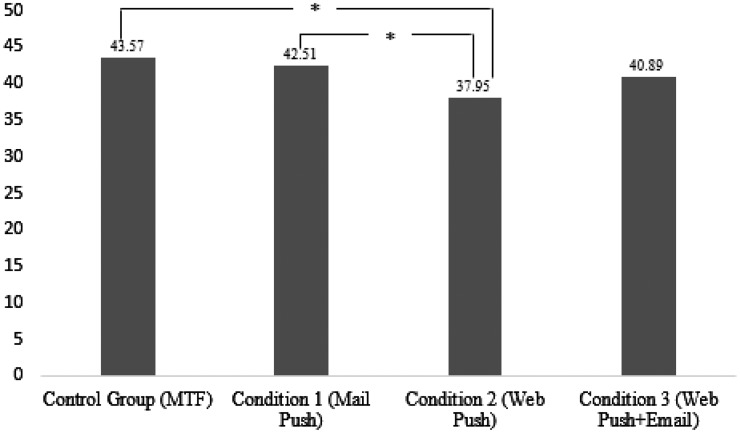

The first research aim was to examine overall response rates based on condition. Results are shown in figure 1, comparing the standard MTF (i.e., MTF Control) and the three experimental conditions. Based on weighted difference in proportions tests, Condition 2 (Web Push without e-mail) had a significantly lower response rate than both Condition 1 (Mail Push) and MTF Control; no other pairwise differences were significant. Condition 2 was thus the only experimental condition that differed significantly from MTF Control.

Figure 1.

Overall Response Rates by Condition. *Response rates differ at p < 0.05. Number of responders by condition: Control Group n = 879, Condition 1 n = 634, Condition 2 n = 566, Condition 3 n = 610.

The second research aim was to examine response rates between conditions among specific subgroups based on characteristics measured at baseline (age 18), shown in table 3. The majority of the significant differences that emerged pertained to Condition 2. Specifically, in Condition 2 compared to MTF Control and to Condition 3, a lower percentage of people from the 2012 cohort responded; in Conditions 2 and 3 compared to MTF Control, a lower percentage of people from the 2013 cohort responded. In Condition 2, a lower percentage of people responded who were male (compared to all other conditions), female (compared to MTF Control), white (compared to MTF Control and Condition 1), or black (compared to MTF Control and Condition 1), whose parents had college education (compared to all other conditions), and who definitely planned to go to four-year college (compared to MTF Control and Condition 1). Lifetime alcohol users (compared to MTF Control), cigarette users (compared to MTF Control and Condition 1), and other illicit drug users (compared to Condition 3) were less likely to respond in Condition 2. In addition, a multiple logistic regression was used to examine the predictors of responding (versus not responding) based on condition and background characteristics, shown in table 4. Results indicated that, after controlling for baseline characteristics, participants in Condition 2 had lower odds of responding than those in Condition 1. There were no significant differences comparing Condition 1 to MTF Control or Condition 3.

Table 3.

Response Rates Overall and by Baseline Characteristics, by Condition

| | MTF Control | | Condition 1 (Mail Push) | | Condition 2 (Web Push) | | Condition 3 (Web Push + E-mail) | |
|---|---|---|---|---|---|---|---|---|
| | % | SE | % | SE | % | SE | % | SE |
| Total (overall) | 43.57<sup>C2</sup> | 1.07 | 42.51<sup>C2</sup> | 1.25 | 38.02<sup>MTF,C1</sup> | 1.23 | 40.99 | 1.24 |
| Class year | | | | | | | | |
| 2012 | 40.90<sup>C2</sup> | 1.50 | 40.29 | 1.77 | 35.38<sup>MTF,C3</sup> | 1.73 | 40.37<sup>C2</sup> | 1.77 |
| 2013 | 46.22<sup>C2,C3</sup> | 1.51 | 44.63 | 1.76 | 40.41 | 1.74 | 41.38 | 1.74 |
| Gender | | | | | | | | |
| Male | 38.98<sup>C2</sup> | 1.52 | 37.93<sup>C2</sup> | 1.74 | 32.87<sup>MTF,C1,C3</sup> | 1.70 | 38.19<sup>C2</sup> | 1.75 |
| Female | 47.69<sup>C2</sup> | 1.49 | 46.85 | 1.77 | 42.75<sup>MTF</sup> | 1.75 | 43.44 | 1.76 |
| Race/ethnicity | | | | | | | | |
| White | 48.24<sup>C2</sup> | 1.36 | 45.93<sup>C2</sup> | 1.62 | 41.06<sup>MTF,C1</sup> | 1.59 | 44.06 | 1.59 |
| Black | 36.59<sup>C2</sup> | 3.34 | 37.63<sup>C2</sup> | 3.87 | 23.81<sup>MTF,C1</sup> | 3.38 | 30.08 | 3.62 |
| Hispanic | 33.93 | 2.69 | 34.94 | 3.13 | 33.14 | 3.34 | 31.96 | 3.10 |
| Other | 38.41 | 3.06 | 39.08 | 3.50 | 41.75 | 3.35 | 42.47 | 3.69 |
| Parent education | | | | | | | | |
| High school or less | 36.39 | 2.03 | 33.57 | 2.48 | 34.44 | 2.49 | 29.78 | 2.48 |
| Some college/more | 46.79<sup>C2</sup> | 1.28 | 45.79<sup>C2</sup> | 1.46 | 39.78<sup>MTF,C1,C3</sup> | 1.44 | 44.53<sup>C2</sup> | 1.45 |
| 4-year college plans | | | | | | | | |
| Not definitely | 36.77 | 1.69 | 35.80 | 2.08 | 34.18 | 2.01 | 34.12 | 2.10 |
| Definitely | 48.89<sup>C2</sup> | 1.41 | 47.03<sup>C2</sup> | 1.60 | 41.02<sup>MTF,C1</sup> | 1.59 | 44.89 | 1.58 |
| Any lifetime substance use | | | | | | | | |
| Alcohol | 40.27<sup>C2</sup> | 1.29 | 39.89 | 1.52 | 35.71<sup>MTF</sup> | 1.50 | 38.20 | 1.46 |
| Cigarettes | 35.93<sup>C2</sup> | 1.65 | 36.57<sup>C2</sup> | 2.02 | 28.87<sup>MTF,C1</sup> | 1.91 | 34.26 | 1.91 |
| Marijuana | 35.28 | 1.52 | 37.53 | 1.79 | 31.49 | 1.77 | 34.01 | 1.73 |
| Other illicit drugs | 36.12 | 1.92 | 35.57 | 2.35 | 31.42<sup>C3</sup> | 2.40 | 40.53<sup>C2</sup> | 2.40 |

Note.— All comparisons between conditions were nonsignificant, unless otherwise noted. C1 = response rate was significantly different from that in Condition 1. C2 = response rate was significantly different from that in Condition 2. C3 = response rate was significantly different from that in Condition 3. MTF = response rate was significantly different from that in the MTF Control Group (p<0.05).
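The pairwise comparisons flagged in table 3 can be roughly approximated from the published percentages and standard errors alone. The sketch below applies a two-sample z-test to the "Total (overall)" row; it assumes the groups are independent and that the reported SEs already reflect the weighting, so it approximates rather than reproduces the weighted difference in proportions tests used in the analysis. The function name `z_test_from_se` is hypothetical.

```python
import math


def z_test_from_se(pct1, se1, pct2, se2):
    """Two-sided z-test for a difference between two independent percentages."""
    z = (pct1 - pct2) / math.sqrt(se1 ** 2 + se2 ** 2)
    # Two-sided p-value under the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value


# Overall response rates (%, SE) from the "Total (overall)" row of table 3.
overall = {
    "MTF Control": (43.57, 1.07),
    "Condition 1": (42.51, 1.25),
    "Condition 2": (38.02, 1.23),
    "Condition 3": (40.99, 1.24),
}

names = list(overall)
results = {}
for i, a in enumerate(names):
    for b in names[i + 1:]:
        z, p = z_test_from_se(*overall[a], *overall[b])
        results[(a, b)] = (z, p)
        print(f"{a} vs {b}: z = {z:.2f}, p = {p:.3f}")
```

Under these simplifying assumptions, only the two comparisons involving Condition 2 against MTF Control and against Condition 1 fall below p = 0.05, which matches the pattern of markers in the table.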

Table 4.

Multiple Logistic Regression Predicting Any Response (1) versus No Response (0) Based on Experimental Condition and Baseline Characteristics

| | AOR (95% CI) |
|---|---|
| Condition | |
| MTF Control (versus Condition 1 [Mail Push]) | 1.076 (0.931–1.245) |
| Condition 2 [Web Push] (versus Condition 1 [Mail Push]) | 0.849 (0.725–0.995)* |
| Condition 3 [Web Push+E-mail] (versus Condition 1 [Mail Push]) | 0.921 (0.787–1.077) |
| Class year 2013 (versus 2012) | 1.234 (1.110–1.372)*** |
| Male | 0.754 (0.678–0.840)*** |
| Race/ethnicity | |
| Black (versus white) | 0.612 (0.502–0.747)*** |
| Hispanic (versus white) | 0.717 (0.605–0.848)*** |
| Other (versus white) | 0.795 (0.673–0.939)** |
| Parent some college education | 1.318 (1.155–1.505)*** |
| 4-year college plans (definite) | 1.375 (1.225–1.544)*** |
| Any lifetime substance use (age 18) | |
| Alcohol use (versus no use) | 0.808 (0.706–0.924)** |
| Cigarette use (versus no use) | 0.818 (0.712–0.940)** |
| Marijuana use (versus no use) | 0.775 (0.672–0.892)*** |
| Other illicit drug use (versus no use) | 1.001 (0.870–1.151) |

Note.— Weighted n = 5,575. AOR = adjusted odds ratio. * p<0.05. ** p<0.01. *** p<0.001.
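To show how the adjusted odds ratios and confidence intervals in table 4 relate to the significance markers, the sketch below converts an AOR and its 95% CI back to a log-odds coefficient, an approximate standard error, and a Wald z statistic. It assumes the intervals are symmetric on the log scale and were built with a critical value of 1.96; the function name `wald_from_aor` is hypothetical, and rounding in the published values makes the results approximate.

```python
import math


def wald_from_aor(aor, lower, upper, z_crit=1.96):
    """Recover log-odds, standard error, Wald z, and p-value from an AOR and CI."""
    beta = math.log(aor)
    # The CI spans beta +/- z_crit * se on the log-odds scale.
    se = (math.log(upper) - math.log(lower)) / (2 * z_crit)
    z = beta / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    return beta, se, z, p_value


# Condition contrasts from table 4, each versus Condition 1 (Mail Push).
contrasts = {
    "MTF Control": (1.076, 0.931, 1.245),
    "Condition 2": (0.849, 0.725, 0.995),
    "Condition 3": (0.921, 0.787, 1.077),
}

wald = {name: wald_from_aor(*vals) for name, vals in contrasts.items()}
for name, (beta, se, z, p) in wald.items():
    print(f"{name}: beta = {beta:.3f}, SE = {se:.3f}, z = {z:.2f}, p = {p:.3f}")
```

An interval that excludes 1 on the odds scale corresponds to |z| above the critical value, which is why only the Condition 2 contrast carries an asterisk.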

The third research aim was to examine mode choice based on experimental condition, shown in table 5. Participants in the MTF Control were not offered the web option. The percentage of people responding via the web increased significantly across conditions, with Condition 1 (Mail Push) having the lowest and Condition 3 (Web Push + E-mail) having the highest web response. Multiple logistic regression was used to examine who chose to respond via the web, shown in table 6. Participants in Condition 3 (versus Conditions 1 and 2) had the highest odds of responding via the web, and those in Condition 2 also had higher odds than did those in Condition 1. Subgroups that showed greater odds of responding via the web rather than via paper included Hispanics (versus whites), those whose parents had some college education (versus none), and current full-time college students (versus others). Differences in web response between Conditions 2 and 3, by baseline characteristics, are shown in table 7; all comparisons with Condition 1 (not shown) were significant.

Table 5.

Mode Choice (among Responders) by Condition

| | MTF Control | | Condition 1 (Mail Push) | | Condition 2 (Web Push) | | Condition 3 (Web Push + E-mail) | |
|---|---|---|---|---|---|---|---|---|
| | % | SE | % | SE | % | SE | % | SE |
| Paper responders | 100.00 | 0.00 | 85.63 | 0.01 | 36.19 | 0.02 | 25.04 | 0.02 |
| Web responders | N/A | | 14.37 | 0.01 | 63.81 | 0.02 | 74.96 | 0.02 |

Note.— All comparisons of Conditions 1, 2, and 3 within mode were significantly different (p<0.05), except C2 versus C3 paper response did not differ.
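As a worked illustration of the size of these mode differences, the web response percentages in table 5 can be converted into unadjusted odds ratios relative to Condition 1. This sketch is not part of the reported analysis, and the function names are hypothetical. The resulting crude ratios are not expected to equal the adjusted odds ratios in table 6, which control for respondent characteristics and use a smaller analytic sample.

```python
def odds(pct):
    """Convert a percentage into odds."""
    p = pct / 100.0
    return p / (1.0 - p)


def odds_ratio(pct_a, pct_b):
    """Unadjusted odds ratio of group A versus group B."""
    return odds(pct_a) / odds(pct_b)


# Percentage of responders answering via the web (table 5).
web_pct = {"Condition 1": 14.37, "Condition 2": 63.81, "Condition 3": 74.96}

or_c2_vs_c1 = odds_ratio(web_pct["Condition 2"], web_pct["Condition 1"])
or_c3_vs_c1 = odds_ratio(web_pct["Condition 3"], web_pct["Condition 1"])
print(f"Condition 2 vs 1: crude OR = {or_c2_vs_c1:.2f}")
print(f"Condition 3 vs 1: crude OR = {or_c3_vs_c1:.2f}")
```

The crude ratios come out at roughly 10.5 and 17.8, the same order of magnitude as the adjusted estimates reported in table 6.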

Table 6.

Multiple Logistic Regression Predicting Web Response (1) versus Paper Response (0) among Responders, Based on Condition and Baseline (Age 18) and Concurrent (Age 19/20) Characteristics

| | AOR (95% CI) |
|---|---|
| Condition | |
| Condition 2 [Web Push] (versus Condition 1 [Mail Push]) | 12.066 (8.626–16.125)*** |
| Condition 3 [Web Push+E-mail] (versus Condition 1 [Mail Push]) | 20.683 (14.238–27.326)*** |
| Class year 2013 (versus 2012) | 0.900 (0.729–1.202) |
| Male | 1.123 (0.896–1.485) |
| Race/ethnicity | |
| Black (versus white) | 1.222 (0.819–2.321) |
| Hispanic (versus white) | 1.942 (1.202–2.820)** |
| Other (versus white) | 1.978 (0.710–1.496) |
| Parent some college education | 1.405 (1.010–1.932)* |
| 4-year college plans (definite) | 0.898 (0.698–1.302) |
| Any lifetime substance use (age 18) | |
| Alcohol use (versus no use) | 0.849 (0.594–1.114) |
| Cigarette use (versus no use) | 0.971 (0.722–1.433) |
| Marijuana use (versus no use) | 1.062 (0.725–1.427) |
| Other illicit drug use (versus no use) | 0.924 (0.650–1.315) |
| Age 19/20 characteristics | |
| College student (versus not full-time 4-year student) | 1.550 (1.099–2.084)** |
| Live with parents (versus other) | 0.927 (0.686–1.219) |
| Employed (versus not) | 0.869 (0.695–1.166) |
| Past 30-day alcohol use (versus no use) | 1.136 (0.865–1.548) |
| Past 30-day cigarette use (versus no use) | 0.903 (0.596–1.371) |
| Past 30-day marijuana use (versus no use) | 0.849 (0.633–1.277) |
| Past 30-day other illicit drug use (versus no use) | 1.427 (0.834–2.441) |

Note.— Weighted n = 1,440. Sample is responders in Conditions 1, 2, and 3. AOR = adjusted odds ratio.

\* p<0.05. \*\* p<0.01. \*\*\* p<0.001.

Table 7.

Web Response Rates by Baseline Characteristics, by Condition

| | Condition 2 (Web Push) | | Condition 3 (Web Push + E-mail) | |
|---|---|---|---|---|
| | % | SE | % | SE |
| Total (overall) | 63.70*** | 1.98 | 74.76 | 1.71 |
| Class year | | | | |
| 2012 | 62.02*** | 2.96 | 75.08 | 2.46 |
| 2013 | 65.10* | 2.66 | 74.47 | 2.39 |
| Gender | | | | |
| Male | 64.08** | 3.04 | 75.87 | 2.49 |
| Female | 63.42** | 2.61 | 73.85 | 2.36 |
| Race/ethnicity | | | | |
| White | 64.59** | 2.43 | 73.91 | 2.12 |
| Black | 60.66 | 7.90 | 78.06 | 5.94 |
| Hispanic | 67.12 | 5.82 | 71.88 | 5.25 |
| Other | 58.65** | 5.15 | 78.80 | 4.68 |
| Parent education | | | | |
| High school or less | 56.13* | 4.46 | 70.32 | 4.57 |
| Some college/more | 65.97*** | 2.22 | 75.65 | 1.88 |
| 4-year college plans | | | | |
| Not definitely | 58.66** | 3.58 | 72.99 | 3.37 |
| Definitely | 66.29** | 2.40 | 75.61 | 2.03 |
| Any lifetime substance use | | | | |
| Alcohol | 59.82*** | 2.58 | 73.12 | 2.15 |
| Cigarettes | 60.44** | 3.85 | 73.06 | 3.05 |
| Marijuana | 59.50*** | 3.35 | 74.62 | 2.71 |
| Other illicit drugs | 60.83 | 4.54 | 70.13 | 3.56 |

Note.— Comparisons between Conditions 2 and 3 are shown.

\*\*\* p<0.001. \*\* p<0.01. \* p<0.05. All comparisons between Condition 1 and Conditions 2 and 3 (not shown) were significant at p<0.001.
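As a rough check on how a Condition 2 versus Condition 3 comparison in table 7 relates to the reported percentages and standard errors, the sketch below computes a simple two-sample z statistic from the "Total (overall)" row. It assumes the two estimates are independent and approximately normal; the tests actually used in the study may have accounted for the survey design differently, so this is an illustration rather than a reproduction. The function name `two_sample_z` is hypothetical.

```python
import math


def two_sample_z(est1, se1, est2, se2):
    """Approximate z test for the difference between two independent estimates.

    Assumption (not from the paper): the estimates are independent and
    approximately normal, so the SE of the difference is the root sum of squares.
    """
    diff = est2 - est1
    se_diff = math.sqrt(se1 ** 2 + se2 ** 2)
    z = diff / se_diff
    # Two-sided p-value from the standard normal distribution.
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return diff, z, p_value


# Table 7, "Total (overall)" row: Condition 2 = 63.70 (SE 1.98), Condition 3 = 74.76 (SE 1.71).
diff, z, p_value = two_sample_z(63.70, 1.98, 74.76, 1.71)
print(f"difference = {diff:.2f} points, z = {z:.2f}, p = {p_value:.5f}")
```

Under these assumptions the difference of about 11 percentage points falls below p = 0.001, in line with the three-asterisk marker on that row.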

The fourth research aim was to examine responses to the web-based survey by device and related data quality. The distribution of device type is shown in table 8. Participants were encouraged to use “a desktop, laptop, or tablet” because the survey was not optimized for smartphones. Nonetheless, 12% of participants who responded to the web survey used smartphones. Participants in Condition 3 (Web Push + E-mail) less often used a computer and more often used a smartphone compared to participants in Conditions 1 and 2. A multiple logistic regression was used to identify characteristics of those who used a smartphone (compared to a desktop, laptop, or tablet), shown in table 9, among those in Conditions 2 and 3; Condition 1 participants were excluded from this analysis because of very low response via smartphone (weighted n = 4). Participants in Condition 3 (Web Push + E-mail; versus Condition 2 [Web Push]) had higher odds of responding via smartphone. Participants who were black (versus white) had greater odds of responding via smartphone, and those who were full-time college students (versus others) had lower odds of responding via smartphone.

Table 8.

Device Type Used by Web Responders, by Condition

| | Total | Condition 1 (Mail Push) | | Condition 2 (Web Push) | | Condition 3 (Web Push + E-mail) | |
|---|---|---|---|---|---|---|---|
| | % (weighted n) | % | SE | % | SE | % | SE |
| Computer | 83.83 (765) | 92.30 C3 | 2.70 | 88.47 C3 | 1.63 | 78.71 C1,C2 | 1.86 |
| Tablet | 4.38 (40) | 2.19 | 1.53 | 3.88 | 1.00 | 5.24 | 1.02 |
| Smartphone | 11.79 (108) | 5.51 C3 | 2.28 | 7.64 C3 | 1.37 | 16.05 C1,C2 | 1.66 |

Note.— Sample includes cases of respondents who chose to respond by web but broke off before completing the survey. All comparisons between conditions were nonsignificant, unless otherwise noted. C1 = response rate was significantly different from that in Condition 1. C2 = response rate was significantly different from that in Condition 2. C3 = response rate was significantly different from that in Condition 3 (p<0.05).

Table 9.

Multiple Logistic Regression Predicting Smartphone Response (1) versus Computer/Tablet (Desktop, Laptop, or Tablet) Response (0) among Web Responders, Based on Condition and Baseline (Age 18) and Concurrent (Age 19/20) Characteristics

| | AOR (95% CI) |
|---|---|
| Condition | |
| Condition 3 [Web Push+E-mail] (versus Condition 2 [Web Push]) | 2.437 (1.390–4.274)** |
| Class year 2013 (versus 2012) | 1.347 (0.796–2.281) |
| Male | 1.163 (0.698–1.939) |
| Race/ethnicity | |
| Black (versus white) | 4.343 (1.992–9.466)*** |
| Hispanic (versus white) | 0.960 (0.380–2.426) |
| Other (versus white) | 1.445 (0.661–3.158) |
| Parent some college education | 0.677 (0.360–1.275) |
| 4-year college plans (definite) | 1.248 (0.674–2.313) |
| Substance use (age 18) | |
| Any lifetime alcohol use (versus no use) | 1.624 (0.783–3.368) |
| Any lifetime cigarette use (versus no use) | 1.231 (0.690–2.197) |
| Any lifetime marijuana use (versus no use) | 1.324 (0.703–2.495) |
| Any lifetime other illicit drug use (versus no use) | 0.748 (0.390–1.436) |
| Age 19/20 characteristics | |
| College student (versus not full-time 4-year student) | 0.467 (0.266–0.822)** |
| Live with parents (versus other) | 0.843 (0.468–1.517) |
| Employed (versus not) | 0.959 (0.564–1.629) |
| Past 30-day alcohol use (versus no use) | 0.849 (0.439–1.642) |
| Past 30-day cigarette use (versus no use) | 1.548 (0.753–3.183) |
| Past 30-day marijuana use (versus no use) | 0.732 (0.358–1.497) |
| Past 30-day other illicit drug use (versus no use) | 0.881 (0.297–2.617) |

Note.— Weighted n = 666 (n = 77 for smartphone, n = 641 for computer/tablet). Condition 1 respondents were excluded because of very low (weighted n = 4) response via smartphone. Web cases that broke off were excluded. AOR = adjusted odds ratio.

\* p<0.05. \*\* p<0.01. \*\*\* p<0.001.
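For readers who want to relate the adjusted odds ratios in table 9 to their significance markers, the sketch below recovers the log-odds coefficient, its approximate standard error, and a Wald z statistic from a reported AOR and 95% confidence interval. It assumes the interval was formed as exp(beta ± 1.96 × SE), which is the usual construction but is not stated in the text; the function name `log_odds_summary` is hypothetical.

```python
import math


def log_odds_summary(aor, ci_low, ci_high, z_crit=1.96):
    """Back out the log-odds coefficient and SE from an AOR and its 95% CI.

    Assumption (not from the paper): the CI is exp(beta +/- z_crit * SE).
    """
    beta = math.log(aor)
    se = (math.log(ci_high) - math.log(ci_low)) / (2 * z_crit)
    z = beta / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p-value
    # Under the assumption above, the AOR is the geometric mean of the CI bounds.
    ci_midpoint = math.sqrt(ci_low * ci_high)
    return beta, se, z, p_value, ci_midpoint


# Table 9: Condition 3 versus Condition 2, AOR 2.437 (1.390-4.274).
beta, se, z, p_value, ci_midpoint = log_odds_summary(2.437, 1.390, 4.274)
print(f"beta = {beta:.3f}, SE = {se:.3f}, z = {z:.2f}, p = {p_value:.4f}")
print(f"geometric mean of CI bounds = {ci_midpoint:.3f}")
```

With these inputs the implied p-value lies between 0.001 and 0.01, matching the two-asterisk marker, and the geometric mean of the bounds reproduces the reported AOR to three decimals.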

We also explored the effect of smartphone use on survey breakoffs, multiple sessions, and time to complete the survey. We found that breakoffs were significantly higher for smartphone users (11.6% of those who started broke off), compared to tablet (3.6%) and computer (1.9%) users. Multiple sessions were also higher for smartphone users (18.6% of people who completed the survey on a smartphone logged in two or more times), compared to tablet (7.6%) and computer (9.1%) users. Finally, median times to complete the survey were longer for smartphone users (54.4 minutes) compared to tablet (37.7 minutes) and computer (40.5 minutes) users. These findings all match the emerging literature on device use (see Couper, Antoun, and Mavletova in press for a summary).

The fifth research aim was to examine item nonresponse across condition, mode (web versus paper), and device (computer, tablet, smartphone). We examined total percent of item-missing data, as well as specific rates of missing data on key items of interest to the study (i.e., marital status, employment status, highest degree completed) reported at age 19/20 by condition, mode, and device type (shown in Appendix B). We found differences across condition, such that Conditions 2 and 3 had lower rates of total missing data than MTF Control. In addition, the web survey had a lower rate of item missing data compared to the paper survey (across all responders from MTF Control, Condition 1, Condition 2, and Condition 3). Among web responders, there were no significant differences across type of device used for the web survey (computer, tablet, smartphone). Furthermore, rates of missing data on specific items of interest did not significantly vary.

The sixth research aim was to determine whether the main data of interest (i.e., substance use rates reported at age 19/20 years) differed by experimental condition. Standard procedures to adjust for sampling (but not for nonresponse) were used. Here we show past 30-day levels of the most commonly used substances: alcohol, cigarettes, and marijuana, as well as a composite for use of any illicit drug other than marijuana. We do not present the tests conducted specifically for differences in less commonly used substances (i.e., heroin, inhalants, and methamphetamines) because levels were very low and no significant differences were found. Table 10 shows the past 30-day prevalence of alcohol, cigarettes, marijuana, and other illicit drugs by (A) condition, (B) mode of response, and (C) device type. By condition, Condition 3 (Web Push + E-mail) respondents reported higher levels of alcohol use than MTF Control participants and higher levels of cigarette use than Condition 2 (Web Push) respondents. However, some of these differences (i.e., higher rates of alcohol use among Condition 3 participants) were preexisting; they were also evident at baseline (see table 1). There were no significant differences by mode (web versus paper) or device type.

Table 10.

Prevalence of Substance Use in the Past 30 Days Reported at Modal Ages 19/20

A) By Condition

| | MTF Control | | Condition 1 (Mail Push) | | Condition 2 (Web Push) | | Condition 3 (Web Push + E-mail) | |
|---|---|---|---|---|---|---|---|---|
| | % | SE | % | SE | % | SE | % | SE |
| Alcohol | 46.8 C3 | 1.67 | 50.8 | 1.98 | 49.3 | 2.11 | 53.3 MTF | 2.02 |
| Cigarettes | 12.0 | 1.03 | 13.6 | 1.29 | 10.6 C3 | 1.22 | 15.4 C2 | 1.39 |
| Marijuana | 20.2 | 1.28 | 20.9 | 1.56 | 19.8 | 1.64 | 22.2 | 1.63 |
| Illicit drugs | 8.1 | 0.84 | 7.0 | 0.96 | 5.6 | 0.92 | 6.4 | 0.94 |

B) By Mode of Response

| | Paper | | Web | |
|---|---|---|---|---|
| | % | SE | % | SE |
| Alcohol | 49.7 | 1.67 | 52.7 | 1.65 |
| Cigarettes | 14.2 | 1.11 | 12.4 | 1.03 |
| Marijuana | 21.6 | 1.33 | 20.4 | 1.29 |
| Illicit drugs | 6.1 | 0.76 | 6.6 | 0.78 |

C) By Device Type

| | Smartphone | | Desktop, laptop, or tablet | |
|---|---|---|---|---|
| | % | SE | % | SE |
| Alcohol | 52.4 | 5.21 | 51.6 | 1.87 |
| Cigarettes | 18.2 | 3.79 | 10.8 | 1.09 |
| Marijuana | 20.9 | 4.05 | 20.6 | 1.47 |
| Illicit drugs | 5.4 | 2.24 | 6.5 | 0.88 |

Note.— A) All comparisons between conditions were nonsignificant unless otherwise noted. MTF = prevalence was significantly different from that in MTF Control. C1 = prevalence was significantly different from that in Condition 1. C2 = prevalence was significantly different from that in Condition 2. C3 = prevalence was significantly different from that in Condition 3 (p<0.05).

Note.— B) Sample includes responders in Conditions 1, 2, and 3. There were no significant differences between paper and web.

Note.— C) Sample includes those who completed web-based surveys in Conditions 2 and 3.

Finally, the seventh research question concerned the cost of each design. Costs were calculated to include survey mailing materials, postage, labor (from our own staff and survey research operations staff [including fringes]), incentives, daily web operations, paper survey scanning, and nonresponse calling. Costs that were not included were costs associated with survey design (for the web survey or the paper survey) and staff time expected to be approximately equal across conditions (i.e., for nonresponse respondent tracking and data cleaning). The cost per targeted respondent is the cost divided across the number of targeted participants. The cost per participant is the cost divided across the number of people who actually participated. Results are reported in table 11. (The costs of the MTF main study are not shown, given the complexity in separating the costs for the age 19/20 data collection in the context of an ongoing study collecting data from participants from age 14 to age 55. However, the design used in Condition 1 requires the same number of mailings and is expected to be very similar to the MTF costs for age 19/20 data collection.) As shown, the lowest cost for both targeted respondents and actual respondents is for Condition 3 (Web Push + E-mail). Cost differences are largely a result of significantly reduced material and labor costs associated with the initial mailing (i.e., sending an initial letter rather than a questionnaire packet) and the reminder (reduced paper because 552 participants [Condition 2 n = 222, Condition 3 n = 330] completed the survey online in the first month and therefore did not need a reminder).

Table 11.

Estimated Change in Cost Compared to Current MTF Procedures, by Condition

| | Cost per targeted respondent | Cost per participant |
|---|---|---|
| Mail Push (Condition 1) | 0 | 0 |
| Web Push (Condition 2) | −4% | +8% |
| Web Push + E-mail (Condition 3) | −8% | −5% |

Note.— Costs were calculated to include survey mailing materials, postage, labor (from our own staff and survey research operations staff [including fringes]), incentives, daily web operations, paper survey scanning, and nonresponse calling. Costs that were not included were costs associated with survey design (for the web survey or the paper survey) and staff time expected to be approximately equal across conditions (i.e., for nonresponse respondent tracking and data cleaning). The cost per targeted respondent is the cost divided across the number of targeted participants. The cost per participant is the cost divided across the number of people who actually participated.
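The two cost columns in table 11 are linked through the response rate. If cost per targeted respondent is total cost divided by the number targeted, and cost per participant is total cost divided by the number who participated, then cost per participant equals cost per targeted respondent divided by the response rate. The sketch below uses that identity to back out each condition's response rate relative to Condition 1 from the two percentage changes. It is an illustration only: the table values are rounded, and the function name `implied_relative_response` is hypothetical.

```python
def implied_relative_response(delta_targeted, delta_participant):
    """Response rate relative to the reference condition implied by two cost changes.

    cost_per_participant = cost_per_targeted / response_rate, so
    rr_condition / rr_reference = (1 + delta_targeted) / (1 + delta_participant).
    Inputs are proportional changes versus Condition 1 (e.g., -0.04 for -4%).
    """
    return (1 + delta_targeted) / (1 + delta_participant)


# Table 11 values (rounded percentages relative to Condition 1, Mail Push).
web_push = implied_relative_response(-0.04, 0.08)
web_push_email = implied_relative_response(-0.08, -0.05)
print(f"Condition 2 relative response rate: {web_push:.3f}")
print(f"Condition 3 relative response rate: {web_push_email:.3f}")
```

This shows how Condition 2 can be cheaper per targeted respondent yet more expensive per participant: its savings are spread over proportionally fewer responders than in Condition 1.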

Cost differences across conditions were also affected by differences in rates of cashing incentive checks. Participants in Condition 3 (Web Push + E-mail) were more likely to do the survey without cashing their incentive check (20.0% of responders), compared to Condition 1 (6.7%) and Condition 2 (12.8%). There were approximately equal rates of cashing the check without completing the survey: 28.9% of nonresponders in Condition 1, 27.4% in Condition 2, and 29.7% in Condition 3. Overall, 55.9% in Condition 1, 49.1% in Condition 2, and 49.6% in Condition 3 cashed a check. Of respondents who completed a paper questionnaire, 95% of Condition 1, 79% of Condition 2, and 78% of Condition 3 cashed the check. Of respondents who completed a web survey, 82% of Condition 1, 92% of Condition 2, and 81% of Condition 3 cashed the check.

4. DISCUSSION

Results show that overall response rates were lower in the Web Push without e-mail condition compared to the MTF Control and to the Mail Push condition. Response rates in the Mail Push and the Web Push + E-mail conditions did not differ significantly from the MTF Control. Participants in the Web Push + E-mail condition were most likely to respond via the web survey and most likely to respond via smartphone. Based on multivariable analyses, Hispanics were more likely than whites to respond on the web, as were those with higher parent education and those currently in college. Blacks were more likely than whites to respond via smartphone, and young adults not attending college were more likely than full-time four-year college students to respond via smartphone. These results suggest that demographic groups that were less likely to respond at follow-up overall across conditions had higher odds of response to the web survey (Hispanic young adults) and using smartphones (black young adults). Therefore, future efforts to facilitate response via a smartphone may help mitigate racial/ethnic differences in follow-up response.

Total percentages of item-level missing data (i.e., item nonresponse as an indicator of data quality) differed by condition and mode. The two Web Push conditions had lower missing data rates than MTF Control, and the web response overall had lower missing data than the paper response. Total percent missing data did not differ by device type among web responders.

There were some differences in key variables of interest related to substance use. Web Push + E-mail respondents reported higher rates of alcohol use (53%) compared to MTF (47%) and higher rates of cigarette use (15%) compared to Web Push (11%). We found higher rates of cigarette use among smartphone responders compared to desktop, laptop, or tablet responders. Although previous studies have shown comparable rates of substance use across modes (Denscombe 2006; McCabe 2004; McCabe, Boyd, Couper, Crawford, and D’Arcy 2002), the previous work randomized people to respond via different modes while the current study gave participants the option of response mode. Therefore, the current results also reflect selection effects because young adults are differentially likely to respond via web and via smartphone, particularly based on race/ethnicity and college status groups that have previously been shown to have different rates of substance use (Johnston et al. 2015). Future research should continue to investigate sample characteristics associated with self-selection into different response modes and potential differences in validity of responses across response modes to help determine why the differences were observed in the current study.

The Web Push + E-mail condition was the most cost-effective. However, more work needs to be done to understand differences in respondent incentive check cashing among the Web Push + E-mail group. Lower rates of check cashing could indicate that respondents discarded or never received the paper mailing but were able to respond to the survey through the e-mail invitation. This would suggest that e-mail is important for encouraging response among people who may not receive or open the paper mailing. At the same time, not cashing the incentive check could detrimentally impact future participation.

4.1 Conclusions and Future Directions

Data used in the current study are from the baseline and first follow-up of the national Monitoring the Future Study. Therefore, participants were aged 19/20 years during the experimental manipulation described and had previously participated in the longitudinal study; results may not generalize to participants of other ages or to other study designs. The Web Push + E-mail condition was promising, as others (e.g., Israel 2013; Millar and Dillman 2011) have found, and may lead to cost savings without a significant negative impact on response rates, and potentially a reduced missing data rate. The effectiveness of this condition in increasing the proportion of web responses points to the potential value of collecting and using e-mail addresses for follow-up in longitudinal studies (see also Bandilla, Couper, and Kaczmirek 2014; Cernat and Lynn 2014). The Web Push + E-mail condition could be further strengthened by obtaining more accurate and current e-mail addresses for participants; just under two-thirds of participants provided an e-mail address at age 18 that was usable during the age 19/20 follow-up. Our results also suggest the importance of examining the effect of mode changes on substantive variables in longitudinal studies. We found some differences in key substance use indicators, but given that these are likely due to selection effects rather than mode effects, further work is needed to explore adjustments for these effects in considerations of long-term trends. Longitudinal work is warranted to examine how these experimental conditions may affect retention rates across young adulthood. While much research has examined cross-sectional differences in response rates, the effects of such mode switches on longitudinal outcomes and analyses of trends need further exploration.

Additional work is also needed to optimize the survey questionnaires for smartphones, given the potential to reach relatively difficult-to-reach groups (e.g., African American participants, those not in college). Optimizing for smartphones could serve to streamline and improve the survey experience, thus increasing ongoing retention. Additional e-mail contact and text messaging may also be relatively inexpensive ways to increase web and mobile responding. In conclusion, integrating web and mobile survey techniques into large, national, longitudinal studies should be done carefully due to potential differences in responding. While proceeding thoughtfully, we should continue to explore these methods due to their potential to make data collection more cost-effective and more representative of the population.

Appendix A

A.1. Characteristics of Participants Providing Usable E-mail Addresses

| | | Usable e-mail | No e-mail or unusable e-mail (a) | Chi2 |
|---|---|---|---|---|
| Total | 100.00 (n = 1,646) | 64.03 (n = 1,050) | 35.97 (n = 596) | |
| Survey year | 2012 | 61.36 | 38.64 | 4.84* |
| | 2013 | 66.57 | 33.43 | |
| Sex | Male | 65.56 | 34.44 | 1.59 |
| | Female | 62.58 | 37.42 | |
| Race | White | 62.54 | 37.46 | 5.02 |
| | Black | 69.97 | 30.03 | |
| | Hispanic | 63.64 | 36.36 | |
| | Others | 68.22 | 31.78 | |
| Parent college | Yes | 66.44 | 33.56 | 7.05** |
| | No | 58.82 | 41.18 | |
| College plan | Yes | 66.78 | 33.22 | 6.99** |
| | No | 60.02 | 39.98 | |

\* p < 0.05. \*\* p < 0.01.

(a) No e-mail address was provided by 23% of the sample; unusable e-mail addresses (i.e., where messages were automatically returned to the sender as undeliverable) were provided by 13% of the sample. For these analyses, no e-mail and unusable e-mail addresses were combined.

Appendix B

B.1. Percent Original Item Nonresponse at Modal Age 19/20 by Condition and by Survey Mode

| Age 19/20 questionnaire item | MTF Control | Condition 1 (Mail Push) | Condition 2 (Web Push) | Condition 3 (Web Push + E-mail) | Web survey | Paper survey |
|---|---|---|---|---|---|---|
| Total percent item-missing data | 4 C2,C3 | 3 | 3 | 2 | 1* | 4 |
| Specific items of interest: | | | | | | |
| Lifetime alcohol use | 3 | 3 | 4 | 3 | 3 | 4 |
| Past year cigarette use | 3 | 2 | 2 | 2 | 2 | 2 |
| Lifetime marijuana use | 2 | 2 | 3 | 3 | 3 | 2 |
| Marital status | 1 | 0 | 2 | 2 | 2 | 1 |
| Highest degree completed | 1 | 0 | 2 | 2 | 2 | 0 |
| Employment status | 4 | 3 | 2 | 4 | 4 | 3 |
| n per column | 879 | 634 | 566 | 610 | 910 | 1779 |

B.2.

| Age 19/20 questionnaire item | Laptop/desktop | Smartphone | Tablet |
|---|---|---|---|
| Total percent item-missing data | 1 | 1 | 2 |
| Specific items of interest: | | | |
| Lifetime alcohol use | 2 | 6 | 10 |
| Past year cigarette use | 1 | 4 | 3 |
| Lifetime marijuana use | 2 | 7 | 4 |
| Marital status | 1 | 6 | 3 |
| Highest degree completed | 2 | 6 | 3 |
| Employment status | 2 | 8 | 3 |
| n per column | 763 | 106 | 40 |

B.1 Note.— Total percent item-missing data was calculated for each person as the number of items not answered divided by the number of eligible items, taking into account skips and conditional questions; the mean percent missing is shown for each column. Comparisons across conditions (MTF Control, Condition 1, Condition 2, and Condition 3) were not significant, unless otherwise noted. C2 = total percent item-missing data was significantly different from that in Condition 2. C3 = total percent item-missing data was significantly different from that in Condition 3 (p<0.05). Significant differences by mode (web versus paper) among all responders in MTF Control and Conditions 1, 2, and 3 are noted as \* p<0.05 for total percent item-missing data between the web and paper surveys.

B.2 Note.— All comparisons across mode among these web-based responders were not significant.
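The item-missing measure described in the B.1 note (items not answered divided by eligible items per person, then averaged across people) can be sketched as follows. The data layout is an assumption made for illustration: each respondent is a list in which `None` marks an unanswered eligible item and the hypothetical marker `SKIPPED` marks an item the respondent was not eligible to answer because of a skip pattern. Neither the layout nor the function names come from the study.

```python
from statistics import mean

SKIPPED = "skipped"  # hypothetical marker for items a respondent was routed past


def percent_item_missing(responses):
    """Percent of eligible items left unanswered by one respondent."""
    eligible = [r for r in responses if r != SKIPPED]
    if not eligible:
        return None  # no eligible items, so the rate is undefined
    missing = sum(1 for r in eligible if r is None)
    return 100.0 * missing / len(eligible)


def mean_percent_missing(respondents):
    """Mean of the per-person percentages, ignoring undefined cases."""
    rates = [percent_item_missing(r) for r in respondents]
    return mean(rate for rate in rates if rate is not None)


# Toy data: three respondents, four items each.
respondents = [
    [1, None, 3, SKIPPED],  # 1 of 3 eligible items missing
    [2, 2, 2, 2],           # nothing missing
    [None, 5, SKIPPED, 4],  # 1 of 3 eligible items missing
]
print(f"mean percent item-missing: {mean_percent_missing(respondents):.1f}")
```

Averaging per-person percentages, rather than pooling all items, gives each respondent equal weight regardless of how many items they were eligible to answer.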

References

- Bachman J. G., Johnston L. D., O'Malley P. M., Schulenberg J. E., Miech R. A. (2015), The Monitoring the Future Project after Four Decades: Design and Procedures (Monitoring the Future Occasional Paper No. 82), Ann Arbor, MI: Institute for Social Research.

- Bandilla W., Couper M. P., Kaczmirek L. (2012), “The Mode of Invitation for Web Surveys,” Survey Practice, 5(3). Available at: <http://www.surveypractice.org/index.php/SurveyPractice/article/view/20>. Date accessed: 15 January 2017.

- Bandilla W., Couper M. P., Kaczmirek L. (2014), “The Effectiveness of Mailed Invitations for Web Surveys and the Representativeness of Mixed-Mode Versus Internet-Only Samples,” Survey Practice, 7(4). Available at: <http://www.surveypractice.org/index.php/SurveyPractice/article/view/274>. Date accessed: 15 January 2017.

- Bensky E. N., Link M., Shuttles C. (2010), “Does the Timing of Offering Multiple Modes of Return Hurt the Response Rates?” Survey Practice, 3(5). Available at: <http://www.surveypractice.org/index.php/SurveyPractice/article/view/146>. Date accessed: 15 January 2017.

- Biemer P. P., Murphy J., Zimmer S., Berry C., Deng G., Lewis K. (2016), “A Test of Web/Papi Protocols and Incentives for the Residential Energy Consumption Survey,” paper presented at the Annual Meeting of the American Association for Public Opinion Research, Austin, TX.

- Borkan B. (2010), “The Mode Effect in Mixed-Mode Surveys Mail and Web Surveys,” Social Science Computer Review, 28, 371–380.

- Cantor D., Brick P. D., Han D., Aponte M. (2010), “Incorporating a Web Option in a Two-Phase Mail Survey,” paper presented at the Annual Meeting of the American Association for Public Opinion Research, Chicago, IL.

- Cernat A., Lynn P. (2014), “The Role of E-mail Addresses and E-mail Contact in Encouraging Web Response in a Mixed Mode Design,” Understanding Society Working Paper Series No. 2014-10, University of Essex, ISER, Colchester, England.

- Couper M. P. (1998), “Measuring Survey Quality in a CASIC Environment,” paper presented at the Joint Statistical Meetings of the American Statistical Association, Dallas, TX.

- Couper M. P., Antoun C., Mavletova A. (in press), “Mobile Web Surveys: A Total Survey Error Perspective,” in Total Survey Error in Practice, eds. Biemer P., Eckman S., Edwards B., de Leeuw E., Kreuter F., Lyberg L., Tucker C., West B., New York: Wiley.

- Couper M. P., Lyberg L. E. (2005), “The Use of Paradata in Survey Research,” Proceedings of the 54th Session of the International Statistical Institute, Sydney, Australia.

- Denscombe M. (2006), “Web-Based Questionnaires and the Mode Effect: An Evaluation Based on Completion Rates and Data Contents of near-Identical Questionnaires Delivered in Different Modes,” Social Science Computer Review, 24, 246–254.

- Friese C. R., Lee C. S., O’Brien S., Crawford S. D. (2010), “Multi-Mode and Method Experiment in a Study of Nurses,” Survey Practice, 3(5). Available at: <http://www.surveypractice.org/index.php/SurveyPractice/article/view/141>. Date accessed: 15 January 2017.

- Heerwegh D. (2003), “Explaining Response Latencies and Changing Answers Using Client-Side Paradata from a Web Survey,” Social Science Computer Review, 21, 360–373.

- Holmberg A., Lorenc B., Werner P. (2010), “Contact Strategies to Improve Participation Via the Web in a Mixed-Mode Mail and Web Survey,” Journal of Official Statistics, 26, 465–480.

- Israel G. (2013), “Combining Mail and E-Mail Contacts to Facilitate Participation in Mixed-Mode Surveys,” Social Science Computer Review, 31, 346–358.

- Israel G. (2009), “Obtaining Responses by Mail or Web: Response Rates and Data Consequences,” Survey Practice, 2(5). Available at: <http://www.surveypractice.org/index.php/SurveyPractice/article/view/177>. Date accessed: 15 January 2017.

- Johnston L. D., O'Malley P. M., Bachman J. G., Schulenberg J. E., Miech R. A. (2015), Monitoring the Future National Survey Results on Drug Use, 1975-2014: Volume 2, College Students and Adults Ages 19-55, Ann Arbor, MI: Institute for Social Research, The University of Michigan.

- Lagerstrøm B. O. (2011), “Web in Mix-Mode Surveys in Norway,” paper presented at the Seminar on General Population Surveys on the Web: Possibilities and Barriers, ESRC’s National Centre for Research Methods, London, UK.

- Lebrasseur D., Morin J.-P., Rodrigue J.-F., Taylor J. (2010), “Evaluation of the Innovations Implemented in the 2009 Canadian Census Test,” Proceedings of the American Statistical Association Survey Research Methods Section, pp. 4089–4097.

- Lesser V. M., Newton L., Yang D. (2010), “Does Providing a Choice of Survey Modes Influence Response?” paper presented at the Annual Conference of the American Association for Public Opinion Research, Chicago, IL.

- Lozar Manfreda K. L., Bosnjak M., Berzelak J., Haas I., Vehovar V. (2008), “Web Surveys Versus Other Survey Modes: A Meta-Analysis Comparing Response Rates,” International Journal of Market Research, 50, 79–104.

- Matthews B., Davis M. C., Tancreto J. G., Zelenak M. F., Ruiter M. (2012), “2011 American Community Survey Internet Tests: Results from Second Test in November 2011,” American Community Survey Research and Evaluation Report Memorandum Series #ACS12-RER-21, Washington, DC: U.S. Census Bureau.

- McCabe S. E. (2004), “Comparison of Web and Mail Surveys in Collecting Illicit Drug Use Data: A Randomized Experiment,” Journal of Drug Education, 34, 61–72.

- McCabe S. E., Boyd C. J., Couper M. P., Crawford S., D’Arcy H. (2002), “Mode Effects for Collecting Alcohol and Other Drug Use Data: Web and U.S. Mail,” Journal of Studies on Alcohol, 63, 755–761.

- Medway R., Fulton J. (2012), “When More Gets You Less: A Meta-Analysis of the Effect of Concurrent Web Options on Mail Survey Response Rates,” Public Opinion Quarterly, 76, 733–746.

- Messer B. L., Dillman D. A. (2011), “Surveying the General Public over the Internet Using Address-Based Sampling and Mail Contact Procedures,” Public Opinion Quarterly, 75, 429–457.

- Miech R. A., Johnston L. D., O'Malley P. M., Bachman J. G., Schulenberg J. E. (2016), Monitoring the Future National Survey Results on Drug Use, 1975-2015: Volume I, Secondary School Students, Ann Arbor: Institute for Social Research, The University of Michigan.

- Millar M. M., Dillman D. A. (2011), “Improving Response to Web and Mixed-Mode Surveys,” Public Opinion Quarterly, 75, 249–269.

- Newsome L., Levin K., Brick P. D., Langetieg P., Vigil M., Sebastiani M. (2013), “Multi-Mode Survey Administration: Does Offering Multiple Modes at Once Depress Response Rates?” paper presented at the Annual Meeting of the American Association for Public Opinion Research, Boston, MA.

- Olson K., Smyth J. D., Wood H. (2012), “Does Giving People Their Preferred Survey Mode Actually Increase Survey Participation Rates? An Experimental Examination,” Public Opinion Quarterly, 76, 611–635.

- Schwartz B. (2004), The Paradox of Choice: Why More Is Less, New York, NY: Harper Collins Publishers.

- Skjåk K. K., Kolsrud K. (2013), “Non-Response Bias in Mixed Mode Self-Completion Surveys,” paper presented at the WebDataNet Meeting, Reykjavik, Iceland.

- Smyth J. D., Dillman D. A., Christian L. M., O’Neill A. C. (2010), “Using the Internet to Survey Small Towns and Communities: Limitations and Possibilities in the Early 21st Century,” American Behavioral Scientist, 53, 1423–1448.

- Stern M. J. (2008), “The Use of Client-Side Paradata in Analyzing the Effects of Visual Layout on Changing Responses in Web Surveys,” Field Methods, 20, 377–398.

- Stevenson J., Dykema J., Kniss C., Black P., Moberg D. P. (2011), “Effects of Mode and Incentives on Response Rates, Costs, and Response Quality in a Survey of Alcohol Use Among Young Adults,” paper presented at the Annual Conference of the American Association for Public Opinion Research, Phoenix, AZ.

- Tancreto J., Zelenak M. F., Davis M. C., Ruiter M., Matthews B. (2012), “2011 American Community Survey Internet Tests: Results from First Test in April 2011,” American Community Survey Research and Evaluation Program, Final Report, Washington, DC: U.S. Census Bureau.

- Tourangeau R., Conrad F. G., Couper M. P. (2013), The Science of Web Surveys, New York: Oxford University Press.

- Tully R., Lerman A. (2013), “Comparing Mode Design Effects on Response Rate, Representativeness, and Costs in New Jersey Mixed Mode Surveys,” paper presented at the Annual Meeting of the American Association for Public Opinion Research, Boston, MA.