Abstract

DSM-5 Autism Spectrum Disorder (ASD) comprises a set of neurodevelopmental disorders characterized by deficits in social communication and interaction and repetitive behaviors or restricted interests, and may both affect and be affected by multiple cognitive mechanisms. This study attempts to identify and characterize cognitive subtypes within the ASD population using a random forest (RF) machine learning classification model. We trained our model on measures from seven tasks that reflect multiple levels of information processing. 47 ASD diagnosed and 58 typically developing (TD) children between the ages of 9 and 13 participated in this study. Our RF model was 72.7% accurate, with 80.7% specificity and 63.1% sensitivity. Using the RF model, we measured the proximity of each subject to every other subject, generating a distance matrix between participants. This matrix was then used in a community detection algorithm to identify subgroups within the ASD and TD groups, revealing 3 ASD and 4 TD putative subgroups with unique behavioral profiles. We then examined differences in functional brain systems between diagnostic groups and putative subgroups using resting-state functional connectivity magnetic resonance imaging (rsfcMRI). Chi-square tests revealed a significantly greater number of between group differences (p < .05) within the cingulo-opercular, visual, and default systems as well as differences in inter-system connections in the somato-motor, dorsal attention, and subcortical systems. Many of these differences were primarily driven by specific subgroups suggesting that our method could potentially parse the variation in brain mechanisms affected by ASD.

Keywords: supervised learning, random forests, autism, functional connectivity, MRI

Introduction

Issues in diagnosing and treating ASD

Lack of precision medicine in ASD

Autism Spectrum Disorders (ASD) comprise altered social interactions and/or communication, as well as the presence of stereotyped or repetitive behavior 1. The prevalence of ASD in the global population has been estimated around 1%, but that number has been growing over the past decade 2,3. The variability in symptoms, severity, and adaptive behavior impairment within the ASD population 4 complicates the development of effective treatments and improved diagnostic measures. Such variation also suggests the possibility of discrete ASD subphenotypes and is consistent with the evidence that ASD may encompass multiple etiologies 1,5. Therefore, identifying and differentiating subgroups in this population should help refine ASD diagnostic criteria and further the study of precision medicine for individuals with ASD.

Heterogeneity in ASD

The etiology of ASD is complex, and the ASD diagnosis has been related to multiple cognitive, sensory, and motor faculties 6. We focused here on the cognitive domain. A thorough review of cognitive mechanisms underlying ASD suggested that non-social cognitive mechanisms, including reward, executive function, attention, visual and auditory processing, may affect the presentation of social behavior regardless of specific impairment or the existence of domain-specific social cognitive mechanisms 7. We examined seven cognitive domains related to information processing and control that have varying levels of association with ASD: spatial working memory, response inhibition, temporal discounting of reward, attentional vigilance, facial recognition, facial affect processing and vocal affect processing.

Working Memory

Working memory refers here to a limited-capacity cognitive system that retains information in an accessible state which supports human thought processes 8. A vast literature in ASD reveals inconsistent findings as to whether visuospatial working memory may be impaired, suggesting the existence of ASD subgroups, which may drive the observed impairments. Early studies of working memory showed that high 9, but not low 10, functioning children with autism had impairments in verbal and non-verbal working memory. Another found no differences in working memory between children with or without ASD 11. Measures of non-verbal working memory on a non-spatial and non-verbal self-ordered pointing task correlate with visuospatial memory in children with ASD but not children without ASD 12. In contrast, children without ASD, but not children with ASD, show a relationship between language ability and verbal working memory 12. Such heterogeneity may reflect differences in how individuals with ASD utilize visuospatial memory to augment non-verbal working memory, whereas individuals without ASD may utilize language to augment verbal working memory 13.

More recent studies have supported the hypothesis that children with ASD may use different cognitive mechanisms to support working memory. A large-scale study revealed that children with ASD exhibited lower performance than unaffected children on a spatial span task 14, requiring children to repeat a sequence of fixed spatial locations indicated by a series of changing colors. Interestingly, the ASD participants had significantly lower verbal, but not performance, IQ. This study is consistent with findings from two recent studies on children with ASD 15,16, one of which showed that better performance on working memory tasks predicted faster development of play behavior 15. However, another recent study found no differences in a similar spatial span task 17. Taken together, all of these findings suggest working memory differences between children with and without ASD are inconsistent, and may be affected by sample differences that comprise different ASD subgroups.

Response Inhibition

Response inhibition refers here to the ability to inhibit a prepotent response, a lower level component of executive function 18. Over 40 studies have examined whether response inhibition is different between individuals with and without ASD 19. While a number of these studies are underpowered, several use large sample sizes and previously validated psychophysical tests. The results from these studies are quite variable, despite large sample sizes and similar task designs. For example, Geurts and colleagues used a stop task to compare stop signal reaction times between TD and ASD children and found a large effect of diagnosis 20, while a more recent study employing the same task found only a small effect of ASD when examining commission errors 21. Although sampling variation may explain divergent results, an interesting possibility is that heterogeneity in ASD helps explain the inconsistency across the literature 19.

Temporal Discounting of Reward

Temporal discounting refers here to the weakening of the subjective value of a reward due to a delay 22. A few studies 23–25 reveal that those with ASD have altered performance on delayed reward discounting tasks. On average, people naturally prefer immediate to delayed rewards of similar values. Different types of rewards may be discounted differently, and may reflect varying preferences for rewards associated with goal-oriented behavior. For example, individuals with ASD discount monetary and social rewards similarly, whereas typically developing (TD) individuals discount social rewards more than monetary rewards 24. ASD individuals may also discount monetary rewards more steeply with respect to time than TD individuals 25.

Attentional Vigilance

Attentional vigilance refers to the ability to maintain an alert state in the absence of an alerting stimulus. It is often measured using continuous performance tasks (CPTs). ASD performance on CPTs shows mixed results. An early study found no difference between children with and without ASD on CPT performance. However, the task used long displays and the parameters of the task were not shifted throughout 26. A more recent study using the same version of the task also failed to find differences between children with and without ASD. However, that study did find differences in EEG signals that are important for sustained and selective attention 27, suggesting that individuals with ASD may use an alternative, perhaps compensatory, strategy to perform similarly on CPTs. Consistent with this hypothesis, individuals with ASD show impaired performance on CPTs where the ratio of distractors to targets 28 or inter-stimulus interval 29 varies over the task duration. On the other hand, increasing attentional demands by crowding the visual display does not seem to affect performance in participants with ASD 30.

Processing of facial features, vocal affect, and facial emotion

Previous work has repeatedly suggested that individuals with ASDs may have trouble processing the arrangements of facial features, which may impair facial identity recognition and the ability to link speech to facial expressions. Individuals with ASD show impairments in searching for the eye region on a face 31. Unlike TD individuals, individuals with ASD are not faster at recognizing a part of the face when it is placed in the context of a whole face 32, and performance on facial identity recognition is not maintained when the orientation of a face is altered 33. Impairments in face processing may affect other domains; individuals with an ASD have difficulty integrating visual facial and auditory speech information 34 and do not use visual information from the mouth to guide speech perception 35.

However, results on facial emotion recognition are more mixed 36. Earlier studies found wide variation in facial emotion recognition performance in adults with an ASD 37,38. More recent studies have shown that facial recognition can be improved in ASD, but that this improvement may not generalize when recognizing emotions from faces 39. ASD participants trained to recognize basic emotions like ‘happy’ or ‘sad’ for a particular set of identities did not improve recognition on faces from novel identities. Furthermore, ASD participants did not improve at recognizing emotion when the eyes were presented in the context of a whole face, suggesting that such training did not enable individuals with ASD to process the eyes holistically 39.

In summary, multiple information processing streams may be affected in individuals with ASD, but the types of impairment may be heterogeneous within the ASD population, with different individuals showing varying patterns of difficulty. Critically, it is difficult to disentangle from these studies whether individuals with an ASD diagnosis comprise distinct subgroups, as shown by working memory and response inhibition findings. Therefore, it is critical to test whether ASD is heterogeneous categorically and/or multi-dimensionally. The identification of distinct ASD subgroups may enable better mapping of the cognitive domains affected by and/or responsible for ASD.

Lack of clear biomarkers in ASD

Due to the wide variation in behavioral measures related to ASD, many studies have sought brain-based biological markers to identify a common etiology across individuals with ASD. Markers that are measurable via MRI are highly desirable, because they may represent potential targets for diagnostic tools and/or treatments. Unfortunately, the results of these studies are varied due to differences in both study design and sample composition.

Structural brain biomarkers indicating heterogeneity

Reviews of structural MRI findings in ASD have found a wide range of putative biomarkers across independent studies 40–42. Developmental trajectories of whole-brain volume 43 may differ between individuals with and without ASD. Regionally, the temporal-parietal junction 44, anterior insula 44,45, posterior cingulate 46,47, lateral and medial prefrontal cortex 46, corpus callosum 48, intra-parietal sulcus 45,49, and occipital cortex 47 have all been shown to differ between samples with and without ASD. This has led a number of reviewers to suggest that the heterogeneity within the disorder may account for the divergent findings 40,41. Indeed, an interesting study by Christine Nordahl in 2007 examined differences between individuals diagnosed with high-functioning autism, Asperger’s, and low-functioning autism. Compared to TD individuals, these three samples showed varying cortical folding signatures, indicating that the mechanisms underlying the diagnosis for these samples may differ 45.

Functional brain biomarkers indicating heterogeneity

Studies of functional brain biomarkers for ASD have largely centered on studies of resting state functional connectivity MRI (rsfcMRI) for two reasons. First, the hemodynamic response in ASD children has been shown to be largely similar to the hemodynamic response in TD children 50, suggesting that differences in functional MRI reflect differences in neural activity. Second, the absence of a task enables one to examine differences across multiple brain regions and/or networks, similar to structural MRI.

Unfortunately, findings from rsfcMRI have also varied considerably from study to study. Studies have found altered connectivity within the dorsal attention network 51; default mode-network (DEF; 52); whole-brain 53,54 and subcortical-cortical 55 underconnectivity; whole-brain 56 and cortical-subcortical 57 hyperconnectivity; and altered connectivity within a discrete set of regions dubbed the “social brain” 58. Some studies 59,60 found no differences in functional connectivity. All of these studies differ not only in MRI processing strategies, but also in the diagnostic inclusion/exclusion criteria. More recent studies 51,58,59 also examined differences in processing strategy, but continued to show discrepant results. Taken together, the findings strongly suggest that ASD heterogeneity may limit the replicability of findings.

Machine Learning approaches in classifying ASD

Machine learning algorithms provide data-driven methods that can characterize ASD heterogeneity by identifying subgroups of individuals with ASD. However, despite recent studies demonstrating moderate success with such algorithms, most machine learning studies have focused only on identifying individuals with ASD. A large number of studies have tested whether imaging biomarkers can classify whether an individual has or does not have ASD. Early studies had small sample sizes of under 100 individuals and showed high classification accuracies ranging from 80 to 97 percent 61–64. Larger-scale studies of more than 100 individuals typically showed modest accuracy in the range of 60 to 80 percent 65–67. The discrepancies may indicate poor control of motion in some cases or over-fit models in others 68. Alternatively, the discrepancies might be the result of ASD heterogeneity. Along these latter lines, one of the best classifications of ASD was performed using Random Forests (RF; 67). RFs are random ensembles of independently grown decision trees, where each decision tree votes as a weak classifier, and classification into the same group can occur through different pathways. ASD classification was improved when behavioral features were incorporated into models, suggesting that ASD may be stratified by differences in brain function and behavior 65. Interestingly, random forests can also enable the identification of subgroups 69; however, to our knowledge, no machine learning approach has attempted to do so for individuals diagnosed with ASD.

Novel use of Random Forest (RF) in identifying subgroups within sample

Here we implement a novel approach for using RFs to identify more homogeneous ASD subgroups. RF is a random ensemble classification approach that iteratively grows decision trees to classify data. The RF model produces a proximity matrix that indicates the similarity between participants. This proximity matrix records how often a pair of subjects was grouped into the same terminal node across the decision trees within the RF, and is similar to a correlation matrix. Conceptually, we can recast the proximity matrix as a graph, and a community detection algorithm 70 can be used to identify putative subgroups. Several recent studies have used community detection to characterize subpopulations 71. However, one limitation of the approach as it is currently being used is that community detection does not tie the sub-grouping to the outcome measurement of interest. In other words, prior studies have not evaluated whether the similarity measured between participants, which drives the community detection, is associated with the clinical diagnosis. Thus, an approach that ties the defined sub-populations to the clinical diagnosis is better equipped to identify clinically relevant subgroups. We posit that the combination of random forest classification and community detection can assist with this goal.
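To make the proximity idea concrete, the following is a minimal sketch rather than the pipeline used in this study. It assumes we already have, for every subject, the index of the terminal node that subject reached in each tree (for example, scikit-learn's `RandomForestClassifier.apply` returns an array of this shape). The function name `proximity_matrix` and the toy leaf assignments are hypothetical.

```python
from itertools import combinations


def proximity_matrix(leaf_ids):
    """Fraction of trees in which each pair of subjects shares a terminal node.

    leaf_ids[i][t] is the terminal-node index reached by subject i in tree t.
    """
    n_subjects = len(leaf_ids)
    n_trees = len(leaf_ids[0])
    prox = [[0.0] * n_subjects for _ in range(n_subjects)]
    for i in range(n_subjects):
        prox[i][i] = 1.0  # a subject always shares a node with itself
    for i, j in combinations(range(n_subjects), 2):
        shared = sum(1 for a, b in zip(leaf_ids[i], leaf_ids[j]) if a == b)
        prox[i][j] = prox[j][i] = shared / n_trees
    return prox


# Hypothetical leaf assignments: 4 subjects (rows) by 5 trees (columns).
toy_leaves = [
    [1, 3, 2, 5, 4],
    [1, 3, 2, 5, 7],
    [6, 3, 8, 9, 7],
    [6, 2, 8, 9, 7],
]
prox = proximity_matrix(toy_leaves)
```

In this toy example, subjects 0 and 1 share a terminal node in four of five trees, as do subjects 2 and 3, so a graph whose edge weights are these proximities would contain two tightly connected pairs. A community detection algorithm would then operate on that weighted graph.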

In the current report we classify children with and without ASD using several information processing and control measures. To attempt to validate the group assignments identified from the cognitive measures, we then compared the strength of rsfcMRI connections, within or between neural systems, across the identified subgroups. Such a link would provide external evidence that these subgroups differ in functional brain organization as it pertains to an ASD diagnosis.

Methods

Participants/Demographics

Participants

The study sample consisted of 105 children between the ages of 9 and 13. Age demographics are shown in Table 1, PDS in Table S1, and all other demographics are shown in Table 2. The ASD group was recruited by community outreach and referrals from a nearby autism treatment center and included 47 children (11 females) with a mean age of 12.15 years (SD = 2.12) across all tests. All ASD children had their diagnosis confirmed (using DSM-IV criteria) by a diagnostic team that included two licensed psychologists and a child psychiatrist, and were assessed with a research reliable Autism Diagnostic Observation Schedule Second Edition (ADOS; mean ASD = 12.36, SD = 3.371), Autism Diagnostic Interview-Revised interview (ADI-R) and by the Social Responsiveness Scale Second Edition (SRS; TD mean = 17.8, SD = 10.45; ASD mean = 92.32, SD = 27.02) surveys filled out by parents of the children. The TD group included 58 children (31 females) with a mean age of 10.29 years (SD = 2.16) for all tests. A Fisher’s exact test indicated that gender was significantly different between the two groups (p = 0.025). It should be noted that the gender difference between our groups is consistent with the fact that males are at increased risk for autism in the general population. Parental pubertal developmental stage (PDS) report was used to assess pubertal stage. The PDS information was acquired once for all participants, but was untied to the tasks or MRI visits, which limits our ability to infer from it. For each MRI and task visit, we calculated the difference between the date of PDS acquisition and the date the task/MRI was acquired. For each task, any participant that had a PDS within 6 months of the task/MRI visit was included. As a result, the reported subject numbers for the PDS, as linked to the task and MRI, vary. However, we did have a single PDS measure acquired for all participants. Median PDS values were calculated from the observable measures on the PDS (e.g. hair growth or skin changes); measures that did not involve observation (e.g. whether the parent will discuss puberty with his/her child) were excluded. Unsurprisingly, differences in PDS were strikingly similar to the differences observed in age (see: Table S1).

Exclusion criteria for both groups included the presence of seizure disorder, cerebral palsy, pediatric stroke, history of chemotherapy, sensorimotor handicaps, closed head injury, thyroid disorder, schizophrenia, bipolar disorder, current major depressive episode, fetal alcohol syndrome, severe vision impairments, Rett’s syndrome, and an IQ below 70. Participants in the TD group were also excluded if diagnosed with attention-deficit hyperactivity disorder. Subjects taking prescribed stimulant medications completed medication washout prior to testing and scanning. Children performed tasks and completed MRI visits following a minimum of five half-life washouts, which ranged from 24 to 48 hours depending on the preparation. Participants on non-stimulant psychotropic medication (e.g. anxiolytics or anti-depressants) were excluded from this study.

Table 1.

Age table for ASD and TD samples per test. TD = Typically Developing; ASD = Autism Spectrum Disorder; M = mean; SD = Standard Deviation. Independent-sample t-tests revealed that the groups differed significantly in age on the Facial and Affect Processing Tasks, Spatial Span, Delay Discounting, CPT, Stop Task, and MRI scans. Note that the demographics for the Facial and Affect Processing Tasks apply to the Face Identity Recognition, Facial Affect Matching, and the Vocal Affect Recognition tasks.

| Age by test (sample sizes) | TD M(SD) | ASD M(SD) | T stat | p-value |
|---|---|---|---|---|
| Average for All Tests (TD = 58, ASD = 47) | 10.29 (1.48) | 12.15 (2.16) | 5.237 | 0.006 |
| Facial & Affect Processing (TD = 55, ASD = 46) | 11.26 (1.51) | 12.51 (2.14) | 3.426 | <.001 |
| Delay Discounting (TD = 58, ASD = 47) | 10.12 (1.74) | 12.41 (2.50) | 5.509 | <.001 |
| Stop Task (TD = 58, ASD = 46) | 9.91 (1.62) | 12.00 (2.29) | 5.46 | <.001 |
| Spatial Span Task (TD = 58, ASD = 47) | 10.08 (1.69) | 11.89 (2.27) | 4.69 | <.001 |
| CPT (TD = 58, ASD = 47) | 9.91 (1.62) | 12.07 (2.24) | 5.64 | <.001 |
| MRI Data (TD = 42, ASD = 26) | 10.73 (1.74) | 12.73 (2.03) | 5.08 | <.001 |

Table 2.

Demographics table for ASD and TD samples per test. WISC BD = Wechsler’s Intelligence Scale for Children IV: Block design raw score.

| IQ scores | TD | ASD |
|---|---|---|
| WISC BD (M) | 38 | 40.81 |
| WISC BD (SD) | 12.78 | 13.96 |
| T score | 1.074 | |
| p-value | 0.279 | |
| Gender | | |
| Males (N) | 27 | 38 |
| Males (%) | 46.56 | 80.85 |
| Females (N) | 31 | 9 |
| Females (%) | 53.45 | 19.15 |
| Pearson’s Chi-square | 12.951 | |
| p-value | <.001 | |
| Ethnicity | | |
| Non-Hispanic (N) | 49 | 39 |
| Non-Hispanic (%) | 84.48 | 82.98 |
| Hispanic (N) | 9 | 8 |
| Hispanic (%) | 15.52 | 17.02 |
| Pearson’s Chi-square | 0.07 | |
| p-value | 0.966 | |
| Race | | |
| White (N) | 51 | 39 |
| White (%) | 87.93 | 82.98 |
| Black/African American (N) | 1 | 2 |
| Black/African American (%) | 1.72 | 4.26 |
| Asian (N) | 4 | 1 |
| Asian (%) | 6.7 | 2.13 |
| Native Hawaiian/Pacific Islander (N) | 0 | 1 |
| Native Hawaiian/Pacific Islander (%) | 0 | 2.13 |
| Pearson’s Chi-square | 4.295 | |
| p-value | 0.368 | |
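As a worked check, the gender comparison in Table 2 can be recomputed from the reported counts. The sketch below is illustrative only; it assumes a Pearson chi-square without continuity correction, and the function name `chi_square_2x2` is hypothetical.

```python
def chi_square_2x2(table):
    """Pearson chi-square statistic (no continuity correction) for a count table."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    total = sum(row_totals)
    stat = 0.0
    for r, row in enumerate(table):
        for c, observed in enumerate(row):
            # Expected count under independence of group and gender.
            expected = row_totals[r] * col_totals[c] / total
            stat += (observed - expected) ** 2 / expected
    return stat


# Rows: TD, ASD; columns: males, females (counts from Table 2).
gender_counts = [[27, 31], [38, 9]]
chi2 = chi_square_2x2(gender_counts)
```

Under these assumptions the statistic comes out at approximately 12.95, in line with the Pearson chi-square value listed in the table.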

Data collection procedures

ASD participants came in for a screening visit to determine if they qualified for the study. During this initial visit, informed written consent or assent was obtained from all participants and their parents, consistent with the Oregon Health & Science University institutional review board. Additionally, children completed the ADOS and the Wechsler Intelligence Scale for Children IV (WISC-IV; 72) block design subtest while parents completed the SRS, ADI-R, and Developmental and Medical History surveys. Participants who qualified for the study came back for a second visit where they completed our Delay Discounting, Spatial Span, CPT, and Stop tasks. All participants also experienced a “mock scanner” to acclimate to the scanner environment and to train themselves to lie still during the procedure. Participants then came in for a third visit where they were scanned. At the fourth visit, participants completed our Face Identity Recognition, Facial Affect Matching, and Vocal Affect Recognition tasks.

Participants in the TD group were recruited from a partner study with a similar protocol. During the initial screening visit, participants underwent a diagnostic evaluation based on the Kiddie-Schedule for Affective Disorders and Schizophrenia (KSADS) interview, as well as parent and teacher standardized ratings, which were reviewed by their research diagnostic team. TD participants completed their study visits and tasks in a similar timeline and were recruited for our study during their MRI visit. TD participants were then screened and enrolled in an additional visit in which they completed the Face Identity Recognition, Facial Affect Matching, and Vocal Affect Recognition tasks.

Most of the participants consented to a longitudinal study where they returned on an annual basis to be reassessed on these same tasks and were re-scanned. For this study, we used data from each participant’s earliest time point for each completed task and MRI scan. Per task and scan, a t-test was conducted to test whether the cross-sectional ages were significantly different for that test. In all cases, ASD participants were significantly older than TD participants (all p < 0.05). We controlled for non-verbal intelligence, as measured by the WISC block design, by ensuring that block design scores were not significantly different between the groups (p = 0.285). We also calculated and tested the difference in visit age for the ASD (mean years = 1.51, s.d. (years) = 1.36) and typical (mean years = 1.14, s.d. (years) = 1.17) samples selected. We found no significant group effects on average visit difference ( t(103) = 1.49, p = 0.14).

Tasks

Measures derived from seven tasks were used as input features for the random forest. These seven tasks cover multiple levels of information processing, which may affect or be affected by the presence of an ASD diagnosis. For each measure, an independent-samples, two-tailed t-test was conducted to evaluate whether ASD and TD participants differed significantly. Table 3 lists each feature along with the t-statistic and p-value associated with the test. Because the random forest approach is robust against the presence of non-predictive features 73, our initial feature selection was inclusive. Despite this liberal inclusion, non-predictive features did not contribute meaningfully to the classification model and thus did not affect results materially (supplementary materials).
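For readers who want to reproduce a t-statistic of this kind from summary statistics, here is a minimal sketch. It assumes the equal-variance (pooled) form of the independent-samples t-test, which is an inference from the reported degrees of freedom rather than something stated in the text; the function name `pooled_t` is hypothetical. The example uses the Stop Signal RT means and SDs from Table 3 and the Stop Task group sizes from Table 1.

```python
import math


def pooled_t(mean_a, sd_a, n_a, mean_b, sd_b, n_b):
    """Independent-samples t-statistic and df, assuming equal variances."""
    df = n_a + n_b - 2
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    standard_error = math.sqrt(pooled_var * (1 / n_a + 1 / n_b))
    return (mean_a - mean_b) / standard_error, df


# Stop Signal RT (ms): TD 253 (72.4), n = 58; ASD 303 (129), n = 46.
t_stat, df = pooled_t(253, 72.4, 58, 303, 129, 46)
```

Because the inputs are rounded summary values, the result should only be expected to agree with the tabulated t-score to about two decimal places.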

Table 3.

Table of task measures used in RF analysis. Independent samples t-tests were conducted between all available ASD and TD data. RT = reaction time.

| Task | Variable | TD M(SD) | ASD M(SD) | T Score | p-value | df |
|---|---|---|---|---|---|---|
| Delay Discounting | 7-day indifference score | 8.16 (1.99) | 7.65 (2.9) | 1.06 | 0.29 | 103 |
| Delay Discounting | 90-day indifference score | 4.81 (3.03) | 4.92 (3.31) | −0.16 | 0.88 | 103 |
| Delay Discounting | Natural log of k | −4.48 (1.83) | −4.74 (2.2) | 0.64 | 0.53 | 94 |
| Delay Discounting | 30-day indifference score | 6.32 (2.91) | 5.89 (3.64) | 0.66 | 0.51 | 103 |
| Delay Discounting | K-value | 0.0521 (0.185) | 0.057 (0.14) | 0.14 | 0.89 | 94 |
| Delay Discounting | 180-day indifference score | 3.77 (3.1) | 4.11 (3.82) | 0.50 | 0.62 | 103 |
| Delay Discounting | Initial indifference score | 9.97 (0.417) | 9.89 (0.464) | 0.99 | 0.33 | 103 |
| Delay Discounting | AUC | 0.527 (0.26) | 0.543 (0.318) | −0.27 | 0.79 | 95 |
| Delay Discounting | Timepoint and Score R2 value | 0.682 (0.352) | 0.666 (0.39) | 1.58 | 0.12 | 102 |
| Stop Task | Probability of Stopping on Stop Trials | 51 (3.75) | 51.4 (5.28) | −0.53 | 0.60 | 102 |
| Stop Task | Stop Signal RT (ms) | 253 (72.4) | 303 (129) | −2.49 | 0.01 | 102 |
| Stop Task | Mean RT on Go Trials (ms) | 703 (130) | 816 (205) | −3.40 | 0.00 | 103 |
| Stop Task | Go Trials Accuracy | 95.2 (3.48) | 95 (4.63) | 0.35 | 0.73 | 103 |
| Stop Task | SD Go Trials RT (ms) | 199 (59.2) | 242 (93.5) | −2.84 | 0.01 | 103 |
| CPT | Bias Score Stim Trials | −0.301 (0.133) | −0.317 (0.157) | 0.52 | 0.60 | 100 |
| CPT | Dprime Stim Trials | 2.69 (0.82) | 2.67 (0.866) | 0.10 | 0.92 | 100 |
| CPT | Dprime Catch Trials | 1.21 (0.684) | 1.41 (0.828) | −1.36 | 0.18 | 100 |
| CPT | Natural Log of Bias Score Stim Trials | 1.6 (0.793) | 1.72 (1.05) | −0.67 | 0.50 | 100 |
| CPT | Natural Log of Bias Score Catch Trials | −0.12 (1.08) | 0.0662 (1.42) | −0.74 | 0.46 | 100 |
| CPT | Bias Score Catch Trials | 0.057 (0.73) | −0.0191 (0.525) | 0.58 | 0.56 | 100 |
| Spatial Span | SS Backwards Number Completed | 8.59 (2.31) | 8.19 (2.99) | 0.75 | 0.42 | 103 |
| Spatial Span | SS Backward RT (ms) | 1290 (438) | 1370 (701) | −0.70 | 0.48 | 103 |
| Spatial Span | SS Backward Response Consistency | 0.619 (0.105) | 0.559 (0.143) | 2.46 | 0.02 | 103 |
| Spatial Span | SS Backward Span Number Correct | 5.41 (1.86) | 4.87 (2.51) | 1.26 | 0.21 | 103 |
| Spatial Span | SS Forward Number Completed | 9.34 (2.26) | 8.79 (2.95) | 1.08 | 0.28 | 103 |
| Spatial Span | SS Forward RT (ms) | 1170 (348) | 1170 (443) | 0.10 | 0.92 | 103 |
| Spatial Span | SS Forward Response Consistency | 0.627 (0.0935) | 0.622 (0.123) | 0.24 | 0.81 | 103 |
| Spatial Span | SS Forward Span Number Correct | 5.93 (1.93) | 5.66 (2.43) | 0.63 | 0.53 | 103 |
| Facial Affect | Total Correct | 18.4 (2.27) | 17.1 (3.24) | 2.39 | 0.02 | 98 |
| Facial Affect | Median RT (s) | 5.06 (1.74) | 5.45 (1.92) | −1.07 | 0.24 | 98 |
| Facial Recognition | Total Correct | 22.6 (1.91) | 19.6 (4.06) | 4.77 | 0.00 | 98 |
| Facial Recognition | Median RT (s) | 6.05 (2.37) | 6.22 (3.05) | −0.31 | 0.76 | 98 |
| Vocal Affect | Total Correct | 16.7 (2.17) | 15.8 (3.02) | 1.63 | 0.11 | 98 |
| Vocal Affect | Median RT (s) | 1.96 (0.658) | 1.68 (0.72) | 2.04 | 0.04 | 98 |

Delay Discounting

The Delay Discounting task measures an individual’s impulsivity by asking them to evaluate a reward’s subjective value following a delay. The task design employed here has been described in detail previously 74,75. In short, this computerized task consisted of 91 questions and asked participants to choose between two hypothetical amounts of money: a smaller amount that would be available immediately, and a larger amount that would be available after a varying delay (between 0 and 180 days). No actual money was obtained. We used 9 variables from this task in our RF model: the indifference score at 5 time points (0, 7, 30, 90, and 180 days), the calculated area under the curve (AUC) based on these indifference scores, the proportion of variance explained between the scores and their timepoints, their k value (a measure of overall rate of discounting), and the natural log-transformation of these k values. Three validity criteria were applied 76: 1) an indifference point for a specific delay could not be greater than the preceding-delay indifference point by more than 20% ($2); 2) the final (180 day) indifference point was required to be less than the first (0 day) indifference point, indicating evidence of variation in subjective value of rewards across delays; and 3) the 0-day indifference point was required to be at least 9.25. Lower values for the 0-day indifference point indicate that the child chose multiple times to have a smaller reward now over a larger reward now, suggesting misunderstanding or poor task engagement. Data that did not meet validity criteria were treated as missing in analyses.
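The three validity criteria translate directly into a short check, sketched below under stated assumptions. The maximum reward of $10 is inferred from "20% ($2)" and is not given explicitly; the normalized trapezoidal AUC is one common way to summarize indifference points and is an assumption, since the text does not give the formula used. Function names and the example values are hypothetical.

```python
def is_valid_discounting(points, max_increase=2.0, min_initial=9.25):
    """Apply the three validity criteria to indifference points ordered by delay.

    points[0] is the 0-day point and points[-1] is the 180-day point.
    """
    # Criterion 1: no point may exceed the preceding-delay point by more than $2.
    for previous, current in zip(points, points[1:]):
        if current - previous > max_increase:
            return False
    # Criterion 2: the final point must be lower than the first point.
    if not points[-1] < points[0]:
        return False
    # Criterion 3: the 0-day point must be at least 9.25.
    return points[0] >= min_initial


def normalized_auc(delays, points, max_amount=10.0):
    """Trapezoidal area under the discounting curve, scaled to the range 0 to 1."""
    max_delay = delays[-1]
    area = 0.0
    for i in range(1, len(delays)):
        width = (delays[i] - delays[i - 1]) / max_delay
        height = (points[i] + points[i - 1]) / 2 / max_amount
        area += width * height
    return area


# Hypothetical participant: delays in days and indifference points in dollars.
example_delays = [0, 7, 30, 90, 180]
example_points = [10.0, 8.0, 6.0, 5.0, 4.0]
example_valid = is_valid_discounting(example_points)
example_auc = normalized_auc(example_delays, example_points)
```

A profile that fails any criterion would be treated as missing rather than passed to the model, matching the handling described above.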

Spatial Span

The Spatial Span task measures an individual’s visuospatial working memory capacity. Our participants received a spatial span subtest identical to that of the computerized Cambridge Neuropsychological Test Battery (CANTAB; 77). Briefly, this computerized task presents a series of 10 white boxes randomly placed on the screen, a subset of which would change color in a fixed order. Participants were instructed to watch for boxes that changed color and to keep track of their sequence. In the spatial forward task, participants were instructed to click on the boxes in the same sequential order in which they were presented. In the spatial backward task, participants were instructed to click on the boxes in the reverse order in which they were presented. The tasks were counterbalanced, and every subject had the opportunity to practice before administration. At the beginning of both tasks, the number of squares that changed color started at three and increased to nine, with two trials at each sequence length (a total of 24 trials for both tasks). The task discontinued when a child failed both trials at a sequence length. We used 8 measures from this task in our RF model: reaction time, accuracy, number completed, and span number correct for both the forward and backward tasks.

Stop Task

A tracking version of the Logan stop task was administered to all participants 78,79. The Stop Task is a dual go-stop task. The go portion of the task measures reaction time and variability of reaction time on a simple choice detection task; the stop portion measures the speed at which the individual can interrupt a prepotent response (how much warning is needed). For this computerized task, participants fixated on a small cross in the center of the computer screen, which appeared for 500ms on each trial. For the “go trials” (75% of total trials), either a rainbow “X” or an “O” would appear on the screen for 1000ms. Participants then had 2000ms to indicate whether they saw an “X” or an “O” using a key press, after which the next trial would automatically start. The “stop trials” (25% of total trials) were identical except that an auditory tone was played briefly after the presentation of the visual cue. The timing of the tone was varied stochastically to maintain approximately 50% success at stopping. Participants were instructed to not respond with the key press if they heard the tone. Each participant performed 20 practice trials to ensure they understood the task, before completing eight 32-trial blocks of the task. We used 5 measures from this task in our RF model: accuracy of the X/O choice on “go trials”, probability of successful stopping on the “stop trials”, stop signal reaction time (computed as the difference between go RT and timing of the stop delay warning signal), mean reaction time on “go trials”, and the standard deviation of reaction times during “go trials”.

Continuous Performance Task

The Continuous Performance task was an identical-pairs version of the common CPT, which measures vigilance. For this computerized task, participants viewed a series of four-digit numbers (250ms per cue) and were instructed to press a button whenever they saw a pair of identical numbers back-to-back. The task consisted of three types of trials: 1) trials where the paired numbers were made of distinct digits, called “stim trials”; 2) trials where paired numbers only differed by one digit, called “catch trials”; and 3) trials where the pair of numbers were identical, called “target trials”. The task included a total of 300 stimuli and required about 10 minutes to complete. There were 20% target trials, 20% catch trials, and 60% “stim” or non-target trials. We used 6 measures from this task in our RF model: d-prime (a measure of discriminability 80) per discrimination type (essentially, “hard” and “easy” discriminations), bias score for each discrimination type, and the natural log of bias per discrimination type.
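
The text does not give formulas for these measures. As an illustration only, the sketch below uses the standard equal-variance signal detection definitions, and it assumes that the bias score is the likelihood ratio β (so that its natural log is ln β); the function name is hypothetical. Under this reading, false alarms on catch trials would feed the "hard" discrimination and false alarms on stim trials the "easy" one.

```python
import math
from statistics import NormalDist


def sdt_measures(hit_rate, false_alarm_rate):
    """Return (d_prime, beta, ln_beta) under the equal-variance Gaussian model.

    Both rates must lie strictly between 0 and 1; any correction for rates
    of exactly 0 or 1 is left to the caller.
    """
    z = NormalDist().inv_cdf          # inverse of the standard normal CDF
    z_hit = z(hit_rate)
    z_fa = z(false_alarm_rate)
    d_prime = z_hit - z_fa            # discriminability
    ln_beta = (z_fa ** 2 - z_hit ** 2) / 2.0   # log likelihood-ratio bias
    return d_prime, math.exp(ln_beta), ln_beta
```

Calling the function once with the catch-trial false-alarm rate and once with the stim-trial false-alarm rate would yield the six values (d-prime, bias, and log bias for each discrimination type).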

Face Identity Recognition Task

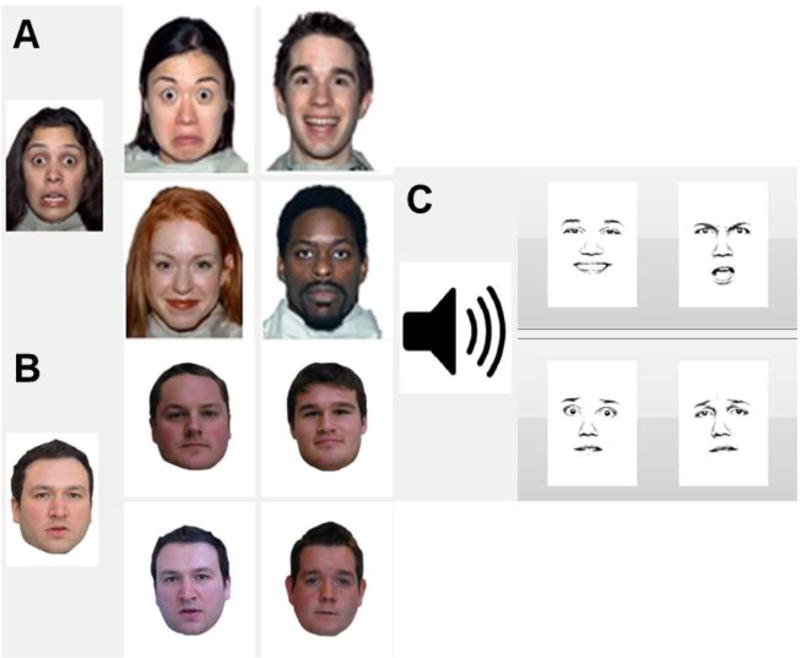

The Face Identity Recognition Task was designed by the Center for Spoken Language Understanding (CSLU) at OHSU to measure facial processing skills. In this computerized identification task, for each of the 25 trials (inter-trial interval = 2s), participants were presented with a “target face” on the left side of the screen, a colored photograph of a human face presented in a standardized pose with a neutral facial expression. At the same time, participants were shown an additional four facial photographs on the right side of the screen (all photographs were selected from the Glasgow Unfamiliar Faces Database 81, see Fig 1B), one of which matched the target face. Participants were asked to select the target face out of the lineup by touching the screen with a stylus pen. Reaction times were calculated from the moment the trial began to the participant’s response; however, participants were not told they were being timed or instructed to complete the task as quickly as possible. Each participant was allowed five practice trials to ensure they understood the task. We used 2 measures from this task in our RF model: the number of correct responses and the median reaction time for all trials.

Figure 1.

Depiction of stimuli used in face and affect processing experiments. (A) Example from visual facial affect recognition task. (B) Example from facial identity recognition task. (C) Example from auditory facial affect recognition task.

Facial Affect Matching Task

The Facial Affect Matching Task was designed by the CSLU at OHSU to measure affect discrimination skills using facial expressions. In this computerized task, for each of the 25 trials (inter-trial interval = 2s), participants were presented with a “target emotion”, a colored photograph of a human face expressing one of six possible emotions (happiness, sadness, surprise, disgust, fear or anger), on the left side of the screen. At the same time, participants were shown an additional four facial photographs on the right side of the screen (all photographs were selected from the NimStim set of facial expressions 82, see Fig 1A), one of which matched the target emotion. Participants were asked to select the target emotion out of the lineup by touching the screen with a stylus pen. Reaction times were calculated from the moment the trial began to the participant’s response; however, participants were not told they were being timed or instructed to complete the task as quickly as possible. Each participant was allowed five practice trials to ensure they understood the task. We used 2 measures from this task in our RF model: the number of correct responses and the median reaction time for all trials.

Vocal Affect Recognition

The Affect Matching Task was designed by the CSLU at OHSU to measure affect discrimination skills using auditory cues. In this computerized task, for each of the 24 trials (inter-trial interval = 2s), participants were presented with an audio recording of an actor reading neutral phrases (e.g., “we leave tomorrow”) but expressing one of four possible emotions (happiness, sadness, fear or anger) during the reading. Participants were asked to identify what type of emotion the actor was expressing by selecting one of four black and white drawings of facial expressions, each depicting one of the 4 basic emotions (see Fig 1C). Reaction times were calculated from the moment the trial began to the participant’s response; however, participants were not told they were being timed or instructed to complete the task as quickly as possible. Each participant was allowed four practice trials to ensure they understood the task. We used 2 measures from this task in our RF model: the number of correct responses and the median reaction time for all trials.

MRI scans

Data acquisition

Participants were scanned in a 3.0 T Siemens Magnetom Tim Trio scanner (Siemens Medical Solutions, Erlangen, Germany) with a 12-channel head coil at the Advanced Imaging Research Center at Oregon Health and Science University. One T1-weighted structural image (TR = 2300ms, TE = 3.58ms, orientation = sagittal, FOV = 256×256 matrix, voxel resolution = 1mm×1mm×1.1mm slice thickness) and one T2-weighted structural image (TR = 3200ms, TE = 30ms, flip angle = 90°, FOV = 240mm, slice thickness = 1mm, in-plane resolution = 1×1mm) were acquired for each participant. Functional imaging was performed using a blood oxygen level-dependent (BOLD) contrast-sensitive gradient echo-planar sequence (TR = 2500ms, TE = 30ms, flip angle = 90°, in-plane resolution = 3.8×3.8mm, slice thickness = 3.8mm, 36 slices). For fMRI data acquisition, there were three 5-minute rest scans during which participants were asked to relax, lie perfectly still, and fixate on a black cross in the center of a white display.

General preprocessing

All functional images went through identical Human Connectome Project preprocessing pipelines as described previously 83 in order to reduce artifacts. These pipelines included 1) PreFreeSurfer, which corrects for MR gradient and bias field distortions, performs T1w and T2w image alignment, and registers the structural volume to MNI space; 2) FreeSurfer 84, which segments volumes into predefined cortical and subcortical regions, reconstructs white and pial surfaces, and aligns images to a standard surface template (FreeSurfer’s fsaverage); 3) PostFreeSurfer, which converts data to NIFTI and GIFTI formats, downsamples from a 164k to a 32k vertex surface space, applies surface registration to a Conte69 template, and generates a final brain mask; 4) fMRIVolume, which removes spatial distortions, performs motion correction, aligns fMRI data to the subject’s structural data, normalizes data to a global mean, and masks the data using the final brain mask; and 5) fMRISurface, which maps the volume time series to a standard CIFTI grayordinate space.

Functional connectivity processing

All resting state functional connectivity MRI data received additional preprocessing steps that have been widely used in the imaging literature 85 to account for signals from non-neuronal processes. These steps included: 1) removal of a central spike caused by MR signal offset, 2) slice timing correction, 3) correction for head movement between and across runs, 4) intensity normalization to a whole brain mode value of 1000, 5) temporal band-pass filtering (.009 Hz < f < .08 Hz), and 6) regression of nuisance variables: 36 motion related parameters, and three averaged signal timecourses from the grayordinates, white matter, and cerebrospinal fluid (CSF). Additionally, because previous research has indicated that minor head movement can result in changes in MRI signal, we performed motion-targeted “scrubbing” on all rs-fcMRI data 85. This procedure included censoring any volumes with framewise displacement (FD) > .2mm, and the elimination of any run with less than a total of two and a half minutes of data.
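
The censoring rule can be illustrated with a minimal Python sketch. It is not the pipeline used in the study: it assumes FD has already been computed per volume, takes the 2500ms TR from the acquisition parameters to convert retained volumes into seconds, and uses hypothetical function and parameter names.

```python
def scrub_run(fd_mm, tr_seconds=2.5, fd_threshold_mm=0.2, min_seconds=150.0):
    """Censor high-motion volumes in one run and decide whether to keep the run.

    fd_mm: displacement value for each volume, in millimetres.
    Returns (keep_mask, run_is_usable), where keep_mask[i] is True for
    volumes that survive censoring.
    """
    # Censor any volume whose displacement exceeds the threshold.
    keep_mask = [fd <= fd_threshold_mm for fd in fd_mm]
    # Convert the number of retained volumes into seconds of data.
    retained_seconds = sum(keep_mask) * tr_seconds
    # A run is eliminated if it retains less than two and a half minutes.
    return keep_mask, retained_seconds >= min_seconds
```

With a 2.5s TR, a 5-minute run holds 120 volumes, so under these assumptions a run must retain at least 60 of them to be kept.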

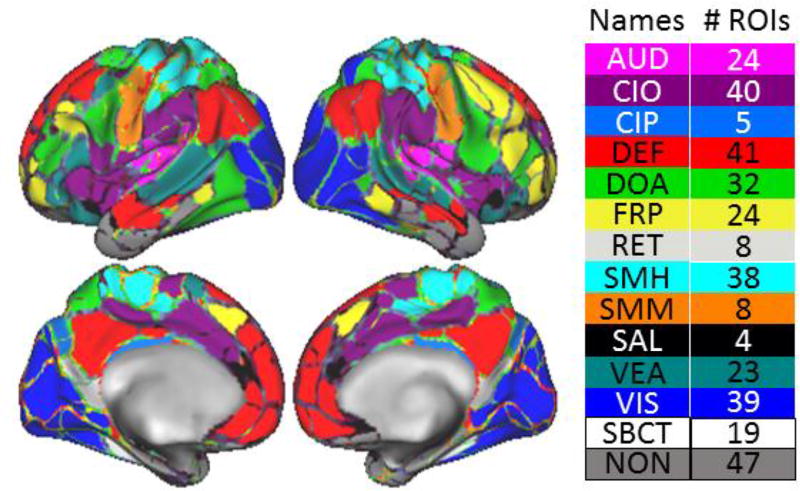

Correlation matrix generation

All timecourses and correlations were derived from a set of 333 Regions of Interest (ROIs) produced from a published data-driven parcellation scheme (Figure 4) 86, and a set of 19 subcortical areas parcellated by FreeSurfer during preprocessing. The resulting parcellation set comprised 352 ROIs. Correlations between ROIs were calculated using the Pearson product-moment correlation coefficient between each pair of ROIs over the extracted time series following preprocessing and motion censoring. We created a correlation matrix for each participant and then created group correlation matrices by averaging individual matrices across groups and subgroups.
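
As a rough illustration of this step, the Python sketch below builds an ROI-by-ROI Pearson correlation matrix and averages matrices across participants. It assumes censored volumes have already been removed from each time series, and all function names are hypothetical; it is not the code used in the study.

```python
import math


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length series."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def correlation_matrix(roi_timecourses):
    """Symmetric ROI-by-ROI matrix from a list of per-ROI time series."""
    n_roi = len(roi_timecourses)
    matrix = [[1.0] * n_roi for _ in range(n_roi)]
    for i in range(n_roi):
        for j in range(i + 1, n_roi):
            r = pearson_r(roi_timecourses[i], roi_timecourses[j])
            matrix[i][j] = matrix[j][i] = r
    return matrix


def average_matrices(matrices):
    """Element-wise mean of equally sized matrices (one per participant)."""
    n = len(matrices)
    size = len(matrices[0])
    return [
        [sum(m[i][j] for m in matrices) / n for j in range(size)]
        for i in range(size)
    ]
```

For 352 ROIs each participant's matrix has 352 × 351 / 2 = 61,776 unique off-diagonal connections, which is why only the upper triangle is computed and then mirrored.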

Figure 4.

Visualization of systems of the brain used in the chi-squared analysis. Aud = Auditory. CIO = Cingulo-opercular. CIP = Cingulo-parietal. Def = Default-mode. DoA = Dorsal attention. FrP = Frontal-parietal. ReT = Retrosplenial. SMh = Somato-motor hand. Smm = somato-motor mouth. VeA = ventral attention. Vis = Visual. SBcT = subcortical. Non = none.

Data Analysis

Exploratory Data Analysis

Prior to construction of the RF model, we measured the quantity of missing data. Machine-learning model performance can be greatly affected by missing data; therefore, we excluded any measures and participants that were missing more than 15 percent of data. The remaining missing data were imputed separately for the training and test datasets using the random forest algorithm below, where the missing data’s column is the outcome measure and the remaining variables are used as predictors. Prior to our exploratory data analysis, we had a total of 143 subjects (73 ASD, 70 TD) with partially completed data; after eliminating subjects with more than 15 percent missing data, our final subject list comprised 105 subjects (47 ASD, 58 TD). In the final dataset, less than 3 percent of all possible data was missing. An inspection of the missing data did not reveal any patterns that distinguished the missing ASD data from the remaining cases.
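
The 15 percent exclusion rule can be sketched as follows. This is an illustrative Python sketch with hypothetical names, not the study's code; in particular, applying the rule to measures before participants is an assumption, since the order is not stated.

```python
def exclude_sparse(data, max_missing=0.15):
    """Drop measures, then participants, missing more than max_missing of values.

    data: dict mapping participant id -> dict of measure name -> value,
          with None marking a missing value. Dropping measures before
          participants is an assumption of this sketch.
    """
    participants = list(data)
    measures = list(next(iter(data.values())))
    # Keep measures whose share of missing values is within the limit.
    kept_measures = [
        m for m in measures
        if sum(data[p][m] is None for p in participants) / len(participants)
        <= max_missing
    ]
    kept = {}
    for p in participants:
        # Share of the retained measures that this participant is missing.
        n_missing = sum(data[p][m] is None for m in kept_measures)
        if n_missing / len(kept_measures) <= max_missing:
            kept[p] = {m: data[p][m] for m in kept_measures}
    return kept
```

Values that remain `None` after this step are the ones that would be passed to the imputation described in the text.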

Random Forest classification

General algorithm

The RF algorithm constructs a series of decision trees. Per tree, a bootstrapped dataset is generated from a subset of the training data and a subset of features are randomly used to predict group classification or outcome measure in the case of imputation. The Gini impurity is used as the cost function to determine the optimal tree for classification and the mean square error is used as the cost function to determine the optimal tree for regression. Finally, a testing dataset comprising participants that were excluded from the training dataset is used to evaluate classification model performance. We implemented this algorithm via in-house custom-built MATLAB programs that used the MATLAB TreeBagger class. 1000 trees were used for the classification model and 20 trees were used for the surrogate imputation. Missing data was imputed separately for training and testing datasets. For classification, 1000 iterations of the RF algorithm were run to assess the performance of the RF models. Per iteration, 60 percent of participants formed the training dataset and the remaining 40 percent formed the testing dataset.
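
Two pieces of this procedure are simple enough to show directly: the Gini impurity used to score candidate splits, and the random 60/40 partition drawn on each iteration. The Python sketch below is illustrative only; it does not reproduce the MATLAB TreeBagger implementation, and the function names and the seeding scheme are assumptions.

```python
import random
from collections import Counter


def gini_impurity(labels):
    """Gini impurity of a set of class labels: 1 - sum of squared class shares."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((count / n) ** 2 for count in Counter(labels).values())


def split_impurity(left_labels, right_labels):
    """Size-weighted Gini impurity of a candidate split (lower is better)."""
    n = len(left_labels) + len(right_labels)
    return (
        len(left_labels) / n * gini_impurity(left_labels)
        + len(right_labels) / n * gini_impurity(right_labels)
    )


def train_test_split(participant_ids, train_fraction=0.6, seed=None):
    """Randomly assign participants to training and testing sets."""
    ids = list(participant_ids)
    random.Random(seed).shuffle(ids)
    n_train = round(train_fraction * len(ids))
    return ids[:n_train], ids[n_train:]
```

With 105 participants, each iteration under this sketch would train on 63 participants and test on the remaining 42; repeating the split with a different seed 1000 times gives the distribution of model performance described above.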

Optimization and validation

Distributions of overall, ASD, and control accuracy were constructed from the 1000 iterations and compared against a distribution of 1000 null-models. Per null-model, the group assignments are randomly permuted and the RF procedure above is performed on the permuted data. If the RF classification models are significantly better than the null models, then we interpret the RF models as valid for predicting a given outcome measure. An independent samples t-test was used to evaluate the significance of the RF model performance against the null model performance based on the models’ accuracy, specificity, and sensitivity rates.
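
A hedged Python sketch of the two ingredients follows: permuting group labels to build a null model, and an independent-samples t statistic computed from the summary statistics of the observed and null distributions. The pooled-variance form is an assumption (the text does not say whether variances were pooled), and the function names are hypothetical.

```python
import math
import random


def permute_labels(labels, seed=None):
    """Return a randomly shuffled copy of the group labels (for a null model)."""
    shuffled = list(labels)
    random.Random(seed).shuffle(shuffled)
    return shuffled


def independent_t_from_summary(mean1, sd1, n1, mean2, sd2, n2):
    """Pooled-variance independent-samples t statistic and degrees of freedom."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    standard_error = math.sqrt(pooled_var * (1.0 / n1 + 1.0 / n2))
    return (mean1 - mean2) / standard_error, df
```

For 1000 observed and 1000 null models the test has 1998 degrees of freedom. As a usage example, `independent_t_from_summary(0.727, 0.087, 1000, 0.509, 0.103, 1000)`, using the accuracy summaries reported in the Results, gives a t statistic of roughly 51.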

Community detection

Since each tree has different terminal branches, the RF algorithm may identify different paths for participants with the same diagnosis. Therefore, validated models can be further analyzed to identify putative subgroups that reflect the same diagnosis but perhaps different etiologies. Briefly, the RF algorithm produces a proximity matrix, where the rows and columns reflect the participants and each cell represents the proportion of times, across all trees and forests, a given pair of participants ended in the same terminal branch. For the classification model, the Infomap algorithm (Rosvall, 2007) was used to identify putative subgroups from the proximity matrix for participants with an ASD and from the proximity matrix for control participants. Because we have no basis for determining what constitutes an edge, an iterative procedure was used 87, where we identified a consensus set of community assignments across all possible thresholds.
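
The proximity matrix can be sketched in a few lines of Python. The sketch assumes that the terminal-node identifier reached by each participant in each tree is already available, and it shows a single thresholding step only; the Infomap algorithm and the consensus procedure across thresholds are not implemented, and all names are hypothetical.

```python
def proximity_matrix(leaf_ids_per_tree):
    """Proportion of trees in which each pair of participants shares a leaf.

    leaf_ids_per_tree: one list per tree, giving the terminal-node id that
    each participant (in a fixed order) reached in that tree.
    """
    n_trees = len(leaf_ids_per_tree)
    n = len(leaf_ids_per_tree[0])
    counts = [[0] * n for _ in range(n)]
    for leaf_ids in leaf_ids_per_tree:
        for i in range(n):
            for j in range(n):
                if leaf_ids[i] == leaf_ids[j]:
                    counts[i][j] += 1
    return [[c / n_trees for c in row] for row in counts]


def threshold_edges(proximity, threshold):
    """Binary adjacency matrix keeping pairs whose proximity meets a threshold."""
    n = len(proximity)
    return [
        [1 if i != j and proximity[i][j] >= threshold else 0 for j in range(n)]
        for i in range(n)
    ]
```

Running community detection on `threshold_edges(proximity, t)` for many values of `t` and keeping the assignments that agree across thresholds is the general idea behind the consensus step; how agreement is scored is left open here.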

Radar plot visualization

Task measures were then examined via radar plots to identify features that distinguish putative subgroups. Since plotting all measures may obscure differences between the groups, visualized task measures were chosen via statistical testing. For the ASD and the TD samples separately, one-way ANOVAs, with subgroup as the factor and each subgroup a level, were conducted for each task measure. Significant (p < 0.05) task measures were chosen for visualization. Individual task measures were converted to percentiles and visualized by task.

Functional connectivity cluster analysis

We used a chi-square approach to identify potential differences between subgroups within or between functional systems, as opposed to individual functional connections 88. Briefly, three sets of mass univariate tests were conducted for all Fisher-Z transformed functional connections: a set of one-way ANOVAs using ASD subgroup as the factor, a set of one-way ANOVAs using control subgroup as the factor, and a set of t-tests between ASD and control groups. Per set, a matrix of coefficients is extracted and binarized at an uncorrected p < 0.05 threshold. This binary matrix is then divided into modules based on the published community structure 89, which reflects groups of within-system (e.g. connections within the default mode system) and between-system (e.g. connections between the default mode system and the visual system) functional connections. The subcortical parcellation was defined as its own system for this analysis because of prior research suggesting differences between cortical and subcortical connectivity 55. The expected proportion of significant functional connections (i.e. the expected ratio) is calculated by dividing the total number of significant connections by the total number of all connections. Per module, the number of expected significant and non-significant functional connections is determined by multiplying the expected ratio by the total number of functional connections within the module. A chi-squared statistic is then calculated using the observed and expected ratio of significant connections. Permutation tests were conducted for all functional connections across the 352 ROIs to calculate the p value per module, and to evaluate whether the observed clustering is greater than what would be observed by random chance.
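
The per-module statistic can be illustrated with a minimal Python sketch. It assumes a two-cell chi-squared (significant and non-significant connections) per module, which is one reading of the description, and it omits the permutation step that produces the p values; the function name and input layout are hypothetical.

```python
def module_chi_squared(observed):
    """Chi-squared statistic per module from counts of significant connections.

    observed: dict mapping module name -> (n_significant, n_total) connections.
    Assumes at least one significant and one non-significant connection overall.
    """
    total_sig = sum(sig for sig, _ in observed.values())
    total_all = sum(total for _, total in observed.values())
    # Expected ratio: share of all connections that are significant.
    expected_ratio = total_sig / total_all
    result = {}
    for name, (sig, total) in observed.items():
        exp_sig = expected_ratio * total
        exp_nonsig = total - exp_sig
        nonsig = total - sig
        result[name] = (
            (sig - exp_sig) ** 2 / exp_sig
            + (nonsig - exp_nonsig) ** 2 / exp_nonsig
        )
    return result
```

For example, if 10 of 100 connections are significant overall and a 10-connection module contains 8 of them, that module expects 1 significant connection and yields a large statistic, flagging it as a cluster of group differences.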

Results

Random Forest Classification results

Random forest successfully classified individuals as having ASD or not

RF model accuracy is shown in Figure 2A. Applying the RF algorithm to behavioral data from 7 different tasks (34 variables) achieved an overall classification accuracy of 73% (M = .727, SD = .087), and an independent-samples t-test revealed that the RF model was significantly more accurate than the permuted models, which had an accuracy of 51% [M = .509, SD = .103; t (1998) = 51.325, p < .001]. The RF model had a sensitivity (the ability to correctly identify true positives) of 63% (M = .631, SD = .153) when classifying ASD subjects, and an independent-samples t-test revealed that the model’s sensitivity was significantly higher than the permutation sensitivity of 44% [M = .441, SD = .166; t (1998) = 26.643, p < .001]. The RF model also had a specificity (the ability to correctly identify true negatives) of 81% (M = .807, SD = .153) when classifying control participants, and an independent-samples t-test revealed that this was significantly higher than the permutation specificity of 56% [M = .564, SD = .153; t (1998) = 40.501, p < .001]. Taken together, these findings show that the RF model identified patterns in the cognitive data that distinguished individuals with an ASD diagnosis from individuals without. (Note: Because age and gender were potential confounds, a secondary RF analysis was performed on the behavioral data, controlling for both factors. Despite the large confounds, the RF analysis classified ASD and control participants at a rate greater than chance. This analysis is discussed in supplemental materials).

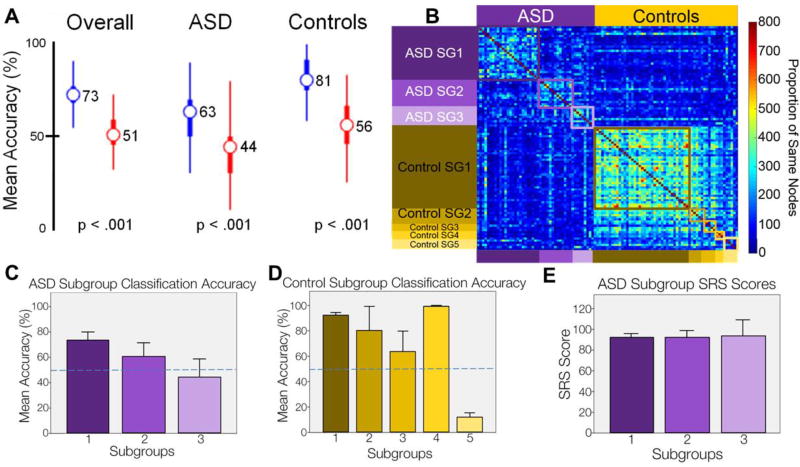

Figure 2.

(A) Plot of accuracy for observed (blue) vs. permuted (red) RF models. Wide bars refer to the 25th/75th percentiles and thinner bars refer to the 2.5th/97.5th percentiles. (B) Sorted proximity matrix, where each row and column represents a participant and each cell represents the number of times two participants ended in the same terminal node across all the RF models. (C) Plot of RF classification accuracy for ASD subgroups, error bars represent 1 standard error of the mean (SE). Dashed blue line represents 50% mean accuracy. (D) Plot of RF classification accuracy for control subgroups. Error bars represent 1 SE. Dashed blue line represents 50% mean accuracy. (E) Plot of SRS for ASD subgroups. The color code for each subgroup is maintained throughout all subfigures.

Proximity matrices from random forest model suggest subgroups in ASD and Control samples

We next applied community detection to the proximity matrices generated through the random forest modeling. The community detection algorithm identified three putative ASD subgroups and four putative control subgroups (Figure 2B). For children with an ASD diagnosis, the largest subgroup comprised 25 individuals, while the other two subgroups numbered 13 and 9 children respectively. For children without an ASD diagnosis, the largest subgroup comprised 39 individuals; the three other subgroups comprised five, five, and three children respectively. Six controls were not identified as part of any community and were placed into a fifth “unspecified” subgroup. To characterize these subgroups, we first examined whether accuracy of classification varied between subgroups, and then examined variation in the task measures between the subgroups.

ASD subgroups differed in terms of classification accuracy

We next compared the classification accuracy of individuals within each ASD subgroup to see if specific subgroups may have differentially affected RF model performance (Figure 2C). It also allowed us to validate that these subgroups were indeed systematically different from one another based on the cognitive data used in the RF model.

Because we constructed multiple RFs, each subject was included in the test dataset a large number of times; we can therefore calculate the rate of accurate classification per subject. A one-way between subjects ANOVA was conducted to compare the rate of classification accuracy between the 3 ASD subgroups identified by community detection. There was no significant difference between the groups [F (2, 44) =1.859, p=.168]. One-sample t-tests were conducted to see if subgroup classification accuracy significantly differed from chance (.5) using a Bonferroni adjusted alpha level of .0167 per test (.05/3). Subgroup 1 was classified significantly better than chance [M = .726 SD = .367; t (24) =3.0732, p=.005] but subgroup 2 [M = .607 SD = .383; t (12) =1.01, p=.334] and subgroup 3 [M = .443 SD = .431; t (8) =-.399, p=.701] were not.
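
The reported degrees of freedom (n − 1 for each subgroup) correspond to comparing each subgroup mean against the fixed chance value of .5. A minimal Python sketch of that comparison from summary statistics, together with the Bonferroni adjustment, is given below; the function names are hypothetical and the sketch is not the analysis code used in the study.

```python
import math


def one_sample_t(mean, sd, n, reference=0.5):
    """t statistic and degrees of freedom for a sample mean vs. a fixed value."""
    return (mean - reference) / (sd / math.sqrt(n)), n - 1


def bonferroni_alpha(alpha, n_tests):
    """Per-test alpha under a Bonferroni correction."""
    return alpha / n_tests
```

As a usage example, `one_sample_t(0.726, 0.367, 25)` for subgroup 1 gives a t statistic of about 3.08 on 24 degrees of freedom, in line with the reported value given rounding of the summary statistics, and `bonferroni_alpha(0.05, 3)` gives the .0167 threshold.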

These results suggest that there may be differences in our subgroups that are important for distinguishing ASD from TD. This difference is subtle, because effects of subgroup on accuracy are small and could largely be driven by the small sample size in subgroups 2 and 3. However, variation in classification accuracy may reflect differences in cognitive profiles. Subjects in subgroup 3 had a classification accuracy of only 44%, which may indicate that these individuals had cognitive scores more similar to our control group than our ASD group, while subgroup 1 had a classification accuracy of nearly 73% suggesting that their cognitive scores may be far different from both our control group, and ASD subgroup 3.

Control subgroups differed in terms of classification accuracy

We also compared the classification accuracy of individuals within each control subgroup to again see if specific subgroups were differentially affecting our RF model’s performance (Figure 2D).

A one-way between subjects ANOVA was conducted to compare classification accuracy across the 4 control subgroups identified by community detection plus the fifth subgroup of unassigned controls. There was a significant effect of subgroup on classification accuracy [F (4, 53) =24.018, p<.001]. Post-hoc comparisons using independent-samples t-tests indicated that the classification accuracy for subgroup 5 (M = .120 SD=.086) was significantly worse (using a Bonferroni adjusted alpha level of .006 per test) than subgroup 1 [M = .922 SD = .137; t(43)=−13.871, p<.001], subgroup 2 [M = .804 SD = .422; t(9)=−3.910, p=.004], and subgroup 4 [M = .995 SD = .0089; t(7)=−16.903, p<.001], but not subgroup 3 [M = .636 SD = .362; t(9)=−3.411, p=.008]. Additionally, one-sample t-tests were conducted to see if subgroup classification accuracy significantly differed from chance (.5) using the Bonferroni adjusted alpha level of .006 per test. Participants in subgroups 1 [t (38) =19.276, p<.001] and 4 [t (2) =96.00, p<.001] were classified as controls significantly more often than chance, while participants in subgroup 5 [t (5) =−10.773, p<.001] were classified as controls significantly less often than chance.

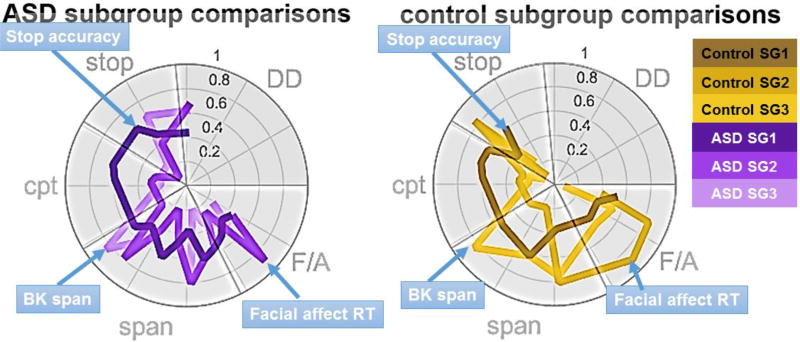

Community Detection identified these subgroups in ASD and Control samples who differed in behavioral tasks and classification accuracy

To test whether ASD subgroups may reflect quantitative variation in autism symptom severity, we examined whether identified ASD subgroups varied by Social Responsiveness Scale (SRS). A one-way ANOVA revealed no significant differences between the subgroups on SRS (Figure 2E; F (2, 44) = 0.006, p = 0.994), suggesting that ASD subgroups had similar autism severity but varied in other ways. Because normal variation in cognitive profiles may affect the manifestation of a developmental disorder 71, we then examined the variation in task performance for ASD (Figure 3; left) and control (Figure 3; right) subgroups. For control subgroups, the fourth subgroup was not examined due to the small sample size and the fifth subgroup was not examined because it represented “unspecified” subjects. A series of subgroup × task measure repeated-measures ANOVAs were performed to assess whether we should examine task performance between specific subgroups. The ASD subgroups (F(66,1056) = 7.65, p = 7.5 × 10^−54), control subgroups (F(66,1452) = 2.19, p = 2.4 × 10^−7), and accurately identified subgroups (F(33,1716) = 10.64, p = 3.3 × 10^−49) showed significant differences across task, indicating that identified subgroups varied by task measure. Post-hoc one-way ANOVAs identified 11 significantly different features for control subgroups (F (2, 46) > 3.29, p < 0.0462) and 16 significantly different features for ASD subgroups (F (2, 44) > 3.45, p < 0.0405). For both ASD and control subgroups, similar relative cognitive profiles were observed. The largest subgroup in both cohorts performed best on stop and continuous performance tasks. The second largest subgroup in both cohorts had the smallest spatial span, and the highest accuracy and longest reaction times for the facial and affect processing tasks. The third subgroup in both cohorts was characterized by highest spatial span, but lowest accuracy and shortest reaction time for the face processing tasks.
Participants who show a combination of low accuracy and short reaction time may be showing a speed-accuracy trade-off 90, where individual participants are making quicker responses at a cost of more accurate responses. For the most part, delay discounting did not differentiate the subgroups, which is unsurprising, because evidence is mixed as to whether delay discounting varies by ASD or ASD subgroups. A prior study suggests that ASD and control subgroups discount monetary rewards similarly 24; however, the relationship between discounting and time varies by ASD subgroup, which is consistent with findings from a separate study where some ASD participants may discount monetary rewards more steeply than controls 25. The similar cognitive profiles observed between control and ASD subgroups suggest that normal variation in cognitive profiles may impact how ASD manifests in individuals.

Figure 3.

Radar plots represent the 50th percentile for performance per group. All data are normalized within each radar plot from 0 to 100 percent. Per sample, one-way ANOVAs were conducted on raw data to reduce the number of points plotted based on differences between subgroups. The colors for each subgroup are the same as in Figure 2.

Functional Connectivity Results

Functional connectivity differences between ASDs and Controls

To test our hypothesis that our ASD and control groups differed in terms of resting-state functional connections between, and within, different functional systems, we used the chi-squared approach described earlier. The Gordon parcellation plus 19 subcortical regions were used to define the modules (Figure 4). We conducted the analysis on the 26 ASD subjects and 42 control subjects with satisfactory fMRI data (Figure 5A). The chi-squared analysis revealed significant clustering effects between the cingulo-opercular system and the default mode system (χ² = 48.86, p = .0002), the somato-motor hand system and the default mode system (χ² = 12.81, p = .0016), the visual system and default mode system (χ² = 11.74, p = .001), and between the subcortical system and the dorsal attention system (χ² = 35.05, p = .0024). It also revealed significant clustering effects within the cingulo-opercular system (χ² = 259.36, p = .0002), the default mode system (χ² = 11.66, p = .0002), and the visual system (χ² = 35.05, p = .0002). These findings are consistent with prior reports of rsfcMRI differences between TD and ASD samples (see: Discussion).

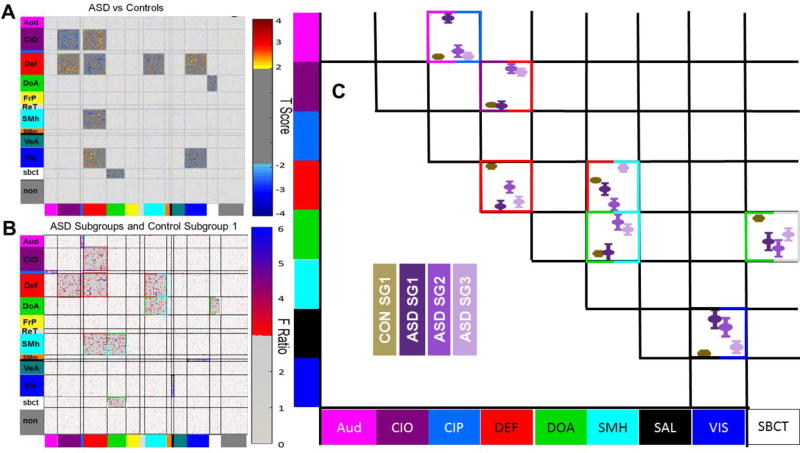

Figure 5.

(A) Plot of t-statistics for significant clustering observed between ASD and controls. (B) Plot of F ratios for significant clustering observed in the ANOVAs by subgroup. Colors surrounding significant clusters reflect the functional systems involved in the module (e.g. within or between system connectivity). (C) Visualization of estimated marginal means via subgroup by network interactions. Error bars represent 2 times the standard error of the mean. Colors surrounding the boxes reflect the functional systems involved and are consistent with the colors in B.

Subgroup differences within ASD and control samples

Because ASD subgroups differed in classification accuracy with respect to chance (Figure 2C), we also tested whether the ASD subgroups and the largest control subgroup differed in terms of resting-state functional connections between, and within, different functional systems, using the chi-squared analysis. Unfortunately, due to the MRI “scrubbing” procedure, we did not have sufficient data in the other control subgroups to include them in this analysis. We conducted a one-way ANOVA with four groups on the 57 subjects who had satisfactory fMRI data: 31 subjects from control subgroup 1, 12 subjects from ASD subgroup 1, 8 subjects from ASD subgroup 2, and 6 subjects from ASD subgroup 3. We again used a permutation test to determine each system’s expected ratio and compared this to the observed ratio using the chi-squared analysis (Figure 5B). We used the estimated marginal means from the ANOVA to visualize which subgroups drove significant clustering (Figure 5C). This test revealed significant increases in connectivity for ASD subgroup 1, relative to all other subgroups, between the cingulo-parietal system and the auditory system (χ² = 12.06, p = .0014). Significant increases were observed in ASD subgroups 2 and 3 between the cingulo-opercular system and the default system (χ² = 24.01, p = .0002), and between the dorsal attention system and the somato-motor hand system (χ² = 15.37, p = .0006). Significant increases were observed in ASD subgroups 1 and 2 in connectivity between the salience system and the visual system (χ² = 11.36, p = .0016). Significant increases in control connectivity were observed within the default system (χ² = 22.36, p = .0010) and between the dorsal attention system and the subcortical system (χ² = 11.85, p = .002). Connectivity between the default system and the somato-motor hand system (χ² = 28.85, p = .0002) showed mixed results, with ASD subgroups deviating from controls. The estimated marginal means for these tests are summarized in Table 3.

These differences overlapped substantially with the differences observed between ASD and controls (Figure 5A), suggesting that normal variation in mechanisms that are also affected by ASD may cause variation in how ASD may manifest 1,91. These findings should be interpreted cautiously, however, because these data are not predictive of diagnosis.

Discussion

Accuracy of the Random Forest model

Link our results to prior findings using machine learning ASD classification

Using a RF model, ASD and control participants were accurately classified 73 percent of the time using a comprehensive battery of cognitive tasks often identified as affected by an ASD diagnosis. Despite differences in age between samples, it is unlikely that the accurate classification was driven by age for two primary reasons. First, task measures important for classification did not show strong correlations with age (see: supplemental materials for discussion); when corrected for multiple comparisons, no relationships between gender and task performance are observed. Second, we performed a second RF model controlling for age and gender across all features, which continued to perform above chance (see: supplemental materials for discussion).

Higher performance has been reported for behavioral models built on visual face scanning (88.1%; 92) or goal-oriented reach (96.7%; 93) measures. However, high classification accuracy may be a function of validation strategy or sample size. Liu et al. used a leave-one-out cross-validation (LOOCV) strategy, which improves classification accuracy within a test dataset, but may reduce the generalizability of the model to other datasets. Crippa et al. also used LOOCV, and were also limited in sample size. Machine learning approaches using imaging data have shown that validation accuracy decreases as the sample size increases, suggesting that models trained on such small samples may be overfitting the data 65,68.
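To make the validation strategies discussed above concrete, the sketch below contrasts how LOOCV and k-fold cross-validation partition a sample. It is illustrative only: the one-dimensional "midpoint" classifier and the toy data are hypothetical stand-ins, not the models or measures from the cited studies, and all function names are our own.

```python
def loocv_splits(n):
    """Yield (train, test) index lists with one held-out sample per fold."""
    for i in range(n):
        yield [j for j in range(n) if j != i], [i]


def kfold_splits(n, k):
    """Yield (train, test) index lists for k interleaved folds."""
    for fold in range(k):
        test = list(range(fold, n, k))
        held_out = set(test)
        yield [j for j in range(n) if j not in held_out], test


def midpoint_classifier(train_x, train_y):
    """Toy 1-D classifier: threshold at the midpoint of the two class means.
    Assumes both classes are present in the training data."""
    class0 = [x for x, y in zip(train_x, train_y) if y == 0]
    class1 = [x for x, y in zip(train_x, train_y) if y == 1]
    mean0 = sum(class0) / len(class0)
    mean1 = sum(class1) / len(class1)
    cut = (mean0 + mean1) / 2
    sign = 1 if mean1 > mean0 else -1
    return lambda x: int(sign * (x - cut) > 0)


def cv_accuracy(x, y, splits):
    """Pool held-out predictions over all folds and return accuracy."""
    correct = total = 0
    for train, test in splits:
        predict = midpoint_classifier([x[i] for i in train],
                                      [y[i] for i in train])
        correct += sum(predict(x[i]) == y[i] for i in test)
        total += len(test)
    return correct / total


# Hypothetical, cleanly separable scores for 4 controls (0) and 4 cases (1).
x = [0.1, 0.4, 0.3, 0.2, 1.1, 1.3, 0.9, 1.2]
y = [0, 0, 0, 0, 1, 1, 1, 1]

acc_loocv = cv_accuracy(x, y, loocv_splits(len(x)))
acc_kfold = cv_accuracy(x, y, kfold_splits(len(x), 4))
```

On separable toy data both strategies agree. The concern raised above applies to small, noisy samples, where each LOOCV model is trained on nearly the full dataset and the resulting estimate may not carry over to independent data.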

Recent classification studies incorporating brain measures have shown results comparable to our initial classification and further suggest that heterogeneity of clinically relevant ASD subgroups may limit high classification accuracy. Duchesnay et al. found that PET imaging could be used to predict ASD with 88% accuracy in a sample of 26 participants 61. Murdaugh et al. used intra-DEF connectivity to predict ASD with 96% accuracy in a sample of 27 participants 62. Wang et al., using whole-brain functional connectivity, correctly predicted ASD with 83% accuracy in a sample of 58 participants 63. Jamal et al. used EEG activity during task switching to predict ASD with 95% accuracy in a sample of 24 participants 64. Using large data consortia like the Autism Brain Imaging Data Exchange (ABIDE), recent classification studies have developed and tested models using datasets with over 100 participants. Collectively, these large-sample studies demonstrate accuracies from 59% to 70% when testing untrained data 65–68,94. Our data highlight the importance of considering heterogeneity for such tests.

Extension of prior Machine Learning studies

Individual classification results and their relation to subgroups

Our RF approach extends prior studies by identifying putative subgroups from a validated ASD classification model. Specifically, we identified three ASD and four control putative subgroups, with a fifth group of isolated subjects. To further characterize these subgroups, we examined whether subgroups were stratified by classification accuracy. Because of our stringent inclusion criteria, we are highly confident that all ASD subjects indeed have an ASD. Therefore, ASD subgroups that contain misclassified individuals may represent clinically important subgroups that our initial RF model failed to capture. Control subgroups that contain misclassified individuals may represent subgroups that our initial RF model confused for ASD individuals. We found that the largest subgroup for ASD and the largest and smallest subgroups for controls were classified significantly more accurately than chance. Other ASD and control subgroups were not, and the distinction in classification accuracy may reflect the heterogeneity within the disorder. In an earlier study, ASD participants were sub-grouped on the basis of symptom severity, verbal IQ, and age, which caused classification rates to increase by as much as 10% 65. On the other hand, the fact that control subgroups also showed misclassification suggests that variation in such skills may represent the existence of broad cognitive subgroups that are independent of diagnosis, whose variation may impact the presentation of ASD symptoms 1. Prior work by Fair et al. has shown similar heterogeneity in both TD and ADHD children; as with Katuwal, taking this heterogeneity into account improved diagnostic accuracy 71.
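The step from a trained forest to putative subgroups can be illustrated with a small sketch. It assumes that the proximity between two subjects is the fraction of trees in which they fall in the same terminal leaf, and it takes the per-tree leaf assignments as given (with scikit-learn, for instance, `RandomForestClassifier.apply` returns such an array). The grouping step here is a deliberately simple stand-in, thresholded connected components, and not the community detection algorithm used in this study. The function names, the toy leaf table, and the threshold are hypothetical.

```python
def proximity_matrix(leaves):
    """leaves[i][t] is the terminal leaf of subject i in tree t.
    Proximity is the fraction of trees in which two subjects share a leaf."""
    n = len(leaves)
    n_trees = len(leaves[0])
    prox = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i, n):
            shared = sum(1 for a, b in zip(leaves[i], leaves[j]) if a == b)
            prox[i][j] = prox[j][i] = shared / n_trees
    return prox


def threshold_communities(prox, threshold):
    """Stand-in for community detection: connected components of the graph
    that links subjects whose proximity is at least `threshold`."""
    n = len(prox)
    labels = [-1] * n
    current = 0
    for start in range(n):
        if labels[start] != -1:
            continue
        labels[start] = current
        stack = [start]
        while stack:
            i = stack.pop()
            for j in range(n):
                if labels[j] == -1 and prox[i][j] >= threshold:
                    labels[j] = current
                    stack.append(j)
        current += 1
    return labels


# Hypothetical leaf assignments: 5 subjects across 4 trees.
leaves = [
    [0, 1, 2, 0],
    [0, 1, 2, 1],
    [3, 4, 5, 2],
    [3, 4, 5, 2],
    [6, 7, 8, 3],
]

prox = proximity_matrix(leaves)
labels = threshold_communities(prox, threshold=0.5)
```

Here the first two and the middle two subjects form separate groups, and the last subject shares no leaf with anyone, which mirrors the isolated subjects described above.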

ASD subgroups are not associated with variance in symptom severity

It is controversial whether clinical subgroups even exist in ASD. Recently, it has been suggested that ASD represents the tail end of a continuous distribution of social abilities, and that categorically distinct subtypes are either artificial constructs 95 or unknown 1. Categorically distinct subtypes may be difficult to discover due to the heterogeneity present within the typical population 71 as well as the heterogeneity in genetic causes of ASD 1. According to Constantino et al., such genetic subtypes may interact with the environment of the individual, leading to varying manifestations of ASD. Findings that the trajectories of adaptive functioning and autism symptom severity are distinct from one another 14,91 further suggest a dissociation between adaptive functioning and symptom burden.

Therefore, our subgroups may reflect variation either in autism symptom severity or in cognitive mechanisms that may impact ASD profiles, independent of severity. To test this hypothesis, we examined whether our ASD subgroups varied by autism symptom severity, as measured by the SRS 96 and the ADOS (Supplemental Analysis 3). We found that our subgroups did not differ on the SRS or the ADOS, suggesting that autism symptom severity was similar across the three subgroups, despite differences in classification accuracy. Because we are confident in the ASD diagnosis, we suspect that the variation between these three subgroups reflects typical variation in cognitive mechanisms, which may be independent of autism symptom severity but influence ASD presentation 14,91. Identification of such subgroups may be critical for the development of personalized treatment approaches in future studies and has the potential to improve ASD diagnosis and long-term outcomes 1. Future studies could better characterize putatively identified subgroups by examining how subgroups may differ on measures of adaptive functioning, or by examining whether the subgroups may be characterized by a set of measured ASD symptoms. Critically, future studies should also seek to assess the stability of identified subgroups using longitudinal data.

Describe identified subgroups

To further characterize the identified subgroups, we examined how the subgroups differed on the tasks incorporated into the model. With such an analysis, we can compare our results to prior research that has identified subgroups in independent datasets using similar tasks 71. Replication of similar subgroups would suggest that these subgroups may be meaningful. However, because the data from these tasks were used to construct the model, an independent set of measures is necessary to establish the validity of the identified subgroups. Therefore, we also examined differences in functional brain organization in a subset of participants, to see whether differences in functional brain organization between the subgroups reflect the effect of an ASD diagnosis on functional brain organization.

Differences in behavior and how that compares to previous literature

Due to fragmentation and limited sample size, we examined variation in task performance between the three ASD subgroups and between the largest three control subgroups only. Similar to prior research, subgroup differences were largely similar, independent of clinical diagnosis. In each sample, the largest subgroups performed best on CPT and stop tasks, and worst on face processing tasks. The second largest subgroups had the smallest spatial span and were slower but more accurate on the face processing tasks. The third largest subgroups had the largest spatial span and were faster, but less accurate, on the face processing tasks. The distinctions between these subgroups are consistent with prior research, which characterized heterogeneity in typical and ADHD samples and found multiple subgroups characterized by either a small spatial span, slow RT, and high information processing, or high spatial span, fast RT, and low information processing 71. Taken together, these findings suggest that clinical heterogeneity may emerge from normal variation in cognitive profiles, and are consistent with a recent study showing that clinical heterogeneity within ASD may be driven by normative development 97. Our study extends the prior findings to ASD and establishes a predictive model, which provides some clinical validity to the identified subgroups.

Our finding that the differences between subgroups were similar in both ASD and TD samples may appear inconsistent with prior studies that show an effect of ASD on the relationship between cognitive measures and task performance 12,27,37,38. However, differences in diagnostic criteria may explain some of the apparent contradiction here. Our study used a team of experts to confirm ASD diagnosis per individual, whereas these prior studies often used only a DSM diagnosis plus one or two instruments that assess autism symptom severity (e.g., the ADOS and/or ADI-R). The inconsistency in findings may be interpreted as further evidence of heterogeneity within ASD. Differences in cognitive profiles across individuals with ASD could explain the variation in attention, working memory, and face processing. In addition, prior work suggests that cognitive subtypes within ASD may be similar to cognitive subtypes found in typical populations 98.

Differences in fMRI data, validation of subgroups, and how that compares to previous literature

To provide further validation of the subgroups, we examined whether significant differences in the functional organization of the brain between subgroups overlapped with significant effects of ASD on functional brain organization. Because these data were never used in the RF model, variation that overlaps with differences between ASD and typical children may reflect clinically or etiologically important distinctions between subgroups. Because we did not observe differences in symptom severity between subgroups, the findings above are more likely to reflect typical variation in neural mechanisms underlying cognitive performance, as opposed to manifestations of ASD symptoms.

Differences between children with and without ASD are consistent with prior studies but also show some novel findings. Children with an ASD have shown altered visual system responses to stacks of oriented lines 99, and at rest they have exhibited altered DEF functional connectivity 52, but not altered cingulo-opercular connectivity 59. Between-system differences have been less studied in ASD. However, subcortical-cortical connectivity has been shown to be altered 55,57, as has dorsal attention network organization 51, which is consistent with altered connections between subcortical and dorsal attention networks. In contrast, differences between the DEF and visual, somatomotor, and cingulo-opercular systems have not been documented. The differences found between somatomotor and DEF systems may be consistent with findings of altered motor system function in ASD 100, while differences between DEF and cingulo-opercular systems may be consistent with altered rich-club organization 51.

We would like to emphasize that the ANOVA chi-squared analysis may be underpowered 88 and, though enticing, is not definitive. Nevertheless, the subgroup chi-squared ANOVAs hint that the identified subgroups may reflect differences in both mechanisms relevant to an ASD diagnosis and mechanisms that reflect variation across the subgroups. Four of the seven connectivity modules significantly affected by an ASD diagnosis showed variation in the ANOVA analysis: connectivity within the DEF; connectivity between the DEF and cingulo-opercular systems, between the DEF and somatomotor systems, and between the dorsal attention and subcortical systems. We also found significant variation in the ANOVA chi-squared analysis from the ASD and typical comparisons. As with behavioral measures in children with and without ADHD, it is possible that variation within the ASD subgroups identified here may actually be “nested” within the normal variation found in brain networks across typical children 1,71,91.

The correspondence between the subgroups and the connectivity profiles is intriguing, and hints that the first ASD subgroup may have altered visual processing mechanisms, the third ASD subgroup may have altered attention mechanisms, and the second ASD subgroup may have both. Speculatively, the first ASD subgroup shows the best ASD performance on both stop and CPT tasks, just as individuals in the first control subgroup perform better than the other control subgroups. Inter-system connectivity between the default mode and task control and attention systems (i.e., CIP and DOA) is control-like in the first ASD subgroup, as is connectivity between attention and motor systems. As discussed extensively in the introduction, such variation is consistent with the literature and may reflect typical heterogeneity related to the presentation of ASD. The third ASD subgroup shows the worst performance on facial and affect tasks of the three ASD subgroups; the first control subgroup performs worse on the same tasks compared to the other control subgroups (Figure 3; right). Such tasks would involve visual processing, and the chi-squared comparison reveals that the third ASD and first control subgroups have similar visual system connectivity. Variation in facial task performance may be implicated in some children with autism 39, but not others 37. It will be interesting to see whether future studies identify similar variation in system-level connectivity between ASD subgroups, and whether these groups are stable over time. In addition, future studies with larger sample sizes may be able to uncover additional or more refined sub-populations within the disorder.

Effects of demographics on RF model performance and subgroup affiliation