Abstract

Observed touch interactions provide useful information on how others communicate with the external world. Previous studies revealed shared neural circuits between the direct experience and the passive observation of simple touch, such as being stroked or slapped. Here, we investigate the complexity of the neural representations underlying the understanding of others' socio-affective touch interactions. Importantly, we use a recently developed touch database that contains a larger range of more complex social and non-social touch interactions. Participants judged affective aspects of each touch event and were scanned while watching the same videos. Using correlational multivariate pattern analysis methods, we obtained neural similarity matrices in 18 regions of interest from five different networks: somatosensory, pain, theory of mind, visual and motor regions. Among them, all networks except the motor cortex represent the social nature of the touch, whereas fine-detailed affective information is reflected in more targeted areas such as social brain regions and somatosensory cortex. Lastly, individual social touch preference at the behavioral level was correlated with the involvement of somatosensory areas in representing affective information, suggesting that individuals with higher social touch preference exhibit stronger vicarious emotional responses to others' social touch experiences. Together, these results highlight the overall complexity and the individual modulation of the distributed neural representations underlying the processing of observed socio-affective touch.

Keywords: MVPA, Social/non-social touch, Theory of mind, Somatosensory resonance, Individual social touch preferences

Abbreviations: OT, observed touch; AT, actual touch

Highlights

• Neural bases of observed touch are investigated with touch videos and MVPA.
• Social touch evokes stronger activation in the theory of mind (ToM) network.
• The ToM network represents affective meanings of observed social touch events.
• Affective representations of observed touch are present in somatosensory areas.
• Affective representations in S1 relate to individual's attitude towards touch.

Introduction

Affective touch enables us to communicate effectively with others. Even for an infant, communicating and understanding through touch is one of the primary non-verbal social skills, used during interactions with primary caregivers (Anisfeld et al., 1990; Weiss et al., 2000; Hertenstein, 2002) and essential for the later development of the social brain (Brauer et al., 2016). Furthermore, recent neuroimaging studies have demonstrated neural selectivity for affective touch in the infant's brain, implying the importance of touch in social communication at an early age (Jönsson et al., 2017; Tuulari et al., 2017).

This functional selectivity is present in various somatosensory regions, including the postcentral gyrus (PoCG), which first receives tactile information from the thalamus (Penfield and Boldrey, 1937; Mountcastle, 1957). The PoCG can be divided into Brodmann Areas (BA) 3a, 3b, 1 and 2 (Brodmann, 1909). Among them, BA 3 has been considered the primary somatosensory cortical area (S1) since it receives direct input from the thalamus (Kaas, 1983). The parietal operculum (PO) and the insular cortex also process somatosensory information at a later stage (Kitada et al., 2005).

Importantly, this remarkable social understanding extends to the touch that is delivered to another person's skin through mere observation (Hertenstein et al., 2006). Indeed, the ability to understand and react to another person's somatosensory experience is a core part of the theory of mind (ToM) and empathy mechanisms (Schaefer et al., 2012; Giummarra et al., 2015; Peled-Avron et al., 2016).

The goal of this study is to provide a comprehensive and detailed understanding of the neural mechanisms underlying the observation of affective touch in comparison to the observation of non-social touch. This topic has been investigated before, but with important limitations. Most importantly, previous studies have almost exclusively focused on one type of simple touch, such as brush or hand stroking of the skin (Blakemore et al., 2005; Ebisch et al., 2008, 2011; Morrison et al., 2011; Schaefer et al., 2012; Walker et al., 2016). While these studies probably underestimate the complexity of the processes involved in more complex touch interactions, they already showed some very interesting results. Most strikingly, it has been suggested that viewing others being touched induces vicarious touch experiences, recruiting brain regions originally responsive to first-hand somatosensation (i.e., the somatosensory cortex, the PO and the insular cortex). Among them, the activation of the somatosensory cortex is particularly surprising, because this part of the cortex receives very specific input from somatosensory receptors. A full description of these pathways is beyond the scope of the current study; reviews can be found elsewhere (e.g., Kaas, 1983; Abraira and Ginty, 2013).

To characterize the many different representations that are involved in the neural processing of observed touch, we designed a study with two important characteristics. First, we overcome the limitations of existing stimulus material by using a systematically defined and well-controlled touch database that contains a broad range of natural touch interaction scenarios (Lee Masson and Op de Beeck, 2018). Our stimuli include video clips displaying interpersonal social touch, varying on valence and arousal of interactions, and non-social touch in which each type of grasp/biological motion is intentionally matched to the one in social touch. Several low-level visual features (e.g., motion energy) of the video clips are systematically quantified in order to control the intrinsic visual characteristics of the stimuli when predicting the neural patterns from socio-affective dimensions. Second, going beyond the univariate analysis methods on functional magnetic resonance imaging (fMRI) data that show which brain regions are active, here we employ correlational multi-voxel pattern analysis (MVPA) methods (Haxby, 2001; Kriegeskorte et al., 2008; Op de Beeck et al., 2008). We examine where and how the neural activity patterns reflect detailed affective information of observed social touch interactions as well as other visual aspects of touch, and detail the functional/representational relationships across different brain regions.

Using this paradigm, we expect to have the right tools to uncover the various representations that are involved during the observation of social touch. Several predictions can be made. First, concerning the neural bases of social and non-social touch, a recent monkey study demonstrated considerable sociality-related differences in neural activity between observation of monkey-to-monkey social interaction and observation of object-to-object physical interaction (Sliwa and Freiwald, 2017). We therefore hypothesize that watching socio-affective touch events would generate distinctive neural activity in social brain regions compared to watching non-social touch.

Second, we expect representations of affective dimensions in the ToM network, such as the temporoparietal junction (TPJ). Furthermore, there might be a relationship between the processing of affective dimensions and cortical regions involved in the processing of high-level visual dimensions. For example, some brain regions such as the temporal lobe have a clear relationship to visual cortex, and in particular to nearby biological motion-sensitive regions, which might result in overlap between the coding of visual and of affective dimensions (Grossman et al., 2000; Grèzes et al., 2001; Vangeneugden et al., 2014).

Third, given that the aforementioned studies found shared cortical areas for receiving and observing simple touch, we hypothesize that first-hand touch selective cortical areas would engage in the processing of socio-affective information of observed social touch interactions.

In particular, the role of somatosensory cortex in touch observation is thoroughly examined in this study since it is currently under debate. Some studies found the involvement of somatosensory areas during observation of both pleasant and unpleasant touch, which might be associated with empathic ability (Keysers and Gazzola, 2009; Keysers et al., 2010; Bolognini et al., 2011, 2013; Ebisch et al., 2011; Schaefer et al., 2012; Marcoux et al., 2013). In contrast, others challenged the idea of automatic engagement of somatosensory cortices in empathy and affect sharing (Singer, 2004; Chan and Baker, 2015; Lamm and Majdandžić, 2015). The diverging results may be due to the simplicity and non-controlled aspects of the stimuli, to the use of simple neuroimaging analysis methods, or to inter-individual variability in this particular cortical region. Moreover, the definition of somatosensory cortex in humans is rather elusive and therefore varies across studies. Some studies considered the combination of Brodmann areas (BA) 3, 1, and 2 as the primary somatosensory cortex (S1) (Ebisch et al., 2011; Gazzola et al., 2012), while others claimed that BA3 alone should be considered S1 due to its primary input from the thalamus (Keysers and Gazzola, 2009). To come to a finer understanding of these diverging results, beyond employing more elaborate stimuli and advanced neuroimaging techniques, we additionally measure the individual preference for social touch and correlate this measure with the strength of the affective representation in different somatosensory areas.

S1, defined as BA3, is known for processing ‘private’ touch sensation delivered to one's skin (Keysers and Gazzola, 2009). Here, the term ‘private’ touch sensation refers to the bodily sensation evoked by direct contact with a surface of an object or a human skin. Based on the embodied simulation theory of vicarious touch experiences (Gallese and Ebisch, 2013), affective representations of observed social touch in this region would be associated with individual attitudes toward social touch. Thus, we hypothesize that individuals with high social touch preference may better represent affective aspects of observed social touch in S1.

In sum, with a newly developed touch database and an advanced neuroimaging approach, we aim to characterize the multidimensional representational space behind observed socio-affective touch while considering individual variability in neural patterns.

Materials and methods

Participants

Twenty-two right-handed healthy adults (male = 12; mean age = 26) were included in the fMRI experiment. The same subjects completed Experiment 2 from our previous study (Lee Masson and Op de Beeck, 2018) in a separate session. No history of neurological or psychological disorders was reported. All participants provided written informed consent before the experiment. The study was approved by the Medical Ethical Committee of KU Leuven (S53768 and S59577).

Stimuli

To investigate the neural representation of observed touch in a well-controlled fashion, we used a total of 75 greyscale social and non-social touch video clips that were created and validated in our previous study (Lee Masson and Op de Beeck, 2018).

The stimuli consisted of 39 videos displaying human-to-human touch interaction (social touch) and 36 videos showing human-to-object interaction (non-social touch). Six human agents (three pairs) engaged in both interpersonal social (three actor-pairs) and object-oriented touch. In the social touch condition, each actor pair consisted of one male and one female involved in 13 different touch interactions, including hugs, caresses, holding hands, taps on the shoulder, shaking arms, grabbing an arm, nudges and a slap. Social and non-social stimuli were matched according to the type of interaction. For example, the non-social touch scene in which a box was carried by holding it close to the chest was included as the matched touch interaction for the social touch stimulus in which one actor hugged another. Fig. 1 shows a few example snapshots of the stimuli. A detailed description of the complete set of stimuli and of the procedure for their creation and validation can be found in our previous study (Lee Masson and Op de Beeck, 2018; stimuli available at https://osf.io/8j74m/).

Fig. 1.

A few example snapshots of the stimuli. The 1st and the 2nd rows in the figure show representative still frames of social touch stimuli, showing different types of interpersonal touch events: positive (the first four stimuli in the 1st row), neutral (the last stimulus in the 1st row) and negative touch (five stimuli in the 2nd row). The corresponding non-social touch events are shown in the 3rd and the 4th rows, displaying different interactions with various objects. The complete set of video materials can be found at https://osf.io/8j74m/.

Importantly, social touch interactions varied in valence and arousal, while non-social touch toward an object was perceived as rather neutral in terms of valence and as not arousing (Lee Masson and Op de Beeck, 2018). Lastly, the actors shown in each video wore either black or grey long-sleeved shirts. This information was relevant for the fMRI task, in which participants were asked to press a button whenever they detected that the agent who initiated the touch wore a sweatshirt of a pre-instructed color. Black and grey appeared equally often as the sweatshirt color of the touch initiator across the 75 stimuli.

General procedure

The participants first performed the behavioral experiment, in which they rated the valence and arousal of the videos displaying various touch events. Second, the participants received both pleasant (brush stroking) and unpleasant touch (rubber band snapping) and rated their subjective feeling of pleasantness in a pre-scan touch session, during which each individual's threshold for unpleasant touch was also measured. Third, the same participants went through two fMRI scanning sessions during which they watched touch videos and received the touch stimulation. The order of the two experiments was counter-balanced, meaning that some participants watched the videos before receiving actual touch while others received the touch before watching the videos. Additionally, right after the fMRI sessions, an anatomical image of each participant was obtained. Lastly, all participants filled out a Social Touch Questionnaire that assesses individual attitudes toward social touch (see Table 1). For an illustration of the experimental procedure, see Inline Supplementary Fig S1.

Table 1.

Chronological list of the experiments.

| Order | The type of experiment | |
|---|---|---|
| 1 | Behavioral Experiment | |
| 2 | Pre-scan touch test | |
| 3 | fMRI Experiments | Observing the touch |
| | | Receiving the touch |
| 4 | Anatomical scan (T1) | |
| 5 | Social touch questionnaire | |

The table lists all the experiments conducted in this study in chronological order. For the fMRI experiments, the order of the two experiments was counter-balanced across participants.

Behavioral experiment

In our previous study (Experiment 2 in Lee Masson and Op de Beeck, 2018), the participants watched each video clip and evaluated its valence (“How pleasant is the touch?”) and arousal (“How arousing is the touch?”) on a 9-point Likert-like scale using the Self-Assessment Manikin (Bradley and Lang, 1994). The valence and arousal ratings of observed social touch events were used to create behavioral dissimilarity matrices in order to investigate their unique relationship with distributed neural activity in the brain. The details of these analyses are specified below in the section “Analysis: preparation of multiple predictors”.

Touch preference indices

After the behavioral task, participants filled out a Social Touch Questionnaire (Wilhelm et al., 2001), which consisted of 20 items that assessed attitudes toward social touch (e.g., ‘I feel uncomfortable when someone I do not know very well hugs me’ and ‘I feel embarrassed if I have to touch someone in order to get their attention’). Participants indicated the degree to which each statement applied to them by giving a number from 1 to 5 (1 = strongly disagree, 2 = disagree, 3 = undecided, 4 = agree, 5 = strongly agree). Higher raw total scores indicated greater aversion to giving, receiving and witnessing social touch, with raw total scores ranging from 20 (positive attitude towards social touch) to 100 (negative attitude towards social touch). For the later analysis, we inverted the scores such that a high score indicated a “high preference for social touch”. At a later stage, we correlated these scores with the strength of affect representation in somatosensory areas.
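The score inversion can be illustrated with a minimal Python sketch. The text does not state the exact inversion rule, so the mirroring around the midpoint of the possible range used here (and the function name `invert_total_score`) is an assumption for illustration only, not the procedure used in the study.

```python
N_ITEMS = 20                 # number of questionnaire items (from the text)
SCALE_MIN, SCALE_MAX = 1, 5  # response scale per item (from the text)


def invert_total_score(raw_total: int) -> int:
    """Mirror a raw total score within its possible range.

    ASSUMPTION: inversion is done as (lowest + highest - raw), so that
    a raw score of 20 becomes 100 and vice versa.
    """
    lowest = N_ITEMS * SCALE_MIN    # 20
    highest = N_ITEMS * SCALE_MAX   # 100
    if not lowest <= raw_total <= highest:
        raise ValueError(f"raw total must lie in [{lowest}, {highest}]")
    return lowest + highest - raw_total


# A participant with the most positive raw attitude (20) ends up
# with the highest touch-preference score.
print(invert_total_score(20))
```

Any monotonically decreasing transformation would give the same rank order of participants; the mirrored version simply keeps the scores in the original 20 to 100 range.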

MRI acquisition

MRI images were obtained on a 3T Philips scanner with a 32-channel coil at the Department of Radiology of the University Hospitals Leuven. For the functional data, whole-brain images were acquired using T2*-weighted echo planar imaging (EPI) sequences with the following acquisition parameters: repetition time (TR) = 2000 ms, echo time (TE) = 30 ms, flip angle (FA) = 90°, field of view (FOV) = 216 × 216 mm, and in-plane matrix = 80 × 80. Each run comprised 37 axial slices with a voxel size of 2.7 × 2.7 × 3 mm without a gap; 239 volumes were acquired for each run of the main experiment and 298 volumes for the localizer run. The T1-weighted anatomical images were acquired with a magnetization prepared rapid gradient echo (MP-RAGE) sequence, with 0.98 × 0.98 × 1.2 mm resolution (182 axial slices, FOV = 250 × 250 mm, TR = 9.6 ms, TE = 4.6 ms, FA = 8°, in-plane matrix = 256 × 256).

fMRI experiment1: observing touch [main runs]

The same group of participants from the behavioral task took part in the imaging experiment. The task during scanning was to watch each touch video while performing an orthogonal attention task. All videos were presented once per run (N = 6) in an optimally designed pseudo-random order, which prevented the same touch scenes (e.g., the three slapping scenes performed by three different pairs of actors) from being displayed consecutively. This was done to minimize confounding effects related to the order of stimulus presentation (e.g., rapid habituation). Each video lasted 3 s, followed by a 3 s inter-stimulus interval (ISI) that included the response period, during which a fixation cross was displayed. Each run lasted 7.8 min [3 blocks per run × (baseline displaying a fixation cross (6 s) + 25 videos per block × (video presentation (3 s) + ISI (3 s)))]. To ensure participants' attention throughout the task, they were instructed to press a button during the ISI whenever they detected that the actor who initiated the touch in the video wore a sweatshirt of a pre-instructed color (either black or grey). The grey sweatshirt was coupled with the left hand and the black one with the right hand. Thus, participants used only the left hand in three of the six runs and only the right hand in the other three. All videos were projected on a screen and viewed through a mirror mounted on the head coil. The experiment was controlled by Psychophysics Toolbox Version 3.0.12 (PTB-3) (Kleiner et al., 2007) in Matlab (R2015a, The Mathworks, Natick, MA).
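The run duration given in brackets above can be verified with a short Python calculation. The constant and function names are chosen here for illustration and are not taken from the experiment code.

```python
# Timing parameters of one main run, as stated in the text.
BLOCKS_PER_RUN = 3
BASELINE_S = 6          # fixation baseline at the start of each block
VIDEOS_PER_BLOCK = 25
VIDEO_S = 3             # video presentation
ISI_S = 3               # inter-stimulus interval (response period)


def run_duration_seconds(blocks: int = BLOCKS_PER_RUN) -> int:
    """Total duration of one run in seconds."""
    block_s = BASELINE_S + VIDEOS_PER_BLOCK * (VIDEO_S + ISI_S)
    return blocks * block_s


duration_s = run_duration_seconds()
print(f"{duration_s} s = {duration_s / 60:.1f} min")
```

Three blocks of 25 videos also account for all 75 stimuli being shown once per run.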

fMRI experiment2: receiving touch

To determine cortical areas activated by actual touch, we stimulated the ventral surface of the participant's forearm during scanning using a brush and elastic bands in order to elicit pleasant and unpleasant touch experiences.

Pre-scan procedure

Prior to the fMRI scan, we demarcated the stimulation areas on both the right and left forearms by measuring 10 cm from the wrist. Once they were demarcated, an experimenter stroked the participant's right forearm in a proximal-to-distal direction between the marked point and the wrist five times during 10 s. The approximate brush-stroke velocity (5 cm/s) was chosen based on a previous study that defined the optimal speed for evoking a pleasant sensation as 1–10 cm/s (Löken et al., 2009). All participants rated their subjective feeling of pleasantness using a 9-point Likert-like scale (1 = very unpleasant, 5 = neutral, 9 = very pleasant). Based on their ratings, we confirmed that participants indeed perceived the brush stroking as pleasant touch (mean rating = 7.8, standard deviation (std) = 0.83). In addition, we induced negative touch by snapping a rubber band at a perpendicular distance of 8 cm from the forearm five times, and asked participants to rate the pleasantness (see also Meffert et al. (2013), who used an everyday object (a ruler) to induce negative touch in the scanner). If the rating was higher or lower than 2, we respectively increased or decreased the distance of the snapping point and asked participants to evaluate the touch again. We repeated this procedure until we found the point at which the perceived pleasantness of the rubber band snapping matched a rating of 2. The chosen perpendicular distance from the forearm was used during scanning to induce a similar degree of unpleasant touch experience across participants. The average distance between the snapping point and the forearm was 8 cm (std = 2).

fMRI experiment: receiving touch [localizer run]

In the scanner, participants received the brush stroke (positive touch) and the rubber band snapping (negative touch) on the ventral surface of their right and left forearms consecutively in a block design. Participants were instructed to close their eyes and to focus on the touch sensation. The experiment consisted of 4 randomized blocks [positive touch-left arm, positive-right, negative-left, negative-right] in one run. Each block contained 8 trials in which the stimulation lasted 10 s, followed by a 6 s ISI. Both positive and negative touch were delivered five times in each trial. A trained experimenter, who stood next to the scanner, followed the block information announced by an audio cue (e.g., “positive-left”, “positive-right”, “negative-left”, “negative-right”) at the start of each block. The timing of each trial was ensured by short spoken audio cues, synchronized with the scanner, that told the experimenter when to start and stop the stimulation (“start” and “stop”). These cues were audible only to the experimenter and were delivered via headphones. The total duration of the localizer run was 9.9 min [4 blocks × (rest (10 s) + 8 trials × (touch stimulation (10 s) + ISI (6 s)))].

Analysis: preparation of multiple predictors

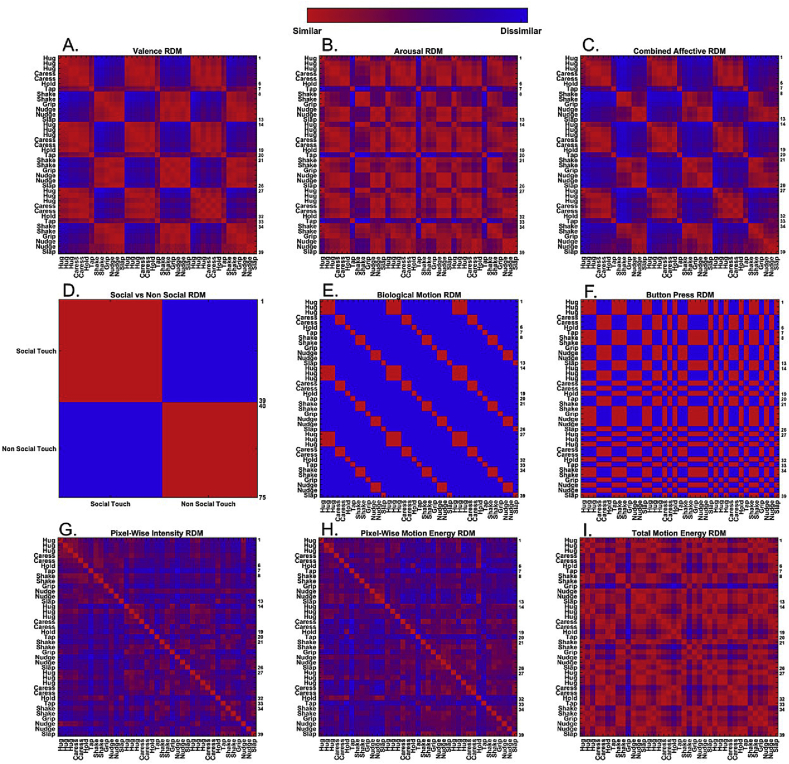

We created multiple matrices representing social/non-social, affective (valence, arousal and both), visual and motor dissimilarity information between all pairs of stimuli (Fig. 2). These representational dissimilarity matrices (RDMs) were used as predictors in a multiple regression model to determine how much each variable could independently explain the neural data after ruling out the effects of the other predictors. See also Inline Supplementary Fig S1, showing the origin of each RDM.
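The logic of using RDMs as simultaneous predictors can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the analysis code of the study: it assumes that only the lower triangle of each symmetric RDM enters the model, that all vectors are z-scored, and that ordinary least squares is used; the helper names and the toy data are hypothetical.

```python
import numpy as np


def lower_triangle(rdm: np.ndarray) -> np.ndarray:
    """Vectorize the off-diagonal lower triangle of a square RDM."""
    rows, cols = np.tril_indices(rdm.shape[0], k=-1)
    return rdm[rows, cols]


def zscore(v: np.ndarray) -> np.ndarray:
    return (v - v.mean()) / v.std()


def rdm_regression(neural_rdm: np.ndarray, predictor_rdms: dict) -> dict:
    """Regress a neural RDM on several predictor RDMs at once.

    Returns one standardized weight per predictor; each weight reflects
    the contribution of that predictor with the others held constant.
    """
    y = zscore(lower_triangle(neural_rdm))
    columns = [zscore(lower_triangle(p)) for p in predictor_rdms.values()]
    design = np.column_stack([np.ones(len(y))] + columns)
    coefs, *_ = np.linalg.lstsq(design, y, rcond=None)
    return dict(zip(predictor_rdms.keys(), coefs[1:]))


# Toy data: a "neural" RDM that follows valence but not arousal.
rng = np.random.default_rng(0)
n_stimuli = 39
valence = rng.uniform(1, 9, n_stimuli)
arousal = rng.uniform(1, 9, n_stimuli)
predictors = {
    "valence": np.abs(valence[:, None] - valence[None, :]),
    "arousal": np.abs(arousal[:, None] - arousal[None, :]),
}
noise = 0.1 * rng.standard_normal((n_stimuli, n_stimuli))
toy_neural_rdm = predictors["valence"] + noise
weights = rdm_regression(toy_neural_rdm, predictors)
print(weights)
```

In this toy case the weight for valence is close to 1 and the weight for arousal is close to 0, which is the pattern expected when only one predictor uniquely explains the neural dissimilarities.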

Fig. 2.

Multiple dissimilarity matrices representing different aspects of the touch stimuli. Each element indicates the dissimilarity, shown as a color (red = similar (identical) and blue = dissimilar (different)), for a pair of stimuli. In the case of the (A) valence, (B) arousal and (C) affective RDMs, averaged group matrices from the behavioral data were used. (D–F) are derived from the semantic or task-related content of the movies, and (G–I) from a quantitative analysis of visual features. (A–I) Labels on the left and top axes provide a short description of the stimuli based on the displayed touch interaction (e.g., “Hug” indicates any video clip showing a person hugging another person), and the numbers on the right axes indicate the number of stimuli. As reflected in the right axes, most RDMs are 39 × 39 matrices (the number of social touch stimuli = 39), except (D) the social/non-social RDM (75 × 75 matrix, the number of all touch stimuli = 75).

Social versus non-social matrix

We constructed a 75 × 75 RDM representing the social versus non-social content of the touch events (Fig. 2-D). Each element indicated if a pair of stimuli came from the same categories (value 0, e.g. hugging somebody (social, human-human interaction) vs. slapping somebody (social, human-human interaction)) or not (value 1, e.g. hugging somebody (social, human-human interaction) vs. holding a box (non-social/non-affective, human-object interaction)).
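A binary category RDM of this kind can be built in a few lines of Python. The sketch below is illustrative only; the ordering of the stimuli (39 social videos followed by 36 non-social videos) is an assumption made for the example.

```python
import numpy as np


def binary_rdm(labels) -> np.ndarray:
    """Return an RDM with 0 for same-label pairs and 1 for different-label pairs."""
    labels = np.asarray(labels)
    return (labels[:, None] != labels[None, :]).astype(int)


# ASSUMED stimulus order: all social videos first, then all non-social ones.
labels = ["social"] * 39 + ["non-social"] * 36
social_rdm = binary_rdm(labels)
print(social_rdm.shape)
```

The same helper applies to any categorical labelling of the stimuli, since it only compares labels pair by pair.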

Valence, arousal and affective matrices

The ratings from the behavioral measures were used to construct matrices representing the dissimilarity in perceived valence (Fig. 2-A), arousal (Fig. 2-B) and overall affect (valence + arousal; Fig. 2-C) for all pairs of social touch events. Each element in the valence and arousal matrices was determined by the absolute value of the rating differences for a pair of social touch stimuli. The overall affective matrix was generated based on Euclidean Distance of valence and arousal ratings for each possible pair combination of social touch stimuli. Subject-specific RDMs were averaged to yield a mean group RDM.
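The construction of the three affective RDMs can be sketched in Python as below. The function names and the two-subject, three-stimulus toy ratings are hypothetical; the computations (absolute rating difference, Euclidean distance in valence-arousal space, averaging of subject-specific RDMs) follow the description above.

```python
import numpy as np


def abs_difference_rdm(ratings) -> np.ndarray:
    """Pairwise absolute rating differences for one subject."""
    r = np.asarray(ratings, dtype=float)
    return np.abs(r[:, None] - r[None, :])


def euclidean_rdm(features) -> np.ndarray:
    """Pairwise Euclidean distances; features has shape (n_stimuli, n_dims)."""
    f = np.asarray(features, dtype=float)
    diff = f[:, None, :] - f[None, :, :]
    return np.sqrt((diff ** 2).sum(axis=-1))


def group_affective_rdms(valence, arousal) -> dict:
    """Average subject-specific RDMs; inputs have shape (n_subjects, n_stimuli)."""
    per_subject = {
        "valence": [abs_difference_rdm(v) for v in valence],
        "arousal": [abs_difference_rdm(a) for a in arousal],
        "affective": [euclidean_rdm(np.column_stack([v, a]))
                      for v, a in zip(valence, arousal)],
    }
    return {name: np.mean(rdms, axis=0) for name, rdms in per_subject.items()}


# Toy ratings on the 9-point scale (2 subjects x 3 stimuli).
toy_valence = np.array([[9, 5, 1], [7, 5, 3]])
toy_arousal = np.array([[3, 3, 6], [5, 5, 9]])
group = group_affective_rdms(toy_valence, toy_arousal)
print(group["valence"])
```

For the toy data, the group valence dissimilarity between the first and third stimulus is the mean of 8 and 4, i.e. 6.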

Biological motion dissimilarity matrix

We created a binary matrix representing the dissimilarity in the displayed biological motion (action) attributes of human social touch interaction (e.g., hug, caress, hold, tap, shake, grip, nudge or slap) across all pairs of video clips (Fig. 2-E). Each element indicated whether a pair of stimuli displayed the identical biological motion (value 0, e.g. hug vs. hug) or not (value 1, e.g. hug vs. caress).

Dissimilarity matrix of button press

The response button was pressed either with a right or with a left hand of the participant according to task instructions in each run during the main experiment. The sweatshirt color of the touch initiator in the movie was coupled with lateralized motor responses. The dissimilarity matrix represented whether two videos required the same or a different response (same hand = 0, different hand = 1) (Fig. 2-F).

Quantitative analysis of visual features: pixel-wise intensity dissimilarity matrix

First, we computed pixel-wise intensity values across the frames and averaged them so as to obtain a pixel-wise intensity matrix (640 × 360 pixels) per video, followed by computing the pixel-wise dissimilarity for each possible pair combination of video clips based on Euclidean Distance (Fig. 2-G) (Op de Beeck et al., 2008). The larger the value in the dissimilarity matrix, the greater the difference in time-averaged local luminance for a pair of stimuli.
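A minimal Python sketch of this computation is given below, assuming each video is available as a greyscale array of shape (frames, height, width). The function names and the tiny synthetic videos are illustrative and do not reproduce the original stimuli or code.

```python
import numpy as np


def mean_intensity_image(video) -> np.ndarray:
    """Average pixel intensities across frames; video is (n_frames, height, width)."""
    return np.asarray(video, dtype=float).mean(axis=0)


def pixelwise_intensity_rdm(videos) -> np.ndarray:
    """Euclidean distance between time-averaged images for every pair of videos."""
    images = [mean_intensity_image(v).ravel() for v in videos]
    n = len(images)
    rdm = np.zeros((n, n))
    for i in range(n):
        for j in range(i + 1, n):
            rdm[i, j] = rdm[j, i] = np.linalg.norm(images[i] - images[j])
    return rdm


# Toy "videos" with 5 frames of 4 x 6 pixels and constant intensity 0, 1 and 2.
toy_videos = [np.full((5, 4, 6), value) for value in (0.0, 1.0, 2.0)]
intensity_rdm = pixelwise_intensity_rdm(toy_videos)
print(intensity_rdm)
```

The pairwise loop keeps memory use low for full-resolution frames, at the cost of being slower than a fully vectorized version.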

Quantitative analysis of visual features: pixel-wise motion energy dissimilarity matrix

We computed pixel-wise motion energy per video to quantify optic flow changes in each pixel across frames. Larger values indicate that more motion occurred in a pixel. The resulting matrix contained information about the pixels in the videos in which most of the motion occurred. Pair-wise correlations of these matrices yield a 39 × 39 pixel-wise motion energy similarity matrix. We transformed this similarity matrix into a dissimilarity matrix (Fig. 2-H).

Quantitative analysis of visual features: total motion energy dissimilarity matrix

Next, we created a matrix reflecting total motion energy differences for all pairs of social touch video clips. For this, the sum of motion energy across all pixels was determined for each video (Lee Masson and Op de Beeck, 2018). Then, we computed the absolute difference in total motion energy between all pairs of video clips (Fig. 2-I). Thus, the larger the value in the matrix, the greater the difference in the total amount of displayed motion energy for a pair of social touch videos.
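The two motion-energy matrices can be sketched in Python as follows. Two points are assumptions made for illustration, because the text does not specify them: motion energy is approximated here by summed absolute frame-to-frame intensity differences rather than by a true optic flow estimate, and the similarity matrix is converted to dissimilarity as 1 minus the Pearson correlation. All names and the random toy videos are hypothetical.

```python
import numpy as np


def motion_energy_map(video) -> np.ndarray:
    """Per-pixel motion energy of one video (n_frames, height, width).

    ASSUMPTION: summed absolute frame differences stand in for the
    optic-flow-based measure used in the original study.
    """
    frames = np.asarray(video, dtype=float)
    return np.abs(np.diff(frames, axis=0)).sum(axis=0)


def pixelwise_motion_rdm(maps) -> np.ndarray:
    """Correlate motion maps pairwise and convert to dissimilarity.

    ASSUMPTION: dissimilarity = 1 - Pearson r.
    """
    flat = np.stack([m.ravel() for m in maps])
    return 1.0 - np.corrcoef(flat)


def total_motion_rdm(maps) -> np.ndarray:
    """Absolute difference in total motion energy for every pair of videos."""
    totals = np.array([m.sum() for m in maps])
    return np.abs(totals[:, None] - totals[None, :])


# Toy data: 4 random "videos" with 10 frames of 8 x 8 pixels.
rng = np.random.default_rng(0)
toy_videos = rng.random((4, 10, 8, 8))
maps = [motion_energy_map(v) for v in toy_videos]
pixel_rdm = pixelwise_motion_rdm(maps)
total_rdm = total_motion_rdm(maps)
print(pixel_rdm.round(2))
```

The pixel-wise matrix is sensitive to where motion occurs in the frame, whereas the total matrix only reflects how much motion a video contains overall.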

Analysis: imaging data

Neural data were analyzed using the Statistical Parametric Mapping software package (SPM12, Wellcome Department of Cognitive Neurology, London, UK), as well as custom Matlab code for region of interest (ROI) selection, ROI-based correlational MVPA, permutation tests, and multidimensional scaling analysis.

Pre-processing

Using SPM, slice-timing differences were first corrected, followed by a realignment procedure in which each functional image was aligned to the mean image of the first run. The localizer run was separately aligned to its own mean image. At this point, participants' head movements were evaluated. One participant had to be discarded because head movement exceeded one voxel size, reaching up to 10 mm of translation along the z-axis.

Next, both the anatomical scan and the localizer run were coregistered with the mean image of the first run from the main experiment using normalized mutual information as the cost function. Segmentation was performed to obtain forward deformation fields that were used for the normalization process. Here, we warped all images (functional and anatomical) to Montreal Neurological Institute (MNI) space with a re-sampling size of 2 × 2 × 2 mm. Lastly, we used different smoothing kernels for the MVPA and for the second-level univariate group analysis: normalized functional images were spatially smoothed with Gaussian kernels of 5 mm and 8 mm full width at half maximum (FWHM), respectively.
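For readers who think of Gaussian kernels in terms of their standard deviation, the standard relation between FWHM and sigma can be written as a short Python helper. The function name is chosen for illustration; the conversion itself is the textbook identity FWHM = 2·sqrt(2·ln 2)·sigma, and the division by 2 reflects the 2 mm re-sampled voxel size given above.

```python
import math


def fwhm_to_sigma(fwhm_mm: float) -> float:
    """Convert the FWHM of a Gaussian kernel to its standard deviation."""
    return fwhm_mm / (2.0 * math.sqrt(2.0 * math.log(2.0)))


VOXEL_SIZE_MM = 2.0  # isotropic re-sampling size after normalization

for fwhm in (5.0, 8.0):
    sigma_mm = fwhm_to_sigma(fwhm)
    print(f"FWHM {fwhm} mm -> sigma {sigma_mm:.2f} mm "
          f"({sigma_mm / VOXEL_SIZE_MM:.2f} voxels)")
```

The smaller kernel preserves more of the fine-grained spatial pattern that multi-voxel analyses rely on, while the larger kernel favors sensitivity in group-level univariate maps.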

Univariate analysis for imaging data [first-level analysis]

As a first-level (subject-level) analysis, a standard general linear model (GLM) was fitted to the functional data. All regressors of interest were modeled as delta functions (a stick rather than a box) matching the onset time of each regressor (duration = 0, an event-related design) for the fMRI experiment1 (observing touch). For the fMRI experiment2 (actual touch localizer), boxcar functions were matched to the onset time of each regressor with a duration of 10 s. They were all convolved with a canonical hemodynamic response function. Six head motion-related covariates were included as nuisance covariates in all GLMs. A high-pass filter (1/128 Hz) was used. We used a total of four GLMs for different purposes.
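The difference between the event-related (stick) and block (boxcar) regressors can be illustrated with the Python sketch below. It is not SPM code: the double-gamma function used here only resembles a canonical hemodynamic response function (response peaking around 5 to 6 s, undershoot around 16 s, ratio 1/6), and the function names, the 0.1 s time grid and the example onsets are assumptions made for illustration.

```python
import math
import numpy as np

TR = 2.0  # repetition time in seconds (from the acquisition parameters)


def double_gamma_hrf(t) -> np.ndarray:
    """Double-gamma response function (an approximation, see lead-in)."""
    t = np.asarray(t, dtype=float)
    peak = t ** 5 * np.exp(-t) / math.gamma(6)
    undershoot = t ** 15 * np.exp(-t) / math.gamma(16)
    return peak - undershoot / 6.0


def make_regressor(onsets_s, duration_s, n_volumes, dt=0.1) -> np.ndarray:
    """Convolve stick (duration 0) or boxcar events with the HRF, sampled every TR."""
    n_fine = int(round(n_volumes * TR / dt))
    neural = np.zeros(n_fine)
    for onset in onsets_s:
        start = int(round(onset / dt))
        stop = start + max(1, int(round(duration_s / dt)))  # duration 0 -> one sample
        neural[start:stop] = 1.0
    hrf = double_gamma_hrf(np.arange(0, 32, dt))
    bold = np.convolve(neural, hrf)[:n_fine] * dt
    return bold[:: int(round(TR / dt))]


# One video event at 6 s in a main run, one 10 s touch block at 10 s in the localizer.
event_regressor = make_regressor([6.0], 0.0, n_volumes=239)
block_regressor = make_regressor([10.0], 10.0, n_volumes=298)
print(event_regressor.argmax(), block_regressor.argmax())
```

A stick produces a brief response that peaks a few seconds after onset, whereas a 10 s boxcar produces a larger, sustained response.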

The first GLM (functional data with 8 mm FWHM) was applied to the main experiment and contained three predictors (social touch, non-social touch and baseline). The blood-oxygenation-level-dependent (BOLD) responses evoked by social touch observation and non-social touch observation were contrasted for the second-level univariate group analysis.

The second GLM included only one stimulus condition, defining the onset of all touch videos, and was used to evaluate the contrast of all touch videos versus baseline (functional data with 5 mm FWHM) to identify the functionally active voxels in the majority of ROIs.

The third GLM (functional data with 5 mm FWHM) only involved the data of the actual touch localizer run and contained three predictors (pleasant, unpleasant touch and rest conditions). The contrast of all touch versus rest was used to define the first-hand touch selective cortical regions as ROIs.

The fourth GLM (functional data with 5 mm FWHM) was applied to the main experiment and contained 75 predictors, one for each stimulus. The resulting 75 estimated beta-values were used in the correlational MVPA to construct a subject-specific neural dissimilarity matrix per ROI.
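How stimulus-wise beta estimates might be turned into a neural dissimilarity matrix for one ROI is sketched below in Python. The exact procedure is not specified at this point in the text, so the use of 1 minus the Pearson correlation between voxel patterns is an assumption, as are the function name and the random toy data.

```python
import numpy as np


def neural_rdm(betas: np.ndarray) -> np.ndarray:
    """Dissimilarity between stimulus patterns in one ROI.

    betas has shape (n_stimuli, n_voxels).
    ASSUMPTION: dissimilarity = 1 - Pearson correlation across voxels.
    """
    return 1.0 - np.corrcoef(betas)


# Toy ROI: 75 stimuli x 120 voxels of random beta values.
rng = np.random.default_rng(0)
toy_betas = rng.standard_normal((75, 120))
roi_rdm = neural_rdm(toy_betas)
print(roi_rdm.shape)
```

Each row of the input is the multi-voxel response pattern to one video, so the output has one row and one column per stimulus.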

Univariate analysis for imaging data [second-level analysis]

Standard random-effect group-level analyses were conducted to identify significantly activated voxels in the contrast of social minus non-social touch in the whole brain. Statistical maps were thresholded at PFWE < 0.05.

Regions of interest (ROIs)

To perform ROI-based correlational MVPA, we selected multiple ROIs that constitute four different networks: (1) somatosensory, (2) pain, (3) ToM, (4) vision. They are relevant for somatosensory touch perception, for social cognition involved in the understanding of others' bodily states and thought, and for visual processing. Lastly, (5) motor cortex was included since participants were required to give motor responses during the fMRI task.

All ROIs excluding M1 and whole brain networks were defined by merging anatomical and functional criteria.

For the functional criteria, the actual touch fMRI GLM was used to define the ROIs in the somatosensory network, whereas the observed touch fMRI GLM was used to define the ROIs in ToM, pain, and visual networks. In the latter case, using observed touch fMRI runs, the same fMRI runs used for correlational MVPA, is statistically acceptable (Pereira et al., 2009), because the univariate contrast (observed social and non-social touch > baseline) used for the voxel selection does not test for differences between stimulus conditions (e.g., social vs. non-social touch). This selection enables us to reduce the dimensionality of the multi-voxel space (De Martino et al., 2008) by restricting the voxels to those showing a significant stimulus-related BOLD response to observed social and non-social touch compared to baseline levels.

Anatomical templates were obtained from various sources, including PickAtlas software (Maldjian et al., 2003), SPM Anatomy toolbox (Eickhoff et al., 2005) and connectivity-based parcellation atlas (Mars et al., 2012). Using the templates as an anatomical mask, we extracted the voxels in the mask that were activated in the relevant functional contrast in an individual participant with the statistical threshold Puncorrected <0.001. When the number of selected voxels was less than 20, a more liberal threshold of Puncorrected < 0.01 was used. The ROI was not defined in a subject if it could not reach the criteria mentioned above. The left and right hemispheres are combined for each ROI based on previous studies illustrating the bilateral activation in these areas (e.g. (Grèzes et al., 2001; Saxe and Kanwisher, 2003)).
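The voxel selection rule described above can be sketched as follows. This is a minimal illustration in plain Python, not the code used in the study: the function name `select_roi_voxels` and its arguments are hypothetical, and the sketch assumes that an ROI stays undefined when even the liberal threshold yields fewer than 20 voxels.

```python
from typing import List, Optional, Sequence


def select_roi_voxels(
    p_values: Sequence[float],
    in_mask: Sequence[bool],
    strict: float = 0.001,
    liberal: float = 0.01,
    min_voxels: int = 20,
) -> Optional[List[int]]:
    """Return indices of selected voxels, or None if the ROI is undefined.

    p_values: uncorrected p-value of the functional contrast per voxel.
    in_mask:  True where the voxel lies inside the anatomical template.
    """
    # Try the strict threshold first, then fall back to the liberal one.
    for threshold in (strict, liberal):
        selected = [
            i
            for i, (p, inside) in enumerate(zip(p_values, in_mask))
            if inside and p < threshold
        ]
        if len(selected) >= min_voxels:
            return selected
    # Assumption: too few voxels at both thresholds means no ROI for this subject.
    return None
```

A participant for whom the function returns `None` would simply be left out of the analyses for that ROI.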

Somatosensory network from the actual touch GLM

Somatosensory areas process both positive and negative touch sensation (Rolls et al., 2003). When it comes to touch observation, previous studies provided rather divergent evidence of involvement of somatosensory areas in observed/vicarious affective touch (e.g., Ebisch et al., 2008, 2011; Chan and Baker, 2015). Thus, we included BA3, BA1, BA2, and PO as separate ROIs to understand the fine-detailed representation of vicarious affective touch in each ROI to resolve the discrepancies observed in other studies.

Somatosensory touch selective ROIs were defined in each participant by the contrast of touch stimulation versus rest with the thresholds mentioned above. Each of them was masked by an anatomical template for BA3, BA1, BA2 and subpart OP1 (Eickhoff et al., 2005) of PO (see (Eickhoff et al., 2006) for the functional descriptions of OP1 – OP4). With the criteria mentioned above, one participant did not have enough activated voxels in BA1 and was therefore excluded from further analysis of BA1. For an illustration of the selected BA3, BA1 and BA2, see Inline Supplementary Fig S2.

Additionally, to provide further insights into how somatosensory areas are functionally organized, we defined these touch-related ROIs (i.e., BA3, 1 and 2) using other methods (see the Supplementary Result).

Pain network from the observed touch GLM

Our set of stimuli includes unpleasant touch events during the observation of which vicarious bodily pain may be experienced. Thus, the insula and MCC were selected as ROIs that are known to be activated during observation of others in pain (Engen and Singer, 2013; Lamm and Majdandžić, 2015). In case of the insular cortex, although it is tentatively included in pain network, it also shows stronger neural responses to pleasant touch (Morrison et al., 2011; Gordon et al., 2013).

Both ROIs were defined by the contrast of observed touch (that consists of social and non-social touch) versus baseline from the touch observation fMRI experiment, masked by the respective anatomical templates. Each ROI was defined in 20 of the 21 participants.

ToM network from the observed touch GLM

Interpreting the affective meaning of others' touch communications requires social cognition. Thus, brain areas that are considered to be part of the ToM network are included as ROIs. Specifically, precuneus, middle temporal gyrus (MTG), superior temporal gyrus (STG), TPJ, posterior cingulate cortex (PCC), and medial prefrontal cortex (MPFC) are included (Jacoby et al., 2016). We defined them by using the contrast of observed touch versus baseline and masked them with the respective anatomical templates. An anatomical template for the bilateral TPJ was created by combining posterior and anterior parts of TPJ (TPJp + TPJa) (Mars et al., 2012). Two participants did not reach the criteria for PCC.

Visual network from the observed touch GLM

Since the stimuli contained abundant visual information, we included standard visual areas along the ventral stream from the occipital to the temporal lobe. ROIs were defined by the same contrast activated during touch observation, and masked by the anatomical templates for BA17, BA18, BA19, BA37, and V5 (Malikovic et al., 2007).

Motor area

BA4 as the primary motor cortex (M1) was defined solely by anatomical criteria (BA4 with PickAtlas software (Maldjian et al., 2003)). No functional criteria were used since neither the observed touch nor the actual touch GLM seemed to be suitable for extracting the functionally relevant parts of M1. M1 often showed no clear activation in individual participants. Receiving the touch (actual touch GLM) or passively observing the touch events (observed touch GLM) do not strongly activate the motor cortex. There was an active orthogonal task during the observed touch experiment, but this task involved only finger movement and thus is not suited to localize the motor representations of the parts of the body that are moving most in the videos (main focus on the arms).

Whole brain network

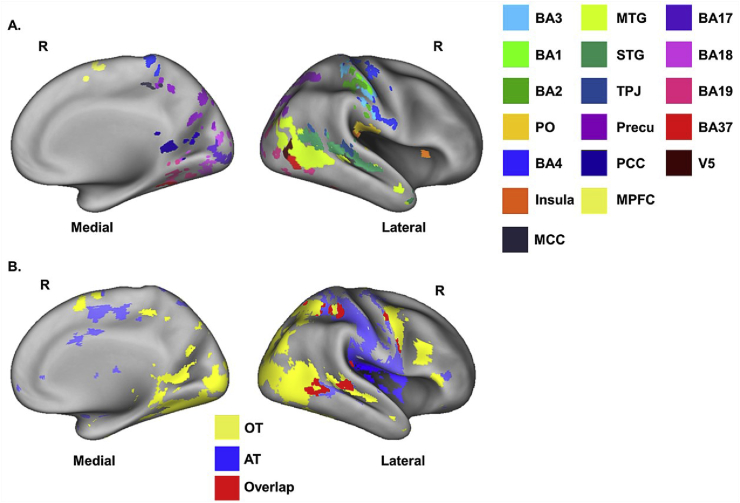

We also delineated two whole brain networks activated either by observed touch (OT) or by actual touch (AT) without anatomically masking them. The two networks are shown in Fig. 3-B. These two whole brain networks were included in order to examine how AT and OT networks represent observed touch information.

Fig. 3.

Illustration of ROIs and whole brain networks. A. The figure illustrates the functionally-defined ROIs (except BA4) after masking with each anatomical template (one representative participant), which were mapped on inflated cortices using the CARET software (Van Essen et al., 2001) with PALS atlas (Van Essen, 2005). Note that volume-to-surface mapping can introduce some artefacts (e.g., over-representation of BA4 in the ventral postcentral gyrus). B. whole brain network ROIs, showing significant activations during observation of the touch (yellow), receiving the actual touch (blue), and the overlapping areas (red). R = right hemisphere.

Trimming ROIs

Importantly, overlapping voxels among ROIs were rigorously examined and removed to ensure that all ROIs were independent of each other (Bracci et al., 2017). The ROI trimming process was based on the size and the location of the ROI, mainly taking voxels away from the largest ROI in case of overlap. This was particularly important for area V5, given that all voxels in V5 were shared with at least one other ROI, so that V5 would contain no voxels if overlapping voxels were removed from all ROIs. Thus, overlapping voxels between V5 and other ROIs (MTG, BA19, and BA37) were removed from MTG, BA19, and BA37 while keeping the original V5 as it was. Table 2 illustrates how the choice was made when it came to overlapping voxels. Note that we did not trim the overlapping voxels in the OT and AT networks since the aim of including those two ROIs was to investigate the neural representation of social touch in the whole brain networks involved in observed and actual touch, respectively. Fig. 3 represents examples of final ROIs in the right hemisphere after trimming. Mean ROI sizes after trimming were as follows: BA3 = 207; BA1 = 76; BA2 = 258; PO = 410; Insula = 92; MCC = 127; Precuneus = 1567; MTG = 1364; STG = 502; TPJ = 315; PCC = 59; MPFC = 362; BA17 = 300; BA18 = 948; BA19 = 895; BA37 = 398; V5 = 144; BA4 = 1258; OT = 18,351 and AT = 11,061 voxels.

Table 2.

The choice made for the overlapping voxels across ROIs.

| ROIS | BA2 | Insula | MCC | Precu | MTG | STG | TPJ | BA18 | BA19 | BA37 | MPFC |
|---|---|---|---|---|---|---|---|---|---|---|---|
| OVERLAP | TPJ | PO, TPJ | MPFC | BA18, BA19 | BA19, BA37, V5, TPJ | TPJ, PO | Insula, PO | Precu | Precu, V5, MTG | V5, MTG | MCC |

The table illustrates the overlapping ROIs (the second row) that we chose to remove from the original ROI (the first row). For instance, original BA2 contained some voxels shared with TPJ. Those voxels were removed from the original BA2, yielding a final trimmed BA2. ROIs that were not mentioned in this table did not have any voxels removed (e.g., BA17). Precu signifies precuneus.

Neural representational dissimilarity matrices (RDMs)

Two types of individual neural matrices, namely the “general touch matrix” and the “social touch matrix”, were determined by Pearson correlation for each possible pair combination of distributed neural patterns activated during observation of each touch interaction (e.g., observation of a person hugging another person or a person holding a box). To generate them, beta-values were first normalized for each voxel in each ROI by subtracting the average beta-value across all conditions (N = 75 for the general touch matrix and 39 for the social touch matrix). This process was applied to all 6 runs separately. Then, we averaged the beta values of the six runs and computed Pearson correlations for the resulting multi-voxel patterns for each possible pair combination of conditions, yielding two symmetric matrices in each ROI (Kriegeskorte et al., 2008). The general touch matrix contained pair-wise correlations of 75 neural patterns (39 social and 36 non-social conditions) while the social touch matrix contained pair-wise correlations of 39 neural patterns. All neural similarity matrices were transformed into RDMs (1-correlation), which resulted in 21 individual general touch matrices with 75 x 75 elements and another 21 social touch matrices with 39 x 39 elements per ROI. Finally, we averaged them to create one group general touch matrix and one group social touch matrix in each ROI.
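The construction of a single-subject RDM described above can be sketched in a few lines. This is an illustrative outline in dependency-free Python rather than the original analysis code. The function names (`pearson`, `center_voxels`, `neural_rdm`) and the nested-list data layout (runs x conditions x voxels) are assumptions made for the example.

```python
import math


def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def center_voxels(run):
    """Subtract, for every voxel, its mean beta across all conditions of one run."""
    n_cond = len(run)
    voxel_means = [sum(column) / n_cond for column in zip(*run)]
    return [[v - m for v, m in zip(pattern, voxel_means)] for pattern in run]


def neural_rdm(runs):
    """Build a 1 - correlation dissimilarity matrix from per-run beta patterns."""
    centered = [center_voxels(run) for run in runs]
    n_runs, n_cond = len(centered), len(centered[0])
    # Average each condition's centered pattern over runs, voxel by voxel.
    averaged = [
        [sum(values) / n_runs for values in zip(*(run[c] for run in centered))]
        for c in range(n_cond)
    ]
    return [
        [1.0 - pearson(averaged[i], averaged[j]) for j in range(n_cond)]
        for i in range(n_cond)
    ]
```

With 75 conditions this would return a symmetric 75 x 75 matrix with zeros on the diagonal. Averaging such matrices across participants gives the group matrix.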

The purpose of having a general touch matrix was to understand if the spatial neural patterns evoked by observed social/non-social touch could be distinguishable in the selected ROIs to draw a global picture about the understanding of social events in the human brain. We also performed two reliability tests on general touch matrices to examine if each of them contained reliable signals in each ROI (see below).

The social touch matrix was created to detail the brain areas representing the valence, arousal or overall affective information. In this case, we excluded non-social conditions since most of them exclusively exhibited neutral valence and low arousal (Lee Masson and Op de Beeck, 2018).

Reliability tests

Diagonal versus non-diagonal measures

We evaluated the reliability of the spatial neural patterns in each ROI by comparing diagonal and non-diagonal cells in the neural similarity matrices (Ritchie et al., 2017). As described previously (e.g., Op de Beeck et al., 2008), we split the 6 runs into two halves, correlated each condition with itself across the two halves (within-condition correlations), and placed the resulting values on the diagonal of a 75 × 75 square matrix. Between-condition correlations filled in the non-diagonal elements. This process was iterated 100 times, each time randomly dividing the 6 runs into two subsets. As a result, 100 square matrices were created per ROI, which we averaged in order to generate one 75 × 75 neural matrix with diagonal elements (within-condition correlations) and off-diagonal elements (between-condition correlations) within each ROI. In the end, we investigated whether the averaged diagonal element from this group matrix was significantly higher than the averaged non-diagonal element per ROI. Statistical inferences were based upon two different approaches, first by using a permutation test (1000 times) and second by the classical parametric paired t-test (one-tailed, the degree of freedom = number of participants). For the permutation test, we randomly shuffled elements of the group matrix and averaged 75 randomly filled diagonal elements, followed by measuring if this value from the shuffled matrix was lower than the one from the real matrix in each ROI.
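The permutation test on the diagonal can be illustrated with a short sketch. It is an approximation under stated assumptions, not the original implementation. Shuffling all cells and reading off the diagonal is treated as equivalent to drawing as many cells as there are conditions without replacement. The function name `diagonal_permutation_p` and the add-one correction of the p-value are choices made for this example.

```python
import random


def diagonal_permutation_p(matrix, n_perm=1000, seed=0):
    """Test whether the mean diagonal exceeds what shuffled cells would give.

    matrix: square split-half similarity matrix (within-condition correlations
            on the diagonal, between-condition correlations elsewhere).
    Returns the observed mean diagonal and a one-tailed permutation p-value.
    """
    n = len(matrix)
    observed = sum(matrix[i][i] for i in range(n)) / n
    cells = [value for row in matrix for value in row]
    rng = random.Random(seed)
    at_least_as_large = 0
    for _ in range(n_perm):
        # The n cells that would land on the diagonal after a full shuffle.
        shuffled_diagonal = rng.sample(cells, n)
        if sum(shuffled_diagonal) / n >= observed:
            at_least_as_large += 1
    # Assumption: add-one correction so the p-value is never exactly zero.
    return observed, (at_least_as_large + 1) / (n_perm + 1)
```

A small p-value indicates that patterns from the same condition are more similar across run halves than patterns from different conditions.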

Any ROIs that did not show significantly larger correlations in the diagonal compared to the non-diagonal cells were excluded from further analysis, reasoning that any further patterns in the non-diagonal cells are hard to interpret without having a significantly larger correlation when data from the same condition are compared (Ritchie et al., 2017).

Between-subject correlations as a measure of reliability

We implemented a split-half correlational method comparing one-half of participants to the other half, intended to provide an estimate of the maximum correlation we could expect with predictive models after taking into account the between-subject variability in the neural data (Bracci and Op de Beeck, 2016). First, we randomly split the total group of participants into two sub-groups (N = 10 or 11 per group), followed by averaging the individual matrices for each sub-group. As a result, two averaged sub-group matrices were created per ROI. Next, we vectorized the upper diagonal elements (without diagonal and lower diagonal elements) of both matrices into two vectors, and correlated them. Finally, we adjusted the resulting correlations with the Spearman-Brown formula (2 × r/(1 + r)) to obtain an estimate of the reliability of the entire group per ROI. We iterated this process 100 times, each time randomly designating participants into two sub-groups, and finally we averaged the results per ROI.
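The between-subject reliability estimate can be sketched as follows, using the Spearman-Brown formula given above. The helper names and the nested-list layout of the RDMs are assumptions for illustration. The sketch only mirrors the described steps: random split, sub-group averaging, correlation of upper triangles, correction, and averaging over iterations.

```python
import math
import random


def pearson(x, y):
    """Pearson correlation between two equally long sequences."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def upper_triangle(matrix):
    """Vectorize the cells above the diagonal, row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


def mean_matrix(matrices):
    """Element-wise average of equally sized square matrices."""
    count, size = len(matrices), len(matrices[0])
    return [
        [sum(m[i][j] for m in matrices) / count for j in range(size)]
        for i in range(size)
    ]


def spearman_brown(r):
    """Correct a split-half correlation to the full sample: 2r / (1 + r)."""
    return 2 * r / (1 + r)


def split_half_reliability(subject_rdms, n_iter=100, seed=0):
    """Average Spearman-Brown corrected correlation over random subject splits."""
    rng = random.Random(seed)
    ids = list(range(len(subject_rdms)))
    estimates = []
    for _ in range(n_iter):
        rng.shuffle(ids)
        half = len(ids) // 2
        group_a = mean_matrix([subject_rdms[i] for i in ids[:half]])
        group_b = mean_matrix([subject_rdms[i] for i in ids[half:]])
        r = pearson(upper_triangle(group_a), upper_triangle(group_b))
        estimates.append(spearman_brown(r))
    return sum(estimates) / n_iter
```

With 21 participants, `half` is 10, so each iteration compares a sub-group of 10 with a sub-group of 11, matching the split sizes stated above.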

Multiple regression analysis

We conducted a multiple regression analysis to predict the average neural matrices based on various independent variables mentioned above: social/non-social, button-press, low-level physical parameters to high-level motion perception and affective evaluation from the behavioral experiment. All upper diagonal elements in each matrix were vectorized into a vector and normalized (Z-score transformation) prior to the regression analysis.
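To make the regression step concrete, the sketch below vectorizes the upper triangle of each matrix, z-scores the vectors, and solves an ordinary least-squares problem via the normal equations. It is a dependency-free illustration, not the software used in the study. The function names are hypothetical, and the intercept is omitted on the assumption that all variables are z-scored.

```python
import math


def upper_triangle(matrix):
    """Vectorize the cells above the diagonal, row by row."""
    n = len(matrix)
    return [matrix[i][j] for i in range(n) for j in range(i + 1, n)]


def zscore(values):
    """Z-score transformation (mean 0, sample standard deviation 1)."""
    n = len(values)
    mean = sum(values) / n
    sd = math.sqrt(sum((v - mean) ** 2 for v in values) / (n - 1))
    return [(v - mean) / sd for v in values]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(col + 1, n):
            factor = aug[r][col] / aug[col][col]
            for c in range(col, n + 1):
                aug[r][c] -= factor * aug[col][c]
    x = [0.0] * n
    for r in reversed(range(n)):
        known = sum(aug[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (aug[r][n] - known) / aug[r][r]
    return x


def rdm_regression(neural_rdm, model_rdms):
    """Return one standardized beta per model RDM predicting the neural RDM."""
    y = zscore(upper_triangle(neural_rdm))
    xs = [zscore(upper_triangle(m)) for m in model_rdms]
    # Normal equations X'X beta = X'y (no intercept: everything is z-scored).
    xtx = [[sum(a * b for a, b in zip(xi, xj)) for xj in xs] for xi in xs]
    xty = [sum(a * b for a, b in zip(xi, y)) for xi in xs]
    return solve(xtx, xty)
```

Calling `rdm_regression(neural, [social_model, valence_model])` would return two betas in the order of the predictors. The predictor names here are placeholders.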

For the statistical inference, we did both nonparametric and parametric testing during the analyses, and we report the two approaches. Reporting two very different statistical approaches shows the extent to which results depend on the specific statistical test.

Importantly, since 18 ROIs were tested, the probabilities resulting from the multiple regression test were corrected using false discovery rate (FDR) for multiple comparisons.

Non-parametric permutation analysis was conducted by randomly shuffling the condition indices of the neural data 1000 times while controlling the FDR. As an alternative statistical approach, we performed multiple regression on the neural matrices of individual subjects, after which a classical parametric one-tailed t-test was conducted with FDR correction to check whether a beta weight was significantly higher than zero given between-subject variation. Globally, we report FDR-corrected p-values from the permutation tests. The inference about statistical significance agreed between the two statistical approaches for the large majority of tests; where it did not, we mention this explicitly. In the Results, we also marked in the figures where the results from the two tests were inconsistent (see Fig. 6).
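As an illustration of the correction across ROIs, the sketch below adjusts a list of p-values with the Benjamini-Hochberg procedure. The text does not state which FDR procedure was used, so Benjamini-Hochberg is an assumption here, as is the function name `fdr_bh`.

```python
def fdr_bh(p_values):
    """Benjamini-Hochberg adjusted p-values, returned in the original order."""
    m = len(p_values)
    # Indices of the p-values sorted from smallest to largest.
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_min = 1.0
    # Walk from the largest p-value down, enforcing monotonicity.
    for rank in range(m, 0, -1):
        index = order[rank - 1]
        running_min = min(running_min, p_values[index] * m / rank)
        adjusted[index] = running_min
    return adjusted
```

For the analyses described here, the input would hold one p-value per ROI for a given predictor, and an ROI would count as significant when its adjusted value falls below 0.05.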

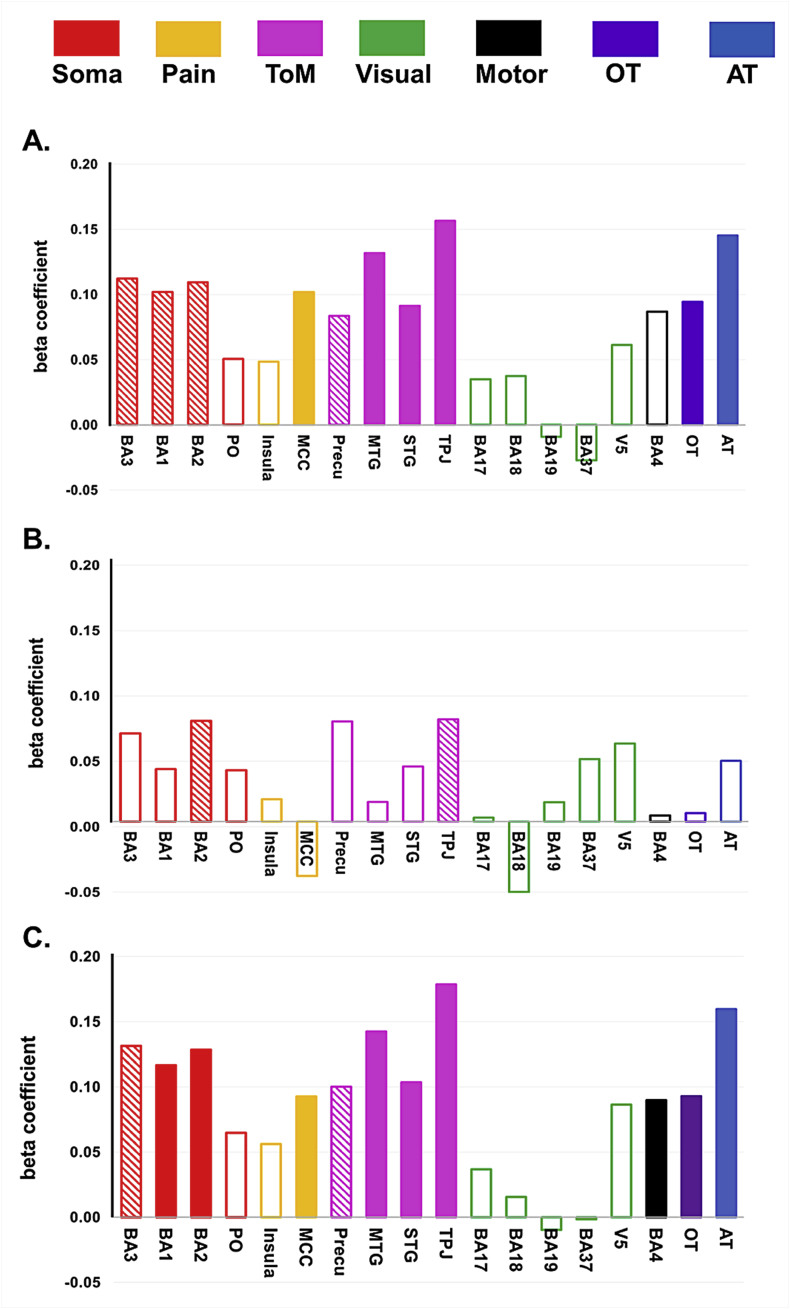

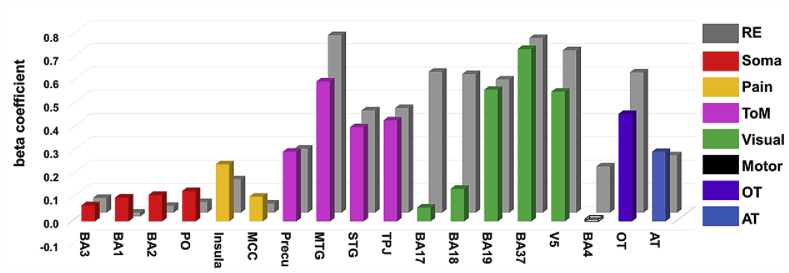

Fig. 6.

Representation of valence (A), arousal (B) and affective (C) spaces in ROIs. The bars with color-fill indicate significant results from both permutation test and parametric t-test. The bars with the diagonal patterns imply significant results restricted to only one statistical test. The bars without the color-fill indicate nonsignificant results from both statistical tests. Labels such as “Soma” in the right panel were already defined in the previous figure.

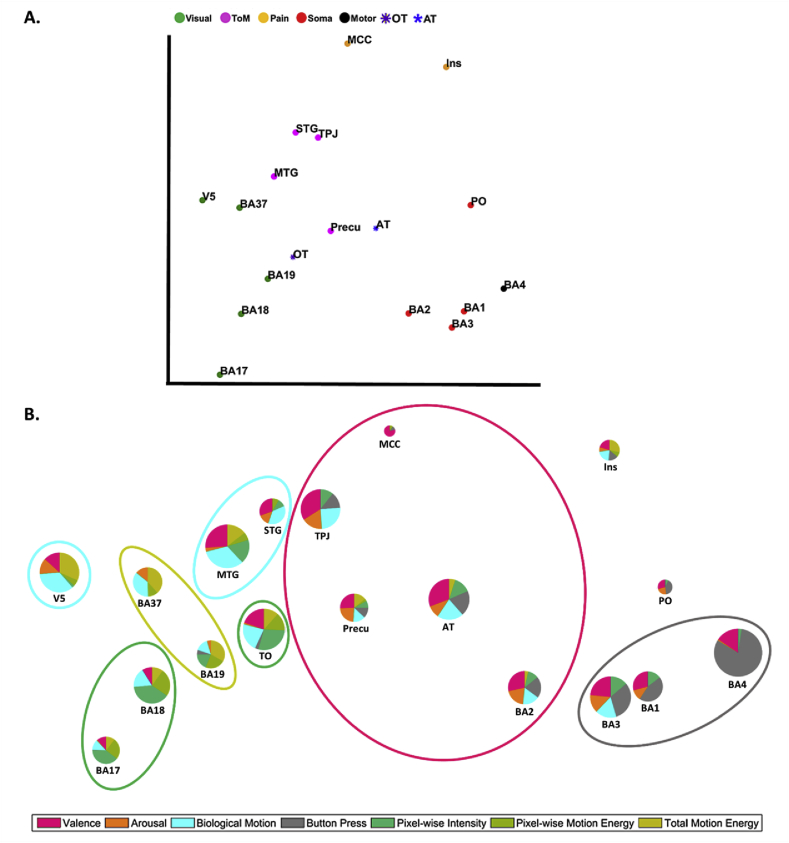

The second-order similarity between ROIs

We computed a second-order RDM to investigate the relations of the social touch representational structures in different brain regions. More specifically, we vectorized upper diagonal elements of the group 39 x 39 first-order RDMs of individual ROIs into a vector, and we correlated these vectors between all pairs of ROIs. This resulted in an 18 x 18 (the number of ROIs) second-order RDM containing (1-correlation) in each cell.

For visualization purposes, we performed multidimensional scaling (MDS) on this second-order RDM to reconstruct a two-dimensional neural space that shows the distance between each possible pair of ROIs based on how dissimilar the carried information on observed social touch was. The Matlab built-in function mdscale was used with 100 replications. The stress value of the 2D MDS is 0.15, which seems to be acceptable for the purpose of visualization as long as the number of items plotted (ROIs = 18) is at least 4 times larger than the chosen dimensionality (2D) (Kruskal and Wish, 1978).

Lastly, we drew pie charts whose slices represent beta coefficients of 8 different predictors (valence, arousal, biological motion, button press, pixel-wise intensity, pixel-wise motion energy and total motion energy). We replaced any negative beta coefficients with zero since negative correlation does not have a meaningful interpretation. Afterward, each beta coefficient was divided by the sum of all beta coefficients for normalization in each ROI. This sum of the 8 beta coefficients determined the radius of each pie chart in order to illustrate the overall amount of information captured in each ROI. In the end, we centered each pie chart at the position of the ROI in the MDS plot.
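The pie chart normalization can be sketched as below. The function name `pie_slices` and the predictor names in the usage note are illustrative. Only the clipping, the division by the sum, and the use of the sum as radius follow the description above.

```python
def pie_slices(betas):
    """Turn regression betas into pie-slice proportions and a radius.

    betas: mapping from predictor name to beta coefficient for one ROI.
    Returns (proportions, radius), where radius is the sum of clipped betas.
    """
    # Negative betas are replaced with zero before normalization.
    clipped = {name: max(beta, 0.0) for name, beta in betas.items()}
    radius = sum(clipped.values())
    if radius == 0:
        # No positive beta at all: nothing to draw for this ROI.
        return {name: 0.0 for name in clipped}, 0.0
    proportions = {name: beta / radius for name, beta in clipped.items()}
    return proportions, radius
```

For example, betas of 0.1 for valence, -0.04 for arousal and 0.3 for motion energy would give slices of 25%, 0% and 75% and a radius of 0.4.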

Results

We investigated where and how various dimensions of observed touch were processed in the brain. Our experimental design allows us to differentiate among the following dimensions: social vs. non-social, affective dimensions, biological motion, button press, pixel-wise intensity, pixel-wise motion energy and total motion energy.

We perform a combination of univariate analyses and representational similarity analyses (RSA) to find out which brain areas represent which properties of the touch events.

Extended neural representation of social versus non-social touch

An impressively long list of regions represents whether a video includes social or non-social touch. Note that the social and non-social stimuli were closely matched for the type of motion used and low-level visual features (see Materials and Methods, also see (Lee Masson and Op de Beeck, 2018)).

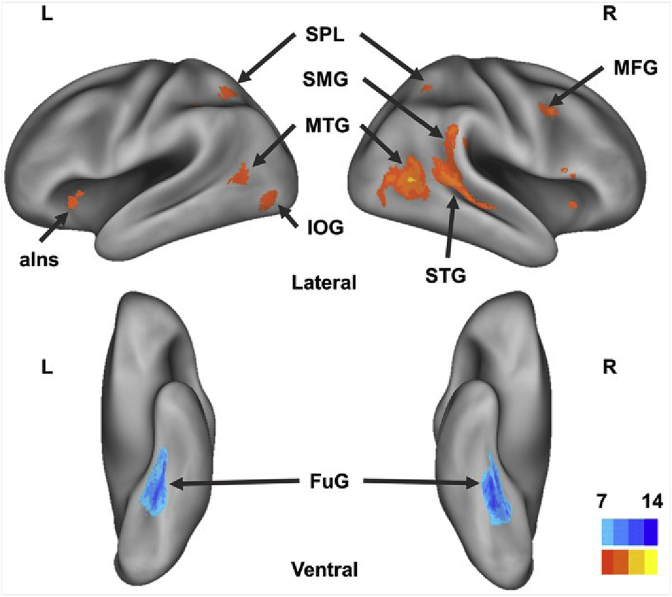

Univariate analyses already showed significant overall differences in activation level in some parts of the cortex. Indeed, of all the dimensions that can be targeted by our design, the difference between social and non-social touch events was the only contrast that showed significant univariate effects. Based on the second-level group results of the contrast between social touch and non-social touch, several social brain areas showed significantly stronger neural activations (thresholded at P FWE < 0.05) during the conditions in which participants observed social touch video clips compared to the non-social touch conditions. The social touch preferring regions included the right posterior (p) MTG, pSTG, supramarginal gyrus (SMG) and posterior parietal lobe. On the other hand, brain regions showing increased BOLD signals during the conditions in which non-social touch videos were displayed included the bilateral fusiform gyrus (FuG). The MNI coordinates and sizes of clusters, t-values, and their FWE-corrected p-values for each contrast are listed in Table 3. Fig. 4 illustrates the whole brain univariate results from both directions of the contrast (yellow for the social conditions and blue for the non-social conditions).

Table 3.

Brain areas activated during social touch observation compared to non-social touch observation and vice versa.

| Region | Peak X | Peak Y | Peak Z | T (20) | P | N Voxels |
|---|---|---|---|---|---|---|
| Social Touch Observation > Non-Social Touch Observation | | | | | | |
| L SMG | −42 | −38 | 42 | 12.1 | 0.000 | 88 |
| R aIns | 44 | 22 | 0 | 11.3 | 0.000 | 295 |
| R MTG | 50 | −60 | 8 | 11.3 | 0.000 | 2021 |
| L SMG/PO | −56 | −38 | 24 | 10.1 | 0.000 | 90 |
| R MFG | 40 | 6 | 38 | 9.8 | 0.000 | 330 |
| L IOG/MTG | −42 | −78 | −6 | 9.1 | 0.001 | 474 |
| L SPL | −30 | −54 | 50 | 8.7 | 0.002 | 159 |
| R SPL | 28 | −46 | 44 | 8.6 | 0.002 | 108 |
| L aIns | −28 | 20 | 4 | 8.6 | 0.002 | 141 |
| R SMA | 2 | 16 | 52 | 7.8 | 0.007 | 88 |
| Non-Social Touch Observation > Social Touch Observation | | | | | | |
| R FuG | 28 | −46 | −12 | 14 | 0.000 | 598 |
| L FuG | −32 | −46 | −12 | 13.5 | 0.000 | 744 |

Results from direct contrasts of social touch > non-social touch and vice versa at P FWE < 0.05 (Clusters restricted to a minimum of 60 voxels in extent). Labels in the table are defined as follows: N Voxels = number of voxels given for activated clusters, L = left, R = right, SMA = supplementary motor area.

Fig. 4.

Areas of increased neural activation for the two conditions. The results of whole-brain univariate analyses comparing social (orange to yellow) and non-social (sky-blue to blue) touch mapped on inflated cortices using the CARET software (Van Essen et al., 2001) with PALS atlas (Van Essen, 2005) (P FWE < 0.05, k = 60). Observing the social touch events elicited stronger neural responses in social brain areas, including MTG, STG, and SMG. Observing the non-social touch events, in which objects were touched by a human, elicited stronger activations in high-level visual areas (FuG). L = left hemisphere, R = right hemisphere, SPL = superior parietal lobe, IOG = inferior occipital gyrus, MFG = middle frontal gyrus, aIns = anterior insula.

The univariate analyses only reveal the strongest focal response differences, and might underestimate how many brain regions differentiate between social and non-social touch. We used correlational MVPA to pinpoint further the representation of the social/non-social distinction in the a priori defined ROIs. Prior to performing an RSA on the neural dissimilarity matrices, we checked which ROIs conveyed significant information about condition identity. More specifically, we compared the similarity of neural patterns from the same touch conditions (within-condition correlations; diagonal cells in the RDMs) with the similarity of neural patterns from different touch events (between-condition correlations). Both our statistical tests (permutation statistics and an across-subject t-test) revealed that all ROIs showed above-chance reliability and were analyzed further except PCC and MPFC.

We analyzed the remaining neural dissimilarity matrices through a multiple regression model with multiple factors (see Methods), including a binary model representing the distinction between human-to-human (social) and human-to-object (non-social) touch interaction. The social/non-social model was a significant predictor of the neural “general touch” matrix in almost all ROIs. Except in M1, touch scenes conveying the same sociality (social/non-social) evoked more similar distributed neural activity throughout the examined brain areas after adjusting for effects of other visual features and motor responses.

As seen in Fig. 5, the social/non-social model could explain distributed neural patterns particularly well in BA37 (β = 0.74, P < 0.001) and MTG (β = 0.6, P < 0.001), which was in line with the results from the whole-brain univariate analyses (see Fig. 4 showing peak activations in MTG for social touch and FuG for non-social touch). However, the ROI-based MVPA results reveal a much larger network of regions that show selectivity for observed social versus non-social touch. The first column of Table 4 shows each beta estimate for the social/non-social model, identifying its engagement in explaining neural RDMs in most ROIs.

Fig. 5.

Representation of the observed social versus non-social touch and reliability estimate in ROIs. Y-axis indicates either a beta coefficient for the social/non-social model in predicting neural RDM from the multiple regression analysis (colored bars) or a correlation coefficient representing a noise ceiling (grey bars). X-axis lists the name of the ROIs. The bar without the color-fill (BA4) means that the results are nonsignificant (for neither of the statistical approaches, permutation test and parametric t-test). Labels in the right panel are defined as follows: RE = reliability estimate (based on split-half correlations), Soma = somatosensory areas, ToM = theory of mind areas, Visual = visual areas, Motor = motor areas, OT = whole brain network activated by observed touch, AT = whole brain network activated by actual touch.

Table 4.

The beta coefficients of the social/non-social, valence, arousal and overall affective dimensions for all ROIs.

| ROI | Social/Non-Social | Valence | Arousal | Affect |
|---|---|---|---|---|
| BA3 | 0.07*** | 0.11 (*) | 0.07 | 0.13 (**) |
| BA1 | 0.10*** | 0.10 (*) | 0.04 | 0.12* |
| BA2 | 0.11*** | 0.11 (*) | 0.08 [*] | 0.13** |
| PO | 0.13*** | 0.05 | 0.04 | 0.06 |
| Insula | 0.25*** | 0.05 | 0.02 | 0.06 |
| MCC | 0.11*** | 0.10* | −0.04 | 0.09* |
| Precuneus | 0.30*** | 0.08 (*) | 0.08 | 0.10 (*) |
| MTG | 0.60*** | 0.13** | 0.01 | 0.14** |
| STG | 0.41*** | 0.09* | 0.04 | 0.10* |
| TPJ | 0.43*** | 0.16*** | 0.08 [*] | 0.18*** |
| BA17 | 0.06** | 0.03 | 0.00 | 0.04 |
| BA18 | 0.14*** | 0.04 | −0.05 | 0.02 |
| BA19 | 0.57*** | −0.01 | 0.01 | −0.01 |
| BA37 | 0.74*** | −0.03 | 0.05 | −0.001 |
| V5 | 0.56*** | 0.06 | 0.06 | 0.09 |
| BA4 | 0.01 | 0.09 | 0.00 | 0.09* |
| OT | 0.46*** | 0.09* | 0.01 | 0.09* |
| AT | 0.30*** | 0.15*** | 0.05 | 0.16** |

Asterisks denote FDR-corrected P values from permutation test (*P < 0.05, **P < 0.01, ***P < 0.001). (*) symbols indicate that the results were only significant with the permutation test while [*] with the results only significant with a parametric t-test.

Importantly, Fig. 5 not only illustrates the beta coefficient for the social/non-social model as a predictor but also shows the reliability measurements (noise ceilings) as grey bars behind the beta coefficients. The way to interpret these two values together is explained with the following example. BA3 and BA17, as a primary somatosensory cortex and a primary visual cortex respectively, contained neural RDMs that could be explained by the social/non-social model to a similar degree (β = 0.07 and β = 0.06, respectively). However, their noise ceilings (grey bars) differed greatly (r = 0.06 and r = 0.6, respectively), indicating that the distinction between observed social and non-social touch could capture most of the explainable signal in BA3 while a large part of the signal in BA17 could not be explained by this distinction. This suggests that other factors have to be taken into account to understand the neural representation in BA17 fully.

Neural representation of valence, arousal and overall affective aspects of touch

We analyzed the neural “social touch” dissimilarity matrices through two multiple regression models to understand how the affective aspects of social touch are represented. Since the overall affective RDM is a result of a combination of valence and arousal RDMs (See Analysis: Preparation of Multiple Predictors), we created two separate multiple regression models in order to avoid having a redundancy among predictors in the model. Thus, the first model included the following factors: valence, arousal, biological motion, button press, pixel-wise intensity, pixel-wise motion energy and total motion energy. The second model included the factors of overall affect, biological motion, button press, pixel-wise intensity, pixel-wise motion energy and total motion energy. Unless otherwise noted, p-values are FDR-corrected and based upon permutation tests (see Table 4 and Fig. 6 for a comparison with a parametric t-test).

Valence

There was a clear representation of valence (perceived pleasantness) in the neural RDMs based upon the touch observation network (β = 0.09, P = 0.03) and the actual touch network (β = 0.15, P < 0.001).

At a smaller scale, perceived pleasantness of the observed interpersonal social touch exhibited significantly positive regression weights for the neural RDM in most ToM areas (see Fig. 6-A, pink bars). Specifically, observed social touch scenes conveying a similar degree of pleasantness evoked similar spatial neural patterns in MTG (β = 0.13, P = 0.009), STG (β = 0.09, P = 0.03), and TPJ (β = 0.16, P < 0.001). In addition, a significant correlation with valence was observed in MCC (β = 0.1, P = 0.02).

Several other areas showed a weak relationship with valence that was marginal from a statistical point of view. Somatosensory areas BA3, 1, 2 and Precuneus showed significant results only with the permutation test (also see the Supplementary Result for the complementary results of BA3, 1, and 2). Here the parametric t-test was more conservative, with FDR-corrected p-values above 0.05 (BA3, P = 0.11; BA1, P = 0.06; BA2, P = 0.07; Precuneus, P = 0.11). These discrepancies were reflected in Fig. 6-A and the second column of Table 4.

Importantly, no visual areas exhibited valence selectivity during observation of social touch scenes even though our stimuli were presented visually (see Fig. 6-A, green bars).

In sum, large-scale networks activated during touch observation or actual touch stimulation also represented valence aspects of observed interpersonal social touch interaction. At a finer scale, valence information about observed social touch was represented in MCC, where the mentalization of others' bodily pain happens, and in the ToM regions.

Arousal

Unlike valence, perceived arousal information of social touch events did not yield significant regression weights for any of the ROIs according to the permutation test. The parametric t-test yielded significant beta coefficients for the arousal dissimilarity matrix only in BA2 (β = 0.08, P = 0.02) and TPJ (β = 0.08, P = 0.02) (see Fig. 6-B and the third column of Table 4 for the full results of beta estimates for arousal across ROIs). In sum, despite some tendencies toward arousal representation in BA2 and TPJ, the neural patterns did not strongly reflect perceived arousal dissimilarity of observed social touch events after ruling out other potential confounding effects such as motion energy.

Affective space

By combining the two affective dimensions of valence and arousal, we obtain a measure of overall affective dissimilarity. Given this definition of overall affective dissimilarity, it is not surprising that many of the regions that showed a strong representation of valence also represent overall affective dissimilarity (Fig. 6-C). More specifically, we find this in the large-scale touch observation network (β = 0.09, P = 0.02) and actual touch network (β = 0.16, P = 0.003), MTG (β = 0.14, P = 0.003), STG (β = 0.1, P = 0.02), TPJ (β = 0.18, P < 0.001), and MCC (β = 0.09, P = 0.02).

In addition, overall affective dissimilarity is significantly related to neural dissimilarity in several brain regions that showed at most marginal representations of the individual dimensions. In particular, neural “social touch” RDMs in the somatosensory areas, including BA1 (β = 0.12, P = 0.01) and BA2 (β = 0.13, P = 0.005), could be explained by the affective dissimilarity matrix after regressing out other factors. Note that this result was found even though there was no real somatosensation delivered to the participant's skin during observation of social touch.

We also found that M1 (β = 0.09, P = 0.03) represented this information, again even though participants were not performing a task that required any overt motor response related to the affective properties of the observed social touch events. The results in a few other brain regions were inconclusive, in particular BA3 and Precuneus, with significant effects in the permutation test but not in the parametric t-test (both P = 0.06).

Finally, overall affective dissimilarity could not explain the multi-voxel neural patterns in visual areas, despite the fact that all stimuli were presented in a visual form (see Fig. 6-C, green bars).

In sum, the dissimilarity of the neural patterns in several ROIs was related significantly to overall affective dissimilarity. This includes ToM regions, MCC, and, interestingly, somatosensory regions (also see the Supplementary Result for the complementary results), but not visual regions.

Neural representation of other factors

The multiple regression models also included basic visual factors. As one would expect, some of these factors were highly successful in predicting neural dissimilarity in a range of ROIs, in particular the visual ROIs. In addition to the two affective dimensions described above, the regression model included the following factors: biological motion, button press, pixel-wise intensity, pixel-wise motion energy and total motion energy. The results for the affective dimensions were very similar when the regression model included only valence and arousal.
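The regression of a neural RDM on several model RDMs can be sketched as follows. This is a minimal illustration, not the analysis code of the study: it assumes that the lower triangles of the RDMs are z-scored and entered into ordinary least squares, and that the permutation test shuffles the condition order of the neural RDM. The function names and the synthetic data are hypothetical.

```python
import numpy as np

def lower_triangle(rdm):
    """Vectorize the entries below the diagonal of a square RDM."""
    rdm = np.asarray(rdm, dtype=float)
    rows, cols = np.tril_indices(rdm.shape[0], k=-1)
    return rdm[rows, cols]

def zscore(v):
    return (v - v.mean()) / v.std()

def rdm_regression(neural_rdm, model_rdms):
    """Standardized OLS betas, one per model RDM (in the order given)."""
    y = zscore(lower_triangle(neural_rdm))
    X = np.column_stack([zscore(lower_triangle(m)) for m in model_rdms])
    X = np.column_stack([np.ones(len(y)), X])      # intercept column
    betas, *_ = np.linalg.lstsq(X, y, rcond=None)
    return betas[1:]                               # drop the intercept

def permutation_p(neural_rdm, model_rdms, n_perm=1000, seed=0):
    """One-sided p-values; assumed scheme: shuffle conditions of the neural RDM."""
    rng = np.random.default_rng(seed)
    neural_rdm = np.asarray(neural_rdm, dtype=float)
    observed = rdm_regression(neural_rdm, model_rdms)
    n = neural_rdm.shape[0]
    count = np.zeros_like(observed)
    for _ in range(n_perm):
        order = rng.permutation(n)
        shuffled = neural_rdm[np.ix_(order, order)]  # permute rows and columns together
        count += rdm_regression(shuffled, model_rdms) >= observed
    return observed, (count + 1) / (n_perm + 1)

# Synthetic example: the "neural" RDM is driven by model A only.
rng = np.random.default_rng(1)
n = 12
a, b = rng.normal(size=n), rng.normal(size=n)
model_a = np.abs(a[:, None] - a[None, :])
model_b = np.abs(b[:, None] - b[None, :])
noise = rng.normal(scale=0.01, size=(n, n))
neural = 2.0 * model_a + (noise + noise.T) / 2     # keep the RDM symmetric
betas, p_values = permutation_p(neural, [model_a, model_b], n_perm=500)
```

Because all predictors enter the same model, each beta reflects the contribution of one factor after the others have been accounted for, which is the sense in which nuisance factors such as motion energy are "regressed out".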

The low-level visual features such as pixel-wise intensity information were captured in the neural RDMs from early to mid-level visual areas (BA17 and BA18) and the whole brain network activated during touch observation.

When it comes to motion energy dissimilarity, spatial neural patterns in BA19, BA37, and V5 reflected total motion energy displayed in each social touch scene.

As for higher-level visual features, the type of biological motion used for the social touch interaction was reflected in the neural RDMs extracted from the social brain regions (MTG, STG, and TPJ) and high-level visual areas (BA37 and V5), most of which have already been implicated in the processing of complex motion. Additionally, the large-scale touch observation network also represented observed biological motion after controlling for other visual effects.

Lastly, as we expected, motor dissimilarity based upon the buttons pressed during the main (touch observation) fMRI showed positive weights for the neural RDMs in BA3, BA1, and BA4.

These additional results identify the role of each cortical area in processing different aspects of observed social touch events, from low-level to high-level visual information and motor execution. Full results listing beta coefficients for the other factors can be found in the supplementary material (see Inline Supplementary Table 1).

Neural representational structures across ROIs

A second-order RDM analysis was performed to visualize the relationships between the 18 ROIs (16 fine-scaled regions and 2 large whole-brain networks) in terms of how they represent social touch events. As input, we used the RDM of each ROI and correlated these RDMs between ROIs. The resulting 18 × 18 second-order RDM captures similarities between ROIs in their neural representations. We visualized this matrix using multidimensional scaling (MDS), as shown in Fig. 7-A. ROIs with similar representational structures, as reflected by a high correlation, lie close together in this MDS-derived space. In the plot, we further clustered the ROIs based on the most strongly represented dimension. Although no statistical test was carried out to separate clusters across the set of ROIs, it is worth mentioning some expected clusters that emerged. In particular, a distinctive cluster (red circles) of somatosensory areas (BA3, BA1 and BA2), a cluster of social brain areas (pink circles) known as the ToM network (MTG, STG, TPJ and Precuneus), and a topological organization from low-level (BA17) to high-level (BA37) visual areas (green circles) can be observed in Fig. 7-A.
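The second-order analysis can be sketched in a few lines. The example assumes that each ROI's RDM has been vectorized, that dissimilarity between ROIs is taken as 1 minus the Pearson correlation, and that classical (Torgerson) MDS is used; the MDS variant is not specified in this section, and the input data below are random placeholders.

```python
import numpy as np

def second_order_rdm(roi_rdm_vectors):
    """1 - Pearson correlation between the vectorized RDMs of each pair of ROIs."""
    return 1.0 - np.corrcoef(np.asarray(roi_rdm_vectors, dtype=float))

def classical_mds(dist, n_dims=2):
    """Classical (Torgerson) MDS: eigendecomposition of the double-centered matrix."""
    dist = np.asarray(dist, dtype=float)
    n = dist.shape[0]
    centering = np.eye(n) - np.ones((n, n)) / n
    gram = -0.5 * centering @ (dist ** 2) @ centering
    eigvals, eigvecs = np.linalg.eigh(gram)
    top = np.argsort(eigvals)[::-1][:n_dims]       # largest eigenvalues first
    return eigvecs[:, top] * np.sqrt(np.clip(eigvals[top], 0.0, None))

# Placeholder input: 18 ROIs, each with a vectorized RDM of 100 stimulus pairs.
rng = np.random.default_rng(0)
roi_rdm_vectors = rng.normal(size=(18, 100))
second_order = second_order_rdm(roi_rdm_vectors)   # shape (18, 18)
coords = classical_mds(second_order)               # shape (18, 2), one point per ROI
```

In the resulting two-dimensional layout, ROIs whose RDMs correlate highly receive small dissimilarities and are therefore placed close together.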

Fig. 7.

2D MDS reconstruction visualizing the representational relationships across brain regions. A. Similarity configuration of the ROIs. The distance between ROIs is based on the correlation coefficient between the neural patterns of the ROIs. Thus, the closer a pair of ROIs is in this plot, the more similar their neural patterns. Filled circles in different colors indicate different a priori categories of ROIs based on the aforementioned studies (e.g., Visual = brain regions known for visual processing). Asterisks indicate the whole-brain networks activated during either touch observation (purple) or actual touch stimulation (blue). The description of the a priori categories is located at the top of panel A; the labels were already defined in Fig. 5. B. The plot shows the same ROI space with additional information in the form of pie charts based on the results of the multiple regression test. Note that the plot is purposely stretched in width (x-axis) for better visualization. Pie charts illustrate the summed beta coefficients (size of the pie chart) and the proportion of each dimension represented (size of the slices) in each ROI. The colors of the slices correspond to representational dimensions of the stimuli (e.g., valence = pink slice). Each ellipse that encircles multiple ROIs in the plot indicates a group of ROIs whose largest slice is the same dimension. For example, the task-related motor response dimension (grey ellipse) is the strongest factor that explains BA3, BA1, and BA4. The pie charts that are not included in any ellipse do not significantly represent any dimension.

Strikingly, the representational structures in the observed touch network (purple asterisk) and the actual touch network (blue asterisk) were similar, as indicated by a high correlation (r = 0.58, P < 0.001). This indicates a shared neural representation between the observed and actual touch networks, although the two networks are largely non-overlapping (see Fig. 3-B) and even though touch information was conveyed through completely different sensory modalities, vision and touch respectively.

Furthermore, pie charts, based on the results from the multiple regression test, were added to the MDS plot, illustrating how much each representational dimension of social touch stimuli (e.g., valence) contributed in each ROI (Fig. 7-B). This visualization summarizes the findings from our experiment.

Here we focus on the most dominant dimension in each ROI, without claiming a significant difference between the most strongly and the second most strongly represented dimension. Valence information of social touch was most dominantly reflected in the neural patterns of BA2, precuneus, TPJ, and MCC (see Fig. 7-B, pink ellipse). In the case of biological motion information, V5, MTG, and STG seem to represent the type of observed social touch action well (see cyan ellipse). Other visual features such as pixel-wise intensity and total motion energy were the most important factors in other visual areas (see green and light green ellipses). Finally, the task-related motor response dominated the neural patterns in both primary motor and somatosensory cortices (see grey ellipse).

Note that a smaller pie chart (e.g., MCC and PO) indicates weaker representations overall, based on the sum of all beta coefficients in that ROI. For example, MCC seems to represent only valence information, but with a relatively low beta coefficient, as shown by its smaller pie chart compared with another area (e.g., TPJ) that also represents valence and has a larger radius. Without scaling the size of the pie charts, the plot would misleadingly suggest that MCC represents valence information better than any other brain region. There are two possible causes for the low value in MCC compared to TPJ: either the representations in MCC are partially explained by a factor not included in our analyses, or the reliability of the neural patterns is lower (see the reliability estimates in Fig. 5).
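The distinction between pie size and slice size can be made concrete with a small sketch. The beta values and ROI labels below are invented for illustration, and clipping negative betas to zero before summing is an assumption rather than a documented step of the figure.

```python
import numpy as np

def pie_summary(betas):
    """Return (summed beta, share of each dimension) for one ROI.

    Assumption: negative betas are clipped to zero before summing.
    """
    names = list(betas)
    values = np.clip(np.array([betas[k] for k in names], dtype=float), 0.0, None)
    total = values.sum()
    shares = values / total if total > 0 else np.zeros_like(values)
    return total, dict(zip(names, shares))

# Invented betas for two hypothetical ROIs (not values from the study).
roi_small = {"valence": 0.05, "biological motion": 0.0}
roi_large = {"valence": 0.18, "biological motion": 0.12}

total_small, shares_small = pie_summary(roi_small)
total_large, shares_large = pie_summary(roi_large)
```

In this toy case the valence slice fills the entire pie of the first ROI but only 60% of the second, while the second ROI has the larger summed beta and the larger valence beta. Reading slice proportions alone would therefore overstate the first ROI's valence representation.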

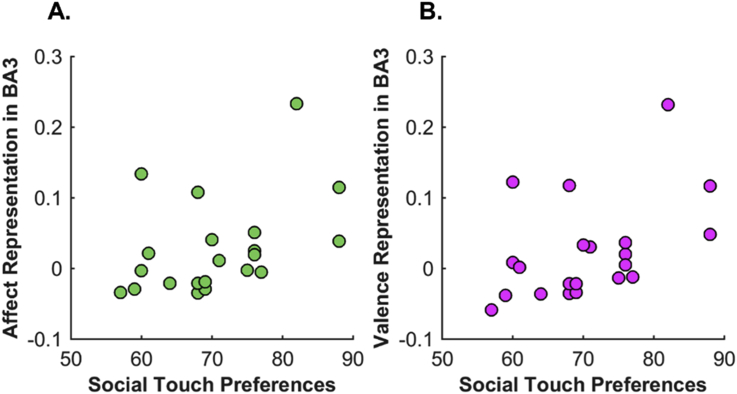

Individual touch behavior and its association with neural representations

It is striking that relatively low-level somatosensory areas, rather than the low- and mid-level visual areas, represent the overall affective content of visually presented touch events. In our study, however, somatosensory areas often showed only marginally significant effects of overall affective dissimilarity and of valence. This statistical result could be due to a small effect size or to a large error term. A large error term could result from high inter-subject variation. From this perspective, it is striking that areas such as BA3 and BA1 have a very low reliability estimate. The reliability estimate in these regions is even lower than the observed correlations in the regression model (see Fig. 5, grey bars). This can happen because our reliability estimate depends upon inter-subject consistency and is therefore an underestimate when participants differ (Kriegeskorte et al., 2008). When we observed this outcome, we suspected high individual variability in the extent to which somatosensory cortex is involved in representing affective information during observation of social touch.

At the behavioral level, we have a measure capturing how much individual participants prefer social touch. Interestingly, when correlating this preference for social touch with the strength of affective representations in somatosensory cortex, the results revealed that individual differences in preference for social touch were significantly associated with the degree of affective (RHO = 0.5, P = 0.02) and valence (RHO = 0.51, P = 0.02) representations in BA3. In contrast, neural representations in BA1 were not significantly associated with social preference measures (RHO = 0.43, P = 0.06 for affective representation; RHO = 0.29, P < 0.2 for valence representation).
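For readers less familiar with rank correlation, the statistic reported as RHO can be sketched as the Pearson correlation between ranks. The example below is a generic illustration with made-up numbers; it does not reproduce the study's data or its significance test, and the function names are hypothetical.

```python
import numpy as np

def average_ranks(values):
    """Ranks starting at 1, with tied values sharing their mean rank."""
    values = np.asarray(values, dtype=float)
    order = np.argsort(values, kind="mergesort")
    ranks = np.empty(len(values))
    ranks[order] = np.arange(1, len(values) + 1)
    for v in np.unique(values):
        tied = values == v
        ranks[tied] = ranks[tied].mean()
    return ranks

def spearman_rho(x, y):
    """Spearman correlation: Pearson correlation of the rank-transformed data."""
    return np.corrcoef(average_ranks(x), average_ranks(y))[0, 1]

# Made-up values for five participants (not data from the study).
touch_preference = [1.0, 2.0, 3.0, 4.0, 5.0]      # questionnaire score
beta_affective = [0.02, 0.01, 0.04, 0.03, 0.05]   # per-participant beta in one ROI
rho = spearman_rho(touch_preference, beta_affective)  # approximately 0.8
```

Because only ranks enter the computation, the measure is insensitive to the scale of the questionnaire scores and of the beta coefficients.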

Fig. 8 demonstrates the relationship between the preference for social touch and the degree of neural representation from beta coefficients for affective (A) and valence (B) information in BA3.

Fig. 8.

Social touch preference and its association with neural representation in BA3. Each scatter plot shows the relationship between attitude to social touch and beta coefficients for the affect (A) and the valence (B) model in explaining neural RDM in BA3.

It appears that a neural representation of affective and valence information in BA3 during observation of social touch is not universal, but is mostly found in participants who show a stronger preference to give, receive and witness social touch. In sum, the degree of affective representation in S1 might reflect interpersonal variability in attitudes toward social touch in daily life.

Discussion

The present study investigated the neural mechanisms of socio-affective touch during the observation of others' touch interactions using a systematically defined and well-controlled touch database that covers a broad range of natural touch communication.

Major findings and their implications

First, the standard univariate voxel-wise analysis revealed the involvement of multiple brain areas including the social brain, the secondary somatosensory cortex (i.e., the PO), and the insular cortex in processing observed social touch. However, concerning the PoCG, observing social touch events did not evoke stronger BOLD responses as compared to observing non-social touch events (it only did at a very lenient threshold of P uncorrected < 0.001). Given these results, the current study partially replicates the previous findings that suggest the involvement of the PoCG and the PO in processing vicarious touch experiences (see introduction).

MVPA methods revealed distinct patterns of neural activation for observed social vs. non-social touch in two very different large-scale touch networks (i.e., the OT and AT networks). This suggests selectivity for observed touch in the OT and AT networks regardless of the sensory modality through which each network was defined: the network that processes observed touch through the eyes versus the network that processes actual touch through the skin. At a finer scale, many cortical regions in the somatosensory, pain, ToM and visual networks are highly sensitive to the distinction between social and non-social videos.

Multi-voxel patterns can contain stimulus-, condition- or task-related information without differences in mean activation between the conditions (for a detailed review, see Jimura and Poldrack, 2012; Coutanche, 2013). Thus, the observed selectivity for social vs. non-social touch in the large-scale AT network (encompassing the parietal, temporal and frontal lobes) and in the somatosensory network is independent of the univariate results for the PoCG.

Importantly, MVPA methods allow us to pinpoint how much of the explainable signal is captured by selectivity for observed social vs. non-social touch. The predictive power of social vs. non-social distinction is almost as large as the across-participant reliability estimate in most ROIs, except early visual cortex (BA17-18) and early motor cortex (Fig. 5). This finding extends recent monkey (Sliwa and Freiwald, 2017) and human studies (Wurm et al., 2017) with an advanced set of stimuli in orthogonal/indirect tasks and fMRI-based RSA methods, highlighting that a widespread neural network is dedicated to capturing the social nature of touch interaction in which others are engaged.

Second, beyond the social/non-social selectivity in the neural system, we for the first time pinpointed the cortical regions that carry fine-detailed affective information presented in observed interpersonal touch by predicting a neural space from affective perceptual spaces, after ruling out effects of nuisance covariates.

Like the observed selectivity for social vs. non-social touch videos, both valence and overall affect, but not arousal, are well represented throughout the whole-brain touch networks (both OT through the eyes and AT through the skin).