Abstract

Timely implementation of the principles of evidence-based public health (EBPH) is critical for bridging the gap between the discovery of new knowledge and its application. Public health organizations need sufficient capacity (the availability of resources, structures, and workforce to plan, deliver, and evaluate the “preventive dose” of an evidence-based intervention) to move science into practice. We review the principles of EBPH, the importance of capacity building for advancing evidence-based approaches, promising approaches for capacity building, and future areas for research and practice. Although there is general agreement on the importance of EBPH, there is less clarity on the definition of evidence, how to find it, and how, when, and where to use it. Capacity for EBPH is needed among both individuals and organizations. Capacity can be strengthened through training, use of tools, technical assistance, assessment and feedback, peer networking, and incentives. Modest investments in EBPH capacity building will foster more effective public health practice.

Keywords: capacity building, context, evidence-based interventions, external validity, implementation, practice-based evidence

Evidence without capacity is an empty shell.

— Mohan Singh

INTRODUCTION

The gap between the discovery of new research findings and their application in public health and policy settings is extensive in terms of time lag, completeness, and fidelity (87, 101). Timely implementation of evidence-based interventions (EBIs) is critical to bridging this chasm and improving population health (72). A vast array of EBIs is now available in systematic reviews such as the Guide to Community Preventive Services (the Community Guide) (174). Systematic reviews summarize large bodies of research and provide decision makers (practitioners, policy makers) with a useful “menu” of EBIs from which to prioritize resources and plan programs (17, 63). Yet multiple lines of inquiry show that EBIs are not being disseminated or implemented effectively (87). In two surveys of US public health departments, an estimated 58% to 64% of programs and policies were reported as evidence-based (55, 67). Participants in a European public health training program reported 56% of programs as evidence-based (67). These estimates are similar across the two continents and comparable to those from studies of EBI use in clinical settings (123, 135).

Investigations of these gaps have yielded several key findings: 1) practitioners underuse EBIs (91, 92); 2) passive approaches to disseminating EBIs are largely ineffective because dissemination does not happen spontaneously (15, 121); 3) stakeholder involvement in the research or evaluation process (so-called practice-based evidence, which responds to the “pull” of practitioners) is likely to enhance dissemination (80, 81, 85, 88, 97, 99, 180); 4) theory and planning frameworks are useful for guiding the uptake of EBIs (172); and 5) capacity-building approaches in health-related settings (public health, medical care, policy) should be time-efficient; consistent with organizational climate, culture, and resources; and aligned with the needs and skills of staff members (20, 119).

Putting evidence to use in public health or other settings requires sufficient capacity (i.e., the availability of resources, structures, and workforce to recognize and deliver the “preventive dose” of an EBI) (93, 184), as well as the adaptation of highly controlled research-based practices to fit the varied circumstances and populations in which they will be applied. Capacity is a determinant of performance; that is, greater capacity is linked with greater public health impact (20, 138, 161). Conceptually, capacity is the ability of a public health agency to provide or perform essential public health services. It requires skills in evaluating the quality (“strength”), quantity (“weight”), and applicability of evidence. Capacity building for EBPH is essential at all levels of public health, from national or international standards to agency-level practices. Yet how capacity is operationalized, built, and maintained is less clear, and relatively little is known about how to tailor capacity-building approaches to practitioners’ needs (120).

Capacity-building efforts are often aimed at improving the use of scientific evidence in day-to-day public health practice (so-called evidence-based public health [EBPH] (20) or evidence-informed public health (5, 40, 84)). Much of the early research on EBPH focused on barriers to the uptake of EBIs, including public health practitioners’ personal challenges (e.g., lack of skills) and organizational challenges (e.g., lack of incentives or resources) in using EBIs. Practitioners’ use of research to inform program adoption and implementation is strongly correlated with their perception that organizational leadership supports, or gives priority to, evidence-based practices (24, 50, 102).

The overarching purposes of this review are to help practitioners build organizational-level capacity and to help researchers conducting participatory research identify gaps in the literature that need further inquiry. The review has four major sections, which describe 1) the historical evolution and key principles of EBPH; 2) the importance of capacity building for EBPH; 3) promising approaches for capacity building; and 4) future issues for research and practice.

WHY EVIDENCE-BASED PUBLIC HEALTH MATTERS

Numerous reviews from teams on multiple continents have described the importance and core elements of EBPH (4, 20, 27, 36, 109, 111, 125, 128, 145). Many of the principles of EBPH have historical precedents in the seminal work of Archie Cochrane, who noted in the early 1970s that many medical treatments lacked evidence of effectiveness (41). The philosophical origins of evidence-based medicine extend as far back as 19th-century Paris (158), whereas a more formal doctrine and set of processes were described in the 1990s (61, 158). The basic tenet of evidence-based medicine is to de-emphasize unsystematic clinical experience and place greater emphasis on evidence from clinical research, especially randomized controlled trials. This approach requires new skills, such as efficient literature searching and an understanding of the types and quality of evidence in the clinical literature (90). Although the formal terminology of evidence-based medicine is relatively recent, its concepts are embedded in earlier efforts such as the Canadian Task Force on the Periodic Health Examination (34) and the Guide to Clinical Preventive Services (177).

Building on concepts of evidence-based medicine, formal discourse on the nature and scope of EBPH originated about two decades ago. In 1997, Jenicek defined EBPH as the “…conscientious, explicit, and judicious use of current best evidence in making decisions about the care of communities and populations in the domain of health protection, disease prevention, health maintenance and improvement (health promotion)” (p. 190) (108). The emphasis was less on evidence from randomized controlled trials, because such trials are less feasible in many public health settings and for many public health problems. EBPH had long been taught, widely and variously, in schools of public health as an implicit step in planning programs and policies; in 1999, scholars and practitioners in Australia (73) and the United States (28) elaborated the concept further. Glasziou and colleagues posed a series of questions to enhance the uptake of EBPH (e.g., “Does this intervention help alleviate this problem?”) and identified 14 sources of high-quality evidence (73). Brownson and colleagues described a multistage process by which practitioners can take a more evidence-based approach to decision making (20, 28). Rychetnik and colleagues summarized many key concepts in a glossary for EBPH (157). Across this body of literature, there is consensus that evidence-based decision making requires not only scientific evidence but also consideration of values, resources, and context (20, 141, 157, 159).

It is important to maintain both a practitioner-oriented and a stakeholder-oriented focus in concepts of EBPH. The concise definition proposed by Kohatsu places stronger emphasis on participatory decision making: “evidence-based public health is the process of integrating science-based interventions with community preferences to improve the health of populations” (p. 419) (116). Particularly in Canada and Australia, the term “evidence-informed decision making” is commonly used (5, 187), in part to emphasize that public health decisions are based not only on research but also, necessarily, on political and organizational factors (179). In a similar vein, Green has argued that we need a focus not only on evidence-based practice but also on practice-based evidence (80, 81). In the Community Guide, an estimated 54% of the studies reviewed were practice-based, defined mainly by whether participants were allocated to intervention and comparison conditions in their natural settings; most of these practice-based studies took place in community settings (178). To achieve a stronger practice orientation, we need, in addition to more consistent program evaluation, research that better responds to practitioners’ needs and circumstances (e.g., practice-based research networks) (81), funding mechanisms for evaluating natural experiments (26), and reliance on so-called “tacit knowledge” or “colloquial evidence” (pragmatic information based on direct experience and action in practice) (117, 164). Among practitioners, the general concepts and importance of EBPH are well accepted; there is less clarity on the definition of evidence, how to find it, how to use it (7), and how to weigh differences among types or sources of evidence, recognizing that decisions should rest not only on the strength of evidence but also on its weight (84). These observations highlight the need for clear criteria on when evidence is sufficient to catalyze action, as well as for capacity-building activities aimed both at those sponsoring an intervention and at the target organizations and populations.

The Need to Understand When Evidence is Sufficient for Action

An ongoing challenge for public health practitioners is determining when scientific evidence is sufficient for action and, when it is sufficient for some settings, problems, or populations, whether it is sufficient for the ones at hand. Many of the key considerations are discussed in detail elsewhere (20, 65, 79). Advances in public health research are generally incremental and single studies are not definitive, so decisions about intervening often must be made as a body of literature accumulates. When evaluating a body of literature and determining a course of action, an excellent starting point for identifying EBIs is a systematic review (e.g., the Community Guide, Cochrane reviews). Every public health team should have a staff member with the skills to evaluate the quality and quantity of evidence and to translate this assessment into options for intervention suited to the particular problem, setting, population, and circumstances at hand.

The Key Role of EBPH in Accreditation and Certification Efforts

A national voluntary accreditation program for public health agencies in the United States, established in 2007 through the Public Health Accreditation Board (PHAB), has direct and indirect effects on EBPH (14). The accreditation process intersects with EBPH on at least three levels. First, the prerequisites for accreditation (a community health assessment, a community health improvement plan, and an agency strategic plan) are key elements of EBPH (20). Second, the process is based on the assertion that if a public health agency meets certain standards and measures, its quality and performance (EBPH) will be enhanced. Third, domain 10 of the PHAB process is “Contribute to and apply the evidence base of public health.” Meeting the standards and measures under domain 10 involves using EBIs from sources such as the Community Guide, having access to research expertise, and disseminating the data and implications of research to appropriate audiences. Similarly, certification examinations for practitioners, such as those for Certified Health Education Specialists and for public health practitioners, include built-in questions on EBIs. In addition, the rapid growth in the number of schools and programs of public health puts pressure on them to hire faculty without experience in public health, leading to calls for encouraging, if not requiring, faculty to complete periodic rotations in practice or policy settings (33, 82, 83).

Understanding the Disconnect between Evidence Generators and Evidence Users

For public health practitioners to apply the latest scientific evidence, they need to be connected along the entire research production-to-application pipeline, not just at the end of it (7, 81). Research-based evidence serves many public health functions, including assuring the public and policy makers of the scientific grounding of advice, selecting EBIs, assessing needs, evaluating programs, and writing grants (102). Several factors are likely to affect practitioners’ use of research evidence and practice-based research, including its perceived importance, the accessibility of the latest research, and the methods available for obtaining the latest evidence and challenging its applicability to their own setting and population. Although multiple studies show that public health practitioners value evidence-based decision making, their access to the latest research is sometimes limited. For example, Harris and colleagues found that only 46% of state public health practitioners use journals in their day-to-day work and that lack of access is a major barrier to journal use (95). Journal access is a particular barrier for those without university library privileges. Open access publishing and online summaries of research reviews are obvious solutions to this limitation, and more journals, including the Annual Review of Public Health, are offering or moving toward open access.

Perhaps the biggest challenge lies in the disconnect between how researchers disseminate their findings and how practitioners learn about the latest evidence (Table 1). Academic journals and conferences are by far the most common methods by which researchers disseminate their research (18, 136), yet among local and state public health practitioners in the United States, seminars and workshops are the most frequently selected methods for learning about research (64, 102). In qualitative research in Ontario, Dobbins and colleagues found that public health decision makers value systematic reviews, short summaries of research, and clear statements of implications for practice (51).

Table 1.

Preferred Methods for Disseminating or Learning about the Latest Research-based Evidence, United States

| Method | Researchers, %ᵃ (rank)ᵇ | Local practitioners (n=147), %ᵃ (rank)ᶜ | Local practitioners (n=849), %ᵃ (rank)ᵈ | State practitioners (n=596), %ᵃ (rank)ᵉ |
|---|---|---|---|---|
| Academic journals | 100 (1) | 35 (3) | 33 (4) | 50 (2) |
| Academic conferences | 92.5 (2) | 24 (5) | 22 (5) | 17.5 (6) |
| Reports to funders | 68 (3) | -- | -- | -- |
| Press releases | 62 (4) | -- | 12.5 (7) | -- |
| Seminars or workshops | 61 (5) | 50 (1) | 53 (1) | 59 (1) |
| Face-to-face meetings with stakeholders | 53 (6) | 15 (7) | 11 (8) | 15 (7) |
| Media interviews | 51 (7) | -- | 1 (9) | -- |
| Policy briefs | 26 (8) | 24 (5) | 17 (6) | 30 (4) |
| Email alerts | 22 (9) | 46 (2) | 34 (3) | 40 (3) |
| Professional associations | -- | 30 (4) | 48 (2) | 24.5 (5) |

ᵃ Percentage of respondents who ranked the method among their top three choices.

ᶜ Based on a study of US local public health department employees (n=147) (102).

ᵈ Based on a study of US local public health department employees (n=849) (64).

ᵉ Based on a study of US state public health department employees (n=596) (102).

WHY CAPACITY BUILDING MATTERS

Although capacity building is recognized as a core activity for furthering EBPH (105), it is also multifaceted and often difficult to define (74, 119); for example, more than 80 distinct characteristics of capacity building have been identified (165). Capacity building is often described more precisely in the business and management literature than in the health literature (74). Across diverse disciplines, capacity building involves intentional, coordinated, and mission-driven efforts aimed at strengthening the activities, management, and governance of agencies to improve their performance and impact (43, 74). In public health, capacity building can be broad, crossing programmatic (or organizational) silos, or it can be specific to a particular topic area such as cancer prevention (137), nutrition (171), maternal and child health (46), or HIV prevention (48), or to the tasks of professional specializations as defined by certification or licensing requirements (149).

Capacity for Evidence-based Public Health

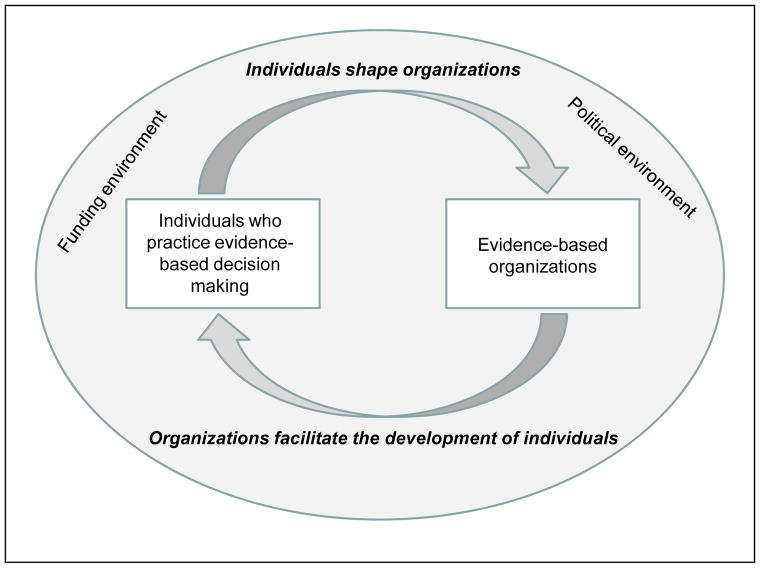

Capacity for EBPH is needed among individuals, work units, and whole organizations (113). These levels should benefit from reciprocal relationships: individuals shape organizations, and organizations support the development of individuals (Figure 1) (141). Evidence-based decision making is achieved both by building the skills and competencies of individuals (e.g., the capacity to carry out a program evaluation) (20, 25, 129) and by taking action at multiple levels of organizations (e.g., achieving a climate and culture that support innovation, recording and providing feedback on performance, making rewards for performance public). Capacity is a necessary but not sufficient prerequisite for improving population health; sustained change in public health is driven by many additional factors, including the selection of EBIs, the policy and political environments, funding, and public support for improvements in population health (126, 168). Recent data from US state health departments suggest that individual-level capacity may be easier to change than organizational-level capacity (19).

Figure 1.

The inter-relationships between individuals and organizations in supporting evidence-based decision making; adapted with permission from Muir Gray (141).

Theory to Guide Capacity Building

Evidence from a variety of fields, including public health, has shown that interventions grounded in health behavior theories are more effective than those lacking a basis in theory, because a theory-based model can guide the search for evidence on interventions that change the intermediate variables (such as behavior) leading to the health outcome (69, 80, 139). A theory is a set of interrelated concepts, definitions, and propositions that present a systematic view of events by specifying relations among variables in order to explain and predict events (70) and to identify potential interventions for which evidence can be sought (87).

There are few reviews of theories specific to capacity building among public health practitioners. In perhaps the most exhaustive summary, Leeman and colleagues used an iterative process to review 24 capacity-building theories for their salient variations (i.e., how complexity and uncertainty influence the uptake of EBIs) (120). Several practice contexts are particularly important across these theories. First, the decision-making structures of the practice setting (hierarchy, climate, and culture) influence EBI adoption. Second, an organization’s capacity to innovate is crucial to EBI uptake and is related to strong leadership, a learning environment, and a track record of innovation. These characteristics help inform the “how” of capacity building, described later in this review.

Lessons from Community-Level Efforts

While the focus of this review is primarily on organizational (agency)-level settings, a considerable literature exists on capacity building in community settings. These articles have covered numerous aspects, including the core domains for defining community capacity (e.g., participation and leadership, social capital, community values) (75, 86, 165); methods of measuring community capacity (122); participatory evaluation in community settings (32, 35); and coalition building as a means to enhance community capacity (77). Several elements and challenges from these community-based studies inform our review of capacity in public health practice, in particular: 1) capacity building is informed by broader concepts from community development; 2) because there is little agreement on the core concepts underlying community capacity building, measurement is lacking; and 3) building and cultivating leadership is one of the most important aspects of capacity.

Barriers to Capacity Building

The gap between research and practice underscores the need to understand the barriers to the uptake of EBIs (120). Several studies have reported practitioners’ personal and institutional barriers to using EBIs. Lack of time, inadequate funding, inability to analyze and interpret evidence, and absence of cultural and managerial support are among the most commonly cited barriers (50, 52, 55, 104, 115, 133). In a national survey of public health practitioners in the United States, absence of incentives within the organization was the largest barrier to evidence-based decision making (104); this includes the inevitable disincentive of the time required to locate and study evidence sources, which delays the launch of programs or services. Other studies have found a strong correlation between perceived institutional priority for, and expected documentation of, evidence-based practices and the actual use of research to inform program adoption and implementation (24, 50). It is therefore important to recognize that the uptake of EBIs is unlikely to succeed in an environment that does not explicitly support innovation or that protects the status quo (163). At the individual level, US practitioners who lacked skills to develop EBIs were more likely to have lower levels of education, suggesting that some personal barriers are modifiable through training (104). To overcome barriers, capacity-building approaches need to involve the target population (practitioners) in developing training and evidence-based approaches and to take into account numerous contextual variables (e.g., resources, incentives, values) (71, 120).

Complex, Multi-Level Challenges

Systems thinking is needed to address our most vexing public health issues (49, 80, 166). The need for systems approaches is grounded in the knowledge that public health problems (e.g., violence, mental illness, substance abuse, infectious and chronic diseases) have complex “upstream” causes that are multilevel, interrelated, and closely linked with social determinants (a group of highly interrelated social and economic factors that create inequities in income, education, housing, and employment). Solutions are often policy dependent, since policies have the largest impact on population health outcomes (62). However, strict adherence to a hierarchy of study designs may reinforce an “inverse evidence law,” whereby the interventions most likely to influence whole populations (e.g., policy or systems change) are least valued in an evidence hierarchy that emphasizes randomized designs (78, 84, 110, 142, 143).

New skills are often needed to identify and implement EBIs that are multilevel, policy-oriented, and take into account a complex set of system-level factors. Studies in cancer control show that public health practitioners are less equipped to address systems-level interventions than client-oriented EBIs (60). The capacities and skills needed among practitioners for implementing complex interventions cut across and go beyond traditional specializations of public health training (e.g., epidemiology, environmental health, health education) to other areas including systems thinking, new methods of communication, and policy analysis.

PROMISING APPROACHES FOR BUILDING CAPACITY

Based on the current literature, we describe the core components of capacity-building efforts and how these elements can be operationalized.

The “What” of Capacity Building

There are many components of capacity-building efforts. One set of targets involves broader, macro-level determinants (23). Many of these macro-level determinants of performance are less modifiable, are closely connected to policy or governance, and may take years to change (e.g., they may be tied to the political party in power or to a funding mechanism for public health agencies).

For this review, we focus on micro-level determinants of capacity. Some have called these “administrative evidence-based practices” (A-EBPs), which are agency (health department)-level and work unit-level structures and activities that are positively associated with performance measures (e.g., achieving core public health functions, carrying out EBIs) (23). Evidence-based interventions are often the objects of capacity-building activities. These are interventions with proven efficacy and effectiveness and, defined broadly, may include programs, practices, processes, policies, and guidelines (154). Many are complex interventions (e.g., multilevel interventions) in which the core intervention components and their relationships involve multiple settings, audiences, and approaches (89, 98).

Across several reviews, core elements (domains) of A-EBPs appear to be particularly important: (1) leadership, (2) organizational climate and culture, (3) partnerships, (4) workforce development, and (5) financial processes (Table 2). These domains, described in detail below, are particularly useful targets for quality improvement efforts because they are modifiable in a shorter time frame than the macro-level determinants (12, 23, 54, 57).

Table 2.

Modifiable administrative evidence-based practice applications

| Capacity-building domain | Core elements | Sample activities to build capacity | Time frame for modificationᵃ |
|---|---|---|---|
| Leadership | | Training (e.g., leadership/management and employee training in EBPH); Peer networking (e.g., leaders and middle managers seek and incorporate employee input) | Short to medium |
| Organizational climate and culture | | Tools (e.g., 360-degree employee performance reviews geared to evidence-based practices; access to high-quality information); Assessment and feedback (e.g., employees perceiving that management supports innovation, direct supervisor expectations for EBPH use, performance evaluation based partially on EBPH principles); Incentives (e.g., recognition for using EBPH principles) | Short |
| Partnerships | | Peer networking (e.g., build and/or enhance partnerships with schools, hospitals, community organizations, social services, private businesses, universities, law enforcement; communities of practice) | Medium |
| Workforce development | | Training (e.g., in-service training in quality improvement or evidence-based decision making, skills-based training in organization and systems change, training aligned with essential services and usual job responsibilities); Technical assistance (e.g., access to and use of knowledge brokersᵇ); Assessment and feedback (e.g., use of process improvement activities including accreditation, performance assessment) | Short |
| Financial processes | | Tools (e.g., outcomes-based contracting); Incentives (e.g., contracts to incentivize use of EBPH principles) | Medium |

ᵃ Time frame definitions: short = less than 1 year; medium = 1–3 years.

ᵇ A knowledge broker is defined as a master's-trained individual available for technical assistance (176).

Leadership is the most common element across all reviews, as it is essential for promoting the adoption of EBPH as a core part of public health practice (13, 29, 189). Recent research shows a number of actions by leaders in public health agencies that may increase the use of scientific information in decision making, including direct supervisor expectations for EBPH use and performance evaluation based partially on EBPH principles (102).

The climate and culture within an agency are associated with employee attitudes, motivation, and performance (2). Reviews from the fields of organizational behavior, implementation science, public administration, and public health indicate that high-performing agencies require an organizational environment conducive to EBPH and to the implementation of innovations (3). Climate refers to employees’ perceptions of the extent to which their use of a specific innovation (e.g., an EBI) is rewarded, supported, and expected within an organization (114). Culture is what makes an organization unique among all others (e.g., productive relationships between leaders and subordinates) (1). Activities to support EBPH in organizations include providing ready access to high-quality information, ensuring that employees perceive that management supports innovation, and having management teams that encourage communication and collaboration.

The domain on partnerships builds in part on an extensive literature in participatory research (35). It also acknowledges that much of the progress in public health requires local action with partners outside the health sector (e.g., schools, social services, urban planners, law enforcement). Activities to build and maintain partnerships include aligning mission and vision statements and co-learning with partners.

A commitment to workforce development is an essential element of capacity building in public health practice (11, 58). One of the core domains for accreditation of public health agencies covers the need for a competent workforce (151). To achieve this, numerous actions are warranted, including training in quality improvement and EBPH, access to ongoing technical assistance (e.g., knowledge brokers (176)), and process improvement activities (e.g., accreditation) that build the workforce.

Finally, financial processes are critical for progress in public health. When public health agencies spend more per capita, measurable improvements are observed, particularly in lower-resource communities (134). Yet in the current funding environment, public health is often a zero-sum (or shrinking-sum) game: a loss of funding results in a loss of benefit to the population (183). Policy interventions are often useful in a limited-resource environment because they can have a significant impact without high cost. Processes in the financial domain may include reliance on diverse funding sources or outcomes-based contracting.

Several factors at both the individual and organizational levels appear to influence the use of A-EBPs by local health departments. Among the five A-EBP domains, local health departments in the United States generally scored lowest for organizational climate and culture (domain mean = 50%) and highest for partnerships (domain mean = 77%) (29). Two national studies have shown that A-EBPs are far less likely to be used by local health departments with jurisdictions of fewer than 25,000 persons (3 to 4 times less likely than health departments with jurisdictions of 500,000 persons or more) (29, 59). This highlights the challenges encountered by rural health departments, which often face a double disparity (i.e., higher rates of risk factors coupled with limited capacity) (96).

The “How” of Capacity Building

More challenging, and less grounded in the scientific literature, than the “what” of capacity building is the “how” (119, 120, 147). In determining the optimal approaches for capacity building, it is important to understand the “push–pull” process: the potential adopter of an EBI must be receptive to a wide array of choices (pull), and at the same time a systematic effort must be made to help the adopter implement the EBI (push) (45, 86, 119, 144). The mismatch between push and pull is illustrated in Table 1. Too often, capacity-building efforts have been built around pushing out research-based evidence without accounting for the pull of practitioners, policy makers, or community members, or for key contextual variables (e.g., resources, needs, culture, capacity) (79, 81).

As communities ask universities and agencies for more help with their public health problem-solving efforts, the “pull” of stakeholders seeking EBIs will compete to some extent with the “push” of universities or funders promoting the particular EBIs they deem most appropriate (86). This tension was evident in the early period of the AIDS epidemic, when the pull of activists was far ahead of the push of researchers and government agencies (66). Reconciling these conflicting perceptions of needs and appropriate solutions can become a source of training and experience for the public health agency or university providing technical assistance to community groups, thereby strengthening its capacity to meet other community groups’ needs more effectively with contextually appropriate EBIs. This benefit is greater still if the partnership includes evaluation of the interventions to produce practice-based evidence.

Building on several reviews (23, 44, 48, 118), particularly those from Leeman and colleagues (119, 120), we describe six approaches that show evidence of effectiveness in building capacity for EBPH, particularly in supporting the adoption and implementation of EBIs (119) (see examples in Table 2). Some scholars label these approaches broadly as knowledge translation strategies (118); others focus on aspects of the EBPH process, such as “reinvention,” “adaptation,” and “integration” (87).

Training involves organized education or skill-building sessions for a group of practitioners (e.g., in-service training). On the “push” side, the largest number of studies has evaluated the impact of various training programs for EBPH (Table 3) (52, 119, 168). Many of these programs show evidence of effectiveness (e.g., increased capacity, improved skills, development of new partnerships). However, many of the evaluations of these training programs are post-test only and lack comparison groups. Training on EBPH for public health professionals should employ principles of adult learning (e.g., respecting and building upon previous experience, actively involving the audience in learning) (31). The reach of these training programs can be increased by employing a train-the-trainer approach (185).

Tools are media or technology resources for use in planning, implementing, and evaluating EBPH-related activities (106). For example, the Public Health Foundation has assembled a series of online tools for improving performance (152), and scholars in public health services and systems research have developed an online tool for assessing agency progress in achieving A-EBPs (153).

Technical assistance is the provision of interactive, individualized education and skill building, often aimed at solving a specific problem. For example, knowledge brokers (generally master's-level individuals providing one-on-one technical assistance) show evidence of effectiveness for organizations that perceive their setting to place little value on EBPH (1).

Assessment and feedback involves providing data-based feedback on EBPH-related performance (e.g., evaluation of performance based on EBPH use (102)).

On the “pull” side, peer networking involves bringing practitioners together to learn from each other via in-person or distance methods. Networking is sometimes achieved through communities of practice that support EBPH, which show promise in promoting the use of analytic tools (10).

Incentives are financial compensation or in-kind resources offered to encourage progress or build capacity in EBPH. For example, in the largest local public health agency in Canada, leaders used criteria-based resource allocation to shift funds from lower- to higher-priority areas (76).

Table 3.

Summary of selected empirical studies on capacity building for evidence-based (or evidence-informed) public health

| First author/year | Location | Setting | Capacity-building approach | Type of evaluation | Findings |
|---|---|---|---|---|---|
| Ramos/2002 (155) | US-Mexico border | CBOs serving Hispanics | Cooperative training approach to build skills in HIV/AIDS prevention; 3-part train-the-trainer approach | Quantitative process & outcome evaluation with data collected at 3 time points (program staff in 42 agencies) | The training program increased the infrastructure capacity & program development in CBOs; collaboration among agencies was increased |
| MacLean/2003 (131) | Nova Scotia, Canada | Provincial, municipal & CBOs engaged in health, education & recreation | Partnership (multilevel partnerships) & organizational development (technical support, action research, community activation) for heart health promotion | Mixed-method with pre/post 5-year follow-up (20 organizations) & 5 qualitative instruments | New partnerships were developed; 18 community initiatives were implemented; organizational changes documented, including policy changes, funding reallocations & enhanced knowledge & practices |
| Barron/2007 (9) | Allegheny County, PA, USA | Local public health agency | Action planning after use of the Local Public Health System Performance Assessment Instrument | Case study (2 years pre/post) | The assessment process & action planning led to organizational change in the ability to carry out the 10 essential services; the assessment tool fostered cross-program communication |
| Dreisinger/2008 (55) | United States | State & local public health agencies | 2.5- or 3.5-day in-person training course (9 modules) in evidence-based public health (EBPH) | Quantitative follow-up survey (n=107) | 90% of participants used course information to inform decision making; improved abilities to communicate with coworkers & read reports |
| Horton/2008 (100) | Yukon, Canada | Health education workers | Capacity-building instruction to support first aid, food safety & health promotion | Qualitative follow-up individual & focus group interviews (n=21) | Themes showed ways in which health educators build on strengths; focusing on issues of immediate importance to the community; key individual- & community-level capacity-building outcomes |
| Baker/2009 (6) | United States | State & local public health agencies | 2.5- or 3.5-day in-person training course (9 modules) in EBPH | Qualitative follow-up (open-ended) interviews | Course beneficial for those without a public health background; provides a common knowledge base for staff; support from leaders is crucial for furthering EBPH |
| Lloyd/2009 (127) | Australia (New South Wales) | Senior health promotion staff | 2-day train-the-trainer course focused on evidence-based practice (EBP) content & skill | Quantitative follow-up survey (n=50) | Significant improvements in EBP knowledge & skills; incorporated knowledge into practice; key barriers identified (resources, staff movement, organizational change, insufficient |
| Peirson/2012 (148) | Ontario, Canada | Local public health unit | Implementation of strategic plan that included a significant focus on EIDM | Qualitative case study including interviews & focus groups (n=70 respondents) & review of 137 documents | A series of critical organizational-level success factors covered 7 domains: leadership, organizational structure, human resources, organizational culture, knowledge management, communication & change management |
| Gibbert/2013 (68) | United States & Europe | National, state & local public health agencies; NGOs | 3.5- to 4.5-day in-person training course (9 modules) in EBPH | Mixed-method with 2 parts: course pre/post (n=393) & follow-up (n=358) | Significant pre/post improvement in knowledge, skill, ability; high levels of use of EBPH course materials; use of materials differed by location & agency type; qualitative responses provided multiple options for course improvement |
| Pettman/2013 (150) | Australia | State & local public health agencies; NGOs | Short course on evidence-informed public health (EIPH) (5 domains) | Mixed-method: course pre (n=45), post (n=59), 6-month follow-up (n=38) | Course objectives continually met & exceeded; improvements across several domains of EIPH such as asking answerable questions, literature searching, critical appraisal |
| Yost/2014 (186) | Ontario, Canada | Health professionals involved in decision making | 5-day workshop on evidence-informed decision making (EIDM) knowledge, skills & behaviors | Mixed-method with 2 parts: longitudinal survey (n=40 at baseline) & qualitative interviews (n=8) | Significant pre/post increase in knowledge & skills; no significant improvement in EIDM behaviors; interviews identified perceived barriers & facilitators in participating in continuing education |
| Jacobs/2014 (105) | Four US states (Michigan, North Carolina, Ohio, Washington) | Local public health agencies | 2.5-day train-the-trainer course (9 modules) in EBPH | Quantitative, quasi-experimental (pre/post) survey (n=82 participants; n=214 controls) | Course participants reported greater increases in the availability & decreases in the skill gaps than controls; course benefits included becoming a better leader & making scientifically informed decisions |
| Mainor/2014 (132) | United States (43 states & the District of Columbia) | Mainly state public health program managers | 5-day training conducted over a 7-year period in obesity prevention | Quantitative course pre/post (n=303) & 6-month follow-up (n=229) | High course ratings for quality & relevance; at least 70% reported self-confidence in performing competencies; majority of participants at follow-up reported completing at least 1 activity from action planning |
| Schuchter/2015 (162) | United States | National & local public health agencies; NGOs; universities | 4 different trainings, ranging from 1.5 to 11.5 days, in Health Impact Assessment | Qualitative follow-up (open-ended) interviews (n=48) | Training objectives were met; case studies were beneficial; new collaborations developed; trainees disseminated what they had learned |
| Hardy/2015 (94) | Pueblo City-County, CO | Local public health agency | 3-day EBPH training; formalized language in personnel policies and strategic plan | Mixed-method with 2 parts: longitudinal survey (n=74 at baseline) & qualitative interviews (n=11) | At post-test, attitudes toward EBPH were improved, more resources were allocated & greater access to EBPH information was achieved; skills were improved in developing EBIs and communicating with policy makers |
| Jaskiewicz/2015 (107) | Chicago, IL | CBOs serving minority communities | 1-day workshop, technical assistance & 3 webinars to build capacity in healthy food access | Qualitative interviews with project staff | Training & materials provided by the project increased staff confidence in working with food stores; individualized project support was particularly useful; leadership support & staff time were limitations to project success |
| Yarber/2015 (185) | Four US states (Indiana, Colorado, Nebraska, Kansas) | State & local public health agencies | 3.5-day train-the-trainer course (9 modules) in EBPH | Quantitative follow-up survey (n=144) | 78% of respondents indicated that the course allowed them to make more scientifically informed decisions; utilization of materials was high whether the course was taught by original trainers or state-based trainers |
| Sauaia/2016 (160) | Colorado | 6 area health agencies (CBOs) | 2-day training featuring local data, sources of EBIs, hands-on activities | Quantitative short-term (n=94) & follow-up surveys (n=26) | Significant improvement in knowledge in core content areas & accomplishment of self-proposed organizational goals, grant applications/awards & several community-academic partnerships |
| Yost/2016 (188) | Canada & other parts of the world | Multiple sectors at multiple levels of government | Webinar series to promote use of a registry of EIDM methods & tools | Quantitative follow-up survey (n=434) & Google Analytics | 22 webinars reached 2,048 people; webinars were a valuable strategy for enhancing EIDM by increasing awareness of the registry & intentions to use the tools |
| Morshed/2017 (140) | Nebraska | State & local public health agencies | 6 online modules featuring scenario-based learning | Quantitative, quasi-experimental (pre/post) survey (n=123 participants; n=201 controls) | Significant improvement in skills among participants without advanced degrees; no improvement for participants with advanced degrees |

CHALLENGES AND OPPORTUNITIES FOR RESEARCH AND PRACTICE

This section briefly describes a set of challenges in public health that take into account the issues raised in this review, current priorities in public health, the body of available evidence, how the evidence is applied across various settings, and broader macro-level changes (30, 58). While these examples are not exhaustive, they illustrate the wide array of capacity-related issues that public health practitioners face now and will face in the coming years, and they point to areas for practice-based research.

Recognize that Leadership Matters

As noted previously in this review, leadership is essential to promote adoption of EBPH principles as a core part of public health practice. This includes an expectation that decisions will: 1) be made on the basis of the best science (use of EBIs), 2) fit the needs of the target population, 3) be realistic given the resources available, and 4) include plans for evaluation early in the life cycle of a program or policy. In some cases additional funding may be required, but in many circumstances a lack of will to change, rather than a lack of funds, is the major impediment. Recent practice-based research shows at least three actions by leaders in public health agencies that may increase the use of scientific information in decision making: participatory decision making, accessing and sharing information widely, and encouraging the use of EBPH (36, 94, 102).

Measure the Important Variables

A public health adage is “what gets measured, gets done” (175). Successful progress in capacity building will require the development of practical measures of outcomes that are both reliable and valid, yet brief enough for use by busy public health practitioners. One of the greatest needs among public health practitioners involves how to better assess organizational capacity (60). Most existing measures focus on ultimate outcomes, such as change in health status. Previous reviews have shown that most existing measures of capacity have not been adequately tested for reliability and predictive validity (37, 56, 181). There are, however, examples of practical tools for tracking organizational capacity in the United States (156) and in developing regions (16). Using these tools, it is feasible for a mid-sized local health department to measure A-EBPs and take action based on this assessment (94).

Agree on Capacity Standards

The National Academy of Medicine (formerly the Institute of Medicine) has called for a minimum set of services that no health department should be without (42). These cover both foundational capabilities (e.g., policy development capacity, quality improvement) and basic programs (mainly categorical programs, such as maternal and child health promotion, communicable disease control, and chronic disease prevention). The A-EBPs fit most closely with the foundational capabilities and provide baseline data and a reliable method for measuring administrative and management capacity.

Embrace Policy and Complexity

Complex, multilevel, policy-focused and policy-supported interventions are often the most effective in improving population health indicators (39, 62). To advance evidence-based policy, researchers need to use the best available evidence, and both researchers and practitioners need to expand their role in communicating evidence packaged appropriately for various policy audiences (e.g., policy makers and advocacy groups). New skills are needed to embrace complexity more fully, such as systems thinking for practice and systems methods for research (e.g., agent-based modeling, social network analysis). These tools allow us to describe more effectively the dynamic processes at work, map social and organizational relationships, identify feedback mechanisms, and forecast future system behavior (130), especially as applied to particular populations, circumstances, and participating parties (80).

Turn Data into Policy-Relevant Stories

The former Speaker of the US House of Representatives, Thomas (Tip) O’Neill, made famous the phrase “All politics is local.” Evidence becomes more relevant to policy makers when it involves a local example (a story), often describing some type of direct impact on their local community, family, or constituents. Research is beginning to present data on contextual issues and on the importance of narrative communication in the form of stories. This line of research rests on the premises that storytelling makes messages personally relevant, that motivation depends on perceived personal susceptibility, and that stories convey practical information. Policy makers cite the impact on “real people” as one of the most important factors in increasing the coverage and relevance of research (167). New skills in this area can build on advice on how to construct an effective policy brief (53, 169).

Prepare for New Threats

Processes in EBPH need to take stock of the maturity of the evidence base, including the availability of EBIs. For well-established public health issues with a well-established evidence base (e.g., tobacco, immunizations), the challenge is often one of selecting and implementing EBIs. In contrast, for an emerging infectious disease that has newly appeared in a population (e.g., SARS, Zika), a set of EBIs may not be available. These situations call for other EBPH-related processes, such as strengthening surveillance efforts or building capacity to support the physical infrastructure (e.g., laboratories, research facilities) and personnel for outbreak investigation and medical follow-up. An example of emerging conflicts in applying an established evidence base is the rise of e-cigarettes as a competing method of reducing tobacco smoking. The science base of EBIs for population tobacco control is well established, yet these EBIs have not been entirely successful in promoting smoking cessation or in preventing youth from taking up a nicotine habit. E-cigarettes are being promoted as a solution to the first of these problems (smoking cessation) but might be introducing more young users to nicotine addiction.

Fix the Broken Connections

The public health research enterprise does a great deal of dissemination, but not necessarily effective dissemination (22). As this review illustrates, researchers are often more successful at connecting with other researchers than at linking with the most important receptor sites for their scholarship (practitioners and policy makers). There are vast opportunities for dissemination research to better understand how to improve research-practice connections. Perhaps more importantly, we need new skills and approaches for balancing the push and pull between research and practice. This may involve working with new disciplines (e.g., communications or marketing experts) and crossing professional boundaries (e.g., researchers becoming more involved with advocacy or professional groups). Working in public health practice may improve dissemination skills among researchers. For example, in a national study from the United States, public health researchers with practice or policy experience were 4.4 times more likely to report good or excellent skills in dissemination (173). Practice-based evidence, including case examples of action outside the health sector (80, 81), will help in developing practical approaches for multisectoral action to bridge the research-practice divide (8, 146, 166). Cross-cutting approaches that address health equity may be effective in breaking down disease- and risk factor-specific silos (47, 182).

Find and Fill the Biggest Skill Gaps

A summary of four US surveys of state and local public health practitioners identified three skills with the largest gaps between importance and availability: economic evaluation, communicating research to policy makers, and adapting interventions from one setting or population to another (103). The shortage of economic data has also been observed in England (115). The deficits in capacity are often larger in developing countries and in smaller (often rural) health departments (29, 57, 170). To address these gaps, agencies need to leverage existing resources more effectively and build community partnerships to share resources (38).

Reduce the Imbalance between Internal Versus External Validity

Those who develop and disseminate public health guidelines have placed a premium on internal validity, too often giving short shrift to external validity (84). For EBPH practitioners, the generalizability of an EBI from one population and setting to another, which is the core concept of external validity, is an essential ingredient (78). Issues of external validity often relate to the context for an intervention (124): What factors need to be taken into account when an internally valid program or policy is implemented in a different setting or with a different population subgroup? How does one balance fidelity against adaptation or reinvention? If the adaptation process changes the original EBI to such an extent that the original efficacy data may no longer apply, then the program may be viewed as a new intervention under very different contextual conditions. Green has recommended that the implementation of evidence-based approaches (“best practices”) involve careful consideration of the “best processes” needed when generalizing evidence to other populations, places, and times (e.g., what makes evidence useful and applicable to settings, populations, or circumstances other than those in which the controlled trial evidence was generated) (79).

SUMMARY AND CONCLUSION

Successful application of EBPH principles in public health settings is a combination of science, art, and timing. The science is built on epidemiologic, behavioral, and policy research showing the size and scope of a public health problem and available EBIs. The art of decision making often involves knowing what information is important to a particular stakeholder at the right time (often when a policy “window” is open (112)).

With an abundance of public health research showing the need for action, why is the translation of science into practice and policy so slow? The ever-expanding knowledge from dissemination and implementation science is beginning to provide lessons to speed up the translation of science to application (21, 87). We need new approaches for disseminating research, an increased emphasis on practice-based evidence, and a greater focus on external validity. This will help us to understand whether EBPH approaches work, for whom, why, and at what cost.

Across the diverse literature reviewed in this article, it is apparent that a “one size fits all” approach to improving public health capacity is unlikely to be effective. Efforts to build capacity in public health practice have probably focused too much on simply whether EBIs are or are not being used, which puts the entire onus on practitioners, who often find that the published evidence does not fit their population or circumstances. This approach is easier to measure, and it makes it easier to blame the receivers of evidence rather than the research sources, reviewers, and disseminators, but it overlooks the context and complex processes of decision making that are central to EBPH. It is likely that modest investments in the training and capacity-building activities we have outlined will lead to greater use of EBIs, more effective public health practice, and, ultimately, improvements in population health and reductions in health inequality.

SUMMARY POINTS

Sufficient capacity in the form of resources, structures, and workforce is needed to further the production and use of evidence in public health settings.

The uptake of evidence-based public health can be accelerated by a stronger focus on practice-based evidence, skills in evaluating the applicability, quality and quantity of evidence, a focus on public health accreditation, and addressing the disconnect between evidence generators and evidence users.

Capacity for EBPH involves a reciprocal relationship between individuals and organizations—individuals shape organizations and organizations support the development of individuals.

A set of new skills is often needed to identify and implement evidence-based interventions that are multilevel, policy-oriented, and take into account a complex group of system-level factors.

The “what” of capacity building involves a core set of attributes across five domains: 1) leadership, 2) organizational climate and culture, 3) partnerships, 4) workforce development, and 5) financial processes.

The “how” of capacity building must be receptive to a wide array of choices (pull) from practitioners and not only the “push” of researchers, thus involving a core set of activities: 1) training, 2) use of tools, 3) technical assistance, 4) assessment and feedback, 5) peer networking, and 6) incentives.

Going forward, capacity building needs to focus on several core issues: leadership, measurement, capacity standards, the nexus of policy and complexity, data-based stories for policy change, readiness for new public health threats, effective dissemination, skill gaps, and external validity.

Acknowledgments

The authors are grateful for the helpful comments on the draft manuscript from Rebecca Armstrong, Carol Brownson, and Shiriki Kumanyika.

Parts of this review were adapted with permission from chapter 2 in Brownson RC, Baker EA, Deshpande AD, Gillespie KN. Evidence-Based Public Health. 3rd Edition. New York: Oxford University Press; 2018.

FUNDING

This work was supported in part by the National Association of Chronic Disease Directors agreement number 1612017 and grant number R01CA160327 from the National Cancer Institute at the National Institutes of Health.

Terms and Definitions

- Administrative evidence-based practices

agency (health department)- and work unit-level structures and activities that are positively associated with performance measures (e.g., achieving core public health functions, carrying out evidence-based interventions)

- Capacity

the availability of resources, structures, and workforce to deliver the “preventive dose” of an evidence-based intervention

- Capacity building

activities (e.g., training, technical assistance) that build durable resources and enable the recipient setting or community to deliver an evidence-based intervention

- Dissemination

an active approach of spreading evidence-based interventions to the target audience via determined channels using planned strategies

- Evidence-based intervention

public health practices and policies that have been shown to be effective based on evaluation research. Often, lists of evidence-based interventions are identified through systematic reviews, but sometimes need adaptation to unique or varied settings, populations or circumstances

- Evidence-based public health (or evidence-based decision making)

defined by several key characteristics: making decisions based on evidence-based interventions; using data and information systems systematically; applying program planning frameworks; engaging the community in assessment and decision making; conducting sound evaluation; and disseminating what is learned to key stakeholders and decision makers

- Evidence-informed decision making

the process of distilling and disseminating the best available evidence from research, context and experience (political, organizational), and using that evidence to inform and improve public health practice and policy. The term “evidence-informed public health” is often used in Australia and Canada

- External validity

the degree to which findings from a study or set of studies are generalizable to and relevant for populations, settings, and times other than those in which the original studies were conducted

- Practice-based evidence

the process of deriving or determining the effectiveness and implementation of evidence-based interventions from evaluation in “real world” practice experience rather than or in addition to highly controlled research studies

Footnotes

DISCLOSURE STATEMENT

The authors are not aware of any affiliations, memberships, funding, or financial holdings that might be perceived as affecting the objectivity of this review.

LITERATURE CITED

- 1.Aarons G, Moullin J, Ehrhart M. The role of organizational processes in dissemination and implementation research. In: Brownson R, Colditz G, Proctor E, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2018. in press. [Google Scholar]

- 2.Aarons GA, Ehrhart MG, Farahnak LR, Sklar M. Aligning leadership across systems and organizations to develop a strategic climate for evidence-based practice implementation. Annu Rev Public Health. 2014;35:255–74. doi: 10.1146/annurev-publhealth-032013-182447. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 3.Allen P, Brownson R, Duggan K, Stamatakis K, Erwin P. The makings of an evidence-based local health department: identifying administrative and management practices. Frontiers in Public Health Services & Systems Research. 2012:1. [Google Scholar]

- 4.Armstrong R, Doyle J, Lamb C, Waters E. Multi-sectoral health promotion and public health: the role of evidence. J Public Health (Oxf) 2006;28:168–72. doi: 10.1093/pubmed/fdl013. [DOI] [PubMed] [Google Scholar]

- 5.Armstrong R, Pettman TL, Waters E. Shifting sands - from descriptions to solutions. Public Health. 2014;128:525–32. doi: 10.1016/j.puhe.2014.03.013. [DOI] [PubMed] [Google Scholar]

- 6.Baker EA, Brownson RC, Dreisinger M, McIntosh LD, Karamehic-Muratovic A. Examining the role of training in evidence-based public health: a qualitative study. Health Promot Pract. 2009;10:342–8. doi: 10.1177/1524839909336649. [DOI] [PubMed] [Google Scholar]

- 7.Barr-Walker J. Evidence-based information needs of public health workers: a systematized review. J Med Libr Assoc. 2017;105:69–79. doi: 10.5195/jmla.2017.109. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Barr V, Pedersen S, Pennock M, Rootman I. Health equity through intersectoral action: An analysis of 18 country case studies. Public Health Agency of Canada and World Health Organization; Ottawa, Ontario: 2008. [Google Scholar]

- 9.Barron G, Glad J, Vukotich C. The use of the National Public Health Performance Standards to evaluate change in capacity to carry out the 10 essential services. J Environ Health. 2007;70:29–31. 63. [PubMed] [Google Scholar]

- 10.Barwick MA, Peters J, Boydell K. Getting to uptake: do communities of practice support the implementation of evidence-based practice? J Can Acad Child Adolesc Psychiatry. 2009;18:16–29.

- 11.Beaglehole R, Dal Poz MR. Public health workforce: challenges and policy issues. Hum Resour Health. 2003;1:4. doi: 10.1186/1478-4491-1-4.

- 12.Beitsch LM, Leep C, Shah G, Brooks RG, Pestronk RM. Quality improvement in local health departments: results of the NACCHO 2008 survey. J Public Health Manag Pract. 2010;16:49–54. doi: 10.1097/PHH.0b013e3181bedd0c.

- 13.Bekemeier B, Grembowski D, Yang Y, Herting JR. Leadership matters: local health department clinician leaders and their relationship to decreasing health disparities. J Public Health Manag Pract. 2012;18:E1–E10. doi: 10.1097/PHH.0b013e318242d4fc.

- 14.Bender K, Halverson PK. Quality improvement and accreditation: what might it look like? J Public Health Manag Pract. 2010;16:79–82. doi: 10.1097/PHH.0b013e3181c2c7b8.

- 15.Bero LA, Grilli R, Grimshaw JM, Harvey E, Oxman AD, Thomson MA. Closing the gap between research and practice: an overview of systematic reviews of interventions to promote the implementation of research findings. The Cochrane Effective Practice and Organization of Care Review Group. BMJ. 1998;317:465–8. doi: 10.1136/bmj.317.7156.465.

- 16.Bishai D, Sherry M, Pereira CC, Chicumbe S, Mbofana F, et al. Development and Usefulness of a District Health Systems Tool for Performance Improvement in Essential Public Health Functions in Botswana and Mozambique. J Public Health Manag Pract. 2016;22:586–96. doi: 10.1097/PHH.0000000000000407.

- 17.Briss PA, Brownson RC, Fielding JE, Zaza S. Developing and using the Guide to Community Preventive Services: lessons learned about evidence-based public health. Annu Rev Public Health. 2004;25:281–302. doi: 10.1146/annurev.publhealth.25.050503.153933.

- 18.Brownson R. Research Translation and Public Health Services & Systems Research. Keeneland Conference: Public Health Services & Systems Research; Lexington, KY. 2013.

- 19.Brownson R, Allen P, Jacob R, deReyter A, Lakshman M, et al. Controlling Chronic Diseases through Evidence-Based Decision Making: A Group-Randomized Trial. Prev Chronic Dis. 2018 (in press). doi: 10.5888/pcd14.170326.

- 20.Brownson R, Baker E, Deshpande A, Gillespie K. Evidence-Based Public Health. New York: Oxford University Press; 2018.

- 21.Brownson R, Colditz G, Proctor E, editors. Dissemination and Implementation Research in Health: Translating Science to Practice. New York: Oxford University Press; 2018 (in press).

- 22.Brownson R, Eyler A, Harris J, Moore J, Tabak R. Getting the Word Out: New Approaches for Disseminating Public Health Science. J Public Health Manag Pract. 2018 (in press). doi: 10.1097/PHH.0000000000000673.

- 23.Brownson RC, Allen P, Duggan K, Stamatakis KA, Erwin PC. Fostering more-effective public health by identifying administrative evidence-based practices: a review of the literature. Am J Prev Med. 2012;43:309–19. doi: 10.1016/j.amepre.2012.06.006.

- 24.Brownson RC, Ballew P, Dieffenderfer B, Haire-Joshu D, Heath GW, et al. Evidence-based interventions to promote physical activity: what contributes to dissemination by state health departments. Am J Prev Med. 2007;33:S66–73; quiz S4–8. doi: 10.1016/j.amepre.2007.03.011.

- 25.Brownson RC, Ballew P, Kittur ND, Elliott MB, Haire-Joshu D, et al. Developing competencies for training practitioners in evidence-based cancer control. J Cancer Educ. 2009;24:186–93. doi: 10.1080/08858190902876395.

- 26.Brownson RC, Diez Roux AV, Swartz K. Commentary: Generating rigorous evidence for public health: the need for new thinking to improve research and practice. Annu Rev Public Health. 2014;35:1–7. doi: 10.1146/annurev-publhealth-112613-011646.

- 27.Brownson RC, Fielding JE, Maylahn CM. Evidence-based public health: A fundamental concept for public health practice. Annu Rev Public Health. 2009;30:175–201. doi: 10.1146/annurev.publhealth.031308.100134.

- 28.Brownson RC, Gurney JG, Land G. Evidence-based decision making in public health. J Public Health Manag Pract. 1999;5:86–97. doi: 10.1097/00124784-199909000-00012.

- 29.Brownson RC, Reis RS, Allen P, Duggan K, Fields R, et al. Understanding administrative evidence-based practices: findings from a survey of local health department leaders. Am J Prev Med. 2014;46:49–57. doi: 10.1016/j.amepre.2013.08.013.

- 30.Brownson RC, Samet JM, Bensyl DM. Applied epidemiology and public health: are we training the future generations appropriately? Ann Epidemiol. 2017;27:77–82. doi: 10.1016/j.annepidem.2016.12.002.

- 31.Bryan RL, Kreuter MW, Brownson RC. Integrating Adult Learning Principles Into Training for Public Health Practice. Health Promot Pract. 2009;10:557–63. doi: 10.1177/1524839907308117.

- 32.Butterfoss FD. Process evaluation for community participation. Annu Rev Public Health. 2006;27:323–40. doi: 10.1146/annurev.publhealth.27.021405.102207.

- 33.Calleson DC, Jordan C, Seifer SD. Community-engaged scholarship: is faculty work in communities a true academic enterprise? Acad Med. 2005;80:317–21. doi: 10.1097/00001888-200504000-00002.

- 34.Canadian Task Force on the Periodic Health Examination. The periodic health examination. Canadian Task Force on the Periodic Health Examination. Can Med Assoc J. 1979;121:1193–254.

- 35.Cargo M, Mercer SL. The value and challenges of participatory research: Strengthening its practice. Annu Rev Public Health. 2008;29:325–50. doi: 10.1146/annurev.publhealth.29.091307.083824.

- 36.Chatterji M, Green LW, Kumanyika S. L.E.A.D.: a framework for evidence gathering and use for the prevention of obesity and other complex public health problems. Health Educ Behav. 2013;41:85–99. doi: 10.1177/1090198113490726.

- 37.Chaudoir SR, Dugan AG, Barr CH. Measuring factors affecting implementation of health innovations: a systematic review of structural, organizational, provider, patient, and innovation level measures. Implement Sci. 2013;8:22. doi: 10.1186/1748-5908-8-22.

- 38.Chen LW, Jacobson J, Roberts S, Palm D. Resource allocation and funding challenges for regional local health departments in Nebraska. J Public Health Manag Pract. 2012;18:141–7. doi: 10.1097/PHH.0b013e3182294fff.

- 39.Chokshi DA, Stine NW. Reconsidering the politics of public health. JAMA. 2013;310:1025–6. doi: 10.1001/jama.2013.110872.

- 40.Ciliska D, Thomas H, Buffett C. An Introduction to Evidence-informed Public Health and a Compendium of Critical Appraisal Tools for Public Health Practice. National Collaborating Centre for Methods and Tools; Hamilton, ON: 2008.

- 41.Cochrane A. Effectiveness and Efficiency: Random Reflections on Health Services. London: Nuffield Provincial Hospital Trust; 1972.

- 42.Committee on Public Health Strategies to Improve Health. For the Public’s Health: Investing in a Healthier Future. Washington, DC: Institute of Medicine of the National Academies; 2012.

- 43.Create the Future. Capacity Building Overview. Milwaukee, WI: 2016.

- 44.Crisp BR, Swerissen H, Duckett SJ. Four approaches to capacity building in health: consequences for measurement and accountability. Health Promot Int. 2000;15:99–107.

- 45.Curry SJ. Organizational interventions to encourage guideline implementation. Chest. 2000;118:40S–6S. doi: 10.1378/chest.118.2_suppl.40s.

- 46.Dawson A, Brodie P, Copeland F, Rumsey M, Homer C. Collaborative approaches towards building midwifery capacity in low income countries: a review of experiences. Midwifery. 2013;30:391–402. doi: 10.1016/j.midw.2013.05.009.

- 47.Dean HD, Fenton KA. Integrating a social determinants of health approach into public health practice: a five-year perspective of actions implemented by CDC’s national center for HIV/AIDS, viral hepatitis, STD, and TB prevention. Public Health Rep. 2013;128(Suppl 3):5–11. doi: 10.1177/00333549131286S302.

- 48.Dean HD, Myles RL, Spears-Jones C, Bishop-Cline A, Fenton KA. A strategic approach to public health workforce development and capacity building. Am J Prev Med. 2014;47:S288–96. doi: 10.1016/j.amepre.2014.07.016.

- 49.Diez Roux AV. Complex systems thinking and current impasses in health disparities research. Am J Public Health. 2011;101:1627–34. doi: 10.2105/AJPH.2011.300149.

- 50.Dobbins M, Cockerill R, Barnsley J, Ciliska D. Factors of the innovation, organization, environment, and individual that predict the influence five systematic reviews had on public health decisions. Int J Technol Assess Health Care. 2001;17:467–78.

- 51.Dobbins M, Jack S, Thomas H, Kothari A. Public health decision-makers’ informational needs and preferences for receiving research evidence. Worldviews Evid Based Nurs. 2007;4:156–63. doi: 10.1111/j.1741-6787.2007.00089.x.

- 52.Dodson EA, Baker EA, Brownson RC. Use of evidence-based interventions in state health departments: a qualitative assessment of barriers and solutions. J Public Health Manag Pract. 2010;16:E9–E15. doi: 10.1097/PHH.0b013e3181d1f1e2.

- 53.Dodson EA, Eyler AA, Chalifour S, Wintrode CG. A review of obesity-themed policy briefs. Am J Prev Med. 2012;43:S143–8. doi: 10.1016/j.amepre.2012.05.021.

- 54.Drabczyk A, Epstein P, Marshall M. A quality improvement initiative to enhance public health workforce capabilities. J Public Health Manag Pract. 2012;18:95–9. doi: 10.1097/PHH.0b013e31823bca32.

- 55.Dreisinger M, Leet TL, Baker EA, Gillespie KN, Haas B, Brownson RC. Improving the public health workforce: evaluation of a training course to enhance evidence-based decision making. J Public Health Manag Pract. 2008;14:138–43. doi: 10.1097/01.PHH.0000311891.73078.50.

- 56.Emmons KM, Weiner B, Fernandez ME, Tu SP. Systems antecedents for dissemination and implementation: a review and analysis of measures. Health Educ Behav. 2011;39:87–105. doi: 10.1177/1090198111409748.

- 57.Erwin PC. The performance of local health departments: a review of the literature. J Public Health Manag Pract. 2008;14:E9–18. doi: 10.1097/01.PHH.0000311903.34067.89.

- 58.Erwin PC, Brownson RC. Macro Trends and the Future of Public Health Practice. Annu Rev Public Health. 2017. doi: 10.1146/annurev-publhealth-031816-044224.

- 59.Erwin PC, Harris JK, Smith C, Leep CJ, Duggan K, Brownson RC. Evidence-Based Public Health Practice Among Program Managers in Local Public Health Departments. J Public Health Manag Pract. 2013. doi: 10.1097/PHH.0000000000000027.

- 60.Escoffery C, Hannon P, Maxwell AE, Vu T, Leeman J, et al. Assessment of training and technical assistance needs of Colorectal Cancer Control Program Grantees in the U.S. BMC Public Health. 2015;15:49. doi: 10.1186/s12889-015-1386-1.

- 61.Evidence-Based Medicine Working Group. Evidence-based medicine. A new approach to teaching the practice of medicine. JAMA. 1992;268:2420–5. doi: 10.1001/jama.1992.03490170092032.

- 62.Fielding JE. Health education 2.0: the next generation of health education practice. Health Educ Behav. 2013;40:513–9. doi: 10.1177/1090198113502356.

- 63.Fielding JE, Briss PA. Promoting evidence-based public health policy: can we have better evidence and more action? Health Aff (Millwood). 2006;25:969–78. doi: 10.1377/hlthaff.25.4.969.

- 64.Fields RP, Stamatakis KA, Duggan K, Brownson RC. Importance of scientific resources among local public health practitioners. Am J Public Health. 2015;105(Suppl 2):S288–94. doi: 10.2105/AJPH.2014.302323.

- 65.Fink A. Evidence-Based Public Health Practice. Thousand Oaks, CA: Sage; 2013.

- 66.France D. How to Survive a Plague: The Inside Story of How Citizens and Science Tamed AIDS. New York, NY: Knopf; 2016.

- 67.Gibbert WS, Keating SM, Jacobs JA, Dodson E, Baker E, et al. Training the Workforce in Evidence-Based Public Health: An Evaluation of Impact Among US and International Practitioners. Prev Chronic Dis. 2013;10:E148. doi: 10.5888/pcd10.130120.

- 68.Gilbert B, Moos MK, Miller CA. State level decision-making for public health: the status of boards of health. J Public Health Policy. 1982 Mar:51–61.

- 69.Glanz K, Bishop DB. The role of behavioral science theory in development and implementation of public health interventions. Annu Rev Public Health. 2010;31:399–418. doi: 10.1146/annurev.publhealth.012809.103604.

- 70.Glanz K, Rimer B, Viswanath K, editors. Health Behavior and Health Education. San Francisco, CA: Jossey-Bass Publishers; 2015.

- 71.Glasgow RE, Marcus AC, Bull SS, Wilson KM. Disseminating effective cancer screening interventions. Cancer. 2004;101:1239–50. doi: 10.1002/cncr.20509.

- 72.Glasgow RE, Vinson C, Chambers D, Khoury MJ, Kaplan RM, Hunter C. National Institutes of Health Approaches to Dissemination and Implementation Science: Current and Future Directions. Am J Public Health. 2012;102:1274–81. doi: 10.2105/AJPH.2012.300755.

- 73.Glasziou P, Longbottom H. Evidence-based public health practice. Aust N Z J Public Health. 1999;23:436–40. doi: 10.1111/j.1467-842x.1999.tb01291.x.