Abstract

Although a universal code for the acoustic features of animal vocal communication calls may not exist, the thorough analysis of the distinctive acoustical features of vocalization categories is important not only to decipher the acoustical code for a specific species but also to understand the evolution of communication signals and the mechanisms used to produce and understand them.

Here, we recorded more than 8,000 examples of almost all the vocalization types of the domesticated zebra finch, Taeniopygia guttata: vocalizations produced to establish contact, to form and maintain pair bonds, to sound an alarm, to communicate distress or to advertise hunger or aggressive intent. We characterized each vocalization type using complete representations that avoided any a priori assumptions on the acoustic code, as well as classical bioacoustics measures that could provide more intuitive interpretations. We then used these representations to rigorously determine the potential information-bearing acoustical features for each vocalization type using both a novel regularized classifier and an unsupervised clustering algorithm. Vocalization categories are discriminated by the shape of their frequency spectrum and by their pitch saliency (noisy to tonal vocalizations) but not particularly by their fundamental frequency. Notably, the spectral shape of zebra finch vocalizations contains peaks or formants that vary systematically across categories and that would be generated by active control of both the vocal organ (source) and the upper vocal tract (filter).

Keywords: vocalization, songbird, acoustic signature, meaning, classification, regularization

Background

Many social animals have evolved complex vocal repertoires not only to facilitate cooperative behaviors, such as pair bonding or predator avoidance, but also to mediate competitive interactions, such as the establishment of social ranks, mate guarding or territorial defense (Seyfarth and Cheney, 2003). For example, many songbirds use elaborate songs (Catchpole and Slater, 1995) as well as other short calls (Marler, 2004) as part of courtship behavior and initial pair formation. This initial pair formation can lead to cooperative nest building, territory defense, reproduction, chick-rearing and the formation of a long-term partnership. The maintenance of such a stable pair bond requires close social contact that can be facilitated by vocal communication. For example, loud contact calls that carry individual information play a key role for songbird partners that attempt to reunite after having lost visual contact (Vignal et al., 2008). Similarly, vocal duets of songs (Farabaugh, 1982; Hall, 2004; Thorpe, 1972) or of calls (Elie et al., 2010) performed by partners can act as cooperative displays that signal commitment and reinforce the pair bond (Elie et al., 2010; Hall, 2004; Hall, 2009; Smith, 1994; Wickler and Seibt, 1980). In the context of cooperation for predator avoidance, birds produce alarm calls that can be predator specific (Evans et al., 1993). Finally, in competitive interactions such as territorial defense, songbirds can use both songs (Searcy and Beecher, 2009) and specific calls (Ballentine et al., 2008) to advertise aggressive intentions. The social context in which each of these vocalizations is emitted (e.g. affiliative interaction, alarming situation, etc.) can be used to classify vocalizations into behaviorally meaningful categories that define the vocal repertoire.

To provide a large range of information to the receivers, categories of vocal communication signals in social species must be acoustically separable. Animals can exploit two sources of variability for the production of acoustic signals: the acoustic structure of each individual sound element and how these elements are combined into sequences (see Zuberbühler and Lemasson, 2014 for a review in primates). How animals exploit sound variability to code the different categories of meanings is still an open question. Here, we explore how the acoustic structure of each individual sound element encodes meanings in vocalizations. Although these identifying acoustical features (or signatures) of specific vocal categories have been examined extensively in the vocal repertoires of both mammals (e.g. Brand, 1976; Deaux and Clarke, 2013; Kruuk, 1972; Salmi et al., 2013) and birds (e.g. Collias, 1987; Dragonetti et al., 2013; Ficken et al., 1978), a comprehensive and rigorous analysis of these distinguishing features in a given species has been difficult to achieve because of the limited number of acoustic features tested and/or because of the limited size or quality of the datasets. As a result, acoustical signatures for complete repertoires are often described qualitatively from a relatively small number of distinguishing features as experienced by human listeners or as observed visually on their spectrographic representation (but see Fuller, 2014; Stowell and Plumbley, 2014). A more quantitative analysis requires a very large dataset of calls for two reasons. First, animal vocalizations, as characterized by their sound pressure waveform, are inherently highly dimensional: they are represented by a large number of amplitude values in time that jointly can code an infinite number of unique combinations. 
Describing the sounds without a priori assumptions on the nature of the distinguishing features requires a representation that is also high-dimensional and preferably complete or invertible, in the sense of being equivalent to the sound pressure waveform. To estimate the distribution of the sounds in this high-dimensional feature space, one would ideally need more samples than the number of parameters that describe each sound, and many samples are still required even when dimensionality reduction approaches are used. Second, the dataset of vocalizations should include examples from the complete vocal repertoire of as many animals as possible. This sampling is needed to properly assess whether vocalizations produced during different social interactions indeed form separable acoustical categories and, if so, to obtain reliable estimates of the within-category variability. Such a data-driven approach allows an unbiased identification of the distinguishing acoustical features among categories and a rigorous estimation of the maximally achievable discriminability of vocalization types based solely on acoustics.

We embarked on such a data-driven exploration of a complete vocal repertoire for the zebra finch, Taeniopygia guttata. We compared the results of this assumption-free approach to a more classical approach that investigates the potential role of a small subset of chosen acoustical parameters in determining the information-bearing features of vocal repertoires. First, as ethologists who have studied the vocal behavior of this species in the field and in the laboratory (Elie et al., 2010; Elie et al., 2011; Mouterde et al., 2014), we were interested in generating a detailed description of the complete vocal repertoire of this songbird, both to contribute to our knowledge of its natural history and to contribute to the field of animal communication. More precisely, by using quantitative methods and encouraging comparative approaches, we wanted to gain insights into the evolution of vocal communication signals and assess the degree to which such acoustic codes share similarities across species. The idea of universal codes for vocal communication is hotly debated (Hauser, 2002; Seyfarth and Cheney, 2003), but common principles have been found at different levels. At the acoustical level, relationships between coarse sound attributes and the meaning of vocal signals have been shown to hold in many species (Morton, 1977); for example, affiliative vocalizations are often soft, low-frequency sounds, while alarm vocalizations are often loud, high-frequency sounds (Collias, 1987). At the production level, the source-filter mechanism describing the production and shaping of vocal communication calls is also present in many species (Taylor and Reby, 2010). By performing a quantitative analysis of the information-bearing features in zebra finch vocal signals, we determined to what extent our data support these putative universal principles of animal communication.

Second, as neuroethologists, we wanted to determine precisely the acoustical features that distinguish vocalization categories in zebra finches, in order to then investigate how these could be represented in their auditory system and, from there, what neural mechanisms lead to vocal categorization (Bennur et al., 2013; Elie and Theunissen, 2015).

Finally, the zebra finch provided a unique opportunity to obtain a very large dataset of high-quality vocalization recordings that could be accurately labeled in terms of their behavioral context and the identity of the emitter. Indeed, the vocal repertoire of the zebra finch has been described in the field in the complete context of its natural history (Zann, 1996). Our own fieldwork had also given us insights into the range of vocal behaviors produced in the wild (Elie et al., 2010). Thus, as we describe in detail below, using this knowledge and experience, we recorded a very large number of vocalizations emitted in clearly distinct social contexts from different groups of domesticated zebra finches in the laboratory. To obtain the appropriate range of vocal behaviors, these zebra finches were housed in enriched cages designed to encourage both affiliative and agonistic social interactions. Additional behavioral conditions (such as those required to elicit alarm calls) were established experimentally. We were then able to describe these sounds using large feature spaces and to apply machine learning techniques, including a novel regularized discriminant analysis, to identify the acoustic features encoding the behavioral meaning embedded in these vocal signals.

Methods

Recording the Complete Repertoire of Captive Zebra Finches

Number of birds, age, sex, living conditions

We recorded calls and songs from 45 zebra finches: 18 chicks and 27 adults. Chicks were all recorded when they were 19 to 25 days old (note that only one chick was recorded on day 25 after hatching). Birds were considered adults if they had molted into their mature sexual plumage, which is achieved around 60 days of age. For the 17 adults for which we had birth records, age ranged between 2 months (>60 days) and 7 years (17.6±5.1 months; only one female was less than 90 days old). Of the 18 chicks, 7 were female, 9 were male and 2 were of unknown sex (LblGre0000 and LblGre0001). Of the 27 adults, 13 were female and 14 were male. All the birds were born in one of the captive zebra finch colonies housed at the University of California (UC), Berkeley, or UC San Francisco. In these colonies, birds are bred in large cages containing 1 to 15 families and can see and vocally interact with the rest of the colony. Therefore, birds were raised in a rich social and acoustic environment.

For recording purposes, adults were divided into groups of 4 to 6 individuals with an even sex ratio. Each group was housed in a cage (L = 56 cm, H = 36 cm, D = 41 cm) placed in a soundproof booth (L = 74 cm, H = 60 cm, D = 61 cm; Med Associates Inc, VT, USA) whose inside walls were coated with 5 cm of soundproof foam (Soundcoat, Irvine, CA, USA) and which was located in a room isolated from the rest of the colony. The cage was provided with 3 nest boxes. Food, drinking water, grit, lettuce, bath access and nest material were provided ad libitum, and the light cycle was 12:12 h (light:dark). Adults were housed in these conditions for up to 4 months and recorded daily while interacting freely.

Chicks were housed with their siblings and parents in the same family cage (L = 56 cm, H = 36 cm, D = 41 cm) in one of the breeding colony rooms. Food, drinking water, grit and nest material were provided ad libitum, and the light cycle was 12:12 h (light:dark). Lettuce and bath access were provided once a week. Before each recording session, the cage was transferred into a soundproof booth (Acoustic Systems, MSR West, Louisville, CO, USA), and chicks were physically and acoustically isolated from their parents for 30 minutes to 1 hour to elicit their begging calls upon reintroduction into their parents’ cage.

Recording methods: equipment, distance, methodology (selection by ear, etc.)

All recordings were performed between 02/2011 and 06/2013 using a digital recorder (Zoom H4N Handy Recorder, Samson; recording parameters: stereo, 44100 Hz, gain 90 or 67) placed 20 cm above the top of the cage for adults’ recordings or 19 cm from the side of the cage for chicks’ recordings. The calls recorded with a gain of 67 (used to prevent clipping during recording sessions) were rescaled after digitization to match those recorded with a gain of 90, so that the resulting set of audio files preserved relative sound level information. Because the distance between the birds and the recording device was constrained by the size of the cage, the vocalizations in our recordings sampled a range of intensities corresponding to distances from the microphone of 20 to 80 cm. The behaviors of the birds were monitored during the recording sessions (147 sessions of 60–90 min) by an expert observer (JEE), either hidden behind a blind in the darkened room for the adults’ recordings or observing through a peephole in the soundproof booth for the chicks’ recordings. Note that chicks were placed back one by one in their parents’ cage to record their Begging and Long Tonal calls, to ensure identification of the calling chick. Indeed, chicks tend to beg and call together at exactly the same time, so recording individual calls was only possible by separating siblings during recording sessions. During recording sessions of the adult groups, the observer tracked the birds’ behavior and sampled vocalizations for which both the behavioral context and the identity of the emitter were clearly identified, noting this information and the exact time of emission for as many vocalizations as possible. Annotated vocalizations were then manually extracted offline from the sessions’ recordings and included in the vocalization library only if no overlapping noise (cage noise, wing flaps, etc.) or vocalizations from other individuals could be heard in the extract.

Based on the distinctiveness of behavioral contexts and of acoustic structures, and on the grouping and nomenclature described by Zann in his fieldwork with wild zebra finches (Zann, 1996), vocalizations were classified into 11 categories: Begging calls, Long Tonal calls, Distance calls, Tet calls, Nest calls, Whine calls, Wsst calls, Distress calls, Thuk calls, Tuck calls and Songs. Vocalizations emitted in bouts (Song, Begging calls, and occasionally Nest calls) were isolated as bouts, and all others as individual vocalizations. A single expert observer (first author JEE) performed this human-based classification, as it required extensive experience with the birds’ behavior. Dr. Elie obtained this experience observing zebra finch vocal and social behaviors both in the field and in the laboratory over a period of 6 years. For the more descriptive acoustical measures, the resulting classification also agrees with previous accounts (Zann, 1996), and it is further validated here using unsupervised clustering algorithms.

Vocalization Preprocessing and the Generation of the Vocalization Data Base

The audio recordings described above yielded a vocalization library of 3405 vocalization bouts. To prepare the sounds for the various acoustical analyses, the vocalization bouts were filtered, segmented into single calls or song syllables, and time-centered. First, all the sounds were band-pass filtered between 250 Hz and 12 kHz to remove any residual low- and high-frequency noise that would be outside the hearing range of zebra finches (Amin et al., 2007) and could affect acoustical measurements such as those pertaining to the shape of the temporal amplitude envelope. Second, vocalization bouts were segmented into individual calls or song syllables. For this purpose, we estimated the sequence of maxima and minima in the temporal amplitude envelope, which was obtained by full rectification of the sound pressure waveform followed by low-pass filtering below 20 Hz. We retained maxima above 10% of the maximum overall amplitude and minima below this threshold. When successive maxima occurred without an interleaved minimum, the maximum with the largest amplitude was kept; similarly, among successive minima, the one with the smallest amplitude was kept. This alternating succession of minima and maxima was used to cut the vocalization bouts into individual calls or syllables, and segments shorter than 30 ms were discarded. Third and finally, we generated vocalization sound files that were all of the same length and time-aligned; this standard representation was key for the subsequent analyses. The segment length was set to 350 ms to accommodate the longest vocalizations with a clear start and finish in our vocalization library (female Distance calls). Segments shorter than 350 ms were zero-padded and segments longer than 350 ms were truncated. The vocalizations were aligned by computing their mean time and centering it at 175 ms, where the mean time is obtained by treating the amplitude envelope as a density function of time, as described below.
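The filtering, segmentation and alignment steps above can be sketched in Python with NumPy and SciPy. This is a minimal sketch under stated assumptions, not the code used in the study: the zero-phase fourth-order Butterworth filters, the detection of local extrema from sign changes of the envelope derivative, and the use of the bout edges as additional cut points are our own choices, and the helper names (`bandpass`, `amplitude_envelope`, `segment`, `center`) are hypothetical.

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt

FS = 44100  # recording sampling rate (Hz)


def bandpass(x, fs=FS, lo=250.0, hi=12000.0, order=4):
    """Zero-phase Butterworth band-pass (filter family and order are assumptions)."""
    sos = butter(order, [lo, hi], btype="bandpass", fs=fs, output="sos")
    return sosfiltfilt(sos, x)


def amplitude_envelope(x, fs=FS, cutoff=20.0, order=4):
    """Full rectification followed by zero-phase low-pass filtering below `cutoff` Hz."""
    sos = butter(order, cutoff, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.abs(x))


def segment(env, fs=FS, thresh_frac=0.1, min_dur=0.030):
    """Return (start, stop) sample indices of the calls/syllables in a bout."""
    thr = thresh_frac * env.max()
    d = np.diff(env)
    peaks = np.where((d[:-1] > 0) & (d[1:] <= 0))[0] + 1
    troughs = np.where((d[:-1] < 0) & (d[1:] >= 0))[0] + 1
    events = sorted([(i, "max") for i in peaks if env[i] > thr]
                    + [(i, "min") for i in troughs if env[i] < thr])
    merged = []  # alternating maxima and minima
    for idx, kind in events:
        if merged and merged[-1][1] == kind:
            prev = merged[-1][0]
            # keep the largest of successive maxima / the smallest of successive minima
            if (kind == "max" and env[idx] > env[prev]) or (kind == "min" and env[idx] < env[prev]):
                merged[-1] = (idx, kind)
        else:
            merged.append((idx, kind))
    # cut at the retained minima; the bout edges act as extra cut points
    cuts = [0] + [i for i, k in merged if k == "min"] + [len(env)]
    max_idx = [i for i, k in merged if k == "max"]
    return [(a, b) for a, b in zip(cuts[:-1], cuts[1:])
            if any(a < i < b for i in max_idx) and (b - a) / fs >= min_dur]


def center(seg, env_seg, fs=FS, length=0.350):
    """Zero-pad/truncate to `length` s with the envelope mean time at the midpoint."""
    n = int(round(length * fs))
    p = np.clip(env_seg, 0.0, None)  # filtering can make the envelope slightly negative
    p = p / p.sum()
    t_mean = int(round(np.sum(np.arange(len(seg)) * p)))
    shift = n // 2 - t_mean  # index in the output where seg[0] lands
    out = np.zeros(n)
    src, dst = max(0, -shift), max(0, shift)
    count = min(len(seg) - src, n - dst)
    if count > 0:
        out[dst:dst + count] = seg[src:src + count]
    return out
```

As a usage example, two 100 ms tone bursts separated by 150 ms of silence should be cut into two segments, each returned by `center` as a 15,435-sample (350 ms) array whose energy is centered near 175 ms.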

This preprocessing yielded a vocalization database of 8136 calls and song syllables from 45 birds. The numbers of vocalizations and birds recorded varied among categories, as shown in Table 1.

Table 1.

Vocalization names and number of calls or syllables and birds recorded in our zebra finch Vocalization Database

| Vocalization Type | Abbreviation | # Sounds | # Birds |
|---|---|---|---|
| Wsst | Ws | 235 | 23 |
| Begging | Be | 1824 | 15 |
| Distance | DC | 630 | 26 |
| Distress | Di | 51 | 11 |
| Long Tonal | LT | 217 | 13 |
| Nest | Ne | 1063 | 23 |
| Song | So | 2776 | 13 |
| Tet | Te | 613 | 24 |
| Thuk | Th | 290 | 13 |
| Tuck | Tu | 240 | 13 |
| Whine | Wh | 197 | 15 |

Acoustical Feature Spaces

To describe the acoustical properties that characterized each vocalization type, we used four distinct acoustical feature spaces, each analyzed independently. In each of these feature spaces, sounds were described by a number of parameters obtained from a series of nonlinear operations on the sound pressure waveform. These acoustical parameters were used to describe the information-bearing features of the sounds. These feature spaces were chosen because, compared to the raw sound pressure waveform, they were closer to perceptual attributes of the sound (e.g. fundamental frequency and pitch), were more intuitively understood (e.g. RMS and intensity) and/or could provide the nonlinear transformations required for vocalization categories to be separated by linear decision boundaries.

First, we used an acoustical feature space that summarized the structure of the spectral and temporal envelopes as well as features of the fundamental frequency, since these are easily interpretable and have been widely used by bioacousticians. Parameters in this feature space can also be directly associated with perceptual attributes (albeit human-based). We called these parameters the Predefined Acoustical Features (PAFs).

Second, we used a complete and invertible spectrographic representation. This representation has multiple advantages. Being invertible, it does not make any a priori assumptions on the nature of the information-bearing acoustical features. It is also easily interpretable, since results such as the discriminant functions obtained in linear discriminant analysis (LDA) or logistic regression can be displayed as spectrograms. Moreover, such results can easily be compared to the response functions of single neurons, which are frequently described in terms of spectro-temporal receptive fields (Theunissen and Elie, 2014), allowing a direct assessment of potential mechanisms for behaviorally relevant neural discrimination. The disadvantage of the spectrographic feature space is that it is high-dimensional and therefore requires additional statistical regularization (dimensionality reduction) techniques, as described below.

Third, we extracted the modulation power spectrum (MPS). The MPS is the joint temporal and spectral modulation amplitude spectrum obtained from a 2D Fourier transform of the spectrogram (Singh and Theunissen, 2003). The MPS further summarizes the joint spectral and temporal structure observed in the spectrogram by averaging across features that occur with different delays or frequency shifts. The MPS could therefore be a powerful representation, as it offers a shift-invariant summary of the information present in spectrograms and can do so with fewer dimensions by focusing on the relevant regions of temporal and spectral modulations, mostly at low or intermediate modulation frequencies (Singh and Theunissen, 2003; Woolley et al., 2005). Results in the MPS feature space can also be compared to those found in auditory neurons (Woolley et al., 2009).
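The MPS computation described above can be sketched as the squared magnitude of the 2D FFT of a log-power spectrogram. For brevity, this sketch assumes SciPy's Hann-window `scipy.signal.spectrogram` rather than a Gaussian-window spectrogram, and clips the log spectrogram to a 50 dB dynamic range; the window length, overlap and floor are illustrative values, not those used in the study.

```python
import numpy as np
from scipy.signal import spectrogram


def modulation_power_spectrum(x, fs=44100, nperseg=512, noverlap=480, db_range=50.0):
    """MPS: squared magnitude of the 2D Fourier transform of the log spectrogram."""
    f, t, sxx = spectrogram(x, fs=fs, window="hann", nperseg=nperseg, noverlap=noverlap)
    log_s = 10.0 * np.log10(sxx + 1e-20)
    log_s = np.maximum(log_s, log_s.max() - db_range)  # limit dynamic range (assumption)
    log_s -= log_s.mean()  # remove the overall mean so the (0, 0) term does not dominate
    mps = np.abs(np.fft.fftshift(np.fft.fft2(log_s))) ** 2
    # rows: spectral modulations in cycles/kHz; columns: temporal modulations in Hz
    wf = np.fft.fftshift(np.fft.fftfreq(log_s.shape[0], d=f[1] - f[0])) * 1e3
    wt = np.fft.fftshift(np.fft.fftfreq(log_s.shape[1], d=t[1] - t[0]))
    return wf, wt, mps
```

For noise amplitude-modulated at 8 Hz, the energy of this MPS should concentrate near ±8 Hz of temporal modulation at zero spectral modulation, illustrating how the MPS pools a structure that repeats across time and frequency into a few coefficients.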

Fourth, we extracted the Mel Frequency Cepstral Coefficients (MFCC). The MFCC representation is similar to the MPS, since cepstral coefficients are obtained from a Fourier transform of the (log) spectrum in each time slice of the spectrogram. The MFCC differs from the MPS in that it starts with a spectrographic representation obtained from a Mel frequency filter bank, reflecting the logarithmic frequency sensitivity of the vertebrate auditory system at high frequencies. Unlike the MPS, the MFCC representation also keeps the temporal information in the time domain (rather than transferring it to the temporal modulation domain). MFCCs are commonly and successfully used in speech processing and speech recognition algorithms, as they succinctly describe essential information-bearing structures in speech such as formants and formant sweeps (Picone, 1993). MFCCs have also been used successfully to study animal vocalizations (Cheng et al., 2010; Mielke and Zuberbühler, 2013). We added the MFCC representation here primarily to compare the classification performance of the new classifiers we propose with that of the classifier used in these previous studies.
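A minimal MFCC sketch follows, assuming the common HTK-style mel formula (mel = 2595 log10(1 + f/700)), 40 triangular filters between 250 Hz and 12 kHz, a 1024-point Hann-window STFT with a 10 ms hop, and 13 coefficients from an orthonormal type-II discrete cosine transform of the log mel energies. None of these settings come from the study; they are typical values chosen for illustration.

```python
import numpy as np
from scipy.fft import dct
from scipy.signal import stft


def hz_to_mel(f):
    return 2595.0 * np.log10(1.0 + f / 700.0)


def mel_to_hz(m):
    return 700.0 * (10.0 ** (m / 2595.0) - 1.0)


def mel_filterbank(n_filters, n_fft, fs, fmin=250.0, fmax=12000.0):
    """Triangular filters equally spaced on the mel scale (rows) over rFFT bins (columns)."""
    edges = mel_to_hz(np.linspace(hz_to_mel(fmin), hz_to_mel(fmax), n_filters + 2))
    freqs = np.fft.rfftfreq(n_fft, d=1.0 / fs)
    fb = np.zeros((n_filters, freqs.size))
    for i in range(n_filters):
        lo, ctr, hi = edges[i], edges[i + 1], edges[i + 2]
        rising = (freqs - lo) / (ctr - lo)
        falling = (hi - freqs) / (hi - ctr)
        fb[i] = np.clip(np.minimum(rising, falling), 0.0, None)
    return fb


def mfcc(x, fs=44100, n_fft=1024, hop=441, n_filters=40, n_coeffs=13):
    """Return an (n_coeffs, n_frames) array; time stays on the second axis."""
    _, _, z = stft(x, fs=fs, window="hann", nperseg=n_fft, noverlap=n_fft - hop,
                   boundary=None, padded=False)
    power = np.abs(z) ** 2  # (n_fft // 2 + 1, n_frames)
    log_mel = np.log(mel_filterbank(n_filters, n_fft, fs) @ power + 1e-10)
    return dct(log_mel, type=2, axis=0, norm="ortho")[:n_coeffs]
```

Unlike the MPS sketch, the transform here is applied only along frequency, within each frame, which is why the output keeps an explicit time axis.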

Predefined Acoustical Features (PAFs)

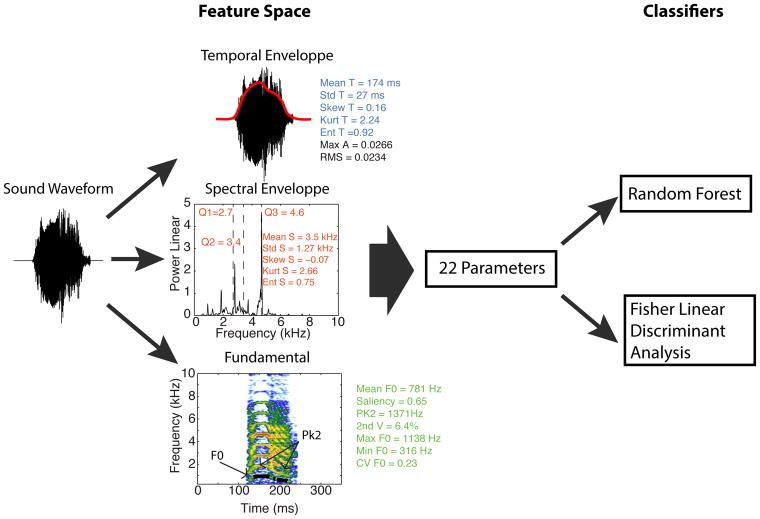

Our first set of parameters described the shape of the frequency power spectrum (also called the spectral envelope here), the shape of the temporal amplitude envelope and features related to the fundamental frequency (Figure 1). The frequency power spectrum was estimated using Welch’s averaged, modified periodogram method with a Hanning window of 42 ms and an overlap of 99%. The temporal envelope was obtained by rectifying the sound pressure waveform and low-pass filtering below 20 Hz. Note that the temporal amplitude envelope is in units of pressure amplitude, while the spectral envelope (or frequency power spectrum) is in units of pressure squared (power). From these envelopes we obtained 15 acoustical parameters: 5 describing temporal features, 8 describing spectral features and 2 describing the intensity (or loudness) of the signal.
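The two envelopes can be computed as in the following sketch, which assumes SciPy's `welch` with a 42 ms Hann window (1852 samples at 44.1 kHz) and 99% overlap for the spectral envelope, and a zero-phase fourth-order Butterworth low-pass filter for the temporal envelope (the filter type and order are our assumptions).

```python
import numpy as np
from scipy.signal import butter, sosfiltfilt, welch


def spectral_envelope(x, fs=44100, win_s=0.042, overlap=0.99):
    """Welch power spectrum (pressure squared per Hz) with a Hann window."""
    nperseg = int(round(win_s * fs))  # 1852 samples at 44.1 kHz
    noverlap = int(round(overlap * nperseg))  # 1833 samples
    return welch(x, fs=fs, window="hann", nperseg=nperseg, noverlap=noverlap)


def temporal_envelope(x, fs=44100, cutoff=20.0):
    """Rectified waveform low-passed below `cutoff` Hz (pressure amplitude units)."""
    sos = butter(4, cutoff, btype="lowpass", fs=fs, output="sos")
    return sosfiltfilt(sos, np.abs(x))
```

For a steady unit-amplitude tone, the temporal envelope settles at the mean of the rectified sine, 2/π ≈ 0.64, which illustrates the unit difference noted above: the envelope tracks amplitude, whereas the Welch estimate is a power density.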

Figure 1. Extraction of the Predefined Acoustical Features (PAFs) and flow-chart showing the classification procedure using these parameters.

Five acoustical parameters were obtained from the temporal amplitude envelope of the sound (middle top, in blue), two parameters characterized the amplitude of the signal (middle top, in black), eight acoustical parameters were derived from the spectral envelope (middle center, in red) and seven acoustical parameters described the time-varying fundamental frequency (bottom center, in green). The fundamental is shown as a black line on the spectrogram and is only extracted when the pitch saliency is greater than 0.5. These 22 acoustical parameters were then used to train two classifiers to discriminate vocalization categories: a Random Forest and a Fisher Linear Discriminant Analysis. Performance was assessed by cross-validation. See Methods for more details on the calculation of the parameters and on the classification procedure.

The shapes of the amplitude envelopes (spectral and temporal) were described by treating the envelopes as density functions: the envelopes were normalized so that the sum of all amplitude values (in frequency or time) equals 1. We quantified the shape of these normalized envelopes by estimating the moments of the corresponding density functions: their mean (i.e. the spectral centroid for the spectral envelope, Mean S, and the temporal centroid for the temporal envelope, Mean T), standard deviation (i.e. spectral bandwidth, Std S, and temporal duration, Std T), skewness (i.e. a measure of the asymmetry in the shape of the amplitude envelopes, Skew S and Skew T), kurtosis (i.e. the peakedness of the envelope, Kurt S and Kurt T) and entropy (Ent S and Ent T). The entropy captures the overall variability in the envelope: for a given standard deviation, higher entropy values are obtained for more uniform amplitude envelopes (e.g. noise-like broadband sounds and steady temporal envelopes) and lower entropy values for amplitude envelopes whose energy is concentrated at fewer spectral or temporal points (e.g. harmonic stacks or temporal envelopes with very large amplitude modulations).
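The moment-based descriptors can be sketched as follows for any envelope sampled on a frequency or time axis. Two definitions are assumptions, since the text does not specify them: kurtosis is computed without subtracting 3 (a Gaussian-shaped envelope gives 3), and entropy is the Shannon entropy, in bits, of the normalized envelope, so its absolute value depends on the number of samples.

```python
import numpy as np


def envelope_moments(axis_values, envelope):
    """Mean, std, skewness, kurtosis and entropy of an envelope treated as a density."""
    x = np.asarray(axis_values, float)
    p = np.clip(np.asarray(envelope, float), 0.0, None)
    p = p / p.sum()  # normalize so the values sum to 1
    mean = np.sum(x * p)
    std = np.sqrt(np.sum((x - mean) ** 2 * p))
    z = (x - mean) / std
    nz = p[p > 0]
    return {
        "mean": mean,  # centroid (Mean S / Mean T)
        "std": std,  # bandwidth or duration (Std S / Std T)
        "skew": np.sum(z ** 3 * p),  # asymmetry (Skew S / Skew T)
        "kurt": np.sum(z ** 4 * p),  # peakedness, Gaussian = 3 (assumption)
        "entropy": -np.sum(nz * np.log2(nz)),  # Shannon entropy in bits (assumption)
    }
```

With this definition, a flat envelope over 1024 points reaches the maximal entropy of 10 bits, while an envelope concentrated on a few points (a harmonic stack, for example) gives a much lower value.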

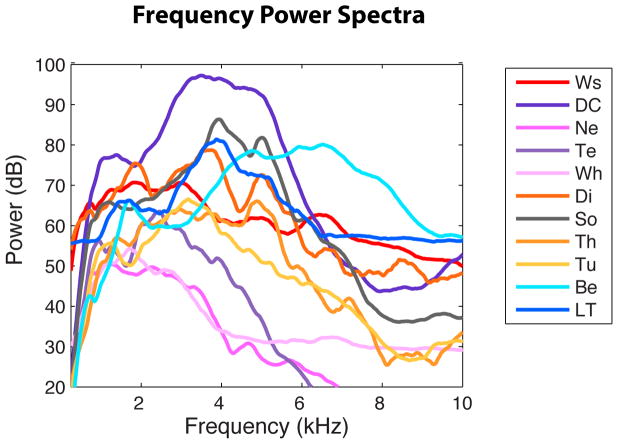

In addition, the first, second and third quartiles (Q1, Q2, Q3) were calculated for the spectral envelope: 25% of the energy is found below Q1, 50% below Q2 (Q2 is the median frequency) and 75% below Q3. These additional parameters were calculated only for the spectral envelopes, as these exhibited much more structure than the temporal envelopes (see Figure 1). Nonetheless, the quartiles were highly correlated with each other and with the spectral mean (see Supplementary Table 1). The average spectral and temporal envelopes for each vocalization type were estimated by first averaging the envelopes of all the vocalizations of each bird within each vocalization type, and then averaging across birds (i.e. equal weight per bird). The average spectral envelopes showed characteristic broad peaks of energy for each vocalization type that we call formants, using the acoustical definition of “formant” and thus not implying resonances in the vocal tract. Note that in Figure 5A, spectral envelopes were normalized before averaging, to give equal weight to the envelope shapes of all vocalizations and to avoid any masking effect due to differences in loudness between vocalizations.
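The quartiles and the bird-weighted averaging can be sketched as below. Quartiles are taken as the first frequency at which the cumulative normalized power reaches 25%, 50% and 75% (no interpolation, an assumption), and `bird_weighted_average`, a hypothetical helper, averages within each bird before averaging across birds, optionally dividing each envelope by its maximum first, in the spirit of the normalization used for Figure 5A.

```python
import numpy as np


def spectral_quartiles(freqs, power):
    """Q1, Q2 (median) and Q3: first frequencies where cumulative power reaches 25/50/75%."""
    p = np.clip(np.asarray(power, float), 0.0, None)
    c = np.cumsum(p) / p.sum()
    freqs = np.asarray(freqs, float)
    return tuple(float(freqs[np.searchsorted(c, q)]) for q in (0.25, 0.5, 0.75))


def bird_weighted_average(envelopes, bird_ids, normalize=False):
    """Average within each bird first, then across birds (equal weight per bird)."""
    env = np.asarray(envelopes, float)
    if normalize:
        env = env / env.max(axis=1, keepdims=True)  # peak-normalize each envelope
    ids = np.asarray(bird_ids)
    per_bird = [env[ids == b].mean(axis=0) for b in np.unique(ids)]
    return np.mean(per_bird, axis=0)
```

For example, with three envelopes of value 1 from bird A and one envelope of value 3 from bird B, pooling all four vocalizations gives 1.5, whereas the bird-weighted average gives 2, so prolific birds do not dominate the category average.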

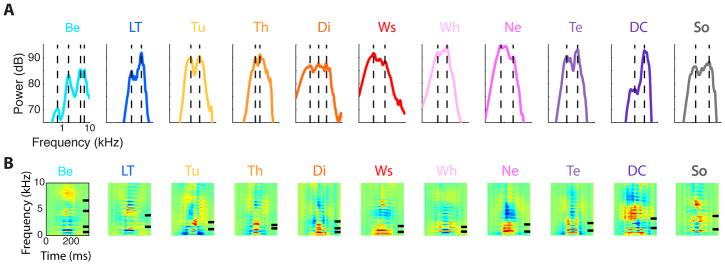

Figure 5. Formant peaks revealed by average frequency power spectra and Logistic Regression Functions (weights) for each vocalization type.

The upper panel (A) shows the average power spectrum for each vocalization type on a log frequency / dB scale. Compared to Figure 4, the average power spectra were calculated on normalized spectra (peak = 100 dB), by first averaging the normalized spectra of all vocalizations within the same category for each bird and then averaging over birds for each vocalization type. These power spectra show two and sometimes three peaks at reliable frequencies that we call formants, using the acoustical definition of the term. The vertical dotted lines show the location of these spectral envelope peaks. (B) For each vocalization type, we performed a separate logistic regression to assess how well that category could be distinguished from all the others and to determine the spectro-temporal features that could best select that vocalization type over the others. The logistic regression was applied to the vocalizations in the spectrogram feature space, and the weights of the regression are shown as spectrograms. On the right side of each spectrogram, short black lines indicate the formants found in the average power spectrum of each vocalization category, as shown in A. Abbreviations are defined in Figure 3.

To capture the intensity of the signal we also calculated the RMS of the signal obtained directly from the sound pressure waveform (RMS) as well as the peak amplitude of the temporal envelope (Max A).

We extracted 7 parameters describing properties of the time-varying fundamental frequency of each vocalization. This time-varying fundamental was extracted using a custom algorithm that combined several approaches, first identifying portions of the vocalization with a high degree of spectral periodicity (or pitch saliency) and then extracting the fundamental at these time points. To estimate pitch saliency, an autocorrelation function was first calculated using a 33.3 ms Gaussian window with a standard deviation of 6.66 ms. This window was slid along the sound pressure waveform in 1 ms steps. The largest peak of the autocorrelation function at a nonzero delay corresponding to a periodicity below 1500 Hz was found. The 1500 Hz threshold was chosen to avoid detecting harmonics and to favor detection of the fundamental, which in zebra finches is known to lie mostly below 1500 Hz (Tchernichovski et al., 2001; Vignal et al., 2008; Zann, 1996). The pitch saliency was then defined as the ratio of the amplitude of that peak to the amplitude of the peak at zero delay (corresponding to the variance of the signal in that time window). The saliency was only defined for windows with a root mean square (RMS) amplitude above 10% of the maximum RMS found across all sliding windows spanning a given vocalization. The mean pitch saliency (called Sal in the figures) was estimated by averaging across time and is an estimate of the average “pitchiness” of the vocalization.
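The pitch saliency computation can be sketched as follows. This is not the study's custom algorithm: the autocorrelation is computed with a zero-padded FFT, the Gaussian window is truncated at 33.3 ms (1469 samples at 44.1 kHz), and only the initial F0 guess (sampling rate divided by the lag of the selected peak) is returned, for windows with saliency above 0.5; the harmonic-stack refinement is not reproduced.

```python
import numpy as np


def pitch_saliency(x, fs=44100, win_s=0.0333, std_s=0.00666, step_s=0.001,
                   fmax=1500.0, rms_frac=0.1, sal_thresh=0.5):
    """Sliding-window pitch saliency and an initial F0 guess from the autocorrelation."""
    n = int(round(win_s * fs))
    k = np.arange(n) - (n - 1) / 2.0
    w = np.exp(-0.5 * (k / (std_s * fs)) ** 2)  # Gaussian window
    step = int(round(step_s * fs))
    min_lag = int(np.ceil(fs / fmax))  # shorter lags would be periodicities above fmax
    starts = np.arange(0, len(x) - n + 1, step)
    rms = np.array([np.sqrt(np.mean(x[s:s + n] ** 2)) for s in starts])
    sal = np.full(starts.size, np.nan)
    f0 = np.full(starts.size, np.nan)
    lags = np.arange(min_lag, n - 1)
    for i, s in enumerate(starts):
        if rms[i] < rms_frac * rms.max():
            continue  # saliency undefined for quiet windows
        seg = x[s:s + n] * w
        spec = np.fft.rfft(seg, 2 * n)  # zero-padding avoids circular wrap-around
        ac = np.fft.irfft(np.abs(spec) ** 2)[:n]  # autocorrelation at lags 0..n-1
        is_peak = (ac[lags] > ac[lags - 1]) & (ac[lags] >= ac[lags + 1])
        if not np.any(is_peak) or ac[0] <= 0:
            continue
        best = lags[is_peak][np.argmax(ac[lags][is_peak])]
        sal[i] = ac[best] / ac[0]
        if sal[i] > sal_thresh:
            f0[i] = fs / best  # initial guess only, before any harmonic-stack refinement
    return np.nanmean(sal), sal, f0
```

With these settings, a pure 500 Hz tone should give a mean saliency close to 1 and F0 guesses near 500 Hz, whereas white noise should give a mean saliency well below 0.5.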

For all time points where the saliency was above 0.5, we extracted a fundamental frequency. An initial guess for the value of this fundamental frequency was obtained from the time delay of the nonzero-delay peak in the autocorrelation function. We then refined this estimate by fitting the frequency power spectrum of the same windowed segment of the vocalization with the spectrum of an idealized harmonic stack. This nonlinear fit not only provided small corrections to our initial guess of the fundamental frequency but also allowed us to quantify significant deviations of the observed power spectrum from this ideal harmonic stack. In particular, we detected significant peaks in the spectrum (peaks above 50% of the maximum) that were not explained by the fundamental or its harmonics. These peaks were taken as evidence of an inharmonic structure occurring in a sound segment that nonetheless had high periodicity. This inharmonic structure was often the result of the presence of two sound sources (double voice phenomenon), produced by the same bird, in a single vocalization. On the fundamental panel of Figure 1, the detected time-varying pitch is shown as a black line and the extraneous peaks of energy as green segments. The second voice parameter (2nd V in Figures 1 and 8) is defined as the percentage of the time during which a fundamental is estimated and a second voice is found. The peak 2 parameter (Pk 2 in Figures 1 and 8) is the average frequency of these second peaks. The other fundamental parameters describe the time-varying fundamental: its mean over time (mean F0 in Figures 1 and 8), its maximum (Max F0 in Figures 1 and 8), its minimum (Min F0 in Figures 1 and 8), and its coefficient of variation (CV F0 in Figures 1 and 8), which is a measure of frequency modulation.
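The detection of unexplained spectral peaks and the summary F0 parameters can be sketched as below. This is a simplified stand-in for the harmonic-stack fit described above: a peak is called inharmonic when it lies further than a tolerance (10% of F0 by default, an assumption) from the nearest harmonic of F0. In `fundamental_summary`, 2nd V is computed over the time points where a fundamental was estimated, which is our reading of the definition. Both function names are hypothetical.

```python
import numpy as np


def inharmonic_peaks(freqs, power, f0, rel_thresh=0.5, tol_hz=None):
    """Frequencies of spectral peaks above rel_thresh * max not explained by harmonics of f0."""
    freqs = np.asarray(freqs, float)
    p = np.asarray(power, float)
    tol = 0.1 * f0 if tol_hz is None else tol_hz  # matching tolerance: an assumption
    i = np.arange(1, p.size - 1)
    peaks = i[(p[i] > p[i - 1]) & (p[i] >= p[i + 1]) & (p[i] > rel_thresh * p.max())]
    nearest_harmonic = np.maximum(np.round(freqs[peaks] / f0), 1) * f0
    return freqs[peaks][np.abs(freqs[peaks] - nearest_harmonic) > tol]


def fundamental_summary(f0_track, second_voice, peak2_freqs):
    """PAF-style summary of a time-varying F0 (NaN where no F0 was extracted)."""
    f0 = np.asarray(f0_track, float)
    valid = ~np.isnan(f0)
    f = f0[valid]
    sv = np.asarray(second_voice, bool)[valid]
    p2 = np.asarray(peak2_freqs, float)[valid][sv]
    return {
        "mean F0": f.mean(),
        "Max F0": f.max(),
        "Min F0": f.min(),
        "CV F0": f.std() / f.mean(),  # frequency modulation
        "2nd V": 100.0 * sv.mean(),  # % of F0 time points with a second voice (assumption)
        "Pk 2": p2.mean() if p2.size else np.nan,
    }
```

For instance, a spectrum with peaks at 500, 1000, 1500 and 2300 Hz and F0 = 500 Hz flags only the 2300 Hz peak, which lies 200 Hz from the nearest harmonic (2500 Hz).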

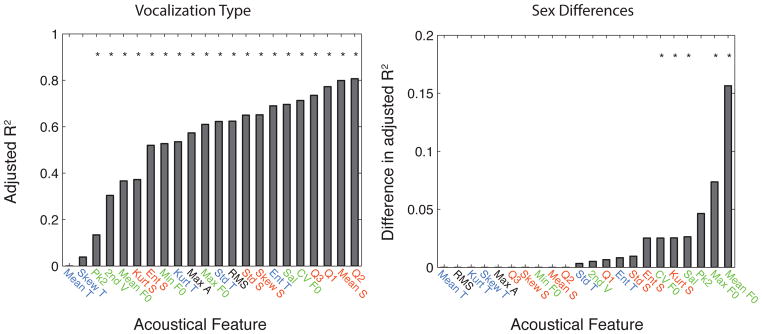

Figure 8. Variance explained by the Vocalization Type and additionally by Sex for each of the 22 PAFs.

(A) The adjusted R2 is the fraction of the variance explained by a linear mixed-effects model with vocalization type as a predictor and bird identity as a random factor. (B) The difference in adjusted R2 is the adjusted R2 of the model that includes vocalization type and sex (including their interaction) minus the adjusted R2 of the model that only includes vocalization type as a predictor. The color code distinguishes acoustical parameters that characterize the spectral envelope (red), the temporal envelope (blue), the pitch of the sound (green) and the intensity of the sound (black). Asterisks (*) indicate values significantly different from zero (p<0.05). Note that the two graphs use different y-scales.

Overall, 22 acoustical parameters describing the temporal amplitude envelope, the frequency spectrum and the time-varying fundamental were obtained. These PAFs were first used to qualitatively describe the defining acoustical features of each vocalization category and then used as inputs to classifiers to quantify how well these features discriminate between vocalization types.

Spectrogram

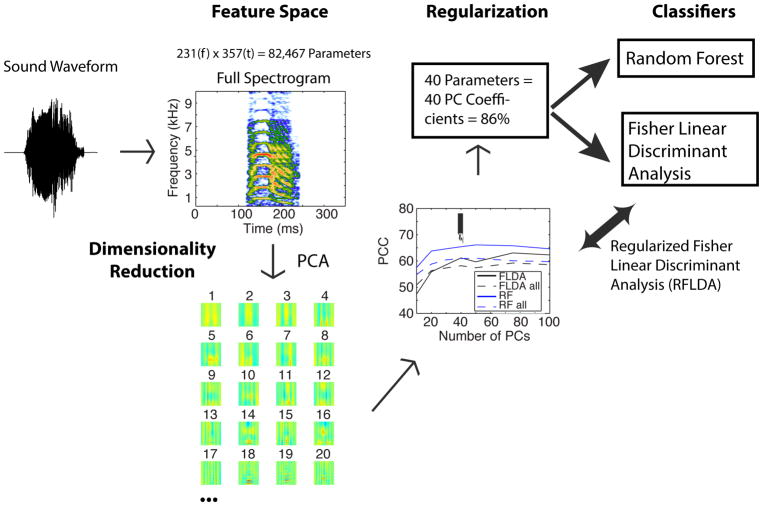

Our second feature space is the complete spectrographic representation of each vocalization (Figure 2). The spectrograms were obtained using Gaussian windows with a spectral bandwidth of approximately 52 Hz (corresponding to the “standard deviation” parameter of the Gaussian). The corresponding temporal bandwidth is approximately 3 ms (exactly 1/(2π × 52 Hz) ≈ 3.06 ms). The spectrogram had 231 frequency bands between 0 and 12 kHz and a sampling rate of 1017 Hz, yielding 357 points in time for the 350 ms window used to frame each vocalization. The total number of parameters describing the sounds in this spectrographic representation was therefore 231 × 357 = 82,467. This spectrographic representation is invertible (Cohen, 1995; Singh and Theunissen, 2003) and over-complete. On the one hand, it can therefore provide a full description of the sound, one with no a priori assumptions about the nature of the information-bearing features. On the other hand, these spectrograms could not be “averaged” to obtain a mean description of a vocalization type. Given their large number of parameters, they also could not be used as inputs to classifiers without further data reduction. In this study, we show how spectrograms can be combined with principal component analysis (PCA) and regularization so that they can be used in classifiers. We also show that logistic regression can be used to obtain a “defining” spectrographic representation for each vocalization type. In this manner, we were able to circumvent the problem of dimensionality and use a data-driven approach to describe the defining acoustical features.
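The arithmetic behind these spectrogram dimensions can be checked in a few lines of Python. The sketch uses only the values reported above and the uncertainty relation of a Gaussian window, σ_t = 1/(2π σ_f). The ~52 Hz band spacing assumes the 231 bands evenly divide 0 to 12 kHz, which the text does not state explicitly.

```python
import math

# Values reported in the text.
spectral_bw_hz = 52.0        # Gaussian "standard deviation" in frequency
n_freq_bands = 231           # bands between 0 and 12 kHz
n_time_points = 357          # at 1017 Hz over the 350 ms framing window

# Gaussian-window time-frequency relation: sigma_t = 1 / (2 * pi * sigma_f).
temporal_bw_s = 1.0 / (2.0 * math.pi * spectral_bw_hz)

band_spacing_hz = 12000.0 / n_freq_bands   # ~52 Hz, assuming even spacing
n_params = n_freq_bands * n_time_points    # size of one flattened spectrogram

print(f"temporal bandwidth: {temporal_bw_s * 1e3:.2f} ms")   # 3.06 ms
print(f"parameters per vocalization: {n_params:,}")          # 82,467
```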

Figure 2. Flow-chart showing the regularized classification procedure using a complete and invertible spectrogram to represent the vocalizations.

Here we classified vocalization categories using an over-complete feature space representation of the sounds: an invertible spectrogram (top panel, Feature Space column). The invertible spectrogram had 231 frequency bands between 0 and 12 kHz (~52 Hz bandwidth) and a sampling rate of 1017 Hz, yielding 357 points in time for the 350 ms window used to frame each vocalization. The total number of parameters describing the sounds in this spectrographic representation was 82,467. To prevent overfitting, we reduced the number of parameters using principal component analysis (PCA). The first 20 PCs are shown as small spectrograms in the bottom row of the Feature Space column. The optimal number of PC coefficients was found by training the two classifiers with varying numbers of PC coefficients and estimating their performance on a cross-validation data set. Classifier performance as a function of the number of PCs is shown in the line plot of the Regularization column. RF = Random Forest, RFLDA = Regularized Fisher Linear Discriminant Analysis, PCC = Probability of Correct Classification. The solid lines correspond to the performance averaged first within each vocalization type and then across all types. The dashed lines correspond to the average overall performance (the average across types weighted by the number of vocalizations in each category). Performance for all these measures plateaued or decreased at approximately 40 PCs, which explained 87% of the overall variance in the spectrograms of all vocalizations. The classification results presented in detail in this paper were thus obtained by describing each sound with the coefficients of 40 PCs. See the Methods for more details on the spectrographic representation and on this regularized classification procedure.

Modulation Power Spectrum

Our third feature space is the Modulation Power Spectrum (MPS; Supplementary Figure 1). The MPS is the squared modulus of the 2-D Fourier transform of the log spectrogram. Spectrograms were calculated as described above. The MPS was then obtained as follows. First, the log spectrogram $S(t,f)$ can be written as:

$$S(t,f) = \log\left|\int s(\tau)\, h(\tau - t)\, e^{-i2\pi f\tau}\, d\tau\right|$$

where $s$ is the sound pressure waveform and $h$ is the Gaussian window centered at $t$. The MPS is obtained from the Fourier expansion of $S(t,f)$, written in discrete form as:

$$S(t,f) = \sum_{j}\sum_{k} S_{j,k}\, e^{i2\pi(\omega_{t,j}\, t + \omega_{f,k}\, f)}$$

where the $S_{j,k}$ are the 2-D Fourier terms

$$S_{j,k} = A_{j,k}\, e^{i\phi_{j,k}}$$

Here $\omega_{t,j}$ describes the modulation frequency of the amplitude envelope along the temporal dimension and has units of Hz. The parameter $\omega_{f,k}$ describes the modulation frequency of the amplitude envelope along the spectral dimension and has units of 1/Hz. In the modulation power spectrum, the power $A_{j,k}^2$ is plotted as a function of $\omega_{t,j}$ (x-axis) and $\omega_{f,k}$ (y-axis), as in Supplementary Figure 1.

The time-frequency scale of the spectrograms used in the MPS (here 3 ms and 52 Hz) determines the temporal and spectral Nyquist limits of the modulation spectrum: about 1/(2 × 3 ms) ≈ 167 Hz and 1/(2 × 52 Hz) ≈ 9.6 cycles/kHz, respectively. Because natural sounds obey power law relationships in their MPS (Singh and Theunissen, 2003), most of the relevant modulation energy is found at low modulation frequencies. For zebra finch vocalizations, this means below 40 Hz and 4 cycles/kHz. We therefore chose not to represent higher modulation frequencies, which greatly reduced the number of parameters. We also ignored the phase spectrum ($\phi_{j,k}$), which halved the number of parameters again. Our MPS feature space was ultimately based on 30 $\omega_{t,j}$ slices (between −40 and 40 Hz) and 50 $\omega_{f,k}$ slices (between 0 and 4 cycles/kHz), yielding 1500 parameters (vs. 82,467 for the spectrogram). Nonetheless, as for the spectrographic representation, PCA was used as a further data reduction technique before using the MPS as input to classifiers.
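The MPS computation and the cropping described above can be sketched in Python with NumPy. This is a minimal illustration, not the authors' code, and `modulation_power_spectrum` and `crop_mps` are hypothetical names. The sketch takes a log spectrogram stored as (frequency × time) together with its sampling steps. It keeps only the squared modulus of the 2-D FFT, discarding the phase. It then keeps |ω_t| ≤ 40 Hz and 0 ≤ ω_f ≤ 4 cycles/kHz. The negative spectral-modulation half can be dropped because the MPS of a real-valued log spectrogram is point-symmetric about the origin. The exact numbers of slices (30 × 50 in the text) depend on grid details not given here.

```python
import numpy as np

def modulation_power_spectrum(log_spec, dt_s, df_hz):
    """log_spec: real array (n_freq, n_time) of log amplitudes.
    Returns temporal modulations (Hz), spectral modulations (cycles/kHz)
    and the MPS (squared modulus of the 2-D Fourier transform)."""
    n_f, n_t = log_spec.shape
    S = np.fft.fftshift(np.fft.fft2(log_spec))
    mps = np.abs(S) ** 2                                        # phase discarded
    wt = np.fft.fftshift(np.fft.fftfreq(n_t, d=dt_s))           # Hz
    wf = np.fft.fftshift(np.fft.fftfreq(n_f, d=df_hz)) * 1e3    # cycles/kHz
    return wt, wf, mps

def crop_mps(wt, wf, mps, wt_max=40.0, wf_max=4.0):
    """Keep |wt| <= wt_max and 0 <= wf <= wf_max (the other half is redundant)."""
    t_keep = np.abs(wt) <= wt_max
    f_keep = (wf >= 0) & (wf <= wf_max)
    return wt[t_keep], wf[f_keep], mps[np.ix_(f_keep, t_keep)]

# Effective Nyquist limits set by the 3 ms / 52 Hz time-frequency scale.
nyq_temporal_hz = 1 / (2 * 0.003)       # ~167 Hz
nyq_spectral_ckhz = 1e3 / (2 * 52.0)    # ~9.6 cycles/kHz
```

A log spectrogram containing a single ripple, for example cos(2π(10 t + 0.002 f)), should produce MPS peaks at (±10 Hz, ±2 cycles/kHz), which makes a convenient sanity check.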

Mel Frequency Cepstral Coefficients

Our fourth feature space was the Mel Frequency Cepstral Coefficients (MFCCs; Supplementary Figure 2). The MFCCs are calculated from a spectrogram obtained with a bank of frequency filters of varying bandwidths. We used N=25 frequency channels between 500 and 8000 Hz, spanning the most sensitive region of the zebra finch hearing range (Amin et al., 2007). The Mel frequency filters are triangular, with center frequencies uniformly spaced on the Mel frequency scale and 50% overlap. In natural logarithmic units, the Mel frequency scale is given by (O’Shaughnessy, 1999):

$$m = 1127 \ln\left(1 + \frac{f}{700}\right)$$

where $f$ is the frequency in Hz and $m$ the corresponding value in Mels.

The amplitude in these bands was estimated in a 25 ms sliding analysis window with a 10 ms frame shift. An example of such a Mel spectrogram is shown in Supplementary Figure 2. The cepstral coefficients were then obtained from the discrete cosine transform of the log amplitude of this Mel spectrogram. Just as we did for the MPS, we truncated the cepstral coefficients at M=12 (out of 25 possible), since higher cepstral coefficients (corresponding to higher spectral modulations) have substantially less power. Ultimately, our MFCC representation had 12 cepstral coefficients for 33 time slices, for a total of 396 parameters. PCA was also used to further reduce the number of parameters before using them as inputs to classifiers.
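The MFCC pipeline described above (Mel scale, triangular filters, DCT, truncation) can be sketched in Python with NumPy. This is a hedged stand-in for the HTK-style MATLAB `mfcc` function the authors used, and all function names here are hypothetical. The sketch makes three assumptions. The 25 filter centres are taken as the interior points of 27 edges equally spaced in Mel, so that each triangle spans its neighbours' centres and adjacent filters overlap by 50%. An orthonormal DCT-II is used. The first 12 coefficients are kept, including c0, because the text does not say whether c0 is among the 12. The frame count assumes frames must fit entirely within the 350 ms window.

```python
import numpy as np

def hz_to_mel(f_hz):
    return 1127.0 * np.log1p(np.asarray(f_hz, float) / 700.0)

def mel_to_hz(mel):
    return 700.0 * np.expm1(np.asarray(mel, float) / 1127.0)

def mel_filterbank(freqs_hz, n_filters=25, f_lo=500.0, f_hi=8000.0):
    """Triangular filters with centres equally spaced in Mel; each triangle runs
    from its left neighbour's centre to its right neighbour's centre."""
    edges = mel_to_hz(np.linspace(hz_to_mel(f_lo), hz_to_mel(f_hi), n_filters + 2))
    fb = np.zeros((n_filters, len(freqs_hz)))
    for i in range(n_filters):
        lo, c, hi = edges[i], edges[i + 1], edges[i + 2]
        rise = (freqs_hz - lo) / (c - lo)
        fall = (hi - freqs_hz) / (hi - c)
        fb[i] = np.clip(np.minimum(rise, fall), 0.0, None)
    return edges[1:-1], fb

def cepstra(log_mel, n_ceps=12):
    """Orthonormal DCT-II along the channel axis of a (n_filters, n_frames)
    log-Mel spectrogram, truncated to the first n_ceps coefficients."""
    n = log_mel.shape[0]
    k = np.arange(n_ceps)[:, None]
    m = np.arange(n)[None, :]
    basis = np.sqrt(2.0 / n) * np.cos(np.pi * k * (m + 0.5) / n)
    basis[0] /= np.sqrt(2.0)
    return basis @ log_mel

n_frames = 1 + (350 - 25) // 10   # 25 ms frames, 10 ms shift, 350 ms window -> 33
n_params = 12 * n_frames          # 396
```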

Statistical Analyses

Statistically significant differences in mean values across vocalization categories for the 22 PAFs were assessed using linear mixed-effects models. In this analysis, each acoustical parameter (e.g. the fundamental frequency) was the predicted variable, vocalization type was the only fixed effect (the predictor) and bird identity was the random effect. Furthermore, to prevent pseudo-replication, all the data for a given bird and vocalization type were averaged before performing the analyses (Nakagawa and Hauber, 2011). In this manner, data from each bird were given equal weight. The effect size (for the main fixed effect of vocalization type) was reported as the adjusted R2 of the model, and statistical significance was calculated from a likelihood ratio test, which in this case is equivalent to an F-test. As post-hoc tests, we used Wald tests to assess whether the estimated coefficient for each vocalization type (corresponding to the adjusted average value of a particular acoustical parameter) was significantly different from the average value obtained across all vocalization types. We also report the 95% confidence intervals for each of the coefficients. The complete statistical results from these mixed-effects models are shown in the supplementary material (Supplementary Table 1).
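The averaging step that gives each bird equal weight can be sketched with the Python standard library. The function name `average_per_bird` is hypothetical. The sketch only collapses repeated renditions into one mean per (bird, call type) cell. The resulting table would then be fitted with a mixed-effects model with call type as the fixed effect and bird as the random effect. The authors used MATLAB's `fitlme` for this; in Python, statsmodels' `mixedlm` would be one possible substitute, not shown here.

```python
from collections import defaultdict

def average_per_bird(bird_ids, call_types, values):
    """Collapse repeated renditions into one mean per (bird, call type), so each
    bird contributes a single data point per vocalization type."""
    sums = defaultdict(float)
    counts = defaultdict(int)
    for bird, ctype, v in zip(bird_ids, call_types, values):
        sums[(bird, ctype)] += v
        counts[(bird, ctype)] += 1
    return {key: sums[key] / counts[key] for key in sums}
```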

We also used mixed-effects models to assess the effect of sex on acoustical differences. In this model, the predicted variable was the acoustical parameter, the fixed effects were vocalization type, sex and their interaction (Type*Sex), and the random effect was bird identity. Statistical significance for the effect of Sex was then obtained from a likelihood ratio test comparing the model that included Type, Sex and their interaction with the model that only included Type. When this test was significant, post-hoc tests were performed to determine which vocalization types differed between males and females. In the post-hoc test, data from each vocalization type were analyzed separately and a linear model was used to test the significance of the single fixed factor Sex. This test was equivalent to a t-test assessing the difference between the average values of that acoustical parameter obtained for each male and each female bird.

Classifiers

To determine which combinations of acoustical features can discriminate between vocalization types and to quantify the degree of discrimination among these categories, we compared two multinomial supervised classifiers, using as inputs the representations in our four feature spaces (Figures 1 and 2, Supplementary Figures 1 and 2). The two supervised classifiers are the Random Forest (RF) and the Fisher Linear Discriminant Analysis (FLDA). Following the nomenclature of supervised classification, vocalization types are referred to as classes in the following sections. We also used an unsupervised classifier (clustering analysis): a mixture-of-Gaussians, which can be used efficiently to fit multi-modal and multivariate probability density functions.

Random Forest

A random forest (RF) is a powerful supervised classifier that uses an ensemble of classification trees, each trained on a bootstrap sample of the data. This design both prevents over-fitting and better explores potential partitions of the feature space (Breiman, 2001). RFs have often been shown to provide the best performance in classification tasks, including in the field of bioacoustics (Armitage and Ober, 2010). In this study, we used RF to estimate an upper bound on classification performance. This upper bound was critical for validating the results obtained with the FLDA. We used random forests of 200 trees, with a minimum of 5 data points per leaf and a uniform prior over class probabilities to avoid any bias towards categories that were better represented in the dataset.

Fisher Linear Discriminant Analysis

Our second classifier was the classical Fisher linear discriminant analysis (FLDA). The FLDA finds linear combinations of acoustical features that maximally separate classes while taking into account the within-class covariance matrix. These discriminant functions are the eigenvectors of the product of the inverse within-class covariance matrix and the between-class covariance matrix (the “ratio” of between-class to within-class covariance). Discriminant functions are ordered by decreasing eigenvalue, so the function with the greatest ratio of between-class to within-class variance comes first. Linear decision boundaries within the subspace spanned by all significant discriminant functions can then be used to assign sounds to their respective classes. In our implementation, we assumed that the within-class covariance was the same for all classes. The great advantage of the FLDA over the RF is that it allows one to examine the form of the discriminant functions and thus to interpret easily the acoustical factors that could be used for discrimination. The disadvantage of the FLDA is that the classes might not be linearly separable in a particular acoustical feature space.
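The discriminant functions described above can be sketched in Python with NumPy as the leading eigenvectors of Sw⁻¹Sb. Here Sw is the pooled within-class scatter matrix, and the shared covariance is assumed as in the text. Sb is the between-class scatter matrix, with each class mean weighted by its class size. This is a hypothetical illustration, not the authors' `manova1`-based code.

```python
import numpy as np

def fisher_lda(X, y):
    """Return eigenvalues and discriminant functions (columns), sorted by
    decreasing between/within variance ratio; at most n_classes - 1 are kept."""
    classes = np.unique(y)
    grand_mean = X.mean(axis=0)
    d = X.shape[1]
    Sw = np.zeros((d, d))  # pooled within-class scatter
    Sb = np.zeros((d, d))  # between-class scatter
    for c in classes:
        Xc = X[y == c]
        mc = Xc.mean(axis=0)
        Sw += (Xc - mc).T @ (Xc - mc)
        diff = (mc - grand_mean)[:, None]
        Sb += len(Xc) * (diff @ diff.T)
    evals, evecs = np.linalg.eig(np.linalg.solve(Sw, Sb))  # Sw^-1 Sb
    order = np.argsort(evals.real)[::-1]
    n_fun = min(len(classes) - 1, d)
    return evals.real[order][:n_fun], evecs.real[:, order][:, :n_fun]
```

Projecting the centred data onto these columns (`(X - X.mean(0)) @ W`) gives the discriminant-subspace coordinates, in which linear decision boundaries can be drawn.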

Logistic Regression

To further facilitate the interpretation of our results, we also performed a series of logistic regression analyses, one for each vocalization type. The goal of these analyses was to find the unique linear combination of acoustical features that would allow one to separate one vocalization type (or class) from all the others. The logistic regression was only performed on the acoustical feature space based on the full spectrograms. The inputs to the logistic regression were taken to be the coordinates of each vocalization in the subspace defined by the significant discriminant functions obtained in the FLDA.
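A one-vs-rest logistic regression on the discriminant-subspace coordinates can be sketched in Python with NumPy. This is an assumption-labeled stand-in for MATLAB's `glmfit`. It uses plain gradient descent with a small L2 penalty on the weights, a deviation from the text added so the fit stays bounded when a class is perfectly separable. `fit_one_vs_rest` and `predict_proba` are hypothetical names, and the learning rate and iteration count are arbitrary choices for this sketch.

```python
import numpy as np

def sigmoid(z):
    return 0.5 * (1.0 + np.tanh(0.5 * z))  # numerically stable logistic function

def fit_one_vs_rest(Z, y, target_class, lr=0.5, n_iter=3000, l2=1e-3):
    """Weights (intercept first) separating target_class from all other classes.
    Z: coordinates of each vocalization in the discriminant subspace."""
    Zb = np.column_stack([np.ones(len(Z)), Z])
    t = (np.asarray(y) == target_class).astype(float)
    w = np.zeros(Zb.shape[1])
    for _ in range(n_iter):
        p = sigmoid(Zb @ w)
        grad = Zb.T @ (p - t) / len(t)   # gradient of the mean logistic loss
        grad[1:] += l2 * w[1:]           # ridge penalty, intercept not penalised
        w -= lr * grad
    return w

def predict_proba(w, Z):
    return sigmoid(np.column_stack([np.ones(len(Z)), Z]) @ w)
```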

Performance: Cross-Validation and Regularization

Mixed-effects modeling is required in the statistical analyses described above to correct for potential bird-dependent effects, and the same care must be taken when training and testing the multivariate classifiers (Mundry and Sommer, 2007). To do so, we used a cross-validation procedure that took into account the nested structure of our data. More specifically, we used a training data set in which, for each vocalization type, all the data from one bird were excluded; different birds could be excluded for different vocalization types. The excluded data were then used as our validation data set. In this manner, classification was assessed for vocalizations from a given bird and class that were not included in the training, allowing us to directly assess how well the classifier generalized. Two hundred different permutations of excluded birds per vocalization type were drawn to generate 200 training and validation data sets. These performance data were sufficient to generate the stable confusion matrices shown in Figure 10. We also used these performance data to calculate confidence intervals on the percentage of correct classification using a maximum likelihood binomial fit.
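The bird-level hold-out described above can be sketched with the Python standard library. `bird_holdout_split` is a hypothetical helper. For every call type, one randomly chosen bird's renditions of that type go to validation and everything else goes to training. How call types recorded from a single bird were handled is not stated, so this sketch keeps them in training only, which is an assumption. Independent random draws may occasionally repeat a split.

```python
import random

def bird_holdout_split(records, rng):
    """records: sequence of (bird_id, call_type), one per vocalization.
    Returns (train_indices, valid_indices). For each call type recorded from
    at least two birds, one randomly chosen bird is held out for that type."""
    birds_by_type = {}
    for bird, ctype in records:
        birds_by_type.setdefault(ctype, set()).add(bird)
    held_out = {ctype: rng.choice(sorted(birds))
                for ctype, birds in birds_by_type.items() if len(birds) >= 2}
    train, valid = [], []
    for i, (bird, ctype) in enumerate(records):
        (valid if held_out.get(ctype) == bird else train).append(i)
    return train, valid
```

Repeating the call with different seeds, e.g. `[bird_holdout_split(records, random.Random(s)) for s in range(200)]`, yields a set of training/validation pairs comparable to the 200 used in the text.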

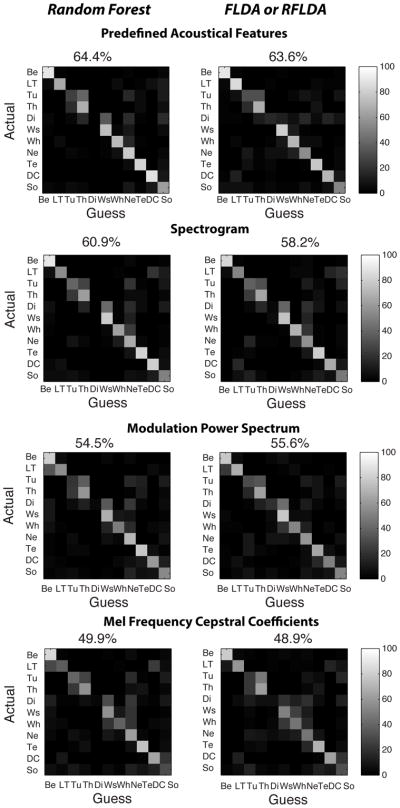

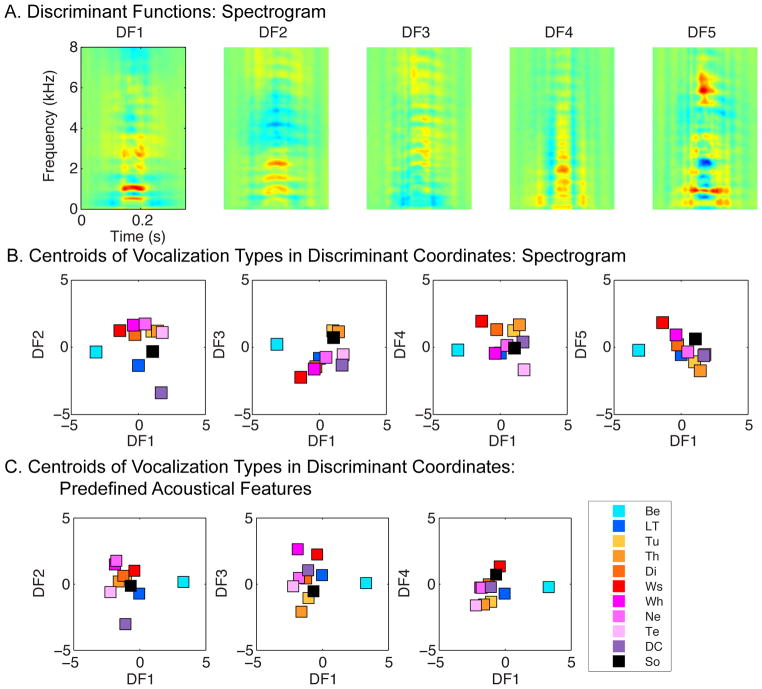

Figure 10. Confusion Matrices.

The figure shows all the confusion matrices obtained from the Random Forest (left column) and the Regularized Fisher Linear Discriminant Analysis (right column) for the four feature spaces used here and described in Figures 1 and 2 and Supplementary Figures 1 and 2. In a confusion matrix, each row shows how exemplars from a particular vocalization category were classified into the categories shown in the columns. The color code shows the probability of that classification: the conditional probability of classifying a vocalization as type x (column x) when it is actually type y (row y). The classification is performed on a cross-validation data set as explained in the Methods. The average percentage of correct classification, obtained by averaging the diagonal of each matrix, is shown at the top of each confusion matrix. These numbers are used in the plot of Figure 11A. Abbreviations are defined in Figure 3.

The cross-validation was also used as part of a regularization procedure (see Figure 2). For acoustical feature spaces with a large number of parameters (e.g. the spectrogram), both the FLDA and RF classifiers generated solutions that overfitted the data. To prevent over-fitting, we used principal component analysis (PCA) as a dimensionality reduction step. As a regularization step, we then tested the performance of the classifiers as a function of the number of PCs. As shown in Figure 2, the best performance was obtained with approximately 40 PCs when using the spectrogram as a feature space. To use the same number of parameters for all our large feature spaces, 40 PCs were used for both the RF and the FLDA, for the feature spaces based on the spectrogram, the MPS and the MFCCs. The percentage of the variance in the data explained by these 40 PCs is shown in Figure 2 and Supplementary Figures 1 and 2. Using PCA as a regularization step in FLDA is equivalent to assigning a Wishart prior on the within-group covariance matrix of features (assumed to be the same for each group). This technique is called Regularized LDA or RLDA (Murphy, 2012, p. 107). Here we use both the regularization obtained from the PCA (by systematically evaluating the goodness of fit obtained by varying the number of PCs) and the dimensionality reduction obtained in the FLDA (Murphy, 2012, p. 271). We call this technique the regularized FLDA or RFLDA.
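The PCA regularization sweep can be sketched in Python with NumPy, under stated assumptions. For each candidate number of PCs, the training data are projected onto their leading PCs. A linear discriminant classifier with a shared covariance and equal class priors is then fitted, and the mean per-class hit rate on held-out data is recorded. This corresponds to the solid-line measure in Figure 2. All function names are hypothetical. The sketch classifies directly in PC space with a pooled covariance rather than explicitly reducing to the FLDA discriminant subspace, which is a simplification.

```python
import numpy as np

def pca_basis(X, n_pcs):
    mean = X.mean(axis=0)
    _, _, Vt = np.linalg.svd(X - mean, full_matrices=False)
    return mean, Vt[:n_pcs]                  # rows are principal axes

def lda_fit(Z, y):
    classes = np.unique(y)
    means = np.array([Z[y == c].mean(axis=0) for c in classes])
    resid = Z - means[np.searchsorted(classes, y)]
    pooled = resid.T @ resid / (len(Z) - len(classes))  # shared covariance
    return classes, means, np.linalg.pinv(pooled)

def lda_predict(model, Z):
    classes, means, P = model
    # Linear discriminant scores with equal (uniform) class priors.
    scores = Z @ P @ means.T - 0.5 * np.einsum("ij,jk,ik->i", means, P, means)
    return classes[np.argmax(scores, axis=1)]

def pc_sweep(X_tr, y_tr, X_va, y_va, pc_counts):
    """Mean per-class proportion correct on held-out data for each PC count."""
    perf = {}
    for n in pc_counts:
        mean, V = pca_basis(X_tr, n)
        model = lda_fit((X_tr - mean) @ V.T, y_tr)
        pred = lda_predict(model, (X_va - mean) @ V.T)
        perf[n] = float(np.mean([np.mean(pred[y_va == c] == c)
                                 for c in np.unique(y_va)]))
    return perf
```

The chosen number of PCs would then be the point where `perf` plateaus or starts to drop, as with the ~40 PCs reported above.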

Clustering analysis: a mixture-of-Gaussians used as an unsupervised Classifier

An unsupervised classifier (also known as a clustering algorithm) was used to further determine whether the behaviorally defined vocalization types did indeed form separate clusters in acoustical feature spaces. Unsupervised classifiers of this kind decompose the generally multi-dimensional distribution of a data set into a sum of component distributions. In the case of a mixture-of-Gaussians, the component distributions are all multi-dimensional Gaussian distributions. If the weights of these Gaussian components are approximately equal and the components are well separated, then the joint distribution is multi-modal, suggesting the presence of distinct groups. For example, two equal-weight components with equal variance produce two modes when their means are more than two standard deviations apart. Note that a set of behaviorally defined vocalization types could form a unimodal distribution and still be separable using a supervised classifier, as long as the different vocalization types are found predominantly in different ranges of this unimodal distribution. The mixture-of-Gaussians unsupervised classifier is thus stringent in that it will only generate “positive” answers for groups that are separable in the sense of being multi-modal.

In the mixture-of-Gaussians modeling, all the data points (the n vocalizations) are used as a sample of the probability density function being modeled. Each Gaussian component is defined by its weight (one value), its mean in the feature space (a k-element vector for a k-dimensional space) and its covariance matrix (a symmetric k×k matrix). For a k-dimensional feature space, each component Gaussian therefore has 1+k+k(k+1)/2 parameters, and a mixture-of-Gaussians with m components has m(1+k+k(k+1)/2) parameters. These parameters are fitted by maximizing the likelihood with the Expectation-Maximization (EM) algorithm. The EM algorithm converges to a local maximum of the likelihood that depends on the initialization values. We therefore ran multiple fits with different initialization values for each mixture model (i.e. for each value of m) and kept the one that gave the maximum likelihood. To compare the goodness of fit of Gaussian mixtures with different numbers of components (m), we used the Bayesian Information Criterion (BIC). The BIC is based on the negative log likelihood but adds a penalty equal to the number of parameters times the logarithm of the number of sample points (n). Since k is fixed, this penalty increases with the number of Gaussian components. The model with the smallest BIC is deemed to be the best.
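The parameter count and model selection described above can be written out in Python. The sketch follows the text's count, which includes all m weights even though only m − 1 are free once they must sum to one. It uses the standard BIC, −2 ln L + p ln n. Software such as MATLAB's `fitgmdist` may count parameters slightly differently, so absolute BIC values need not match. The helper names are hypothetical.

```python
import math

def gmm_n_params(m, k):
    """Parameter count used in the text: per component, one weight, k means and
    k(k+1)/2 covariance terms."""
    return m * (1 + k + k * (k + 1) // 2)

def bic(log_likelihood, m, k, n):
    """Bayesian Information Criterion; smaller is better."""
    return -2.0 * log_likelihood + gmm_n_params(m, k) * math.log(n)

def best_m(log_likelihoods, k, n):
    """log_likelihoods: {m: best log-likelihood over several EM restarts}."""
    return min(log_likelihoods, key=lambda m: bic(log_likelihoods[m], m, k, n))
```

For the 10-dimensional PC space used in Figure 9, a two-component mixture has `gmm_n_params(2, 10) == 132` parameters under this count.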

In our analysis, we applied the mixture-of-Gaussians model in two ways. We applied it to single call types (e.g. Tet calls) to determine whether they could actually be composed of multiple vocalization types. Conversely, we applied it to specific pairs of call types to determine whether they indeed formed separate acoustic clusters. For these analyses, we used the 22 PAFs, as described above and in Figure 1. The optimal number of Gaussian components was chosen as the best trade-off between obtaining the smallest BIC and obtaining close to uniform relative weights of the Gaussian components. When multiple call types were combined in the data modeled by the Gaussian mixture, we then calculated the proportion of each call type in the groups determined from the Gaussian mixture (as shown in Figure 9 and Supplementary Figure 4).
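Once each vocalization has been assigned to a mixture component, the proportions described above can be tabulated with the Python standard library. The sketch assumes that cluster labels are already available, for example the most probable component for each vocalization. `assignment_table` is a hypothetical name. It returns raw counts and, for each call type, the fraction of its vocalizations assigned to each cluster, which matches the per-call-type proportions described in the Figure 9 caption.

```python
from collections import Counter

def assignment_table(call_types, cluster_labels):
    """Counts of (call type, cluster) pairs and, for each call type, the
    fraction of its vocalizations assigned to each cluster."""
    counts = Counter(zip(call_types, cluster_labels))
    per_type = Counter(call_types)
    frac = {(t, g): n / per_type[t] for (t, g), n in counts.items()}
    return dict(counts), frac
```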

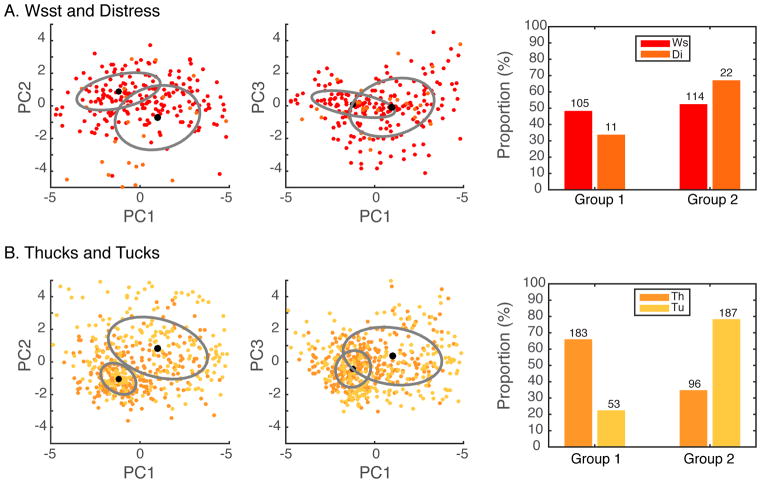

Figure 9. Unsupervised Clustering: Are Thuk acoustically distinct from Tuck calls, and Distress from Wsst calls?

A “mixture-of-Gaussians” model was fitted to the probability density distribution of the first 10 principal component coefficients derived from the 22 PAFs defined in Figure 1 (see Methods). Each point in the scatter plots of the left and middle columns corresponds to one vocalization. The points are color-coded according to call type, but the mixture-of-Gaussians algorithm is blind to this information. The ellipses and central black dots show the covariance (at one standard deviation) and the mean of the fit obtained from a two-Gaussian mixture model. The size of each central dot is proportional to the weight of the Gaussian. A. Distress and Wsst calls. We analyzed the distribution of Wsst and Distress calls as one group. Two Gaussians with similar weights (w1 = 0.4603, w2 = 0.5397) provided a good description of the distribution. However, the two call types were assigned equally to each of these two groups (z = 1.57, p = 0.12), suggesting that, at the level of a single call syllable, Distress and Wsst calls are acoustically similar. B. The second row shows the results of the same analysis for Thuk and Tuck calls. Here also, two Gaussians with similar weights (w1 = 0.4591, w2 = 0.5409) fit the data well. The bar graph in the right panel shows the proportion (and raw number) of Thuk and Tuck calls assigned to each of these two groups. The proportions differ between the two groups (z = 9.92, p < 10⁻⁴).

Software

All analyses were performed using custom code written in Matlab, using the following high-level functions where appropriate. The MFCCs were calculated using the mfcc function provided by Kamil Wojcicki, which matches the algorithm in the Hidden Markov Toolkit for speech processing known as HTK (Young et al., 2006). The mixed-effects modeling was performed using the Matlab function fitlme. The RFLDA was estimated with a custom Matlab script that used the Matlab function manova1 to estimate the FLDA for different PCA subspaces. The random forest classifier was trained using the Matlab function TreeBagger. The logistic regression was performed using the Matlab function glmfit. The mixture-of-Gaussians modeling was performed using the Matlab function fitgmdist.

Results

In the following sections, we first describe qualitatively and quantitatively the different types of vocalizations produced by domesticated zebra finches. We then show how we applied multiple approaches to reveal the acoustic signature of each vocalization type. For this purpose, we isolated over 8000 calls and song syllables and used four independent representations for these vocalizations. The first consists of various measures in the temporal and spectral domains that we call the Predefined Acoustical Features (PAF). The other three are representations in the joint spectro-temporal domain: the spectrogram, the modulation power spectrum and a time-varying cepstrum (see Methods, Figures 1–2, Supplementary Figures 1–2). We compare the results obtained from these different representations of the sounds (or feature spaces) using two distinct classification algorithms. All of our analyses emphasize the importance of the spectral shape and of the pitch saliency as the main acoustical parameters that code meaning in zebra finch vocalizations.

The vocal repertoire of the domesticated zebra finch

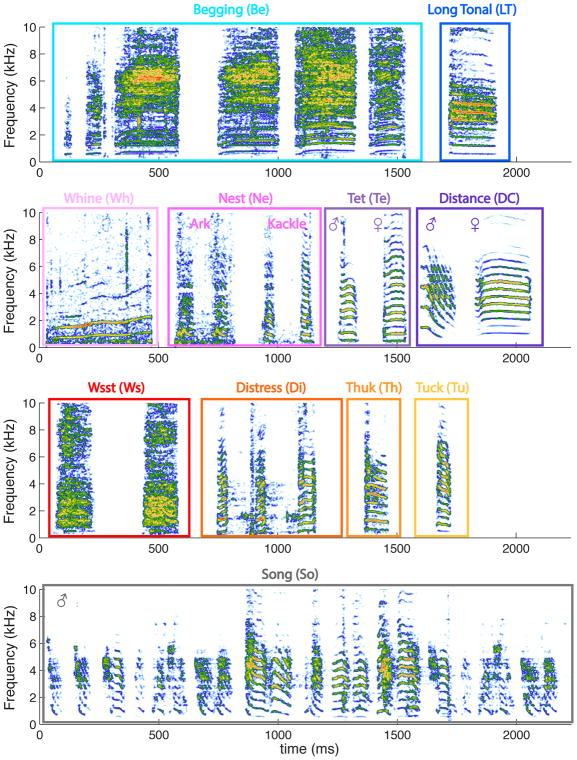

In Figure 3, we show spectrograms of examples of each of the vocalization types that we recorded from our domesticated zebra finch colony. We classified these vocalizations by ear and by assessing the behavioral context in which they were emitted, having learned their acoustical signatures during previous experiments (Elie et al., 2011). Our classification also followed the grouping and nomenclature described by Zann (1996) in his fieldwork with wild zebra finches. Indeed, our domesticated zebra finches were housed in groups, in living conditions that also promote nesting, foraging, defensive and alarm behaviors. Under these conditions, they produced all the vocalization types described by Zann, with the potential exception of the Stack, a call mainly produced just before takeoff. Ter Maat et al. (2014) have also described a Stack call in captive animals, as a contact call used somewhat interchangeably with the soft, short contact call known as the Tet call. As we discuss below, the call described by Ter Maat et al. (2014) is almost certainly included in our Tet category. Based on differences in behavioral context, we also distinguished between the alarm call described by Zann (the Thuk call) and a new alarm call that we named the Tuck call. Furthermore, the two types of nest calls named by Zann, Arks and Kackles, formed a continuum in our recordings (shown below), so we grouped them in a single vocalization type, the Nest call. Note also that although we were able to record Copulation calls as described by Zann (1996), the quality and quantity of recordings for this vocalization type were not sufficient to include it in our analysis (but see one example in Supplementary Sound File 5).

Finally, we further grouped the vocalization types into calls produced only by juveniles (top row in Figure 3, blue hues), calls and song produced in affiliative contexts (second and fourth rows in Figure 3, purple/black hues) and non-affiliative calls (third row, orange hues).

Figure 3. The zebra finch vocal repertoire.

Spectrograms of examples of each vocalization type found in domesticated zebra finches. The top row shows the two types of calls produced solely by chicks: a Begging call bout and a Long Tonal call. The Long Tonal call is the precursor of the adult Distance call and functions as a contact call. The second row shows the calls produced by adults during affiliative or neutral behaviors. The Whine and Nest calls are produced not only during early phases of pair bonding and nest building but also whenever mates relieve each other at the nest. The Tet call is a contact call produced for short-range communication, while the Distance call is a contact call produced for long-range communication. Both are sexually dimorphic. The third row shows the calls produced by adults during agonistic interactions or in threatening situations. The aggressive call, called the Wsst call, consists here of two syllables and is produced shortly before attacking a conspecific. The Distress call, consisting here of three syllables, is produced by the victim during or just after the attack. There are two alarm calls: the Thuk call, produced by parents and directed at chicks and mate, and the Tuck call, a more generic alarm call. Finally, an example of a Song, the more complex signal used by males in courtship, pair bonding and mating behavior, is shown in the last row. The color code used in this figure groups the vocalization types into higher-level classes: blue hues for chick calls, pink to deep purple hues for affiliative calls, red/orange hues for non-affiliative calls and grey/black for song. The same color code is used in all the figures. For the spectrogram colors, vocalizations in each group (row) were normalized to peak amplitude and a 40 dB color scale was used.
The sounds corresponding to these vocalizations can be found online as supplemental material (chick calls, Supplementary Sound File 1; affiliative calls, Supplementary Sound File 2; non-affiliative calls, Supplementary Sound File 3; and song, Supplementary Sound File 4). The abbreviations used for each category in other figures are given in parentheses.

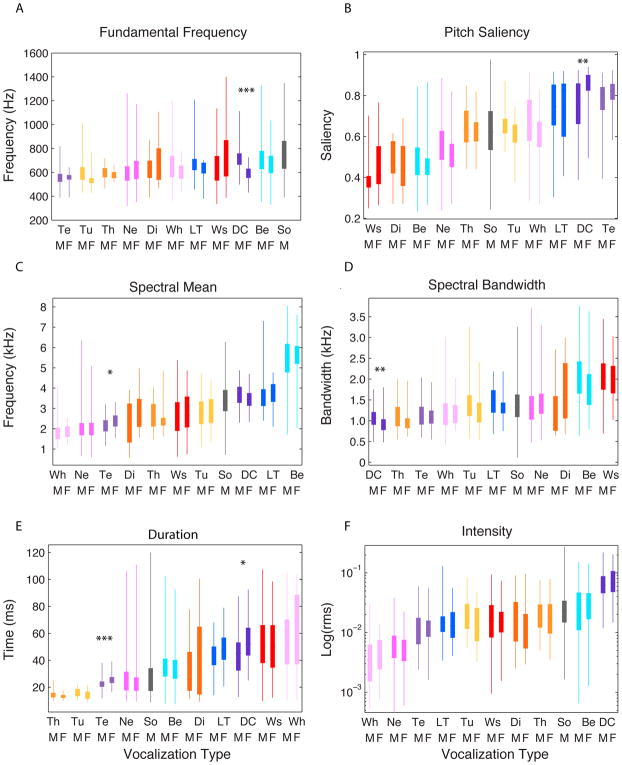

In addition to qualitatively describing each vocalization type based on its characteristic spectrographic features, we quantified differences across vocalization types using the PAF measures that describe the temporal envelope, the spectral envelope and the fundamental frequency of the sounds (see Figure 1 and Methods). These PAF measures were designed to describe the spectral, loudness, duration and pitch characteristics of the vocalizations. Results based on these measures are shown in Figures 4–7. Statistical analyses using linear mixed-effects models showed that most of these PAF measures carried some information about vocalization type (Figure 8). For each PAF, the correctly weighted mean value of each vocalization type, with its 95% confidence interval obtained from the mixed-effects model, is shown in an additional table (Supplementary Table 2). This table also reports the P-values for the comparison of each category's mean value with the mean over all categories (Wald test). In the text below, when we state that a particular acoustical measure (such as the fundamental) is significantly different between two vocalization categories, we mean that the 95% confidence intervals for the two categories do not overlap. Finally, from the average spectral envelope of each vocalization type shown in Fig. 5a, we obtained spectral peaks that we call formants, using the acoustical definition of this word. The frequencies of these formants are given in Table 2.

Figure 4. Average frequency power spectra.

The average frequency power spectra for each vocalization type were obtained by first averaging the spectra of all vocalizations for each bird and vocalization type, and then averaging across birds. 100 dB corresponds to the peak amplitude recorded (for Distance calls, approximately 80 dB SPL at 20 cm). Abbreviations are defined in Figure 3.

Figure 7. Box and whisker plots for 6 out of the 22 PAFs vs vocalization type.

These parameters were chosen to illustrate the distinctive acoustical properties of vocalization categories. The bottom and top of each box correspond to the first and third quartiles, so the box spans the 2nd and 3rd quartiles; the whiskers show the entire range of values found in our data set. In all plots, the vocalization types (x-axis) are ordered by increasing value of the corresponding acoustical feature to facilitate the interpretation of the results. Two acoustical properties related to the fundamental frequency are shown in the first row. These are the fundamental frequency F0 (A) and the saliency (B), defined as the proportion of sections of the vocalization with an autocorrelation peak amplitude at the periodicity period greater than 50% of the peak amplitude at zero delay. Two acoustical properties related to the spectral envelope are shown in the middle row: the spectral mean (C) and the spectral bandwidth (D). Finally, two additional properties, the duration (E) and the sound intensity (F), are shown in the third row. The symbols *, ** and *** indicate significant differences between male and female vocalizations for specific types in post-hoc tests, with p < 0.05, < 0.01 and < 0.001, respectively. Vocalization abbreviations are defined in Figure 3.

Table 2. Frequency of spectral peaks (formants, in kHz) in the average spectral envelope of each vocalization type.

The locations of these peaks are shown as dotted lines in Figure 5a.

| Vocalization type | F1 | F2 | F3 | F4 |
|---|---|---|---|---|
| Begging | 0.67 | 1.69 | 4.67 | 6.53 |
| Long Tonal | 1.67 | 3.86 | | |
| Tuck | 1.22 | 2.55 | | |
| Thuk | 1.36 | 2 | | |
| Distress | 0.67 | 1.38 | 2.69 | |
| Wsst | 0.69 | 1.81 | | |
| Whine | 0.76 | 1.65 | | |
| Nest | 0.76 | 2.12 | | |
| Tet | 0.95 | 2.38 | | |
| Distance | 1.43 | 3.24 | | |
| Song | 1.19 | 3.72 | | |
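Formant frequencies such as those in Table 2 correspond to local maxima of an average spectral envelope. The sketch below picks those maxima with plain NumPy; it assumes the envelope is already smooth and expressed in dB, and the level cut-off (`min_level_db`) and function name are hypothetical, since the exact peak-picking procedure is described in Methods rather than here. The test envelope is synthetic, built with peaks at the Tet values of Table 2.

```python
import numpy as np


def formant_peaks(freqs_hz, envelope_db, min_level_db=-30.0):
    """Frequencies (kHz) of local maxima of a spectral envelope whose level is
    within |min_level_db| dB of the envelope maximum."""
    env = np.asarray(envelope_db, dtype=float)
    f = np.asarray(freqs_hz, dtype=float)
    interior = np.arange(1, env.size - 1)
    # strictly above the left neighbour, at least as high as the right one
    is_peak = (env[interior] > env[interior - 1]) & (env[interior] >= env[interior + 1])
    idx = interior[is_peak]
    idx = idx[env[idx] >= env.max() + min_level_db]
    return f[idx] / 1000.0


# Synthetic envelope with two resonances (not recorded data)
f = np.arange(0, 8001, 10.0)
lin = np.exp(-((f - 950) / 200) ** 2) + 0.5 * np.exp(-((f - 2380) / 300) ** 2) + 1e-3
env_db = 10 * np.log10(lin)
print(formant_peaks(f, env_db))
```

On real averaged envelopes, some smoothing before peak picking would likely be needed to avoid reporting individual harmonics as formants.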

Juvenile Calls

Begging Calls

Four days after hatching, chicks start to emit Begging calls, described as “soft cheeping sounds”, while gaping to elicit feeding by their parents (Zann, 1996). The acoustic structure of this vocalization changes over development, becoming the loud, noisy, broadband sounds emitted in long bouts by 15- to 40-day-old chicks (see the ontogeny of this call described in Zann, 1996 and Levrero et al., 2009). In the present study, we recorded the mature Begging calls of 19- to 25-day-old fledglings (0 to 4 days after the chick left the nest; Figure 3). Begging calls were recorded while the chick was displaying the typical begging posture of zebra finches, with the head twisted and the beak open. Begging calls were among the three “noisiest” vocalizations of the repertoire on average, as quantified by our measure of pitch saliency (see Fig. 7B; Wald test, P < 10⁻⁴). Note, however, that Begging calls exhibited a very large range of pitch saliencies and were far from lacking harmonic structure, as can be seen in the examples in Figure 3 and Supplementary Figure 3. In addition, Begging calls had the highest occurrence of double voices (29% vs. 13% on average; Wald test, P < 10⁻⁴). We detected the occurrence of two voices by the presence of harmonics in the spectrogram that were not multiples of the principal fundamental. This measure was computed only on sections of the call that had harmonic structure (pitch saliency > 0.5). Supplementary Figure 3 shows examples of calls with two voices, including a Begging call. Double voices were quite common in the two juvenile vocalizations and might, in these cases, reflect immature control of the vocal organ.
In other avian species, double voices can generate frequency beats (Robisson et al., 1993) or rapid switching of notes in songs (Allan and Suthers, 1994), both of which might be required to produce behaviorally effective signals and might provide additional information about the identity of the caller. In zebra finches, the presence of double voices could help distinguish among call categories and, in particular, distinguish juvenile calls from adult calls. Begging calls also had a very distinctive spectral envelope characterized by two high-frequency formants (F3 and F4) between 4 and 8 kHz (visible in the spectrogram example of Figure 3; see also Figures 4 and 5a and Table 2). These high-frequency resonances were unique to this call type. As a result, the mean frequency of their spectral envelope, or spectral mean, was significantly higher than the average across all vocalization types (Mean S = 5430 Hz vs. the overall mean of 2970 Hz; Wald test, P < 10⁻⁴; Figure 7C and Supplementary Table 2). Begging calls also had a relatively large frequency bandwidth (Figure 7D). In terms of temporal properties, Begging calls had an average duration (Figures 6B and 7E; Wald test, P = 0.48) but a very large spread (Figure 7E); this range of durations was observed within birds, since Begging call bouts are often composed of calls of varying lengths (see the spectrogram shown in Figure 3). Zann (1996) noted that Begging calls were among the loudest calls in the repertoire and could be heard as far as 100 m away. Our measurements support that observation: Begging calls were on average the second loudest vocalization after the Distance call, although their intensities also spanned a very large range (Figures 6A and 7F). Because of these unique acoustical properties, Begging calls were very easily classified by discrimination algorithms, as we show below (Figures 10 and 11B).
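The double-voice measure described in this section (harmonics that are not integer multiples of the principal fundamental, counted only on sections with pitch saliency > 0.5) can be sketched as follows. This is a hedged illustration: it assumes spectral peak frequencies and F0 have already been estimated for each section, and the 5% tolerance, the per-section counting and the function names are hypothetical choices rather than the published procedure.

```python
def has_second_voice(peak_freqs_hz, f0_hz, rel_tol=0.05):
    """True if any spectral peak lies farther than rel_tol * f0 from every integer multiple of f0."""
    for f in peak_freqs_hz:
        n = max(1, round(f / f0_hz))  # nearest harmonic number (at least the fundamental)
        if abs(f - n * f0_hz) > rel_tol * f0_hz:
            return True
    return False


def double_voice_fraction(sections, saliency_threshold=0.5, rel_tol=0.05):
    """Fraction of harmonic sections (pitch saliency > threshold) that contain a second voice.

    sections: iterable of (saliency, f0_hz, peak_freqs_hz) tuples.
    """
    harmonic = [(f0, peaks) for saliency, f0, peaks in sections if saliency > saliency_threshold]
    if not harmonic:
        return 0.0
    n_double = sum(has_second_voice(peaks, f0, rel_tol) for f0, peaks in harmonic)
    return n_double / len(harmonic)


# Hypothetical sections: (saliency, F0 in Hz, detected peak frequencies in Hz)
sections = [
    (0.9, 600.0, [600, 1200, 1800]),   # single voice
    (0.8, 600.0, [600, 1200, 1450]),   # 1450 Hz is not a multiple of 600 Hz
    (0.3, 600.0, [600, 950]),          # too noisy, excluded
    (0.7, 500.0, [500, 1000, 1500]),   # single voice
]
print(double_voice_fraction(sections))
```

Here one of the three harmonic sections contains a non-harmonic peak, so the fraction is 1/3; the noisy section is ignored, as in the text.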

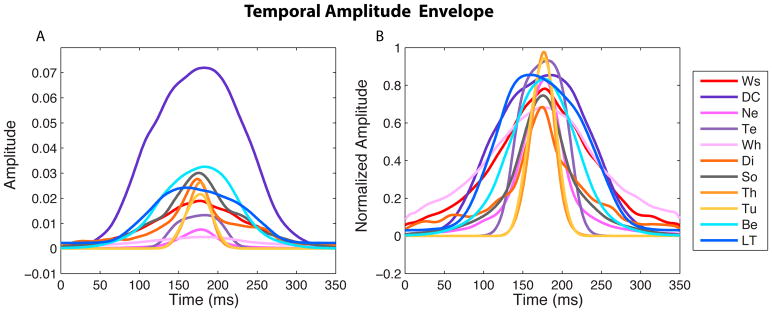

Figure 6. Temporal Amplitude Envelopes.

The average temporal amplitude envelope of each vocalization type is shown unnormalized in the left panel (A) and normalized by peak amplitude in the right panel (B). Note that the y-axis is linear, not logarithmic as in the frequency power spectra of Figure 4. These average envelopes were obtained by first averaging for each bird and vocalization type, and then averaging across birds. Abbreviations are defined in Figure 3.

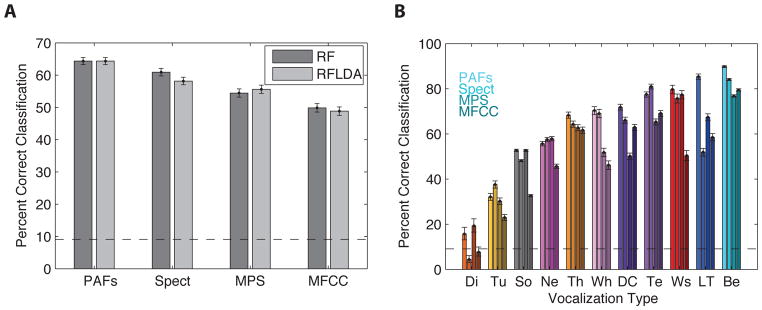

Figure 11. Performance of the Random Forest (RF) and Regularized Fisher Linear Discriminant Analysis (RFLDA) for all vocalization types and for each feature space.

A. Average performance of each classifier across all vocalization types. The error bars are confidence intervals obtained from a binomial fit of the classification performance on cross-validated data. The dotted horizontal line shows the chance level (1/11). B. Performance of the RFLDA for each vocalization type. Shades from light to dark represent the four feature spaces: Predefined Acoustical Features (PAFs), Spectrogram (Spect), Modulation Power Spectrum (MPS) and Mel Frequency Cepstral Coefficients (MFCC). The vocalization types on the x-axis are sorted in ascending order of the percentage of correct classifications obtained with the Spectrogram feature space. Abbreviations are defined in Figure 3.
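The caption states that error bars come from a binomial fit of cross-validated performance and compares it with a chance level of 1/11. The exact interval method is not specified here, so the sketch below uses the Wilson score interval as one common choice (an assumption); the counts in the usage lines are hypothetical.

```python
import math
from statistics import NormalDist


def wilson_interval(n_correct, n_total, confidence=0.95):
    """Wilson score interval for a binomial proportion (e.g. fraction correctly classified)."""
    if n_total <= 0:
        raise ValueError("n_total must be positive")
    z = NormalDist().inv_cdf(0.5 + confidence / 2.0)
    p = n_correct / n_total
    denom = 1.0 + z * z / n_total
    center = (p + z * z / (2.0 * n_total)) / denom
    half = z * math.sqrt(p * (1.0 - p) / n_total + z * z / (4.0 * n_total ** 2)) / denom
    return center - half, center + half


def above_chance(n_correct, n_total, n_classes=11, confidence=0.95):
    """True if the lower bound of the interval exceeds chance (1 / n_classes)."""
    low, _ = wilson_interval(n_correct, n_total, confidence)
    return low > 1.0 / n_classes


# Hypothetical cross-validated counts for one classifier and feature space
print(wilson_interval(50, 100))  # roughly (0.404, 0.596)
print(above_chance(30, 100))     # True
print(above_chance(5, 100))      # False
```

The Wilson interval stays inside [0, 1] and behaves better than the simple normal approximation when performance is near 0 or 100%, which is why it is used for this illustration.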

Long Tonal Calls

The Long Tonal call is a contact call produced by chicks when they are about to fledge (from 15 days post-hatch). Fledglings spontaneously emit this call when they lose visual contact with members of their family and in response to the Distance calls of their parents or the Long Tonal calls of their siblings (Zann, 1996). Here we report the analysis of Long Tonal calls of fledglings recorded 1 to 4 days after they left the nest (21- to 25-day-old chicks). The Long Tonal call is the precursor of the adult Distance call and starts to change slightly from 22 days post-hatch (Zann, 1996); it therefore shares many similarities with Distance calls. Long Tonal calls were highly harmonic, as quantified by very high pitch saliency values (Fig. 7B; Wald test, P < 10⁻⁴), with a range of fundamental frequencies very similar to that of the adult Distance call (Fig. 7A). The average fundamental frequency was 625 Hz for females and 671 Hz for males. Although this difference was not statistically significant (P = 0.15; post-hoc mixed-effect model; see also the differences in range between males and females), it showed a trend consistent with the sex differences observed for the adult Distance call, described below. The shape of the frequency spectrum and the location of the first two formants in Long Tonal calls were also very similar to those of Distance calls, with the juvenile call shifted slightly towards higher frequencies and having a slightly larger bandwidth (Figures 4, 5A and 7D and Table 2). Long Tonal calls also had durations similar to those of Distance calls and were among the longest calls in the repertoire (measured as temporal width, Std T: 43.8 ms vs. the overall mean of 34.7 ms; Figures 6B and 7E; Wald test, P = 0.0003).
Finally, the loudness of Long Tonal calls was intermediate compared with other vocalization types; in particular, these calls were significantly softer than the adult Distance call (see Supplementary Table 2; Figures 6A and 7F).
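Duration is reported here as a temporal width (Std T) rather than as onset-to-offset time. A minimal sketch, assuming Std T is the standard deviation of time when the amplitude envelope is treated as a probability distribution over time (consult Methods for the exact definition); the function name is hypothetical.

```python
import numpy as np


def temporal_width(envelope, fs):
    """Mean time and standard deviation (s) of a non-negative amplitude envelope
    treated as a distribution over time (assumed definition of Std T)."""
    env = np.clip(np.asarray(envelope, dtype=float), 0.0, None)
    t = np.arange(env.size) / fs
    w = env / env.sum()                      # normalize envelope to unit area
    mean_t = np.sum(w * t)
    std_t = np.sqrt(np.sum(w * (t - mean_t) ** 2))
    return mean_t, std_t


# A flat (rectangular) 100 ms envelope sampled at 1 kHz
_, std_rect = temporal_width(np.ones(100), 1000)

# A Gaussian envelope centred at 0.2 s with a 40 ms standard deviation
fs = 10000
t = np.arange(4000) / fs
mean_gauss, std_gauss = temporal_width(np.exp(-0.5 * ((t - 0.2) / 0.04) ** 2), fs)
```

Because it is a second moment, Std T is smaller than the total duration: for a rectangular call of duration D it equals D/√12, or about 0.29 D.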

Juveniles also produced Distress calls and Tet calls that shared the acoustical characteristics of the adult calls described below. Examples of these calls are provided as additional sound files (Supplementary Sound File 6 for a juvenile Distress call and Supplementary Sound File 7 for a juvenile Tet call). However, we did not record enough juvenile Distress and Tet calls to quantify any differences from the adult calls, should such differences exist.

Affiliative calls

Tet Calls and Distance Calls

Adult zebra finches produce two contact calls: the shorter and softer Tet call for short-range communication and the louder and longer Distance call for long-range communication (Mouterde et al., 2014; Perez et al., 2015; Zann, 1996). The Tet call is the most frequent vocalization, as it appears to be produced in an almost automatic and continuous fashion when zebra finches move around on perches or on the ground. These “background” Tet calls form an almost continuous hum and do not appear to elicit a particular response from nearby birds, although Elie et al. (2010) showed that, in the wild, Tet calls could also be used for mate recognition by nesting birds. Changes in the rate at which Tet calls are produced might also be informative: a sudden decrease in the Tet call rate could signal an unusual event, and both the intensity and the rate of Tet calls increase before takeoff (Zann, 1996). Tet calls are also, along with Nest calls and Whine calls, components of the quiet duets that mates perform at nest sites (Elie et al., 2010). In a recent analysis of short-range contact calls produced by captive zebra finches, Ter Maat et al. (2014) distinguished Tet calls from Stack calls: Tet describes the slightly shorter and more frequency-modulated contact calls, and Stack describes the calls that appear as flat harmonic stacks in spectrographic representations. To investigate whether these two types of soft contact calls belong to a single acoustical category or to two distinct acoustical groups, we performed unsupervised clustering on all the soft contact calls that we recorded and labeled as Tets. The clustering was performed separately on male and female Tets because, as we show below, Tets are also sexually dimorphic. Here, the Tets were represented by the first 10 principal components (PCs) obtained from the 22 PAFs (see Methods and Figure 1).
As shown in Supplementary Figure 4B and C, female and male Tet calls can each be clustered into two groups: one with low values of the coefficient of variation (CV) of the fundamental (corresponding to the description of the Stack call by Ter Maat et al., 2014) and one with high CV values (corresponding to the description of the Tet call by Ter Maat et al., 2014). Because, in our observations, these two acoustically distinct call types were produced in the same behavioral context, we grouped them together and refer to them as Tets from here on.
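The clustering step above (22 PAFs reduced to the first 10 PCs, then unsupervised grouping) can be sketched with NumPy alone. The clustering algorithm is not named in this paragraph, so k-means with k = 2 and random restarts is used purely as a stand-in (an assumption); the data are synthetic, with two hypothetical groups that differ in 5 of 22 features. In practice the procedure would be run once on male Tets and once on female Tets.

```python
import numpy as np


def pca_scores(features, n_components=10):
    """Z-score each feature column, then project onto the first principal axes (via SVD).
    Assumes no feature column is constant (zero standard deviation)."""
    X = np.asarray(features, dtype=float)
    Z = (X - X.mean(axis=0)) / X.std(axis=0)
    _, _, vt = np.linalg.svd(Z, full_matrices=False)
    return Z @ vt[:n_components].T


def kmeans(X, k=2, n_init=10, n_iter=100, seed=0):
    """Lloyd's k-means with random restarts; returns the labels with the lowest inertia."""
    rng = np.random.default_rng(seed)
    best_labels, best_inertia = None, np.inf
    for _ in range(n_init):
        centers = X[rng.choice(len(X), size=k, replace=False)]
        for _ in range(n_iter):
            dist = np.linalg.norm(X[:, None, :] - centers[None, :, :], axis=2)
            labels = dist.argmin(axis=1)
            new_centers = np.array([
                X[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
                for j in range(k)
            ])
            if np.allclose(new_centers, centers):
                break
            centers = new_centers
        inertia = np.sum((X - centers[labels]) ** 2)
        if inertia < best_inertia:
            best_labels, best_inertia = labels, inertia
    return best_labels


def coefficient_of_variation(f0_track):
    """CV of the fundamental over a call: standard deviation divided by mean."""
    f0 = np.asarray(f0_track, dtype=float)
    return f0.std() / f0.mean()


# Synthetic "calls": 80 calls x 22 features, second group shifted on 5 features
rng = np.random.default_rng(1)
X = rng.standard_normal((80, 22))
truth = np.repeat([0, 1], 40)
X[truth == 1, :5] += 6.0
labels = kmeans(pca_scores(X, n_components=10), k=2)
```

After clustering, summarizing a feature such as the CV of the fundamental within each cluster is what identifies the Stack-like (low CV) and Tet-like (high CV) groups.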

Distance calls are produced when zebra finches are out of immediate visual contact with the colony, their mate or the fledglings they care for. Distance calls can be produced both during flight and while perched. These loud contact calls carry individual information, elicit orienting responses and vocal callbacks in both juveniles and adults, and promote reunions: they are used for sex, mate, parent or kin recognition (Mouterde et al., 2014; Mulard et al., 2010; Perez et al., 2015; Vicario et al., 2001; Vignal et al., 2004). In our recordings, the Distance calls, the Long Tonal calls and the Tet calls had the highest levels of pitch saliency (Figure 7B). The sharp harmonic structure of all these contact calls can be seen in the spectrogram examples shown in Figure 3. Of the three harmonic contact calls, Tet calls had the lowest fundamental frequency (Figure 7A; mean F0 across males and females: Tet = 558 Hz, Distance = 680 Hz, Long Tonal = 655 Hz; see Supplementary Table 2). Tet, Distance and Long Tonal calls could also be distinguished by their spectral envelope and formant frequencies: Distance and Long Tonal calls had among the highest first and second formants (see Figure 5A and Table 2), whereas the formants of Tet calls were at intermediate frequencies. As a result, the spectral mean of Tet calls was significantly lower than that of Distance and Long Tonal calls (Tet = 2280 Hz, Distance = 3580 Hz, Long Tonal = 3600 Hz; see Figure 7C and Supplementary Table 2). Tet calls also differed from the other two contact calls in duration and loudness. Tet calls were very short, while Distance and Long Tonal calls were among the longest (Std T: Tet = 23.9 ms, Distance = 47.7 ms, Long Tonal = 43.8 ms; see Figures 6B and 7E and Supplementary Table 2). As one might expect given their function, Tet calls were also much softer than Distance calls (see Figures 4, 6A and 7F, and Supplementary Table 2).
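The spectral mean used to compare Tet, Distance and Long Tonal calls is the average frequency of the spectrum, which depends on where the energy lies rather than on F0 alone. A minimal sketch, assuming the spectral mean and bandwidth are the first moment and standard deviation of the power spectrum treated as a distribution over frequency; here a single Hann-windowed FFT stands in for the averaged spectral envelope, and the function name is hypothetical.

```python
import numpy as np


def spectral_mean_and_bandwidth(signal, fs):
    """Power-weighted mean frequency and its standard deviation (Hz) of a Hann-windowed signal."""
    x = np.asarray(signal, dtype=float)
    power = np.abs(np.fft.rfft(x * np.hanning(x.size))) ** 2
    freqs = np.fft.rfftfreq(x.size, d=1.0 / fs)
    weights = power / power.sum()            # treat the power spectrum as a distribution
    mean_f = np.sum(weights * freqs)
    bandwidth = np.sqrt(np.sum(weights * (freqs - mean_f) ** 2))
    return mean_f, bandwidth


fs = 44100
t = np.arange(4410) / fs                     # 100 ms, 10 Hz frequency resolution
tone = np.sin(2 * np.pi * 2000 * t)
# Harmonic stack with F0 = 500 Hz and four equal-amplitude harmonics
stack = sum(np.sin(2 * np.pi * 500 * h * t) for h in range(1, 5))
tone_mean, tone_bw = spectral_mean_and_bandwidth(tone, fs)
stack_mean, stack_bw = spectral_mean_and_bandwidth(stack, fs)
```

The harmonic stack has F0 = 500 Hz but a spectral mean near 1250 Hz, the average of its harmonics, which illustrates how two calls with similar fundamentals can differ in spectral mean when their formants differ.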

Nest Calls

Potential nest sites and nests are the setting for two particular soft calls: the Nest calls and the Whine calls. Nest and Whine calls are emitted by paired birds during reproductive activities: when they are searching for a new nest site, when they are building their nest and almost every time they relieve each other at the nest during the brooding period (Zann, 1996; Elie et al., 2010; Gill et al., 2015). These calls are emitted in sequence either by a single partner (especially by the male when leading the nest search; Zann, 1996) or by both birds, which then perform soft duets using these calls in combination with Tet calls (Elie et al., 2010). Zann divided Nest calls into Ark and Kackle calls. According to Zann, Kackles are shorter, raspy, loud calls produced initially around potential nesting sites, whereas Arks are longer, softer, harsh-sounding calls that often come in pairs, as in “ark-ark”. Although in captivity we also recorded Nest calls that could be classified as Ark and Kackle calls using Zann’s descriptions (see examples in Figure 3), we found that our Nest calls were emitted in the same behavioral context and were best described by a unimodal distribution, as revealed by the unsupervised clustering algorithm (see Supplementary Figure 5). We therefore did not separate them into subcategories. Nest calls were among the shortest vocalizations, with durations similar to those of Tet calls and much shorter than those of Whine calls (Std T = 28 ms; see Figures 6B and 7E; Wald test, P = 0.015). Their pitch saliency was far lower than that of Tet calls and similar to the mid-range pitch saliency of Whine calls (Figure 7B). The two formants of Nest calls were lower than those of Tet calls. Compared with Whine calls, Nest calls had an identical first formant but a higher second formant (Table 2).
As a result, Nest calls had the second lowest spectral mean, after Whine calls (Mean S = 2013 Hz; see Figure 7C; Wald test, P < 10⁻⁴). Nest calls were also among the softest calls, with an intensity between that of Whine calls and that of Tet calls (see Figures 4, 6A and 7F; Wald test, P < 10⁻⁴).

Whine Calls