Abstract

Background

Studies addressing the appropriateness of laboratory testing have revealed approximately 20% overutilization. We conducted a narrative review to (1) describe current interventions aimed at reducing unnecessary laboratory testing, specifically in hospital settings, and (2) provide estimates of their efficacy in reducing test order volume and improving patient-related clinical outcomes.

Methods

The PubMed, Embase, Scopus, Web of Science, and Canadian Agency for Drugs and Technologies in Health-Health Technology Assessment databases were searched for studies describing the effects of interventions aimed at reducing unnecessary laboratory tests. Data on test order volume and clinical outcomes were extracted by one reviewer, while uncertainties were discussed with two other reviewers. Because of the heterogeneity of interventions and outcomes, no meta-analysis was performed.

Results

Eighty-four studies were included. Interventions were categorized into educational, (computerized) provider order entry [(C)POE], audit and feedback, or other interventions. Nearly all studies reported a reduction in test order volume. Only 15 assessed sustainability up to two years. Patient-related clinical outcomes were reported in 45 studies, two of which found negative effects.

Conclusions

Interventions from all categories have the potential to reduce unnecessary laboratory testing, although long-term sustainability is questionable. Owing to the heterogeneity of the interventions studied, it is difficult to conclude which approach was most successful, and for which tests. Most studies had methodological limitations, such as the absence of a control arm. Therefore, well-designed, controlled trials using clearly described interventions and relevant clinical outcomes are needed.

Keywords: Laboratory utilization, Laboratory testing, Unnecessary laboratory utilization, Unnecessary laboratory testing, Reduction, Intervention

INTRODUCTION

Over the past decades, Western countries have witnessed a marked rise in healthcare expenditure, with annual growth rates exceeding the rise in gross domestic product [1]. The constantly expanding field of diagnostics has contributed to this exponential growth in curative health-care costs. Rapid increases have been seen in the volumes and costs of different types of diagnostics, with absolute test volumes doubling every five to ten years in the United States, the United Kingdom, and Canada [2].

Laboratory testing represents the largest volume of medical activity and is considered to influence more than 70% of decision making in medical practice [2,3]. In 2015, Kobewka et al [4] reviewed numerous international studies and concluded that a considerable proportion of performed (laboratory) tests were unnecessary. Another review addressing the appropriateness of diagnostic laboratory testing reported a mean rate of overutilization of approximately 20% [5]. Statistically, laboratory test results will deviate from normal in 5% of healthy individuals [6]. Besides the financial impact, overutilization increases the number of false-positive results, leading to additional, sometimes invasive and potentially harmful, tests. In addition, excessive blood draws can result in iatrogenic anemia [7,8]. Moreover, excessive testing can lead to less patient-friendly practices. Therefore, a reduction in unnecessary laboratory testing is often targeted with the aim of improving patient safety and reducing healthcare expenditure. Such a reduction does not appear to lead to adverse patient outcomes and might even reduce the length of hospital stay and the need for red cell transfusion [8,9,10,11,12].
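The 5% figure compounds when several tests are ordered together. As a rough illustration, assuming that each test is statistically independent and that each reference interval covers 95% of healthy individuals (simplifying assumptions that are not made in the cited sources; real analytes are often correlated), the probability that a healthy person shows at least one out-of-range result among n tests is 1 − 0.95^n. The function name below is hypothetical.

```python
def prob_any_abnormal(n_tests: int, coverage: float = 0.95) -> float:
    """Probability that a healthy individual has at least one result outside
    the reference interval among n_tests tests.

    Assumes independent tests, each with a reference interval that covers
    `coverage` of healthy individuals (an illustrative simplification).
    """
    if n_tests < 0:
        raise ValueError("n_tests must be non-negative")
    return 1.0 - coverage ** n_tests


# Show how the chance of a spurious abnormal result grows with panel size.
for n in (1, 5, 10, 20):
    print(f"{n:>2} tests: {prob_any_abnormal(n):.1%}")
```

Under these assumptions, a panel of 20 tests would yield at least one abnormal result in roughly two thirds of healthy individuals, which is one way to see why overutilization inflates false-positive findings.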

Interventions to reduce unnecessary laboratory testing, such as educational sessions or posters, pop-up reminders upon test ordering through an electronic ordering system, modification of paper order forms, or providing clinicians insight into their ordering patterns, have been implemented and studied in different clinical settings in many countries [4,13]. Although a few reviews have examined the efficacy of these interventions across different settings [4,13], no recent review has focused exclusively on the hospital setting. Therefore, this review aims to describe the different types of interventions implemented to reduce unnecessary laboratory testing in hospital settings as well as the overall efficacy of these interventions and their impact on patient-related clinical outcomes.

METHODS

1. Data sources and search strategy

We initially searched the PubMed, Embase, and Canadian Agency for Drugs and Technologies in Health-Health Technology Assessment (CADTH HTA) databases from inception through July 2016 for potentially relevant articles describing interventions to reduce unnecessary laboratory testing in hospital settings. We combined synonyms of the following terms: laboratory test, reduction, and intervention. Supplemental Data S1 provides an overview of all search terms used. Highly relevant papers found in this initial screening of titles and abstracts were selected and subjected to backward reference checking in Scopus. Of the papers retrieved in this round, a selection was checked backwards and forwards for references in Scopus and Web of Science. Our search was not exhaustive, as the aim of our effort was not to report and compare exact estimates of effectiveness, but merely to describe published interventions and provide crude estimates of their effectiveness.

2. Study selection

We selected only hospital-based studies that reported an intervention to reduce unnecessary laboratory testing and presented data on changes in test order volumes. Only articles written in English or Dutch with full text available were included. We defined unnecessary laboratory tests as those with results that did not generate added value in clinical decision making, relying on the authors' judgment. Studies were excluded when only the influence of the intervention on costs was presented or when reduction in test order volumes was given only for a subset of all tests studied. We chose to exclude the latter to avoid over-optimism that might occur when selective results are presented.

3. Data extraction and quality assessment

For each report included, data on the type of intervention(s) carried out were extracted. The interventions were categorized as educational interventions, (computerized) provider order entry [(C)POE] interventions, audit and feedback interventions, and others, based in part on a subdivision previously used by Kobewka et al [4]. We extracted data on the reduction in test order volume, which was expressed as the percentage change in order volume of the targeted tests before and after the intervention.
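As a minimal sketch of the outcome measure described above, the percentage change in order volume can be computed from the pre- and post-intervention volumes. The function name and the example counts are hypothetical and are not taken from any included study.

```python
def percent_reduction(volume_before: float, volume_after: float) -> float:
    """Percentage reduction in test order volume after an intervention.

    A positive value means fewer tests were ordered after the intervention;
    a negative value means order volume increased.
    """
    if volume_before <= 0:
        raise ValueError("volume_before must be positive")
    return (volume_before - volume_after) / volume_before * 100.0


# Hypothetical example: 1,200 targeted tests before, 900 after.
print(f"{percent_reduction(1200, 900):.1f}% reduction")
```

The same calculation applies whether the volume is a total count or a rate (e.g., tests per patient day), as long as the before and after values use the same unit.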

Further, we assessed the study design and characteristics of the comparators used. To get an indication of the study size, the number of participating centers was recorded along with a measure of study population, such as number of visits and admissions and number of hospital days. We assessed the number of tests targeted and the reproducibility and sustainability of the interventions (i.e., reduction in test order volume up to 2 years post-intervention). In addition, we noted whether the studies provided data on patient-related (clinical) outcomes that might have been affected by the modification of laboratory utilization, such as hospital length of stay, number of intensive care unit (ICU) admissions, number of readmissions, and mortality.

Data were extracted by one reviewer (RB). Uncertainties in data extraction were discussed with two other reviewers (MB, PN) until consensus was reached. Because of the anticipated heterogeneity of the tests, studied interventions, and reported outcome measures, we did not perform a meta-analysis.

RESULTS

1. Search results

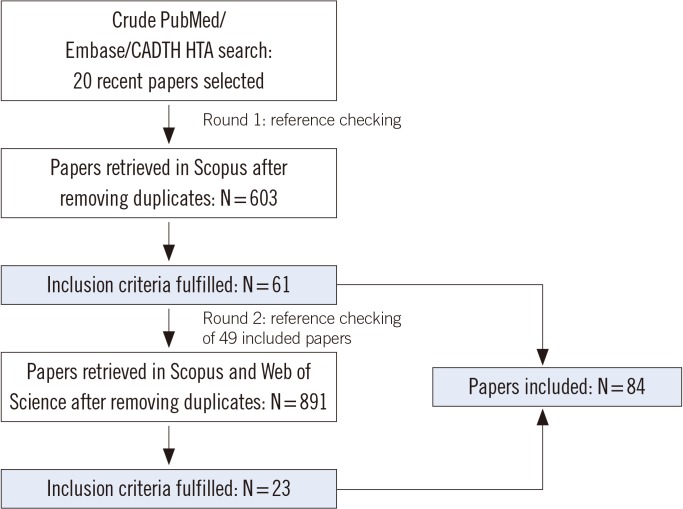

After backward reference checking of 20 relevant papers selected from our PubMed/Embase/CADTH HTA database search, we retrieved 603 unique papers. Of these, 61 papers met our inclusion criteria. A selection of these papers was checked for references backwards and forwards. Of the 891 papers retrieved in this search, 23 papers fulfilled our inclusion criteria. Fig. 1 illustrates our search algorithm.

Fig. 1. Flowchart of the literature search algorithm used for identifying and selecting studies for inclusion in this review.

Abbreviation: CADTH HTA, Canadian Agency for Drugs and Technologies in Health-Health Technology Assessment.

2. Study characteristics and quality assessment

Table 1 lists characteristics of studies included (N=84) in terms of design, presence and similarity of a comparator group, study size, number of tests targeted, reproducibility of the intervention, sustainability of effects, and reported effect on clinical outcomes if investigated. A more detailed overview of the individual studies can be found in Supplemental Data Table S2.

Table 1. Characteristics of included studies.

| | N (%) |
|---|---|
| Study design | |
| Before-after study | 56 (66.7) |
| Retrospective audit | 8 (9.5) |
| Randomized controlled trial | 5 (6.0) |
| Non-randomized controlled trial | 15 (17.8) |
| Similarity of patients and providers between comparison groups | |
| Both patients and providers comparable between both groups | 7 (8.3) |
| Patients comparable, providers not comparable | 1 (1.2) |
| Patients and providers not comparable | 1 (1.2) |
| Patients comparable, no data on comparability of providers | 21 (25.0) |
| Patients not comparable, no data on comparability of providers | 3 (3.6) |
| Providers comparable, no data on comparability of patients | 7 (8.3) |
| No data on comparability of either patients or providers | 36 (42.9) |
| No comparator group | 8 (9.5) |
| Number of centers included | |
| Single center | 78 (92.9) |
| Multiple centers | 6 (7.1) |
| Number of tests studied | |
| 1 | 17 (20.2) |
| 2–5 | 5 (6.0) |
| >5 | 53 (63.1) |
| Unclear | 9 (10.7) |
| Reproducible intervention | |
| Yes | 44 (52.4) |
| No | 40 (47.6) |
| Sustainability assessed | |
| Yes | 15 (17.9) |
| No | 69 (82.1) |
| Data on clinical outcomes reported | |
| Yes | 45 (53.6) |
| No | 39 (46.4) |

1) Study design and characteristics of comparator

Of the five randomized controlled trials, randomization was performed at the patient level in two studies, at the provider level in two studies, and at the test level in one study (i.e., a test was randomized to be subject to the intervention or not). Of the non-randomized controlled trials included, six used (a subset of) other tests as a control arm (e.g., a CPOE intervention in which the intervention applied to a subset of tests and another subset was used as a comparator), six used another department within the same clinic, and in three studies, another clinic was used as the control arm.

For controlled trials, we assessed whether the intervention group and the control group were comparable with regard to both the providers subjected to the intervention and the patients for whom they provided care. In before-after studies, we assessed whether the patient and provider groups before and after the intervention were comparable. As shown in Table 1, patients and providers were both comparable in only seven studies (8.3%).

2) Study population and tests

The numbers of visits and admissions analyzed ranged from 287 to 5,026,049. The number of hospital days analyzed ranged from 9,890 to 1,557,550. The majority of studies (93%) were single-center studies. In the majority of studies, more than five tests were targeted.

3) Reproducibility of the intervention

We assessed whether the interventions were described in sufficient detail to allow replication in another setting. This was the case in 44 studies, most of which (59%) reported (C)POE interventions. Information provided included the guidelines that were developed and screenshots of the modified order screen or form.

4) Sustainability

Only 15 studies (17.9%) investigated sustainability. All of these demonstrated a reduction in test order volume that was sustained for up to two years.

3. Interventions

Forty-four studies had an educational component, 49 had a (C)POE component, and 25 had an audit and feedback component. The majority of studies (55%) reported interventions in a single category. The remaining studies involved a combination of interventions from different categories. Table 2 shows the classification of studies by category of interventions used.

Table 2. Classification of interventions.

| | N (%) |
|---|---|
| Studies in which a single intervention was performed | 46 (54.8) |
| Educational | 9 (10.7) |
| (C)POE | 33 (39.3) |
| Audit and feedback | 0 (0) |
| Others | 4 (4.8) |
| Studies in which combined interventions were performed | 38 (45.2) |
| Educational & audit and feedback | 15 (17.8) |
| Educational & (C)POE | 4 (4.8) |
| Educational & others | 3 (3.5) |
| Audit and feedback & (C)POE | 1 (1.2) |
| (C)POE & others | 2 (2.4) |
| Educational & (C)POE & others | 4 (4.8) |
| Educational & audit and feedback & others | 4 (4.8) |
| Educational & audit and feedback & (C)POE | 3 (3.5) |
| Educational & audit and feedback & (C)POE & others | 2 (2.4) |

Abbreviation: (C)POE, (computerized) provider order entry.

Table 3 provides an overview of the observed changes in test order volume in the individual studies included in this review. We classified all studies by category of intervention(s) used. A variety of outcomes are used to express the change in test order volume, e.g., “reduction in total number of tests,” “reduction in the number of tests per patient per day,” and “reduction in the number of tests per admission.” For a more detailed description of the individual studies, see Supplemental Data Table S2.

Table 3. Test volume reduction by category of intervention(s).

| Ref | Reduction in testing |
|---|---|
| Education | |
| [8] | 8.7%* |
| [28] | 32.7%† |
| [30] | 22.4%‡ |
| [32] | 27.8%* |
| [33] | 14.7%§ |
| [18] | 29.9%‡ |
| [34] | 57%‡ |
| [35] | 28.6% (I) vs 11.8% (C)∥ |
| [36] | 40.6% (I) vs 21.3% (C)† |
| (C)POE | |
| Soft stop | |
| [39] | 46% (pre-I) vs 14% (post-I)¶ |
| [41] | 22.2–53.7% (I) vs 1.7–40.1% (C)∥ |
| [43] | 16.7%‡ |
| [45] | 21%‡ |
| [46] | 39.8%‡ |
| [47] | 19.5%‡ |
| [49] | 73% (I) vs 49% (C)** |
| Hard stop | |
| [19] | 11.2%†† |
| [16] | 5.7%‡ |
| [53] | 96.6%** |
| [55] | 12.4% (I) vs 0.3% (C)‡‡ |
| [57] | 0.56%‡ |
| Soft stop vs hard stop | |
| [60] | 92.3% (I) vs 43.6% (C)‡ |
| Order form changes, display of fee | |
| [62] | 44.2%† |
| [64] | 3.9%† |
| [66] | 25.5% (I) vs 1.3% (C)§§ |
| [67] | 18.6%∥∥ |
| [69] | 8.6% (I) vs 5.6% (C)* |
| [20] | 17.3%‡‡ |
| [71] | 56.5%‡ |
| [73] | 54.3–52.5%+∥ |
| [74] | 19.1% (I) vs 40.6%+ (C)‡‡ |
| [76] | 18.5%∥ |
| [77] | 32.7%‡‡ |
| [79] | 4.5%¶¶ |
| [80] | 23.9%‡ |
| Time limits on orders | |
| [81] | 8.5%* |
| [83] | 11.5%* |
| [85] | 64.7%§ |
| Combined (C)POE & Others | |
| [7] | 33.3–48.5%* |
| [88] | 18.0%††† |
| [90] | 13.7%‡ |
| [92] | 55.2%† |
| Others | |
| [27] | 38%‡ |
| [29] | 12%‡ |
| [31] | 15.9%‡ |
| [15] | 3.6%† |
| Education & Audit/Feedback | |
| [25] | 5.1–7.0%§ |
| [24] | 25.5–42.2% (I) vs 3.7–22.4% (C)* |
| [3] | 21%‡ |
| [37] | 29.8%‡ |
| [10] | 12.3–52.0% (I) vs 26.5–8.5%+ (C)‡‡ |
| [38] | 14.6%* |
| [40] | 12%‡‡‡ |
| [42] | 48.6%‡ |
| [44] | 38.0–73.7%* |
| [9] | 20.8%* |
| [11] | 13.5%* |
| [48] | 4.5%+† |
| [50] | 41.5% (I) vs 10.0%+ (C)* |
| [51] | 24–32%* |
| [52] | 14%† |
| Education & (C)POE | |
| [54] | 26.7% and 36.0%‡ |
| [56] | 61.5% and 100%‡‡ |
| [58] | 3.1–58.5% (I) vs 4.1–33.9%+* |
| [59] | 41.9% and 44.8%‡ |
| Education & Others | |
| [61] | 20.7–56.3%* |
| [63] | 7.5%* |
| [65] | 69.5%‡ |
| Audit/Feedback & (C)POE | |
| [68] | 17%* |
| (C)POE & Others | |
| [70] | 33.3–60%* |
| [72] | 47.2%+§§§ |
| Education, (C)POE & Others | |
| [75] | 7.1–8.9%* |
| [21] | 66%‡ |
| [78] | 80.9% (I) vs 11.8% (C)‡ |
| [22] | 34.5% (I) vs 10.1–14.8% (C)∥ |
| Education, Audit/Feedback & Others | |
| [14] | 5.7–30.4% (I) vs 1.2–8.8%+ (C)§ |
| [82] | 47.4%‡ |
| [84] | 11.5%‡‡ |
| [86] | 10.7% (I1) vs 52.3% (I2) vs 23.5% (I3)∥ |
| Education, Audit/Feedback & (C)POE | |
| [87] | 20%‡ |
| [89] | 95%∥∥∥ |
| [91] | 19.0% (I) vs 7.6% (C)¶¶¶ |
| Education, Audit/Feedback, (C)POE & Others | |
| [93] | 8%* |
| [94] | 25.9%∥ |

*Number of target tests per (in)patient day; †Number of target tests per (in)patient; ‡Total number of target tests; §Number of tests per day; ∥Number of tests per admission, visit or discharge; ¶Percentage of admissions in which test was performed; **Percentage of redundant orders cancelled; ††Number of target tests per year; ‡‡Number of tests per month; §§Monthly tests per patient day; ∥∥Number of tests per 100 ED presentations; ¶¶Fewer tests in intervention group compared to control group; ***Number of tests per day; †††Number of tests per week per hospitalization; ‡‡‡Percentage of patients undergoing target test; §§§Number of tests per patient per visit; ∥∥∥Percentage reduction in use of panel; ¶¶¶Number of tests per 100 hospital days.

Abbreviations: Ref, reference; I, intervention group; C, control group; I1, intervention group 1; I2, intervention group 2; I3, intervention group 3.

1) Interventions with educational component

Out of 84 studies, nine implemented interventions that were exclusively educational. In 35 studies, interventions combining educational efforts with other approaches were implemented.

2) Interventions with (C)POE element

Thirty-three studies exclusively involved modifications in the (C)POE system. In 16 studies, these modifications were combined with other approaches. In seven studies, pop-up reminders were instated upon ordering a potentially redundant test, providing the opportunity to either cancel or continue the order (“soft stop”), which in some cases required justification. Five studies used a more rigorous approach by automatically rejecting orders that appeared to be redundant (“hard stop”), with or without a direct notification of the ordering provider. Another strategy used involved the unbundling or elimination of order panels or other modifications in order forms, e.g., by grouping tests by organ or disease, or displaying fee information. This strategy was used in 13 reports. A different approach was to limit the time window for order placement, with requests scheduled to be carried out beyond this time window being canceled, which was done in three studies.

3) Interventions with audit and feedback component

None of the studies included used audit and feedback methods solely. In 25 studies, audit and feedback methods, in which providers were presented with their ordering patterns, were combined with other interventions.

4) Other interventions

In three studies, test orders were reviewed for approval by a multidisciplinary team of specialists. In one study, the providers allowed to order tests were restricted.

4. Clinical patient outcomes

Possible effects of the reduction in laboratory test utilization on patient (clinical) outcomes were studied in slightly more than half (54%) of all studies evaluated. Clinical outcomes were generally not or positively affected by most of the interventions studied. Negative effects on patient outcomes were reported in only two papers. In the report by Finegan et al [15], test selection was individualized by staff or resident anesthesiologists instead of according to surgery-specific clinical pathways by surgical staff. Significantly more complications and a higher mortality rate were found in the intervention group, although the internist reviewing the complications concluded in all cases that additional tests would not have affected these outcomes. In the report by Smit et al [16], an electronic gatekeeping system was implemented, automatically rejecting orders not meeting specific rules. Some restored tests were evaluated after previous rejection, and the negative effects on duration of hospital stay and conducting further diagnostics were noted.

DISCUSSION

We provided an overview of the nature and effectiveness of interventions aimed at reducing unnecessary laboratory utilization on the basis of 84 peer-reviewed studies that investigated educational, (C)POE, audit and feedback, and other interventions. Nearly all the studied interventions had the potential to reduce unnecessary laboratory utilization without affecting patient safety. In the majority of studies, reductions in unnecessary diagnostics were achieved, which is consistent with previous findings [4,13]. Study design, type of intervention, targeted tests, and reported outcomes were heterogeneous. The positive effects reported in nearly all studies and the insufficient detail in study descriptions make it difficult to replicate the studies or to identify the exact elements underlying success. Finally, sustainability of the effects was examined in only a few studies. In nearly all studies, the authors concluded that their intervention was successful; however, most studies merely reported a reduction in test order volume, and no target for reduction was set at the outset, opening the way to considering the intervention successful on the basis of any positive number. In addition, publication bias may be involved, in that mainly studies with positive outcomes are reported.

Although the interventions could be subdivided into three broad categories, the study designs, interventions, and tests targeted were rather heterogeneous. Moreover, the outcomes were reported in various ways (e.g., “reduction in total number of tests,” “number of tests per patient day,” “number of tests per patient,” “number of tests per day,” and “number of tests per month”). Therefore, we conclude that it is not possible to assess the individual effectiveness of different types of interventions.

A change in test utilization requires changes in provider awareness and behavior. Knowledge and attitude are concepts regularly targeted in acquiring and sustaining behavioral change [17]. Increase of knowledge is targeted through education. Attitude can be influenced through audit and feedback methods: knowing that one is being monitored may change one's attitude towards testing, while feedback can also be a learning experience. (C)POE interventions focus directly on behavioral change, although they can contain educational elements as well. Because many interventions were not described in detail in the studies evaluated, it is difficult to identify which elements of an intervention led to success.

Although interventions from all categories seemed to be effective, most studies were relatively short and did not provide follow-up data to demonstrate the sustainability of the intervention. Another element to take into account when comparing interventions is adherence; in approximately half of the interventions, it was not clear to what extent care providers adhered to the interventions. Further, most studies did not use a control arm and had methodological limitations.

Many of the studies evaluated in this review focused on reducing repeated monitoring tests or (accidental) duplicate requests instead of assessing whether certain tests were indeed indicated. Additionally, patient-related (clinical) outcomes were studied in only slightly more than half of the studies. These outcomes, such as mortality, length of hospital stay, and admission to the ICU, remained mostly unaffected, although they are crude and it is unclear to what extent they are linked to a reduction in laboratory testing. Further, studies might not have had sufficient power to demonstrate an effect on the reported clinical outcomes. Only a few studies have investigated the consequences of reduced testing in terms of missed diagnoses [18,19,20,21,22]. This gives us the impression that efforts to reduce unnecessary testing have mostly focused on improvements in efficiency, without affecting patient outcomes.

1. Interventions with educational elements

Educational interventions provide an opportunity for a personal approach because physicians may be actively involved in the development and implementation of the intervention, e.g., through the development of guidelines. However, few of the studies we evaluated involved residents through educational sessions, flyers, e-mails, etc., an element that might further increase their commitment. A possible disadvantage of an educational approach is the amount of effort necessary to successfully carry out such an intervention. Here too, adherence might be a problem, as the extent to which care providers follow guidelines or algorithms, attend educational sessions, or read educational e-mails is often not clear.

2. Interventions with (C)POE elements

Most studies described in this review contain elements of changes in (C)POE systems. A major advantage of this type of intervention is the relatively little effort needed to carry out such an approach. While determining which modifications should be made in the order systems can be labor-intensive (e.g., how to modify order sets, how a new order form should be designed, and which time limits should be instated on which tests), once such modifications are implemented, no further action is needed. In general, provider adherence to these types of interventions is better than adherence to educational interventions since in most studies, all ordering providers receive the intervention upon ordering. Delvaux et al [23] recently published a systematic review on the effects of computerized clinical decision support systems on laboratory test ordering and noted that in the majority of studies, a positive effect was found in compliance with recommendations made by the order system.

3. Interventions with audit and feedback elements

In some studies, audits were performed to assess test order volume, while other studies also assessed test appropriateness. Providers were subsequently presented with data on their ordering patterns. The amount of effort this approach requires differs depending on the content and frequency of auditing and feedback. As was described in these studies, feedback can be provided about the entire study population or on an individual basis, with or without comparison to peers, and, in some cases, anonymously. The level of feedback might influence the extent of commitment [24,25].

4. Comparison with the literature

In line with findings in other reviews on de-implementation, we found that most interventions were successful [4,13]. Because of the heterogeneity in the interventions studied and the outcomes reported, we found it difficult to compare effectiveness and to draw conclusions as to which intervention(s) is/are most successful. This difficulty was also encountered by Delvaux et al [23]. However, previous reviews stated that combined interventions appear to be more successful than single interventions [4,13].

Kobewka et al [4] reviewed 109 studies on interventions to reduce test utilization in both primary care facilities and hospital settings. In line with our findings, they found interventions from all categories to be successful. Further, they found that combined interventions were more effective than single interventions. To express median relative reduction, different outcome measures were combined. We found this approach questionable, even more so because the authors also found the effects of interventions to be different when these were expressed using a different outcome measure (e.g., Kumwilaisak et al [9] reported a 21% reduction in number of tests per patient per day, while the total number of tests decreased by 36% in the same study). Solomon et al [13] reviewed 49 studies on interventions aiming to improve physicians' testing practices and assessed methodological quality and efficacy of the interventions. Of 21 interventions using a single approach, 62% reported success, while 86% of 28 interventions using a combinatorial approach were successful.
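The dependence of the apparent effect on the chosen outcome measure can be illustrated with a small sketch. All numbers below are hypothetical and chosen only for illustration; they are not taken from Kumwilaisak et al [9] or any other included study. When the number of patient days changes between the pre- and post-intervention periods, the reduction in total test count and the reduction in tests per patient day diverge.

```python
from dataclasses import dataclass


@dataclass
class Period:
    """Aggregate counts for one observation period (hypothetical data)."""
    total_tests: int
    patient_days: int

    @property
    def tests_per_patient_day(self) -> float:
        return self.total_tests / self.patient_days


def relative_reduction(before: float, after: float) -> float:
    """Percentage reduction from `before` to `after`."""
    return (before - after) / before * 100.0


# Hypothetical periods: fewer patient days after the intervention.
pre = Period(total_tests=12_000, patient_days=1_500)
post = Period(total_tests=9_000, patient_days=1_250)

total_reduction = relative_reduction(pre.total_tests, post.total_tests)
per_day_reduction = relative_reduction(
    pre.tests_per_patient_day, post.tests_per_patient_day
)

print(f"Reduction in total number of tests: {total_reduction:.1f}%")
print(f"Reduction in tests per patient day: {per_day_reduction:.1f}%")
```

In this constructed example, the same intervention appears as a 25% reduction when expressed as total tests but only about a 10% reduction when expressed per patient day, which is why pooling reductions across different outcome measures can be misleading.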

5. Strengths and limitations

This review and the studies included have a number of strengths and limitations. A strength of this review is that it considered a variety of interventions and approaches to reduce unnecessary laboratory testing. In addition to assessing the reduction in test order volume, we were also interested in the effects of these interventions on patient-related clinical outcomes.

A limitation is our exclusive focus on studies on reducing unnecessary testing in hospital settings, although we found that interventions carried out in primary care facilities were broadly similar to those we described [4,26]. Further, we only included studies that reported a reduction in test order volume for all, not just a subset, of the studied tests. In addition, we did not perform an exhaustive literature search; we concluded our search when we had, in our opinion, reached theoretical saturation and no new domains of interventions were found. Thus, we might have missed relevant articles. Finally, we did not assess the costs of developing and implementing the interventions or the cost-benefit balance of reducing laboratory testing.

6. Conclusions and implications for future research

In conclusion, there are various interventions to reduce unnecessary laboratory testing in the hospital setting. While the majority seems to be effective, the generalizability of the data is questionable and the data are not comparable. An important step in changing test-ordering behavior is changing the mindset of providers and for this purpose, even a few test items can be used to introduce the concepts related to unnecessary diagnostics. We do, however, believe that not all interventions are equally suitable in every setting and for every test targeted, e.g., instating time limits might be more suitable for tests that are (unnecessarily) ordered in high frequency, while education might be more suitable when aiming to reduce unnecessary arterial blood gas requests. Thus, investigators should consider the clinical setting, the providers, and the tests targeted when developing or implementing strategies for reduction. Reporting on interventions can be improved if articles share more details about the study design and intervention to allow replication. In addition, we recommend performing studies with relevant patient-related outcomes and the investigation of sustainability of the effect of interventions.

Acknowledgment

The authors would like to acknowledge René Spijker from the Medical Library of the Academic Medical Center in Amsterdam and Cochrane Netherlands at the Julius Center for Health Sciences and Primary Care of the University Medical Center Utrecht for providing help with the literature search.

Footnotes

Authors' Disclosures of Potential Conflicts of Interest: No potential conflicts of interest relevant to this article were reported.

SUPPLEMENTARY MATERIALS

Search terms

Overview of individual studies

References

1. van Rooijen M, Goedvolk R, Houwert T. A vision for the Dutch health care system in 2040-Towards a sustainable, high-quality health care system; World Economic Forum; 2013.

2. Hauser RG, Shirts BH. Do we now know what inappropriate laboratory utilization is? Am J Clin Pathol. 2014;141:774–783. doi: 10.1309/AJCPX1HIEM4KLGNU.

3. Minerowicz C, Abel N, Hunter K, Behling KC, Cerceo E, Bierl C. Impact of weekly feedback on test ordering patterns. Am J Manag Care. 2015;21:763–768.

4. Kobewka DM, Ronksley PE, McKay JA, Forster AJ, Van Walraven C. Influence of educational, audit and feedback, system based, and incentive and penalty interventions to reduce laboratory test utilization: A systematic review. Clin Chem Lab Med. 2015;53:157–183. doi: 10.1515/cclm-2014-0778.

5. Zhi M, Ding EL, Theisen-Toupal J, Whelan J, Arnaout R. The landscape of inappropriate laboratory testing: a 15-year meta-analysis. PLoS One. 2013;8:e78962. doi: 10.1371/journal.pone.0078962.

6. NVKC. Zinnige Diagnostiek-Overwegingen bij het aanvragen en interpreteren van laboratoriumdiagnostiek (Useful Diagnostics-Considerations upon requesting and interpreting laboratory diagnostics). Nederlandse Vereniging voor Klinische Chemie (Netherlands Society for Clinical Chemistry and Laboratory Medicine).

7. Pageler NM, Franzon D, Longhurst CA, Wood M, Shin AY, Adams ES, et al. Embedding time-limited laboratory orders within computerized provider order entry reduces laboratory utilization. Pediatr Crit Care Med. 2013;14:413–419. doi: 10.1097/PCC.0b013e318272010c.

8. Thakkar RN, Kim D, Knight AM, Riedel S, Vaidya D, Wright SM. Impact of an educational intervention on the frequency of daily blood test orders for hospitalized patients. Am J Clin Pathol. 2015;143:393–397. doi: 10.1309/AJCPJS4EEM7UAUBV.

9. Kumwilaisak K, Noto A, Schmidt UH, Beck CI, Crimi C, Lewandrowski K, et al. Effect of laboratory testing guidelines on the utilization of tests and order entries in a surgical intensive care unit. Crit Care Med. 2008;36:2993–2999. doi: 10.1097/CCM.0b013e31818b3a9d.

10. Erlingsdóttir H, Jóhannesson A, Ásgeirsdóttir TL. Can physician laboratory-test requests be influenced by interventions? Scand J Clin Lab Invest. 2015;75:18–26. doi: 10.3109/00365513.2014.965734.

11. Miyakis S, Karamanof G, Liontos M, Mountokalakis TD. Factors contributing to inappropriate ordering of tests in an academic medical department and the effect of an educational feedback strategy. Postgrad Med J. 2006;82:823–829. doi: 10.1136/pgmj.2006.049551.

12. Vegting IL, Van Beneden M, Kramer MHH, Thijs A, Kostense PJ, Nanayakkara PWB. How to save costs by reducing unnecessary testing: Lean thinking in clinical practice. Eur J Intern Med. 2012;23:70–75. doi: 10.1016/j.ejim.2011.07.003.

13. Solomon DH, Hashimoto H, Daltroy L, Liang MH. Techniques to improve physicians' use of diagnostic tests: a new conceptual framework. JAMA. 1998;280:2020–2027. doi: 10.1001/jama.280.23.2020.

14. Yarbrough PM, Kukhareva PV, Horton D, Edholm K, Kawamoto K. Multifaceted intervention including education, rounding checklist implementation, cost feedback, and financial incentives reduces inpatient laboratory costs. J Hosp Med. 2016;11:348–354. doi: 10.1002/jhm.2552.

15. Finegan BA, Rashiq S, McAlister FA, O'Connor P. Selective ordering of preoperative investigations by anesthesiologists reduces the number and cost of tests. Can J Anaesth. 2005;52:575–580. doi: 10.1007/BF03015765.

16. Smit I, Zemlin AE, Erasmus RT. Demand management: an audit of chemical pathology test rejections by an electronic gate-keeping system at an academic hospital in Cape Town. Ann Clin Biochem. 2015;52:481–487. doi: 10.1177/0004563214567688.

17. Cabana MD, Rand CS, Powe NR, Wu AW, Wilson MH, Abboud PAC, et al. Why don't physicians follow clinical practice guidelines?: a framework for improvement. JAMA. 1999;282:1458–1465. doi: 10.1001/jama.282.15.1458.

18. Meng QH, Zhu S, Booth C, Stevens L, Bertsch B, Qureshi M, et al. Impact of the cardiac troponin testing algorithm on excessive and inappropriate troponin test requests. Am J Clin Pathol. 2006;126:195–199. doi: 10.1309/GK9B-FAB1-Y5LN-BWU1.

19. Konger RL, Ndekwe P, Jones G, Schmidt RP, Trey M, Baty EJ, et al. Reduction in unnecessary clinical laboratory testing through utilization management at a US government veterans affairs hospital. Am J Clin Pathol. 2016;145:355–364. doi: 10.1093/ajcp/aqv092.

20. Powles LAR, Rolls AE, Lamb BW, Taylor E, Green JSA. Can redesigning a laboratory request form reduce the number of inappropriate PSA requests without compromising clinical outcome. Br J Med Surg Urol. 2012:567–573.

21. Larochelle MR, Knight AM, Pantle H, Riedel S, Trost JC. Reducing excess cardiac biomarker testing at an academic medical center. J Gen Intern Med. 2014;29:1468–1474. doi: 10.1007/s11606-014-2919-5.

22. Attali M, Barel Y, Somin M, Beilinson N, Shankman M, Ackerman A, et al. A cost-effective method for reducing the volume of laboratory tests in a university-associated teaching hospital. Mt Sinai J Med. 2006;73:787–794.

23. Delvaux N, Van Thienen K, Heselmans A, Van de, Ramaekers D, Aertgeerts B. The effects of computerized clinical decision support systems on laboratory test ordering-a systematic review. Arch Pathol Lab Med. 2017;141:585–595. doi: 10.5858/arpa.2016-0115-RA.

24. Iams W, Heck J, Kapp M, Leverenz D, Vella M, Szentirmai E, et al. A multidisciplinary housestaff-led initiative to safely reduce daily laboratory testing. Acad Med. 2016;91:813–820. doi: 10.1097/ACM.0000000000001149.

25. Tawfik B, Collins JB, Fino NF, Miller DP. House officer-driven reduction in laboratory utilization. South Med J. 2016;109:5–10. doi: 10.14423/SMJ.0000000000000390.

26. Cadogan SL, Browne JP, Bradley CP, Cahill MR. The effectiveness of interventions to improve laboratory requesting patterns among primary care physicians: a systematic review. Implement Sci. 2015;10:167. doi: 10.1186/s13012-015-0356-4.

27. Aesif SW, Parenti DM, Lesky L, Keiser JF. A cost-effective interdisciplinary approach to microbiologic send-out test use. Arch Pathol Lab Med. 2015;139:194–198. doi: 10.5858/arpa.2013-0693-OA.

28. Blum FE, Lund ET, Hall HA, Tachauer AD, Chedrawy EG, Zilberstein J. Reevaluation of the utilization of arterial blood gas analysis in the intensive care unit: effects on patient safety and patient outcome. J Crit Care. 2015;30:438. doi: 10.1016/j.jcrc.2014.10.025.

29. Greenblatt MB, Nowak JA, Quade CC, Tanasijevic M, Lindeman N, Jarolim P. Impact of a prospective review program for reference laboratory testing requests. Am J Clin Pathol. 2015;143:627–634. doi: 10.1309/AJCPN1VCZDVD9ZVX.

30. DellaVolpe JD, Chakraborti C, Cerreta K, Romero CJ, Firestein CE, Myers L, et al. Effects of implementing a protocol for arterial blood gas use on ordering practices and diagnostic yield. Healthc (Amst) 2014;2:130–135. doi: 10.1016/j.hjdsi.2013.09.006.

31. Dickerson JA, Cole B, Conta JH, Wellner M, Wallace SE, Jack RM, et al. Improving the value of costly genetic reference laboratory testing with active utilization management. Arch Pathol Lab Med. 2014;138:110–113. doi: 10.5858/arpa.2012-0726-OA.

32. Delgado-Corcoran C, Bodily S, Frank DU, Witte MK, Castillo R, Bratton SL. Reducing blood testing in pediatric patients after heart surgery: a quality improvement project. Pediatr Crit Care Med. 2014;15:756–761. doi: 10.1097/PCC.0000000000000194.

33. Seegmiller AC, Kim AS, Mosse CA, Levy MA, Thompson MA, Kressin MK, et al. Optimizing personalized bone marrow testing using an evidence-based, interdisciplinary team approach. Am J Clin Pathol. 2013;140:643–650. doi: 10.1309/AJCP8CKE9NEINQFL.

34. Gentile NT, Ufberg J, Barnum M, McHugh M, Karras D. Guidelines reduce X-ray and blood gas utilization in acute asthma. Am J Emerg Med. 2003;21:451–453. doi: 10.1016/s0735-6757(03)00165-7.

35. Davidoff F, Goodspeed R, Clive J. Changing test ordering behavior: a randomized controlled trial comparing probabilistic reasoning with cost-containment education. Med Care. 1989;27:45–58.

36. Fowkes FGR, Hall R, Jones JH, Scanlon MF, Elder GH, Hobbs DR, et al. Trial of strategy for reducing the use of laboratory tests. Br Med J (Clin Res Ed) 1986;292:883–885. doi: 10.1136/bmj.292.6524.883.

37. Giordano D, Zasa M, Iaccarino C, Vincenti V, Dascola I, Brevi BC, et al. Improving laboratory test ordering can reduce costs in surgical wards. Acta Biomed. 2015;86:32–37.

38. Corson AH, Fan VS, White T, Sullivan SD, Asakura K, Myint M, et al. A multifaceted hospitalist quality improvement intervention: decreased frequency of common labs. J Hosp Med. 2015;10:390–395. doi: 10.1002/jhm.2354.

39. Salman M, Pike DC, Wu R, Oncken C. Effectiveness and safety of a clinical decision rule to reduce repeat ionized calcium testing: a pre/post test intervention. Conn Med. 2016;80:5–10.

40. Svecova N, Sammut L. Update on inappropriate C-reactive protein testing in epistaxis patients. Clin Otolaryngol. 2013;38:192. doi: 10.1111/coa.12097.

41. Moyer AM, Saenger AK, Willrich M, Donato LJ, Baumann NA, Block DR, et al. Implementation of clinical decision support rules to reduce repeat measurement of serum ionized calcium, serum magnesium, and N-terminal Pro-B-type natriuretic peptide in intensive care unit inpatients. Clin Chem. 2016;62:824–830. doi: 10.1373/clinchem.2015.250514.

42. Santos IS, Bensenor IM, Machado JB, Fedeli LM, Lotufo PA. Intervention to reduce C-reactive protein determination requests for acute infections at an emergency department. Emerg Med J. 2012;29:965–968. doi: 10.1136/emermed-2011-200787.

43. Lippi G, Brambilla M, Bonelli P, Aloe R, Balestrino A, Nardelli A, et al. Effectiveness of a computerized alert system based on re-testing intervals for limiting the inappropriateness of laboratory test requests. Clin Biochem. 2015;48:1174–1176. doi: 10.1016/j.clinbiochem.2015.06.006.

44. Prat G, Lefèvre M, Nowak E, Tonnelier JM, Renault A, L'Her E, et al. Impact of clinical guidelines to improve appropriateness of laboratory tests and chest radiographs. Intensive Care Med. 2009;35:1047–1053. doi: 10.1007/s00134-009-1438-z.

45. Levick DL, Stern G, Meyerhoefer CD, Levick A, Pucklavage D. Reducing unnecessary testing in a CPOE system through implementation of a targeted CDS intervention. BMC Med Inform Decis Mak. 2013;13:43. doi: 10.1186/1472-6947-13-43.

46. Niès J, Colombet I, Zapletal E, Gillaizeau F, Durieux P. Effects of automated alerts on unnecessarily repeated serology tests in a cardiovascular surgery department: a time series analysis. BMC Health Serv Res. 2010;10:70. doi: 10.1186/1472-6963-10-70.

47. Chen P, Tanasijevic MJ, Schoenenberger RA, Fiskio J, Kuperman GJ, Bates DW. A computer-based intervention for improving the appropriateness of antiepileptic drug level monitoring. Am J Clin Pathol. 2003;119:432–438. doi: 10.1309/a96xu9yku298hb2r.

48. Wisser D, Van Ackern K, Knoll E, Wisser H, Bertsch T. Blood loss from laboratory tests. Clin Chem. 2003;49:1651–1655. doi: 10.1373/49.10.1651.

49. Bates DW, Kuperman GJ, Rittenberg E, Teich JM, Fiskio J, Ma'luf N, et al. A randomized trial of a computer-based intervention to reduce utilization of redundant laboratory tests. Am J Med. 1999;106:144–150. doi: 10.1016/s0002-9343(98)00410-0.

50. Merlani P, Garnerin P, Diby M, Ferring M, Ricou B. Linking guideline to regular feedback to increase appropriate requests for clinical tests: blood gas analysis in intensive care. Br Med J. 2001;323:620–624. doi: 10.1136/bmj.323.7313.620.

51. Barie PS, Hydo LJ. Learning to not know: results of a program for ancillary cost reduction in surgical care. J Trauma. 1996;41:714–720. doi: 10.1097/00005373-199610000-00020.

52. Gortmaker SL, Bickford AF, Mathewson HO, Dumbaugh K, Tirrell PC. A successful experiment to reduce unnecessary laboratory use in a community hospital. Med Care. 1988;26:631–642. doi: 10.1097/00005650-198806000-00011.

53. Procop GW, Yerian LM, Wyllie R, Harrison AM, Kottke-Marchant K. Duplicate laboratory test reduction using a clinical decision support tool. Am J Clin Pathol. 2014;141:718–723. doi: 10.1309/AJCPOWHOIZBZ3FRW.

54. De Bie P, Tepaske R, Hoek A, Sturk A, Van Dongen-Lases E. Reduction in the number of reported laboratory results for an adult intensive care unit by effective order management and parameter selection on the blood gas analyzers. Point Care. 2016;15:7–10.

55. Waldron JL, Ford C, Dobie D, Danks G, Humphrey R, Rolli A, et al. An automated minimum retest interval rejection rule reduces repeat CRP workload and expenditure, and influences clinician-requesting behaviour. J Clin Pathol. 2014;67:731–733. doi: 10.1136/jclinpath-2014-202256.

56. Krasowski MD, Savage J, Ehlers A, Maakestad J, Schmidt GA, La'ulu SL, et al. Ordering of the serum angiotensin-converting enzyme test in patients receiving angiotensin-converting enzyme inhibitor therapy an avoidable but common error. Chest. 2015;148:1447–1453. doi: 10.1378/chest.15-1061.

57. Janssens PMW, Wasser G. Managing laboratory test ordering through test frequency filtering. Clin Chem Lab Med. 2013;51:1207–1215. doi: 10.1515/cclm-2012-0841.

58. Wang TJ, Mort EA, Nordberg P, Chang Y, Cadigan ME, Mylott L, et al. A utilization management intervention to reduce unnecessary testing in the coronary care unit. Arch Intern Med. 2002;162:1885–1890. doi: 10.1001/archinte.162.16.1885.

59. Toubert ME, Chevret S, Cassinat B, Schlageter MH, Beressi JP, Rain JD. From guidelines to hospital practice: reducing inappropriate ordering of thyroid hormone and antibody tests. Eur J Endocrinol. 2000;142:605–610. doi: 10.1530/eje.0.1420605.

60. Procop GW, Keating C, Stagno P, Kottke-Marchant K, Partin M, Tuttle R, et al. Reducing duplicate testing: A comparison of two clinical decision support tools. Am J Clin Pathol. 2015;143:623–626. doi: 10.1309/AJCPJOJ3HKEBD3TU.

61. Ko A, Murry JS, Hoang DM, Harada MY, Aquino L, Coffey C, et al. High-value care in the surgical intensive care effect on ancillary resources. J Surg Res. 2016;202:455–460. doi: 10.1016/j.jss.2016.01.040.

62. Kobkitjaroen J, Pongprasobchai S, Tientadakul P. γ-Glutamyl transferase testing, change of its designation on the laboratory request form, and resulting ratio of inappropriate to appropriate use. Lab Med. 2015;46:265–270. doi: 10.1309/LM7E5LG6PWJYEFUJ.

63. Le Maguet P, Asehnoune K, Autet LM, Gaillard T, Lasocki S, Mimoz O, et al. Transitioning from routine to on-demand test ordering in intensive care units: a prospective, multicentre, interventional study. Br J Anaesth. 2015;115:941–942. doi: 10.1093/bja/aev390.

64. Janssens PMW, Staring W, Winkelman K, Krist G. Active intervention in hospital test request panels pays. Clin Chem Lab Med. 2015;53:731–742. doi: 10.1515/cclm-2014-0575.

65. Mallows JL. The effect of a gold coin fine on C-reactive protein test ordering in a tertiary referral emergency department. Med J Aust. 2013;199:813–814. doi: 10.5694/mja13.11298.

66. Fang DZ, Sran G, Gessner D, Loftus PD, Folkings A, Christopher JY, III, et al. Cost and turn-around time display decreases inpatient ordering of reference laboratory tests: a time series. BMJ Qual Saf. 2014;23:994–1000. doi: 10.1136/bmjqs-2014-003053.

67. Chu KH, Wagholikar AS, Greenslade JH, O'Dwyer JA, Brown AF. Sustained reductions in emergency department laboratory test orders: impact of a simple intervention. Postgrad Med J. 2013;89:566–571. doi: 10.1136/postgradmedj-2012-130833.

68. Nightingale P, Peters M, Mutimer D, Neuberger J. Effects of a computerised protocol management system on ordering of clinical tests. Qual Health Care. 1994;3:23–28. doi: 10.1136/qshc.3.1.23.

69. Feldman LS, Shihab HM, Thiemann D, Yeh HC, Ardolino M, Mandell S, et al. Impact of providing fee data on laboratory test ordering: a controlled clinical trial. JAMA Intern Med. 2013;173:903–908. doi: 10.1001/jamainternmed.2013.232.

70. Algaze C, Wood M, Pageler N, Sharek P, Longhurst C, Shin A. Use of a checklist and clinical decision support tool reduces laboratory use and improves cost. Pediatrics. 2016;137:1–8. doi: 10.1542/peds.2014-3019.

71. Durieux P, Ravaud P, Porcher R, Fulla Y, Manet CS, Chaussade S. Long-term impact of a restrictive laboratory test ordering form on tumor marker prescriptions. Int J Technol Assess Health Care. 2003;19:106–113. doi: 10.1017/s0266462303000102.

72. Petrou P. Failed attempts to reduce inappropriate laboratory utilization in an emergency department setting in Cyprus: lessons learned. J Emerg Med. 2016;50:510–517. doi: 10.1016/j.jemermed.2015.07.025.

73. Seguin P, Bleichner J, Grolier J, Guillou Y, Mallédant Y. Effects of price information on test ordering in an intensive care unit. Intensive Care Med. 2002;28:332–335. doi: 10.1007/s00134-002-1213-x.

74. Barth JH, Balen AH, Jennings A. Appropriate design of biochemistry request cards can promote the use of protocols and reduce unnecessary investigations. Ann Clin Biochem. 2001;38:714–716. doi: 10.1258/0004563011900957.

75. Merkeley HL, Hemmett J, Cessford TA, Amiri N, Geller GS, Baradaran N, et al. Multipronged strategy to reduce routine-priority blood testing in intensive care unit patients. J Crit Care. 2016;31:212–216. doi: 10.1016/j.jcrc.2015.09.013.

76. Emerson JF, Emerson SS. The impact of requisition design on laboratory utilization. Am J Clin Pathol. 2001;116:879–884. doi: 10.1309/WC83-ERLY-NEDF-471E.

77. Pysher TJ, Bach PR, Lowichik A, Petersen MD, Shields LH. Chemistry test ordering patterns after elimination of predefined multitest chemistry panels in a children's hospital. Pediatr Dev Pathol. 1999;2:446–453. doi: 10.1007/s100249900148.

78. Hutton HD, Drummond HS, Fryer AA. The rise and fall of C-reactive protein: managing demand within clinical biochemistry. Ann Clin Biochem. 2009;46:155–158. doi: 10.1258/acb.2008.008126.

79. Bates DW, Kuperman GJ, Jha A, Teich JM, John Orav E, Ma'luf N, et al. Does the computerized display of charges affect inpatient ancillary test utilization? Arch Intern Med. 1997;157:2501–2508.

80. Durand-Zaleski I, Roudot-Thoraval F, Rymer JC, Rosa J, Revuz J. Reducing unnecessary laboratory use with new test request form: example of tumour markers. Lancet. 1993;342:150–153. doi: 10.1016/0140-6736(93)91349-q.

81. Iturrate E, Jubelt L, Volpicelli F, Hochman K. Optimize your electronic medical record to increase value: reducing laboratory overutilization. Am J Med. 2016;129:215–220. doi: 10.1016/j.amjmed.2015.09.009.

82. Han SJ, Saigal R, Rolston JD, Cheng JS, Lau CY, Mistry RI, et al. Targeted reduction in neurosurgical laboratory utilization: resident-led effort at a single academic institution. J Neurosurg. 2014;120:173–177. doi: 10.3171/2013.8.JNS13512.

83. May TA, Clancy M, Critchfield J, Ebeling F, Enriquez A, Gallagher C, et al. Reducing unnecessary inpatient laboratory testing in a teaching hospital. Am J Clin Pathol. 2006;126:200–206. doi: 10.1309/WP59-YM73-L6CE-GX2F.

84. Niemeijer GC, Trip A, Ahaus KCTB, Wendt KW, Does RJMM. Quality quandaries: reducing overuse of diagnostic tests for trauma patients. Qual Eng. 2012;24:558–563.

85. Marx WH, DeMaintenon NL, Mooney KF, Mascia ML, Medicis J, Franklin PD, et al. Cost reduction and outcome improvement in the intensive care unit. J Trauma. 1999;46:625–630. doi: 10.1097/00005373-199904000-00011.

86. Martin AR, Wolf MA, Thibodeau LA, Dzau V, Braunwald E. A trial of two strategies to modify the test-ordering behavior of medical residents. N Engl J Med. 1980;303:1330–1336. doi: 10.1056/NEJM198012043032304.

87. Zalts R, Ben-Hur D, Yahia A, Khateeb J, Belsky V, Grushko N, et al. Hospital care efficiency and the SMART (specific, measurable, agreed, required, and timely) medicine initiative. JAMA Intern Med. 2016;176:398–399. doi: 10.1001/jamainternmed.2015.7705.

88. Zlabek JA, Wickus JW, Mathiason MA. Early cost and safety benefits of an inpatient electronic health record. J Am Med Inform Assoc. 2011;18:169–172. doi: 10.1136/jamia.2010.007229.

89. Amukele TK, Baird GS, Chandler WL. Reducing the use of coagulation test panels. Blood Coagul Fibrinolysis. 2011;22:688–695. doi: 10.1097/MBC.0b013e32834b8246.

90. Dorizzi RM, Ferrari A, Rossini A, Mozzo U, Meneghelli S, Melloni N, et al. Tumour markers workload of an university hospital laboratory two years after the redesign of the optical reading request forms. Accred Qual Assur. 2008;13:133–137.

91. Calderon-Margalit R, Mor-Yosef S, Mayer M, Adler B, Shapira SC. An administrative intervention to improve the utilization of laboratory tests within a university hospital. Int J Qual Health Care. 2005;17:243–248. doi: 10.1093/intqhc/mzi025.

92. Rosenbloom S, Chiu K, Byrne D, Talbert D, Neilson E, Miller R. Interventions to regulate ordering of serum magnesium levels: report of an unintended consequence of decision support. J Am Med Inform Assoc. 2005;12:546–553. doi: 10.1197/jamia.M1811.

93. Vidyarthi AR, Hamill T, Green AL, Rosenbluth G, Baron RB. Changing resident test ordering behavior: a multilevel intervention to decrease laboratory utilization at an academic medical center. Am J Med Qual. 2015;30:81–87. doi: 10.1177/1062860613517502.

94. Kim JY, Dzik WH, Dighe AS, Lewandrowski KB. Utilization management in a large urban academic medical center: a 10-year experience. Am J Clin Pathol. 2011;135:108–118. doi: 10.1309/AJCP4GS7KSBDBACF.
