Abstract

As part of an enterprise-wide rollout of a new EHR, Intermountain Healthcare is investing significant effort in building a central library of best-practice order sets. As part of this effort, we have built analytics tools that can capture and determine actionable opportunities for change to order set templates, as reflected by aggregate user data. In order to determine the acceptability of this system and set meaningful thresholds for actual use, we extracted recommendations for additions, removals, and change in initial order selection status for a series of thirteen order sets. We asked local clinical experts to review the changes and classify them as acceptable or not. In total, the system identified 362 potential changes in the order set templates, of which 186 were deemed acceptable. While further enhancement will sharpen the efficacy of the intervention, we expect that this type of utility will provide useful insight for content owners.

Introduction

Multiple studies in the medical informatics literature that have characterized CPOE system implementations have identified order sets, or standardized groupings of orders targeted to specific clinical conditions or scenarios, as a determining factor in driving user acceptance of CPOE1,2. Physicians often find benefit from using them in that the system can provide logical, pre-populated views of commonly grouped orders for routine procedures and scenarios that they face in the course of their work3. Users often find them quick, convenient and thoughtful in helping them remember all of the nuanced details that can accompany the ordering process for a clinical situation. In similar fashion, organizations have a unique opportunity through order set creation/customization to create value and standardize care by ‘making it easy to do the right thing’ in treating patients. They can build into these templates the kinds of practice elements that represent current best practice4-6. Order set templates often drive usage and adherence, potentially leading to lower overall variation in clinical care processes7.

These benefits, however, come with tradeoffs. Researchers have cited the difficulties of building up a comprehensive library of order sets1, keeping it current as medical knowledge evolves, and managing highly personalized derivatives of order set templates (sometimes known as ‘favorites’ or ‘personal order sets’)8. Clinical knowledge management systems are intended to help organizations identify, centralize, evaluate, refine, and share knowledge resources throughout the organization. Knowledge management strategy as it relates to Computerized Physician Order Entry (CPOE) systems poses a particularly complex challenge. A wide variety of knowledge components must be accounted for, ranging from orderable item libraries, drug databases, and order set definitions to decision support rule bases and content dependency trees.

Compiling a centralized knowledge base of order sets has been described as a labor-intensive process. The amount of effort associated with building and maintaining this type of content has led previous research groups to explore methods of automating the creation and refinement of this content9-11. Whether an organization opts to purchase and customize third-party content or develop the order set content in-house, significant investments of time and expertise are necessary to make it work. Not only are there up-front tasks to populate the catalog and customize it to local practice and availability, but there are also ongoing costs such as a) regular review and update of existing content to bring it into alignment with current knowledge, b) additions to the catalog to address gaps in coverage and c) removal of obsolete or low-utility content. Researchers have noted that, with the exponential growth in medical knowledge, there is a real risk that order sets, once implemented, may be inadequately maintained; in essence, driving caregivers to practice outdated medicine on a widespread basis12. Experts have recommended not only standard review processes before initial implementation of order set content, but also consistent, periodic review that involves the clinical sponsors as well as allied clinical services (nursing, laboratory, radiology, etc.)13. In these reviews, committees should analyze variance from established clinical standards, consistency of care recommendations across order sets, and potential for adverse events, and should establish priorities for associated clinical decision support rules.

Background

Intermountain Healthcare is a not-for-profit integrated delivery network that serves the populations of the Intermountain West (Utah and southern Idaho)14. It has 22 hospitals, over 150 clinics, a medical group of over 700 employed physicians and an insurance plan that serves the needs of the people in the region. Collectively, it accounts for roughly half of the healthcare given in the region and insures a little more than a quarter of the population.

Intermountain Healthcare has an established legacy of informatics excellence, dating back to some of the earliest efforts at building electronic medical records in the United States. Recently, Intermountain has opted to transition over to a new EHR offering, in partnership with the Cerner Corporation. Our enterprise rollout of the Cerner EHR product is approximately two-thirds complete, scheduled for completion by the end of the year. The change in strategy from building our own EHR to using one provided by a vendor has necessitated shifts in our clinical knowledge management strategy. In particular, many of the content authoring tools have shifted from homegrown solutions to content builders and wizards afforded by the vendor. The two most immediate knowledge management priorities that have come from the decision are building up an enterprise library of order sets and decision support rules, and providing visibility into the usage patterns surrounding it. In this manuscript, we will focus on the efforts of the former, specifically tooling and processes surrounding order set management.

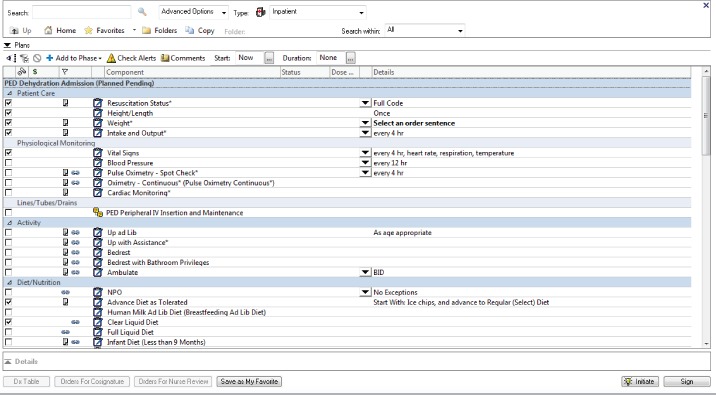

The effort to create an enterprise repository of order sets has been conducted under the organizational structure of Intermountain’s Clinical Programs. These groups cover ten specific domains of medical care at Intermountain including cardiovascular, intensive medicine, pediatric, and oncology. Clinical Programs have been in place at Intermountain since 1997 and have a primary charge to identify, develop, and deploy best practice protocols in the enterprise. Among their chief roles are setting and driving towards specific clinical performance targets and creating enterprise content that supports these initiatives. As Intermountain has worked to prepare for and roll out a new EHR platform built on a vendor-supplied infrastructure, care teams of clinical champions, data managers and quality experts have collaborated in building out a library of over 2,000 order sets (an example is given in Figure 1). Most of these order sets have been iterated upon and refined multiple times in the course of preparation and actual usage. This library continues to grow, and iterative enhancements are regularly incorporated into the content as stakeholders give feedback and as the content adapts to changes in understanding of current best practice. The maintenance burdens that come with building and maintaining a library of this size are significant. At Intermountain, well over 100 authors have been personally involved in the creation and maintenance of this content, with hundreds of others giving feedback directly or in committee as to enhancement and refinement.

Figure 1.

Order set example. This particular order set focuses on the treatment of dehydration in infants.

Rationale

As part of this effort, business and Clinical Programs leadership have prioritized the development of tools that give visibility into the order set catalog. Specific questions around the catalog, associated metadata (authors, review teams, publication dates, sponsoring departments) and usage patterns have been the focus of a tool known as DOT (Dashboard for Order set Transactions). The development and usage of this product has been previously described15. As authors grew accustomed to this very granular level of visibility into the content usage and variation related to their order sets, they became increasingly interested in specific scenarios in which the prescribed order set content and user behavior did not align well. In particular, four scenarios were identified in which they wanted the system to assist them by identifying particular opportunities for either change to the order set template or active education and change management processes to redirect user behavior. These included:

Change to Unselected: An order is pre-selected in the order set template (it shows up automatically selected upon loading) but users are actually ordering it infrequently. This would identify scenarios in which users are actively going out of their way to not order preselected content.

Change to Preselected: An order is initially unselected in the order set template, but a majority of users end up selecting and ordering it anyway.

Additions: An order that is not in the order set template at all is frequently added to the order list as part of the ordering encounter. This may identify scenarios in which thoughtful and clinically useful additions might be made to the order set template.

Removals: Orders that are in the order set template are never or very seldom used. Given that users can always revert back to looking up any individual order from the orderable item catalog, there is some benefit in considering removals from the order set template if they are seldom used. This can optimize users’ experience with the order set by reducing the number of items that they need to scan and process during the ordering episode.

While there are clearly other elements to utilization of order sets that could be approached in this type of optimization effort (order sentence detail, organization and co-location of order elements, etc.), these particular emphases were agreed upon as initial priorities. The overall aim of this type of utility is to reinforce the clinical review processes intended for systematic review of content with detailed, actionable feedback from the system that reflects usage patterns. Additionally, clinical sponsors recognized that in building this type of tool, not all feedback would result in template change. Ordering patterns that deviated from expected care might be just as useful for identifying specific areas where clinical leadership may need to build specific goals and education plans to bring ordering patterns closer to expected levels.

The process of navigating and using an order set has been previously described as a collective mathematical sum of both cognitive and physical costs16. Cognitive costs involve the reading, scanning, and logical processing of the recommendations given by an order set. Physical costs involve the manual efforts of clicking checkboxes, dropdown menus, selection, and scrolling through the content itself to fully review and utilize it. In essence, the process of order set optimization is intended to reduce both kinds of cost while maintaining the clinical and operational effectiveness and utility that come from order set usage.

Methods

Report Generation – In order to balance these needs, we decided to build a content advisor utility that analyzes order set templates from the knowledge repository together with associated usage data from the enterprise data warehouse, and makes active recommendations for change along the four change ‘axes’ described above. Order set template data was extracted from Cerner’s database tables to Intermountain’s knowledge repository and organized into logical groupings by version. In similar fashion, encounters for which these order sets were used in clinical care were identified, and the orders derived from these sessions were grouped and aligned against the order set template. Under advisement from clinical partners, we set four initial thresholds for identifying actionable change items in the content.

Orders would be flagged as ‘candidates for unselected status’ if they were initially selected in the order set template, yet ordered in less than 50% of the encounters that used that order set template.

Orders would be identified as ‘candidates for preselected status’ if they were initially unselected in the order set template, yet ordered in more than 50% of the encounters that used that order set template.

Orders would be identified as ‘candidates for addition’ if they were not in the order set template at all, yet were added ‘ad hoc’ by ordering providers more than 5% of the time.

Orders would be identified as ‘candidates for removal’ if they were in the order set template, yet ordered 1% or less of the time.
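The four thresholds above can be sketched in a few lines of Python. This is a minimal illustration only, not the database scripts used in the study: the `TemplateOrder` class, the `recommend_changes` function, and the representation of each ordering instance as a set of order names are all assumptions made for the example. The source also does not state how an order that satisfies two rules at once is handled (for instance, a preselected order used 1% or less of the time), so the sketch assumes that removal takes precedence.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class TemplateOrder:
    """One orderable item in an order set template (hypothetical structure)."""
    name: str
    preselected: bool


def recommend_changes(template, encounters):
    """Apply the four initial thresholds to one order set template version.

    template   -- list of TemplateOrder
    encounters -- list of collections of order names, one per ordering instance
    Returns a dict mapping order name -> recommendation label.
    """
    total = len(encounters)
    if total == 0:
        return {}

    # Count each order at most once per ordering instance.
    counts = Counter(name for placed in encounters for name in set(placed))
    template_names = {order.name for order in template}
    recommendations = {}

    for order in template:
        rate = counts[order.name] / total
        if rate <= 0.01:
            # Assumption: removal takes precedence over a selection change.
            recommendations[order.name] = "removal"
        elif order.preselected and rate < 0.50:
            recommendations[order.name] = "change to unselected"
        elif not order.preselected and rate > 0.50:
            recommendations[order.name] = "change to preselected"

    # Orders placed ad hoc that are not in the template at all.
    for name, n in counts.items():
        if name not in template_names and n / total > 0.05:
            recommendations[name] = "addition"

    return recommendations
```

Note that an unselected order used in exactly 50% of ordering instances receives no recommendation under these rules, since the preselection threshold is strictly greater than 50%.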

We built database scripts that align the data from the sources described above, identify order elements that match these thresholds, and organize four lists in a document that details all recommended content changes per order set. These documents contain four sections, one each for candidate orders for preselection, deselection, addition, and removal, along with the corresponding data intended to direct the user’s attention to the specific details of how the orders were used. In particular, the reports showed:

Order set title and version

Number of overall ordering instances for which the specific version of the order set template was used

Orderable title

Percentage of overall ordering instances for which the particular orderable item was used

Actionable recommendation (change selection status to preselected/deselected, addition, removal)

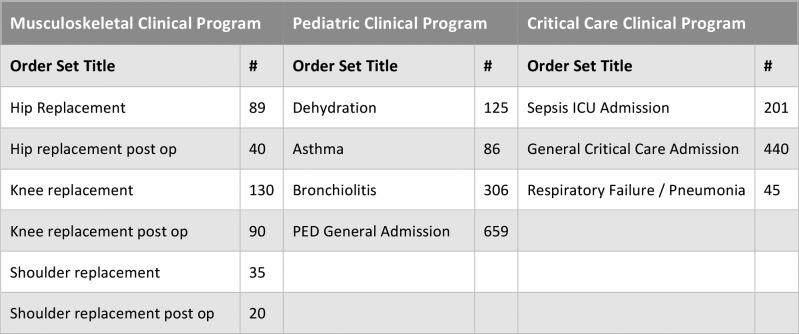

Report Validation – In order to test the utility of these reports and understand whether these initial thresholds were optimal for this purpose, we sampled a series of thirteen order sets relevant to three separate clinical programs, and asked content and data managers to review the recommendations. These order sets were chosen as order sets of interest by three Intermountain Clinical Programs and are listed in Table 1 below.

Table 1:

Clinical Programs, Selected Order Sets and Corresponding Numbers of Ordering Instances for the Order Sets Analyzed in this Study

Report Selection & Creation Criteria – This type of feedback mechanism is dependent upon a minimal threshold of content being in place. As the overall number of encounters in which a specific version of an order set is used increases, so does the degree to which the recommendations are representative of general use. As a rule, we opted to analyze the most current version of the content in the system, unless there were fewer than 20 instances of use of the order set template. In this circumstance, we reverted to the version immediately prior to the current version for analysis. Table 1 contains the number of ordering instances that correspond with each order set included in this analysis. It is worth noting that, while the number of ordering instances of a hip replacement and a hip replacement post-operative order set might be expected to be the same, the data do not bear this premise out. This is primarily due to the fact that these order sets are independently maintained and updated in the database. As such, some of the content was updated during this timeframe, affecting the overall number of ordering instances per version. The numbers reflected below are specific to one single version of the content in the database.
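The version selection rule described above can be illustrated with a short sketch. The function name and the `(version_number, ordering_instances)` tuple layout are hypothetical. The source describes falling back only to the immediately prior version, so the sketch does the same, and it assumes that the current version is kept when no prior version exists.

```python
MIN_INSTANCES = 20  # minimum ordering instances stated in the text


def select_version_for_analysis(versions):
    """Pick the template version to analyze.

    versions -- list of (version_number, ordering_instances) tuples, any order
    Returns the chosen version number.
    """
    if not versions:
        raise ValueError("order set has no versions")

    # Newest version first.
    ordered = sorted(versions, key=lambda v: v[0], reverse=True)
    current_version, current_count = ordered[0]

    if current_count >= MIN_INSTANCES or len(ordered) == 1:
        # Assumption: with no prior version available, keep the current one.
        return current_version

    # Fall back exactly one version, as described in the text.
    return ordered[1][0]
```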

Table 2:

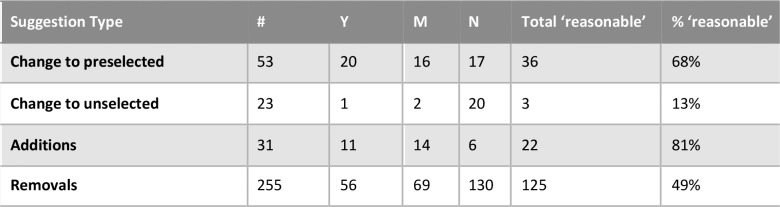

Suggestion Type Analysis - Total Counts, Ratings and Percentage of 'Reasonable' Suggestion Numbers are shown


From the metadata associated with each order set in the database, we identified and contacted the main author and content stewards to ask whether they would be willing to review the system-generated recommendations and provide us with feedback. They were given a spreadsheet in which they were asked to indicate whether each suggestion was acceptable as is, worthy of discussing in committee (but not yet implementing), or not acceptable. For those deemed not acceptable, we asked them to provide specific feedback as to why they felt that the suggestion was not acceptable or useful. We then aggregated the results, tallied the responses, and stratified the results by suggestion type.

Results

Overall, the system made 53 recommendations for preselection, 23 recommendations for deselection, 32 candidate orders as ‘additions’, and 255 candidates for removal. Given that both a response of ‘accept’ and ‘would consider in committee’ are positive indicators of how the suggestion was received, we aggregated those groups into a common category indicating that the respondents felt that the suggestion was reasonable. Table 2 contains the results of each of the suggestion categories made by the system, as well as the corresponding ratings from the owners and content managers.
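The aggregation step can be sketched as follows. The rating labels (`"accept"`, `"committee"`, `"reject"`), the function name, and the `(suggestion_type, rating)` input layout are assumptions for illustration; the study collected these responses in a spreadsheet whose exact format is not described.

```python
from collections import defaultdict

# Both positive responses are merged into a single 'reasonable' category.
REASONABLE_RATINGS = {"accept", "committee"}


def summarize_reviews(reviews):
    """Tally reviewer ratings, stratified by suggestion type.

    reviews -- iterable of (suggestion_type, rating) pairs
    Returns {suggestion_type: {"total", "reasonable", "pct_reasonable"}}.
    """
    totals = defaultdict(int)
    reasonable = defaultdict(int)

    for suggestion_type, rating in reviews:
        totals[suggestion_type] += 1
        if rating in REASONABLE_RATINGS:
            reasonable[suggestion_type] += 1

    return {
        stype: {
            "total": totals[stype],
            "reasonable": reasonable[stype],
            "pct_reasonable": round(100 * reasonable[stype] / totals[stype], 1),
        }
        for stype in totals
    }
```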

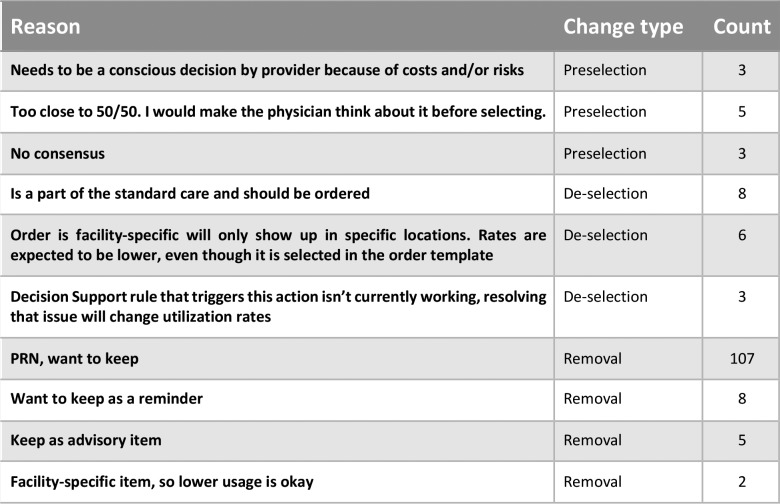

For the respondents that indicated ‘no’ as to whether or not the recommendation was acceptable, we gathered additional narrative input about their reasons for not wanting to implement the feedback. For purposes of presentation, we grouped the comments according to common themes and aggregated them into counts that reflect those reasons. The reasons that were more commonly given for the various suggested edits are summarized in Table 3.

Table 3:

Common reasons for deeming feedback to change order set elements as 'not acceptable', along with change suggestion type and corresponding counts


Discussion

The content recommendation system produced a significant number of recommended changes across the order sets, with an average of just under 28 recommended changes per order set in the study. In total, just over 51% of the recommended 362 changes were deemed as ‘accepted’ or ‘pending committee review’. Overall, the system exhibited higher rates of acceptable suggestions for preselecting orders and for additions to the order sets. Content owners were less likely to consider removals from the system (closer to 50%) and far less likely to consider unselecting currently-selected orders (only 13% of the time).

Opportunities for goal setting - One of the key findings of this effort is that the orders identified by the system may either be opportunities for change to the order set template itself or outliers from expected behaviors. Several of the orders flagged for ‘deselection’ came as surprises to the content owners and stewards. They were under the impression that users were ordering pre-selected orders close to 100% of the time, only to find out that they were in fact ordering them less than half the time. This serves as an important notice to the content author, even if it does not result in an immediate change to the order template. It represents a disconnect between the authors’ expectations around user behavior and the actual behaviors themselves. While individual reasons are different for each scenario, some possible reasons for these disconnects include a) different priorities between caregivers and content authors, b) presumed workflows that bear out differently in actual practice, and c) local workarounds and user treatment behaviors that may deviate from centrally-designated best practice.

Identification of broken components - One of the suggestions for order set template change came from unexpectedly low rates for ordering head circumference measurements for pediatric patients. This suggestion showed up in three of the four pediatric order sets reviewed. Upon further inspection of the reasoning behind these low utilization rates, a knowledge engineer identified that an associated decision support rule intended to trigger and drive higher usage of these orders was currently not working. Although not a direct intent of the tool itself, the resultant suggestions from the tool helped to identify a different knowledge artifact in the system that was malfunctioning. Separately, in extracting data for the analysis process, it became clear that some of the order set definitions themselves pointed to orderables that were no longer active in the orderables library. While not considered a specific objective in this study, those particular points could also be brought forward to content authors in order to facilitate cleanup and maintenance of order set content.

Facility-specific items – Our recommendation system did not account for the fact that some orderable items are configured to be available only in specific locations. These types of orderables often correspond with a given location’s ability to perform orders based on availability of equipment, specialized personnel, formulary, or other constraints. As a result, the system sometimes recommended modifications that would not be universally useful in scope.

Strength of suggestion – There is some evidence in the data we gathered to indicate that a content recommendation is stronger when it rests on a greater overall ‘denominator’ of order set content usage. Content reviewers felt that a recommendation to remove an order that had not been ordered in a set of a few dozen episodes of care was far less impactful than a recommendation to remove an orderable that had not been ordered from the template in hundreds of order episodes. We may use this feedback to present ‘candidates for removal’ only with a lower threshold of usage (absolutely no usage) and with a required denominator of at least several hundred ordering instances before the recommendation is made. In similar fashion, recommendations to either preselect or deselect orders near the 50% threshold were less acceptable to users. Going forward, we may require 65-70% usage of a currently unselected order before recommending it for preselection, as users’ responses to those recommendations were more absolute. Several of the responses near the 50% threshold were deemed too inconclusive to justify a change either way.
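One way to picture the stricter rules contemplated above is a function that takes the two revised cut-offs as required parameters. This is a hedged sketch, not a planned implementation: the function and parameter names are hypothetical, the text gives only ranges (‘several hundred’ instances, 65-70% usage) rather than fixed values, and no revised threshold for deselection or addition is stated, so those cases are left out.

```python
def revised_candidate(preselected, times_ordered, instances, *,
                      min_instances_for_removal, min_preselect_rate):
    """Return a recommendation label under the stricter rules, or None.

    preselected               -- whether the template order is preselected
    times_ordered             -- ordering instances that included the order
    instances                 -- total ordering instances for the version
    min_instances_for_removal -- required denominator (caller-supplied)
    min_preselect_rate        -- required usage fraction (caller-supplied)
    """
    if instances <= 0:
        return None

    if times_ordered == 0:
        # Removal is suggested only for zero usage over a large denominator.
        if instances >= min_instances_for_removal:
            return "removal"
        return None

    rate = times_ordered / instances
    if not preselected and rate >= min_preselect_rate:
        return "change to preselected"
    return None
```

For example, calling `revised_candidate(False, 0, 40, min_instances_for_removal=300, min_preselect_rate=0.65)` returns `None`, because 40 ordering instances fall short of the illustrative denominator of 300, whereas the original 1% rule would have flagged the order for removal.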

Overall, there appears to be more willingness on the part of content authors to preselect and add than there is to remove and unselect. This may be problematic if not tempered, in that overselecting and overloading any particular order set can make the order set more cumbersome to use. Overuse of preselection status within an order set may lead to overutilization of labs, imaging, and even medications. Our hope is that the approach taken by content authors to feed these recommendations back to a larger committee for review would temper and offset some of these concerns.

Limitations

Size and scale – In this study, we have only sampled thirteen order sets from our order set library of over 2,000 separate documents. We realize that this is a small sample and that it involves only a small number of reviewers. We hope to incorporate the lessons learned from this early sample of content into the behavior of the system before rolling it out for broader use among the other Clinical Programs and content owners. Furthermore, our study is limited in that we are bringing in data only from the sites that are currently live on our new EHR platform. Nearly half of the care delivery volumes at Intermountain Healthcare are not yet live on this platform, and bringing them on will undoubtedly affect both the usage numbers and the behavior patterns exhibited in the data set.

Personalized order sets or ‘favorites’ – In our new EHR implementation, users do have the ability to derive personal order sets with modifications from the enterprise order set templates and save them as ‘favorites’ from which they can order. As of yet, our system does not account for either the definition of these templates or the usage patterns derived from their use. We do anticipate including both of these dimensions of content derivation and use in future iterations of the content recommendation tool itself. We feel that there is a rich amount of data stored in the definitions of these personalized order sets and that machine learning techniques will help us to identify clusters of user behaviors in this space.

Consolidation of feedback recommendations across template versions – In the current design of the content recommendation tool, we are not accounting for broader usage numbers that could be achieved with cleaner reconciliation of common orders across versions of order sets. While the content differences between versions of an order set may be either subtle or substantial, we anticipate that we should be able to employ some grouping logic to allow the framework to more gracefully span order set versions and hopefully make recommendations that are more crosscutting as it relates to overall usage.

Next Steps

Going forward, we expect to extend the content recommendation tool to account for recommendations derived from the authoring of and usage surrounding personalized order sets. We expect to account for recommendations that span versions of the document, as detailed above. Further, we intend to pursue similar recommendation patterns for other portions of the knowledge captured in order set definition, including order sentence details (that often contain form elements specific to the orderable), sequence, redundancy (identical or similar orders placed in multiple locations in the template), and decision support integration.

In studying the acceptability of the recommendations themselves, we intend to pursue the recommendations that go to content committees and record how they are received there and whether the content changes eventually make their way into the order set definitions themselves. As described earlier, we plan to use altered ‘thresholds’ for triggering these recommendations, both in terms of the percentages and minimum number of ordering instances needed before the reports can be created.

Finally, we intend to conduct time-based studies to see if these types of changes affect the overall efficiency of our end-users. One of the main objectives of the research is to build a framework that can maximize efficiencies in users’ interactions without compromising the value and clinical quality that order set usage can bring.

Conclusion

As part of a broader initiative aimed at creation and effective use of clinical knowledge content inside our EHR, we have built a content recommendation system that is capable of identifying opportunities for optimizing order set definitions inside our system. Our preliminary testing has shown that the system produces recommendations that users generally find reasonable, although its performance in recommending additions and preselections is higher than that of deselections and removals. We expect that our efforts in trying to automate and summarize user-derived feedback systems will leave content owners better informed and more empowered to maintain their content in useful ways over time.

Acknowledgements

We would like to acknowledge our colleagues at Cerner for their help in identifying data relevant to the order set templates, corresponding metadata, and the order set instance derivatives created from the use of these plans in the clinical data repository. Additionally, we would like to thank the authors, data managers, and clinical leaders who spent time reviewing suggested edits from the system and providing feedback.

References

1. Payne TH, Hoey PJ, Nichol P, Lovis C. Preparation and Use of Preconstructed Orders, Order Sets, and Order Menus in a Computerized Provider Order Entry System. Journal of the American Medical Informatics Association: JAMIA. 2003;10(4):322–329. doi: 10.1197/jamia.M1090.

2. Osheroff JA, Pifer EA, Sittig DF, Jenders RA, Teich JM. Clinical decision support implementers’ workbook. Chicago: Healthcare Information Management and Systems Society. 2004.

3. Wright A, Feblowitz JC, Pang JE, et al. Use of Order Sets in Inpatient Computerized Provider Order Entry Systems: A Comparative Analysis of Usage Patterns at Seven Sites. International journal of medical informatics. 2012;81(11):733–745. doi: 10.1016/j.ijmedinf.2012.04.003.

4. Asaro P, Sheldahl A, Char D. Physician Perspective on Computerized Order-sets with Embedded Guideline Information in a Commercial Emergency Department Information System. American Medical Informatics Association Symposium; 2005 October 22-26; Washington, DC. 2005. pp. 6–10.

5. Ozdas A, Speroff T, Waitman R, Ozbolt J, Butler J, Miller R. Integrating “Best of Care” Protocols into Clinicians’ Workflow via Care Provider Order Entry: Impact of Quality-of-Care Indicators for Acute Myocardial Infarction. Journal of the American Medical Informatics Association. 2006 Mar-Apr;13(2):188–96. doi: 10.1197/jamia.M1656.

6. Scheck McAlearney A, Chisolm D, Veneris S, Rich D, Kelleher K. Utilization of evidence-based computerized order sets in pediatrics. International Journal of Medical Informatics. 2006 Jul;75(7):501–12. doi: 10.1016/j.ijmedinf.2005.07.040.

7. Jacobs BR, Hart KW, Rucker DW. Reduction in clinical variance using targeted design changes in computerized provider order entry (CPOE) order sets: impact on hospitalized children with acute asthma exacerbation. Appl Clin Inform. 2012;3:52–63. doi: 10.4338/ACI-2011-01-RA-0002.

8. Thomas SM, Davis DC. The Characteristics of Personal Order Sets in a Computerized Physician Order Entry System at a Community Hospital. AMIA Annual Symposium Proceedings. 2003;2003:1031.

9. Wright A, Sittig DF. Automated development of order sets and corollary orders by data mining in an ambulatory computerized physician order entry system. AMIA Annu Symp Proc. 2006:819–23.

10. Zhang Y, Levin JE, Padman R. Data-driven order set generation and evaluation in the pediatric environment. AMIA Annual Symposium Proceedings. 2012:1469–78.

11. Hulse NC, Del Fiol G, Bradshaw RL, et al. Towards an on-demand peer feedback system for a clinical knowledge base: a case study with order sets. J Biomed Inform. 2008;41:152–64. doi: 10.1016/j.jbi.2007.05.006.

12. Bobb AM, Payne TH, Gross PA. Viewpoint: Controversies Surrounding Use of Order Sets for Clinical Decision Support in Computerized Provider Order Entry. Journal of the American Medical Informatics Association: JAMIA. 2007;14(1):41–47. doi: 10.1197/jamia.M2184.

13. Pearson SD, Goulart-Fisher D, Lee TH. Critical pathways as a strategy for improving care: problems and potential. Ann Intern Med. 1995;123:941–948. doi: 10.7326/0003-4819-123-12-199512150-00008.

14. Intermountain Healthcare – www.intermountainhealthcare.org. [Accessed on 8 Mar 2017].

15. Hulse NC, Lee J, Borgeson T. Visualization of Order Set Creation and Usage Patterns in Early Implementation Phases of an Electronic Health Record. AMIA Annual Symposium Proceedings. 2016;2016:657–666.

16. Zhang Y, Padman R, Levin JE. Paving the COWpath: data-driven design of pediatric order sets. J Am Med Inform Assoc. 2014 Apr 1; doi: 10.1136/amiajnl-2013-002316.