Abstract

Cortical activity involves large populations of neurons, even when it is limited to functionally coherent areas. Electrophysiological recordings, on the other hand, involve comparatively small neural ensembles, even when modern-day techniques are used. Here we review results which have started to fill the gap between these two scales of inquiry, by shedding light on the statistical distributions of activity in large populations of cells. We put our main focus on data recorded in awake animals that perform simple decision-making tasks and consider statistical distributions of activity throughout cortex, across sensory, associative, and motor areas. We transversally review the complexity of these distributions, from distributions of firing rates and metrics of spike-train structure, through distributions of tuning to stimuli or actions and of choice signals, and finally the dynamical evolution of neural population activity and the distributions of (pairwise) neural interactions. This approach reveals shared patterns of statistical organization across cortex, including: (i) long-tailed distributions of activity, in which quasi-silence seems to be the rule for a majority of neurons, and which are barely distinguishable between spontaneous and active states; (ii) distributions of tuning parameters for sensory (and motor) variables, which show an extensive extrapolation and fragmentation of their representations in the periphery; and (iii) population-wide dynamics that reveal rotations of internal representations over time, whose traces can be found both in stimulus-driven and internally generated activity. We discuss how these insights are leading us away from the notion of discrete classes of cells, and are acting as powerful constraints on theories and models of cortical organization and population coding.

Keywords: neural populations, cell-to-cell variability, perceptual decision-making, long-tailed distributions, neural coding, population dynamics

1. Introduction

Over the course of the last century, studies based on lesions, electrophysiology, fMRI, and other methods have quite successfully mapped out where different types of information are represented in the brain (Kandel et al., 2000). During the same period, our understanding of the single neuron and its role in the brain has increased substantially (Koch, 1999). While both research directions have created a wealth of knowledge about the organization of the brain, a large gap remains between them. At the center of this gap lie neural networks: thousands or millions of interconnected neurons, responding in myriad ways to whatever task an organism is engaged in. The number of degrees of freedom in these neural populations explodes, a phenomenon known as the “curse of dimensionality”. This dimensionality explosion makes it a tremendous challenge to unravel how information in populations of neurons is processed and represented. Three challenges follow: to understand the structure and plasticity of these networks, to link this structure and plasticity to the generated activity, and to describe and interpret this activity. This last problem will be the focus of our review.

What defines a neural population and how should we represent its activity? A neural population is a collection of single cells in a given region or area of the brain. Accordingly, the population activity is just the collection of the respective single cell activities. A common view is that if a large class of cells is activated by the same type of information, e.g., a feature of a visual stimulus, then any differences in their responses are noise that must be averaged over. At the other extreme, the details of every single neuron matter, and the activation of each neuron has to be considered separately. In this review, we will attempt to strike a balance between these extremes, and center on the statistical approach to characterizing population activity. The statistical approach aims to quantify the probability with which a set of features is represented in a population of neurons.

Such a quantitative, probabilistic description of the population activity will be useful on three fronts. First, it shows how information is embedded in population activity and may thereby expose widespread organizational principles or statistical patterns that are shared across brain areas. Second, such an understanding may help to study the computations carried out in a given circuit. Third, a statistical description of population activity imposes important constraints on network models that seek to explain how these activities are generated through the interaction of neurons. To obtain these constraints, a description of the population activity for a particular experimental condition requires more detail than simply “N/500 cells were responsive (T-test, P < 0.05)”. Instead, we need to access the overall distribution of measured features in the population, and we need to provide a specific probabilistic model of the activity.

Here, we will review what we have learnt about population activities across the cortex, from sensory to executive to motor areas. We will restrict ourselves to the representation of simple stimuli or simple actions and put a strong emphasis on data that have been collected from animals performing well-controlled behavioral tasks. We will mostly leave aside parts of the literature that are concerned with complex stimuli (such as natural stimuli), complex movement sequences etc. Focusing on one dimensionality explosion, namely the one we face when observing thousands of neurons, already provides ample perplexity. We will therefore disregard that second dimensionality explosion neuroscience is struggling with, namely the innumerable sensory stimuli or motor actions that may shape and modify the population activity.

The review is largely organized by model complexity. First, in Section 2, we discuss and specify the statistical approach to describing population activity, emphasizing its usefulness and its limitations. In Section 3, we review what is known about distributions of firing rates. In Section 4, we look beyond firing rates and review the distributions of second- and higher-order statistics of spike trains. In Section 5, we review how neural populations are tuned to stimuli or actions and look into distributions of tuning curves and other encoding features. In Section 6, we take time into consideration and review what is known about the dynamics of neural population activity. Finally, in Section 7, we discuss more recent attempts to obtain full characterizations of the population activity that include (pairwise) neural interactions and correlations.

2. The statistical approach to population activity

2.1. The statistical characterization of cell-to-cell variability

For concreteness, imagine an animal that is engaged in a task in which we monitor a set of sensory stimuli s and a set of movements or actions a (Figure 1). In such a setting, we can often record the simultaneous activity of many neurons in a given patch of cortex. In analyzing the activity of these neurons in relation to the chosen set of stimuli or actions, we are likely to encounter strong cell-to-cell variability. Even if two cells are responsive to the same set of stimuli, their precise tuning is likely to differ. A third cell may show yet another tuning to the same stimuli and so on. How are we to characterize these response differences?

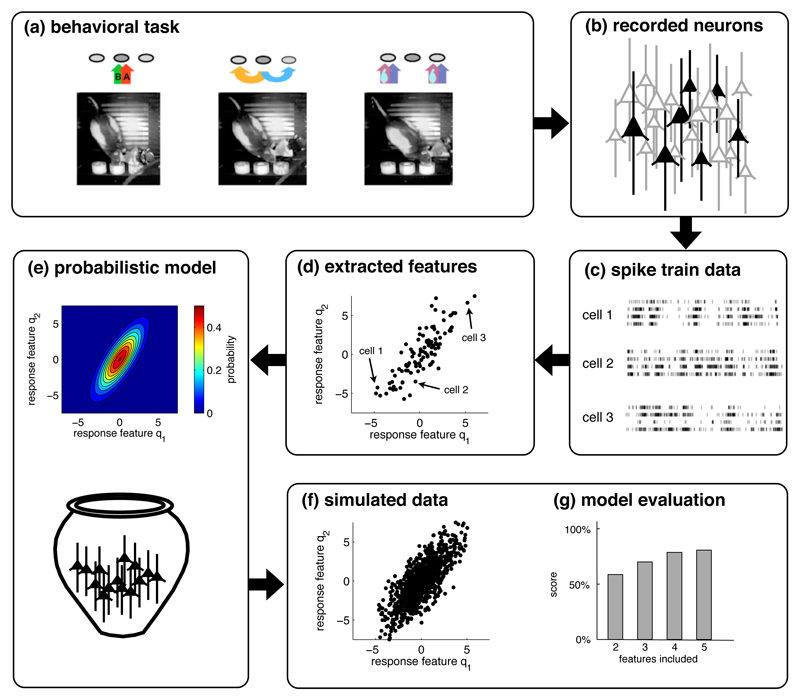

Figure 1. The statistical approach to population responses.

(a) We assume that data are recorded from awake behaving animals engaged in simple tasks. (Figure adapted from Feierstein et al. 2006). (b) The spike trains of a small subset of neurons in one area are recorded. (c) Recordings are sorted by trials over identical conditions. (d) For each neuron, we extract certain features from the spike trains, e.g., the stimulus-dependence of the firing rate or of the change in firing rate. (e) The distribution of these features across the recorded population leads to a probabilistic model of the population response. Such a model is usually generative, so that we can simulate data by randomly drawing neurons from the model (f), here depicted as an urn. (g) The simulated data should ideally be similar to the original data. This similarity is then quantified to evaluate the probabilistic model.

In the statistical approach to population activity, we neglect the identities of the neurons and only characterize the statistics or probabilities of their activity or some feature thereof. Assume, for instance, that we are measuring spontaneous activity in a region of interest. Focusing on a time window of length T = 10 seconds, how likely are we to find cells that fire exactly r = 24 spikes? How likely to find cells that fire r = 25 spikes? To answer that question, we can simply construct a distribution p(r) that measures the probability of observing the response r in the given scenario. The distribution will typically depend on one or more parameters, such as the mean and standard deviation in the case of a Gaussian, or just the mean in the case of an exponential distribution.
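As a minimal sketch of this construction, the empirical distribution p(r) can be obtained by tallying spike counts across neurons while ignoring which neuron produced which count. The spike counts below are made-up illustrative values, and the function name `empirical_count_distribution` is our own; neither comes from any data set discussed in this review.

```python
from collections import Counter

def empirical_count_distribution(spike_counts):
    """Return {r: fraction of neurons that fired exactly r spikes}."""
    n_neurons = len(spike_counts)
    tallies = Counter(spike_counts)
    return {r: tallies[r] / n_neurons for r in sorted(tallies)}

# Hypothetical spike counts of 10 neurons in a T = 10 s window
# (illustrative values only, not recorded data).
counts = [0, 0, 1, 0, 3, 24, 1, 0, 25, 2]
p_r = empirical_count_distribution(counts)

# Estimated probability of finding a cell with exactly 24 or 25 spikes.
print(p_r.get(24, 0.0), p_r.get(25, 0.0))
```

With so few neurons the estimate is of course very coarse; a parametric model of p(r), as discussed in the text, smooths over the empty bins.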

The situation becomes slightly more complicated if we consider that neural activities change with the presentation of a stimulus, the execution of an action, or simply the passage of time. Imagine we were to measure the responses of cells to two different stimuli—such as two visual gratings with different orientations. In this case, we can summarize a neuron’s response in a two-dimensional vector r = (r1, r2), where r1 denotes the response (such as a firing rate) to the first orientation, and r2 denotes the response to the second orientation. In turn, we can ask what is the probability of observing a particular response r in the area we are recording from? Just as above, this amounts to characterizing the (now bi-variate) distribution p(r) = p(r1, r2).

As the number of stimuli or actions increases, as we start considering temporal changes in activity, or the precise timing of spikes, the distributions become distributions over many variables, and the naive construction of distributions p(r) through histograms and the like becomes unfeasible. One way to address this problem is to construct quantitative models of the cells’ responses. For instance, we could describe the tuning of each cell to the set of stimuli with a few parameters, such as the width of tuning etc. In turn, we obtain a set of tuning parameters q = (q1, q2, …) for each neuron. In a second step, we can then seek to quantify the distribution p(q) = p(q1, q2, …) of these parameters over the population.

A problem of this two-step approach is that small modeling errors on the single-cell level may add up to large modeling errors on the population level. In other words, even though we may think that we are extracting the right features to describe the responses of single neurons, we may still miss important features of the population response. This problem can be avoided by directly modeling the population activity. For instance, we could use dimensionality reduction techniques such as principal component analysis to extract the relevant features from the data. In either case, we write p(q) and use this distribution as a proper stochastic description of the population activity.

The statistical approach to characterizing population activity is illustrated in Figure 1. We record responses from a subset of the actual population (Figure 1b–c), extract the features of the cells’ responses and plot them for the whole population (Figure 1d), and then fit a probabilistic model to the population data (Figure 1e). In turn, if we were to draw random samples from this probabilistic model (Figure 1f), these random samples should look similar, in a well-specified statistical sense, to the recorded data. Importantly, in the last step we need to evaluate the probabilistic model, ideally on a new set of data. Such evaluations essentially ask how “likely” the new data are under the model, relative to the (known) likelihood of data simulated from it (Figure 1g).
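The fit-simulate-evaluate loop can be sketched in a few lines. The example below is only one possible reading of the evaluation step: it assumes a log-normal model of firing rates, uses simulated numbers as a stand-in for recorded data, and compares the mean log-likelihood of held-out data with that of data sets drawn from the fitted model. All function and variable names are our own.

```python
import math
import random

def fit_lognormal(rates):
    """Maximum-likelihood mean and s.d. of log(rate); rates must be > 0."""
    logs = [math.log(r) for r in rates]
    mu = sum(logs) / len(logs)
    sigma = math.sqrt(sum((x - mu) ** 2 for x in logs) / len(logs))
    return mu, sigma

def mean_loglik(rates, mu, sigma):
    """Mean log-likelihood per neuron under a log-normal model."""
    total = 0.0
    for r in rates:
        z = (math.log(r) - mu) / sigma
        total += -math.log(r * sigma * math.sqrt(2.0 * math.pi)) - 0.5 * z * z
    return total / len(rates)

rng = random.Random(0)
# Stand-in for recorded firing rates (assumption: simulated, not real data).
recorded = [rng.lognormvariate(0.0, 1.0) for _ in range(400)]
train, held_out = recorded[:200], recorded[200:]

mu, sigma = fit_lognormal(train)                  # fit the model (Figure 1e)
held_out_ll = mean_loglik(held_out, mu, sigma)    # score the new data

# Reference values: likelihood of data sets simulated from the model (Figure 1f-g).
simulated_ll = []
for _ in range(500):
    sample = [rng.lognormvariate(mu, sigma) for _ in range(len(held_out))]
    simulated_ll.append(mean_loglik(sample, mu, sigma))

# Fraction of simulated data sets that are no more likely than the held-out data.
frac_lower = sum(ll <= held_out_ll for ll in simulated_ll) / len(simulated_ll)
print(f"held-out mean log-likelihood: {held_out_ll:.3f}, "
      f"fraction of simulations at or below it: {frac_lower:.2f}")
```

A fraction close to 0 would indicate that the new data are much less likely than data generated by the model, i.e., a poor fit. Real recordings containing neurons with zero spikes would need a model that assigns probability to a rate of zero, which this sketch does not handle.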

Why would such an approach, in which we neglect the identity of neurons, make any sense? Several reasons: first, if there are thousands or millions of neurons to consider, we can no longer tend to each individual neuron. Other methods are needed to study what is happening and the statistical approach is one of those methods, presumably one of the simplest. Second, as we will see, the statistical approach can highlight commonalities in the properties of population responses across cortex, which in turn yields important insights into the cortical architecture, as well as constraints for network models of neural systems. Third, to the extent that there are different classes of cells, i.e., different types of neurons with clearly distinguishable response patterns, these will be identified by a statistical approach, which may allow us to link response patterns to anatomical features.

2.2. The sampling problem: recording methods and their biases

Recording a full neural population is virtually impossible with current technologies. We must therefore rely on inferring the properties of a population from a small sample of recorded neurons. This approach will generally work if the sampling is representative, so that the statistical properties of the few neurons we record are similar to the statistical properties of the population as a whole. Consequently, statistical approaches require a precise definition or boundary for the population, and a recording technique that samples neurons randomly and uniformly.

Historically, most studies used extracellular recordings with metal microelectrodes and relied on neural activity for localizing neurons and determining their responsiveness to stimuli or tasks. Consequently, electrophysiological studies are usually biased, in that the most active neurons are self-selected for participation in population analyses, and the rest of the neurons in a region of interest are omitted (see e.g. Olshausen and Field, 2005). This poses a serious problem since there is good reason to believe that most neurons in mammalian cortex are almost silent: from analyses of the expected numbers of neurons within the recording radius of a microelectrode, it has long been suspected that most neurons in cortex are not firing, otherwise an order of magnitude more spikes should be visible (see Shoham et al., 2006, for a discussion of this “dark neuron” problem). However, these more silent neurons are largely ignored with classical electrophysiological methods.

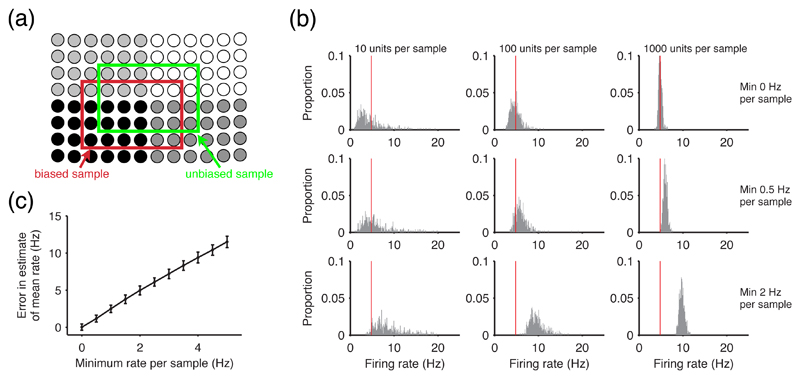

The problem of biased sampling is illustrated in Figure 2. Traditionally, population activity has been characterized by estimating a mean and standard error of the firing rate from pooled single neuron samples. However, any activity-dependent bias in the sampling of cells causes the sample mean to converge, with an increasing number of samples, to a value higher than the true population mean. Consequently, the reported mean firing rates are too high and give little clue to the preponderance of low-firing neurons.

Figure 2. The effect of recording biases on the estimation of distributions.

(a) Statistical approaches rely on unbiased sampling. Within a population, neurons usually have different activity levels, here shown as different grey levels. Most extracellular recording techniques are more likely to find active cells, thus providing a biased sample (red box) as opposed to the desired unbiased sample of the population (green box). (b) A recording bias in single-cell recordings causes systematic errors in estimating population mean firing rates. Using a log-normal distribution of firing rates across neurons (see Section 3.1) to describe a simulated population, we took 500 samples of N individual firing rates; we repeated this for different levels of bias in the sample, by only taking samples above a certain firing rate, mimicking the bias in single-cell recordings. We plot the histograms of every sample’s mean rate, for a range of sample sizes and biases; the red line gives the mean value of the population distribution. The top row, with no bias, shows the central limit theorem in action: increasing the sample size causes the estimates of the mean firing rate to converge around the true mean value. However any bias in the sampling causes the convergence to occur around an incorrect mean firing rate for the population. (c) Increasing bias increases error in the estimate. We plot the mean ± s.d. of the error in estimating the true firing rate mean, taken over every sample for N = 1000 units. The error increases linearly, as expected, and is approximately twice the size of the recording bias.
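The kind of simulation described in the caption of Figure 2 can be sketched as follows. This is not the code used for the figure: the log-normal parameters, the bias thresholds, and the population size are assumptions chosen for illustration, and the recording bias is mimicked simply by discarding every neuron whose rate falls below a threshold.

```python
import random
import statistics

rng = random.Random(1)
# Assumed parameters of the log-normal rate distribution (not taken from Figure 2).
MU, SIGMA = 0.0, 1.0
population = [rng.lognormvariate(MU, SIGMA) for _ in range(100_000)]
true_mean = statistics.fmean(population)

def sample_mean_stats(threshold, n_units, n_samples=500):
    """Mean and s.d. of the sample means when only rates >= threshold are recordable."""
    eligible = [r for r in population if r >= threshold]
    means = [statistics.fmean(rng.choices(eligible, k=n_units))
             for _ in range(n_samples)]
    return statistics.fmean(means), statistics.stdev(means)

results = {}
for threshold in (0.0, 0.5, 1.0):      # recording bias: minimum detectable rate (spikes/s)
    for n_units in (10, 100, 1000):    # sample size N
        results[(threshold, n_units)] = sample_mean_stats(threshold, n_units)
        avg, sd = results[(threshold, n_units)]
        print(f"bias >= {threshold:.1f}  N = {n_units:4d}  "
              f"estimated mean = {avg:.2f} +/- {sd:.2f}  (true mean = {true_mean:.2f})")
```

Under these assumptions, larger samples narrow the spread of the estimates at every bias level, but only the unbiased case narrows around the true mean; with a threshold in place, the estimates settle on a value that is too high.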

One way of avoiding this recording bias is to isolate neurons independently of their responsiveness to stimuli or tasks. This approach is common to tetrode recordings or multi-cell arrays, for instance. Nonetheless, in both cases neurons are still isolated by activity, even if that activity is not directly task-relevant, and less active neurons risk being overlooked. More recent recording methods such as patch-clamping and calcium-imaging circumvent the problem of activity bias completely. As we will see in Section 3.1, patch-clamp recordings provide a different picture of population activity, with many more silent neurons included, thereby confirming the suspicions mentioned above.

In principle, of course, any recording technique is likely to introduce a bias. Patch-clamping may bias the sampling towards larger neurons or reduce their firing rates, for instance, and calcium-imaging may bias the sampling towards neurons that favour expression or uptake of fluorescent markers. However, these biases are likely to be less important than the activity-dependent bias of traditional electrophysiology. Yet even without any recording bias, neurons that become active only under very specific circumstances are notoriously hard to characterize. In the visual system, for instance, neurons with smaller receptive fields are less likely to be discovered or characterized than neurons with larger receptive fields, simply because they are less responsive (Olshausen and Field, 2005). These problems should be kept in mind when characterizing populations of neurons.

2.3. The sampling problem: simultaneous versus pooled recordings

In most studies, only a few neurons are recorded at the same time, and estimates of the population properties are obtained via pooling data across recording sessions. This approach is based on two crucial assumptions. The first assumption is that the population activity does not change over time. Since large shifts in population responses have only been observed in the context of learning, this assumption seems reasonable for a given task. The second assumption is that the activity of single neurons does not change from trial to trial. This, however, is usually not true as neurons exhibit strong trial-to-trial variability, thus leading to a potential confound as soon as we start characterizing populations by pooling over sequential experiments.

From a statistical point of view, we can remedy this problem by characterizing a neural response through a firing rate that is averaged over trials, and through higher-order statistics of the spike trains such as CVs, Fano factors etc. However, even then the estimate of a neuron’s firing rate will come with error bars. Since the errors differ between neurons, they will lead to a distribution of responses even if there were no cell-to-cell variability at all. Accordingly, whenever we compute neural responses by averaging over trials, we introduce artificial variability in the responses, an artefact that needs to be taken into account and corrected for in order to distinguish genuine cell-to-cell variability from ordinary trial-to-trial variability.<sup>2</sup> While the effect is likely to be small if responses are averaged over a large number of trials, it does become relevant if only a few trials are used for averaging (e.g., fewer than ten) and may then lead to an artificial widening of the population distributions. That said, we note that this effect has largely been ignored in the literature, and we simply caution the reader that the true distributions may be slightly narrower than some of those reported in our review.
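The size of this widening can be made explicit under a simple assumption that is ours, not a claim about any particular data set: suppose neuron i fires as a Poisson process with true rate r_i, and its rate is estimated from n trials of duration T, with c_ik the spike count on trial k. The law of total variance then separates the two sources of variability:

```latex
\begin{align}
  \hat{r}_i &= \frac{1}{nT}\sum_{k=1}^{n} c_{ik},
  \qquad c_{ik} \sim \mathrm{Poisson}(r_i T), \\
  \mathrm{Var}\!\left[\hat{r}_i \mid r_i\right] &= \frac{n\, r_i T}{(nT)^2} = \frac{r_i}{nT}, \\
  \mathrm{Var}\!\left[\hat{r}\right]
  &= \underbrace{\mathrm{Var}\!\left[r\right]}_{\text{cell-to-cell}}
   + \underbrace{\frac{\mathbb{E}\!\left[r\right]}{nT}}_{\text{trial-to-trial}} .
\end{align}
```

The second term is the artificial widening: it shrinks as 1/n with the number of trials, and under the Poisson assumption it could be subtracted from the measured variance to recover the cell-to-cell part. Spike trains that are more or less variable than Poisson would change the second term accordingly.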

Our review focuses on characterizing cell-to-cell variability, and we will write p(r) to refer to the probability of observing a certain response r within a population of cells. Once in a while, we also need to specify the above-mentioned trial-to-trial variability. In these cases, we will use the subscript t and write pt(r) to denote the probability of observing the response r of a given neuron within a set of (identical) trials.

3. Population distributions of firing rates

Perhaps the simplest characterisation of a neuron population is the distribution of its activity, p(r), where r is some measure of firing rate. Surprisingly, such distributions have only been characterized in a few cases. Standard practice in electrophysiological studies has been to report recorded firing rates across all neurons as the arithmetic mean ± standard error, rather than fit a probabilistic model p(r). Seemingly few studies have directly reported the actual distribution of firing rates across all recorded neurons (whether single-neuron or multi-neuron recording). As we will see below, where they have been reported, the mean firing rate tells us little about the population, and firing rate distributions are clearly not Gaussian. Moreover, we will see that whether characterising spontaneous activity, stimulus-evoked activity, or activity related to the execution of actions, the distributions are remarkably similar and robust.

3.1. Population activity has a (very) long-tailed distribution, across all of cortex

Studies explicitly reporting and examining distributions of firing rates in cortical populations are mostly recent (see DeCharms and Zador (2000) for an earlier discussion). They have consistently described distributions dominated by neurons with low firing rates – low in comparison to classical single electrode recording (Kerr et al., 2005) – with a small set of neurons scattered over orders of magnitude higher rates, forming a long tail.<sup>3</sup> Shafi et al. (2007) re-analysed a data-set of extracellular single neuron recordings from awake monkeys, and reported long-tailed distributions of firing rates in both parietal and prefrontal cortices. Using cell-attached recording in awake mice, O’Connor et al. (2010) reported that barrel cortex had a very long-tailed distribution of firing rates when averaged over trial epochs. In perhaps the only study to explicitly fit a probabilistic model p(r), Hromadka et al. (2008b) used cell-attached recording in awake rat A1 to show that log-normal, rather than exponential, functions best fit the distributions of firing rates across all task stages.

Bringing together the results of these studies with further multi-neuron recording data made available to us, we first survey the distributions of firing rates r and the probabilistic models p(r) we can use to describe those distributions, covering regions from all across cortex. We show the ubiquity of the long-tailed distribution, and also that the most common model points to a non-negligible peak at very low firing rates.

3.1.1. Spontaneous and overall activity

To get a first statistical picture of the population, we start by building a simple distribution of the population activity p(r), where we ignore the animal’s behaviour. This covers a wide range of situations we label for convenience as “spontaneous”: recordings under anaesthesia, during sleep, and quiet wakefulness, as well as “baseline” epochs of recordings before a stimulus presentation or a movement. It also covers what we term here “aggregate” recordings, analysed across presentations of stimuli or productions of actions. We group these as both are neural activity analyses without alignment to a component of a task (stimulus, action, or delay).

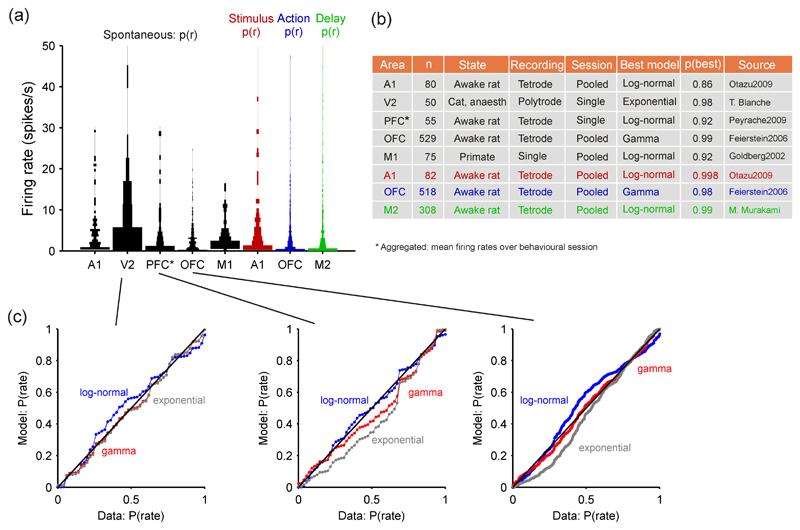

Figure 3a shows how neural populations’ distributions of spontaneous and aggregate firing rates are consistently right-skewed and long-tailed in examples from across the whole cortex: from sensory cortices (A1, V2), through “higher” prefrontal regions (OFC, PFC), to primary motor cortex (M1). These examples also show the consistency across species, recording technique and vigilance state – under anaesthetic, awake and resting, or awake and behaving. In every case, the distributions are dominated by neurons firing less than 1 spike/s, with few neurons firing at rates an order of magnitude higher.

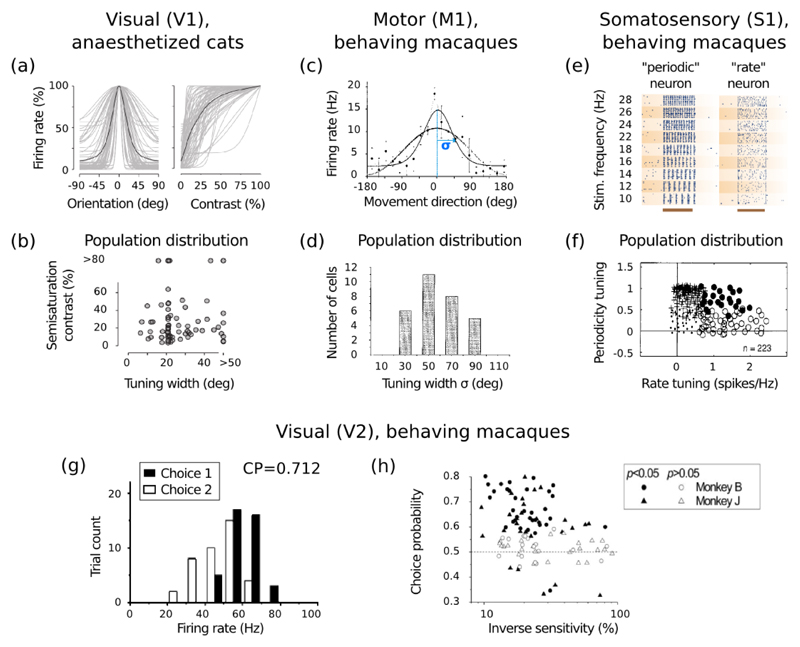

Figure 3. Distributions of firing rates across neural populations in cortex.

(a) Violin plot (Allen et al., 2012) of firing rate distributions across cortical regions, in both spontaneous and task-aligned conditions. Width of bar is proportional to the fraction of neurons in that bin. Optimal histogram widths separately derived for each data-set (Wasserman, 2004). (b) Summary of data-sources in panel (a) and best-fit model to each data-set. Exponential, gamma, and log-normal distributions were fitted using maximum likelihood; best-fitting model was chosen using the Bayesian Information Criterion; p(best): the posterior probability of that being the best model within those tested (Wagenmakers and Farrell, 2004; Wasserman, 2004). The Session column indicates whether the data were from a single recording session or pooled across sessions and/or animals. (c) Probability-probability plots showing the correspondence between the cumulative probability distributions of the firing rate data and of the fitted models. A perfect fit would lie on the diagonal. Left: the V2 data was the only data-set best-fit by the exponential distribution; Middle: the prefrontal cortex data was best-fit by a log-normal distribution; Right: the orbitofrontal cortex data-set was best-fit by a gamma distribution. These examples show not only the best fitting model, but also that it was a good fit to the data. Data sources: A1, from (Otazu et al., 2009), data supplied by Gonzalo Otazu; V2: data recorded by Tim Blanche (see Blanche et al., 2005), available from crcns.org; PFC: from (Peyrache et al., 2009), data supplied by Adrien Peyrache; OFC, from (Feierstein et al., 2006), data supplied by Claudia Feierstein; M1, extracted from Figure 6A of (Goldberg et al., 2002); M2: data supplied by Masayoshi Murakami and Zach Mainen, unpublished.

Deciding which statistical models p(r) best describe the firing rate distributions, whether these are different across cortical areas, and what that means, are open problems. For the firing rate data available to us, we fitted exponential, log-normal, and gamma probability distributions as examples of general potential models.<sup>4</sup> As firing rate data can only take zero or positive values, one might consider the exponential distribution to be the null model for such distributions: deviations from this distribution are then informative about the relative spread of firing rates into the lower-rate peak or higher-rate tail of the distribution.
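A model comparison of this kind can be sketched with the standard library alone. The example below is an illustration under stated assumptions, not the analysis behind Figure 3: the rates are simulated from a log-normal distribution, the gamma shape is found by a simple one-dimensional search rather than a dedicated optimizer, and the "probability of being the best model" is approximated by normalized BIC weights. All function names are our own.

```python
import math
import random

def loglik_exponential(x):
    """Maximized log-likelihood and parameter count (MLE rate = 1 / mean)."""
    n, m = len(x), sum(x) / len(x)
    return -n * (math.log(m) + 1.0), 1

def loglik_lognormal(x):
    """Maximized log-likelihood and parameter count (MLE on log-rates)."""
    logs = [math.log(v) for v in x]
    n = len(x)
    mu = sum(logs) / n
    var = sum((l - mu) ** 2 for l in logs) / n
    return -sum(logs) - 0.5 * n * (math.log(2.0 * math.pi * var) + 1.0), 2

def loglik_gamma(x):
    """Maximized log-likelihood and parameter count; scale is fixed at mean / shape."""
    n = len(x)
    m = sum(x) / n
    mean_log = sum(math.log(v) for v in x) / n

    def profile(log_k):  # log-likelihood as a function of log(shape) only
        k = math.exp(log_k)
        return n * ((k - 1.0) * mean_log - k - k * math.log(m / k) - math.lgamma(k))

    lo, hi = math.log(1e-3), math.log(1e3)
    for _ in range(200):  # ternary search; the profile has a single maximum
        a, b = lo + (hi - lo) / 3.0, hi - (hi - lo) / 3.0
        if profile(a) < profile(b):
            lo = a
        else:
            hi = b
    return profile(0.5 * (lo + hi)), 2

rng = random.Random(2)
# Simulated stand-in for strictly positive firing rates (not recorded data).
rates = [rng.lognormvariate(-1.0, 1.2) for _ in range(1000)]
n = len(rates)

fits = {"exponential": loglik_exponential(rates),
        "gamma": loglik_gamma(rates),
        "log-normal": loglik_lognormal(rates)}
bic = {name: k * math.log(n) - 2.0 * ll for name, (ll, k) in fits.items()}
best = min(bic, key=bic.get)

# BIC weights: approximate posterior probability of each model within the tested set.
raw = {name: math.exp(-0.5 * (b - bic[best])) for name, b in bic.items()}
weights = {name: w / sum(raw.values()) for name, w in raw.items()}
print(best, {name: round(w, 3) for name, w in weights.items()})
```

Neurons with a measured rate of exactly zero cannot enter the log-normal or gamma likelihoods as written, so real data would need either a small offset or an explicit probability mass at zero; which choice is appropriate depends on the data set.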

We found that all three distributions occur in the cortical activity data-sets (Figure 3b). Like Hromadka et al. (2008b), we found that log-normal models best fit the firing rate distributions in A1, as well as PFC and M1, suggesting that there is a non-negligible peak of very low firing rates in each of these regions; the other two models under-estimated p(r) over the middle range of the distributions (Figure 3c). The distribution of firing rates in V2 was best fit by an exponential model, suggesting just a long tail (Figure 3c). The distribution of firing rates in OFC was best fit by a gamma model: the exponential model under-estimated the mid-range of the distribution and the log-normal model over-estimated the tail of the distribution. Are these differences in distribution between cortical regions meaningful? This is unknown: they could reflect differences in vigilance state (awake vs anaesthetised), recording type, sample size and construction (pooled vs single recording), or spatial sampling (see results on layer-specificity below). Nonetheless, they may also reflect different characteristics of the cortical micro-circuits in different areas.

As we noted in Section 2.2, such long-tailed distributions are surprising from the perspective of classical single neuron recording studies, but not surprising from the perspective that most neurons in cortex must be almost silent, given the likely recording radius of electrode technology (Shoham et al., 2006). Consequently, we expect most cortical neurons to be at the sub-1 Hz end of the firing rate distribution, and the few neurons that are more active ensure long-tailed distributions of firing rate everywhere in cortex. Such long-tailed distributions, dominated by ultra-low rate neurons, are consistent with estimates that mean rate over all cortex must be very low due to metabolic demands (Laughlin and Sejnowski, 2003; Lennie, 2003). What is perhaps genuinely surprising is how often an exponential model is rejected: low firing rates dominate, but not arbitrarily low ones.

3.1.2. Evoked activity and activity during task-engagement

Given how little firing there is in the spontaneous state, one may wonder what happens when the respective piece of cortex becomes engaged, e.g., through sensory stimulation, or through a particular behavioral task that an animal is carrying out. Ignoring the precise tuning of cells (see Section 5) or their temporal dynamics (see Section 6), this is simply asking what we know about the distribution of firing rates, p(r), when a single stimulus is presented, or when a particular action is taken. From current evidence, it seems all such distributions are also long-tailed – the majority of neurons have a low firing rate, and only a small subset display strong activity aligned to the task component.

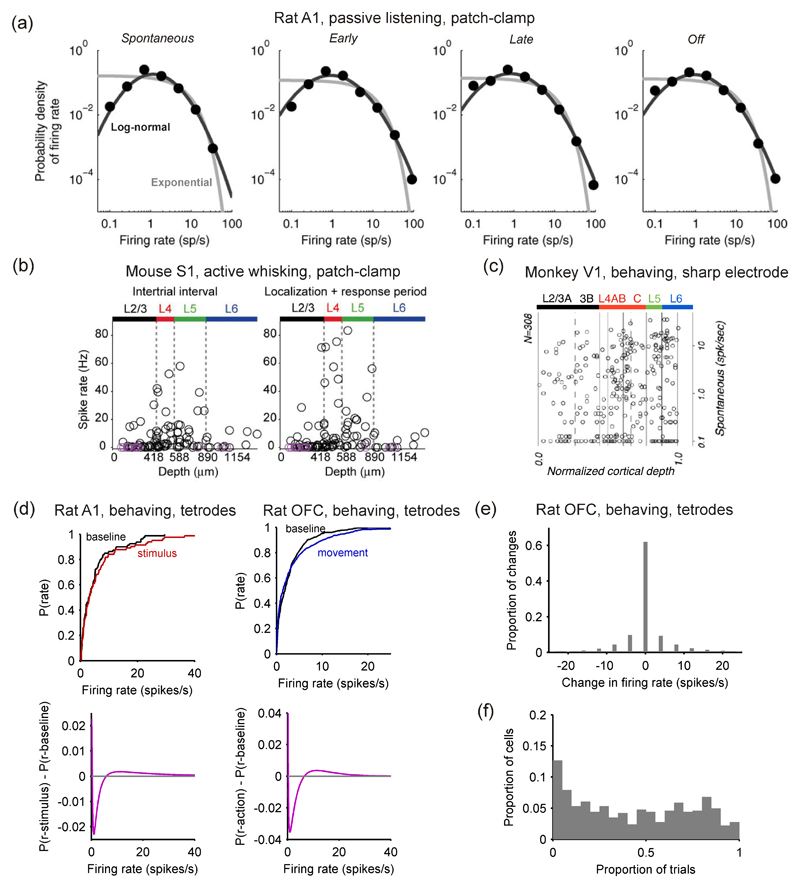

Hromadka et al. (2008a) pooled single cell-attached recordings to show that A1 in awake rats had long-tailed firing rate distributions aligned to stimulus presentations (Figure 4a); as for their recordings of spontaneous activity, they reported good fits by log-normal distributions. Using similar recording methods, O’Connor et al. (2010) reported long-tailed distributions of firing rates in mouse barrel cortex (S1) aligned to stimulus contact by the whiskers (Figure 4b, right). Shafi et al. (2007) pooled single-cell extracellular recordings from monkeys performing a delayed-match-to-sample task to show a long-tailed distribution of firing rates during the delay period.

Figure 4. Evoked changes from baseline firing-rate distributions are small.

(a) Distribution of spike counts across neurons in the auditory cortex (A1) of awake rats responding to a pure tone pip. Activity distributions are shown before (‘spontaneous’), during (‘early’, ‘late’) and after (‘offset’) the tone pip. Best model fits are provided, using respectively an exponential (gray) and a log-normal (black) distribution. The log-normal provides a markedly better fit. Loose patch clamp recordings, from Hromadka et al. (2008a). (b) Distribution of spike counts in the layers of barrel cortex (S1) of awake mice involved in a tactile detection task. The mean level of activity is plotted for each neuron against its cortical depth, both during (‘localization+response’) and between (‘intertrials’) periods of whisker contact with the stimulus. Loose patch clamp recordings from O’Connor et al. (2010). (c) Distribution of firing rates for spontaneous (baseline) activity in each layer of area V1 of monkeys observing a uniform-luminance screen; note firing rates are plotted on a log-scale (single extracellular micro-electrode recordings; adapted from Ringach et al., 2002). (d) Changes in mean firing rate distributions for two data-sets from Figure 3: stimulus-evoked changes in A1 (tetrodes; data from Otazu et al. 2009), and movement-evoked changes in OFC (tetrodes; data from Feierstein et al. 2006). Top: the empirical cumulative probability distributions for the baseline (‘spontaneous’) and task-aligned firing rates. Bottom: difference in probability distribution functions for the best-fitting models to spontaneous activity, p(r – baseline), stimulus-evoked activity, p(r – stimulus), or action-related activity, p(r – action) (the models are given in Figure 3b), showing the smooth extent of the increase in higher firing rates in both A1 and OFC. 
(e) From rat OFC data (Feierstein et al., 2006), the distribution of rate changes between baseline and movement for every sampled firing rate (every neuron in every trial): no change occurred between baseline and movement in 62.1% of samples. (f) From rat OFC data (Feierstein et al., 2006), the distribution of the proportion of trials on which each neuron showed a difference in rate between baseline and movement; median proportion was 0.42.

We confirmed the generality of these prior results by fitting distribution models to firing-rate data from each of the stimulus presentation, action, and delay parts of different tasks. For stimulus-aligned rates, we used recordings of rat A1 after presentation of a tone stimulus in a two-choice decision-making task (data from Otazu et al., 2009). For action-aligned rates, we used recordings of rat orbitofrontal cortex neurons during the movement epoch (move left or right) of a two-choice decision-making task (data from Feierstein et al., 2006). For delay-aligned rates, we used recordings from rat area M2 taken during a reinforcement task with delay, with firing rates calculated from a waiting period in contact with a nose-port before the rat exited to collect reward (unpublished data supplied by Masayoshi Murakami and Zach Mainen). Figure 3a shows that all three firing rate data-sets had long-tailed distributions. When we fitted the same exponential, gamma, and log-normal distribution models, we found best fits by the log-normal model for stimulus (A1) and delay (M2) components, and the gamma model for the action component (OFC) (Figure 3b). Again, silence reigns, with the majority of neurons firing less than 1 spike per second.
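As an illustration of this kind of model comparison, the following Python sketch fits exponential and log-normal models by maximum likelihood and compares them. It is not the analysis code behind Figure 3: the rates are synthetic, the gamma model is omitted for brevity (its maximum-likelihood fit needs a numerical solver), the use of AIC as the comparison criterion is an assumption, and all function names are hypothetical. Neurons with a rate of exactly zero would need separate handling, since the log-normal model is defined only for positive rates.

```python
import math
import random


def fit_exponential(rates):
    """Maximum-likelihood exponential fit; returns (rate parameter, log-likelihood)."""
    lam = len(rates) / sum(rates)
    loglik = sum(math.log(lam) - lam * r for r in rates)
    return lam, loglik


def fit_lognormal(rates):
    """Maximum-likelihood log-normal fit; returns ((mu, sigma), log-likelihood)."""
    logs = [math.log(r) for r in rates]  # requires strictly positive rates
    mu = sum(logs) / len(logs)
    sigma = math.sqrt(sum((x - mu) ** 2 for x in logs) / len(logs))
    norm = math.log(sigma * math.sqrt(2.0 * math.pi))
    # log pdf of a log-normal at r, written in terms of x = log(r)
    loglik = sum(-x - norm - (x - mu) ** 2 / (2.0 * sigma ** 2) for x in logs)
    return (mu, sigma), loglik


def aic(loglik, n_params):
    """Akaike information criterion; lower is better (assumed criterion)."""
    return 2 * n_params - 2 * loglik


# Synthetic stand-in for mean firing rates in spikes/s; NOT data from any study.
rng = random.Random(0)
rates = [rng.lognormvariate(-0.5, 1.5) for _ in range(2000)]

_, ll_exp = fit_exponential(rates)
(mu_hat, sigma_hat), ll_logn = fit_lognormal(rates)
aic_exp, aic_logn = aic(ll_exp, 1), aic(ll_logn, 2)
frac_below_1hz = sum(r < 1.0 for r in rates) / len(rates)

print("AIC exponential:", aic_exp)
print("AIC log-normal: ", aic_logn)
print("fraction of neurons below 1 spike/s:", frac_below_1hz)
```

Because the synthetic rates are drawn from a log-normal distribution, the log-normal model should win here by construction; with real data the outcome is an empirical question, as the OFC result above shows.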

Both the analyses of Hromadka et al. (2008a) (Figure 4a) and our analysis of data from Otazu et al. (2009) found clear best-fits of a log-normal distribution to cortical firing rates in rat A1. This consistency is reassuring as the two data-sets were collected respectively using single cell (patch-clamp) and multiple cell (tetrode) recording technologies, and in different behavioural states, passive receipt of stimuli against active engagement in a decision-making task. For rat A1 at least we thus have converging evidence for log-normal firing rate distributions.

3.1.3. Layer-specific differences in spontaneous activity

A confound in many studies of cortical activity in vivo is that we do not know the layer(s) from which the neurons are being recorded. This confound is important because different layers have different levels of activity. Barth and Poulet (2012) have provided a comprehensive review of differences in average firing rate between layers; we note a few key papers here. In a pioneering use of calcium-imaging in vivo, Kerr et al. (2005) reported that layer 2/3 in both A1 and S1 of the rat had very sparse firing rates under anaesthesia, with a maximum of 0.1 Hz over ten minutes of recording across 212 neurons (their Figure 6G). Such low rates were confirmed by simultaneous cell-attached or whole-cell recordings from a sample of those neurons; later studies confirmed that layer 2/3 neurons have substantially lower rates than layer 4 and 5 neurons for both spontaneous and stimulus-evoked firing (de Kock et al., 2007; Sakata and Harris, 2009). Similarly, Beloozerova et al. (2003) reported significantly more activity in layer 5 neurons than layer 6 neurons of rabbit primary motor cortex during both rest and locomotion. It seems that the difference in average rates extends to higher moments too: the distributions p(r) differ between layers. Ringach et al. (2002) constructed a comprehensive map of the layer-by-layer differences in spontaneous firing rates of monkey V1 (Figure 4c), showing that, while all layers were dominated by low firing rates of less than 1 Hz, layers 4-6 had markedly more neurons with higher firing rates than layers 2/3. A direct comparison by Hromadka et al. (2008b) found that the firing rate distributions in rat A1 were consistently long-tailed in each layer (2/3, 4, 5 and 6), and suggested, though this was not explicitly tested, that the distributions differed between layers. O’Connor et al. (2010) reported that barrel cortex had very long-tailed distributions in each layer (2/3, 4, 5, and 6); moreover, the distributions were significantly different between layers, with layers 2/3 and 6 showing lower rates than layers 4 and 5 (the raw mean firing rate data are shown in Figure 4b). Unfortunately, this is the current extent of layer-specific delineation of distributions; layer-specific distributions of the more complex models considered later in this review (of spike-train structure, feature encoding, temporal dynamics) have rarely been attempted. Notable exceptions include the study of Ringach et al. (2002), which characterised the layer distributions of various metrics capturing V1 neurons’ responses to moving gratings. Together, the work of Ringach et al. (2002), Hromadka et al. (2008b) and O’Connor et al. (2010), and the review of Barth and Poulet (2012), provide the first insights into layer-specific distributions, and indicate that far more remains to be discovered.

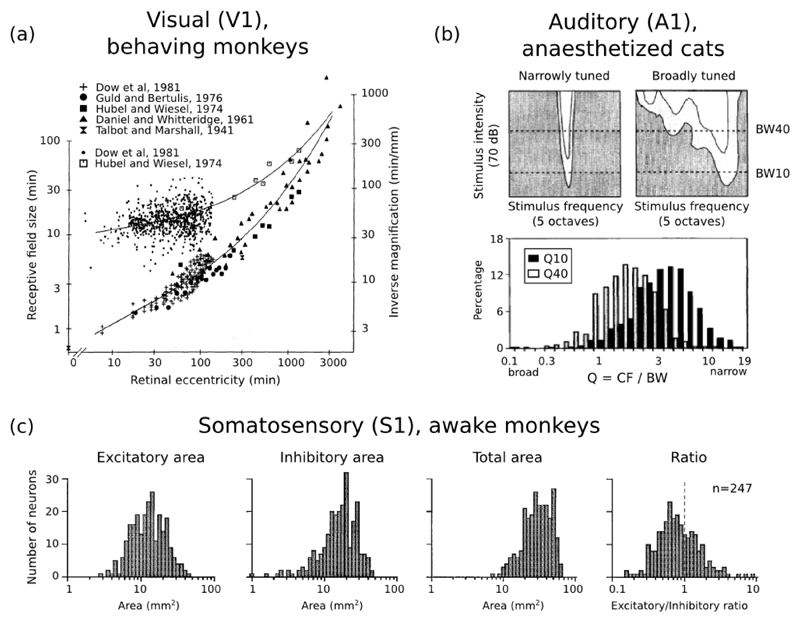

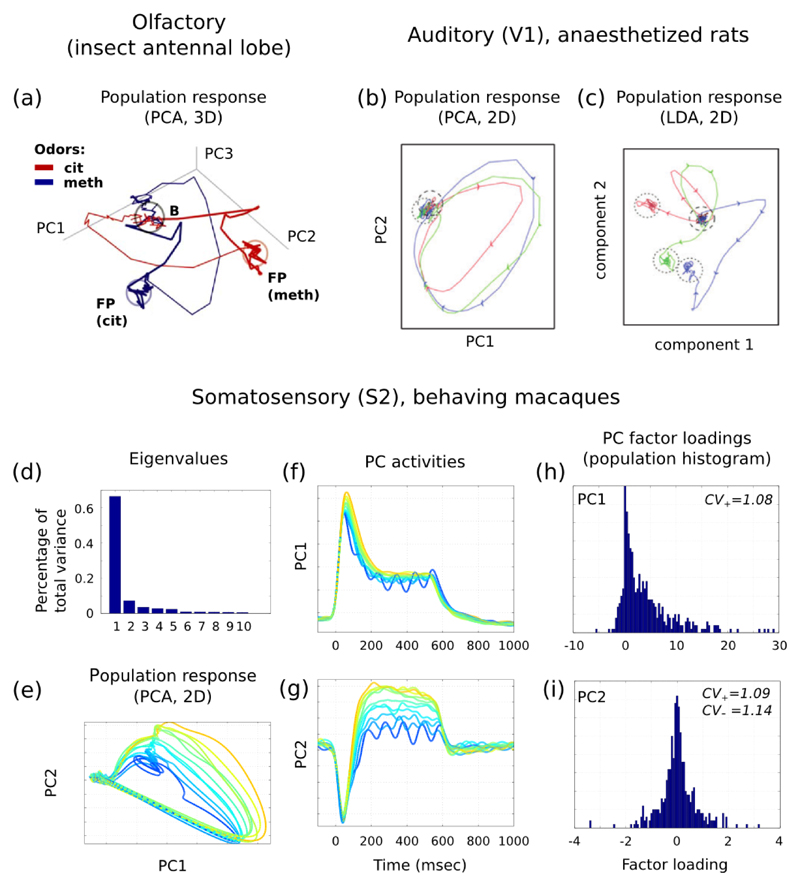

Figure 6. Cortical receptive field sizes are broadly distributed.

(a) Distribution of receptive field sizes (top curve, left ordinates) and inverse cortical magnification (bottom curve, right ordinates) in monkey V1 neurons as a function of retinal eccentricity; from Dow et al. (1981). (b) Top panels: sample responses of A1 neurons to pure tones, as a function of tone frequency, and intensity (measured in dB above the neuron’s threshold of response). Bottom panel: distribution of relative response bandwidths (best frequency divided by bandwidth) in cat A1 neurons, at respectively 10 dB and 40 dB above threshold; from Schreiner et al. (2000). (c) Distribution of receptive field sizes in monkey S1 neurons from the distal digit pads. From left to right, population histograms are shown for the excitatory subfields, inhibitory subfields, full receptive fields, and for the exc/inh ratio of areas; from DiCarlo et al. (1998).

3.2. Spontaneous and evoked firing rate distributions are highly similar

If both spontaneous and task-aligned activity consist of long-tailed firing rate distributions, do these distributions differ? Answering this is the first application of taking a statistical model perspective of cortical activity: we can compare the probabilistic models for the different conditions to directly detect the effect of task events.

Stimulus representation in sensory neurons is probably one of the most extensively documented domains of electrophysiology, stemming from Adrian’s first measurements of action potentials in nerve fibers responding to sensory stimulation (Adrian, 1928). Because sensory neurons that respond vigorously to an adequate stimulus have always been readily found, it has generally been thought that population activity in sensory cortices changes dramatically when a stimulus is presented (Barth and Poulet, 2012). Similar reasoning could be applied to the execution of an action, or the maintenance of working memory during a delay period. We review recent results which, thanks to new experimental techniques, have started to challenge this view, revealing that population-wide statistics of task-driven and spontaneous activity may be much closer than previously expected.

3.2.1. Only small differences between distributions of spontaneous and evoked activity

Visual inspection of Figure 4a,b shows that distributions of mean firing rates in rat A1 (Hromadka et al., 2008a) and mouse S1 (O’Connor et al., 2010) barely changed between baseline and evoked firing. Similar results were obtained in PFC of monkeys performing a delayed match-to-sample task (Shafi et al., 2007), and in the OFC of rats performing an olfactory discrimination task, where distributions remained the same during epochs in which rats smelled an odor, moved, or received a reward (Feierstein et al., 2006). We could quantitatively reaffirm these results using the action-related activity in rat OFC (Feierstein et al., 2006) and the stimulus-evoked activity in rat A1 (Otazu et al., 2009). Figure 4d (top) shows that the task-aligned distributions closely overlapped their corresponding baseline distributions, and the two were not significantly different (two-sample Kolmogorov-Smirnov test, p = 0.117 for OFC; p = 0.827 for A1). Thus, there seems to be little change in the population firing rate distribution between spontaneous and evoked activity. Figure 4d (bottom) illustrates the extent of the changes in both A1 and OFC by plotting the deviation between the models p(r) fitted to the baseline and evoked firing rate distributions; from this perspective, we see a small and smooth increase in the probability of neurons appearing in the tail of the distributions of evoked firing rates.
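For readers unfamiliar with the test, the sketch below shows how a two-sample Kolmogorov-Smirnov comparison of baseline and evoked rate distributions can be computed. It is a minimal standard-library implementation for illustration, not the code used for Figure 4d: the p-value uses the asymptotic Kolmogorov series and is therefore only approximate for small samples, the function name is hypothetical, and the two samples are synthetic.

```python
import math
import random


def ks_two_sample(x, y):
    """Two-sample Kolmogorov-Smirnov test; returns (D, approximate p-value).

    D is the largest vertical gap between the two empirical cumulative
    distributions. The p-value is the asymptotic approximation.
    """
    xs, ys = sorted(x), sorted(y)
    n, m = len(xs), len(ys)
    i = j = 0
    d = 0.0
    while i < n and j < m:
        v = min(xs[i], ys[j])
        # step both empirical CDFs past every sample equal to v (handles ties)
        while i < n and xs[i] <= v:
            i += 1
        while j < m and ys[j] <= v:
            j += 1
        d = max(d, abs(i / n - j / m))
    lam = d * math.sqrt(n * m / (n + m))
    if lam < 0.3:
        # the series converges poorly here, and the true p-value is ~1
        return d, 1.0
    p = 2.0 * sum((-1) ** (k - 1) * math.exp(-2.0 * (k * lam) ** 2)
                  for k in range(1, 101))
    return d, min(1.0, max(0.0, p))


# Synthetic example: evoked rates drawn from a slightly heavier-tailed
# log-normal than baseline. Parameters are arbitrary, not fitted to any data.
rng = random.Random(1)
baseline = [rng.lognormvariate(-0.5, 1.2) for _ in range(400)]
evoked = [rng.lognormvariate(-0.45, 1.25) for _ in range(400)]
d_demo, p_demo = ks_two_sample(baseline, evoked)
print("D =", d_demo, "p =", p_demo)
```

Note that a non-significant result only says that the test could not detect a difference at the given sample size; it does not show that the two distributions are identical, which is why the fitted models are also compared directly.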

3.2.2. The single neuron’s perspective

These small changes in distribution obscure substantial changes at the single-neuron level between spontaneous (baseline) and evoked activity. O’Connor et al. (2010) reported that in mouse S1 34% of neurons showed a significant change in the number of spikes evoked by whisker contact; the mean evoked change was between 2 and 4 spikes. In rat auditory cortex, Hromadka et al. (2008a) consistently found around 5% of cells with strong responses to the stimulus (an increase of at least 20 spikes/s), independent of its exact nature (tone pips, white noise, natural stimuli). Still in rat auditory cortex, Bartho et al. (2009) presented a similar picture during the sustained response to tones, with most neurons returning to low activity levels while a few neurons continued to encode stimulus value through their strong firing rates. Single neuron changes are thus both large (in the number of neurons showing a response over the whole experiment, or in individual neurons’ firing rate changes) and small (in the number of neurons showing a large response, or the number of neurons simultaneously showing a response on a given trial). However, since firing rate changes of individual neurons can be both positive and negative, the overall effect of these changes on the firing rate distributions is rather small.

This large/small picture is also present in the action-evoked activity of rat OFC (Feierstein et al., 2006). The majority of the neurons in the data-set (349/515) had significant differences (Mann-Whitney U-test, p < 0.05) between their rates in baseline and movement epochs. However, on a trial-wise basis, these changes could be both negative and positive, and the dominant change from baseline to movement epochs was 0 spikes/s (Figure 4e). Moreover, there was a wide-ranging distribution of the proportion of trials on which each neuron changed rate between baseline and movement epochs (Figure 4f), with a median proportion of 42% of trials. Thus, while the majority of neurons’ rates were “significantly” altered by movement, the majority of neurons changed their rates on fewer than half the trials.

3.2.3. Whence the dramatic macro-scale changes in activity?

The above evidence argues that population distributions of activity during spontaneous and stimulus-evoked periods appear highly similar. How is this overall similarity of distributions compatible with the fluctuations in population-wide activity traditionally observed with imaging methods such as fMRI and EEG? Although the issue remains to be further explored, it seems that there is no fundamental contradiction between the two. Indeed, because overall firing rates in the population are so low, the few cells with strong responses may actually be sufficient to significantly increase the overall number of spikes in the population in response to a stimulus. For example, Hromadka et al. (2008a) report both that 16% of neurons contributed 50% of spikes and that mean firing rate increased by almost 50% between pre-stimulus and early stimulus responses. Similarly, in barrel cortex (S1) of mice performing a simple tactile discrimination task, O’Connor et al. (2010) report that around 10% of the cells in a single column account for 50% of all spikes fired. Their data also suggest that around 15% of the cells increased their firing rate by more than 10 spikes/s after the onset of active whisking (their Figure S3, panel A1). Thus, the overall sparseness of responsive neurons may be compatible with significant increases of spiking activity, detectable using macro-scale recording methods. Since the distributions are long-tailed, this overall increase in average firing rate is compatible with only small changes in the distribution itself.
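The arithmetic behind this argument can be made concrete with a small sketch. Under the assumption of a log-normal baseline (arbitrary parameters, synthetic data), it computes what fraction of neurons carries half of all spikes, and how much the population mean rises when a small subset of neurons responds strongly. The 5% responder fraction and 20 spikes/s increment echo the figures quoted above but are used here purely as illustrative inputs; the function name is hypothetical.

```python
import random


def fraction_of_neurons_for_half_spikes(rates):
    """Smallest fraction of neurons (most active first) whose summed
    rates reach at least half of the population total."""
    ordered = sorted(rates, reverse=True)
    half = 0.5 * sum(ordered)
    running = 0.0
    for k, r in enumerate(ordered, start=1):
        running += r
        if running >= half:
            return k / len(ordered)
    return 1.0


# Synthetic long-tailed baseline (spikes/s); NOT data from any study.
rng = random.Random(4)
baseline = [rng.lognormvariate(-0.5, 1.2) for _ in range(1000)]
frac_half = fraction_of_neurons_for_half_spikes(baseline)

# Let an assumed 5% of neurons respond with +20 spikes/s; the rest are unchanged.
evoked = list(baseline)
for k in rng.sample(range(len(evoked)), 50):
    evoked[k] += 20.0

mean_baseline = sum(baseline) / len(baseline)
mean_evoked = sum(evoked) / len(evoked)
relative_increase = mean_evoked / mean_baseline - 1.0

print("fraction of neurons carrying half the spikes:", frac_half)
print("relative increase in population mean rate:", relative_increase)
```

In this toy setting 95% of neurons keep exactly the same rate, so the bulk of the distribution is untouched, yet the population mean, which is what macro-scale methods are more likely to reflect, changes substantially.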

3.3. Firing rate distributions constrain theories of neural function

Why is it interesting that firing rate distributions are long-tailed? And why is it interesting that, statistically, the spontaneous and evoked activity distributions are so similar? We review here the implications of these first simple probabilistic models of cortical activity.

3.3.1. The theory of cell “classes”

From the perspective of probability distributions for firing rates, the classical physiological approach to defining distinct “cell classes” by firing rate is not appropriate. Traditional analyses make binary distinctions into two classes of neurons that fire at a significantly high or low rate, or that significantly fire to stimulus s or that significantly fire before action a and so on. But continuous long-tailed distributions show that there is nothing special about neurons with “significantly” higher activity; that amounts to drawing a threshold line somewhere on the distribution of firing rates. Moreover, these results show there are no grounds for demarcating responsive vs non-responsive neurons after stimulus, during delay, or before action. As Figure 4 shows, when we fit a model to the distribution of rates, we can no longer distinguish such classes: the distribution shape changes smoothly. We can do statistical tests to distinguish responsive from non-responsive neurons, as we illustrated above for the OFC data, but from the distribution model perspective this is the wrong approach; to echo Gelman and Stern (2006) and Nieuwenhuis et al. (2011): the difference between the significant and non-significant response is not itself significant.

3.3.2. Participation in computation

Knowledge of firing rate distributions provides constraints on and opens up new questions for theories of neural function. Any theories of neural function based on Gaussian distributions of population activity to encode stimuli or compute functions may require re-examining. Rather, the experimental results reviewed above support the view of sparse neural representations, where a relatively small number of active neurons mediate a population’s function at any time.

Sparse representations constitute one of the first and most influential theoretical proposals concerning neural populations, whose roots can be traced back to ideas of redundancy reduction (Barlow, 1961, 1972) and of robust memory storage in neural networks (Marr, 1969; Kanerva, 1988). Sparse codes can be viewed as striking a balance between the two extreme views of population representation with ‘grandmother cells’ (one specific cell for each concept or object) at one extreme and fully distributed neural representations at the other. A strong conceptual quality of sparse-coding theories is to provide a functional explanation for the explosion in the number of neurons observed between subcortical structures and cortex: since a sparse code relies largely on the identities of active neurons rather than on their exact level of activity, the population size must be large enough to allow a rich and robust combinatorial repertoire of activities. In early sensory systems particularly, sparse coding is one of the most influential theories of population representation, further reviewed in Section 5.2.1.

While experimental observations of long-tailed population activity favorably echo the predictions of sparse coding theories, they also offer a promising glimpse of new theoretical perspectives. For example, the recurring observation of low but non-zero peaks in population distributions of activity (Figures 3 and 4a), leading to better fits by log-normal rather than exponential models, is at odds with classical predictions of sparse coding models. Indeed, if most cortical neurons are barely active, then this raises the question of whether they meaningfully participate: either only the most active neurons are actually participating in the ongoing encoding or computation, and the sparse firing of occasional spikes in the remaining neurons is irrelevant (Shadlen and Newsome, 1998; Mazurek and Shadlen, 2002), or all active neurons participate. The latter view is supported by considerations of energy expenditure: the total number of spikes fired by the sparsely firing neurons is on a par with the total number of spikes fired by the few highly active neurons, thereby creating large energetic costs for cortex (Attwell and Laughlin, 2001). It is further supported by the observation that the dynamics of the local cortical circuit are exquisitely sensitive to the addition or deletion of a single spike (Izhikevich and Edelman, 2008; London et al., 2010).

3.3.3. Underlying cortical network

The long-tailed distributions also place strong constraints on the underlying cortical network and on the dynamical properties of cortical neurons. Earlier theoretical work predicted that simple balanced networks of excitatory and inhibitory neurons would have long-tailed distributions of firing rates (van Vreeswijk and Sompolinsky, 1996). A more complete theory was recently developed by Roxin et al. (2011), who showed that a long-tailed firing rate distribution in the population implies that each neuron’s transfer function in vivo is an expansive non-linearity, such as a rising exponential or power-law function (Finn et al., 2007). They demonstrated that this result held across changes to the synaptic weight distribution, and held even if inhibitory interneurons were more active than the principal neurons.
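The link between an expansive transfer function and a long-tailed rate distribution can be seen in a deliberately simple case, sketched below. This is not the network model of Roxin et al. (2011): it only passes Gaussian-distributed input currents through an assumed exponential transfer function, for which the resulting rates are log-normal by definition. The parameter names `gain` and `r0` are hypothetical.

```python
import math
import random
import statistics


def exponential_transfer(current, gain=1.0, r0=1.0):
    """Assumed expansive transfer function: rate = r0 * exp(gain * current)."""
    return r0 * math.exp(gain * current)


# Gaussian input currents across a hypothetical population (arbitrary units).
rng = random.Random(1)
currents = [rng.gauss(0.0, 1.0) for _ in range(5000)]
rates = [exponential_transfer(c) for c in currents]

mean_current = statistics.fmean(currents)
median_current = statistics.median(currents)
mean_rate = statistics.fmean(rates)
median_rate = statistics.median(rates)

# A symmetric input distribution has mean ~ median; after the expansive
# non-linearity the mean is pulled well above the median by the long tail.
print("currents: mean", mean_current, "median", median_current)
print("rates:    mean", mean_rate, "median", median_rate)
```

A power-law transfer function acting on rectified currents would make the same qualitative point; the exponential case is chosen here only because it yields exactly log-normal rates.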

This theory assumes a Gaussian distribution of input currents to each neuron, as generated in a uniformly-random coupled network of neurons. An open question is whether the transfer function alone can generate long-tailed firing distributions when the Gaussian assumption is violated, whether by more realistic network topologies that promote localised synchrony (Galan et al., 2008; Haeusler et al., 2009), or by the non-Gaussian statistics of external inputs to the network, as has been demonstrated in rat A1 (DeWeese and Zador, 2006).

Side-stepping the assumption of Gaussian inputs, Koulakov et al. (2009) used a simple linear integration and linear transfer function model of an excitatory, recurrent cortical network to study how such long-tailed distributions of spontaneous activity might arise from aspects of network connectivity. They showed that a log-normal distribution of synaptic weights across the network is insufficient to generate log-normal firing rate distributions. Rather, a stable log-normal distribution of spontaneous firing rates arises if, in addition, synaptic inputs to each neuron are clustered by their mean weight, such that each neuron tends to receive either weak synaptic inputs or strong synaptic inputs. Open questions here are whether such a long-tailed distribution of rates would remain with any departure from linearity in the transfer function, and the impact of inhibitory neurons on the distribution.

3.3.4. Spontaneous activity as “browsing” through possible dynamical states

Several studies in the last decade have begun to investigate the functional and theoretical links between spontaneous and evoked activity. Joint studies of optical imaging and single-cell activities in anaesthetized cat visual cortex have revealed a preserved link between a particular neuron’s spiking and the overall activity in its embedding network, both during spontaneous and stimulus-evoked phases (Arieli et al., 1996; Tsodyks et al., 1999). In rat visual cortex, population-wide patterns of neural activation during a repeated stimulus presentation have been observed to replay during subsequent spontaneous activity (Han et al., 2008). In ferret visual cortex, Berkes et al. (2011) have found a quantitative similarity between the trial-to-trial probabilities of activity in the absence of visual stimulation and of activity averaged over natural visual stimulations. Furthermore, this similarity increased progressively during the early phases of visual development, pointing to sensory learning as a crucial shaping factor of spontaneous activity. Similarities have also been found in the temporal patterns of population activity between spontaneous and stimulus-evoked responses, both in anaesthetized and awake states (MacLean et al., 2005; Luczak et al., 2009).

All these studies suggest that spontaneous activity may be akin to an internal (and experimentally uncontrolled) “browsing” through potential patterns of sensory-evoked responses. Consistent with this view, Luczak et al. (2009) have found that neural population activity in rat auditory cortex during sensory stimulation lives in a subset of the possible activities observed during spontaneous activity. From a more theoretical perspective, these results raise the exciting possibility that the organization of spontaneous activity reflects an internal connectivity, learned by interaction with the environment, that would act as a probabilistic prior helping to interpret incoming stimuli (Ringach, 2009; Berkes et al., 2011). These theories have mostly operated at the level of a few cells and considered the trial-to-trial variability of neural responses. What such theories predict in terms of cell-to-cell variability, and the respective population distributions of firing rates that we have discussed here, has yet to be determined.

4. Population distributions of spike-train statistics

In this section, we review the population-wide statistics of spike-train structure across cortex. This is simply anything we know about distributions p(r), where r is some quantification of each neuron’s spike pattern beyond its rate. As the study of firing rate distributions alone is in a nascent stage, only a few studies have directly addressed this problem. So here we take a brief, broad survey of measures of spike-train structure, for which a population picture would provide us with useful additional constraints on neural theories and models.

4.1. Regularity of spike trains

The relative regularity of spikes emitted by a neuron is central to many theories of neural coding (Rieke et al., 1997; Gabbiani and Koch, 1998). Basic measures of neuron output irregularity such as the coefficient of variation (CV = σ/μ) (e.g. Softky and Koch, 1993) and the Fano factor (F = σ²/μ) (e.g. Kara et al., 2000) are widely used. Both compare the output irregularity against a Poisson process, for which CV and F both equal 1, but they apply to different summary measures of the spike-train: the CV to inter-spike intervals, and the Fano factor to spike counts in time windows of equal length. However, these measures alone are difficult to compare over time or between neurons, as they are global measures that are sensitive to fluctuations in the neuron’s firing rate (Softky and Koch, 1993; Holt et al., 1996; Ponce-Alvarez et al., 2010). That is, the standard deviation σ of the inter-spike intervals (for CV) or spike counts (for F) is computed using the global mean interval or count μ, but if that mean value is not locally stationary then irregularity tends to be over-estimated. Consequently, CV and F are not ideal for characterising a population distribution p(r) of spike-train regularity.
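A minimal sketch of the two global measures is given below, applied to a simulated homogeneous Poisson spike train (for which both should be close to 1) and to a perfectly regular train. Function names, the 1 s counting window, and the simulation parameters are illustrative assumptions.

```python
import random
import statistics


def isi_cv(spike_times):
    """Coefficient of variation of the inter-spike intervals (sigma / mu)."""
    isis = [b - a for a, b in zip(spike_times, spike_times[1:])]
    return statistics.pstdev(isis) / statistics.fmean(isis)


def fano_factor(spike_times, t_stop, window):
    """Variance over mean of spike counts in equal-length windows."""
    n_windows = int(t_stop // window)
    counts = [0] * n_windows
    for t in spike_times:
        k = int(t // window)
        if k < n_windows:  # ignore spikes in a trailing partial window
            counts[k] += 1
    return statistics.pvariance(counts) / statistics.fmean(counts)


def poisson_train(rate, t_stop, rng):
    """Homogeneous Poisson spike train built from exponential intervals."""
    t, spikes = 0.0, []
    while True:
        t += rng.expovariate(rate)
        if t >= t_stop:
            return spikes
        spikes.append(t)


rng = random.Random(2)
train = poisson_train(rate=5.0, t_stop=2000.0, rng=rng)
cv_poisson = isi_cv(train)
fano_poisson = fano_factor(train, t_stop=2000.0, window=1.0)

regular = [0.1 * k for k in range(1, 1001)]  # one spike every 100 ms
cv_regular = isi_cv(regular)

print("Poisson train: CV =", cv_poisson, " Fano =", fano_poisson)
print("Regular train: CV =", cv_regular)
```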

More advanced measures attempt to capture a rate-invariant irregularity, allowing meaningful comparisons between different neurons. One approach has been to compute a rate-corrected CV by computing separate CV values for inter-spike intervals grouped by sections of spike-train with the same firing rate (Softky and Koch, 1993; Maimon and Assad, 2009). Another has been to define alternative metrics that measure local changes in regularity, such as local variation (Shinomoto et al., 2009) and CV2 (Holt et al., 1996). The CV2 metric has been most widely used for detailed studies of spike-train regularity in cortex (Holt et al., 1996; Compte et al., 2003; Ponce-Alvarez et al., 2010; Hamaguchi et al., 2011). The comparative study of Ponce-Alvarez et al. (2010) suggested that of all tested metrics for regularity CV2 was least affected by rate variation. We use it below, so note here its definition: if I_i and I_{i+1} are adjacent inter-spike intervals, then for that pair CV2(i) = 2|I_i - I_{i+1}| / (I_i + I_{i+1}), and CV2 is the average over all pairs; similar to other regularity metrics, CV2 = 1 for a Poisson process.
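The definition of CV2 translates directly into code. The sketch below also illustrates why a local measure is preferable: for a simulated Poisson train whose rate steps from 1 to 20 spikes/s halfway through, the global CV of the intervals is inflated well above 1, whereas CV2 stays close to its Poisson value of 1. The simulation parameters and function name are assumptions chosen for illustration.

```python
import random
import statistics


def cv2(isis):
    """Mean of 2|I_i - I_{i+1}| / (I_i + I_{i+1}) over adjacent interval pairs."""
    pairs = [2.0 * abs(a - b) / (a + b) for a, b in zip(isis, isis[1:])]
    return sum(pairs) / len(pairs)


# Poisson intervals at 1 spike/s followed by Poisson intervals at 20 spikes/s.
rng = random.Random(3)
slow = [rng.expovariate(1.0) for _ in range(2000)]
fast = [rng.expovariate(20.0) for _ in range(2000)]
isis = slow + fast

global_cv = statistics.pstdev(isis) / statistics.fmean(isis)
local_cv2 = cv2(isis)

print("global CV of intervals:", global_cv)   # inflated by the rate step
print("CV2:", local_cv2)                      # close to the Poisson value
```

Only one of the interval pairs straddles the rate change, which is why the step has almost no effect on CV2.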

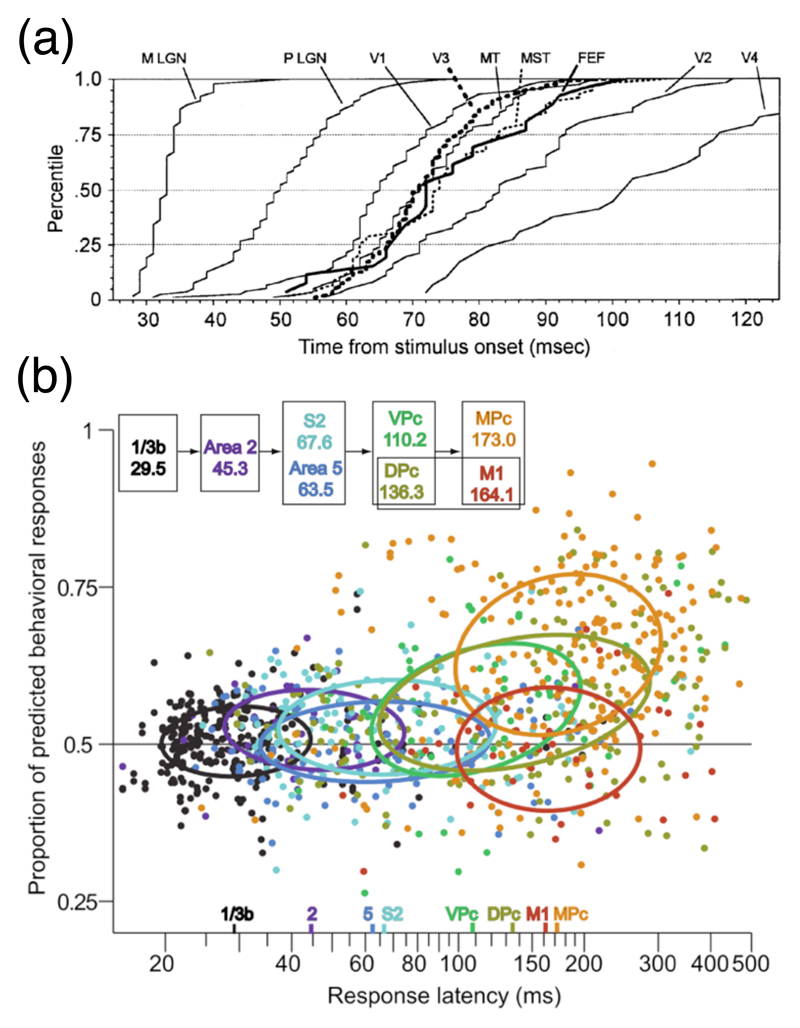

4.1.1. Spike-train regularity changes across cortex and across tasks

Both the local-variation metric (Shinomoto et al., 2009) and the rate-corrected CV (Maimon and Assad, 2009) have been used to study the differences in spike-train regularity between cortical areas. Shinomoto et al. (2009) showed that, after accounting for rate differences, the average spike train changed from random (Poisson-like) to bursty and then to regular patterning when moving from sensory to prefrontal and then to motor cortex in a data-set of multiple primate studies. Similarly, Maimon and Assad (2009) found that neurons in “sensory” parietal cortex (area MT) had irregular spike-trains, whereas neurons in “motor” parietal regions (LIP and Area 5) had more regular spike-trains. They also noted that the distributions of rate-corrected CV changed in shape and left-shifted from MT to LIP and then to Area 5 (their Figure 4A). Thus in both these wide-ranging surveys of spike-train regularity across cortical areas, spike-trains were more regular in motor areas of cortex than in other regions, and both speculated that this was linked to the necessity for rate-coding in motor areas (Maimon and Assad, 2009; Shinomoto et al., 2009).

In a seminal paper, Compte et al. (2003) used measures of spike train structure including CV2 to analyse single-unit recordings from the PFC of monkeys performing an oculomotor-delayed-response task. They reported that the spike trains of most neurons (around 60%) could be classified as Poisson-like, but that the rest of the neurons were more irregular (or bursty) than a Poisson model. Their most striking finding was that the population distribution of CV and CV2 changed between the fixation and delay periods of the task (Figure 5a). Thus, despite the overall increase in mean firing rate during the delay period, the neurons’ outputs were significantly more irregular than during the fixation period.

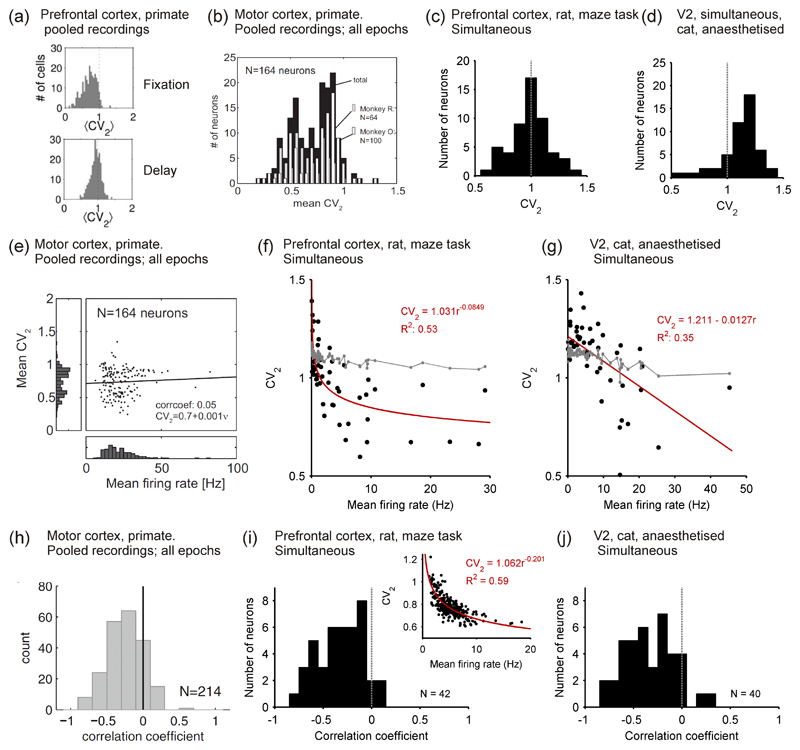

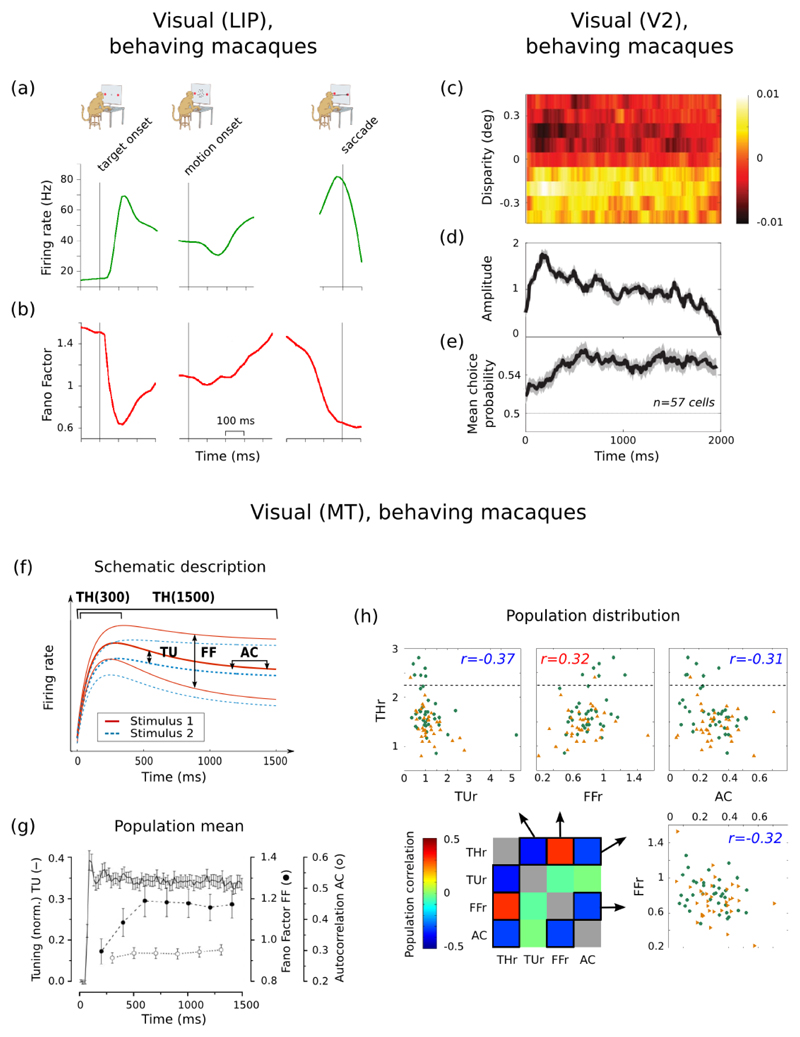

Figure 5. Distributions of spike train regularity in cortex.

(a) Distributions of rate-invariant irregularity measure CV2 in primate PFC during the fixation (top) and delay (bottom) period of an oculomotor task; neurons pooled over multiple single-unit recordings. Taken from Compte et al. (2003). (b) Distribution of CV2 in primate motor cortex, averaged over all task stages; neurons pooled over multiple single-unit recordings. Taken from Hamaguchi et al. (2011). (c) Distribution of CV2 in a single tetrode recording from awake rat PFC. Data from the study of Peyrache et al. (2009). (d) Distribution of CV2 in a single polytrode recording from anaesthetised cat V2. Data recorded by Tim Blanche (see Blanche et al., 2005), available from crcns.org. (e) Correlation between mean firing rate and CV2 in primate motor cortex, calculated over all task stages. Note the lack of data-points below 10 Hz. Taken from Hamaguchi et al. (2011). (f) Correlation between mean firing rate and CV2 in a single recording from awake rat PFC; data: black symbols; best-fit model: red line. The grey symbols and lines give the predicted relationship if each spike-train was a rate-varying Poisson process: each point is the mean predicted CV2 for a spike-train of that mean rate; 95% confidence intervals are too small to see on this scale. Data from the study of Peyrache et al. (2009). (g) Correlation between mean firing rate and CV2 in a single polytrode recording from anaesthetised cat V2. Grey lines and symbols as in panel f. Data recorded by Tim Blanche (see Blanche et al., 2005), available from crcns.org. (h) Distribution of coefficients r for the correlation between rate and CV2 for each neuron in a data-set of single-unit extracellular recordings from primate motor cortex during a joystick task. Taken from Ponce-Alvarez et al. (2010).
(i) Distribution of coefficients r for the correlation between rate and CV2 for a population of neurons simultaneously recorded in awake rat PFC; the inset shows an example neuron that had a power-law relationship between rate and CV2. Data from the study of Peyrache et al. (2009). (j) Distribution of coefficients r for the correlation between rate and CV2 for a population of neurons simultaneously recorded in anaesthetised cat V2. Data recorded by Tim Blanche (see Blanche et al., 2005), available from crcns.org.

Hamaguchi et al. (2011) used CV2 to analyse spike-train regularity of a database of single-unit recordings from the motor cortex of monkeys performing two delayed arm movement tasks. The population distribution of mean CV2 was predominantly below CV2 = 1 and left-skewed, suggesting marked regularity of single neuron firing in motor cortex (Figure 5b). Moreover, when broken down by task stage, each neuron’s CV2 values were highly correlated between task stages (r = 0.99) and between the two arm movement tasks (r = 0.86), suggesting that each neuron had a fixed level of firing regularity. Also using single-unit recordings from motor cortex of a monkey performing a joystick task, Ponce-Alvarez et al. (2010) reported that CV2 distributions did not differ between the two delay stages or arm movement stage of the task (though we note their reported CV2 distributions were approximately symmetrical around 0.9, and thus not consistent with the distributions reported by Hamaguchi et al., 2011).

Together, the analyses of Compte et al. (2003) and Hamaguchi et al. (2011) are consistent with the reports by Shinomoto et al. (2009) and Maimon and Assad (2009) of greater regularity in motor regions of cortex than in prefrontal cortex. Moreover, the analyses of Compte et al. (2003) and Hamaguchi et al. (2011) together suggest that an additional difference in spike-train structure between motor cortex and PFC is that a neuron’s irregularity is stable in motor cortex, but not in PFC.

The analyses of Compte et al. (2003), Ponce-Alvarez et al. (2010) and Hamaguchi et al. (2011) all pooled single-unit recordings using extracellular microelectrodes, and were all from primate studies. They were thus restricted to relatively high-firing neurons in a single species. To further generalise these findings, we analysed simultaneous recordings in rat PFC and in cat V2 for their distributions of CV2. Figure 5c shows that spike-train irregularity in rat PFC during a maze task had a symmetrical distribution around CV2 = 1, and was thus consistent with the predominance of Poisson-like neurons reported by Compte et al. (2003) for primate PFC. Figure 5d shows that spike-train regularity in cat V2 under anaesthesia had a strongly left-skewed distribution, with a peak around CV2 = 1.15. Thus, under anaesthesia many V2 neurons are more irregular than a Poisson process; while cortex was showing up/down state oscillations under this anaesthesia (Humphries, 2011), the rate-invariance of the CV2 measure suggests that the supra-Poisson spike-trains were independent of this oscillation. Nonetheless, these analyses of simultaneous recordings from rat PFC and cat V2 data confirm that, even when using recording techniques with less activity bias, much of cortical single neuron activity is still surprisingly well-described by a Poisson process, as both have peak CV2 close to 1.

4.1.2. Correlations of regularity and rate in cortical populations

Once we have separately characterised distributions of firing rate, p(r1), and distributions of spike-train structure, p(r2), for a population, we may want to take the further step in model complexity of characterising their co-variation. We illustrate this here for rate and regularity, as rate-invariant measures of spike-train regularity have made it possible to meaningfully consider whether there is a relationship between a neuron’s rate and the regularity of the spikes it emits. The proper characterization of this covariation amounts to estimating a joint distribution of rate and regularity statistics, which we will denote p(r1, r2).
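
As a rough illustration of what estimating p(r1, r2) involves, a joint distribution can be approximated by a normalised two-dimensional histogram of per-neuron statistics. The sketch assumes one mean rate and one mean CV2 per neuron, and bins rate on a logarithmic axis because of the long-tailed rate distributions; the bin counts, function name and synthetic data are our assumptions.

```python
import numpy as np

def joint_distribution(rates, cv2s, n_rate_bins=10, n_cv2_bins=10):
    """Normalised 2D histogram over (log10 rate, CV2); rates must be > 0."""
    log_rate = np.log10(np.asarray(rates, dtype=float))
    counts, rate_edges, cv2_edges = np.histogram2d(
        log_rate, np.asarray(cv2s, dtype=float), bins=[n_rate_bins, n_cv2_bins]
    )
    return counts / counts.sum(), rate_edges, cv2_edges

# Synthetic population (hypothetical values, for illustration only).
rng = np.random.default_rng(2)
rates = rng.lognormal(mean=0.0, sigma=1.0, size=200)   # long-tailed rates in Hz
cv2s = rng.normal(loc=1.0, scale=0.15, size=200)       # CV2 scattered around 1
p, rate_edges, cv2_edges = joint_distribution(rates, cv2s)
```

Summing `p` along either axis recovers the corresponding marginal histogram.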

Both (Compte et al., 2003) and (Hamaguchi et al., 2011) reported that in, respectively, primate PFC and motor cortex there was no relationship between rate and CV2 within the data-sets they analysed. This is illustrated in Figure 5e, taken from (Hamaguchi et al., 2011), which clearly shows the absence of any correlation. However, as noted above, the analyses of (Compte et al., 2003; Hamaguchi et al., 2011) pooled single-unit recordings using extracellular microelectrodes, with rates estimated over short portions of a trial, and were thus limited to neurons with relatively high firing rates; neither data-set contained neurons with rates less than 1 Hz. As we saw in Section 3.1, such low firing rates should dominate the population distribution. We thus also analysed example simultaneous recordings with low-rate dominated distributions to check their joint population distribution of rate and regularity.

We found a clear negative correlation between mean firing rate and CV2 in single recordings from both rat PFC (Figure 5f) and cat V2 (Figure 5g). While CV2 is notionally rate-independent, to our knowledge its ability to cope with orders-of-magnitude firing rate fluctuations in ultra-low firing neurons (< 1 Hz) has not been tested; thus, plausibly, this correlation at such low frequencies could have been an artefact of such rate fluctuations. To rule this out, we found the expected CV2 for a null model in which each spike train was modelled as an inhomogeneous Poisson process with varying rate derived from the data 7. We found that this model predicted a relationship between mean firing rate and CV2 (grey symbols and lines in panels f and g) that departed strongly from the data. Thus, the correlation between mean firing rate and CV2 was not an artefact of rate fluctuations.
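
A null model of this kind can be sketched as follows. This is a minimal illustration, not necessarily the procedure used for Figure 5f,g: we assume the time-varying rate is estimated as a piecewise-constant function in fixed bins, and the bin width, number of surrogates and function names are all hypothetical choices.

```python
import numpy as np

def mean_cv2(spike_times):
    """Mean CV2 over adjacent inter-spike intervals (NaN if too few spikes)."""
    isi = np.diff(np.sort(np.asarray(spike_times, dtype=float)))
    if isi.size < 2:
        return float("nan")
    return float(np.mean(2.0 * np.abs(isi[1:] - isi[:-1]) / (isi[1:] + isi[:-1])))

def binned_rate(spike_times, t_stop, bin_width):
    """Piecewise-constant rate estimate (spikes/s) in bins of bin_width seconds."""
    n_bins = int(np.ceil(t_stop / bin_width))
    edges = np.arange(n_bins + 1) * bin_width
    counts, _ = np.histogram(spike_times, bins=edges)
    return counts / bin_width, edges

def surrogate_train(rate, edges, rng):
    """One inhomogeneous Poisson train: Poisson count per bin, uniform times within it."""
    counts = rng.poisson(rate * np.diff(edges))
    times = [rng.uniform(lo, hi, size=k)
             for lo, hi, k in zip(edges[:-1], edges[1:], counts)]
    return np.sort(np.concatenate(times))

def null_cv2(spike_times, t_stop, bin_width=10.0, n_surrogates=50, seed=0):
    """Expected mean CV2 under the rate-matched inhomogeneous Poisson null model."""
    rng = np.random.default_rng(seed)
    rate, edges = binned_rate(spike_times, t_stop, bin_width)
    vals = [mean_cv2(surrogate_train(rate, edges, rng)) for _ in range(n_surrogates)]
    return float(np.nanmean(vals))

# Synthetic example: a homogeneous 2 Hz Poisson train recorded for 500 s.
rng = np.random.default_rng(1)
t_stop = 500.0
train = np.sort(rng.uniform(0.0, t_stop, size=rng.poisson(2.0 * t_stop)))
expected = null_cv2(train, t_stop)
```

Comparing each neuron's measured CV2 with its `null_cv2` value indicates how much of any rate-CV2 relationship could be explained by slow rate fluctuations alone.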

We also found the correlation differed between the rat PFC and cat V2: CV2 was a power-law function of firing rate in awake rat PFC, but a linear function of firing rate in anaesthetised cat V2 8. The power-law fit in rat PFC shows that rate-regularity correlation occurs at very low firing rates and that there is little correlation above ~ 5 Hz, where the power-law function flattens out. Thus in this range there appears no correlation between rate and regularity, consistent with the results of (Compte et al., 2003) and (Hamaguchi et al., 2011). The linear fit over all rates in cat V2 is potentially a result of the unique spike-train structure that occurs under slow-wave inducing anaesthesia. Nonetheless, less-biased recordings dominated by low-firing neurons do reveal a different perspective on the joint distributions of rate and regularity.
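
The comparison between power-law and linear descriptions can be illustrated with ordinary least squares. In this sketch the power law CV2 = a * rate^b is fitted by linear regression in log-log coordinates and compared with a straight-line fit via the summed squared error; this is one simple choice among several and may differ from the fitting procedure behind Figure 5f,g. The data are synthetic and the function names are ours.

```python
import numpy as np

def fit_power_law(rate, cv2):
    """Fit cv2 = a * rate**b by least squares in log-log space; returns (a, b)."""
    b, log_a = np.polyfit(np.log(rate), np.log(cv2), 1)
    return float(np.exp(log_a)), float(b)

def fit_linear(rate, cv2):
    """Fit cv2 = m * rate + c by least squares; returns (m, c)."""
    m, c = np.polyfit(rate, cv2, 1)
    return float(m), float(c)

def sum_squared_error(y, y_hat):
    """Summed squared residual between data and model prediction."""
    return float(np.sum((np.asarray(y) - np.asarray(y_hat)) ** 2))

# Synthetic population (hypothetical): CV2 falls steeply at low rates, then flattens.
rate = np.logspace(-2, 1.3, 40)          # 0.01 Hz to ~20 Hz
cv2 = 1.0 * rate ** -0.1

a, b = fit_power_law(rate, cv2)
m, c = fit_linear(rate, cv2)
sse_power = sum_squared_error(cv2, a * rate ** b)
sse_linear = sum_squared_error(cv2, m * rate + c)
```

A negative exponent `b` with small magnitude reproduces the qualitative pattern described above: most of the change in CV2 occurs at very low rates, with little change at higher rates.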

4.1.3. The single neuron’s perspective: joint distributions of regularity and rate modulation

An orthogonal perspective is to ask whether, over longer periods of time, each neuron has a correlation between its own rate and regularity, and thus determine the population distribution of rate-regularity modulation. (It is possible that the population shows no correlation between the mean rate and mean CV2 of its neurons, yet each neuron has rate-dependent modulation of its own regularity.) Ponce-Alvarez et al. (2010) computed firing rate and CV2 in windows of 200 ms for a data-set of 214 extracellular recordings in primate motor cortex, and found a large sub-set of neurons with a significantly negative correlation between their own rate and CV2 (118/214 had r < 0 and p < 0.01). The distribution of correlation coefficients is plotted in Figure 5h, and shows a left-skewed distribution with a peak close to zero. Thus neurons in primate motor cortex had a broad distribution of negative rate-regularity modulations.

Similar to the above problems with the population-level correlation between rate and regularity, the data-set in (Ponce-Alvarez et al., 2010) was from extracellular recordings and thus biased towards high firing rates. To generalise their results, we applied a similar analysis to single recordings of population activity from awake rat PFC and anaesthetised cat V2. For each neuron we calculated firing rate and CV2 in sliding windows of 20 s advanced in steps of 5 s 9. Similar to (Ponce-Alvarez et al., 2010), we ensured sufficient data for a reliable correlation by omitting from each neuron any window with fewer than 20 inter-spike intervals, and omitted any neuron that had fewer than 40 windows as a result. Despite the dominance of low firing rates, we found a sub-set of neurons in both data-sets had strong negative correlations between their own spike rate and regularity: 26/42 in awake rat PFC and 20/40 in anaesthetised cat V2 had r < 0 and p < 0.01. Figure 5i,j shows that both PFC and V2 had a broad distribution of negative correlation coefficients, and thus seemingly a continuum of the strength of regularity modulations.
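
The windowing and exclusion rules just described can be sketched as below. The window length, step, and minimum counts follow the text; the significance test is our assumption, since the text does not state how p was obtained. Here we use a simple permutation test on the Pearson coefficient, which is only approximate because overlapping windows are not independent. Function names and synthetic trains are illustrative.

```python
import numpy as np

def window_stats(spike_times, t_stop, width=20.0, step=5.0, min_isis=20):
    """Rate and mean CV2 per sliding window, skipping windows with too few ISIs."""
    spike_times = np.sort(np.asarray(spike_times, dtype=float))
    rates, cv2s = [], []
    for start in np.arange(0.0, t_stop - width + 1e-9, step):
        lo, hi = np.searchsorted(spike_times, [start, start + width])
        isi = np.diff(spike_times[lo:hi])
        if isi.size < min_isis:
            continue  # too few intervals for a reliable CV2 estimate
        rates.append((hi - lo) / width)
        cv2s.append(np.mean(2.0 * np.abs(isi[1:] - isi[:-1]) / (isi[1:] + isi[:-1])))
    return np.array(rates), np.array(cv2s)

def rate_regularity_correlation(spike_times, t_stop, min_windows=40,
                                n_perm=2000, seed=0):
    """Pearson r between windowed rate and CV2, with a permutation p-value.

    Returns None if the neuron has fewer than min_windows usable windows.
    """
    rates, cv2s = window_stats(spike_times, t_stop)
    if rates.size < min_windows:
        return None
    r = np.corrcoef(rates, cv2s)[0, 1]
    rng = np.random.default_rng(seed)
    perm_r = np.array([np.corrcoef(rng.permutation(rates), cv2s)[0, 1]
                       for _ in range(n_perm)])
    p = (np.sum(np.abs(perm_r) >= abs(r)) + 1) / (n_perm + 1)
    return float(r), float(p)

# Synthetic examples (hypothetical): a 5 Hz and a 0.1 Hz Poisson train over 600 s.
rng = np.random.default_rng(3)
t_stop = 600.0
fast = np.sort(rng.uniform(0.0, t_stop, size=rng.poisson(5.0 * t_stop)))
slow = np.sort(rng.uniform(0.0, t_stop, size=rng.poisson(0.1 * t_stop)))
fast_result = rate_regularity_correlation(fast, t_stop)
slow_result = rate_regularity_correlation(slow, t_stop)  # excluded: too few ISIs
```

Collecting the `r` values of all retained neurons gives a distribution of the kind plotted in Figure 5i,j.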

Moreover, we found that, beyond the simple linear correlation coefficient, most neurons with strong modulation of regularity actually have a non-linear correlation between rate and CV2. The majority of strongly modulated neurons in both rat PFC and cat V2 had power-law relationships, as illustrated in Figure 5i. Thus, similar to the population distribution, for individual neurons modulation of regularity happens at low firing rates.

4.2. Beyond spike-train regularity: self-correlation and precision

Measures of spike-train regularity are perhaps the most widely applied measures of spike-train structure. A wide range of other measures is possible, but we lack knowledge of their population distributions. We briefly review below two further aspects of spike-train structure that may yield insights into population coding. This is not exhaustive: further examples of measures amenable to population distributions include burst structure (e.g. Gourevitch and Eggermont, 2007), spiking coherence with an underlying oscillation (e.g. Benchenane et al., 2010), and peak(s) in power spectra (e.g. Bair et al., 1994).

4.2.1. Cortical neurons have varying short time-scale self-correlation

Neurons can have detectable correlations between their inter-spike intervals (Perkel et al., 1967). Simulations of simple neuron models have shown these correlations may imply improved transmission of information about time-varying stimuli (Chacron et al., 2004). Such serial correlations can be computed up to any order n for the correlation between intervals I_i and intervals I_{i+n}, but are typically computed only for adjacent intervals (n = 1) because of the length of stationary recording required to compute sufficient higher-order interval correlations. Serial correlations between intervals are logically distinct from the properties of bursts and oscillations: a bursting or oscillating neuron is likely, but not guaranteed, to have serial correlations in its spike-train; conversely, serial correlations in a neuron’s spike-train do not imply that the neuron is bursting or oscillating.
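
A serial correlation of order n can be sketched as the Pearson correlation between the interval sequence and a copy of itself shifted by n intervals. This is one common estimator, used here as an illustration; the function name and synthetic spike trains are our assumptions, and the sketch presumes a stationary recording.

```python
import numpy as np

def serial_correlation(spike_times, lag=1):
    """Pearson correlation between intervals I_i and I_{i+lag} (lag >= 1)."""
    isi = np.diff(np.sort(np.asarray(spike_times, dtype=float)))
    if isi.size <= lag + 1:
        return float("nan")  # not enough interval pairs
    return float(np.corrcoef(isi[:-lag], isi[lag:])[0, 1])

# Synthetic examples (hypothetical, for illustration only).
rng = np.random.default_rng(4)
renewal_train = np.cumsum(rng.exponential(scale=0.2, size=5000))  # independent ISIs
alternating_train = np.cumsum(np.tile([0.1, 0.3], 500))           # short, long, short, ...

rho_renewal = serial_correlation(renewal_train, lag=1)        # theory: close to 0
rho_alternating = serial_correlation(alternating_train, lag=1)  # strongly negative
```

The alternating train illustrates the negative adjacent-interval correlation in its most extreme form, where every long interval is followed by a short one.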

While serial correlations have been reported in a wide range of neural systems (Farkhooi et al., 2009), few studies have measured this property in mammalian cortex (Nawrot et al., 2007; Engel et al., 2008). Nonetheless, consistent with reports from other neural systems, this limited evidence suggests that some neurons in both rat S1 and entorhinal cortex have significant, negative serial correlations between adjacent intervals: thus long inter-spike intervals tend to be followed by short intervals, and vice-versa. As neurons with and without negative serial correlations may have different optimal inputs for transferring information (Lindner et al., 2005), understanding the prevalence and distribution of serial correlations within and between cortical populations could shed light on their information coding capacity.

4.2.2. Cortical neurons have variable firing precision

Separate from their intrinsic irregularity, a population of neurons may respond to repeated external stimuli or internal state transitions with different precision in their trial-to-trial repetitions of spike times. There is recent evidence that such changes in spike-time precision are similar for both internally- and externally-driven state changes. Luczak et al. (2007) reported that neurons in layer 5 of rat somatosensory cortex had a repeating sequential structure of each neuron’s mean spike time at the onset of spontaneous UP-states; this ordering of spike-timing disappeared during the rest of the subsequent UP-state. The sequence of neuron firing during spontaneous activity was recapitulated following sensory input, in both anaesthetized and awake animals (Luczak et al., 2009). Peyrache et al. (2010) showed the same sequential reinstatement of activity at the onset of spontaneous UP-states in neurons of medial prefrontal cortex in sleeping rats; they further confirmed that deriving these sequences from mean spike-time at the onset of the UP-states meant that the sequence of neuron firing was independent from the overall firing rate of each neuron.

As an approximation to such state transitions, we may also simply study the responses of neurons to the onset of noisy current inputs. The degree of input-driven precision is known to depend on the magnitude of the noise in the current (Hunter et al., 1998), the resonant firing frequency of the post-synaptic neuron (Hunter et al., 1998; Fellous et al., 2001) and the time-scale of correlation in the noise (Galan et al., 2008). Such boundaries on reliability suggest there should be different distributions of spike-time reliability between different areas and layers, depending on the exact nature of the local microcircuit, and of the inputs from other cortical and sub-cortical regions. Consistent with this, data from cat V1 suggest that layer 4 neurons can produce a reliably timed first spike to a repeated stimulus, and that this reliability is subsequently reduced in both layers 2/3 and 5 (Cardin et al., 2010). While there have been efforts to quantify the reliability of individual neuron spike-timing using correlations between evoked spike patterns (Fellous et al., 2004; Humphries, 2011), to our knowledge we lack any in vitro or in vivo characterisation of the population distribution of spike-timing precision.

4.3. Implications of distributions of spike-train metrics

In general, distributions of metrics for spike-train structure across cortical populations may place strong constraints on either or both of single neuron and network properties. We finish this survey by considering several implications for theories and models of cortex that might arise from knowing these distributions.

4.3.1. Cell “classes” are inconsistent with unimodal distributions

From the perspective of probability distributions for neural populations, the classical physiological approach to defining distinct “cell classes” based on spike-train structure is problematic. It is typical in electrophysiological studies to make sense of the data by demarcating the firing patterns of neurons into categories such as “regular”, “irregular”, “bursting” and so on. But by characterising distributions of the spike-train measures used to define these categories (e.g. Figure 5a-d), we can see that this approach amounts to drawing an arbitrary threshold line somewhere on the distribution – above that line is irregular, below is regular, and so on. Similarly, for joint distributions of rate and regularity, we can reasonably fit unimodal probabilistic models relating the two; and for single neurons there is a broad distribution of correlations between rate and regularity. Attempts to define classes of neurons by spike-train structure may be more successful when using multiple defining metrics, as in (Compte et al., 2003), or when more than one peak is clear in the distribution of a single metric, as suggested by the CV2 data in Figure 5b.

4.3.2. The irregularity of cortical firing and the balance of excitation and inhibition

The irregularity of cortical spike trains and its implication for single neurons or neural networks has been the focus of numerous studies. In vitro studies of cortical neurons have shown that they can reproduce spike-trains with millisecond-scale precision to repeated injections of the same noisy current, but not to injections of constant current (Mainen and Sejnowski, 1995; Fellous et al., 2001; Galan et al., 2008). Such data suggest that, under synaptic barrage in vivo, cortical neurons are capable of reproducing specific patterns of spike times. There is some evidence from a range of cortical areas for spike-timing precision in response to repeated stimuli (DeWeese et al., 2003; Fellous et al., 2004). Consequently, the irregularity of cortical neuron firing likely reflects inherently irregular (“noisy”) inputs that in turn generate the irregular output.

Such constraints often act as a major spur for a rich vein of interacting computational and experimental work. For example, the report by (Softky and Koch, 1993) that cortical cells have consistently irregular spike-trains, inconsistent with a single neuron integrating random inhibitory and excitatory synaptic input, inspired a wealth of computational and experimental work to explain the source of the irregularity (Stevens and Zador, 1998). Computational models were able to account for the consistent irregularity through both single cell (Troyer and Miller, 1997; Gutkin and Ermentrout, 1998) and network properties (Tsodyks and Sejnowski, 1995; van Vreeswijk and Sompolinsky, 1996); one outcome of this work was the proposal of balanced excitation and inhibition in the inputs to cortical pyramidal cells (van Vreeswijk and Sompolinsky, 1996; Shadlen and Newsome, 1998; Salinas and Sejnowski, 2000). The theoretical studies in turn drove experimental work which confirmed that this balance exists (Shu et al., 2003; Haider et al., 2006; Renart et al., 2010). This series of studies provides probably the best explanation for the irregularity of firing in cortex and has helped to elucidate the “operating point” of cortical neurons in vivo.