Abstract

Many neuroimaging studies have investigated the neural correlates of face processing. However, the location of face-preferential regions differs considerably between studies, possibly due to the use of different stimuli or tasks. Using activation likelihood estimation (ALE) meta-analyses, we aimed a) to delineate regions consistently involved in face processing and b) to assess the influence of stimuli and task on the convergence of activation patterns. In total, we included 77 neuroimaging experiments in healthy subjects comparing face processing to a control condition. Results revealed a core face-processing network encompassing bilateral fusiform gyrus (FFG), inferior occipital gyrus (IOG), superior temporal sulcus/middle temporal gyrus (STS/MTG), amygdala, inferior frontal junction (IFJ) and gyrus (IFG), left anterior insula and pre-supplementary motor area (pre-SMA). Furthermore, separate meta-analyses showed that, while significant convergence across all task and stimulus conditions was found in bilateral amygdala, right IOG, right mid-FFG and right IFG, convergence in IFJ, STS/MTG, right posterior FFG, left FFG and pre-SMA differed between conditions. Thus, our results point to an occipito-frontal-amygdalar system that is involved regardless of stimulus and attention, whereas the remaining regions of the face-processing network are influenced by the task-dependent focus on specific facial characteristics as well as by the type of stimuli processed.

Keywords: emotion evaluation, emotional faces, gender evaluation, meta-analysis, neutral faces

1. Introduction

In daily life, social interactions rely to a large degree on the processing and interpretation of non-verbal cues, most importantly facial expressions. The ability to recognize a face and the multiplicity of information it may convey is hence a key prerequisite for social functioning. Consequently, a wide range of studies has been conducted over the last decades to unravel the neural mechanisms of face processing. The most influential theoretical model of face recognition (Bruce & Young, 1986) distinguishes between seven types of information, or components, that can be extracted from a face and are assumed to be independent of each other. For example, the processing of the gender of a face is largely independent of the analysis of its emotional expression. Thus, the information used when making decisions about a face should depend on the specific task requirements. This implies that different neural resources may be associated with these components, i.e., that different brain modules process different aspects such as the gender or the expression of a face.

Later, Haxby, Hoffman, and Gobbini (2000) proposed a neurobiological model distinguishing between a core system for the visual processing and analysis of faces and an extended system that processes the meaning a face may convey as well as its significance. In this context, the inferior occipital gyrus (IOG), lateral fusiform gyrus (FFG) and superior temporal sulcus (STS) have been associated with the core system, while the amygdala and other limbic regions were considered to belong to the extended system of emotion processing. Within the core system, the FFG has been associated with processing configural cues (Barton, Press, Keenan, & O’Connor, 2002; Maurer et al., 2007; Zhao et al., 2014) and invariant features of faces (Haxby et al., 2000; Haxby, Hoffman, & Gobbini, 2002), while the IOG has been suggested to process shape and face parts and thus primarily local facial cues (Liu, Harris, & Kanwisher, 2010; for review see Atkinson & Adolphs, 2011 and Kanwisher & Dilks, 2013). In contrast, the STS has been suggested to play a major role in processing the changeable aspects of faces (Haxby et al., 2002). Thus, it may be assumed that invariant aspects such as gender activate regions like the IOG and FFG, while the STS is more strongly responsive to facial expression. However, studies also indicate fusiform involvement in processing emotional expressions (Fox, Moon, Iaria, & Barton, 2009; Ganel, Valyear, Goshen-Gottstein, & Goodale, 2005; Harry, Williams, Davis, & Kim, 2013; Xu & Biederman, 2010) as well as a lack of face-related STS activity (Barton et al., 2002; Benuzzi et al., 2007; Jehna et al., 2011; Joassin, Maurage, & Campanella, 2011; Loven, Svard, Ebner, Herlitz, & Fischer, 2014).
In addition, studies by Gschwind, Pourtois, Schwartz, Van De Ville, and Vuilleumier (2012) as well as Pyles, Verstynen, Schneider, and Tarr (2013) reported that anatomical connections of the STS with fusiform and inferior occipital face-selective regions are weak, indicating that the STS is not as strongly interconnected with the rest of the core face-processing network as previously assumed. They thus concluded that the STS has a functionally different role from the other regions of that network, even though its exact contribution remains a matter of conjecture.

Additionally, the exact location of activation of face-preferential regions, especially the FFG and IOG, varies considerably across studies (Weiner & Grill-Spector, 2013; compare Gauthier et al., 2000; Hoffman & Haxby, 2000; Peelen & Downing, 2005; Pitcher et al., 2011) and subjects (Frost & Goebel, 2012; Zhen et al., 2015). This might be related to differences in acquisition/resolution, spatial normalization and smoothing between studies as well as to inter-individual differences in the sampled populations. In addition, studies investigating the neural correlates of face processing use different tasks (most widely gender and emotion evaluation) and stimuli (emotional or neutral faces), which might also affect which regions are recruited and where. One way to deal with this variation in localization is to use localizers, which provide face-preferential regions for individual subjects that can then be used in further analyses. Another way is to delineate where studies of face processing converge by means of meta-analysis. Such convergence can serve as a predictor of functional regions at the group level and thus provide functional ROIs of face-preferential regions for fMRI studies investigating group effects. Conversely, if a meta-analysis cannot find convergence across face-processing experiments, this would indicate that common-space analyses should not be performed for face-processing fMRI and that only individual localizers should be used.

Taken together, even though there is general agreement that a distributed network of brain regions is involved in face processing, key questions pertaining to the specific functional contribution and recruitment of these areas are still open. Furthermore, as outlined above, the exact location of activation within broader regions such as the FFG and IOG varies considerably across studies. In light of previous models of face recognition (Bruce & Young, 1986; Haxby et al., 2000) suggesting that different facial information is processed within different processing streams, this variation might be due to methodological differences, i.e., the use of different stimuli (emotional or neutral faces) or task instructions (with evaluation of the emotion or gender of a face being most widely used). If the involvement of different brain regions differs depending on task demands and/or the kind of stimuli used, this could explain such inconsistencies. In addition, a downside of the face-processing literature is that many studies have focused primarily on specific a priori defined regions (especially the FFG and the amygdala). Thus, many studies only investigate activation and modulations of these specific regions while ignoring other, putatively also relevant regions. Hence, the importance of these a priori defined regions in face processing might be overestimated.

Here, we aim to address these problems by performing coordinate-based meta-analyses across published whole-brain studies of face processing. That is, given the variability of face-preferential regions across experiments, we aim to identify convergent locations in standard space across the face-processing experiments published to date. In particular, the goal of the present study is a) to delineate those regions that are consistently involved in face processing and to assess the influence of b) affective valence (emotional versus neutral face stimuli) and c) task instructions (emotion or gender evaluation of faces) on the activation patterns within the resulting regions.

2. Materials and Methods

2.1. Inclusion and Exclusion Criteria

Two researchers (VM and YH) conducted the literature search and coding for the current meta-analysis. Published neuroimaging experiments using functional magnetic resonance imaging (fMRI) or positron emission tomography (PET) were identified by a PubMed (http://www.pubmed.org), Google Scholar (https://scholar.google.de) and Web of Knowledge (https://apps.webofknowledge.com) literature search (using different combinations of the search terms “fMRI”, “PET”, “face”, “facial”, “emotion”, “expression”, “neutral”, “localizer”) for face-processing studies published between 1992 and January 2016. Further studies were obtained by reviewing previous meta-analyses on face or emotion processing (Delvecchio, Sugranyes, & Frangou, 2013; Fusar-Poli et al., 2009; Sabatinelli et al., 2011; Stevens & Hamann, 2012), review articles and reference tracing. We selected studies that focused on face processing using static faces or dynamic video clips of faces as stimuli. Additionally, we included studies that performed and reported a localizer task for face processing. In turn, studies using face stimuli as distractors or using facial material to investigate other cognitive processes were not considered. Furthermore, we only included studies that compared a face condition against a control condition, while studies comparing different face conditions (e.g., emotional versus neutral faces) or contrasting against a resting baseline were excluded in order to avoid findings that primarily reflect general task demands or emotion processing rather than the processing of visual faces. We included only studies that reported results of whole-brain group analyses as coordinates in a standard reference space (Talairach/Tournoux or MNI). In turn, results obtained in region-of-interest (ROI) analyses were not considered.
This criterion was necessary because ALE delineates voxels where convergence across experiments is higher than expected under the null hypothesis of random spatial association across the brain, which assumes that each voxel has the same a priori chance of being activated (Eickhoff, Bzdok, Laird, Kurth, & Fox, 2012). Differences in coordinate space (MNI vs. Talairach space) were accounted for by transforming coordinates reported in Talairach space into MNI coordinates using a linear transformation (Lancaster et al., 2007). All coordinates of experiments that reported using SPM or FSL were treated as MNI coordinates. The only exception to this rule was papers that explicitly reported that a transformation from MNI (the default space in SPM and FSL) into Talairach space had been performed. Finally, we only included experiments reporting results on healthy adult subjects. In some cases, the definition of healthy was based on standardized diagnostic interviews, whereas others used self-report and/or unstructured assessments by the investigators. Importantly, however, all included studies explicitly stated that healthy subjects were assessed. In turn, we excluded results obtained from patients and children as well as experiments investigating between-group effects pertaining, e.g., to disease effects or pharmacological manipulation. However, if such studies separately reported within-group effects in healthy subjects at baseline, those experiments were included.

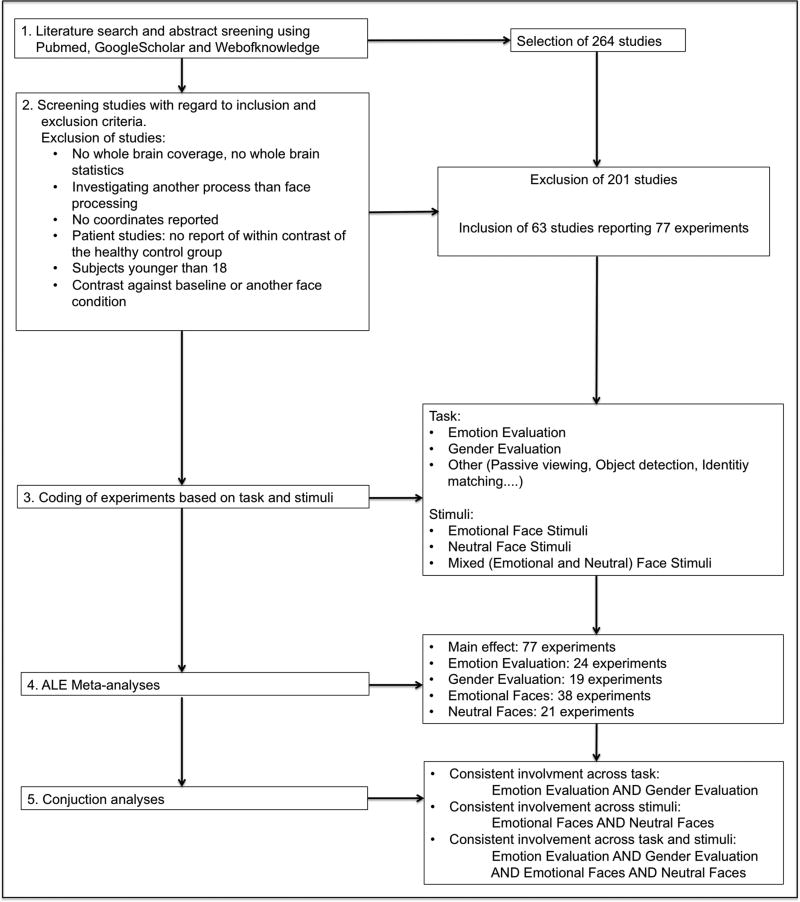

These criteria resulted in inclusion of 63 studies reporting 77 experiments in 1317 healthy subjects (see supplementary table 1 for a detailed list of included experiments and Figure 1 for an illustration of the steps of the study).

Figure 1.

Illustration of the steps of the meta-analysis.

2.2. Statistical Methods

2.2.1. Activation likelihood algorithm

All meta-analyses were performed according to standard procedures as used in previous studies (cf. S. Caspers, Zilles, Laird, & Eickhoff, 2010; Chase, Kumar, Eickhoff, & Dombrovski, 2015; Cieslik, Müller, Eickhoff, Langner, & Eickhoff, 2015; Langner & Eickhoff, 2013). In brief, coordinate-based meta-analyses were performed to identify consistent co-activations across experiments using the revised Activation Likelihood Estimation (ALE) algorithm (Eickhoff et al., 2012; Eickhoff et al., 2009; Turkeltaub et al., 2012) implemented as in-house MATLAB tools. This algorithm aims to identify areas in which the convergence of reported coordinates across experiments is higher than expected under a random spatial association. The key idea behind ALE is to treat the reported foci not as single points but as centers of 3D Gaussian probability distributions capturing the spatial uncertainty associated with each focus. The width of these uncertainty functions was determined based on empirical data on the between-subject (uncertainty of spatial localization between different subjects) and between-template (uncertainty of spatial localization between different spatial normalization strategies) variance, which represent the main components of this uncertainty. Importantly, the between-subject variance is weighted by the number of examined subjects per study, accommodating the notion that larger sample sizes should provide more reliable approximations of the true activation effect and should therefore be modeled by smaller Gaussian distributions (Eickhoff et al., 2009).
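The sample-size weighting of the Gaussian kernels can be illustrated with a minimal Python sketch. It assumes that the spatial variance of a focus is the sum of a constant between-template term and a between-subject term that shrinks with 1/N. The two FWHM constants are placeholder values chosen for illustration, not the empirically derived parameters of Eickhoff et al. (2009). The function names are hypothetical, and the in-house MATLAB tools used in this study are not reproduced.

```python
import numpy as np

# Placeholder full widths at half maximum (mm); illustrative values only.
TEMPLATE_FWHM_MM = 6.0   # between-template uncertainty (independent of N)
SUBJECT_FWHM_MM = 12.0   # between-subject uncertainty for a single subject
FWHM_TO_SIGMA = 1.0 / (2.0 * np.sqrt(2.0 * np.log(2.0)))


def kernel_sigma_mm(n_subjects: int) -> float:
    """Standard deviation (mm) of the Gaussian modelling one reported focus.

    Assumption: the between-subject variance shrinks with 1/N, so larger
    samples yield narrower kernels; the between-template variance is fixed.
    """
    var_template = (TEMPLATE_FWHM_MM * FWHM_TO_SIGMA) ** 2
    var_subject = (SUBJECT_FWHM_MM * FWHM_TO_SIGMA) ** 2 / n_subjects
    return float(np.sqrt(var_template + var_subject))


def focus_probabilities(focus_mm, grid_mm, n_subjects, voxel_volume_mm3=8.0):
    """Approximate probability that the true activation lies in each voxel.

    grid_mm has shape (..., 3) and holds voxel-centre coordinates in mm.
    The Gaussian density at each centre is multiplied by the voxel volume.
    """
    sigma = kernel_sigma_mm(n_subjects)
    offsets = grid_mm - np.asarray(focus_mm, dtype=float)
    sq_dist = np.sum(offsets ** 2, axis=-1)
    density = np.exp(-sq_dist / (2.0 * sigma ** 2)) / ((2.0 * np.pi) ** 1.5 * sigma ** 3)
    return density * voxel_volume_mm3


# Usage: a small 2-mm grid (-10..10 mm per axis) and one focus at the origin.
axis = np.arange(-10.0, 12.0, 2.0)
grid = np.stack(np.meshgrid(axis, axis, axis, indexing="ij"), axis=-1)
small_n = focus_probabilities((0.0, 0.0, 0.0), grid, n_subjects=10)
large_n = focus_probabilities((0.0, 0.0, 0.0), grid, n_subjects=100)
```

With 100 subjects, the kernel is narrower and its peak is higher than with 10 subjects, so a larger study constrains the location of its foci more tightly.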

The probabilities of all foci reported in a given experiment were then aggregated for each voxel, resulting in a modeled activation (MA) map for that experiment (Turkeltaub et al., 2012). Importantly, to ensure that results were not driven by studies reporting more than one emotional face contrast in the same subject group (e.g., angry faces vs. control, fearful faces vs. control and happy faces vs. control), such contrasts were coded as one experiment (i.e., all were coded as emotional faces vs. control). However, because we performed separate analyses for emotion and gender evaluation as well as for emotional and neutral face stimuli, and some papers report results on all (or some) of these contrasts, some included experiments were derived from the same subject group (for example, two experiments from the same paper in the gender evaluation analysis, one reporting results of the neutral and one of the emotional face condition). We carefully inspected the resulting clusters with regard to the contributing experiments in order to rule out that experiments from the same subject group drove convergence (see supplementary tables S2–S5 for contributions).
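The per-experiment aggregation and the pooling of several contrasts from one subject group can be sketched as follows. The sketch assumes the rule of the revised algorithm (Turkeltaub et al., 2012), in which each voxel of an MA map takes the maximum, not the sum, of the per-focus probabilities, so that nearby foci from the same experiment cannot add up. Function names are hypothetical, and the per-focus probability maps are taken as given.

```python
import numpy as np


def pool_contrasts(contrast_foci):
    """Merge the foci of several contrasts from one subject group
    (e.g. angry, fearful and happy faces vs. control) into one experiment."""
    pooled = []
    for foci in contrast_foci:
        pooled.extend(foci)
    return pooled


def modeled_activation(focus_maps):
    """MA map of one experiment: voxel-wise maximum over its focus maps.

    focus_maps is a sequence of equally shaped arrays, one per focus, each
    holding that focus's modelled probability for every voxel.
    """
    stacked = np.stack([np.asarray(m, dtype=float) for m in focus_maps])
    return stacked.max(axis=0)


# Usage with two overlapping toy focus maps over three voxels.
ma = modeled_activation([np.array([0.2, 0.5, 0.0]), np.array([0.4, 0.1, 0.0])])
```

Because pooled foci end up in a single MA map, a subject group reporting three emotional contrasts contributes at most one probability per voxel rather than three.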

Taking the union across the MA maps yielded voxel-wise ALE scores describing the convergence of results at each location of the brain. To distinguish true convergence across experiments from random overlap, ALE scores were compared to an analytically derived null distribution reflecting a random spatial association between experiments. Hereby, random-effects inference was invoked, focusing on above-chance convergence between studies rather than clustering of foci within a particular study. Conceptually, the null distribution can be formulated as sampling a voxel at random from each of the MA maps and taking the union of these values in the same manner as done for the (spatially contingent) voxels in the true analysis. The p-value of an observed ALE score was then given by the proportion of equal or higher values obtained under the null distribution. The resulting non-parametric p-values for each meta-analysis were then thresholded at a cluster-level corrected threshold of p < 0.05 (cluster-forming threshold at voxel level p < 0.001) and transformed into z-scores for display. Cluster-level family-wise error (FWE) correction was performed as described in previous meta-analyses (Bzdok et al., 2012; Rottschy et al., 2012). First, the statistical image of uncorrected voxel-wise p-values of the original analysis was thresholded at the cluster-forming threshold of p < 0.001. Then, the sizes of the clusters surviving this threshold were compared against a null distribution of cluster sizes. This null distribution was derived by simulating 5000 datasets of randomly distributed foci with otherwise identical properties (number of foci, uncertainty) as the original dataset. This distribution was then used to identify the cluster size that was exceeded in only 5% of all random simulations.
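The union, the voxel-wise p-value and the cluster-extent threshold described above can be sketched in Python. Two substitutions should be noted. First, the source derives the voxel-wise null distribution analytically, whereas this sketch approximates the same idea (drawing one random voxel from each MA map) by Monte Carlo sampling. Second, the cluster step assumes that the size of the largest cluster in each simulated random dataset has already been recorded; generating those datasets is not shown. All names are hypothetical.

```python
import numpy as np


def ale_scores(ma_maps):
    """Voxel-wise ALE as the probabilistic union of MA maps: 1 - prod(1 - MA).

    ma_maps has shape (n_experiments, n_voxels).
    """
    ma = np.asarray(ma_maps, dtype=float)
    return 1.0 - np.prod(1.0 - ma, axis=0)


def null_ale_samples(ma_maps, n_samples, rng):
    """Monte Carlo null: draw one random voxel from each experiment's MA map
    and take the union of the drawn values, n_samples times."""
    ma = np.asarray(ma_maps, dtype=float)
    n_exp, n_vox = ma.shape
    idx = rng.integers(0, n_vox, size=(n_samples, n_exp))
    drawn = ma[np.arange(n_exp), idx]  # shape (n_samples, n_exp)
    return 1.0 - np.prod(1.0 - drawn, axis=1)


def voxel_p_values(observed_ale, null_ale):
    """Proportion of null ALE values equal to or higher than each observed value."""
    null_sorted = np.sort(np.asarray(null_ale, dtype=float))
    n_below = np.searchsorted(null_sorted, observed_ale, side="left")
    return (null_sorted.size - n_below) / null_sorted.size


def cluster_extent_threshold(max_cluster_sizes, alpha=0.05):
    """Cluster size exceeded by the largest random cluster in at most
    alpha of the simulations (e.g. 5% of 5000 simulated datasets)."""
    sizes = np.sort(np.asarray(max_cluster_sizes))
    n_allowed_above = int(np.floor(alpha * sizes.size))
    return sizes[sizes.size - n_allowed_above - 1]


# Usage: two toy experiments over four voxels.
rng = np.random.default_rng(0)
ma_maps = np.array([[0.5, 0.0, 0.1, 0.0], [0.5, 0.2, 0.0, 0.0]])
ale = ale_scores(ma_maps)
p = voxel_p_values(ale, null_ale_samples(ma_maps, 10_000, rng))
```

In this toy example, the first voxel reaches its ALE value of 0.75 under the null only when both random draws hit it (probability 1/16), so its p-value is small. A voxel with zero ALE is matched or exceeded by every null sample.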

As a previous study (Eickhoff et al., 2016) indicated that the ALE algorithm behaves unstably when meta-analyses include fewer than 17 experiments, we only calculated analyses for which at least 17 experiments were available. In particular, Eickhoff et al. (2016) showed that including a minimum of 17 experiments prevents results from being driven by individual experiments.

All resulting areas were anatomically labeled using the SPM Anatomy Toolbox 2.2c (Eickhoff et al., 2007; Eickhoff et al., 2005). Details on the cytoarchitectonic regions referred to in the results tables may be found in the following publications reporting on Broca’s region (Amunts et al., 1999), motor cortex (Geyer et al., 1996), somatosensory cortex (Geyer, Schleicher, & Zilles, 1999; Grefkes, Geyer, Schormann, Roland, & Zilles, 2001), amygdala and hippocampus (Amunts et al., 2005), visual cortex (Amunts, Malikovic, Mohlberg, Schormann, & Zilles, 2000), lateral occipital and ventral extrastriate cortex (Malikovic et al., 2015; Rottschy et al., 2007), cerebellum (Diedrichsen, Balsters, Flavell, Cussans, & Ramnani, 2009), posterior fusiform gyrus (J. Caspers et al., 2013), mid-fusiform gyrus (Lorenz et al., 2015), basal forebrain (Zaborszky et al., 2008) and thalamus (connectivity-based regions: Behrens et al., 2003).

All results were illustrated using Mango (Research Imaging Institute, UTHSCSA; http://ric.uthscsa.edu/mango/mango.html).

2.2.2. Conjunctions

In order to determine those voxels where a significant effect was present in two or more separate analyses, conjunctions were computed using the conservative minimum statistic (Nichols, Brett, Andersson, Wager, & Poline, 2005). That is, only regions significant on a corrected level in each individual analysis were considered. In order to exclude smaller regions of presumably incidental overlap between the thresholded ALE maps of the individual analyses, an additional extent threshold of 25 voxels was applied (Langner & Eickhoff, 2013).
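The minimum-statistic conjunction with the additional extent threshold can be sketched as below. The sketch assumes that each input is a z map already restricted to its cluster-level corrected significant voxels (zero elsewhere), that clusters are defined by 6-connectivity (face-adjacent voxels), and that clusters of at least 25 voxels are kept. The connectivity rule and the inclusive boundary are assumptions, and the function names are hypothetical.

```python
from collections import deque

import numpy as np

# 6-connectivity: voxels sharing a face are neighbours (an assumption).
NEIGHBOURS = ((1, 0, 0), (-1, 0, 0), (0, 1, 0), (0, -1, 0), (0, 0, 1), (0, 0, -1))


def label_clusters(mask):
    """Label connected clusters in a 3D boolean mask via breadth-first search.

    Returns an integer label volume (0 = background) and a list of cluster
    sizes, where sizes[k - 1] is the number of voxels carrying label k.
    """
    mask = np.asarray(mask, dtype=bool)
    labels = np.zeros(mask.shape, dtype=int)
    sizes = []
    for start in zip(*np.nonzero(mask)):
        if labels[start]:
            continue  # already part of an earlier cluster
        current = len(sizes) + 1
        labels[start] = current
        queue = deque([start])
        size = 0
        while queue:
            x, y, z = queue.popleft()
            size += 1
            for dx, dy, dz in NEIGHBOURS:
                nb = (x + dx, y + dy, z + dz)
                inside = all(0 <= c < s for c, s in zip(nb, mask.shape))
                if inside and mask[nb] and not labels[nb]:
                    labels[nb] = current
                    queue.append(nb)
        sizes.append(size)
    return labels, sizes


def conjunction(z_maps, min_extent=25):
    """Minimum-statistic conjunction of corrected, thresholded z maps.

    Each map holds z scores inside its significant clusters and 0 elsewhere,
    so the voxel-wise minimum is > 0 only where every map is significant.
    Overlap clusters smaller than min_extent voxels are discarded.
    """
    z_min = np.min(np.stack([np.asarray(m, dtype=float) for m in z_maps]), axis=0)
    labels, sizes = label_clusters(z_min > 0)
    keep = np.zeros(z_min.shape, dtype=bool)
    for label, size in enumerate(sizes, start=1):
        if size >= min_extent:
            keep |= labels == label
    return np.where(keep, z_min, 0.0)


# Usage: a 27-voxel overlap survives, an 8-voxel overlap does not.
z_a = np.zeros((10, 10, 10))
z_a[0:3, 0:3, 0:3] = 3.0
z_a[6:8, 6:8, 6:8] = 4.0
z_b = np.full((10, 10, 10), 2.0)
conj = conjunction([z_a, z_b])
```

Taking the minimum means that a voxel enters the conjunction only if it is significant in every analysis, and the reported value is the weakest of the individual z scores.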

2.3. Classification of Experiments

As we were interested in how brain networks may be differently recruited depending on stimuli type and task instructions, we coded the included experiments with regard to different criteria:

Task instructions: Here, we coded the task the subjects had to perform while viewing the faces, independent of the valence of the presented face. For each experiment, we thus classified whether the task was to focus attention on the emotion expressed in the facial stimuli (emotion evaluation), on the gender of the face (gender evaluation), on facial identity (identity evaluation) or on other characteristics of the face (for example attractiveness), or whether the subjects had no task at all (passive viewing). As mentioned above, the algorithm behaves unstably when only a small number of experiments is included, so a minimum of 17 experiments had to be available in order to calculate a separate meta-analysis. A sufficient number of experiments was only available for emotion and gender evaluation. In turn, there were too few experiments reporting whole-brain activation results (cf. inclusion/exclusion criteria) for a separate analysis of identity evaluation (12 experiments) or passive viewing (9 experiments). Please note that an analysis across identity judgment tasks was performed and its results are reported in the supplement (supplementary table 5). These results should, however, be regarded with caution given the small number of experiments. The remaining experiments either reported results across more than one task or had other instructions (for example attractiveness rating, object detection). We thus only calculated meta-analyses across emotion and gender evaluation experiments.

Type of facial stimuli used: Here, the use of emotional faces, neutral faces or a mix of both as stimuli was coded, independently of the task (i.e., whether attention was directed to the emotion or to other characteristics of the face). Importantly, emotional stimuli were all categorized as emotional regardless of the specific valence of the presented face. This was done because, after applying all inclusion/exclusion criteria, there were not enough experiments left to differentiate between emotional categories. Thus, two meta-analyses were calculated, one across experiments using emotional face stimuli and one across experiments using neutral faces.

Importantly, each experiment was coded on both criteria and could therefore be included in more than one analysis. For example, an experiment in which subjects had to rate the expression of emotional faces was included in the analysis of emotion evaluation but also in the analysis across studies of emotional faces.
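The double coding and the minimum of 17 experiments per analysis can be sketched as a grouping step. The task counts in the usage example (24 emotion, 19 gender, 12 identity, 9 passive viewing) and the stimulus counts (38 emotional, 21 neutral) are taken from this paper. The remaining 13 task codes and 18 stimulus codes are lumped together as "other" and "mixed" purely for illustration, and the pairing of task and stimulus codes is arbitrary. The class and function names are hypothetical.

```python
from collections import defaultdict
from dataclasses import dataclass

MIN_EXPERIMENTS = 17  # minimum number of experiments for a stable ALE analysis


@dataclass(frozen=True)
class Experiment:
    exp_id: str
    task: str      # "emotion", "gender", "identity", "passive" or "other"
    stimulus: str  # "emotional", "neutral" or "mixed"


def group_experiments(experiments):
    """Each experiment enters one task group and one stimulus group."""
    groups = defaultdict(list)
    for exp in experiments:
        groups[("task", exp.task)].append(exp)
        groups[("stimulus", exp.stimulus)].append(exp)
    return groups


def analyses_to_run(groups, not_analysed=("other", "mixed")):
    """Keep only well-defined groups with at least MIN_EXPERIMENTS members."""
    return sorted(
        key for key, members in groups.items()
        if key[1] not in not_analysed and len(members) >= MIN_EXPERIMENTS
    )


# Usage with illustrative codes for 77 experiments (pairing is arbitrary).
tasks = (["emotion"] * 24 + ["gender"] * 19 + ["identity"] * 12
         + ["passive"] * 9 + ["other"] * 13)
stimuli = ["emotional"] * 38 + ["neutral"] * 21 + ["mixed"] * 18
experiments = [Experiment(f"exp{i}", t, s) for i, (t, s) in enumerate(zip(tasks, stimuli))]
selected = analyses_to_run(group_experiments(experiments))
```

Only emotion evaluation, gender evaluation, emotional stimuli and neutral stimuli pass the threshold. Identity evaluation (12) and passive viewing (9) fall short of the 17-experiment minimum.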

In total, five different meta-analyses were calculated:

Main effect (general face processing network) across all experiments.

Emotion evaluation.

Gender evaluation.

Emotional stimuli.

Neutral stimuli.

Conjunction analyses were then calculated to reveal overlap between the individual results and hence putative shared substrates of different processes. In total, three conjunctions were calculated with the aim of revealing regions that show above-chance convergence across experiments in more than one condition. First, a conjunction was performed across the meta-analytic results of emotion and gender evaluation, assessing effects of evaluation type. This conjunction between task conditions revealed regions that are consistently found when evaluating either the emotion or the gender of a face, i.e., regions consistently activated no matter which task was performed. If, conversely, a particular region was not revealed by this conjunction but only in one of the two task conditions (emotion or gender evaluation), we regarded its involvement as influenced by task.

Next, we assessed effects of stimulus type by calculating a conjunction between the meta-analytic correlates of processing emotional and neutral stimuli, revealing regions for which convergence is found in both stimulus conditions, i.e., regions consistently activated when emotional as well as when neutral stimuli are presented. If a region was not revealed by this conjunction but only in one of the two stimulus conditions (emotional or neutral stimuli), we regarded its involvement as influenced by stimulus type.

Lastly, a conjunction across all evaluation and stimulus conditions was performed, i.e. across emotion evaluation, gender evaluation, emotional stimuli and neutral stimuli. Regions revealed in this conjunction were regarded as being consistently activated in all task and stimuli conditions because they were found in all individual meta-analyses.

Please note that the conjunctions reveal regions consistently activated in the different conditions, which does not imply that activation strength in those regions is the same across conditions. More precisely, if a region is found in the conjunction of two meta-analyses, we can confidently conclude that it is consistently activated in both conditions, but we cannot conclude that its activation is independent of the condition. This is because, even though that region is consistently activated in both conditions, it could still be activated more strongly and/or more consistently in one of them. That is, consistent activation across two conditions does not preclude differential involvement.
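At the level of labelled regions, the interpretation rules above reduce to set operations. This is a simplification of the voxel-wise conjunction, and the sketch uses hypothetical region labels rather than the results of this study. In line with the caveat above, membership in the "both" set is read only as consistent involvement, not as equal activation strength.

```python
def compare_conditions(found_a, found_b):
    """Split regions significant in two meta-analyses into those found in
    both (conjunction) and those found in only one (condition-influenced)."""
    found_a, found_b = set(found_a), set(found_b)
    shared = found_a & found_b
    return {"both": shared, "only_a": found_a - shared, "only_b": found_b - shared}


def found_in_all(*analyses):
    """Regions present in every individual meta-analysis."""
    return set.intersection(*(set(a) for a in analyses))


# Usage with hypothetical labels (not the results of this study).
emotion_eval = {"region_A", "region_B", "region_C"}
gender_eval = {"region_A", "region_B", "region_D"}
split = compare_conditions(emotion_eval, gender_eval)
```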

3. Results

In total, 63 papers reporting 77 neuroimaging experiments were included in the current study (supplementary table 1). Of these 77 experiments, 24 experiments with 532 subjects used an emotion evaluation task and 19 experiments with 337 subjects used a gender evaluation task. In addition, 38 of the 77 experiments (647 subjects in total) used emotional faces and 21 experiments (406 subjects) used neutral faces as stimuli.

3.1. Characterization of the experiments included in the different meta-analyses

3.1.1. Emotion Evaluation versus gender evaluation

The most commonly used stimulus set in both meta-analyses was the Ekman faces (emotion evaluation: 71% of experiments; gender evaluation: 42% of experiments). Other face sets used were KDEF (emotion: 8%; gender: 26%), Gur (emotion: 8%; gender: 0%) and NimStim (emotion: 4%; gender: 0%). The remaining experiments used faces from more than one set or their own material, or did not specify the face stimuli used (emotion: 8%; gender: 32%).

Most experiments in both meta-analyses used shapes (emotion: 58%; gender: 52%) or scrambled faces (emotion: 29%; gender: 32%) as control stimuli, while the remaining experiments used other stimuli (emotion: 13%; gender: 16%) such as houses, animals, radios or patterns.

3.1.2. Emotional versus neutral stimuli

Ekman faces were again the most commonly used face set, in particular in the emotional face stimuli meta-analysis (emotional faces: 63% of experiments; neutral faces: 14%). Other face sets used were KDEF (emotional: 11%; neutral: 10%), Gur (emotional: 3%; neutral: 5%) and NimStim (emotional: 5%; neutral: 5%). The remaining experiments used other face sets (POET, PICS, Vital Longevity face database, Max Planck dataset, faces from movies; emotional: 8%; neutral: 14%), faces from more than one set or their own material, or did not specify the face stimuli used (emotional: 8%; neutral: 32%). Two experiments in the neutral meta-analysis used face stimuli with different orientations (turning heads and head movements).

Most experiments in both meta-analyses used scrambled faces (emotional: 47%; neutral: 24%) or shapes (emotional: 45%; neutral: 24%) as control stimuli. Experiments using neutral stimuli additionally used houses (emotional: 0%; neutral: 24%). The remaining experiments used other stimuli (emotional: 8%; neutral: 14%) such as places, landscapes, animals or radios.

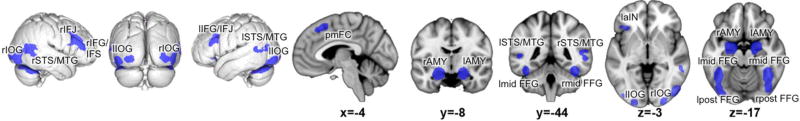

3.2. General Face Processing Network: Convergence Across All Face Processing Experiments

The main effect across all 77 experiments revealed consistent activity in a broad network (Figure 2, Table 1) encompassing bilateral amygdala (centromedial (CM), superficial (SF) and laterobasal (LB) subdivisions) extending into hippocampus and basal forebrain, bilateral inferior occipital gyrus covering ventral extrastriate (hOc3v, hOc4v) and lateral occipital cortex (hOc4la, hOc4lp), bilateral posterior and mid-fusiform gyrus extending into cerebellum, bilateral middle temporal gyrus/superior temporal sulcus, bilateral inferior frontal junction, sulcus and gyrus, left anterior insula as well as posterior medial frontal cortex.

Figure 2.

Face processing network: Convergence across all experiments investigating face processing compared to a control condition. r: right; l: left; IOG: inferior occipital gyrus; STS: superior temporal sulcus; MTG: middle temporal gyrus; IFJ: inferior frontal junction; IFG: inferior frontal gyrus; IFS: inferior frontal sulcus; pmFC: posterior medial frontal cortex; AMY: amygdala; mid FFG: mid-fusiform gyrus; post FFG: posterior fusiform gyrus; aIN: anterior insula.

Table 1.

General face processing network: Brain regions showing significant convergence across all experiments comparing face processing against a control condition.

| Macroanatomical structure | x, y, z (MNI) | Histological assignment | z score |
|---|---|---|---|
| Cluster 1 (k = 2742) | | | |
| Right mid Fusiform Gyrus | 40 −52 −20 | FG4 | 8.33 |
| Right Inferior Occipital Gyrus | 46 −80 −6 | hOc4la | 8.26 |
| Right Inferior Occipital Gyrus | 28 −94 −6 | hOc3v | 6.89 |
| Right Inferior Occipital Gyrus | 30 −92 −8 | hOc3v | 6.82 |
| Right Middle Temporal Gyrus | 52 −52 6 | | 6.06 |
| Right Middle Temporal Gyrus | 56 −40 0 | | 5.35 |
| Cluster 2 (k = 1417) | | | |
| Left mid Fusiform Gyrus | −42 −52 −20 | FG4 | 8.28 |
| Left posterior Fusiform Gyrus | −40 −74 −14 | FG2 | 7.52 |
| Cluster 3 (k = 1345) | | | |
| Right Inferior Frontal Junction | 44 14 26 | | 8.27 |
| Right Inferior Frontal Gyrus | 50 22 22 | | 7.26 |
| Right Inferior Frontal Gyrus | 54 10 16 | Area 44 | 4.39 |
| Cluster 4 (k = 742) | | | |
| Left Amygdala | −20 −8 −16 | | 8.37 |
| Cluster 5 (k = 656) | | | |
| Right Amygdala | 20 −6 −14 | | 8.38 |
| Right Hippocampus | 34 −12 −18 | CA1 | 3.22 |
| Cluster 6 (k = 546) | | | |
| Left Inferior Frontal Gyrus | −44 20 22 | | 6.10 |
| Left Inferior Frontal Junction | −38 8 28 | | 4.93 |
| Cluster 7 (k = 423) | | | |
| Posterior Medial Frontal Cortex | 0 16 56 | | 5.91 |
| Posterior Medial Frontal Cortex | −4 20 52 | | 5.64 |
| Posterior Medial Frontal Cortex | −6 28 48 | | 4.94 |
| Cluster 8 (k = 351) | | | |
| Left Middle Temporal Gyrus | −50 −48 4 | | 5.64 |
| Left Middle Temporal Gyrus | −56 −72 14 | | 4.60 |
| Left Middle Temporal Gyrus | −66 −48 10 | | 4.15 |
| Cluster 9 (k = 233) | | | |
| Left Inferior Occipital Gyrus | −22 −96 −8 | hOc3v | 7.48 |
| Cluster 10 (k = 159) | | | |
| Left Anterior Insula | −36 26 0 | | 4.62 |
| Left Inferior Frontal Gyrus | −44 28 −4 | | 3.86 |

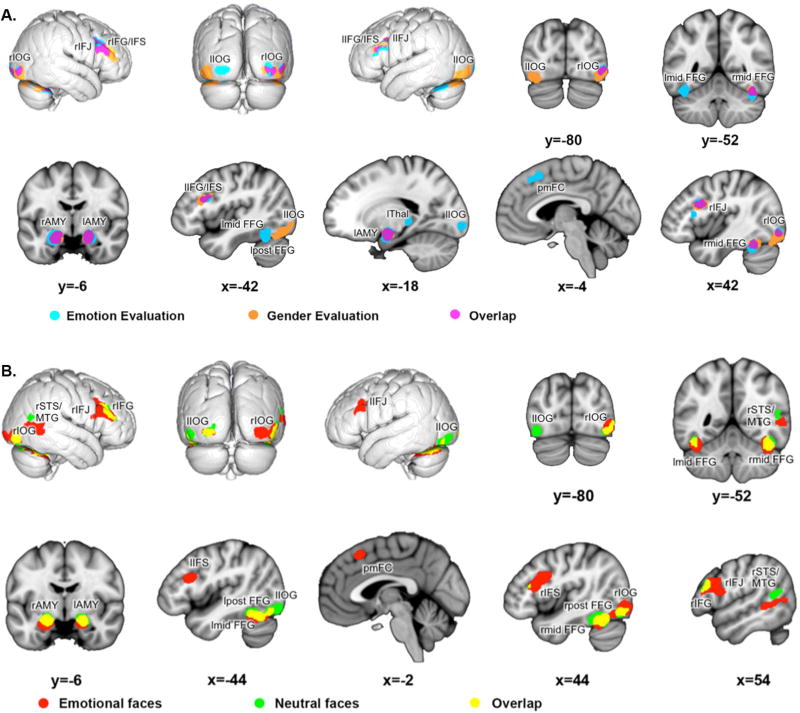

3.3. Influence of Task Instructions: Emotion Versus Gender Evaluation

As revealed by the conjunction analysis, both task instructions led to consistent activity (Table 2, Figure 3A in pink) in bilateral inferior frontal junction and sulcus/gyrus, bilateral amygdala (encompassing CM, SF and LB) and basal forebrain, right mid-fusiform gyrus and right inferior occipital gyrus (covering hOc4la, hOc4v and hOc3v).

Table 2.

Regions from the conjunction across the results of the meta-analyses across studies of emotion evaluation and gender evaluation.

| Macroanatomical structure | x, y, z (MNI) | Histological assignment | z score |
|---|---|---|---|
| Cluster 1 (k = 333) | | | |
| Right Inferior Frontal Junction | 46 12 28 | | 5.93 |
| Right Inferior Frontal Gyrus | 54 24 26 | Area 45 | 4.30 |
| Right Inferior Frontal Gyrus | 48 22 22 | | 4.24 |
| Cluster 2 (k = 264) | | | |
| Left Amygdala | −20 −6 −16 | | 7.14 |
| Cluster 3 (k = 220) | | | |
| Right Amygdala | 20 −6 −16 | Amyg (SF) | 7.04 |
| Cluster 4 (k = 104) | | | |
| Right mid Fusiform Gyrus | 40 −52 −22 | FG4 | 5.21 |
| Cluster 5 (k = 99) | | | |
| Left Inferior Frontal Gyrus | −42 20 22 | | 4.40 |
| Cluster 6 (k = 98) | | | |
| Right Inferior Occipital Gyrus | 46 −80 −8 | hOc4la | 4.88 |
| Cluster 7 (k = 95) | | | |
| Right Inferior Occipital Gyrus | 32 −88 −10 | hOc4v | 7.71 |
| Right Inferior Occipital Gyrus | 26 −96 −6 | hOc3v | 4.25 |

Figure 3.

Influence of task instructions and stimuli: (A) Convergence across emotion evaluation experiments is shown in light blue, convergence across gender evaluation experiments in orange, and their overlap (conjunction, i.e., regions consistently involved across task conditions) in pink. r: right; l: left; IOG: inferior occipital gyrus; IFJ: inferior frontal junction; IFG: inferior frontal gyrus; IFS: inferior frontal sulcus; pmFC: posterior medial frontal cortex; AMY: amygdala; Thal: thalamus; mid FFG: mid-fusiform gyrus; post FFG: posterior fusiform gyrus. (B) Convergence across experiments using emotional faces is shown in red, convergence across experiments using neutral faces in green, and their overlap (conjunction, i.e., regions consistently involved across stimulus conditions) in yellow. r: right; l: left; IOG: inferior occipital gyrus; STS: superior temporal sulcus; MTG: middle temporal gyrus; IFJ: inferior frontal junction; IFG: inferior frontal gyrus; IFS: inferior frontal sulcus; pmFC: posterior medial frontal cortex; AMY: amygdala; mid FFG: mid-fusiform gyrus; post FFG: posterior fusiform gyrus.

The individual analysis of emotion evaluation (figure 3A in light blue, supplementary table 2) shows additional convergence in left mid-fusiform gyrus, left thalamus, left inferior occipital gyrus (hOc3v) and posterior medial frontal cortex.

The individual analysis of gender evaluation (figure 3A in orange, supplementary table 3) shows additional convergence in left (hOc4v, hOc4lp) and right (hOc4lp) inferior occipital gyrus, left posterior fusiform gyrus, and right inferior frontal gyrus (anterior to the right inferior frontal convergence found in the conjunction).

3.4. Influence of Stimuli: Processing of Emotional and Neutral Faces

The conjunction across both analyses (table 3, figure 3B in yellow) revealed conjoint activity of bilateral amygdala (mainly encompassing CM, SF on the left and CM, SF and LB on the right) and basal forebrain, bilateral mid-fusiform gyrus, left posterior fusiform gyrus, left (hOc3v) and right (hOc4la) inferior occipital gyrus, as well as right inferior frontal gyrus (overlapping area 45).

Table 3.

Regions from the conjunction across the results of the meta-analyses across studies of emotional and neutral face stimuli.

| Macroanatomical structure | x,y,z | Histological assignment | z score |
|---|---|---|---|
| Cluster 1 (k = 366) | | | |
| Right mid Fusiform Gyrus | 42 −52 −22 | FG4 | 7.32 |
| Cluster 2 (k = 227) | | | |
| Left mid Fusiform Gyrus | −44 −58 −20 | FG4 | 5.22 |
| Left posterior Fusiform Gyrus | −40 −72 −14 | FG2 | 4.85 |
| Left mid Fusiform Gyrus | −42 −48 −18 | FG4 | 4.74 |
| Cluster 3 (k = 233) | | | |
| Left Amygdala | −20 −8 −14 | Amyg (CM) | 6.28 |
| Cluster 4 (k = 226) | | | |
| Right Inferior Occipital Gyrus | 44 −76 −12 | hOc4la | 5.21 |
| Right Inferior Occipital Gyrus | 48 −82 −6 | hOc4la | 4.12 |
| Right Inferior Occipital Gyrus | 50 −76 −2 | hOc4la | 3.94 |
| Cluster 5 (k = 226) | | | |
| Right Amygdala | 20 −6 −16 | Amyg (SF) | 6.68 |
| Cluster 6 (k = 149) | | | |
| Right Inferior Frontal Gyrus | 54 26 24 | Area 45 | 4.60 |
| Right Inferior Frontal Gyrus | 50 30 18 | Area 45 | 4.18 |
| Cluster 7 (k = 89) | | | |
| Left Inferior Occipital Gyrus | −20 −96 −8 | hOc3v | 5.26 |

The individual analysis of emotional faces revealed additional convergence in bilateral inferior frontal junction/sulcus, right posterior fusiform gyrus, left laterobasal amygdala, right posterior middle temporal gyrus/superior temporal sulcus, right inferior occipital gyrus (hOc3v) and posterior medial frontal cortex (figure 3B in red, supplementary table 4).

In contrast, the individual analysis of neutral faces (figure 3B in green, supplementary table 5) revealed, in addition to the regions found in the conjunction, convergence in left inferior occipital gyrus (hOc4la) and right posterior superior temporal sulcus.

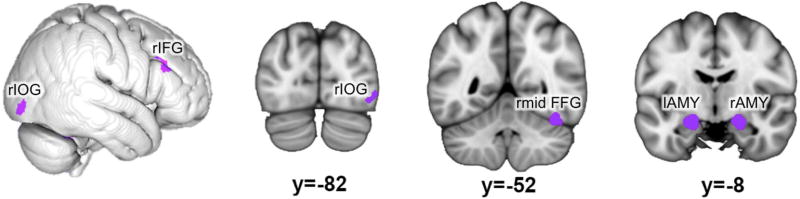

3.5. Regions with Consistent Recruitment Regardless of Task Instructions and Stimuli

Finally, we aimed to identify those regions that show convergence across all task and stimulus conditions. Such robust recruitment was evaluated by performing a conjunction across the four aforementioned meta-analyses (emotion evaluation, gender evaluation, emotional stimuli, neutral stimuli). The resulting network (figure 4, table 4) comprises bilateral amygdala (mainly encompassing CM and SF), right inferior occipital gyrus (hOc4la), right mid-fusiform gyrus and right inferior frontal gyrus (area 45).
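The conjunction logic described above can be illustrated with a minimal sketch. It assumes, as is common for ALE conjunctions, that each of the four meta-analytic maps has already been thresholded (non-significant voxels set to 0) and that the conjunction is taken as the voxel-wise minimum across maps, so a voxel survives only if it is significant in every analysis. The helper `minimum_statistic_conjunction` and the toy values are hypothetical; real maps are 3-D volumes, flattened here to short lists for readability.

```python
from collections.abc import Sequence


def minimum_statistic_conjunction(maps: Sequence[Sequence[float]]) -> list[float]:
    """Voxel-wise conjunction of already-thresholded maps (hypothetical helper).

    Each map is a flat sequence of voxel values in which voxels that did not
    survive the individual analysis's threshold are set to 0. Taking the
    minimum per voxel means a voxel stays non-zero only if it is non-zero
    (i.e., significant) in every input map.
    """
    if not maps:
        raise ValueError("at least one map is required")
    n_voxels = len(maps[0])
    if any(len(m) != n_voxels for m in maps):
        raise ValueError("all maps must cover the same voxels")
    # zip(*maps) yields one tuple per voxel, holding that voxel's value in each map.
    return [min(values) for values in zip(*maps)]


# Toy 1-D "maps" of five voxels (made-up values, not data from this study).
emotion_eval = [0.0, 3.1, 4.0, 0.0, 2.5]
gender_eval = [2.0, 3.5, 0.0, 0.0, 3.0]
emotional_stim = [1.9, 2.8, 5.0, 3.3, 2.7]
neutral_stim = [2.2, 4.1, 3.9, 0.0, 2.6]

conj = minimum_statistic_conjunction(
    [emotion_eval, gender_eval, emotional_stim, neutral_stim]
)
print(conj)  # prints [0.0, 2.8, 0.0, 0.0, 2.5]
```

Only voxels 1 and 4 survive, because every other voxel is zero in at least one map. By construction, a minimum-statistic conjunction can never be larger than any single input map, which makes it a conservative test of consistent recruitment.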

Figure 4.

Regions of the face-processing network consistently activated across task and stimuli: Regions found in all individual analyses. r: right; l: left; IOG: inferior occipital gyrus; IFG: inferior frontal gyrus; AMY: amygdala; mid FFG: mid-fusiform gyrus.

Table 4.

Regions from the conjunction across the results of the meta-analyses across studies of emotion evaluation, gender evaluation, neutral and emotional stimuli.

| Macroanatomical structure | x,y,z | Histological assignment | z score |
|---|---|---|---|
| Cluster 1 (k = 193) | | | |
| Left Amygdala | −20 −8 −14 | Amyg (CM) | 6.16 |
| Cluster 2 (k = 161) | | | |
| Right Amygdala | 20 −6 −16 | Amyg (SF) | 6.68 |
| Cluster 3 (k = 104) | | | |
| Right mid Fusiform Gyrus | 40 −52 −22 | FG4 | 5.21 |
| Cluster 4 (k = 60) | | | |
| Right Inferior Occipital Gyrus | 48 −82 −6 | hOc4la | 4.12 |
| Right Inferior Occipital Gyrus | 44 −80 −10 | hOc4la | 3.98 |
| Cluster 5 (k = 34) | | | |
| Right Inferior Frontal Gyrus | 54 24 26 | Area 45 | 4.30 |

4. Discussion

4.1. The Face Processing Network consistently activated across task and stimuli

By calculating a meta-analysis across all experiments included in our study, we confirmed a general face-processing network. Importantly, the individual meta-analyses and the conjunctions across them showed that five regions of this network are consistently recruited regardless of task and stimuli, namely right IOG and mid-FFG, as well as bilateral amygdala and right IFG. It should be noted that by consistent involvement across task and stimuli we refer to regions for which convergence across experiments was found in all individual analyses. Thus, the fact that we find convergence in amygdala, IOG, FFG and IFG in all individual analyses does not necessarily imply that the activation strength in these regions is the same across conditions. It is possible, and in fact very likely based on previous literature, that these regions show activation differences when conditions are compared directly. However, the current study aimed to delineate the spatial convergence of neuroimaging findings. Thus, our results only indicate that these regions are implicated in processes that are recruited regardless of the task subjects had to perform and the stimuli presented.

First, the network found consistently across tasks and stimuli points to a right-hemisphere dominance in face processing. This fits with the general view that right-sided regions in particular play a major role in face processing, whereas the contribution of the left hemisphere is comparatively minor (Adolphs, Damasio, Tranel, & Damasio, 1996; Bukowski, Dricot, Hanseeuw, & Rossion, 2013; Duchaine & Yovel, 2015; N. Kanwisher, McDermott, & Chun, 1997; Pitcher, Walsh, & Duchaine, 2011; Pitcher, Walsh, Yovel, & Duchaine, 2007; Yovel, Tambini, & Brandman, 2008).

Second, the consistent involvement of IOG and FFG is in line with previous literature granting them a key role in the visual processing of faces (Atkinson & Adolphs, 2011; Haxby et al., 2000, 2002; N. Kanwisher & Yovel, 2006). In particular, the FFG has a well-established status in the face processing literature. First described by Sergent, Ohta, and MacDonald (1992) and Puce, Allison, Gore, and McCarthy (1995), and labeled the “fusiform face area” (FFA) by N. Kanwisher et al. (1997), this region has been recognized as one of the most important regions in face processing. However, while the FFA was originally described as a single face-selective region (N. Kanwisher et al., 1997), it has been argued that there are two distinct face-preferential clusters, one located in mid-FFG and the other in the more posterior FFG (Haxby et al., 1994; Pinsk et al., 2009; Rossion et al., 2000; Weiner & Grill-Spector, 2010). Our results now show that the (more anteriorly located) right mid-FFG is part of the face-processing network consistently involved across task and stimuli, whereas right posterior FFG (as discussed later) and left posterior and mid-FFG are influenced by either task or stimuli. Thus, our meta-analyses support the crucial role of the mid-FFG in face processing and additionally provide evidence that it is consistently involved regardless of the emotion displayed by a face or the task to be performed.

The importance of the right IOG in face processing has been demonstrated in many clinical studies of prosopagnosia as well as by TMS and intracerebral stimulation experiments. A study investigating the structural scans of 52 patients with prosopagnosia showed that the majority of patients exhibited damage to the right IOG (Bouvier & Engel, 2006). Jonas et al. (2012) provided more direct evidence by showing that intracerebral stimulation of the right IOG temporarily led to prosopagnosia. Furthermore, previous TMS studies in healthy subjects have reported impairments of gender (Dzhelyova, Ellison, & Atkinson, 2011) as well as emotion evaluation (Pitcher, Garrido, Walsh, & Duchaine, 2008) when disrupting right IOG with TMS. Our results, demonstrating involvement of right IOG in all individual meta-analyses, fit well with these observations. Given previous results suggesting that right IOG is primarily involved in extracting and processing local features (Pitcher et al., 2007), it might be argued that the IOG is involved in the first stages of face-preferential processing, which are needed for all further higher-level analyses. However, we need to point out that the performed analysis does not allow any conclusion about the temporal course of face processing and that several previous studies in prosopagnosia (Rossion et al., 2003; Sorger, Goebel, Schiltz, & Rossion, 2007; Steeves et al., 2009) have questioned a strict hierarchical model. Thus, we would tentatively side with the view that right IOG receives input in parallel to mid-FFG and that the interaction of these two regions allows the fine-grained perception of a face (Rossion, 2008).

In addition to IOG and mid-FFG, our results revealed bilateral amygdalae as key structures in the face network. Involvement of the amygdala in face processing has mainly been related to responding to emotional expressions (Adolphs, 2010; Haxby et al., 2000). However, it has been suggested that the amygdala responds more generally to behaviorally relevant and salient information, acting as an environmental relevance detector (Adolphs, 2010; Ewbank, Barnard, Croucher, Ramponi, & Calder, 2009; Pessoa & Adolphs, 2010; Sander, Grafman, & Zalla, 2003; Santos, Mier, Kirsch, & Meyer-Lindenberg, 2011). As we found amygdala involvement not only in the meta-analysis across emotional faces but also in that across neutral faces (as well as in the two task analyses), our results side with this assumption. Thus, we suggest that all faces gain access to the amygdala and that amygdala activation does not just reflect the processing of emotions but more broadly signals the social and biological relevance of faces. Based on the relevance of incoming information, the amygdala might then modulate other brain regions in order to influence further stimulus processing. This is in line with studies proposing that the amygdala is involved in influencing vigilance and allocating resources in order to facilitate stimulus processing by focusing on relevant stimulus attributes (Jacobs, Renken, Aleman, & Cornelissen, 2012; Whalen et al., 1998).

Finally, a cluster in the right inferior frontal gyrus (IFG) was also found in all individual meta-analyses. Even though Vignal et al. (2000) already reported at the beginning of this century that electrical stimulation of the IFG led to face hallucinations, the IFG has so far been a rather neglected region in the face processing literature. Frontal involvement during face processing has been related to mirror neuron responses and automatic imitation of facial expressions (S. Caspers et al., 2010; Engell & Haxby, 2007) but also to the processing of semantic aspects of faces (Fairhall & Ishai, 2007; Leveroni et al., 2000). However, our IFG cluster found consistently across all conditions does not overlap with the results of a meta-analysis on action observation and imitation (S. Caspers et al., 2010), and studies on semantic memory show a network that hardly includes right IFG but rather its left-hemisphere homologue (Binder, Desai, Graves, & Conant, 2009). These results make it rather unlikely that consistent right IFG recruitment reflects a mirror neuron response or the processing of semantic aspects of faces. We would thus suggest that the consistent right IFG involvement during face processing, regardless of task and stimuli, reflects general control processes. Right inferior frontal involvement has been associated with top-down signals (Miller, Vytlacil, Fegen, Pradhan, & D’Esposito, 2011; Weidner, Krummenacher, Reimann, Muller, & Fink, 2009) that modulate stimulus processing. Therefore, we suggest that the social and behavioral relevance of faces leads to an automatic allocation of attention that engages right IFG; this region may in turn influence other brain regions in order to adapt stimulus processing.

4.2. Influences of Task Instructions and Stimulus Type

In addition to the regions found in all individual meta-analyses, our results show that, for the remaining parts of the face-processing network, recruitment and specific location depend on task requirements as well as on the stimuli used.

4.2.1. Fusiform gyrus

Besides the right mid-FFG convergence found for all stimulus types and tasks, our results show a second region along the right FFG that is influenced by stimulus type. In addition, convergence in the left FFG was strongly influenced by task demands: left mid-FFG convergence was found in the emotion evaluation but not the gender evaluation analysis, whereas for left posterior FFG it was the other way around (convergence only for gender evaluation). Taken together, the current meta-analyses thus indicate that different parts of the FFG are differently involved depending on task and stimulus type. These results support previous studies suggesting more than one face-preferential fusiform region (Pinsk et al., 2009; Weiner & Grill-Spector, 2010, 2012, 2013). In particular, the right posterior FFG cluster, found only in the analysis of emotional but not neutral stimuli, corresponds to FFA-1 (Pinsk et al., 2009) and pFus (Weiner & Grill-Spector, 2010), while the right mid-FFG convergence found in all analyses corresponds to FFA-2 (Pinsk et al., 2009) and mFus (Weiner & Grill-Spector, 2010). Similarly, the different clusters found for gender and emotion evaluation along the left FFG correspond well to FFA-1/pFus and FFA-2/mFus, respectively. Importantly, our results indicate that these regions can be functionally segregated at the aggregate level, i.e., across many different neuroimaging experiments employing different settings, analysis strategies, etc.

4.2.2. Superior temporal sulcus/middle temporal gyrus

Even though our general face-processing network supports an involvement of the superior temporal sulcus in face processing, convergence was not found in all individual analyses. In general, STS involvement in face processing has been associated with the processing of changeable aspects of a face, such as gaze, lip movement and facial expression (Adolphs, 2002; Haxby et al., 2002). In line with this, we find convergence in middle temporal gyrus extending into the posterior STS in the analysis of emotional stimuli. In addition, a non-overlapping pSTS region corresponding to the posterior TPJ (Bzdok et al., 2013) was found in the analysis of neutral stimuli. These results therefore support the view that pSTS and adjacent temporal regions play a role in processing facial expressions, but additionally indicate that neutral and emotional faces recruit different parts of the pSTS and middle temporal cortex.

When analyzing the effects of task instructions, no significant convergence was found in temporal regions for either gender or emotion evaluation, suggesting that this part of the brain is mainly involved in stimulus-driven processes. However, it has to be noted that the analyses of task instructions included hardly any experiments using dynamic stimuli. This is because almost all of the dynamic experiments included in the current study did not require gender or emotion evaluation but rather investigated face processing using other tasks. As the STS has been shown to play a major role in processing facial movement (Fox, Iaria, & Barton, 2009; Grosbras & Paus, 2006), the lack of STS involvement during gender and emotion evaluation might be attributed to the lack of experiments using dynamic stimuli in these analyses. This further underlines the role of the pSTS in processing face motion and changeable aspects of faces, as these are more readily observable in dynamic than in static facial stimuli.

In summary, our results support the role of the pSTS in processing (dynamic) facial expressions and additionally indicate that distinct parts of this region are involved based on whether the expression is neutral or emotional.

4.2.3. Inferior frontal junction

In addition to the consistent involvement of right IFG regardless of task and stimuli, convergence in bilateral inferior frontal junction (IFJ) was found in all individual analyses except that of neutral stimuli (an additional conjunction across emotion evaluation, gender evaluation and emotional stimuli did reveal the IFJ, cf. Supplementary figure 1). This suggests that activation of the IFJ is modulated by the emotionality of a presented face. The IFJ has repeatedly been associated with various aspects of cognitive control, in particular task-switching and set-shifting paradigms (Brass, Derrfuss, Forstmann, & von Cramon, 2005). In a recent meta-analysis across different tasks of cognitive action control, IFJ convergence has been related to the reactivation of the relevant rule of an ongoing task (Cieslik et al., 2015). When comparing our results with the IFJ cluster of Cieslik et al. (2015), the posterior parts of the frontal cluster of all individual meta-analyses (except that across neutral stimuli) clearly overlap with the IFJ reported there. With regard to face processing, IFJ involvement may thus not be primarily related to emotionality but rather to reactivating the requirement to focus attention on the gender or the emotion of a face, while the presented facial stimuli concurrently lead to an automatic orienting of attention. Because emotional stimuli elicit stronger automatic attentional orienting than neutral stimuli (Nummenmaa, Hyona, & Calvo, 2006; Stormark, Nordby, & Hugdahl, 1995), IFJ involvement is required during emotional, but to a lesser extent during neutral, stimulus processing in order to successfully perform the ongoing task.

4.2.4. Pre-supplementary motor area

Consistent involvement of a region of the posterior medial frontal cortex corresponding to the pre-supplementary motor area (pre-SMA) was not found in any conjunction but only in the individual analyses of emotion evaluation and emotional stimuli (an additional conjunction between emotion evaluation and emotional stimuli revealed the pre-SMA, cf. Supplementary figure 2), indicating that involvement of this region is influenced by both stimuli and task. In general, the pre-SMA has been implicated in action preparation and execution, control of response conflict (for review see Nachev, Kennard, & Husain, 2008) and supervisory attentional control (Cieslik et al., 2015), suggesting that this region is not necessarily involved in face-specific processes but rather in response selection and preparation. This view, however, does not fully fit our findings regarding the influence of task and stimuli, as all task and stimulus conditions required (motor) response selection and execution. Thus, a more likely interpretation would be that pre-SMA activity reflects attention to emotional signals. Alternatively, the pre-SMA could have a more specific role in processing emotional aspects of faces. In particular, stimulation of this region induces smiling and laughter (Krolak-Salmon et al., 2006) and leads to fewer errors and faster reaction times when recognizing emotional expressions in faces (Balconi & Bortolotti, 2013). Finally, disruption of the pre-SMA has also been shown to impair the recognition of happy facial expressions (Rochas et al., 2013). Thus, in face processing the pre-SMA seems to be involved in both the execution and the recognition of emotional expressions. Therefore, it has been suggested that pre-SMA involvement during emotional face processing reflects mirror neuron responses (Kircher et al., 2013; Rochas et al., 2013). In line with this view, Kircher et al. (2013) showed that both the observation of happy faces and the execution of a happy facial expression led to activation of the pre-SMA, while the observation and execution of neutral or non-valenced expressions did not involve this region.

We would thus suggest that pre-SMA involvement reflects emotion-related processes that help in understanding the emotions of others.

4.3. Limitations

It has to be acknowledged that, for the influence of stimuli, we calculated a meta-analysis across all emotions, because after the exclusion of region-of-interest studies not enough experiments were left to differentiate between individual emotions. In this context, another limitation of our study is that the (emotional) face processing literature shows a strong bias toward the use of fearful face stimuli. Thus, the results of our meta-analysis across studies of emotional stimuli may be driven by fearful faces.

With regard to the analyses of the influence of task instructions, it has to be noted that the results are possibly confounded by task difficulty. We can, however, largely rule out this possibility, because the mean accuracies reported in the experiments of emotion evaluation (mean accuracy = 93.7, SD = 6.3; accuracies not reported for 5 experiments) and gender evaluation (mean accuracy = 96.1, SD = 4.2; accuracies not reported for 4 experiments) do not differ significantly (t(32) = 1.24, p = 0.22).
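The difficulty check above rests on a two-sample comparison of mean accuracies. The sketch below assumes a Student's t-test with pooled variance, which is consistent with the reported 32 degrees of freedom (that would correspond to 34 experiments with reported accuracies across both task conditions); the function name is hypothetical. Because the per-condition numbers of experiments are not given in this section, the example uses made-up textbook values rather than recomputing the reported statistic.

```python
import math


def pooled_two_sample_t(mean_a: float, sd_a: float, n_a: int,
                        mean_b: float, sd_b: float, n_b: int) -> tuple[float, int]:
    """Student's two-sample t statistic from summary statistics (hypothetical helper).

    Assumes equal population variances, so the two group variances are pooled.
    Returns (t, df) with df = n_a + n_b - 2.
    """
    if n_a < 2 or n_b < 2:
        raise ValueError("each group needs at least two observations")
    df = n_a + n_b - 2
    # Pooled variance: each group's variance weighted by its degrees of freedom.
    pooled_var = ((n_a - 1) * sd_a ** 2 + (n_b - 1) * sd_b ** 2) / df
    # Standard error of the difference between the two group means.
    se = math.sqrt(pooled_var * (1.0 / n_a + 1.0 / n_b))
    return (mean_a - mean_b) / se, df


# Textbook check with made-up values (not the accuracies reported above).
t, df = pooled_two_sample_t(10.0, 2.0, 5, 12.0, 2.0, 5)
print(f"t({df}) = {t:.2f}")  # prints "t(8) = -1.58"
```

The p value would then follow from the t distribution with `df` degrees of freedom (for example via a statistics library); that step is omitted to keep the sketch dependency-free.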

In ALE, clusters of convergence can extend across different anatomical regions, especially when these regions are spatially close. In our study, this merging is particularly evident in the main effect (i.e., the analysis across all experiments), where a large cluster encompassing right IOG, mid and posterior FFG as well as pSTS/MTG was found. However, by combining individual analyses of different conditions (gender evaluation, emotion evaluation, emotional stimuli, neutral stimuli) and specific conjunctions, we were able to reveal subclusters.
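The merging of nearby regions follows from how ALE combines experiments. In the standard formulation (Eickhoff et al., 2009, 2012), each experiment i contributes a modeled activation map MA_i, obtained by smoothing its reported foci with a Gaussian kernel, and these maps are combined voxel-wise as a probabilistic union:

$$
\mathrm{ALE}(v) = 1 - \prod_{i=1}^{N} \bigl(1 - \mathrm{MA}_i(v)\bigr)
$$

where v is a voxel and N the number of experiments. Because each term spreads probability around the reported foci, foci from different experiments in adjacent structures (e.g., right IOG and posterior FFG) jointly raise ALE values in the voxels between them, so that a single supra-threshold cluster can span several anatomical regions.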

It has recently been shown that stereotactic coordinates are poor predictors of functional regions in the individual brain (Weiner et al., 2014). Thus, as the current study calculates meta-analyses across coordinates reported in different face-processing experiments, the coordinates resulting from our meta-analyses might not correspond to the functional regions found in any individual subject. In this context, however, it should be noted that the aim of a meta-analysis is not to predict region locations in individual subjects but rather to delineate convergent activation of face-preferential regions in standard space. As our results summarize findings across a large body of published face-processing studies, they should be good predictors of functional regions at the group level and may thus provide functional ROIs of face-preferential regions for fMRI studies investigating group effects.

5. Summary and Conclusion

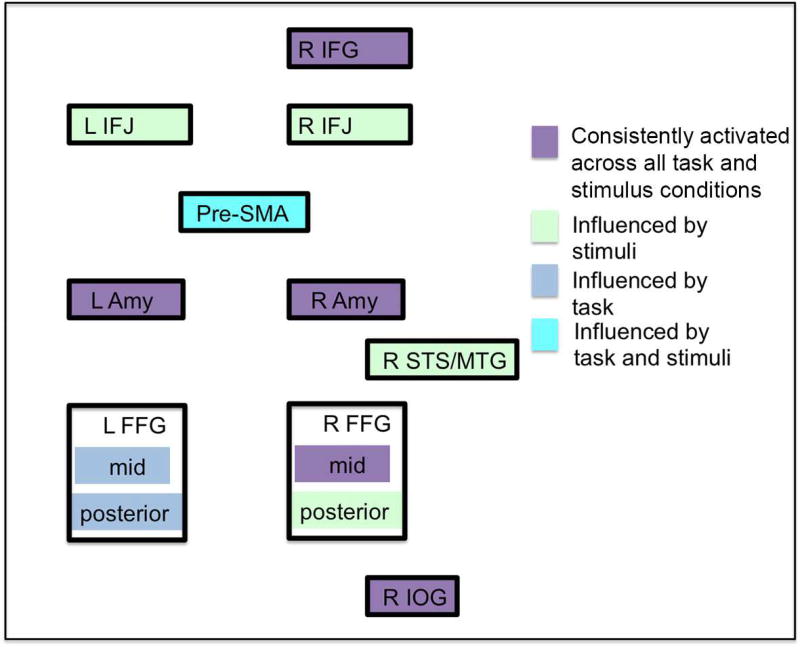

Our results provide evidence for a general face-processing network consisting of bilateral amygdala, FFG, pSTS/MTG, IFJ/IFG, IOG, left anterior insula and pre-SMA. Among these regions, bilateral amygdala, right IOG, right mid-FFG and right IFG were found in all individual analyses and are thus always involved when a face is presented, regardless of the displayed emotion and the task instructions. Importantly, the separate analyses additionally showed that convergence within the face-processing network differs depending on task instructions and the stimuli processed (figure 5).

Figure 5.

Summary of the findings illustrating regions found in all individual meta-analyses (purple), influenced by stimuli (light blue), influenced by task (blue) and influenced by both stimuli and task (light blue). R: right; L: left; IFG: inferior frontal gyrus; IFJ: inferior frontal junction; pre-SMA: pre-supplementary motor area; Amy: amygdala; STS/MTG: superior temporal sulcus/middle temporal gyrus; FFG: fusiform gyrus; IOG: inferior occipital gyrus.

Thus, in addition to the classification of Haxby et al. (2000, 2002), which proposes a core system for face processing and an extended system that extracts the meaning of a face, we would argue for a classification based on the consistency of involvement across tasks and stimuli: on the one hand, there is a system consisting of right mid-FFG, IOG, IFG and bilateral amygdala that is always recruited regardless of task and stimuli; on the other hand, the involvement of right posterior FFG, left FFG, right STS, bilateral IFJ and pre-SMA depends on the specific task performed and the type of stimuli used, and should always be considered in that light.

Supplementary Material

Acknowledgments

This study was supported by the National Institute of Mental Health (R01-MH074457), the Helmholtz Portfolio Theme “Supercomputing and Modelling for the Human Brain” and the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No. 720270 (HBP SGA1).

Footnotes

Publisher's Disclaimer: This is a PDF file of an unedited manuscript that has been accepted for publication. As a service to our customers we are providing this early version of the manuscript. The manuscript will undergo copyediting, typesetting, and review of the resulting proof before it is published in its final citable form. Please note that during the production process errors may be discovered which could affect the content, and all legal disclaimers that apply to the journal pertain.

Conflict of interest

All authors declare that they have no conflicts of interest.

References

- Adolphs R. Neural systems for recognizing emotion. Curr.Opin.Neurobiol. 2002;12(2):169–177. doi: 10.1016/s0959-4388(02)00301-x. [DOI] [PubMed] [Google Scholar]

- Adolphs R. What does the amygdala contribute to social cognition? Ann N Y Acad Sci. 2010;1191:42–61. doi: 10.1111/j.1749-6632.2010.05445.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Adolphs R, Damasio H, Tranel D, Damasio AR. Cortical systems for the recognition of emotion in facial expressions. J Neurosci. 1996;16(23):7678–7687. doi: 10.1523/JNEUROSCI.16-23-07678.1996. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Amunts K, Kedo O, Kindler M, Pieperhoff P, Mohlberg H, Shah NJ, Zilles K. Cytoarchitectonic mapping of the human amygdala, hippocampal region and entorhinal cortex: intersubject variability and probability maps. Anat.Embryol.(Berl) 2005;210(5–6):343–352. doi: 10.1007/s00429-005-0025-5. [DOI] [PubMed] [Google Scholar]

- Amunts K, Malikovic A, Mohlberg H, Schormann T, Zilles K. Brodmann’s areas 17 and 18 brought into stereotaxic space-where and how variable? Neuroimage. 2000;11(1):66–84. doi: 10.1006/nimg.1999.0516. [DOI] [PubMed] [Google Scholar]

- Amunts K, Schleicher A, Burgel U, Mohlberg H, Uylings HB, Zilles K. Broca’s region revisited: cytoarchitecture and intersubject variability. [Comparative Study Research Support, Non-U.S. Gov’t] J Comp Neurol. 1999;412(2):319–341. doi: 10.1002/(sici)1096-9861(19990920)412:2<319::aid-cne10>3.0.co;2-7. [DOI] [PubMed] [Google Scholar]

- Atkinson AP, Adolphs R. The neuropsychology of face perception: beyond simple dissociations and functional selectivity. Philos Trans R Soc Lond B Biol Sci. 2011;366(1571):1726–1738. doi: 10.1098/rstb.2010.0349. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Balconi M, Bortolotti A. Conscious and unconscious face recognition is improved by high-frequency rTMS on pre-motor cortex. Conscious Cogn. 2013;22(3):771–778. doi: 10.1016/j.concog.2013.04.013. [DOI] [PubMed] [Google Scholar]

- Barton JJ, Press DZ, Keenan JP, O’Connor M. Lesions of the fusiform face area impair perception of facial configuration in prosopagnosia. Neurology. 2002;58(1):71–78. doi: 10.1212/wnl.58.1.71. [DOI] [PubMed] [Google Scholar]

- Behrens TE, Johansen-Berg H, Woolrich MW, Smith SM, Wheeler-Kingshott CA, Boulby PA, Matthews PM. Non-invasive mapping of connections between human thalamus and cortex using diffusion imaging. Nat Neurosci. 2003;6(7):750–757. doi: 10.1038/nn1075. [DOI] [PubMed] [Google Scholar]

- Benuzzi F, Pugnaghi M, Meletti S, Lui F, Serafini M, Baraldi P, Nichelli P. Processing the socially relevant parts of faces. Brain Res Bull. 2007;74(5):344–356. doi: 10.1016/j.brainresbull.2007.07.010. [DOI] [PubMed] [Google Scholar]

- Binder JR, Desai RH, Graves WW, Conant LL. Where is the semantic system? A critical review and meta-analysis of 120 functional neuroimaging studies. [Meta-Analysis Research Support, N.I.H., Extramural Review] Cereb Cortex. 2009;19(12):2767–2796. doi: 10.1093/cercor/bhp055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bouvier SE, Engel SA. Behavioral deficits and cortical damage loci in cerebral achromatopsia. Cereb Cortex. 2006;16(2):183–191. doi: 10.1093/cercor/bhi096. [DOI] [PubMed] [Google Scholar]

- Brass M, Derrfuss J, Forstmann B, von Cramon DY. The role of the inferior frontal junction area in cognitive control. Trends in Cognitive Sciences. 2005;9(7):314–316. doi: 10.1016/J.Tics.2005.05.001. [DOI] [PubMed] [Google Scholar]

- Bruce V, Young A. Understanding face recognition. Br J Psychol. 1986;77(Pt 3):305–327. doi: 10.1111/j.2044-8295.1986.tb02199.x. [DOI] [PubMed] [Google Scholar]

- Bukowski H, Dricot L, Hanseeuw B, Rossion B. Cerebral lateralization of face-sensitive areas in left-handers: only the FFA does not get it right. Cortex. 2013;49(9):2583–2589. doi: 10.1016/j.cortex.2013.05.002. [DOI] [PubMed] [Google Scholar]

- Bzdok D, Langner R, Schilbach L, Jakobs O, Roski C, Caspers S, Eickhoff SB. Characterization of the temporo-parietal junction by combining data-driven parcellation, complementary connectivity analyses, and functional decoding. Neuroimage. 2013;81:381–392. doi: 10.1016/j.neuroimage.2013.05.046. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bzdok D, Schilbach L, Vogeley K, Schneider K, Laird AR, Langner R, Eickhoff SB. Parsing the neural correlates of moral cognition: ALE meta-analysis on morality, theory of mind, and empathy. Brain Struct Funct. 2012;217(4):783–796. doi: 10.1007/s00429-012-0380-y. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Caspers J, Zilles K, Eickhoff SB, Schleicher A, Mohlberg H, Amunts K. Cytoarchitectonical analysis and probabilistic mapping of two extrastriate areas of the human posterior fusiform gyrus. Brain Struct Funct. 2013;218(2):511–526. doi: 10.1007/s00429-012-0411-8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Caspers S, Zilles K, Laird AR, Eickhoff SB. ALE meta-analysis of action observation and imitation in the human brain. [Meta-Analysis Research Support, N.I.H., Extramural Research Support, Non-U.S. Gov’t] Neuroimage. 2010;50(3):1148–1167. doi: 10.1016/j.neuroimage.2009.12.112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Chase HW, Kumar P, Eickhoff SB, Dombrovski AY. Reinforcement learning models and their neural correlates: An activation likelihood estimation meta-analysis. Cogn Affect Behav Neurosci. 2015 doi: 10.3758/s13415-015-0338-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cieslik EC, Müller VI, Eickhoff CR, Langner R, Eickhoff SB. Three key regions for supervisory attentional control: evidence from neuroimaging meta-analyses. Neurosci Biobehav Rev. 2015;48:22–34. doi: 10.1016/j.neubiorev.2014.11.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Delvecchio G, Sugranyes G, Frangou S. Evidence of diagnostic specificity in the neural correlates of facial affect processing in bipolar disorder and schizophrenia: a meta-analysis of functional imaging studies. Psychol Med. 2013;43(3):553–569. doi: 10.1017/S0033291712001432. [DOI] [PubMed] [Google Scholar]

- Diedrichsen J, Balsters JH, Flavell J, Cussans E, Ramnani N. A probabilistic MR atlas of the human cerebellum. Neuroimage. 2009;46(1):39–46. doi: 10.1016/j.neuroimage.2009.01.045.

- Duchaine B, Yovel G. A revised neural framework of face processing. Annu Rev Vis Sci. 2015;1:393–416. doi: 10.1146/annurev-vision-082114-035518.

- Dzhelyova MP, Ellison A, Atkinson AP. Event-related repetitive TMS reveals distinct, critical roles for right OFA and bilateral posterior STS in judging the sex and trustworthiness of faces. J Cogn Neurosci. 2011;23(10):2782–2796. doi: 10.1162/jocn.2011.21604.

- Eickhoff SB, Bzdok D, Laird AR, Kurth F, Fox PT. Activation likelihood estimation meta-analysis revisited. Neuroimage. 2012;59(3):2349–2361. doi: 10.1016/j.neuroimage.2011.09.017.

- Eickhoff SB, Laird AR, Grefkes C, Wang LE, Zilles K, Fox PT. Coordinate-based activation likelihood estimation meta-analysis of neuroimaging data: a random-effects approach based on empirical estimates of spatial uncertainty. Hum Brain Mapp. 2009;30(9):2907–2926. doi: 10.1002/hbm.20718.

- Eickhoff SB, Nichols TE, Laird AR, Hoffstaedter F, Amunts K, Fox PT, Eickhoff CR. Behavior, sensitivity, and power of activation likelihood estimation characterized by massive empirical simulation. Neuroimage. 2016 doi: 10.1016/j.neuroimage.2016.04.072.

- Eickhoff SB, Paus T, Caspers S, Grosbras MH, Evans AC, Zilles K, Amunts K. Assignment of functional activations to probabilistic cytoarchitectonic areas revisited. Neuroimage. 2007;36(3):511–521. doi: 10.1016/j.neuroimage.2007.03.060.

- Eickhoff SB, Stephan KE, Mohlberg H, Grefkes C, Fink GR, Amunts K, Zilles K. A new SPM toolbox for combining probabilistic cytoarchitectonic maps and functional imaging data. Neuroimage. 2005;25(4):1325–1335. doi: 10.1016/j.neuroimage.2004.12.034.

- Engell AD, Haxby JV. Facial expression and gaze-direction in human superior temporal sulcus. Neuropsychologia. 2007;45(14):3234–3241. doi: 10.1016/j.neuropsychologia.2007.06.022.

- Ewbank MP, Barnard PJ, Croucher CJ, Ramponi C, Calder AJ. The amygdala response to images with impact. Soc Cogn Affect Neurosci. 2009;4(2):127–133. doi: 10.1093/scan/nsn048.

- Fairhall SL, Ishai A. Effective connectivity within the distributed cortical network for face perception. Cereb Cortex. 2007;17(10):2400–2406. doi: 10.1093/cercor/bhl148.

- Fox CJ, Iaria G, Barton JJ. Defining the face processing network: optimization of the functional localizer in fMRI. Hum Brain Mapp. 2009;30(5):1637–1651. doi: 10.1002/hbm.20630.

- Fox CJ, Moon SY, Iaria G, Barton JJ. The correlates of subjective perception of identity and expression in the face network: an fMRI adaptation study. Neuroimage. 2009;44(2):569–580. doi: 10.1016/j.neuroimage.2008.09.011.

- Frost MA, Goebel R. Measuring structural-functional correspondence: spatial variability of specialised brain regions after macro-anatomical alignment. Neuroimage. 2012;59(2):1369–1381. doi: 10.1016/j.neuroimage.2011.08.035.

- Fusar-Poli P, Placentino A, Carletti F, Landi P, Allen P, Surguladze S, Politi P. Functional atlas of emotional faces processing: a voxel-based meta-analysis of 105 functional magnetic resonance imaging studies. J Psychiatry Neurosci. 2009;34(6):418–432.

- Ganel T, Valyear KF, Goshen-Gottstein Y, Goodale MA. The involvement of the “fusiform face area” in processing facial expression. Neuropsychologia. 2005;43(11):1645–1654. doi: 10.1016/j.neuropsychologia.2005.01.012.

- Gauthier I, Tarr MJ, Moylan J, Skudlarski P, Gore JC, Anderson AW. The fusiform “face area” is part of a network that processes faces at the individual level. J Cogn Neurosci. 2000;12(3):495–504. doi: 10.1162/089892900562165.

- Geyer S, Ledberg A, Schleicher A, Kinomura S, Schormann T, Burgel U, Roland PE. Two different areas within the primary motor cortex of man. Nature. 1996;382(6594):805–807. doi: 10.1038/382805a0.

- Geyer S, Schleicher A, Zilles K. Areas 3a, 3b, and 1 of human primary somatosensory cortex. Neuroimage. 1999;10(1):63–83. doi: 10.1006/nimg.1999.0440.

- Grefkes C, Geyer S, Schormann T, Roland P, Zilles K. Human somatosensory area 2: observer-independent cytoarchitectonic mapping, interindividual variability, and population map. Neuroimage. 2001;14(3):617–631. doi: 10.1006/nimg.2001.0858.

- Grosbras MH, Paus T. Brain networks involved in viewing angry hands or faces. Cereb Cortex. 2006;16(8):1087–1096. doi: 10.1093/cercor/bhj050.

- Gschwind M, Pourtois G, Schwartz S, Van De Ville D, Vuilleumier P. White-matter connectivity between face-responsive regions in the human brain. Cereb Cortex. 2012;22(7):1564–1576. doi: 10.1093/cercor/bhr226.

- Harry B, Williams MA, Davis C, Kim J. Emotional expressions evoke a differential response in the fusiform face area. Front Hum Neurosci. 2013;7:692. doi: 10.3389/fnhum.2013.00692.

- Haxby JV, Hoffman EA, Gobbini MI. The distributed human neural system for face perception. Trends Cogn Sci. 2000;4(6):223–233. doi: 10.1016/s1364-6613(00)01482-0.

- Haxby JV, Hoffman EA, Gobbini MI. Human neural systems for face recognition and social communication. Biol Psychiatry. 2002;51(1):59–67. doi: 10.1016/s0006-3223(01)01330-0.

- Haxby JV, Horwitz B, Ungerleider LG, Maisog JM, Pietrini P, Grady CL. The functional organization of human extrastriate cortex: a PET-rCBF study of selective attention to faces and locations. J Neurosci. 1994;14(11 Pt 1):6336–6353. doi: 10.1523/JNEUROSCI.14-11-06336.1994.

- Hoffman EA, Haxby JV. Distinct representations of eye gaze and identity in the distributed human neural system for face perception. Nat Neurosci. 2000;3(1):80–84. doi: 10.1038/71152.

- Jacobs RH, Renken R, Aleman A, Cornelissen FW. The amygdala, top-down effects, and selective attention to features. Neurosci Biobehav Rev. 2012;36(9):2069–2084. doi: 10.1016/j.neubiorev.2012.05.011.

- Jehna M, Neuper C, Ischebeck A, Loitfelder M, Ropele S, Langkammer C, Enzinger C. The functional correlates of face perception and recognition of emotional facial expressions as evidenced by fMRI. Brain Res. 2011;1393:73–83. doi: 10.1016/j.brainres.2011.04.007.

- Joassin F, Maurage P, Campanella S. The neural network sustaining the crossmodal processing of human gender from faces and voices: an fMRI study. Neuroimage. 2011;54(2):1654–1661. doi: 10.1016/j.neuroimage.2010.08.073.

- Jonas J, Descoins M, Koessler L, Colnat-Coulbois S, Sauvee M, Guye M, Maillard L. Focal electrical intracerebral stimulation of a face-sensitive area causes transient prosopagnosia. Neuroscience. 2012;222:281–288. doi: 10.1016/j.neuroscience.2012.07.021.

- Kanwisher N, Dilks D. The functional organization of the ventral visual pathway in humans. In: Werner JS, Chalupa LM, editors. The New Visual Neurosciences. The MIT Press; 2013.

- Kanwisher N, McDermott J, Chun MM. The fusiform face area: a module in human extrastriate cortex specialized for face perception. J Neurosci. 1997;17(11):4302–4311. doi: 10.1523/JNEUROSCI.17-11-04302.1997.

- Kanwisher N, Yovel G. The fusiform face area: a cortical region specialized for the perception of faces. Philos Trans R Soc Lond B Biol Sci. 2006;361(1476):2109–2128. doi: 10.1098/rstb.2006.1934.

- Kircher T, Pohl A, Krach S, Thimm M, Schulte-Ruther M, Anders S, Mathiak K. Affect-specific activation of shared networks for perception and execution of facial expressions. Soc Cogn Affect Neurosci. 2013;8(4):370–377. doi: 10.1093/scan/nss008.

- Krolak-Salmon P, Henaff MA, Vighetto A, Bauchet F, Bertrand O, Mauguiere F, Isnard J. Experiencing and detecting happiness in humans: the role of the supplementary motor area. Ann Neurol. 2006;59(1):196–199. doi: 10.1002/ana.20706.

- Lancaster JL, Tordesillas-Gutierrez D, Martinez M, Salinas F, Evans A, Zilles K, Fox PT. Bias between MNI and Talairach coordinates analyzed using the ICBM-152 brain template. Hum Brain Mapp. 2007;28(11):1194–1205. doi: 10.1002/hbm.20345.

- Langner R, Eickhoff SB. Sustaining attention to simple tasks: a meta-analytic review of the neural mechanisms of vigilant attention. Psychol Bull. 2013;139(4):870–900. doi: 10.1037/a0030694.

- Leveroni CL, Seidenberg M, Mayer AR, Mead LA, Binder JR, Rao SM. Neural systems underlying the recognition of familiar and newly learned faces. J Neurosci. 2000;20(2):878–886. doi: 10.1523/JNEUROSCI.20-02-00878.2000.

- Liu J, Harris A, Kanwisher N. Perception of face parts and face configurations: an FMRI study. J Cogn Neurosci. 2010;22(1):203–211. doi: 10.1162/jocn.2009.21203.

- Lorenz S, Weiner KS, Caspers J, Mohlberg H, Schleicher A, Bludau S, Amunts K. Two new cytoarchitectonic areas on the human mid-fusiform gyrus. Cereb Cortex. 2015 doi: 10.1093/cercor/bhv225.

- Loven J, Svard J, Ebner NC, Herlitz A, Fischer H. Face gender modulates women’s brain activity during face encoding. Soc Cogn Affect Neurosci. 2014;9(7):1000–1005. doi: 10.1093/scan/nst073.

- Malikovic A, Amunts K, Schleicher A, Mohlberg H, Kujovic M, Palomero-Gallagher N, Zilles K. Cytoarchitecture of the human lateral occipital cortex: mapping of two extrastriate areas hOc4la and hOc4lp. Brain Struct Funct. 2015 doi: 10.1007/s00429-015-1009-8.

- Maurer D, O’Craven KM, Le Grand R, Mondloch CJ, Springer MV, Lewis TL, Grady CL. Neural correlates of processing facial identity based on features versus their spacing. Neuropsychologia. 2007;45(7):1438–1451. doi: 10.1016/j.neuropsychologia.2006.11.016.

- Miller BT, Vytlacil J, Fegen D, Pradhan S, D’Esposito M. The prefrontal cortex modulates category selectivity in human extrastriate cortex. J Cogn Neurosci. 2011;23(1):1–10. doi: 10.1162/jocn.2010.21516.

- Nachev P, Kennard C, Husain M. Functional role of the supplementary and pre-supplementary motor areas. Nat Rev Neurosci. 2008;9(11):856–869. doi: 10.1038/nrn2478.

- Nichols T, Brett M, Andersson J, Wager T, Poline JB. Valid conjunction inference with the minimum statistic. Neuroimage. 2005;25(3):653–660. doi: 10.1016/j.neuroimage.2004.12.005.

- Nummenmaa L, Hyona J, Calvo MG. Eye movement assessment of selective attentional capture by emotional pictures. Emotion. 2006;6(2):257–268. doi: 10.1037/1528-3542.6.2.257.

- Peelen MV, Downing PE. Within-subject reproducibility of category-specific visual activation with functional MRI. Hum Brain Mapp. 2005;25(4):402–408. doi: 10.1002/hbm.20116.

- Pessoa L, Adolphs R. Emotion processing and the amygdala: from a ‘low road’ to ‘many roads’ of evaluating biological significance. Nat Rev Neurosci. 2010;11(11):773–783. doi: 10.1038/nrn2920.

- Pinsk MA, Arcaro M, Weiner KS, Kalkus JF, Inati SJ, Gross CG, Kastner S. Neural representations of faces and body parts in macaque and human cortex: a comparative FMRI study. J Neurophysiol. 2009;101(5):2581–2600. doi: 10.1152/jn.91198.2008.

- Pitcher D, Duchaine B, Walsh V, Yovel G, Kanwisher N. The role of lateral occipital face and object areas in the face inversion effect. Neuropsychologia. 2011;49(12):3448–3453. doi: 10.1016/j.neuropsychologia.2011.08.020.

- Pitcher D, Garrido L, Walsh V, Duchaine BC. Transcranial magnetic stimulation disrupts the perception and embodiment of facial expressions. J Neurosci. 2008;28(36):8929–8933. doi: 10.1523/JNEUROSCI.1450-08.2008.

- Pitcher D, Walsh V, Duchaine B. The role of the occipital face area in the cortical face perception network. Exp Brain Res. 2011;209(4):481–493. doi: 10.1007/s00221-011-2579-1.

- Pitcher D, Walsh V, Yovel G, Duchaine B. TMS evidence for the involvement of the right occipital face area in early face processing. Curr Biol. 2007;17(18):1568–1573. doi: 10.1016/j.cub.2007.07.063.

- Puce A, Allison T, Gore JC, McCarthy G. Face-sensitive regions in human extrastriate cortex studied by functional MRI. J Neurophysiol. 1995;74(3):1192–1199. doi: 10.1152/jn.1995.74.3.1192.