Summary

Visual motion is an ethologically important stimulus throughout the animal kingdom. In primates, motion perception relies on specific higher-order cortical regions. While mouse primary visual cortex (V1) and higher-order visual areas show direction-selective (DS) responses, their role in motion perception remains unknown. Here we tested whether V1 is involved in motion perception in mice. We developed a head-fixed discrimination task in which mice must report their perceived direction of motion from random-dot kinematograms (RDKs). After training, mice made around 90% correct choices for stimuli with high coherence and performed significantly above chance for 16% coherent RDKs. Accuracy increased with both stimulus duration and visual field coverage of the stimulus, suggesting that mice in this task integrate motion information in time and space. Retinal recordings showed that thalamically projecting On-Off DS ganglion cells display DS responses when stimulated with RDKs. Two-photon calcium imaging revealed that neurons in layer (L) 2/3 of V1 display strong DS tuning in response to this stimulus. Thus, RDKs engage motion-sensitive retinal circuits as well as downstream visual cortical areas. Contralateral V1 activity played a key role in this motion direction discrimination task since its reversible inactivation with muscimol led to a significant reduction in performance. Neurometric-psychometric comparisons showed that an ideal observer could solve the task with the information encoded in DS L2/3 neurons. Motion discrimination of RDKs presents a powerful behavioral tool for dissecting the role of retino-forebrain circuits in motion processing.

ETOC BLURB

Using a head-fixed task, Marques et al. show that mice can discriminate the direction of motion of random-dot kinematogram (RDK) stimuli. RDKs elicit direction-selective responses both in On-Off direction-selective ganglion cells (DSGCs) of the retina and in V1 L2/3 neurons. V1 inactivation impairs task performance, showing that this area plays a key role in motion perception.

Introduction

Motion perception is an important feature in animal behavior and, thus, understanding its neural basis has been a major goal of sensory neuroscience. Optic flow signals generated during locomotion provide navigational cues. The detection of externally generated motion also helps animals avoid predators and identify potential prey[1]. Recently, the mouse has emerged as a prominent model organism for studying visual perception[2,3]. Basic circuit mechanisms for visual processing are conserved between mice and other species[4]. In addition, the extensive genetic toolkit and excellent experimental access of the mouse model allows manipulation and recording from defined cell types[5], greatly facilitating the dissection of circuits underlying motion vision.

Motion perception relies on direction-selective (DS) neurons that respond preferentially to image motion in defined directions. DS neurons are found throughout the visual system, including the retina, where direction-selective ganglion cells (DSGCs) relay motion information to several downstream targets such as the superior colliculus, the dorsal lateral geniculate nucleus (dLGN) and the accessory optic system[6]. In primates, motion encoding begins in the retina[7], but also relies on higher cortical areas MT and MST[8,9]. While DS responses have been observed in mouse visual cortex[10–14], the role of these areas in motion perception is unknown.

Unveiling the neural basis of motion perception requires well controlled, quantitative behavioral measurements. Previously, visual acuity in mice has been assessed with reflexive behaviors such as the optokinetic reflex, a behavior involving subcortical structures and not requiring a functional visual cortex [15,16]. Alternatively, motion vision has been probed using operant behavioral methods in freely moving mice[17,18]. However, there are no known motion discrimination tasks in mice with a demonstrated dependence upon primary visual cortex.

Here we developed a head-fixed discrimination task in which mice report their perceived direction of motion after presentation of random-dot kinematograms (RDKs). RDKs provide no positional or form cues for motion and have been extensively used for probing the mechanisms of motion perception and decision making in humans and other primates[8,19–24]. We show that RDKs strongly drove a population of DS V1 neurons and that motion discrimination depended on activity within the visual cortex.

Results

Mice discriminate the motion direction of RDK stimuli

Classically, motion processing in mice has been assayed using the optokinetic reflex, a reflexive eye movement that is mediated by subcortical neural circuits. Our goal was to identify a motion perception task that relied upon activation of DS cells in visual cortex. To probe how well mice perceive motion direction, we developed a head-fixed two-alternative forced-choice (2AFC) behavioral paradigm using RDKs.

We used the following protocol to test mouse perception of motion direction. Mice self-initiated trials by running on a treadmill. RDK stimuli were presented monocularly and mice reported the perceived direction of motion by licking one of two available lickports[25] immediately after stimulus offset. An RDK moving in the nasal direction was associated with a water reward in the left lickport, and an RDK moving in the temporal direction was associated with a water reward in the right lickport (Figure 1A). A correct choice was rewarded with a water drop, and a wrong choice was punished with a time-out period (Figure 1B). Trial difficulty was adjusted with the RDK coherence, defined as the net percentage of the number of dots that moved in the stimulus direction (Figure 1C, STAR Methods).
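The coherence manipulation can be illustrated with a minimal sketch. It assumes that a fraction of dots equal to the coherence moves in the stimulus direction while the remaining dots move in uniformly random directions; the actual dot-update rule is given in STAR Methods and may differ. The function name, dot count and seed are hypothetical.

```python
import random

def assign_dot_directions(n_dots, coherence, signal_direction_deg, rng):
    """Return one motion direction (in degrees) per dot.

    Assumption: a fraction `coherence` of the dots moves in the signal
    direction and the rest move in uniformly random directions.
    """
    n_signal = round(n_dots * coherence)
    directions = [signal_direction_deg] * n_signal
    directions += [rng.uniform(0.0, 360.0) for _ in range(n_dots - n_signal)]
    rng.shuffle(directions)  # signal and noise dots are interleaved
    return directions

rng = random.Random(0)  # hypothetical seed, for reproducibility only
dirs = assign_dot_directions(n_dots=200, coherence=0.4,
                             signal_direction_deg=0.0, rng=rng)
n_coherent = sum(1 for d in dirs if d == 0.0)
print(n_coherent, len(dirs))
```

Lowering `coherence` leaves fewer dots carrying the direction signal, which is how trial difficulty is varied in this sketch.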

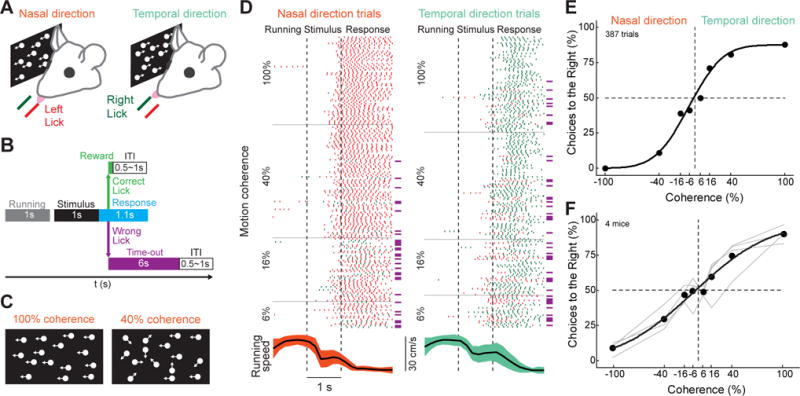

Figure 1. Mice perform a self-initiated head-fixed 2AFC motion discrimination task.

(A) Schematic of the 2AFC motion discrimination task. Mice report the direction of a random-dot kinematogram by either licking the left lickport (nasal moving stimulus) or the right lickport (temporal moving stimulus). Stimulus is presented monocularly to the right eye. (B) Trial structure. To initiate a trial, mice run for 1s on a linear treadmill. The stimulus is then presented for 1s and the decision is determined by the first lick during the response period immediately after the stimulus offset. Correct choices are rewarded with a drop of water and wrong choices are punished with a time-out. (C) Schematic of random-dot kinematograms in the nasal direction with 100% coherence (left) and 40% coherence (right). (D) Example session with trials sorted by side (left, nasal; right, temporal) and coherence (arranged bottom to top from low to high coherences). Each row is a trial. Red ticks, left licks; green ticks, right licks. Purple lines, wrong trials. Bottom, average trial running speed traces. Black lines, mean; shaded areas, SD. Dashed lines, stimulus onset and offset. (E) Psychometric curve of session in (D). Nasal moving stimuli are represented with negative coherences. (F) Population psychometric curve (circles, mean; black line, fit; gray lines, individual animals). See also Figures S1 and S2.

Mice learned the task in less than two weeks of training (sessions to reach 65% accuracy: 8 ± 3.8 for 100% coherence stimuli, 12.4 ± 4.1 for 40% coherence stimuli; sessions to reach 80% accuracy: 10.6 ± 4.1 for 100% coherent stimuli, n = 7 mice). Trained mice performed hundreds of trials per session (Figure 1D), allowing us to measure psychometric performance in single sessions (Figure 1E). Mice performed the task with high levels of accuracy for 100% coherence RDKs (Figures 1F and 2A, accuracy, 91 ± 3% correct, n = 4 mice, 3 sessions each) and performance was affected by stimulus coherence (Figure 2A, p = 3.48 × 10⁻⁸, repeated measurements ANOVA followed by Tukey-Kramer honest significant difference). Mice discriminated above chance for 16% coherence stimuli (accuracy, 56 ± 6% correct, p = 0.025), but not for 6% coherence stimuli (accuracy, 50 ± 5% correct, p = 1). While some individual animals performed better when presented with stimuli moving in one of the two directions, the performance of the population of trained mice was unbiased (Figures 1E–F and S1). Although mice were allowed to lick during stimulus presentation with no effect on trial outcome, mice withheld licking for most of this epoch (Figures 1D and S2). They also rarely changed licking sides but did so more often for stimuli with lower coherences (Figure S2).
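As an illustration of how a single-session psychometric curve can be tabulated, the sketch below computes the fraction of temporal (right-lickport) choices for each signed coherence, with nasal stimuli given negative coherences as in Figure 1E. The trial list is made up for illustration and is not data from the study; the function name is hypothetical.

```python
from collections import defaultdict

def psychometric_curve(trials):
    """Fraction of 'temporal' choices per signed coherence.

    `trials` is a list of (signed_coherence, choice) pairs, where nasal
    stimuli carry negative coherence and `choice` is "nasal" or "temporal".
    """
    counts = defaultdict(lambda: [0, 0])  # coherence -> [temporal choices, total]
    for signed_coherence, choice in trials:
        counts[signed_coherence][1] += 1
        if choice == "temporal":
            counts[signed_coherence][0] += 1
    return {c: k / n for c, (k, n) in sorted(counts.items())}

# Made-up trials for illustration only.
trials = (
    [(-100, "nasal")] * 9 + [(-100, "temporal")] * 1
    + [(100, "temporal")] * 9 + [(100, "nasal")] * 1
)
curve = psychometric_curve(trials)
print(curve)
```

A sigmoidal function can then be fitted to these points to summarize performance across coherences.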

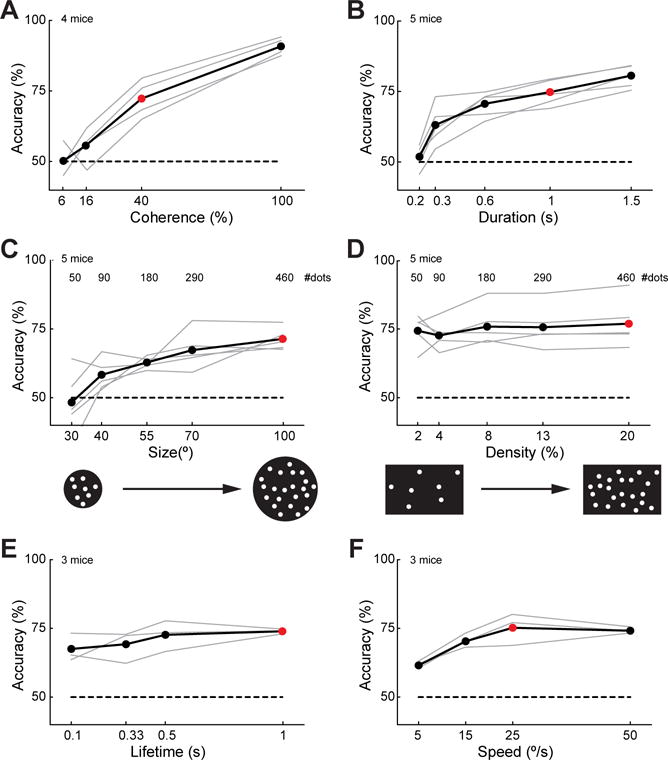

Figure 2. Mice integrate motion information in time and space.

(A) Performance in the motion discrimination task as a function of stimulus coherence (same sessions as in Figure 1F). (B–F) Same as in (A) but for stimulus duration, stimulus aperture size, dot density, dot lifetime and dot speed, respectively. Circles and black line, mean; gray lines, individual animals. Red circles represent the standard parameter values. For (B–C), number of dots present in the monitor is shown over the curve and cartoons of the stimuli are shown under the plot. See also Figures S3 and S4.

We studied how other stimulus parameters affected task performance. We fixed the motion coherence at 40% (Figure 2A, 72 ± 7% correct, n = 4 mice, 3 sessions each) and changed one parameter at a time. First, we tested how long mice needed to observe the stimulus to accurately perceive the direction of motion. Task accuracy monotonically increased with the duration of the stimulus (Figure 2B, p = 1.38 × 10⁻⁸, repeated measurements ANOVA followed by Tukey-Kramer honest significant difference, n = 5 mice, 3 sessions each). Mice could discriminate stimuli lasting 300ms (accuracy, 63 ± 7% correct, p = 4.0 × 10⁻³) but were at chance for 200ms stimuli (52 ± 4%, p = 0.92). Although mice had been trained with 1s stimuli, accuracy trended upward when they were given more time to accumulate evidence, though the difference did not reach significance (75 ± 5% correct for 1s, 80 ± 4% correct for 1.5s, p = 0.28).

We next sought to determine if mice integrated moving dots over a large visual area to determine the direction of motion from RDK stimuli. We varied the size of the stimulus in the visual field by constraining the RDK to a circular aperture with different diameters and saw a decrease in motion discrimination as the size of stimuli became smaller (Figure 2C, p = 2.19 × 10⁻⁸, repeated measurements ANOVA followed by Tukey-Kramer honest significant difference, n = 5 mice, 3 sessions each). Mice could discriminate RDKs with 40° diameter apertures (58 ± 6%, p = 0.06) but were at chance for stimuli only 30° in diameter (48 ± 11%, p = 1.0). The increase in performance with stimulus size could be due to an increase in the visual space covered by the motion signal or to an increase in the number of dots moving coherently. The first case would indicate that global motion perception requires integrating motion energy from a large visual area, while the second case would indicate that it requires integrating a large number of moving dots, independently of their distribution in visual space. To disambiguate between these two hypotheses, we varied the number of dots while keeping the stimulus aperture constant by manipulating the dot density (Figure 2D). We chose density values that matched the same number of dots as in the previous condition (number of dots in stimuli ranged from 50 to 460). Motion discrimination remained unchanged for all values of dot densities sampled (p = 0.34, repeated measurements ANOVA, n = 5 mice, 3 sessions each). Thus, motion discrimination in this task relies on circuits integrating motion information over a large area of the visual field.

Do animals discriminate motion direction by integrating motion at large, or by tracking individual moving dots within the stimulus? To answer this, we varied dot lifetimes, or the amount of time that each individual dot stays in a trajectory before being redrawn in a random new position, between 0.1s and 1s (Figure 2E). Accuracy was mostly unaffected, but there was a small decrease in performance for very short dot lifetimes (p = 0.017, repeated measurements ANOVA, n = 3 mice, 3 sessions each; from 74% ± 1% for 1s lifetime to 67% ± 5% for 0.1s lifetime, p = 0.15). Shorter lifetimes reduce the number of dots moving coherently on any given frame (from 40% coherence for 1s lifetime to 37% coherence for 0.1s lifetime, STAR Methods), and thus a small decrease in performance is expected from the decrease in coherence (Figure 2A). This suggests that mice do not follow individual dots to infer motion direction.

Global motion stimuli using gratings elicit optokinetic responses, a reflex depending on retinal projections to brainstem structures[6,16,26]. The magnitude of the optokinetic reflex is highly dependent on stimulus speed, its gain falling off rapidly for speeds exceeding 5°/s[15]. We tested if motion perception in our task showed a similar dependency. Changing the speed of the dots between 5°/s and 50°/s had a significant effect on task accuracy (Figure 2F, p = 1.25 × 10⁻⁵, repeated measurements ANOVA followed by Tukey-Kramer honest significant difference, n = 3 mice, 3 sessions each). Motion perception was the same for 50°/s as for 25°/s (75% ± 6% correct for 25°/s, 74% ± 1% correct for 50°/s, p = 0.96), but was reduced for lower speeds, particularly at 5°/s (61% ± 1%, p = 7.28 × 10⁻⁴ with 25°/s). Thus, motion discrimination in this task depends on the speed of the stimuli but, unlike the optokinetic reflex, accuracy is greater at high speeds (25°/s or faster), suggesting that the two behaviors engage different motion circuits.

Interestingly, eyes remained mostly stable during stimulus presentation as animals performed the task. Saccades during presentation of RDKs were rare (~5% of trials) and eyes did not track the dots’ speed (Figure S3). There were small horizontal eye movements when viewing temporal-nasal moving RDKs that resulted in displacements of less than 2° by the end of 1.5s of visual stimulation (Figure S3; speed of eye movements: 0.99 ± 0.44°/s for 100% coherence nasal direction stimuli; 0.35 ± 0.64°/s for 100% coherence temporal direction stimuli). The magnitude of the horizontal eye movements was also small in naïve mice (Figure S3, speed of eye movements: 1.77 ± 0.33°/s for 100% coherence nasal direction stimuli; 0.57 ± 0.12°/s for 100% coherence temporal direction stimuli), suggesting that the parameters of the RDKs used in the task result in a low-gain OKR regardless of training.

As mice are running on a treadmill and dots move horizontally on the monocular visual field, nasal-to-temporal directed stimuli are more congruent with expected optic flow than temporal-to-nasal motion. To test if the task relied on circuits comparing expected and actual optic flow [27], we presented horizontal moving stimuli on a monitor located in front of mice after training them on a laterally placed monitor. In this configuration, both RDK stimuli are equally incongruent with locomotion, moving either left or right in front of the mice. Mice were able to generalize and discriminate the direction of motion from the first session (Figure S4), suggesting that the task does not depend on motion expectation circuits.

V1 is required for motion discrimination

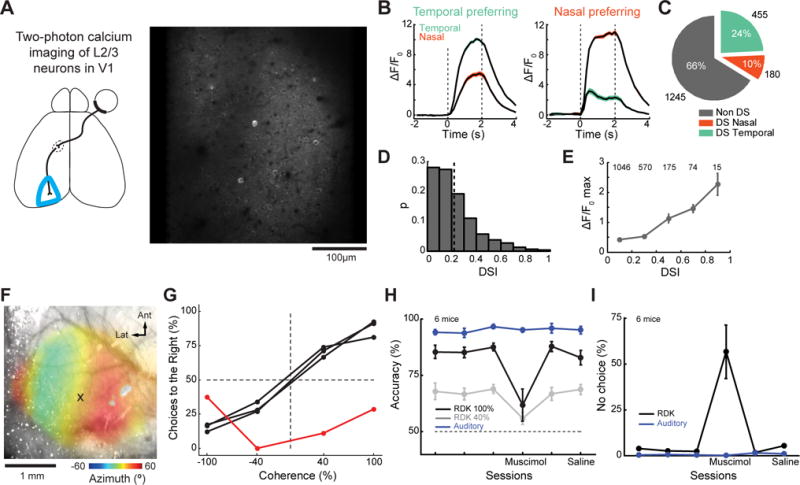

To determine if perception of motion direction of RDKs is dependent on activity in V1, we first tested whether RDKs drive DS responses in V1. Using a two-photon (2P) microscope we recorded neural responses to horizontally-moving RDKs in V1 L2/3 neurons expressing GCaMP6s [28,29], contralateral to the stimulated eye in anesthetized mice (Figure 3A). A subset of L2/3 neurons showed responses that were highly selective for direction of motion (Figure 3B). DS neurons accounted for over one third of all visually driven neurons (Figure 3C), the majority of them showing larger responses to stimuli moving in the temporal direction (455 temporal-DS out of 635 DS, p = 1.1 × 10⁻²⁸, binomial test). Although most of the responsive neurons were not selective for motion direction (Figure 3D; population direction selectivity index, DSI = 0.22 ± 0.17), DS neurons showed larger amplitude responses than other visually responsive neurons and their magnitudes were highly correlated with DSI (Figure 3E; p = 5.6 × 10⁻³⁶, ANOVA). To check if this was an artifact of probing DS only with horizontally-moving stimuli, we also measured the preferred direction of V1 neurons using RDKs moving in 8 directions (Figure S5). Neurons tuned to the temporal and ventro-temporal directions were overrepresented in the population, consistent with observations of their predominance when sampling only the nasal and temporal directions (Figure S5). Strong responses were again correlated with high selectivity, both when analyzing the entire population of DS neurons and when restricting analysis to neurons tuned for horizontal motion (Figure S5). Thus, RDK stimuli strongly drive DS neurons in mouse V1.
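For readers unfamiliar with the direction selectivity index, the sketch below uses the common definition DSI = (R_pref − R_null) / (R_pref + R_null) for a neuron probed with two opposite directions. This is an assumption: the exact definition and the criterion for classifying a neuron as DS are given in STAR Methods and may differ. The function names and response values are hypothetical.

```python
def direction_selectivity_index(resp_pref, resp_null):
    """DSI = (R_pref - R_null) / (R_pref + R_null), assuming non-negative responses."""
    total = resp_pref + resp_null
    if total <= 0:
        return 0.0  # no measurable response in either direction
    return (resp_pref - resp_null) / total

def dsi_from_horizontal(resp_nasal, resp_temporal):
    """Return (DSI, preferred direction) for a neuron probed with two directions."""
    if resp_temporal >= resp_nasal:
        return direction_selectivity_index(resp_temporal, resp_nasal), "temporal"
    return direction_selectivity_index(resp_nasal, resp_temporal), "nasal"

# Hypothetical mean response amplitudes for one neuron.
dsi, pref = dsi_from_horizontal(resp_nasal=0.2, resp_temporal=0.6)
print(round(dsi, 3), pref)
```

Under this definition a DSI of 0 means equal responses to both directions and a DSI of 1 means no response to the null direction.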

Figure 3. RDK motion direction discrimination requires activity in contralateral V1.

(A) Two-photon field of view of a single plane of L2/3 V1 in a Thy1-GCaMP6s mouse. (B) Mean fluorescent responses to RDKs for two example DS L2/3 V1 neurons (left, temporal preferring; right, nasal preferring). Black line, mean. Shaded area, SEM. Color corresponds to the motion direction (orange, nasal; green, temporal). Dashed lines, stimulus onset and offset. (C) Relative abundance of non-DS, nasal or temporal preferring DS neurons. (D) Distribution of DSI for visually responsive L2/3 V1 neurons. Dashed line, mean of the distribution (0.22). (E) Population mean ΔF/F0 max as a function of DSI. Neurons were binned in DSI intervals of 0.2. Circle, mean; errorbars, SEM. Numbers on top correspond to the number of neurons in each bin. (F) Intrinsic signal imaging through the intact skull used to identify V1. Cross marks the muscimol injection site. (G) Black, psychometric curves for three consecutive sessions for the animal in (F); red, psychometric curve obtained after muscimol injection in contralateral V1. (H) Performance of the animals during the baseline sessions, muscimol injection session, recovery session and saline injection session. Black, RDK 100% coherence; gray, RDK 40% coherence; blue, auditory task. N=6 mice in RDK and auditory stimulus. (I) Fraction of trials in which animals did not report any choice. Circles, mean; errorbars, SEM. See also Figure S5.

We next sought to determine whether activity in V1 is necessary for perception of motion direction of RDKs. We trained a group of animals in the motion discrimination task with the 100% and the 40% coherence stimuli until they reached a stable performance level. Using intrinsic signal imaging we identified V1 in the left hemisphere (Figure 3F) and injected it with the GABAA receptor agonist muscimol prior to behavior testing. Pharmacological inactivation of contralateral V1 impaired motion discrimination, disrupting animal performance (Figure 3G). The number of trials in which mice did not lick any lickport increased (Figure 3I, 2% to 57% no choice trials, p = 0.014, paired t-test). In trials with responses, accuracy decreased for both coherences (Figure 3H, 40% coherence: 69% to 56% accuracy, p = 0.016, paired t-test; 100% coherence: 88% to 62% accuracy, p = 0.032, paired t-test). These effects were not observed in control saline injections. To control for the possibility that unilateral muscimol inactivation affected motor or decision-making areas, resulting in a non-specific degradation of behavioral performance, we performed similar injections of muscimol in V1 in mice performing a 2AFC auditory task (STAR Methods, Figure 3H–I). Muscimol inactivation of V1 did not affect performance in the auditory task, indicating that this manipulation did not result in non-specific motor impairments. We conclude that motion perception in this task depends on a functional contralateral V1.

Random-dot kinematograms robustly activate DS neurons in the retina

Do DS responses to RDKs first emerge in V1? On-Off DS ganglion cells in the retina (DSGCs) have been reported to form a di-synaptic circuit conveying direction-selective information to the superficial layers of V1 via neurons in the dLGN[30]. Recent evidence also suggests that some, but not all DS responses in superficial layers of V1 are inherited from the retina[31].

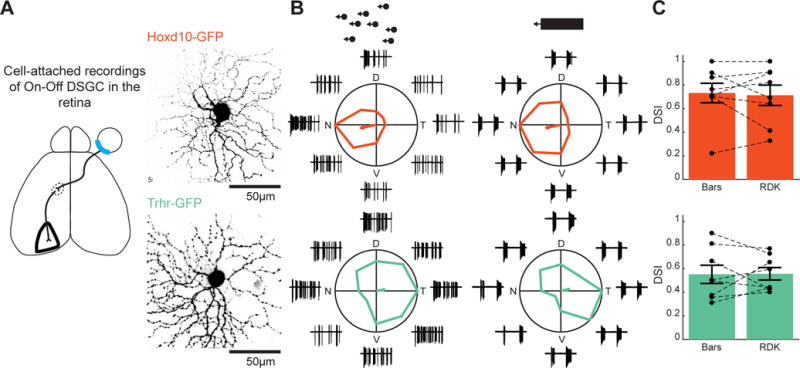

To determine whether RDKs activated DSGCs in the retina, we performed cell-attached recordings of two-photon targeted On-Off DSGC populations in retinal explants from two transgenic mouse lines: the nasally preferring Hoxd10-GFP[32] (Figure 4A–B, top), and the temporally preferring Trhr-GFP[33] (Figure 4A–B, bottom). For each DSGC, we compared directional tuning in response to drifting bars and 100% coherence RDKs. Directional tuning was quantified by two measures: DSI (Figure 4C) and normalized vector sum length (data not shown). We found no significant difference in the directional tuning of DSGCs in response to drifting bar and RDK stimuli (DSI_Hoxd10[Bars] = 0.73 ± 0.23, DSI_Hoxd10[Dots] = 0.71 ± 0.24, n = 8 cells, paired t-test, p = 0.75; Vec_Hoxd10[Bars] = 0.43 ± 0.18, Vec_Hoxd10[Dots] = 0.41 ± 0.26, paired t-test, p = 0.86; DSI_Trhr[Bars] = 0.55 ± 0.22, DSI_Trhr[Dots] = 0.56 ± 0.15, n = 8 cells, paired t-test, p = 0.94; Vec_Trhr[Bars] = 0.31 ± 0.14, Vec_Trhr[Dots] = 0.28 ± 0.12, paired t-test, p = 0.46).
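The normalized vector sum length mentioned above can be sketched as follows, assuming the standard definition: each response is treated as a vector pointing in its stimulus direction, and the length of the summed vector is divided by the summed response magnitudes. The set of eight directions and the response values are hypothetical, and the authors' exact computation may differ.

```python
import cmath
import math

def normalized_vector_sum_length(directions_deg, responses):
    """Length of the response-weighted vector sum, divided by the total response."""
    total = sum(responses)
    if total <= 0:
        return 0.0
    vec = sum(r * cmath.exp(1j * math.radians(d))
              for d, r in zip(directions_deg, responses))
    return abs(vec) / total

directions = [0, 45, 90, 135, 180, 225, 270, 315]  # hypothetical stimulus set
flat = normalized_vector_sum_length(directions, [1.0] * 8)
sharp = normalized_vector_sum_length(directions, [5.0, 0, 0, 0, 0, 0, 0, 0])
print(flat, sharp)
```

A cell responding equally to all directions gives a value near 0, and a cell responding to a single direction gives 1, so the measure complements the two-direction DSI by using the full tuning curve.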

Figure 4. RDKs drive direction-selective responses in the retina.

(A) Top, two-photon image of an On-Off DSGC labeled under the Hoxd10 promoter. Bottom, same but for the Trhr promoter. (B) Top, example tuning curves of a Hoxd10-GFP On-Off DSGC to RDKs (left) and moving bars (right). Bottom, same but for a Trhr-GFP On-Off DSGC. Arrows correspond to the normalized vector sum of responses for each stimulus direction. Raw cell-attached recordings surround each polar plot, corresponding to a stimulus presentation in that direction. (C) Top, DSI for Hoxd10-GFP On-Off DSGCs calculated for both stimulus types. Circles and dashed lines, individual DSGCs. Errorbars, SEM. Bottom, same but for Trhr-GFP On-Off DSGCs.

Thus, information about the motion direction of RDKs is already present in the retina. Furthermore, On-Off DSGCs encode motion information about RDKs and drifting bars, two stimuli with very different spatio-temporal characteristics, in a similar way.

DS neurons in L2/3 of V1 match animal performance

We then compared the ability of individual neurons to discriminate between stimuli moving in opposite directions with the animals’ behavior. To examine this relationship directly, we recorded the activity of V1 L2/3 neurons during behavioral sessions using a 2P microscope (Figure 5A–B, 8 sessions, 4 mice). Similar to the anesthetized recordings, the majority of stimulus driven V1 L2/3 neurons were weakly modulated by the direction of motion (Figure S5, population DSI of 0.29 ± 0.26). Most DS neurons were tuned for the temporal direction (Figure S5, 109 temporal-DS out of 156 DS neurons, p = 7.5 × 10⁻⁷, binomial test) and response amplitude was correlated with direction selectivity (Figure S5, p = 7.1 × 10⁻¹⁸, ANOVA).
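The binomial tests on the proportion of temporal-preferring DS neurons can be illustrated with an exact two-sided test against equal proportions. This is a minimal sketch that assumes a null probability of 0.5 and a two-sided test; the text does not state which variant was used. The counts are those reported above for behaving (109 of 156) and anesthetized (455 of 635) animals.

```python
from math import comb

def two_sided_binomial_p(k, n):
    """Exact two-sided binomial test against p = 0.5.

    For p = 0.5 the distribution is symmetric, so the two-sided p-value
    is twice the smaller tail (capped at 1).
    """
    upper = sum(comb(n, i) for i in range(k, n + 1))
    lower = sum(comb(n, i) for i in range(0, k + 1))
    tail = min(upper, lower)
    return min(1.0, 2 * tail / 2 ** n)

p_behaving = two_sided_binomial_p(109, 156)
p_anesthetized = two_sided_binomial_p(455, 635)
print(p_behaving, p_anesthetized)
```

Integer arithmetic is used for the tail sums so that very small p-values are not lost to floating-point underflow before the final division.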

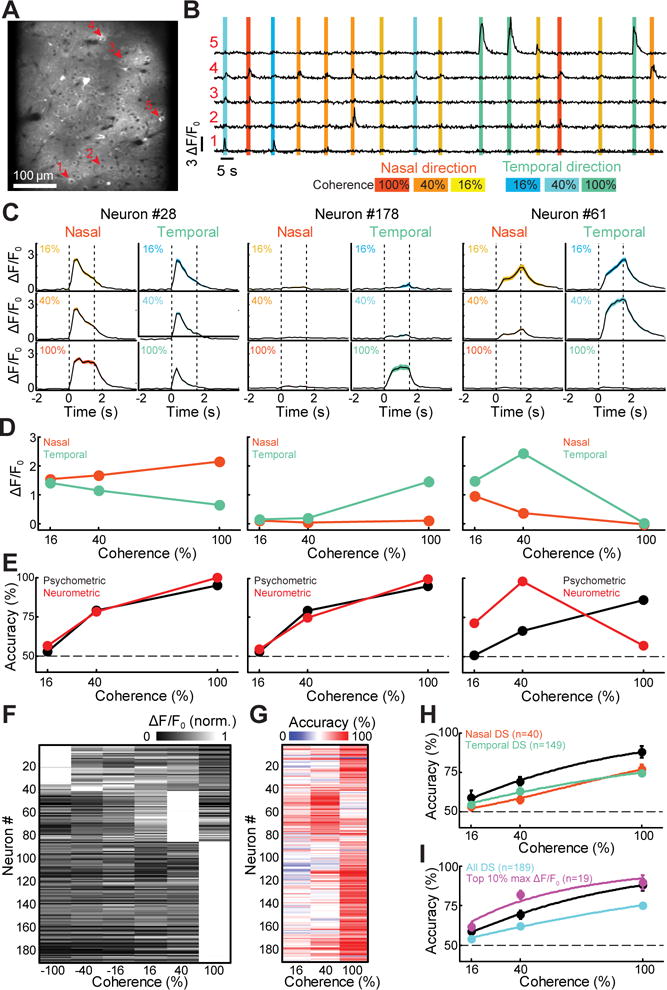

Figure 5. Single neuron psychometric-neurometric comparisons.

(A) Two-photon field of view of an imaging plane during behavior. Arrowheads, neurons used for fluorescence traces in (B). (B) Fluorescence traces over multiple behavioral trials. Colored bars indicate RDK stimuli presentation. Color corresponds to motion direction and coherence. (C) Mean fluorescent responses to RDKs with 100%, 40% and 16% coherence for three example DS L2/3 V1 neurons recorded in behaving animals. Black line, mean; shaded area, SEM. Color as in (B). Dashed lines, stimulus onset and offset. Neuron numbers refer to rows in (F) and (G). (D) Tuning curves of neurons in (C). For the neurons in the left and center panels responses increase with coherence along the preferred direction. For the neuron in the right panel, the largest response occurs for the 40% temporal direction stimulus. (E) Red, neurometric curves of the neurons in (C–D). Black, psychometric performance of the animal in the same session. (F) Normalized tuning curves for all DS L2/3 V1 neurons. Neurons were sorted according to their preferred stimulus (from top to bottom, 100% nasal, 40% nasal, 40% temporal and 100% temporal). (G) Neurometric curves for all DS L2/3 V1 neurons. (H) Population mean neurometric curves for all the nasal DS (orange) and temporal DS (green) L2/3 V1 neurons. Black, mean psychometric performance of all the imaging sessions (8 sessions). Circles, mean; errorbars, SEM; lines, fitted Weibull functions. n corresponds to number of neurons. (I) Same as in (H) but for all DS neurons (cyan) and the 10% with the largest stimulus evoked responses (magenta). See also Figure S5.

DS responses were modulated by stimulus coherence (Figure 5C–D,F). Most DS neurons showed tuning curves that monotonically increased or decreased with coherence along preferred and null directions, respectively (Figure 5C–D, left and middle). A subset of DS neurons preferred intermediate coherences (Figure 5C–D, right), contributing to a diversity of tuning curves (Figure 5F).

Using a signal detection theory approach, we computed neurometric curves [34] for DS neurons (STAR Methods, Figure 5E,G). Single neurons could produce neurometric curves that matched animal performance in the session in which they were recorded. Both temporal-preferring and nasal-preferring DS neurons produced similar neurometric curves with higher discriminability at increased stimulus coherence (Figure 5H, p = 0.394 for preferred direction, p = 5.73 × 10⁻²⁸ for coherence, 2-way ANOVA). While the average neurometric curve underperformed the animals in the task, those of neurons showing large-amplitude sensory responses (top 10%, n=19) matched or slightly outperformed the animals (Figure 5I).
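One common way to obtain a point on a neurometric curve is the area under the ROC curve: the probability that a single-trial response to the preferred direction exceeds a single-trial response to the null direction at a given coherence. The sketch below assumes this approach; the authors' procedure is described in STAR Methods and may differ in detail. The function name and response amplitudes are hypothetical.

```python
def roc_area(pref_responses, null_responses):
    """Probability that a preferred-direction response exceeds a null-direction one.

    Ties count as 0.5, so identical distributions give chance level (0.5).
    """
    wins = 0.0
    for p in pref_responses:
        for q in null_responses:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pref_responses) * len(null_responses))

# Hypothetical single-trial response amplitudes at one coherence.
pref = [0.9, 1.1, 0.7, 1.3]
null = [0.2, 0.4, 0.8, 0.1]
auc = roc_area(pref, null)
print(auc)  # 15 of the 16 pairs favor the preferred direction: 0.9375
```

Repeating this computation at each coherence yields a curve that can be compared directly with the fraction of correct choices made by the animal.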

Thus, performance in the task is not sensory-limited as activity in a single L2/3 neuron can, in some cases, decode the stimulus with the same accuracy as the animal. However, neurometric curves underperformed the animals on average, suggesting that mice might need to pool sensory responses from several neurons to solve the task.

An ideal observer requires just a few L2/3 cortical DS neurons

How many neurons in V1 are required to match the performance of the animals in the motion direction discrimination task? We trained a support-vector-machine (SVM) to discriminate between nasal and temporal stimuli of mixed coherences using responses from a given number of randomly sampled DS neurons. Then we tested the accuracy of the SVM for each coherence, separately. For both the 100% and 40% coherences, the activity of 5 random DS neurons could match animal performance (Figure 6A). Decoder accuracy increased with the number of neurons sampled, outperforming animal discriminability for populations of only 10 neurons. The time required to match the animal’s performance decreased as a function of the number of neurons included in the decoder. While a 10-neuron decoder needed more than 0.8s to match animal discriminability for both the 100% and 40% coherences, a 50-neuron decoder required less than half a second to do the same (Figure 6B–C). When restricting the trials used to train and test the SVM to only those in which no licking occurred during the first second after stimulus onset, the decoder could also match the animal performance within the first second (Figure S6). Thus, licking-related activity is not a major factor contributing to the accuracy of the decoder.
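The train-on-mixed-coherences, test-per-coherence scheme can be sketched with a small linear SVM. This is a plain-Python stand-in trained by stochastic subgradient descent on the hinge loss, not the authors' SVM implementation, and it runs on synthetic responses. The response model, noise level, neuron preferences, learning rate and trial counts are all hypothetical.

```python
import random

def train_linear_svm(X, y, lam=0.01, eta=0.01, epochs=50, seed=0):
    """Linear SVM (hinge loss, L2 penalty) fitted by stochastic subgradient descent."""
    rng = random.Random(seed)
    w = [0.0] * len(X[0])
    b = 0.0
    order = list(range(len(X)))
    for _ in range(epochs):
        rng.shuffle(order)
        for i in order:
            margin = y[i] * (sum(wj * xj for wj, xj in zip(w, X[i])) + b)
            w = [wj - eta * lam * wj for wj in w]  # L2 shrinkage on every step
            if margin < 1.0:  # data term only for trials inside the margin
                w = [wj + eta * y[i] * xj for wj, xj in zip(w, X[i])]
                b += eta * y[i]
    return w, b

def predict(w, b, x):
    return 1 if sum(wj * xj for wj, xj in zip(w, x)) + b >= 0 else -1

def accuracy(w, b, trials):
    return sum(predict(w, b, x) == y for x, y in trials) / len(trials)

rng = random.Random(1)
preferences = [+1, +1, +1, -1, -1]  # +1 temporal-preferring, -1 nasal-preferring (made up)

def simulate_trial(direction, coherence, noise_sd=0.3):
    """Hypothetical model: baseline plus a coherence-scaled direction signal plus noise."""
    return [0.5 + 0.5 * pref * direction * coherence + rng.gauss(0.0, noise_sd)
            for pref in preferences]

def make_trials(n, coherences):
    trials = []
    for _ in range(n):
        direction = rng.choice([-1, +1])  # -1 nasal, +1 temporal
        coherence = rng.choice(coherences)
        trials.append((simulate_trial(direction, coherence), direction))
    return trials

train = make_trials(400, [1.0, 0.4])  # mixed coherences for training
w, b = train_linear_svm([x for x, _ in train], [y for _, y in train])
acc_100 = accuracy(w, b, make_trials(400, [1.0]))  # tested on each coherence separately
acc_40 = accuracy(w, b, make_trials(400, [0.4]))
print(acc_100, acc_40)
```

Varying the length of `preferences` in such a simulation mimics changing the number of neurons given to the decoder.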

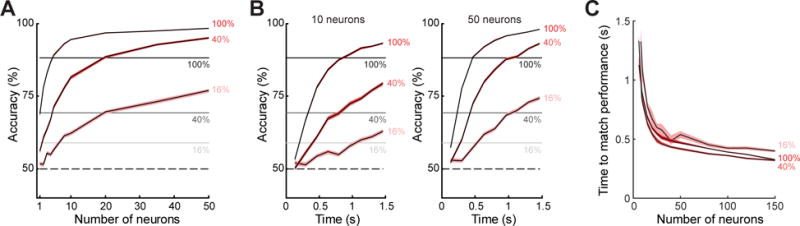

Figure 6. An ideal observer can solve the task with just a few DS L2/3 V1 neurons.

(A) Performance of a population decoder trained using a SVM as a function of the number of neurons (1000 repetitions). Black line, mean; shaded area, SEM. Horizontal lines, mean animal performance of the imaging sessions (n=8). (B) Performance of the decoder during the stimulus presentation as it integrates the fluorescent traces for two population sizes (left, 10 neurons; right, 50 neurons). (C) Time for the decoder to match animal performance for the different coherences as a function of the population size. See also Figure S6.

Interestingly, the time to match the animal’s performance was similar for the three coherence levels independently of the number of neurons in the decoder (Figure 6C), suggesting that performance in the task reflects the motion information available in V1 after a fixed amount of time.

Taken together, these analyses show that an ideal observer could solve the motion discrimination task by focusing on the activity of a population of V1 L2/3 DS neurons for a fixed amount of time.

Discussion

We demonstrated for the first time a motion direction discrimination task in mice that is dependent upon activity within visual cortex. We used a stimulus that strongly activated DS neurons in V1 and did not elicit a large optokinetic reflexive response. By comparing neuronal response properties with behavior, we can begin to understand how mice integrate motion information across a population of neurons to generate a directional motion perception.

A role of V1 in motion perception

Several studies have reported DS responses in V1 [10–12]. However, most studies on motion perception have relied upon reflexive behaviors such as optokinetic eye movements[15,32]. Here we show that the direction of motion of RDKs, a stimulus that does not strongly activate the optokinetic reflex, can nevertheless be robustly perceived by mice (Figure 1) over a wide variety of stimulus parameters (Figure 2). As RDK stimuli elicit DS responses in retinal On-Off DSGCs (Figure 4), motion information is likely to also be present in the brain regions to which they project: accessory optic system, the superior colliculus and dLGN, as well as V1 and other visual cortical areas. Projections to the accessory optic system could contribute to the small gain OKR elicited by RDK stimuli [32] (Figure S3). Despite the wide availability of DS signals in the brain, V1 played a critical role in this discrimination task, as performance was significantly impaired when it was silenced (Figure 3). Thus, like in orientation discrimination and contrast change tasks [35–37], a functional V1 is required for motion discrimination, confirming the privileged role of mouse V1 in sensory perception.

Motion perception in this task likely relies on DS neurons in V1, like the ones we observed in L2/3, as they show DS responses to RDK stimuli that are modulated by coherence like behavioral performance and collectively encode direction of motion information (Figures 5 and 6). Other DS populations of neurons located in other layers are also likely to be involved in motion perception. As direction information of RDKs is already available in the retina, the role of V1 in motion discrimination might relate more to pooling and integrating afferent DS responses to inform downstream decision-making circuits rather than to compute the direction of motion of RDK stimuli within individual receptive fields[31]. Behavioral performance required stimulus apertures larger than 30° in diameter (Figure 2C), while receptive field diameters are ~6° for individual DSGCs in the retina and ~20° for neurons in V1[10,33]. Thus, mice might integrate DS responses from several neurons distributed over the retinotopic map of V1 to accurately extract direction of motion from RDK stimuli.

In primates, this dependence of motion discrimination on stimuli covering large areas of visual space is congruent with the large receptive fields of neurons found in higher order area MT. Area MT contains a large fraction of global motion DS neurons and is critically involved in motion perception in monkeys [8,9]. In mice, neurons in higher visual areas also show larger receptive fields than those in V1[38]. However, global motion information is already available in V1[39–41], and the role of higher visual areas in motion perception is still undetermined. Manipulations of activity in V1 in our experiments (Figure 3) likely affected motion-related activity in the high-order visual areas surrounding it, as V1 is their main source of visual information[42]. Thus, like in primates, V1 together with a subgroup of high-order visual areas might be required for motion perception in mice.

By showing that cortical circuits play a key role in motion perception, our task provides an initial step for investigating the involvement of the rodent higher visual areas in motion perception.

Encoding of motion in mouse V1

RDKs have been extensively used in humans and monkeys to study motion processing and decision-making. Neural responses to RDKs have also been recorded throughout the cortical hierarchy in monkeys[23,43,44] but only in a few studies in mice[45,46]. We found that RDKs can be used to probe motion-direction-sensitive circuits in V1, as they strongly stimulate DS L2/3 neurons with large DSIs (Figures 3E and S5). Most DS neurons were monotonically modulated by the coherence of the RDK stimuli, except for a small population that showed larger responses at intermediate coherences (Figure 5F). The mechanisms underlying these non-monotonic responses are unknown. One possibility is surround suppression, which may be stronger for coherent stimuli. Preferred directions were unevenly distributed, with temporal-preferring DS neurons twice as numerous as nasal-preferring ones in both behaving and anesthetized animals (Figures 3C and S5). Thus, consistent with a recent report probing direction selectivity with gratings[31], we found using RDK stimuli that DS cortical neurons in mouse V1 are enriched for processing the predominant direction experienced during locomotion by animals with laterally positioned eyes. Our results reinforce the idea that motion circuits in mouse visual cortex are specialized for processing locomotion-induced, self-generated optic flow.

A task for dissecting motion circuits in mice

The head-fixed 2AFC behavioral paradigm described here allows probing of the brain circuits underlying motion perception. Behavioral assays using reflexive behaviors primarily engage On-DSGCs and brainstem nuclei[6,15,16,26]. The motion discrimination task provides a unique assay for dissecting retino-forebrain and retino-midbrain circuits involved in motion processing, as it involves stimuli that engage On-Off DSGCs and depends on a functioning visual cortex. Previous operant conditioning paradigms for assessing motion vision in mice used freely moving animals[17,18]. Because our task is head-fixed, it allows excellent stimulus control and repeatability across trials while facilitating neuronal manipulations and recordings of large neuronal populations and subcellular compartments[5,47]. The coherence threshold measured in our task agrees with measurements in freely moving mice [18,19], with 75% correct choices when viewing 40% coherent stimuli (Figure 2A), indicating that performance does not appear to be significantly compromised by head restraint. In previous reports using freely moving discrimination tasks, mice only discriminated between RDKs moving along different axes[17,18], and one of these studies reported that mice could not be trained to discriminate stimuli moving in opposing directions[17]. Our study shows that mice can robustly discriminate stimuli moving in opposing directions along the horizontal axis in both monocular (Figures 1 and 2) and binocular visual fields (Figure S4).

As in similar tasks in monkeys[48,49] and humans[50], mice made fewer mistakes the longer they viewed the stimuli. This suggests that animals integrate motion evidence over time before deciding. Thus, in addition to the dissection of motion circuits, this task also allows the study of the neural mechanisms of temporal integration in decision-making in a head-fixed mouse preparation[51].

In summary, the head-fixed motion task we describe here reveals a role for visual cortex in mouse motion perception. This task also presents a powerful new tool for dissecting the role of retinal and cortical circuits in motion perception.

STAR Methods

CONTACT FOR REAGENT AND RESOURCE SHARING

Further information and requests for resources and reagents should be directed to and will be fulfilled by the Lead Contact, Leopoldo Petreanu (leopoldo.petreanu@neuro.fchampalimaud.org).

EXPERIMENTAL MODEL AND SUBJECT DETAILS

Animals

Wild-type male C57BL/6J mice (n = 30) were used for the motion discrimination behavioral experiments and for the in vivo functional imaging of L2/3 V1 neurons in behaving animals. Thy1-GCaMP6s[29] (line GP4.3, Jax Stock No: 024275) male mice (n = 7) were used for the L2/3 V1 anesthetized recordings. These mice were kept on a 12:12 reversed light-dark cycle. Mice were implanted at P40 to P100 and used in the experiments up to ~8 months. Mice were housed with littermates (2-4 per cage) prior to surgical procedures and were singly housed afterwards. Trhr-GFP (n = 5) and Hoxd10-GFP (n = 6) [32,33] adult mice were used for the retinal recordings. All animal procedures related to the motion discrimination task and in vivo two-photon imaging were reviewed according to the Champalimaud Centre for the Unknown Ethics Committee guidelines and performed in accordance with the Portuguese Direcção Geral de Veterinária. All animal procedures related to the retinal recordings were approved by the UC Berkeley Institutional Animal Care and Use Committees and conformed to the NIH Guide for the Care and Use of Laboratory Animals, the Public Health Service Policy, and the SFN Policy on the Use of Animals in Neuroscience Research.

METHOD DETAILS

Retina preparations

Adult mice (P40–P100) were anesthetized with isoflurane and then decapitated. Retinas were mounted photoreceptor layer down over a hole of 1–2 mm² on filter paper (Millipore), and kept in oxygenated Ames’ medium (Sigma) in the dark at room temperature.

Head-post implantation

Surgeries were performed on adult mice (P50–P80) under isoflurane anesthesia. Bupivacaine (0.05%; injected under the scalp) and Dolorex (1 mg/kg; injected subcutaneously) provided local and general analgesia. Eyes were protected and kept moist using ophthalmic ointment (Clorocil, Edol). Animals used in the behavioral study of motion discrimination had a custom-designed iron head-post attached to the skull with dental cement. The skull over the visual cortex was covered with a thin layer of clear dental cement.

Virus injection and chronic imaging window implantation

For in vivo two-photon calcium imaging experiments, before attaching the head-post to the skull, we performed a circular craniotomy over the left visual cortex (diameter, 4 to 5 mm). The dura was left intact. For imaging activity during behavior (Figure 5), V1 neurons were labeled with a virus expressing GCaMP6f (AAV1-Syn-GCaMP6f-WPRE-SV40; University of Pennsylvania Gene Therapy Program Vector Core). Virus was injected at four to seven different locations in the visual cortex (20 nl per location, 10 nl/min, 200 - 315 μm deep) using a custom volumetric injection system based on a Narishige MO-10 manipulator [47]. To prevent backflow, the pipette was left in the brain for over 5 minutes before withdrawal. For the V1 anesthetized recordings (Figures 3 and S5), the transgenic mouse line Thy1-GCaMP6s[29] (line GP4.3) was used and no virus was injected. An imaging window was constructed from two layers of microscope cover glass (Fisher Scientific no. 1 and no. 2) joined with a UV-curable optical glue. The window was embedded into the craniotomy and secured in place using black dental cement, and then the head-post was attached with the same dental cement. The glass window gently pressed against the brain, minimizing movement artifacts during behavior[52].

Intrinsic Signal Imaging

For the animals with chronic imaging windows implanted, intrinsic signal imaging was done through the windows. For the animals used in the muscimol experiments, prior to intrinsic signal imaging, the dental cement over the visual cortex was polished and covered with a thin layer of standard clear nail polish. Mice were head-fixed, lightly anesthetized with isoflurane (1%) and injected intramuscularly with chlorprothixene (1mg/kg)[53]. The eyes were coated with a thin layer of silicone oil (Sigma-Aldrich) to ensure optical clarity during visual stimulation. Optical images of cortical intrinsic signals were recorded at 5Hz using a Retiga QIClick camera (QImaging) controlled using Ephus [54], with a high magnification zoom lens (Thorlabs) focused at the brain surface under the skull. To measure intrinsic hemodynamic responses, the surface of the cortex was illuminated with a 620nm red LED while drifting bar stimuli were presented to the right eye. A periodic drifting bar was continuously presented 80 times for each of the four cardinal directions (12s period, 20° width, masking an alternating checkerboard pattern at 5Hz). A spherical correction was applied to compensate for the flatness of the monitor, and visual space was measured in azimuth and elevation coordinates. An image of the cortical vasculature was obtained using a 535nm green LED.

Muscimol injections

Unilateral injections of a solution of muscimol hydrobromide (Sigma-Aldrich; P/N G019) in saline were performed in mice proficient in the task (n = 6) and showing a stable performance for at least 3 consecutive days. Muscimol was injected (50 nL, 5 μg/μl; 300 μm underneath the dura) in V1 through a small craniotomy (500 μm diameter) with a beveled glass pipette. V1 was identified by intrinsic signal imaging performed after head-post implantation. Animals were under anesthesia for 43 ± 8 min and were returned to their home cages for 59 ± 19 min prior to behavioral testing. In control experiments, saline was injected following the same procedure.

Visual stimulation used in the in vivo experiments

Visual stimuli were presented at 120Hz using either an LCD display (Samsung S23A700D, 120Hz monitor) for animal training and behavioral measurements or an LED display (BenQ XL2411Z, 144Hz monitor) for the two-photon calcium imaging and intrinsic signal imaging experiments. The screen was oriented at 30° to the axis of the body and positioned perpendicularly to the right eye of the animal (20cm away for behavior, 11cm away for intrinsic signal imaging experiments). Visual stimuli were generated and presented using MATLAB and the Psychophysics Toolbox[55].

We adapted RDK stimuli for use in mice. Dots (2° diameter) were spawned randomly and uniformly inside a circle of a given diameter. Full screen stimuli were obtained by making the circle big enough to encompass the whole screen. Each dot was assigned one of 8 motion directions (from 0° to 315° with 45° spacing) and moved without collisions inside the stimulus area. The distribution of the dots’ motion directions was determined by the stimulus coherence. A 100% coherence stimulus had all the dots moving in one direction, while a 40% coherence stimulus had 40% of the dots moving in the coherent direction and the rest equally spread across the 8 possible directions. If a dot encountered an edge of the circle, it was transported to the diametrically opposed position of the circle depending on its motion direction. Dots had a fixed lifetime, after which they re-spawned in a different location of the stimulus area while keeping the same motion direction. At the beginning of the stimulus, dots were initialized with different lifetimes, so that at any moment the same number of dots was re-spawning. Reducing the lifetime of the dots also affected the effective coherence of the stimulus. With shorter lifetimes, dots re-spawned more often and, therefore, at any given frame there were fewer dots moving in the coherent direction. Except for the experiments described in Figure 2, dots were presented in a full screen, covering 20% of the stimulus area, with a 1s lifetime and moving at 25°/s. For the anesthetized two-photon calcium imaging experiments, we presented 100% coherence stimuli with 2s duration and 4s inter-trial-interval (20 repetitions per direction, randomized) moving in the nasal and temporal directions (Figure 3) or moving in 8 directions (Figure S5). Stimuli had 1s duration in the experiments described in Figures 1, 2, 3, S1 and S2, and 1.5s in the experiments in Figures 5, 6, S3, S4, S5 and S6.
RDKs with 100%, 40%, 16% and 6% coherence were used in the motion discrimination task. These values are roughly equidistant on a logarithmic scale.
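The coherence rule described above can be sketched in a few lines. This is a minimal illustration, not the MATLAB/Psychophysics Toolbox code used in the experiments: the function name `assign_dot_directions`, its parameters, and the way noise dots are cycled through the 8 directions are assumptions made here for clarity.

```python
import random

# The 8 possible motion directions, from 0° to 315° in 45° steps.
DIRECTIONS_DEG = [0, 45, 90, 135, 180, 225, 270, 315]


def assign_dot_directions(n_dots, coherence, coherent_dir_deg, rng=None):
    """Return one motion direction (in degrees) per dot.

    A fraction `coherence` (0 to 1) of the dots moves in `coherent_dir_deg`;
    the remaining dots are spread as evenly as possible over all 8 directions.
    Hypothetical helper, written only to illustrate the rule in the text.
    """
    rng = rng or random.Random()
    n_coherent = round(n_dots * coherence)
    n_noise = n_dots - n_coherent
    directions = [coherent_dir_deg] * n_coherent
    # Cycle through the 8 directions so the noise dots are evenly spread.
    directions += [DIRECTIONS_DEG[i % 8] for i in range(n_noise)]
    rng.shuffle(directions)  # which dot gets which direction is arbitrary
    return directions


if __name__ == "__main__":
    dirs = assign_dot_directions(460, 0.40, coherent_dir_deg=0)
    print({d: dirs.count(d) for d in DIRECTIONS_DEG})
```

Because the noise dots are spread over all 8 directions, a few of them also move in the coherent direction, so the fraction of dots moving that way is slightly higher than the nominal coherence.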

Visual stimulation used in the retina recordings

Visual stimuli were generated using a computer with an Intel Core Duo processor running Ubuntu (v. 14.04.2, “Trusty Tahr”), which drove a DMD projector (Cel5500-Fiber, Digital Light Innovations, 1024 × 768 pixel resolution, 60 Hz refresh rate) with an LED light source (M470L2, Thorlabs). Visual stimuli were filtered to project only wavelengths between 480-490 nm. The DMD image was projected through a condenser lens onto the photoreceptor side of the sample. The maximum size of the projected image on the retina was 670 μm × 500 μm. Custom stimuli were developed using Matlab or GNU Octave and the Psychophysics Toolbox. Proper alignment of stimuli in all planes was checked each day prior to performing the experiment.

Display images were centered on the soma of the recorded cell, and were focused on the photoreceptor layer. All visual stimuli were presented on a dark background (6.4×10⁴ R*/rod/s), and both bars and dots were of equal intensity (2.6×10⁵ R*/rod/s). Baseline direction selectivity was assessed with 100 μm (3.2°) wide by 650 μm (20.9°) long bars moving at either 250 μm/s or 500 μm/s (8°/s and 16°/s, respectively). Dot stimuli were 62 μm (2°) in diameter, moved at 775 μm/s (25°/s), and occupied 20% of the total stimulus area. Each stimulus presentation lasted 5 seconds in total.
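The paired retinal and visual-angle values above are consistent with a single conversion factor of roughly 31 μm per degree. That factor is inferred here from the dot size (62 μm for 2°) and is not stated explicitly in the text, so the short sketch below should be read as an approximation; `UM_PER_DEG` and `um_to_deg` are illustrative names.

```python
# Approximate retinal magnification implied by the dot size (62 um for 2 deg).
# This value is an inference from the text, not a reported constant.
UM_PER_DEG = 31.0


def um_to_deg(distance_um):
    """Convert a distance on the retina (micrometers) to visual angle (degrees)."""
    return distance_um / UM_PER_DEG


if __name__ == "__main__":
    for label, um in [("bar width", 100), ("bar length", 650),
                      ("dot diameter", 62), ("dot speed per second", 775)]:
        print(f"{label}: {um} um is about {um_to_deg(um):.2f} deg")
```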

The directional preference of a DSGC was first established by 2-3 repetitions of drifting bars moving in 8 block-shuffled directions, each separated by 45 degrees. Drifting dots were then presented with 3 repetitions of 8 block-shuffled directions. A second set of bar stimuli (2 repetitions, 8 directions) was presented after the dot stimulus set to ensure DSGC tuning did not change over the course of recording. Any cells which changed preferred directions between bar stimuli sets were discarded from further analysis.

Behavior apparatus

Mice performed the motion discrimination task inside a sound- and light-isolated box containing a monitor, speakers, a mouse stage with a linear treadmill and lickports, and a CCTV camera. Running speed was calculated by a microcontroller (Arduino Uno), which acquired the trace of an analogue rotary encoder embedded in the treadmill. Lickports were constructed by cutting and polishing 18G needles. Lick detection was done electrically. Water flowed to the lickport by gravity and was controlled by a calibrated solenoid valve. To prevent water from remaining at the tip of the lickport after a trial, a peristaltic pump was used to slowly pump out the excess water. The behavior apparatus was controlled by Bcontrol (C. Brody Lab, Princeton), which was responsible for recording the time of the licks and the running speed on the treadmill, for controlling water delivery and for triggering visual stimuli and two-photon imaging acquisition. Animals were monitored during behavior with the CCTV camera.

Training and motion discrimination task

Mice were placed on a water restriction regime and had ad libitum access to food. Starting two days after head-post implantation, mice were given 1ml of water daily for at least 5 days prior to behavior training. Body weight was checked daily. Mice with weight losses larger than 20% were removed from water restriction. After training started, mice received water while performing the task. On days in which no behavioral sessions took place, mice were given 1ml of water.

Training began with a session to habituate mice to the treadmill and the lickports. At the start of the first session, mice were rewarded with a water drop (~4 μl) for licking one of the lickports, with a short (0.5s) inter-reward-interval. Reward side was controlled to prevent mice from developing strong biases. Once mice licked both lickports comfortably, water reward was made available only after mice ran on the treadmill for a short duration (0.5s). In most cases, mice needed only one session to learn to run and lick for reward. During the next phase of training, running triggered the presentation of a 2s full screen RDK, which moved in either the nasal or the temporal direction. The visual stimulus was accompanied by either a 2kHz (for the nasal moving RDK) or a 4kHz (for the temporal moving RDK) pure tone. Water reward was delivered upon the first lick to the side associated with the stimulus, that is, left for nasal and right for temporal, after a short delay from stimulus onset (0.3s). Licks to the incorrect side were not taken into account and did not prevent the first correct lick later in the same trial from being rewarded. Mice performed two or three sessions in this phase, after which error trials were introduced: the first lick shortly after stimulus onset determined the choice in that trial. If it was correct, the lick was rewarded with a water drop and a new trial could be initiated after a short inter-trial-interval. Incorrect licks were punished with a time-out period (3 to 6s), during which a flickering visual stimulus was shown on the screen and auditory white noise was played. As mice began discriminating between the two types of trials (2kHz sound and nasal RDK) and (4kHz sound and temporal RDK), the amplitude of the tone was gradually decreased until it was removed completely (usually 3 to 5 sessions).
At this point, mice were already discriminating between a nasal and a temporal moving RDK in a two-alternative forced choice (2AFC) task. During the rest of the training period, the delay after which the first lick determined the trial outcome was gradually increased, while the stimulus duration was decreased until the two were the same. In the final phase of the task, the first lick after stimulus offset determined the animal’s choice. Lower motion coherence stimuli were gradually introduced. In all these phases, behavioral sessions were performed daily and lasted as long as mice remained engaged in the task (30-90 minutes).

For the experiments in Figure 2, animals were trained with these standard RDK parameters (25°/s, 1s duration, 1s lifetime, 460 dots presented over the full screen, 100%, 40%, 16% and 6% coherence). After reaching expert performance discriminating these stimuli, they were tested with stimuli with different parameters (Figure 2). Values of a single stimulus parameter were changed within a single session. It is unclear to what extent the dependency of performance level on the different stimulus parameters depends on the stimuli used for initial training.

Auditory discrimination task

To control for the specificity of the effects of the muscimol injection in contralateral V1 on performance, we trained a set of mice (n = 6) in an auditory discrimination task. The training was similar to that for the RDK motion discrimination task, but mice instead discriminated between 4kHz and 8kHz tones. Half of the animals were trained to lick left for the 4kHz tone and right for the 8kHz tone, and the other half were trained with the reversed contingency. In this version of the task, no visual stimuli were ever used. All other aspects of the task remained the same, and animals were trained and tested with the same treadmills and behavior boxes as in the motion discrimination task.

Two-Photon Targeted Recordings from GFP+ DSGCs

Filter-paper-mounted retinas were placed under the microscope in oxygenated Ames’ medium at 32–34°C. Identification and recordings from GFP+ cells were performed as described previously[56]. In brief, GFP+ cells were identified using a custom-built two-photon microscope tuned to 920 nm to minimize bleaching of photoreceptors. The average sample plane laser power measured after the objective was 6.5-13 mW. The inner limiting membrane above the targeted cell was dissected using a glass electrode. Loose-patch voltage-clamp recordings (holding voltage set to “OFF”) were performed with a new glass electrode (3–5 MΩ) filled with Ames’ medium. Signals were acquired at 10 kHz and filtered at 2 kHz with a Multiclamp 700A amplifier (Molecular Devices) using pCLAMP 10 recording software and a Digidata 1440 digitizer. Comparison of in vivo retinal recordings with in vitro response properties indicates that in vitro recordings closely resemble those in vivo. In particular, cortical direction selectivity shares many response properties with retinal responses, though cortical neurons are perhaps more narrowly tuned[31].

Eye tracking

We recorded movies of the mouse’s right eye during behavior in the sessions in which we also performed two-photon imaging of V1 L2/3. We used a CMOS camera (Flea3 USB3 Vision, PointGrey) mounted with a telephoto lens (Navitar Zoom 7000). A high power infrared LED (850 nm, Roithner LaserTechnik) was used to generate a bright reflection on the cornea. The camera was triggered by the two-photon imaging acquisition system at 60Hz. In another set of experiments, we recorded eye movies in awake mice passively viewing the same stimuli used for the motion discrimination task.

Two-photon in vivo imaging

We used a custom microscope (based on the MIMMS design, Janelia Research Campus, https://www.janelia.org/open-science/mimms) equipped with a resonant scanner and controlled by ScanImage 4 [57] (Vidrio Technologies). Mice were head-fixed and either awake, performing the motion discrimination task, or lightly anesthetized with isoflurane (1%) and injected intramuscularly with chlorprothixene (1 mg/kg). In the anesthetized experiments, the eyes were coated with a thin layer of silicone oil (Sigma, #378429). Visual stimuli were presented to the right eye. O-rings were glued to the headpost concentric with the center of the cranial window to form a well for imaging. To prevent stray light from the monitor from entering the imaging well, a flexible, conical light shield was attached to the headpost and secured around the imaging objective. GCaMP6 was excited using a Ti:sapphire laser (Chameleon Ultra II, Coherent) tuned to 920 nm. We used GaAsP photomultiplier tubes (10770PB-40, Hamamatsu) and a 16× (0.8 NA) objective (Nikon). The field of view was 400 × 400 μm (512 × 512 pixels). We performed multi-plane imaging, scanning in the axial direction with a piezo actuator (Physik Instrumente) (5 planes with 25μm spacing; the first plane was discarded from analyses due to flyback). The imaging frame rate was 30Hz (6Hz for each plane). Images of L2/3 neurons were taken 130 - 300 μm below the dura. Imaging areas were located over monocular primary visual cortex. Laser power measured at the objective exit varied between 80 and 140 mW.

Image Analysis

For each session, a trial with visible structure and little movement was selected. All frames from the trial were registered to a single frame and then averaged. Each frame recorded in the session was registered to this averaged image using a fast cross-correlation-based algorithm[58]. Doughnut-shaped regions of interest (ROIs) were drawn over cell bodies, excluding nuclei, using a semi-automated procedure[28] on the mean projection of the registered session. The pixels in each ROI were averaged to estimate the fluorescence corresponding to a single neuron. The ROI’s baseline fluorescence, F0, was estimated as the mean fluorescence within a 60 second sliding window after discarding the tails of the distribution. The baseline fluorescence was used to calculate ΔF/F0 = (F − F0) / F0.
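The baseline and ΔF/F0 computation can be sketched as follows. This is a minimal illustration under stated assumptions: the window is taken to be centered on each sample, the default frame rate is the 6Hz per-plane rate, and the fraction trimmed from each tail (`trim_frac`) is a placeholder, since the text does not specify how much of the distribution was discarded. The function names are hypothetical.

```python
def trimmed_mean(values, trim_frac):
    """Mean of `values` after dropping `trim_frac` of the samples from each tail."""
    ordered = sorted(values)
    k = int(len(ordered) * trim_frac)
    kept = ordered[k:len(ordered) - k]
    return sum(kept) / len(kept)


def delta_f_over_f0(trace, frame_rate_hz=6.0, window_s=60.0, trim_frac=0.1):
    """Return dF/F0 for a raw fluorescence trace (list of floats).

    F0 is the trimmed mean of the fluorescence in a sliding window
    (assumed centered, truncated at the edges of the recording).
    `trim_frac` must be below 0.5; its default is an assumed placeholder.
    """
    half = int(window_s * frame_rate_hz) // 2
    result = []
    for i, f in enumerate(trace):
        lo = max(0, i - half)
        hi = min(len(trace), i + half + 1)
        f0 = trimmed_mean(trace[lo:hi], trim_frac)
        result.append((f - f0) / f0)
    return result


if __name__ == "__main__":
    raw = [100.0] * 20
    raw[10] = 200.0  # a single transient on a flat baseline
    print(delta_f_over_f0(raw)[10])
```

Trimming the tails before averaging keeps large transients from inflating the baseline estimate, which is the stated purpose of discarding the tails of the distribution.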

QUANTIFICATION AND STATISTICAL ANALYSIS

Statistical analyses were performed in MATLAB (Mathworks).

Behavioral performance

Trials in which animals did not make any licks (no choice trials) represented 6% of all trials and were removed from all analyses except in Figure 3I. Animal performance was plotted either as the percentage of choices to the right (Figures 1E,F, 3G and S4) or as the percentage of correct trials (accuracy; Figures 2, 3H, 5, 6, S1, S4 and S6). Percentage of choices to the right was fitted with a maximum likelihood procedure to the following psychometric function:

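$$P_{\mathrm{right}}(c) = \lambda_{\mathrm{left}} + \left(1 - \lambda_{\mathrm{left}} - \lambda_{\mathrm{right}}\right) F(c;\, \mu, \sigma)$$

where c is the stimulus coherence (negative for nasal moving RDKs and positive for temporal moving RDKs), λright and λleft are, respectively, the right and left lapse rates, and F is the cumulative Gaussian with mean μ and standard deviation σ.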
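A maximum likelihood fit of this kind needs a model for the probability of a rightward choice and a likelihood to minimize. The sketch below assumes one common lapse-rate parameterization that is consistent with the definitions above, P(right) = λleft + (1 − λleft − λright)·F(c; μ, σ), with coherence expressed as a signed fraction between −1 and 1; both the functional form and the function names are assumptions, and the optimizer itself is left out.

```python
import math


def cumulative_gaussian(x, mu, sigma):
    """Cumulative distribution function of a Gaussian with mean mu and s.d. sigma."""
    return 0.5 * (1.0 + math.erf((x - mu) / (sigma * math.sqrt(2.0))))


def p_right(c, mu, sigma, lapse_left, lapse_right):
    """Assumed psychometric function: probability of a rightward choice.

    c is the signed coherence (negative for nasal, positive for temporal).
    """
    return lapse_left + (1.0 - lapse_left - lapse_right) * cumulative_gaussian(c, mu, sigma)


def neg_log_likelihood(params, coherences, n_right, n_total):
    """Binomial negative log-likelihood of the choice counts given the parameters."""
    mu, sigma, lapse_left, lapse_right = params
    nll = 0.0
    for c, k, n in zip(coherences, n_right, n_total):
        p = p_right(c, mu, sigma, lapse_left, lapse_right)
        p = min(max(p, 1e-9), 1.0 - 1e-9)  # keep the logarithms finite
        nll -= k * math.log(p) + (n - k) * math.log(1.0 - p)
    return nll
```

Passing `neg_log_likelihood` to any general-purpose minimizer over (μ, σ, λleft, λright) gives the maximum likelihood estimate under this assumed form.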

Lick ratio (Figure S2) was defined as the difference between the number of licks that matched the trial choice and the licks to the other side for correct trials.

Changes of side occurred in trials in which mice switched licking during stimulus presentation at least once. Reaction time (Figure S1) was defined as the time from stimulus onset to the first lick after which mice no longer switched licking sides.

Licking, running speed and eye position traces were calculated using 167ms bins (6Hz).

Eye position

A modified version of the “starburst” circle finding algorithm was used to determine the angular position of the pupil[59]. For the first frame, an initial estimate of the centers of both the pupil and the corneal reflection (CR) was obtained by finding the darkest and brightest pixels in the image. The image was transformed using a Sobel filter, highlighting the edges. From the estimated centers of both the pupil and the CR, a series of rays was projected, sampling the Sobel-filtered image. For each ray, the edge of the corresponding structure was estimated as the first point that passed a calibrated threshold. Edge points were used to find the centers of the structures by fitting a circle to the pupil and an ellipse to the CR. Points that deviated considerably from the radius were discarded and a new fit was performed. This procedure was done frame by frame, using the centers from the previous frame as the initial estimates. The CR position was used to compensate for translational motion of the eye by subtracting it from the pupil position. Eye traces were smoothed using a Savitzky-Golay FIR filter and downsampled to 6Hz. Pupil position in the image was converted from mm to deg using the formula:

$$\Delta\theta = \arcsin\left(\frac{\Delta x}{R_P}\right)$$

where Δθ is the horizontal angular displacement, Δx is the horizontal displacement measured in the eye images and RP is the radius of rotation of the pupil[60] (1.2mm). Average eye position trial traces were obtained after detecting and correcting for saccades and subtracting the baseline eye position in each trial.
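As an illustration of the conversion, the sketch below assumes the arcsine relation Δθ = arcsin(Δx / RP), which is the geometry of a point rotating at distance RP from the center of rotation; that relation and the function name are assumptions, while the 1.2 mm radius comes from the text.

```python
import math

PUPIL_ROTATION_RADIUS_MM = 1.2  # radius of rotation of the pupil given in the text


def displacement_to_angle_deg(dx_mm, radius_mm=PUPIL_ROTATION_RADIUS_MM):
    """Convert a horizontal pupil displacement (mm) to an angle (degrees).

    Assumes delta_theta = arcsin(dx / R_P); dx must not exceed the radius.
    """
    return math.degrees(math.asin(dx_mm / radius_mm))


if __name__ == "__main__":
    for dx in (0.05, 0.1, 0.2):
        print(f"{dx} mm corresponds to {displacement_to_angle_deg(dx):.2f} deg")
```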

Retina recordings

The preferred direction (PD) of the cell was determined by first normalizing the average spike count in each stimulus direction by the total mean spikes for all directions. The sum of these normalized responses yielded a vector (vector sum) whose direction was the preferred direction of the cell, and whose length gave the strength and width of tuning (ranged between 0 and 1). The direction selective index (DSI) for each cell was calculated as DSI=(Pref-Null)/(Pref+Null), where Pref is the average response to the stimulus whose direction was closest to the PD, and Null is the average response to the stimulus whose direction was 180 degrees opposite the PD.
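The tuning measures defined above can be written out as a short sketch. The function `direction_tuning` and its argument names are hypothetical, and the handling of edge cases (a cell with no spikes, or zero response in both the preferred and null directions) is an assumption made here rather than something described in the text.

```python
import math


def circular_distance_deg(a, b):
    """Smallest absolute angular difference between two directions, in degrees."""
    return abs((a - b + 180.0) % 360.0 - 180.0)


def direction_tuning(directions_deg, mean_spikes):
    """Return (preferred direction in deg, vector-sum length, DSI).

    Responses are normalized by their sum, so the vector-sum length
    lies between 0 and 1.
    """
    total = sum(mean_spikes)
    if total <= 0:
        raise ValueError("cell has no spikes; tuning is undefined")
    weights = [r / total for r in mean_spikes]
    x = sum(w * math.cos(math.radians(d)) for w, d in zip(weights, directions_deg))
    y = sum(w * math.sin(math.radians(d)) for w, d in zip(weights, directions_deg))
    pd = math.degrees(math.atan2(y, x)) % 360.0
    vec_len = math.hypot(x, y)

    idx = range(len(directions_deg))
    # Stimulus direction closest to the PD, and the one 180 degrees opposite to it.
    pref_i = min(idx, key=lambda i: circular_distance_deg(directions_deg[i], pd))
    null_dir = (directions_deg[pref_i] + 180.0) % 360.0
    null_i = min(idx, key=lambda i: circular_distance_deg(directions_deg[i], null_dir))
    pref, null = mean_spikes[pref_i], mean_spikes[null_i]
    dsi = (pref - null) / (pref + null) if (pref + null) > 0 else 0.0
    return pd, vec_len, dsi


if __name__ == "__main__":
    dirs = [0, 45, 90, 135, 180, 225, 270, 315]
    print(direction_tuning(dirs, [0, 5, 10, 5, 0, 0, 0, 0]))
```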

V1 recordings

For the two-photon anesthetized recordings, we calculated the average calcium signal during three trial epochs: 2s before stimulus onset, during stimulus presentation (2s) and 2s after stimulus offset. For neurons to be considered visually driven, their activity had to be modulated by trial epoch (p<0.01, ANOVA) and their responses to the preferred stimulus had to exceed a threshold (mean ΔF/F0 during stimulus presentation >15%). We calculated the DSI for visually responsive neurons and considered neurons to be direction-selective (DS) if their DSI was larger than 0.25. For the data in Figure 3B–E, we presented RDKs moving in either the nasal or the temporal direction. For comparison, we analyzed the two-photon recordings in behaving animals analogously, considering only the 100% coherence stimuli (Figure S5). The only difference was the length of the trial epochs: 1.5s before stimulus onset, during stimulus presentation (1.5s) and 1.5s after stimulus offset. For the anesthetized recordings in Figure S5, we presented RDKs moving in 8 directions. We calculated the preferred direction of neurons either by taking the direction that elicited the largest response (Figure S5B, top) or by obtaining the vector sum of all the responses (Figure S5B, bottom). For the two-photon recordings during behavior (Figures 5 and 6), we also considered neurons that responded to the 40% coherence stimuli (mean ΔF/F0 during stimulus presentation >15%), since there were visually driven neurons that did not respond to high coherence stimuli (Figure 5C, neuron #61). Neurons showing non-stimulus-related activity, for example those active while running before the onset of the stimulus, were not included in the analysis.

Single neuron neurometric analysis

We obtained single neuron neurometric curves[24] by computing the receiver operating characteristic (ROC) curve. For each coherence level we compared the preferred and null direction response distributions and calculated the probability that an external observer could discriminate between the two using the area under the curve method.
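The area under the ROC curve has a convenient equivalent form: it equals the probability that a randomly drawn preferred-direction response exceeds a randomly drawn null-direction response, with ties counted as one half. The sketch below uses that pairwise form instead of sweeping a criterion along the ROC curve; it gives the same area but is not necessarily how the analysis was coded, and `roc_auc` is an illustrative name.

```python
def roc_auc(pref_responses, null_responses):
    """Area under the ROC curve for two samples of single-trial responses.

    Computed as the fraction of (preferred, null) response pairs in which
    the preferred-direction response is larger, counting ties as 0.5.
    """
    wins = 0.0
    for p in pref_responses:
        for n in null_responses:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5
    return wins / (len(pref_responses) * len(null_responses))


if __name__ == "__main__":
    # Hypothetical single-trial dF/F0 values for one neuron at one coherence.
    preferred = [0.42, 0.35, 0.51, 0.18, 0.60]
    null = [0.10, 0.22, 0.05, 0.30, 0.15]
    print(roc_auc(preferred, null))
```

Repeating the computation at each coherence level gives one point per coherence on the neuron's neurometric curve, with 0.5 corresponding to chance discrimination.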

Population decoder

We built a population decoder using the activity of V1 L2/3 DS neurons. For each simulation, we sampled with replacement a number of DS neurons from all 8 recorded sessions. We randomly defined a set of motion directions and coherences, generating training and test sets of stimulus types (200 and 40 trials, respectively). We required that there was at least one trial per stimulus type (100%, 40% and 16% coherence for the nasal and temporal direction RDKs) in both the training and test sets. Then, for each neuron, we divided its responses from the recorded sessions into training and test groups (80% and 20%, respectively). For each stimulus type in the training set, we sampled with replacement the corresponding neural responses from the training group of responses. We trained a support vector machine (SVM) classifier using either the mean activity of a given number of neurons during the stimulus presentation (Figure 6A) or their instantaneous ΔF/F at each time sample during the stimulus presentation (Figure 6B–C). Note that for each neuron used in the decoder we used the same number of trials per stimulus type. We generated a test dataset analogously. We then measured the accuracy of the classifier for each coherence separately using the test dataset. We ran 1000 simulations of the described procedure for different population sizes. To calculate the time the decoder took to match animal performance (Figure 6C), we linearly interpolated in time the decoder performance between the frames flanking the mean animal performance values.
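The resampling that builds the decoder's training and test matrices can be sketched as below. This covers only the construction of pseudo-trials, not the SVM itself, and it rests on assumptions: each neuron is represented as a dictionary mapping a stimulus type to its list of single-trial responses (at least two per type), and the names `split_responses` and `make_pseudo_population` are hypothetical.

```python
import random


def split_responses(responses, train_frac=0.8, rng=random):
    """Split one neuron's responses to one stimulus type into (training, test) groups."""
    shuffled = list(responses)
    rng.shuffle(shuffled)
    n_train = max(1, int(round(train_frac * len(shuffled))))
    n_train = min(n_train, len(shuffled) - 1)  # keep at least one test response
    return shuffled[:n_train], shuffled[n_train:]


def make_pseudo_population(neurons, train_stimuli, test_stimuli, n_neurons, rng):
    """Build training and test matrices (trials x neurons) of pseudo-trials.

    neurons: list of dicts, stimulus type -> list of single-trial responses.
    train_stimuli / test_stimuli: stimulus type of each simulated trial.
    """
    chosen = [rng.choice(neurons) for _ in range(n_neurons)]  # with replacement
    train_x = [[] for _ in train_stimuli]
    test_x = [[] for _ in test_stimuli]
    for neuron in chosen:
        # Training and test responses of a neuron never overlap.
        groups = {s: split_responses(r, rng=rng) for s, r in neuron.items()}
        for row, stim in zip(train_x, train_stimuli):
            row.append(rng.choice(groups[stim][0]))  # resample with replacement
        for row, stim in zip(test_x, test_stimuli):
            row.append(rng.choice(groups[stim][1]))
    return train_x, test_x
```

The rows of the training matrix, labeled by motion direction, are what a classifier such as an SVM would be fit on; accuracy is then measured on the test matrix separately for each coherence.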

Statistics

Statistical significance was defined as p < 0.05 and exact p values are reported. Exact values of n and what n represents are described in the results section and figure captions. Repeated-measures ANOVAs were used to determine which stimulus parameters affected behavioral performance. Sphericity was tested using Mauchly’s test and, when it was violated, p values were corrected using the Greenhouse-Geisser approximation. Tukey’s Honest Significant Difference tests following repeated-measures ANOVAs were used to test whether animals discriminated between the two directions for a given stimulus parameter value. Paired-sample t-tests were used to determine the effect of muscimol and saline injections on accuracy and on the percentage of trials with no choices. Paired-sample t-tests were used to compare the directional tuning (DSI and Vec) of the DSGCs between responses to RDKs and to moving bars. Binomial tests were used to compare the number of temporal and nasal DS L2/3 V1 neurons. One-way ANOVAs were used to assess the relationship between visual response amplitudes and directional tuning (DSI and Vec). Chi-square goodness of fit tests were used to compare the distributions of preferred directions with a uniform distribution. A 2-way ANOVA was used to assess the effects of coherence and direction on neurometric discriminability.

Data in the text are reported as mean ± S.D.

DATA AND SOFTWARE AVAILABILITY

Data and custom MATLAB code are available from the Lead Contact upon request.

Highlights

- Mice discriminate direction of motion from RDKs in a head-fixed task
- Mice solve the task by integrating motion information in time and space
- RDKs elicit direction-selective responses in V1 neurons and On-Off DSGCs
- Motion perception requires a functioning V1

Acknowledgments

This work was supported by fellowships from Fundação para a Ciência e a Tecnologia to TM, RFD, GF and MF. LP was supported by grants from Marie Curie (PCIG12-GA-2012-334353), the European Union’s Seventh Framework Program (FP7/2007-2013) under grant agreement No. 600925, the Human Frontier Science Program (RGY0085/2013) and by the Champalimaud Foundation. MTS and MBF were supported by NIH RO1EY019498 and RO1EY013528.

Footnotes

Author Contributions

Conceptualization, TM and LP; Methodology, TM and LP; Investigation, TM, GF, RFD, MF and MTS; Formal Analysis, TM and MTS; Writing, TM, MTS, MBF and LP; Funding Acquisition, MBF and LP; Supervision, MBF and LP.

Declaration of interests

The authors declare no competing interests.
