Abstract

The emergence of the classical world from the quantum substrate of our Universe is a long-standing conundrum. In this paper, I describe three insights into the transition from quantum to classical that are based on the recognition of the role of the environment. I begin with the derivation of preferred sets of states that help to define what exists: our everyday classical reality. They emerge as a result of the breaking of the unitary symmetry of the Hilbert space which happens when the unitarity of quantum evolutions encounters nonlinearities inherent in the process of amplification, that is, of replicating information. This derivation is accomplished without the usual tools of decoherence, and accounts for the appearance of quantum jumps and the emergence of preferred pointer states consistent with those obtained via environment-induced superselection, or einselection. The pointer states obtained in this way determine what can happen (they define events) without appealing to Born’s Rule for probabilities. Therefore, $p_k = |\psi_k|^2$ can now be deduced from the entanglement-assisted invariance, or envariance, a symmetry of entangled quantum states. With probabilities at hand, one also gains new insights into the foundations of quantum statistical physics. Moreover, one can now analyse the information flows responsible for decoherence. These information flows explain how the perception of objective classical reality arises from the quantum substrate: the effective amplification that they represent accounts for the objective existence of the einselected states of macroscopic quantum systems through the redundancy of pointer state records in their environment, that is, through quantum Darwinism.

This article is part of a discussion meeting issue ‘Foundations of quantum mechanics and their impact on contemporary society’.

Keywords: quantum Darwinism, decoherence, quantum jumps, probabilities, Born’s Rule

1. Introduction and preview

This survey article is not a comprehensive review. It is, nevertheless, a brief review of several interrelated developments that can be collectively described as the ‘quantum theory of classical reality’.

Two mini-reviews in Nature Physics [1] and Physics Today [2] are also available. A more detailed review is given in [3]. It is by now somewhat out of date, as several relevant results have been obtained since 2007 when it was written. Moreover, a book that will cover this same ground, as well as the theory of decoherence and other related subjects, is (slowly) being written [4]. Nevertheless, it is hoped that readers may appreciate, in the interim, an update as well as the more informal presentation style of this overview.

The ‘relative state interpretation’ set out 50 years ago by Hugh Everett III [5,6] is a convenient starting point for our discussion. Within its context, one can re-evaluate the basic axioms of quantum theory (as extracted, for example, from Dirac [7]). The Everettian view of the Universe is a good way to motivate exploring the effect of the environment on the state of the system. (Of course, a complementary motivation based on a non-dogmatic reading of Bohr [8] is also possible.)

The basic idea we shall pursue here is to accept a relative state explanation of the ‘collapse of the wavepacket’ by recognizing, with Everett, that observers perceive the state of the ‘rest of the Universe’ relative to their own state, or—to be more precise—relative to the state of their records. This allows quantum theory to be universally valid. (This does not mean that one has to accept a ‘many worlds’ ontology; see [3] for discussion.)

Much of the heat in various debates on the foundations of quantum theory seems to be generated by the expectation that a single idea should provide a complete solution. When this does not happen—when there is progress, but there are still unresolved issues—the possibility that an idea responsible for this progress may be a step in the right direction—but that more than one idea, one step, is needed—is often dismissed. As we shall see, developing the quantum theory of our classical everyday reality requires the solution of several problems and calls for several ideas. In order to avoid circularities, they need to be introduced in the right order.

(a). Preferred pointer states from einselection

Everett explains the perception of the collapse. However, his relative state approach raises three questions absent in Bohr’s Copenhagen interpretation [8] that relied on the independent existence of an ab initio classical domain. Thus, in a completely quantum Universe, one is forced to seek sets of preferred, effectively classical but ultimately quantum, states that can define what exists—branches of the universal state vector—and that allow observers to keep reliable records. Without such a preferred basis, relative states are just ‘too relative’, and the relative state approach suffers from basis ambiguity [9].

Decoherence selects preferred pointer states [9–11], so this issue was in fact resolved some time ago. The principal consequence of environment-induced decoherence is that, in open quantum systems—systems interacting with their environments—only certain quantum states retain stability in spite of the immersion of the system in the environment: superpositions are unstable, and quickly decay into mixtures of the einselected, stable pointer states [1–4,9–19]. This is einselection—a nickname for environment-induced superselection. Thus, while the significance of the environment in suppressing quantum behaviour was pointed out by Dieter Zeh already in 1970 [20], the role of einselection in the emergence of these preferred pointer states in the transition from quantum to classical has only become fully appreciated since 1981 [21].

(b). Born’s Rule from envariance

Einselection can account for preferred sets of states, and hence for Everettian ‘branches’. But this is achieved at a very high price: the usual practice of decoherence is based on averaging (as it involves reduced density matrices defined by a partial trace). This means that one is using Born’s Rule to relate amplitudes to probabilities. But, as emphasized by Everett, Born’s Rule should not be postulated in an approach that is based on purely unitary quantum dynamics. The assumption of the universal validity of quantum theory raises the issue of the origin of Born’s Rule, $p_k = |\psi_k|^2$, which, following the original conjecture [22], is simply postulated in textbook discussions.

Here we shall see that Born’s Rule can be derived from entanglement-assisted invariance, or envariance—from the symmetry of entangled quantum states. Envariance is a purely quantum symmetry, as it is critically dependent on the telltale quantum feature—entanglement. Envariance sheds new light on the origin of probabilities relevant for the everyday world we live in, e.g. for statistical physics and thermodynamics. Moreover, the fundamental derivation of objective probabilities allows one to discuss information flows in our quantum Universe, and hence understand how the perception of classical reality emerges from the quantum substrate.

(c). Classical reality via quantum Darwinism

Even preferred quantum states defined by einselection are still ultimately quantum. Therefore, they cannot be found out by initially ignorant observers through direct measurement without getting disrupted (reprepared). Yet, the states of macroscopic systems in our everyday world seem to exist objectively—they can be found out by anyone without getting disrupted. This ability to find out an unknown state is in fact an operational definition of ‘objective existence’. So, if we are to explain the emergence of everyday objective classical reality, we need to identify the quantum origin of objective existence.

We shall do that by dramatically upgrading the role of the environment: in decoherence theory, the environment is the collection of degrees of freedom where quantum coherence (and hence phase information) is lost. However, in ‘real life’, the role of the environment is in effect that of a witness (e.g. [17,23]) to the state of the system of interest, and a communication channel through which the information reaches us, the observers. This mechanism for the emergence of the classical objective reality is the subject of the theory of quantum Darwinism.

2. Quantum postulates and relative states

We start from a well-defined solid ground: the list of quantum postulates that are explicit in Dirac [7], and at least implicit in most quantum textbooks.

The first two deal with the mathematics of quantum theory:

(i) The state of a quantum system is represented by a vector in its Hilbert space $\mathcal{H}_{\mathcal{S}}$.

(ii) Evolutions are unitary (e.g. generated by the Schrödinger equation).

These two postulates provide an essentially complete summary of the mathematical structure of quantum physics. They are often [24,25] supplemented by a composition postulate:

(o) The state of a composite quantum system is represented by a vector in the tensor product of the Hilbert spaces of its components.

Physicists sometimes differ in assessing how much of postulate (o) follows from (i). We shall not be distracted by this issue, and move on to where the real problems are. Readers can follow their personal taste in supplementing (i) and (ii) with whatever portion of (o) they deem necessary. It is, nevertheless, useful to list (o) explicitly to emphasize the role of the tensor structure it posits: it is crucial for entanglement, the quantum phenomenon we will depend on.

Using (o), (i) and (ii), suitable Hamiltonians, etc., one can calculate. Yet, such quantum calculations are only a mathematical exercise: without additional postulates, one can predict nothing of experimental consequence from their results. What is so far missing is physics: a way to establish correspondence between abstract state vectors in $\mathcal{H}_{\mathcal{S}}$ and laboratory experiments (and/or everyday experience) is needed to relate quantum mathematics to our world.

Establishing this correspondence starts with the next postulate:

(iii) Immediate repetition of a measurement yields the same outcome.

Immediate repeatability is an idealization (it is hard to devise such non-demolition measurements, but it can be done). Yet postulate (iii) is uncontroversial. The notion of a ‘state’ is based on predictability, and the most rudimentary prediction is a confirmation that the state is what it is known to be. This key ingredient of quantum physics goes beyond the mathematics of postulates (o)–(ii). It enters through the repeatability postulate (iii). Moreover, a classical equivalent of (iii) is taken for granted (an unknown classical state can be discovered without getting disrupted), so repeatability does not clash with our classical intuition.

Postulate (iii) is the last uncontroversial postulate on the textbook list. This collection comprises our quantum core postulates—our credo, the foundation of the quantum theory of the classical.

In contrast to classical physics (where unknown states can be found out by an initially ignorant observer), the very next quantum axiom limits the predictive attributes of the state compared with what they were in the classical domain:

(iv) Measurement outcomes are limited to an orthonormal set of states (eigenstates of the measured observable). In any given run of a measurement, an outcome is just one such state.

This collapse postulate is controversial. To begin with, in a completely quantum Universe, it is inconsistent with the first two postulates: starting from a general pure state $|\psi_{\mathcal{S}}\rangle$ of the system (postulate (i)), and an initial state $|A_0\rangle$ of the apparatus $\mathcal{A}$, and assuming unitary evolution (postulate (ii)), one is led to a superposition of outcomes:

$$|\psi_{\mathcal{S}}\rangle |A_0\rangle = \Big(\sum_k a_k |s_k\rangle\Big) |A_0\rangle \;\Rightarrow\; \sum_k a_k |s_k\rangle |A_k\rangle, \tag{2.1}$$

which is in contradiction with, at least, a literal interpretation of the ‘collapse’ anticipated by axiom (iv). This conclusion follows for an apparatus that works as intended in tests (i.e. |sk〉|A0〉⇒|sk〉|Ak〉) from linearity of quantum evolutions that is in turn implied by the unitarity of postulate (ii).
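As a rough numerical illustration of how linearity turns a working premeasurement into the superposition of equation (2.1), the sketch below assumes a two-state system and a two-state apparatus, and uses a CNOT gate as a stand-in for the measurement interaction. The helper names (`kron`, `apply`), the choice of CNOT and the amplitude values are illustrative assumptions, not part of the original discussion.

```python
import math

def kron(a, b):
    """Tensor product of two state vectors given as lists of amplitudes."""
    return [x * y for x in a for y in b]

def apply(U, psi):
    """Apply the matrix U (a list of rows) to the state vector psi."""
    return [sum(U[i][j] * psi[j] for j in range(len(psi))) for i in range(len(U))]

# Assumed premeasurement unitary: a CNOT with the system as control.
# Joint basis ordering is |s, A> -> index 2*s + A.
CNOT = [
    [1, 0, 0, 0],
    [0, 1, 0, 0],
    [0, 0, 0, 1],
    [0, 0, 1, 0],
]

s0, s1 = [1, 0], [0, 1]      # outcome states |s_0>, |s_1>
A0 = [1, 0]                  # ready state |A_0> of the apparatus

# The apparatus "works as intended in tests": |s_k>|A_0> => |s_k>|A_k>.
out_s0 = apply(CNOT, kron(s0, A0))
out_s1 = apply(CNOT, kron(s1, A0))

# Illustrative amplitudes a_0, a_1 for a general pure state of the system.
a0, a1 = math.sqrt(0.3), math.sqrt(0.7)
out_superposition = apply(CNOT, kron([a0, a1], A0))

# Linearity: the result is the same superposition of the tested outcomes.
expected = [a0 * x + a1 * y for x, y in zip(out_s0, out_s1)]
print(out_superposition, expected)
```

The final state is the entangled sum rather than any single term, which is the tension with a literal reading of axiom (iv).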

Everett settled (or at least bypassed) the ‘collapse’ part of the problem with (iv)—an observer perceives the state of the rest of the Universe relative to his/her records. This is the essence of the relative state interpretation.

However, from the standpoint of our quest for classical reality, perhaps the most significant and disturbing implication of (iv) is that quantum states do not exist—at least not in the objective sense which we are used to in the classical world. The outcome of the measurement is typically not the pre-existing state of the system, but one of the eigenstates of the measured observable.

Thus, whatever a quantum state is, ‘objective existence’ independent of what is known about it is clearly not one of its attributes. This malleability of quantum states clashes with the classical idea of what the state should be. Some even go as far as to claim that quantum states are simply a description of the information that an observer has, and have essentially nothing to do with ‘existence’.

I believe this denial of existence under any circumstances is going too far—after all, there are situations when a state can be found out, and the repeatability postulated by (iii) recognizes that its existence can be confirmed. But, clearly, (iv) limits the ‘quantum existence’ of states to situations that are ‘under the jurisdiction’ of postulate (iii) (or slightly more general situations where the pre-existing density matrix of the system commutes with the measured observable).

Collapse postulate (iv) complicates interpreting the quantum formalism, as has been appreciated since Bohr and von Neumann [8,26]. Therefore, at least before Everett, it was often cited as an indication of the ultimate insolubility of the ‘quantum measurement problem’. Yet, (iv) is hard to argue with—it captures what happens in laboratory measurements.

To resolve the clash between the mathematical structure of quantum theory and our perception of what happens in the laboratory, in real-world measurements, one can accept—with Bohr—the primacy of our experience. The inconsistency of (iv) with the mathematical core of the quantum formalism—the superpositions of (i) and the unitarity of (ii)—can then be blamed on the nature of the apparatus. According to the Copenhagen interpretation, the apparatus is classical, and, therefore, not subject to the quantum principle of superposition (which follows from (i)). Measurements straddle the quantum–classical border, so they need not abide by the unitarity of (ii). Therefore, collapse can happen in the ‘lawless’ quantum–classical no man’s land.

This quantum–classical duality posited by Bohr challenges the unification instinct of physicists. One way of viewing decoherence is to regard einselection as a mechanism that accounts for effective classicality by suspending the validity of the quantum principle of superposition in a subsystem while upholding it for the composite system that includes the environment [11,17].

Everett’s alternative to Bohr’s approach was to abandon the literal collapse and recognize that, once the observer is included in the wave function, one can consistently interpret the consequences of such correlations. The right-hand side of equation (2.1) contains all the possible outcomes, so the observer who records outcome no. 17 perceives the branch of the Universe that is consistent with that event reflected in his records. This view of the collapse is also consistent with the repeatability of postulate (iii); remeasurement by the same observer using the same (non-demolition) device yields the same outcome.

Nevertheless, this relative state view of the quantum Universe suffers from a basic problem: the principle of superposition (the consequence of axiom (i)) implies that the state of the system or of the apparatus after the measurement can be written in infinitely many unitarily equivalent basis sets in the Hilbert spaces of the apparatus (or of the observer’s memory):

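$$\sum_k a_k |s_k\rangle |A_k\rangle = \sum_k a'_k |s'_k\rangle |A'_k\rangle = \sum_k a''_k |s''_k\rangle |A''_k\rangle = \cdots \tag{2.2}$$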

This is basis ambiguity [9]. It appears as soon as, with Everett, one eliminates axiom (iv). The bases employed above are typically non-orthogonal, but in the Everettian relative state setting there is nothing that would preclude them, or that would favour, for example, the Schmidt basis of $\mathcal{S}$ and $\mathcal{A}$ (the orthonormal basis that is unique, provided that the absolute values of the Schmidt coefficients in such a Schmidt decomposition of an entangled bipartite state differ).
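A small numerical sketch may make the ambiguity concrete. It assumes two-state systems and checks that, when the Schmidt coefficients are equal, the same entangled state has the single-sum form in two different orthonormal bases, whereas for unequal coefficients a cross term appears in the rotated basis. The helper names and the amplitude values are illustrative assumptions.

```python
import math

def kron(a, b):
    """Tensor product of two real state vectors."""
    return [x * y for x in a for y in b]

def add(u, v):
    return [x + y for x, y in zip(u, v)]

def scale(c, v):
    return [c * x for x in v]

def inner(u, v):
    """Inner product for real amplitude lists."""
    return sum(x * y for x, y in zip(u, v))

r = 1 / math.sqrt(2)
zero, one = [1.0, 0.0], [0.0, 1.0]
plus, minus = [r, r], [r, -r]          # rotated orthonormal basis

# Equal coefficients: the same vector in the {0,1} and the {+,-} bases.
in_z = scale(r, add(kron(zero, zero), kron(one, one)))
in_x = scale(r, add(kron(plus, plus), kron(minus, minus)))
same_state = all(math.isclose(x, y, abs_tol=1e-12) for x, y in zip(in_z, in_x))

# Unequal coefficients: the rotated basis no longer gives a single-sum form,
# because the amplitude on |+>|-> is (a - b) / 2, which is non-zero.
a, b = math.sqrt(0.3), math.sqrt(0.7)
unequal = add(scale(a, kron(zero, zero)), scale(b, kron(one, one)))
cross_term = inner(kron(plus, minus), unequal)

print(same_state, cross_term)
```

This only illustrates the orthonormal (Schmidt) case. The non-orthogonal decompositions mentioned in the text are more general still.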

In our everyday reality, we do not seem to be plagued by such basis ambiguity problems. So, in our Universe there is something that (in spite of (i) and the egalitarian superposition principle it implies) picks out preferred states, and makes them effectively classical. Axiom (iv) anticipates this.

Consequently, before there is an (apparent) collapse in the sense of Everett, a set of preferred states—one of which is selected by (or, at the very least, consistent with) the observer’s records—must be chosen. There is nothing in the writings of Everett that would even hint that he was aware of basis ambiguity and the questions it leads to.

The next question concerns probabilities: How likely is it that, after I measure, my state will be, say, $|\mathcal{I}_{17}\rangle$? Everett was keenly aware of this issue, and even believed that he had solved it by deriving Born’s Rule. In retrospect, it is clear that the argument he proposed (as well as the arguments proposed by his followers, including DeWitt [24,25,27], Graham [25] and Geroch [28], who noted the failure of Everett’s original approach, and attempted to fix the problem) did not accomplish as much as was hoped for, and did not amount to a derivation of Born’s Rule (see [29–31] for influential critical assessments).

In textbook versions of the quantum postulates, probabilities are assigned by another (Born’s Rule) axiom:

(v) The probability $p_k$ of an outcome $|s_k\rangle$ in a measurement of a quantum system that was previously prepared in the state $|\psi\rangle$ is given by $|\langle s_k|\psi\rangle|^2$.

Born’s Rule fits very well with Bohr’s approach to the quantum–classical transition (e.g. with postulate (iv)). However, Born’s Rule is at odds with the spirit of the relative state approach, or any approach that attempts (as we do) to deduce perception of the classical everyday reality starting from the quantum laws that govern our Universe. This does not mean that there is a mathematical inconsistency here: one can certainly use Born’s Rule (as the formula $p_k = |\langle s_k|\psi\rangle|^2$ is known) along with the relative state approach in averaging to get expectation values and the reduced density matrix.

Indeed, until the derivation of Born’s Rule in a framework of decoherence was proposed, decoherence practice relied on probabilities given by $p_k = |\langle s_k|\psi\rangle|^2$. They enter whenever one assigns physical interpretation to reduced density matrices, a key tool of the decoherence theory. Everett’s point was not that Born’s Rule is wrong, but, rather, that it should be derived from the other quantum postulates, and we shall show how to do that.
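To see where Born’s Rule enters the usual decoherence toolkit, the following sketch computes a reduced density matrix by partial trace for a two-state system correlated with a two-state apparatus. The function name `reduced_density_matrix`, the amplitudes and the angle `theta` between the apparatus records are all illustrative assumptions. The diagonal entries coincide with $|a_k|^2$, but reading them as probabilities is already an application of postulate (v).

```python
import math

def reduced_density_matrix(psi, dim_s, dim_a):
    """Partial trace over the apparatus for a pure joint state.

    psi is indexed as psi[s * dim_a + a] in the product basis.
    """
    return [
        [
            sum(psi[i * dim_a + m] * complex(psi[j * dim_a + m]).conjugate()
                for m in range(dim_a))
            for j in range(dim_s)
        ]
        for i in range(dim_s)
    ]

# Illustrative amplitudes a_k of the system state.
a = [math.sqrt(0.3), math.sqrt(0.7)]

# Assumed apparatus records |A_0>, |A_1> with overlap <A_0|A_1> = cos(theta).
theta = math.pi / 3
A = [[1.0, 0.0], [math.cos(theta), math.sin(theta)]]

# Joint state sum_k a_k |s_k>|A_k> written in the product basis.
psi = [a[k] * A[k][m] for k in range(2) for m in range(2)]

rho = reduced_density_matrix(psi, 2, 2)
born = [abs(x) ** 2 for x in a]

# Diagonal: |a_k|^2. Off-diagonal: a_0 * a_1 * <A_1|A_0>, which is
# suppressed as the apparatus records become more distinguishable.
print(rho[0][0].real, rho[1][1].real, rho[0][1].real, born)
```

Setting `theta = math.pi / 2` (orthogonal records) makes the off-diagonal term vanish up to rounding, which is the familiar signature of decoherence in this toy setting.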

3. Quantum origin of quantum jumps

To restate briefly the three problems identified above, we need to derive the essence of the collapse postulate (iv) and Born’s Rule (v) from our credo—the core quantum postulates (o)–(iii). Moreover, even when we accept the relative state origin of ‘single outcomes’ and ‘collapse’, we still need to justify the emergence of the preferred basis that is the essence of (iv).

This issue (which in our summary of textbook axiomatics of quantum theory is part of the collapse postulate) is so important that it is often captured by a separate postulate which declares that ‘observables are Hermitian’. This, in effect, means that the outcomes of measurements should correspond to orthogonal states in the Hilbert space. Furthermore, we should do it without appealing to Born’s Rule—without decoherence, or at least without its usual tools such as reduced density matrices that rely on Born’s Rule. Once we have preferred states, we will also have a set of candidate events. Once we have events, we shall be able to pose questions about their probabilities.

The preferred basis problem was settled by environment-induced superselection (einselection), usually regarded as a principal consequence of decoherence. This is discussed elsewhere [9,10]. Preferred pointer states and einselection are usually justified by appealing to decoherence. Therefore, they come at a price that would have been unacceptable to Everett: decoherence and einselection employ reduced density matrices and trace, and so their predictions are based on averaging, and thus on probabilities—on Born’s Rule.

Here we present an alternative strategy for arriving at preferred states that—while not at odds with decoherence—does not rely on the Born’s Rule-dependent tools of decoherence. Our overview of the origin of quantum jumps is brief. However, we direct the reader to references where different steps of that strategy are discussed in more detail. In short, we describe how one should go about doing the necessary physics, but we only sketch what needs to be done, and we do not explain all the details—the requisite steps are carried out in the references we provide: our discussion is meant as a guide to the literature and not a substitute.

Decoherence done ‘in the usual way’ (which, by the way, is a step in the right direction, in understanding the practical, and even many of the fundamental, aspects of the quantum–classical transition!) is not a good starting point in addressing the more fundamental aspects of the origins of the classical.

In particular, decoherence is not a good starting point for the derivation of Born’s Rule. We have already noted the problem with this strategy: it courts circularity. It employs Born’s Rule to arrive at the pointer states by using the reduced density matrix which is obtained through trace—i.e. averaging, which is where Born’s Rule is implicitly invoked (e.g. [32]). So, using decoherence to derive Born’s Rule is at best a consistency check.

While I am most familiar with my own transgressions in this matter [33], this circularity also afflicts other approaches, including the proposal based on decision theory [34–36], as noted also by Forrester [37] and Dawid & Thebault [38] among others. Therefore, one has to start the task from a different end.

To get anywhere—e.g. to define ‘events’ essential in the introduction of probabilities—we need to show how the mathematical structure of quantum theory (postulates (o), (i) and (ii)—Hilbert space and unitarity) supplemented by the uncontroversial postulate (iii) (immediate repeatability, hence predictability) leads to preferred sets of states.

(a). Quantum jumps from quantum core postulates

Surprisingly enough, deducing preferred states from our ‘quantum credo’ turns out to be simple. The possibility of repeated confirmation of an outcome is all that is needed to establish an effectively classical domain within the quantum Universe and to define events such as measurement outcomes.

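One can accomplish this with minimal assumptions (‘quantum core’ postulates (o)–(iii) on the above list) as described in [39,40]. Here we review the basic steps. We assume that $|v\rangle$ and $|w\rangle$ are among the possible repeatably accessible outcome states of $\mathcal{S}$:

$$|v\rangle |A_0\rangle \;\Rightarrow\; |v\rangle |A_v\rangle \tag{3.1a}$$

and

$$|w\rangle |A_0\rangle \;\Rightarrow\; |w\rangle |A_w\rangle. \tag{3.1b}$$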

So far, we have employed postulates (i) and (iii). The measurement, when repeated, would yield the same outcome, as the pre-measurement states have not changed. Thus, postulate (iii) is indeed satisfied.

We now assume the process described by equations (3.1) is fully quantum, so postulate (ii)—unitarity of evolutions—must also apply. Unitarity implies that the overlap of the states before and after must be the same. Hence:

$$\langle v|w\rangle \left(1 - \langle A_v|A_w\rangle\right) = 0. \tag{3.2}$$

Our conclusions follow from this simple equation. There are two possibilities that depend on the overlap 〈v|w〉.

Suppose first that $\langle v|w\rangle \neq 0$. One is then forced to conclude that the measurement was unsuccessful, as the state of $\mathcal{A}$ was unaffected by the process above. That is, the transfer of information from $\mathcal{S}$ to $\mathcal{A}$ must have failed completely, as in this case $\langle A_v|A_w\rangle = 1$ must hold. In particular, the apparatus can bear no imprint that distinguishes between states $|v\rangle$ and $|w\rangle$ that are not orthogonal.

The other possibility, 〈v|w〉=0, allows for an arbitrary 〈Av|Aw〉, including a perfect record, 〈Av|Aw〉=0. Thus, outcome states must be orthogonal if—in accord with postulate (iii)—they are to survive intact a successful information transfer, in general, or a quantum measurement, in particular, so that immediate remeasurement can yield the same result.

The same derivation can be carried out for $\mathcal{S}$ with a Hilbert space of dimension $N$ starting with a system state vector $|\psi_{\mathcal{S}}\rangle = \sum_{k=1}^{N} a_k |s_k\rangle$, where (as before) a priori $\{|s_k\rangle\}$ need to be only linearly independent.

The simple reasoning above leads to a surprisingly decisive conclusion: orthogonality of the outcome states of the system is absolutely essential for them to imprint even a minute difference on the state of any other system while retaining their identity. The overlap 〈v|w〉 must be 0 exactly for 〈Av|Aw〉 to differ from unity.
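The conclusion can be checked in a toy setting. The sketch below assumes a two-state system and apparatus and takes a CNOT gate as the copying interaction. It verifies that the orthogonal pair $|0\rangle$, $|1\rangle$ is copied without disturbance, that the overlap of joint states is preserved by the unitary, and that a state $|+\rangle$ non-orthogonal to $|0\rangle$ does not survive intact but ends up entangled with the apparatus. The helper names and the product-state test are illustrative assumptions.

```python
import math

def kron(a, b):
    """Tensor product of two real state vectors."""
    return [x * y for x in a for y in b]

def apply(U, psi):
    """Apply the matrix U (a list of rows) to the state vector psi."""
    return [sum(U[i][j] * psi[j] for j in range(len(psi))) for i in range(len(U))]

def inner(u, v):
    """Inner product for real amplitude lists."""
    return sum(x * y for x, y in zip(u, v))

def is_product(psi):
    """A two-qubit pure state is a product state iff c00*c11 - c01*c10 = 0."""
    c00, c01, c10, c11 = psi
    return math.isclose(c00 * c11 - c01 * c10, 0.0, abs_tol=1e-12)

# Assumed copying interaction: CNOT with the system as control.
CNOT = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 0, 1], [0, 0, 1, 0]]

r = 1 / math.sqrt(2)
zero, one, plus = [1.0, 0.0], [0.0, 1.0], [r, r]
A0 = [1.0, 0.0]

after_zero = apply(CNOT, kron(zero, A0))   # |0>|A_0> => |0>|A_0>
after_one = apply(CNOT, kron(one, A0))     # |1>|A_0> => |1>|A_1>
after_plus = apply(CNOT, kron(plus, A0))   # entangled, not |+>|A_+>

# Unitarity preserves the overlap of the joint states.
overlap_before = inner(kron(zero, A0), kron(plus, A0))
overlap_after = inner(after_zero, after_plus)

print(is_product(after_zero), is_product(after_one), is_product(after_plus))
print(overlap_before, overlap_after)
```

Because $\langle 0|+\rangle \neq 0$, no unitary could leave both states unchanged while producing distinguishable records. The CNOT copies the orthogonal pair faithfully and disturbs $|+\rangle$ instead.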

Imperfect or accidental information transfers (e.g. to the environment in the course of decoherence) can also define preferred sets of states, provided that the crucial non-demolition demand of postulate (iii) is imposed on the unitary evolution responsible for the information flow.

A straightforward extension of the above derivation to where it can be applied not just to measured quantum systems (where non-demolition is a tall order) but also to the measuring devices (where repeatability is essential) is possible [39,40]. It is somewhat more demanding technically, as one needs to allow for mixed states and for decoherence in a model of a presumably macroscopic apparatus, but the conclusion is the same: records maintained by the apparatus or repeatably accessible states of macroscopic but ultimately quantum systems must correspond to orthogonal subspaces of their Hilbert space.

It is important to emphasize that we are not asking for clearly distinguishable records (i.e. we are not demanding orthogonality of the states of the apparatus, 〈Av|Aw〉=0). Indeed, in the macroscopic case [40] one does not even ask for the state of the system to remain unchanged, but only for the outcomes of the consecutive measurements to be identical (i.e. the evidence of repeatability is in the outcomes). Still, even under these rather weak assumptions, one is forced to conclude that quantum states can exert distinguishable influences and remain unperturbed only when they are orthogonal. To arrive at this conclusion we only used postulate (i)—the fact that when two vectors in the Hilbert space are identical, then physical states they correspond to must also be identical.

(b). Discussion

The emergence of orthogonal outcome states is established above on the foundation of very basic (and very quantum) assumptions. It leads one to conclude that observables are indeed associated with Hermitian operators.

Hermitian observables are usually introduced in a very different manner—they are the (postulated!) quantum versions of the familiar classical quantities. This emphasizes the physical significance of their spectra (especially when they correspond to conserved quantities). Their orthogonal eigenstates emerge from the mathematics, once their Hermitian nature is assumed. Here we have deduced their Hermiticity by proving orthogonality of their eigenstates—possible outcomes—from the quantum core postulates by focusing on the effect of information transfer on the measured system.

The restriction to an orthogonal set of outcomes yields a preferred basis: the essence of the collapse axiom (iv) need not be postulated! It follows from the uncontroversial quantum core postulates (o)–(iii).

We note that the preferred basis arrived at in this manner essentially coincides with the basis obtained a long time ago via einselection [9,10]. It is just that here we have arrived at this familiar result without implicit appeal to Born’s Rule, which is essential if we want to take the next step, and derive postulate (v).

We have relied on unitarity, so we did not derive the actual collapse of the wavepacket to a single outcome—a single event. Collapse is non-unitary, so one cannot deduce it starting from the quantum core that includes postulate (ii). However, we have accounted for one of the key collapse attributes: the necessity of a symmetry breaking—of the choice of a single orthonormal set of states from amongst various possible basis sets, each of which can equally well span the Hilbert space of the system—follows from the core quantum postulates. This sets the stage for collapse—for quantum jumps.

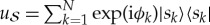

As we have already briefly noted, this reasoning can be extended [40] to when repeatably copied states belong to a macroscopic, decohering system (e.g. an apparatus pointer). In that case a microstate can be perturbed by copying (or by the environment). What matters then is not the ‘non-demolition’ of the microstate of the pointer, but the persistence of the record its macrostate (corresponding to a whole collection of microstates) represents. To formulate this demand precisely, one can rely on repeatability of copies: for instance, even though the microstates of the pointer change upon readout due to the interaction with the environment, its macrostate should still represent the same measurement outcome—it should still contain the same ‘actionable information’ [40]. This more general discussion addresses also other issues (e.g. connection between repeatability, distinguishability and positive operator-valued measures (POVMs), raised as FAQ 4 in the frequently asked questions in §6) that arise in realistic settings (see figure 1 for the illustration of the key idea).

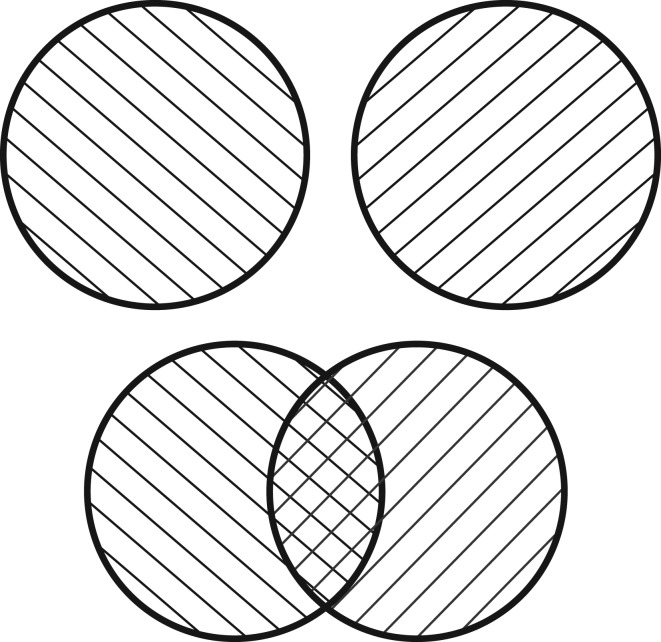

Figure 1.

The fundamental (pre-quantum) connection between distinguishability and repeatability of measurements. The two circles represent two states of the measured system. They correspond to two outcomes—e.g. two properties of the underlying states (represented by two cross-hatchings). A measurement that can result in either outcome—that can produce a record correlated with these two properties—can be repeatable only when the two corresponding states (the two circles) do not overlap (case illustrated at the top). Repeatability is impossible without distinguishability: when two states overlap (case illustrated at the bottom), repetition of the measurement can always result in a system switching the state (and, thus, defying repeatability). In the quantum setting this pre-quantum connection between repeatability and distinguishability leads to the derivation of orthogonality of repeatable measurement outcomes (and the two cross-hatchings can be thought of as two linear polarizations of a photon—orthogonal on the top, but not below), but the basic intuition demanding distinguishability as a prerequisite for repeatability does not rely on the quantum formalism.

4. Probabilities from entanglement

The derivation of events allows, and even forces, one to enquire about their probabilities or—more specifically—about the relation between the probabilities of measurement outcomes and the initial pre-measurement state. As noted earlier, several past attempts at the derivation of Born’s Rule turned out to be circular. Here we present the key ideas behind a circularity-free approach.

We emphasize that our derivation of events does not rely on Born’s Rule. In particular, we have not attached any physical interpretation to the values of scalar products, and the key to our conclusions rests on whether the scalar product is (or is not) 0 or 1, or neither.

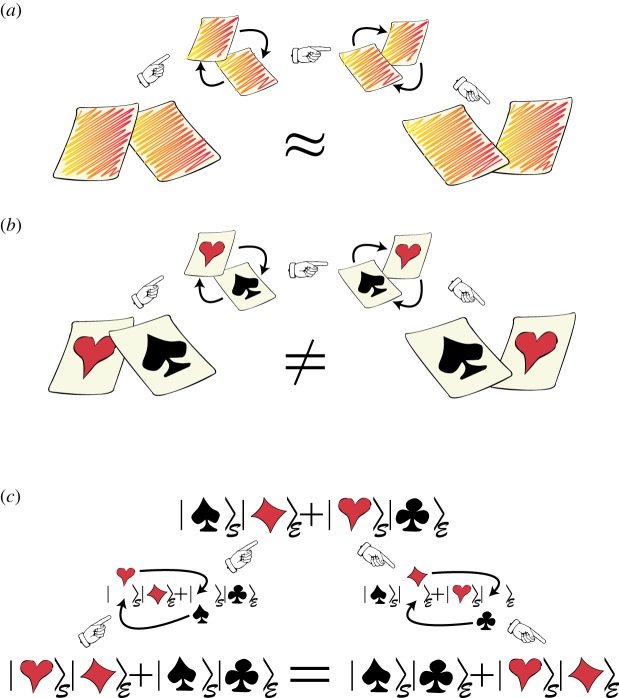

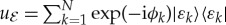

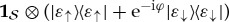

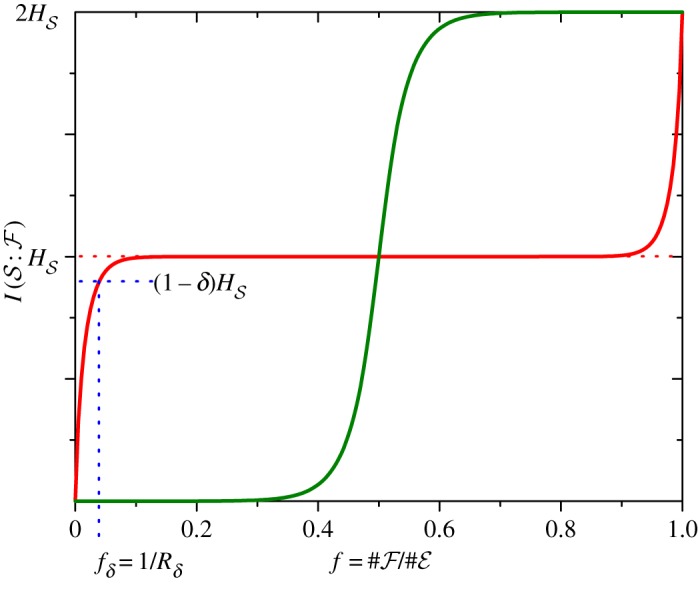

We now briefly review the envariant derivation of Born’s Rule based on the symmetry of entangled quantum states—on entanglement-assisted invariance or envariance. The study of envariance as a physical basis of Born’s Rule started with [17,41,42], and is now the focus of several other papers (e.g. [43–45]). The key idea is illustrated in figure 2.

Figure 2.

Envariance (entanglement-assisted invariance) is a symmetry of entangled states. Envariance allows one to demonstrate Born’s Rule [17,41,42] using a combination of an old intuition of Laplace [47] about invariance and the origins of probability and quantum symmetries of entanglement.

(a) Laplace’s principle of indifference (illustrated with playing cards) aims to establish symmetry using invariance under swaps. A player who doesn’t know the face values of cards is indifferent (does not care) if they are swapped before he gets the one on the left. For Laplace, this indifference was evidence of a (subjective) symmetry: it implied equal likelihood, that is, equal probabilities of the invariantly swappable alternatives. For the two cards, subjective probability $p_\spadesuit = \frac{1}{2}$ would be inferred by someone who doesn’t know their face value, but knows that one of them is a spade. When probabilities of a set of elementary events are provably equal, one can compute the probabilities of composite events and thus develop a theory of probability. Even the additivity of probabilities can be established (e.g. [48]). This is in contrast to Kolmogorov’s measure-theoretic axioms (which include additivity of probabilities). Above all, Kolmogorov’s theory does not assign probabilities to elementary events (physical or otherwise), while the envariant approach yields probabilities when the symmetries of elementary events under swaps are known.

(b) The problem with Laplace’s principle of indifference is its subjectivity. The actual physical state of the system (the two cards) is altered by the swap. A related problem is that the assessment of indifference is based on ignorance. It was argued, e.g. by supporters of the relative frequency approach (regarded by many as a more ‘objective’ foundation of probability), that it is impossible to deduce anything (including probabilities) from ignorance. This (along with subjectivity) is the reason why the equal likelihood approach is regarded with suspicion as a basis of probability in physics.

(c) In quantum physics, symmetries of entanglement can be used to deduce objective probabilities starting with a known state. Envariance is the relevant symmetry. When a pure entangled state of a system $\mathcal{S}$ and another system we call ‘an environment $\mathcal{E}$’ (anticipating connections with decoherence), $|\psi_{\mathcal{SE}}\rangle = \sum_{k=1}^{N} a_k |s_k\rangle|\varepsilon_k\rangle$, can be transformed by $U_\mathcal{S} = u_\mathcal{S}\otimes\mathbf{1}_\mathcal{E}$ acting solely on $\mathcal{S}$, but the effect of $U_\mathcal{S}$ can be undone by acting solely on $\mathcal{E}$ with an appropriately chosen $U_\mathcal{E} = \mathbf{1}_\mathcal{S}\otimes u_\mathcal{E}$, so that $U_\mathcal{E}(U_\mathcal{S}|\psi_{\mathcal{SE}}\rangle) = |\psi_{\mathcal{SE}}\rangle$, it is envariant under $u_\mathcal{S}$. For such composite states, one can rigorously establish that the local state of $\mathcal{S}$ remains unaffected by $u_\mathcal{S}$. Thus, for example, the phases of the coefficients in the Schmidt expansion $|\psi_{\mathcal{SE}}\rangle = \sum_{k=1}^{N} a_k |s_k\rangle|\varepsilon_k\rangle$ are envariant, as the effect of $u_\mathcal{S} = \sum_{k=1}^{N} e^{i\varphi_k}|s_k\rangle\langle s_k|$ can be undone by a countertransformation $u_\mathcal{E} = \sum_{k=1}^{N} e^{-i\varphi_k}|\varepsilon_k\rangle\langle\varepsilon_k|$ acting solely on the environment. This envariance of phases implies their irrelevance for the local states; in effect, it implies decoherence. Moreover, when the absolute values of the Schmidt coefficients are equal, a swap $|\spadesuit\rangle\langle\heartsuit| + |\heartsuit\rangle\langle\spadesuit|$ in $\mathcal{S}$ can be undone by a ‘counterswap’ $|\clubsuit\rangle\langle\diamondsuit| + |\diamondsuit\rangle\langle\clubsuit|$ in $\mathcal{E}$. So, as can be established more carefully [42], $p_\spadesuit = p_\heartsuit = \frac{1}{2}$ follows from the objective symmetry of such an entangled state. This proof of equal probabilities is based not on ignorance (as in Laplace’s subjective ‘indifference’) but on knowledge of the ‘wrong property’: the global observable that rules out (via quantum indeterminacy) any information about complementary local observables. When supplemented by simple counting, envariance leads to Born’s Rule also for unequal Schmidt coefficients [17,41,42].

As we shall see, the eventual loss of coherence between pointer states can also be regarded as a consequence of quantum symmetries of the states of systems entangled with their environment. Thus, the essence of decoherence arises from the symmetries of entangled states. Indeed, some of the consequences of einselection (including the emergence of preferred states, as we have seen in the previous section) can be studied without employing the usual tools of decoherence theory (reduced density matrices and trace) that, for their physical significance, rely on Born’s Rule.

Decoherence that follows from envariance also allows one to justify the additivity of probabilities, whereas the derivation of Born’s Rule by Gleason [46] assumed it (along with Kolmogorov’s other axioms of the measure-theoretic formulation of the foundations of probability theory, and with the Copenhagen-like setting). Appeal to symmetries leads to additivity also in the classical setting (as was noted already by Laplace [47,48]). Moreover, Gleason’s theorem (with its rather complicated proof based on ‘frame functions’ introduced especially for this purpose) provides no motivation as to why the measure he obtains should have any physical significance: why should it be regarded as a probability? As illustrated in figure 2 and discussed below, the envariant derivation of Born’s Rule has a transparent physical motivation.

The additivity of probabilities is a highly non-trivial point. In quantum theory, the overarching additivity principle is the quantum principle of superposition. Anyone familiar with the double-slit experiment knows that the probabilities of quantum states (such as the states corresponding to passing through one of the two slits) do not add, which in turn leads to interference patterns.
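The failure of additivity can be made explicit in one line. As an illustrative notation (an assumption of this sketch, not used elsewhere in the article), let $\psi_1$ and $\psi_2$ be the amplitudes for reaching a given point on the screen through slit 1 and slit 2, respectively:

$$|\psi_1+\psi_2|^2 = |\psi_1|^2 + |\psi_2|^2 + 2\,\mathrm{Re}\left(\psi_1^*\psi_2\right).$$

The last term is the interference term. The two contributions add as probabilities only when it vanishes, so it is the relative phase of $\psi_1$ and $\psi_2$ that stands in the way of additivity.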

The presence of entanglement eliminates local phases (thus suppressing quantum superpositions, i.e. doing the job of decoherence). This leads to additivity of the probabilities of events associated with preferred pointer states.

(a). Decoherence, phases and entanglement

Decoherence is the loss of phase coherence between preferred states. It occurs when $\mathcal{S}$ starts in a superposition of pointer states singled out by the interaction (represented below by the Hamiltonian $H_{\mathcal{SE}}$). As in equations (3.1), states of the system leave imprints (become ‘copied’), but now $\mathcal{S}$ is ‘measured’ by $\mathcal{E}$, its environment:

$$(\alpha|\uparrow\rangle+\beta|\downarrow\rangle)|\varepsilon_0\rangle \xrightarrow{H_{\mathcal{SE}}} \alpha|\uparrow\rangle|\varepsilon_\uparrow\rangle+\beta|\downarrow\rangle|\varepsilon_\downarrow\rangle = |\psi_{\mathcal{SE}}\rangle. \tag{4.1}$$

Equation (3.2) implied that the untouched states are orthogonal, $\langle\uparrow|\downarrow\rangle=0$. Their superposition $\alpha|\uparrow\rangle+\beta|\downarrow\rangle$ turns into an entangled $|\psi_{\mathcal{SE}}\rangle$. Thus, neither $\mathcal{S}$ nor $\mathcal{E}$ alone has a pure state. This loss of purity signifies decoherence. One can still assign a mixed state that represents surviving information about $\mathcal{S}$ to the system.

Phase changes can be detected: in a spin-$\frac{1}{2}$-like $\mathcal{S}$, $|{\rightarrow}\rangle = (|\uparrow\rangle+|\downarrow\rangle)/\sqrt{2}$ is orthogonal to $|{\leftarrow}\rangle = (|\uparrow\rangle-|\downarrow\rangle)/\sqrt{2}$. The phase shift operator $u_\mathcal{S}^{\varphi} = |\uparrow\rangle\langle\uparrow| + e^{i\varphi}|\downarrow\rangle\langle\downarrow|$ alters the phase that distinguishes them: for instance, when $\varphi=\pi$, it converts $|{\rightarrow}\rangle$ to $|{\leftarrow}\rangle$. In experiments $u_\mathcal{S}^{\varphi}$ would shift the interference pattern.
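The effect of the phase shift on an isolated spin can be checked numerically. The following is a minimal sketch in plain Python, assuming the phase shift operator has the form $|\uparrow\rangle\langle\uparrow| + e^{i\varphi}|\downarrow\rangle\langle\downarrow|$ and representing a state as a pair of amplitudes for ↑ and ↓. The helper names `phase_shift` and `overlap` are introduced here for illustration only.

```python
import cmath
import math

def phase_shift(state, phi):
    """Apply |up><up| + e^{i phi}|down><down| to a state (c_up, c_down)."""
    c_up, c_down = state
    return (c_up, cmath.exp(1j * phi) * c_down)

def overlap(a, b):
    """Scalar product <a|b> of two two-component states."""
    return a[0].conjugate() * b[0] + a[1].conjugate() * b[1]

s = 1 / math.sqrt(2)
right = (complex(s), complex(s))    # (|up> + |down>)/sqrt(2)
left = (complex(s), complex(-s))    # (|up> - |down>)/sqrt(2)

shifted = phase_shift(right, math.pi)

# For phi = pi the shifted state coincides with `left` and is orthogonal to `right`.
overlap_with_left = abs(overlap(left, shifted))
overlap_with_right = abs(overlap(right, shifted))
```

For an isolated system the shift is therefore detectable: a measurement that distinguishes the two orthogonal states reveals whether it was applied.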

We assume perfect decoherence, $\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle=0$: $\mathcal{E}$ has a perfect record of pointer states. What information survives decoherence and what is lost?

Consider someone who knows the initial pre-decoherence state, $\alpha|\uparrow\rangle+\beta|\downarrow\rangle$, and would like to make predictions about the decohered $\mathcal{S}$. We now show that, when $\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle=0$, the phases of $\alpha$ and $\beta$ no longer matter for $\mathcal{S}$: phase $\varphi$ has no effect on the local state of $\mathcal{S}$, so measurements on $\mathcal{S}$ cannot detect a phase shift, as there is no interference pattern to shift.

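Phase shift $u_\mathcal{S}^{\varphi}\otimes\mathbf{1}_\mathcal{E}$ (acting on an entangled $|\psi_{\mathcal{SE}}\rangle$) cannot have any effect on its local state because it can be undone by $u_\mathcal{E}^{-\varphi} = |\varepsilon_\uparrow\rangle\langle\varepsilon_\uparrow| + e^{-i\varphi}|\varepsilon_\downarrow\rangle\langle\varepsilon_\downarrow|$, a ‘countershift’ acting on a distant $\mathcal{E}$ decoupled from the system:

$$u_\mathcal{E}^{-\varphi}\left(u_\mathcal{S}^{\varphi}|\psi_{\mathcal{SE}}\rangle\right) = u_\mathcal{E}^{-\varphi}\left(\alpha|\uparrow\rangle|\varepsilon_\uparrow\rangle + e^{i\varphi}\beta|\downarrow\rangle|\varepsilon_\downarrow\rangle\right) = |\psi_{\mathcal{SE}}\rangle. \tag{4.2}$$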

Phases in $|\psi_{\mathcal{SE}}\rangle$ can be changed in a faraway $\mathcal{E}$ decoupled from but entangled with $\mathcal{S}$. Therefore, they can no longer influence the local state of $\mathcal{S}$. (This follows from quantum theory alone, but is essential for causality: if they could, measuring $\mathcal{S}$ would reveal this, enabling superluminal communication!)
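The countershift argument of equation (4.2) can be verified directly on amplitudes. The sketch below is plain Python and makes two assumptions: the entangled state has the form $\alpha|\uparrow\rangle|\varepsilon_\uparrow\rangle+\beta|\downarrow\rangle|\varepsilon_\downarrow\rangle$ with orthogonal environment records, and it is stored as a dictionary keyed by (system, environment) labels with 0 for ↑ and 1 for ↓. All function names are hypothetical.

```python
import cmath

def entangle(alpha, beta):
    """alpha|up, eps_up> + beta|down, eps_down>, keyed by (s, e)."""
    return {(0, 0): complex(alpha), (1, 1): complex(beta)}

def shift_system(state, phi):
    """Phase shift acting on S only: |down> picks up e^{i phi}."""
    return {(s, e): amp * (cmath.exp(1j * phi) if s == 1 else 1)
            for (s, e), amp in state.items()}

def countershift_env(state, phi):
    """Countershift acting on E only: |eps_down> picks up e^{-i phi}."""
    return {(s, e): amp * (cmath.exp(-1j * phi) if e == 1 else 1)
            for (s, e), amp in state.items()}

def distance(a, b):
    """Largest difference between corresponding amplitudes."""
    keys = set(a) | set(b)
    return max(abs(a.get(k, 0) - b.get(k, 0)) for k in keys)

psi = entangle(0.6, 0.8j)
phi = 1.234

shifted = shift_system(psi, phi)
restored = countershift_env(shifted, phi)

changed_globally = distance(psi, shifted) > 1e-9     # the composite state did change
restored_exactly = distance(psi, restored) < 1e-12   # acting on E alone undoes it
```

The global state changes under the shift, yet an operation that never touches the system restores it. This is the sense in which the phases have stopped being a property of the system alone.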

Decoherence is caused by the loss of phase coherence. Superpositions decohere as $|\uparrow\rangle$ and $|\downarrow\rangle$ are recorded by $\mathcal{E}$. This is not because phases become ‘randomized’ by interactions with $\mathcal{E}$, as is sometimes said [7]. Rather, they become delocalized: they lose significance for $\mathcal{S}$ alone. They are a global property of the composite state and no longer belong to $\mathcal{S}$, so measurements on $\mathcal{S}$ cannot distinguish states that started as superpositions with different phases for $\alpha$ and $\beta$. Consequently, information about $\mathcal{S}$ is lost: it is displaced into correlations between $\mathcal{S}$ and $\mathcal{E}$, and local phases of $\mathcal{S}$ become a global property, the global phases of the composite entangled state of $\mathcal{SE}$.

We have considered this information loss here without reduced density matrices, the usual decoherence tool. Our view of decoherence appeals to symmetry, invariance of $\mathcal{S}$: entanglement-assisted invariance or envariance under phase shifts of pointer state coefficients, equation (4.2). As $\mathcal{S}$ entangles with $\mathcal{E}$, its local state becomes invariant under transformations that could have affected it before.

Rigorous proof of coherence loss uses quantum core postulates (o)–(iii) and relies on quantum facts 1–3:

1. Locality: A unitary must act on a system to change its state. A state of $\mathcal{S}$ that is not acted upon does not change even as other systems evolve (so $\mathbf{1}_\mathcal{S}\otimes(|\varepsilon_\uparrow\rangle\langle\varepsilon_\uparrow| + e^{-i\varphi}|\varepsilon_\downarrow\rangle\langle\varepsilon_\downarrow|)$ does not affect $\mathcal{S}$ even when $\mathcal{S}$ and $\mathcal{E}$ are entangled, in $|\psi_{\mathcal{SE}}\rangle$).

2. The state of a system is all there is to predict measurement outcomes.

3. A composite state determines states of subsystems (so the local state of $\mathcal{S}$ is restored when the state of the whole $\mathcal{SE}$ is restored).

These facts help to characterize local states of entangled systems without using reduced density matrices. They follow from quantum theory: locality is a property of interactions. The other two facts define the role and the relation of the quantum states of individual and composite systems in a way that does not invoke density matrices (to which we are not entitled in the absence of Born’s Rule). Thus, phase shift $u_\mathcal{S}^{\varphi}\otimes\mathbf{1}_\mathcal{E}$ acting on a pure pre-decoherence state matters: measurement can reveal $\varphi$. In accord with facts 1 and 2, $u_\mathcal{S}^{\varphi}$ changes $\alpha|\uparrow\rangle+\beta|\downarrow\rangle$ into $\alpha|\uparrow\rangle+e^{i\varphi}\beta|\downarrow\rangle$. However, the same $u_\mathcal{S}^{\varphi}$ acting on $\mathcal{S}$ in an entangled state $|\psi_{\mathcal{SE}}\rangle$ does not matter for $\mathcal{S}$ alone, as it can be undone by $\mathbf{1}_\mathcal{S}\otimes(|\varepsilon_\uparrow\rangle\langle\varepsilon_\uparrow| + e^{-i\varphi}|\varepsilon_\downarrow\rangle\langle\varepsilon_\downarrow|)$, a countershift acting on a faraway, decoupled $\mathcal{E}$. As the global $|\psi_{\mathcal{SE}}\rangle$ is restored, by fact 3 the local state of $\mathcal{S}$ is also restored even if $\mathcal{S}$ is not acted upon (so that, by fact 1, it remains unchanged). Hence, the local state of decohered $\mathcal{S}$ that one obtains from $|\psi_{\mathcal{SE}}\rangle$ could not have changed to begin with, and so it cannot depend on the phases of $\alpha$ and $\beta$.

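The only pure states invariant under such phase shifts (unaffected by decoherence) are pointer states. Resilience, as we saw, equations (2.1) and (3.1), lets them preserve correlations. For instance, the entangled state of the measured system $\mathcal{S}$ and the apparatus $\mathcal{A}$, equation (3.2), decoheres as $\mathcal{A}$ interacts with $\mathcal{E}$:

$$(\alpha|\uparrow\rangle|A_\uparrow\rangle+\beta|\downarrow\rangle|A_\downarrow\rangle)|\varepsilon_0\rangle \longrightarrow \alpha|\uparrow\rangle|A_\uparrow\rangle|\varepsilon_\uparrow\rangle+\beta|\downarrow\rangle|A_\downarrow\rangle|\varepsilon_\downarrow\rangle = |\Psi_{\mathcal{SAE}}\rangle. \tag{4.3}$$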

The pointer states $|A_\uparrow\rangle$ and $|A_\downarrow\rangle$ of $\mathcal{A}$ survive decoherence by $\mathcal{E}$. They retain perfect correlation with $\mathcal{S}$ (or an observer, or other systems) in spite of $\mathcal{E}$, independently of the value of $\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle$. Stability under decoherence is, in our quantum Universe, a prerequisite for effective classicality: familiar states of macroscopic objects also have to survive monitoring by $\mathcal{E}$ and hence retain correlations.

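Decohered $\mathcal{SA}$ is described by a reduced density matrix,

$$\rho_{\mathcal{SA}} = \mathrm{Tr}_\mathcal{E}\,|\Psi_{\mathcal{SAE}}\rangle\langle\Psi_{\mathcal{SAE}}|. \tag{4.4a}$$

When $\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle=0$, pointer states of $\mathcal{A}$ retain correlations with the outcomes:

$$\rho_{\mathcal{SA}} = |\alpha|^2\,|\uparrow\rangle\langle\uparrow|\,|A_\uparrow\rangle\langle A_\uparrow| + |\beta|^2\,|\downarrow\rangle\langle\downarrow|\,|A_\downarrow\rangle\langle A_\downarrow|. \tag{4.4b}$$

Both ↑ and ↓ are present: there is no ‘literal collapse’. We will use $\rho_{\mathcal{SA}}$ to examine information flows. Thus, we will need the probabilities of the outcomes.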
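It may help to see where the off-diagonal terms go when the trace is taken. As a sketch, assume the decohered state has the form $\alpha|\uparrow\rangle|A_\uparrow\rangle|\varepsilon_\uparrow\rangle+\beta|\downarrow\rangle|A_\downarrow\rangle|\varepsilon_\downarrow\rangle$ with normalized but not necessarily orthogonal environment states. Tracing over $\mathcal{E}$ then gives

$$\rho_{\mathcal{SA}} = |\alpha|^2\,|\uparrow A_\uparrow\rangle\langle\uparrow A_\uparrow| + |\beta|^2\,|\downarrow A_\downarrow\rangle\langle\downarrow A_\downarrow| + \alpha\beta^*\langle\varepsilon_\downarrow|\varepsilon_\uparrow\rangle\,|\uparrow A_\uparrow\rangle\langle\downarrow A_\downarrow| + \alpha^*\beta\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle\,|\downarrow A_\downarrow\rangle\langle\uparrow A_\uparrow|.$$

The two interference terms are weighted by the overlap of the environment records, so they vanish when $\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle=0$ and only the diagonal, perfectly correlated terms remain.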

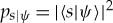

Trace is a mathematical operation. However, regarding the reduced density matrix $\rho_{\mathcal{SA}}$ as a statistical mixture of its eigenstates (states ↑ and ↓, and $A_\uparrow$ and $A_\downarrow$ (pointer state) records) relies on Born’s Rule, which allows one to view tracing as averaging. We did not use it till equations (4.4) to avoid circularity. Now we derive $p_k=|\psi_k|^2$, Born’s Rule as we shall need it: we need to prove that the probabilities are indeed given by the eigenvalues $|\alpha|^2$ and $|\beta|^2$ of $\rho_{\mathcal{SA}}$. This is the postulate (v), obviously crucial for relating quantum formalism to experiments. We want to deduce Born’s Rule from the quantum core postulates (o)–(iii).

We note that this brief and somewhat biased discussion of the origin of decoherence is not a substitute for more complete presentations that employ the usual tools of decoherence theory, including in particular reduced density matrices [11,14,17]. We have, for good reason in the present context of the derivation of Born’s Rule, avoided them (with the brief illustrative exception immediately above, equations (4.4)).

(b). Probabilities from symmetries of entanglement

In quantum physics, one seeks the probability of a measurement outcome starting from a known state of $\mathcal{S}$ and a ready-to-measure state of the apparatus pointer $\mathcal{A}$. The entangled state of the whole is pure, so (at least prior to the decoherence by the environment) there is no ignorance in the usual sense.

However, envariance in a guise slightly different from before (when it accounted for decoherence) implies that mutually exclusive outcomes have certifiably equal probabilities: suppose $\mathcal{S}$ starts as $|{\rightarrow}\rangle = |\uparrow\rangle+|\downarrow\rangle$, so interaction with $\mathcal{A}$ yields $|\uparrow\rangle|A_\uparrow\rangle+|\downarrow\rangle|A_\downarrow\rangle$, an even (equal coefficient) state. (Here and below we skip normalization to save on notation.)

A unitary swap $|\uparrow\rangle\langle\downarrow| + |\downarrow\rangle\langle\uparrow|$ permutes states in $\mathcal{S}$:

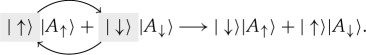

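$$|\uparrow\rangle|A_\uparrow\rangle + |\downarrow\rangle|A_\downarrow\rangle \;\xrightarrow{\text{swap in }\mathcal{S}}\; |\downarrow\rangle|A_\uparrow\rangle + |\uparrow\rangle|A_\downarrow\rangle. \tag{4.5a}$$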

After the swap $|\downarrow\rangle$ is as probable as $|A_\uparrow\rangle$ was (and still is), and $|\uparrow\rangle$ is as probable as $|A_\downarrow\rangle$. Probabilities in $\mathcal{A}$ are unchanged (as $\mathcal{A}$ is untouched) so $p_\uparrow$ and $p_\downarrow$ must have been swapped. To prove equiprobability, we now swap records in $\mathcal{A}$:

$$|\downarrow\rangle|A_\uparrow\rangle + |\uparrow\rangle|A_\downarrow\rangle \;\xrightarrow{\text{swap in }\mathcal{A}}\; |\downarrow\rangle|A_\downarrow\rangle + |\uparrow\rangle|A_\uparrow\rangle. \tag{4.5b}$$

Swap in $\mathcal{A}$ restores pre-swap $|\uparrow\rangle|A_\uparrow\rangle+|\downarrow\rangle|A_\downarrow\rangle$ without touching $\mathcal{S}$, so (by fact 3) the local state of $\mathcal{S}$ is also restored (even though, by fact 1, it could not have been affected by the swap of equation (4.5b)). Hence (by fact 2), all predictions about $\mathcal{S}$, including probabilities, must be the same! The probabilities of $|\uparrow\rangle$ and $|\downarrow\rangle$ (as well as of $|A_\uparrow\rangle$ and $|A_\downarrow\rangle$) are exchanged yet unchanged. Therefore, they must be equal. Thus, in our two-state case, $p_\uparrow = p_\downarrow = \frac{1}{2}$. For $N$ envariantly equivalent alternatives, $p_k=1/N$ for all $k$.
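The swap and counterswap steps can be traced on amplitudes. The following plain Python sketch assumes the entangled system-apparatus state is stored as a dictionary keyed by (system, apparatus) labels, with 0 for ↑ and 1 for ↓, and skips normalization as in the text. The function names are introduced here for illustration.

```python
def swap_system(state):
    """Exchange |up> and |down> in S; amplitudes are keyed by (s, a)."""
    return {(1 - s, a): amp for (s, a), amp in state.items()}

def swap_apparatus(state):
    """Exchange |A_up> and |A_down> in A."""
    return {(s, 1 - a): amp for (s, a), amp in state.items()}

def same_state(x, y, tol=1e-12):
    """Compare two states amplitude by amplitude."""
    keys = set(x) | set(y)
    return all(abs(x.get(k, 0) - y.get(k, 0)) < tol for k in keys)

even = {(0, 0): 1.0, (1, 1): 1.0}     # |up, A_up> + |down, A_down>
uneven = {(0, 0): 2.0, (1, 1): 1.0}   # unequal coefficients

after_swap = swap_system(even)                   # |down, A_up> + |up, A_down>
after_counterswap = swap_apparatus(after_swap)   # back to the even state

even_restored = same_state(after_counterswap, even)
uneven_restored = same_state(swap_apparatus(swap_system(uneven)), uneven)
```

Only the even state is restored by the counterswap. For unequal coefficients the pair of swaps exchanges the two amplitudes, which is why the equiprobability argument applies to even states only.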

Getting rid of phases beforehand was crucial: swaps in an isolated pure state will, in general, change the phases, and hence will change the state. For instance, $|\uparrow\rangle + i|\downarrow\rangle$, after a swap $|\uparrow\rangle\langle\downarrow| + |\downarrow\rangle\langle\uparrow|$, becomes $i|\uparrow\rangle + |\downarrow\rangle$, i.e. is orthogonal to the pre-swap state.
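As a worked check, take the isolated state $|\uparrow\rangle + i|\downarrow\rangle$ (an illustrative choice made here for concreteness, with normalization skipped as above). The swap $|\uparrow\rangle\langle\downarrow| + |\downarrow\rangle\langle\uparrow|$ maps it to $i|\uparrow\rangle + |\downarrow\rangle$, and the scalar product of the pre-swap and post-swap states is

$$\left(\langle\uparrow| - i\langle\downarrow|\right)\left(i|\uparrow\rangle + |\downarrow\rangle\right) = i - i = 0.$$

A swap acting on a single unentangled system can therefore turn the state into a perfectly distinguishable one, so no local invariance can be claimed in that case.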

The crux of the proof of equal probabilities was that the swap does not change anything locally. This can be established for entangled states with equal coefficients but—as we have just seen—is simply not true for a pure unentangled state of just one system.

In the real world, the environment will become entangled (in the course of decoherence) with the preferred states of the system of interest (or with the preferred states of the apparatus pointer). We have already seen how postulates (i)–(iii) lead to preferred sets of states. We have also pointed out that—at least in idealized situations—these states coincide with the familiar pointer states that remain stable in spite of decoherence. So, in effect, we are using the familiar framework of decoherence to derive Born’s Rule. Fortunately, our conclusions about decoherence can be reached without employing the usual (Born’s Rule-dependent) tools of decoherence (reduced density matrix and trace).

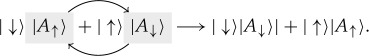

So far, we have only explained how one can establish equality of probabilities for the outcomes that correspond to Schmidt states associated with coefficients that differ at most by a phase. This is not yet Born’s Rule. However, it turns out that this is the hard part of the proof: once such equality is established, a simple counting argument (a version of that employed in [33–36]) leads to the relation between probabilities and unequal coefficients [17,41,42].

Thus, for an uneven state $\alpha|\uparrow\rangle|A_\uparrow\rangle+\beta|\downarrow\rangle|A_\downarrow\rangle$, swaps on $\mathcal{S}$ and $\mathcal{A}$ yield $\beta|\uparrow\rangle|A_\uparrow\rangle+\alpha|\downarrow\rangle|A_\downarrow\rangle$, and not the pre-swap state, so $p_\uparrow$ and $p_\downarrow$ are not equal. However, the uneven case reduces to equiprobability via fine-graining, so envariance, equations (4.4), yields Born’s Rule, $p_{s|\psi} = |\langle s|\psi\rangle|^2$, in general.

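To see how, we take $\alpha\propto\sqrt{\mu}$ and $\beta\propto\sqrt{\nu}$, where $\mu$ and $\nu$ are natural numbers (so the squares of $\alpha$ and $\beta$ are commensurate). To fine-grain, we change the basis, $|A_\uparrow\rangle = \sum_{k=1}^{\mu}|a_k\rangle/\sqrt{\mu}$ and $|A_\downarrow\rangle = \sum_{k=\mu+1}^{\mu+\nu}|a_k\rangle/\sqrt{\nu}$, in the Hilbert space of $\mathcal{A}$:

$$\sqrt{\mu}\,|\uparrow\rangle|A_\uparrow\rangle + \sqrt{\nu}\,|\downarrow\rangle|A_\downarrow\rangle = \sqrt{\mu}\,|\uparrow\rangle\sum_{k=1}^{\mu}\frac{|a_k\rangle}{\sqrt{\mu}} + \sqrt{\nu}\,|\downarrow\rangle\sum_{k=\mu+1}^{\mu+\nu}\frac{|a_k\rangle}{\sqrt{\nu}}. \tag{4.6a}$$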

We simplify, and imagine an environment decohering $\mathcal{A}$ in a new orthonormal basis. That is, the $|a_k\rangle$ correlate with the $|e_k\rangle$ so that

$$|\Psi_{\mathcal{SAE}}\rangle \propto \sum_{k=1}^{\mu}|\uparrow\rangle|a_k\rangle|e_k\rangle + \sum_{k=\mu+1}^{\mu+\nu}|\downarrow\rangle|a_k\rangle|e_k\rangle, \tag{4.6b}$$

as if $|a_k\rangle$ were the preferred pointer states decohered by the environment so that $\langle e_k|e_l\rangle=\delta_{kl}$.

Now swaps of $|\uparrow\rangle|a_k\rangle$ with $|\downarrow\rangle|a_l\rangle$ can be undone by counterswaps of the corresponding $|e_k\rangle$ and $|e_l\rangle$. Counts of the fine-grained equiprobable ($p_k = 1/(\mu+\nu)$) alternatives labelled with ↑ or ↓ lead to Born’s Rule:

$$p_\uparrow = \frac{\mu}{\mu+\nu} = |\alpha|^2, \qquad p_\downarrow = \frac{\nu}{\mu+\nu} = |\beta|^2. \tag{4.7}$$

Amplitudes have ‘got squared’ as a result of Pythagoras’ theorem (Euclidean nature of Hilbert spaces). The case of incommensurate $|\alpha|^2$ and $|\beta|^2$ can be settled by an appeal to the continuity of probabilities as functions of state vectors.
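The counting step can be illustrated with exact arithmetic. This is a minimal sketch, assuming commensurate weights $|\alpha|^2 : |\beta|^2 = \mu : \nu$ and taking for granted the envariance result that all $\mu+\nu$ fine-grained alternatives are equiprobable. The function name `born_by_counting` is hypothetical.

```python
import math
from fractions import Fraction

def born_by_counting(mu, nu):
    """Count equiprobable fine-grained alternatives labelled 'up' or 'down'.

    Assumes |alpha|^2 : |beta|^2 = mu : nu for natural numbers mu and nu, so
    that the 'up' record splits into mu and the 'down' record into nu
    equal-weight fine-grained states.
    """
    labels = ["up"] * mu + ["down"] * nu     # one label per fine-grained state
    p_each = Fraction(1, mu + nu)            # equiprobability from envariance
    p_up = sum(p_each for label in labels if label == "up")
    p_down = sum(p_each for label in labels if label == "down")
    return p_up, p_down

mu, nu = 2, 3
p_up, p_down = born_by_counting(mu, nu)

# Normalized amplitudes proportional to sqrt(mu) and sqrt(nu), for comparison.
alpha = math.sqrt(mu / (mu + nu))
beta = math.sqrt(nu / (mu + nu))
matches_born = (abs(float(p_up) - alpha ** 2) < 1e-12
                and abs(float(p_down) - beta ** 2) < 1e-12)
```

The probabilities come out of counting alone. The amplitudes enter only through the number of fine-grained states each outcome is split into, which is where the square arises.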

(c). Discussion

In physics textbooks, Born’s Rule is a postulate. Using entanglement, we have derived it here from the quantum core axioms. Our reasoning was purely quantum: knowing a state of the composite classical system means knowing the state of each part. There are no entangled classical states, and no objective symmetry to deduce classical equiprobability, the crux of our derivation. Entanglement—made possible by the tensor structure of composite Hilbert spaces, introduced by the composition postulate (o)—was key. Appeal to symmetry—subjective and suspect in the classical case—becomes rigorous thanks to objective envariance in the quantum case. Born’s Rule, introduced by textbooks as postulate (v), follows.

The relative frequency approach (found in many probability texts) starts with the count of the number of events. It has not led to successful derivation of Born’s Rule. We used entanglement symmetries to identify equiprobable alternatives. However, by employing envariance, one can also deduce the frequencies of events by considering $M$ repetitions (i.e. $(\alpha|\uparrow\rangle+\beta|\downarrow\rangle)^{\otimes M}$) of an experiment and deduce departures that are also expected when $M$ is finite. Moreover, one can even show the inverse of Born’s Rule. That is, one can demonstrate that the amplitude should be proportional to the square root of frequency [49].

As the probabilities are now in place, one can think of quantum statistical physics. One could establish its foundations using the probabilities we have just deduced. But there is an even simpler and more radical approach [50,51] that arrives at the microcanonical state without the need to invoke ensembles and probabilities. Its detailed explanation is beyond the scope of this section, but the basic idea is to regard an even state of the system entangled with its environment as the microcanonical state. This is a major conceptual simplification of the foundations of statistical physics: one can get rid of the artifice of invoking infinite collections of similar systems to represent a state of a single system in a manner that allows one to deduce relevant thermodynamic properties.1

5. Quantum Darwinism, classical reality and objective existence

Quantum Darwinism [17,23] recognizes that observers use the environment as a communication channel to acquire information about pointer states indirectly, leaving the system of interest untouched and its state unperturbed. Observers can find out the state of the system without endangering its existence (which would be inevitable in direct measurements). Indeed, the reader of this text is—at this very moment—intercepting a tiny fraction of the photon environment by his eyes to gather all of the information he needs.

This is how virtually all of our information is acquired. A direct measurement is not what we do. Rather, we count on redundancy and settle for information that exists in many copies. This is how objective existence—the cornerstone of classical reality—arises in the quantum world.

(a). Mutual information in quantum correlations

To develop the theory of quantum Darwinism, we need to quantify information between fragments of the environment and the system. Mutual information is a convenient tool that we shall use for this purpose.

The mutual information between the system $\mathcal{S}$ and a fragment $\mathcal{F}$ (that will play the role of the apparatus $\mathcal{A}$ of equations (4.4) in the discussion above) can be computed from the density matrices of the systems of interest using their von Neumann entropies $H_X = -\mathrm{Tr}\,\rho_X\lg\rho_X$:

$$I(\mathcal{S}:\mathcal{F}) = H_\mathcal{S} + H_\mathcal{F} - H_{\mathcal{S},\mathcal{F}} = -\left(|\alpha|^2\lg|\alpha|^2 + |\beta|^2\lg|\beta|^2\right). \tag{5.1}$$

We have used the density matrices of $\mathcal{S}$ and $\mathcal{A}$ (as a ‘stand-in’ for $\mathcal{F}$) from equations (4.4) to obtain the specific value of mutual information above.
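The value of the mutual information can be evaluated numerically. The sketch below assumes the decohered state of equation (4.4b) with orthogonal records, in which case the density matrices of the system, of the fragment and of the pair all have the same two eigenvalues, $|\alpha|^2$ and $|\beta|^2$. Entropies are in bits, and the function names are illustrative.

```python
import math

def entropy_bits(eigenvalues):
    """Von Neumann entropy, in bits, of a density matrix with these eigenvalues."""
    return -sum(p * math.log2(p) for p in eigenvalues if p > 0)

def mutual_information(alpha_sq):
    """H_S + H_F - H_SF for a perfectly correlated, decohered pair.

    With orthogonal records the three density matrices share the spectrum
    (|alpha|^2, |beta|^2), so the three entropies coincide.
    """
    spectrum = [alpha_sq, 1.0 - alpha_sq]
    h_s = entropy_bits(spectrum)
    h_f = entropy_bits(spectrum)
    h_sf = entropy_bits(spectrum)
    return h_s + h_f - h_sf

i_even = mutual_information(0.5)     # equal weights
i_uneven = mutual_information(0.2)   # unequal weights
```

For equal weights the fragment holds one full bit about the pointer observable. For unequal weights it holds less, because there is less to find out about the system in the first place.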

We have already noted the special role of the pointer observable. It is stable and hence it leaves behind information-theoretic progeny (multiple imprints, copies of the pointer states) in the environment. By contrast, complementary observables are destroyed by the interaction with a single subsystem of $\mathcal{E}$. They can, in principle, still be accessed, but only when all of the environment is measured. Indeed, because we are dealing with a quantum system, things are much worse than that: the environment must be measured in precisely the right (typically global) basis to allow for such a reconstruction. Otherwise, the accumulation of errors over multiple measurements will lead to an incorrect conclusion and re-prepare the state and environment, so that it is no longer a record of the state of $\mathcal{S}$, and phase information is irretrievably lost.

(b). Objective reality from redundant information

Quantum Darwinism was introduced relatively recently. Previous studies of the records ‘kept’ by the environment were focused on its effect on the state of the system, and not on their utility. Decoherence is a case in point, as are some of the studies of the decoherent histories approach [56,57]. The exploration of quantum Darwinism in specific models was started at the beginning of this millennium [58–62]. We do not intend to review all of the results obtained to date in detail. The basic conclusion of these studies is, however, that the dynamics responsible for decoherence is also capable of imprinting multiple copies of the pointer basis on the environment. Moreover, while decoherence is always implied by quantum Darwinism, the reverse need not be true. One can easily imagine situations where the environment is completely mixed, and thus cannot be used as a communication channel, but would still suppress quantum coherence in the system.

For many subsystems, $\mathcal{E} = \bigotimes_k \mathcal{E}^{(k)}$, the initial state $(\alpha|\uparrow\rangle + \beta|\downarrow\rangle)\,|\varepsilon_0^{(1)}\varepsilon_0^{(2)}\varepsilon_0^{(3)}\ldots\rangle$ evolves into a ‘branching state’:

$$|\Upsilon_{\mathcal{SE}}\rangle = \alpha|\uparrow\rangle|\varepsilon_\uparrow^{(1)}\varepsilon_\uparrow^{(2)}\varepsilon_\uparrow^{(3)}\ldots\rangle + \beta|\downarrow\rangle|\varepsilon_\downarrow^{(1)}\varepsilon_\downarrow^{(2)}\varepsilon_\downarrow^{(3)}\ldots\rangle. \tag{5.2}$$

Linearity assures all branches persist: collapse to one outcome is not in the cards. However, large $\mathcal{E}$ can disseminate information about the system. The state $|\Upsilon_{\mathcal{SE}}\rangle$ represents many records inscribed in its fragments, collections of subsystems of $\mathcal{E}$ (figure 3). This means that the state of $\mathcal{S}$ can be found out by many, independently and indirectly, hence without disturbing $\mathcal{S}$. This is how evidence of objective existence arises in our quantum world.

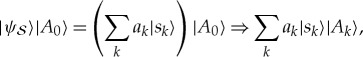

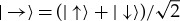

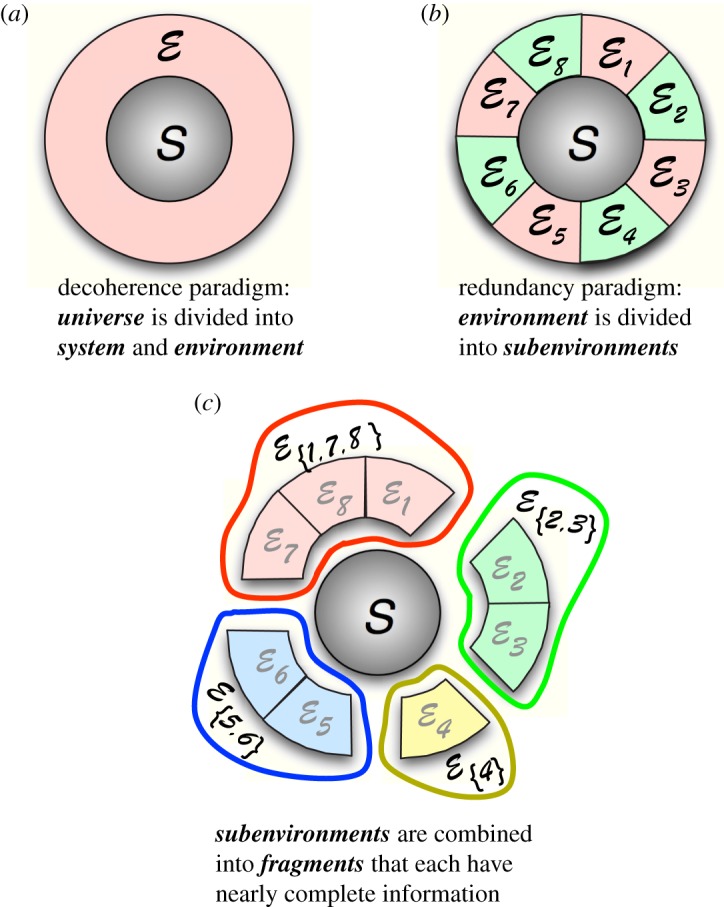

Figure 3.

Quantum Darwinism recognizes that environments consist of many subsystems and that observers acquire information about the system of interest $\mathcal{S}$ by intercepting copies of its pointer states deposited in $\mathcal{E}$ as a result of decoherence. (a) Decoherence paradigm: universe is divided into system and environment. (b,c) Quantum Darwinism: environment consists of elementary subsystems (subenvironments). The latter can be combined into fragments that each have nearly complete information about the system. Redundancy is the number of such fragments. (Online version in colour.)

An environment fragment $\mathcal{F}$ can act as apparatus with a (possibly incomplete) record of $\mathcal{S}$. When $\mathcal{E}_{/\mathcal{F}}$ (the rest of the $\mathcal{E}$) is traced out, $\mathcal{SF}$ decoheres, and the reduced density matrix describing the joint state of $\mathcal{S}$ and $\mathcal{F}$ is

$$\rho_{\mathcal{SF}} = \mathrm{Tr}_{\mathcal{E}_{/\mathcal{F}}}|\Upsilon_{\mathcal{SE}}\rangle\langle\Upsilon_{\mathcal{SE}}| = |\alpha|^2\,|\uparrow\rangle\langle\uparrow|\,|F_\uparrow\rangle\langle F_\uparrow| + |\beta|^2\,|\downarrow\rangle\langle\downarrow|\,|F_\downarrow\rangle\langle F_\downarrow|. \tag{5.3}$$

When $\langle F_\uparrow|F_\downarrow\rangle = 0$, $\mathcal{F}$ contains a perfect record of the preferred states of the system. In principle, each subsystem of $\mathcal{E}$ may be enough to reveal its state, but this is unlikely. Typically, one must collect many subsystems of $\mathcal{E}$ into $\mathcal{F}$ to find out about $\mathcal{S}$.

The redundancy of the data about pointer states in $\mathcal{E}$ determines how many times the same information can be independently extracted: it is a measure of objectivity. The key question of quantum Darwinism is then: How many subsystems of $\mathcal{E}$ (what fraction of $\mathcal{E}$) does one need to find out about $\mathcal{S}$? The answer is provided by the mutual information $I(\mathcal{S}:\mathcal{F}_f) = H_{\mathcal{S}} + H_{\mathcal{F}_f} - H_{\mathcal{S}\mathcal{F}_f}$, information about $\mathcal{S}$ available from $\mathcal{F}_f$, fraction $f = \sharp\mathcal{F}/\sharp\mathcal{E}$ of $\mathcal{E}$ (where $\sharp\mathcal{F}$ and $\sharp\mathcal{E}$ are the numbers of subsystems).
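A small numerical sketch can make this partial information plot concrete. It assumes a single system qubit and `n_env` environment qubits in a branching state of the form of equation (5.2), with every subsystem holding the same imperfect record, $\langle\varepsilon_\uparrow|\varepsilon_\downarrow\rangle = \cos\theta$. The function names and the parameter `theta` are illustrative choices and are not taken from the models of [58–65].

```python
import numpy as np

def entropy_bits(rho):
    """Von Neumann entropy (in bits) of a density matrix."""
    evals = np.linalg.eigvalsh(rho)
    evals = evals[evals > 1e-12]          # drop numerical zeros
    return float(-np.sum(evals * np.log2(evals)))

def branching_state(alpha, beta, n_env, theta):
    """alpha|up>|e_up...e_up> + beta|down>|e_down...e_down>,
    with <e_up|e_down> = cos(theta) for every environment qubit (assumption)."""
    e_up = np.array([1.0, 0.0])
    e_down = np.array([np.cos(theta), np.sin(theta)])
    up_branch = np.array([1.0, 0.0])      # system state |up>
    down_branch = np.array([0.0, 1.0])    # system state |down>
    for _ in range(n_env):
        up_branch = np.kron(up_branch, e_up)
        down_branch = np.kron(down_branch, e_down)
    return alpha * up_branch + beta * down_branch

def reduced_density_matrix(psi, n_total, keep):
    """Trace out every qubit whose index is not in `keep` (ascending list)."""
    traced = [q for q in range(n_total) if q not in keep]
    if not traced:
        return np.outer(psi, psi.conj())
    tensor = psi.reshape([2] * n_total)
    rho = np.tensordot(tensor, tensor.conj(), axes=(traced, traced))
    dim = 2 ** len(keep)
    return rho.reshape(dim, dim)

def mutual_information(psi, n_env, m):
    """I(S:F) = H_S + H_F - H_SF for a fragment F made of the first m environment qubits."""
    n_total = n_env + 1                   # qubit 0 is the system
    h_s = entropy_bits(reduced_density_matrix(psi, n_total, [0]))
    h_f = entropy_bits(reduced_density_matrix(psi, n_total, list(range(1, m + 1))))
    h_sf = entropy_bits(reduced_density_matrix(psi, n_total, list(range(0, m + 1))))
    return h_s + h_f - h_sf

n_env = 6
amp = 1 / np.sqrt(2)
perfect = branching_state(amp, amp, n_env, np.pi / 2)    # orthogonal records
imperfect = branching_state(amp, amp, n_env, np.pi / 6)  # overlapping records
perfect_curve = [mutual_information(perfect, n_env, m) for m in range(1, n_env + 1)]
imperfect_curve = [mutual_information(imperfect, n_env, m) for m in range(1, n_env + 1)]

for m in range(1, n_env + 1):
    print(f"f = {m}/{n_env}: perfect {perfect_curve[m - 1]:.3f}, "
          f"imperfect {imperfect_curve[m - 1]:.3f}")
```

With orthogonal records a single subsystem already supplies $H_{\mathcal{S}}$ (one bit here), whereas overlapping records make the curve rise gradually as the fragment grows.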

In the case of perfect correlation, a single subsystem of  would suffice, as

would suffice, as  jumps to

jumps to  at

at  . The data in additional subsystems of

. The data in additional subsystems of  are then redundant. Usually, however, larger fragments of

are then redundant. Usually, however, larger fragments of  are needed to find out enough about

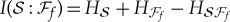

are needed to find out enough about  . The red line in figure 4 illustrates this:

. The red line in figure 4 illustrates this:  still approaches

still approaches  , but only gradually. The length of this plateau can be measured in units of fδ, the initial rising portion of

, but only gradually. The length of this plateau can be measured in units of fδ, the initial rising portion of  . It is defined with the help of the information deficit

δ that observers tolerate:

. It is defined with the help of the information deficit

δ that observers tolerate:

| 5.4 |

Redundancy is the number of such records of $\mathcal{S}$ in $\mathcal{E}$:

$$\mathcal{R}_\delta = 1/f_\delta. \tag{5.5}$$

$\mathcal{R}_\delta$ sets the upper limit on how many observers can find out the state of $\mathcal{S}$ from $\mathcal{E}$ independently and indirectly. In models [58–65] (especially photon scattering analysed by extending the decoherence model of Joos & Zeh [66]) $\mathcal{R}_\delta$ is huge [63–65] and depends on $\delta$ only weakly (logarithmically).
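As a rough illustration of how $f_\delta$ and $\mathcal{R}_\delta$ could be read off a partial information plot, the sketch below scans a list of mutual information values for the smallest fragment that satisfies the information-deficit criterion. The function name `redundancy` and its arguments are hypothetical. The example curve is the perfect-correlation case described above, in which every proper fragment carries $H_{\mathcal{S}}$ and the whole environment carries $2H_{\mathcal{S}}$.

```python
def redundancy(info_by_fragment_size, h_system, delta):
    """Return (f_delta, R_delta) for a partial information curve.

    info_by_fragment_size[m - 1] is I(S:F) for a fragment of m subsystems,
    so the list length equals the number of environment subsystems.
    """
    n_env = len(info_by_fragment_size)
    threshold = (1.0 - delta) * h_system
    for m, info in enumerate(info_by_fragment_size, start=1):
        if info >= threshold - 1e-12:     # smallest fragment meeting the criterion
            f_delta = m / n_env
            return f_delta, 1.0 / f_delta
    raise ValueError("no fragment reaches (1 - delta) * H_S")

# Perfect records in 8 subsystems, system entropy H_S = 1 bit:
# I = H_S for fragments of 1..7 subsystems, and 2 * H_S for the whole environment.
perfect_curve = [1.0] * 7 + [2.0]
f_delta, r_delta = redundancy(perfect_curve, h_system=1.0, delta=0.1)
print(f_delta, r_delta)
```

In this idealized case every subsystem is a complete record, so the redundancy equals the number of subsystems.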

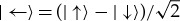

Figure 4.

Information about the system contained in a fraction $f$ of the environment. Red plot (with plateau) shows a typical $I(\mathcal{S}:\mathcal{F}_f)$ established by decoherence. The rapid rise means that nearly all classically accessible information is revealed by a small fraction of $\mathcal{E}$. It is followed by a plateau: additional fragments only confirm what is already known. Redundancy $\mathcal{R}_\delta = 1/f_\delta$ is the number of such independent fractions. Green plot shows $I(\mathcal{S}:\mathcal{F}_f)$ for a random state in the composite system $\mathcal{SE}$. (Online version in colour.)

This is ‘quantum spam’: $\mathcal{R}_\delta$ imprints of pointer states are broadcast through the environment. Many observers can access them independently and indirectly, assuring objectivity of pointer states of $\mathcal{S}$. Repeatability is key: states must survive copying to produce many imprints.

(c). Discussion

Our discussion of quantum jumps shows when, in spite of the no-cloning theorem [67,68], repeatable copying is possible. Discrete preferred states set the stage for quantum jumps. Copying yields branches of records inscribed in subsystems of $\mathcal{E}$. Initial superposition yields superposition of branches, equation (5.2), so there is no literal collapse. However, fragments of $\mathcal{E}$ can reveal only one branch (and not their superposition). Such evidence will suggest a ‘quantum jump’ from superposition to a single outcome, in accord with (iv).

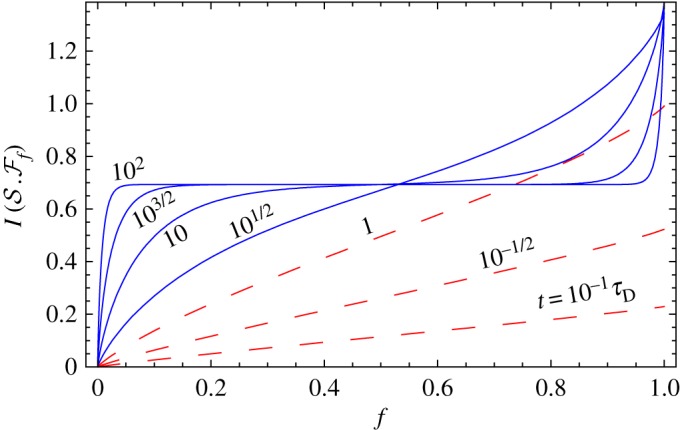

Not all environments are good in this role of a witness. Photons excel: they do not interact with the air or with each other, faithfully passing on information. A small fraction of the photon environment usually reveals all we need to know. Scattering of sunlight quickly builds up redundancy: a 1 μm dielectric sphere in a superposition of 1 μm size increases $\mathcal{R}_{\delta=0.1}$ by approximately $10^8$ every microsecond [63,64]. The mutual information plot illustrating this case is shown in figure 5.

Figure 5.

The quantum mutual information $I(\mathcal{S}:\mathcal{F}_f)$ versus fragment size $f$ at different elapsed times for an object illuminated by point-source black-body radiation. Individual curves are labelled by the time $t$ in units of the decoherence time $\tau_D$. For $t \le \tau_D$ (red dashed lines), the information about the system available in the environment is low. The linearity in $f$ means each piece of the environment contains new, independent information. For $t > \tau_D$ (blue solid lines), the shape of the partial information plot indicates redundancy; the first few pieces of the environment increase the information, but additional pieces only confirm what is already known. (Online version in colour.)

Air is also good at decohering, but its molecules interact, scrambling acquired data. Objects of interest scatter both air molecules and photons, so both acquire information about position, and favour similar localized pointer states.

Quantum Darwinism shows why it is so hard to undo decoherence [69]. Plots of mutual information $I(\mathcal{S}:\mathcal{F}_f)$ for initially pure $\mathcal{S}$ and $\mathcal{E}$ are antisymmetric (figure 4) around $f = 1/2$ and $H_{\mathcal{S}}$ [58]. Hence, a counterpoint of the initial quick rise at $f \le f_\delta$ is a quick rise at $f \ge 1 - f_\delta$, as the last few subsystems of $\mathcal{E}$ are included in the fragment $\mathcal{F}$ that by now contains nearly all $\mathcal{E}$. This is because an initially pure $\mathcal{SE}$ remains pure under unitary evolution, so $H_{\mathcal{SE}} = 0$, and $I(\mathcal{S}:\mathcal{F}_f)|_{f=1}$ must reach $2H_{\mathcal{S}}$. Thus, a measurement on all of $\mathcal{SE}$ could confirm its purity in spite of decoherence caused by $\mathcal{E}_{/\mathcal{F}}$ for all $f \le 1 - f_\delta$. However, to verify this, one has to intercept and measure all of $\mathcal{SE}$ in a way that reveals pure state $|\Upsilon_{\mathcal{SE}}\rangle$, equation (5.2). Other measurements destroy phase information. So, undoing decoherence is in principle possible, but the required resources and foresight preclude it.
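The antisymmetry invoked here follows in a few lines from the purity of the global state. The sketch below assumes only that $\mathcal{SE}$ is pure and writes $\mathcal{E}_{/\mathcal{F}}$ for the part of the environment outside the fragment. It is a standard entropy argument and is not meant to reproduce the derivation given in [58].

```latex
\begin{align}
H_{\mathcal{SF}} &= H_{\mathcal{E}_{/\mathcal{F}}}, \qquad
H_{\mathcal{F}} = H_{\mathcal{S}\mathcal{E}_{/\mathcal{F}}}
  && \text{(complementary parts of a pure state have equal entropy)} \\
I(\mathcal{S}:\mathcal{F}) + I(\mathcal{S}:\mathcal{E}_{/\mathcal{F}})
  &= \bigl(H_{\mathcal{S}} + H_{\mathcal{F}} - H_{\mathcal{SF}}\bigr)
   + \bigl(H_{\mathcal{S}} + H_{\mathcal{E}_{/\mathcal{F}}} - H_{\mathcal{S}\mathcal{E}_{/\mathcal{F}}}\bigr) \\
  &= 2H_{\mathcal{S}}
\end{align}
```

When the mutual information depends only on the fragment size, this reads $I(f) + I(1-f) = 2H_{\mathcal{S}}$, which is antisymmetry about the point $(1/2, H_{\mathcal{S}})$. Setting $f = 1$ and using $I(0) = 0$ recovers the value $2H_{\mathcal{S}}$ for the whole environment.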

In quantum Darwinism, a decohering environment acts as an amplifier, inducing branch structure of $|\Upsilon_{\mathcal{SE}}\rangle$ distinct from typical states in the Hilbert space of $\mathcal{SE}$: $I(\mathcal{S}:\mathcal{F}_f)$ of a random state is given by the green line in figure 4, with no plateau or redundancy. Antisymmetry means that $I(\mathcal{S}:\mathcal{F}_f)$ ‘jumps’ at $f = 1/2$ to $2H_{\mathcal{S}}$.
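The contrast with a typical state can be checked numerically. The sketch below draws one Haar-random pure state of a system qubit plus `n_env` environment qubits (a normalized complex Gaussian vector) and evaluates $I(\mathcal{S}:\mathcal{F})$ for growing fragments. The size `n_env = 8`, the seed and the function names are arbitrary illustrative choices. Entropies are obtained from Schmidt coefficients, which is valid only because the global state is pure.

```python
import numpy as np

def entropy_from_matrix(mat):
    """Entropy (bits) of the row subsystem of a pure state written as a matrix,
    computed from its Schmidt coefficients (singular values)."""
    schmidt = np.linalg.svd(mat, compute_uv=False)
    probs = schmidt ** 2
    probs = probs[probs > 1e-12]
    return float(-np.sum(probs * np.log2(probs)))

def mutual_information(psi, n_env, m):
    """I(S:F) for the system qubit and the first m environment qubits."""
    tensor = psi.reshape(2, 2 ** m, 2 ** (n_env - m))    # axes: S, F, rest of E
    h_s = entropy_from_matrix(tensor.reshape(2, -1))
    h_f = entropy_from_matrix(np.moveaxis(tensor, 1, 0).reshape(2 ** m, -1))
    h_sf = entropy_from_matrix(tensor.reshape(2 ** (m + 1), -1))
    return h_s + h_f - h_sf

rng = np.random.default_rng(0)
n_env = 8
dim = 2 ** (n_env + 1)
psi = rng.normal(size=dim) + 1j * rng.normal(size=dim)
psi /= np.linalg.norm(psi)                                # random pure state of SE

h_s = entropy_from_matrix(psi.reshape(2, -1))
random_curve = [mutual_information(psi, n_env, m) for m in range(1, n_env + 1)]

for m, info in enumerate(random_curve, start=1):
    print(f"f = {m}/{n_env}: I = {info:.3f} bits (H_S = {h_s:.3f})")
```

For such a state small fragments reveal almost nothing about the system, and the information appears only once the fragment approaches half of the environment, so there is no plateau near $H_{\mathcal{S}}$ and no redundancy.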

Environments that decohere $\mathcal{S}$, but scramble information because of interactions between subsystems (e.g. air), eventually approach such random states. Quantum Darwinism is possible only when information about $\mathcal{S}$ is preserved in fragments of $\mathcal{E}$, so that it can be recovered by observers. There is no need for perfection: partially mixed environments or imperfect measurements correspond to noisy communication channels: their capacity is depleted, but we can still get the message [70,71].