Abstract

A model of interdependent networks of networks (NoN) has been introduced recently in the context of brain activation to identify the neural collective influencers in the brain NoN. Here we develop a new approach to derive an exact expression for the random percolation transition in Erdös-Rényi NoN. Analytical calculations are in excellent agreement with numerical simulations and highlight the robustness of the NoN against random node failures. Interestingly, the phase diagram of the model unveils particular patterns of interconnectivity for which the NoN is most vulnerable. Our results help to understand the emergence of robustness in such interdependent architectures.

PACS numbers: 89.75.Hc, 64.60.ah, 05.70.Fh

Many biological, social and technological systems are composed of multiple, if not vast numbers of, interacting elements. In a stylized representation each element is portrayed as a node and the interactions among nodes as mutual links, so as to form what is known as a network [1]. A finer description further isolates several subnetworks, called modules, each of them performing a different function. These modules are, in turn, integrated to form a larger aggregate referred to as a network of networks (NoN). A compelling problem is how to define the interdependencies between modules, specifically how the functioning of nodes in one module depends on the functioning of nodes in other modules [2–6].

Current models of such interdependent NoN, inspired by the power grid, represent dependencies across modules through very fragile couplings [2, 3], such that the random failure of a few nodes gives rise to a catastrophic cascading collapse of the NoN. Many real-life systems, however, exhibit high resilience against malfunctioning. The prototypical example of such robust modular architectures is the brain, which therefore cannot be described by catastrophic NoN models [6]. To cope with the fragility of current NoN models, we recently introduced a model of interdependencies in NoN [7], inspired by the phenomenon of top-down control in brain activation [8, 9], in order to study the impact of rare events, i.e. non-random optimal percolation [10], on the global communication of the brain, with application to neurological disorders.

Here we investigate the robustness of this NoN model with respect to typical node failures, i.e. random percolation. More precisely, we develop a new approach to derive an analytical expression for the random percolation phase diagram in Erdös-Rényi (ER) NoN, which predicts the conditions responsible for the emergence of robustness and the absence of cascading effects.

Definition of inter-module control links

Consider N nodes in a NoN composed of several interdependent modules (Fig. 1). We distinguish the roles of intra-module links, connecting nodes within a module, and inter-module dependency links (corresponding to control links in the brain [6, 8]), connecting nodes across modules: the former (intra-links) only represent whether or not two nodes are connected, while the latter (inter-links) express mutual control. Every node i has $k_i^{\rm in}$ intra-module links, referred to as node i's in-degree, and $k_i^{\rm out}$ inter-module connections, referred to as i's out-degree.

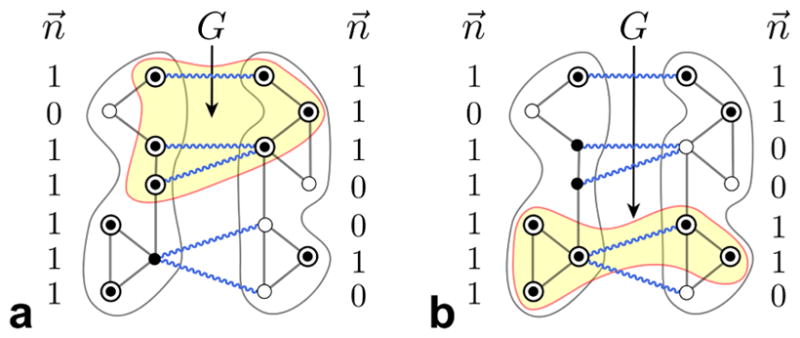

FIG. 1. Robust interdependent 2-NoN.

Intra-module links (black) represent connectivity, while intermodule links (wiggly blue lines) express mutual dependencies. The occupation variable ni specifies whether a node is present (ni = 1) or removed (ni = 0). The activation state σi, defined through inter-module dependencies, indicates whether a node is activated (σi = 1) or inactivated (σi = 0). Nodes can be activated even if they do not belong to the giant connected activated component G. Note also that the configuration of occupation variables n⃗ is identical for the module on the left in a and b. Legend: ◉ σi = 1; ● ni = 1, σi = 0; ○ ni = 0, σi = 0.

Each node can be present or removed, and, if present, it can be activated or inactivated. We introduce the binary occupation variable ni = 1, 0 to specify whether node i is present (ni = 1) or removed (ni = 0). By virtue of inter-module dependencies, the functioning of a node in one module depends on the functioning of nodes in other modules. In order to conceptualize this form of control, we introduce the activation state σi, taking values σi = 1 if node i is activated and σi = 0 if not. A node i with one or more inter-module dependency/control connections ($k_i^{\rm out} > 0$) is activated (σi = 1) if and only if it is present (ni = 1) and at least one of its out-neighbors j is also present (nj = 1); otherwise it is not activated (σi = 0). In other words, a node with one or several inter-module dependencies is inactivated when the last of its out-neighbors is removed.

The rationale for this control rule is that the activation (σi = σj = 1) of two nodes connected by, for instance, one inter-link occurs only when both nodes are occupied, ni = nj = 1. If just one of them is unoccupied, let’s say nj = 0, then both nodes become inactive. Thus, σi = 0 even though ni = 1, and we say that j exerts a control over i. This rule models the way neurons control the activation of other neurons in distant brain modules via control/dependency links (fibers through the white matter) in a process known as top-down influence in sensory processing [9]. Mathematically, σi is defined as

$$\sigma_i = n_i\Big[1 - \prod_{j\in\mathcal{F}(i)}(1-n_j)\Big] \tag{1}$$

where ℱ(i) denotes the set of nodes connected to i via an inter-module link. Conceptually, the inter-links define a mapping from the configuration of occupation variables n⃗ ≡ (n1, ..., nN) to the configuration of activated states σ⃗ ≡ (σ1, ..., σN), as given by Eq. (1).

Not all nodes participate in the control of other nodes via dependencies, i.e. a certain fraction of them does not establish inter-links. If a node does not have inter-module dependencies, it activates as long as it is present:

$$\sigma_i = n_i \qquad \text{if } \mathcal{F}(i)=\emptyset \tag{2}$$

Accordingly, a product over an empty set ℱ(i) = ∅ is taken to be zero in Eq. (1), so that Eq. (1) reduces to Eq. (2). This convention also guarantees that we recover the single network case for vanishing inter-module connections ($k_i^{\rm out} = 0$ for all i), i.e. when considering the limiting case of one isolated module only.
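The activation rule of Eqs. (1) and (2) is simple enough to state directly in code. The sketch below is illustrative only: the function name `activation_states` and the dictionary layout of the inter-module neighbor lists ℱ(i) are our own assumptions, not the authors' implementation.

```python
from typing import Dict, List, Sequence


def activation_states(n: Sequence[int], inter: Dict[int, List[int]]) -> List[int]:
    """Activation states sigma_i from occupations n_i, following Eqs. (1)-(2).

    n     -- occupation variables n_i in {0, 1}
    inter -- hypothetical adjacency dict: inter[i] lists the inter-module
             neighbours F(i); nodes absent from the dict have F(i) empty
    """
    sigma = []
    for i, n_i in enumerate(n):
        out_neighbours = inter.get(i, [])
        if not out_neighbours:
            # Eq. (2): without dependency links a node is active whenever present
            sigma.append(n_i)
        else:
            # Eq. (1): present AND at least one out-neighbour present
            sigma.append(n_i * int(any(n[j] for j in out_neighbours)))
    return sigma


if __name__ == "__main__":
    n = [1, 1, 0, 1, 0, 1]
    inter = {0: [2], 1: [3], 2: [0], 3: [1]}   # nodes 4 and 5 have no inter-links
    print(activation_states(n, inter))          # [0, 1, 0, 1, 0, 1]
```

In the usage example node 0 is present but inactive, because its only out-neighbor (node 2) has been removed: this is the control exerted by j over i described above.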

When a fraction of nodes is removed, the NoN breaks into isolated components of activated nodes. In this work we focus on the largest (giant) mutually connected activated component G, which encodes global properties of the system. In contrast to previous NoN models [2, 3], in our model a node can be activated even if it does not belong to G (see Fig. 1). Indeed, the activation of a node, given by Eq. (1), is not tied to its membership in the giant component. Therefore, a node can be part of G without being part of the largest connected activated component in its own module (consider for instance the top left node in Fig. 1 a). As a consequence, controlling dependencies in the NoN do not lead to cascades of failures, which ultimately explains the robustness of our NoN model. In the model of Refs. [2, 3], on the other hand, a node can be activated (therein termed “functional”) if and only if it belongs to the largest connected component of its own module and (for the case that it has inter-module dependency links) its out-neighbors also belong to the giant component within their module. Indeed, in Refs. [2, 3] the propagation of failures is not local as in Eq. (1), implying that the failure of a single node may catastrophically destroy the NoN.

In order to quantify robustness, we measure the impact of node failures ni = 0 on the size of G [2–4]. More precisely, we calculate G under typical configurations n⃗, sampled from a flat distribution with a given fraction q of removed nodes, and show that G remains sizeable even for high values of q. In practice, starting from q = 0, we compute G(q) while progressively increasing the fraction q of randomly removed nodes. The robustness of the NoN is then formally characterized by the critical fraction qc, the percolation threshold, at which the giant connected activated component collapses, G(qc) = 0 [2, 3]. Accordingly, NoN models with high qc (ideally close to 1) are robust, whereas those with low qc are fragile. A plot of G(q) for ER 2-NoN is shown in the inset of Fig. 2.
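The protocol above can be sketched as a direct simulation. This is a minimal sketch under stated assumptions: the generator `er_2non` and the function `giant_activated_fraction` are hypothetical helpers, multi-edges are kept and self-loops dropped for simplicity, and G is taken to be the largest cluster of activated nodes connected through intra- or inter-module links, as in Fig. 1.

```python
import random
from collections import deque


def er_2non(N, z, w, rng):
    """Hypothetical ER 2-NoN generator: two ER modules of N // 2 nodes
    (mean in-degree z) coupled by a bipartite ER graph (mean out-degree w).
    Multi-edges are not filtered out, which is negligible for sparse graphs."""
    half = N // 2
    intra = [[] for _ in range(2 * half)]
    inter = [[] for _ in range(2 * half)]
    for offset in (0, half):                        # one module at a time
        for _ in range(int(z * half / 2)):          # z*half/2 edges -> mean degree z
            a = offset + rng.randrange(half)
            b = offset + rng.randrange(half)
            if a != b:                              # drop self-loops
                intra[a].append(b)
                intra[b].append(a)
    for _ in range(int(w * half)):                  # w*half edges -> mean out-degree w
        a, b = rng.randrange(half), half + rng.randrange(half)
        inter[a].append(b)
        inter[b].append(a)
    return intra, inter


def giant_activated_fraction(intra, inter, q, rng):
    """Remove each node with probability q, apply Eqs. (1)-(2), and return the
    fraction of nodes in the largest connected cluster of activated nodes."""
    N = len(intra)
    n = [1 if rng.random() >= q else 0 for _ in range(N)]
    sigma = [n[i] if not inter[i] else n[i] * int(any(n[j] for j in inter[i]))
             for i in range(N)]
    seen = [False] * N
    best = 0
    for s in range(N):
        if not sigma[s] or seen[s]:
            continue
        seen[s] = True
        queue, size = deque([s]), 0
        while queue:                                # breadth-first search
            i = queue.popleft()
            size += 1
            for j in intra[i] + inter[i]:           # both link types connect active nodes
                if sigma[j] and not seen[j]:
                    seen[j] = True
                    queue.append(j)
        best = max(best, size)
    return best / N


if __name__ == "__main__":
    rng = random.Random(0)
    intra, inter = er_2non(20000, 4.0, 2.0, rng)
    for q in (0.0, 0.5, 0.95):
        print(q, giant_activated_fraction(intra, inter, q, rng))
```

Scanning q on a fine grid and recording the second largest cluster as well would reproduce the kind of measurement shown in the inset of Fig. 2; the system size here is far smaller than the N = 2 × 10⁶ used there.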

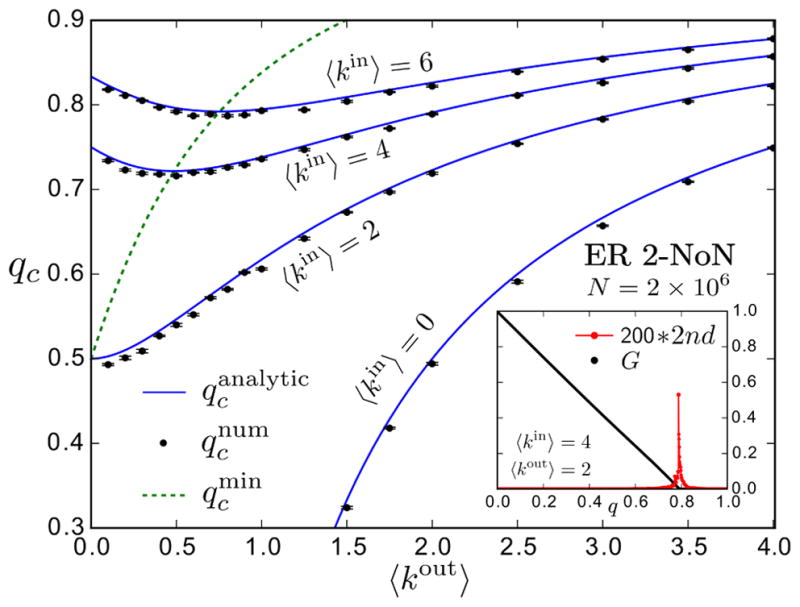

FIG. 2. Percolation phase diagram for ER 2-NoN.

Blue curves show our analytical prediction of the percolation threshold, $q_c^{\rm theory}$, as a function of 〈kout〉 for different values of 〈kin〉 = 0, 2, 4, 6, obtained from Eq. (13). Black dots show the numerically measured percolation threshold, $q_c^{\rm sim}$, from direct simulation of the random percolation process, obtained at the peak of the second largest connected activated component. The green dashed line indicates the maximal vulnerability $q_c(z, w^*(z))$. The percolation threshold qc denotes the critical fraction of randomly removed nodes at which G collapses, G(qc) = 0. Errors are s.e.m. over 10 NoN realizations of system size N = 2 × 10⁶. Inset. Size of G (black dots) and 200 × the size of the second largest connected activated component (red dots) as a function of q for an ER 2-NoN with 〈kin〉 = 4, 〈kout〉 = 2 and N = 2 × 10⁶. The peak is at $q = q_c^{\rm sim}$.

Message Passing

The problem of calculating G can be solved using a message passing approach [4, 10, 11], which provides exact solutions on locally tree-like NoN containing only a small number of short loops [11]. This includes the thermodynamic limit (N → ∞) of Erdös-Rényi and scale-free random graphs as well as the configuration model (the maximally random graphs generated from a given degree distribution), which contain loops whose typical length grows logarithmically with the system size [12].

In principle, it works as follows: each node receives messages from its neighbors containing information about their membership in G. Based on the messages they receive, the nodes then send further messages until the messages settle on a consistent assignment of which nodes belong to G. In practice, we need to derive a self-consistent system of equations that specifies for each node how the outgoing message is computed from the incoming messages [13]. To this end, we introduce two types of messages: ρi→j running along an intra-module link and φi→j running along an inter-module link. Formally, we denote ρi→j ≡ probability that node i is connected to G other than via in-neighbor j, and φi→j ≡ probability that node i is connected to G other than via out-neighbor j. The binary nature of the occupation variables and the activation states constrains the messages to take values ρi→j, φi→j ∈ {0, 1}.

A node can only send non-zero information if it is activated, hence the messages must be proportional to σi. Assuming node i is activated, it sends a non-zero intra-module message ρi→j to node j if and only if it receives a non-zero message from at least one of its in-neighbors other than j or from one of its out-neighbors. The message φi→j along an inter-module link is constructed analogously, with out-neighbor j excluded instead. Thus, the self-consistent system of message passing equations is given by:

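$$\rho_{i\to j} = \sigma_i\Big[1 - \prod_{k\in\mathcal{S}(i)\setminus j}(1-\rho_{k\to i})\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big] \tag{3}$$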

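$$\varphi_{i\to j} = \sigma_i\Big[1 - \prod_{k\in\mathcal{S}(i)}(1-\rho_{k\to i})\prod_{k\in\mathcal{F}(i)\setminus j}(1-\varphi_{k\to i})\Big] \tag{4}$$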

where 𝒮 (i) denotes the set of node i’s intra-module nearest neighbors and ℱ(i) denotes the set of i’s inter-module nearest neighbors. Note that products over empty sets 𝒮 (i) = ∅ or ℱ(i) = ∅ default to one.

In practice, the message passing equations are solved iteratively. Starting from a random initial configuration ρi→j, φi→j ∈ {0, 1}, the messages are updated until they finally converge. From the converged solutions for the messages we can then compute the marginal probability ρi = 0, 1 for each node i to belong to the giant connected activated component G:

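$$\rho_i = \sigma_i\Big[1 - \prod_{k\in\mathcal{S}(i)}(1-\rho_{k\to i})\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big] \tag{5}$$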

The size of G, or rather the fraction of nodes belonging to G, can then simply be computed by summing the probability marginals ρi and dividing by the system size: $G = \frac{1}{N}\sum_{i=1}^{N}\rho_i$.
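A compact sketch of Eqs. (3) to (5) on an explicit graph is given below. It is an illustration under our own assumptions: the function name is hypothetical, graphs are simple (neighbors are excluded by label), and, departing from the random initialization described above, all messages start at 1. Since each update is non-decreasing in the incoming messages, starting from all ones the binary messages can only decrease and converge in a finite number of sweeps. On a finite graph this fixed point marks every node in a cluster of activated nodes that contains a cycle; in large sparse random graphs the small clusters are almost all trees, so this approximates G.

```python
from math import prod


def message_passing_G(n, intra, inter, max_iter=10_000):
    """Iterate Eqs. (3)-(4) to convergence and return (G, marginals) via Eq. (5).

    n     : occupation variables n_i in {0, 1}
    intra : intra-module neighbour lists S(i)
    inter : inter-module neighbour lists F(i)
    """
    N = len(n)
    # Eqs. (1)-(2): activation states
    sigma = [n[i] if not inter[i] else n[i] * int(any(n[j] for j in inter[i]))
             for i in range(N)]
    # Directed messages, all initialised to 1 (assumption, see lead-in)
    rho = {(i, j): 1 for i in range(N) for j in intra[i]}
    phi = {(i, j): 1 for i in range(N) for j in inter[i]}

    def outgoing(i, skip_intra=None, skip_inter=None):
        # sigma_i * [1 - prod_S (1 - rho_{k->i}) * prod_F (1 - phi_{k->i})],
        # leaving out the recipient; empty products default to one
        intra_part = prod(1 - rho[(k, i)] for k in intra[i] if k != skip_intra)
        inter_part = prod(1 - phi[(k, i)] for k in inter[i] if k != skip_inter)
        return sigma[i] * (1 - intra_part * inter_part)

    for _ in range(max_iter):
        new_rho = {(i, j): outgoing(i, skip_intra=j) for (i, j) in rho}  # Eq. (3)
        new_phi = {(i, j): outgoing(i, skip_inter=j) for (i, j) in phi}  # Eq. (4)
        if new_rho == rho and new_phi == phi:
            break
        rho, phi = new_rho, new_phi

    marginals = [outgoing(i) for i in range(N)]                          # Eq. (5)
    return sum(marginals) / N, marginals
```

For example, four nodes forming a loop of two intra-links (0–1, 2–3) and two inter-links (0–2, 1–3) give G = 1 when all are present; removing node 3 also deactivates node 1 through Eq. (1), the remaining active pair forms a tree, and G drops to 0.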

Percolation Phase Diagram for ER NoN

In what follows we derive an exact expression for the percolation threshold in Erdös-Rényi 2-NoN, defined as two randomly interconnected ER modules. Each module is an ER random graph with Poisson degree distribution, $\mathbb{P}_z[k^{\rm in}] = e^{-z}z^{k^{\rm in}}/k^{\rm in}!$ for kin ∈ ℕ0, where z ≡ 〈kin〉 denotes the average in-degree. Similarly, we consider the inter-module links to form a bipartite ER random graph with Poisson degree distribution, $\mathbb{P}_w[k^{\rm out}] = e^{-w}w^{k^{\rm out}}/k^{\rm out}!$ for kout ∈ ℕ0, where w ≡ 〈kout〉 denotes the average out-degree. The corresponding distributions for the in-/out-degree at the end of an intra-/inter-link are given by $\mathbb{Q}_z[k^{\rm in}] = k^{\rm in}\,\mathbb{P}_z[k^{\rm in}]\,\mathbb{1}\{k^{\rm in}>0\}/z$ and $\mathbb{Q}_w[k^{\rm out}] = k^{\rm out}\,\mathbb{P}_w[k^{\rm out}]\,\mathbb{1}\{k^{\rm out}>0\}/w$, for kin, kout ∈ ℕ0, where 𝟙{·} denotes the indicator function.
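For Poisson graphs the edge-end distribution satisfies ℚz[k] = ℙz[k − 1], so that averages of products over the remaining neighbors collapse to exponentials, $\sum_k \mathbb{Q}_z[k](1-x)^{k-1} = e^{-zx}$. The short numerical check below is our own illustration of that identity (function names are hypothetical); sums are truncated at kmax = 100.

```python
from math import exp, factorial


def poisson(k, mean):
    """P_mean[k] = e^{-mean} mean^k / k!"""
    return exp(-mean) * mean ** k / factorial(k)


def edge_end(k, mean):
    """Q_mean[k] = k P_mean[k] 1{k > 0} / mean: degree at the end of a random link."""
    return k * poisson(k, mean) / mean if k > 0 else 0.0


def excess_generating_function(x, mean, kmax=100):
    """sum_k Q_mean[k] (1 - x)^(k - 1), truncated at kmax."""
    return sum(edge_end(k, mean) * (1 - x) ** (k - 1) for k in range(1, kmax))


if __name__ == "__main__":
    z = 4.0
    for x in (0.0, 0.3, 0.9):
        print(x, excess_generating_function(x, z), exp(-z * x))
```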

The random percolation process is then defined by removing each node in the NoN independently with probability q, which is equivalently formulated as taking the configurations n⃗ = (n1, ..., nN) at random from the binomial distribution, $\mathbb{P}_p[\vec n] = \prod_{i=1}^{N} p^{n_i}(1-p)^{1-n_i}$, where p = 1 − q denotes the occupation probability.

The probability of a node to be activated when a randomly chosen fraction p of nodes in the NoN is present, $\langle\sigma_i\rangle_p = p\big[1-(1-p)^{k_i^{\rm out}}\big]$ for $k_i^{\rm out}>0$ and $\langle\sigma_i\rangle_p = p$ for $k_i^{\rm out}=0$, can straightforwardly be obtained by averaging σi, given by Eq. (1), over ℙp[n⃗]. The expected fraction of activated nodes, $\langle\sigma_i\rangle_{p,w} = p\big[1+e^{-w}-e^{-wp}\big]$, is then given by averaging 〈σi〉p over $\mathbb{P}_w[k_i^{\rm out}]$. Unlike a node's probability to be present, 〈ni〉p = p, the probability to be activated 〈σi〉p therefore depends strongly on the node's out-degree $k_i^{\rm out}$. In other words, the deactivations are highly degree dependent, even though the nodes removed from the NoN are chosen at random!
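The closed form for the activated fraction can be checked by summing the degree-resolved probabilities against the Poisson out-degree distribution. The sketch below is our own check (names hypothetical, sum truncated at kmax = 100).

```python
from math import exp, factorial


def activation_prob(p, k_out):
    """<sigma_i>_p for a node with k_out inter-links (Eqs. (1)-(2) averaged over n)."""
    return p if k_out == 0 else p * (1 - (1 - p) ** k_out)


def activated_fraction_series(p, w, kmax=100):
    """Average activation_prob over the Poisson out-degree distribution P_w."""
    return sum(exp(-w) * w ** k / factorial(k) * activation_prob(p, k)
               for k in range(kmax))


def activated_fraction_closed(p, w):
    """Closed form <sigma_i>_{p,w} = p [1 + e^{-w} - e^{-w p}]."""
    return p * (1 + exp(-w) - exp(-w * p))


if __name__ == "__main__":
    for p, w in [(0.3, 2.0), (0.8, 5.0)]:
        print(p, w, activated_fraction_series(p, w), activated_fraction_closed(p, w))
```

The degree dependence is visible directly in `activation_prob`: with p = 0.5 a node without inter-links is active with probability 0.5, whereas a node with a single dependency link is active with probability 0.25.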

To compute the expectation of messages within the ensemble of ER 2-NoN, we average the expressions for ρi→j and φi→j, representing the converged solutions to the message passing equations, over all possible realizations of randomness inherent in the above distributions. In doing so, we must however make sure to properly account for the fact that, for nodes i with inter-links ($k_i^{\rm out}>0$), the binary occupation variable ni shows up more than once within the entire system of message passing equations, due to the activation rule for σi. Since the occupation variable is binary, ni ∈ {0, 1}, we have $n_i^k = n_i$ for each exponent k ∈ ℕ+, and therefore the self-consistency is not affected by the existence of multiple ni per node. Yet, when naively averaging with the distribution of configurations, we would incorrectly obtain $\langle n_i\rangle^k = p^k$ instead of $\langle n_i^k\rangle = p$, without properly accounting for the binary nature of the occupation variable across the entire system of equations.

Specifically, when inserting the expression for the message φk→i, determined by Eq. (4), into the expression for ρi→j, given by Eq. (3), the activation state $\sigma_k = n_k\big[1-(1-n_i)\prod_{\ell\in\mathcal{F}(k)\setminus i}(1-n_\ell)\big]$ (within φk→i) reduces to nk: the prefactor σi of ρi→j contains ni, and ni(1 − ni) = 0 for binary variables. In other words, we need to replace σk (σi) with nk (ni) within the expression for φk→i (φi→j, Eq. (4)).
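The cancellation can be written out in one line. This intermediate step is our own, in the notation of Eqs. (1) and (3): every term of ρi→j already carries the factor ni from σi, so each σk appearing there is effectively multiplied by ni,

```latex
n_i\,\sigma_k
  = n_i\,n_k\Big[1-(1-n_i)\prod_{\ell\in\mathcal{F}(k)\setminus i}(1-n_\ell)\Big]
  = n_i\,n_k - n_k\,\underbrace{n_i(1-n_i)}_{=\,0}\prod_{\ell\in\mathcal{F}(k)\setminus i}(1-n_\ell)
  = n_i\,n_k .
```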

Thus, the modified message passing equations we need to average read:

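$$\begin{aligned}
\rho_{i\to j} &= \sigma_i\Big[1-\prod_{k\in\mathcal{S}(i)\setminus j}(1-\rho_{k\to i})\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big],\\
\varphi_{i\to j} &= n_i\Big[1-\prod_{k\in\mathcal{S}(i)}(1-\rho_{k\to i})\prod_{k\in\mathcal{F}(i)\setminus j}(1-\varphi_{k\to i})\Big]
\end{aligned} \tag{6}$$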

In practice, we expand ρi→j, given by Eq. (6), and perform the averaging separately for each term:

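$$\rho = \langle\sigma_i\rangle_{p,w} - \Big\langle\prod_{k\in\mathcal{S}(i)\setminus j}(1-\rho_{k\to i})\Big\rangle\Big\langle\sigma_i\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big\rangle = p\big[1+e^{-w}-e^{-wp}\big] - e^{-z\rho}\Big\langle\sigma_i\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big\rangle \tag{7}$$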

The only non-trivial average involves the following expression:

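$$\Big\langle\sigma_i\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big\rangle = \Big\langle n_i\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big\rangle - \Big\langle n_i\prod_{k\in\mathcal{F}(i)}(1-n_k)(1-\varphi_{k\to i})\Big\rangle = p\big[e^{-w}+e^{-w\varphi}-e^{-wp}\big] \tag{8}$$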

where we have to account for the fact that (1 − nk)(1 − φk→i) = (1 − nk). The final expression for the average intra-module message ρ reads:

$$\rho = p\big[1+e^{-w}-e^{-wp}\big] - p\,e^{-z\rho}\big[e^{-w}+e^{-w\varphi}-e^{-wp}\big] \tag{9}$$
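The Poisson averages behind Eqs. (7) to (9) can be spelled out as follows. These intermediate sums are our own, assuming that on a locally tree-like NoN the incoming messages are independent of each other and of ni; the k^out = 0 term uses the empty-product convention of Eq. (1), for which σi = ni.

```latex
\begin{aligned}
\Big\langle \prod_{k\in\mathcal{S}(i)\setminus j}(1-\rho_{k\to i})\Big\rangle
  &= \sum_{k^{\rm in}\ge 1}\mathbb{Q}_z[k^{\rm in}]\,(1-\rho)^{k^{\rm in}-1} = e^{-z\rho},\\
\Big\langle \sigma_i\prod_{k\in\mathcal{F}(i)}(1-\varphi_{k\to i})\Big\rangle
  &= p\,\mathbb{P}_w[0] + p\sum_{k^{\rm out}\ge 1}\mathbb{P}_w[k^{\rm out}]
     \big[(1-\varphi)^{k^{\rm out}}-(1-p)^{k^{\rm out}}\big]\\
  &= p\,e^{-w} + p\big[(e^{-w\varphi}-e^{-w})-(e^{-wp}-e^{-w})\big]
   = p\big[e^{-w}+e^{-w\varphi}-e^{-wp}\big].
\end{aligned}
```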

Averaging the modified inter-link message φi→j, given by Eq. (6), over all possible realizations of randomness inherent in the percolation process yields:

$$\varphi = p\big[1 - e^{-z\rho - w\varphi}\big] \tag{10}$$
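Equations (9) and (10) can be solved numerically by fixed-point iteration. In the sketch below (names hypothetical), the iteration starts from ρ = φ = p, our own choice: one can check that both right-hand sides are non-decreasing in ρ and φ whenever 0 ≤ φ ≤ p and never exceed p, so the iterates decrease monotonically towards the largest fixed point, which is non-trivial above the threshold and equal to {ρ = φ = 0} below it.

```python
from math import exp


def averaged_rhs(rho, phi, p, z, w):
    """Right-hand sides of Eqs. (9) and (10)."""
    new_rho = (p * (1 + exp(-w) - exp(-w * p))
               - p * exp(-z * rho) * (exp(-w) + exp(-w * phi) - exp(-w * p)))
    new_phi = p * (1 - exp(-z * rho - w * phi))
    return new_rho, new_phi


def solve_averaged(p, z, w, tol=1e-13, max_iter=100_000):
    """Fixed-point iteration of Eqs. (9)-(10), started from rho = phi = p."""
    rho, phi = p, p
    for _ in range(max_iter):
        new_rho, new_phi = averaged_rhs(rho, phi, p, z, w)
        if abs(new_rho - rho) < tol and abs(new_phi - phi) < tol:
            return new_rho, new_phi
        rho, phi = new_rho, new_phi
    return rho, phi


if __name__ == "__main__":
    for p in (0.1, 0.3, 0.5, 0.9):
        print(p, solve_averaged(p, z=4.0, w=2.0))
```

For w = 0 the first equation reduces to ρ = p(1 − e^{−zρ}), the familiar single-module ER result, which gives a convenient consistency check. Close to the threshold the convergence becomes slow, so `max_iter` may need to be raised there.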

The percolation threshold pc = 1 − qc of the ER 2-NoN can now be found by evaluating the leading eigenvalue determining the stability of the fixed point solution {ρ = φ = 0} to the averaged modified message passing equations [11]:

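$$\begin{pmatrix}\dfrac{\partial\rho}{\partial\rho} & \dfrac{\partial\rho}{\partial\varphi}\\[6pt] \dfrac{\partial\varphi}{\partial\rho} & \dfrac{\partial\varphi}{\partial\varphi}\end{pmatrix}_{\rho=\varphi=0} = p\begin{pmatrix} z\big(1+e^{-w}-e^{-wp}\big) & w\\ z & w\end{pmatrix} \tag{11}$$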
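As a sanity check of the linearization (our own, not part of the original derivation), the sketch below compares the closed-form Jacobian of Eq. (11) with central finite differences of the right-hand sides of Eqs. (9) and (10) around {ρ = φ = 0}. The step size h = 1e-6 and all function names are arbitrary choices.

```python
from math import exp


def rhs(rho, phi, p, z, w):
    """Right-hand sides of the averaged equations (9) and (10)."""
    new_rho = (p * (1 + exp(-w) - exp(-w * p))
               - p * exp(-z * rho) * (exp(-w) + exp(-w * phi) - exp(-w * p)))
    new_phi = p * (1 - exp(-z * rho - w * phi))
    return new_rho, new_phi


def jacobian_closed(p, z, w):
    """Eq. (11): derivatives of (rho, phi) evaluated at rho = phi = 0."""
    a = z * (1 + exp(-w) - exp(-w * p))
    return [[p * a, p * w],
            [p * z, p * w]]


def jacobian_numeric(p, z, w, h=1e-6):
    """Central finite differences of rhs() around the trivial fixed point."""
    J = [[0.0, 0.0], [0.0, 0.0]]
    for col, (d_rho, d_phi) in enumerate(((h, 0.0), (0.0, h))):
        plus = rhs(d_rho, d_phi, p, z, w)
        minus = rhs(-d_rho, -d_phi, p, z, w)
        for row in range(2):
            J[row][col] = (plus[row] - minus[row]) / (2 * h)
    return J


if __name__ == "__main__":
    print(jacobian_closed(0.3, 4.0, 2.0))
    print(jacobian_numeric(0.3, 4.0, 2.0))
```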

The corresponding eigenvalues can readily be obtained as

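$$\lambda_\pm = \frac{p}{2}\Big[z\big(1+f(p)\big) + w \pm \sqrt{\big(z(1+f(p)) - w\big)^2 + 4zw}\Big] \tag{12}$$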

where we define f(p) ≡ e−w − e−wp. Formally, the fixed point solution {ρ = φ = 0} is stable if and only if λ+ ≤ 1 [10, 11]. The implicit function theorem then allows us to obtain the percolation threshold pc = 1−qc by saturating the stability condition as follows:

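$$\lambda_+(p_c, z, w) = \frac{p_c}{2}\Big[z\big(1+f(p_c)\big) + w + \sqrt{\big(z(1+f(p_c)) - w\big)^2 + 4zw}\Big] = 1 \tag{13}$$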
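Equation (13) has no closed-form solution for pc in general. A minimal sketch, assuming (as one can check from Eq. (11), whose matrix is nonnegative with entries that grow with p) that λ+ increases monotonically with p, so that plain bisection on p ∈ [0, 1] applies; the function names are ours.

```python
from math import exp, sqrt


def lambda_plus(p, z, w):
    """Leading eigenvalue, Eq. (12), with f(p) = e^{-w} - e^{-w p}."""
    a = z * (1 + exp(-w) - exp(-w * p))
    return 0.5 * p * (a + w + sqrt((a - w) ** 2 + 4 * z * w))


def critical_p(z, w, tol=1e-12):
    """Solve lambda_plus(p_c) = 1, Eq. (13), by bisection on p in [0, 1].
    Returns None if lambda_plus(1) < 1, i.e. no giant component even at q = 0."""
    if lambda_plus(1.0, z, w) < 1.0:
        return None
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lambda_plus(mid, z, w) < 1.0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


if __name__ == "__main__":
    for z, w in [(4.0, 0.0), (4.0, 1.0), (4.0, 5.0), (0.0, 2.0)]:
        print(z, w, "q_c =", 1 - critical_p(z, w))
```

For w = 0 this recovers the single-module ER value pc = 1/z, and for z = 4, w = 1 it gives qc ≈ 0.74, slightly below the value qc = 0.75 obtained without inter-links.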

Results for qc(z, w) = 1 − pc(z, w) in ER 2-NoN are shown in Fig. 2 and confirm the excellent agreement between direct simulations of the random percolation process on synthetic NoN and the theoretical percolation threshold calculated from Eq. (13). The numerically measured percolation thresholds, $q_c^{\rm sim}$, were obtained at the peak of the second largest connected activated component (Fig. 2 Inset), located as a function of the fraction q of randomly removed nodes in synthetic ER 2-NoN. The analytical prediction of the percolation threshold, $q_c^{\rm theory}$, was obtained from the numerical solution of Eq. (13).

The large values of qc in the percolation phase diagram confirm that the NoN is very robust with respect to random node failures. The results indicate, for instance, that a fraction of more than 70% of randomly chosen nodes in an ER 2-NoN with 〈kin〉 = 4 can be damaged without destroying the giant connected activated component G. Moreover, the percolation transition, separating the phases G > 0 and G = 0, is of second order in the robust NoN (Fig. 2 Inset).

Interestingly, the phase diagram reveals that, for a given average in-degree z, the NoN exhibits maximal vulnerability at a characteristic average out-degree w*(z), indicated by the dip in the percolation threshold qc in Fig. 2. The equation determining w*(z) can straightforwardly be obtained via implicit differentiation of λ+(pc, z, w) = 1, using ∂pc/∂w|w* = 0, where pc(z, w) is given by the solution of Eq. (13). The corresponding curve for $q_c(z, w^*(z))$ is shown in Fig. 2. Conceptually, the dip in qc occurs as a consequence of the competition between dependency and redundancy effects in the NoN. Starting from vanishing inter-module connections, the critical fraction qc, and therefore the robustness of the NoN, initially decreases slightly as the number of dependency links in the NoN is increased. However, upon further increasing the density of inter-module dependencies, the resilience of the NoN increases again with increasing redundancy among the dependency connections.
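The text obtains w*(z) by implicit differentiation; as a simpler stand-in, the sketch below locates the dip by a plain grid search over w, a swapped-in numerical technique rather than the authors' procedure. It re-solves Eq. (13) by bisection for each grid point; the grid and all function names are our own assumptions.

```python
from math import exp, sqrt


def lambda_plus(p, z, w):
    """Leading eigenvalue, Eq. (12)."""
    a = z * (1 + exp(-w) - exp(-w * p))
    return 0.5 * p * (a + w + sqrt((a - w) ** 2 + 4 * z * w))


def critical_q(z, w, tol=1e-10):
    """q_c = 1 - p_c from Eq. (13) by bisection; 0.0 if lambda_plus(1) < 1."""
    if lambda_plus(1.0, z, w) < 1.0:
        return 0.0
    lo, hi = 0.0, 1.0
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if lambda_plus(mid, z, w) < 1.0:
            lo = mid
        else:
            hi = mid
    return 1.0 - 0.5 * (lo + hi)


def max_vulnerability(z, w_grid):
    """Grid-search estimate of w*(z) = argmin_w q_c(z, w) and the value q_c there."""
    qcs = [critical_q(z, w) for w in w_grid]
    k = min(range(len(w_grid)), key=qcs.__getitem__)
    return w_grid[k], qcs[k]


if __name__ == "__main__":
    grid = [0.02 * k for k in range(501)]          # w from 0 to 10
    for z in (2.0, 4.0, 6.0):
        print(z, max_vulnerability(z, grid))
```

The grid resolution limits the accuracy of w*(z); refining the grid around the minimum, or switching to the implicit-differentiation condition described above, would sharpen the estimate.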

The underlying mechanism responsible for the robustness of the NoN is best understood from the behaviour of the model in the limit 〈kin〉 → 0, which corresponds to a bipartite network equipped with our activation rule for σi, given by Eq. (1). The corresponding message passing equations, φi→j = σi [1 − Πk∈ℱ(i)\j(1 − φk→i)], are straightforwardly obtainable from Eqs. (3) and (4), and can be seen to coincide with the usual single network message passing equations by observing that the activation state σi can actually be replaced with the occupation variable ni in this case (the reason is the following: assuming node i is present (ni = 1), σi = 0 implies that none of i's out-neighbors is present and so none of the incoming inter-module messages can be non-zero either). This property can of course also be obtained directly from Eq. (12), which in the limit z = 0 implies

$$\lambda_+ = p\,w \quad\Longrightarrow\quad p_c = \frac{1}{w},\qquad q_c = 1-\frac{1}{w} \tag{14}$$

Therefore, the functioning of dependency links is well-defined even if they connect nodes that do not belong to the giant connected activated component within each module. In the model of Refs. [2, 3], on the other hand, inter-module links only exist if they connect nodes that belong to the largest connected activated component in their own module. Hence, it is impossible to construct the NoN from below pc (or above qc) using dependency links. In the present robust model, we can construct the links even if the nodes are not in G, allowing us to build the NoN from below pc using dependency connections. Thus, the transition is well-defined from above and below the percolation threshold.

In conclusion, we have seen that robustness in NoN can be understood to emerge if dependency links do not need to be part of the giant connected activated component G for their proper functioning. In contrast to previously existing models of interdependent networks [2, 3], dependencies in the robust NoN do not lead to cascades of failures. The key point in our model is that a node can be activated even if it does not belong to G. An example of a NoN structure where the model applies is the brain [6–9]. While in Ref. [6] we have shown that the model of [2] becomes robust when correlations in the dependencies are considered, here we show that the local activation rule of Eq. (1), akin to brain control between modules, defines a novel model of NoN which is robust even without correlations. The effect of degree correlations on the robustness of the NoN remains to be investigated [6]. The model is also straightforwardly generalizable to directed links and to dependency connections that are not restricted to run across modules, but may also lie inside each module.

Acknowledgments

We acknowledge funding from NSF PHY-1305476, NIH-NIGMS 1R21GM107641, NSF-IIS 1515022 and Army Research Laboratory Cooperative Agreement Number W911NF-09-2-0053 (the ARL Network Science CTA).

References

- 1. Newman MEJ. Networks: An Introduction. Oxford University Press; USA: 2010.

- 2. Buldyrev SV, Parshani R, Paul G, Stanley HE, Havlin S. Nature. 2010;464:1025. doi: 10.1038/nature08932.

- 3. Gao J, Buldyrev SV, Stanley HE, Havlin S. Nature Phys. 2012;8:40.

- 4. Bianconi G, Dorogovtsev SN, Mendes JFF. Phys Rev E. 2015;91:012804. doi: 10.1103/PhysRevE.91.012804.

- 5. Parshani R, Buldyrev SV, Havlin S. Phys Rev Lett. 2010;105:048701. doi: 10.1103/PhysRevLett.105.048701.

- 6. Reis SDS, Hu Y, Babino A, Andrade JS, Jr, Canals S, Sigman M, Makse HA. Nature Phys. 2014;10:762.

- 7. Morone F, Roth K, Min B, Stanley HE, Makse HA. 2016 submitted. http://bit.ly/1YuumcS.

- 8. Gallos LK, Makse HA, Sigman M. Proc Natl Acad Sci USA. 2012;109:2825. doi: 10.1073/pnas.1106612109.

- 9. Gilbert CD, Sigman M. Neuron. 2007;54:677. doi: 10.1016/j.neuron.2007.05.019.

- 10. Morone F, Makse HA. Nature. 2015;524:65. doi: 10.1038/nature14604.

- 11. Karrer B, Newman MEJ, Zdeborová L. Phys Rev Lett. 2014;113:208702. doi: 10.1103/PhysRevLett.113.208702.

- 12. Dorogovtsev SN, Mendes JFF, Samukhin AN. Nucl Phys B. 2003;653:307.

- 13. Mézard M, Montanari A. Information, Physics, and Computation. Oxford University Press; USA: 2009.