Abstract

Developmental dyslexia is presumed to arise from phonological impairments. Accordingly, people with dyslexia show speech perception deficits taken as an indication of impoverished phonological representations. However, the nature of speech perception deficits in those with dyslexia remains elusive. Specifically, there is no agreement as to whether speech perception deficits arise from speech-specific processing impairments, or from general auditory impairments that might be either specific to temporal processing or more general. Recent studies show that general auditory referents such as the Long Term Average Spectrum (LTAS, the distribution of acoustic energy across frequency over the duration of a sound sequence) affect speech perception. Here we examine the impact of the LTAS of sounds preceding a speech target on phoneme categorization to assess the nature of putative general auditory impairments associated with dyslexia. Dyslexic and typical listeners categorized speech targets varying perceptually from /ga/ to /da/, preceded by speech and nonspeech tone contexts varying in LTAS. Results revealed a spectrally contrastive influence of the preceding context LTAS on speech categorization, with a larger magnitude effect for nonspeech compared to speech precursors. Importantly, there was no difference in the presence or magnitude of the effects across dyslexia and control groups. These results demonstrate an aspect of general auditory processing that is spared in dyslexia, available to support phonemic processing when speech is presented in context.

Introduction

Developmental dyslexia is a specific developmental disorder in learning to read that is not a direct result of impairments in general intelligence, gross neurological deficits, uncorrected visual or auditory problems, emotional disturbances or inadequate schooling [1]. Typical symptoms include poor phonological awareness, impaired verbal short term memory, and impaired lexical retrieval [2]. In line with this profile, a major guiding hypothesis has been that dyslexia involves a core phonological deficit in the access to, and manipulation of, phonemic language units [3, 4].

Nonetheless, there remains considerable debate about whether impairments in dyslexia are restricted to speech or whether they may reflect more general impairments [5–7]. Research directed at resolving this debate has focused largely on potential deficits in auditory temporal processing of rapidly-evolving sounds [8], in forming perceptual anchors against which incoming acoustic information may be compared [9, 10], or in a general capacity to establish short-term representations of sound stimulus statistics perhaps as a result of diminished repetition-induced adaptation [11, 12]. At the same time, other research suggests that there may be domain-general impairments in procedural learning among individuals with dyslexia that provide a basis for phonological deficits [13–15]. Recent work has indicated impairments in tracking probabilistic information across speech, nonspeech auditory, and visual perceptual input [16, 17] as well as procedural learning inefficiencies in auditory and visual domains [18, 19]. Despite empirical progress, there remains little consensus regarding the basis of the ubiquitous phonological deficits observed in dyslexia [20].

In this regard, a paradigm that has been widely studied among typical adults [21–25] and typically-developing children [26] is interesting in that it taps into phonological processing, as well as general auditory perceptual processes and sensitivity to the accumulation of probabilistic acoustic information across time. In these studies, listeners hear a speech syllable preceded by a probabilistic sequence of nonspeech sine-wave tones sampled from one of two distributions of tones varying in the spectral mean (i.e., higher- or lower-frequency distributions of tones). The resulting nonspeech precursor sequences sound something like a simple tune. When these tone sequences precede speech targets drawn from a series of syllables varying perceptually from /ga/ to /da/, tones sampled probabilistically from a higher-frequency distribution result in more /ga/ responses, whereas the same speech targets are more often categorized as /da/ when preceding tones are sampled from a lower-frequency distribution [21–23].

The influence of the preceding nonspeech precursors is spectrally contrastive. Sequences of tones with a lower-frequency spectral mean shift speech categorization toward response alternatives with greater high-frequency spectral energy, whereas higher-frequency tone sequences shift categorization toward the speech category characterized by lower-frequency energy. As an example, /ga/ and /da/ are differentiated in large part by the onset frequency of the third formant (F3), which is lower for /ga/ and higher for /da/. Perceptually-ambiguous speech syllables, with F3 onset frequencies intermediate between /ga/ and /da/, are more often perceived as /ga/ (the lower-frequency alternative) when preceded by a higher-frequency tone sequence. The same syllables are more often categorized as /da/ when lower-frequency tones precede them [21–23, 27]. Similar effects of preceding distributions of tones are observed for categorization of vowels [25] as well as Mandarin tone [24]. Speech categorization among typically-developing 5-year-olds is also influenced in the same manner [26]. In each case, the effects are spectrally contrastive with regard to the spectral energy that differentiates speech categories.

Laing, Liu, Lotto, and Holt [21] point out that this constellation of findings is particularly interesting because the pattern of context dependence across these nonspeech-speech stimuli looks very much like classic demonstrations of talker normalization. A classic example of talker-dependent speech categorization was offered by Ladefoged and Broadbent [28], who presented listeners with target words varying in the vowel within a /b_t/ frame at the end of a context phrase, Please say what this word is. Using early speech synthesis techniques, Ladefoged and Broadbent manipulated the context phrase by raising or lowering the first (F1) and/or second (F2) formant frequencies of the precursor phrases, conceptually modeling an increase or decrease in vocal tract length and, correspondingly, a change in talker. When phrases modeling different ‘talkers’ preceded the target words, vowel categorization in /b_t/ context shifted as a function of the ‘voice’ of the context phrase. For example, when a phrase consistent with a shorter vocal tract preceded the target, listeners reported hearing bit whereas they reported the same vowel to be bet when it was preceded by a sentence modeling a longer vocal tract. These results have long been interpreted to suggest that listeners extract some type of talker-specific information from context to ‘normalize’ speech perception, inasmuch as perception may compensate for vocal tract differences evident across talkers. In light of the influence nonspeech tone sequences have upon subsequent speech categorization [21], it is possible that the information extracted from prior context that influences speech categorization need not be talker-specific, or even speech-specific information. 
Holt and colleagues [22, 23, 25] suggest that general auditory processes that track statistical distributions of energy across the frequency spectrum and shift subsequent perception contrastively in relation to these distributions could play a role in the context dependent speech perception that has been taken as evidence of talker normalization.

In understanding these context dependencies, it is useful to note that the nonspeech tone sequences utilized in the Holt [22] studies were composed of individual sine-wave tones randomly sampled from frequency distributions defined by a specific mean frequency on a trial-by-trial basis. Thus, each nonspeech precursor stimulus was unique, with contexts defined probabilistically. As a result, only the long-term average spectrum (LTAS, the distribution of acoustic energy across frequency for the entire duration of the tone sequence) differentiated the context conditions. The influence of these statistically-defined tone sequences on subsequent speech categorization suggests that listeners may keep a running estimate of the distributionally-defined LTAS across both speech and nonspeech sounds and encode subsequent sounds relative to, and contrastively with, these running averages [21, 22, 25]. Spectral contrast as a function of the LTAS may be an effective, domain-general process contributing to accommodation of talker differences across speech [21–24, 26, 29–33], including normalization of the sort described by Ladefoged and Broadbent [28], accommodation of individual differences in overall voice pitch that impact Mandarin lexical tone realization [24], the ability to adapt to a speaker’s style [casual vs. careful; 32] and the inability to adapt to some particular voice changes [34].

In sum, there is evidence that the LTAS of incoming sounds, whether speech or nonspeech, impacts subsequent phonological processing among both typical adult and typically-developing child listeners. In this way the LTAS of probabilistically-defined preceding sounds appears to act as a referent for perception. Notably, the evidence indicates that these effects arise from general auditory processing, not specific to speech. In light of observations that individuals with dyslexia have phonological processing impairments [2], difficulty forming perceptual anchors [9], inefficiencies in learning across probabilistic information [16, 17] and dysfunction in forming short-term representations across sound statistics [11, 12], these characteristics make context-dependent speech categorization across precursors varying in LTAS a potentially useful tool for examining the nature of impairment in dyslexia.

The processing demands this paradigm places on auditory and speech processing make it possible that speech processing may not be impacted by context sounds’ LTAS among individuals with developmental dyslexia. First, one common element across these effects is that LTAS acts as a referent; listeners perceive subsequent speech relative to, and contrastively with, the LTAS of the precursor sounds. To the extent that individuals with developmental dyslexia have a poor ability to form a perceptual anchor [35], there may be difficulty establishing LTAS as a referent. Second, listeners’ sensitivity to LTAS, as a distributional characteristic emerging across a sequence of sounds, appears to involve general auditory processing inasmuch as effects can be elicited by nonspeech, as well as speech, precursors. Although many prior studies have pursued general auditory origins for the phonological impairments typical of dyslexia, these studies have tended to focus on putative impairments in temporal processing [36] and have not yet investigated spectral processing demands of the sort involved in these context effects. One prior study reports that a single preceding tone affects phoneme categorization among typically-developing children as well as children with dyslexia [37]. However, the spectral contrast effects elicited by single tones [38] have a different time course than those elicited by probabilistic sequences of tones drawn from a spectral distribution [23], and so it is unclear if they rely on the same underlying mechanisms. Third, the nonspeech tone sequences that precede speech syllables are defined probabilistically in that they sample a particular distribution across the spectral input dimension. This involves a distributional regularity across the context sounds. Individuals with developmental dyslexia exhibit impairments in other forms of distributional learning across auditory, visual, and speech stimuli [16–18]. Likewise, dyslexia has been reported to involve impaired implicit use of sound statistics [11]. Thus, the extent to which individuals with dyslexia exhibit context-dependent speech categorization in the present paradigm may inform mechanisms that are involved in both the etiology of developmental dyslexia, and mechanisms that drive these effects within auditory processing, more generally. Fourth, by varying the probabilistic short-term acoustic context history across trials, the paradigm described above may require accumulation of probabilistic acoustic information over time. The ability to extract probabilistic information has been observed to be impaired among people with dyslexia [16, 17]. On the other hand, if participants with dyslexia are able to compute LTAS, this would demonstrate intact distribution-based general auditory processing that may positively influence speech processing when speech targets are presented in context, as they are in most natural listening environments.

This latter point connects with another motivation for testing context-dependent speech categorization among individuals with developmental dyslexia. Laboratory studies traditionally have documented auditory or phonological processing deficits in those with developmental dyslexia across categorization of isolated speech exemplars. This may underestimate the phonological processing in real-world listening environments if mechanisms for capitalizing on context to support phonological processing are intact among individuals with dyslexia. For example, in labeling a series of speech stimuli that morph from one phoneme to another (e.g., /ga/ to /da/), individuals with dyslexia tend to exhibit shallower labeling slopes indicative of a less sharp boundary between phonetic categories [8, 39–42]. Recent research with typical adults and typically-developing children [26] makes clear that measuring phonological processing in this standard way can underestimate speech categorization abilities because syllables are presented in isolation. Hufnagle, Thissen and Holt [26], for example, documented shallow categorization curves among typically-developing 5-year-olds when /ga/-/da/ syllables were presented for labeling in isolation. However, phoneme categorization was sharper when these same children heard the syllables in the context of sequences of tone precursor sounds varying in LTAS that elicited spectrally contrastive context effects. Inasmuch as natural speech syllables are rarely encountered in strict isolation, this is an important caveat that should be considered in relation to establishing the nature of phonological impairments among individuals with dyslexia. In the current study, we examine speech categorization in the context of preceding speech and nonspeech contexts differing in LTAS among adults with developmental dyslexia.

Method

Participants

Fourteen participants with developmental dyslexia and an equal number of control volunteers participated. Participants were native-English university students in Pittsburgh with no reported sensory or neurological deficits, including attention deficit hyperactivity disorder. All came from families of middle to high socioeconomic status. Diagnosis of a comorbid developmental learning disability served as an exclusion criterion. All individuals included in the Dyslexia group had a well-documented history of dyslexia. Specifically, (1) each individual had received a formal evaluation of dyslexia by a qualified psychologist; (2) each individual’s evaluation was verified by the diagnostic and therapeutic center at his or her university; and (3) each individual was receiving accommodations in educational settings. The Control group was age-matched with the Dyslexia group, with no diagnosed reading impairment and the same level of intelligence as measured by the Raven’s Standard Progressive Matrices (SPM) test [43]. All individuals included in the Control group had no history of learning disabilities and performance at or above average on standardized measures of reading. Written informed consent was obtained from all participants. The study was approved by Carnegie Mellon University Institutional Review Board (IRB) and it was conducted in accordance with the Declaration of Helsinki.

All participants performed a series of cognitive tests (see appendix 1 for a detailed description) to evaluate general cognitive ability (as measured by Raven’s Progressive Matrices [43]), verbal working memory (as measured by the forward and backward Digit Span from the Wechsler Adult Intelligence Scale [44]), rapid automatized naming [45], and phonological awareness [46]. In addition, all participants performed both un-timed and timed (fluency) tests of word reading and decoding skills. In particular, participants performed the Word Identification (WI) and Word Attack (WA) subtests from the Woodcock Reading Mastery Test-Revised (WRMT-R) [47], and they also performed the Sight Word Efficiency, Forms A+B (i.e., rate of word identification) and Phonemic Decoding Efficiency, Forms A+B (i.e., rate of decoding pseudo-words) subtests from the Test of Word Reading Efficiency (TOWRE-II) [48].

As indicated by results shown in Table 1, the groups did not differ in age or cognitive ability. However, compared to the Control group, the Dyslexia group exhibited a profile of reading disability conforming to the symptomatology of developmental dyslexia. This group differed significantly from the Control group on word reading and decoding skills in both rate and accuracy measures (Table 1). In addition, the Dyslexia group showed characteristic deficits in the three major phonological domains: phonological awareness (Spoonerisms), verbal short-term memory (digit span) and rapid naming (rapid automatized naming).

Table 1. Demographic and psychometric data of dyslexia and control groups.

| Measure | Dyslexia Mean (SD) | Dyslexia Range | Control Mean (SD) | Control Range | p | Cohen’s d |
|---|---|---|---|---|---|---|
| Age (in years) | 20.78 (3.21) | 18–30 | 21.5 (2.73) | 18–29 | .57 | .23 |
| Raven’s SPM | 56.42 (2.79) | 51–60 | 57.85 (1.95) | 54–60 | .12 | .59 |
| Digit spanª (combined) | 10.5 (2.47) | 7–16 | 13.64 (3.07) | 6–18 | .01 | 1.12 |
| RAN objectsª | 106.14 (18.68) | 74–129 | 118.64 (13.46) | 93–133 | .05 | 1.1 |
| RAN colorsª | 100 (13.67) | 80–120 | 111.14 (7.82) | 97–124 | .05 | .76 |
| RAN numbersª | 103.78 (12.95) | 63–113 | 114.57 (3.41) | 109–120 | .01 | 1.13 |
| RAN lettersª | 103.16 (6.35) | 85–111 | 114.57 (6.93) | 105–117 | .01 | 1.68 |
| WRMT-R WIª | 99.42 (5.57) | 92–113 | 116.50 (6.83) | 100–126 | .01 | 1.79 |
| WRMT-R WAª | 96.78 (7.83) | 87–115 | 116.5 (13.35) | 100–137 | .01 | 1.8 |
| TOWRE SA (A+B)ª | 97.78 (8.55) | 81–112 | 117.28 (6.82) | 101–127 | .01 | 2.51 |
| TOWRE PD (A+B)ª | 91.42 (7.83) | 72–112 | 113.57 (13.35) | 100–127 | .01 | 2.36 |
| Spoonerism time | 126.58 (52.98) | 13–224 | 91.5 (30.21) | 63–156 | .05 | .81 |
| Spoonerism accuracy | 8.21 (3.35) | 1–12 | 11.14 (2.10) | 4–12 | .01 | 1.04 |

ªStandard scores (for which smaller numbers are expected for the Dyslexia group); other scores are raw scores. Raven scores are presented in percentiles.
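The effect sizes in Table 1 can be approximated from the reported means and standard deviations. The following Python sketch assumes Cohen's d computed with a pooled standard deviation for two equal-sized groups (n = 14 each); the exact formula used for the table is not stated, so this is an illustrative check only, and the function name `cohens_d` is hypothetical. Values computed from rounded summary statistics may differ slightly from those in the table, and not every row is expected to reproduce exactly.

```python
import math


def cohens_d(mean_a, sd_a, mean_b, sd_b):
    """Cohen's d for two equal-sized groups (assumed formula, pooled SD)."""
    # With equal group sizes the pooled variance is the mean of the two variances.
    pooled_sd = math.sqrt((sd_a ** 2 + sd_b ** 2) / 2)
    return abs(mean_a - mean_b) / pooled_sd


# Raven's SPM row of Table 1: Dyslexia 56.42 (2.79), Control 57.85 (1.95)
d_raven = cohens_d(56.42, 2.79, 57.85, 1.95)
print(f"Raven's SPM: d = {d_raven:.2f}")
```

For the Raven's SPM row this sketch gives a value close to the tabled effect size, which suggests, but does not confirm, that a pooled-SD formula of this kind was used.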

Note that all participants in the Dyslexia group were high functioning university students with dyslexia. Prior studies of dyslexia have revealed that such participants achieve average performance on standardized reading tests (including tests that involve low-frequency words such as word identification from the Woodcock Reading Mastery Test-Revised), but nevertheless vary significantly from matched control groups and continue to present phonological problems that can be assessed by phonological tests such as the Spoonerism test [49]. Participants in the Dyslexia group fit this profile. The Dyslexia group differed significantly from the Control group across all literacy measures and exhibited phonological processing impairments (as indicated by the Spoonerism test), despite average performance on standardized tests. This profile is typical of a sample of dyslexic adults.

Stimuli

Speech targets

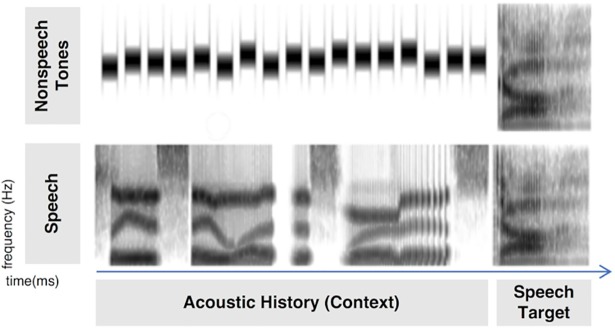

Nine speech target stimuli were derived from natural /ga/ and /da/ recordings from a monolingual male native English speaker (Computer Speech Laboratory, Kay Elemetrics, Lincoln Park, NJ, USA; 20-kHz sampling rate, 16-bit resolution) and were identical to those utilized in several earlier studies [22, 23, 50]. To create the nine-step series, multiple natural productions of the syllables were recorded and, from this set, one /ga/ and one /da/ token were selected that were nearly identical in spectral and temporal properties except for the onset frequencies of F2 and F3. Linear predictive coding (LPC) analysis was performed on each of the tokens to determine a series of filters that spanned these endpoints (Analysis-Synthesis Laboratory, Kay Elemetrics) such that the onset frequencies of F2 and, primarily, F3 varied approximately linearly between /ga/ and /da/ endpoints. These filters were excited by the LPC residual of the original /ga/ production to create an acoustic series spanning the natural /ga/ and /da/ endpoints in approximately equal steps. Creating stimuli in this way provides the advantage of very natural-sounding speech tokens. These 411-ms speech series members served as categorization targets. Fig 1 illustrates the stimuli. Notice that the main difference between the targets is the onset frequency of the third formant (F3) in the range of approximately 1800–2800 Hz. Likewise, the concentration of acoustic energy in the LTAS of the speech and nonspeech contexts differs in this spectral region. Each speech target was RMS matched in energy to the /da/ endpoint.

Fig 1. A schematic illustration of stimulus construction.

The top panel shows a spectrogram (time x frequency) of a single nonspeech tone context stimulus with a High LTAS preceding a perceptually unambiguous /ga/ syllable. The bottom panel shows the High LTAS speech context (Please say what this word is…) preceding a perceptually unambiguous /da/.

Speech context stimuli

Following the approach of Laing, Liu, Lotto, and Holt [21] and building from the work of Ladefoged and Broadbent [28], two speech contexts were synthesized. Formant frequencies and bandwidths from a recording of a male native English speaker reciting ‘Please say what this word is…’ were extracted and used as parameters to synthesize the phrase using the parallel branch of the Klatt synthesizer [51]. From these baseline synthesis parameters, third formant (F3) center frequency and bandwidth parameters were manipulated to create two “talkers.” One “talker” was synthesized to possess relatively higher-frequency energy in the F3 region with a peak in energy at about 2866 Hz. Another “talker” was created with relatively lower-frequency F3 energy peaking at about 1886 Hz. These manipulations resulted in a Context LTAS (High, Low) independent variable across speech contexts. Pairing each of these two 1700-ms contexts with the nine speech targets (50-ms inter-stimulus interval) resulted in 18 unique stimuli. These stimuli were mixed across High and Low Context LTAS and randomized for presentation. Twenty such randomized blocks resulted in a total of 360 speech context trials. Speech stimuli were sampled at 11.025 kHz, and matched in RMS energy to the speech targets.
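The trial arithmetic described above (2 contexts × 9 targets = 18 stimuli, repeated over 20 randomized blocks for 360 trials) can be sketched in Python. This is a minimal illustration under stated assumptions, not the E-Prime script used in the study; the names `build_trials`, `CONTEXTS`, `N_TARGETS`, and `N_BLOCKS` are hypothetical, and speech targets are represented only by their step number (1 to 9).

```python
import random

N_TARGETS = 9                # nine-step /ga/-/da/ series
CONTEXTS = ("High", "Low")   # Context LTAS levels
N_BLOCKS = 20                # randomized repetitions of the 18 stimuli


def build_trials(seed=0):
    """Return a list of (context, target_step) trials, shuffled within each block."""
    rng = random.Random(seed)  # fixed seed only so the sketch is reproducible
    trials = []
    for _ in range(N_BLOCKS):
        # One block holds every context x target pairing exactly once.
        block = [(ctx, step) for ctx in CONTEXTS for step in range(1, N_TARGETS + 1)]
        rng.shuffle(block)
        trials.extend(block)
    return trials


trials = build_trials()
print(len(trials), "speech context trials")
```

Shuffling within each block, rather than across all 360 trials, keeps the High and Low contexts evenly interleaved over the session, which is one plausible reading of "twenty such randomized blocks".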

Nonspeech context stimuli

Following the methods of previous studies [21, 23], the LTAS differences between the High and Low speech contexts in the F3 region were modeled with two distributions of sine-wave tones to create nonspeech contexts that varied in their LTAS. Whereas the LTAS of the speech contexts inherently possesses energy across the frequency spectrum, the nonspeech contexts explicitly sample acoustic energy only in the third formant (F3) frequency region of the spectrum significant to /ga/-/da/ categorization by sampling sine-wave tones within a limited frequency band. Thus, with nonspeech contexts, it is possible to focus acoustic energy precisely on the spectral regions predicted by prior studies of spectral contrast [23, 52] to have an effect on target /ga/-/da/ categorization, specifically energy in the region of F3.

These sequences of tones were similar to those described by Holt [23]. They did not sound like speech and did not possess articulatory or talker-specific information. Seventeen 70-ms tones (5-ms linear onset/offset amplitude ramps) with 30-ms silent intervals created 1700-ms nonspeech contexts matched in duration to the speech contexts. As in previous experiments [21–23, 30], the order of the tones making up the nonspeech contexts was randomized on a trial-by-trial basis to minimize effects elicited by any particular tone ordering. Thus, any influence of the nonspeech contexts on the speech categorization is indicative of listeners’ sensitivity to the LTAS of the context and not merely to the simple acoustic characteristics of any particular segment of the tone sequence. It should be noted that the final tone of the sequence was constant. This prevented any differences between conditions from arising from the impact of the tone temporally adjacent to the speech targets. The frequency of the final tone was 2300 Hz, intermediate between the distribution means defining the High and Low LTAS tone contexts.

As in Laing, Liu, Lotto, and Holt [21], the bandwidth of frequency variation of the distributions from which nonspeech tones were sampled to create the tone sequence contexts was approximately matched to the bandwidth of the peak in the corresponding speech contexts’ LTAS, as measured 10 dB below the peak. The low-frequency F3 distribution sampled 435 Hz in 29 Hz steps around a distribution mean (1873.5 Hz, range 1656–2091 Hz) that modeled the F3-energy of the Low LTAS speech context. The high-frequency F3 distribution sampled 570 Hz in 38 Hz steps around a mean (2785 Hz, range 2500–3070 Hz) that modeled the F3-energy of the High LTAS speech context. Tones from these High and Low frequency distributions were randomly ordered to create 360 unique contexts, with 20 High frequency sequences and 20 Low frequency sequences. The final, constant 2300 Hz tone was appended to each, and a 50-ms silent interval separated the 17-tone sequences from the speech target. Tones comprising the nonspeech contexts were sampled at 11.025 kHz and matched in energy to the speech targets.
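The construction of a single nonspeech context can be sketched in Python from the parameters reported above. This is a minimal sketch, not the original stimulus-generation code. It assumes that each of the 16 frequencies implied by the reported range and step size occurs once per sequence in random order, followed by the constant 2300 Hz tone; it uses unit peak amplitude rather than RMS matching to the speech targets; and it leaves a 30-ms gap after every tone, including the last. All function and variable names are hypothetical.

```python
import math
import random

FS = 11025                         # sampling rate in Hz, as reported
TONE_MS, GAP_MS, RAMP_MS = 70, 30, 5
FINAL_TONE_HZ = 2300               # constant final tone

# 16 frequencies per distribution, derived from the reported ranges and steps.
LOW_FREQS = [1656 + 29 * i for i in range(16)]    # 1656-2091 Hz, mean 1873.5 Hz
HIGH_FREQS = [2500 + 38 * i for i in range(16)]   # 2500-3070 Hz, mean 2785 Hz


def tone(freq_hz):
    """One sine-wave tone with linear onset/offset amplitude ramps."""
    n = round(FS * TONE_MS / 1000)
    n_ramp = round(FS * RAMP_MS / 1000)
    samples = []
    for i in range(n):
        # Gain rises linearly from 0, holds at 1, then falls linearly back to 0.
        gain = min(1.0, i / n_ramp, (n - 1 - i) / n_ramp)
        samples.append(gain * math.sin(2 * math.pi * freq_hz * i / FS))
    return samples


def context_frequencies(freqs, rng):
    """Random tone order for one trial, ending in the constant final tone."""
    order = list(freqs)
    rng.shuffle(order)
    return order + [FINAL_TONE_HZ]


def synthesize(freq_order):
    """Concatenate tones, each followed by a silent gap."""
    gap = [0.0] * round(FS * GAP_MS / 1000)
    wave = []
    for f in freq_order:
        wave.extend(tone(f))
        wave.extend(gap)
    return wave


rng = random.Random(1)             # fixed seed only for reproducibility
high_order = context_frequencies(HIGH_FREQS, rng)
wave = synthesize(high_order)
print(f"{len(high_order)} tones, {len(wave) / FS:.3f} s")
```

Because the tone order changes on every call while the set of sampled frequencies does not, two contexts from the same distribution differ in their moment-to-moment acoustics but share the same long-term distribution of energy, which is the property the design relies on.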

Procedure

Listeners categorized the nine speech targets in each of the four contexts (speech/nonspeech x high/low LTAS). Speech and Nonspeech contexts were presented in separate blocks, with High and Low LTAS contexts mixed within each block. The order of blocks was counterbalanced across participants. Within a block, trial order was random. On each trial, listeners heard a context plus speech target stimulus and categorized the speech target as /ga/ or /da/ using buttons on a computer keyboard corresponding to labels on a monitor mounted in front of participants.

The two categorization blocks were followed by a brief discrimination test to measure the extent to which manipulations of the LTAS were successful in producing perceived talker differences across the High and Low speech contexts. On each trial, participants heard a pair of context sentences and judged whether the voice speaking the sentences was the same or different by pressing buttons on a computer keyboard. The task was divided into two blocks, with a brief break between blocks. Within a block, listeners heard both the High and Low speech context stimuli across 20 randomly-ordered trials. One-half of the trials were different talker pairs (High-Low or Low-High, five repetitions each) and the remaining trials were identical voices (High-High, Low-Low, five repetitions each).
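The trial structure of the talker discrimination test (two blocks of 20 trials, with five repetitions of each of the four context pairings) can be sketched in Python. This is an illustrative sketch only; the names `PAIR_TYPES`, `REPS_PER_BLOCK`, and `discrimination_block` are hypothetical and the original test was run in E-Prime.

```python
import random

# The four possible pairings of the High and Low LTAS speech contexts.
PAIR_TYPES = [("High", "Low"), ("Low", "High"), ("High", "High"), ("Low", "Low")]
REPS_PER_BLOCK = 5


def discrimination_block(rng):
    """Return one randomly ordered block of (first, second, correct_answer) trials."""
    pairs = [pair for pair in PAIR_TYPES for _ in range(REPS_PER_BLOCK)]
    rng.shuffle(pairs)
    return [
        (first, second, "different" if first != second else "same")
        for first, second in pairs
    ]


rng = random.Random(0)  # fixed seed only for reproducibility
blocks = [discrimination_block(rng) for _ in range(2)]
print([len(block) for block in blocks])
```

With this layout, half of the trials in each block pair different "talkers" and half repeat the same one, so a listener who cannot hear the LTAS manipulation as a voice change would be expected to perform near chance.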

For both speech categorization and talker discrimination tests, acoustic presentation was under the control of E-Prime [53] and stimuli were presented diotically over linear headphones (Beyer DT-150) at approximately 70 dB SPL (A) with participants seated in a sound-attenuating booth. The experiment lasted approximately an hour.

Results

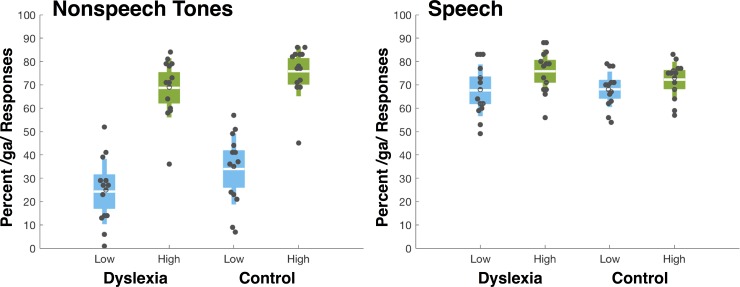

Fig 2 plots the results. Following the approach of Laing, Liu, Lotto, and Holt [21], we conducted an analysis of variance (ANOVA) with context type (Speech vs. Nonspeech), context LTAS (High vs. Low) and speech target (the nine-step /ga/-/da/ series) as within-subjects factors and group (Dyslexia vs. Controls) as a between-subjects factor, with percent of /ga/ responses as the dependent variable. The context type (Speech vs. Nonspeech) main effect was significant, F(1, 26) = 66.253, p = .001, ηp2 = .71, indicating that listeners more often reported the speech targets to be /ga/ following speech, compared to nonspeech, contexts. This likely arises from the necessary spectral differences between the speech and nonspeech contexts, since speech is a wideband signal and the nonspeech tone sequences sample a limited spectral range. There was also a significant main effect of speech target, F(8, 208) = 315.0, p = .001, ηp2 = .92, indicating that /ga/ responses varied as intended across the speech targets.

Fig 2. Mean percent /ga/ responses as a function of context type (speech, nonspeech tone), context LTAS (High, Low) and group (dyslexia, control).

Dots represent individual participants’ data. Each box shows the mean (white line) and 95% confidence intervals for the mean. Blue boxes correspond to Low LTAS contexts whereas green boxes illustrate High LTAS contexts. Thus, the spectrally contrastive influence of context is evident as more /ga/ responses for High (green) compared to Low (blue) LTAS contexts.

Additionally, there was a robust main effect of context LTAS (High, Low) on speech target categorization, F(1, 26) = 237.75, p = .001, ηp2 = .901. This influence was consistent with patterns of spectral contrast observed in prior research [54]. When the preceding phrase or nonspeech tone sequence had greater acoustic energy in higher frequencies in the F3 frequency band, listeners more often categorized the following target as /ga/ (M = .73, SE = .015), compared to categorization of the same target following the phrase or nonspeech tone sequence sampling lower F3 frequencies (M = .48, SE = .016). The context type by group interaction was marginally significant, F(1, 26) = 3.9, p = .06, ηp2 = .12. Further analysis revealed that the nonspeech contexts elicited more /ga/ responses among the Control group than among the Dyslexia group, F(1, 26) = 4.1, p = .053, whereas there was no difference across groups for speech contexts, F < 1. The target by group interaction was not significant, F(8, 208) = 1.3, p = .24, ηp2 = .04, indicating similar identification curves in the two groups.

Of most interest to the present research question, the context frequency by group interaction was not significant, F (1, 26) = 1.1, p = .3077, ηp2 = .04. The LTAS of preceding speech and nonspeech contexts affected phoneme categorization just as much among individuals in the Dyslexia group as those in the Control group. The three-way interaction of context type, context frequency, and group was not significant, F<1, indicating that the magnitude of influence of speech and nonspeech precursors’ LTAS was consistent across the Dyslexia and Control groups.
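The reported partial eta squared values can be cross-checked against the F statistics and their degrees of freedom, since ηp2 = (F × df_effect) / (F × df_effect + df_error). The Python sketch below is an illustrative check and not part of the original analysis; the function name `partial_eta_squared` and the dictionary labels are hypothetical.

```python
def partial_eta_squared(f_value, df_effect, df_error):
    """Partial eta squared recovered from an F statistic and its degrees of freedom."""
    return (f_value * df_effect) / (f_value * df_effect + df_error)


# (F, df_effect, df_error) as reported in the Results.
reported_effects = {
    "context type": (66.253, 1, 26),
    "speech target": (315.0, 8, 208),
    "context LTAS": (237.75, 1, 26),
    "context LTAS x group": (1.1, 1, 26),
}

for name, (f_value, df_effect, df_error) in reported_effects.items():
    print(f"{name}: {partial_eta_squared(f_value, df_effect, df_error):.3f}")
```

Small discrepancies from the values in the text are expected wherever the F statistic is reported to only one decimal place.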

Higher order interactions involving speech targets were also significant (p < .01). However, since our predictions center on context-dependent speech target categorization, the focus of interpretation is placed on interactions that do not involve target.

General discussion

The present study examined the influence of the long-term average spectrum (LTAS) of preceding speech and nonspeech contexts on speech categorization among typical and dyslexic listeners. Prior research with typical young adult [22–24, 54, 55] and child listeners [26] demonstrates that the LTAS of preceding sound, whether speech or nonspeech, affects speech categorization in a spectrally-contrastive manner [54, 55]. Sounds with a greater concentration of high-frequency energy push speech categorization toward lower-frequency alternatives, whereas contexts with greater lower-frequency energy shift categorization to higher-frequency alternatives. Single preceding speech syllables [56] and nonspeech sinewave tones [52] also influence speech categorization in a spectrally contrastive manner, including among children with dyslexia [37]. However, the influence of probabilistic acoustic energy evolving across sentence-length utterances or nonspeech sequences has a distinct time course from the spectral contrast effects evoked by single precursor tones, suggesting the possibility that different mechanisms contribute [23, 38].

We hypothesized that the processing demands of tracking LTAS across acoustic contexts may present difficulties for listeners with dyslexia for several reasons. First, sensitivity to the LTAS may relate to the ability to form a perceptual anchor inasmuch as listeners categorize subsequent speech targets relative to, and contrastively with, the LTAS of precursor sounds. If listeners with developmental dyslexia are impaired in their ability to form a perceptual anchor [9], they may have difficulty establishing the LTAS of precursor sounds as a ‘perceptual anchor’ against which to inform speech categorization. This possibility is all the more intriguing because LTAS-dependent spectral contrast effects, like perceptual anchor effects [11, 12, 57], have been hypothesized to arise from neural adaptation [22]. Second, since most studies of general auditory processing in dyslexia have concentrated on temporal processing [8, 36], there is little information about how spectral-domain processing demands impact speech categorization in dyslexia. Third, the probabilistic nonspeech tone contexts used in prior studies with typical adult listeners have required listeners to extract information across probabilistic, distributional regularities in sound input [23]. Since individuals with dyslexia can exhibit impaired processing of statistical regularities present across sounds [16] and reduced sensitivity to probabilistic information [17], the statistically defined, probabilistic LTAS of nonspeech precursor sounds may not influence speech categorization as it does in typical listeners. Lastly, we sought to examine the possibility that intact context-dependent speech categorization in dyslexia may provide unexpected support for speech categorization in real-world environments, compared to the contextually impoverished listening conditions in which phoneme perception is typically assessed in the laboratory.

Our results replicate previous findings with typical adult and child listeners [21–26]. In particular, we observe that the LTAS of speech and nonspeech precursor sounds influences speech categorization among typical listeners in a spectrally contrastive manner. When participants heard contexts with greater low-frequency acoustic energy, categorization was shifted toward the syllable with greater high-frequency energy, /da/. The same syllables were more often reported as /ga/ (characterized by greater low-frequency energy) when contexts had greater higher-frequency acoustic energy. Notably, there was a larger magnitude LTAS effect for nonspeech compared to speech precursors, consistent with previous observations [54]. This likely arises from the concentrated energy present in a targeted spectral band in nonspeech contexts compared to the more distributed spectral information necessarily present in speech.

Most critically, we observed that individuals with dyslexia also exhibit spectrally contrastive, context-dependent speech categorization. In fact, the influence of distributionally defined probabilistic nonspeech tone contexts and sentence-length speech contexts was equivalent to the influence observed for typical listeners. This indicates that listeners with dyslexia track evolving spectral statistics from sound and use them to influence phonetic perception in the manner of typical listeners.

In prior research, Holt [22] found that the task employed in the current investigation demands that listeners be sensitive to the spectral mean of the distribution of probabilistic nonspeech tone precursors. This is especially interesting with regard to the possibility that individuals with dyslexia have difficulty in forming a perceptual anchor, described also as a reduced sensitivity to sound regularities [58] that may be related to impairments in neural adaptation [57] or faster decay of implicit memory across sound statistics [11]. This perspective has mostly gained support from studies revealing that individuals with dyslexia are less able to benefit from simple item repetition in the context of frequency discrimination and speech-in-noise tasks [58]. Recently these observations have been extended to much more complex regularities embedded in richer stimulus statistics. Typical listeners are capable of extracting summary statistics across longer sequences of sounds [59]. People with dyslexia, however, are less able to use this information. That is, their perception is less biased toward the experienced mean: they tend to exhibit a smaller bias toward the mean frequency embedded in the stimuli in auditory frequency discrimination [35] and visual spatial frequency discrimination tasks [57], consistent with the anchoring deficit hypothesis [9]. Compatibly, adults and children with dyslexia exhibit reduced neural adaptation across words, objects, faces, and voices [12]. Notably, however, the pattern of results observed has not always been consistent; several studies have demonstrated that the ability to form a perceptual anchor is unimpaired among adults with dyslexia in both the visual [60] and auditory modalities [61, 62], including the language domain [63, 64].
The present results demonstrate that individuals with dyslexia are able to extract a spectral mean evolving across more than a second of sound and use it to ‘anchor’ how subsequent speech acoustics are categorized. For the nonspeech tone condition, this ‘anchor’ was defined by evolving sound statistics as stimuli varied trial-by-trial. Future research will be needed to resolve this seeming disparity.

The present results are also informative in the context of evidence of an impaired ability to implicitly extract probabilistic information among those with dyslexia. In our previous research, we have reported that individuals with dyslexia are impaired in extracting probabilistic information in both the auditory [16] and visual modalities [17]. Despite these cross-modal results, the current results underscore that it is too strong to conclude that individuals with dyslexia have a general impairment in processing probabilistic information. The nonspeech tone sequences of the present study were defined probabilistically, yet their LTAS influenced subsequent speech categorization. Future research will need to focus on the detailed processing demands involved in various ‘probabilistic’ tasks, as well as different stimulus regularities that may be considered to be ‘probabilistic,’ to determine where differences between typical and dyslexic listeners emerge.

The present stimuli were modeled after Laing, Liu, Lotto, and Holt [21], with nonspeech tones defined probabilistically within a condition’s LTAS distribution. This matched the probabilistic nature of F3 variation in the speech contexts. Prior studies with typical listeners often have employed an additional control to ensure that the probabilistic sequence of nonspeech tones, and not just the final tone temporally adjacent to the speech target, produces the contrast effect [22, 23]. The present study cannot rule out the possibility that listeners with dyslexia may differ from typical listeners in the perceptual weighting of context information that accumulates over time. Thus, future studies in which the distributional characteristics of nonspeech contexts are manipulated [22] will be informative in addressing whether there may be more subtle differences in how listeners with dyslexia track statistical regularities evolving in the LTAS.

The present results also raise the intriguing possibility that traditional approaches to measuring speech perception in the laboratory may underestimate phoneme categorization abilities among individuals with dyslexia (and typical listeners, for that matter). Presenting isolated phonemes or syllables to listeners for identification strips away context sounds that, as the present results demonstrate, can support categorization. Speech exemplars that are perceptually ambiguous in isolation can be perceptually exaggerated in a spectrally contrastive manner by surrounding sound context. In this way, the shallow speech identification curves often associated with individuals with dyslexia [65] would be expected to sharpen when informative contexts, such as those present in real-world listening environments, are made available. Although the present research did not directly test this hypothesis, this was the case among typical 5-year-old listeners who categorized the very same /ga/-/da/ syllables used here [26].

Speech categorization deficits in dyslexia have been attributed to an auditory processing deficit that affects both speech and nonspeech stimuli, and that is specific to temporal, but not spectral, acoustic information [7, 8, 66]. The present results are consistent with the view that some aspects of spectral processing across speech and nonspeech stimuli may be unimpaired in dyslexia. In this context, it is worth noting that typical listeners also exhibit contrastive context-dependent effects of temporal information on speech categorization [67]. In a paradigm much like that of the present study, Wade and Holt found that preceding sequences of tones varying in their duration (and therefore rate of presentation) affected how typical young adult listeners categorized /ba/-/wa/ syllables created to vary along a temporal formant-transition duration dimension. Future studies of temporal contrast in context-dependent speech categorization in dyslexia are likely to be informative. The present results demonstrate that ‘normalization’ of speech categorization as a function of the preceding long-term average spectrum of sound, whether speech or nonspeech, is intact in individuals with dyslexia and is as efficient as it is among typical listeners.

Appendix 1 – psychometric tests

The following tests were administered according to the test manual instructions:

Raven’s Standard Progressive Matrices test (Raven, Court & Raven, 1992). Non-verbal intelligence was assessed by the Raven’s-SPM test. This task requires participants to choose an item from the bottom of the figure that would complete the pattern at the top. The maximum raw score is 60. Test reliability coefficient is .9.

Digit Span from the Wechsler Adult Intelligence Scale (WAIS-III; Wechsler, 1997). In this task, participants are required to recall digits presented auditorily in the order they were presented, with a maximum total raw score of 28. Task administration is discontinued after a failure to recall two trials with a similar length of digits. Test reliability coefficient is .9.

Rapid Automatized Naming (Denckla & Rudel, 1976). The tasks require oral naming of rows of visually presented exemplars drawn from a constant category (RAN colors, RAN categories, RAN numerals, and RAN letters). Naming requires not only the retrieval of a familiar phonological code for each stimulus, but also rapid coordination of phonological and visual (color) or orthographic (alphanumeric) information. The reliability coefficients of these tests range from .98 to .99.

Woodcock Reading Mastery Test Word Identification and Word Attack subtests (Woodcock & Johnson, 1990). The Word Identification subtest measures participants’ ability to accurately pronounce printed English words, ranging from high to low frequency of word occurrence, with a maximum total raw score of 106. Test reliability coefficient is .97. The Word Attack subtest assesses participants’ ability to read pronounceable nonwords varying in complexity, with a maximum total raw score of 45. Test reliability coefficient is .87. Task administration is discontinued when 6 consecutive words are read incorrectly.

Sight Word Efficiency (i.e., rate of word identification) and Phonemic Decoding Efficiency (i.e., rate of decoding pseudowords) subtests from the Test of Word Reading Efficiency (TOWRE-II; [68]) were used to measure reading rate. The test contains two timed measures, one of real word reading and one of pseudoword decoding. Participants are required to read the items aloud as quickly and accurately as possible. The score reflects the total number of words or nonwords read correctly within a fixed 45-s interval, after which administration is discontinued. The maximum raw score is 108 for Sight Word Efficiency and 65 for Phonemic Decoding Efficiency. Test-retest reliability coefficients for these subtests are .91 and .90, respectively.

Spoonerism Test (adapted from [69]). This test assesses the participants’ ability to segment words and then to synthesize the segments to provide new words. For example, the word pair “Basket Lemon” becomes “Lasket Bemon”. The maximum raw score is 12.

Acknowledgments

The authors thank Christi Gomez for her assistance. The research was supported by a grant from the National Institutes of Health (R01DC004674) to LLH. Correspondence may be addressed to LLH, loriholt@cmu.edu or YG, ygabay@edu.haifa.ac.il.

Data Availability

All data files are available at DOI: 10.1184/R1/6025262.

Funding Statement

The research was supported by a grant from the National Institutes of Health (R01DC004674) to LLH.

References

- 1. American Psychiatric Association. DSM-IV-TR: Diagnostic and statistical manual of mental disorders, text revision. Washington, DC: American Psychiatric Association; 2000;75:78–85.

- 2. Vellutino FR, Fletcher JM, Snowling MJ, Scanlon DM. Specific reading disability (dyslexia): What have we learned in the past four decades? Journal of Child Psychology and Psychiatry. 2004;45(1):2–40.

- 3. Ramus F, Szenkovits G. What phonological deficit? The Quarterly Journal of Experimental Psychology. 2008;61(1):129–41. doi: 10.1080/17470210701508822

- 4. Snowling MJ. Dyslexia: Blackwell Publishing; 2000.

- 5. Rosen S. Auditory processing in dyslexia and specific language impairment: Is there a deficit? What is its nature? Does it explain anything? Journal of phonetics. 2003;31(3–4):509–27.

- 6. Hämäläinen JA, Salminen HK, Leppänen PH. Basic auditory processing deficits in dyslexia: systematic review of the behavioral and event-related potential/field evidence. Journal of learning disabilities. 2013;46(5):413–27. doi: 10.1177/0022219411436213

- 7. Vandermosten M, Boets B, Luts H, Poelmans H, Golestani N, Wouters J, et al. Adults with dyslexia are impaired in categorizing speech and nonspeech sounds on the basis of temporal cues. Proceedings of the National Academy of Sciences. 2010;107(23):10389–94.

- 8. Tallal P. Auditory temporal perception, phonics, and reading disabilities in children. Brain and language. 1980;9(2):182–98.

- 9. Ahissar M. Dyslexia and the anchoring-deficit hypothesis. Trends in cognitive sciences. 2007;11(11):458–65. doi: 10.1016/j.tics.2007.08.015

- 10. Ahissar M, Lubin Y, Putter-Katz H, Banai K. Dyslexia and the failure to form a perceptual anchor. Nature neuroscience. 2006;9(12):1558–64. doi: 10.1038/nn1800

- 11. Jaffe-Dax S, Kimel E, Ahissar M. Shorter cortical adaptation in dyslexia is broadly distributed in the superior temporal lobe and includes the primary auditory cortex. eLife. 2018;7:e30018. doi: 10.7554/eLife.30018

- 12. Perrachione TK, Del Tufo SN, Winter R, Murtagh J, Cyr A, Chang P, et al. Dysfunction of rapid neural adaptation in dyslexia. Neuron. 2016;92(6):1383–97. doi: 10.1016/j.neuron.2016.11.020

- 13. Nicolson RI, Fawcett AJ. Dyslexia, dysgraphia, procedural learning and the cerebellum. Cortex. 2011;47(1):117–27. doi: 10.1016/j.cortex.2009.08.016

- 14. Nicolson RI, Fawcett AJ. Procedural learning difficulties: reuniting the developmental disorders? TRENDS in Neurosciences. 2007;30(4):135–41. doi: 10.1016/j.tins.2007.02.003

- 15. Ullman MT. Contributions of memory circuits to language: The declarative/procedural model. Cognition. 2004;92(1–2):231–70. doi: 10.1016/j.cognition.2003.10.008

- 16. Gabay Y, Thiessen ED, Holt LL. Impaired statistical learning in developmental dyslexia. Journal of Speech, Language, and Hearing Research. 2015;58(3):934–45. doi: 10.1044/2015_JSLHR-L-14-0324

- 17. Gabay Y, Vakil E, Schiff R, Holt LL. Probabilistic category learning in developmental dyslexia: Evidence from feedback and paired-associate weather prediction tasks. Neuropsychology. 2015;29(6):844. doi: 10.1037/neu0000194

- 18. Gabay Y, Holt LL. Incidental learning of sound categories is impaired in developmental dyslexia. Cortex. 2015;73:131–43. doi: 10.1016/j.cortex.2015.08.008

- 19. Gabay Y, Schiff R, Vakil E. Dissociation between the procedural learning of letter names and motor sequences in developmental dyslexia. Neuropsychologia. 2012;50(10):2435–41. doi: 10.1016/j.neuropsychologia.2012.06.014

- 20. Démonet JF, Taylor MJ, Chaix Y. Developmental dyslexia. The Lancet. 2004;363(9419):1451–60.

- 21. Laing EJ, Liu R, Lotto AJ, Holt LL. Tuned with a tune: Talker normalization via general auditory processes. Frontiers in psychology. 2012;3.

- 22. Holt LL. The mean matters: Effects of statistically defined nonspeech spectral distributions on speech categorization. The Journal of the Acoustical Society of America. 2006;120(5):2801–17.

- 23. Holt LL. Temporally nonadjacent nonlinguistic sounds affect speech categorization. Psychological Science. 2005;16(4):305–12. doi: 10.1111/j.0956-7976.2005.01532.x

- 24. Huang J, Holt LL. General perceptual contributions to lexical tone normalization. The Journal of the Acoustical Society of America. 2009;125(6):3983–94. doi: 10.1121/1.3125342

- 25. Huang J, Holt LL. Listening for the norm: adaptive coding in speech categorization. Frontiers in psychology. 2012;3.

- 26. Hufnagle DG, Holt LL, Thiessen ED. Spectral information in nonspeech contexts influences children’s categorization of ambiguous speech sounds. Journal of experimental child psychology. 2013;116(3):728–37. doi: 10.1016/j.jecp.2013.05.008

- 27. Holt LL. Speech categorization in context: Joint effects of nonspeech and speech precursors. The Journal of the Acoustical Society of America. 2006;119(6):4016–26. doi: 10.1121/1.2195119

- 28. Ladefoged P, Broadbent DE. Information conveyed by vowels. The Journal of the acoustical society of America. 1957;29(1):98–104.

- 29. Aravamudhan R, Lotto AJ, Hawks JW. Perceptual context effects of speech and nonspeech sounds: The role of auditory categories. The Journal of the acoustical society of America. 2008;124(3):1695–703. doi: 10.1121/1.2956482

- 30. Huang J, Holt LL. Evidence for the central origin of lexical tone normalization (L). The Journal of the acoustical society of America. 2011;129(3):1145–8. doi: 10.1121/1.3543994

- 31. Huang J, Holt LL. Listening for the norm: adaptive coding in speech categorization. Front Psychol. 2012;3(10):1–6.

- 32. Vitela AD, Warner N, Lotto AJ. Perceptual compensation for differences in speaking style. Frontiers in psychology. 2013;4.

- 33. Vitela AD, Story BH, Lotto AJ. Predicting the effect of talker differences on perceived vowel category. The Journal of the acoustical society of America. 2010;128(4):2349–.

- 34. Vitela AD, Monson BB, Lotto AJ. Phoneme categorization relying solely on high-frequency energy. The Journal of the Acoustical Society of America. 2015;137(1):EL65–EL70. doi: 10.1121/1.4903917

- 35. Jaffe-Dax S, Lieder I, Biron T, Ahissar M. Dyslexics' usage of visual priors is impaired. Journal of vision. 2016;16(9):10–. doi: 10.1167/16.9.10

- 36. Farmer ME, Klein RM. The evidence for a temporal processing deficit linked to dyslexia: A review. Psychonomic bulletin & review. 1995;2(4):460–93.

- 37. Blomert L, Mitterer H, Paffen C. In search of the auditory, phonetic, and/or phonological problems in dyslexia: Context effects in speech perception. Journal of Speech, Language, and Hearing Research. 2004;47(5):1030–47.

- 38. Lotto AJ, Sullivan SC, Holt LL. Central locus for nonspeech context effects on phonetic identification (L). The Journal of the Acoustical Society of America. 2003;113(1):53–6.

- 39. Godfrey JJ, Syrdal-Lasky K, Millay KK, Knox CM. Performance of dyslexic children on speech perception tests. Journal of experimental child psychology. 1981;32(3):401–24.

- 40. Mody M, Studdert-Kennedy M, Brady S. Speech perception deficits in poor readers: auditory processing or phonological coding? Journal of experimental child psychology. 1997;64(2):199–231. doi: 10.1006/jecp.1996.2343

- 41. Reed MA. Speech perception and the discrimination of brief auditory cues in reading disabled children. Journal of experimental child psychology. 1989;48(2):270–92.

- 42. Serniclaes W, Van Heghe S, Mousty P, Carré R, Sprenger-Charolles L. Allophonic mode of speech perception in dyslexia. Journal of experimental child psychology. 2004;87(4):336–61. doi: 10.1016/j.jecp.2004.02.001

- 43. Raven JC, Court JH, Raven J. Standard progressive matrices. 1992. doi: 10.2466/pms.1992.74.3c.1193

- 44. Wechsler D, Coalson DL, Raiford SE. WAIS-III: Wechsler adult intelligence scale: Psychological Corporation; San Antonio, TX; 1997.

- 45. Wolf M, Denckla MB. RAN/RAS: Rapid automatized naming and rapid alternating stimulus tests: Pro-ed; 2005.

- 46. Brunswick N, McCrory E, Price C, Frith C, Frith U. Explicit and implicit processing of words and pseudowords by adult developmental dyslexics: A search for Wernicke's Wortschatz? Brain. 1999;122(10):1901–17.

- 47. Woodcock RW. Woodcock reading mastery tests, revised, examiner's manual: American Guidance Service; 1998.

- 48. Torgesen JK, Rashotte CA, Wagner RK. TOWRE: Test of word reading efficiency: Pro-ed; Austin, TX; 1999.

- 49. Wilson AM, Lesaux NK. Persistence of phonological processing deficits in college students with dyslexia who have age-appropriate reading skills. Journal of Learning Disabilities. 2001;34(5):394–400. doi: 10.1177/002221940103400501

- 50. Wade T, Holt LL. Effects of later-occurring nonlinguistic sounds on speech categorization. The Journal of the acoustical society of America. 2005;118(3):1701–10.

- 51. Klatt DH, Klatt LC. Analysis, synthesis, and perception of voice quality variations among female and male talkers. The Journal of the acoustical society of America. 1990;87(2):820–57.

- 52. Lotto AJ, Kluender KR. General contrast effects in speech perception: Effect of preceding liquid on stop consonant identification. Perception & Psychophysics. 1998;60(4):602–19.

- 53. Schneider W, Eschman A, Zuccolotto A. E-Prime User's Guide. Psychology Software Tools Inc; Pittsburgh, USA: 2002.

- 54. Laing EJ, Liu R, Lotto AJ, Holt LL. Tuned with a tune: Talker normalization via general auditory processes. Frontiers in psychology. 2012;3:203. doi: 10.3389/fpsyg.2012.00203

- 55. Huang J, Holt LL. Listening for the norm: adaptive coding in speech categorization. Frontiers in psychology. 2012;3:10. doi: 10.3389/fpsyg.2012.00010

- 56. Mann VA. Influence of preceding liquid on stop-consonant perception. Perception & Psychophysics. 1980;28(5):407–12.

- 57. Jaffe-Dax S, Raviv O, Jacoby N, Loewenstein Y, Ahissar M. A computational model of implicit memory captures dyslexics' perceptual deficits. Journal of Neuroscience. 2015;35(35):12116–26. doi: 10.1523/JNEUROSCI.1302-15.2015

- 58. Banai K, Ahissar M. Poor sensitivity to sound statistics impairs the acquisition of speech categories in dyslexia. Language, Cognition and Neuroscience. 2018;33(3):321–32.

- 59. McDermott JH, Schemitsch M, Simoncelli EP. Summary statistics in auditory perception. Nature neuroscience. 2013;16(4):493. doi: 10.1038/nn.3347

- 60. Beattie RL, Lu Z-L, Manis FR. Dyslexic adults can learn from repeated stimulus presentation but have difficulties in excluding external noise. PloS one. 2011;6(11):e27893. doi: 10.1371/journal.pone.0027893

- 61. Agus TR, Carrión-Castillo A, Pressnitzer D, Ramus F. Perceptual learning of acoustic noise by dyslexic individuals. J Speech Lang Hearing Res. 2013;57:1069–77.

- 62. Wijnen F, Kappers AM, Vlutters LD, Winkel S. Auditory frequency discrimination in adults with dyslexia: a test of the anchoring hypothesis. Journal of Speech, Language, and Hearing Research. 2012;55(5):1387–94. doi: 10.1044/1092-4388(2012/10-0302)

- 63. Di Filippo G, Zoccolotti P, Ziegler JC. Rapid naming deficits in dyslexia: A stumbling block for the perceptual anchor theory of dyslexia. Developmental Science. 2008;11(6).

- 64. Georgiou GK, Ghazyani R, Parrila R. Are RAN deficits in university students with dyslexia due to defective lexical access, impaired anchoring, or slow articulation? Annals of Dyslexia. 2018:1–19. doi: 10.1007/s11881-018-0158-x

- 65. Noordenbos MW, Serniclaes W. The categorical perception deficit in dyslexia: A meta-analysis. Scientific Studies of Reading. 2015;19(5):340–59.

- 66. Vandermosten M, Boets B, Luts H, Poelmans H, Wouters J, Ghesquiere P. Impairments in speech and nonspeech sound categorization in children with dyslexia are driven by temporal processing difficulties. Research in developmental disabilities. 2011;32(2):593–603. doi: 10.1016/j.ridd.2010.12.015

- 67. Wade T, Holt LL. Perceptual effects of preceding nonspeech rate on temporal properties of speech categories. Perception & psychophysics. 2005;67(6):939–50.

- 68. Torgesen JK, Wagner R, Rashotte C. TOWRE–2 Test of Word Reading Efficiency. 1999.

- 69. Brunswick N, McCrory E, Price C, Frith C, Frith U. Explicit and implicit processing of words and pseudowords by adult developmental dyslexics: A search for Wernicke's Wortschatz? Brain. 1999;122(10):1901–17.
