Abstract

This study analyzes sub-pixel misregistration between multi-spectral images acquired by the Multi-Spectral Instrument (MSI) aboard the Sentinel-2A remote sensing satellite and explores its potential for moving target and cloud detection. By virtue of its hardware design, MSI’s detectors exhibit a parallax angle that leads to sub-pixel shifts that are corrected with special pre-processing routines. However, these routines do not correct shifts for moving and/or high altitude objects. In this letter, we apply a phase correlation approach to detect sub-pixel shifts between B2 (blue), B3 (green) and B4 (red) Sentinel-2A/MSI images. We show that shifts of more than 1.1 pixels can be observed for moving targets, such as airplanes and clouds, and can be used for cloud detection. We demonstrate that the proposed approach can detect clouds that are not identified in the built-in cloud mask provided within the Sentinel-2A Level-1C (L1C) product.

Index Terms: cloud detection, multi-spectral misregistration, phase correlation, Sentinel-2, sub-pixel

I. Introduction

The Multi-Spectral Instrument (MSI) aboard the Sentinel-2A remote sensing satellite acquires images of Earth’s surface in thirteen spectral bands [1]. Bands 2 (blue), 3 (green), 4 (red) and 8 (near infrared, NIR) are acquired at 10 m spatial resolution; red edge bands 5, 6, 7, NIR band 8a and shortwave infrared (SWIR) bands 11 and 12 are acquired at 20 m spatial resolution; and bands 1, 9 and 10, which are designed for aerosol retrieval, water vapor retrieval and cirrus detection, respectively, are acquired at 60 m spatial resolution. The MSI is designed in such a way that the sensor’s detectors for the different spectral bands are displaced from each other. This introduces a parallax angle between spectral bands that can result in along-track displacements of up to 17 km in the Sentinel-2A scene [2]. Corrections using a numerical terrain model are performed to remove these inter-band displacements, so that the MSI images acquired in different spectral bands are co-registered at the sub-pixel level to meet the requirement of 0.3 pixels at 99.7% confidence. However, these pre-processing routines cannot fully correct displacements for high altitude objects, e.g. clouds, or fast moving objects such as airplanes or cars. Therefore, these types of objects appear displaced between images of different spectral bands. The magnitude of the displacement varies among pairs of bands depending on the inter-band parallax angle. For example, at 10 m spatial resolution, the maximum displacement is observed for bands 2 (blue) and 4 (red). Hence, the multi-spectral displacement can be used as a feature to detect clouds or moving objects. This idea has been exploited for geostationary satellites, where spectral bands are acquired at slightly different times [3], [4], [5], and for very high and moderate spatial resolution satellites, where a parallax angle between panchromatic and multi-spectral bands exists [6], [7], [8], [9], [10].

Various geometric issues for Sentinel-2A/MSI images have already been addressed in previous studies including co-registration of multi-temporal Sentinel-2A/MSI images [11], and co-registration of Sentinel-2A/MSI images with images acquired by other sensors, e.g. Operational Land Imager (OLI) aboard Landsat-8 satellite [11], [12], [13]. For these studies, well-established image registration techniques were exploited that allow automatic co-registration of satellite images with sub-pixel accuracy. Both feature-based [12] and area-based [11] approaches proved effective in improving co-registration at sub-pixel level between multi-temporal Sentinel-2A/MSI images and multi-source images (Sentinel-2A/MSI and Landsat-8/OLI). The European Space Agency (ESA) regularly publishes “Data Quality Reports” which provide quantitative overviews of Sentinel-2 product performance, including geometric aspects. However, aspects related to multi-spectral misregistration of Sentinel-2A/MSI images and potential applications that can be derived from this characteristic of the data, have not been documented in the literature yet. Therefore, in this letter, we aim to analyze the misregistration between Sentinel-2A/MSI images acquired in the visible spectrum at 10 m spatial resolution and explore its potential use for detection of clouds and moving objects.

II. Methodology

Phase correlation was the primary method used in this study for detecting misregistration between multi-spectral Sentinel-2A images at sub-pixel scale [14], [15], [16]. Phase correlation belongs to a group of area-based image matching methods, and is a well-established technique for estimating translation and rotation (through conversion to polar coordinates) between images. In particular, it finds correspondence between reference and sensed (slave) images by estimating a cross-correlation measure in the frequency domain, using the Fourier transform. This property of the phase correlation technique allows detection of sub-pixel shifts between images. Advantages of phase correlation include [15]: high accuracy (at sub-pixel scale), error analysis to remove unreliable and erroneous shifts, and robustness. The latter refers to the ability of phase correlation to effectively detect shifts in multi-temporal or multi-spectral images. To detect sub-pixel shifts between reference and sensed images, we used the computationally efficient procedure proposed by Guizar-Sicairos et al. (2008) [16], which is based on nonlinear optimization and Discrete Fourier Transforms (DFTs). This approach has previously been used to co-register Sentinel-2A/MSI and Landsat-8/OLI images [11]. For details on the algorithmic implementation of phase correlation for sub-pixel image registration, we refer the reader to [15], [16].
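The idea of phase correlation at sub-pixel scale can be illustrated with a minimal NumPy sketch. It is not the implementation used in this study: instead of the matrix-multiply DFT refinement of [16], it upsamples the correlation surface by zero-padding the cross-power spectrum, which is simpler but slower. The function names, the `upsample` parameter and the sign convention are assumptions made for illustration.

```python
import numpy as np


def phase_correlation_shift(reference, sensed, upsample=20):
    """Return (dy, dx) in pixels such that sensed(y, x) ~ reference(y - dy, x - dx).

    Precision is 1/upsample pixel. Illustrative zero-padding variant,
    not the efficient refinement described in [16].
    """
    reference = np.asarray(reference, dtype=float)
    sensed = np.asarray(sensed, dtype=float)
    if reference.shape != sensed.shape:
        raise ValueError("reference and sensed windows must have the same shape")

    # Cross-power spectrum, normalized so that only the phase difference remains.
    cross = np.fft.fft2(sensed) * np.conj(np.fft.fft2(reference))
    cross /= np.maximum(np.abs(cross), 1e-12)

    # Zero-pad the centered spectrum; its inverse is an upsampled correlation surface.
    n_rows, n_cols = cross.shape
    big_rows, big_cols = n_rows * upsample, n_cols * upsample
    padded = np.zeros((big_rows, big_cols), dtype=complex)
    r0 = big_rows // 2 - n_rows // 2
    c0 = big_cols // 2 - n_cols // 2
    padded[r0:r0 + n_rows, c0:c0 + n_cols] = np.fft.fftshift(cross)
    surface = np.fft.ifft2(np.fft.ifftshift(padded)).real

    # Locate the peak; indices past the midpoint correspond to negative shifts.
    peak_row, peak_col = np.unravel_index(np.argmax(surface), surface.shape)
    if peak_row > big_rows // 2:
        peak_row -= big_rows
    if peak_col > big_cols // 2:
        peak_col -= big_cols
    return peak_row / upsample, peak_col / upsample


def fourier_shift(image, dy, dx):
    """Shift an image by (dy, dx) pixels with periodic boundaries (demo helper)."""
    fy = np.fft.fftfreq(image.shape[0])[:, None]
    fx = np.fft.fftfreq(image.shape[1])[None, :]
    phase = np.exp(-2j * np.pi * (fy * dy + fx * dx))
    return np.fft.ifft2(np.fft.fft2(image) * phase).real
```

For a synthetic test, a random 64 × 64 window can be shifted with `fourier_shift` and passed with the original to `phase_correlation_shift`; the recovered shift should agree with the applied one to within roughly the chosen 1/`upsample` step.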

We applied phase correlation on a sliding window basis (patches), whereby a sub-pixel shift between two images is detected for each window of size nw × nw with step size ns (Fig. 1). The window should be large enough to contain the details and features needed to detect the shift between images. At the same time, it should not be so large that the shift between images within the window is no longer uniform. The window size also limits the size of objects that can be detected through misregistration of images. The step size with which the window is slid determines the spatial resolution of the resulting shift map. For 10 m images, a window step size of 6 pixels (ns=6) results in a map with an effective spatial resolution of 60 m, i.e. the shift is detected every 60 m (without additional resampling).

Fig. 1.

A sliding window with the size nw × nw and step size ns for which a phase correlation is run to estimate sub-pixel misregistration.
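The sliding-window procedure can be sketched as follows. This is an illustrative outline under stated assumptions rather than the code used in the study: `shift_map` and `integer_phase_shift` are hypothetical names, and the default estimator only resolves whole-pixel shifts so that the example stays short; any sub-pixel estimator with the same signature can be passed in its place.

```python
import numpy as np


def integer_phase_shift(reference, sensed):
    """Whole-pixel phase correlation; a stand-in for a sub-pixel estimator."""
    cross = np.fft.fft2(sensed) * np.conj(np.fft.fft2(reference))
    cross /= np.maximum(np.abs(cross), 1e-12)
    surface = np.fft.ifft2(cross).real
    row, col = np.unravel_index(np.argmax(surface), surface.shape)
    n_rows, n_cols = surface.shape
    # Indices past the midpoint correspond to negative shifts.
    dy = row - n_rows if row > n_rows // 2 else row
    dx = col - n_cols if col > n_cols // 2 else col
    return float(dy), float(dx)


def shift_map(reference, sensed, nw, ns, estimator=integer_phase_shift):
    """Slide an nw x nw window with step ns; return per-window (dy, dx) maps."""
    n_rows = (reference.shape[0] - nw) // ns + 1
    n_cols = (reference.shape[1] - nw) // ns + 1
    dy_map = np.zeros((n_rows, n_cols))
    dx_map = np.zeros((n_rows, n_cols))
    for i in range(n_rows):
        for j in range(n_cols):
            r, c = i * ns, j * ns
            dy_map[i, j], dx_map[i, j] = estimator(
                reference[r:r + nw, c:c + nw],
                sensed[r:r + nw, c:c + nw],
            )
    return dy_map, dx_map
```

Each cell of the returned maps corresponds to one window position, so with 10 m pixels and ns=6 neighboring cells are 60 m apart on the ground.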

The phase correlation approach was applied to multi-spectral (B2 – blue, B3 – green and B4 – red) Sentinel-2A images at 10 m spatial resolution. The parallax angle between odd/even detectors for bands B2, B3 and B4 is 0.022, 0.030 and 0.034 radians, respectively [17]. These values, along with the detectors’ configuration, lead to the following inter-band parallax angles: 0.006 radians for B4-B2 bands, 0.002 radians for B4-B3 bands, and 0.004 radians for B3-B2 bands. The corrections applied during pre-processing fix parallax-induced multi-spectral shifts for static and low altitude objects to meet the requirement of 0.3 pixels for multi-spectral band registration [2]. It is assumed, however, that larger shifts (>0.3 pixels) will be observed for moving and high altitude objects, and that these can be detected with a sub-pixel phase correlation approach. These larger shifts can be separated from shifts for static and low altitude objects, which constitutes a basis for detecting moving and high altitude objects such as airplanes and clouds. For example, the inter-band parallax angle for the B4-B2 pair is $\Delta\alpha_{B4,B2} = 0.006$ radians; at the nominal Sentinel-2A altitude of $h = 786$ km and speed of $v = 7.44$ km/s, this angular difference introduces a time lag [6] of $\Delta t_{B4,B2} = \Delta\alpha_{B4,B2}\,h/v = 0.63$ s. Therefore, for static objects at 500 m altitude, the pixel displacement (between B2 and B4 images) will be $0.006 \times 500 = 3$ m, or 0.3 pixels at 10 m resolution; for moving objects, the minimum detectable target motion at the 0.3 pixel level will be ~4.7 m/s (17 km/h).
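The arithmetic in the B4-B2 example can be reproduced with a few lines of Python. The function names are hypothetical; the numerical inputs (0.006 radians, 786 km, 7.44 km/s, 10 m pixels, 0.3 pixel requirement) are those quoted in the text, and the small-angle approximation is assumed for the height-induced displacement.

```python
def time_lag_s(parallax_rad, altitude_km, speed_km_s):
    """Time lag between two bands: parallax angle times altitude over speed."""
    return parallax_rad * altitude_km / speed_km_s


def height_displacement_m(parallax_rad, object_height_m):
    """Apparent displacement of a static object at a given height (small angles)."""
    return parallax_rad * object_height_m


def min_detectable_speed_m_s(shift_px, pixel_size_m, lag_s):
    """Speed at which a moving target is displaced by shift_px during the time lag."""
    return shift_px * pixel_size_m / lag_s


lag = time_lag_s(0.006, 786.0, 7.44)                  # about 0.63 s
displacement = height_displacement_m(0.006, 500.0)    # about 3 m, i.e. 0.3 px at 10 m
speed = min_detectable_speed_m_s(0.3, 10.0, lag)      # about 4.7 m/s
print(f"lag={lag:.2f} s, displacement={displacement:.1f} m, "
      f"speed={speed:.1f} m/s ({speed * 3.6:.0f} km/h)")
```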

Finally, the NIR band (B8) was not used in this study for two reasons: (i) the parallax angle for band B8 is close to that of B3 (green), and therefore it does not lead to shifts larger than those in the visible bands; (ii) the spectral response of NIR differs significantly from that of the visible bands, especially over vegetation, which caused the phase correlation to detect erroneous shifts between images.

III. Data Description

We used the standard Sentinel-2A Level-1C (L1C) product, which is radiometrically and geometrically corrected with ortho-rectification and provides top of atmosphere (TOA) reflectance values [2]. Along with quality information, L1C also contains a built-in vector cloud mask. The product is provided in tiles of 109.8 km × 109.8 km size in the Universal Transverse Mercator (UTM) projection with the World Geodetic System 1984 (WGS84) datum. Each Sentinel-2 tile is referenced through the U.S. Military Grid Reference System (MGRS), with a tile identifier consisting of two numbers and three letters, e.g. 16TCK. The first two numbers in the tile identifier correspond to the UTM zone, while the remaining three letters correspond to the tile location [17]. Three Sentinel-2A scenes covering two study areas (Table I) and featuring diverse land cover (Fig. 2) were used in this study to investigate multi-spectral misregistration and the potential for detecting moving objects and clouds. The first area, in the US, consists mainly of agricultural land; the second, in the United Arab Emirates (UAE), includes urban areas and desert. The US scenes were used to explore the potential of using multi-spectral image misregistration for cloud detection, and the UAE scene was used to detect moving objects.
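As a small illustration of the tile naming scheme described above, the following sketch splits a tile identifier into its UTM zone and three-letter location code. The function name `parse_tile_id` is hypothetical, and the check that the zone lies between 1 and 60 reflects the standard UTM zone range rather than anything stated in the product specification cited here.

```python
import re

# Two digits (UTM zone) followed by three letters (tile location).
_TILE_PATTERN = re.compile(r"^(\d{2})([A-Z]{3})$")


def parse_tile_id(tile_id):
    """Split a Sentinel-2 tile identifier such as '16TCK' into (zone, letters)."""
    match = _TILE_PATTERN.match(tile_id.strip().upper())
    if match is None:
        raise ValueError(f"not a valid tile identifier: {tile_id!r}")
    zone = int(match.group(1))
    if not 1 <= zone <= 60:
        raise ValueError(f"UTM zone out of range: {zone}")
    return zone, match.group(2)
```

For the two tiles used in this study, `parse_tile_id("16TCK")` yields zone 16 and `parse_tile_id("40RCN")` yields zone 40.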

TABLE I.

Description of data used in the study

| Region/Country | Sentinel-2 Tile | Date of acquisition |
|---|---|---|
| Bondville, IL, US | 16TCK | 15 June 2016 and 21 May 2017 |
| Dubai, United Arab Emirates (UAE) | 40RCN | 13 June 2017 |

Fig. 2.

Examples of TOA true color images (combination of bands 4, 3, 2) acquired by Sentinel-2A/MSI over study areas: Bondville, IL, US on 15 June 2016 (a) and Dubai, UAE on 13 June 2017 (b).

IV. Results

Table II shows the average absolute shifts between different combinations of bands B2, B3 and B4 for subsets from the 15 June 2016 and 13 June 2017 Sentinel-2A scenes, without the presence of clouds and/or moving objects. The estimated shifts are within the requirement of 0.3 pixels and conform to results published in the ESA “Data Quality Report” [18]. Our results are also in agreement with the relative parallax angles between bands B2, B3 and B4, as the largest shift (in terms of average and standard deviation) is observed between bands B4 and B2 (parallax angles are 0.034 and 0.022 radians), and the smallest shift is observed between bands B4 and B3 (parallax angles are 0.034 and 0.030 radians).

TABLE II.

Average shifts (in pixels at 10 m) with standard deviation between multi-spectral Sentinel-2A images

| Scene | Number of points | Band combination | Shift, pixels |
|---|---|---|---|
| Dubai, 13 June 2017 | 154449 | B3-B2 | 0.037±0.028 |
| | | B4-B3 | 0.033±0.025 |
| | | B4-B2 | 0.043±0.089 |
| US, 15 June 2016 | 104329 | B3-B2 | 0.049±0.057 |
| | | B4-B3 | 0.051±0.053 |
| | | B4-B2 | 0.041±0.063 |

The magnitude of shifts between visible spectral bands in Sentinel-2A images increases when moving or high altitude objects are present. Fig. 3 shows a Sentinel-2A true color image as well as shift maps for pairs of B2, B3, and B4 bands. A moving airplane can be seen with a “rainbow” effect due to shifts between multi-spectral bands, and these shifts are observed in the shift maps calculated using a phase correlation approach with a sliding window (window size nw=16, step size ns=2). Misregistration shifts between multi-spectral images for a moving airplane are 0.5 to 1.1 pixels depending on the bands (Table III). However, shifts for standing airplanes (shown in the bottom left part of Fig. 3(a)) are within the ESA requirement, i.e. less than 0.3 pixels (Fig. 3 (b, c, d)).

Fig. 3.

A subset of Sentinel-2A true color image (combination of bands B4, B3, and B2) acquired on 13 June 2017 (a). Shift maps were estimated from different pairs of visible bands at 10 m spatial resolution using a phase correlation approach with window size nw=16 and step size ns=2: bands 3 and 2 (b); bands 4 and 3 (c); and bands 4 and 2 (d).

TABLE III.

Average shifts (in pixels at 10 m) with standard deviation between multi-spectral Sentinel-2A images for a moving airplane in the Dubai scene acquired on 13 June 2017. Shifts were averaged over a 5×5 window centered on the moving airplane.

| Band combination | Shift, pixels |
|---|---|
| B3-B2 | 0.652±0.306 |
| B4-B3 | 0.500±0.142 |
| B4-B2 | 1.104±0.645 |
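The per-target statistics in Table III are averages over a 5×5 neighborhood of the shift map. A minimal sketch of that aggregation is given below; it assumes that the input is a map of shift magnitudes, that the window is clipped at the map borders, and that the population standard deviation is used, none of which is specified in the text. The name `window_mean_std` is hypothetical.

```python
import numpy as np


def window_mean_std(magnitude_map, row, col, half=2):
    """Mean and standard deviation over a (2*half+1)^2 window centered at (row, col).

    The window is clipped at the map borders; std uses ddof=0 (an assumption).
    """
    magnitude_map = np.asarray(magnitude_map, dtype=float)
    r0, r1 = max(row - half, 0), min(row + half + 1, magnitude_map.shape[0])
    c0, c1 = max(col - half, 0), min(col + half + 1, magnitude_map.shape[1])
    patch = magnitude_map[r0:r1, c0:c1]
    return float(patch.mean()), float(patch.std())
```

Given per-window `dy` and `dx` maps, a magnitude map can be formed with `np.hypot(dy, dx)` before calling this function at the map cell that contains the target.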

It should be noted, however, that successful detection of moving targets depends on the window size: if shifts are on the order of the window size, detecting them within the window by phase correlation becomes problematic. In that case, the window size should be increased.

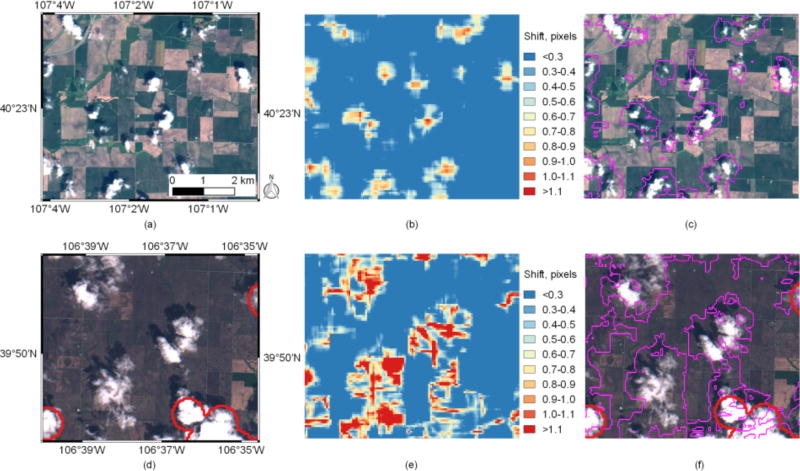

Multi-spectral misregistration of similar magnitude is also observed for clouds in Sentinel-2A images, and may be used as one of the criteria for detecting clouds. Fig. 4 shows subsets of Sentinel-2A images acquired over the US (tile 16TCK) on 15 June 2016 and 21 May 2017 with the internal cloud mask provided for the L1C product, the detected shifts between bands 4 and 2 using the phase correlation approach with a window size nw=64 and step size ns=6, and the derived potential cloud mask from the shifts using a threshold of 0.2 pixels.

Fig. 4.

Example of cloud detection for Sentinel-2A/MSI images acquired over the US (tile 16TCK) on 15 June 2016 (a) and 21 May 2017 (d). True color images (combination of bands 4, 3 and 2) at 10 m spatial resolution along with the built-in cloud mask (in red) are shown in subplots (a) and (d); shifts estimated from band 4 and 2 images using phase correlation and a sliding window with parameters nw=64 and ns=6 are shown in (b) and (e); cloud masks (in magenta) derived from the multi-spectral misregistration using a threshold of 0.2 pixels for shifts are shown in subplots (c) and (f).
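Deriving a potential cloud mask from a shift map amounts to thresholding the detected shifts. The sketch below assumes that the threshold is applied to the magnitude of the (dy, dx) shift vector, which the text does not state explicitly; the function name `potential_cloud_mask` is hypothetical and the threshold is left as a required parameter.

```python
import numpy as np


def potential_cloud_mask(dy_map, dx_map, threshold_px):
    """Flag shift-map cells whose shift magnitude exceeds threshold_px (in pixels)."""
    magnitude = np.hypot(np.asarray(dy_map, dtype=float),
                         np.asarray(dx_map, dtype=float))
    return magnitude > threshold_px
```

With the threshold quoted in the Fig. 4 caption, the call would be `potential_cloud_mask(dy_map, dx_map, 0.2)`. The resulting mask has the resolution of the shift map (60 m for ns=6 on 10 m bands), so it would need to be resampled before being overlaid on the 10 m imagery.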

Visual inspection of Fig. 4 shows that the built-in cloud mask of the L1C product does not always detect clouds, while the mask derived from the multi-spectral misregistration detects most clouds. It is worth mentioning that the proposed approach is not intended to be used as a stand-alone criterion for cloud detection, but should rather be combined with already established criteria such as those suggested in [19] and [20]. The main strength of the presented approach is its ability to detect small and thin clouds that lead to sub-pixel misregistration in the visible bands of Sentinel-2A images. A limitation of the proposed approach is the difficulty of detecting clouds with high reflectance values and with a size substantially larger than the sliding window. In this case, the window to which phase correlation is applied will not contain enough detail to detect any sub-pixel shifts between images. However, existing algorithms for cloud detection can handle such cases. There can also be situations in which the effects of cloud motion and high altitude cancel each other out, so that no pixel displacement is observed. This could happen when the object is moving strictly along the satellite track in the North/South direction. However, pixel displacements for objects moving in other directions will not be compensated by stereoscopic (altitude) parallax. One approach to reduce the likelihood of this situation for Sentinel-2 is to take advantage of the different parallax angles between multiple bands (B2, B3, B4): since the combinations of object height and velocity that compensate pixel displacement differ between band pairs, one can estimate the maximum pixel displacement over the three band combinations (B2-B4, B2-B3, B3-B4).
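The suggestion of taking the maximum pixel displacement over the three band combinations can be sketched as a cell-wise maximum of the corresponding shift-magnitude maps. This is only an outline of the idea under the assumption that the three maps share the same grid; the function name `max_displacement` is hypothetical.

```python
import numpy as np


def max_displacement(*magnitude_maps):
    """Cell-wise maximum over shift-magnitude maps computed for different band pairs."""
    if not magnitude_maps:
        raise ValueError("at least one shift-magnitude map is required")
    stacked = np.stack([np.asarray(m, dtype=float) for m in magnitude_maps])
    return stacked.max(axis=0)
```

A cell is then flagged if any of the band pairs shows a displacement above the chosen threshold, which reduces the chance that height and motion effects cancel in all pairs at once.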

V. Conclusions

In this letter, we demonstrate a concept for exploiting multispectral misregistration in Sentinel-2A/MSI images at 10 m spatial resolution to detect moving targets and clouds. Because of a parallax angle between detectors in the MSI instrument, misregistration occurs in multi-spectral images acquired by Sentinel-2A. These shifts are corrected with special pre-processing routines using a numerical terrain model; however, these routines cannot correct these shifts for moving or high altitude objects. Although this characteristic of the data might be considered as a disadvantageous artifact, in this letter we have shown that it can be beneficial for some applications. Moving and high altitude objects can be shifted more than 1 pixel at 10 m resolution for Sentinel-2A visible spectral bands, and these shifts can be detected using a phase correlation approach at sub-pixel scale. We have demonstrated that this Sentinel-2A/MSI feature of multi-spectral misregistration has the potential for detecting moving targets, e.g. airplanes, and clouds. Further work should be directed to combining this approach with other criteria to make this approach applicable in the operational context.

Acknowledgments

This work was supported by the NASA grant “Support for the HLS (Harmonized Landsat-Sentinel-2) Project” (no. NNX16AN88G).

Contributor Information

Sergii Skakun, Department of Geographical Sciences, University of Maryland, College Park, MD 20742 USA.

Eric Vermote, Terrestrial Information Systems Laboratory (Code 619), NASA Goddard Space Flight Center, Greenbelt, MD 20771 USA.

Jean-Claude Roger, Department of Geographical Sciences, University of Maryland, College Park, MD 20742 USA.

Christopher Justice, Department of Geographical Sciences, University of Maryland, College Park, MD 20742 USA.

References

- 1. Drusch M, et al. Sentinel-2: ESA's optical high-resolution mission for GMES operational services. Remote Sens. Environ. 2012;120:25–36.

- 2. Gascon F, et al. Copernicus Sentinel-2A calibration and products validation status. Remote Sens. 2017;9(6) Art. no. 584.

- 3. Leese JA, Novak CS, Clark BB. An automated technique for obtaining cloud motion from geosynchronous satellite data using cross correlation. J. Appl. Meteorol. 1971;10(1):118–132.

- 4. Vivone G, Addesso P, Conte R, Longo M, Restaino R. A class of cloud detection algorithms based on a map-mrf approach in space and time. IEEE Trans. Geosci. Remote Sens. 2014;52(8):5100–5115.

- 5. Robinson WD, Franz BA, Mannino A, Ahn JH. Cloud motion in the GOCI/COMS ocean colour data. Int. J. Remote Sens. 2016;37(20):4948–4963.

- 6. Kääb A, Leprince S. Motion detection using near-simultaneous satellite acquisitions. Remote Sens. Environ. 2014;154:164–179.

- 7. Meng L, Kerekes JP. Object tracking using high resolution satellite imagery. IEEE J. Sel. Topics Appl. Earth Observ. Remote Sens. 2012;5(1):146–152.

- 8. Easson G, DeLozier S, Momm HG. Estimating speed and direction of small dynamic targets through optical satellite imaging. Remote Sensing. 2010;2(5):1331–1347.

- 9. Latry C, Panem C, Dejean P. Cloud detection with SVM technique. Proc. IEEE Int. Geosci. Remote Sens. Symp. 2007 Jul:448–451.

- 10. Kim TY, Choi MJ. Image Registration for Cloudy KOMPSAT-2 Imagery Using Disparity Clustering. Korean J. Remote Sens. 2009;25(3):287–294.

- 11. Skakun S, Roger J-C, Vermote EF, Masek JG, Justice CO. Automatic sub-pixel co-registration of Landsat-8 Operational Land Imager and Sentinel-2A Multi-Spectral Instrument images using phase correlation and machine learning based mapping. Int. J. Digital Earth. 2017. doi: 10.1080/17538947.2017.1304586.

- 12. Yan L, Roy DP, Zhang H, Li J, Huang H. An automated approach for sub-pixel registration of Landsat-8 Operational Land Imager (OLI) and Sentinel-2 Multi Spectral Instrument (MSI) imagery. Remote Sens. 2016;8(6) Art. no. 520.

- 13. Storey J, Roy DP, Masek J, Gascon F, Dwyer J, Choate M. A note on the temporary misregistration of Landsat-8 Operational Land Imager (OLI) and Sentinel-2 Multi Spectral Instrument (MSI) imagery. Remote Sens. Environ. 2016;186:121–122.

- 14. Kuglin CD, Hines DC. The phase correlation image alignment method. Proc. Int. Conf. Cybernetics Society. 1975:163–165.

- 15. Foroosh H, Zerubia JB, Berthod M. Extension of phase correlation to subpixel registration. IEEE Trans. Image Process. 2002;11(3):188–200. doi: 10.1109/83.988953.

- 16. Guizar-Sicairos M, Thurman ST, Fienup JR. Efficient subpixel image registration algorithms. Opt. Lett. 2008;33(2):156–158. doi: 10.1364/ol.33.000156.

- 17. Gatti A, Bertolini A. Sentinel-2 Products Specification Document. European Space Agency. 2016 Oct 24;(14.2) [Online]. Available: https://sentinel.esa.int/documents/247904/685211/Sentinel-2-Product-Specifications-Document.

- 18. Data Quality Report. [Online]. Available: https://sentinel.esa.int/web/sentinel/data-product-quality-reports.

- 19. Zhu Z, Wang S, Woodcock CE. Improvement and expansion of the Fmask algorithm: cloud, cloud shadow, and snow detection for Landsats 4–7, 8, and Sentinel 2 images. Remote Sens. Environ. 2015;159:269–277.

- 20. Vermote E, Justice C, Claverie M, Franch B. Preliminary analysis of the performance of the Landsat 8/OLI land surface reflectance product. Remote Sens. Environ. 2016;185:46–56. doi: 10.1016/j.rse.2016.04.008.