Abstract

A common challenge in systems biology is quantifying the effects of unknown parameters and estimating parameter values from data. For many systems, this task is computationally intractable due to expensive model evaluations and large numbers of parameters. In this work, we investigate a new method for performing sensitivity analysis and parameter estimation of complex biological models using techniques from uncertainty quantification. The primary advance is a significant improvement in computational efficiency from the replacement of model simulation by evaluation of a polynomial surrogate model. We demonstrate the method on two models of mating in budding yeast: a smaller ODE model of the heterotrimeric G-protein cycle, and a larger spatial model of pheromone-induced cell polarization. A small number of model simulations are used to fit the polynomial surrogates, which are then used to calculate global parameter sensitivities. The surrogate models also allow rapid Bayesian inference of the parameters via Markov chain Monte Carlo (MCMC) by eliminating model simulations at each step. Application to the ODE model shows results consistent with published single-point estimates for the model and data, with the added benefit of calculating the correlations between pairs of parameters. On the larger PDE model, the surrogate models allowed convergence for the distribution of 15 parameters, which otherwise would have been computationally prohibitive using simulations at each MCMC step. We inferred parameter distributions that in certain cases peaked at values different from published values, and showed that a wide range of parameters would permit polarization in the model. Strikingly our results suggested different diffusion constants for active versus inactive Cdc42 to achieve good polarization, which is consistent with experimental observations in another yeast species S. pombe.

Author summary

Mathematical models in systems biology often have many parameters, such as biochemical reaction rates, whose true values are unknown. When the number of parameters is large, it becomes computationally difficult to analyze their effects and to estimate parameter values from experimental data. This is especially challenging when the model is expensive to evaluate, which is the case for large spatial models. In this paper, we introduce a methodology for using surrogate models to drastically reduce the cost of parameter analysis in such models. By using a polynomial approximation to the full mathematical model, parameter sensitivity analysis and parameter estimation can be performed without the need for a large number of model evaluations. We explore the application of this methodology to two models for yeast mating polarization. A simpler non-spatial model is used to demonstrate the techniques and compare with published results, and a larger spatial model is used to demonstrate the computational savings offered by this method.

Introduction

Mathematical models provide a more quantitative description of biological systems compared to qualitative arrow diagrams. A major tool of mathematical modeling is differential equations representing the dynamics of various components of the system which may be a cell, organism, or ecosystem [1–3]. In models describing cellular dynamics, the components are typically different protein species, and their changing levels depend on the biochemical reactions between the species [4, 5]. If spatial dynamics, such as diffusion and advection, are present and considered, partial differential equation (PDE) models must be used; otherwise, the system can be modeled by ordinary differential equations (ODE).

One of the challenges in modeling is identifying the parameters from data [6, 7]. For cellular models these parameters include the kinetic rate constants in the various reaction terms, as well as initial conditions of the modeled species. Two important tools of parameter analysis are sensitivity analysis and parameter estimation. Parameter sensitivity analysis is used to quantify the degree to which each parameter affects an output of interest. Two general types of sensitivity analysis, local and global sensitivity analysis, have been widely used. In local sensitivity analysis, sensitivities are evaluated at a single parameter set, whereas in global sensitivity analysis, sensitivities are evaluated across the entire parameter space. These analyses have been widely applied, such as to models in epidemiology [8–12], signalling pathways [13–15], physiology [16], and wound healing [17].

For parameter estimation, two major approaches are Bayesian and maximum likelihood [7, 18]. The primary difference between these two is that Bayesian methods infer a probability distribution for the parameters based on the available data, whereas maximum likelihood methods will provide a single-point estimate. In systems biology, parameter estimation has been widely applied, via both Bayesian inference [11, 19–21] and optimization methods [22–25]. An important advantage of the Bayesian approach is a more explicit representation of the uncertainty in the parameter estimates; however, that usually comes at a price of high computational cost for estimating the distribution by extensive sampling.

In general, global sensitivity analysis and parameter estimation both require sampling of the parameter space. For systems with large parameter counts, this can become very challenging due to the curse of dimensionality. Too many parameters can make sampling of the parameter space computationally intractable, especially for partial differential equation models that are expensive to solve. Many advances have been made in reducing computational cost in the field of uncertainty quantification (UQ), which is concerned with the characterization and reduction of uncertainty in mathematical models [26–28]. Polynomial approximation has proven to be a key tool in uncertainty quantification. System outputs can be approximated by an orthogonal polynomial expansion (polynomial chaos), allowing for straight-forward computation of statistical quantities [29].

In this paper, we apply a method for parameter sensitivity analysis and parameter estimation that uses polynomial approximation to significantly reduce the computational cost for large problems. A key step in the proposed method is the construction of a polynomial surrogate model. This surrogate model allows for sampling methods to be applied without the need to solve the full system for each sample. The use of surrogate models (e.g. support vector machines) for biological systems has been explored previously in [16], and the use of polynomial approximations for uncertainty analysis has been investigated in [30, 31].

To demonstrate the capability of the proposed method, we apply it to models of yeast cell polarization. Cell polarization is the process by which intracellular species (e.g. proteins) become asymmetrically localized, which is fundamental to cellular processes such as cell division, differentiation, and movement [32, 33]. Failure in polarization can lead to cell death or dysfunction, and abnormal cell polarity is characteristic of cancer and may contribute to tumor initiation [34]. Cell polarization has been extensively studied in the budding yeast S. cerevisiae due to its pronounced polarity and genetic tractability [35, 36]. The models analyzed in this paper describe polarization in response to pheromone during mating in budding yeast.

We consider two models: an ODE model for only one module of the system (the heterotrimeric G-protein cycle), and a spatial model that incorporates a larger signaling pathway as well as membrane diffusion of the proteins. We will refer to these models as Model 1 and Model 2, respectively. Model 1 was proposed in [37] and has eight kinetic rate parameters, six of which have been experimentally measured or approximated from the literature. The remaining two parameters were estimated in [37] via an optimization method. This model is used to demonstrate the method and for comparison with the previous results. Model 2 is a mechanistic reaction-diffusion model, which is an extension of the model considered in [38]. This model has 35 unknown parameters. Parameter sensitivity analysis and parameter estimation have not previously been performed for this model, in part due to the large number of parameters. We seek to utilize polynomial surrogate models to quantify the effects of the parameters on polarization and to infer the biologically reasonable parameter values.

It should be noted that the results of parameter sensitivity and parameter estimation are dependent on the assumed model structure. In systems biology there is often significant uncertainty in the model structure itself. Some work has been done on quantifying the structural uncertainty in models of biological networks and reconstructing networks from data [39–41]. However, this is beyond the scope of the present work and this source of uncertainty is not addressed in this paper.

The structure of this paper is as follows. We first present the mathematical methods for surrogate model construction and how to perform parameter sensitivity analysis and parameter estimation using a polynomial surrogate. We then demonstrate the methods on Model 1, performing sensitivity analysis and estimation in two cases: first, varying only the two free parameters, and second, varying all eight parameters. We then present Model 2 and use sensitivity analysis to significantly reduce the parameter count. Bayesian parameter estimation is then performed in the reduced parameter space. We discuss the computational savings afforded by the use of a polynomial surrogate for parameter estimation in Model 2. Finally, we discuss biological implications of the results and future applications of the polynomial surrogates in Bayesian model analysis.

Methods

Surrogate model construction

Biological systems often possess many parameters whose true values are unknown. In order to gain an understanding of the effects of each parameter, we need to sample the parameter space. However, sampling a high-dimensional space is a difficult task. For example, in the next section we consider a large PDE model with 35 parameters. In this case, even with only two sample points in each dimension we would need 235 ∼ O(1010) samples, and each sample requires solving a PDE system. This makes direct sampling of the PDE impractical. Instead, we may choose a scalar response function that quantifies an output of interest and by assuming that this response function depends smoothly on the parameters, a polynomial can be fit using far fewer sample points. Since we are performing parameter estimation, the response function depends not only on what quantity is of interest but also on what experimental data are available.

If multiple response functions are of interest (for example, different time points or different values of some input), there are two options—one can either increase the number of variables in the polynomial or use multiple polynomials. For example, if measurements are taken at several time points t1,…,tk, then either t may be introduced as a variable of the polynomial or a polynomial Pi can be fit for each time point (i = 1,…, k). The choice can be made based on computational cost. If data are sparse, it is usually best to fit multiple polynomials, which is the approach taken in this work. Once the polynomial is established, we can use it as a surrogate for the full model so that sampling of the parameter space is far less expensive.

To perform the polynomial fitting, we use an orthogonal polynomial basis from the generalized polynomial chaos (gPC) approach [26, 29]. Thus the choice of basis for the polynomial space depends on the assumed probability distribution of the parameters. For the examples considered in this work, we assume that the parameters are independent and identically distributed, and uniformly distributed in a fixed range. This leads to the use of a Legendre polynomial basis. All parameters are mapped to a standard reference interval of [−1, 1]. We do not consider any other distributions, but the same principles can be applied if the parameters have a Gaussian distribution (Hermite polynomials), Gamma distribution (Laguerre polynomials), or Beta distribution (Jacobi polynomials).

Recall that the number of basis functions for the set of polynomials of degree up to d in n variables is . The polynomial coefficients can be solved for in a number of ways, depending on the number of samples available. If the number of samples is exactly , then the coefficients can be solved for by direct interpolation. This case should generally be avoided as interpolation is notoriously prone to instability. If the number of samples is greater than , least squares approximation can be used. If the number of samples is less than , which is the case of interest for large problems, one may use compressed sensing methods to solve for the coefficients [42]. This approach has been well established for UQ problems [43, 44].

The samples can be chosen in a variety of ways (e.g. uniform random sampling, sparse grids, Latin hypercube sampling, etc.). A quasi-optimal sampling scheme for least squares polynomial fitting has been explored in [45]. In the applications presented here, we use uniform random sampling. Details of the polynomial fitting are presented in Algorithm 1.

Algorithm 1 Polynomial fitting algorithm.

1. Determine the desired polynomial degree and how many samples can reasonably be obtained.

2. Sample the parameter space using the sampling method of your choice. The sampling method may depend on whether you are undersampling or oversampling (e.g. for oversampling, you may want to use quasi-optimal points for least squares [45]).

3. Using the samples from step 2, set up a linear system Ax = b where x is the vector of polynomial coefficients, A is a matrix whose entries are the basis polynomials evaluated at the sample points (each row corresponds to one sample, each column corresponds to one basis polynomial), and b is a column vector of the model output at the sample points.

4. Solve for the coefficients. If undersampling, perform compressed sensing with ℓ1-minimization. If oversampling, perform least-squares fitting.

The accuracy of the polynomial can be estimated by cross-validation. In cross-validation, the model is evaluated at additional sample points that were not used in the polynomial fitting. The model output can then be compared with the polynomial value at those points to determine the error. One may also perform k-fold cross validation in which the total set of sample points is partitioned into k equally sized subsets; call them Ωi, i = 1,…, k. Cross-validation is then performed k times. For each i, the samples in Ωi are used to evaluate the error and the remaining samples are used to fit the polynomial. The acceptable level of error will depend on the particular application.

Parameter sensitivity analysis and parameter estimation

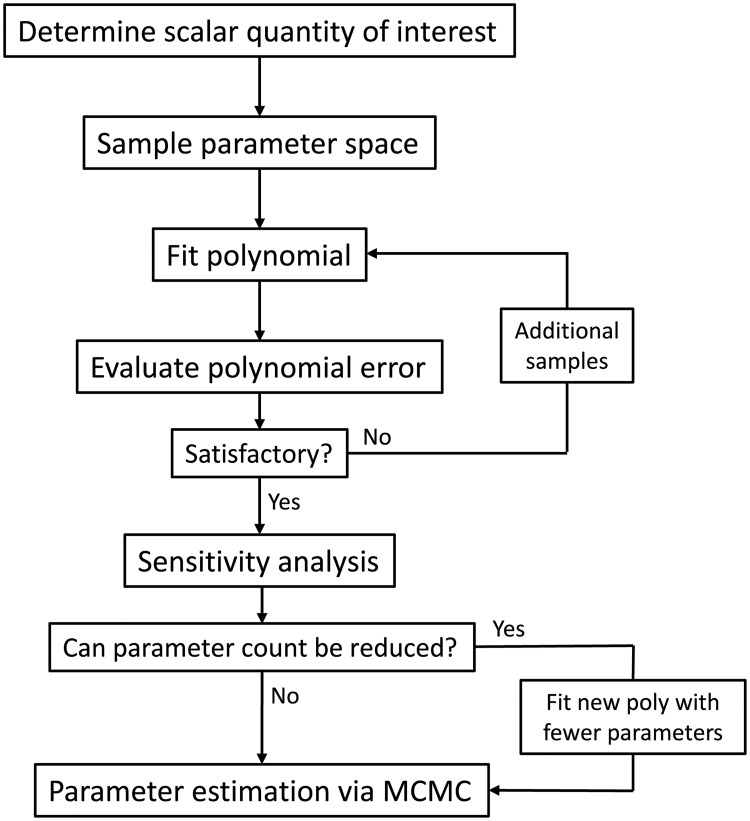

Once the polynomial surrogate model is constructed, it can be used to perform parameter sensitivity analysis and parameter estimation (Fig 1). Any sensitivity or estimation method can be applied using the polynomial surrogate model to decrease computational cost. In the work presented here, the methods are as follows.

Fig 1. Flowchart for parameter analysis described in methods.

The polynomial fitting procedure is described in Algorithm 1. The parameter space samples are generated by model simulation. The sensitivity analysis and parameter estimation use the fitted surrogate polyomial.

We define the sensitivity of a response function z(p1,…, pn) to a parameter pj as . We refer to Sj as the sensitivity coefficient for pj. Note that, while the partial derivative is typically used for local sensitivity analysis, the expectation makes this a global measure of sensitivity since is integrated over the entire parameter space. Using the surrogate model, the parameter sensitivities can be analytically computed by taking partial derivates and evaluating , where ρ is the probability measure associated with the n-dimensional parameter space.

We can then assess the importance of each parameter based on its sensitivity. If the response is not sensitive to a parameter pj, then the dynamics of the model will likely remain unchanged if pj is fixed. Further, pj may be non-identifiable so that multiple values can produce an equally good fit to data. Thus, we may use the sensitivity analysis to decrease the parameter count by fixing those parameters that have small sensitivity coefficients.

For parameter estimation, we use Markov chain Monte Carlo (MCMC) method with Metropolis-Hastings algorithm [46]. MCMC is a method for sampling the posterior distribution of the parameters—that is, the parameter distribution that corresponds to the distribution of the provided data, given an assumed prior distribution. For the prior distribution, we use the parameter distribution that was assumed in the construction of the surrogate polynomial (in this case, uniformly distributed within a range). This Bayesian approach to parameter estimation provides both the most probable parameter set (or sets) as well as a characterization of the parameter uncertainty.

MCMC methods have become a popular choice for parameter estimation in biological systems [21, 47, 48]. However, these methods are often prohibitively expensive for computationally intensive models, since each sample in the Markov chain requires a model evaluation. By using the polynomial surrogate, the cost is greatly reduced. Further, it has been shown that in the generalized polynomial chaos framework, the polynomial fit and the resulting posterior distribution have similar convergence properties [49]. Thus, if the error in the polynomial fit is small, we expect the error in the posterior distribution to also be small.

A key question is knowing when the MCMC has converged, meaning that the distribution of the Markov chain samples has converged to the posterior distribution. Several convergence diagnostics for MCMC have been proposed [50, 51]. We employ a simple test which is to run multiple Markov chains from different initial parameter sets and compare the resulting distributions. Roughly speaking, if the independent chains stabilize at the same distribution, then the MCMC has converged. Since the chains are independent, they can be run in parallel to save computing time. We choose MCMC over alternative sampling methods [20, 52, 53] because of its efficiency. Since MCMC is based on a Markov chain, the samples tend toward higher probability areas of the parameter space in contrast to schemes that may sample the entire space.

All codes have been made publicly available on GitHub in the repository https://github.com/chingshanchou/UQ-Yeast-Mating-Model.

Experimental techniques

The yeast strain CGY-021 is a derivative of W303-1A and contains the bar1Δ mutation that prevents α-factor degradation by deletion of the Bar1 protease. GFP has been integrated genomically at the C-terminus of Ste20 to create a Ste20-GFP fusion protein that is a fluorescent reporter for active Cdc42 [54]. The genotype of the strain CGY-021 is MATa, can1-100, ade2-1, leu2-3,-112, his3-11,-15, trp1-1, ura3-1, bar1::hisG, ste20Δ::STE20-GFP-HIS5.

Cells were cultured in YPD (yeast extract-peptone-dextrose) media supplemented with adenine. Cells were treated for 60 minutes with 10 nM α-factor and then fixed with formaldehyde. Visualization was performed using a 60x objective (NA = 1.4) on an Olympus Fluoview 1000 Spectral confocal microscope. The resulting images were analyzed in Matlab and the membrane fluorescent intensity was quantified over the periphery of the cell to generate the polarization profile that was averaged over 20 cells and converted into a polarization factor value.

Results

We apply the proposed method to two models of the yeast mating response. Haploid budding yeast cells assume two mating types, a or α, and each secretes a pheromone (a-factor and α-factor, respectively) to attract the opposite type. We consider the response of an a-cell to α-factor, although the two cases are nearly identical [55]. During the mating process, α-factor binds and activates the α-factor receptors on the a-cell surface, which leads to a sequence of reactions that results in the recruitment of polarization proteins to the cell membrane, causing the formation of the mating projection. These reactions occur in two G-protein cycles (unidirectional cycles formed by the activation and deactivation of G-proteins) which are the heterotrimeric and Cdc42 G-protein cycles [36, 56, 57]. A description of these cycles can be found in [38], and are summarized below.

First, the pheromone α-factor (L) binds the α-factor receptor (R) to form the receptor-ligand complex (RL). This activates the heterotrimeric G-protein (G) to form active α-subunit (Ga) and free Gβγ (Gbg). Gα can be deactivated to form inactive α-subunit (Gd), which binds Gβγ to reform G. The free Gβγ then recruits Cdc24 (C24) to the cell membrane (C24m), which activates Cdc42 (C42) to its active form (C42a). Active Cdc42 then binds the scaffold protein Bem1 (B1) and recruits it to the membrane (B1m). Membrane-bound Bem1 is then able to recruit more Cdc24 to the membrane, creating a positive feedback loop. In addition, active Cdc42 turns on the kinase Cla4 (Cla4a), which inhibits Cdc42 activation by negatively regulating Cdc24. The pathway is summarized in the schematic diagram in S1 Fig.

Two key features of this process are the positive and negative feedback loops. In the positive feedback loop, membrane-bound Bem1 binds and activates Cdc24 which catalyzes the formation of active Cdc42 which binds more Bem1. In the negative feedback loop, active Cdc42 activates Cla4 which inhibits the membrane-bound Cdc24, leading to a lower activation rate of Cdc42. Cdc42 is of particular interest since it plays a key role in establishing polarity and is highly conserved from yeasts to humans [58].

Model 1: The heterotrimeric G-protein cycle

To demonstrate our methods, we first consider a simple model: an ODE model of the heterotrimeric G-protein cycle taken from [37]. These equations represent the first stage of the system that senses the input ligand (L) α-factor:

| (1) |

| (2) |

| (3) |

| (4) |

where the k’s are reaction rates. Here, [Gd] = Gt − [G] − [Ga] and [Gbg] = Gt − [G], with Gt being the total number of G-protein molecules per cell. The model output is the fraction of free Gβγ (Gbg/Gt), and the time unit is seconds. The model contains 9 parameters (8 rate constants and Gt), 7 of which were determined in [37] from experimental measurements and information from the literature. The remaining two parameters (kGa and kGd) were fit to data in [37] via least squares minimization. These parameter values are given in S1 Table. We focus first on this two-parameter problem, and use the proposed methods to corroborate the published parameter estimates. Later, we will allow all eight kinetic parameters to vary to determine if the same parameter estimates are obtained in the larger parameter space. In the 2-dimensional sensitivity analysis and parameter estimation, we will assume that the parameters kGa and kGd are log-uniformly distributed in the intervals [10−7, 10−3] and [10−3, 10], respectively, which span the relevant ranges for the parameters.

Construction of the polynomial surrogate model

Since the ultimate goal is parameter estimation, the response functions of interest are those outputs for which we have experimental data. Using the data from [37], we have measurements of the free Gβγ fraction (Gbg/Gt) at the time points and α-factor levels specified in S2 Table.

We first construct a polynomial surrogate model that approximates the ODE model which allows us to sample the parameter space at a much lower computational cost. In this example, we construct a set of polynomials in two variables (kGa and kGd), whose output represents Gbg/Gt for the time-course and dose-response data. Since the dimension of the polynomial space is relatively low, we can perform polynomial fitting by least squares approximation. To fit the polynomial, we first map the parameter ranges to [−1, 1], and then fit the polynomial using a Legendre basis (see Methods).

The degree of the polynomial as well as the number of points used for least squares fitting can be adjusted depending on the error of the resulting polynomial. The error can be determined by calculating the difference between the polynomial and the simulated full model at randomly sampled points using cross-validation. Since the number of samples may need to be adjusted, it is best to use a sampling technique that allows for the sequential addition of points, such as simple random sampling or Sobol sampling.

In Fig 2A, we use uniform random sampling to fit a 5th order polynomial. The error of the polynomial stabilizes quite rapidly with the addition of more sample points, and settles to a roughly constant standard deviation (with a mean near 0) when the number of sample points is 4 times the number of basis polynomials. In Fig 2B, we fit polynomials of varying degree (up to degree 10) using 1000 sample points. With this relatively large sample size, the standard deviation of the polynomial error decreases as the polynomial degree grows. Thus, the polynomial can be made more accurate by increasing the number of samples and the polynomial degree. It should be noted that for a small sample size, increasing the degree does not necessarily improve the polynomial error due to extrapolation error and Runge’s phenomenon [59].

Fig 2. Polynomial errors.

Error mean and standard deviation (measured using 100 random samples by cross-validation) for different polynomial fits (top), and the cost to compute the polynomials (bottom). (A) 5th order polynomials fit using different numbers of sample points. (B) Polynomials of varying degree using least squares fitting with 1000 points. Polynomial error is the average difference between the polynomial and the model output, and the error bars indicate the standard deviation of the error over the 100 sample points.

We plot the computational cost of the polynomial fitting as a function of number of samples or polynomial degree at the bottom of Fig 2. We find that the cost is primarily determined by sample size.

Sensitivity analysis reveals importance of kGa and kGd

For the sensitivity analysis and parameter estimation, we use the 10th degree polynomial fit from 1000 sample points. Since each data point in S2 Table acts as its own response function, we obtain 15 sensitivity coefficients for each parameter (one per response function). These coefficients are given in Table 1 along with their means which give an idea of the overall sensitivity of the system to the parameters kGa and kGd. We observe from the sensitivity coefficients that a larger value of kGa is associated with a larger value of Gbg/Gt for all of the data points, and a larger value of kGd is associated with a smaller value of Gbg/Gt. Interestingly kGa has a larger effect on the output than kGd, but both effects are substantial (magnitude between 0.1 and 1), and the sensitivities of the data points are close to their mean.

Table 1. Sensitivities of output (Gbg/Gt) to kGa and kGd based on a 10th degree polynomial fit.

Sensitivity coefficients are given for different time points (T, secs) and α-factor concentrations (L, nM), as well as the overall mean.

| Data points | Sensitivity to kGa | Sensitivity to kGd | |

|---|---|---|---|

| L = 1000 | T = 10 | 4.6 × 10−1 | −2.4 × 10−1 |

| L = 1000 | T = 30 | 4.5 × 10−1 | −3.0 × 10−1 |

| L = 1000 | T = 60 | 4.5 × 10−1 | −3.4 × 10−1 |

| L = 1000 | T = 120 | 4.3 × 10−1 | −3.7 × 10−1 |

| L = 1000 | T = 210 | 4.3 × 10−1 | −4.0 × 10−1 |

| L = 1000 | T = 300 | 4.2 × 10−1 | −4.0 × 10−1 |

| L = 1000 | T = 450 | 4.0 × 10−1 | −4.0 × 10−1 |

| L = 1000 | T = 600 | 3.9 × 10−1 | −4.0 × 10−1 |

| L = 1 | T = 60 | 3.5 × 10−1 | −2.0 × 10−1 |

| L = 2 | T = 60 | 3.8 × 10−1 | −2.3 × 10−1 |

| L = 5 | T = 60 | 4.2 × 10−1 | −2.7 × 10−1 |

| L = 10 | T = 60 | 4.4 × 10−1 | −2.9 × 10−1 |

| L = 20 | T = 60 | 4.4 × 10−1 | −3.1 × 10−1 |

| L = 50 | T = 60 | 4.4 × 10−1 | −3.2 × 10−1 |

| L = 100 | T = 60 | 4.5 × 10−1 | −3.2 × 10−1 |

| Mean sensitivity | 4.2 × 10−1 | −3.2 × 10−1 | |

Parameter estimation of kGa and kGd is in agreement with previous estimates

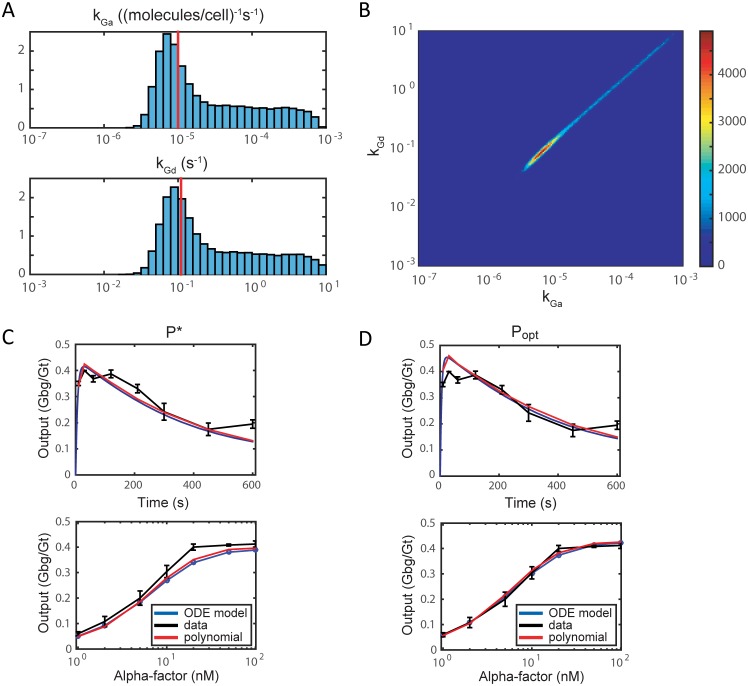

We perform parameter estimation using the data from [37] and described in S2 Table. Markov chain Monte Carlo with a 10th degree polynomial surrogate generates the parameter distributions shown in Fig 3A and 3B over a range of four orders of magnitude. The length of the Markov chain is 106 steps with a burn-in period of 105 steps. The distribution has a single mode at , which is close to the optimal (maximum likelihood) estimate Popt = (1 × 10−5, 1.1 × 10−1) from [37]. The units for kGa and kGd are (molecules per cell)−1s−1 and s−1, respectively. Simulating the model with P* produces a good fit to the time-course and dose-response data shown in Fig 3C and 3D. The mean squared errors for P* is 4.1 × 10−4, while the mean squared error for Popt is 1.3 × 10−4. Finally, plotting the two-dimensional distributions of kGa and kGd show that they are highly correlated with the highest probability region lying along the diagonal (Fig 3B).

Fig 3. Parameter estimation of kGa and kGd in ODE model (1)–(4).

Probability distributions are obtained via Markov chain Monte Carlo and a 10th degree polynomial. (A) Distributions for individual parameters, normalized so that the total area is equal to 1. Red lines indicate the optimal (maximum likelihood) parameter values Popt. (B) Colormap of the two-dimensional joint probability distribution of kGa and kGd from the MCMC chain. Red indicates high probability along the diagonal; blue indicates low prsobability. (C, D) Model (blue) and polynomial (red) outputs corresponding to parameter sets P* and Popt, respectively, compared with the data (black) from [37] for the time-course (top) and dose-response (bottom) experiments.

Allowing all 8 parameters to vary produces parameter estimates consistent with published values

We now apply the same parameter estimation procedure to the G-protein model allowing all 8 of the kinetic parameters to vary. In other words, we assume that all the parameters are unknown and would like to use our model to estimate these parameters. The parameters are assumed to be log-uniformly distributed in the ranges in S3 Table. All parameter ranges span two orders of magnitude (the ranges for kGa and kGd are shrunk from the previous section but still contain P* and Popt).

For this problem we choose a 5th degree polynomial surrogate that allows oversampling; the 5th degree polynomial space in 8 parameters has 1287 basis polynomials. We perform uniform random sampling on 1500 points generated by model simulation to construct the polynomial by least squares fitting. The resulting polynomial has mean absolute error 2.5 × 10−2.

Using the polynomial as a surrogate for the full model, we compute parameter sensitivities for the 8 parameters, and the mean sensitivities over the dataset are given in Table 2. As expected, we observe that the output (Gbg/Gt) is most sensitive to kGa and kGd whose sensitivities decreased slightly compared to when the other 6 parameters are fixed.

Table 2. Sensitivities of the ODE model output (Gbg/Gt) to all 8 kinetic parameters using a 5th degree surrogate polynomial.

Both mean sensitivities and the mean of the absolute value of the sensitivities are shown.

| Parameter | Mean sensitivity | Mean abs. value of sensitivity |

|---|---|---|

| kRL | 8.2 × 10−2 | 8.2 × 10−2 |

| kRLm | −3.2 × 10−2 | 3.2 × 10−2 |

| kRs | 9.2 × 10−3 | 1.2 × 10−2 |

| kRd0 | 1.1 × 10−3 | 6.3 × 10−3 |

| kRd1 | −6.2 × 10−2 | 6.2 × 10−2 |

| kG1 | 5.6 × 10−4 | 7.4 × 10−3 |

| kGa | 3.1 × 10−1 | 3.1 × 10−1 |

| kGd | −2.6 × 10−1 | 2.6 × 10−1 |

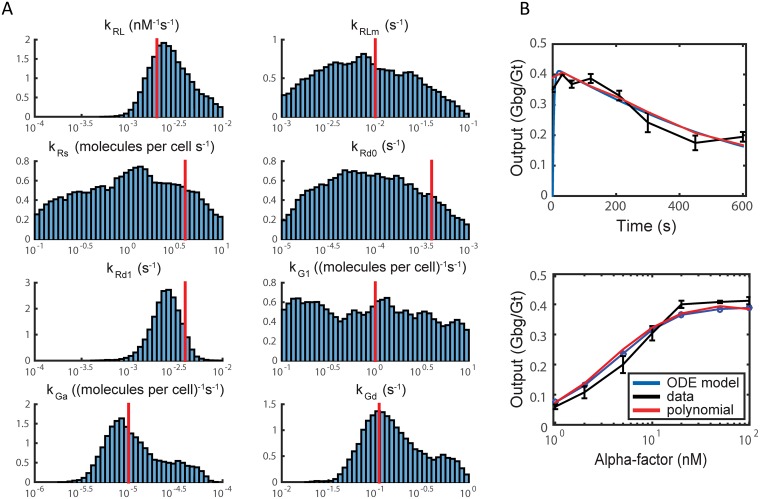

Next, we perform parameter estimation on all 8 parameters and obtain the distributions in Fig 4A. The distributions have a single relatively sharp peak for 4 parameters (kRL, kRd1, kGa, and kGd), which correspond to the parameters with the highest sensitivities. The peak values in these cases are close to the measured or estimated parameter values from [37]. The other 4 parameters possess broader distributions with the distribution for heterotrimeric G-protein reassociation (kG1) being nearly flat consistent with its low sensitivity. The values of the rate constants for receptor synthesis (kRs) and degradation (kRd0, kRd1) are somewhat lower than the previous estimates. However, given the uncertainty in the parameter estimates, the difference is not statistically significant; the measured values are less than 1.5 standard deviations away from the mean.

Fig 4. MCMC results for ODE model (1)–(4).

(A) Parameter distributions from ODE model (1)–(4) for all 8 kinetic parameters obtained via MCMC. Red vertical lines indicate the parameter values from experiments or maximum likelihood estimates [37]. Markov chain length was 106 steps. (B) Model simulation and polynomial outputs using the mean parameter set from the 8-parameter MCMC compared with the time-course (top) and dose-response (bottom) data.

We determined the mean values for each parameter distribution to create the mean parameter set (Pmean), and simulated the model with these parameter values. This parameter set is given in Table A in S1 Text. The resulting time-course and dose-response curves compared to data are shown in Fig 4B. The mean squared error for the mean parameter set is 6.4 × 10−4, which is close to the mean squared error for Popt (1.3 × 10−4).

The correlation between pairs of parameters can be calculated along with the individual distributions. A graphical representation of the correlations among the 8 parameters is given in S2 Fig. Note that kGa and kGd are still strongly correlated. There are weaker correlations between kRL and kRLm and between kRs and kRd1, which represent pairs of rate constants for opposing reactions in ligand binding/unbinding and receptor synthesis/degradation, respectively.

Model 2: Mechanistic spatial model for yeast cell polarization

To capture the spatiotemporal dynamics of yeast cell polarization during mating, one needs a mechanistic spatial model. In this model, protein spatial dynamics are driven by two processes: surface diffusion on the cell membrane and reactions with other proteins in the system. This leads to a system of reaction-diffusion equations, similar to the model presented in [38]. The first six equations represent the dynamics of the heterotrimeric G-protein cycle, and the remaining equations represent the dynamics of the Cdc42 G-protein cycle. The distance unit is μm, the time unit is seconds, and concentration is measured as the number of molecules per unit surface area or volume (except for the ligand L, which is measured in nM).

| (5) |

| (6) |

| (7) |

| (8) |

| (9) |

| (10) |

| (11) |

| (12) |

| (13) |

| (14) |

| (15) |

The coefficients are given by

where SA is the surface area of the cell. The initial conditions are given by

The conservation equations are

where C24t and B1t are the total amounts of C24 and B1 respectively, V is the volume of the cell, and [C24c] and [B1c] are the concentrations of C24 and B1, respectively, in the cytoplasm. Thus the total amounts of Bem1 and Cdc24 are conserved. Estimates from previous work and ranges for the parameters are given in Table 3.

Table 3. Parameter estimates and ranges from previous work.

SA denotes cell surface area and V denotes cell volume. The distance unit is μm, the time unit is seconds, and concentration is measured as the number of molecules per unit surface area or volume (unless otherwise specified).

| Parameter | Description | Previous estimate | Range | Ref. |

|---|---|---|---|---|

| DR | Diffusion of R | 0.001 | ±10% | [60, 61] |

| DRL | Diffusion of RL | 0.001 | ±10% | [60, 61] |

| DG | Diffusion of G | 0.01 | [0.005, 0.02] | [60, 61] |

| DGa | Diffusion of Ga | 0.01 | [0.005, 0.02] | [60, 61] |

| DGbg | Diffusion of Gbg | 0.01 | [0.005, 0.02] | [60, 61] |

| DGd | Diffusion of Gd | 0.01 | [0.005, 0.02] | [60, 61] |

| DC24m | Diffusion of C24m | 0.01 | [0.005, 0.02] | [60, 61] |

| DC42 | Diffusion of C42 | 0.01 | [0.005, 0.02] | [60, 61] |

| DC42a | Diffusion of C42a | 0.01 | [0.005, 0.02] | [60, 61] |

| DB1m | Diffusion of B1m | 0.01 | [0.005, 0.02] | [60, 61] |

| kRL | RL association | 2 × 10−3 nM−1s−1 | ±10% | [37] |

| kRLm | RL dissociation | 10−2 | ±10% | [37] |

| kRd0, kRd1 | R internalization | 4 × 10−4 | ±10% | [37] |

| kRs | R synthesis | 4/SA | ±10% | [37] |

| kGa | G-protein activation | 10−5 × SA | ±10% | [37] |

| kGd | G-protein deactivation | 0.1 | ±10% | [37] |

| kG1 | Heterotrimer association | 1 | ±10% | [37] |

| k42d | Cdc42 deactivation | 0.02 | [0.02, 2] | [60] |

| k42a | Cdc42 activation | 10−5 × SA | [10−5, 10−3] × SA | [60] |

| k24cm0 | Gβγ recruitment of Cd24 | 0.04 × V/SA | [0.004, 0.4] × V/SA | [60] |

| k24cm1 | Bem1 recruitment of Cdc24 | 3.3 × 10−3 × V | [3.3 × 10−4, 3.3 × 10−2] × V | [60] |

| k24mc | Cdc24, membrane to cytoplasm | 1 | [0.1, 1] | [60] |

| kB1mc | Bem1, membrane to cytoplasm | 0.01 | [0.01, 1] | [60] |

| kB1cm | Bem1, cytoplasm to membrane | 10−5 × V | [10−5, 10−3] × V | [60] |

| kCla4a | Cla4 activation | 0.006 | [0.0006, 0.06] | [60] |

| kCla4d | Cla4 deactivation | 0.01 | [0.001, 0.1] | [60] |

| k24d | Negative regulation of Cdc42 cycle | SA/3000 | [0.1, 10] × SA/3000 | [60] |

| q | Hill coefficient for | 100 | [1, 100] | [38, 60] |

| h | Hill coefficient for B1* | 8 | [1, 8] | [38, 60] |

| C24t | Total Cdc24 | 2000 | [1000, 3000] | [38] |

| B1t | Total Bem1 | 3000 | [2000, 5000] | [38] |

| Rt | Total receptor | 10000 | ±10% | [38] |

| Gt | Total G-protein | 10000 | ±10% | [37] |

| C42t | Total Cdc42 | 10000 | [5000, 20000] | [38] |

In our numerical simulations, the cell membrane is simulated as a circle centered at the origin with radius 2 μm. The pheromone input is administered as a gradient from the positive x-direction with midpoint of 10 nM and slope of 0.1 nM/μm. The surface diffusion of a quantity W on a circle is given by

where s is an arc length parameter, ds2 = dx2 + dy2. The computational domain is parametrized by α ∈ [0, 2π], where α denotes the angle from the negative x-axis. The numerical method utilizes a second order finite difference discretization for the spatial derivatives and an implicit Crank-Nicolson method for the time derivative. The spatial mesh consists of 400 equally spaced points. Each simulation is run to steady state (t = 1, 000s). More detail about the numerical method can be found in the Supplementary Material (S2 Text).

Surrogate model construction using an underdetermined fit

The quantity of interest in this model is the extent of cell polarization, more specifically, the extent of active Cdc42 polarization. Therefore, we consider a scalar function of active Cdc42 (C42a), which we call the polarization factor (PF) of active Cdc42, defined by

where Sp(C42a) is the surface area at the front of the cell that encompasses half of the polarized component C42a [60]. An unpolarized cell would have a PF of 0 and an infinitely polarized cell would have a PF of 1. One disadvantage of this measure of polarization is that it considers only the shape of the C42a curve and not the magnitude; thus PF may be close to 1 even if only a small amount of Cdc42 is activated and localized. To remedy this issue, we introduce a cut-off function:

| (21) |

where and x = max(C42a). This cut-off function penalizes the cases in which the maximum amount of C42a is less than half of the initial value of C42. We choose the exponent to be n = 5 to produce a reasonably sharp cut-off.

We perform polynomial fitting using a Legendre polynomial basis to fit the response function PF(C42a). The parameters q and h are mapped to [−1, 1] on a linear scale and all other parameters are mapped to [−1, 1] on a log scale. We use a 5th order polynomial as our surrogate model. Recall that to fit a 5th degree polynomial for the full 35-dimensional parameter space, we require at least sample points for interpolation or least squares fitting. Since each sample point involves simulating a large PDE model, we wish to decrease the number of sample points to save computation time. However, decreasing the number of sample points results in an underdetermined system. One technique for solving such a system is compressed sensing—in particular, ℓ1-minimization. We used ℓ1-MAGIC, a collection of MATLAB subroutines by Emmanuel Candes, to perform ℓ1-minimization with quadratic constraints [62].

5, 000 points are used to fit a 5th order polynomial in the full 35-dimensional parameter space. The accuracy of the polynomial is evaluated on an additional 500 uniformly random points. A histogram of the errors between the model and polynomial is shown in S3 Fig. The mean absolute error is 0.12 with most errors between -0.2 and 0.2.

Parameter sensitivity analysis helps to reduce number of free parameters

Once we have established a polynomial surrogate model, we can analytically compute parameter sensitivities. Assuming that each parameter is uniformly distributed in [−1, 1], the sensitivity of the response function z to j-th parameter is . Note that since the parameters are all mapped to the same range, there is no inherent bias due to differing magnitudes of parameter values. The parameter sensitivity coefficients for the full 35-parameter model are given in S4 Table.

We observe that many of the parameters have small sensitivity coefficients, and the parameters of primary importance are those associated with the Cdc42 cycle dynamics. Based on the parameter sensitivities in S4 Table, we can reduce the parameter count by eliminating the parameters to which polarization is least sensitive. If we consider only the parameters whose sensitivity coefficients are greater than 0.01, we are left with only 15 parameters: B1t, k24d, C24t, kCla4a, kCla4d, kB1cm, k24cm0, kB1mc, Dc42a, h, Dc42, q, k42d, k24cm1, and k42a.

In this 15-dimensional subspace, we can again perform polynomial fitting to obtain a surrogate model. We use 6000 points to fit a 5th order polynomial using ℓ1-minimization. We perform 12-fold cross validation to analyze the error in the polynomial fit, and find that the mean absolute error is 0.14. A histogram of the error in the polynomial approximation is given in S4 Fig. Using this reduced model, we may again compute the parameter sensitivities. The resulting sensitivities are given in Table 4, and are largely consistent with the parameter sensitivities from the full 35-parameter model. Surprisingly, the diffusion constants Dc42a and Dc42 have high sensitivities along with the parameters directly involved in the Bem1-Cdc24-Cdc42 positive feedback loop activating Cdc42.

Table 4. Sensitivity coefficients, in order of ascending magnitude, for the reduced 15-parameter PDE model based on a 5th order polynomial fit using 6,000 sample points.

| Parameter | Sensitivity |

|---|---|

| k24d | 1.4 × 10−3 |

| kCla4a | −4.0 × 10−3 |

| k24cm0 | −1.3 × 10−2 |

| B1t | 1.7 × 10−2 |

| kCla4d | 2.5 × 10−2 |

| C24t | 4.4 × 10−2 |

| kB1cm | 5.5 × 10−2 |

| kB1mc | −5.6 × 10−2 |

| Dc42a | −5.9 × 10−2 |

| q | 6.1 × 10−2 |

| Dc42 | 7.0 × 10−2 |

| h | 7.7 × 10−2 |

| k24cm1 | 9.8 × 10−2 |

| k42d | −1.3 × 10−1 |

| k42a | 1.4 × 10−1 |

Parameter estimation using yeast cell polarization data

We wished to estimate the model parameters that could produce polarization by fitting to experimental data. The key species in yeast polarization is active Cdc42 (C42a) which we can monitor using the reporter Ste20-GFP, a fusion protein that binds active Cdc42 and possesses a fluorescent tag [54]. We treat yeast cells containing Ste20-GFP with 10 nM of α-factor for 60 min to correspond to the steady-state polarization in the model. We assess the spatial distribution of Ste20-GFP by confocal microscopy of fixed cells, followed by quantitative image processing (see Methods). The Ste20-GFP polarization profile is converted into a polarization factor to be compared with the model simulations.

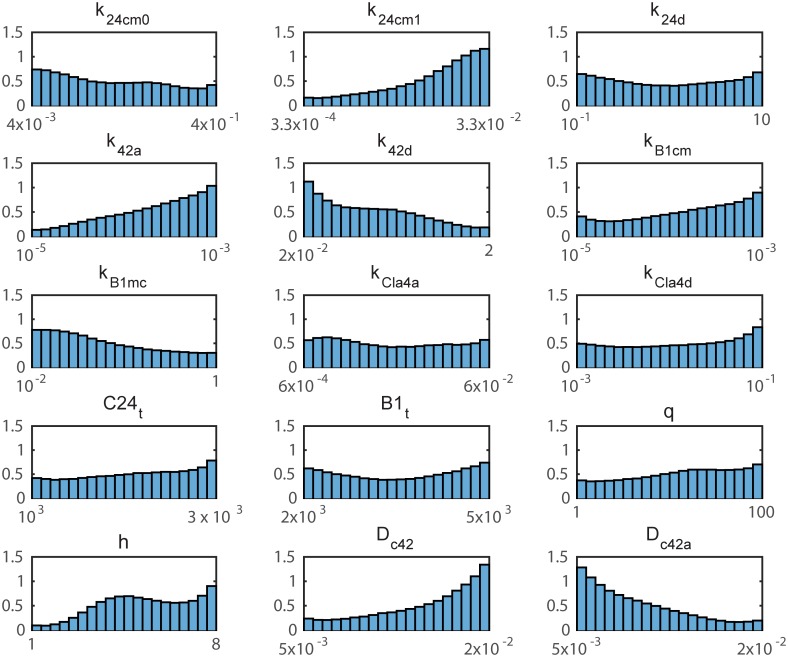

With these data, we can perform parameter estimation using the 15-parameter polynomial surrogate model and an MCMC method. Fig 5 shows the parameter distributions obtained via MCMC with Markov chain length 2 × 106. As done in the ODE model, the correlation coefficients between parameters can be calculated and they are displayed graphically in S5 Fig. There are a few key observations. First, there is significant uncertainty in many of the parameters (particularly those with small sensitivity coefficients). This implies that, given the current data, we are not able to infer much information about the parameters. The broad distributions also suggest that a wide range of parameter values are compatible with polarization in the model. Second, we see that several of the parameters tend toward the boundary (e.g. k42a, k42d, Dc42, Dc42a), indicating that the parameter ranges should be extended. In particular, a faster diffusion constant for inactive Cdc42 (Dc42) and a slower diffusion constant for active Cdc42 (Dc42a) promote polarization. Lastly, we see that the parameters are not strongly correlated, with the exception of some correlation between k42a and k42d and between h and q.

Fig 5. MCMC results for PDE model.

Parameter distributions based on MCMC with chain length 2 × 106 for the reduced 15-parameter PDE model. The parameter range is a log-scale except for the parameters q and h which span a linear scale.

Identifying an optimal parameter set for the PDE model

Sometimes it is desirable to obtain a single best parameter estimate (e.g. maximum likelihood) to visualize how closely the model can fit the data, and to determine the parameter values at that best fit. In this example, due to the large amount of uncertainty in the parameter distributions, there is no clear choice for such a point estimate. In fact, given the limited data relative to the large number of parameters, multiple “best” parameter estimates may exist. One approach is to take the mean of the MCMC iterates (Pmean) as was done with the ODE model in the previous section. This parameter set and its corresponding polarization factor are given in Table B in S1 Text. However, the large degree of parametric uncertainty suggests this estimate may not produce a good fit.

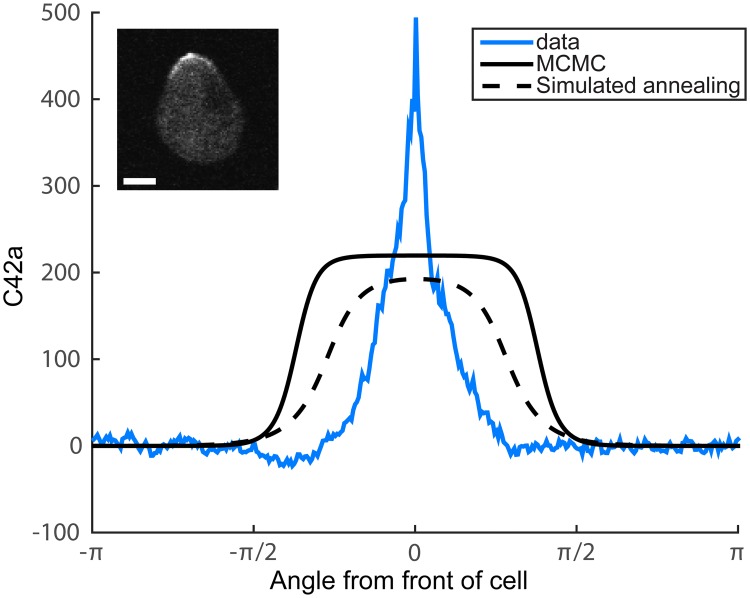

Another option is to use an optimization method such as simulated annealing to improve upon Pmean which is used as the starting point. Note that the polynomial surrogate model greatly speeds up this optimization. Fig 6 shows the steady state solutions for both the MCMC mean and a parameter set identified via simulated annealing (SA). Indeed, the SA gives a better fit to the data (PFSA = 0.64, PFmean = 0.57, PFdata = 0.87; see Supplement for parameter sets). However, the broadness of the model simulations compared to the experimental data suggests that the model structure is unable to capture the sharp polarization observed in the cell. One possible explanation is unmodeled dynamics. Nevertheless, the Bayesian parameter estimation provides valuable information about the range of parameters in the model that allow polarization, the most likely parameter values, and the uncertainty in the parameters given the current data.

Fig 6. MCMC vs. optimization results.

Steady state solutions for the mean parameter set from the MCMC (solid black) and a parameter set identified via simulated annealing (dashed black). Polarization is depicted by the concentration of active Cdc42 (C42a, number of molecules/μm2) over the angular range [−π, π]. The mean polarization of the experimental data (n = 20 cells) is shown in blue in arbitrary units. A sample cell, treated with 10 nM α-factor for 60 min, shows the membrane polarization profile of Ste20-GFP, a reporter for active Cdc42 (upper left). Scale bar = 2 μm.

Discussion

In this work we apply novel methods from uncertainty quantification to perform parameter sensitivity analysis and parameter estimation of two models of yeast mating. The central innovation is the construction of polynomial surrogate models to replace simulation for calculating the model output. We demonstrate the accuracy of the polynomials by cross-validation on random sample points left out from the polynomial fitting. For Bayesian parameter estimation, the method provides a dramatic reduction in computational cost.

Computational speed-up

Typically, MCMC requires a model evaluation at every iteration. Since our Markov chain length for the 15-parameter model was 2 × 106, we would require 2 × 106 evaluations of the PDE model to steady state. It would likely take even more iterations for the MCMC to converge for the full 35-parameter model. The PDE is solved with an implicit method implemented in Fortran, and each evaluation takes 40-60 minutes of CPU time. Thus, the full MCMC would require at least ∼200 years of CPU time. Further, MCMC is not inherently parallelizable, although advancements have been made in parallel MCMC methods [63–66].

Using the polynomial surrogate, we are able to practically eliminate the cost of MCMC by evaluating only a polynomial at each MCMC iteration. Computing a chain of length of 2 × 106 takes only a few hours in MATLAB. In place of this cost, we must evaluate the full PDE model at the sample points used to fit the polynomial. For our full 35-parameter model, we use 5000 sample points to fit a polynomial to perform the sensitivity analysis. We then are able to reduce the parameter count to 15, and use 6000 additional samples to fit a polynomial in the reduced parameter space. Thus we require 11,000 model evaluations in total. There is also some cost to fit the polynomial via ℓ1-minimization, which is of the order of several hours. The time required to evaluate the polynomial is considered to be negligible compared to the time required to solve the PDE. Thus we have a roughly 180-fold reduction in computational cost compared to the MCMC without a polynomial surrogate. In addition, the samples are independent so that the model evaluations to produce these samples can easily be computed in parallel.

The computational savings in the ODE test model are not as dramatic, since the ODE model is inexpensive to solve. In numerical tests for the 2-parameter ODE model with a 10th degree polynomial surrogate, we found a 20% reduction in CPU time in evaluating the polynomial vs. evaluating the model directly. In the 8-parameter model with a 5th degree polynomial surrogate, we found a more than 10-fold reduction in CPU time; we believe the greater reduction in cost is afforded by the lower polynomial degree. The computational savings afforded by using polynomial surrogates will vary depending on the ODE solver, the degree of the polynomials, and the time step required to solve the ODE. Whether a problem warrants the use of surrogate models will generally depend on the cost of evaluating the original model, the number of sample (data) points required for accurate parameter estimation, and the polynomial degree required to fit the model output.

Possible limitations of uncertainty quantification methodology

The primary challenge with the method is constructing accurate polynomials. As we demonstrate in the ODE example, more sample points and a higher degree polynomial produce greater accuracy. One concern is the ability of the surrogate polynomials to describe highly nonlinear relationships between parameters and outputs arising from bifurcations. If the model output is discontinuous with respect to the parameters, for example, then the model output will not be well-approximated by polynomials. This issue may exist in the PDE model presented here, since it has previously been shown that the model for some parameter values possesses multistability contributing to the polarization [60]; thus the steady state behavior is discontinuous with respect to the initial conditions. The 5th degree polynomial surrogate produces an error of 0.1 to 0.2. It is likely we can reduce the error by employing more sample points or by using a higher degree polynomial. Alternatively, one can take advantage of Design of Experiments methods [67] to pick more informative sample points to decrease the error. However, we will still not be able to capture the discontinuous nature of the model output.

Another issue is that one may make false assumptions in determining a response function. In the PDE model we choose a response function that quantifies the cell polarization at steady state, and thus we are assuming that the system settles to a steady state. While this seems to be a reasonable assumption for the system presented here, this may not always be the case. If a system has periodic solutions rather than a stable steady state in some region of the parameter space, then one would need to carefully consider how to build an appropriate response function. Unfortunately, it is not always clear a priori whether such solutions exist for a given system.

Finally, a third issue is the combinatorial increase in the number of polynomial coefficients as the number of parameters increases. The 5th degree polynomial for the 35 parameter model possesses 658,008 coefficients and a 100 parameter 5th degree polynomial would possess over 75 million coefficients. For the PDE model we employ compressed sensing methods (ℓ1-minimization) that allow undersampling to fit higher dimensional polynomials from larger models with fewer sample points. It is possible to adopt advanced sparse regression methods such as ℓ1-ℓ2 minimization [68] to further reduce the number of required model evaluations. A second approach is explore optimal sample set design such as the optimal sample selection strategy [45] that, for any given number of samples (model evaluations), finds the parameter sample points to provide a polynomial surrogate nearly as accurate as the one obtained by a much larger number of model evaluations.

Parameter analysis of yeast mating models yields biological insights

In the yeast G-protein ODE model, the parameter distributions inferred from the time-course and dose-response data are consistent with the parameter estimates and experimental measurements from [37]. For example, the peaks for kGa and kGd are very close to the previous maximum likelihood estimates. Interestingly, the parameter estimates for kRL and kRLm are close to the measured values found in [37], but they are at least one to two orders of magnitude larger than the estimated values from three other groups [69–71]. One possible explanation is the use of fluorescent analogs of α-factor in some of the earlier work, but this discrepancy needs to be addressed in future work.

The PDE model shows broad distributions for nearly all 15 parameters examined indicating that a wide range of parameter values are compatible with good polarization of active Cdc42. The fact that the feasible region of the parameter q, representing the cooperativity of the interaction between Gβγ and Cdc24, spans the full range from 1 to 100 demonstrates that the high value (q = 100) previously used in the model [38] is not necessary for polarization, and that lower values (e.g. q = 1 to 10) are almost equally probable. These lower cooperativity values corresponding to smaller Hill exponents are more plausible from a mechanistic standpoint. In addition, several parameters (k24cm1, k42a, k42d, Dc42, and Dc42a) show peaks at one or the other side of the distribution indicating that the previous estimates may miss the most likely parameter range. The diffusion constants Dc42 and Dc42a were assigned the same value in our previous model [38, 61], but in this work Dc42 shows a preference for higher values, whereas Dc42a shows a preference for lower values. Recent measurements by Bendezú et al. [72] in the fission yeast S. pombe found that inactive Cdc42 had a 10-fold faster diffusion rate than active Cdc42 consistent with the trends in our parameter distributions.

This work also highlights the inability of the current PDE model to produce the sharp polarization peak of active Cdc42 observed in cells. One explanation is that the model is missing important dynamics or positive feedback mechanisms that enhance cell polarization. In the future, we plan to include additional spatial dynamics such as the polarized transport of Cdc42 to the front of the projection, which is absent from the model.

The broadness of the obtained parameter distributions also implies that the current data are insufficient to obtain tight parameter estimates. In this study we focused on identifying parameter values that would produce polarization in the model versus an unpolarized state. Further data can be collected tracking the spatial dynamics of the other species in the model such as Gβγ, Cdc24, and Bem1 in both wild-type and mutant yeast strains. The additional data along with model modifications should result in narrower parameter distributions and a better fit to the total system dynamics.

Application of polynomial surrogates to other model analysis methods

In our analysis, we presented only the sensitivity measure . The advantage of this sensitivity measure is its simplicity; it is easy to compute analytically when z is a known function and it usually provides a good measure of sensitivity when the relationship between z and pj is monotonic. However, if the relationship between z and pj is non-monotonic or highly nonlinear, Sj may not be a desirable measure. In these cases, other measures of sensitivity may be a better choice such as variance-based sensitivity measures or the partial rank correlation coefficient [8, 73]. The use of polynomial chaos expansions to approximate variance-based sensitivities has been explored previously in [31]. Other derivative-based sensitivity measures have also been proposed [74], which can be computed analytically using the polynomial chaos expansion.

Polynomial surrogates may also be used in methods for parameter estimation not addressed in this paper. In principle, polynomial surrogates can be applied to any type of model for which parameter ranges are known, and for any sampling-based method that requires model evaluations. By fitting polynomials to the quantities for which data is available, every model evaluation in a computational method can be replaced by a polynomial evaluation. While we have demonstrated this here only in the context of a Markov chain Monte Carlo method, the same principles may be used to accelerate the computations involved in other Bayesian methods for parameter estimation, such as rejection sampling and sequential Monte Carlo.

Yet another potential application of polynomial surrogates is to accelerate methods for Bayesian model selection. The idea behind Bayesian model selection is that we can recover a probability distribution for a model index parameter m enumerating different models, providing information on the likelihoods of the candidate models given the available data. In essence this is still a parameter estimation problem, and established methods for parameter estimation can be adapted for model selection. Polynomial surrogates can be used to accelerate these methods which include Bayesian rejection sampling, sequential Monte Carlo, population annealing, and MCMC [20, 52, 53, 75, 76]. Model selection is of great importance in systems biology since uncertainty in the model structure may significantly impact the conclusions of parameter inference [39].

Supporting information

Arrows indicate the conversion of protein species from inactive to active form or from cytoplasmic localization to membrane localization (where the protein is active). Solid dots represent reactions catalyzed by the connected proteins. Lines terminating in a vertical bar (instead of an arrow) represent inhibition. Species and reactions are described in the main text.

(EPS)

Correlations between the 8 kinetic parameters in the MCMC chain using the polynomial surrogate of Model 1.

(EPS)

Error in the 35-dimensional polynomial surrogate function for Model 2 fit using 5000 points, and measured (tested) at 500 uniform random samples.

(EPS)

Error in the 15-dimensional polynomial surrogate function for Model 2 fit using 6000 points, and measured (tested) via 10-fold cross-validation.

(EPS)

Correlations between the parameters in the MCMC chain using the 15-dimensional polynomial surrogate of Model 2.

(EPS)

Parameter values are taken from [37]. Values for kGa and kGd were estimated based on least-squares fit to time course and dose-response data.

(PDF)

Experimental data for the given time points and α-factor levels from [37], and the resulting data. Output is the fraction of free Gβγ (Gbg/Gt). Data are given as mean ± standard deviation.

(PDF)

Ranges for the kinetic parameters used for parameter estimation of all 8 parameters in Model 1 (heterotrimeric G-protein model).

(PDF)

Sensitivity coefficients, in order of ascending magnitude, from sensitivity analysis of all 35 parameters in Model 2 using a 5th order surrogate polynomial fit to 5000 sample points.

(PDF)

(PDF)

(PDF)

Acknowledgments

The authors would like to thank He Yang for assistance with image processing, and Yeonjong Shin for assistance with uncertainty quantification. Large computations were carried out using the Ohio Supercomputer Center [77].

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

CSC is funded by National Science Foundation DMS-1253481 (https://www.nsf.gov/); TMY is funded by National Institute of Health GM113241 (https://www.nih.gov/); DBX is funded by National Science Foundation DMS-1418771, Air force Office of Research FA95501410022 (http://www.wpafb.af.mil/) and Defense Sciences Office, DARPA N660011524053 (https://www.darpa.mil/). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1. Edelstein-Keshet L. Mathematical models in biology. SIAM; 2005. [Google Scholar]

- 2. Chou CS, Friedman A. Introduction to Mathematical Biology: Modeling, Analysis, and Simulations. Springer; 2016. [Google Scholar]

- 3. Gershenfeld NA. The Nature of Mathematical Modeling. Cambridge University Press; 1999. [Google Scholar]

- 4. Slepchenko BM, Schaff JC, Carson JH, Loew LM. Computational cell biology: spatiotemporal simulation of cellular events. Annu Rev Biophys Biomol Struct. 2002;31:423–441. doi: 10.1146/annurev.biophys.31.101101.140930 [DOI] [PubMed] [Google Scholar]

- 5. Fall CP, Marland ES, Wagner JM, Tyson JJ. Computational Cell Biology. Springer Science & Business Media; 2007. [Google Scholar]

- 6. Ljung L. System Identification In: Signal Analysis and Prediction. Applied and Numerical Harmonic Analysis. Birkhäuser, Boston, MA; 1998. p. 163–173. [Google Scholar]

- 7. Bard Y. Nonlinear parameter estimation. Academic Press; 1974. [Google Scholar]

- 8. Marino S, Hogue IB, Ray CJ, Kirschner DE. A methodology for performing global uncertainty and sensitivity analysis in systems biology. Journal of Theoretical Biology. 2008;254:178–196. doi: 10.1016/j.jtbi.2008.04.011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9. Banks HT, Bortz DM. A parameter sensitivity methodology in the context of HIV delay equation models. Journal of Mathematical Biology. 2005;50:607–625. doi: 10.1007/s00285-004-0299-x [DOI] [PubMed] [Google Scholar]

- 10. Cariboni J, Gatelli D, Liska R, Saltelli A. The role of sensitivity analysis in ecological modelling. Ecological Modelling. 2007;203:167–182. doi: 10.1016/j.ecolmodel.2005.10.045 [Google Scholar]

- 11. Dancik GM, Jones DE, Dorman KS. Parameter estimation and sensitivity analysis in an agent-based model of Leishmania major infection. Journal of Theoretical Biology. 2010;262:398–412. doi: 10.1016/j.jtbi.2009.10.007 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12. Blower SM, Dowlatabadi H. Sensitivity and uncertainty analysis of complex models of disease transmission: an HIV model, as an example. International Statistical Review. 1994;62(2):229–243. doi: 10.2307/1403510 [Google Scholar]

- 13. Cho KH, Shin SY, Kolch W, Wolkenhauer O. Experimental design in systems biology, based on parameter sensitivity analysis using a Monte Carlo method: A case study for the TNFα-mediated NF-κB signal transduction pathway. Simulation. 2003;79(12):726–739. doi: 10.1177/0037549703040943 [Google Scholar]

- 14. Mahdavi A, Davey RE, Bhola P, Yin T, Zandstra PW. Sensitivity analysis of intracellular signaling pathway kinetics predicts targets for stem cell fate control. PLoS Computational Biology. 2007;3(7):e130 doi: 10.1371/journal.pcbi.0030130 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15. Chu Y, Jayaraman A, Hahn J. Parameter sensitivity analysis of IL-6 signalling pathways. IET Systems Biology. 2007;1(6):342–352. doi: 10.1049/iet-syb:20060053 [DOI] [PubMed] [Google Scholar]

- 16. Pruett W, Hester R. The Creation of Surrogate Models for Fast Estimation of Complex Model Outcomes. PLoS ONE. 2016;11(6):e0156574 doi: 10.1371/journal.pone.0156574 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17. Krishna NA, Pennington HM, Coppola CD, Eisenberg MC, Shugart RC. Connecting local and global sensitivities in a mathematical model for wound healing. Bulletin of Mathematical Biology. 2015;77:2294–2324. doi: 10.1007/s11538-015-0123-3 [DOI] [PubMed] [Google Scholar]

- 18. Gelman A, Carlin JB, Stern HS, Dunson DB, Vehtari A, others. Bayesian data analysis. 3rd ed CRC Press; 2014. [Google Scholar]

- 19.Xue N, Pan W, Guo Y. Parameter estimation for gene regulatory networks: a two-stage MCMC Bayesian approach. In: 38th Annual International Conference of the Engineering in Medicine and Biology Society (EMBC). IEEE; 2016. [DOI] [PubMed]

- 20. Toni T, Welch D, Strelkowa N, Ipsen A, Stumpf MPH. Approximate Bayesian computation scheme for parameter inference and model selection in dynamical systems. Journal of the Royal Society Interface. 2009;6:187–202. doi: 10.1098/rsif.2008.0172 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 21. Ghasemi O, Lindsey ML, Yang T, Nguyen N, Huang Y, Jin YF. Bayesian parameter estimation for nonlinear modelling of biological pathways. BMC Systems Biology. 2011;5(Suppl 3):S9 doi: 10.1186/1752-0509-5-S3-S9 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22. Ashyraliyev M, Fomekong-Nanfack Y, Kaandorp JA, Blom JG. Systems biology: parameter estimation for biochemical models. FEBS Journal. 2009;276(4):886–902. doi: 10.1111/j.1742-4658.2008.06844.x [DOI] [PubMed] [Google Scholar]

- 23. Moles CG, Mendes P, Banga JR. Parameter estimation in biochemical pathways: a comparison of global optimization methods. Genome Research. 2003;13:2467–2474. doi: 10.1101/gr.1262503 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24. Ashyraliyev M, Jaeger J, Blom JG. Parameter estimation and determinability analysis applied to Drosophila gap gene circuits. BMC Systems Biology. 2008;2(83). doi: 10.1186/1752-0509-2-83 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 25. Lillacci G, Khammash M. Parameter estimation and model selection in computational biology. PLoS Computational Biology. 2010;6(3):e1000696 doi: 10.1371/journal.pcbi.1000696 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 26. Xiu D, Karniadakis G. The Weiner-Askey polynomial chaos for stochastic differential equations. SIAM Journal on Scientific Computing. 2002;24(2):619–644. doi: 10.1137/S1064827501387826 [Google Scholar]

- 27. Najm HN. Uncertainty Quantification and Polynomial Chaos Techniques in Computational Fluid Dynamics. Annu Rev Fluid Mech. 2008;41(1):35–52. doi: 10.1146/annurev.fluid.010908.165248 [Google Scholar]

- 28. Iglesias M, Stuart AM. Inverse problems and uncertainty quantification. SIAM News. 2014; p. 2–3. [Google Scholar]

- 29. Xiu D. Fast Numerical Methods for Stochastic Computations: A Review. Communications in Computational Physics. 2009;5(2-4):242–272. [Google Scholar]

- 30. Xiu D. Efficient collocational approach for parametric uncertainty analysis. Communications in Computational Physics. 2007;2(2):293–309. [Google Scholar]

- 31. Sudret B. Global sensitivity analysis using polynomial chaos expansions. Reliability Engineering & System Safety. 2008;93(7):964–979. doi: 10.1016/j.ress.2007.04.002 [Google Scholar]

- 32. Butler MT, Wallingford JB. Planar cell polarity in development and disease. Nat Rev Mol Cell Biol. 2017;18(6):375–388. doi: 10.1038/nrm.2017.11 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33. Martin-Belmonte F, Perez-Moreno M. Epithelial cell polarity, stem cells and cancer. Nat Rev Cancer. 2011;12(1):23–38. doi: 10.1038/nrc3169 [DOI] [PubMed] [Google Scholar]

- 34. Lee M, Vasioukhin V. Cell polarity and cancer-cell and tissue polarity as a non-canonical tumor suppressor. Journal of Cell Science. 2008;121(8):1141–1150. doi: 10.1242/jcs.016634 [DOI] [PubMed] [Google Scholar]

- 35. Martin SG, Arkowitz RA. Cell polarization in budding and fission yeasts. FEMS Microbiol Rev. 2014;38(2):228–253. doi: 10.1111/1574-6976.12055 [DOI] [PubMed] [Google Scholar]

- 36. Park HO, Bi E. Central roles of small GTPases in the development of cell polarity in yeast and beyond. Microbiology and Molecular Biology Reviews. 2007;71(1):48–96. doi: 10.1128/MMBR.00028-06 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37. Yi TM, Kitano H, Simon MI. A quantitative characterization of the yeast heterotrimeric G protein cycle. Proc Natl Acad Sci USA. 2003;100(19):10764–10769. doi: 10.1073/pnas.1834247100 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38. Yi TM, Chen S, Chou CS, Nie Q. Modeling Yeast Cell Polarization Induced by Pheromone Gradients. Journal of Statistical Physics. 2007;128(1):193–207. doi: 10.1007/s10955-007-9285-1 [Google Scholar]

- 39. Babtie AC, Kirk P, Strumpf MPH. Topological sensitivity analysis for systems biology. PNAS. 2014;111(52):18507–18512. doi: 10.1073/pnas.1414026112 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40. Äijö T, Bonneau R. Biophysically motivated regulatory network inference: progress and prospects. Human Heredity. 2016;81:62–77. doi: 10.1159/000446614 [DOI] [PubMed] [Google Scholar]

- 41. Farhangmehr F, Maurya MR, Tartakovsky DM, Subramaniam S. Information theoretic approach to complex biological network reconstruction: application to cytokine release in RAW 264.7 macrophages. BMC Systems Biology. 2014;8(77). doi: 10.1186/1752-0509-8-77 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 42. Candes EJ, Romberg J, Tao T. Robust uncertainty principles: exact signal reconstruction from highly incomplete frequency information. IEEE Trans Inf Theory. 2006;52(2):489–509. doi: 10.1109/TIT.2005.862083 [Google Scholar]

- 43. Doostan A, Owhadi H. A non-adapted sparse approximation of PDEs with stochastic inputs. J Comput Phys. 2011;230(8):3015–3034. doi: 10.1016/j.jcp.2011.01.002 [Google Scholar]

- 44. Yan L, Guo L, Xiu D. Stochastic collocation algorithms using ℓ1-minimization. Int J UQ. 2012;2(3):279–293. [Google Scholar]

- 45. Shin Y, Xiu D. Nonadaptive quasi-optimal points selection for least squares linear regression. SIAM Journal on Scientific Computing. 2016;38(1):A385–A411. doi: 10.1137/15M1015868 [Google Scholar]

- 46. Brooks SP. Markov chain Monte Carlo method and its application. The Statistician. 1998;47(1):69–100. [Google Scholar]

- 47. Wilkinson DJ. Bayesian methods in bioinformatics and computational systems biology. Briefings in Bioinformatics. 2007;8(2):109–116. doi: 10.1093/bib/bbm007 [DOI] [PubMed] [Google Scholar]

- 48. Golightly A, Wilkinson DJ. Bayesian parameter inference for stochastic biochemical network models using particle Markov chain Monte Carlo. Interface Focus. 2011;1(6):807–820. doi: 10.1098/rsfs.2011.0047 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49. Marzouk Y, Xiu D. A stochastic collocation approach to Bayesian inference in inverse problems. Communications in Computational Physics. 2009;6(4):826–847. doi: 10.4208/cicp.2009.v6.p826 [Google Scholar]

- 50. Cowles M, Carlin B. Markov chain Monte Carlo convergence diagnostics: A comparative review. Journal of the American Statistical Association. 1996;91(434):883–904. doi: 10.1080/01621459.1996.10476956 [Google Scholar]

- 51. Gelman A, Rubin D. Inference from iterative simulation using multiple sequences. Statistical Science. 1992;7(4):457–472. doi: 10.1214/ss/1177011136 [Google Scholar]

- 52. Liepe J, Kirk P, Filippi S, Toni T, Barnes CP, Stumpf MPH. A framework for parameter estimation and model selection from experimental data in systems biology using approximate Bayesian computation. Nature Protocols. 2014;9(2):439–456. doi: 10.1038/nprot.2014.025 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53. Murakami Y. Bayesian Parameter Inference and Model Selection by Population Annealing in Systems Biology. PLoS ONE. 2014;9(8):e104057 doi: 10.1371/journal.pone.0104057 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54. Lawson MJ, Drawert B, Khammash M, Petzold L, Yi TM. Spatial stochastic dynamics enable robust cell polarization. PLoS Comput Biol. 2013;9(7):e1003139 doi: 10.1371/journal.pcbi.1003139 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 55. Bender A, Sprague GF. Yeast peptide pheromones, a-factor and alpha-factor, activate a common response mechanism in their target cells. Cell. 1986;47(6):929–937. doi: 10.1016/0092-8674(86)90808-1 [DOI] [PubMed] [Google Scholar]

- 56. Dohlman HG, Thorner JW. Regulation of G protein-initiated signal transduction in yeast: paradigms and principles. Annual Review of Biochemistry. 2001;70:703–754. doi: 10.1146/annurev.biochem.70.1.703 [DOI] [PubMed] [Google Scholar]

- 57. Pruyne D, Bretscher A. Polarization of cell growth in yeast. I. Establishment and maintenance of polarity states. Journal of Cell Science. 2000;113(Pt 3):365–375. [DOI] [PubMed] [Google Scholar]

- 58. Bourne HR, Sanders DA, McCormick F. The GTPase superfamily: a conserved switch for diverse cell functions. Nature. 1990;348(6297):125–132. doi: 10.1038/348125a0 [DOI] [PubMed] [Google Scholar]

- 59. Epperson J. On the Runge Example. The American Mathematical Monthly. 1987;94:329–341. doi: 10.2307/2323093 [Google Scholar]

- 60. Chou CS, Nie Q, Yi TM. Modeling robustness tradeoffs in yeast cell polarization induced by spatial gradients. PLoS ONE. 2008;3:e3103 doi: 10.1371/journal.pone.0003103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61. Chou CS, Bardwell L, Nie Q, Yi TM. Noise filtering tradeoffs in spatial gradient sensing and cell polarization response. BMC Syst Biol. 2011;5:196 doi: 10.1186/1752-0509-5-196 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 62.Candes E, Romberg J. l1-magic: Recovery of sparse signals via convex programming; 2005. https://statweb.stanford.edu/~candes/l1magic/downloads/l1magic.pdf.

- 63. Li S, Tso GK, Long L. Powered embrassing parallel MCMC sampling in Bayesian inference, a weighted average intuition. Computational Statistics & Data Analysis. 2017;115:11–20. doi: 10.1016/j.csda.2017.05.005 [Google Scholar]

- 64. Martino L, Elvira V, Luengo D, Corander J, Louzada F. Orthogonal parallel MCMC methods for sampling and optimization. Digital Signal Processing. 2016;58:64–84. doi: 10.1016/j.dsp.2016.07.013 [Google Scholar]

- 65. Solonen A, Ollinaho P, Laine M, Haario H, Tamminen J, Järvinen H. Efficient MCMC for climate model parameter estimation: parallel adaptive chains and early rejection. Bayesian Analysis. 2012;7(3):715–736. doi: 10.1214/12-BA724 [Google Scholar]

- 66. Rosenthal JS. Parallel computing and Monte Carlo algorithms. Far East Journal of Theoretical Statistics. 2000;4:207–236. [Google Scholar]

- 67. Atkinson AC, Donev AN, Tobias RD.Optimum experimental designs, with SAS Oxford Statistical Science Series. Oxford University Press, Oxford; 2007. [Google Scholar]

- 68. Yan L, Shin Y, Xiu D. Sparse Approximation using ℓ1 − ℓ2 Minimization and Its Application to Stochastic Collocation. SIAM J Sci Comput. 2017;39(1):A229–A254. doi: 10.1137/15M103947X [Google Scholar]

- 69. Jenness DD, Spatrick P. Down regulation of the alpha-factor pheromone receptor in S. cerevisiae. Cell. 1986;46(3):345–353. doi: 10.1016/0092-8674(86)90655-0 [DOI] [PubMed] [Google Scholar]

- 70. Bajaj A, Celić A, Ding FX, Naider F, Becker JM, Dumont ME. A fluorescent alpha-factor analogue exhibits multiple steps on binding to its G protein coupled receptor in yeast. Biochemistry. 2004;43(42):13564–13578. doi: 10.1021/bi0494018 [DOI] [PubMed] [Google Scholar]

- 71. Ventura AC, Bush A, Vasen G, Goldín MA, Burkinshaw B, Bhattacharjee N, et al. Utilization of extracellular information before ligand-receptor binding reaches equilibrium expands and shifts the input dynamic range. Proc Natl Acad Sci U S A. 2014;111(37):E3860–9. doi: 10.1073/pnas.1322761111 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 72. Bendezú FO, Vincenzetti V, Vavylonis D, Wyss R, Vogel H, Martin SG. Spontaneous Cdc42 polarization independent of GDI-mediated extraction and actin-based trafficking. PLoS Biol. 2015;13(4):e1002097 doi: 10.1371/journal.pbio.1002097 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 73. Zi Z. Sensitivity analysis approaches applied to systems biology models. IET Systems Biology. 2011;5(6):336–346. doi: 10.1049/iet-syb.2011.0015 [DOI] [PubMed] [Google Scholar]

- 74. Sobol IM, Kucherenko S. Derivative based global sensitivity measures and their link with global sensitivity indices. Mathematics and Computers in Simulation. 2009;79(10):3009–3017. doi: 10.1016/j.matcom.2009.01.023 [Google Scholar]

- 75. Green PJ. Reversible Jump Markov Chain Monte Carlo Computation and Bayesian Model Determination. Biometrika. 1995;82(4):711–732. doi: 10.1093/biomet/82.4.711 [Google Scholar]