Abstract

Objective:

Variations in processes for different clinics and health systems can dramatically change the way preventive interventions are implemented. We present a method for documenting these variations using workflow diagrams and demonstrate how understanding workflow aided an electronic health record (EHR) embedded colorectal cancer screening intervention.

Materials and Methods:

We mapped variation in processes for ordering and documenting fecal testing, current colonoscopy, prior colonoscopies, and pathology results. This work was part of a multi-site cluster-randomized pragmatic trial to test a mailed approach to offering fecal testing at 26 safety net clinics (in eight organizations) in Oregon and Northern California. We created clinic-specific workflow diagrams and then distilled them into consolidated diagrams that captured the variations.

Results:

Clinics had varied practices for storing and using information about colorectal cancer screening. Developing workflow diagrams of key processes enabled clinics to find optimal ways to send fecal test kits to patients due for screening. The workflows informed the rollout of new EHR tools and identified best practices for data capture.

Discussion:

Diagramming workflows can have great utility when implementing and refining EHR tools for clinical practice, especially when doing so across multiple clinical sites. The process of developing the workflows uncovered successful practice recommendations and revealed limitations and potential effects of a research intervention.

Conclusion:

Our method of documenting clinical process variation might inform other EHR-powered, multi-site research and can improve data feedback from EHR systems to clinical caregivers.

Introduction

Over the past decade, the adoption of electronic health record (EHR) systems has risen rapidly in the United States. In 2013, 78 percent of office-based physicians in the United States used some type of EHR, up from 18 percent in 2001 [1]. Rapid accessibility and increased accuracy of patient health information could improve health care quality and safety, the efficiency of health care delivery, and patient satisfaction [2,3,4]. The adoption and use of EHRs has been incentivized by federal agencies, such as the Centers for Medicare and Medicaid Services (CMS) [1,5]. The CMS EHR Incentive Program ties payments to three levels of “meaningful EHR use” by clinical staff [1,5,6].

Extensive EHR use has in turn paved the way for research using real-time data to both evaluate the care provided and aid in medical discovery. Pragmatic research can promote clinical decisions that rely on rigorous analysis of the health system’s actual population data [7]. The promise of EHR data, however, remains only partially realized. Part of the problem is that EHRs are highly complex and contain large amounts of data, and that data entry and storage can vary markedly within a given clinic, health system, or network of health systems. Many EHR data are unstructured [8] (free text rather than coded terms or discrete fields), which makes it difficult to extract data for research. Retrieving information across different EHR platforms also poses challenges. Research increasingly relies either on extracting EHR information or on clinical interventions that add software or activate unused functionality within an EHR. However, only a few newly developed tools can work across software platforms [9]. Furthermore, until recently, issues with interoperability have stymied efforts to extract data in toto from similar delivery sites because the extraction protocol may not work across the different EHRs of those sites.

Even when different providers use the same EHR platforms, how and where providers store information varies substantially [10]. Understanding this variability is important for accurately retrieving and analyzing data. The Strategies and Opportunity to STOP Colon Cancer in Priority Populations (STOP CRC) study, a pragmatic multi-site trial, encountered tremendous variation across clinic systems in documenting and reporting the same task: screening for colorectal cancer using either fecal testing or colonoscopy. STOP CRC (described elsewhere) [11,13] is an ongoing comparative-effectiveness trial enrolling 26 clinics randomized to one of two arms. Clinics in the intervention arm (n=13) are using a data-driven, EHR-embedded program to identify patients due for colorectal screening and mail a fecal test to them. All health centers mailed fecal immunochemical tests (FIT) as part of the program.

Workflows to Describe Data Context

To implement an intervention focused on patients due for colorectal screening, we needed a way within our study to visualize and understand the variety of sources for relevant data within a common EHR platform (Epic). Therefore, the research team documented each clinic’s workflow from start to finish (i.e., from identifying patients due for screening through recording the results in the EHR). For this paper, we use the term workflow to mean a repeatable pattern of actions enabled by systematic processes (e.g., the process for recording a prior colonoscopy result). Creating clinic-specific workflows not only shed light on the intended use of the data, with implications for data analysis [12], but also enabled the research team to work efficiently with clinics.

Clinical workflow analysis and documentation has become a staple of clinical practice transformation within clinics. Workflow diagrams have been used to optimize population health management systems similar to STOP CRC [13,14]. Unertl and colleagues modeled workflow, information flow, and work practices in side-by-side diagrams representing chronic disease care; they found that clinic staff will create inefficient “work-arounds” to accomplish tasks when the technology does not fit their needs [15]. Malhotra et al. [16] present a cognitive model of patient care within an intensive care unit and conclude that models are useful to elucidate operational aspects, overall workflow, or task allocation. Indeed, our workflow models helped identify places where the technology did not fit with human processes and helped the clinics in the study understand the new workflow.

While these studies document clinical workflows, it is rare to see standardized clinic workflows across clinic organizations. To date, frameworks documenting variations in clinic data workflows are scarce [17] and have mostly been used to standardize data entry within a given organization. Even this use of workflows has been hindered by EHR platforms that contain unstructured data [18] or that do not allow electronic data transfer from external organizations [19].

Our methods build on the work of Johnson et al. that emphasizes the importance of understanding the context in which data are collected and used (referred to as the “data provenance”). Johnson and colleagues underscore the importance of knowing 1) the local clinic workflows and provider charting behavior; 2) the EHR’s data model and local implementation (i.e., some functions are available for use, some are not); and 3) the external context, that is, legal requirements and reimbursement incentives. Additional variation can be caused by ongoing EHR changes, such as the conversion from ICD-9 to ICD-10 and the inclusion of patient-reported data obtained during clinic visits, by phone, or through a patient portal. Patient-reported data on colonoscopy screening, for example, may be useful if assessing lifetime screening history but may be incomplete (without details of the procedure or pathology specimens) if used for identifying patients eligible for a screening intervention. The context in which data are collected is often invisible to the researchers and policy-makers who rely on them. Failure to understand this context can introduce systematic bias and misinterpretations. With the growing use of electronic medical records, however, reliance on automated records is critically important. Yet extractable EHR data may not fully capture patient history, particularly to the degree of sensitivity and specificity that might be required for quality improvement, population-based care, and research.

Here, we present an approach to documenting variation in data workflows across clinic organizations. We then provide examples of how we used knowledge of these variations to improve the design of a process for increasing screening rates supported by an EHR decision-support tool. We also used the workflows to teach clinical staff about better practices for capturing data.

Materials and Methods

We documented workflows between the pilot and the implementation phases of the STOP CRC pragmatic trial. For the STOP CRC project, Reporting Workbench (RWB) was used to find and track patients eligible for colon cancer screening. RWB is a feature in Epic that allows clinical staff and providers to generate real-time reports, hereafter called rosters, to pull refined lists of patients directly from the EHR. OCHIN developed STOP CRC rosters that listed patients due for colon cancer screening, and three research staff members introduced the new RWB rosters during training. Additional rosters also showed patients who were due for outreach mailings, and clinic staff used those rosters to mail the study’s materials to their patients. Note that the pilot study used a slightly different roster method, and both the EHR and reporting tools were refined during rollout [20,14]. The initial design of the tools has been reported previously [21,15].

The clinics can store patient-level preventive health data in an Epic-based tool called Health Maintenance. Health Maintenance can be programmed to automatically update based on information in the EHR or can be updated manually. Some of the data being tracked in the EHR are fecal tests, which look for hidden blood in the stool, and colonoscopy procedures, in which a doctor inserts a tube in a patient’s rectum to look for growths in the colon. If tissue is removed during a colonoscopy, it is sent to pathology to determine if it contains abnormal cells. The close integration of RWB and Health Maintenance to accomplish the STOP CRC intervention was innovative. The integration meant that our tools relied on both study codes and Health Maintenance to select patients who were due for screening. In this way, providers could exempt patients from the program whom they knew to be poor candidates for screening. Moreover, OCHIN programmed Health Maintenance to automatically postpone the colorectal cancer screening reminder for one year if a patient had a resulted fecal test lab order. These steps improved the accuracy of our patient selection and allowed providers’ assessment of patients’ screening eligibility to factor into whether or not a patient received outreach.

Health Maintenance also had a field for tracking historical colonoscopies. A normal colonoscopy in the past 9 years excluded a patient from our registry, until follow-up was indicated as specified by the provider. Clinics employed varying processes for documenting historical colonoscopies, and therefore we present the workflows for historical colonoscopies in addition to fecal testing and current colonoscopy workflows.
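The selection rules described above can be summarized in a short sketch. The Python below is illustrative only and is not the logic OCHIN built in Epic: the `ScreeningRecord` fields, the `add_years` helper, and the `due_for_outreach` function are hypothetical names, and the treatment of a provider-specified follow-up date as overriding the 9-year window is our reading of the rule, stated here as an assumption.

```python
from dataclasses import dataclass
from datetime import date
from typing import Optional


def add_years(d: date, years: int) -> date:
    """Shift a date by whole years; Feb 29 falls back to Feb 28."""
    try:
        return d.replace(year=d.year + years)
    except ValueError:
        return d.replace(year=d.year + years, day=28)


@dataclass
class ScreeningRecord:
    # Hypothetical field names; these are not Epic or OCHIN identifiers.
    provider_exempted: bool = False
    last_resulted_fecal_test: Optional[date] = None
    last_normal_colonoscopy: Optional[date] = None
    provider_follow_up_due: Optional[date] = None


def due_for_outreach(rec: ScreeningRecord, today: date) -> bool:
    """Return True if the patient would appear on the outreach roster."""
    # Providers can exempt patients they know to be poor candidates.
    if rec.provider_exempted:
        return False
    # A resulted fecal test postpones the reminder for one year.
    fecal = rec.last_resulted_fecal_test
    if fecal is not None and today < add_years(fecal, 1):
        return False
    # A normal colonoscopy excludes the patient for 9 years, unless the
    # provider specified a different follow-up date (assumed override).
    scope = rec.last_normal_colonoscopy
    if scope is not None:
        exclusion_ends = rec.provider_follow_up_due or add_years(scope, 9)
        if today < exclusion_ends:
            return False
    return True
```

Under these assumptions, a patient with a resulted fecal test eight months ago stays off the roster, while a patient whose only recorded colonoscopy was ten years ago is listed again.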

Participating Sites

The 26 STOP CRC clinics are part of eight participating health centers (Virginia Garcia Memorial Health Center, Multnomah County Health Department, Benton County Health Department, La Clinica del Valle, Medford Community Health Center, Open Door Community Health Center, Mosaic Medical, and Oregon Health & Science University). They all contract for services with OCHIN, a local non-profit organization that provides a single instance of Epic EHR and services to help providers implement practice management. Workflow for the intervention was determined at the health center level. All health centers used Epic version 2010 (Verona, WI). The research team actively worked with each health center’s EHR site specialist; the EHR site specialist is a “super user” of the system who helps utilize tools from OCHIN and helps with training and new technology upgrades.

Data collection

A research team member met with the EHR site specialist at each health center. The research staff asked the EHR site specialist to describe the workflow for three discrete processes: 1) ordering and recording results of fecal tests; 2) ordering a referral for a colonoscopy (usually at outside specialty clinics) and documenting the results (once pathology reports are returned); and 3) documenting historical colonoscopies. Specifically, research staff asked about the activities of clinic staff as well as the input and use of colorectal cancer screening information in the EHR. As all health centers operated primary care clinics, none performed colonoscopies on site. Meetings generally lasted 1.5 hours. Research staff then summarized workflows across health centers and validated their findings during an in-person advisory board meeting of the research team, which included researchers as well as clinicians and administrators from the participating health centers. The summarized workflows and a table describing the clinic-specific workflows were presented to clinicians and administrators. Individual steps of the process were listed in columns and described how that clinic performed the step; multiple paths through the process were represented as additional rows (see Table 1).

Table 1.

Example of Individual Clinic Workflow Variation

| HEALTH CENTER | IDENTIFY PATIENTS | PROVIDE TEST/ENCOUNTER TYPE | FECAL TEST | ORDER TYPE | ORDER CLASS | LAB | HOW TEST GETS TO LAB | APPT AND ENCOUNTER TYPE – FUTURE ORDER RELEASE | HOW DOCUMENTED (VARIES WITHIN CLINIC) |
|---|---|---|---|---|---|---|---|---|---|
| 1 | Pre-visit chart review; some rely on Health Maintenance* | In person during visit/Office Visit | 2-sample FIT | Future order | External interface outside collection | Commercial lab | Mail or dropped off at clinic lab, appt created, order released into encounter and encounter closed, specimen processed at internal lab or mailed to outside lab | Lab appt | Result only; result note; update problem list; Health Maintenance* (manual or automatic) review and confirm |
| 1 (cont.) | | | | | External interface | Internal central lab | | | |
| 2 | Pre-visit chart review; some rely on Health Maintenance* | In person during visit/Office Visit | 3-sample gFOBT | Future order | External interface | Commercial lab; internal central lab | Dropped off at clinic draw station, order released into encounter, encounter closed and specimen sent to external lab | Same day appt | Result only; result note; update problem list; Health Maintenance* (manual or automatic) review and confirm |
| 2 (cont.) | | | 3-sample gFOBT | Future order | Back office | Point-of-care | Dropped off at clinic draw station, appt created, order released into encounter, results entered, encounter closed | Lab appt | |
| 3 | Pre-visit chart review; some rely on Health Maintenance* | In person during visit/Office Visit | 3-sample gFOBT | Future order for number of specimens needed | Back office | Point-of-care | Dropped off at clinic draw station, appt created, order released into encounter, encounter closed | Lab appt | Result only; result note; update problem list; Health Maintenance* (manual or automatic) review and confirm |
| 3 (cont.) | | | | Regular order for number of specimens needed | External interface outside collection | Local hospital lab | Mailed or dropped off at hospital lab, or dropped off at clinic and clinic sends to hospital lab | NA | |
| 4 | Pre-visit chart review | In person during visit/Office Visit | 3-sample gFOBT | Future order | Back office | Point-of-care | Dropped off at clinic draw station, appt created, order released into encounter, encounter closed, specimen processed on site | Lab appt | Result only; result note |
| 5 | Pre-visit chart review; some rely on Health Maintenance* | In person during visit/Office Visit | 1-sample FIT | Future order | Outside order external interface | Commercial lab | Dropped off at clinic draw station, appt created, order released into encounter, encounter closed and specimen sent to external lab | Lab appt | Health Maintenance* (manual or automatic) review and confirm |
| 5 (cont.) | | Mail/interim note | | | | | | | |
| 6 | Gaps in care report and pre-visit chart review; some rely on Health Maintenance* | In person during visit/Office Visit | 2-sample FIT | Future order | External interface outside collection | Internal lab and point-of-care | Dropped off at clinic draw station, appt created, order released into encounter, encounter closed, processed on site | Lab appt | Result only; result note; update problem list; Health Maintenance* (manual or automatic) review and confirm |
| 7 | Pre-visit chart review; some rely on Health Maintenance* | In person during visit/Office Visit | 3-sample gFOBT | Future order | Back office | Point-of-care | Dropped off or mailed to clinic; front desk takes to back office lab | Lab only encounter | Result only; result note; Health Maintenance* (manual or automatic) review and confirm |
| 8 | Pre-visit chart review | In person during visit/Office Visit | 3-sample gFOBT; change to 2-sample FIT | Future order | Back office | Point-of-care | Dropped off or mailed to clinic, appt created, order released into RN visit -OR- no appt created, order released, processed on site | RN visit or prescheduled office visit | Result only; result note; update problem list; Health Maintenance* (manual or automatic) review and confirm |
| 8 (cont.) | Gaps in care report from Medicaid Health Plan | Mail/phone encounter | 2-sample FIT | Future order | External interface | Commercial lab | Dropped off during prescheduled office visit; order released into encounter -OR- no appt created, order released; specimen sent to outside lab | Prescheduled office visit, or none | |

*Health Maintenance is a tool in Epic for tracking preventive care services.

Institutional Review Board review and approval was obtained for the project. However, consent was not required for workflow mapping interviews as no individual patient data were used. All interviews were conducted between 7/15/2013 and 2/13/2014. Data were verified in person on 2/27/2014.

Results

In this paper, we present the workflows of the participating clinics. Several health centers used the same processes, and these final workflows consolidate all of the health centers into summary workflows, one for each of the three discrete processes (i.e., Fecal Testing, Primary Care Referral to Colonoscopy, and Recording Historical Colonoscopy). Each diagram should be read from left to right, progressing through the columns as individual steps in the workflow. Each column has multiple options to capture all the permutations of how clinics performed that step. In other words, each participating clinic takes one of the many possible paths through the workflow diagram.
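The structure of the consolidated diagrams can be illustrated with a small sketch. The Python below is illustrative only and was not part of the study: it treats each clinic workflow as one choice per step and builds the consolidated view as the list of distinct options per step. The step names and the `consolidate` and `is_valid_path` helpers are hypothetical; the option values are taken from three columns of Table 1 for health centers 4, 5, and 7.

```python
# Ordered workflow steps (columns of the diagram); names are hypothetical.
STEPS = ["fecal_test", "order_class", "lab"]

# One path per health center, using values reported in Table 1.
clinic_paths = {
    "4": {"fecal_test": "3-sample gFOBT",
          "order_class": "Back office",
          "lab": "Point-of-care"},
    "5": {"fecal_test": "1-sample FIT",
          "order_class": "Outside order external interface",
          "lab": "Commercial lab"},
    "7": {"fecal_test": "3-sample gFOBT",
          "order_class": "Back office",
          "lab": "Point-of-care"},
}


def consolidate(paths, steps):
    """Collect the distinct options seen at each step, in first-seen order."""
    diagram = {step: [] for step in steps}
    for path in paths.values():
        for step in steps:
            if path[step] not in diagram[step]:
                diagram[step].append(path[step])
    return diagram


def is_valid_path(diagram, path):
    """Check that a clinic's path picks one listed option at every step."""
    return all(path.get(step) in options for step, options in diagram.items())


diagram = consolidate(clinic_paths, STEPS)
for step in STEPS:
    print(step, "->", diagram[step])
```

Health centers that share a process, such as 4 and 7 here, collapse into the same path, which is why the consolidated diagram is smaller than the set of clinic-specific diagrams.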

We also describe several examples of the utility of the workflow knowledge to the STOP CRC intervention roll-out, to the EHR modification, and to the clinics’ delivery of preventive health services.

Fecal Testing

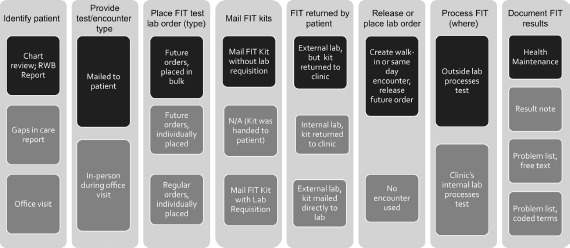

Figure 1 consolidates into one diagram the workflows for ordering and recording results of a fecal test. Since this trial was pragmatic, clinics were not required to change their processes. Research staff presented successful practices both in project meetings and during on-site clinic training, but those practices had to work in conjunction with the clinics’ various workflows. For example, the STOP CRC study could not control whether clinics had internal lab capacity to process fecal tests or used an outside lab. The intervention was designed to work in either situation.

Figure 1.

Summary of Clinic Workflows, FIT Testing

A patient who needs colorectal cancer screening can be identified during an office visit, during the pre-visit chart review (i.e. “scrub”), or using a gaps-in-care report. The new STOP CRC workflow uses a roster generated by the RWB tool, which can list all patients due for screening based on the US Preventive Services Task Force (USPSTF) guidelines [22,16]. The report can be generated by centralized office staff, medical assistants, or front desk staff; clinics used the workflow process to determine what staffing strategy worked best in their clinic.

In the STOP CRC intervention, the patient is mailed a FIT test, which requires a lab order for processing. The EHR was not set up for the lab order to be placed when the patient is not in the clinic (lab orders are typically requested during an in-person visit), and so each clinic needed to select a workflow for placing the lab orders for the mailed kits. The decision of how and when to place the lab orders, which depended on staffing and on whether tests would be processed in the clinic or sent to an outside laboratory, had the most dramatic effect on the process to get patients screened by mail.

Health centers had three major paths through the workflow. Some health centers (2 of 8) had the FIT test returned to the clinic and processed internally. While this was the most streamlined process, most health centers did not have that lab capacity or had an existing relationship with an external lab. Some health centers (2 of 8) used an external interface with a separate lab company, with the FIT test being mailed directly to that lab (by the patient). However, having patients mail kits directly to the lab was more labor intensive (because staff needed to place an order that would print a laboratory requisition to go in the mailed envelope) and could not always be managed due to staffing constraints. Therefore, many health centers (4 of 8) chose to have the test mailed or brought back to the clinic, where clinic staff then placed the order and printed a lab requisition (for the subset of completed kits) and sent the kit on to the external lab. Several health centers changed their initial decision about the process after they walked through the workflow diagrams with the research team.

Colonoscopy Initiated by Referral in Primary Care

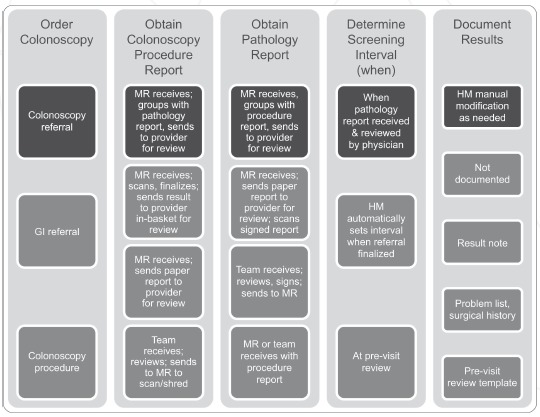

We developed a consolidated workflow for ordering and recording results of colonoscopies (see Figure 2). Figure 2 details the process for referring a patient to colonoscopy and capturing the result, with the highlighted boxes suggesting the most effective workflow.

Figure 2.

Summary of Clinic Workflows, Primary Care Referred Colonoscopy

Note: GI=Gastroenterologist; MR=Medical Records Department; HM=Health Maintenance

First, a provider orders a gastroenterology (GI) referral, a colonoscopy referral, or a colonoscopy procedure (in cases where they are affiliated with a hospital). When the patient completes the exam, the colonoscopy report is automatically generated and often forwarded to the referring clinician (the referral is received in the medical records department). Clinic staff update the chart to reflect the completion of the colonoscopy either when they receive a pathology report or conduct a pre-visit chart review. Staff members document the result in one or multiple places: the result note in free-text (5 of 8 health centers), the problem list or surgical history (4 of 8), the pre-visit template (4 of 8), or Health Maintenance (7 of 8). The research team recommended that, when the clinic receives the pathology report, clinic staff review it to determine the interval until the next screening and record it using Health Maintenance. As we describe below, we did not attempt to automate this step (using a 10-year default if the referral is completed) because it would have required provider review of the pathology report.

Historical Colonoscopy

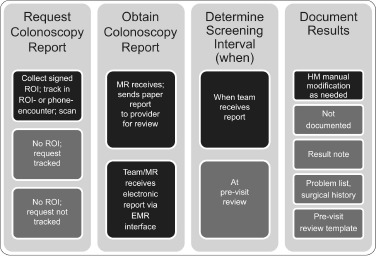

Several health centers chose to review past claims records, which STOP CRC made available to the health centers, to update past colonoscopies that had been performed but never recorded in patients’ medical records. Specifically, some health centers reviewed all charts prior to a mailing to ensure accurate colonoscopy information. In addition, some patients notified clinics of a prior colonoscopy when they got a mailed notice to complete a FIT test. Figure 3 consolidates the various workflows to obtain and record results of these historical colonoscopies.

Figure 3.

Summary of Clinic Workflows, Historical Colonoscopy

Note: ROI=Release of Information; MR=Medical Record Department; HM=Health Maintenance

To obtain historical colonoscopy records, some health centers require a signed release of information (7 of 8 health centers). At other health centers, a colonoscopy record can be obtained through an electronic interface with a local hospital (5 of 8).

Some health centers’ medical records offices receive paper reports (7 of 8) and others electronic reports (6 of 8). The research team recommended that, when the clinic receives the report, they review it immediately to determine the interval until the next screening and record it using Health Maintenance. (This contrasts with the practice of delaying review until the patient’s next appointment.)

Pragmatic Workflow and EHR Modifications

Identifying patients eligible for the STOP CRC intervention was one challenge the team addressed through EHR modifications and associated workflow design. We realized during study design that patient rosters needed to be dynamic and more closely linked to the actual clinical data than they were in the STOP CRC pilot, which used a one-time selection of eligible patients based on research-defined inclusion and exclusion criteria alone [20,14]. However, the primary care clinics lacked accurate data on colonoscopy completion. This gap occurs because colonoscopies often take place in facilities outside of the clinic, such as in specialty centers or hospitals, and the clinics’ EHRs do not interface with these facilities or integrate electronic documentation of their services. Such information is stored inconsistently and in a variety of places in medical records. We considered adding a discrete field to the EHR for prior colonoscopy screening, but instead opted to encourage using existing data fields embedded in Health Maintenance. To help providers enter this information, we trained them on the use of Health Maintenance, including adding modifiers to help medical staff enter details about colonoscopy and apply appropriate follow-up screening intervals. We also helped clinics manage the updating of this information through the Historical Colonoscopy workflow development.

The research team also developed a workflow specifically to help clinics clean up invalid addresses before letters were mailed to patients. Clinic staff could use the “Excluded Patients” roster to search for known missing addresses, or the Eligible Patients roster to sort and find bad addresses (including text fields like “Bad Address”, or “Returned Mail” or “Baptist Church”, etc.). One clinic decided to undergo a substantial clean-up of all their addresses before rolling out the new workflow for FIT testing. In another clinic, however, the workflow for indicating that a patient had an outdated address involved a field that was not date-stamped. As a result, this clinic could not use that roster to clean up their patient address list before rollout. Clinic managers opted to change their data entry procedure to conform to the suggested practice.
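As a rough illustration of this kind of address screening, the sketch below flags records whose address is missing or contains one of the free-text markers mentioned above. It is a minimal example under stated assumptions, not the roster logic used in the study: the `flag_address` function and the marker list are hypothetical, and a real clean-up would depend on how each clinic records address problems.

```python
from typing import Optional

# Free-text markers taken from the examples in the text; a clinic's own
# list would likely differ (assumption).
SUSPECT_MARKERS = ("bad address", "returned mail")


def flag_address(address: Optional[str]) -> str:
    """Classify an address field as 'missing', 'suspect', or 'ok'."""
    if address is None or not address.strip():
        return "missing"
    lowered = address.lower()
    if any(marker in lowered for marker in SUSPECT_MARKERS):
        return "suspect"
    return "ok"


# Hypothetical roster rows: (patient label, address field).
roster = [
    ("patient A", "123 Main St"),
    ("patient B", "RETURNED MAIL 3/2013"),
    ("patient C", ""),
]
needs_review = [name for name, addr in roster if flag_address(addr) != "ok"]
print(needs_review)
```

A text match of this kind only works where staff enter the marker consistently, which is the data entry practice the clinics were encouraged to adopt.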

As we noted above, sometimes the EHR did not accommodate the STOP CRC workflow easily. In particular, having patients send completed FIT kits directly to a lab is not a standard feature in the EHR, which is designed for in-person patient encounters producing a lab request. Because the FIT tests were being mailed to patients, clinics needed to create a “patient encounter” to enable patients to return a test directly to a lab without walking into the clinic in person. This patient encounter required all of the pertinent registration information, as if the patient were standing in the office while the clinician placed the order. This lab order process was so time consuming that the research team and clinics changed the initially proposed workflow to minimize the number of patients for whom they requested labs. They only requested lab orders for patients who returned completed FIT kits to the clinic. While the STOP CRC intervention was originally envisioned as a process that streamlined bulk mailing and lab ordering, the reality was that the EHR and billing software were not designed to handle orders without direct patient interaction. In addition, after the initial rollout, the EHR was modified to enable clinic staff to generate a group of lab orders at once to facilitate mailing FIT kits to the whole list of eligible patients. The need for these modifications became apparent when the clinic workflows were discussed.

Having a detailed understanding of workflows enabled research staff to tailor staff training to address relevant topics. While clinics used different processes for entering data within the EHR, we still wanted to introduce a few “successful practices” to health centers that could provide a more effective process. In addition, we customized the training so it was based on staffing models, such as which clinic personnel used the EHR to order colonoscopies or FIT testing.

Discussion

The STOP CRC research team designed a process for documenting clinic workflows related to implementing a centralized colon cancer screening protocol. We then used the workflows as OCHIN customized the EHR (i.e., Health Maintenance) and its associated reporting tool (i.e., Reporting Workbench) to define how the clinic staff interacted with the EHR. The STOP CRC intervention was designed to be embedded within an existing integrated EHR. We used the workflows to help anticipate and address unintended consequences of our intervention.

We would argue for the utility of creating workflows during a study such as this one. The documented colorectal cancer screening workflows were important for our research, but also informed clinics about screening gaps, missing EHR data, and improved processes at other clinics. The STOP CRC study built EHR tools that could work across health centers that differed in how and where they recorded colorectal-related data in the EHR. We customized the intervention at each health center, based on our review of existing and proposed workflows for delivering screening. The clinics in the pragmatic trial had enough variation to require the research team to somehow capture the universe of intervention workflow options. If we had just designed a single workflow based on our pilot clinic, the implementation phase would not have succeeded. We found that better practices emerged from everybody understanding everybody else’s workflow decisions.

Creating consensus on the workflow of a given clinic process, including standardizing data, requires a significant investment in clinic leadership and content experts’ time to integrate clinical knowledge, operational realities, and EHR functionality. If a team (clinical or research) does not understand clinic workflows, they risk applying a one-size-fits-all solution across clinical systems that might have substantially different needs. Understanding and documenting variations in clinic workflows is important to successfully build, use, and maintain robust EHR tools. Establishing workflow variations helped us recognize and communicate limitations of clinic data. We also used the workflows to identify limitations of a given approach and plan for the potential effects of the STOP CRC intervention on factors such as the quality of data capture, clinical care delivery processes, and staffing roles.

Establishing Successful Practices

While STOP CRC adapted to the existing workflows of a given health center, it nevertheless presented an opportunity to discuss successful practices with clinic staff, including the pros and cons of changing their current workflow. Establishing those successful practices within our workflow processes was, in fact, necessary to develop appropriate training. Some practices were readily adopted while others were modified or clinics chose not to implement them.

If an EHR tool is developed specifically to identify patients eligible for an intervention, then it is crucial to know where all relevant information might be stored. For example, the Historical Colonoscopy reports enabled clinics to clean up their data to ensure letters were not mailed to patients who should have been excluded. If clinicians could trust the accuracy of EHR tools, they could use them to more easily identify people eligible for a screening test or other medical intervention. Therefore, the research team shared a goal with the clinics to maximize coded data entry to optimize tracking and outreach.

Given the importance of excluding patients who had a colonoscopy in the past 9 years from lists of patients due for screening, we needed to capture the date of the last colonoscopy referral. Therefore, we recommended that clinicians use “colonoscopy referral” as a reason instead of “GI referral” because GI referrals can be made for a number of gastrointestinal issues (e.g., chronic abdominal pain). Some clinics intended to switch to this notation over time, but either could not do so immediately or could not retrospectively apply this change to prior colonoscopies. Another data variation was that some clinics decided to update Health Maintenance to postpone the screening of patients for whom fecal testing was not considered clinically appropriate (due to comorbidities). While STOP CRC cast a wide net for the mailings, some clinicians manually excluded patients on their panel from receiving cancer screening reminders. Other clinics opted to standardize the capture of colonoscopy in the surgical history field, where it could be captured for federal reporting purposes, such as the Uniform Data System. These different clinic choices were discussed among participating clinics and are important context for understanding where colonoscopy data are stored and retrieved in a particular system. The variation in where data are stored highlighted steps in a process where a work-around may have been introduced to meet an immediate need.
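To make the exclusion logic described above concrete, the following is a minimal Python sketch, not the STOP CRC tools' actual implementation. It assumes a hypothetical record structure (`PatientRecord`, `Referral`) and hypothetical field names. Only the 9-year window, the “colonoscopy referral” versus “GI referral” distinction, the surgical history field, and the Health Maintenance postponement come from the text. Other eligibility criteria are deliberately left out.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List, Optional

LOOKBACK_YEARS = 9  # exclusion window named in the text


@dataclass
class Referral:
    reason: str          # e.g., "colonoscopy referral" or "GI referral"
    referral_date: date


@dataclass
class PatientRecord:
    # Hypothetical fields; real EHR data models will differ.
    patient_id: str
    referrals: List[Referral] = field(default_factory=list)
    surgical_history_colonoscopy: Optional[date] = None
    screening_postponed: bool = False  # set via Health Maintenance


def years_before(day: date, years: int) -> date:
    """Return the same calendar day `years` earlier (Feb 29 falls back to Feb 28)."""
    try:
        return day.replace(year=day.year - years)
    except ValueError:
        return day.replace(year=day.year - years, day=28)


def last_colonoscopy_evidence(patient: PatientRecord) -> Optional[date]:
    """Latest date that specifically indicates a colonoscopy.

    A generic "GI referral" is ignored because it may concern other
    gastrointestinal issues, such as chronic abdominal pain.
    """
    dates = [
        r.referral_date
        for r in patient.referrals
        if r.reason.strip().lower() == "colonoscopy referral"
    ]
    if patient.surgical_history_colonoscopy is not None:
        dates.append(patient.surgical_history_colonoscopy)
    return max(dates) if dates else None


def due_for_mailed_fecal_test(patient: PatientRecord, today: date) -> bool:
    """True if nothing in the record excludes the patient from the mailing list."""
    if patient.screening_postponed:
        return False
    last = last_colonoscopy_evidence(patient)
    if last is None:
        return True
    return last < years_before(today, LOOKBACK_YEARS)
```

In this sketch, a patient whose only recent entry is a generic “GI referral” stays on the mailing list, which illustrates why the more specific referral reason matters for building accurate lists.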

Another important practice is ensuring that the GI procedure and pathology reports are reviewed and the screening interval is updated in Health Maintenance. The pathology and procedure reports for colonoscopy need to be reviewed frequently enough to ensure that the EHR tools accurately identify the list of patients who need further intervention prompts. Clinicians who already updated Health Maintenance when the pathology and procedure reports for colonoscopy arrived needed no further training on this procedure. Clinicians who delayed updates to Health Maintenance until the patient had a subsequent visit (e.g., pre-visit chart review), on the other hand, needed training in this component.
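One way to picture the review practice described above is as a work queue of charts where a colonoscopy report has arrived but Health Maintenance has not been updated since. The sketch below is illustrative only. The `ColonoscopyChart` structure and its field names are assumptions, not features of any particular EHR or of the STOP CRC tools.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class ColonoscopyChart:
    # Hypothetical fields for illustration.
    patient_id: str
    report_received: Optional[date]             # procedure/pathology report arrival
    health_maintenance_updated: Optional[date]  # last screening-interval update


def needs_health_maintenance_review(chart: ColonoscopyChart) -> bool:
    """True when a report has arrived and no later Health Maintenance update exists."""
    if chart.report_received is None:
        return False
    if chart.health_maintenance_updated is None:
        return True
    return chart.health_maintenance_updated < chart.report_received


def review_queue(charts: List[ColonoscopyChart]) -> List[ColonoscopyChart]:
    """Charts awaiting review, oldest report first."""
    pending = [c for c in charts if needs_health_maintenance_review(c)]
    return sorted(pending, key=lambda c: c.report_received)
```

A clinic that updates Health Maintenance as reports arrive would see an empty or short queue, while a clinic that waits for the next visit would see reports accumulate.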

A limitation that deserves mention is that all of our clinics were affiliated with a single network, OCHIN, whose staff provided initial training in the use of the EHR. Therefore, it is possible that we observed fewer variations than might be observed in health centers that use multiple EHR platforms. Even with less variation, however, our method may be an important model for ensuring comprehensive data capture [17,23]. Another limitation is that we are unable to provide a systematic quantitative assessment of the benefits of capturing and consolidating multi-site workflows before implementation. Instead, we have tried to provide several qualitative examples of benefits throughout this paper.

Conclusion

Our findings add to those of Johnson and colleagues. Specifically, we outline a process for describing data capture and use through workflow diagrams when multiple health systems are involved in shared research. We propose that teams use workflow diagrams not just to understand and describe the quality and limitations of the data, but also to define successful practices that standardize data collection and identify the eligible population. Finally, we argue that such information can help tailor training on new processes so that it is most effective and relevant to both research and practice. Understanding such workflows can enhance efforts to disseminate an evidence-based intervention across heterogeneous health systems. We present our methods for documenting the way that colorectal cancer screening information is gathered and presented to health care teams in the midst of clinical care improvement. By mapping and summarizing the workflows across clinic organizations, we were able to customize the implementation of new EHR tools at each site and plan for roll-out.

Our methods for producing workflow diagrams may help multiple health systems compare and discuss their workflows to design a better EHR-enabled intervention and select optimal practices for recording clinical information in the EHR.

Acknowledgements

Research reported in this publication was supported by the National Institutes of Health through the National Center for Complementary & Alternative Medicine under Award Number UH2AT007782 and the National Cancer Institute under Award Number 4UH3CA188640-02. The content is solely the responsibility of the authors and does not necessarily represent the official views of the National Center for Complementary & Alternative Medicine or the National Cancer Institute. We would like to thank Lin Neighbors and Lisa Fox, who provided graphics support for the figures in the article.

References

- 1. Hsiao, C.J. and Hing E., Use and characteristics of electronic health record systems among office-based physician practices: United States, 2001-2013. NCHS Data Brief, 2014(143): p. 1–8.

- 2. Cimino, J.J., Improving the electronic health record--are clinicians getting what they wished for? JAMA, 2013. 309(10): p. 991–2.

- 3. Vollmer, W.M., et al., Use of health information technology to improve medication adherence. Am J Manag Care, 2011. 17(12 Spec No.): p. Sp79–87.

- 4. Lakbala, P. and Dindarloo K., Physicians’ perception and attitude toward electronic medical record. Springerplus, 2014. 3: p. 63.

- 5. Centers for Medicare and Medicaid Services, EHR Meaningful Use Overview. 2012.

- 6. Shea, C.M., et al., Assessing organizational capacity for achieving meaningful use of electronic health records. Health Care Manage Rev, 2014. 39(2): p. 124–33.

- 7. Platt, R., Kass N.E., and McGraw D., Ethics, regulation, and comparative effectiveness research: time for a change. JAMA, 2014. 311(15): p. 1497–8.

- 8. Chen, E.S. and Sarkar I.N., Mining the electronic health record for disease knowledge. Methods Mol Biol, 2014. 1159: p. 269–86.

- 9. Johnson, E.K., et al., Use of the i2b2 research query tool to conduct a matched case-control clinical research study: advantages, disadvantages and methodological considerations. BMC Med Res Methodol, 2014. 14: p. 16.

- 10. Johnson, K.E., et al. How the Provenance of Electronic Health Record Data Matters for Research: A Case Example Using Systems Mapping. 2014; Available from: http://repository.academyhealth.org/egems/vol2/iss1/4.

- 11. Coronado, G.D., et al., Strategies and Opportunities to STOP Colon Cancer in Priority Populations: design of a cluster-randomized pragmatic trial. Contemp Clin Trials, 2014. 38(2): p. 344–9.

- 12. Johnson, K.E., et al. How the Provenance of Electronic Health Record Data Matters for Research: A Case Example Using System Mapping. 2014. 2, 1058.

- 13. Zai, A.H., et al., Applying operations research to optimize a novel population management system for cancer screening. J Am Med Inform Assoc, 2014. 21(e1): p. e129–35.

- 14. Zai, A.H., et al., Lessons from implementing a combined workflow-informatics system for diabetes management. J Am Med Inform Assoc, 2008. 15(4): p. 524–33.

- 15. Unertl, K.M., et al., Describing and modeling workflow and information flow in chronic disease care. J Am Med Inform Assoc, 2009. 16(6): p. 826–36.

- 16. Malhotra, S., et al., Workflow modeling in critical care: piecing together your own puzzle. J Biomed Inform, 2007. 40(2): p. 81–92.

- 17. Ramaiah, M., et al., Workflow and electronic health records in small medical practices. Perspect Health Inf Manag, 2012. 9: p. 1d.

- 18. Manion, F.J., et al., Leveraging EHR data for outcomes and comparative effectiveness research in oncology. Curr Oncol Rep, 2012. 14(6): p. 494–501.

- 19. Friedman, A., et al., A typology of electronic health record workarounds in small-to-medium size primary care practices. J Am Med Inform Assoc, 2014. 21(e1): p. e78–83.

- 20. Coronado, G.D., et al., Strategies and opportunities to STOP colon cancer in priority populations: pragmatic pilot study design and outcomes. BMC Cancer, 2014. 14: p. 55.

- 21. Coronado, G.D., et al., Using an Automated Data-driven, EHR-Embedded Program for Mailing FIT kits: Lessons from the STOP CRC Pilot Study. J Gen Pract (Los Angel), 2014. 2.

- 22. US Preventive Services Task Force, Screening for Colorectal Cancer, Topic Page. 2008.

- 23. Lanham, H.J., Leykum L.K., and McDaniel R.R., Jr., Same organization, same electronic health records (EHRs) system, different use: exploring the linkage between practice member communication patterns and EHR use patterns in an ambulatory care setting. J Am Med Inform Assoc, 2012. 19(3): p. 382–91.