Abstract

Physiological, behavioral, and psychological changes associated with neuropsychiatric illness are reflected in several related signals, including actigraphy, location, word sentiment, voice tone, social activity, heart rate, and responses to standardized questionnaires. These signals can be passively monitored using sensors in smartphones, wearable accelerometers, Holter monitors, and multimodal sensing approaches that fuse multiple data types. Connection of these devices to the internet has made large-scale studies feasible and is enabling a revolution in neuropsychiatric monitoring. Currently, evaluation and diagnosis of neuropsychiatric disorders rely on clinical visits, which are infrequent and take place outside the context of a patient’s home environment. Moreover, the demand for clinical care far exceeds the supply of providers. The growing prevalence of context-aware and physiologically relevant digital sensors in consumer technology could help address these challenges, enable objective indexing of patient severity, and inform rapid adjustment of treatment in real time. Here we review recent studies utilizing such sensors in the context of neuropsychiatric illnesses including stress and depression, bipolar disorder, schizophrenia, post-traumatic stress disorder, Alzheimer’s disease, and Parkinson’s disease.

1. Introduction

Neuropsychiatric illness comprises 13-16% of the total global burden of disease measured in disability-adjusted life years (DALYs) for all ages, which exceeds the burden of cardiovascular disease or cancer (Vigo et al. 2016). One in four people in the world will be affected by mental or neurological disorders at some point in their lives, yet only a small fraction of the 450 million people affected will receive treatment due to pervasive underdiagnosis, a lack of trained healthcare professionals, stigma, and other reasons (Sayers 2001). These illnesses are more prevalent among older people and will contribute even more to the overall global disease burden as life expectancy improves. The burden of mental and substance use disorders increased by 37% between 1990 and 2010, which for most disorders was driven by population growth and aging (Whiteford et al. 2013). The prevalence of dementia continues to rise, and by 2050 an estimated 13.8 million Americans will have Alzheimer’s disease (AD; see Table A1 for definitions of abbreviations and acronyms used in this review) or another dementia. In 2016 in the United States, total payments for healthcare, long-term care, and hospice services for people 65 years or older with dementia were estimated to be $230.1 billion, and caregivers provided 18.2 billion hours of unpaid assistance (Alzheimer’s Association 2016). The lack of effective interventions for neuropsychiatric illness is partially due to limited understanding of underlying mechanisms, but also to the under-distribution of medications and human resources in low- and middle-income countries, in which disease burden measured in DALYs is disproportionately high (Collins et al. 2011).

Autonomic nervous system (ANS) dysfunction occurs in neuropsychiatric illness, resulting in altered heart rate (HR), heart rate variability (HRV), galvanic skin response (skin conductance), skin temperature, and respiratory rate (Draghici et al. 2016; Karemaker 2017). Because HR sensors are common in wearable devices, and a substantial literature explores HRV as a marker of ANS modulation, we review studies that utilize HR and HRV measurements. Note that HRV is not a single metric; rather, it encompasses several families of metrics: time-domain (Stein et al. 1994; Kleiger et al. 2005; Bauer et al. 2017), frequency-domain (Akselrod et al. 1981; Montano et al. 2009), and complexity measures such as entropy (Costa et al. 2002). Changes in these metrics have been reported in patients with stress (Thayer et al. 2012), major depressive disorder (MDD; Kemp et al. 2010), bipolar disorder (BD; Henry et al. 2010), schizophrenia (Chang et al. 2009), post-traumatic stress disorder (PTSD; Liddell et al. 2016), Alzheimer’s disease (Femminella et al. 2014), and Parkinson’s disease (PD; Maetzler et al. 2013).
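To make the time-domain family concrete, the sketch below computes three widely used time-domain HRV metrics (SDNN, RMSSD, and pNN50) and mean HR from a series of NN (normal-to-normal) intervals. It assumes artifact-free intervals in milliseconds; the function name and the input values are hypothetical, and frequency-domain and entropy measures are not covered here.

```python
import math
import statistics


def hrv_time_domain(nn_ms):
    """Time-domain HRV metrics from consecutive NN intervals in milliseconds."""
    if len(nn_ms) < 3:
        raise ValueError("need at least three NN intervals")
    # Successive differences between adjacent beats
    diffs = [b - a for a, b in zip(nn_ms, nn_ms[1:])]
    return {
        # Mean heart rate in beats per minute (60,000 ms per minute)
        "mean_hr_bpm": 60000.0 / statistics.mean(nn_ms),
        # SDNN: sample standard deviation of all NN intervals (overall variability)
        "sdnn_ms": statistics.stdev(nn_ms),
        # RMSSD: root mean square of successive differences (short-term variability)
        "rmssd_ms": math.sqrt(sum(d * d for d in diffs) / len(diffs)),
        # pNN50: percentage of successive differences larger than 50 ms
        "pnn50_pct": 100.0 * sum(abs(d) > 50 for d in diffs) / len(diffs),
    }


# Hypothetical NN intervals (ms), not data from any cited study
print(hrv_time_domain([800, 810, 790, 860, 800]))
```

In practice these metrics are computed over fixed windows (for example five-minute segments) after ectopic beats and artifacts have been removed, since a single spurious interval can dominate RMSSD.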

Neuropsychiatric illness is also associated with alterations in behavior, especially physical movements and social routine. Patients with MDD, BD, or schizophrenia can be significantly more sedentary than age- and gender-matched healthy controls (Vancampfort et al. 2017). Diminished motor function, the presence of tremor, and coordination issues also occur in movement disorders such as Parkinson’s disease. On the other hand, locomotor agitation can be a sign of mania or psychosis, which may be part of the presentation of schizophrenia or BD. These abnormalities are detectable by smartphones and wearable devices with accelerometers or global positioning system (GPS) sensors. Because modern smartphones and most wearables marketed to consumers for fitness purposes (which are often used in academic studies for healthcare applications, including many referenced here) have accelerometers, we also review studies that analyze locomotor activity. Behavior can also be inferred from social activity data, such as phone calls, text messages, social media use, and web browser history. Importantly, passive monitoring via digital sensors can yield information about a patient’s physiology and behavior during the 99% of the time they are not seeing a clinician, during which they take actions and are influenced by their environment in ways that profoundly impact their health (Asch et al. 2012). Together these data could give us a richer understanding of the day-to-day variability of neuropsychiatric illness, enable the monitoring of patient status before (rather than after) symptoms reach a level warranting intervention, and reduce the biases and inaccuracy intrinsic to subjective questionnaires (Karow et al. 2008; Copeland et al. 2017).

Monitoring is distinct from diagnosis. The latter is performed by clinicians who take a comprehensive history, perform a physical exam, utilize questionnaires and surveys, order and interpret laboratory tests and imaging, and exclude alternate diagnoses. Clinical diagnoses often form the ground truth for subsequent monitoring efforts. For example, a machine learning algorithm can associate patterns in digital sensor data – such as alterations in heart rate variability or locomotor activity – with questionnaire results indicating severity, or a clinical diagnosis. Passive monitoring could augment diagnosis by providing clinicians with additional information, capture behavioral and biological variation not accounted for by current diagnostic categories, and enable the discovery of novel illness phenotypes.

Digital sensors in smartphones and wearables generate vast amounts of high-frequency, high-dimensional time series data that require new methods of analysis. In contrast, data used by clinicians – self-reported symptoms, lab tests, and vital signs – are often subjective, infrequently sampled, and small-scale. Univariate significance testing and regression models are commonly used to perform hypothesis testing on these traditional data, but such methods are poorly suited for the analysis of data from digital sensors. Rather, approaches from signal processing, information theory, and complexity science are needed. Features of interest in digital sensor data include statistical moments (e.g. the mean or variance of a signal), time-domain characteristics, frequency-domain characteristics such as power spectral density attributes or wavelet coefficients, and complexity measures such as entropy (Johnson et al. 2016). These features are used to train machine learning algorithms that perform regression (prediction of continuous values) and classification of outputs such as disease phenotype or questionnaire score (Obermeyer et al. 2016). A machine learning algorithm generalizes well when it accurately classifies not only the data used to train it but also novel input from an external data set not used for training. Of note, univariate statistical significance does not guarantee the predictivity or clinical utility of a biomarker (Lo et al. 2015). Methods focusing on p-values can miss useful “weak features”: those that do not differ significantly by output class when assessed via univariate statistical tests, yet can serve as input to a multivariate machine learning algorithm that achieves high accuracy.
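The feature categories listed above can be illustrated with a short sketch. The code below, a minimal example assuming a uniformly sampled 1-D signal and a plain periodogram as the power spectral density estimate (the cited studies may use Welch's method, wavelets, or other estimators), computes two statistical moments, the dominant frequency, and a normalized spectral entropy. The function name, sampling rate, and test signals are hypothetical.

```python
import numpy as np


def extract_features(x, fs):
    """Illustrative features for a 1-D sensor signal sampled at fs Hz."""
    x = np.asarray(x, dtype=float)
    feats = {"mean": float(x.mean()), "variance": float(x.var())}
    # Periodogram of the mean-removed signal: a simple power spectral density estimate
    xc = x - x.mean()
    psd = np.abs(np.fft.rfft(xc)) ** 2 / (fs * len(xc))
    freqs = np.fft.rfftfreq(len(xc), d=1.0 / fs)
    psd, freqs = psd[1:], freqs[1:]  # drop the zero-frequency (DC) bin
    feats["dominant_freq_hz"] = float(freqs[np.argmax(psd)])
    # Spectral entropy: Shannon entropy of the normalized PSD, scaled to [0, 1]
    p = psd / psd.sum()
    nz = p[p > 0]
    feats["spectral_entropy"] = float(-(nz * np.log2(nz)).sum() / np.log2(len(p)))
    return feats


fs = 50.0  # hypothetical sampling rate (Hz)
t = np.arange(500) / fs  # 10 s of samples
sine = np.sin(2 * np.pi * 2.0 * t)  # regular, rhythmic signal
noise = np.random.default_rng(0).normal(size=t.size)  # irregular signal
print(extract_features(sine, fs))
print(extract_features(noise, fs))
```

A regular rhythm concentrates power in one frequency bin and yields a spectral entropy near zero, whereas white noise spreads power across bins and yields a value near one; vectors of such features would then be passed to a classifier or regressor.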

In this review we summarize recent studies utilizing smartphones, wrist-worn wearables, and physiological patches for passive monitoring of several prevalent and debilitating neuropsychiatric illnesses: stress, MDD, BD, schizophrenia, PTSD, AD, and PD (Table A3). Sensors used in these studies measure accelerometry, HR, GPS, phone calls, SMS, and more. Examples of aberrations in physiology and behavior that are detectable by these sensors and associated with the above illnesses are provided in Table 1. Particular emphasis is placed on studies that classify illness status or estimate scores from neurological and psychiatric surveys, scales, and questionnaires (summarized in Table A2). We discuss the challenges, limitations, and potential of using these technologies for neuropsychiatric care. Related works and ongoing studies that have yet to yield results but are promising in terms of scope and scale are referenced in these latter sections (Table A4). We do not review smartphone applications (“apps”) designed to deliver interventions such as cognitive behavioral therapy, provide general information to patients about their illness, or accompany existing care delivery paradigms (e.g. a mobile version of a patient portal). Furthermore, a thorough technical review of the signal processing, information theory, and machine learning used in these studies is beyond the scope of this review. We also do not cover the topic of sleep, a key aspect of neuropsychiatric conditions that has been extensively reviewed elsewhere (Krystal 2012; Behar et al. 2013; Roebuck et al. 2014; Zinkhan et al. 2016).

Table 1.

Aberrations in physiology and behavior associated with neuropsychiatric illness that are detectable by sensors in smartphones and wearables

| Illness | Accelerometry | HR | GPS | Calls & SMS |
|---|---|---|---|---|
| Stress & depression | Disruptions in circadian rhythm and sleep | Emotion mediates vagal tone, which manifests as altered HRV | Irregular travel routine | Decreased social interactions |
| Bipolar disorder | Disruptions in circadian rhythm and sleep, locomotor agitation during manic episode | ANS dysfunction via HRV measures | Irregular travel routine | Decreased or increased social interactions |
| Schizophrenia | Disruptions in circadian rhythm and sleep, locomotor agitation or catatonia, diminished overall activity | ANS dysfunction via HRV measures | Irregular travel routine | Decreased social interactions |
| PTSD | Inconclusive evidence | ANS dysfunction via HRV measures | Inconclusive evidence | Decreased social interactions |
| Dementia | Disruptions in circadian rhythm, diminished locomotor activity | Inconclusive evidence | Wandering away from home | Decreased social interactions |
| Parkinson’s disease | Gait impairment, ataxia, dyskinesia | ANS dysfunction via HRV measures | Inconclusive evidence | Voice features can indicate vocal impairment |

2. Smartphones

Smartphones are globally ubiquitous, owned by 72% of Americans and 3 billion people worldwide, and are projected to reach a global total of over 5 billion people by 2030 (Poushter 2016). Importantly, studies in the USA, United Kingdom, Canada, and India have found that smartphone ownership is not significantly lower among people with serious mental health conditions than among the general population, and ownership by these individuals is projected to increase, mirroring the trend seen in the general population (Torous et al. 2014; Firth et al. 2016). Additionally, people tend to keep their phones with them and check them between 46 and 85 times per day (Andrews et al. 2015; Eadicicco 2016). Smartphone data thus reflect social and behavioral manifestations of neuropsychiatric illnesses in the context of daily life rather than in an artificial clinical setting (Insel 2017). For example, GPS location data measured on smartphones can be used to estimate behavioral attributes such as the percentage of time a subject spends in certain locations (fig. 1). By evaluating the time of day, day of week, and amount of time spent in each location, the purpose of each location can be inferred, e.g. work versus home. Additionally, social interactions in the form of calls and text messages can be monitored and quantified (fig. 2). Geolocation, social network activity, and other attributes reflect behavior and may differ in subjects with neuropsychiatric illness compared to healthy controls. Several investigators have built smartphone apps for collecting sensor and usage data, including Automated Monitoring of Symptom Severity (AMoSS; Palmius et al. 2014), Purple Robot (Schueller et al. 2014), and Beiwe (Torous et al. 2016).
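The idea of inferring a location's purpose from time of day and day of week can be sketched as follows. This is a minimal heuristic, not the method of any cited study: it assumes GPS fixes have already been grouped into place clusters and sampled at a fixed interval, and the night (00:00 to 05:59) and office-hour (weekdays, 09:00 to 16:59) windows, the function name, and the example week are all hypothetical.

```python
from collections import Counter


def label_places(samples):
    """Heuristic home/work labelling from regularly sampled visits.

    samples: iterable of (place_id, weekday, hour), weekday 0=Mon..6=Sun, hour 0-23,
    one entry per fixed sampling interval (so counts are proportional to time).
    """
    samples = list(samples)
    visits = Counter(place for place, _, _ in samples)
    share = {place: n / len(samples) for place, n in visits.items()}
    # Home: the place occupied most often overnight, else the most visited place
    night = Counter(place for place, _, hour in samples if hour < 6)
    home = (night or visits).most_common(1)[0][0]
    # Work: the most visited non-home place during weekday office hours
    office = Counter(place for place, day, hour in samples
                     if day < 5 and 9 <= hour < 17 and place != home)
    work = office.most_common(1)[0][0] if office else None
    return share, home, work


# Hypothetical week sampled hourly: place "B" on weekdays 09:00-16:59, "A" otherwise
week = [("B" if day < 5 and 9 <= hour < 17 else "A", day, hour)
        for day in range(7) for hour in range(24)]
share, home, work = label_places(week)
print(home, work, round(share["B"], 3))  # A B 0.238
```

Night-time occupancy is used for home rather than total time so that, as in the Figure 1 caption, a person who spends more hours at work than at home is still labelled correctly.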

Figure 1.

Geolocation data measured via smartphone can track time spent at modal locations. The x- and y-axes are distance from the most commonly visited location. The z-axis is the percentage of total time spent in a given location, with darker orange encoding a higher percentage and a lighter yellow encoding a lower percentage. The dark orange peak at the origin where the individual spends the most time is assumed to be home, and the second-largest peak (z-axis value) where the individual spends the next most time is assumed to be work, or vice-versa if the individual spends more time at work than home.

Figure 2.

Social network activity measured via smartphone can identify mood and illness. The y-axis encodes unique pairings of sender and recipient IDs. The x-axis encodes time. The radius of each colored dot is proportional to the number of calls and text messages in one day. Interactions from a sender-recipient pairing have the same color over time, i.e. all red dots with the same height on the y-axis represent interactions between the same two unique individuals. Qualitatively, (a) healthy controls demonstrate more regular amounts of interaction over time with their social contacts compared to (b) subjects with bipolar disorder who alternate bouts of high and low levels of interaction.

Smartphones can also be used to administer validated questionnaires for evaluating quality of life and mental well-being (Palmius et al. 2017). Although self-reported questionnaires are prone to recall, social desirability, and confirmation biases, they provide a pragmatic best estimate of an individual’s mental status and can achieve results comparable to clinician-administered surveys (Spitzer et al. 2012; Ebner-Priemer et al. 2006; Martel 2008; Solhan et al. 2009). The inference of mental health questionnaire results from digital sensor data is a common approach in the literature and could be useful for monitoring the status of subjects who struggle with adherence or have impaired cognition and executive decision-making capacity (Table A2; Mohr et al. 2016; Tsanas et al. 2016; Barrett et al. 2017; Aung et al. 2017). In this section we review recent studies using smartphones to monitor neuropsychiatric illnesses; work that may be related but also involves analysis of heart rate data is reviewed in later sections.

2.1. Stress and depression

MDD is a debilitating disease characterized by depressed mood, diminished interests, impaired cognitive function, vegetative symptoms, disturbed sleep, and altered appetite (Otte et al. 2016). The lifetime incidence of MDD in the United States is 12% in men and 20% in women (Belmaker et al. 2008). Affecting up to 300 million people worldwide, MDD is the leading cause of disease burden in middle- and high-income countries. Individuals with MDD have higher medical costs, exacerbated medical conditions, and significantly increased rates of mortality. Compounding this severity, MDD is a heterogeneous disorder with a highly variable course, an inconsistent response to pharmacological treatment, and no established mechanism. Passively monitoring the movement, location, social activity, and voice of patients with MDD could enable continuous assessment of mental well-being and inform context-appropriate clinical responses.

In a study of depression in 48 college undergraduates, Wang et al. used Android smartphones to monitor accelerometry, audio, ambient light, location, and device use over ten weeks (Wang et al. 2014). Depression was simultaneously measured using the PHQ-9, a standardized nine-question survey that has been shown to correlate with depression (Kroenke et al. 2001). In addition to supporting criteria-based diagnoses of depressive disorders, the PHQ-9 has been shown to be a reliable and valid measure of depression severity (Martin et al. 2006). A PHQ-9 score ≥10 yielded a sensitivity of 0.88 and a specificity of 0.88 for major depression in primary care and obstetrics-gynecology populations, and a sensitivity of 0.77 (0.71-0.84) and a specificity of 0.94 (0.90-0.97) in a meta-analysis, although the positive predictive value in an unselected primary care population was only 0.59 (Wittkampf et al. 2007). Students who slept less, held fewer conversations, self-reported higher stress, or interacted less during the day were more likely to be depressed (p < 0.05). At the start of the term, students showed high positive affect and conversation levels, low stress, and healthy sleep and daily activity patterns. As the term progressed, self-reported stress rose significantly, while positive affect, sleep, conversation, and activity decreased. However, this study did not train a classifier, nor was cross-validation used, so the results may not generalize to out-of-sample data. Furthermore, p-values do not guarantee diagnostic accuracy or clinical utility (Wasserstein et al. 2016).
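Why a screen with sensitivity and specificity of 0.88 can still have a modest positive predictive value follows from Bayes' rule: PPV depends on the prevalence of the condition in the screened population. The sketch below scores the PHQ-9 (nine items, each scored 0 to 3, total 0 to 27), applies the ≥10 cutoff mentioned above, and evaluates PPV at several prevalences. The item responses and prevalence values are hypothetical, not taken from the cited studies.

```python
def phq9_total(items):
    """Sum of the nine PHQ-9 item scores, each 0-3 (total range 0-27)."""
    if len(items) != 9 or any(i not in (0, 1, 2, 3) for i in items):
        raise ValueError("expected nine item scores in 0..3")
    return sum(items)


def ppv(sensitivity, specificity, prevalence):
    """Positive predictive value from Bayes' rule."""
    true_pos = sensitivity * prevalence
    false_pos = (1 - specificity) * (1 - prevalence)
    return true_pos / (true_pos + false_pos)


# Hypothetical item responses; a total of 10 or more screens positive
screen_positive = phq9_total([2, 1, 2, 1, 1, 1, 1, 1, 0]) >= 10

for prev in (0.05, 0.10, 0.20, 0.50):  # hypothetical prevalences
    print(prev, round(ppv(0.88, 0.88, prev), 2))
```

With sensitivity and specificity both fixed at 0.88, PPV is 0.88 when half the screened population is depressed but falls to roughly 0.45 at 10% prevalence, which is why PPV in an unselected primary care population can be much lower than sensitivity or specificity.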

Burns et al. developed the “Mobilyze!” app to collect GPS, ambient light, recent calls, and other data (Burns et al. 2011). A companion website included feedback graphs illustrating correlations between patients’ self-reported states, as well as didactics and tools teaching patients behavioral activation concepts. Mobilyze! was tested for eight weeks in a cohort of seven adult patients with MDD who completed treatment. The Mini-International Neuropsychiatric Interview was used to characterize co-morbid anxiety disorders at baseline, the PHQ-9 was used to evaluate self-reported MDD symptom severity, and the GAD-7 was used to evaluate general anxiety symptom severity. In the Mobilyze! study, record-wise ten-fold cross-validation was performed, although we note that record-wise cross-validation can overestimate predictive accuracy compared to subject-wise cross-validation (Saeb et al. 2016b). Decision trees were used to estimate location, activity, social environment, and internal states. Logistic regression fit with generalized estimating equations was used to estimate the binary outcome (presence or absence of an MDD diagnosis) in the held-out set of sensor values. Categorical states such as location, isolation, and conversational status were estimated with mean accuracies ranging from 60% to 90%. However, the decision tree models estimated out-of-sample scale-based states such as mood no better than chance, and the results of the binary classification of MDD status were not reported.
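The difference between record-wise and subject-wise cross-validation can be shown directly. In record-wise splitting, records from the same person land in both the training and test folds, so a model can partly recognize the individual rather than the illness state. The sketch below, using hypothetical helper names and synthetic subject IDs, builds both kinds of folds and counts how many folds leak subjects between training and test data.

```python
import random


def record_wise_folds(n_records, k, seed=0):
    """Shuffle individual records into k folds, ignoring which subject they came from."""
    idx = list(range(n_records))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def subject_wise_folds(subject_ids, k, seed=0):
    """Assign every record of a subject to the same fold, so test subjects are unseen."""
    subjects = sorted(set(subject_ids))
    random.Random(seed).shuffle(subjects)
    fold_of = {s: i % k for i, s in enumerate(subjects)}
    folds = [[] for _ in range(k)]
    for i, s in enumerate(subject_ids):
        folds[fold_of[s]].append(i)
    return folds


def leaky_folds(subject_ids, folds):
    """Number of folds whose test subjects also contribute records to training."""
    count = 0
    for test in folds:
        test_set = set(test)
        test_subjects = {subject_ids[i] for i in test}
        train_subjects = {s for i, s in enumerate(subject_ids) if i not in test_set}
        count += bool(test_subjects & train_subjects)
    return count


# Hypothetical data: 10 subjects with 20 records each
ids = [s for s in "ABCDEFGHIJ" for _ in range(20)]
print(leaky_folds(ids, record_wise_folds(len(ids), 5)))  # record-wise: folds leak subjects
print(leaky_folds(ids, subject_wise_folds(ids, 5)))  # subject-wise: 0
```

Libraries such as scikit-learn offer group-aware splitters for the same purpose; the plain-Python version here only makes the splitting rule explicit.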

Canzian et al. developed the MoodTraces app for the Android operating system to collect GPS data and answers to eight daily questions from the PHQ-8 depression test (Canzian et al. 2015). This study evaluated 28 subjects from the general population rather than a cohort of people diagnosed with depression. Mobility features were extracted from GPS data recorded over a period of 14 days and used to train a support vector machine (SVM) to predict PHQ score changes from an individual’s own past data, i.e. using individualized models. A positive label was defined as a change in PHQ score greater than one standard deviation of that subject’s normal PHQ score, and a negative label as a change less than or equal to one standard deviation. Leave-one-out cross-validation was performed. Using a horizon window (the number of days between the last day of data collection and the day of the subsequently predicted PHQ score change) of 0 days resulted in a sensitivity of 0.71 and a specificity of 0.87. Interestingly, increasing the horizon window did not dramatically reduce the sensitivity and specificity. This study suggests that personalized models, rather than general ones, should be used to monitor the depressive state of an individual from their mobility traces. However, this study did not survey subjects diagnosed with MDD, nor did it use other digital sensor data from the smartphone.
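The individualized labelling and horizon window described above can be sketched as follows. This is an illustration under stated assumptions, not the authors' code: a subject's "normal" variability is assumed to be the population standard deviation of all their PHQ scores, changes are taken between consecutive scores as absolute values, and the helper names and data are hypothetical.

```python
import statistics


def label_phq_changes(scores):
    """1 if a change between consecutive PHQ scores exceeds the subject's own SD, else 0."""
    sd = statistics.pstdev(scores)  # assumed definition of the subject's normal variability
    return [int(abs(curr - prev) > sd) for prev, curr in zip(scores, scores[1:])]


def pair_with_horizon(features_by_day, labels_by_day, horizon):
    """Pair features from a window ending on day d with the label observed on day d + horizon."""
    return [(features_by_day[d], labels_by_day[d + horizon])
            for d in sorted(features_by_day) if d + horizon in labels_by_day]


scores = [5, 6, 5, 12, 6]  # hypothetical PHQ-8 series for one subject
print(label_phq_changes(scores))  # [0, 0, 1, 1]

# Hypothetical mobility feature windows and labels keyed by study day
features = {0: "f0", 1: "f1", 2: "f2"}
labels = {1: 0, 2: 1, 3: 1}
print(pair_with_horizon(features, labels, horizon=1))
```

Because both the threshold and the training pairs come from one person's history, each subject effectively gets their own model, which is the sense in which the study's models are personalized.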

Ben-Zeev et al. evaluated behavior and mental health in 47 adolescent subjects using Android smartphones and an app developed in-house. GPS, accelerometry, ambient light and sound, and microphone data were recorded (Ben-Zeev et al. 2015). Geospatial activity, sleep duration, and variability in geospatial activity were associated with daily stress levels assessed via the 10-item Perceived Stress Scale (p < 0.05 for all). Sensor-derived speech duration, geospatial activity, and sleep duration were associated with changes in depression assessed via the PHQ-9, and sensor-derived kinesthetic activity was associated with loneliness. However, cross-validation was not performed, and features were assessed on the basis of statistical significance rather than classifier predictivity.

Saeb et al. used Android smartphones and an app developed in-house to evaluate 40 adult subjects for depressive symptoms over two weeks (Saeb et al. 2015). Of these, 28 subjects had sufficient sensor data to analyze. Several features extracted from GPS and phone usage data were related to depressive symptom severity. The lower the location entropy (i.e. more time spent in fewer locations) or the lower the regularity of circadian rhythm, the more likely a subject was to be depressed. Other predictive features included phone usage duration and phone usage frequency. Elastic net regularization was performed to reduce overfitting, 1000 bootstrapped sets of features and their corresponding PHQ-9 scores were created, and leave-one-out cross-validation was performed. A classifier trained on these data to distinguish subjects with PHQ-9 scores greater than or equal to 5 from those with PHQ-9 scores less than 5 achieved an accuracy of 86.5%. Similar findings were reported in a subsequent study, in which location features from weekend data predicted depression better than location features from weekday data, even weeks in advance (Saeb et al. 2016a). These results suggest that the relationship between depression and movement is stronger on non-workdays than on workdays, when behavior is driven more by social expectations. This finding highlights the importance of social context, time scale, and routine in the study of behavioral manifestations of mental illness.
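Location entropy quantifies how evenly a person's time is spread across the places they visit. A minimal sketch, assuming time has already been aggregated per location cluster, computes the Shannon entropy of the time shares and a normalized version divided by its maximum possible value, the logarithm of the number of visited clusters. The function name and hours are hypothetical.

```python
import math


def location_entropy(time_per_cluster):
    """Shannon entropy (nats) of the share of time spent in each location cluster,
    plus the entropy normalized by its maximum, log(number of visited clusters)."""
    total = sum(time_per_cluster)
    shares = [t / total for t in time_per_cluster if t > 0]
    entropy = -sum(p * math.log(p) for p in shares)
    normalized = entropy / math.log(len(shares)) if len(shares) > 1 else 0.0
    return entropy, normalized


# Hypothetical hours per cluster over two weeks
print(location_entropy([300, 20, 16]))  # time concentrated in one place: low entropy
print(location_entropy([112, 112, 112]))  # time spread evenly: maximal entropy
```

Lower values correspond to the pattern associated with depressive symptoms in the study above: most time spent in few places.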

2.2. Bipolar disorder

BD is a mental illness that can present with mania, hypomania, and major depression; manic episodes are characterized by significant changes in activity, energy, mood, behavior, sleep, and cognition (Belmaker et al. 2008). Patients with BD commonly manifest co-morbid psychiatric disorders, such as anxiety disorders and substance use disorders. Psychotic features such as delusions, hallucinations, and disorganized thinking and behavior can also occur during manic, major depressive, and mixed episodes.

Effective mood forecasting – the prediction of future mood states using current and past data – could support management of BD and provide early warning signs of relapse. Currently, symptoms are monitored using paper diaries or by asking patients to recall their mood during a clinical visit. Thus, the data available to model mood in BD are sparse and may suffer from recall bias. Moore et al. used time series regression to forecast the next week’s depression ratings from self-rated mood data obtained via SMS (Moore et al. 2012). One method used was Gaussian process regression, a Bayesian nonparametric model in which a Gaussian process prior over the regression function is assumed. Because the prior process is assumed to have zero mean, the time series must be centered before forecasting. The algorithm then finds the optimal values of the hyperparameters θ and the noise variance by maximizing the marginal likelihood p(y | x, θ). The predictive equation gives the forecast mean, to which the original signal bias (the mean removed during centering) is added back to estimate the mood rating. Simpler forecasting methods based on exponential smoothing were also used for comparison. In total, 153 patients with BD participated in the study; 100 patients provided at least 23 responses each, and their data were used in the final analysis. Depression was measured via the QIDS-SR16, and severity of mania was quantified using the ASRM. Questionnaires were administered weekly for up to four years. The mood time series varied widely in length, response interval, and stationarity. Out-of-sample forecasting was performed to estimate expected prediction error. Gaussian process regression did not outperform the simpler exponential smoothing approaches. This is not surprising: fitting a noisy or undersampled time series tends to drive the smoothing coefficient to zero, and most of the time series from these patients were noisy or lacked serial correlation. The authors concluded that effective depression forecasts cannot be made with this method over the period of a week.
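The two forecasting approaches can be sketched side by side. The code below is a minimal illustration, not the authors' implementation: it assumes a squared-exponential (RBF) kernel with unit signal variance, selects the length scale and noise variance from a small hypothetical grid by maximizing the log marginal likelihood, centers the series and adds the bias back as described above, and fits the simple exponential smoothing coefficient by grid search on one-step-ahead squared error.

```python
import numpy as np


def rbf(a, b, length, signal_var):
    """Squared-exponential kernel between two 1-D arrays of time points."""
    d = a[:, None] - b[None, :]
    return signal_var * np.exp(-0.5 * (d / length) ** 2)


def gp_fit_predict(t, y, t_new, length, noise_var, signal_var=1.0):
    """Zero-mean GP: centre y, predict the mean, then add the removed bias back."""
    t, y, t_new = (np.asarray(v, dtype=float) for v in (t, y, t_new))
    bias = y.mean()
    yc = y - bias  # centring, because the GP prior has zero mean
    K = rbf(t, t, length, signal_var) + noise_var * np.eye(len(t))
    L = np.linalg.cholesky(K)
    alpha = np.linalg.solve(L.T, np.linalg.solve(L, yc))  # K^{-1} yc
    # log p(y | x, theta) = -1/2 y^T K^{-1} y - 1/2 log|K| - n/2 log(2 pi)
    log_ml = -0.5 * yc @ alpha - np.log(np.diag(L)).sum() - 0.5 * len(t) * np.log(2 * np.pi)
    mean = rbf(t_new, t, length, signal_var) @ alpha + bias
    return mean, log_ml


def gp_forecast(t, y, t_new, lengths=(1.0, 2.0, 4.0, 8.0), noises=(0.1, 0.5, 1.0)):
    """Choose (length, noise) by maximizing the log marginal likelihood on a grid."""
    best = max(((l, s) for l in lengths for s in noises),
               key=lambda p: gp_fit_predict(t, y, t_new, *p)[1])
    return gp_fit_predict(t, y, t_new, *best)[0]


def ses_forecast(y, alphas=tuple(i / 20 for i in range(21))):
    """Simple exponential smoothing; alpha chosen by one-step-ahead squared error."""
    def run(alpha):
        level, sse = y[0], 0.0
        for obs in y[1:]:
            sse += (obs - level) ** 2  # error of forecasting obs with the current level
            level = alpha * obs + (1 - alpha) * level
        return level, sse
    best_alpha = min(alphas, key=lambda a: run(a)[1])
    return run(best_alpha)[0], best_alpha


weeks = np.arange(12)  # hypothetical weekly time points
ratings = [8, 9, 7, 10, 9, 8, 11, 9, 8, 10, 9, 8]  # hypothetical weekly depression ratings
print(gp_forecast(weeks, ratings, [12]))
print(ses_forecast(ratings))
```

With a smoothing coefficient of zero, the exponential smoothing forecast never updates and reduces to a constant level, so on series without serial correlation neither method has much structure to exploit.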

Faurholt-Jepsen et al. conducted the “MONitoring, treAtment and pRediCtion of bipolAr disorder episodes” (MONARCA) study, in which software for Android smartphones was used to monitor subjective and objective manifestations of BD alongside treatment adherence in a bidirectional feedback loop between patients and providers (Maria et al. 2013). Subjective data included mood/irritability, sleep, and alcohol use. Objective data included speech, social, and physical activity. Data were recorded from 17 patients with BD for three consecutive months (Maria et al. 2014). Patients were rated every two weeks using the HDRS-17 and YMRS. Depressive symptoms correlated with less movement and fewer outgoing calls.

The MONARCA study was continued by Faurholt-Jepsen et al. in a larger cohort of 61 patients with BD. A linear mixed-effects regression model was used to estimate relationships between independent and dependent variables while accounting for within-individual and between-individual variation over time (Faurholt-Jepsen et al. 2015). The regression analysis was also adjusted for age and sex as possible confounders. Because the goal of the study was to estimate model coefficients rather than to classify subjects accurately or estimate model generalizability, the regression model was not evaluated with cross-validation or bootstrapping. The duration of incoming and outgoing calls per day correlated with depressive symptoms. Additionally, the number and duration of incoming calls per day correlated with manic symptoms. Self-reported mood and activity data correlated negatively with HDRS-17 scores and positively with YMRS scores. Self-reported sleep quantity correlated negatively with both HDRS-17 and YMRS scores, whereas self-reported stress correlated positively with both. In other words, less sleep or more stress correlated with more depressive and manic symptomatology.

Recently, MONARCA was updated to collect and extract voice features from phone calls using the open-source “Speech & Music Interpretation by Large-space Extraction” (openSMILE) toolkit (Eyben et al. 2010; Maria et al. 2016). Class imbalance was addressed via random oversampling of the minority class, and a random forest algorithm trained on features derived from voice, objective, and self-reported data achieved an AUC of 0.78 in classifying a depressive state versus a euthymic state, and an AUC of 0.89 in classifying a manic or mixed state versus a euthymic state, although the number of folds used for k-fold cross-validation was not specified.
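Random oversampling and the AUC metric can both be stated in a few lines. The sketch below is illustrative only (the helper names and data are hypothetical): it duplicates randomly chosen minority-class rows until a binary data set is balanced, and computes the AUC as the probability that a randomly chosen positive example receives a higher score than a randomly chosen negative one, counting ties as one half.

```python
import random


def random_oversample(X, y, seed=0):
    """Duplicate randomly chosen minority-class rows until both classes are equally frequent."""
    rng = random.Random(seed)
    counts = {c: y.count(c) for c in set(y)}
    minority = min(counts, key=counts.get)
    deficit = max(counts.values()) - counts[minority]
    minority_idx = [i for i, c in enumerate(y) if c == minority]
    extra = [rng.choice(minority_idx) for _ in range(deficit)]
    return X + [X[i] for i in extra], y + [y[i] for i in extra]


def auc(scores, labels):
    """ROC AUC as P(score of a random positive > score of a random negative), ties = 0.5."""
    pos = [s for s, l in zip(scores, labels) if l == 1]
    neg = [s for s, l in zip(scores, labels) if l == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical features: four euthymic records (0) and one depressive record (1)
X_bal, y_bal = random_oversample([[0.1], [0.3], [0.2], [0.4], [0.9]], [0, 0, 0, 0, 1])
print(y_bal.count(0), y_bal.count(1))  # 4 4
print(auc([0.1, 0.4, 0.35, 0.8], [0, 0, 1, 1]))  # 0.75
```

Oversampling should be applied only to the training portion of each cross-validation fold; duplicating minority records before splitting places copies of the same record in both training and test sets and inflates the AUC.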

The group that created MONARCA recently began enrolling patients in the first-ever randomized controlled trial (RCT) to investigate whether a smartphone-based monitoring and treatment system, including an integrated clinical feedback loop, reduces the rate and duration of re-admissions more than standard treatment in unipolar disorder and bipolar disorder (Maria et al. 2017).

Grünerbl et al. passively recorded data from ten patients with BD over ten months using Android phones and a monitoring application developed in-house (Grünerbl et al. 2015). Bipolar symptoms were determined via HAMD or YMRS scale tests conducted every three weeks. Social activity features included the number and length of phone calls and the number of unique numbers contacted. Speech and voice features included average speaking length, turn duration, utterances, and frequency-domain features. Four of the ten patients refused to use the study phone to make phone calls, so speech and voice features were determined for the remaining six patients only. A naive Bayes algorithm classified records into one of seven states, including depressive, normal, and manic states of different degrees. Two-thirds of the data were randomly allocated to the training set and the remaining third to the test set. This random split was repeated 500 times and yielded an average classification accuracy of 69% using a fusion of features extracted from accelerometer and GPS data. Next, the classifier was revised to weight class estimates by data quantity; however, we note that quantity-based weighting introduces its own form of bias and does not guarantee improved classifier accuracy. On days with data from multiple modalities (phone, sound, acceleration, and location), class estimates were calculated for each modality and weighted by the amount of data so as to favor modalities with more data and penalize modalities with fewer data. The class with the highest estimated probability was selected. Weighting estimates by data quantity improved classification accuracy from 69% to 76%, with both sensitivity and positive predictive value of 97%. However, the authors did not report the timing between feature collection and symptom assessment via questionnaire, nor the variance across different folds.
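The data-quantity weighting can be sketched as a weighted average of per-modality class probabilities. The exact weighting scheme used by Grünerbl et al. is not specified here, so the sketch assumes linear weights proportional to each modality's sample count; the function name, modalities, and probabilities are hypothetical.

```python
def fuse_by_data_quantity(estimates):
    """Weighted fusion of per-modality class probabilities.

    estimates: dict modality -> (class_probabilities, n_samples); each modality is
    weighted by its share of the day's samples (an assumed, linear weighting).
    """
    total_n = sum(n for _, n in estimates.values())
    fused = {}
    for probs, n in estimates.values():
        weight = n / total_n
        for cls, p in probs.items():
            fused[cls] = fused.get(cls, 0.0) + weight * p
    # Select the class with the highest fused probability
    return max(fused, key=fused.get), fused


# Hypothetical day: sparse phone data favour "depressive", abundant accelerometry favours "normal"
day = {
    "phone": ({"depressive": 0.6, "normal": 0.4}, 10),
    "acceleration": ({"depressive": 0.2, "normal": 0.8}, 90),
}
print(fuse_by_data_quantity(day)[0])  # normal
```

The example also shows the bias noted above: a modality with little data is almost ignored even when it carries the more informative signal on that day.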

Beiwinkel et al. developed “Social Information Monitoring for Patients with Bipolar Affective Disorder” (SIMBA), a smartphone app to track daily mood, physical activity, and social communication (Beiwinkel et al. 2016). SIMBA was tested with a cohort of 13 patients diagnosed with BD. Random-coefficient multilevel models were fit to analyze the relationship between smartphone data and externally rated manic and depressive symptoms. Lower self-reported mood in the monitoring period prior to a clinical visit predicted higher overall levels of clinical depressive symptoms (p < 0.05). A decline in social communication and physical activity predicted an increase in clinical depressive symptoms (p < 0.05). Lower physical activity but higher social communication predicted higher overall levels of clinical manic symptoms (p < 0.05). Lastly, a decrease in physical activity predicted an increase in clinical manic symptoms (p < 0.05). This study evaluated prediction rather than classification, as the outcome of interest occurred later than the time at which smartphone data were assessed. However, no cross-validation or external validation data set was used to assess the generalizability of the model.

BD is characterized by disturbances in the rhythmicity of sleep and social routine. Abdullah et al. used passive smartphone sensor data gathered via a customized Android app called “MoodRhythm” to measure rhythmicity in seven BD patients over four weeks (Abdullah et al. 2016). Measured data included accelerometry, ambient light, microphone audio, calls and SMS, and phone usage such as screen unlocks and recharging. Patients were administered the Social Rhythm Metric (SRM) questionnaire, although the frequency of administration was unclear. The most predictive features were selected via recursive feature elimination and included the number of location clusters, distance traveled, frequency of conversation, and duration of non-sedentary activity. A support vector regression model was trained on data over a rolling window of seven days, and ten-fold cross-validation was performed. The average root mean square error (RMSE) between predicted and actual SRM scores was 1.40, on an SRM scale ranging from zero to seven. To classify subjects as having either a “normal” or an “unstable” social rhythm, a cutoff SRM score of 3.5 was selected because the population SRM score is approximately 3.5. A person with an SRM score < 3.5 was considered unstable, whereas a person with an SRM score ≥ 3.5 was considered stable. An SVM using the same features achieved a PPV of 0.85 and a sensitivity of 0.86.
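The rolling-window aggregation, the RMSE metric, and the SRM cutoff described above can be sketched as follows. The seven-day window and the 3.5 cutoff come from the text; the function names, the choice of a trailing mean as the aggregate, and the example values are assumptions for illustration.

```python
import math


def rolling_mean(daily, window=7):
    """Mean of each trailing `window`-day block of a daily feature series."""
    return [sum(daily[i - window + 1:i + 1]) / window
            for i in range(window - 1, len(daily))]


def rmse(pred, actual):
    """Root mean square error between predicted and actual scores."""
    return math.sqrt(sum((p - a) ** 2 for p, a in zip(pred, actual)) / len(pred))


def srm_label(score, cutoff=3.5):
    """Scores below the cutoff are treated as an unstable social rhythm."""
    return "unstable" if score < cutoff else "stable"


# Hypothetical daily distance travelled (km) over eight days
print(rolling_mean([1, 2, 3, 4, 5, 6, 7, 8]))  # [4.0, 5.0]
print(rmse([3.0, 4.2], [3.5, 4.0]))
print(srm_label(3.4), srm_label(3.5))  # unstable stable
```

For scale, an RMSE of 1.40 on a zero-to-seven scale is large relative to the distance between typical scores and the 3.5 cutoff, which is one reason the separate classification results are also worth reporting.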

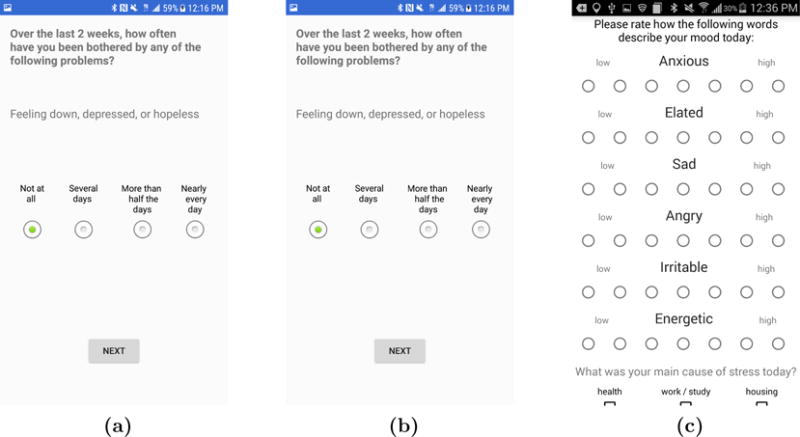

Palmius et al. designed the Automated Monitoring of Symptom Severity app, or “AMoSS” (Palmius et al. 2014). This app collected location, activity, battery usage, daily self-reported mood (through ‘Mood Zoom’, a six-axis mood survey using seven-point Likert scales), and social networking behavior via de-identified lists of recipients and senders of text messages and phone calls, including their length or duration and time of day (fig. 3). Collecting data at up to several samples per second, AMoSS is a comprehensive mHealth monitoring system for mental health. Physiological monitoring devices recorded HR and blood pressure from a total of 100 participants, including patients with BD and matched controls. Early results demonstrated that mood reports correlated with diagnoses of BD and borderline personality disorder (Tsanas et al. 2016).

Figure 3.

Screenshots of the AMoSS app for the Android operating system: (a) PHQ-9 questionnaire, (b) Simple mood assessment tool, and (c) “MoodZoom” survey to assess emotional status and mood.

Subsequent work by the AMoSS team on a subset of these data demonstrated that clinically significant depression could be detected using features extracted from GPS location data (Palmius et al. 2017). Anonymized geographic locations were collected from 22 subjects with BD and 14 controls over three months, using Samsung Galaxy S III or S4 smartphones running the Android 4.1 operating system. In this configuration, the geospatial resolution was approximately 100 meters. Depressive symptomatology was self-reported by subjects via the QIDS-SR16 survey. Features were extracted to assess the level and regularity of the subjects’ geographic movements, including normalized entropy, location variance, and number of distinct location clusters. For subjects with BD, a linear regression model trained on these features estimated questionnaire scores with a mean absolute error of 3.73 points. A quadratic discriminant analysis classifier trained to detect depression achieved an F1 score of 0.86 ± 0.02, classification accuracy of 0.85 ± 0.02, sensitivity of 0.84 ± 0.01, and specificity of 0.87 ± 0.05 (median ± IQR). Results were robust to leave-one-out, 10-fold, 5-fold, and 3-fold cross-validation.
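Two of the mobility features named above can be sketched as follows. The sketch assumes that GPS samples have already been assigned to location clusters (the clustering step is not shown) and that samples are equally spaced in time, so the fraction of samples in a cluster approximates the fraction of time spent there. Location variance is computed as the log of the summed coordinate variances, a common formulation that may differ in detail from the one Palmius et al. used; the small `eps` term is a hypothetical guard against log(0) for a subject who never moves.

```python
import math
import statistics
from collections import Counter


def cluster_entropy_features(cluster_labels):
    """Return (number of clusters, entropy, normalized entropy).

    `cluster_labels` holds one location-cluster label per equally spaced GPS
    sample, so label frequencies approximate the fraction of time per cluster.
    """
    n = len(cluster_labels)
    counts = Counter(cluster_labels)
    k = len(counts)
    entropy = -sum((c / n) * math.log(c / n) for c in counts.values())
    # Dividing by log(k) maps entropy to [0, 1]; undefined for a single cluster.
    normalized = entropy / math.log(k) if k > 1 else 0.0
    return k, entropy, normalized


def location_variance(latitudes, longitudes, eps=1e-12):
    """log of the summed population variances of latitude and longitude."""
    total = statistics.pvariance(latitudes) + statistics.pvariance(longitudes)
    return math.log(total + eps)
```

Normalized entropy near 1 indicates time spread evenly across clusters, whereas values near 0 indicate time concentrated in one place, such as home.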

2.3. Schizophrenia

Schizophrenia is a complex and heterogeneous illness. Its diagnostic criteria include at least two of the following symptoms for one month or longer: delusions, hallucinations, disorganized speech, grossly disorganized or catatonic behavior, or negative symptoms such as diminished emotional expression; furthermore, there must be impairment in work, interpersonal relations, or self-care, as well as continuous signs of the disorder for at least 6 months (Kahn et al. 2015). The lifetime global prevalence of schizophrenia is about 1%. Outcomes vary widely, from total recovery to totally debilitating illness requiring chronic care. Life expectancy for people with schizophrenia is reduced by 20 years compared with people without the illness. Pharmacological treatments for schizophrenia can relieve psychotic symptoms but usually fail to meaningfully improve social, cognitive, and professional functioning. Psychosocial interventions can be useful but are resource-intensive and inconsistently delivered. Finally, schizophrenia tends to be diagnosed years after symptoms begin. Compared with depression and BD, relatively little mobile health research has focused on schizophrenia.

Wang et al. collected passive smartphone sensor data for periods ranging from 2 to 8.5 months from 21 outpatients who had been diagnosed with schizophrenia and recently discharged from hospital (Wang et al. 2016). Samsung Galaxy S5 phones running the Android operating system were equipped with “CrossCheck”, an app developed in-house that monitors the type and duration of physical activity, sleep duration, number and duration of phone conversations, number of SMS, geolocation, phone and app usage, and ambient light and noise. Every three days, Ecological Momentary Assessment (EMA) questions were administered and sensor data were aggregated. Generalized estimating equations were used to map associations between features and EMA responses. Higher scores on items related to positive perceptions of mental well-being (including feeling calm, hopeful, sleeping well, social, and able to think clearly) were associated with waking up earlier, having fewer conversations, and making fewer phone calls and SMS. Higher scores on items related to negative perceptions were associated with staying stationary more in the morning but less in the evening, visiting fewer new places, having fewer conversations but making more phone calls and SMS, and using the phone less. Gradient boosted regression trees were used to predict EMA scores from these features. Models trained on an individual’s own data estimated that individual’s EMA scores with a correlation of r = 0.77 (p < 0.001) between predicted and observed scores. However, outcomes predicted via leave-one-out cross-validation did not correlate with actual outcomes, suggesting that the relationship between features and outcomes varies considerably between individuals.
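The contrast between personalized models and cross-validation across individuals comes down to how the data are split. Below is a minimal sketch of a leave-one-subject-out split and the Pearson correlation used to score predictions. It assumes that the held-out unit is an entire subject; the record layout and function names are hypothetical.

```python
import math
from collections import defaultdict


def pearson_r(x, y):
    """Pearson correlation between predicted and observed scores."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def leave_one_subject_out(records):
    """Yield (held_out_subject, train, test) splits.

    `records` is a list of (subject_id, features, target) tuples. Every record
    from the held-out subject goes to the test set, so the model never sees
    that person's data during training.
    """
    by_subject = defaultdict(list)
    for record in records:
        by_subject[record[0]].append(record)
    for held_out in sorted(by_subject):
        test = by_subject[held_out]
        train = [r for s, recs in by_subject.items() if s != held_out for r in recs]
        yield held_out, train, test
```

A personalized model instead trains and tests on different time periods of the same subject, which is why it can succeed when subject-level generalization fails.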

Staples et al. recently reported a three-month observational study of both self-reported and objective measures of sleep in schizophrenia (Staples et al. 2017). Using the Beiwe app (available for both iPhones and Android phones), 13 subjects diagnosed with schizophrenia were given tri-weekly EMAs. Passive data were continuously collected, including accelerometry, GPS, screen use, and anonymized call and SMS activity. Sleep quality was assessed in a clinical setting using the PSQI, which was compared with both the EMAs and sleep estimates based on passively collected accelerometer data. A cross-validated linear regression model with mean phone-based EMA scores as the outcome and mean paper-based PSQI scores as the predictor correctly classified 85% (11/13) of subjects as having high or low sleep quality. Accelerometry-based estimates moderately correlated with subjects’ self-assessments of sleep duration (r = 0.69, 95% CI 0.23–0.90). Active and passive phone data predicted concurrent PSQI scores with a mean absolute error of 0.75 and future PSQI scores with a mean absolute error of 1.9, with scores ranging from 0 to 14.

Among individuals who are diagnosed, hospitalized, and treated for schizophrenia, up to 40% of those who are discharged will relapse within one year. Barnett et al. evaluated a smartphone platform for monitoring seventeen patients with schizophrenia undergoing active treatment, in order to identify warning signs of relapse (Barnett et al. 2018). Relapse was defined as psychiatric hospitalization or an increase in the level of psychiatric care, such as more frequent clinic visits or referral to a partial or outpatient hospital program. Patients were monitored for three months using the Beiwe app, which gathered data on mobility patterns and social behavior. Extracted features included daily distance traveled, time spent at home, number of significant locations visited, total duration of calls, number of missed calls, and number of text messages sent. The app also administered surveys twice per week to assess anxiety, depression, sleep quality, psychosis, warning symptoms (via the warning symptoms scale), and medication adherence. The rate of behavioral anomalies detected in the 2 weeks prior to relapse was 71% higher than the rate of anomalies during other time periods. Although anomalies were calculated relative to each patient’s own data to account for differences in baseline features, the number of anomalies varied greatly between subjects. Additionally, because the cohort enrolled only seventeen patients for only three months, many subjects did not relapse, and the features captured in patients who did relapse may not have reflected the “potential trajectories and mechanisms that can lead to relapse”. The anomaly detection approach demonstrated in this paper could also be useful for measuring other clinically relevant outcomes that were not reported, such as changes in positive or negative symptoms of schizophrenia.
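The rate comparison described above can be sketched as follows. The per-patient z-score detector is a hypothetical, simplified stand-in and is not the anomaly-detection method used by Barnett et al.; only the 14-day window comes from the text. A rate ratio of 1.71 would correspond to the reported 71% higher anomaly rate.

```python
import statistics


def flag_anomalies(daily_values, z_threshold=2.0):
    """Flag days deviating from the patient's own mean by more than z_threshold SDs.

    Hypothetical, simplified stand-in for a per-patient anomaly detector.
    """
    mu = statistics.mean(daily_values)
    sd = statistics.pstdev(daily_values)
    if sd == 0:
        return [False] * len(daily_values)
    return [abs(v - mu) / sd > z_threshold for v in daily_values]


def pre_relapse_rate_ratio(flags, relapse_day, window=14):
    """Anomaly rate in the `window` days before relapse divided by the rate on all other days."""
    start = max(0, relapse_day - window)
    pre = flags[start:relapse_day]
    other = flags[:start] + flags[relapse_day:]
    if not pre or not other:
        raise ValueError("need days both inside and outside the pre-relapse window")
    rate_pre = sum(pre) / len(pre)
    rate_other = sum(other) / len(other)
    if rate_other == 0:
        return float("inf") if rate_pre > 0 else float("nan")
    return rate_pre / rate_other
```

Normalizing against each patient’s own mean and SD handles baseline differences, but, as noted above, it does not equalize how many anomalies different patients produce.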

2.4. Post traumatic stress disorder

PTSD is a psychopathological response to a traumatic event such as violence, a natural disaster, or combat. Symptoms include nightmares of the trauma, hypervigilance, difficulty sleeping, poor concentration, and avoidance of places, activities, or persons that remind the affected individual of the causal incident (Shalev et al. 2017). The lifetime prevalence of PTSD ranges between 6-30%, is much higher in veterans exposed to combat, and varies by gender and country of origin.

Smartphone apps for PTSD have focused on education about the disorder, delivery of cognitive behavioral therapy, self-assessment of symptoms via questionnaires, and access to crisis support and other relevant resources (Kuhn et al. 2014). Few papers describe the use of digital sensors to passively monitor clinical symptoms of PTSD. However, many smartphone- and wearable-based sensing approaches have focused on anxiety and depression, which are common comorbidities.

Place et al. conducted a 12-week trial with 73 patients who reported at least one symptom of PTSD or depression (Place et al. 2017). Clinical symptoms were assessed by licensed social workers, who administered the depression and PTSD modules of the Structured Clinical Interview for DSM Disorders (SCID). An Android app was developed to gather accelerometry, SMS and call, location, device use, and audio data. Extracted features included the number of outgoing calls, the count of unique numbers texted, absolute distance traveled, dynamic variation of the voice, speaking rate, and voice quality. Feature reduction was performed to limit overfitting and inter-feature correlation, and logistic regression models were trained using 10-fold cross-validation. Fatigue, interest in activities, and social connectedness were predicted from the prior week’s data with AUCs of 0.56, 0.75, and 0.83, respectively. Depressed mood was predicted from audio data with an AUC of 0.74. Finally, subjects reported being comfortable sharing personal data with clinicians and medical researchers. However, it was unclear whether the predictive model outperformed a sample-and-hold estimate, i.e., simply carrying forward the mood reported in the previous week. This question can be framed in Bayesian terms: the mood state from the previous week informs the prior, and data from the smartphone app update the model to yield the posterior estimate. Evaluating subjects at several time points affords an opportunity to quantify the additional contribution of passive sensor data to predictive models that use questionnaires or surveys as ground truth.
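The Bayesian framing of sample-and-hold versus sensor-based estimates can be made concrete with a Gaussian conjugate update. This is a simplifying assumption chosen for illustration, since mood scores are not truly Gaussian. Last week’s reported mood serves as the prior mean, and a sensor-based estimate acts as a noisy observation. Both variances are hypothetical inputs.

```python
def gaussian_update(prior_mean, prior_var, obs_mean, obs_var):
    """Posterior of a Gaussian prior combined with one Gaussian observation.

    prior_mean/prior_var: last week's reported mood (sample-and-hold) and its
    assumed uncertainty. obs_mean/obs_var: a sensor-based estimate and its
    assumed error variance. Returns (posterior_mean, posterior_var).
    """
    precision = 1.0 / prior_var + 1.0 / obs_var
    post_var = 1.0 / precision
    # Precision-weighted average of the prior and the observation.
    post_mean = post_var * (prior_mean / prior_var + obs_mean / obs_var)
    return post_mean, post_var
```

If the sensor estimate carries little information (a very large `obs_var`), the posterior collapses to the sample-and-hold prior. Comparing the posterior’s error with the prior’s error over many weeks is one way to quantify what passive data add.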

University of North Carolina, Harvard University, and Verily Life Sciences LLC (South San Francisco, CA) are leading the AURORA study, a 19-institution, five-year effort to perform the most comprehensive observational study to date of mental disorders that occur in the wake of trauma (National Institute of Mental Health 2016). Investigators will screen 5,000 people arriving in emergency rooms after trauma. After an initial evaluation and a baseline collection of biological data from blood samples, subjects will be monitored for the next several months using mobile technology, such as wrist wearables and smartphones, to track factors like activity, sleep, and mood. Other assessments will include additional blood samples, functional brain imaging, and psychological tests. Participant involvement will continue for over a year, generating a wide variety of detailed information on, for example, health history (including earlier trauma), genetics, stress responses (physical and psychological), behavior, and cognition. This collaboration presents a unique opportunity to learn more about the factors that mediate the development of mental illness after trauma, and potentially to contribute to new diagnostic and therapeutic approaches. The AURORA study represents a new trend in public-private partnerships, involving multiple research institutions and technology companies such as Verily and Mindstrong Health (Palo Alto, CA).

2.5. Dementia and Alzheimer’s disease

AD is a progressive, chronic neurodegenerative disorder that primarily affects older adults and is the most common cause of dementia. Selective memory impairment is the most common initial symptom (Wolk et al. 2017). Executive dysfunction and visuospatial impairment also present early, while language deficits and behavioral symptoms typically manifest later in the disease course. The average life expectancy after a diagnosis of AD ranges from three to eight years; patients generally succumb to complications related to advanced debilitation, such as dehydration, malnutrition, and infection. AD has an estimated prevalence of 10–30% in the population > 65 years of age, with an incidence of 1–3% (Masters et al. 2015). According to the Alzheimer’s Association, over 5 million Americans have AD, and it is the sixth leading cause of death (Alzheimer’s Association 2016). In 2016, more than 15 million caregivers provided 18 billion hours of unpaid care valued at approximately $221 billion. Given the devastating cost and prevalence of AD, relatively low-cost smartphone and wearable technologies could potentially aid cognitive assessment and improvement, monitor daily activities, prolong independence, and improve the lives of caregivers.

Tung et al. attempted to distinguish AD from controls using GPS features from smartphones (Tung et al. 2014). A cohort of 19 older adults with mild-to-moderate AD and 33 controls carried GPS-enabled mobile phones for three days. The make and model of the phones were not described. Measures of geographical territory (area, perimeter, mean distance from home, and time away from home) were calculated from the GPS data, and group differences were tested using two-sample t-tests. Area, perimeter, and mean distance from home were significantly smaller in the AD group than in controls. Furthermore, area and perimeter were significantly associated with steps per day, Disability Assessment for Dementia scores, gait velocity, apathy, and depression.
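Territory measures like these can be sketched using the convex hull of the visited points. The convex hull is an assumption, since the study’s exact definitions of area and perimeter are not described here. The input format is also assumed: points are taken to be already projected from latitude and longitude into planar coordinates in meters, with a known home location. Time away from home is omitted.

```python
import math


def convex_hull(points):
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts

    def cross(o, a, b):
        return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])

    lower, upper = [], []
    for p in pts:
        while len(lower) >= 2 and cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    for p in reversed(pts):
        while len(upper) >= 2 and cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    return lower[:-1] + upper[:-1]


def territory_measures(points, home):
    """Return (hull area in m^2, hull perimeter in m, mean distance from home in m)."""
    hull = convex_hull(points)
    n = len(hull)
    # Shoelace formula for the polygon area; 0 for degenerate hulls.
    area = 0.5 * abs(sum(hull[i][0] * hull[(i + 1) % n][1]
                         - hull[(i + 1) % n][0] * hull[i][1] for i in range(n)))
    perimeter = sum(math.dist(hull[i], hull[(i + 1) % n]) for i in range(n))
    mean_distance = sum(math.dist(p, home) for p in points) / len(points)
    return area, perimeter, mean_distance
```

Interior points, such as frequent stops near home, do not change the hull area or perimeter but do affect the mean distance from home, so the measures capture somewhat different aspects of life space.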

Aguilera et al. demonstrated that self-reported daily mood ratings collected via SMS are proxies for PHQ-9 scores (Aguilera et al. 2015). A cohort of 33 people diagnosed with depression who were undergoing group cognitive behavioral therapy received a daily SMS asking them to rate their mood on an ordinal scale of 1-9. They were also asked questions about thoughts and activities. The subjects further completed a PHQ-9 each week that they attended the therapy group. Daily and one-week average SMS mood scores were significantly associated with PHQ-9 scores. The authors noted “SMS-based mobile mood ratings, when assessed daily, may provide a more accurate indicator of longitudinal symptom levels than the PHQ-9, as the PHQ-9 may be subject to a recency bias”. While short SMS-based surveys can provide a more nimble, higher-resolution picture of how a patient feels in the moment, they are not intended to replace more thorough instruments such as the PHQ-9, which is based on the DSM-IV diagnostic criteria for MDD (Kroenke et al. 2001).

Batista et al. developed an Android app to monitor people with mild cognitive impairment (MCI) (Batista et al. 2015). Specifically, the app raises alarms under certain conditions, such as a patient leaving a defined geographic zone (e.g., home), not moving for a certain amount of time, moving at too high a speed (suggesting use of transportation), or the phone battery falling too low. Sixteen subjects with varying stages of AD participated in a pilot study that assessed user perception of the app. The authors selected the least expensive Android smartphones available via Amazon to make the platform affordable for more patients and caregivers.
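The alarm conditions listed above amount to a handful of threshold rules. The sketch below is hypothetical: the thresholds, field names, and the use of a haversine distance to define the safe zone are illustrative assumptions, not details reported by Batista et al.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0


def haversine_m(lat1, lon1, lat2, lon2):
    """Great-circle distance in meters between two latitude/longitude points."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = phi2 - phi1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.asin(math.sqrt(a))


@dataclass
class AlarmConfig:
    # All default thresholds are hypothetical placeholders.
    home_lat: float
    home_lon: float
    geofence_radius_m: float = 300.0
    max_still_minutes: float = 120.0
    max_speed_m_s: float = 8.0
    min_battery_pct: float = 15.0


def check_alarms(cfg, lat, lon, minutes_without_movement, speed_m_s, battery_pct):
    """Return the list of alarm names triggered by the current phone state."""
    alarms = []
    if haversine_m(cfg.home_lat, cfg.home_lon, lat, lon) > cfg.geofence_radius_m:
        alarms.append("left_safe_zone")
    if minutes_without_movement > cfg.max_still_minutes:
        alarms.append("no_movement")
    if speed_m_s > cfg.max_speed_m_s:
        alarms.append("high_speed")  # may indicate use of transportation
    if battery_pct < cfg.min_battery_pct:
        alarms.append("low_battery")
    return alarms
```

Keeping each rule independent lets caregivers tune one threshold, such as the geofence radius, without affecting the others.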

2.6. Parkinson’s disease

PD is a neurodegenerative movement disorder that affects 1-3% of the population ≥65 years old (Poewe et al. 2017). PD is caused by a marked loss of dopaminergic neurons in the substantia nigra and the subsequent disruption of dopaminergic neurotransmission in the basal ganglia. The diagnosis of PD is clinical; the disease classically presents with asymmetric resting tremor, rigidity, and bradykinesia (Nutt et al. 2005). The economic burden of PD is estimated to be $23B in the USA and is projected to increase to $50B by the year 2040 (Dodel et al. 1998). Current dopamine replacement therapy with carbidopa and levodopa treats the symptoms of the disease but is not curative; PD continues to progress, resulting in significant disability, worsening quality of life, and eventual need for advanced care and nursing home placement (Huse et al. 2005).

Neurologists can adjust medication selection and dosage to manage symptoms. However, clinical visits merely provide a snapshot of a patient’s condition, which fluctuates within and across days. Furthermore, patients’ recall of symptoms can be inaccurate or incomplete, and some motor aspects of the disease, such as nighttime akinesia, may not be observable during the exam. Continuous and passive measurements of physiology and behavior could provide complementary information to clinicians, and potentially reduce the recall bias associated with retrospective surveys and diaries.

Several groups have used the accelerometers and processing power of smartphones to assess walking and hand movement, especially during structured motor tasks. Some studies evaluated univariate correlations between clinical scales and features such as tremor, amplitude of leg movements, and frequency of finger tapping. More recently, machine learning approaches have been used to classify activities, estimate PD severity, distinguish PD from controls, or quantify the movement of PD patients. We direct the interested reader to an article by Kubota, Chen, and Little that reviews machine-learning algorithms applied to large-scale wearable sensor data in Parkinson’s disease (Kubota et al. 2016).

Woods et al. developed a smartphone app that uses discrete wavelet transforms and support vector machines to discriminate between Parkinsonian tremor and essential tremor (ET) with over 96% accuracy (Woods et al. 2014). Fourteen subjects with PD and 18 subjects with ET were evaluated using the motor portion of the UPDRS. Subjects performed several motor tasks while holding a smartphone to record tremor: holding the phone with eyes open and with eyes closed, attending to the active tremor hand, attending to a laser target, and counting backwards by 3. A db8 (Daubechies-8) wavelet decomposition was performed to produce five frequency bins; the energy in the 3.5–7.0 Hz and 7.0–14.0 Hz bands was of particular interest because these bands contain the dominant frequencies of PD, ET, and physiological tremor. Analysis of variance comparing PD and ET across the six tasks showed a significant main effect of task, F(3.4, 104.8) = 6.93, p < 0.05, and a significant Group × Task interaction, F(3.5, 104.8) = 4.71, p < 0.05. The mean tremor amplitude at 3.5–7.0 Hz was significantly lower in PD than in ET for all tasks (p < 0.05). The resulting wavelet features were used to train an SVM to classify tremor type, which achieved an accuracy of 96.4% under five-fold cross-validation.
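Why a dyadic wavelet decomposition yields bins such as 3.5–7.0 Hz and 7.0–14.0 Hz can be sketched as follows: each decomposition level halves the frequency range. The 112 Hz sampling rate used in the usage note is hypothetical, chosen only because four levels then give exactly five bins whose edges match the reported bands; the study’s actual sampling rate is not given here. For brevity, the sketch uses the Haar wavelet as a stand-in for db8. The longer db8 filters separate bands more cleanly, so the Haar band edges should be read as nominal.

```python
import math


def dwt_band_edges(fs, levels):
    """Nominal frequency band (Hz) of detail levels D1..Dn and the final approximation."""
    bands = {f"D{j}": (fs / 2 ** (j + 1), fs / 2 ** j) for j in range(1, levels + 1)}
    bands[f"A{levels}"] = (0.0, fs / 2 ** (levels + 1))
    return bands


def haar_dwt_energies(signal, levels):
    """Energy of the Haar detail coefficients at each level, plus the final approximation."""
    approx = list(signal)
    energies = {}
    for j in range(1, levels + 1):
        if len(approx) % 2:
            approx = approx[:-1]  # drop a trailing sample so values pair up (simplification)
        pairs = list(zip(approx[0::2], approx[1::2]))
        detail = [(a - b) / math.sqrt(2) for a, b in pairs]
        approx = [(a + b) / math.sqrt(2) for a, b in pairs]
        energies[f"D{j}"] = sum(d * d for d in detail)
    energies[f"A{levels}"] = sum(a * a for a in approx)
    return energies
```

With `dwt_band_edges(112, 4)`, D3 spans 7–14 Hz and D4 spans 3.5–7 Hz, so the tremor-relevant energy would be read from those two entries of `haar_dwt_energies`. Because the Haar transform is orthonormal, the level energies sum to the signal energy when the length is a power of two.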

Ellis et al. developed the “SmartMOVE” app to quantify gait variability (Ellis et al. 2015). The accuracy of using a smartphone gyroscope to calculate successive step times and step lengths was validated against two heel contact-based measurement devices: heel-mounted foot switch sensors to capture step times, and an instrumented pressure sensor mat to capture step lengths. Twelve subjects with PD and 12 age-matched controls walked along a 26-m path under self-paced and metronome-cued conditions, with all three devices recording simultaneously. Four attributes of gait were calculated. Mixed factorial analysis of variance revealed several instances in which between-group differences (e.g., increased gait variability in PD patients relative to controls) yielded medium-to-large effect sizes. Cueing-mediated changes (e.g., decreased gait variability when PD patients walked with auditory cues) yielded small-to-medium effect sizes, whereas device-related measurement error yielded small-to-negligible effect sizes. Despite the small sample size, between-group effect sizes were greater than within-group or device-related effect sizes. However, factors that contribute to variance in outcomes are less intuitive and interpretable than factors that contribute to the direction and magnitude of outcomes.
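Gait variability is often summarized as the coefficient of variation (CV) of step times. The four gait attributes Ellis et al. computed are not listed here, so the sketch below illustrates one common variability measure rather than their method. It derives step times from hypothetical heel-strike timestamps.

```python
import statistics


def step_times(heel_strikes_s):
    """Intervals (s) between successive heel strikes."""
    return [b - a for a, b in zip(heel_strikes_s, heel_strikes_s[1:])]


def coefficient_of_variation(values):
    """CV (%) = 100 * sample standard deviation / mean."""
    return 100.0 * statistics.stdev(values) / statistics.mean(values)
```

Because the CV is scale-free, it can be compared across walking conditions (for example, self-paced versus metronome-cued) even when mean cadence differs.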

Kassavetis et al. recorded smartphone accelerometry from 14 subjects with PD who performed various tasks, e.g., holding the phone in their palm with outstretched arms, performing pronation-supination movements, and tapping the screen (Kassavetis et al. 2016). Metrics such as amplitude and frequency were calculated for each maneuver. Clinical severity of motor symptoms was assessed with the MDS-UPDRS. Five subscores (rest tremor, postural tremor, pronation-supination, leg agility, and finger tapping) correlated significantly with eight parameters derived from the smartphone data.

Albert et al. recorded smartphone accelerometry from eight subjects with PD and 18 controls, each of whom performed a number of different activities for at least one minute (Albert et al. 2017). These activities included walking, standing, sitting, holding the phone, and not wearing the phone. Features extracted from the accelerometry data included statistical moments, root mean square, extremes, Fourier components, and cross-product means between accelerometry axes. Automatic feature selection was performed, although the method was not specified. Using ten-fold record-wise cross-validation, an SVM classified activities from these features with an accuracy of 96.1% for controls and 92.2% for PD patients. Regularized logistic regression achieved slightly inferior results. Next, the SVM was trained on data from healthy subjects but applied to test data from PD patients, which lowered classification accuracy to 60.3%. Subject-wise cross-validation resulted in an accuracy of 75.1% for PD patients and 86.0% for controls.
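The drop from record-wise to subject-wise accuracy reflects data leakage. When folds are formed from individual records, windows from the same person appear in both the training and test sets. The sketch below shows the two fold schemes and a leakage check. It assumes records are (subject_id, features) tuples, and the function names are hypothetical.

```python
import random


def record_wise_folds(records, k, seed=0):
    """Assign individual records to k folds at random, ignoring subject identity."""
    idx = list(range(len(records)))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]


def subject_wise_folds(records, k, seed=0):
    """Assign whole subjects to k folds, so no subject spans two folds."""
    subjects = sorted({r[0] for r in records})
    random.Random(seed).shuffle(subjects)
    fold_of = {s: i % k for i, s in enumerate(subjects)}
    folds = [[] for _ in range(k)]
    for i, r in enumerate(records):
        folds[fold_of[r[0]]].append(i)
    return folds


def leaks_subjects(records, folds):
    """True if some subject appears in both a test fold and its training set."""
    for test in folds:
        test_idx = set(test)
        test_subjects = {records[i][0] for i in test_idx}
        train_subjects = {r[0] for i, r in enumerate(records) if i not in test_idx}
        if test_subjects & train_subjects:
            return True
    return False
```

Subject-wise folds better approximate deployment on a new patient, which is why the lower subject-wise accuracies are arguably the more realistic estimates.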

Pan et al. developed an Android app called “PD Dr” to monitor accelerometry from 40 subjects with PD (Pan et al. 2015). Subjects performed three motor tasks: hand resting tremor, walking, and turning. The phone was attached to the back of the hand, the ankle, and the pivot leg to evaluate hand tremor, walking, and turning, respectively. Hand tremor features included the power of motion data between 4–6 Hz, the total power of motion data from 0–20 Hz, and the average acceleration of motion, among others. Gait features included average gait cycle time, stride length, and acceleration, and turning features included the number of steps used to turn 360°. SVMs were trained to distinguish hand resting tremor from no tremor, achieving a sensitivity of 0.77 and an accuracy of 0.82. Gait difficulty was distinguished from normal walking with a sensitivity of 0.89 and an accuracy of 0.81. Three Lasso-regularized logistic regression models were trained to estimate disease stage (Hoehn & Yahr score from 1-5), the hand resting tremor UPDRS score, and the gait difficulty UPDRS score. The correlation coefficients for PD stage, hand resting tremor, and gait difficulty were r = 0.81, r = 0.74, and r = 0.79, respectively. However, the complexity of the measurement protocol limits the translation of this study into real-world home and clinical environments. Asking a participant to wear a smartphone in specific locations to assess particular activities may reduce adherence and introduce variance, whereas passive monitoring of motor function that neither disrupts typical behaviors nor requires specific wear locations may be easier for patients.
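A tremor feature such as the ratio of 4–6 Hz power to total 0–20 Hz power can be sketched with a periodogram. The naive O(n²) DFT keeps the example dependency-free, whereas a real pipeline would use an FFT. The function names and the exact normalization are assumptions rather than Pan et al.’s implementation.

```python
import cmath
import math


def band_power(signal, fs, f_lo, f_hi):
    """Sum of periodogram power in [f_lo, f_hi] Hz using a naive DFT (O(n^2))."""
    n = len(signal)
    mean = sum(signal) / n
    x = [s - mean for s in signal]  # remove the DC (gravity/offset) component
    power = 0.0
    for k in range(1, n // 2 + 1):
        f = k * fs / n
        if f_lo <= f <= f_hi:
            coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
            power += abs(coeff) ** 2 / n
    return power


def tremor_power_ratio(signal, fs):
    """Fraction of 0-20 Hz power that falls in the 4-6 Hz resting-tremor band."""
    return band_power(signal, fs, 4.0, 6.0) / band_power(signal, fs, 0.0, 20.0)
```

A ratio close to 1 indicates that most movement energy lies in the classic parkinsonian resting-tremor band, while voluntary or gait-related movement pushes the ratio down.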

Capecci et al. identified freezing of gait (FOG) in 20 subjects with PD using smartphone-based accelerometry (Capecci et al. 2016). Subjects were asked to perform the Timed Up and Go (TUG) test while being video-recorded, and clinicians reviewed the videos to identify FOG events. Power in the “freeze” band (3–8 Hz) and the “locomotor” band (0.5–3 Hz) of the accelerometry data was calculated. The ratio of freeze-band to locomotor-band power was the freeze index “FI”, and the sum of these powers was termed “EI” (acronym not defined in the paper). The Moore-Bächlin Algorithm defined a freezing of gait event as a period when FI and EI both exceeded their mean by more than one standard deviation. This approach achieved a sensitivity of 70.1% and a specificity of 84.1%. A second approach that also used information about step cadence achieved a sensitivity of 87.6% and a specificity of 95.0%. Notably, in this work the smartphone provided both sensing and computation, whereas much previous work used the smartphone only for sensing. However, the performance of this approach has yet to be studied in a larger cohort with several PD types and stages.
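The threshold rule can be sketched as follows, given per-window power in the freeze and locomotor bands (computing those powers is not shown). The sketch thresholds both the ratio and the sum of the two band powers at the mean plus one SD computed over all windows of a recording. Which data the study used to compute its mean and SD is an assumption, as are the function names.

```python
import statistics


def detect_fog_windows(freeze_power, locomotor_power, n_sd=1.0):
    """Flag windows where both the band-power ratio and the band-power sum
    exceed their mean + n_sd * SD across all windows of the recording."""
    if any(p <= 0 for p in locomotor_power):
        raise ValueError("locomotor-band power must be positive")
    ratio = [f / l for f, l in zip(freeze_power, locomotor_power)]
    total = [f + l for f, l in zip(freeze_power, locomotor_power)]
    ratio_thr = statistics.mean(ratio) + n_sd * statistics.pstdev(ratio)
    total_thr = statistics.mean(total) + n_sd * statistics.pstdev(total)
    return [r > ratio_thr and t > total_thr for r, t in zip(ratio, total)]


def sensitivity_specificity(predicted, actual):
    """Compare window flags with clinician-labeled FOG windows."""
    pairs = list(zip(predicted, actual))
    tp = sum(1 for p, a in pairs if p and a)
    tn = sum(1 for p, a in pairs if not p and not a)
    fn = sum(1 for p, a in pairs if a and not p)
    fp = sum(1 for p, a in pairs if p and not a)
    return tp / (tp + fn), tn / (tn + fp)
```

Requiring the summed power to be high as well as the ratio helps avoid flagging quiet standing, where both band powers are small and their ratio can be noisy.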

Kostikis et al. evaluated hand tremor in 25 subjects with PD and 20 age-matched controls using an iPhone app developed in-house (Kostikis et al. 2014). A physician evaluated each participant to determine their UPDRS score. Next, subjects wore a custom-built glove case to attach an iPhone to their right hand. The app assessed four metrics of hand tremor via accelerometry, including the magnitude of acceleration and rotation rate vector. A random forest was trained via bagging to classify PD from healthy subjects, and out-of-bag results were reported. The classifier achieved a maximum AUC of 0.94, a sensitivity of 0.82, and a specificity of 0.90.

Lee et al. performed a similar but larger-scale study of 103 subjects with PD (Lee et al. 2016). Using a smartphone monitoring approach, the investigators sought to (i) validate their app against the MDS-UPDRS motor assessment and the two-target tapping test; (ii) generate a prediction model for UPDRS scores; (iii) assess the repeatability of the app; and (iv) examine compliance and user satisfaction. Subjects used the app at home over three days. Features correlated significantly with MDS-UPDRS-III scores (r ranging from 0.28 to −0.61, p < 0.05), and a prediction model based on these parameters accounted for 52.3% of the variation in UPDRS scores (R² = 0.523, F(4, 93) = 25.48, p < 0.05). Forty-eight subjects underwent repeat assessment under identical conditions, and the repeatability of features and predicted UPDRS scores was moderate, with intraclass correlation coefficients of 0.584 to 0.763 (p < 0.05). A follow-up survey indicated that subjects were comfortable with the app.

Speech degrades as PD progresses: voice amplitude decreases and breathiness increases. Clinical speech scientists have used speech signal processing algorithms to characterize dysphonias such as those that occur in PD. Smartphones can record voice via a standalone app, passively during phone calls, or through ambient background monitoring, which suggests the possibility of remote evaluation of PD. Tsanas et al. used statistical machine-learning techniques to map features from speech signal processing algorithms, such as spectral energy or amplitude, to UPDRS scores (Tsanas et al. 2011). Specifically, 42 subjects with PD performed self-administered speech tests that did not require their physical presence in the clinic, and clinicians evaluated PD symptomatology using the UPDRS. In total, 6,000 recordings were generated, and robust feature selection algorithms (LASSO and elastic net regression) were used to select the optimal subset of speech features. These features were used to train a random forest, which estimated both motor and total UPDRS scores to within about two points (p < 0.001 via the Wilcoxon rank sum test). Interestingly, linear best-fit models between individual dysphonia features and UPDRS scores yielded low univariate correlations, but fusing multiple weak features with a machine learning approach enabled accurate UPDRS score estimation. The same group also used a similar approach to discriminate PD subjects from healthy controls rather than to estimate UPDRS scores (Tsanas et al. 2012). In that study, 132 dysphonia features were calculated from 263 samples recorded from 43 subjects. After feature selection, the features were used to train random forests and SVMs, which achieved almost 99% overall classification accuracy.
Estimating UPDRS scores using passive and remote sensing could enable tracking patient status outside of the clinic, whereas dichotomizing (or estimating the probability of dysphonia features being close to those from a subject with PD) could be utilized for screening high-risk individuals for further evaluation by a neurologist.

3. Wearable accelerometers

Locomotor activity is altered in neuropsychiatric illnesses due to impaired motor function, weakness, volitional and behavioral changes, or abnormal sleep patterns and circadian rhythms (Teicher 1995). Non-invasive body-worn accelerometers can measure these changes and were first explored for assessing circadian rhythms (Witting et al. 1990; Sadeh et al. 2002). However, continual monitoring of locomotor activity was not feasible until recently because of poor battery life, the inability to transmit data wirelessly, and low patient adherence to research-grade instrumentation. Today, personal activity monitoring devices such as fitness bracelets or patches, also known as “wearables”, are affordable and widely available to consumers. This is partly due to the global saturation of the smartphone market, which reduced the cost of manufacturing and distributing similar component parts. Wearables now house sensors that detect heart rate, activity, ambient light, and sleep. These devices have been used in studies revealing disturbances in 24-hour routine and circadian rhythm associated with neuropsychiatric illnesses such as BD and schizophrenia (fig. 4). While only 2-4% of individuals in the United States have a wearable device, the market is estimated to grow to 115 million units in 2018 and to generate $50 billion in revenue (Gandhi et al. 2014; Statista 2017). Here we review recent studies using wearable accelerometers to monitor neuropsychiatric illnesses.

Figure 4.

A “double-plot” of wearable accelerometry or actigraphy data demonstrates night-to-night patterns. The x-axis is the date, and the y-axis is time of day. Each day is repeated adjacent to and below the previous day. This aligns the nights of data and can be particularly useful in depicting circadian rhythm sleep disorders. (a) Actigraphy levels in a healthy control. (b) Actigraphy levels in a patient with borderline personality disorder.
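The layout described in this caption can be produced from a continuous activity series with a short sketch. The sketch assumes evenly spaced samples starting at midnight, and the function names are hypothetical. Rendering (which axis shows date and which shows time of day) is left to the plotting library.

```python
def split_into_days(series, samples_per_day):
    """Chunk a continuous series into consecutive days (drops a trailing partial day)."""
    n_days = len(series) // samples_per_day
    return [series[d * samples_per_day:(d + 1) * samples_per_day] for d in range(n_days)]


def double_plot_rows(days):
    """Row i holds day i followed by day i + 1, so each night appears twice and
    consecutive nights line up; the last row is padded with None."""
    rows = []
    for i, day in enumerate(days):
        nxt = days[i + 1] if i + 1 < len(days) else [None] * len(day)
        rows.append(list(day) + list(nxt))
    return rows
```

Because each row spans 48 hours, a sleep episode that crosses midnight appears unbroken somewhere in every row, which is what makes drifting or fragmented sleep easy to see.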

3.1. Stress and depression

Winkler et al. used actigraphy to demonstrate that bright light therapy (BLT) normalizes disturbances in the circadian rest-activity cycle associated with seasonal affective disorder (SAD) (Winkler et al. 2005). Seventeen SAD patients and 17 age-matched controls were treated with BLT for four weeks and monitored via wrist actigraphy. In the first week, SAD patients had 33% lower total activity and 43% lower daylight activity than control subjects. Furthermore, SAD patients demonstrated altered relative amplitude and phase of the sleep-wake cycle, as well as lower sleep efficiency. BLT restored these alterations and increased both total and daylight activity in SAD patients. Interdaily stability (IS) of activity, a measure of the day-to-day regularity of the circadian rhythm that reflects how strongly the rhythm is coupled to external cues such as light, increased by 9% in both patients and controls.
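Interdaily stability and the relative amplitude of the rest-activity cycle have widely used nonparametric definitions. Let $n$ be the number of hourly samples, $p = 24$ the number of samples per day, $\bar{x}_h$ the mean activity at hour $h$ across days, and $\bar{x}$ the grand mean. Then

$$IS = \frac{n \sum_{h=1}^{p} (\bar{x}_h - \bar{x})^2}{p \sum_{i=1}^{n} (x_i - \bar{x})^2},$$

and $RA = (M10 - L5)/(M10 + L5)$, where M10 and L5 are the mean activity of the most active 10 hours and the least active 5 hours of the average day. The sketch below assumes hourly bins and windows that wrap around midnight; Winkler et al.’s exact implementation may differ.

```python
def _hourly_profile(hourly, per_day=24):
    """Average activity at each hour of the day across all recorded days."""
    if not hourly or len(hourly) % per_day:
        raise ValueError("series must cover a whole number of days")
    days = len(hourly) // per_day
    return [sum(hourly[h::per_day]) / days for h in range(per_day)]


def interdaily_stability(hourly, per_day=24):
    """IS in [0, 1]: 1 means every day repeats the average daily profile exactly."""
    n = len(hourly)
    profile = _hourly_profile(hourly, per_day)
    grand = sum(hourly) / n
    num = n * sum((m - grand) ** 2 for m in profile)
    den = per_day * sum((x - grand) ** 2 for x in hourly)
    return num / den


def relative_amplitude(hourly, per_day=24):
    """RA = (M10 - L5) / (M10 + L5), computed on the average daily profile."""
    profile = _hourly_profile(hourly, per_day)

    def window_means(width):
        # Mean of each `width`-hour window, wrapping around midnight.
        return [sum(profile[(s + i) % per_day] for i in range(width)) / width
                for s in range(per_day)]

    m10, l5 = max(window_means(10)), min(window_means(5))
    return (m10 - l5) / (m10 + l5)
```

IS falls below 1 whenever individual days deviate from the average profile, so a 9% increase indicates more consistent day-to-day timing of activity.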

Nakamura et al. measured activity in 14 patients with MDD and 11 age-matched controls using wrist-worn accelerometers (Nakamura et al. 2007). The durations of resting and active periods were fit to cumulative power-law distributions of the form $P(x \geq a) \sim a^{-\gamma}$, where $a$ is the period duration and $\gamma$ is the scaling exponent. A period was considered resting or active if the activity counts remained below or above a threshold value, respectively. The average scaling exponent of resting periods was significantly lower among depressed patients than among controls. This difference was associated with a significantly longer mean resting period duration in depressed patients (15.64 ± 6.19 minutes) than in controls (7.72 ± 1.44 minutes).
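The resting-period analysis can be sketched in two steps. First, extract run lengths of epochs whose counts fall below a threshold. Second, estimate $\gamma$. The sketch uses the maximum-likelihood estimator for a continuous power law, $\hat{\gamma} = n / \sum_i \ln(a_i / a_{\min})$, which may differ from the fitting procedure Nakamura et al. used. The threshold, epoch length, and $a_{\min}$ are hypothetical inputs.

```python
import math


def rest_period_durations(counts, threshold, epoch_minutes=1.0):
    """Durations (minutes) of consecutive epochs whose activity count is below `threshold`."""
    durations, run = [], 0
    for c in counts:
        if c < threshold:
            run += 1
        elif run:
            durations.append(run * epoch_minutes)
            run = 0
    if run:  # close a resting period that lasts until the end of the recording
        durations.append(run * epoch_minutes)
    return durations


def power_law_gamma_mle(durations, a_min):
    """MLE of gamma for a continuous power law with P(X >= a) = (a / a_min) ** -gamma."""
    tail = [d for d in durations if d >= a_min]
    log_sum = sum(math.log(d / a_min) for d in tail)
    if not tail or log_sum == 0:
        raise ValueError("need durations strictly above a_min")
    return len(tail) / log_sum
```

A smaller $\gamma$ means a heavier tail, so long resting bouts are relatively more common, which is consistent with the longer mean resting period reported for depressed patients.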

Vallance et al. studied the associations of accelerometer-derived physical activity and sedentary time with depression in 2,862 adults from the 2005–2006 US National Health and Nutrition Examination Survey (NHANES) (Vallance et al. 2011). Depression, assessed with the PHQ-9 questionnaire, was present in 6.8% of the sample. Depressed and non-depressed subjects differed significantly across several demographic, medical, and behavioral characteristics. Subjects wore accelerometers (ActiGraph, Ft. Walton Beach, FL) on a belt over the right hip for seven days. Subjects in the highest quartile of moderate-to-vigorous intensity physical activity had 2.7-fold lower odds of depression than subjects in the lowest quartile. Sedentary time was associated with significantly increased odds of depression in overweight or obese adults, but not in normal-weight adults. However, only simple measures of activity were computed. More sophisticated metrics reflecting the structure of daily routine, activity complexity, and disturbances in circadian rhythms may better distinguish depressed from healthy people.
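The phrase “2.7-fold lower odds” corresponds to an odds ratio of about 1/2.7 ≈ 0.37 for the highest versus the lowest activity quartile. The sketch below shows the crude calculation from a 2×2 table. The counts are hypothetical. Published estimates of this kind are usually adjusted for covariates with logistic regression, which this simple ratio does not do.

```python
def odds_ratio(cases_a, noncases_a, cases_b, noncases_b):
    """Crude odds ratio of group A relative to group B from a 2x2 table."""
    return (cases_a / noncases_a) / (cases_b / noncases_b)

# Hypothetical counts: highest vs lowest activity quartile
or_high_vs_low = odds_ratio(cases_a=10, noncases_a=90, cases_b=25, noncases_b=75)
fold_lower = 1 / or_high_vs_low   # "k-fold lower odds" is the reciprocal of an OR < 1
```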

Sano et al. searched for physiological and behavioral markers of stress by collecting five days of data from 18 subjects (Sano et al. 2013). A wrist sensor recorded accelerometry and skin conductance, and a custom app tracked calls, SMS, location, and screen on/off time. The app also administered surveys on stress, mood, sleep, tiredness, general health, alcohol or caffeinated beverage intake, and electronics usage. Using features derived from screen-on time, mobility, and call or activity levels, an SVM classified subjects into high or low perceived stress groups with 75% accuracy. Higher reported stress was associated with activity level and with SMS and screen on/off patterns.
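A linear SVM separates two groups with the hyperplane that maximizes the margin while penalizing misclassified points through the hinge loss. The sketch below fits one with a Pegasos-style stochastic subgradient method in NumPy. It is a generic illustration: the function names are hypothetical, and the kernel, features, and hyperparameters used by Sano et al. are not specified here. Features are assumed to be standardized, with labels coded as -1 (low stress) and +1 (high stress).

```python
import numpy as np

def train_linear_svm(X, y, lam=0.01, epochs=100, seed=0):
    """Linear soft-margin SVM fit by stochastic subgradient descent (Pegasos-style).

    X: (n_samples, n_features) standardized features; y: labels in {-1, +1}.
    A constant column is appended so the bias is learned as an extra weight
    (and, for simplicity, regularized along with the others).
    """
    rng = np.random.default_rng(seed)
    Xb = np.hstack([X, np.ones((len(X), 1))])
    w, t = np.zeros(Xb.shape[1]), 0
    for _ in range(epochs):
        for i in rng.permutation(len(Xb)):
            t += 1
            eta = 1.0 / (lam * t)                  # decreasing step size
            hinge_active = y[i] * (Xb[i] @ w) < 1  # margin violated for this sample?
            w *= 1.0 - eta * lam                   # shrinkage from the L2 penalty
            if hinge_active:
                w += eta * y[i] * Xb[i]
    return w

def predict_svm(w, X):
    """Predicted labels in {-1, +1} for weights returned by train_linear_svm."""
    scores = np.hstack([X, np.ones((len(X), 1))]) @ w
    return np.where(scores >= 0, 1, -1)
```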

Sano et al. repeated this approach in a subsequent study that also included academic performance among the outcomes (Sano et al. 2015). Subjective and objective data were gathered from 66 subjects over 30 days using mobile phones, surveys, and wearable sensors. Sequential forward feature selection was used to find the best combinations of features derived from survey scores (excluding the survey whose score was being estimated), accelerometry, skin temperature, calls and SMS, and internet and email use. An SVM achieved 90% classification accuracy in distinguishing individuals in the top 20% from those in the bottom 20% on each of three scores: the Pittsburgh Sleep Quality Index, the Perceived Stress Scale, and a mental health composite score. Features from wearable devices yielded higher classification accuracy than features from smartphones for every outcome except high versus low GPA.
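Sequential forward selection is a greedy search. Starting from an empty set, it repeatedly adds the single feature that most improves a validation score and stops when no remaining feature helps. The sketch below is a minimal version that scores candidate subsets with the leave-one-out accuracy of a nearest-centroid classifier, a deliberately simple stand-in for the SVM used in the study. The function names and the stopping rule are assumptions. Labels are assumed to be coded 0/1 in a NumPy array, with at least two subjects per class.

```python
import numpy as np

def loo_centroid_accuracy(X, y):
    """Leave-one-out accuracy of a nearest-centroid classifier (labels 0/1)."""
    correct = 0
    for i in range(len(y)):
        train = np.arange(len(y)) != i
        c0 = X[train & (y == 0)].mean(axis=0)
        c1 = X[train & (y == 1)].mean(axis=0)
        pred = int(np.linalg.norm(X[i] - c1) < np.linalg.norm(X[i] - c0))
        correct += int(pred == y[i])
    return correct / len(y)

def forward_select(X, y, max_features, score=loo_centroid_accuracy):
    """Greedy sequential forward selection of feature columns of X."""
    selected, best = [], -np.inf
    remaining = list(range(X.shape[1]))
    while remaining and len(selected) < max_features:
        # Score every candidate subset formed by adding one remaining feature
        trial = {j: score(X[:, selected + [j]], y) for j in remaining}
        j_best = max(trial, key=trial.get)
        if trial[j_best] <= best:          # stop when no candidate improves the score
            break
        selected.append(j_best)
        best = trial[j_best]
        remaining.remove(j_best)
    return selected, best
```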

O’Brien et al. monitored activity for seven days in 29 older adults with MDD (O’Brien et al. 2016). Subjects underwent neuropsychological assessment and completed quality of life (QoL; 36-item Short-Form Health Survey) and activities of daily living (ADL; Instrumental Activities of Daily Living Scale) scales. The wrist-mounted actigraph used in this study was developed as part of the OpenMovement initiative, a collection of open-source hardware sensors and software tools for research use (https://openlab.ncl.ac.uk/things/open-movement/). Physical activity, jerk, and entropy were all significantly lower in depressed subjects. Reduced locomotor activity was associated with reduced ADL, lower quality of life, lower associated learning, and a higher depression rating scale score.
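Jerk is the time derivative of acceleration, so it captures how abruptly movement changes. One plausible way to summarize it from raw triaxial data is shown below. The exact jerk and entropy definitions used by O’Brien et al. are not given here, so this finite-difference mean is an assumption, and the function name is hypothetical.

```python
import numpy as np

def mean_jerk_magnitude(acc, fs):
    """Mean magnitude of jerk, the time derivative of acceleration.

    acc: (n_samples, 3) triaxial acceleration in g; fs: sampling rate in Hz.
    Returns the mean of |d(acc)/dt| in g/s, using first differences.
    """
    acc = np.asarray(acc, dtype=float)
    jerk = np.diff(acc, axis=0) * fs          # finite-difference derivative per axis
    return float(np.linalg.norm(jerk, axis=1).mean())
```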

Burton et al. performed the first systematic review of activity monitoring in patients with depression and highlighted several limitations of the existing studies (Burton et al. 2013). Nineteen eligible studies were identified, and case-control studies showed less daytime activity in patients with depression than in controls (standardized mean difference −0.76, 95% confidence interval −1.05 to −0.47). However, most studies were potentially confounded because they compared inpatients with depression to controls living in the community. Large differences between groups could therefore reflect living environment and routine rather than depression. Outpatients typically have milder depression and less comorbidity than patients admitted to hospital, so results from studies that do not account for treatment setting may not generalize to broader patient populations. Furthermore, not all studies reported the make and model of the wrist-worn actigraph used, and the duration of sampling varied considerably across studies, from 12 hours to more than two weeks.
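A standardized mean difference expresses a between-group difference in units of pooled standard deviation. A value of −0.76 therefore means that patients were, on average, about three quarters of a standard deviation less active than controls. The sketch below computes a per-study Cohen's d together with a common large-sample approximation to its 95% confidence interval. Burton et al. may have used a bias-corrected variant (e.g., Hedges' g) and pooled studies with inverse-variance weighting, which this sketch does not attempt.

```python
import math

def standardized_mean_difference(m1, s1, n1, m2, s2, n2):
    """Cohen's d (group 1 minus group 2, pooled SD) with an approximate 95% CI."""
    pooled = math.sqrt(((n1 - 1) * s1**2 + (n2 - 1) * s2**2) / (n1 + n2 - 2))
    d = (m1 - m2) / pooled
    # Large-sample standard error of d
    se = math.sqrt((n1 + n2) / (n1 * n2) + d**2 / (2 * (n1 + n2)))
    return d, (d - 1.96 * se, d + 1.96 * se)
```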

3.2. Bipolar disorder

Palmius et al. reported preliminary results from the AMoSS study, which involved 16 subjects with BD, nine subjects with borderline personality disorder, and 25 controls (Palmius et al. 2014). Subjects were provided with a Samsung Galaxy SIII phone running the Android operating system, a Fitbit One wireless activity and sleep tracker, and a GENEActiv Original wrist-worn accelerometer. Subjects also measured their own temperature and blood pressure at specified times during the study. In addition, each participant wore an ECG monitor for a limited period, and selected subjects wore a pulse oximeter overnight. The AMoSS app recorded actigraphy, ambient light, phone call time and duration, SMS time and length, self-reported mood surveys, and blood pressure and temperature from Bluetooth-connected devices. Clinically validated psychiatric questionnaires – including the Quick Inventory of Depressive Symptomatology (QIDS), the Altman Self-Rating Mania Scale, the Generalized Anxiety Disorder scale (GAD-7), and the EuroQol EQ-5D – were administered weekly. Although data collection was still ongoing, gross differences in the regularity of actigraphy between subjects with borderline personality disorder and controls were already evident.

Bullock et al. measured locomotor activity over seven days in 36 subjects with high and 36 subjects with low trait vulnerability to BD (Bullock et al. 2014). Subjects wore wrist actigraphs (Mini-Mitter Actiwatch-L; Respironics, Inc., Bend, OR). Vulnerability to BD was determined with the self-reported General Behavior Inventory (GBI), which 358 prospective subjects completed. The top and bottom 10% of the GBI distribution formed the high- and low-vulnerability groups. Relative amplitude (RA) is defined as:

$$\mathrm{RA} = \frac{M10 - L5}{M10 + L5}$$

where M10 is the mean activity level during the most active ten hours, and L5 is the mean activity level during the least active five hours. RA was lower in the high-vulnerability group than in the low-vulnerability group, while intradaily variability (IV) and IS did not differ significantly.
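The sketch below computes M10, L5, and RA = (M10 − L5)/(M10 + L5) from an hourly activity series. It first averages across days into a single 24-hour profile and allows the windows to wrap around midnight. Hourly resolution and the function name are assumptions for illustration; many implementations search over minute-level data instead. Because activity counts are non-negative, RA lies between 0 and 1. Values near 1 indicate a high-amplitude rhythm with little nighttime activity.

```python
import numpy as np

def relative_amplitude(hourly, per_day=24):
    """RA = (M10 - L5) / (M10 + L5) from an hourly activity series of whole days."""
    profile = np.asarray(hourly, dtype=float).reshape(-1, per_day).mean(axis=0)
    wrapped = np.concatenate([profile, profile])   # let windows cross midnight

    def window_means(k):
        # Mean of every k-hour window starting at each hour of the day
        return np.array([wrapped[s:s + k].mean() for s in range(per_day)])

    m10 = window_means(10).max()   # most active 10 consecutive hours
    l5 = window_means(5).min()     # least active 5 consecutive hours
    return float((m10 - l5) / (m10 + l5))
```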

Krane-Gartiser et al. evaluated actigraphy recordings from 18 hospitalized patients with mania, 12 hospitalized patients with bipolar depression, and 28 controls using Actiwatch Spectrum actigraphs (Krane-Gartiser et al. 2014). For each participant, the first 64-minute period containing no more than two consecutive minutes of zero activity counts was selected for feature extraction. Features included the standard deviation (SD), the root mean square of successive differences (RMSSD), autocorrelation at lag one, sample entropy, “symbolic dynamics” (a simplified version of sample entropy; Guzzetti et al. 2005), and “Fourier analysis” (the ratio between variance in the high- and low-frequency parts of the spectrum). Mean activity of both manic and depressed patients was lower than in controls. SD expressed as a percentage of the mean was higher in depressed patients than in both manic patients and controls. Although sample entropy did not differ among groups, symbolic dynamics were lower in depressed patients than in manic patients and controls. Finally, manic patients had a higher ratio of high-frequency (HF) to low-frequency (LF) variance and a lower autocorrelation than the other groups.
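Several of these features are straightforward to express in code. The sketch below computes RMSSD, lag-one autocorrelation, SD as a percentage of the mean, and sample entropy. Sample entropy is −ln(A/B), where B counts pairs of length-m templates within tolerance r (Chebyshev distance, self-matches excluded) and A counts the same pairs for length m + 1. The defaults m = 2 and r = 0.2 × SD are common conventions assumed here, not parameters reported by Krane-Gartiser et al., and the function names are hypothetical.

```python
import numpy as np

def rmssd(x):
    """Root mean square of successive differences."""
    return float(np.sqrt(np.mean(np.diff(np.asarray(x, dtype=float)) ** 2)))

def lag1_autocorrelation(x):
    """Pearson correlation between the series and itself shifted by one epoch."""
    x = np.asarray(x, dtype=float)
    return float(np.corrcoef(x[:-1], x[1:])[0, 1])

def sd_percent_of_mean(x):
    """Standard deviation expressed as a percentage of the mean."""
    x = np.asarray(x, dtype=float)
    return float(100 * x.std() / x.mean())

def sample_entropy(x, m=2, r=None):
    """Sample entropy -ln(A/B); returns inf if no length-(m+1) matches occur."""
    x = np.asarray(x, dtype=float)
    if r is None:
        r = 0.2 * x.std()          # assumed tolerance: 20% of the series SD
    n_templates = len(x) - m       # same template count for lengths m and m + 1

    def count_pairs(length):
        t = np.array([x[i:i + length] for i in range(n_templates)])
        pairs = 0
        for i in range(n_templates - 1):
            # Chebyshev distance from template i to every later template
            dist = np.max(np.abs(t[i + 1:] - t[i]), axis=1)
            pairs += int(np.sum(dist <= r))
        return pairs

    b, a = count_pairs(m), count_pairs(m + 1)
    return float(-np.log(a / b)) if a > 0 else float("inf")
```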

Hyperactivity is seen in both pediatric BD and attention-deficit hyperactivity disorder (ADHD). Faedda et al. accurately distinguished youth with BD (n=48) from those with ADHD (n=65) and typically developing controls (n=42) using features derived from five minutes of belt actigraphy data (Faedda et al. 2016). Features were selected on the basis of statistical significance rather than predictive value, and included diurnal skew, L5, RA, and a bipolar vulnerability index (VI). VI is the integrated area of the shape coefficients of a gamma function fit to the distribution of Morlet wavelet coefficients at scales from 0.2 to 2 hours. Bagging was performed by using 75% of the data as the training set and assessing model performance on the remaining 25% as the test set; this was repeated 500 times. Although the cohort included three classes of subjects, a binary classification task was performed to distinguish subjects with BD from those without BD. Several classifiers were used, including random forest, artificial neural networks, SVM, multinomial regression, and partial least squares, achieving areas under the ROC curve (AUC) ranging from 0.75 to 0.78.
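The evaluation scheme described above, repeated random 75/25 train/test splits with the test-set AUC averaged across repetitions, can be sketched as follows. The AUC is computed as the Mann-Whitney probability that a randomly chosen BD subject scores higher than a randomly chosen non-BD subject. The linear score along the difference of class means is a hypothetical stand-in, not one of the classifiers used by Faedda et al., and splits lacking either class are skipped.

```python
import numpy as np

def auc(scores, labels):
    """Area under the ROC curve via the Mann-Whitney statistic (ties count 1/2)."""
    pos, neg = scores[labels == 1], scores[labels == 0]
    greater = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (greater + 0.5 * ties) / (len(pos) * len(neg))

def repeated_split_auc(X, y, n_repeats=500, train_frac=0.75, seed=0):
    """Mean test-set AUC over repeated random train/test splits (labels 0/1)."""
    rng = np.random.default_rng(seed)
    n, aucs = len(y), []
    for _ in range(n_repeats):
        idx = rng.permutation(n)
        cut = int(train_frac * n)
        tr, te = idx[:cut], idx[cut:]
        if len(np.unique(y[tr])) < 2 or len(np.unique(y[te])) < 2:
            continue                          # skip splits missing a class
        mu, sd = X[tr].mean(axis=0), X[tr].std(axis=0) + 1e-12
        Z = (X - mu) / sd                     # standardize with training statistics only
        direction = Z[tr][y[tr] == 1].mean(axis=0) - Z[tr][y[tr] == 0].mean(axis=0)
        aucs.append(auc(Z[te] @ direction, y[te]))
    return float(np.mean(aucs))
```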

3.3. Schizophrenia

Martin et al. found that older patients with schizophrenia have more disrupted sleep and circadian rhythms (Martin et al. 2005). Twenty-eight older schizophrenia patients (mean age 58.3 years) and 28 age- and gender-matched controls were monitored for three days using Actillume wrist actigraphs (Ambulatory Monitoring, Inc., Ardsley, New York). Minute-by-minute activity and light exposure were recorded. Compared with controls, patients spent longer in bed, had more disrupted nighttime sleep, slept more during the day, and had less robust circadian rhythms of activity and light exposure.

Apiquian et al. evaluated rest-activity characteristics in 20 unmedicated, non-hospitalized schizophrenia patients and 20 controls for five days using a wrist-worn actigraph (Actiwatch-16) (Apiquian et al. 2017). Compared with controls, untreated patients showed significantly lower motor activity and longer sleep time.

Walther et al. investigated the relationship between objective measures of motor activity and PANSS scores (Walther et al. 2009b). Fifty-five schizophrenia patients were monitored for 24 hours via wrist actigraphy. Low activity levels correlated with high scores on the PANSS negative syndrome subscale. Interestingly, actigraphic parameters did not correlate with the motor-specific items of the PANSS, which challenges the validity of the questionnaire.

The same research group subsequently used 24-hour actigraphy to differentiate schizophrenia subtypes in a cohort of 60 hospitalized patients (35 paranoid, 12 catatonic, 13 disorganized) (Walther et al. 2009a). Activity level and movement index (the proportion of 2-second periods with nonzero activity) were highest in patients with paranoid schizophrenia, whereas the mean duration of uninterrupted mobility was highest in patients with catatonic schizophrenia.
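Both measures can be derived from a sequence of epoch-level activity counts. The sketch below assumes 2-second epochs, as in the movement index definition, and treats uninterrupted mobility as a run of consecutive nonzero epochs. That run definition and the function names are assumptions rather than the exact algorithm of Walther et al.

```python
import numpy as np

def movement_index(counts):
    """Proportion of epochs (e.g., 2-s periods) with nonzero activity."""
    return float(np.mean(np.asarray(counts) > 0))

def mean_mobility_bout(counts, epoch_s=2.0):
    """Mean duration (s) of runs of consecutive nonzero-activity epochs."""
    moving = np.asarray(counts) > 0
    # Pad with False so every run has a detectable start (+1) and end (-1)
    edges = np.diff(np.concatenate([[False], moving, [False]]).astype(int))
    starts, ends = np.flatnonzero(edges == 1), np.flatnonzero(edges == -1)
    if len(starts) == 0:
        return 0.0
    return float(np.mean(ends - starts) * epoch_s)
```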

Berle et al. used actigraphy to evaluate patterns of motor activity in 23 schizophrenia patients, 23 depressed patients, and 32 control subjects with no history of mood or psychotic symptoms (Berle et al. 2017). Total motor activity was lower in patients diagnosed with schizophrenia or depression than in controls. However, IS was 18% higher in schizophrenia patients than in controls, whereas IS did not differ between depressed patients and controls. IV was 18% lower in schizophrenia patients and 8% lower in depressed patients than in controls.

Hauge et al. revisited the same cohort of patients but analyzed the activity data using Fourier analysis and entropy measures (Hauge et al. 2011). For each patient, these features were derived from the first 300-minute segment of activity data containing no more than four consecutive minutes of zero activity. RMSSD/SD was significantly lower in schizophrenia patients than in either depressed patients or controls. Sample entropy of activity was significantly lower in depressed patients than in either schizophrenia patients or controls. Finally, the ratio of HF to LF variance was significantly higher in depressed patients than in controls.
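The segment-selection rule, which picks the first window of a given length whose longest run of zero counts stays within a limit, can be sketched as below. The same rule with a 64-minute window and at most two zero minutes describes the selection used by Krane-Gartiser et al. This brute-force scan is a hypothetical helper written for clarity rather than speed, assuming minute-level counts.

```python
import numpy as np

def first_valid_segment(counts, length, max_zero_run):
    """Start index of the first window of `length` epochs whose longest run of
    zero counts is <= max_zero_run, or None if no such window exists."""
    counts = np.asarray(counts)
    for start in range(len(counts) - length + 1):
        run = longest = 0
        for c in counts[start:start + length]:
            run = run + 1 if c == 0 else 0     # length of the current zero run
            longest = max(longest, run)
        if longest <= max_zero_run:
            return start
    return None

# e.g. first_valid_segment(minute_counts, length=300, max_zero_run=4)
```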

Wichniak et al. recorded seven days of actigraphy using Actiwatch AW4 devices (Cambridge Neurotechnology Inc., UK) in 73 patients with schizophrenia and 36 age- and sex-matched controls (Wichniak et al. 2011). Mental status was assessed with the PANSS and CDSS questionnaires. Schizophrenia patients had lower mean 24-hour and 10-hour daytime activity levels and spent more time in bed. Lower activity was associated with higher PANSS and CDSS scores.

Sano et al. recorded seven days of actigraphy (Actigraph Mini-Motionlogger; Ambulatory Monitors Inc., Ardsley, NY, USA) in 19 schizophrenia patients and 11 controls (Sano et al. 2012). Resting periods obeyed a power-law cumulative distribution, whereas active periods obeyed a stretched exponential distribution. Distribution parameters differed between schizophrenia patients and controls, both for the average scaling exponent of resting periods and for the average stretching parameter of active periods.
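A stretched exponential distribution is commonly written as P(x ≥ a) = exp(−α a^β), with stretching parameter 0 < β ≤ 1. Taking logarithms twice gives ln(−ln P) = ln α + β ln a, which is a straight line in ln a. The sketch below uses that linearization to estimate α and β from active-period durations. The parameterization and the least-squares fit are assumptions for illustration, not necessarily the procedure of Sano et al., and the function name is hypothetical.

```python
import numpy as np

def stretched_exponential_fit(durations):
    """Fit P(x >= a) = exp(-alpha * a**beta) to active-period durations.

    Linearizes as ln(-ln P) = ln(alpha) + beta * ln(a) and fits a straight line.
    Returns (alpha, beta); beta is the stretching parameter.
    """
    d = np.sort(np.asarray(durations, dtype=float))
    a = np.unique(d)
    # Empirical P(x >= a) for each distinct duration
    ccdf = 1.0 - np.searchsorted(d, a, side="left") / len(d)
    keep = ccdf < 1.0              # ln(-ln P) is undefined where P = 1
    beta, ln_alpha = np.polyfit(np.log(a[keep]), np.log(-np.log(ccdf[keep])), 1)
    return float(np.exp(ln_alpha)), float(beta)
```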