Abstract

Objective

The aim of this review was to summarize major topics in artificial intelligence (AI), including their applications and limitations in surgery. This paper reviews the key capabilities of AI to help surgeons understand and critically evaluate new AI applications and to contribute to new developments.

Summary Background Data

AI is composed of various subfields that each provide potential solutions to clinical problems. Each of the core subfields of AI reviewed in this piece has also been used in other industries, such as the autonomous car, social networks, and deep learning computers.

Methods

A review of AI papers across computer science, statistics, and medical sources was conducted to identify key concepts and techniques within AI that are driving innovation across industries, including surgery. Limitations and challenges of working with AI were also reviewed.

Results

Four main subfields of AI were defined: 1) machine learning, 2) artificial neural networks, 3) natural language processing, and 4) computer vision. Their current and future applications to surgical practice were introduced, including big data analytics and clinical decision support systems. The implications of AI for surgeons and the role of surgeons in advancing the technology to optimize clinical effectiveness were discussed.

Conclusions

Surgeons are well-positioned to help integrate AI into modern practice. Surgeons should partner with data scientists to capture data across phases of care and to provide clinical context, for AI has the potential to revolutionize the way surgery is taught and practiced with the promise of a future optimized for the highest quality patient care.

Introduction

Artificial intelligence (AI) can be loosely defined as the study of algorithms that give machines the ability to reason and perform cognitive functions such as problem solving, object and word recognition, and decision-making.1 Once thought to be science fiction, AI has increasingly become a topic of both popular and academic literature, as years of research have finally reached thresholds of knowledge that have rapidly generated practical applications, such as International Business Machines’ (Armonk, NY, USA) Watson and Tesla’s (Palo Alto, CA, USA) Autopilot.2

Stories of man versus machine, such as that of John Henry working himself to death to outperform the steam-powered hammer3, demonstrate how machines have long been feared yet ultimately both accepted and eagerly anticipated. Society proceeded to integrate simple machines into human workflow, and the resulting Industrial Revolution yielded a massive shift in productivity and quality of life. Similarly, AI has inspired awe and struck fear in people who now face a technology that can not only outperform but also potentially out-think its creators.

The Information Age has begun a shift in workflow and productivity similar to that of the Industrial Revolution, and surgery stands to gain from the current explosion of information technology. However, as with many emerging technologies, the true promise of AI can be lost in its hype.4

It is, therefore, important for surgeons to have a foundation of knowledge of AI to understand how it may impact healthcare and to consider ways in which they may interact with this technology. This review provides an introduction to AI by highlighting four core subfields – 1) machine learning, 2) natural language processing, 3) artificial neural networks, 4) computer vision – their limitations, and future implications for surgeons.

Subfields in AI

AI’s roots are found across multiple fields, including robotics, philosophy, psychology, linguistics, and statistics.5 Major advances in computer science, such as improvements in processing speed and power, have served as a catalyst for the base technologies required for the advent of AI. The growing popularity of AI across many industries attracted approximately $5 billion in venture capital investment in 2016 alone.6 Much of the current attention on AI has focused on the four core subfields introduced below.

Machine Learning

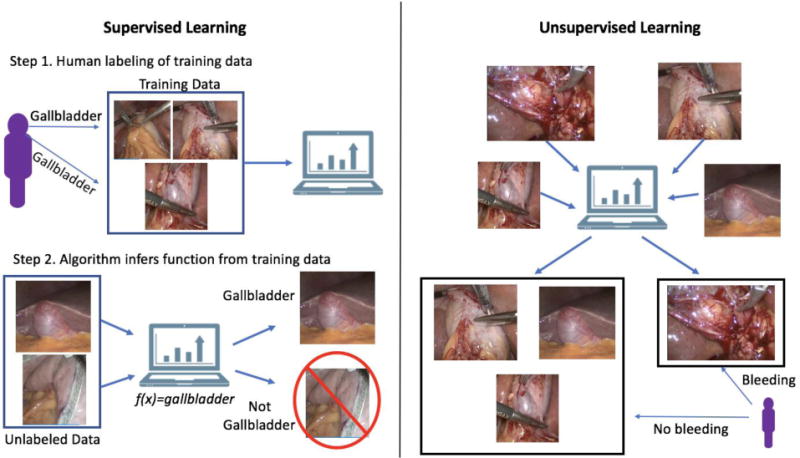

Machine learning (ML) enables machines to learn and make predictions by recognizing patterns. Traditional computer programs are explicitly programmed with a desired behavior (e.g. when the user clicks an icon, a new program opens). ML instead allows a computer to use human-labeled examples (supervised learning) or structure detected in the data itself (unsupervised learning) to explain or make predictions about the data without explicit programming (Figure 1). Supervised learning is useful for training an ML algorithm to predict a known result or outcome, while unsupervised learning is useful for searching for patterns within data.7

Figure 1.

In supervised learning, human-labeled data are fed to a machine learning algorithm to teach the computer a function, such as recognizing a gallbladder in an image or detecting a complication in a large claims database. In unsupervised learning, unlabeled data are fed to a machine learning algorithm, which then attempts to find hidden structure in the data, such as distinguishing bright red regions (e.g. bleeding) from non-bleeding tissue.
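As a minimal illustration of the distinction, the Python sketch below assumes made-up one-dimensional "redness" scores for image patches (real systems use far richer image features). The supervised part learns a cut-point from human-labeled examples; the unsupervised part receives similar scores without labels and splits them into two groups with two-cluster k-means. All values and function names are hypothetical.

```python
from statistics import mean

def fit_threshold(scores, labels):
    """Supervised: place a cut-point midway between the class means
    learned from human-labeled examples (1 = bleeding, 0 = not)."""
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    return (mean(pos) + mean(neg)) / 2

def kmeans_1d(scores, iterations=20):
    """Unsupervised: two-cluster k-means on unlabeled scores."""
    c0, c1 = min(scores), max(scores)  # start centroids at the extremes
    for _ in range(iterations):
        group0 = [s for s in scores if abs(s - c0) <= abs(s - c1)]
        group1 = [s for s in scores if abs(s - c0) > abs(s - c1)]
        if not group0 or not group1:   # degenerate split; stop early
            break
        c0, c1 = mean(group0), mean(group1)
    return c0, c1

# Hypothetical per-patch redness scores (0 to 1).
train_scores = [0.10, 0.15, 0.20, 0.80, 0.85, 0.90]
train_labels = [0, 0, 0, 1, 1, 1]   # supplied by a human annotator

cut = fit_threshold(train_scores, train_labels)
new_patch = 0.75
print("supervised:", "bleeding" if new_patch > cut else "not bleeding")

unlabeled = [0.12, 0.18, 0.22, 0.78, 0.83, 0.88]
low, high = kmeans_1d(unlabeled)
print(f"unsupervised centroids: {low:.2f}, {high:.2f}")
```

The supervised model can name its output ("bleeding") only because a human supplied that label; the unsupervised model finds two groups but cannot say what they mean without clinical interpretation.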

A third category within machine learning is reinforcement learning, in which a program attempts to accomplish a task (e.g. driving a car, inferring medical decisions) while learning from its own successes and mistakes.8 Reinforcement learning can be conceptualized as the computer science equivalent of operant conditioning9 and is useful for automated tuning of predictions or actions, such as controlling an artificial pancreas system to fine-tune the measurement and delivery of insulin to diabetic patients.10
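To make the trial-and-error idea concrete, the sketch below applies tabular Q-learning, one standard reinforcement learning algorithm described by Sutton and Barto, to a deliberately artificial three-state "glucose band" environment. The environment, its dynamics, and all parameter values are assumptions for illustration only; they are not a model of real physiology or of the artificial pancreas systems cited above.

```python
import random

# Hypothetical toy environment, NOT a model of real glucose physiology.
# States: 0 = low, 1 = in target range, 2 = high glucose band.
# Actions: 0 = no insulin, 1 = small dose, 2 = large dose.
STATES, ACTIONS = range(3), range(3)

def step(state, action):
    """Deterministic toy dynamics: no insulin raises the band by one,
    a small dose holds it, a large dose lowers it by one."""
    next_state = min(max(state + 1 - action, 0), 2)
    reward = 1.0 if next_state == 1 else 0.0   # reward staying in range
    return next_state, reward

def q_learning(steps=20000, alpha=0.5, gamma=0.9, epsilon=0.3, seed=0):
    rng = random.Random(seed)
    q = [[0.0 for _ in ACTIONS] for _ in STATES]
    state = rng.choice(STATES)
    for t in range(steps):
        # Epsilon-greedy: usually exploit current estimates, sometimes explore.
        if rng.random() < epsilon:
            action = rng.choice(ACTIONS)
        else:
            action = max(ACTIONS, key=lambda a: q[state][a])
        next_state, reward = step(state, action)
        # Learn from this trial's outcome (success or mistake).
        target = reward + gamma * max(q[next_state])
        q[state][action] += alpha * (target - q[state][action])
        # Periodically restart from a random band so every state is visited.
        state = rng.choice(STATES) if t % 20 == 19 else next_state
    return q

q = q_learning()
policy = [max(ACTIONS, key=lambda a: q[s][a]) for s in STATES]
print("learned action per glucose band:", policy)
```

No dosing rule is programmed in; the program discovers from rewards alone that it should withhold insulin when low, give a small dose in range, and give a large dose when high.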

ML is particularly useful for identifying subtle patterns in large datasets – patterns that may be imperceptible to humans performing manual analyses – because its techniques can capture more indirect and complex non-linear relationships and multivariate effects than conventional statistical analysis.11,12 For example, ML has outperformed logistic regression for prediction of surgical site infections (SSI) by building non-linear models that incorporate multiple data sources, including diagnoses, treatments, and laboratory values.13
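Why non-linearity matters can be seen in a deliberately small, hypothetical example: a single lab value whose association with infection is U-shaped (both very low and very high values carry risk). With one input variable, logistic regression can only draw a single cut-point, so the sketch below brute-forces the best such rule and compares it with a rule allowed two cut-points. The data are invented, and the cited SSI study used far richer models than this.

```python
# Hypothetical data: a lab value (arbitrary units) and whether an
# infection occurred. Risk is U-shaped: both extremes carry the outcome.
values = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]
outcome = [1, 1, 0, 0, 0, 0, 0, 0, 1, 1]

def accuracy(predict):
    return sum(predict(v) == y for v, y in zip(values, outcome)) / len(values)

def best_single_cut():
    """Best rule with one cut-point and a direction: the only decision
    boundaries a one-variable logistic regression can express."""
    rules = [lambda v: 0, lambda v: 1]  # constant predictions
    for t in values:
        rules.append(lambda v, t=t: int(v >= t))
        rules.append(lambda v, t=t: int(v <= t))
    return max(accuracy(r) for r in rules)

def best_two_cuts():
    """Best rule flagging values at or below a low cut OR at or above a
    high cut: a simple non-linear (U-shaped) decision boundary."""
    return max(
        accuracy(lambda v, lo=lo, hi=hi: int(v <= lo or v >= hi))
        for lo in values for hi in values if lo < hi
    )

print(f"single cut-point: {best_single_cut():.0%}")  # linear boundary
print(f"two cut-points:   {best_two_cuts():.0%}")    # non-linear boundary
```

An analyst could rescue logistic regression by hand-crafting a squared term, but that requires knowing the shape in advance; many ML methods can discover such shapes from the data.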

Furthermore, multiple algorithms working together (ensemble ML) can generate predictions at accuracy levels thought to be unattainable with conventional statistics.14 For example, by analyzing patterns of diagnostic and therapeutic data (including surgical resection) in the Surveillance, Epidemiology and End Results (SEER) cancer registry and the linked Medicare claims, an ensemble of random forests, neural networks, and lasso regression was able to predict lung cancer stage from International Classification of Diseases (ICD)-9 claims data alone with 93% sensitivity, 92% specificity, and 93% accuracy, outperforming a decision tree approach based on clinical guidelines alone (53% sensitivity, 89% specificity, 72% accuracy).15
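The sketch below shows the simplest form of ensembling, a majority vote, together with the sensitivity, specificity, and accuracy metrics quoted above. The labels and the three models' predictions are invented so that each model errs on different cases; the cited study combined random forests, neural networks, and lasso regression in a more sophisticated way, and majority voting is swapped in here only for brevity.

```python
def confusion_metrics(y_true, y_pred):
    """Sensitivity, specificity, and accuracy for binary labels (1 = positive)."""
    tp = sum(t == 1 and p == 1 for t, p in zip(y_true, y_pred))
    tn = sum(t == 0 and p == 0 for t, p in zip(y_true, y_pred))
    fp = sum(t == 0 and p == 1 for t, p in zip(y_true, y_pred))
    fn = sum(t == 1 and p == 0 for t, p in zip(y_true, y_pred))
    return {
        "sensitivity": tp / (tp + fn),   # share of true positives detected
        "specificity": tn / (tn + fp),   # share of true negatives cleared
        "accuracy": (tp + tn) / len(y_true),
    }

def majority_vote(*model_predictions):
    """Combine several models' 0/1 predictions case by case."""
    return [int(sum(votes) > len(votes) / 2) for votes in zip(*model_predictions)]

# Hypothetical labels (e.g., advanced stage = 1) and predictions from three
# models that each make different mistakes.
truth   = [1, 1, 1, 1, 0, 0, 0, 0]
model_a = [1, 1, 0, 1, 0, 0, 1, 0]
model_b = [1, 0, 1, 1, 0, 1, 0, 0]
model_c = [0, 1, 1, 1, 1, 0, 0, 0]

ensemble = majority_vote(model_a, model_b, model_c)
for name, preds in [("A", model_a), ("B", model_b), ("C", model_c), ("ensemble", ensemble)]:
    print(name, confusion_metrics(truth, preds))
```

Each model alone reaches 75% on every metric, but because their errors do not overlap, the vote corrects them all. Real ensembles gain less, since errors in real data tend to be correlated.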

Natural Language Processing

Natural language processing (NLP) is a subfield that emphasizes building a computer’s ability to understand human language and is crucial for large-scale analyses of content such as electronic medical record (EMR) data, especially physicians’ narrative documentation. To achieve human-level understanding of language, successful NLP systems must expand beyond simple word recognition to incorporate semantics and syntax into their analyses.16

Rather than relying on codified classifications such as ICD codes, NLP enables machines to infer meaning and sentiment from unstructured data (e.g. prose written in the history of present illness or in a physician’s assessment and plan). NLP allows clinicians to write more naturally rather than having to input specific text sequences or select from menus to allow a computer to recognize the data. NLP has been used for large-scale database analysis of the EMR to detect adverse events and postoperative complications from physician documentation17, 18, and many EMR systems now incorporate NLP (for example, to achieve automated claims coding) into their underlying software architecture to improve workflow or billing.19
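A minimal sketch of why free text needs more than keyword matching: the function below flags complication terms in a note but ignores mentions preceded by a negation cue, loosely following the NegEx idea of negation cues appearing shortly before a clinical concept. The term list, negation cues, and window size are hypothetical, and the cited systems used considerably more sophisticated methods.

```python
import re

# Hypothetical term and negation-cue lists; production systems use curated
# vocabularies and far more sophisticated parsing.
COMPLICATION_TERMS = ["anastomotic leak", "wound infection", "pneumonia"]
NEGATION_CUES = ["no", "denies", "without", "negative for", "no evidence of"]

def find_complications(note, window=5):
    """Return complication terms asserted (not negated) in a free-text note.

    A term counts as negated if a negation cue appears within `window`
    words before its first mention in the same sentence.
    """
    found = set()
    for sentence in re.split(r"[.;\n]", note.lower()):
        text = " ".join(sentence.split())   # normalize whitespace
        for term in COMPLICATION_TERMS:
            start = text.find(term)
            if start == -1:
                continue
            context = " ".join(text[:start].split()[-window:])
            negated = any(re.search(rf"\b{re.escape(cue)}\b", context)
                          for cue in NEGATION_CUES)
            if not negated:
                found.add(term)
    return found

note = ("POD 4. Patient febrile and tachycardic; CT concerning for anastomotic leak. "
        "No evidence of wound infection.")
print(sorted(find_complications(note)))
```

A plain keyword search would report both a leak and a wound infection in this note; handling even this one piece of syntax (negation) changes the result.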

In surgical patients, NLP has been used to automatically comb through EMRs to identify words and phrases in operative reports and progress notes that predicted anastomotic leak after colorectal resection. Many of the predictive features reflected simple clinical knowledge that a surgeon would have (e.g. operation type and difficulty), but the algorithm was also able to adjust the predictive weights of phrases describing patients (e.g. irritated, tired) relative to the postoperative day to predict leak with a sensitivity of 100% and specificity of 72%.20 The ability of algorithms to self-correct can increase the utility of their predictions as datasets grow to become more representative of a patient population.
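The cited study represented notes as a "bag of words" and used support vector machine feature selection. The sketch below keeps the bag-of-words representation but swaps in a simple perceptron so that the whole learning loop fits in a few lines; the notes and labels are invented, and the postoperative-day weighting described above is not modeled.

```python
from collections import Counter

def bag_of_words(note):
    """Represent a note by its word counts, ignoring word order."""
    return Counter(note.lower().split())

def score(weights, bias, note):
    return bias + sum(weights[w] * c for w, c in bag_of_words(note).items())

def train_perceptron(notes, labels, max_epochs=100):
    """Learn one weight per word (plus a bias) with the perceptron rule."""
    weights, bias = Counter(), 0.0
    for _ in range(max_epochs):
        mistakes = 0
        for note, label in zip(notes, labels):
            predicted = 1 if score(weights, bias, note) > 0 else 0
            if predicted != label:
                # Nudge each word's weight toward the correct answer.
                direction = 1 if label == 1 else -1
                for w, c in bag_of_words(note).items():
                    weights[w] += direction * c
                bias += direction
                mistakes += 1
        if mistakes == 0:   # every training note classified correctly
            break
    return weights, bias

# Hypothetical postoperative progress notes (1 = leak later confirmed).
notes = [
    "patient tired and irritated abdominal pain",
    "patient irritated tachycardic",
    "patient comfortable ambulating",
    "patient comfortable tolerating diet",
]
labels = [1, 1, 0, 0]

weights, bias = train_perceptron(notes, labels)
print([int(score(weights, bias, n) > 0) for n in notes])
print({w: weights[w] for w in ("irritated", "comfortable", "patient")})
```

The learned weights are readable (words such as "irritated" push toward a leak, "comfortable" away from it), which is one reason simple linear text models remain popular in clinical NLP.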

Artificial Neural Networks

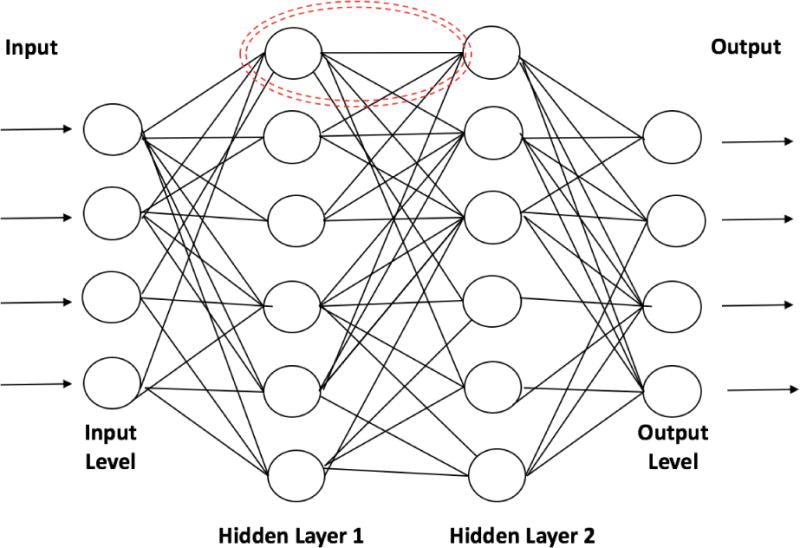

Artificial neural networks (ANNs), a subfield of ML, are inspired by biological nervous systems and have become of paramount importance in many AI applications. Neural networks process signals in layers of simple computational units (neurons); connections between neurons are parameterized by weights that change as the network learns the input-output mappings for tasks such as pattern/image recognition and data classification (Figure 2).7 Deep learning networks are neural networks composed of many layers and are able to learn more complex and subtle patterns than simple one- or two-layer neural networks.21

Figure 2.

Artificial neural networks are composed of many computational units known as “neurons” (dotted red circle) that receive data inputs (similar to dendrites in biological neurons), perform calculations, and transmit output (similar to axons) to the next neuron. Input-layer neurons receive data, while hidden-layer neurons (many hidden layers can be used) conduct the calculations necessary to analyze complex relationships in the data. Hidden-layer neurons then send their results to an output layer that provides the final analysis for interpretation.
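The layered computation described in Figure 2 can be sketched in a few lines. The network below has two inputs, two hidden neurons, and one output neuron, each computing a weighted sum plus a bias followed by a sigmoid activation. Its weights are hand-set (hypothetical) so that it computes exclusive-or, a pattern no network without a hidden layer can represent; in practice the weights would be learned from data.

```python
import math

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def layer(inputs, weights, biases):
    """One layer of neurons: each neuron takes a weighted sum of its inputs
    plus a bias and passes it through a sigmoid activation."""
    return [sigmoid(sum(w * x for w, x in zip(neuron_w, inputs)) + b)
            for neuron_w, b in zip(weights, biases)]

# Hand-set (hypothetical) weights for a 2-input, 2-hidden-neuron, 1-output
# network. Real networks learn their weights from training data.
HIDDEN_W = [[20.0, 20.0], [-20.0, -20.0]]
HIDDEN_B = [-10.0, 30.0]
OUTPUT_W = [[20.0, 20.0]]
OUTPUT_B = [-30.0]

def forward(x1, x2):
    hidden = layer([x1, x2], HIDDEN_W, HIDDEN_B)  # hidden layer
    return layer(hidden, OUTPUT_W, OUTPUT_B)[0]    # output layer

for x1 in (0, 1):
    for x2 in (0, 1):
        print(x1, x2, round(forward(x1, x2), 3))
```

One hidden neuron behaves like "either input is on" and the other like "not both inputs are on"; the output neuron combines them. Deep networks stack many such layers, which is what lets them represent far subtler patterns.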

Clinically, ANNs have outperformed more traditional risk prediction approaches. For example, for prediction of pancreatitis severity six hours after admission, an ANN achieved higher sensitivity (89%) and specificity (96%) than APACHE II (sensitivity 80%, specificity 85%).22 By using clinical variables such as patient history, medications, blood pressure, and length of stay, ANNs in combination with other ML approaches have predicted in-hospital mortality after open abdominal aortic aneurysm repair with a sensitivity of 87%, specificity of 96.1%, and accuracy of 95.4%.23

Computer Vision

Computer vision describes machine understanding of images and videos, and significant advances have resulted in machines achieving human-level capabilities in areas such as object and scene recognition.24 Important healthcare-related work in computer vision includes image acquisition and interpretation in axial imaging, with applications including computer-aided diagnosis, image-guided surgery, and virtual colonoscopy.25 Initially influenced by statistical signal processing, the field has recently shifted significantly toward more data-intensive ML approaches, such as neural networks,26 and has been adapted to new applications.

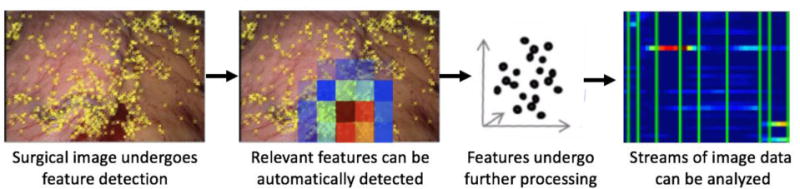

Using ML approaches, current work in computer vision is focusing on higher-level concepts such as image-based analysis of patient cohorts, longitudinal studies, and inference of more subtle conditions such as decision-making in surgery. For example, real-time analysis of laparoscopic video has identified the steps of a sleeve gastrectomy with 92.8% accuracy and flagged missing or unexpected steps.27 With one minute of high-definition surgical video estimated to contain 25 times the amount of data found in a high-resolution computed tomography image28, video could contain a wealth of actionable data.29, 30 Thus, while predictive video analysis is in its infancy, such work provides proof of concept that AI can be leveraged to process massive amounts of surgical data to identify or predict adverse events in real time for intraoperative clinical decision support (Figure 3).
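Once a video model has labeled each frame with an operative step, flagging missing or unexpected steps reduces to comparing sequences. The sketch below shows only that final comparison, assuming per-frame labels are already available; the step names are a hypothetical simplification of a sleeve gastrectomy, and the video-analysis method of the cited study is not reproduced.

```python
from itertools import groupby

# Hypothetical, simplified step names; the cited study's actual phase
# definitions are not reproduced here.
EXPECTED_STEPS = ["port placement", "liver retraction", "omentum division",
                  "stapling", "leak test", "closure"]

def collapse(frame_labels):
    """Turn per-frame step predictions into the ordered list of steps."""
    return [step for step, _ in groupby(frame_labels)]

def audit_steps(frame_labels, expected=EXPECTED_STEPS):
    observed = collapse(frame_labels)
    missing = [s for s in expected if s not in observed]
    unexpected = [s for s in observed if s not in expected]
    return observed, missing, unexpected

# Hypothetical per-frame output of a video classifier (one label per frame).
frames = (["port placement"] * 3 + ["liver retraction"] * 2 +
          ["omentum division"] * 4 + ["bleeding control"] * 2 +
          ["stapling"] * 5 + ["closure"] * 2)

observed, missing, unexpected = audit_steps(frames)
print("missing:", missing)
print("unexpected:", unexpected)
```

Real per-frame predictions flicker between labels, so practical systems smooth them over time before a comparison like this; otherwise every misclassified frame would appear as an "unexpected" step.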

Figure 3.

Computer vision utilizes mathematical techniques to analyze visual images or video streams as quantifiable features such as color, texture, and position that can then be used within a dataset to identify statistically meaningful events such as bleeding.
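As a minimal sketch of turning pixels into a quantifiable feature, the code below computes one color feature (the fraction of strongly red pixels in a frame) and flags frames where it exceeds an alert level. Frames are assumed to be lists of (R, G, B) tuples, and every threshold is a hypothetical, unvalidated value; real systems combine many color, texture, and position features within learned models.

```python
def red_fraction(frame, min_red=150, max_other=80):
    """Fraction of pixels whose color is strongly red.

    `frame` is a list of (R, G, B) tuples with 0-255 channels. The two
    thresholds are illustrative assumptions, not validated values.
    """
    red = sum(1 for r, g, b in frame
              if r >= min_red and g <= max_other and b <= max_other)
    return red / len(frame)

def flag_bleeding(frames, alert_fraction=0.3):
    """Return indices of frames whose red fraction exceeds the alert level."""
    return [i for i, f in enumerate(frames) if red_fraction(f) > alert_fraction]

# Hypothetical 4-pixel "frames": pink tissue tones versus a red-dominated frame.
tissue = [(200, 120, 110), (190, 130, 120), (180, 110, 100), (210, 140, 130)]
bleeding = [(200, 20, 25), (180, 30, 20), (190, 25, 30), (200, 120, 110)]

print(flag_bleeding([tissue, tissue, bleeding]))
```

A single hand-tuned feature like this is brittle under changes in lighting or camera white balance, which is one reason the field has moved toward learned features.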

Synergy Across AI and Other Fields

The promise of AI lies in applications that combine aspects of each of the above subfields with other elements of computing such as database management and signal processing.7 The increasing potential of AI in surgery is analogous to other recent technological developments (e.g. mobile phones, cloud computing) that have arisen from the intersection of hyper-cycle advances in both hardware and software (i.e. as hardware advances, so too does software and vice versa).

Synergy between fields is also important in expanding the applications of AI. By combining NLP and computer vision, Google (Mountain View, CA, USA) Image Search is able to display relevant pictures in response to a textual query such as a word or phrase. Furthermore, neural networks, specifically deep learning, now form a significant part of the architecture underlying various AI systems. For example, deep learning in NLP has allowed for significant improvements in the accuracy of translation (a reduction of roughly 60% in translation errors by Google Translate31), while its use in computer vision has improved the accuracy of image classification (a reduction of roughly 42% in classification error by AlexNet32).

Clinical applications of such work include the successful use of deep learning to create a computer vision algorithm that classifies smartphone images of benign and malignant skin lesions with accuracy comparable to that of dermatologists.33 NLP and ML analyses of postoperative colorectal patients demonstrated that prediction of anastomotic leak improved to 92% accuracy when different data types were analyzed in concert rather than individually (accuracy with vital signs alone, 65%; laboratory values alone, 74%; text data alone, 83%).34
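Two common strategies for analyzing data types "in concert" are early fusion, in which features from every source are concatenated into one input for a single model, and late fusion, in which separate per-modality models each produce a risk estimate that is then combined. The sketch below illustrates both; the patient values, feature choices, and per-modality probabilities are hypothetical, and the specific method of the cited study is not reproduced.

```python
def early_fusion(*feature_groups):
    """Concatenate features from several data types into one input vector
    for a single downstream model."""
    return [x for group in feature_groups for x in group]

def late_fusion(modality_probs, weights=None):
    """Combine risk estimates from separate per-modality models by a
    weighted average (equal weights unless given)."""
    weights = weights or {m: 1.0 for m in modality_probs}
    total = sum(weights[m] for m in modality_probs)
    return sum(weights[m] * p for m, p in modality_probs.items()) / total

# Hypothetical inputs for one postoperative patient.
vitals = [112.0, 38.4]     # heart rate (beats/min), temperature (deg C)
labs = [15.2, 180.0]       # white cell count (10^9/L), CRP (mg/L)
note_flags = [1.0, 0.0]    # "abdominal pain" mentioned, "ambulating" mentioned

print(early_fusion(vitals, labs, note_flags))

# Hypothetical leak probabilities from three separate per-modality models.
risk = late_fusion({"vitals": 0.45, "labs": 0.62, "text": 0.71})
print(round(risk, 2))
```

Early fusion lets a model learn interactions across data types (e.g., fever that matters only alongside a worrying note) but needs all sources for every patient; late fusion tolerates a missing modality but can only weigh, not combine, the separate signals.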

Early attempts at using AI for technical skills augmentation focused on small feats such as task deconstruction and autonomous performance of simple tasks (e.g. suturing, knot-tying).35, 36 Such efforts have been critical to establishing a foundation of knowledge for more complex AI tasks.37 For example, the Smart Tissue Autonomous Robot (STAR) developed by Johns Hopkins University was equipped with algorithms that allowed it to match or outperform human surgeons in autonomous ex-vivo and in-vivo bowel anastomosis in animal models.38

While truly autonomous robotic surgery will remain out of reach for some time, synergy across fields will likely accelerate the capabilities of AI in augmenting surgical care. Much of AI’s clinical potential lies in its ability to analyze combinations of structured and unstructured data (e.g. EMR notes, vital signs, laboratory values, video, and other aspects of “big data”) to generate clinical decision support. Each type of data could be analyzed independently or in concert with different types of algorithms to yield innovations.

The true potential of AI remains to be seen and could be difficult to predict at this time. Synergistic reactions between different technologies can lead to unanticipated revolutionary technology; for example, recent synergistic combinations of advanced robotics, computer vision, and neural networks led to the advent of autonomous cars. Similarly, independent components within AI and other fields could combine to create a force multiplier effect with unanticipated changes to healthcare delivery. Therefore, surgeons should be engaged in assessing the quality and applicability of AI advances to ensure appropriate translation to the clinical sector.

Limitations of AI

As with any new technology, AI and each of its subfields are susceptible to unrealistic expectations from media hype that can lead to significant disappointment and disillusionment.39 AI is not a “magic bullet” that can yield answers to all questions. There are instances where traditional analytical methods can outperform ML40 or where adding ML does not improve on their results.41 As with any scientific endeavor, use of AI hinges on whether the correct scientific question is being asked and whether one has the appropriate data to answer that question.

ML provides a powerful tool with which to uncover subtle patterns in data. It excels at detecting patterns and demonstrating correlations that may be missed by traditional methods, and these results can then be used by investigators to uncover new clinical questions or generate novel hypotheses about surgical diseases and management.42, 43 However, there are both costs and risks to utilizing ML incorrectly.

The outputs of ML and other AI analyses are limited by the types and accuracy of available data. Systematic biases in clinical data collection can affect the type of patterns AI recognizes or the predictions it may make,44, 45 and this can especially affect women and racial minorities because of long-standing under-representation in clinical trial and patient registry populations.46–48 Supervised learning depends on labeled data (such as the variables currently abstracted for surgery-specific patient registries), which can be expensive to gather, and poorly labeled data will yield poor results. For example, a publicly available National Institutes of Health (NIH) dataset of chest x-rays and reports has been used to develop AI capable of diagnosing findings on chest x-rays. NLP was used to mine radiology reports to generate labels for the chest x-rays, and these labels were used to train a deep learning network to recognize pathology on images, with particularly good accuracy in identifying pneumothorax.49 However, an in-depth analysis of the dataset by Oakden-Rayner50 revealed that some of the results may have been driven by improperly labeled data: most of the x-rays labeled as pneumothorax also had a chest tube present, raising concern that the network was identifying chest tubes rather than pneumothoraces as intended.
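The chest tube concern can be made concrete with invented numbers. The sketch below assumes a hypothetical evaluation set in which most pneumothorax films also show a chest tube, and a "model" that has learned only to detect tubes. Overall accuracy looks respectable, yet the model misses every untreated pneumothorax, which is exactly the clinically urgent subgroup. The counts are fabricated for illustration and are not taken from the NIH dataset.

```python
# Hypothetical evaluation set: (pneumothorax present, chest tube present).
cases = (
    [(1, 1)] * 6     # treated pneumothoraces: tube already in place
    + [(1, 0)] * 2   # untreated pneumothoraces: the clinically urgent cases
    + [(0, 0)] * 6   # normal films
    + [(0, 1)] * 1   # tube in place, lung already re-expanded
)

def shortcut_model(has_tube):
    """A hypothetical 'model' that has learned to spot chest tubes and
    calls every film with a tube a pneumothorax."""
    return has_tube

def accuracy(records):
    correct = sum(shortcut_model(tube) == ptx for ptx, tube in records)
    return correct / len(records)

overall = accuracy(cases)
untreated = [c for c in cases if c == (1, 0)]
detected_untreated = accuracy(untreated)  # equals sensitivity in this subgroup

print(f"overall accuracy: {overall:.0%}")
print(f"untreated pneumothoraces detected: {detected_untreated:.0%}")
```

Reporting performance separately for clinically meaningful subgroups, rather than a single overall figure, is one practical way to expose this kind of shortcut.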

An important concern regarding AI algorithms involves their interpretability51 because techniques such as neural networks are based on a “black box” design.52 While the automated nature of neural networks allows for detection of patterns missed by humans, human scientists are left with little ability to assess how or why such patterns were discerned by the computer. Medicine has been quick to recognize that the accountability of algorithms, the safety and verifiability of automated analyses, and the implications of these analyses for human-machine interaction can affect the utility of AI in clinical practice.53 Such concerns have hindered the use of AI algorithms in many applied fields, from medicine to autonomous driving, and have pushed data scientists to improve the interpretability of AI analyses.54, 55 However, many of these efforts remain in their infancy, and surgeon input early in the design of AI algorithms may help improve the accountability and interpretability of big data analyses.
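One widely used, model-agnostic way to peer into a black box is permutation importance: scramble one input at a time and measure how much performance drops. This technique is named here as an example and is not claimed to be the method of the cited interpretability work. In the sketch below the "black box" and data are hypothetical, and a deterministic cyclic shift stands in for the usual random shuffle so that the result is reproducible.

```python
def black_box(row):
    """Stand-in for an opaque model; it happens to use only feature 0."""
    return int(row[0] > 0.5)

def accuracy(model, rows, labels):
    return sum(model(r) == y for r, y in zip(rows, labels)) / len(rows)

def permutation_importance(model, rows, labels):
    """Accuracy lost when each feature's column is scrambled.

    A cyclic shift by one row serves as the permutation so the result is
    reproducible; common practice averages over several random shuffles.
    """
    baseline = accuracy(model, rows, labels)
    importances = []
    for j in range(len(rows[0])):
        column = [r[j] for r in rows]
        shifted = column[1:] + column[:1]
        permuted = [r[:j] + (v,) + r[j + 1:] for r, v in zip(rows, shifted)]
        importances.append(baseline - accuracy(model, permuted, labels))
    return importances

# Hypothetical rows of (feature 0, feature 1) with matching outcomes.
rows = [(0.1, 0.9), (0.9, 0.2), (0.2, 0.8), (0.8, 0.1), (0.3, 0.7), (0.7, 0.3)]
labels = [0, 1, 0, 1, 0, 1]

print(permutation_importance(black_box, rows, labels))
```

Such methods reveal which inputs a model relies on, not why, and they cannot distinguish a true clinical signal from a shortcut such as a chest tube; clinical judgment is still needed to interpret the result.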

Furthermore, despite advances in causal inference, AI cannot yet determine causal relationships in data at a level necessary for clinical implementation nor can it provide an automated clinical interpretation of its analyses.56 While big data can be rich with variables, it is poor in providing the appropriate clinical context with which to interpret the data. Human physicians, therefore, must critically evaluate the predictions generated by AI and interpret the data in clinically meaningful ways.

Implications for Surgeons

The first widespread uses of AI are likely to be in the form of computer augmentation of human performance. Clinician-machine interaction has already been demonstrated to augment decision-making: pathologists have used AI to decrease their error rate in recognizing cancer-positive lymph nodes from 3.4% to 0.5%.57 Furthermore, by improving identification of high-risk patients, AI can assist surgeons and radiologists in reducing the rate of lumpectomy by 30% in patients whose breast needle biopsies show high-risk lesions that are ultimately found to be benign after surgical excision.58

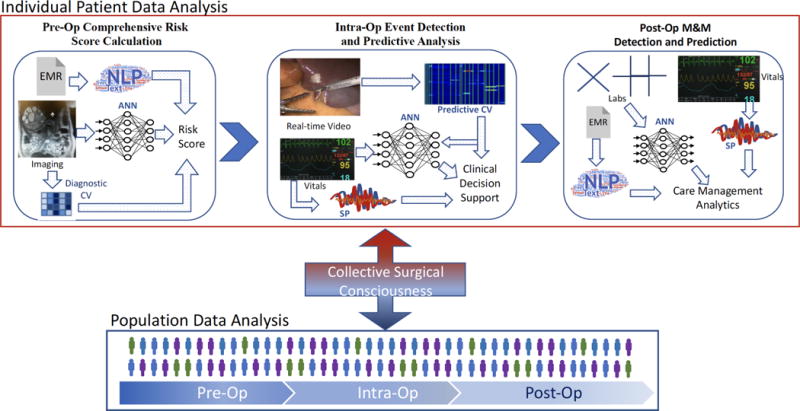

In the future, a surgeon will likely see AI analysis of population and patient-specific data augmenting each phase of care (Figure 4). Preoperatively, a patient undergoing evaluation for bariatric surgery may be tracking weight, glucose, meals, and activity through mobile applications and fitness trackers, with the data feeding into their EMR.59–61 Automated analysis of all preoperative mobile and clinical data could provide a more patient-specific risk score for operative planning and yield valuable predictors for postoperative care. The surgeon could then augment their decision-making intraoperatively based on real-time analysis of intraoperative progress that integrates EMR data with operative video, vital signs, instrument/hand tracking, and electrosurgical energy usage. Intraoperative monitoring of such different types of data could lead to real-time prediction and avoidance of adverse events. Integration of pre-, intra-, and post-operative data could help to monitor recovery and predict complications. After discharge, post-operative data from personal devices could continue to be integrated with data from their hospitalization to maximize weight loss and resolution of obesity-related comorbidities.62 Such an example could be applied to any type of surgical care with the potential for truly patient-specific, patient-centered care.

Figure 4.

Integration of multimodal data with AI can augment surgical decision-making across all phases of care both at the individual patient and at the population level. An integrated AI serving as a “collective surgical consciousness” serves as the conduit to add individual patient data to a population dataset while drawing from population data to provide clinical decision support during individual cases.

CV: computer vision, ANN: artificial neural network, NLP: natural language processing, SP: signal processing.

AI could be utilized to augment sharing of knowledge through the collection of massive amounts of operative video and EMR data across many surgeons around the world to generate a database of practices and techniques that can be assessed against outcomes. Video databases could use computer vision to capture rare cases or anatomy, aggregating and integrating data across pre-, intra-, and post-operative phases of care.63, 64 Such powerful analyses could create truly disruptive innovation in generating and validating evidence-based best practices to improve care quality.

The Surgeon’s Role

With big data analytics predicted to yield healthcare savings of between $300 billion and $450 billion annually in the US alone65, there is great economic incentive to incorporate AI and big data into multiple elements of our healthcare system. Surgeons are uniquely positioned to help drive these innovations rather than passively waiting for the technology to become useful.

Since lack of data can limit the predictions made by AI, surgeons should seek to expand involvement in clinical data registries to ensure all patients are included. These can include registries at the local, national, or international levels. As data cleaning techniques improve, registries could become linked to expand their utility and increase the availability of clinical, genomic, proteomic, radiographic, and pathologic data.

Surgeons, as the key stakeholders in adoption of AI-based technologies for surgical care, should seek opportunities to partner with data scientists to capture novel forms of clinical data and help generate meaningful interpretations of that data.66 Surgeons have the clinical insight that can guide data scientists and engineers to answer the right questions with the right data, while engineers can provide automated, computational solutions to data analytics problems that would otherwise be too costly or time-consuming for manual methods.

Technology-based dissemination of surgical practice can empower every surgeon with the ability to improve the quality of global surgical care. Given that research has demonstrated that surgical technique and skill correlate with patient outcomes,67, 68 AI could help pool surgical experience, similar to efforts in genomics and biobanks69, to bring the decision-making capabilities and techniques of the global surgical community into every operation. Big data could be leveraged to create a “collective surgical consciousness” that carries the entirety of the field’s knowledge, leading to technology-augmented real-time clinical decision support, such as intraoperative, GPS-like guidance.

Surgeons can provide value to data scientists by imparting their understanding of how seemingly simple topics, such as anatomy and physiology, relate to more complex phenomena, such as disease pathophysiology, operative course, or postoperative complications. These types of relationships are important for appropriately modeling and predicting clinical events, and they are critical to improving the interpretability of ML approaches. Surgeons and engineers alike should demand transparency and interpretability in algorithms so that AI can be held accountable for its predictions and recommendations. With patients’ lives at stake, the surgical community should expect automated systems that augment human care to at least meet, if not exceed, the standards to which clinicians and scientists are held.

Surgeons are ultimately the ones providing clinical information to patients and will have to establish a patient communication framework through which to relay the data made accessible by AI.70 An understanding of AI will be key to appropriately conveying the results of complex analyses such as risk predictions, prognostications, and treatment algorithms to patients within the appropriate clinical context.71, 72

Working with patients, surgeons should develop and deliver the narrative behind optimal utilization of AI in patient care, avoiding complications that can arise when external forces (e.g. regulators, administrators) mandate implementation of new technologies73 without fully evaluating potential impacts on those who would use the technology most. If appropriately developed and implemented, AI has the potential to revolutionize the way surgery is taught and practiced with the promise of a future optimized for the highest quality patient care.

Conclusion

AI is expanding its footprint in clinical systems ranging from databases to intraoperative video analysis. The unique nature of surgical practice leaves surgeons well-positioned to help usher in the next phase of AI, one focused on generating evidence-based, real-time clinical decision support designed to optimize patient care and surgeon workflow.

Acknowledgments

Funding Support: Daniel Hashimoto is financially supported by NIH grant T32DK007754-16A1 and the Massachusetts General Hospital Edward D. Churchill Fellowship.

Disclosures: Daniel Hashimoto is financially supported by NIH grant T32DK007754-16A1 and the Massachusetts General Hospital Edward D. Churchill Fellowship. Ozanan Meireles and Daniel Hashimoto have grant funding from the Natural Orifice Surgery Consortium for Assessment and Research (NOSCAR) for research related to computer vision in endoscopic surgery. Guy Rosman and Daniela Rus are funded by Toyota Research Institute (TRI) for research on autonomous vehicles. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH, NOSCAR, or TRI.

References

- 1. Bellman R. An introduction to artificial intelligence: Can computers think? Thomson Course Technology. 1978.

- 2. Lewis-Kraus G. The Great A.I. Awakening. New York Times; 2016.

- 3. Keats EJ. John Henry: An American Legend. Pantheon; 1965.

- 4. Chen JH, Asch SM. Machine Learning and Prediction in Medicine — Beyond the Peak of Inflated Expectations. New England Journal of Medicine. 2017;376(26):2507–2509. doi: 10.1056/NEJMp1702071.

- 5. Buchanan BG. A (very) brief history of artificial intelligence. AI Magazine. 2005;26(4):53.

- 6. CBInsights. The 2016 AI Recap: Startups See Record High In Deals And Funding. 2017. Available at: https://www.cbinsights.com/blog/artificial-intelligence-startup-funding/. Accessed June 4, 2017.

- 7. Deo RC. Machine learning in medicine. Circulation. 2015;132(20):1920–1930. doi: 10.1161/CIRCULATIONAHA.115.001593.

- 8. Sutton RS, Barto AG. Reinforcement learning: An introduction. Vol. 1. MIT Press; Cambridge: 1998.

- 9. Skinner BF. The behaviour of organisms: An experimental analysis. D. Appleton-Century Company Incorporated; 1938.

- 10. Bothe MK, Dickens L, Reichel K, et al. The use of reinforcement learning algorithms to meet the challenges of an artificial pancreas. Expert review of medical devices. 2013;10(5):661–673. doi: 10.1586/17434440.2013.827515.

- 11. Miller RA, Pople HEJ, Myers JD. Internist-I, an Experimental Computer-Based Diagnostic Consultant for General Internal Medicine. New England Journal of Medicine. 1982;307(8):468–476. doi: 10.1056/NEJM198208193070803.

- 12. Cruz JA, Wishart DS. Applications of machine learning in cancer prediction and prognosis. Cancer informatics. 2006;2:59.

- 13. Soguero-Ruiz C, Fei WM, Jenssen R, et al. Data-driven Temporal Prediction of Surgical Site Infection. AMIA Annu Symp Proc. 2015;2015:1164–73.

- 14. Wang PS, Walker A, Tsuang M, et al. Strategies for improving comorbidity measures based on Medicare and Medicaid claims data. Journal of clinical epidemiology. 2000;53(6):571–578. doi: 10.1016/s0895-4356(00)00222-5.

- 15. Bergquist S, Brooks G, Keating N, et al. Classifying lung cancer severity with ensemble machine learning in health care claims data. Proceedings of Machine Learning Research. 2017. In press.

- 16. Nadkarni PM, Ohno-Machado L, Chapman WW. Natural language processing: an introduction. Journal of the American Medical Informatics Association. 2011;18(5):544–551. doi: 10.1136/amiajnl-2011-000464.

- 17. Murff HJ, FitzHenry F, Matheny ME, et al. Automated identification of postoperative complications within an electronic medical record using natural language processing. JAMA. 2011;306(8):848–855. doi: 10.1001/jama.2011.1204.

- 18. Melton GB, Hripcsak G. Automated detection of adverse events using natural language processing of discharge summaries. Journal of the American Medical Informatics Association. 2005;12(4):448–457. doi: 10.1197/jamia.M1794.

- 19. Friedman C, Shagina L, Lussier Y, et al. Automated encoding of clinical documents based on natural language processing. Journal of the American Medical Informatics Association. 2004;11(5):392–402. doi: 10.1197/jamia.M1552.

- 20. Soguero-Ruiz C, Hindberg K, Rojo-Alvarez JL, et al. Support Vector Feature Selection for Early Detection of Anastomosis Leakage From Bag-of-Words in Electronic Health Records. IEEE J Biomed Health Inform. 2016;20(5):1404–15. doi: 10.1109/JBHI.2014.2361688.

- 21. Hinton GE, Osindero S, Teh Y-W. A fast learning algorithm for deep belief nets. Neural computation. 2006;18(7):1527–1554. doi: 10.1162/neco.2006.18.7.1527.

- 22.Mofidi R, Duff MD, Madhavan KK, et al. Identification of severe acute pancreatitis using an artificial neural network. Surgery. 2007;141:59–66. doi: 10.1016/j.surg.2006.07.022.

- 23.Monsalve-Torra A, Ruiz-Fernandez D, Marin-Alonso O, et al. Using machine learning methods for predicting inhospital mortality in patients undergoing open repair of abdominal aortic aneurysm. J Biomed Inform. 2016;62:195–201. doi: 10.1016/j.jbi.2016.07.007.

- 24.Szeliski R. Computer vision: algorithms and applications. Springer Science & Business Media; 2010.

- 25.Kenngott HG, Wagner M, Nickel F, et al. Computer-assisted abdominal surgery: new technologies. Langenbecks Arch Surg. 2015;400(3):273–81. doi: 10.1007/s00423-015-1289-8.

- 26.Egmont-Petersen M, de Ridder D, Handels H. Image processing with neural networks—a review. Pattern recognition. 2002;35(10):2279–2301.

- 27.Volkov M, Hashimoto DA, Rosman G, et al. IEEE International Conference on Robotics and Automation. Singapore: 2017. Machine Learning and Coresets for Automated Real-Time Video Segmentation of Laparoscopic and Robot-Assisted Surgery; pp. 754–759.

- 28.Natarajan P, Frenzel JC, Smaltz DH. Demystifying Big Data and Machine Learning for Healthcare. CRC Press; 2017.

- 29.Bonrath EM, Gordon LE, Grantcharov TP. Characterising ‘near miss’ events in complex laparoscopic surgery through video analysis. BMJ Qual Saf. 2015;24(8):516–21. doi: 10.1136/bmjqs-2014-003816.

- 30.Grenda TR, Pradarelli JC, Dimick JB. Using Surgical Video to Improve Technique and Skill. Annals of Surgery. 2016;264(1):32–33. doi: 10.1097/SLA.0000000000001592.

- 31.Wu Y, Schuster M, Chen Z, et al. Google’s neural machine translation system: Bridging the gap between human and machine translation. arXiv preprint arXiv:1609.08144. 2016.

- 32.Krizhevsky A, Sutskever I, Hinton GE. ImageNet classification with deep convolutional neural networks. Advances in neural information processing systems. 2012:1097–1105.

- 33.Esteva A, Kuprel B, Novoa RA, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542(7639):115–118. doi: 10.1038/nature21056.

- 34.Soguero-Ruiz C, Hindberg K, Mora-Jimenez I, et al. Predicting colorectal surgical complications using heterogeneous clinical data and kernel methods. J Biomed Inform. 2016;61:87–96. doi: 10.1016/j.jbi.2016.03.008.

- 35.DiPietro R, Lea C, Malpani A, et al. International Conference on Medical Image Computing and Computer-Assisted Intervention. Springer International Publishing; 2016. Recognizing surgical activities with recurrent neural networks; pp. 551–558.

- 36.Zappella L, Béjar B, Hager G, et al. Surgical gesture classification from video and kinematic data. Medical image analysis. 2013;17(7):732–745. doi: 10.1016/j.media.2013.04.007.

- 37.Moustris GP, Hiridis SC, Deliparaschos KM, et al. Evolution of autonomous and semi-autonomous robotic surgical systems: a review of the literature. Int J Med Robot. 2011;7(4):375–92. doi: 10.1002/rcs.408.

- 38.Shademan A, Decker RS, Opfermann JD, et al. Supervised autonomous robotic soft tissue surgery. Science Translational Medicine. 2016;8(337):337ra64. doi: 10.1126/scitranslmed.aad9398.

- 39.Linden A, Fenn J. Strategic Analysis Report Nº R-20-1971. Gartner, Inc; 2003. Understanding Gartner’s hype cycles.

- 40.Austin PC, Tu JV, Lee DS. Logistic regression had superior performance compared with regression trees for predicting in-hospital mortality in patients hospitalized with heart failure. Journal of clinical epidemiology. 2010;63(10):1145–1155. doi: 10.1016/j.jclinepi.2009.12.004.

- 41.Bellman RE. Adaptive control processes: a guided tour. Princeton university press; 2015.

- 42.Rudin C, Dunson D, Irizarry R, et al. Discovery with Data: Leveraging Statistics with Computer Science to Transform Science and Society. American Statistical Association White Paper. 2014.

- 43.National Research Council. Frontiers in massive data analysis. National Academies Press; 2013.

- 44.Jüni P, Altman DG, Egger M. Systematic reviews in health care: assessing the quality of controlled clinical trials. BMJ: British Medical Journal. 2001;323(7303):42. doi: 10.1136/bmj.323.7303.42.

- 45.Hopewell S, Loudon K, Clarke MJ, et al. Publication bias in clinical trials due to statistical significance or direction of trial results. The Cochrane Library. 2009 doi: 10.1002/14651858.MR000006.pub3.

- 46.Murthy VH, Krumholz HM, Gross CP. Participation in cancer clinical trials: race-, sex-, and age-based disparities. JAMA. 2004;291(22):2720–2726. doi: 10.1001/jama.291.22.2720.

- 47.Chang AM, Mumma B, Sease KL, et al. Gender bias in cardiovascular testing persists after adjustment for presenting characteristics and cardiac risk. Academic Emergency Medicine. 2007;14(7):599–605. doi: 10.1197/j.aem.2007.03.1355.

- 48.Douglas PS, Ginsburg GS. The evaluation of chest pain in women. New England Journal of Medicine. 1996;334(20):1311–1315. doi: 10.1056/NEJM199605163342007.

- 49.Wang X, Peng Y, Lu L, et al. IEEE CVPR. Honolulu, HI: 2017. ChestX-ray8: Hospital-scale Chest X-ray Database and Benchmarks on Weakly-Supervised Classification and Localization of Common Thorax Diseases.

- 50.Oakden-Rayner L. Luke Oakden-Rayner. Wordpress; 2017. Exploring the ChestXray14 dataset: Problems.

- 51.Ribeiro MT, Singh S, Guestrin C. Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. ACM; 2016. "Why should I trust you?": Explaining the predictions of any classifier; pp. 1135–1144.

- 52.Sussillo D, Barak O. Opening the black box: low-dimensional dynamics in high-dimensional recurrent neural networks. Neural computation. 2013;25(3):626–649. doi: 10.1162/NECO_a_00409.

- 53.Cabitza F, Rasoini R, Gensini GF. Unintended consequences of machine learning in medicine. JAMA. 2017;318(6):517–518. doi: 10.1001/jama.2017.7797.

- 54.Sturm I, Lapuschkin S, Samek W, et al. Interpretable deep neural networks for single-trial EEG classification. Journal of neuroscience methods. 2016;274:141–145. doi: 10.1016/j.jneumeth.2016.10.008.

- 55.Tan S, Sim KC, Gales M. Automatic Speech Recognition and Understanding (ASRU), 2015 IEEE Workshop on. IEEE; 2015. Improving the interpretability of deep neural networks with stimulated learning; pp. 617–623.

- 56.Pearl J. Causality: Models, Reasoning and Inference. 2. Cambridge, UK: Cambridge University Press; 2009.

- 57.Wang D, Khosla A, Gargeya R, et al. Deep learning for identifying metastatic breast cancer. arXiv preprint arXiv:1606.05718. 2016.

- 58.Bahl M, Barzilay R, Yedidia AB, et al. High-Risk Breast Lesions: A Machine Learning Model to Predict Pathologic Upgrade and Reduce Unnecessary Surgical Excision. Radiology. 0(0):170549. doi: 10.1148/radiol.2017170549.

- 59.Harvey C, Koubek R, Begat V, et al. Usability Evaluation of a Blood Glucose Monitoring System With a Spill-Resistant Vial, Easier Strip Handling, and Connectivity to a Mobile App: Improvement of Patient Convenience and Satisfaction. J Diabetes Sci Technol. 2016;10(5):1136–41. doi: 10.1177/1932296816658058.

- 60.Horner GN, Agboola S, Jethwani K, et al. Designing Patient-Centered Text Messaging Interventions for Increasing Physical Activity Among Participants With Type 2 Diabetes: Qualitative Results From the Text to Move Intervention. JMIR Mhealth Uhealth. 2017;5(4):e54. doi: 10.2196/mhealth.6666.

- 61.Ryu B, Kim N, Heo E, et al. Impact of an Electronic Health Record-Integrated Personal Health Record on Patient Participation in Health Care: Development and Randomized Controlled Trial of MyHealthKeeper. J Med Internet Res. 2017;19(12):e401. doi: 10.2196/jmir.8867.

- 62.Elvin-Walsh L, Ferguson M, Collins PF. Nutritional monitoring of patients post-bariatric surgery: implications for smartphone applications. J Hum Nutr Diet. 2017 doi: 10.1111/jhn.12492.

- 63.Langerman A, Grantcharov TP. Are We Ready for Our Close-up?: Why and How We Must Embrace Video in the OR. Annals of Surgery. 2017 doi: 10.1097/SLA.0000000000002232.

- 64.Hashimoto DA, Rosman G, Rus D, et al. Surgical Video in the Age of Big Data. Annals of Surgery. 2017 doi: 10.1097/SLA.0000000000002493. Publish Ahead of Print.

- 65.Groves P, Kayyali B, Knott D, et al. The ‘big data’ revolution in healthcare: Accelerating value and innovation. 2016.

- 66.Weber GM, Mandl KD, Kohane IS. Finding the missing link for big biomedical data. JAMA. 2014;311(24):2479–80. doi: 10.1001/jama.2014.4228.

- 67.Birkmeyer JD, Finks JF, O’Reilly A, et al. Surgical skill and complication rates after bariatric surgery. N Engl J Med. 2013;369(15):1434–42. doi: 10.1056/NEJMsa1300625.

- 68.Scally CP, Varban OA, Carlin AM, et al. Video Ratings of Surgical Skill and Late Outcomes of Bariatric Surgery. JAMA Surg. 2016;151(6):e160428. doi: 10.1001/jamasurg.2016.0428.

- 69.O’Shea P. Future medicine shaped by an interdisciplinary new biology. Lancet. 2012;379(9825):1544–50. doi: 10.1016/S0140-6736(12)60476-0.

- 70.Emanuel EJ, Emanuel LL. Four models of the physician-patient relationship. JAMA. 1992;267(16):2221–2226.

- 71.Chen JH, Asch SM. Machine Learning and Prediction in Medicine - Beyond the Peak of Inflated Expectations. N Engl J Med. 2017;376(26):2507–2509. doi: 10.1056/NEJMp1702071.

- 72.Obermeyer Z, Emanuel EJ. Predicting the Future - Big Data, Machine Learning, and Clinical Medicine. N Engl J Med. 2016;375(13):1216–9. doi: 10.1056/NEJMp1606181.

- 73.Miller RH, Sim I. Physicians’ use of electronic medical records: barriers and solutions. Health affairs. 2004;23(2):116–126. doi: 10.1377/hlthaff.23.2.116.