Abstract

Explicit emphasis on teaching science process skills leads to both gains in the skills themselves and, strikingly, deeper understanding of content. Here, we created and tested a series of online, interactive tutorials with the goal of helping undergraduate students develop science process skills. We designed the tutorials in accordance with evidence-based multimedia design principles and student feedback from usability testing. We then tested the efficacy of the tutorials in an introductory undergraduate biology class. On the basis of a multivariate ordinary least-squares regression model, students who received the tutorials are predicted to score 0.82 points higher on a 15-point science process skill assessment than their peers who received traditional textbook instruction on the same topic. This moderate but significant impact indicates that well-designed online tutorials can be more effective than traditional ways of teaching science process skills to undergraduate students. We also found trends that suggest the tutorials are especially effective for nonnative English-speaking students. However, because of the limited sample size, we could not rule out that these trends reflect chance variation in the student group sampled.

INTRODUCTION

Science Process Skills Are an Important Component of Undergraduate Biology Curricula

A primary goal of undergraduate biology education is to have students develop the ability to think like a scientist. That is, students must develop the ability to ask and answer meaningful biological questions, the core of scientific inquiry. To achieve this goal, students need to master an underlying set of skills, including the ability to ask a testable question, propose a hypothesis, design an experiment, analyze data, draw conclusions from evidence, and communicate findings. We refer to these skills as “science process skills.” Reports by the American Association for the Advancement of Science (AAAS; 2011) and other biology education leaders (Wright and Klymkowsky, 2005; Krajcik and Sutherland, 2010) emphasize teaching these skills as a key goal in improving undergraduate education. Faculty also overwhelmingly value these skills in their students but have traditionally omitted them from course design for fear of losing time devoted to important subject content (Coil et al., 2010). Here, we offer an alternative approach to incorporating science process skills into course curricula. We created a series of interactive online tutorials that explicitly teach science process skills and supplement classroom learning with minimal added effort from instructors.

Recent attempts at redesigning undergraduate biology courses show that placing an explicit emphasis on science process skills leads to both gains in the skills themselves and, strikingly, greater retention of subject content. Students who participated in a supplemental course at the University of Washington that taught science process skills and other aspects of scientific culture earned higher grades in introductory biology classes than their peers who did not participate in the course (Dirks and Cunningham, 2006; Buchwitz et al., 2012). Further supporting the benefits of learning science process skills, students’ scores on a science process skills assessment taken after the supplemental course correlated with their introductory biology course grades. This connection between science process skills and overall course grade underscores the value of explicit instruction in these skills. In another example, students at Brigham Young University participated in a redesigned cell biology course that placed explicit emphasis on data analysis, interpretation, and communication. These students improved on both data analysis and conceptual problems during the course (Kitchen et al., 2003), and they scored higher than students in the traditional, content-focused cell biology course on both analysis and recall problems. Other instructors have used primary literature to emphasize critical thinking and science process skills in redesigned courses. The CREATE method, developed at the City University of New York, teaches the nature of science through a series of primary research papers produced by a single research group on a specific topic (Hoskins et al., 2007). Students use active-learning approaches to apply science process skills such as hypothesis generation, experimental design, data analysis, and scientific communication. This approach has yielded gains in critical thinking and experimental design, as well as improved student attitudes about science, in a broad range of postsecondary settings from community colleges to graduate programs (Hoskins et al., 2011; Gottesman and Hoskins, 2013; Stevens and Hoskins, 2014; Kenyon et al., 2016). While the well-defined CREATE method has proven highly effective in most implementations studied, some instances show no extra gains compared with other active, literature-focused pedagogies (Segura-Totten and Dalman, 2013). In each of these examples, explicit instruction in science process skills led to greater facility with those skills and better content learning in the subject area. Taken together, these results illustrate a clear value in emphasizing science process skills for undergraduates.

Barriers to Teaching Science Process Skills

While the value of teaching science process skills becomes clearer every year, significant barriers still prevent their incorporation into undergraduate curricula. Chief among the barriers is the time commitment required for instruction. As any instructor knows, time with students is limited, and instructors must allocate class time thoughtfully to achieve the course goals. This leads to the familiar debate between covering as much subject material as possible versus a “less is more” approach that focuses on skill development and inquiry while covering a narrower range of topics. Up to this point, most incorporation of science process skills has focused on course-wide redesigns and long-term instruction, as described earlier. These large-scale changes require significant investment of the instructor’s time and reallocation of class time. Other attempts at emphasizing science process skills have focused on their integration into laboratory and research experiences, an option not available to all courses and instructors (DebBurman, 2002; Shi et al., 2011; Brownell et al., 2015; Woodham et al., 2016). Short of these large changes, could smaller-scale incorporation of science process skills instruction prove useful to student learning? Little research has evaluated the effect of limited, stand-alone instruction in science process skills.

Limitations of Textbook-Based Instruction and Benefits of Interactive Digital Instruction

As often as not, students’ only exposure to science process skills comes as an assigned reading in the first chapter of a science textbook. The major problem with textbook-based learning is that most students do not read their textbooks: data collected across multiple science disciplines show that 70–80% of students do not read the textbook before coming to class (Clump et al., 2004; Podolefsky and Finkelstein, 2006; Stelzer et al., 2009). Although textbook reading correlates positively with grades, most students, for a variety of reasons, choose to skip it.

Importantly, this resistance to textbook-based learning is being met with new ways of engaging students. Online, multimedia-based learning engages students through interactive, multisensory instruction. Physics departments, for example, have shown improvements in students’ understanding of basic physics content after implementing online multimedia-based modules, compared with traditional textbook-based learning (Stelzer et al., 2009). Harnessing audio, visuals, text, animation, and user interactions, multimedia design delivers information in a variety of ways. Research on multimedia learning indicates that students receive and process information through two primary channels: auditory and visual (Mayer, 2008). By engaging both channels simultaneously, students can process larger amounts of information, which improves retention. Using this medium, instructors can reduce cognitive load for their students and enable faster, better-retained learning (Mayer and Moreno, 2003; Evans and Gibbons, 2007; Mayer, 2008; Domagk et al., 2010). Many studies show that online multimedia learning not only helps students improve their understanding of the concepts presented but also helps them integrate new concepts with their existing knowledge base (Carpi, 2001; Carpi and Mikhailova, 2003; McClean et al., 2005; Silver and Nickel, 2005; Chudler and Bergsman, 2014; Goff et al., 2017).

Interactive Digital Modules Created to Teach Science Process Skills

This paper outlines best practices for creating useful and engaging multimedia-based tutorials that give students an alternative way to learn key material outside the classroom. Using these principles, we created a series of seven interactive digital modules, each addressing a different science process skill, and incorporated them into a large-enrollment introductory biology course. We then assessed the tutorials’ effect on students’ ability to apply those skills compared with students who received only traditional textbook-based instruction. Although we tailored our design strategy to online, multimedia-based learning, instructors can apply many of the same principles to lecture-based design as well.

TUTORIAL DESIGN

Tutorial Design Methods

To create the online tutorials, we used Articulate Storyline 2 software (Articulate Global), along with VideoScribe software (Sparkol Limited, Bristol, UK) to create animated whiteboard-style videos. All tutorial modules were administered as SCORM 2004 packages via the Moodle learning management system, version 3.0.

Multimedia Design Principles

Our online science process tutorial was divided into seven modules outlining the different steps of the scientific process. Although science does not always progress linearly, we designed these tutorials to be completed in sequential order. Following the principle of backward design (Wiggins and McTighe, 2005), we first established a concrete set of learning objectives for each of the seven modules (Supplemental Material 2). Each module covered one to five learning objectives, guided by Bloom’s taxonomy, to promote higher-level, critical-thinking, and analytical skills (Bloom et al., 1956; Crowe et al., 2008).

Once completed, these objectives became the road map that guided both the content and the assessments. When choosing the format for the tutorial, we felt it important that our students experience the process of scientific discovery firsthand. For many years, educational research has supported the use of stories and case studies to teach and make a personal connection with science students (Martin and Brouwer, 1991). Therefore, our science process tutorial follows the storyline of two Nobel laureates, Dr. Barry Marshall and Dr. Robin Warren, and their quest to discover the underlying causes of ulcers. From reading primary literature to establishing experiments to analyzing and presenting data, the goal of the online tutorial was to simulate the process of science through the lens of two experienced researchers. Importantly, our online platform offered students an opportunity to learn through interactive engagement rather than passive reading of text.

Having selected learning objectives and the overarching narrative structure for the tutorial, we next turned to designing how the students would interact with the tutorial. A growing body of evidence suggests that multimedia platforms, if properly designed, are effective tools for learning scientific material. For each of the seven individual modules, we followed evidence-based principles of multimedia design outlined, in large part, by Richard Mayer, with special attention placed on the coherency and redundancy principles (Mayer, 2008) (Supplemental Material 1).

The coherency principle suggests limiting visuals that do not support the overall learning objectives of the project, because every item occupying the learning space increases the learner’s cognitive load. Therefore, to maximize learning gains and limit cognitive overload, we included only diagrams and text that support the learning outcomes of each scene. Close adherence to the coherency principle was especially important for this tutorial because of the limited screen space. For each visual scene presented to students, we limited written text. This was especially important in narrated scenes, where we avoided duplicating the audio narration with verbatim on-screen text, a practice that closely aligns with the redundancy principle.

The redundancy principle calls for limiting printed text in narrated graphics. In other words, narration should describe an image rather than reread words on the screen. Presenting the same words in print and in narration at the same time can overload the learner’s working memory, making it harder to learn the topic at hand. Instead, research suggests that it is better to replace the printed words with an image that displays the narrated process.

Finally, to reduce cognitive load, we created a consistent user experience throughout the tutorial (Mayer, 2008; Blummer and Kritskaya, 2009). A consistent navigation interface with clearly labeled buttons allowed students to focus more of their mental capacity on the content presented. We also included repeated prompts and signals that communicate to students what information is most important, when a section is complete, what resources are available, and when they are required to make a decision. Approaches as simple as highlighting key words and using a consistent Next button allow students to reduce extraneous processing.

Tutorial Audience

We designed our tutorials for undergraduate students with little or no background in the biological sciences. Target populations included students majoring in biology, other science majors, and students majoring in nonscience subjects. Because the tutorials introduce and develop fundamental science process skills through the lens of biology, they are appropriate for this broad audience. We were also interested in designing the tutorials to aid nonnative English-speaking (NNES) students. Interactive and online learning methods can be especially useful for NNES students, because they allow students to control the pace of their learning, immediately repeat difficult material, and use visual representations that do not depend on written text. Simulations and learning games are especially effective for NNES students (Abdel, 2002). Recognizing the potential our tutorials hold for NNES students, we emphasized these elements to make the tutorials maximally useful.

Tutorial Format

In agreement with these design principles, each module followed a similar layout, and students quickly became familiar with the general types of slides: the introductory slide, the challenge questions, and the summary slides. The introductory slide was the first slide in each module (Figure 1A). On each introductory slide, students watched a brief video that summarized previous modules and outlined upcoming learning objectives. In accordance with the redundancy principle, we used whiteboard-style animations to build the introductory videos (Türkay, 2016). In the videos, drawings appeared as the narrator described them, an approach that has been shown to increase student engagement (Guo et al., 2014) and that allowed the viewer to take in related information through both the auditory and visual channels simultaneously. Capitalizing on the use of both auditory and visual channels limited cognitive overload and freed the learner to process and store larger amounts of information.

FIGURE 1.

Example screenshots from “Experimental Design” interactive tutorial. Interactive tutorials were designed using consistently formatted interactions. These included module introductions (A), challenge questions (B), and specific feedback (C). Tutorials also include test tube graphics that allow students to track their progress through each module, review individual learning objectives (D), review entire modules (E), and review their progress through the entire tutorial (F). The test tube rack containing test tubes for all completed modules is accessible at any time during the tutorial by clicking on the small rack icon in the upper right corner of the tutorial interface. This allows students easy access for reviewing past content and tracking progress.

Following the introductory whiteboard video, an onscreen character greets the student and introduces the specific activities for that module. Personalizing interactions between an onscreen character and the learner creates a sense of teamwork. The onscreen character in each module serves to guide the storyline, provide feedback, and prompt the students to think deeply about the learned material (Figure 1, B and C). In the module “Design an Experiment,” students are asked to help the researchers develop an experimental outline for the project. As the students progress through the module, they learn the importance of selecting a model system, assigning proper treatment and control groups, and creating a protocol. Challenge questions posed by the onscreen character during each of these steps push students to think critically about typical problems faced throughout the scientific process.

The tutorials were designed so that, when students made choices or answered questions, they had to reflect on why they chose a specific option and why the other options were better, worse, or simply incorrect. For example, when selecting the treatment group for their experiments, students chose from four treatment options and had to justify their selection. If students selected an incorrect treatment, they received specific feedback that guided them toward the correct answer (Figure 1C). This feature of the tutorials comports with research indicating that explanatory feedback (also called informed-tutoring feedback), which explains why an incorrect choice is incorrect and why a correct one is correct, is more helpful in the learning process than feedback that is merely corrective (Moreno, 2004; Narciss, 2004).

Finally, once the student selected the correct answer, we used a consistent format to summarize the question and responses. This interactive slide let students review their correct answer and each of the incorrect answers, including explanations about how each answer fit with the larger concept. In pilot studies, students found the guided feedback to be very useful for helping them break down complicated material into single units of information. Additionally, we found that asking students to justify their selection forced students to think critically about the question at hand instead of randomly selecting an answer.

Students are better able to engage with multimedia tutorials when they can track their progress and see how close they are to finishing. Some tutorials display the percentage complete or the total number of slides. For our tutorial, we created a test tube graphic to represent progress. The test tube also served a second purpose: to show students the learning objectives for that module. As students complete learning objectives, the test tube fills up (Figure 1D). At the end of each module, the student has a full test tube that displays all of that module’s learning objectives (Figure 1E). This visual tracking method also lets students “collect” test tubes for all seven modules in the tutorial, creating a game-like incentive that engages students (Figure 1F). Student feedback indicated that students appreciated the ability to track their progress through the tutorial.

As mentioned, the test tubes also gave students an explicit view of the learning objectives for the current module. Upon completing a module, students saw the full test tube with each learning objective filled in (Figure 1E). Each learning objective was a clickable item that led to a short review activity on that topic. Beyond this end-of-module review, students could always review test tubes and learning objectives from previously completed modules by clicking a consistent icon in the corner of the tutorial screen. This approach requires students to actively seek out the information they need, a strategy referred to as “pulling” information. We repeated this design strategy throughout the tutorial to further engage students and give them a sense of control as they worked through it. By using the test tube format, the modules clearly articulate learning objectives and offer a convenient way to revisit previous material.

Usability Testing

After the initial design, we refined our tutorials on the basis of usability testing. Because students worked through the tutorials on their own time and without direct input from the instructor, it was imperative that the tutorials be easy to use, engaging, and accessible to students of all abilities, and that they meet our intended learning objectives. To reach these goals, we collaborated with the University of Minnesota Usability Lab, which provides a physical space for observing a person using a product or design in real time and a process for improving the effectiveness and accessibility of that product based on those observations. During this process, we met with a usability expert from the lab, determined testing goals, conducted focus groups with undergraduate students, evaluated the results, and decided on specific improvements to the tutorials that addressed the problems we identified. We focused on improving navigation, optimizing information placement, and determining whether tutorial content was appropriately challenging for undergraduate students. Each participating student was observed while attempting to complete the “Design an Experiment” tutorial module, followed immediately by a debriefing interview with the usability expert. During the testing session, we observed students directly through a one-way mirror, their computer screens via simulcast, and their eye movements via an eye-tracking camera and software. We tested the tutorials in this way with six undergraduate students, including both biology majors and nonmajors and both native and nonnative English speakers.

During usability testing, we found that students were most engaged by concise delivery of information with emphasis placed on key concepts (e.g., bold, italics, or color), consistent visual markers for navigation, and immediate feedback on wrong answers to challenge questions. As expected, students majoring in subjects outside the sciences found the assessments more difficult. Finally, we found that particular aspects of the tutorials engaged nonnative English speakers. Consistent layout and visual design were important for easy navigation; we suspect that this design allowed these students to spend less cognitive energy decoding instructions and navigation and more on absorbing content from the tutorials. Along similar lines, nonnative English speakers specifically appreciated repeated presentation of key ideas and optional chances to review those important concepts. We incorporated all of these observations into the design strategy for the final version of the tutorials (Supplemental Material 3).

RESEARCH STUDY

Design

To investigate the effectiveness of our online science process skills tutorials, we conducted a quasi-experimental study in spring 2017 in a large-format introductory biology course for students majoring outside biology, covering evolution, genetics, and the biology of sex, at the University of Minnesota, a large, public research institution in the midwestern United States (Figure 2). Without prior knowledge of this study, students enrolled in one of two sections, both taught by the same instructor. One section, the “online tutorial” group (n = 118), was assigned the tutorials as an out-of-class activity to supplement textbook reading over the first 2 weeks of the course. Owing to instructor preference, students were assigned modules 3–7 over this 2-week period. Students were incentivized to complete the tutorials with a small amount of homework credit (low stakes), and completion was high: 93% of enrolled students completed at least three of the five tutorials, and 88% completed all five. The other section, the “textbook reading” group (n = 118), was assigned only out-of-class textbook reading covering similar subject material over the same 2 weeks. For reasons of fairness, students in the textbook reading group were given access to the online tutorials after the experimental period and assessment. The University of Minnesota Institutional Review Board deemed this study exempt from review (study number: 1612E01861).

We also estimated the time students spent on the tutorials. The software used to deliver the tutorials reports when students first opened the tutorial window and when the window was last closed. For students who completed a module in one continuous session (opened and closed on the same day, total duration less than 3 hours), median viewing times for each module ranged from 7.5 to 18 minutes, with most students taking around 10 minutes per module (Supplemental Material 6). These timestamps do not reveal how actively students worked while the tutorial window was open, and we could not estimate viewing time for students who completed a module in multiple sessions. Module 7, “Communicate and Discuss,” included the option to watch several videos of scientific speakers, each 5–10 minutes long, which likely explains the longer completion time observed for that module. On the basis of these findings and our observations during usability testing, we roughly estimate that students completed all five tutorial modules in ∼60–90 minutes. We were unable to measure time spent reading the textbook.
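The single-session filter used for the viewing-time estimate can be sketched as follows. The rule itself (window opened and closed on the same calendar day, total duration under 3 hours) comes from the text; the record layout, function names, and example timestamps are hypothetical and do not reflect the actual Moodle or SCORM log format.

```python
from datetime import datetime, timedelta
from statistics import median

# Rule from the text: keep a record only if the module window was opened and closed
# on the same calendar day and the total duration was under 3 hours.
MAX_SESSION = timedelta(hours=3)


def single_session_minutes(records):
    """Group single-session durations (in minutes) by module.

    records: iterable of (student_id, module, first_opened, last_closed) tuples.
    This layout is a hypothetical illustration, not a real log export format.
    """
    minutes = {}
    for _student, module, opened, closed in records:
        elapsed = closed - opened
        if opened.date() == closed.date() and timedelta(0) <= elapsed < MAX_SESSION:
            minutes.setdefault(module, []).append(elapsed.total_seconds() / 60)
    return minutes


def median_minutes_by_module(records):
    """Median single-session viewing time per module."""
    return {module: median(values) for module, values in single_session_minutes(records).items()}


# Illustrative records only (not study data).
records = [
    ("s1", 3, datetime(2017, 1, 20, 10, 0), datetime(2017, 1, 20, 10, 10)),
    ("s2", 3, datetime(2017, 1, 20, 11, 0), datetime(2017, 1, 20, 11, 12)),
    ("s3", 3, datetime(2017, 1, 20, 23, 50), datetime(2017, 1, 21, 0, 5)),  # spans two days: excluded
    ("s4", 3, datetime(2017, 1, 20, 9, 0), datetime(2017, 1, 20, 13, 0)),   # 4 hours: excluded
]
print(median_minutes_by_module(records))
```

Only the first two records pass the filter here, so the sketch reports a median of 11 minutes for module 3. As the text notes, such open-to-close durations say nothing about how actively a student worked during the session.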

FIGURE 2.

Experimental design. Students enrolled in parallel sections of the same introductory biology course were given 2 weeks to complete either the online tutorials or the textbook reading. At the end of the 2 weeks, a 15-question multiple-choice quiz assessed students’ science process skills.

Measures

While recent studies in undergraduate education have focused on ways to improve scientific literacy and proficiency, tools for testing the effectiveness of these interventions are limited. To date, very few instruments designed to gauge science process skills have been created and validated, and those that exist are geared toward K–12 students. Therefore, we chose to use a subset of questions from a validated and well-accepted science process skills assessment, the Test of Integrated Process Skills II, or TIPSII (Burns et al., 1985). TIPSII uses multiple-choice questions to probe student understanding of science process skills. Topics covered are identifying variables, operationally defining, identifying testable hypotheses, data and graph interpretation, and experimental design. The test is not specific to a given curriculum or content area, so it is useful across various scientific disciplines, including the life sciences. Versions of TIPSII have been successfully used to assess science process skills in undergraduate students and in high school seniors, a population very similar to our own (Kazeni, 2005; Dirks and Cunningham, 2006). One such study found a significant positive relationship between TIPSII scores and final grade in an introductory undergraduate biology course (Dirks and Cunningham, 2006). Rather than design and validate a new assessment tool, we selected the 15 TIPSII questions that most closely matched the learning objectives covered in the tutorials. To prevent bias from student familiarity with the assessment, we verified that, while covering the same concepts, the content and examples in the TIPSII items did not overlap with the specific subject material taught in the course or tutorials.

The 15-question assessment covered several categories of science process skills, including data and graph interpretation, identifying variables, identifying testable hypotheses, and experimental design. These categories align with the learning objectives for the tutorials. For example, one question asks students to consider an experiment about plant growth and, given a list of options, choose the statement that could be tested to determine what affects plant height (Supplemental Material 4, question 9). This question comes from the “Identifying Testable Hypotheses” category of TIPSII and aligns with learning objectives from the second and third tutorials: “Ask a testable question” and “Propose a testable hypothesis.” Similarly, the fourth learning objective of the fifth tutorial states, “Create a graph representing the data that includes labeled axes and units” (Supplemental Material 2). In question 14 of the assessment, students were given a description of an experiment and its results and were asked to identify the graph that best represented the data (Supplemental Material 4, question 14). This question aligns directly with that learning objective and tests a student’s ability to derive a graphical representation of given data. The full assessment used in this study is available in Supplemental Material 4. To populate each category in our assessment, we selected only the TIPSII questions with the highest item discrimination index (as reported in Kazeni, 2005), a statistic that measures how well an item distinguishes between high-performing and low-performing examinees. The average item discrimination index of the selected questions was 0.4, above the commonly accepted threshold of 0.3 (Bass et al., 2016). Student scores on these 15 TIPSII items were averaged to create the main outcome variable in this study (Supplemental Material 5).
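To make the item discrimination index concrete, the following sketch uses the classic upper–lower group method: rank examinees by total score, take the top and bottom 27%, and subtract the proportion answering the item correctly in the lower group from the proportion in the upper group. This is one common definition, offered as an assumption; the values reported by Kazeni (2005) may have been computed differently. The function name and example data are hypothetical.

```python
import math


def discrimination_index(item_correct, total_scores, fraction=0.27):
    """Upper-lower item discrimination index, D = p_upper - p_lower.

    item_correct: 0/1 scores on one item, one entry per examinee.
    total_scores: total test scores for the same examinees.
    fraction: share of examinees in each extreme group (27% is a common convention).
    """
    n = len(total_scores)
    k = max(1, math.floor(n * fraction))          # size of each extreme group
    order = sorted(range(n), key=lambda i: total_scores[i])
    lower, upper = order[:k], order[-k:]          # lowest and highest total scorers
    p_upper = sum(item_correct[i] for i in upper) / k
    p_lower = sum(item_correct[i] for i in lower) / k
    return p_upper - p_lower


# Illustrative data only: ten examinees and their 0/1 results on a single item.
totals = [3, 5, 6, 7, 8, 9, 10, 12, 13, 15]
item = [0, 1, 0, 0, 1, 0, 1, 1, 1, 1]
d = discrimination_index(item, totals)
print(d)
```

With ten examinees, each extreme group holds two people; both top scorers answer correctly and one of the two bottom scorers does, so D = 1.0 − 0.5 = 0.5. Values near 1 indicate an item that sharply separates strong from weak examinees, and values of 0.3 or higher are commonly treated as acceptable.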

The ability to apply science process skills was assessed in both the online tutorial and textbook reading groups in the third week of the semester, after tutorial instruction was complete for the experimental group, using our modified TIPSII test. Participation in the assessment was optional, so some attrition occurred, but participation remained high in both groups: 98 of 118 students (83%) in the online tutorial group and 112 of 118 (95%) in the textbook reading group.

Data

The total pool of study participants was 54% female and 46% male, with a mean age of 20 years. The respondents were 1% American Indian, 9% Asian, 7% Black, 3% Hispanic, 16% international, and 65% white. They were 4% first-year students, 41% sophomores, 32% juniors, and 24% seniors, and 18% were listed as NNES students. Seventy-four percent of participants were non–science majors. Values for each study group are listed in Table 1.

TABLE 1.

Study group demographics

| Group | % Female | % NNES | % Science majors | % Minority | Mean GPA | Mean ACT composite | Mean age (years) |
|---|---|---|---|---|---|---|---|
| Textbook reading group | 41 | 15 | 29 | 32 | 3.3 | 26 | 20 |
| Online tutorials group | 54 | 10 | 22 | 30 | 3.3 | 26 | 21 |
| p value | 0.089 | 0.38 | 0.388 | 0.8 | 0.566 | 0.511 | 0.008 |

Because participants were not randomly assigned to the online tutorial and textbook reading groups, it was important to establish the comparability of the two groups on the available exogenous variables, which included aptitude variables (composite ACT score, grade point average [GPA]) and demographic variables (ethnicity, sex, age, major, NNES status). We ran group comparison tests appropriate to each variable and found no statistically significant differences between the two groups on any aptitude or demographic variable, with the exception of age: the mean age in the online tutorial group was slightly higher than that in the textbook reading group (21 vs. 20 years, p = 0.008).
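The specific group comparison tests are not named in the text. As one illustration, a categorical variable such as sex or NNES status can be compared between two groups with a Pearson chi-square test on a 2 × 2 contingency table. The sketch below uses a hypothetical function name and hypothetical counts, applies no continuity correction, and obtains the one-degree-of-freedom p-value from the standard library; continuous variables such as GPA would instead call for a two-sample t test, which is not shown.

```python
import math

def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for [[a, b], [c, d]].

    Rows could be study groups and columns a yes/no category. Returns
    (statistic, p_value) for 1 degree of freedom.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if 0 in (row1, row2, col1, col2):
        raise ValueError("every row and column total must be positive")
    stat = n * (a * d - b * c) ** 2 / (row1 * row2 * col1 * col2)
    # With 1 df the statistic equals Z**2, so P(X > stat) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value

# Hypothetical counts (not the study's data): NNES vs. native speakers per group.
stat, p = chi_square_2x2(15, 85, 10, 90)
print(f"chi2 = {stat:.3f}, p = {p:.3f}")
```

A nonsignificant result from such a test shows only that no difference was detected, which is why comparability on unmeasured variables cannot be guaranteed without random assignment.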

Analysis and Results

We constructed a multivariate ordinary least-squares regression model to predict participants’ performance on our outcome variable of interest, namely, score on the modified TIPSII test. For this model, casewise diagnostics were generated and examined to locate outliers in the data set, defined as cases with standardized residuals greater than 3.3 in absolute value. This procedure revealed three outliers on the dependent variable. On inspection, these cases were not otherwise anomalous, so they were retained in the data set. Variance inflation factor (VIF) statistics were also generated to check for multicollinearity among the predictor variables. No VIF statistic exceeded 1.14, well below the common cutoff of 4, so multicollinearity did not appear to be a problem in the data set. The Durbin-Watson statistic of 2.35 indicated very little autocorrelation in the residuals.
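The three diagnostics described above can be sketched in a few lines of NumPy. This is an illustration, not the analysis software used in the study: it assumes a two-dimensional predictor matrix `X` (one column per predictor, no intercept column) and an outcome vector `y`, defines standardized residuals as raw residuals divided by the residual standard error, computes each VIF by regressing one predictor on the others, and computes the Durbin-Watson statistic from successive residual differences. Function names are hypothetical, and the data are simulated.

```python
import numpy as np

def ols_residuals(X, y):
    """Fit y on X plus an intercept by least squares; return the residuals."""
    design = np.column_stack([np.ones(len(y)), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ coef

def standardized_residuals(X, y):
    """Residuals divided by the residual standard error sqrt(SSE / (n - k))."""
    resid = ols_residuals(X, y)
    n, k = len(y), X.shape[1] + 1  # k counts the intercept
    return resid / np.sqrt(resid @ resid / (n - k))

def variance_inflation_factors(X):
    """VIF_j = 1 / (1 - R_j^2), with R_j^2 from regressing column j on the others."""
    vifs = []
    for j in range(X.shape[1]):
        target = X[:, j]
        resid = ols_residuals(np.delete(X, j, axis=1), target)
        r2 = 1 - (resid @ resid) / np.sum((target - target.mean()) ** 2)
        vifs.append(1 / (1 - r2))
    return np.array(vifs)

def durbin_watson(resid):
    """Sum of squared successive differences over the residual sum of squares.

    Values near 2 indicate little first-order autocorrelation.
    """
    resid = np.asarray(resid, dtype=float)
    return np.sum(np.diff(resid) ** 2) / np.sum(resid ** 2)

# Simulated data (not the study's): three predictors, 151 cases.
rng = np.random.default_rng(0)
X = rng.normal(size=(151, 3))
y = X @ np.array([1.0, 0.8, -0.5]) + rng.normal(size=151)
z = standardized_residuals(X, y)
print("outlier rows:", np.flatnonzero(np.abs(z) > 3.3))
print("VIFs:", variance_inflation_factors(X).round(2))
print("Durbin-Watson:", round(durbin_watson(ols_residuals(X, y)), 2))
```

Statistical packages may instead report studentized residuals, which divide each residual by its own leverage-adjusted standard error; the 3.3 cutoff is applied the same way.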

The final model included three predictor variables (GPA, NNES status, and treatment group); no other demographic variables were significantly related to TIPSII score. The model was highly significant (p < 0.001) and accounted for a small to moderate amount of the variation in TIPSII score (multiple R = 0.403, adjusted R² = 0.140).
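Adjusted R² penalizes R² for the number of predictors in the model. For a model with n cases and k predictors (intercept not counted in k), adjusted R² and the overall F statistic follow directly from R². The helper functions below are a generic sketch with hypothetical names, not output from the study's analysis.

```python
def adjusted_r2(r2, n, k):
    """Adjusted R^2 for n cases and k predictors (intercept excluded from k)."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)

def overall_f(r2, n, k):
    """Overall F statistic for the model, with (k, n - k - 1) degrees of freedom."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))

# Illustrative values only: 151 cases, 3 predictors, R^2 = 0.16.
print(round(adjusted_r2(0.16, 151, 3), 3))  # 0.143
print(round(overall_f(0.16, 151, 3), 2))
```

Because (n − 1)/(n − k − 1) is close to 1 when n is large relative to k, adjusted R² differs only slightly from R² in a model with 151 cases and three predictors.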

The covariate GPA was significantly related to TIPSII scores at the p < 0.05 level (t = 2.799, p = 0.006), as was the main treatment of interest, being in the online tutorial group (t = 2.600, p = 0.010). NNES status was negatively associated with TIPSII scores, but this association was not statistically significant (t = −1.164, p = 0.246).

Given the results in Table 2, each 1-point increase in a student’s GPA is associated with a predicted increase of more than 1 point in that student’s TIPSII score. Similarly, being in the online tutorial group was associated with a 0.8-point increase in TIPSII score, holding the other variables in the model constant. The pooled SD of the TIPSII score was 1.99, so the effect size for GPA was moderate to large (almost 60% of an SD), while the effect size for being in the online tutorial group was moderate (>40% of an SD).

TABLE 2.

Ordinary least-squares regression with TIPSII score as dependent variable

| Predictor | Regression results: TIPSII scoresᵃ |
|---|---|
| Cumulative GPA | 0.212** (0.405) |
| Online tutorial group | 0.197* (0.317) |
| NNES status | −0.093 (0.474) |
| Constant | 7.374*** (1.352) |
| N | 151 |
| Adjusted R² | 0.14 |
| F-test | 7.146*** |

ᵃCell entries are standardized beta coefficients with SEs in parentheses.

*p < 0.05.

**p < 0.01.

***p < 0.001.
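Table 2 lists standardized coefficients, while the text reports effects in TIPSII points. Assuming the parenthesized SEs belong to the unstandardized coefficients B, as is typical of regression output, each B can be recovered as t × SE from the t statistics reported above and then divided by the pooled SD of 1.99 to express it as an effect size. The sketch below does this arithmetic; the dictionary layout and function name are illustrative. Under this assumption it gives B ≈ 1.13 for GPA, B ≈ 0.82 for the online tutorial group (the figure reported in the Abstract), and B ≈ −0.55 for NNES status, with effect sizes of about 0.57, 0.41, and −0.28 SD.

```python
# t statistics from the text and SEs from Table 2, assumed to belong to the
# unstandardized coefficients B so that B = t * SE.
REPORTED = {
    "Cumulative GPA": (2.799, 0.405),
    "Online tutorial group": (2.600, 0.317),
    "NNES status": (-1.164, 0.474),
}
POOLED_SD = 1.99  # pooled SD of TIPSII scores reported in the text

def unstandardized_b(t_stat, se):
    """Recover an OLS coefficient from its t statistic and standard error."""
    return t_stat * se

for name, (t_stat, se) in REPORTED.items():
    b = unstandardized_b(t_stat, se)
    print(f"{name}: B = {b:.2f} points, effect size = {b / POOLED_SD:.2f} SD")
```

Dividing a raw coefficient by the outcome's SD is one common way to express regression effects on a standardized scale; it matches the "almost 60%" and ">40%" of an SD quoted in the text.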

Results for NNES Students

One subpopulation of interest in this study was the group of students identified as NNES students, so additional analyses were performed to examine the effects of the online tutorials on this group. Some indications in the data suggested that the online tutorials may have benefited NNES students more than native speakers of English, but the small size of the NNES subgroup left these indications inconclusive. As a result, we observed two promising trends (noted below) but are unable to draw definitive conclusions at this time.

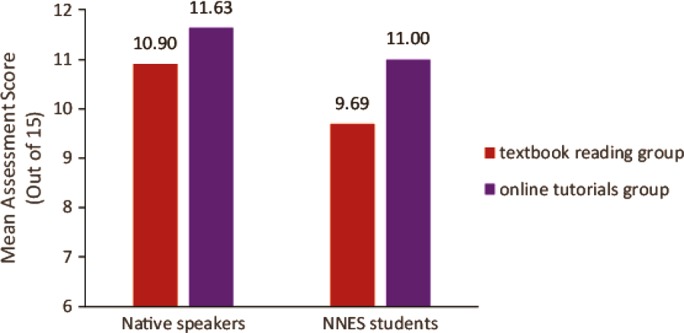

First, we asked whether being in the online tutorial group improved the TIPSII scores of NNES students more than it improved the scores of other students. The answer was, nominally, yes (Figure 3). Among native speakers, being in the online tutorial group was associated with higher scores (mean difference 0.7, p = 0.025). Among NNES students, the difference was larger (mean difference 1.3, p = 0.311). The latter difference exceeds half an SD and is nearly twice the difference observed among native English speakers, but it is not statistically significant, likely because of the small number of NNES students (20 total).

FIGURE 3.

Mean scores for science process skills assessment after textbook (red) or online tutorial (purple) assignment. Comparison highlights the difference between instructional approaches for native and nonnative English-speaking (NNES) students. While larger gains were observed for NNES students, statistical power was not sufficient to draw a definitive conclusion because of the small number of NNES students enrolled in the study courses.

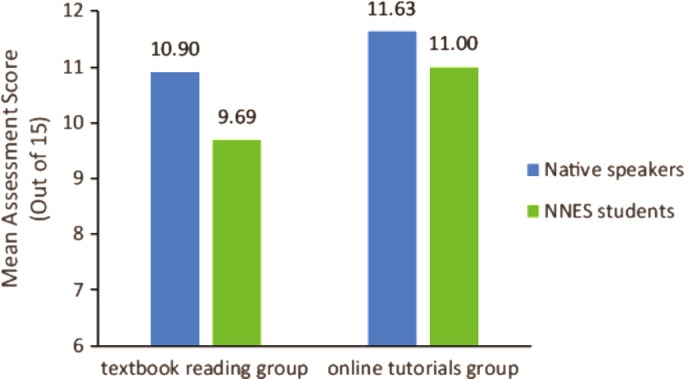

Second, we noted that NNES students had significantly lower average TIPSII scores than native English speakers (10 vs. 11, p = 0.030). We therefore asked whether being in the online tutorial group helped NNES students close the gap between themselves and native speakers. Again, the answer was, nominally, yes (Figure 4). The difference in average TIPSII scores between native speakers and NNES students was 1.2 points in the textbook reading group (p = 0.101) but only 0.6 points, about half as large, in the online tutorial group (p = 0.255).

FIGURE 4.

Mean scores for science process skills assessment among native and nonnative English speakers. Comparison highlights the difference between the two groups when given textbook reading or online tutorials. While a larger gap between native and NNES students was observed in the textbook reading group, statistical power was not sufficient to draw a definitive conclusion because of the small number of NNES students enrolled in the study courses.

DISCUSSION

We created a series of online, interactive tutorials with the goal of helping undergraduate students develop science process skills. We designed the tutorials in accordance with evidence-based multimedia design principles and student feedback from usability testing. Our findings indicate that, after controlling for GPA and NNES status, students who received the tutorials are predicted to score 0.8 points higher on a 15-point science process skill assessment than their peers who received traditional textbook instruction on the same topic. This moderate but significant effect suggests that well-designed online tutorials can be more effective than traditional ways of teaching science process skills to undergraduate students. We also found trends suggesting that the tutorials are especially effective for NNES students; however, because of the limited sample size, these trends did not reach statistical significance.

Strategies for Student Engagement

A preponderance of evidence shows that active learning improves student learning compared with traditional lecture approaches (Freeman et al., 2014). Here, we show that, even on a very limited scale, replacing traditional textbook reading with a more active approach improves learning. To achieve this, we designed the tutorials to maximize interaction and to engage students in multiple ways. First, the multimedia-driven, interactive format demands more from students than textbook reading does: while completing a tutorial, students must make decisions, and those decisions shape how the rest of the tutorial proceeds. Multiple sensory channels are engaged in complementary ways when students see images, hear narration, and use computer interfaces to interact with the virtual environment. Each of these features creates an opportunity for student engagement. Although not every feature will engage every student, offering several increases the likelihood that at least one will capture and hold a student’s attention during the tutorial.

We also used a continuing narrative across the tutorial modules to create a human connection with students. Our storyline focused on the link between stomach ulcers and the bacterium Helicobacter pylori and on how two scientists, Robin Warren and Barry Marshall, made that discovery in the 1980s. We used familiar settings, such as a doctor’s office and a library, specific characters, and a medical mystery to create personal connections with students. We suspect that connecting the scientific method to a human story helped students engage more quickly and deeply with the presented skills. While students appreciated the story, it was unclear whether they preferred a more cartoonish or a more realistic presentation style. Informal feedback ranged from appreciation of to distaste for our animation-based style, but NNES students clearly favored the cartoon style. This is consistent with other research showing that schematic visualizations can be more effective than realistic images for teaching biological mechanisms (Scheiter et al., 2009). Comparing different levels of realism would be an excellent area for future investigation.

We also promoted student engagement by offering frequent, specific feedback and opportunities for reflection. We offered feedback in real time as students made choices, after challenge questions, and at the end of each module. We found that students appreciated a consistent feedback format and were able to focus on their thought processes and reasoning when presented with a standardized feedback interaction. We also found that feedback needed to be specific. During usability testing, we observed that when the tutorial provided targeted feedback explaining why each wrong answer was wrong, students stayed more engaged and were more comfortable with the presented concepts at the end of the tutorial. Finally, we gave students chances to revisit and modify their ideas. This was especially evident in the “Propose a Hypothesis” module, in which the tutorial guides students to revise their hypotheses to make them more scientifically sound. These interactions provide both engagement and opportunities for formative self-assessment.

Tutorials’ Impact

In our study, students who used the tutorials instead of a traditional textbook reading to learn about science process skills are predicted to score 0.8 points higher on a 15-point science process skill assessment than their peers who used only a textbook, a difference of roughly 5% of the maximum possible score. An effect of this size is reasonable given the limited instructional time spent on the tutorials (an estimated 60–90 minutes in total over 2 weeks of a semester-long class). Larger-scale interventions, such as the semester-long Biology Fellows course at the University of Washington, show a 10% increase on a similar science process skills assessment, with larger gains in specific subjects (experimental design: 20%; graphing: 65%) (Dirks and Cunningham, 2006). Given the difference in scale, it is promising that tutorial use produces this level of improvement. We suggest that the tutorials could be an especially effective tool when used as part of a broader emphasis on science process skills throughout course and curriculum design.

How do the tutorials lead to an increased ability to apply science process skills? Several elements may contribute to their efficacy. First, because the tutorials were built on evidence-supported design principles and refined through usability testing, we suspect that they are more effective at capturing and maintaining student interest than more static forms of learning, such as textbook readings. This may lead students to complete more of the assigned activity and invest more time in it. Second, we suspect that student time spent with the tutorials is more productive than time spent with traditional readings. As several authors, especially Dunlosky et al. (2013), have shown, a variety of common study techniques, such as highlighting text and rereading course materials, have low utility in terms of student learning. Time on task improves outcomes only when it is spent on effective learning activities, and our results suggest that our intervention, online tutorials focused on science process skills, is such an activity. While we cannot differentiate between these two interpretations in the present study, either one suggests that the tutorials used here, and the interactive format in general, are an effective tool for student learning and improve upon traditional instructional approaches.

Potential Uses for Tutorials

In this study, we used our online tutorials as an out-of-class assignment, but they could be employed in a variety of settings. We also designed the tutorials so that both the narrative content and the learning goals associated with science process skills can be adapted to suit individual instructors’ needs. In fact, before our study we adjusted several learning objectives to better align the tutorials with the instructor’s other activities and materials, which included modifying language and omitting one previously produced module entirely. We see this flexibility as a strength of the tutorial format: unlike textbooks and other static materials, the tutorials can be modified by instructors to meet their needs.

Impact on Broader Student Learning and NNES Students

One major strength of explicit science process skill instruction is the enhanced student learning in other facets of undergraduate biology education, including content retention (Kitchen et al., 2003; Dirks and Cunningham, 2006; Ward et al., 2014). In this study, we focused on specific gains in science process skills. We look forward to extending our assessment to other facets of student learning. Similarly, on the basis of our design decisions, student feedback during usability testing, and assessment trends, we suspect that the tutorials are especially helpful for NNES students. Better support for this idea would require a more targeted intervention involving larger numbers of NNES students and a study designed specifically to have enough statistical power to detect effects among NNES students. Such work would allow the trends observed in our study to be confirmed, broken down into greater detail, and extended. As modern biology deepens its international connections and international students continue to enroll at English-speaking universities, we hope that the online tutorial format will provide a strong instructional tool to help minimize gaps between native English-speaking and NNES students.
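To give a rough sense of the sample size such a study might need, the sketch below applies the standard normal-approximation formula for comparing two group means, n per group = 2(z₁₋α/₂ + z₁₋β)² / d². It assumes that the 1.3-point NNES difference reported above is the true effect, that the pooled SD of 1.99 applies to NNES students, and a two-sided α of 0.05 with 80% power. Under these assumptions, about 37 NNES students per condition would be needed, compared with 20 NNES students in total in this study. The function name is hypothetical, and an exact t-based calculation would give a slightly larger number.

```python
import math
from statistics import NormalDist

def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate per-group sample size for a two-sided, two-sample comparison
    of means with standardized effect size d (normal approximation)."""
    std_normal = NormalDist()
    z_alpha = std_normal.inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = std_normal.inv_cdf(power)           # about 0.84 for 80% power
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)

# Assumed inputs: NNES mean difference of 1.3 points, pooled SD of 1.99.
d_nnes = 1.3 / 1.99
print(n_per_group(d_nnes))  # 37 students per condition
```

Smaller true effects would raise the required sample size quickly, because n scales with 1/d².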

Accessing the Tutorials

All seven of our tutorial modules can be accessed in HTML5 format at https://sites.google.com/umn.edu/btltutorials. For LMS-compatible files or to discuss modifying modules for your use, please contact the authors.

Supplementary Material

Acknowledgments

D.O. and M.K. were supported by the University of Minnesota International Student Academic Services Fee and the Howard Hughes Medical Institute. We thank Deena Wassenberg, Robin Wright, Annika Moe, Sadie Hebert, Jessamina Blum, and Mark Decker for their input and support. We also acknowledge the Understanding Science website (University of California Museum of Paleontology, https://undsci.berkeley.edu) for their science process skills diagram.

REFERENCES

- Abdel C. (2002). Academic success and the international student: Research and recommendations. New Directions for Higher Education, (117), 13–20.
- American Association for the Advancement of Science. (2011). Vision and change in undergraduate biology education: A call to action, Final report. Washington, DC. Retrieved April 1, 2016, from http://visionandchange.org/files/2013/11/aaas-VISchange-web1113.pdf
- Bass K., Drits-Esser D., Stark L. A. (2016). A primer for developing measures of science content knowledge for small-scale research and instructional use. CBE—Life Sciences Education, (2), rm2.
- Bloom B. S., Engelhart M. D., Furst E. J., Hill W. H., Krathwohl D. R. (1956). Taxonomy of educational objectives: The classification of educational goals. New York: McKay.
- Blummer B. A., Kritskaya O. (2009). Best practices for creating an online tutorial: A literature review. Journal of Web Librarianship, (3), 199–216. doi:10.1080/19322900903050799
- Brownell S., Hekmat-Scafe D., Singla V., Seawell P., Imam J., Eddy S. L., Cyert M. (2015). A high-enrollment course-based undergraduate research experience improves student conceptions of scientific thinking and ability to interpret data. CBE—Life Sciences Education, (2), ar21.
- Buchwitz B. J., Beyer C. H., Peterson J. E., Pitre E., Lalic N., Sampson P. D., Wakimoto B. T. (2012). Facilitating long-term changes in student approaches to learning science. CBE—Life Sciences Education, (3), 273–282. doi:10.1187/cbe.12-01-0011
- Burns J., Okey J., Wise K. (1985). Development of an integrated process skill test: TIPS II. Journal of Research in Science Teaching, (2), 169–177.
- Carpi A. (2001). Improvements in undergraduate science education using Web-based instructional modules: The natural science pages. Journal of Chemical Education, (12), 1709–1712.
- Carpi A., Mikhailova Y. (2003). Visionlearning Project. Evaluating the design and effectiveness of interdisciplinary science. Journal of College Science Teaching, (1), 12–15.
- Chudler E. H., Bergsman K. C. (2014). Explain the brain: Websites to help scientists teach neuroscience to the general public. CBE—Life Sciences Education, (4), 577–583. doi:10.1187/cbe.14-08-0136
- Clump M., Bauer H., Breadley C. (2004). The extent to which psychology students read textbooks: A multiple class analysis of reading across the psychology curriculum. Journal of Instructional Psychology, (3), 227–232.
- Coil D., Wenderoth M. P., Cunningham M., Dirks C. (2010). Teaching the process of science: Faculty perceptions and an effective methodology. CBE—Life Sciences Education, (4), 524–535. doi:10.1187/cbe.10-01-0005
- Crowe A., Dirks C., Wenderoth M. P. (2008). Biology in Bloom: Implementing Bloom’s taxonomy to enhance student learning in biology. CBE—Life Sciences Education, (4), 368–381. doi:10.1187/cbe.08-05-0024
- DebBurman S. K. (2002). Learning how scientists work: Experiential research projects to promote cell biology learning and scientific process skills. Cell Biology Education, (4), 154–172. doi:10.1187/cbe.02-07-0024
- Dirks C., Cunningham M. (2006). Enhancing diversity in science: Is teaching science process skills the answer? CBE—Life Sciences Education, (3), 218–226. doi:10.1187/cbe.05-10-0121
- Domagk S., Schwartz R. N., Plass J. L. (2010). Interactivity in multimedia learning: An integrated model. Computers in Human Behavior, (5), 1024–1033. doi:10.1016/j.chb.2010.03.003
- Dunlosky J., Rawson K. A., Marsh E. J., Nathan M. J., Willingham D. T. (2013). Improving students’ learning with effective learning techniques: Promising directions from cognitive and educational psychology. Psychological Science in the Public Interest, (1), 4–58. doi:10.1177/1529100612453266
- Evans C., Gibbons N. J. (2007). The interactivity effect in multimedia learning. Computers & Education, (4), 1147–1160. doi:10.1016/j.compedu.2006.01.008
- Freeman S., Eddy S. L., McDonough M., Smith M. K., Okoroafor N., Jordt H., Wenderoth M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences USA, (23), 8410–8415. doi:10.1073/pnas.1319030111
- Goff E. E., Reindl K. M., Johnson C., McClean P., Offerdahl E. G., Schroeder N. L., White A. R. (2017). Efficacy of a meiosis learning module developed for the virtual cell animation collection. CBE—Life Sciences Education, (1), ar9. doi:10.1187/cbe.16-03-0141
- Gottesman A. J., Hoskins S. G. (2013). CREATE cornerstone: Introduction to scientific thinking, a new course for STEM-interested freshmen, demystifies scientific thinking through analysis of scientific literature. CBE—Life Sciences Education, (1), 59–72. doi:10.1187/cbe.12-11-0201
- Guo P. J., Kim J., Rubin R. (2014). How video production affects student engagement. Proceedings of the First ACM Conference on Learning @ Scale, 41–50. doi:10.1145/2556325.2566239
- Hoskins S. G., Lopatto D., Stevens L. M. (2011). The C.R.E.A.T.E. approach to primary literature shifts undergraduates’ self-assessed ability to read and analyze journal articles, attitudes about science, and epistemological beliefs. CBE—Life Sciences Education, (4), 368–378. doi:10.1187/cbe.11-03-0027
- Hoskins S. G., Stevens L. M., Nehm R. H. (2007). Selective use of the primary literature transforms the classroom into a virtual laboratory. Genetics, (3), 1381–1389. doi:10.1534/genetics.107.071183
- Kazeni M. M. M. (2005). Development and validation of a test of integrated science process skills for the further education and training learners (Unpublished master’s thesis). University of Pretoria, Pretoria, South Africa.
- Kenyon K. L., Onorato M. E., Gottesman A. J., Hoque J., Hoskins S. G. (2016). Testing CREATE at community colleges: An examination of faculty perspectives and diverse student gains. CBE—Life Sciences Education, (1), ar8. doi:10.1187/cbe.15-07-0146
- Kitchen E., Bell J. D., Reeve S., Sudweeks R. R., Bradshaw W. S. (2003). Teaching cell biology in the large-enrollment classroom: Methods to promote analytical thinking and assessment of their effectiveness. Cell Biology Education, (3), 180–194. doi:10.1187/cbe.02-11-0055
- Krajcik J., Sutherland L. (2010). Supporting students in developing scientific literacy. Nature, 456–459.
- Martin B. E., Brouwer W. (1991). The sharing of personal science and the narrative element in science education. Science Education, (6), 707–722. doi:10.1002/sce.3730750610
- Mayer R. E. (2008). Applying the science of learning: Evidence-based principles for the design of multimedia instruction. American Psychologist, (8), 760–769. doi:10.1037/0003-066x.63.8.760
- Mayer R. E., Moreno R. (2003). Nine ways to reduce cognitive load in multimedia learning. Educational Psychologist, (1), 43–52.
- McClean P., Johnson C., Rogers R., Daniels L., Reber J., Slator B. M., White A. (2005). Molecular and cellular biology animations: Development and impact on student learning. Cell Biology Education, (2), 169–179. doi:10.1187/cbe.04-07-0047
- Moreno R. (2004). Decreasing cognitive load for novice students: Effects of explanatory versus corrective feedback in discovery-based multimedia. Instructional Science, (1–2), 99–113. doi:10.1023/B:TRUC.0000021811.66966.1d
- Narciss S. (2004). The impact of informative tutoring feedback and self-efficacy on motivation and achievement in concept learning. Experimental Psychology, (3), 214–228. doi:10.1027/1618-3169.51.3.214
- Podolefsky N., Finkelstein N. (2006). The perceived value of college physics textbooks: Students and instructors may not see eye to eye. Physics Teacher, (6), 338–342.
- Scheiter K., Gerjets P., Huk T., Imhof B., Kammerer Y. (2009). The effects of realism in learning with dynamic visualizations. Learning and Instruction, (6), 481–494. doi:10.1016/j.learninstruc.2008.08.001
- Segura-Totten M., Dalman N. E. (2013). The CREATE method does not result in greater gains in critical thinking than a more traditional method of analyzing the primary literature. Journal of Microbiology & Biology Education, (2), 166–175. doi:10.1128/jmbe.v14i2.506
- Shi J., Power J., Klymkowsky M. (2011). Revealing student thinking about experimental design and the roles of control experiments. International Journal for the Scholarship of Teaching and Learning, (2). doi:10.20429/ijsotl.2011.050208
- Silver S. L., Nickel L. T. (2005). Are online tutorials effective? A comparison of online and classroom library instruction methods. Research Strategies, (4), 389–396. doi:10.1016/j.resstr.2006.12.012
- Stelzer T., Gladding G., Mestre J. P., Brookes D. T. (2009). Comparing the efficacy of multimedia modules with traditional textbooks for learning introductory physics content. American Journal of Physics, (2), 184–190.
- Stevens L. M., Hoskins S. G. (2014). The CREATE strategy for intensive analysis of primary literature can be used effectively by newly trained faculty to produce multiple gains in diverse students. CBE—Life Sciences Education, (2), 224–242. doi:10.1187/cbe.13-12-0239
- Türkay S. (2016). The effects of whiteboard animations on retention and subjective experiences when learning advanced physics topics. Computers & Education, 102–114. doi:10.1016/j.compedu.2016.03.004
- Ward J. R., Clarke H. D., Horton J. L. (2014). Effects of a research-infused botanical curriculum on undergraduates’ content knowledge, STEM competencies, and attitudes toward plant sciences. CBE—Life Sciences Education, (3), 387–396. doi:10.1187/cbe.13-12-0231
- Wiggins G., McTighe J. (2005). Understanding by design (Expanded 2nd ed.). Alexandria, VA: Association for Supervision and Curriculum Development.
- Woodham H., Marbach-Ad G., Downey G., Tomei E., Thompson K. (2016). Enhancing scientific literacy in the undergraduate cell biology laboratory classroom. Journal of Microbiology & Biology Education, 17(3), 458–465. doi:10.1128/jmbe.v17i3.1162
- Wright R., Klymkowsky M. (2005). Points of view: Content versus process: Is this a fair choice? Cell Biology Education, 189–198.
