Abstract

Objectives

Virtual reality (VR) allows users to experience realistic, immersive 3D virtual environments with the depth perception and binocular field of view of real 3D settings. Newer VR technology has now allowed for interaction with 3D objects within these virtual environments through the use of VR controllers. This technical note describes our preliminary experience with VR as an adjunct tool to traditional angiographic imaging in the preprocedural workup of a patient with a complex pseudoaneurysm.

Methods

Angiographic MRI data was imported and segmented to create 3D meshes of bilateral carotid vasculature. The 3D meshes were then projected into VR space, allowing the operator to inspect the carotid vasculature using a 3D VR headset as well as interact with the pseudoaneurysm (handling, rotation, magnification, and sectioning) using two VR controllers.

Results

3D segmentation of a complex pseudoaneurysm in the distal cervical segment of the right internal carotid artery was successfully performed and projected into VR. Conventional and VR visualization modes were equally effective in identifying and classifying the pathology. VR visualization allowed the operators to manipulate the dataset to achieve a greater understanding of the anatomy of the parent vessel, the angioarchitecture of the pseudoaneurysm, and the surface contours of all visualized structures.

Conclusion

This preliminary study demonstrates the feasibility of utilizing VR for preprocedural evaluation in patients with anatomically complex neurovascular disorders. This novel visualization approach may serve as a valuable adjunct tool in deciding patient-specific treatment plans and selection of devices prior to intervention.

Keywords: Virtual reality, neurointervention, carotid pseudoaneurysm, interventional neuroradiology

INTRODUCTION

Surgical and interventional training has conventionally involved apprenticeship-based learning. Residents learn to perform various procedures under the guidance of trained proceduralists by practicing skills in simulation and then applying those skills by operating on real patients [1]. Even experienced operators benefit from practice and training via simulation before performing new procedures or before operating on particularly complex patient cases, in order to design and plan the therapeutic approach. Simulation-based training and planning for interventions has been shown to be particularly effective in improving patient safety and clinical outcomes, especially in minimally invasive or complex procedures [2]. Proceduralists can gain insightful information about three-dimensional (3D) geometries before operating on a real patient, especially given that the use of 3D tomographic patient imaging has allowed for patient-specific 3D visualizations. In fact, studies have shown that preprocedural planning is often considered to be the most important step for success in minimally invasive procedures [3]. These preprocedural visualizations can also have utility beyond preoperative planning and practice: ideally, they could be used to guide the interventionalist throughout the entire procedure by providing spatial orientation and navigational cues that may be lost during critical operative situations such as an uncontrolled hemorrhage [3].

Visualization technology takes on particular import in the current healthcare climate, given reduced training hours for residents, increasingly complex interventional cases, and an emphasis on improving operating room efficiency while providing ethical patient care [4]. With the advent of newer technologies, various methods for both virtual and physical 3D simulation, including static 2D and 3D visualizations, 3D printing, interactive virtual reality (VR), and holography, have been developed that can more realistically mimic normal patient anatomy as well as pathologic cases [5, 6]. Interacting with virtual models in a VR space has advantages over physical models in terms of customizability, cost, ease of access, and the capacity to provide real-time feedback of subtle depth cues [5]. 3D printed physical models can be costly, take time to produce, and can break or be lost, whereas interactive VR visualizations allow for repeated use without compromising the integrity of the model. While such models can also be used to create virtual simulation experiences, many of the technologies currently used for this purpose do not allow for a stereoscopic experience of a real operative field or intuitive manipulation of the simulation model using actual surgical or interventional tools [3]. Thus, more optimized, realistic virtual simulation technologies are needed.

VR encompasses a suite of technologies that create an interactive, realistic environment that can simulate various situations and provide feedback on user actions [7]. VR technology gives the user the capacity to produce and control 3D content, and it provides believable scenarios by reproducing the way in which we normally visualize objects, creating an augmented reality experience.

This study investigates the use and utility of a novel VR visualization system for vascular neuroradiology and neurointervention that is designed to enhance the interventional management of aneurysms. This system has been developed as an adjunct to the standard 2D representations.

MATERIALS AND METHODS

Data acquisition

A 3D time-of-flight MR angiogram was acquired through the cervical arteries followed by a 3D long axis high-resolution proton density weighted black blood MRI sequence. After a precontrast mask sequence was acquired, 0.1 mmol per kilogram of a gadolinium-based contrast agent was administered and a 3D contrast-enhanced MR angiogram was acquired during arterial and venous phases. 3D and long and short axis 2D high-resolution black blood MRI sequences were acquired postcontrast, including high-resolution 3D black blood MRI images in coronal and sagittal orientations with T1-weighting. Maximum-intensity projection images were reconstructed from the MRA datasets.
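The maximum-intensity projection step can be illustrated with a minimal sketch. It assumes the MRA volume is available as a nested list indexed `[z][y][x]`; the function name `maximum_intensity_projection` and the toy data are hypothetical and are not part of the scanner or D2P software.

```python
def maximum_intensity_projection(volume, axis=0):
    """Collapse a 3D volume (nested lists indexed [z][y][x]) along one axis,
    keeping the brightest voxel met along each projection ray."""
    nz, ny, nx = len(volume), len(volume[0]), len(volume[0][0])
    if axis == 0:  # project along z; result is indexed [y][x]
        return [[max(volume[z][y][x] for z in range(nz)) for x in range(nx)]
                for y in range(ny)]
    if axis == 1:  # project along y; result is indexed [z][x]
        return [[max(volume[z][y][x] for y in range(ny)) for x in range(nx)]
                for z in range(nz)]
    if axis == 2:  # project along x; result is indexed [z][y]
        return [[max(volume[z][y][x] for x in range(nx)) for y in range(ny)]
                for z in range(nz)]
    raise ValueError("axis must be 0, 1, or 2")


# Toy 2 x 2 x 3 volume (hypothetical intensities, not patient data).
toy_volume = [
    [[0, 5, 1], [2, 0, 0]],
    [[7, 1, 0], [0, 3, 9]],
]
mip_along_z = maximum_intensity_projection(toy_volume, axis=0)
print(mip_along_z)
```

Because flowing blood is bright on these angiographic sequences, keeping the maximum along each ray tends to preserve the vessel lumen while suppressing darker background tissue.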

3D segmentation

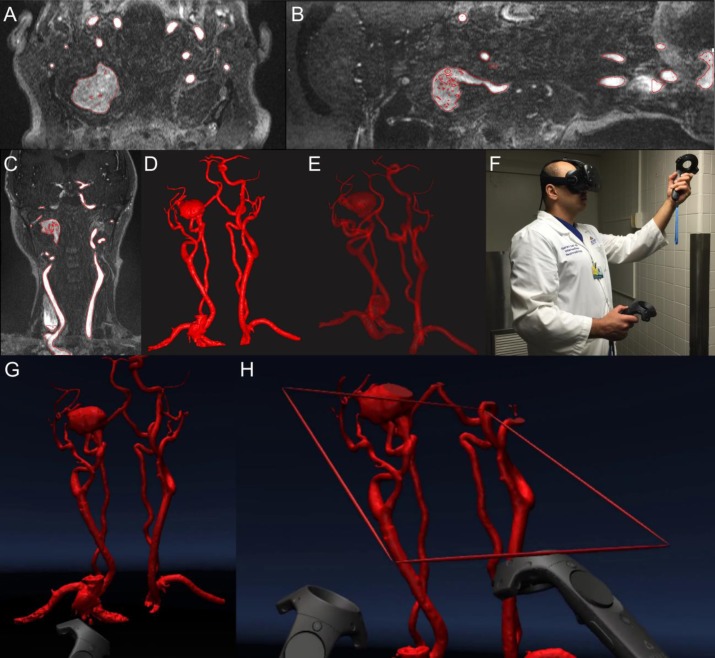

The Digital Imaging and Communications in Medicine (DICOM) dataset was imported into DICOM to Print (D2P) software (3D Systems, Rock Hill, SC). Its automatic segmentation and vascular segmentation modules were used to identify the bilateral carotid vasculature [Figure 1(A)–(C)] from its origins in the aorta and to create a preliminary 3D mask. Additional processing was performed by a careful review of individual slices to identify smaller vessels and to remove structures that were not of interest. A 3D mesh with opaque [Figure 1(D)] and translucent [Figure 1(E)] views was created from the 3D mask.

Figure 1. VR projection of right carotid pseudoaneurysm: (A) axial view, (B) sagittal view, (C) coronal view, (D) opaque view, (E) translucent view, (F) interventional neuroradiologist using VR, (G) VR projection, and (H) VR projection with real-time sectioning.

Interactive virtual reality system

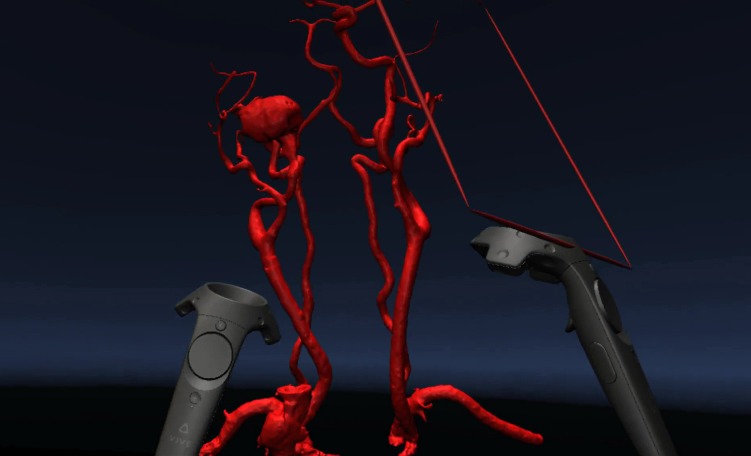

A VR system (HTC Vive, New Taipei City, Taiwan) was set up at the Johns Hopkins Carnegie 3D Printing and Visualization Facility for neurointerventionalists [Figure 1(F), Video 1] to visualize and interact with a 3D model of the right carotid pseudoaneurysm in VR. Two base stations were installed to detect the location of the operator wearing the VR headset as well as the two accompanying VR handheld controllers. The 3D mesh [Figure 1(D)] was then displayed inside the VR headset [Figure 1(G)] using D2P software (3D Systems, Rock Hill, SC). One of the handheld controllers was designated for rotation, magnification, and handling of the model, and the other was designated to act as a clipping plane [Figure 1(H)] to examine cross-sectional views of the right carotid pseudoaneurysm. For visualization, the operator could either move physically relative to the displayed object or reposition the 3D model using the controllers.

Video 1. Visualization of the right carotid pseudoaneurysm using an interactive VR system (Uploaded separately as a Supplementary File).
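The clipping-plane interaction described above can be sketched geometrically. The example below is illustrative only and does not reflect how D2P implements sectioning: it assumes the controller supplies a point on the plane and a normal vector, and it simply discards any triangle that is not entirely on the visible side, whereas a real renderer would cut triangles that straddle the plane. All names (`signed_distance`, `clip_triangles`, the toy mesh) are hypothetical.

```python
def signed_distance(point, plane_point, plane_normal):
    """Signed distance from a point to a plane; positive on the side the normal points to."""
    norm = sum(c * c for c in plane_normal) ** 0.5
    if norm == 0:
        raise ValueError("plane_normal must be non-zero")
    return sum((p - q) * n for p, q, n in zip(point, plane_point, plane_normal)) / norm


def clip_triangles(vertices, triangles, plane_point, plane_normal):
    """Keep only triangles whose three vertices all lie on the non-negative side of the plane."""
    visible = [signed_distance(v, plane_point, plane_normal) >= 0 for v in vertices]
    return [tri for tri in triangles if all(visible[i] for i in tri)]


# Toy mesh: one triangle at z = 0, one at z = 1, and one spanning both levels.
toy_vertices = [(0, 0, 0), (1, 0, 0), (0, 1, 0), (0, 0, 1), (1, 0, 1), (0, 1, 1)]
toy_triangles = [(0, 1, 2), (3, 4, 5), (0, 1, 4)]

# Hypothetical controller pose: plane through z = 0.5 with its normal pointing up.
upper_half = clip_triangles(toy_vertices, toy_triangles, (0, 0, 0.5), (0, 0, 1))
print(upper_half)
```

Flipping the normal shows the opposite half of the model, which corresponds to the operator turning the controller over.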

Operators

Two board-certified neuroradiologists (FH and GD), with 11 and 6 years of neuroimaging interpretation experience, respectively, used the VR projection system to evaluate MRI images of a complex pseudoaneurysm of the right internal carotid artery. Both operators filled in a questionnaire detailing their preferences and observations from this experience.

RESULTS

The segmented DICOM files from 3D angiographic imaging data were successfully loaded into the D2P software VR module and an evaluation was successfully performed in the VR environment.

MRA demonstrated a complex, partially thrombosed, large pseudoaneurysm involving the mid to distal right cervical ICA. The proximal ICA supplying the pseudoaneurysm was moderately to severely narrowed as it was compressed by thrombus at the level of the right lateral mass of C2. The lumen subsequently expanded within the thrombus, filling the inferior aspect of the pseudoaneurysm. There was moderate to severe flattening of the ICA after it exited the pseudoaneurysm, with the vessel compressed against the right mastoid process by the pseudoaneurysm below.

Visualization modes

Angiographic 3D mesh data could be visualized as both opaque [Figure 1(D)] and translucent [Figure 1(E)] representations; however, only the opaque projection can currently be seen in VR.

Opaque display: This mode was especially useful when evaluating surface morphological parameters such as lobulations, daughter sacs, and surface irregularities.

Translucent projection: This mode proved particularly helpful to evaluate inflow and outflow tracts and visualize the relationship between overlapping vascular structures.

Visual landmarks

Key anatomical landmarks were reliably visualized using both conventional opaque and translucent projection modes [Figure 1(A)–(E)] and the virtual environment [Figure 1(G)–(H)]. The operators were able to visualize outflow and inflow tracts and rotate the model to obtain a working view that clearly outlined a potential trajectory for endovascular device deployment.

Aneurysm size, shape, contour, and morphology were all adequately assessed, with all details defined on the conventional imaging resolved in the VR environment. As more potential views of the aneurysm could be assessed, there were a greater number of potential options for evaluation of size, shape, and volume. A true aneurysm volume could be defined (as opposed to an inferred volume based on 2D rendering). Surface morphological parameters such as lobulations, daughter sacs, and surface irregularities were all apparent to the operator. The level of granularity of assessment was at least as high as any depiction on the conventional surface-rendered radiographic imaging.
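The text does not state how the volume was computed. One standard way to obtain the volume enclosed by a segmented surface, shown here only as a sketch, is to sum signed tetrahedron volumes over the triangles of a closed mesh (an application of the divergence theorem). The sketch assumes a watertight mesh with consistently oriented faces and vertex coordinates in millimetres; the names `mesh_volume` and `tetra_vertices` are hypothetical.

```python
def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a, b):
    """Dot product of two 3D vectors."""
    return sum(x * y for x, y in zip(a, b))


def mesh_volume(vertices, triangles):
    """Volume enclosed by a closed, consistently oriented triangle mesh.

    Each triangle and the origin form a tetrahedron with signed volume
    v0 . (v1 x v2) / 6; the signed volumes sum to the enclosed volume.
    """
    total = 0.0
    for i, j, k in triangles:
        total += dot(vertices[i], cross(vertices[j], vertices[k]))
    return abs(total) / 6.0


# Toy closed mesh: a tetrahedron with 10 mm edges along each axis (not patient data).
tetra_vertices = [(0, 0, 0), (10, 0, 0), (0, 10, 0), (0, 0, 10)]
tetra_triangles = [(0, 2, 1), (0, 1, 3), (0, 3, 2), (1, 2, 3)]  # outward-facing

volume_mm3 = mesh_volume(tetra_vertices, tetra_triangles)
volume_ml = volume_mm3 / 1000.0  # 1 mL = 1000 mm^3
print(volume_mm3, volume_ml)
```

Unlike an estimate from diameters measured on a 2D rendering, this calculation uses every face of the mesh, so lobulations and daughter sacs contribute to the result.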

Procedural duration

The operators were both able to complete an entire preprocedural radiographic work-up in under 10 min, including a period of approximately 3 min to learn to operate the head-mounted VR display and the hand controllers.

Operator preferences and observations

There was a relatively short learning curve in becoming familiar with the device’s controls, getting used to the presence of the VR headset and controllers, and being inside the virtual environment. After an adjustment period, the operators found the VR headset and controllers unobtrusive and were able to wear them throughout the procedure without discomfort. Neither operator reported any nausea, vertigo, or disorientation during or after using the VR device.

DISCUSSION

The past decade has seen a concurrent increase in the use of advanced radiological imaging technologies and minimally invasive intervention in the clinical setting [8, 9]. Thus far, understanding 3D anatomical relationships has relied on visualization of 3D data representations on a flat panel screen. This case demonstrates that current 3D visualization technology allows us to move beyond traditional radiographic monitors to an interactive 3D VR experience with multiple advantages for patient care, clinical training, and medical education.

With VR visualization, the clinician is able to literally immerse themselves into the patient’s anatomy and can garner an unprecedented real-time understanding of micro- and macro-anatomy. In the case of the large pseudoaneurysm illustrated in this report, the operators were able to visualize vascular inflow and outflow tracts optimally as well as infer areas which represented intralesional thrombus. This allows for better preprocedural planning and consideration of various interventional options, including reconstructive options such as placement of stents, or deconstructive options such as vessel sacrifice.

The operators were particularly impressed by the level of detail that could be defined about surface morphological parameters, such as lobulations, daughter sacs, and surface irregularities, especially since aneurysm wall irregularity and daughter sacs have long been associated with greater risk of sac rupture [10–14].

The operators found the system intuitive, as demonstrated by the short time required to learn to operate it. They were also able to quickly adapt to the idea of being immersed in the displayed environment. The VR system uses a high-definition display with precise low-latency constellation head tracking capabilities and relies on natural sensorimotor contingencies to perceive interaction with realistic anatomy. The controllers are rendered in the virtual environment with the same dimensions and shape as the physical controllers, giving the operators two familiar objects to which they can quickly adapt. The operator is able to visualize structures from multiple viewpoints within any portion of the room by moving their own body in intuitive ways: they can turn their head, move their eyes, bend down, look under, look over, and look around to study the object from any angle.

The operator is also provided with a realistic representation of the handheld controller interface with six degrees-of-freedom and can therefore perform movements (such as picking up virtual objects) that are more intuitive than using a keyboard or mouse. This ability to orient the virtual object in any plane and place it in the visual field using handheld controllers allows the operator to mimic potential patient positioning for surgery or intervention. The observer can reach out, touch, push, pull, and perform these or a subset of these actions simultaneously to fully interpret the patient anatomy. This facility may also be helpful in evaluating images acquired in one position and interpreted in another (such as evaluating anatomic structures in a simulated erect position where the images may have been acquired supine or prone). Preliminary results of the use of this technology suggest a high level of user comfort, ease of use, and adaptation to these types of hand-held controllers [15]. Through these mechanisms, VR is able to provide an experience that gives rise to an illusory sense of place (“place illusion”) and an illusory sense of reality (“plausibility”) [16, 17].
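The six degrees of freedom mentioned above are three of rotation and three of translation. As a rough illustration, a controller pose can be applied to mesh vertices as a rotation about an axis followed by a translation. The sketch below uses Rodrigues' rotation formula; the function names, and the choice of the x-axis as the patient's left-right axis, are assumptions made for the example rather than details of the VR software.

```python
import math


def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate_about_axis(point, axis, angle_rad):
    """Rotate a point about an axis through the origin (Rodrigues' rotation formula)."""
    norm = math.sqrt(sum(c * c for c in axis))
    if norm == 0:
        raise ValueError("axis must be non-zero")
    k = tuple(c / norm for c in axis)  # unit rotation axis
    cos_a, sin_a = math.cos(angle_rad), math.sin(angle_rad)
    k_cross_p = cross(k, point)
    k_dot_p = sum(kc * pc for kc, pc in zip(k, point))
    return tuple(pc * cos_a + kxp * sin_a + kc * k_dot_p * (1 - cos_a)
                 for pc, kxp, kc in zip(point, k_cross_p, k))


def apply_pose(vertices, axis, angle_rad, translation):
    """Apply a 6-DoF pose: rotate each vertex, then translate it."""
    return [tuple(r + t for r, t in zip(rotate_about_axis(v, axis, angle_rad), translation))
            for v in vertices]


# Hypothetical example: tip a model 90 degrees about the assumed left-right (x) axis,
# as when viewing supine-acquired anatomy in a simulated erect position, then shift it.
model_points = [(0.0, 1.0, 0.0), (0.0, 0.0, 1.0)]
reoriented = apply_pose(model_points, (1, 0, 0), math.pi / 2, (1.0, 2.0, 3.0))
print(reoriented)
```

A handheld controller reports such a pose continuously, so the model follows the operator's hand rather than requiring separate mouse or keyboard commands for each axis.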

In addition to object navigation and orientation, the operator can also utilize one of the controllers as a cutting plane to remove extraneous information from the field of view. This cutting plane is again more intuitive and easier to use than the equivalent tools in traditional radiology visualization software packages. Such packages often require technical knowledge in order to crop images and are often cumbersome, requiring multiple steps, icons, and other user interface shortcuts. The HTC Vive has a controller for increasing or reducing the size of images, which can easily be manipulated with the operator’s thumb. Resizing can therefore be achieved without distracting from any of the other tasks the operator may be performing (such as cropping the image).

The literature has reported that observers uniformly remark on the realism of the resulting images of VR technology [17, 18]. VR has the advantage over conventional display screens in that it reproduces the way in which light rays hit each eye [19]. This has the effect of bringing the virtual object much closer to the operator’s eyes and rendering it in a more detailed fashion than would be feasible in a typical diagnostic radiology setting. VR can assist radiological evaluation by considerably diminishing or cancelling out the effects of unsuitable ambient conditions. The viewer is shielded from the outside environment, which limits or removes the effects of suboptimal background lighting and reflective glare, reduces visual distractions, and helps the viewer focus on the subject matter at hand [20].

Beyond the patient care setting, VR technology enables the demonstration of 3D anatomic relationships efficiently and clearly, and its use for such educational purposes is expected to increase considerably in the coming years. In an academic conference and peer-teaching setting, VR allows interactive presentations of anatomy and real-time demonstrations to colleagues much like performing an open neurosurgical procedure (without the requirement for the trainee to be in the interventional suite).

Many potential future applications of VR visualization to vascular neuroradiology and neurointervention are being explored. Our group is currently working on integrating vessel wall imaging information into a virtual environment using a dataset from black blood MRI. Additionally, advances in segmentation and coregistration may facilitate merging multiple imaging modalities (such as MRI, CT, and nuclear medicine studies) to create a composite virtual object that can detail optimal information about multiple tissues and anatomic structures. Such advances may also allow for the creation of virtual objects representing endovascular devices, which could be sized in a virtual environment prior to being used in a procedure. This would allow the operator to compare the potential merits of different device constructs in an individual case.

CONCLUSION

This preliminary study demonstrates the feasibility of using VR as an adjunct to conventional imaging technologies and physical displays for the pretherapeutic evaluation of neurovascular pathology. This novel visualization approach may represent a valuable adjunct tool for neurovascular pathology in general, particularly when subtle nuances in aneurysm morphology and angioarchitecture are critical for therapeutic decision making.

Funding Statement:

This work was supported by a grant from The Women's Board of The Johns Hopkins Hospital (2017). C.S.O. is a recipient of the American Heart Association Predoctoral Fellowship (2017-2019) and the Tan Kah Kee Foundation Postgraduate Scholarship (2017).

REFERENCES

1. Gurusamy K, et al. Systematic review of randomized controlled trials on the effectiveness of virtual reality training for laparoscopic surgery. Br J Surg. 2008;95(9):1088–1097. doi: 10.1002/bjs.6344.

2. Grantcharov TP, et al. Randomized clinical trial of virtual reality simulation for laparoscopic skills training. Br J Surg. 2004;91(2):146–150. doi: 10.1002/bjs.4407.

3. Stadie AT, et al. Virtual reality system for planning minimally invasive neurosurgery. J Neurosurg. 2008;108(2):382–394. doi: 10.3171/JNS/2008/108/2/0382.

4. Alaraj A, et al. Virtual reality cerebral aneurysm clipping simulation with real-time haptic feedback. Neurosurgery. 2015;11(1):52–58. doi: 10.1227/NEU.0000000000000583.

5. Addetia K, Lang RM. The future has arrived. Are we ready? Eur Heart J Cardiovasc Imaging. 2016;17:850–851. doi: 10.1093/ehjci/jew111.

6. Bruckheimer E, et al. Computer-generated real-time digital holography: first time use in clinical medical imaging. Eur Heart J Cardiovasc Imaging. 2016;17(8):845–849. doi: 10.1093/ehjci/jew087.

7. Schultheis MT, Rizzo AA. The application of virtual reality technology in rehabilitation. Rehabil Psychol. 2001;46(3):296–311.

8. Stepan K, et al. Immersive virtual reality as a teaching tool for neuroanatomy. Int Forum Allergy Rhinol. 2017;7(10):1006–1013. doi: 10.1002/alr.21986.

9. Marks SC. Recovering the significance of 3-dimensional data in medical education and clinical practice. Clin Anat. 2001;14(1):90–91. doi: 10.1002/1098-2353(200101)14:1<90::AID-CA1014>3.0.CO;2-X.

10. Beck J, et al. Difference in configuration of ruptured and unruptured intracranial aneurysms determined by biplanar digital subtraction angiography. Acta Neurochir. 2003;145(10):861–865. doi: 10.1007/s00701-003-0124-0.

11. Hademenos GJ, et al. Anatomical and morphological factors correlating with rupture of intracranial aneurysms in patients referred for endovascular treatment. Neuroradiology. 1998;40(11):755–760. doi: 10.1007/s002340050679.

12. Lall RR, et al. Unruptured intracranial aneurysms and the assessment of rupture risk based on anatomical and morphological factors: sifting through the sands of data. Neurosurg Focus. 2009;26(5):E2. doi: 10.3171/2009.2.FOCUS0921.

13. Ma BS, et al. Three-dimensional geometrical characterization of cerebral aneurysms. Ann Biomed Eng. 2004;32(2):264–273. doi: 10.1023/b:abme.0000012746.31343.92.

14. Sadatomo T, et al. Morphological differences between ruptured and unruptured cases in middle cerebral artery aneurysms. Neurosurgery. 2008;62(3):602–608. doi: 10.1227/01.NEU.0000311347.35583.0C.

15. Sportillo D, et al., editors. An immersive virtual reality system for semi-autonomous driving simulation: a comparison between realistic and 6-DoF controller-based interaction. Proceedings of the 9th International Conference on Computer and Automation Engineering; 2017. ACM.

16. Slater M. Place illusion and plausibility can lead to realistic behaviour in immersive virtual environments. Philos Trans R Soc Lond B Biol Sci. 2009;364(1535):3549–3557. doi: 10.1098/rstb.2009.0138.

17. Slater M. Enhancing our lives with immersive virtual reality. Front Robot AI. 2016;74.

18. Sutherland IE. A head-mounted three dimensional display. Proceedings of the Fall Joint Computer Conference, Part 1; New York, NY. December 9–11, 1968; pp. 757–764. ACM.

19. Castelvecchi D. Warped worlds in virtual reality. Nature. 2017;543(7646):473. doi: 10.1038/543473a.

20. Sousa M, et al. VRRRRoom: Virtual Reality for Radiologists in the Reading Room. Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems; 2017. pp. 4057–4062.