Abstract

Background

Web-based surveys provide an efficient means to track clinical outcomes over time without the use of clinician time for additional paperwork. Our purpose was to determine the feasibility of utilizing web-based surveys to capture rehabilitation compliance and clinical outcomes among postoperative orthopedic patients. The study hypotheses were that (a) recruitment rate would be high (>90%), (b) patients receiving surveys every two weeks would demonstrate higher response rates than patients that receive surveys every four weeks, and (c) response rates would decrease over time.

Methods

The study design involved a longitudinal cohort. Surgical knee patients were recruited for study participation during their first post-operative visit (n = 59, 34.9 ± 12.0 years of age). Patients with Internet access, an available email address and willingness to participate were counter-balanced into groups to receive surveys either every two or four weeks for 24 weeks post-surgery. The surveys included questions related to rehabilitation and questions from standard patient-reported outcome measures. Outcome measures included recruitment rate (participants consented/patients approached), eligibility (participants with email/participants consented), willingness (willing participants/participants eligible), and response rate (percentage of surveys completed by willing participants).

Results

Fifty-nine patients were approached regarding participation. Recruitment rate was 98% (n = 58). Eligibility was 95% (n = 55), and willingness was 91% (n = 50). The average response rate was 42% across both groups. There was no difference in the median response rates between the two-week (50%, range 0–100%) and four-week groups (33%, range 0–100%; p = 0.55).

Conclusions

Although patients report being willing and able to participate in a web-based survey, response rates failed to exceed 50% in both the two-week and four-week groups. Furthermore, response rates began to decrease after the first three months postoperatively. Therefore, supplementary data collection procedures may be necessary to meet established research quality standards.

Keywords: Survey instrument, outcomes assessment, response rate, compliance, Internet access, patient-reported outcomes, rehabilitation progress

Introduction

Patient-reported outcomes (PROs) are widely accepted and commonly used in health care to obtain health-related quality of life (HRQOL) data.1–3 PROs provide clinicians insight into patients’ experiences, which may include symptoms, side-effects, out-of-clinic therapies, or patient perceived effect of treatment. This information allows clinicians to alter treatments as needed according to the patient’s symptoms or progression, rather than relying on the clinician’s perceived condition of the patient. However, discrepancies remain as many clinicians continue to conduct outcome reports predominantly via staff-administered surveys instead of using patient-based reports. Patients may be able to provide additional symptoms beyond those reported by clinicians, and obtaining PROs encourages patient-clinician communication.4 In practice, patient self-reporting may result in more accurate and comprehensive information regarding patient experiences, and may improve the proficiency of data collection in clinical practice.5

While it is widely accepted in health care that patients, not physicians, should be providing HRQOL assessments, clinician-based assessments continue to be the predominant method of data collection,6 particularly in the field of orthopedics. Beyond a well-established discordance between performance-based outcomes and PROs,7–9 limited research exists regarding the application of PROs in clinical orthopedic practice. However, there is strong evidence supporting the use of PROs as a unique source of information in other clinical settings. It has been reported that physician ratings of symptoms do not correlate well with patient self-assessments of HRQOL in cases of prostate cancer treatment.3 Physicians have a tendency to report fewer symptoms and less symptom severity in later follow-ups and often underestimate the degree of patient-reported impairment.6 It has also been demonstrated that clinicians consistently report less severe patient symptoms and patient self-reports often include side effects not reported by clinicians.10,11

Currently one of the most common methods for documenting clinical outcomes is a chart review. However, this process of data collection and retrieval can be time-consuming and often requires multiple clinicians to capture the data. Throughout this process information may be misinterpreted, or simple errors may occur that result in a loss of information.2,4 Patient self-reporting removes several intermediate steps and may improve capture and consistency of recording treatment outcomes, compliance, and patient satisfaction.10

With the continuing growth of computer access, the Internet, and other electronic communications, there has been a large increase in the number of instruments available for web-based surveys and outcome tracking. The use of electronically collected data allows large volumes of information to be stored and easily transmitted for both clinical care and research.4 Using web-based interfaces may increase the depth and accuracy of available clinical data, save administrative time, and enhance consistency of data collection across treatment sites.12 Using the Internet to collect PROs also provides a unique advantage in that patients are able to report symptoms in real time between clinic visits, thus allowing reporting within home environments.4 Patients have reported that using a web-based interface improved discussion and communication with clinicians.4 Additionally, the consistent capture of patient outcomes may provide earlier reporting of signs or symptoms which may be “red flags” for clinicians, allowing for a quicker response and improved patient safety.4,5,13 Overall the use of electronic data capture, particularly through patient-assessed web-based interfaces, has a tremendous potential to enhance both clinical care and efficacy in research.

There is a further need for integrating patient-based measures into daily practice and developing a more effective method to distribute quality of life (QOL) assessments.6 Allowing patients to submit self-reported outcomes and rehabilitation participation longitudinally will provide more comprehensive information to better evaluate patient progress and enhance communication between patients and clinicians. With the growth of Internet access, collecting web-based surveys at multiple time points throughout treatment or rehabilitation may be a useful means to capture patient perceptions and behaviors. A web-based survey provides a means to track data over time more efficiently because it does not rely on the patient returning to the clinic to report outcomes. However, it is unknown whether it is feasible to capture PROs through the web in an orthopedic population. Factors such as patient willingness and compliance with participation in web-based surveys determine the feasibility of capturing this information. Therefore, the purpose of this study was to determine the feasibility of conducting a web-based survey to capture compliance and self-reported outcomes among postoperative orthopedic patients over time. Three hypotheses guided the study: (a) recruitment rate would be high (>90%), (b) patients receiving surveys every two weeks would demonstrate higher response rates than patients receiving surveys every four weeks, and (c) response rates would decrease over time in both groups.

Methods

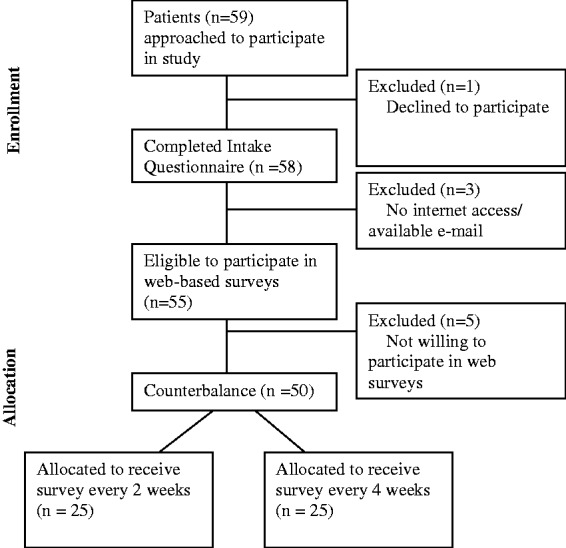

This was a prospective longitudinal cohort study. Patients were included in this study if they had undergone knee surgery. To be eligible for participation in the study, patients needed to be between the ages of 16–65 years and have undergone surgery related to patellar instability, ligament, cartilage, or meniscus injury. Patient recruitment and enrollment for this study is modeled in Figure 1.

Figure 1.

Patient recruitment.

Eligible and willing patients read and signed an informed consent document approved by the University of Kentucky Institutional Review Board (IRB#11-0644-P6A), in accordance with the ethical standards set forth in the 1964 Declaration of Helsinki. During screening, patients were asked to provide demographic information including education level, preferred method of contact (text message, phone, email, or standard mail), access to Internet, and employment status. If the patient did not have Internet access or was not willing to participate in further web-based surveys, she/he was no longer eligible to participate in the study. Patients who had Internet access and were willing to participate were allocated in a counter-balanced manner (1:1) to receive surveys every two or four weeks. Based on group assignment, participants were sent recurring emails with a secure link to the appropriate survey along with log-in identification information. Depending on group assignment, patients received their initial survey either two or four weeks following surgery (one or three weeks following study enrollment). Those in the two-week group received a shortened survey (Supplementary Material, Appendix A) at weeks 2, 6, 10, 14, 18, 22, and 26, while all subjects received a longer survey (Supplementary Material, Appendix B) at weeks 4, 8, 12, 16, 20, and 24. In addition to recurring emails, reminders were sent to participants at regular intervals if they did not complete a survey within the allocated time period. A maximum of two reminders were sent for each time point. Reminders were sent two and five days after the initial email if the survey was still not completed. If the survey for a given time point was not completed within one week of the initial email, that time point was considered missed, and the participant moved on to the next survey time point. After two missed survey time points, the research staff contacted the participant via phone to remind him/her to complete the survey.
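The survey and reminder timing described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names (`survey_weeks`, `contact_plan`) are hypothetical, and the sketch assumes each initial email goes out exactly on the week anniversary of surgery, which the study does not specify. The actual distribution was handled through REDCap.

```python
from datetime import date, timedelta

# Survey weeks after surgery, as listed in the Methods.
SHORT_SURVEY_WEEKS = [2, 6, 10, 14, 18, 22, 26]  # two-week group only
LONG_SURVEY_WEEKS = [4, 8, 12, 16, 20, 24]       # all participants

REMINDER_OFFSETS_DAYS = (2, 5)  # reminders 2 and 5 days after the initial email
MISSED_AFTER_DAYS = 7           # time point counted as missed after one week


def survey_weeks(group):
    """Return the sorted post-surgical weeks at which a group receives a survey."""
    if group == "two-week":
        return sorted(SHORT_SURVEY_WEEKS + LONG_SURVEY_WEEKS)
    if group == "four-week":
        return list(LONG_SURVEY_WEEKS)
    raise ValueError(f"unknown group: {group!r}")


def contact_plan(surgery_date, group):
    """Build (week, email date, reminder dates, missed-after date) tuples.

    Assumption (not from the study): the initial email is sent exactly
    `week` weeks after the surgery date.
    """
    plan = []
    for week in survey_weeks(group):
        sent = surgery_date + timedelta(weeks=week)
        reminders = [sent + timedelta(days=d) for d in REMINDER_OFFSETS_DAYS]
        missed_after = sent + timedelta(days=MISSED_AFTER_DAYS)
        plan.append((week, sent, reminders, missed_after))
    return plan
```

Under this sketch the two-week group has 13 scheduled time points and the four-week group has 6, which is the denominator each participant's response rate is computed against.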

Instrumentation

The web-based surveys were created through Research Electronic Data Capture (REDCap Survey Software, Version 1.3.10, copyright 2011 Vanderbilt University).14 REDCap is a secure web-based application designed to support data storage and collection for research studies. All information is password protected and stored on bioinformatics servers maintained in the university’s secure data center. Web-based surveys were used to assess patient function and report frequency of physical therapy sessions. The surveys created in REDCap included questions created by research personnel and also incorporated questions from the following scales: Lysholm Knee Scale, International Knee Documentation Committee (IKDC) Subjective Knee Form and Modified Cincinnati Knee Rating Scale.15–17 The two-week survey was designed to be a “check-in” survey to test whether more frequent communication improved response rates. Additionally, certain questions, such as those incorporated from the IKDC, specify “in the last month,” making it potentially invalid to ask them more frequently than every four weeks. Questions regarding physical therapy attendance and crutch use, along with the Modified Cincinnati Knee Rating Scale, were included in both the two-week and four-week surveys.

Data reduction and statistical analysis

The independent variable of this study was group (two-week or four-week) based on the frequency of the survey. The outcome variables included the percentage of patients agreeing to participate in the intake questionnaire (recruitment rate), the percentage of patients capable of participating in the web-based survey (eligibility), the percentage of patients willing to participate in the web-based survey (willingness), and the percentage of web-based questionnaires completed (response rate). Overall compliance was evaluated categorically for each participant based on response rate. Compliance was categorized as follows: completion of less than 50% of the surveys was considered poor compliance, 50–70% completion was considered good compliance, and greater than 70% completion was considered excellent compliance.18 Non-compliance was defined as not completing any of the web-based surveys after agreeing to participate. Feasibility was defined as the percentage of all patients approached for the study that went on to participate in the study with at least good compliance (number of patients with at least good compliance/number of patients approached for study). For both groups, data were collected for six months following surgery.
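The compliance categories and the feasibility ratio defined above translate directly into a small classifier. The sketch below is illustrative only; the function names are hypothetical, and integer arithmetic is used so that a participant at exactly 50% or exactly 70% lands in the "good" category without floating-point rounding surprises.

```python
def compliance_category(completed, sent):
    """Classify one participant from surveys completed out of surveys sent.

    Categories follow the definitions in the text:
    0 completed -> non-compliant, <50% -> poor, 50-70% -> good, >70% -> excellent.
    """
    if sent <= 0 or not 0 <= completed <= sent:
        raise ValueError("need 0 <= completed <= sent and sent > 0")
    if completed == 0:
        return "non-compliant"
    if 2 * completed < sent:          # response rate below 50%
        return "poor"
    if 10 * completed <= 7 * sent:    # response rate from 50% through 70%
        return "good"
    return "excellent"


def feasibility(categories, n_approached):
    """Percentage of approached patients with at least good compliance."""
    at_least_good = sum(c in ("good", "excellent") for c in categories)
    return 100 * at_least_good / n_approached
```

For example, a four-week participant who returned 3 of 6 surveys would be classified as "good", while one who returned 2 of 6 would be "poor".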

Data were analyzed using descriptive statistics (percentages and frequencies) for all applicable variables for all participants. Because the data failed to meet the assumption of a normal distribution, the difference between response rates of the two groups (two-week or four-week) was evaluated using a Mann-Whitney U test, and changes in response rate over time (first 12 weeks vs weeks 14–26) were compared using a related-samples Wilcoxon signed-rank test, with the alpha level set a priori at 0.05. Categorical compliance rates between the two groups were analyzed using a chi-square test (p < 0.05).
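For readers unfamiliar with the rank-based group comparison named above, the following is a minimal pure-Python sketch of a Mann-Whitney U test. It is not the authors' analysis code (the statistical software used is not stated), and it makes explicit assumptions: the two-sided p-value comes from the normal approximation with a tie correction and no continuity correction, so it will differ slightly from exact or continuity-corrected results reported by statistical packages.

```python
import math


def midranks(values):
    """Rank values from 1, giving tied values the average of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        average_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Return (U statistic for x, two-sided p-value).

    Assumption: p-value uses the normal approximation with tie correction
    and no continuity correction.
    """
    n1, n2 = len(x), len(y)
    combined = list(x) + list(y)
    ranks = midranks(combined)
    u1 = sum(ranks[:n1]) - n1 * (n1 + 1) / 2

    n = n1 + n2
    tie_counts = {}
    for v in combined:
        tie_counts[v] = tie_counts.get(v, 0) + 1
    tie_term = sum(t ** 3 - t for t in tie_counts.values())
    variance = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if variance == 0:  # every observation tied: no evidence of a difference
        return u1, 1.0
    z = (u1 - n1 * n2 / 2) / math.sqrt(variance)
    return u1, math.erfc(abs(z) / math.sqrt(2))
```

Here `x` and `y` would be the per-participant response rates of the two-week and four-week groups. Because many participants share response rates of 0% or 100%, the tie correction matters for data of this shape.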

Results

A total of 59 patients of a single orthopedic surgeon who had undergone orthopedic knee surgery requiring formal post-operative rehabilitation were approached and invited to participate. There was a recruitment rate of 98% (58/59), with one patient who declined enrollment and 58 patients who agreed to participate in the intake questionnaire. Based on Internet access and available email, 95% (55/58) of patients were eligible to participate in the web-based survey; one patient did not have Internet access (age 29) and two additional patients did not have an available email address (age 16 and 54 years). Of these 55 patients, five patients were not willing to further participate in the study. Therefore, 91% (50/55) of patients with Internet/email access were willing to participate in the web-based surveys. All patients having Internet access reported that access to be in their home.
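The recruitment funnel reported above can be checked with simple arithmetic. The sketch below uses only the counts given in the text; the function name `funnel_rates` is hypothetical, and each rate uses the previous stage as its denominator, as defined in the Methods.

```python
def funnel_rates(approached, consented, eligible, willing):
    """Return stage-to-stage percentages, rounded to whole numbers."""
    return {
        "recruitment": round(100 * consented / approached),
        "eligibility": round(100 * eligible / consented),
        "willingness": round(100 * willing / eligible),
    }


# Counts reported in the Results.
rates = funnel_rates(approached=59, consented=58, eligible=55, willing=50)
print(rates)
```

Note that the three rates are conditional on the preceding stage; relative to everyone approached, 50 of 59 patients went on to receive web-based surveys.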

Patients participating in the on-line survey included 29 females (58%) and 21 males (42%), with an average age of 35.4 years old (range 17–66). Among the 58 participants who participated in the intake questionnaire, the most common preferred method of contact was email (43%), followed by telephone (33%), text (15%) and mail (9%). The five patients unable/unwilling to participate in the online survey included one female and four males; two patients preferred contact by phone, while the remaining three patients preferred contact by email, text, and mail. Table 1 provides information relative to patient demographics.

Table 1.

Patient demographics.

| | Total (n = 58) | Two-week group (n = 25) | Four-week group (n = 25) |
|---|---|---|---|
| Age (mean/SD) | 35.1 (11.) | 36.4 (11.4) | 34.4 (11.7) |
| Gender (n/%) | | | |
| Female | 31 (53.4%) | 14 (56%) | 15 (60%) |
| Male | 27 (46.6%) | 11 (44%) | 10 (40%) |
| Race (n/%) | | | |
| African American | 5 (8.6%) | 2 (8%) | 3 (12%) |
| Caucasian | 51 (87.9%) | 22 (88%) | 21 (84%) |
| Hispanic | 2 (3.5%) | 1 (4%) | 1 (4%) |
| Education level (n/%) | | | |
| Some high school | 14 (24.1%) | 3 (12%) | 6 (24%) |
| High school | 9 (15.5%) | 2 (8%) | 6 (24%) |
| Some college | 6 (10.3%) | 4 (16%) | 2 (8%) |
| Associates/2-year degree | 10 (17.2%) | 4 (16%) | 5 (20%) |
| Bachelors/4-year degree | 12 (20.7%) | 8 (32%) | 3 (12%) |
| Graduate degree | 7 (12.2%) | 4 (16%) | 3 (12%) |
| Employment status (n/%) | | | |
| Employed | 33 (56.9%) | 19 (76%) | 12 (48%) |
| Unemployed | 22 (38.0%) | 6 (24%) | 11 (44%) |
| Retired | 1 (1.7%) | | 1 (4%) |
| No answer | 2 (3.4%) | | 1 (4%) |
| Workers comp. case (n/%) | | | |
| Yes | 5 (8.7%) | 2 (8%) | 2 (8%) |
| No | 51 (87.9%) | 22 (88%) | 23 (92%) |
| No answer | 2 (3.4%) | 1 (4%) | |
| Type of surgery (n/%) | | | |
| ACI | 10 (17.2%) | 3 (12%) | 7 (28%) |
| ACL | 19 (32.8%) | 8 (32%) | 8 (32%) |
| MPFL | 6 (10.3%) | 2 (8%) | 3 (12%) |
| Microfracture | 3 (5.2%) | 2 (8%) | 1 (4%) |
| Meniscus repair | 4 (6.9%) | 2 (8%) | 2 (8%) |
| Other | 15 (25.9%) | 7 (28%) | 4 (16%) |
| No answer | 1 (1.7%) | 1 (4%) | |
| Preferred method of contact (n/%) | | | |
| Email | 25 (43.1%) | 14 (56%) | 10 (40%) |
| Telephone | 19 (32.8%) | 7 (28%) | 8 (32%) |
| Mail | 5 (8.6%) | 1 (4%) | 3 (12%) |
| Text | 9 (15.5%) | 3 (12%) | 4 (16%) |

ACI: autologous chondrocyte implantation; ACL: anterior cruciate ligament; MPFL: medial patellofemoral ligament; SD: standard deviation.

Response rate

There was an overall response rate of 43% among the 50 participants over a 24 (four-week group) to 26 (two-week group) week follow-up. When compared between groups, those receiving a survey every two weeks had a median response rate of 50% (range 0–100%; mean = 44 ± 32%) compared to a median response rate of 33% (range 0–100%; mean = 40 ± 40%) among those receiving a survey every four weeks (p = 0.55). Table 2 illustrates survey compliance among the two-week and four-week groups. There were no statistically significant differences in compliance between groups (p = 0.097). There were four participants in the two-week group and 10 participants in the four-week group that were non-compliant. The two-week group also had six participants that had excellent compliance. The four-week group had a total of eight participants that had excellent compliance. A total of five patients completed 100% of the web-based surveys sent to them.

Table 2.

Survey compliance.

| | Two-week group (n = 25) | Four-week group (n = 25) | Total |
|---|---|---|---|
| Non-compliant (0%) | 16% (4/25) | 40% (10/25) | 28% |
| Poor (<50%) | 32% (8/25) | 20% (5/25) | 26% |
| Good (50–70%) | 28% (7/25) | 8% (2/25) | 18% |
| Excellent (>70%) | 24% (6/25) | 32% (8/25) | 28% |

Figure 2 illustrates the response rate of all participants receiving web-based surveys at each time point throughout the entire distribution period. The figure initially shows an identical response rate of 48% at four weeks among all participants. Response rate among the two-week group ranged from a high of 58% at 12 weeks to a low of 29% at 22 weeks. In the four-week group the highest response rate occurred at the initial four-week survey and declined to a low of 33% at the final 24-week survey. With the exception of week 8 (44% and 48%) the two-week group consistently had a higher response rate compared to the four-week group. Across both groups a higher response rate was observed in the first 12 weeks than in weeks 14 through 26 (median response rate of 48% vs 38%, p = 0.028).

Figure 2.

Response rate over time.

Feasibility

Of the 59 patients approached to participate in the study, 39% (23/59) had at least good compliance (response rate of 50% or higher).
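The feasibility figure follows from the per-group counts in Table 2. As a hedged worked example, the short script below recomputes the pooled category percentages and the three denominators used in this paper (patients approached, survey participants, and participants who completed at least one survey). The variable names are illustrative; the counts are taken from Table 2 and the Results.

```python
# (two-week count, four-week count) for each compliance category, from Table 2.
TABLE_2 = {
    "non-compliant": (4, 10),
    "poor": (8, 5),
    "good": (7, 2),
    "excellent": (6, 8),
}
N_APPROACHED = 59

totals = {category: sum(counts) for category, counts in TABLE_2.items()}
n_participants = sum(totals.values())                      # 50 survey participants
at_least_good = totals["good"] + totals["excellent"]       # 23 patients
completed_any = n_participants - totals["non-compliant"]   # 36 patients


def percent(part, whole):
    """Whole-number percentage of part out of whole."""
    return round(100 * part / whole)


pooled_percent = {c: percent(n, n_participants) for c, n in totals.items()}
feasibility = percent(at_least_good, N_APPROACHED)
good_among_participants = percent(at_least_good, n_participants)
good_among_completers = percent(at_least_good, completed_any)
```

The same 23 patients therefore correspond to 39% of those approached, 46% of survey participants, and 64% of those who returned at least one survey, which is why the choice of denominator should always be stated alongside a compliance figure.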

Discussion

The purpose of this study was to determine the feasibility of utilizing web-based surveys to capture compliance and PROs among post-operative orthopedic patients over time. It was hypothesized that there would be an excellent recruitment rate, the two-week group would have a higher response rate than the four-week group, and response rate would decrease over time in both groups. This study demonstrated a high recruitment rate (98%), high eligibility (95%) based on Internet access and email availability, and a high willingness (91%) of patients to participate in web-based surveys. There was an average response rate of 42%, with the two-week group showing a slightly higher but not significantly different response rate (44%) compared to the four-week group (40%). Overall, 46% of participants demonstrated good or excellent compliance with returning study surveys. This is one of the first studies to examine the feasibility of web-based surveys in an orthopedic population. Therefore, when considering the feasibility and practicality of utilizing a web-based survey to document PROs and rehabilitation progress among post-operative orthopedic patients, it is reasonable to anticipate obtaining results from 39% of the patients approached.

Recruitment rate

This study demonstrated a high recruitment rate (98%) among post-surgical orthopedic knee patients to regularly self-report progress during the rehabilitation process. Recruitment rate in the present study was likely high because the sample population was individually contacted in person during a regularly scheduled clinic visit. Patients also may have been more likely to agree to participate because the data collected via the survey were specific to their health status. The literature reports that participants are more likely to participate in surveys that relate to personal interests and behaviors.19 The patients were reporting their status following a recent surgery and subsequent rehabilitation; because the intrinsic value was high, we expected that the recruitment rate would be high.

The results of this study agree with previous research19,20 suggesting that salience and personal relevance are important factors in recruitment rate. A recruitment rate of 85% was reported among lung cancer patients who were approached during a clinic visit to self-report symptoms. Patients were given the option of completing surveys using computers in the waiting area and also had the option of home access.5 The literature reports a recruitment rate ranging from 48–70% when the patients’ own health care or condition is not the subject being evaluated in the survey.21,22 Because our study inquired about the patients’ own health care and rehabilitation progress, we feel this was instrumental in our high recruitment rate.

A unique component of our study was evaluating the eligibility and willingness of patients to respond. In the present study, 95% of the patients had Internet access and an email address, making them eligible to participate in the web-based surveys, and 91% of these eligible patients were willing to participate in the surveys. Similar to our findings, an eligibility of 96% and a willingness of 86% have been reported in a sample of lung cancer patients completing web-based PROs, both in a clinic and with an at-home option.5 The high rates of willingness to complete web-based health-related surveys support the use of this methodology in current practice. The high eligibility rates demonstrated in the present study could be attributed to the growth of technologies and Internet access among patients.

Internet access

The use of technology as a means of distributing health-related questionnaires is feasible if the technology is available to the population of interest. In this study 95% of patients reported having Internet access and an available email address, with 100% of these patients having Internet access at home. This rate of access was notably higher than previously reported Internet access. In 1998, 42% of US households reported owning a computer and 26% had an email connection.23 In 2003, 46% of rural and urban Indiana cancer clinic patients reported having access to the Internet.24 The US Census reported in 2007 that 66% of Kentucky residents had Internet access at some location (school, library, etc.) and 60% had Internet access in their homes.25

Preferred method of contact

The most commonly reported preferred method of contact in this study was email (43%), followed by telephone (33%), text (15%) and postal mail (9%). Although texting may be a common way of communication in the general public, it is reasonable that most patients did not prefer to be contacted by research staff in such a personal method. Electronic mail provides a quick contact and response time, allowing the patient the convenience of responding on his or her own time compared to the instant response required with telephone contacts. Historically, the most common method of survey distribution is postal mail followed by telephone interviews.18–20 Interestingly, in the present study less than 35% of patients preferred to be contacted by telephone and less than 10% by mail. These findings are in agreement with previous research demonstrating that email or in-person communication is the preferred communication method for patients for a variety of physician-patient interactions across a wide range of health conditions.26 Furthermore, there is evidence to suggest that electronic communications, specifically email, may improve clinical outcomes.27 It is evident that as technology continues to grow, new distribution methods will be required in order to capture information from a greater number of subjects.

Response rate

This study reports an overall response rate of 43% across all time points in both the two-week and four-week groups. Previous literature has reported response rates with web-based surveys between 51–83%4,20,28 with a response rate of 56% reported for a previous orthopedic web-based health survey.29 However, when comparing this study to others in the literature it is important to remember that this was a longitudinal study examining response rates over a six-month postoperative period, whereas some other studies may have only examined response rates for a single time point. In the current study, a trend of decreasing response rates was observed over time, providing a possible explanation for lower response rates. Although the average response rate was 42%, response rates for the first 12 weeks were higher (44–52%) and then decreased to 36% at 16 weeks and 30% at 24 weeks. The trend of decreased response rates after week 12 may relate to patients’ clinical experience, as they are often discharged from physical therapy around the three-month time point and many physical activity restrictions are removed. At this time point patients may have felt the questions on the survey were no longer relevant to them as their post-operative recovery and knee health were no longer at the forefront of their day-to-day activities.19 Similarly, decreasing response rates over time may represent responder fatigue regardless of group membership.

It has been reported that longer questionnaires produce lower response rates.30 A recent study compared response rates between patients completing a web-based survey that took 15–30 min and patients completing a survey that took 30–45 min. The response rate was higher in patients that completed the shorter surveys (24% vs 17%).30,31 In the present study, the four-week survey consisted of 37 questions, estimated to take 10–15 min, while the two-week version consisted of 13 questions, estimated to take 5–10 min. Those in the two-week group were asked to complete both the short (weeks 2, 6, 10, 14, 18, 22, and 26) and the long survey (weeks 4, 8, 12, 16, 20, and 24). Within the two-week group, the average response rate for the long survey (46%) was actually greater than the average response rate for the short survey (41%). Therefore, it appears that among our patients, as long as the survey took less than 15 min to complete, length was not a factor that influenced response rate.

Survey compliance

Survey compliance was established based on the categorization of response rates. Overall, 28% of patients had excellent compliance, completing over 70% of the surveys sent to them, while 28% were non-compliant, completing no surveys. An additional 26% had poor compliance, completing less than half of the surveys sent to them. Reasons for non-compliance are multi-factorial and may include technical problems (e.g. non-deliverable email, server malfunctions), discontinuation of treatment or therapy, symptom resolution, or general disinterest. Using technology to capture patient symptoms lends itself to the possibility of technical problems. It has previously been reported that up to 5% of participants completing on-line surveys reported technical problems. In the present study, technical difficulties were experienced. The difficulties were discovered after participants reported not being able to submit surveys. This was then reported to REDCap technical support, and it took over a month to identify the cause of the disruption in the survey system.

Feasibility

Feasibility was defined as the percentage of all participants approached during their clinic visit that went on to participate in the study with at least good compliance. Of all patients that were approached in the clinic to participate in the study, a total of 39% were considered to have at least good compliance. The literature reports that a response rate of at least 50% is generally considered adequate for analysis and reporting in order to avoid a response bias.18 Although 39% is below the recommended response rate for survey data, among those who did complete at least one survey, 64% had good or excellent compliance. In this same sub-sample of patients the mean response rate was 57%, suggesting that the biggest hurdle to acceptable study participation levels may be those patients who agree to participate but fail to follow through on even a single survey. For longitudinal studies, this observation underscores the importance of participant selection, of ensuring patients have an adequate understanding of study expectations, and of making clear that declining to participate is acceptable should they be unable or unwilling to meet those expectations.

Other scales for assessing the quality of a research design, such as the Physiotherapy Evidence Database (PEDro) scale, suggest that at least 85% of the subjects initially allocated into groups must complete a key outcome study.32 Our study included all patients eligible to participate, yet only 72% of participants completed at least one survey, still not meeting this PEDro criterion. These results, combined with previous research,4,5 suggest that obtaining at least one outcome data point during an in-clinic visit and/or providing patients with the ability to complete surveys during clinic visits may be necessary to ensure study feasibility.

Limitations

Limitations of this study include enrollment of only post-operative patients at an urban orthopedic medical center in central Kentucky. All participants enrolled were patients who had undergone knee surgery performed by a single orthopedic surgeon, which then required regular, ongoing physical therapy. Although the clinic is a comprehensive medical center, the results from this study may not be generalizable to all populations. However, our participants represented a heterogeneous sample of patients with varying levels of education and employment status suggesting that replication of our methodology in similar locales may produce similar results.

An additional limitation of the present study was that incentives were not used. There has been a reported increase in response rate with the use of incentives. Although incentives were not used in our study and can be difficult to incorporate into web-based surveys, they may prove beneficial to improve response rates.19 There were also limitations involved in the web-based survey. Some of the participants received web-based surveys during the holidays (Thanksgiving to New Year) and non-compliance was observed during this time from participants who had been compliant prior to and subsequently following the holiday season. There were also periodic technical difficulties experienced in which participants were not able to complete the web-based survey. Participants were asked to report any problems experienced with the surveys, and these responses were considered compliant with that time point as the participant had taken the time to try to complete the survey and then reported these difficulties to research personnel. Finally, reasons regarding failure to complete the survey were not formally collected, in part because the majority of those who failed to return surveys could not be reached for follow-up despite numerous attempted phone calls. Future qualitative investigation regarding why individuals may choose not to complete follow-up health related surveys is recommended.

Conclusion

The results of this study supported our first hypothesis, that the overall recruitment rate would exceed 90%. We observed a high recruitment rate (98%), high eligibility (95%) based on Internet access and email availability, and a high willingness (91%) of patients to participate in web-based surveys. Our second hypothesis, that patients receiving surveys every two weeks would demonstrate a higher response rate than patients receiving surveys every four weeks, was not supported. There was an average response rate of 42%, with the two-week group showing a slightly higher but not significantly different response rate (44%) than the four-week group (40%). Similarly, a difference in compliance was not observed between groups. Overall, 39% of patients approached for participation demonstrated good or excellent survey compliance over a six-month post-operative period. Finally, our results supported our third hypothesis, with both groups demonstrating decreases in response rates after the 12-week follow-up survey.
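As an arithmetic check, the three feasibility percentages above can be reproduced from the counts reported in the abstract (59 patients approached, 58 consented, 55 eligible, 50 willing) using the outcome definitions given in the Methods. The Python sketch below is illustrative only; the variable names and the helper `pct` are hypothetical and were not part of the study's analysis.

```python
# Counts as reported in the abstract of this study.
approached = 59   # patients approached regarding participation
consented = 58    # participants who consented
eligible = 55     # consented participants with Internet access and email
willing = 50      # eligible participants willing to receive surveys


def pct(numerator: int, denominator: int) -> int:
    """Return a percentage rounded to the nearest whole number."""
    return round(100 * numerator / denominator)


# Each rate uses the denominator named in the Methods definitions.
rates = {
    "recruitment": pct(consented, approached),  # consented / approached
    "eligibility": pct(eligible, consented),    # eligible / consented
    "willingness": pct(willing, eligible),      # willing / eligible
}

for name, value in rates.items():
    print(f"{name}: {value}%")
```

Each rate is conditional on the preceding step, so the denominators shrink from 59 to 58 to 55; the three values are not all expressed as a share of the patients originally approached.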

This is one of the first studies to examine the feasibility of web-based surveys in an orthopedic population, and it should be noted that these results may not be generalizable to other healthcare areas. The results demonstrate that although orthopedic patients report being willing and able to participate in a web-based survey, response rates began to decrease after the first three months postoperatively, and a subset of patients failed to complete any research activities. Failure to complete any surveys was particularly problematic in the four-week group, where 40% of patients failed to return a single survey. However, the percentage of participants achieving good or excellent compliance did not differ between groups, suggesting that there is no meaningful advantage to sending outcomes surveys at two-week intervals compared to four-week intervals. Overall, these findings demonstrate that although it is feasible to conduct a web-based survey to collect PROs and rehabilitation progress in post-operative orthopedic patients, supplementary data collection procedures may be necessary to meet established research quality standards.

Acknowledgements

The authors would like to thank Christian Lattermann for his assistance with patient recruitment for this study.

Contributorship

JH, JT, AM and CM researched literature and conceived the study. JH, AM, CWC, and JT were involved in protocol development, gaining ethical approval, patient recruitment and data analysis. AM wrote the first draft of the manuscript. JH and JT completed subsequent revision and additional analyses with assistance from CWC and CM. All authors reviewed and edited the manuscript and approved the final version of the manuscript.

Declaration of Conflicting Interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Ethical approval

The University of Kentucky Institutional Review Board approved this study (IRB#110644-P6A).

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: This project did utilize resources supported by the National Center for Research Resources and the National Center for Advancing Translational Sciences, National Institutes of Health, through Grant 8UL1TR000117-02. The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH.

Guarantor

JH

Peer review

This manuscript was reviewed by Christopher Golby, University of Warwick and one other reviewer who has chosen to remain anonymous.

Supplemental material

The online supplementary material is available at http://dhj.sagepub.com/supplemental.

References

- 1. Wiklund I. Assessment of patient-reported outcomes in clinical trials: The example of health-related quality of life. Fundam Clin Pharmacol 2004; 18: 351–363.

- 2. Fairclough D. Patient reported outcomes as endpoints in medical research. Stat Methods Med Res 2004; 13: 115–138.

- 3. Webster K, Cella D, Yost K. The Functional Assessment of Chronic Illness Therapy (FACIT) measurement system: Properties, applications, and interpretation. Health Qual Life Out 2003; 1: 79.

- 4. Basch E, Artz D, Dulko D, et al. Patient online self-reporting of toxicity symptoms during chemotherapy. J Clin Oncol 2005; 23: 3552–3561.

- 5. Basch E, Iasonos A, Barz A, et al. Long-term toxicity monitoring via electronic patient-reported outcomes in patients receiving chemotherapy. J Clin Oncol 2007; 25: 5374–5380.

- 6. Sonn G, Sadetsky N, Presti J, et al. Differing perceptions of quality of life in patients with prostate cancer and their doctors. J Urol 2009; 182: 2296–2302.

- 7. Mizner RL, Petterson SC, Clements KE, et al. Measuring functional improvement after total knee arthroplasty requires both performance-based and patient-report assessments: A longitudinal analysis of outcomes. J Arthroplasty 2011; 26: 728–737.

- 8. Jacobs CA, Christensen CP. Correlations between knee society function scores and functional force measures. Clin Orthop Relat Res 2009; 467: 2414–2419.

- 9. Maly MR, Costigan PA, Olney SJ. Determinants of self-report outcome measures in people with knee osteoarthritis. Arch Phys Med Rehabil 2006; 87: 96–104.

- 10. Basch E. The missing voice of patients in drug-safety reporting. N Engl J Med 2010; 362: 865–869.

- 11. Pakhomov S, Jacobsen S, Chute C, et al. Agreement between patient-reported symptoms and their documentation in medical record. Am J Manag Care 2008; 14: 530–539.

- 12. Lakeman R. Using the Internet for data collection in nursing research. Comput Nurs 1997; 13: 269–275.

- 13. Basch E. Patient-reported outcomes in drug safety evaluation. Ann Oncol 2009; 20: 1905–1906.

- 14. Harris P, Taylor R, Thielke R, et al. Research electronic data capture (REDCap)–a metadata-driven methodology and workflow process for providing translational research informatics support. J Biomed Inform 2009; 42: 377–381.

- 15. Lysholm J, Gillquist J. Evaluation of knee ligament surgery results with special emphasis on use of a scoring scale. Am J Sports Med 1982; 10: 150–154.

- 16. Irrgang J, Anderson A, Boland A. Development and validation of the International Knee Documentation Committee Subjective Knee Form. Am J Sports Med 2001; 29: 600–613.

- 17. Browne J, Anderson A, Arciero R. Clinical outcome of autologous chondrocyte implantation at 5 years in US subjects. Clin Orthop 2005; 436: 237–245.

- 18. Babbie E. Survey research methods, 2nd ed. Belmont, California: Wadsworth Publishing Company, 1990.

- 19. Dillman D. Mail and Internet surveys, 2nd ed. Hoboken, New Jersey: John Wiley and Sons Inc, 2007.

- 20. Hoonakker P, Carayon P. Questionnaire survey nonresponse: A comparison of postal mail and internet surveys. Int J Hum-Comput Int 2009; 25: 348–373.

- 21. Velikova G, Booth L, Smith A, et al. Measuring quality of life in routine oncology practice improves communication and patient well-being: A randomized controlled trial. J Clin Oncol 2004; 22: 714–724.

- 22. Miller D, Brownlee C, McCoy T, et al. The effect of health literacy on knowledge and receipt of colorectal cancer screening: A survey study. BMC Family Practice 2007; 8: 1–7.

- 23. Irving L, Klegar-Levy K, Everette D, Reynolds T and Lader W. Falling through the Net: Defining the digital divide. A Report on the Telecommunications and Information Technology Gap in America. Washington, DC: National Telecommunications and Information Administration, US Department of Commerce, 1999.

- 24. Abdullah M, Theobald D, Butler D. Access to communication technologies in a sample of cancer patients: An urban and rural survey. BMC Cancer 2005; 5: 16–18.

- 25. File T. Computer and internet use in the United States. Current Population Survey Reports, P20-568. US Census Bureau, Washington, DC. 2013.

- 26. Hassol A, Walker JM, Kidder D, et al. Patient experiences and attitudes about access to a patient electronic health care record and linked web messaging. J Am Med Informatics Assoc 2004; 11: 505–513.

- 27. Zhou YY, Kanter MH, Wang JJ, et al. Improved quality at Kaiser Permanente through e-mail between physicians and patients. Health Aff 2010; 29: 1370–1375.

- 28. Chen L, Goodson P. Web-based survey of US health educators: Challenges and lessons. Am J Health Behav 2010; 34: 3–11.

- 29. Vitale M, Vitale M, Lehmann C. Towards a national pediatric musculoskeletal trauma outcomes registry. J Pediatr Orthop 2006; 26: 151–156.

- 30. Deutskens E, De Ruyter K, Wetzels M and Oosterveld P. Response rate and response quality of internet-based surveys: An experimental study. Marketing Letters 2004; 15: 21–36.

- 31. Galesic M, Bosnjak M. Effects of questionnaire length on participation and indicators of response quality in a web survey. Public Opin Q 2009; 73: 349–360.

- 32. Verhagen AP, de Vet HC, de Bie RA, et al. The Delphi list: a criteria list for quality assessment of randomized clinical trials for conducting systematic reviews developed by Delphi consensus. J Clin Epidemiol 1998; 51: 1235–1241.