Abstract

In spite of substantial spending and resource utilization, today's health care remains characterized by poor outcomes, largely due to overuse (overtesting/overtreatment) or underuse (undertesting/undertreatment) of health services. To a significant extent, this is a consequence of low‐quality decision making that appears to violate various rationality criteria. Such suboptimal decision making is considered a leading cause of death and is responsible for more than 80% of health expenses. In this paper, we address the issue of overuse or underuse of health care interventions from the perspective of rational choice theory. We show that what is considered rational under one decision theory may not be considered rational under a different theory. We posit that the questions and concerns regarding both underuse and overuse have to be addressed within a specific theoretical framework. The applicable rationality criterion, and thus the “appropriateness” of health care delivery choices, depends on theory selection that is appropriate to specific clinical situations. We provide a number of illustrations showing how the choice of theoretical framework influences both our policy and individual decision making. We also highlight the practical implications of our analysis for the current efforts to measure the quality of care and link such measurements to the financing of health care services.

Keywords: clinical decision making, health policy, overtreatment, overuse, practice, rationality, undertreatment, underuse

1. INTRODUCTION

It is no secret that today's health care system is in crisis1, 2: Societies devote a substantial amount of resources to health care, and yet patient outcomes remain poor. The United States alone spends nearly 18% ($3.2 trillion) of its gross domestic product on health care; however, only 55% of needed services are delivered, and more than 30% of care is inappropriate and, therefore, wasteful.3 Ultimately, the observed (suboptimal) care reflects the quality of medical decisions.3 Indeed, it has been contended that personal decisions are the leading cause of death4 and that physicians' decisions are responsible for 80% of health care expenditures.5, 6 If decision making can largely explain the relatively poor state of current health care utilization, the logical question is: Are the decisions made during doctor‐patient encounters, in fact, rational? In a recent paper, we reviewed existing theories of rationality and their implications for medical practice.7 We found that no single model of rationality fits all medical contexts; what is considered “rational behaviour” under one theory of rationality may be considered “irrational” under another.7 We call this “normative pluralism,” which, as explained in detail below, calls for matching a given clinical situation or problem with an appropriate theory of rationality.

In this paper, we extend this analysis of rational decision making in clinical medicine to demonstrate the practical importance of this debate for the question of overuse (overtesting/overtreatment) and underuse (undertesting/undertreatment). We show that theory choice determines the “rational” course of action, both at the level of individual and policy decision making.

2. BRIEF OVERVIEW OF PRINCIPLES AND THEORIES OF RATIONALITY

Rationality is commonly defined as decision making that helps us achieve our goals.8, 9 In clinical medicine, the goal is typically to improve health. Rationality does not guarantee that a decision is error free; rather, rational decision making accounts for the consequences of possible errors in our actions (false negatives and false positives) to help us arrive at optimal outcomes. Theories of rationality are broadly classified as descriptive (depicting how people actually make their decisions) or normative (addressing how people “should” or “ought to” make their decisions). In between are prescriptive theories, which prescribe courses of action expected to be effective given what is known about human cognitive processes and cognitive architecture.10 Table 1 summarizes some of the most common theories of rationality relevant to clinical medicine, and Table 2 lists the core ingredients common to most theoretical constructs of rationality.7 We next illustrate the overuse and underuse of health care that can be observed under each of these theories. Overuse refers to “too much care” and is defined as “provision of a service that is unlikely to increase the quality or quantity of life, that poses more harm than benefit, or that patients who were fully informed of its potential benefits and harms would not have wanted”; underuse refers to “too little care,” defined as “failure to deliver a service that is highly likely to improve the quality or quantity of life, that represents good value for the money, and that patients who were fully informed of its potential benefits and harms would have wanted.”51 Thus, both overuse and underuse are defined relative to the goals of the decision maker, in this case a fully informed patient and his or her physician.

Table 1.

A list of major theories and models of rationality relevant to medical decision making7

| Normative theories of rationality |

|---|

| Evidence‐based medicine approach to rational decision making 11, 12: A normative theory, which posits that there is a link between rationality and believing what is true. (Our actions and beliefs are justifiable [or reasonable/rational] as a function of the trustworthiness of the evidence, and the extent to which we believe that evidence is determined by credible processes.) See also Epistemic rationality |

| Example: Clinical practice guidelines panels more readily recommend health interventions if the quality of evidence supporting such a recommendation is high.13 |

| Epistemic rationality: The rationality based on acquisition of true/fit‐for‐purpose knowledge. Linked to new mind rationality14 (see also Grounded rationality). |

| Example: Evidence‐based medicine approach to decision making. |

| Expected utility theory (EUT)—decision analysis/Bayesian rationality 15: The type of rationality associated with conformity to a normative standard such as the probability calculus or classical logic. In medicine, the most dominant normative theory is EUT, which is based on mathematical axioms of rationality, according to which rational choice is associated with selection of the alternative with higher expected utility (expected utility is the average of all possible results weighted by their corresponding probabilities). It is typically based on Bayesian probability calculus. |

| Example: Decision analyses such as EUT‐based microsimulation model to develop screening recommendations for colorectal cancer.16 |

| Descriptive theories of rationality |

| Pragmatic/instrumental rationality/rationality 1 8 or substantive rationality 17, 18: A descriptive theory, which proposes that rationality depends on the content and not only on the structure of decisions (process), and that the content should be assessed in light of short‐ and long‐term goals (purpose). Fits with the descriptivist approach,19 which argues that empirical evidence cannot support the “oughtness” of a model. |

| Adaptive or ecological rationality 20, 21: A variant of bounded rationality, which stipulates that human decision making depends on the context and environmental cues; hence, rational behaviour/decision making requires adaptation to environment/patient circumstances. Sometimes referred to as “Panglossian,”9, 22 the position that humans should be considered to be a priori rational due to optimal evolutionary processes. |

| Example: Extrapolation of research evidence to specific patient circumstances including social context and co‐morbidities dominates medical practice. |

| Argumentative Theory of Reasoning 23, 24 proposes that reason and rational thinking has evolutionary evolved with primary social function to justify one's self and convince others to believe one and gain their trust. |

| Example: Doctors invoke evidence‐based knowledge out of sense that it would be approved by the medical community and, in doing so, preserve their reputation and improve the health of their patients. |

| Bounded rationality 25, 26: Posits that, reflective of the principle that rationality should respect epistemological, environmental, and computational constraints of human brains, rational behaviour relies on satisficing process (finding a good enough solution) instead of EUT maximizing approach. The heuristic approach to decision making is the mechanism of implementation of bounded rationality.27 Often linked to prescriptive models of rationality28 designs for improvement of human rationality informed by cognitive architecture. |

| Example: Simple fast‐and‐frugal tree using readily available clinical cues outperformed 50 variables multivariable logistic model regarding decision whether to admit the patient with chest pain to coronary care unit.21 |

| Deontic introduction theory 29: A descriptive theory of inference from “is” to “ought”, which implies that rationality requires integration of the evidence related to the problem at hand (“is”) with the goals and values to decisions and actions (“ought”), while taking context into account. See also Grounded rationality. |

| Example: Evidence (“is”) shows that if prostate cancer patients receive detailed information about hormone therapy, their decision making style improves; policymakers infer that patients should receive detailed information.30 |

| Dual processing theories of rational thought (DPTRT) 9: A family of theories based on the architecture of human cognition, contrasting intuitive (“type 1”) processes with effortful (“type 2”) processes. A descriptive variant of this approach is that the rational action should be coherent with formal principles of rationality as well as human intuitions about good decisions. The normative/prescriptive variant of this theory is sometimes referred to as “Meliorism,”9, 22 the position that humans are often irrational but can be educated to be rational. According to Meliorist principles, when the goals of the genes clash with the goals of the individual (see below), the rational course of action should be dictated by the latter. |

| Example: Physicians often adjust their recommendations based on their intuition.31 |

| DPTRT can be thought of as a combination/contrast of |

| Old mind/evolutionary rationality/rationality of the genes 14, 32: The rationality linked to evolutionarily instilled goals (sex, hunger, etc). Past oriented and relying on type 1 mechanisms, it is driven by the evolutionary past and by experiential learning |

| Example: Eating chocolates when one has to reduce weight. |

| New mind/individual rationality 14, 32: The rationality linked to the goals of the individual rather than those of the genes. It is future oriented and relies on type 2 mechanisms, most importantly the ability to run mental simulations of future events and hypothetical situations. This is what enables humans to think consequentially and solve novel problems |

| Example: Use of contraceptives. The genes' goal is to self‐replicate, ie, to produce more copies of themselves. Contraceptives negate this goal while allowing humans greater individual freedom. |

| Grounded rationality 33: A descriptive theory, which postulates that rationality should be judged within epistemic context (ie, what is known to a decision maker and his or her goals), and that rational course of action is the one that facilitates the achievement of our goals given the context. See also Pragmatic rationality. |

| Example: To achieve health goals, physicians typically recommend treatment with which they are familiar/know about. |

| Meta‐rationality 34 or the master rationality motive 35: Relies on DPTRT and posits that rationality represents hierarchical goal integration while taking into account both emotions and reasons. It also refers to integration of so‐called Thin theories of rationality: theories in which the goals, context, and desires of behaviour are not evaluated (as per, for example, applying EUT without taking patient's desires into account)—that is, any goal is as good as any other goal—with Broad theories of rationality: theories of rationality in which the goals and desires of the decision‐maker are evaluated within context and in such a way as to achieve hierarchical coherence within goals.32, 36 |

| Example: Meta‐rationality model of rationality subsumes other variants of DPTRT. The approach based on meta‐rationality is often characteristic of a “wise” physician; the approach is particularly evident in high‐stake, high‐emotional decisions such as end‐of‐life where the substantive goals about achievable health status have to be reconciled with patient/physician emotional reaction to a proposed decision |

| Example: Pragmatic rationality dominates clinical decision making particularly in the fields such as oncology, where desirable health goals (eg, cure) may not be possible; as a result, the re‐evaluation of both goals and decision procedures may be needed (eg, switch from aggressive treatment to palliative care in advanced incurable cancers) |

| Regret regulation‐rationality is characterized by regulation of regret 37: This is a variant of DPTRT that relies on regret, which as a cognitive emotion uses counterfactual reasoning processes to tap into the analytical aspect of our cognitive architecture as well as into affect‐based decision making. According to this view, medical rational decision making is associated with regret‐averse decision processes. |

| Example: Contemporary medical practice has increasingly adopted the practice that patients' values and preferences should be consulted before a given health intervention is given. Patient values and preferences heavily depend on emotions such as regret, which, if properly elicited, may improve vigilance in decision making. 38, 39, 40 |

| Robust satisficing 17, 18: A variant of regret‐based DPTRT, according to which the rational course of action is to “maximize confidence in a good enough outcome even if the things go poorly” (instead of maximizing EUT); the concept is similar to “acceptable regret”41, 42 hypothesis of rational decision making, which postulates that we can rationally accept some losses without feeling regret. |

| Example: Annual screening mammography over 10 years in women older than 50 will prevent one death per 1000 from breast cancer but at cost of 50‐200 unnecessary false alarms and 2‐10 unnecessary breast removals.43 When it comes to decisions like these, which are value‐ and emotionally driven decisions, there are no right or wrong answers. Some women will accept harms for a small chance of avoiding death from breast cancer. Others may not.44 |

| Threshold model of rational action proposes that the most rational decision is to prescribe treatment or order a diagnostic test when the expected treatment benefit outweighs its expected harms at a given probability of disease or clinical outcome.45 It has been formulated within EUT,46, 47 dual processing theories,48 and regret framework.41, 42, 45, 49 |

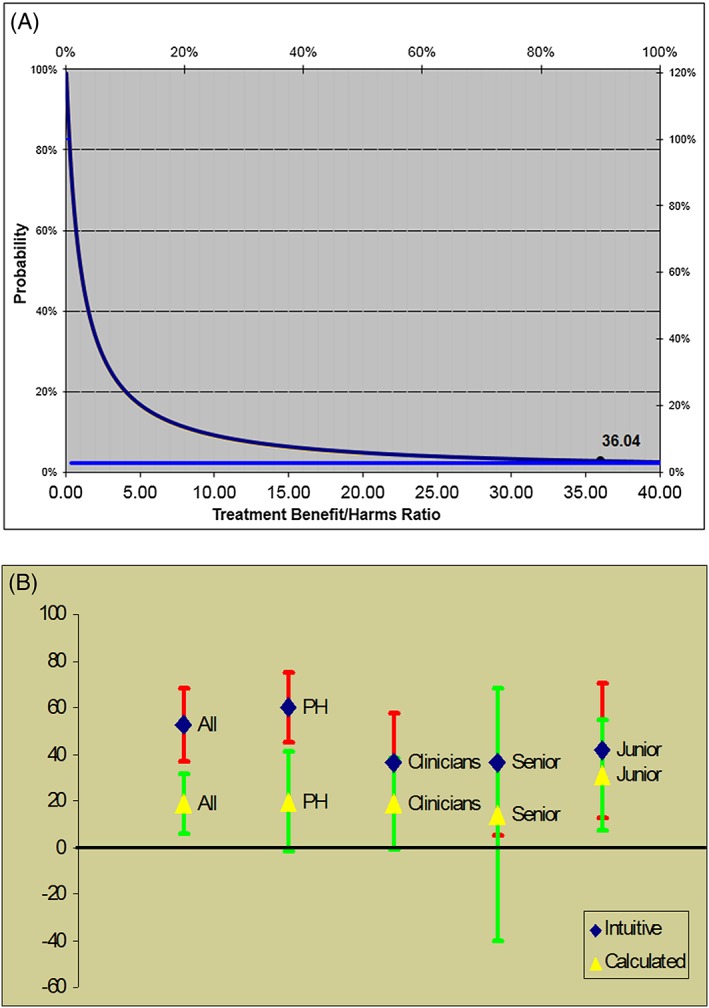

| Example: See text and Figure 1. |

Table 2.

Core ingredients (“principles”) of rationality commonly identified across theoretical models7

| Principle |
|---|
| P1: Most major theories of choice agree that rational decision making requires the integration of benefits (gains) and harms (losses) to fulfil our goals (eg, better health). |
| P2: Rational decision making typically occurs under conditions of uncertainty; a rational approach therefore requires reliable evidence to deal with the inherent uncertainties and relies on cognitive processes that allow the integration of probabilities/uncertainties. |
| P3: Rational thinking should be informed by human cognitive architecture, which is composed of type 1 reasoning processes, characteristic of the “old mind” (affect‐based, intuitive, fast, and resource‐frugal), and type 2 processes of the “new mind” (analytic and deliberative, consequentially driven, and effortful). |
| P4: Rationality depends on the context and should respect epistemological, environmental, and computational constraints on human brains. |
| P5: Rationality (in medicine) is closely linked to the ethics and morality of our actions; it requires consideration of utilitarian (society‐oriented), duty‐bound (individual‐oriented), and rights‐based (autonomy, “no decision about me, without me”) ethics. |

3. OVERUSE AND UNDERUSE UNDER NORMATIVE THEORIES OF DECISION MAKING

3.1. Evidence‐based medicine

Evidence‐based medicine (EBM) represents the dominant mode of clinical practice today. EBM rationality rests on the link between taking action and believing what is true.11, 12, 52 That is, our actions and beliefs are justifiable (or reasonable/rational) as a function of the trustworthiness of the evidence (evidentialism), and the extent to which we believe that evidence is determined by credible processes (reliabilism).11, 12, 52 EBM posits that when evidence is of higher quality (ie, closer to the “truth”), our estimates of benefits and harms are better calibrated.12 Under the premise that “rational people respect their evidence,”11 EBM postulates that recommending tests or treatments supported by high‐quality evidence is the most rational course of action. Indeed, there is some evidence that guidelines panels are much more likely to issue strong recommendations (for or against interventions) when the quality of the evidence is higher.53, 54 Thus, it appears that practitioners of EBM generally behave rationally. On the other hand, this EBM principle is not consistently followed: A study evaluating 456 recommendations made by 116 World Health Organization (WHO) guidelines panels found that about 55% of strong recommendations were based on low‐quality or very low‐quality evidence.55 People, including experts, are generally not skilled at distinguishing strong from weak evidence, an effect known as “meta‐cognitive myopia.”56 In most cases, following these recommendations would result in overuse, ie, an irrational policy according to the EBM standard of rationality. However, a number of justifications could explain the WHO panel recommendations, and these may make them rational under other theories of rationality (see Section 4.1, “Interactionist or Argumentative Theory of Reasoning and Rationality”).

An additional challenge for the EBM theory of rationality is that only about 20% of recommendations are based on consistent, high‐quality evidence.57, 58 In many cases, perhaps most, recommendations cannot be made because evidence is absent. “Absence of evidence is, however, not evidence of absence”59: A lack of high‐quality evidence does not mean that an intervention is ineffective. This creates situations ripe for both underuse and overuse. Overuse occurs when clinicians rely on their uncontrolled experience or “best judgment” in the absence of empirical data. Most often, however, major government and professional organizations are reluctant to recommend interventions for which there is no reliable evidence of benefit. Thus, rational behaviour according to EBM may lead primarily to underuse, ie, denying health interventions to those who may need them.

3.2. Expected utility‐based decision analysis

Decision analysis is the second most commonly used normative theory in clinical medicine. It is typically used in cost‐effectiveness analyses and to guide the development of practice guidelines.16, 60 With respect to rationality, decision analysis is based on expected utility theory (EUT). According to EUT, when faced with several possible courses of action, the rational decision is to select the alternative with the highest expected utility, for instance, the one yielding the most quality‐adjusted life years. Note that EUT is the only known theory of choice that satisfies all the mathematical axioms of rational decision making.7
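A minimal Python sketch of the EUT decision rule described above: each alternative is a set of outcomes with a probability and a utility, its expected utility is the probability‐weighted average of those utilities, and the rational choice under EUT is the alternative with the largest value. The names `Outcome`, `expected_utility`, and `eut_choice`, and all the numbers, are hypothetical illustrations, not values from any cited study.

```python
from dataclasses import dataclass


@dataclass
class Outcome:
    probability: float  # chance that this outcome occurs under the chosen action
    utility: float      # value of the outcome, eg, quality-adjusted life years (hypothetical)


def expected_utility(outcomes: list[Outcome]) -> float:
    """Probability-weighted average of the utilities of all possible outcomes."""
    total_p = sum(o.probability for o in outcomes)
    if abs(total_p - 1.0) > 1e-9:
        raise ValueError(f"probabilities must sum to 1, got {total_p}")
    return sum(o.probability * o.utility for o in outcomes)


def eut_choice(alternatives: dict[str, list[Outcome]]) -> str:
    """EUT decision rule: pick the alternative with the highest expected utility."""
    return max(alternatives, key=lambda name: expected_utility(alternatives[name]))


# Hypothetical numbers for illustration only; not taken from any study.
alternatives = {
    "treat": [Outcome(0.90, 9.0), Outcome(0.10, 4.0)],
    "no_treatment": [Outcome(0.70, 10.0), Outcome(0.30, 2.0)],
}
for name, outcomes in alternatives.items():
    print(name, round(expected_utility(outcomes), 2))
print("EUT choice:", eut_choice(alternatives))
```

Under these assumed inputs, treatment (expected utility 8.5) is preferred to no treatment (7.6). Changing any probability or utility can reverse the choice, which is why the quality of the inputs matters as much as the decision rule itself.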

One of the major advances in the field of decision making was the development of the so‐called threshold model.45, 46, 47 The threshold embodies a critical link between evidence (which exists on a continuum of credibility) and decision making (which is a categorical exercise: we decide either to act or not to act).45 The threshold model stipulates that the most rational decision in medicine is to initiate an intervention when its expected benefits outweigh its expected harms at a given probability of disease or clinical outcome (Figure 1A).46, 47 Figure 1A illustrates that, as the therapeutic benefit/harm ratio increases, the threshold probability at which treatment should be given decreases.46, 47 Conversely, if a treatment's benefit/harm ratio is smaller, the threshold probability for therapeutic action will be higher.46, 47 For example, Basinga and colleagues estimated that the benefit/harm ratio of administering antituberculosis therapy to a patient with suspected tuberculosis (TB) is about 36 in terms of morbidity/mortality outcomes.50 This converts into a low threshold probability of about 2.7% (1/[1 + 36]).50 Thus, according to EUT, rational physicians should prescribe drugs against TB when the probability of TB exceeds 2.7%.50 At probabilities this low, most patients treated for suspected TB will not actually have TB. As a result, acting according to EUT, the normative theory widely accepted as the gold standard in medicine, will predictably lead to further increases in the use of diagnostic and treatment interventions (and in resource waste)!45, 49, 61 This can hardly be considered a rational course of action. Note that because most drugs pass through the scrutiny of regulatory agencies such as the US Food and Drug Administration (FDA), they are approved for use in practice only if their benefits outweigh their harms; similarly, most diagnostic tests are perceived as harmless. This means that overtesting and overtreatment are built into the EUT model.
Underuse is also possible, but it usually results from poorly calibrated prediction models that (mis)estimate the probability of a disease/outcome to be below the threshold when it is actually above it. This is an epistemic (ie, knowledge‐related) issue caused by poor predictive evidence; it should be distinguished from the effects of emotions on estimates of probability and on the consequences of decisions. As explained below, acting according to regret theory or a dual processing theory of rationality can modify the action threshold in a way that appears more rational to the decision maker.
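The threshold model can be sketched in a few lines of Python. Assuming the classic EUT formulation, in which the treatment threshold equals H/(B + H) = 1/(1 + B/H), a benefit/harm ratio of 36 reproduces the 2.7% threshold for antituberculosis therapy cited above. The second part is a hypothetical illustration of the underuse mechanism: a poorly calibrated model that underestimates the probability of disease pushes the estimate below the threshold. The function names and both probabilities are assumptions made for illustration.

```python
def treatment_threshold(benefit_harm_ratio: float) -> float:
    """EUT threshold: treat when P(disease) > H / (B + H) = 1 / (1 + B/H)."""
    if benefit_harm_ratio <= 0:
        raise ValueError("benefit/harm ratio must be positive")
    return 1.0 / (1.0 + benefit_harm_ratio)


def should_treat(p_disease: float, benefit_harm_ratio: float) -> bool:
    """Act only when the probability of disease exceeds the threshold."""
    return p_disease > treatment_threshold(benefit_harm_ratio)


# Benefit/harm ratio of about 36 for antituberculosis therapy, as cited in the text.
tb_threshold = treatment_threshold(36)
print(f"TB treatment threshold: {tb_threshold:.1%}")

# Hypothetical underuse scenario: the model's estimate falls below the threshold
# although the (assumed) true probability lies above it.
true_p = 0.05       # assumed true probability of TB for this patient
estimated_p = 0.02  # assumed underestimate from a poorly calibrated model
print("Decision with true probability:", should_treat(true_p, 36))
print("Decision with miscalibrated estimate:", should_treat(estimated_p, 36))
```

The same function shows the inverse relationship plotted in Figure 1A: `treatment_threshold(10)` (about 9.1%) is lower than `treatment_threshold(2)` (about 33.3%).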

Figure 1.

Threshold model of decision making. A, The model states that the most rational decision is to prescribe treatment when the expected treatment benefit outweighs its expected harms at a given probability of disease or clinical outcome. The horizontal line indicates the probability above which physicians should treat a patient with suspected tuberculosis (2.7%). B, Actual threshold for treating a patient suspected of having tuberculosis (based on Basinga et al50; graph courtesy of Dr Jef Van den Ende). See Table 1 and text for details.

4. OVERUSE AND UNDERUSE UNDER DESCRIPTIVE THEORIES OF DECISION MAKING

4.1. Interactionist or Argumentative Theory of Reasoning and Rationality

The Interactionist or Argumentative Theory of Reasoning (ATR) proposes that people make decisions because they can find reasons to support them. People do not necessarily favour the “best” decisions or decisions that satisfy some criterion of rationality, but rather decisions that are the most (socially) acceptable, ie, those that can be most easily justified to oneself and others and are least at risk of being criticized.23, 24 The theory stipulates that reason and rational thinking have evolved with a primarily social function: to justify oneself, to convince others, and to gain their trust (Table 1).23, 24 From the ATR perspective, it is easy to explain why conflicts of interest, defined to exist “when professional judgment concerning a primary interest (such as patients' welfare or the validity of research) may be influenced by a secondary interest (such as financial gain or desire to avoid a lawsuit),”62 are pervasive in medicine and difficult to eradicate. From the perspective of those to whom conflicts of interest apply, such behaviour may be quite rational (if not necessarily moral).

Similarly, when explaining why their strong recommendations were based on low‐quality or very low‐quality evidence, WHO panel members55 (see Section 3.1) gave a number of justifications.63 A typical reason for recommending treatment was “avoiding underuse,” based on a conviction that the treatment is beneficial even though the panel had explicitly rated the quality of the evidence as low or very low.63 Such a reason directly contradicts EBM principles of rationality.7, 64 Another frequently given reason was concern that policymakers responsible for funding decisions would ignore recommendations that are insufficiently “strong.” In addition, WHO panellists sometimes felt wedded to long‐established practices and uncomfortable issuing anything but strong recommendations regarding them.63 Importantly, as predicted by ATR, the reasons given by panel members were not meant to satisfy a specific criterion of rationality but to convince other panel members to “vote” the same way. Whether a particular recommendation would lead to overuse or underuse was rarely invoked explicitly.

4.2. Emotions and regret theory of rationality

People's decision making often relies on emotions and intuition. Our feelings influence the way we perceive and process risks; this is known as the “risk as feelings” phenomenon.65 Emotions change the way in which the probabilities and consequences (utilities) of our actions are evaluated. Affect‐rich situations may lead to probability neglect, in which people are sensitive only to the presence or absence of stimuli and recognize outcomes only as being possible or not,66 whereas in affect‐poor contexts, probabilities tend to be evaluated without such distortions.67, 68 The “risk as feelings” phenomenon can influence the way physicians make their decisions. For example, Hemmerich et al69 studied physicians who had experienced negative emotions, such as having a patient die during “watchful waiting” for a small abdominal aortic aneurysm. They found that such physicians' management of subsequent patients was significantly affected, to the point that they would accelerate the timing of surgery even if this contradicted normative EBM guidelines. These physicians appear to aim to minimize their feelings of regret when managing the next patient.69

Regret is a cognitive emotion that we are motivated to regulate to achieve our desired goals; many of our decisions are driven by the desire to avoid regret and minimize (perceived) risks.38, 39 It has been argued that rational decision making is associated with regret‐averse decision processes,17, 18, 34 particularly if the beneficial aspects of regret regulation, such as learning and explicitly considering the consequences of decisions, are decoupled from the deleterious ones (eg, self‐blame and self‐reproach).70, 71 Importantly, unlike normative models such as those based on decision analysis, regret takes context into account. When regret was taken into account, the threshold for treating a patient suspected of having TB increased dramatically, from 2.7% to 20‐60% (see Figure 1B).50 The threshold model can also be reformulated to account for regret.41, 42, 45, 72 This model predicts that the threshold will drop if a decision maker regrets failing to benefit more than he or she regrets causing unnecessary harm. This can lead to more false‐positive decisions,73 resulting in overtreatment and overtesting, as one would expect in the management of an individual patient. Conversely, if the regret of causing harm is felt more strongly than the regret of failing to benefit, the threshold will increase.41, 42, 45, 72 Under these circumstances, fewer false‐positive but more false‐negative decisions would be made, resulting in more undertreatment and underdiagnosis.73
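A simplified sketch of how regret can move the action threshold, assuming that the decision maker treats when the expected regret of not treating (p × regret of omission) exceeds the expected regret of treating ((1 − p) × regret of commission). This gives a threshold of Rc/(Rc + Ro); when regret is simply proportional to B and H, it reduces to the EUT threshold. The weights below are hypothetical and are not the values elicited by Basinga et al; the published regret‐based threshold models41, 42, 45, 72 are more elaborate than this sketch.

```python
def regret_threshold(regret_omission: float, regret_commission: float) -> float:
    """Probability above which treating minimizes expected regret.

    Expected regret of treating     = (1 - p) * regret_commission  (treated, no disease)
    Expected regret of not treating = p * regret_omission          (untreated, disease)
    Treating has the lower expected regret when p > Rc / (Rc + Ro).
    """
    if regret_omission <= 0 or regret_commission <= 0:
        raise ValueError("regrets must be positive")
    return regret_commission / (regret_commission + regret_omission)


BENEFIT, HARM = 36.0, 1.0  # benefit/harm ratio of 36, as in the TB example

# Hypothetical weights scaling how strongly each type of error is regretted.
scenarios = [
    ("regret proportional to B and H", 1.0, 1.0),
    ("missed benefit regretted more", 3.0, 1.0),
    ("unnecessary harm regretted more", 1.0, 10.0),
]
for label, w_benefit, w_harm in scenarios:
    t = regret_threshold(w_benefit * BENEFIT, w_harm * HARM)
    print(f"{label}: threshold = {t:.1%}")
```

With these assumed weights, the threshold falls to about 0.9% when missed benefit is regretted more and rises to about 21.7% when unnecessary harm is regretted more, matching the direction of the shifts described in the text.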

4.3. Dual process theories of cognition and rationality

Principle no. 3 states that rational thinking should be informed by human cognitive architecture (Table 2). According to dual process theories, human cognition can be thought of as a function of 2 types of processes: type 1 processes, characteristic of the “old mind” (affect‐based, intuitive, fast, and resource‐frugal), and type 2 processes of the “new mind” (analytic, deliberative, consequential, and effortful).9, 14, 74 In the setting of a dual‐processing architecture, it is important to realize that regret, as a cognitive emotion, is characterized by counterfactual reasoning: It operates by imagining “what if” scenarios; we feel regret when we compare the actual outcome to what might have happened but did not.38, 39 In this respect, regret serves as a link between intuitive and effortful processes, providing a mechanism for a dual‐process model of rationality.7, 75 The threshold model, which links 2 key features of clinical medicine, evidence and decision making, has also been formulated within the framework of dual‐processing theories.48 An empirical study comparing the predictions of EUT, regret theory, and a dual‐processing threshold model showed that the model based on dual‐processing theory provided the best explanation of the observed results.76 This is likely because the model integrates regret, EUT, and a switch between 2 cognitive domains that can explain how a decision maker raises or lowers an action threshold as a function of interactions between type 1 and type 2 processes.48 For example, consistent with Hemmerich et al,69 the model postulates that a physician's threshold will go up if his or her recent experience was coloured by emotion when he or she sees the next patient facing a similar decision. The threshold will go down if no emotion (including regret) has affected the physician's perception of the benefits and harms of health interventions.48 Rationality, according to dual‐processing theories, needs to take into account both analytical and affect‐based reasoning.77 This might sound counter‐intuitive at first, because popular culture often contrasts emotionalism with rationality; however, without emotion, we have no goals, and without goals, there is no rationality.14 It is the regulation of emotions, particularly regret, that represents one of the key ingredients of rational behaviour.37 Thus, according to dual‐processing theories of decision making, incoherence between type 1 and type 2 processes can disrupt optimal decision making, resulting in overuse or underuse depending on how it shifts the action threshold.45, 49 As explained above, this may happen when research evidence on benefits and harms implies one course of action (eg, treat at a lower probability of disease), but context, emotion, or recent experience indicates a different one (eg, treat at a much higher probability of disease).48, 69

4.4. Theory of bounded rationality: Adaptive/ecological rationality

Medical encounters increasingly take place under time pressure and in the context of an ongoing information explosion.78 A typical clinical encounter lasts approximately 11 minutes, with less than 2 minutes available to search for reliable information and with interruptions occurring, on average, every 15 minutes.78 At the same time, more than 6 million articles are published in more than 20 000 biomedical journals every year,79 with MEDLINE alone containing over 22 million indexed citations from more than 5600 journals.80 In addition, 75 randomized clinical trials and 11 systematic reviews are published every day.81 This information explosion needs to be contrasted with the human brain's limited capacity for information processing, its memory limitations, and its relatively low storage capability.78 The theory of bounded rationality (the basis for principle no. 4, Table 2) posits that rationality depends on the context and should respect the epistemological, environmental, and computational constraints of human brains.7 Given the real‐life complexity of the health care system and the limitations of human information processing, rational behaviour relies on a satisficing process (ie, finding a good‐enough solution)7, 25, 78 instead of maximizing (ie, finding the best possible solution).
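The contrast between maximizing and satisficing can be made concrete with a short Python sketch. Maximizing evaluates every option; satisficing stops at the first option that meets an aspiration (good‐enough) level. The option names, scores, and aspiration level are hypothetical, and a list records how many options each strategy had to examine.

```python
from typing import Callable, Iterable, Optional, TypeVar

T = TypeVar("T")


def maximize(options: list[T], value: Callable[[T], float]) -> T:
    """Maximizing: evaluate every option and return the best one."""
    return max(options, key=value)


def satisfice(options: Iterable[T], value: Callable[[T], float],
              aspiration: float) -> Optional[T]:
    """Satisficing: return the first option whose value meets the aspiration level.

    Search stops as soon as a good-enough option is found, so later options
    are never evaluated.
    """
    for option in options:
        if value(option) >= aspiration:
            return option
    return None  # no option was good enough


# Hypothetical management options scored on an arbitrary 0-10 scale.
scores = {"option_a": 6.0, "option_b": 8.0, "option_c": 9.5, "option_d": 7.0}
evaluated: list[str] = []


def score(name: str) -> float:
    evaluated.append(name)  # record which options were examined
    return scores[name]


best = maximize(list(scores), score)
n_max = len(evaluated)
evaluated.clear()
good_enough = satisfice(list(scores), score, aspiration=7.5)
print(best, n_max)
print(good_enough, len(evaluated))
```

Here the satisficer accepts `option_b` after examining 2 options, whereas the maximizer examines all 4 to find `option_c`; the saving in search effort is what makes satisficing attractive under the time constraints described above.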

Satisficing is sometimes structured via heuristics, which are mechanisms for implementing bounded rationality.27 Heuristics are widely used in medical education in the form of popular “mental shortcuts,” “rules of thumb,” clinical pathways, and algorithms. A heuristic is defined as “a strategy that ignores part of the information, with the goal of making decisions more quickly, frugally, and/or accurately than more complex methods,”20 and heuristics may sometimes outperform complex statistical models, a phenomenon known as “less‐is‐more.”21
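One well‐studied heuristic from the literature cited here is "take‐the‐best,"20, 21 which compares two options cue by cue, in order of cue validity, and decides on the first cue that discriminates, ignoring all remaining cues. It is offered only as an illustration of "ignoring part of the information"; the paragraph above does not single it out, and the cue names, ordering, and values below are hypothetical placeholders rather than validated clinical predictors.

```python
from typing import Dict, List, Optional, Tuple


def take_the_best(a: Dict[str, bool], b: Dict[str, bool],
                  cue_order: List[str]) -> Tuple[Optional[str], int]:
    """Infer which of two options scores higher on some criterion.

    Cues are checked in the given order (assumed: most valid first). The first cue on which
    the options differ decides; later cues are never looked up. Returns the chosen option
    ("a" or "b", or None if no cue discriminates) and the number of cues inspected.
    """
    inspected = 0
    for cue in cue_order:
        inspected += 1
        if a[cue] != b[cue]:
            return ("a" if a[cue] else "b"), inspected
    return None, inspected  # no cue discriminates: guess or fall back to another strategy


# Hypothetical question: which of two patients is at higher risk? Cue values are invented.
cue_order = ["cue_1", "cue_2", "cue_3"]
patient_a = {"cue_1": True, "cue_2": False, "cue_3": True}
patient_b = {"cue_1": True, "cue_2": True, "cue_3": False}

print(take_the_best(patient_a, patient_b, cue_order))  # ('b', 2): cue_3 is never consulted
```

Note that cue_3 would have favoured patient a, yet it is never examined: the heuristic deliberately trades completeness for speed and frugality.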

The principle behind satisficing is that there must be a point (threshold) at which obtaining more information or performing more computation becomes too costly or detrimental; heuristics help the decision maker stop searching before this threshold is crossed.21 In clinical medicine, satisficing is often implemented via fast‐and‐frugal trees: simple, highly effective decision trees composed of sequentially ordered cues (tests) and binary (yes/no) decisions, formulated as a series of if‐then statements.82 Fast‐and‐frugal trees can be linked to EUT and regret via the threshold model.82 A variant of satisficing known as “robust satisficing” has been proposed to regulate regret,17, 18, 37 a concept similar to “acceptable regret”: we can rationally accept some losses without feeling regret.41, 42 “Acceptable regret” has been shown to explain both underuse and overuse, understood as deviations from normative standards.41, 42 It explains why the “stubborn quest for diagnostic certainty,”83 that is, overtesting in the face of already sufficient evidence, which is widely considered one of the main drivers of rising health care costs, may not be irrational.41, 42, 61, 84 For example, in an end‐of‐life setting, patients would accept a potentially wrong referral to hospice only if the estimated probability of death within 6 months exceeded 96%. That is, they would accept hospice care without regret only if they were virtually certain that death was imminent.84 This may explain why dying patients are consistently referred to hospice very late, typically less than 1 week before death.85
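A fast‐and‐frugal tree can be written as an ordered list of yes/no cues in which every cue except the last has exactly one exit and the last cue has two. The minimal sketch below assumes that structure; the cue names and actions are hypothetical placeholders, not a validated clinical tree.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Node:
    cue: str        # name of a binary (yes/no) cue, eg a test result
    exit_on: bool   # cue value that triggers an immediate exit at this node
    action: str     # decision taken when the exit is triggered


def classify(tree: List[Node], final_action: str, case: Dict[str, bool]) -> str:
    """Walk the cues in order and stop at the first exit that fires.

    If no exit fires, the case leaves through the last node's second exit (final_action).
    Cues after the exit are never examined, which is what makes the tree frugal.
    """
    for node in tree:
        if case[node.cue] == node.exit_on:
            return node.action
    return final_action


# Hypothetical tree:
# if A then treat; else if not B then do not treat; else if C then treat; else do not treat.
tree = [
    Node("cue_a", exit_on=True, action="treat"),
    Node("cue_b", exit_on=False, action="do not treat"),
    Node("cue_c", exit_on=True, action="treat"),
]

print(classify(tree, "do not treat", {"cue_a": True, "cue_b": False, "cue_c": False}))  # treat
print(classify(tree, "do not treat", {"cue_a": False, "cue_b": False, "cue_c": True}))  # do not treat
print(classify(tree, "do not treat", {"cue_a": False, "cue_b": True, "cue_c": True}))   # treat
```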

4.5. Deontic introduction rationality: linking the rationality of “is” with the rationality of “ought”

We have consistently described the essence of clinical practice as the integration of empirical evidence with the categorical (yes/no) actions that fundamentally define decision making. In a medical context, rationality requires integrating evidence related to the problem at hand (“is,” derived from our observations) with the goals and values of decisions and (potential) actions (“ought”) (Principle Nos. 1 and 2, Table 2). It is these rationally guided “ought” decisions that allow us to achieve our goals. We have referred to the threshold model of decision making as a model that links evidence and decision making.45 Recently, deontic introduction theory was developed29, 86 to describe the psychological mechanisms by which normative (a.k.a. “deontic”) rules for action are generated: by linking empirical evidence to values and by transferring value from goals to actions.29, 86 Interestingly, there seems to be an evolutionary basis both for the need for reliable evidence to help us function in our environment87, 88 and for the generation of normative “ought” or “should” rules (“Faced with the knowledge that there are hungry children in Somalia, we easily and naturally infer that we ought to donate to famine relief charities”).29 Physicians seem to generate deontic “ought” or “should” rules routinely. They first link evidence with outcomes to create causal explanations (“If you smoke, you will likely get lung cancer”). They then infer values from outcomes (“Lung cancer is an undesirable outcome”; therefore, “Smoking is bad”), which in turn results in value transference from goals to actions to create a normative conclusion (“You should not smoke”).
The action rules thus created reflect pragmatic rationality, which involves instrumental “oughts.” (Instrumental “oughts” should not be confused with evaluative “oughts,” which reflect overall value judgments such as moral judgments.)19 The former are typically accurate within a specific setting and consist of if‐then rules, whereas the latter aim at universally valid statements (even though they often cannot be separated from context). Thus, acting on normative conclusions frequently seen in oncology practice, such as “Given that the diagnosis of metastatic cancer is made, the patient ought to be treated with chemotherapy,” can be rational in one setting but irrational in another. This can result either in overtreatment with futile therapy, as is often observed in the end‐of‐life setting,89 or in undertreatment, as when treatment is inappropriately denied on the basis of an arbitrary (usually older) age or “excessively high” costs.
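The three‐step chain just described (evidence to causal outcome, outcome to value, value transferred back to the action) can be caricatured as a tiny rule‐based program. This is a hypothetical toy, not an implementation of deontic introduction theory29, 86. It only shows how the same causal belief yields opposite instrumental "oughts" when the outcome is valued differently, which is the sense in which such if‐then rules are bound to their setting. The names therapy_x and outcome_y are invented placeholders.

```python
from typing import Dict


def infer_ought(action: str,
                causal_rules: Dict[str, str],
                outcome_values: Dict[str, int]) -> str:
    """Derive an instrumental 'ought' for an action.

    Step 1 (is): look up the outcome the action is believed to cause.
    Step 2 (value): look up whether that outcome is desirable (+1) or undesirable (-1).
    Step 3 (transfer): pass the outcome's value back to the action as 'should' / 'should not'.
    """
    outcome = causal_rules[action]
    value = outcome_values[outcome]
    if value > 0:
        return f"You should {action}."
    if value < 0:
        return f"You should not {action}."
    return f"No 'ought' follows for: {action}."


# The smoking example from the text.
print(infer_ought("smoke", {"smoke": "lung cancer"}, {"lung cancer": -1}))  # You should not smoke.

# Hypothetical: one causal belief, two contexts that value the outcome differently.
rules = {"give therapy_x": "outcome_y"}
print(infer_ought("give therapy_x", rules, {"outcome_y": +1}))  # context A: You should give therapy_x.
print(infer_ought("give therapy_x", rules, {"outcome_y": -1}))  # context B: You should not give therapy_x.
```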

Deontic introduction theory emphasizes the crucial role of context in generating normative rules for guiding behaviour. Context defines the goals of the individual as well as their beliefs about how to achieve them. According to grounded rationality33 (Table 1), the rational course of action is the action aimed at achieving our goals given the epistemic context: the evidence and knowledge available to us at the time the decision is made. Such epistemic context is subject to cognitive variability, that is, to the individual and cultural characteristics of the decision maker. For example, decisions about opting for palliative care are sensitive to cultural and individual values.90 This again underlines our thesis that no single theory of rationality can fit all decision makers in all contexts. What is rational in one context may not be rational in a different context, and might not even be rational for another provider in a similar medical context but from a different culture.

5. DISCUSSION

Rationality revolves around finding the most effective procedures to achieve our goals.7 Because no single model fits all clinical circumstances,7 these goals may be pursued and achieved differently, but correctly, under different theories of rationality. Purely normative models can often be off the mark because they rely on mathematical abstractions, whereas prescriptive models of rationality10 aim to realize rational solutions by drawing on accumulated knowledge of human cognitive architecture and making recommendations accordingly. We propose that prescriptive rational models in clinical decision making should also use whichever model best fits a specific context. For example, the goals of health care can be operationally achieved by linking evidence with decisions via the threshold model.45 According to the threshold model, rational decision making consists of prescribing treatment or ordering a test when, for a given probability of disease or clinical outcome, the benefits of treatment exceed its harms.45 However, thresholds can vary as a function of contextual factors that play a role in some theories of decision making and not in others.45 As a result, what counts as rational or irrational action, and hence as overuse or underuse, is inextricably intertwined with the theoretical framework within which these decisions are considered. That is, behaviour that is rational under one theory may be irrational under another.
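For readers who want the threshold model spelled out, the standard expected‐utility derivation46, 47 is sketched below. The notation is assumed here rather than taken from the text: p is the probability of disease; U(T, D) is the utility of treating a patient who has the disease, with a bar denoting "not"; B is the net benefit of treatment in patients with the disease and H the net harm of treatment in patients without it.

```latex
\begin{aligned}
EU(\text{treat})    &= p\,U(T,D) + (1-p)\,U(T,\bar{D}) \\
EU(\text{no treat}) &= p\,U(\bar{T},D) + (1-p)\,U(\bar{T},\bar{D}) \\
B &= U(T,D) - U(\bar{T},D), \qquad H = U(\bar{T},\bar{D}) - U(T,\bar{D}) \\
EU(\text{treat}) - EU(\text{no treat}) &= pB - (1-p)H > 0
  \;\Longleftrightarrow\; p > p_t = \frac{H}{B+H} = \frac{1}{1 + B/H}
\end{aligned}
```

Anything that changes how B or H is perceived, such as regret, costs, or patient values, moves p_t, which is why the same probability of disease can warrant treatment under one framework and not under another.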

One of the fundamental challenges for medical decision making is that goals often conflict, so that rational attempts to achieve one goal may prevent the achievement of another. According to Stanovich,34 rationality means achieving coherence among goals, and we need to rely on both normative and descriptive procedures to integrate goals coherently. This is known as meta‐rationality:34 reflectively asking about the appropriateness of our emotional reactions to a decision. “The trick may be to value formal principles of rationality, but not to take them too seriously.”34

However, coherent integration of goals may sometimes be impossible. For example, we may have a rational goal of extending a patient's life, but the resources required may exceed what is affordable. That is, decision makers often face a trade‐off between the goals and interests of individuals and those of the wider society, pitting duty‐bound, deontological decisions against utilitarian ethics. These goals are often expressed in terms of “value,” defined as the ratio of clinical benefit to cost. Formally, the most common metric for gauging the “right” value in health care is the incremental cost‐effectiveness ratio (ICER) between competing health interventions, ICER = (cost1 − cost2)/(effectiveness1 − effectiveness2). Effectiveness is typically expressed as quality‐adjusted life years (QALYs) gained. What is acceptable according to the ICER depends on the particular society, which may decide not to offer a particular treatment or diagnostic test if the societally agreed‐upon ICER threshold is exceeded.
In the United States, for example, the generally acceptable ICER threshold is between $50 000 and $200 000 per QALY.91 In contrast, the WHO considers an intervention to be cost‐effective if its cost per disability‐adjusted life year (DALY) averted is less than 3 times the country's annual gross domestic product per capita.92 Using a different definition and neglecting relevant context, such as the disease burden and the available budget, may result in a paradoxical, and seemingly irrational, allocation of a country's health budget, as demonstrated by Marseille et al in their analysis of the WHO ICER thresholds.92 Fundamentally, all these initiatives expose tensions between societal and individual interests.73 Interestingly, testing of the role of deontic introduction in moral inference found that the tendency to infer normative conclusions mostly coheres with utilitarian (rather than deontological) judgments,86 which may explain the growing outrage over ever‐increasing health care costs.93
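The ICER arithmetic, and its dependence on the threshold a society chooses, can be sketched as follows. The costs, QALY values, and DALY/GDP figures are invented; the two willingness‐to‐pay values simply bracket the US range quoted above, and the WHO check encodes the "less than 3 times GDP per capita" rule as stated. Handling only the case in which the new option is more effective is a simplifying assumption of this sketch.

```python
def icer(cost_new: float, cost_old: float, effect_new: float, effect_old: float) -> float:
    """Incremental cost-effectiveness ratio: extra cost per extra unit of effect (eg per QALY)."""
    d_effect = effect_new - effect_old
    if d_effect == 0:
        raise ValueError("ICER is undefined when effectiveness does not differ")
    return (cost_new - cost_old) / d_effect


def adopt_new(cost_new: float, cost_old: float, qaly_new: float, qaly_old: float,
              wtp_per_qaly: float) -> bool:
    """Adopt the new option if it is dominant or its ICER does not exceed the willingness to pay.

    Simplification: only a new option that yields more QALYs is handled;
    dominated or equally effective options need separate treatment.
    """
    if qaly_new <= qaly_old:
        raise ValueError("sketch assumes the new option yields more QALYs")
    if cost_new <= cost_old:
        return True  # no more costly and more effective: dominant
    return icer(cost_new, cost_old, qaly_new, qaly_old) <= wtp_per_qaly


def who_cost_effective(cost_per_daly_averted: float, gdp_per_capita: float) -> bool:
    """WHO rule as described in the text: cost per DALY averted below 3x GDP per capita."""
    return cost_per_daly_averted < 3 * gdp_per_capita


# Invented example: the new strategy costs $80 000 more and adds 1 QALY.
print(icer(120_000, 40_000, 3.0, 2.0))                  # 80000.0
print(adopt_new(120_000, 40_000, 3.0, 2.0, 50_000))     # False at $50 000 per QALY
print(adopt_new(120_000, 40_000, 3.0, 2.0, 200_000))    # True at $200 000 per QALY
print(who_cost_effective(2_500, 1_000))                 # True: 2 500 < 3 000
```

The same intervention is rejected at one willingness‐to‐pay threshold and adopted at another; the arithmetic is fixed, but what counts as acceptable value is a societal choice.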

Another example of goal conflict, one that all too often leads to “overtreatment” with aggressive therapy rather than a more appropriate palliative approach such as hospice, arises in the end‐of‐life setting. The usual goal of medical decision making is to improve health, but what are appropriate goals at the end of life? A “good death” is not “better health” in the usual sense, yet it can be rationally accepted, most importantly at the level of intrinsic emotional peace. A theoretical approach to rational decision making must accommodate this goal. Here again, invoking regret may provide the mechanism that allows the peaceful exit many people desire. Consistent with Aristotle's “deathbed test” of no regret (a life lived with no unfulfilled potential weighing on our souls), eliciting regret was found to improve decision making at the end of life.40 Thus, rational decision making has to take into account both analytical and affect‐based reasoning.

A “unifying theory of rationality” is likely not possible, particularly because decision making is extremely context sensitive (and, as explained, normative theories typically fail to take context into account).7 We also believe that setting the context is a prerequisite for a rational approach to both the practical and the theoretical aspects of problem solving and decision making. How a given clinical problem should be approached is ultimately an empirical question. By calling for “normative pluralism” and pragmatic rationality, according to which the context and the clinical situation should be matched to a contextually appropriate theory of rationality, we believe that the current unsatisfactory situation in health care could improve dramatically. Although this position incorporates an element of relativism by acknowledging contextual dependence, it is a moderate type of relativism rather than a stronger “anything goes” version.94, 95

Our paper also has important practical implications. Physicians are increasingly paid according to the quality of care they deliver and penalized for overuse and underuse.96 Our analysis shows that these policy initiatives cannot be divorced from the theoretical framework of rationality within which quality improvement assessments operate. Financing of health care services, which is increasingly proposed to depend on measurements of the overuse and underuse of health services, should therefore be determined in light of the chosen theoretical framework.

We believe that what is largely missing from the current discussion of overuse and underuse of care is an attempt to define the theoretical framework(s) for measuring the appropriateness of care. Even though both overuse and underuse are widely acknowledged as empirical phenomena in modern health care,51, 97, 98, 99, 100 actually measuring overuse and underuse has proved difficult.97, 98

Two methodological approaches have dominated the measurement of overuse and underuse: (1) comparing the use of health care services against some predefined “truth” or “gold standard” and (2) detecting unexpectedly wide variations in the delivery of health care services.97, 98 The first approach relies on a “correspondence theory of truth,” which assumes that there is an “objective reality” and that “truth” consists in the correspondence of ideas, concepts, and theories with facts.101, 102 This typically takes the form of measuring outcomes against evidence‐based guidelines. However, as discussed above, evidence is often challenged, and finding an incontrovertible “truth” that is uniformly accepted is, in practice, extremely difficult, perhaps impossible. One possible solution, as argued above, is to seek correspondence with the goals of the decision maker (rather than with an “objective truth” outside the decision maker).
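A minimal sketch of the first (correspondence‐based) approach, assuming that each case has already been labelled by some reference standard as "indicated" or "not indicated" for a service. The records are invented, and defining the rates with these denominators is one common choice rather than the method of any cited study. Everything hinges on the labels: change the standard and the same practice yields different overuse and underuse rates.

```python
from typing import Iterable, Tuple


def overuse_underuse(records: Iterable[Tuple[bool, bool]]) -> Tuple[float, float]:
    """Compare delivered care with a reference standard.

    Each record is (indicated_by_standard, delivered).
    Overuse rate  = delivered / cases in which the standard says the service is NOT indicated.
    Underuse rate = not delivered / cases in which the standard says the service IS indicated.
    """
    indicated = missed = not_indicated = unneeded = 0
    for is_indicated, delivered in records:
        if is_indicated:
            indicated += 1
            if not delivered:
                missed += 1
        else:
            not_indicated += 1
            if delivered:
                unneeded += 1
    overuse = unneeded / not_indicated if not_indicated else 0.0
    underuse = missed / indicated if indicated else 0.0
    return overuse, underuse


# Invented records: 4 indicated cases (1 missed) and 5 non-indicated cases (2 treated anyway).
records = [
    (True, True), (True, False), (True, True), (True, True),
    (False, True), (False, True), (False, False), (False, False), (False, False),
]
print(overuse_underuse(records))  # (0.4, 0.25)
```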

The second approach, which measures overuse and underuse by assessing (surprisingly wide) variations in care, relies on the “coherence theory of truth,” according to which statements or judgments are “true” if they cohere with other judgments or statements.101, 102 Thus, it is assumed that similar patients in similar conditions and similar settings should be managed similarly, with minimal variation. This approach is typically based on the analysis of practice patterns in large data sets33, 90 and fundamentally disregards individual patients' circumstances, values, and preferences. For example, use of more medicalized terminology in discussions with patients often leads to more aggressive treatment and overuse.103 In this sense, coherence is often used to define “rationality”: given the premises, certain conclusions must necessarily be drawn if a rational reasoning process is followed. However, deductive validity guarantees true conclusions only when the premises are true, which is not always the case. If the premises are false, the conclusion of even a valid argument may be false. In contrast, correspondence is concerned with the accuracy of judgments or claims against some criterion of accuracy,101, 102 which makes it potentially more useful for rationality in clinical decision making.
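The second (coherence‐based) approach can be sketched the same way. The coefficient of variation and the extremal quotient used here are common summaries of small‐area variation, chosen as an assumption because the text does not name a statistic; the counts are invented, and every regional rate is assumed to be positive. Note what the output cannot say: it shows that regions differ, not which region, if any, delivers appropriate care for its patients.

```python
import statistics
from typing import Dict, Tuple


def variation_summary(events: Dict[str, int], population: Dict[str, int],
                      per: int = 1000) -> Tuple[Dict[str, float], float, float]:
    """Summarize between-region variation in the use of a service.

    Returns the rate per `per` people in each region, the coefficient of variation
    (population SD / mean of the regional rates), and the extremal quotient
    (highest rate / lowest rate). Assumes every regional rate is positive.
    """
    rates = {region: events[region] * per / population[region] for region in events}
    values = list(rates.values())
    cv = statistics.pstdev(values) / statistics.mean(values)
    extremal_quotient = max(values) / min(values)
    return rates, cv, extremal_quotient


# Invented counts for four regions of equal size.
events = {"R1": 120, "R2": 150, "R3": 300, "R4": 110}
population = {region: 10_000 for region in events}

rates, cv, eq = variation_summary(events, population)
print(rates)  # {'R1': 12.0, 'R2': 15.0, 'R3': 30.0, 'R4': 11.0}
print(round(cv, 2), round(eq, 2))
```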

Ultimately, neither the measurement nor the mitigation of overuse and underuse will improve until they are placed within a better framework of rationality theories.101, 102 Although there are many theories of truth, broadly speaking, normative theories of rationality reflect the coherence theory of truth, whereas descriptive theories of rationality tend to rely on the correspondence theory of truth. As we stress above, different medical problems will require different theoretical approaches. In our view, further advances in health care, including reducing the rates of overuse and underuse, will only be possible with explicit identification of the theoretical framework from which the problem is addressed.

Djulbegovic B, Elqayam S, Dale W. Rational decision making in medicine: Implications for overuse and underuse. J Eval Clin Pract. 2018;24:655–665. https://doi.org/10.1111/jep.12851

REFERENCES

- 1. IOM (Institute of Medicine). Delivering High‐Quality Cancer Care: Charting A New Course for A System in Crisis. Washington, DC: The National Academies Press; 2013.

- 2. IOM (Institute of Medicine). Variation in Health Care Spending: Target Decision Making, Not Geography. Washington, DC: The National Academies Press; 2013.

- 3. Berwick DM, Hackbarth AD. Eliminating waste in US health care. JAMA. 2012;307(14):1513‐1516. https://doi.org/10.1001/jama.2012.362

- 4. Keeney R. Personal decisions are the leading cause of death. Oper Res. 2008;56(6):1335‐1347. https://doi.org/10.1287/opre.1080.0588

- 5. Djulbegovic B. A framework to bridge the gaps between evidence‐based medicine, health outcomes, and improvement and implementation science. J Oncol Pract. 2014;10(3):200‐202. https://doi.org/10.1200/JOP.2013.001364

- 6. Cassel CK, Guest JA. Choosing wisely: helping physicians and patients make smart decisions about their care. JAMA. 2012;307(17):1801‐1802. https://doi.org/10.1001/jama.2012.476

- 7. Djulbegovic B, Elqayam S. Many faces of rationality: implications of the great rationality debate for clinical decision‐making. J Eval Clin Pract. 2017;23(5):915‐922. https://doi.org/10.1111/jep.12788

- 8. Evans JSTBT, Over DE. Rationality and Reasoning. Hove: Psychology Press; 1996.

- 9. Stanovich KE. Rationality and the Reflective Mind. Oxford: Oxford University Press; 2011.

- 10. Baron J. Thinking and Deciding. 4th ed. Cambridge: Cambridge University Press; 2007.

- 11. Djulbegovic B, Guyatt GH, Ashcroft RE. Epistemologic inquiries in evidence‐based medicine. Cancer Control. 2009;16(2):158‐168. https://doi.org/10.1177/107327480901600208

- 12. Djulbegovic B, Guyatt GH. Progress in evidence‐based medicine: a quarter century on. Lancet. 2017;390(10092):415‐423. https://doi.org/10.1016/S0140-6736(16)31592-6

- 13. Guyatt GH, Oxman AD, Kunz R, et al. Going from evidence to recommendations. BMJ. 2008;336(7652):1049‐1051. https://doi.org/10.1136/bmj.39493.646875.AE

- 14. Evans JSTBT. Thinking Twice. Two Minds in One Brain. Oxford: Oxford University Press; 2010.

- 15. Oaksford M, Chater N. Précis of Bayesian rationality: the probabilistic approach to human reasoning. Behav Brain Sci. 2009;32(1):69‐84; discussion 85‐120. https://doi.org/10.1017/S0140525X09000284

- 16. US Preventive Services Task Force. Screening for colorectal cancer: US Preventive Services Task Force recommendation statement. Ann Intern Med. 2008;149(9):627‐637. https://doi.org/10.7326/0003-4819-149-9-200811040-00243

- 17. Schwartz B. What does it mean to be a rational decision maker? J Market Behav. 2015;1(2):113‐145. https://doi.org/10.1561/107.00000007

- 18. Schwartz B. What is rationality? J Market Behav. 2015;1(2):175‐185. https://doi.org/10.1561/107.00000011

- 19. Elqayam S, Evans JSBT. Subtracting ‘ought’ from ‘is’: descriptivism versus normativism in the study of human thinking. Behav Brain Sci. 2011;34(5):233‐248. https://doi.org/10.1017/S0140525X1100001X

- 20. Gigerenzer G, Brighton H. Homo heuristicus: why biased minds make better inferences. Top Cogn Sci. 2009;1(1):107‐143. https://doi.org/10.1111/j.1756-8765.2008.01006.x

- 21. Gigerenzer G, Hertwig R, Pachur T, eds. Heuristics. The Foundation of Adaptive Behavior. New York: Oxford University Press; 2011. https://doi.org/10.1093/acprof:oso/9780199744282.001.0001

- 22. Stanovich KE. Who Is Rational? Studies of Individual Differences in Reasoning. Mahwah, NJ: Lawrence Erlbaum Associates; 1999.

- 23. Mercier H, Sperber D. Why do humans reason? Arguments for an argumentative theory. Behav Brain Sci. 2011;34(2):57‐74; discussion 74‐111. https://doi.org/10.1017/S0140525X10000968

- 24. Mercier H, Sperber D. The Enigma of Reason. Cambridge, MA: Harvard University Press; 2017.

- 25. Simon HA. A behavioral model of rational choice. Quart J Econom. 1955;69(1):99‐118. https://doi.org/10.2307/1884852

- 26. Simon HA. Information processing models of cognition. Annu Rev Psychol. 1979;30:263‐296.

- 27. Katsikopoulos KV, Gigerenzer G. One‐reason decision‐making: modeling violations of expected utility theory. J Risk Uncert. 2008;37(1):35‐56. https://doi.org/10.1007/s11166-008-9042-0

- 28. Baron J. Rationality and Intelligence. New York, NY: Cambridge University Press; 1985. https://doi.org/10.1017/CBO9780511571275

- 29. Elqayam S, Thompson VA, Wilkinson MR, Evans JS, Over DE. Deontic introduction: a theory of inference from is to ought. J Exp Psychol Learn Mem Cogn. 2015;41(5):1516‐1532. https://doi.org/10.1037/a0038686

- 30. Gorawara‐Bhat R, O'Muircheartaigh S, Mohile S, Dale W. Patients' perceptions and attitudes on recurrent prostate cancer and hormone therapy: qualitative comparison between decision‐aid and control groups. J Geriatr Oncol. 2017;8(5):368‐373. https://doi.org/10.1016/j.jgo.2017.05.006

- 31. Van den Bruel A, Thompson M, Buntinx F, Mant D. Clinicians' gut feeling about serious infections in children: observational study. BMJ. 2012;345:e6144. https://doi.org/10.1136/bmj.e6144

- 32. Stanovich KE. The Robot's Rebellion: Finding Meaning in The Age of Darwin. Chicago: University of Chicago Press; 2004. https://doi.org/10.7208/chicago/9780226771199.001.0001

- 33. Elqayam S. Grounded rationality: descriptivism in epistemic context. Synthese. 2012;189(S1):39‐49. https://doi.org/10.1007/s11229-012-0153-4

- 34. Stanovich KE. Meta‐rationality in cognitive science. J Market Behav. 2015;1(2):147‐156. https://doi.org/10.1561/107.00000009

- 35. Stanovich KE. Higher order preference and the master rationality motive. Think Reason. 2008;14(1):111‐127. https://doi.org/10.1080/13546780701384621

- 36. Stanovich KE. Why humans are (sometimes) less rational than other animals: cognitive complexity and the axioms of rational choice. Think Reason. 2013;19(1):1‐26. https://doi.org/10.1080/13546783.2012.713178

- 37. Zeelenberg M. Robust satisficing via regret minimization. J Market Behav. 2015;1(2):157‐166. https://doi.org/10.1561/107.00000010

- 38. Zeelenberg M, Pieters R. A theory of regret regulation 1.0. J Consum Psychol. 2007;17(1):3‐18. https://doi.org/10.1207/s15327663jcp1701_3

- 39. Zeelenberg M, Pieters R. A theory of regret regulation 1.1. J Consum Psychol. 2007;17:29‐35.

- 40. Djulbegovic B, Tsalatsanis A, Mhaskar R, Hozo I, Miladinovic B, Tuch H. Eliciting regret improves decision making at the end of life. Eur J Cancer. 2016;68:27‐37. https://doi.org/10.1016/j.ejca.2016.08.027

- 41. Hozo I, Djulbegovic B. When is diagnostic testing inappropriate or irrational? Acceptable regret approach. Med Decis Making. 2008;28(4):540‐553. https://doi.org/10.1177/0272989X08315249

- 42. Hozo I, Djulbegovic B. Clarification and corrections of acceptable regret model. Med Decis Making. 2009;29:323‐324.

- 43. Gigerenzer G. Full disclosure about cancer screening. BMJ. 2016;352:h6967. https://doi.org/10.1136/bmj.h6967

- 44. Djulbegovic B, Lyman G. Screening mammography at 40‐49 years: regret or no regret? Lancet. 2006;368(9552):2035‐2037. https://doi.org/10.1016/S0140-6736(06)69816-4

- 45. Djulbegovic B, van den Ende J, Hamm RM, Mayrhofer T, Hozo I, Pauker SG. When is rational to order a diagnostic test, or prescribe treatment: the threshold model as an explanation of practice variation. Eur J Clin Invest. 2015;45(5):485‐493. https://doi.org/10.1111/eci.12421

- 46. Pauker SG, Kassirer J. The threshold approach to clinical decision making. N Engl J Med. 1980;302(20):1109‐1117. https://doi.org/10.1056/NEJM198005153022003

- 47. Pauker SG, Kassirer JP. Therapeutic decision making: a cost‐benefit analysis. N Engl J Med. 1975;293(5):229‐234. https://doi.org/10.1056/NEJM197507312930505

- 48. Djulbegovic B, Hozo I, Beckstead J, Tsalatsanis A, Pauker SG. Dual processing model of medical decision‐making. BMC Med Inform Decis Mak. 2012;12(1):94. https://doi.org/10.1186/1472-6947-12-94

- 49. Djulbegovic B, Hamm RM, Mayrhofer T, Hozo I, Van den Ende J. Rationality, practice variation and person‐centred health policy: a threshold hypothesis. J Eval Clin Pract. 2015;21(6):1121‐1124. https://doi.org/10.1111/jep.12486

- 50. Basinga P, Moreira J, Bisoffi Z, Bisig B, Van den Ende J. Why are clinicians reluctant to treat smear‐negative tuberculosis? An inquiry about treatment thresholds in Rwanda. Med Decis Making. 2007;27(1):53‐60. https://doi.org/10.1177/0272989X06297104

- 51. Elshaug AG, Rosenthal MB, Lavis JN, et al. Levers for addressing medical underuse and overuse: achieving high‐value health care. Lancet. 2017;390(10090):191‐202. https://doi.org/10.1016/S0140-6736(16)32586-7

- 52. Djulbegovic B, Guyatt G. EBM and the theory of knowledge. In: Guyatt G, Meade M, Cook D, eds. Users' Guides to the Medical Literature: A Manual for Evidence‐Based Clinical Practice. Boston: McGraw‐Hill; 2014.

- 53. Djulbegovic B, Trikalinos TA, Roback J, Chen R, Guyatt G. Impact of quality of evidence on the strength of recommendations: an empirical study. BMC Health Serv Res. 2009;9(1):120. https://doi.org/10.1186/1472-6963-9-120

- 54. Djulbegovic B, Kumar A, Kaufman RM, Tobian A, Guyatt GH. Quality of evidence is a key determinant for making a strong GRADE guidelines recommendation. J Clin Epidemiol. 2015;68(7):727‐732. https://doi.org/10.1016/j.jclinepi.2014.12.015

- 55. Alexander PE, Bero L, Montori VM, et al. World Health Organization recommendations are often strong based on low confidence in effect estimates. J Clin Epidemiol. 2014;67(6):629‐634. https://doi.org/10.1016/j.jclinepi.2013.09.020

- 56. Fiedler K. Meta‐cognitive myopia and the dilemmas of inductive‐statistical inference. Psychol Learn Motivat ‐ Adv Res Theory. 2012;57:1‐55. https://doi.org/10.1016/B978-0-12-394293-7.00001-7

- 57. Djulbegovic B, Loughran TP Jr, Hornung CA, et al. The quality of medical evidence in hematology‐oncology. Am J Med. 1999;106(2):198‐205. https://doi.org/10.1016/S0002-9343(98)00391-X

- 58. Ebell MH, Sokol R, Lee A, Simons C, Early J. How good is the evidence to support primary care practice? Evid Based Med. 2017;22(3):88‐92. https://doi.org/10.1136/ebmed-2017-110704

- 59. Altman D, Bland M. Absence of evidence is not evidence of absence. BMJ. 1995;311(7003):485. https://doi.org/10.1136/bmj.311.7003.485

- 60. US Preventive Services Task Force. Screening for breast cancer: US Preventive Services Task Force recommendation statement. Ann Intern Med. 2009;151:716‐726.

- 61. Hozo I, Djulbegovic B. Will insistence on practicing medicine according to expected utility theory lead to an increase in diagnostic testing? Med Decis Making. 2009;29(3):320‐322. https://doi.org/10.1177/0272989X09334370

- 62. Fontanarosa P, Bauchner H. Conflict of interest and medical journals. JAMA. 2017;317(17):1768‐1771. https://doi.org/10.1001/jama.2017.4563

- 63. Alexander PE, Gionfriddo MR, Li SA, et al. A number of factors explain why WHO guideline developers make strong recommendations inconsistent with GRADE guidance. J Clin Epidemiol. 2016;70:111‐122. https://doi.org/10.1016/j.jclinepi.2015.09.006

- 64. Djulbegovic B, Hozo I, Greenland S. Uncertainty in clinical medicine. In: Gifford F, ed. Philosophy of Medicine (Handbook of the Philosophy of Science). London: Elsevier; 2011:299‐356.

- 65. Slovic P, Finucane ML, Peters E, MacGregor DG. Risk as analysis and risk as feelings: some thoughts about affect, reason, risk, and rationality. Risk Anal. 2004;24(2):311‐322. https://doi.org/10.1111/j.0272-4332.2004.00433.x

- 66. Sunstein CR. Probability neglect: emotions, worst cases, and law. Yale Law J. 2002;112(1):61‐107. https://doi.org/10.2307/1562234

- 67. Hsee CK, Rottenstreich Y. Music, pandas and muggers: on the affective psychology of value. J Exp Psychol. 2004;133(1):23‐30. https://doi.org/10.1037/0096-3445.133.1.23 [DOI] [PubMed] [Google Scholar]

- 68. Pachur T, Hertwig R, Wolkewitz R. The affect gap in risky choice: affect‐rich outcomes attenuate attention to probability information. Decision. 2013;1:64‐78. [Google Scholar]

- 69. Hemmerich JA, Elstein AS, Schwarze ML, Moliski EG, Dale W. Risk as feelings in the effect of patient outcomes on physicians' subsequent treatment decisions: a randomized trial and manipulation validation. Soc Sci Med. 2012;75(2):367‐376. https://doi.org/10.1016/j.socscimed.2012.03.020 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 70. Inman J. Regret regulation: disentagling self‐reproach from learning. J Consum Psychol. 2007;17:19‐24. [Google Scholar]

- 71. Connolly T, Zeelenberg M. Regret in decision making. Curr Dir Psychol Sci. 2002;11(6):212‐216. https://doi.org/10.1111/1467-8721.00203 [Google Scholar]

- 72. Djulbegovic B, Hozo I, Schwartz A, McMasters K. Acceptable regret in medical decision making. Med Hypotheses. 1999;53(3):253‐259. https://doi.org/10.1054/mehy.1998.0020 [DOI] [PubMed] [Google Scholar]

- 73. Djulbegovic B, Paul A. From efficacy to effectiveness in the face of uncertainty indication creep and prevention creep. JAMA. 2011;305(19):2005‐2006. https://doi.org/10.1001/jama.2011.650 [DOI] [PubMed] [Google Scholar]

- 74. Stanovich KE, West RF. Evolutionary Versus Instrumental Goals. How Evolutionary Psychology Misconceives Human Rationality. Hove, UK: Psychological Press; 2003. [Google Scholar]

- 75. Djulbegovic M, Beckstead J, Elqayam S, et al. Thinking styles and regret in physicians. PLoS One. 2015;10(8):e0134038):e0134038 https://doi.org/10.1371/journal.pone.0134038 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 76. Djulbegovic B, Elqayam S, Reljic T, et al. How do physicians decide to treat: an empirical evaluation of the threshold model. BMC Med Inform Decis Mak. 2014;14(1):47 https://doi.org/10.1186/1472-6947-14-47 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 77. Hume D. A Treatise on Human Nature (Original publication date 1739‐1740). Oxford: Clarendon; 2000. [Google Scholar]

- 78. Djulbegovic B, Beckstead J, Nash DB. Human judgment and health care policy. Popul Health Manag. 2014;17(3):139‐140. https://doi.org/10.1089/pop.2014.0027 [DOI] [PubMed] [Google Scholar]

- 79. Djulbegovic B, Lyman GH, Ruckdeschel J. Why evidence‐based oncology. Evid‐Based Oncol. 2000;1(1):2‐5. https://doi.org/10.1054/ebon.1999.0003

- 80. MEDLINE fact sheet. 2016. https://www.nlm.nih.gov/pubs/factsheets/medline.html. Accessed April 20, 2016.

- 81. Bastian H, Glasziou P, Chalmers I. Seventy‐five trials and eleven systematic reviews a day: how will we ever keep up? PLoS Med. 2010;7(9):e1000326. https://doi.org/10.1371/journal.pmed.1000326

- 82. Hozo I, Djulbegovic B, Luan S, Tsalatsanis A, Gigerenzer G. Towards theory integration: threshold model as a link between signal detection theory, fast‐and‐frugal trees and evidence accumulation theory. J Eval Clin Pract. 2017;23(1):49‐65. https://doi.org/10.1111/jep.12490

- 83. Kassirer JP. Our stubborn quest for diagnostic certainty. A cause of excessive testing. N Engl J Med. 1989;320(22):1489‐1491. https://doi.org/10.1056/NEJM198906013202211

- 84. Tsalatsanis A, Hozo I, Djulbegovic B. Acceptable regret model in the end‐of‐life setting: patients require high level of certainty before forgoing management recommendations. Eur J Cancer. 2017;75:159‐166. https://doi.org/10.1016/j.ejca.2016.12.025

- 85. Wentlandt K, Krzyzanowska MK, Swami N, Rodin GM, Le LW, Zimmermann C. Referral practices of oncologists to specialized palliative care. J Clin Oncol. 2012;30(35):4380‐4386. https://doi.org/10.1200/JCO.2012.44.0248

- 86. Elqayam S, Wilkinson MR, Thompson VA, Over DE, Evans J. Utilitarian moral judgment exclusively coheres with inference from is to ought. Front Psychol. 2017;8:1042. https://doi.org/10.3389/fpsyg.2017.01042

- 87. Pirolli P. Rational analyses of information foraging on the web. Cognit Sci. 2005;29(3):343‐373. https://doi.org/10.1207/s15516709cog0000_20

- 88. Pirolli P, Card S. Information foraging. Psychol Rev. 1999;106(4):643‐675. https://doi.org/10.1037/0033-295X.106.4.643

- 89. IOM (Institute of Medicine). Dying in America: Improving Quality and Honoring Individual Preferences Near the End of Life. Washington, DC: The National Academies Press; 2015.

- 90. Gomes B, Higginson IJ, Calanzani N, et al. Preferences for place of death if faced with advanced cancer: a population survey in England, Flanders, Germany, Italy, the Netherlands, Portugal and Spain. Ann Oncol. 2012;23(8):2006‐2015. https://doi.org/10.1093/annonc/mdr602

- 91. Neumann PJ, Cohen JT, Weinstein MC. Updating cost‐effectiveness—the curious resilience of the $50,000‐per‐QALY threshold. N Engl J Med. 2014;371(9):796‐797. https://doi.org/10.1056/NEJMp1405158

- 92. Marseille E, Larson B, Kazi DS, Kahn JG, Rosen S. Thresholds for the cost‐effectiveness of interventions: alternative approaches. Bull World Health Organ. 2015;93(2):118‐124. https://doi.org/10.2471/BLT.14.138206

- 93. Djulbegovic B. Value‐based cancer care and the excessive cost of drugs. JAMA Oncol. 2015;1(9):1301‐1302. https://doi.org/10.1001/jamaoncol.2015.3302

- 94. Elqayam S. Grounded rationality: descriptivism in epistemic context. Synthese. 2012;189(S1):39‐49. https://doi.org/10.1007/s11229-012-0153-4

- 95. Stich SP. The Fragmentation of Reason: Preface to A Pragmatic Theory of Cognitive Evaluation. Cambridge, Mass: MIT Press; 1990.

- 96. Institute of Medicine. Vital Signs: Core Metrics for Health and Health Care Progress. Washington, DC: The National Academies Press; 2015.

- 97. Brownlee S, Chalkidou K, Doust J, et al. Evidence for overuse of medical services around the world. Lancet. 2017;390(10090):156‐168. https://doi.org/10.1016/S0140-6736(16)32585-5

- 98. Glasziou P, Straus S, Brownlee S, et al. Evidence for underuse of effective medical services around the world. Lancet. 2017;390(10090):169‐177. https://doi.org/10.1016/S0140-6736(16)30946-1

- 99. Saini V, Brownlee S, Elshaug AG, Glasziou P, Heath I. Addressing overuse and underuse around the world. Lancet. 2017;390(10090):105‐107. https://doi.org/10.1016/S0140-6736(16)32573-9

- 100. Saini V, Garcia‐Armesto S, Klemperer D, et al. Drivers of poor medical care. Lancet. 2017;390(10090):178‐190. https://doi.org/10.1016/S0140-6736(16)30947-3

- 101. Hammond KR. Human Judgment and Social Policy. Irreducible Uncertainty, Inevitable Error, Unavoidable Injustice. Oxford: Oxford University Press; 1996.

- 102. Hammond KR. Beyond rationality. The search for wisdom in a troubled time. Oxford: Oxford University Press; 2007.

- 103. Nickel B, Barratt A, Copp T, Moynihan R, McCaffery K. Words do matter: a systematic review on how different terminology for the same condition influences management preferences. BMJ Open. 2017;7(7):e014129. https://doi.org/10.1136/bmjopen-2016-014129