Abstract

The finding of power law scaling in neural recordings lends support to the hypothesis of critical brain dynamics. However, power laws are not unique to critical systems and can arise from alternative mechanisms. Here, we investigate whether a common time-varying external drive to a set of Poisson units can give rise to neuronal avalanches and exhibit apparent criticality. To this end, we analytically derive the avalanche size and duration distributions, as well as additional measures, first for homogeneous Poisson activity, and then for slowly varying inhomogeneous Poisson activity. We show that homogeneous Poisson activity cannot give rise to power law distributions. Inhomogeneous activity can also not generate perfect power laws, but it can exhibit approximate power laws with cutoffs that are comparable to those typically observed in experiments. The mechanism of generating apparent criticality by time-varying external fields, forces or input may generalize to many other systems like dynamics of swarms, diseases or extinction cascades. Here, we illustrate the analytically derived effects for spike recordings in vivo and discuss approaches to distinguish true from apparent criticality. Ultimately, this requires causal interventions, which allow separating internal system properties from externally imposed ones.

Author summary

The analysis of complex systems in nature introduces several challenges, because typically a number of parameters either remain unobserved or cannot be controlled. In particular, it can be challenging to disentangle the dynamics generated within the system from that imposed by the environment. With this difficulty in mind, we reinvestigate the popular hypothesis that neural dynamics is poised close to a critical point. Criticality is characterized by power-law scaling and has been linked to favorable computational properties of networks. Power-law distributions for “neural avalanches,” i.e., spatio-temporal clusters of neural activity, have been observed in various neural systems and support the criticality hypothesis. Here we show that approximate power laws do not necessarily reflect critical network dynamics but can be imposed externally on non-critical networks, i.e., by driving the network with input of specific statistics. We derive these results analytically and illustrate them both in simulations and using neural recordings. The findings indicate that more caution and additional tests are required for distinguishing between genuine and apparent criticality. Ultimately, this requires causal interventions, not only in neural systems, but in many other complex dynamical systems that are subject to time-varying external forces, such as the dynamics of swarms, diseases or extinction cascades.

Introduction

In the quest to understand the principles that govern collective neural dynamics, it has been proposed that brains operate at or near criticality [1–5], i.e., a dynamical state that arises at second-order phase transitions and is characterized by scale-invariant activity cascades or avalanches. Criticality is an important candidate state for brain function, because in models criticality optimizes information processing capacities [6–9]. Since the expected power law distributions for avalanches have been found for neural activity on many scales – from spiking activity in vitro [10–12] to local field potential, EEG, MEG and BOLD signals in humans [13–18] – these power laws are taken as evidence that the brain does indeed operate at criticality. However, it is known that power laws can also be generated by alternative mechanisms [19]. Most of those mechanisms do not map naturally onto neural networks and are therefore not plausible. However, here we identify a particular mechanism, namely, time-varying changes in the strength of an external drive, as a potential candidate to generate approximate power law scaling in the absence of criticality. Specifically, we investigate the hypothesis that a generic model of neural network dynamics, implemented by an inhomogeneous Poisson process (IPP), can give rise to power law avalanche size and duration distributions.

In the following sections, we outline the conditions under which approximate power law scaling for avalanches arises from IPPs. Specifically, we first derive analytically the duration and size distributions for a homogeneous Poisson process (HPP) and show that they follow (approximate) exponential distributions, with rate-dependent decay constants. Subsequently, we derive the known result that superposition, i.e., a weighted summation, of such exponential distributions with different decay constants could, in theory, lead to power laws with any exponent. However, this mechanism does not apply to neural activity, because the weighting function of the rates that is required for a perfect power law cannot be normalized. Hence, this mechanism can generate only approximate power laws with cutoffs. Finally, we show how these approximate power laws can be generated by IPPs and how they resemble avalanche distributions that are typically observed experimentally. Thus, they can, in principle, be mistaken as evidence for criticality.

This paper focuses on the conditions leading to power law distributions from Poisson activity, but power laws form only one marker for criticality. To distinguish apparent criticality from true criticality, it is advisable to extend the criticality analysis beyond power laws. By applying additional measures and by studying the impact of the temporal scale (bin size), many types of IPP can be distinguished from critical processes. In Section 3.3, we also present a number of measures that aid in distinguishing apparent criticality from true criticality, in the hope that this overview will serve as a guide for future rigorous analysis of critical systems. However, it is necessary to bear in mind that because of the correlative nature of any data analysis, a very sophisticated external drive (i.e., very specific IPPs) could perfectly mimic the neural activity of critical systems. Thus, ultimately, the distinction between criticality and apparent criticality can be achieved only by causal interventions that probe the internal system dynamics and disentangle it from the impact of some hidden drive. This idea not only holds for the analysis of critical systems but also points to the fundamental limitation of correlative system analysis in general, which can be overcome only by causal intervention. Nonetheless, even without causal intervention, analyses that go beyond the standard set of avalanche measures can increase the confidence that a particular system is critical.

Results

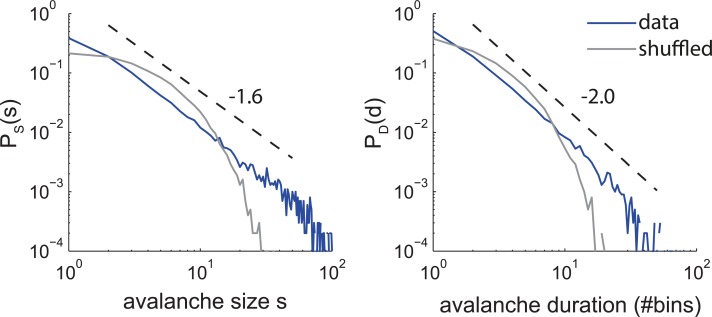

We start by asking whether non-critical systems can indeed appear critical. Fig 1 depicts distributions for avalanche sizes and durations that resemble distributions often observed in experiments [4,13]. The distributions exhibit approximate power law scaling with exponents near the theoretical values of -1.5 and -2 for size and duration, respectively. Similar to experimental data, they differ clearly from the avalanche distributions obtained after shuffling the events in the data (Fig 1, gray, thin line). The shuffled data are better fitted by exponential than by power law distributions. Together, these distributions could be taken as evidence for criticality of the underlying systems. However, here the avalanches were actually generated by an IPP. In this example, the process was composed of four equal periods with a different fixed-rate at each period (rates r = {1, 2, 5, 10}/18, i.e., mean rate 1, see Methods). This is a striking example to show that slow and moderate variations in the overall rate of a Poisson process can lead to approximate power laws in the size and duration distributions, which could be mistaken as indicators of criticality. In this paper, we derive the conditions under which IPP can give rise to approximate power laws such as these.

Fig 1. (Color) Avalanche size and duration distributions from an inhomogeneous Poisson process (IPP, blue, see methods), which approximates power laws that resemble those observed in typical experiments.

Shuffling the events of the IPP results in a homogeneous Poisson process (HPP, gray). For HPPs, the size and duration distributions are (approximately) exponential rather than following a power law. Dashed lines indicate power laws with exponents -1.6 and -2.0 in the left and right panel, respectively.

Results for a homogeneous Poisson process with rate r

In this section, we review the avalanche analysis, discuss the impact of the bin size parameter, and then derive analytically the duration and size distributions for an HPP. We also derive or review other measures, including the avalanche shape, the scaling of the shape with duration, the inter-event/avalanche distributions, the spike-count ratio or branching parameter Q, the power spectrum and the Fano factor.

Definition of neuronal avalanches using temporal binning

Avalanches are defined as cascades of events that originate from a single seed event [20]. For neural recordings, these events are either spikes or binary events obtained from thresholding continuous signals, such as LFP or EEG signals. The events from all recording channels are combined into a single time series of events, A(t). To extract neuronal avalanches, this time series is partitioned into temporal bins Δt. An avalanche is then defined as a sequence of consecutive non-empty bins. The duration of an avalanche is the number of bins, and its size is the total number of events. Subsequent avalanches are separated by at least one empty bin. These empty time bins, or pauses, between any two avalanches, are characteristic of critical systems [4,20–22]. However, technically, avalanche analysis can be applied to any time series that shows pauses, such as the Poisson processes we are analyzing here. Importantly, the choice of the bin size can impact the avalanche distributions. Thus, for any data or model analysis, taking this bin size dependence into account adds valuable information about the system.

Effect of the bin size and rate. In all derivations, the rate r is typically varied, whereas the bin size (Δt) is fixed. Here, without loss of generality, Δt = 1 ms, and this bin size often equals one average IEI, namely, Δt = 1/r = 〈IEI〉. It is sufficient to derive only the dependence of the different quantities on r, because the HPP depends only on the product r Δt. As a consequence, changing Δt to Δt′ is strictly equivalent to changing the rate of the HPP from r to r′ = r ∙ Δt′/Δt. Thus, exploring rate dependences is exchangeable with exploring bin size dependences.

Contribution of each neuron. How does each single neuron contribute to the population activity A(t)? In our generic network model, we assume that each neuron follows the same rate envelope or drive r(t). For the HPP, r(t) = r is constant. Each neuron i can spike with its own average rate ri. Thus, although rates can differ among neurons, the sum of the rates over all N recorded neurons must equal the rate of the process, . For the resulting A(t), it is equivalent to either double the number of neurons or to double the rate of each neuron. Importantly, as in IPPs, all neurons follow the same drive r(t). This common drive introduces correlations between the neurons' firing, and these correlations contribute to the long-tailed avalanche distributions.

Analytical derivation of avalanche duration and size distributions

For an HPP, it is commonly assumed that the avalanche measures are exponential and not power law distributed. We show analytically that the duration distribution, PD(d), is indeed exponential, but the expression for the size distribution, PS(s), deviates from the exponential assumption. In the main text we provide the results together with the outline of the derivation, and the full analytical derivations are detailed in the Methods section.

Avalanche duration distribution PD(d). Consider an HPP with rate r and bin size Δt = 1 time step. The avalanche duration d is defined as the number of non-empty bins in a sequence. The probability of a bin being empty is p0 = e−r, and the probability of a non-empty bin is thus p = 1 − p0 = 1 − e−r. Because the events in different time bins are independent, the probability of obtaining a sequence of d non-empty bins between two empty bins is proportional to . This gives PD(d) ~ (1 − e−r)d = e−μ(r)d, where μ(r) = −ln(1 − e−r) is the rate-dependent decay constant of the exponential. Thus, the avalanche durations are exponentially distributed, and the distributions become flatter as r increases (Fig 2B). The normalized distribution is given by (see Methods):

| (1) |

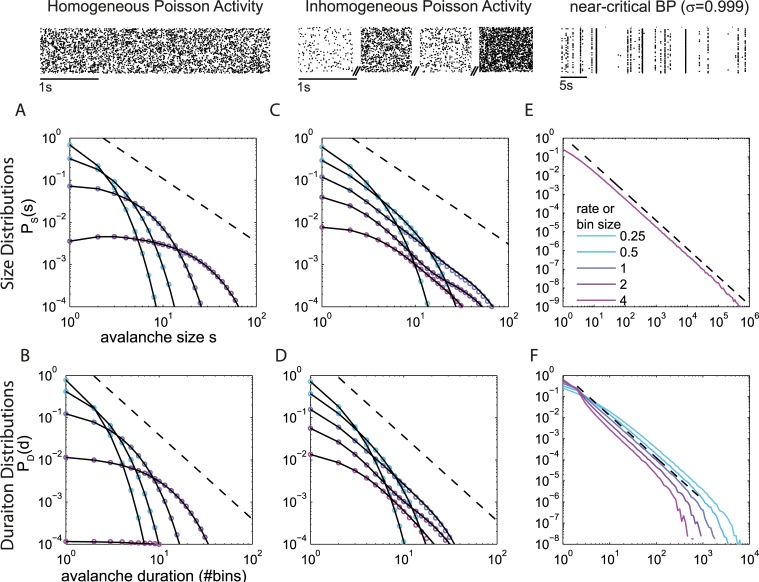

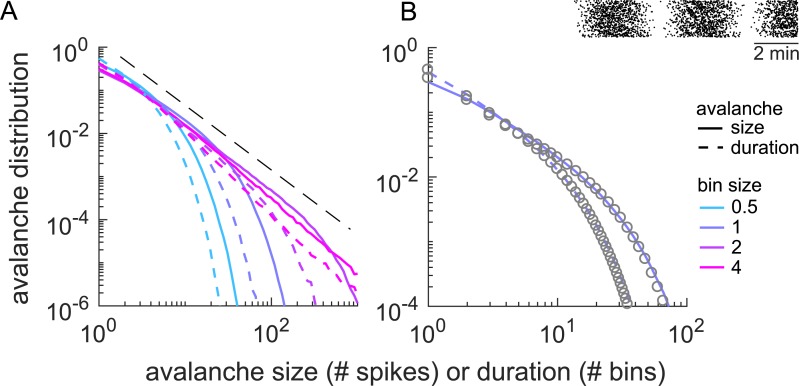

Fig 2.

(Color) Avalanche size and duration distributions for three example processes, as exemplified in the raster plots above, all with the same mean rate: A&B. homogeneous Poisson process, C&D. inhomogeneous Poisson process, E&F. critical branching process (BP). Different colors represent different bin sizes, Δt, at r = 1 (or equivalently different rates r at Δt = 1). Colored lines or dots are numerical results; black lines are analytical results. A-D. For both the homogeneous and the inhomogeneous Poisson processes, an increase in Δt (or r) makes the size distribution PS(s) and the duration distribution PD(d) flatter. E&F. For the critical system, a change in Δt (or r) hardly changes PS(s), which shows a power law with exponent -1.5 (dashed). The slope of PD(d) changes systematically, because d is in units of bins. In units of time steps, PD(d) would also change very little and show the exponent -2 (dashed).

The above results hold for any rate (or equivalently for any bin size). For the widely used bin size of one “average inter-event interval,” Δt = 〈IEI〉 = 1/r, the duration distribution is independent of the rate r and simplifies to:

| (2) |

Avalanche size distribution PS(s). The derivation of the avalanche size distribution PS(s) is more intricate than the derivation of PD(d) (see Methods for full details). The first step involves obtaining an expression for the conditional size distribution, PS(s|d). This requires knowing the probability of having A = a events in a bin, which is given by the Poisson distribution, . However, within an avalanche, all bins have a ≥ 1 events, and therefore the probability must be renormalized, yielding:

| (3) |

The size of each avalanche is then the sum of the events from all the bins that constitute it, namely, the sum of d independent random variables A. The conditional size distribution PS(s|d) can be derived from the corresponding probability-generating function (see Methods). The resulting expression involves Stirling numbers of the second kind, , which represent the number of ways to distribute s events into d bins such that none of the bins is empty (the number of surjections from s to d):

| (4) |

In the second step, PS(s|d) is combined with PD(d) to yield the size distribution:

| (5) |

This distribution is not exponential and does not resemble a power law (Fig 2A). Note that it does also not necessarily decrease monotonically with s. In fact, for large enough rates, r>2, PS(s) shows a global maximum at s>1. However, the tail of the distribution approximates an exponential (see Methods). More precisely, for large s the distribution can be approximated by:

| (6) |

where λ is a function of r

| (7) |

B(sc) accounts for the slow change of λ with s and is evaluated for a representative sc (see Methods). Thus, in contrast to the duration distribution, the size distribution is not exponential and is not necessarily monotonic.

Additional avalanche measures

In this section we derive or review additional common time series measures for the HPP. All results are shown in Fig 3A.

Fig 3.

(Color) Avalanche size and duration distributions and additional time-series measures for: A. a homogeneous Poisson process (HPP), B. an inhomogeneous Poisson process (IPP, same as Fig 1), and C. a near-critical branching process (BP) with branching parameter σ = 0.999 = σc − 10−3. Circles represent numerical results; black lines represent analytical results; and dashed line are reference power laws. The mean rate of all processes is r = 1, and the bin size (if relevant) is Δt = 1. The top row shows representative examples of raster plots for each process. While HPPs do not follow power laws, the avalanche size distribution of the example IPP does approximate a power law with cutoff, comparable to distributions obtained in experiments and in simulations of critical branching processes.

Avalanches shape. In critical systems, the avalanche shape is expected to be "universal," i.e., the characteristic shape Fu(t/d) of the avalanche scales with the duration d of the avalanche F(t,d) = Fu(t/d)dν [23,24]. This relationship implies that the average avalanche size also scales with d. For homogeneous Poisson processes, the shape is flat, because the expected number of events per bin of size 1 is simply r. The expected size for a given duration is thus:

| (8) |

Thus, follows a trivial power law with slope ν = 1, or, more simply, is proportional to d (Fig 3A). For certain critical systems, specific relations between the exponents of PS(s), PD(d), and have been predicted [23,24]. However, for HPPs neither PS(s) nor PD(d) follows a power law, and thus the scaling relationships are not applicable.

Inter-event and inter-avalanche-interval distributions. The inter-avalanche-interval (IAI) distribution is closely related to the IEI distribution of A(t), that is the IEI is calculated from taking all events together. More precisely, the IAI distribution is a left-truncated version of the IEI distribution, where the truncation is determined by the bin size. In other words, all IEIs that are smaller than Δt do not contribute to P(IAI), whereas for all IEI or IAI > 2Δt, the counts for IEI and IAI are exactly equal. As P(IEI) is the more general distribution, we report only P(IEI) here. Analytically, P(IEI) is the inter-event distribution of a Poisson process

| (9) |

and follows an exponential (Fig 3A). Note that even under very high rates (r ≫ 1), there is still a non-zero probability of obtaining empty bins. This allows parsing the process into avalanches.

Fano factor F. The Fano factor is the mean-normalized variance of a process and for Poisson processes F=1, independently of the bin size (Fig 3A).

Event or spike count ratio Q. The spike count ratio (or branching parameter), Q, is defined as the expected value of activity in one bin divided by the activity in the previous bin, , and the expectation is taken over all bin pairs with A(t|Δt) ≥ 1 [4]. For HPPs, Q can be derived analytically. Q changes with Δt, and, as before, the dependence on Δt is equal to that on r, i.e., Q(Δt = z|r = 1) = Q(r = z|Δt = 1). The analytical expression for Q for Poisson processes is derived in the Methods. It yields:

| (10) |

where Ei(Δt) is the exponential integral, and γ is the Euler-Mascheroni constant (γ ≈ 0.577) [25]. The spike count ratio Q(Δt) increases for small Δt, equals unity for Δt ≈ 1.5 ∙ 〈IEI〉, assumes a maximum at Δt ≈ 3.75 ∙ 〈IEI〉, and finally approaches unity from above for Δt → ∞ (Fig 3A).

Fourier spectrum. Finally, the Fourier spectrum of a Poisson process is known to be flat (Fig 3A, bottom panel).

Weighted superposition of exponential distributions can yield power laws with a cut-off but not perfect power laws

As derived above, the durations and size distributions of HPPs are (approximately) exponential. The decay constant of the exponentials depends on the rate of the process, r. This dependence is the key to obtaining approximate power law distributions from IPPs, via superimposing multiple exponential distributions, which are each generated by periods of activity with different rates. Mathematically, it is known that specific superpositions (i.e., weighted sums) of exponential functions lead to power laws. In this section, we review the general conditions under which such a superposition can lead to a power law with a given exponent. We then translate these conditions to neural activity with a time varying rate (IPP) and show that a perfect power law cannot be obtained. However, superposition of a few exponentials can result in approximate power law distributions, spanning a few orders of magnitude.

To obtain a perfect power law P(x) ~ x−α from the superposition of exponentials, the weighting function w(λ) for each decay rate λ must fulfill the following condition:

| (11) |

Note that here, for the sake of clarity, generic exponential functions e−λx are first used; later we replace them with the full avalanche duration distributions of HPPs. To obtain a power law without a cutoff, the bounds of the integral have to extend over the entire interval λ1 = 0 to λ2 → ∞. Otherwise, the range of the power law distribution is limited on the right or left, respectively. The weighting function that results in a power law is a power law in itself: w(λ)~λα−1 (see Methods).

| (12) |

Γ is the gamma function, Zp is the normalization, and α > 1 to allow normalization of the power law. However, w(λ)~λα−1 cannot be normalized for α ≥ 0, i.e., the probabilities w(λ) with which each exponential e−λx would contribute to the power law are undefined. As a consequence, real-world systems cannot generate a perfect power law from addition (superposition) of exponentials. However, the weights can be normalized by choosing a reduced integration range [λ1,λ2] at the cost of obtaining only an approximate power law with cutoffs. This approach is used below to study avalanche distributions generated by an IPP. To achieve this goal, we need first to translate the general relation for P(x) above to the specific cases of the duration distribution PD(d); in particular, we need to derive the specific weight function w(r)– instead of the generic function w(λ) – that gives rise to a power law for PD(d)~d−β. The density or weighting function w(r) denotes the fraction of time that an IPP has to assume each rate r (and hence sample from the respective exponential distribution), so that a power law is obtained across the full IPP. We assume that the IPP rate changes far more slowly than the typical duration of an avalanche. We can thus assume that an IPP takes a fixed rate r for some time window. During each time window, the duration distribution is PD(d|r), as derived above for fixed rates (HPP, see Eqs (1) and (2)). The resulting PD(d) of the IPP can be written as:

| (13) |

where ZD is the appropriate normalization, and ρ(r) is the rate at which avalanches occur given a Poisson rate r. This equation holds, in analogy to the argument above, if all the factors in front of the exponential in PD(d|r) are proportional to rβ−1. This condition yields the general expression for w(r) (see Methods):

| (14) |

To obtain, for example, PD(d) ~ d−2, which is characteristic for critical branching processes, the weighting function is:

| (15) |

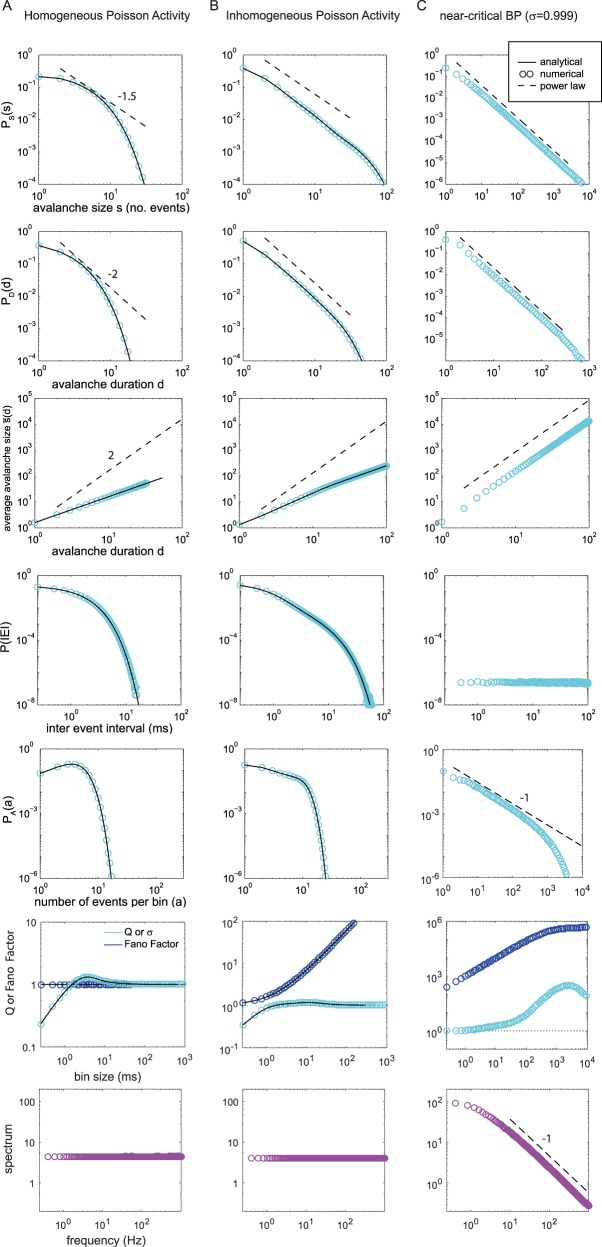

This function is approximately 1/r for r ≪ 1 and approximately constant for r>1 (Fig 4A, black dashed line). Importantly, this implies that for low rates the number of events that each rate contributes is invariant: w(r)r ~ 1/r ∙ r = const., whereas for large rates, each rate contributes an equal fraction of time (w(r) = const.), and hence larger rates contribute more events (~ r). However, it immediately becomes clear that this weighting function cannot be normalized over the full range of rates from zero to infinity. Nonetheless, w(r) can be normalized if a limited integration range [r1,r2] is chosen, albeit at the cost of introducing a right and left cutoff to the power law of PD(d), respectively. For the numerical illustration in Fig 4, we chose the range [r1 = 0.01, r2 = 5] and sampled 300 values from w(r) in this range. For the analytical results, the functional form of the cutoffs can be obtained as follows (see Methods):

| (16) |

where γ(⋅,⋅) is the lower incomplete gamma function. The terms γ(⋅,⋅) generate smooth cutoffs on both sides of the power law d−β by “windowing” it. The windowing function Δγ = Δγ(β = 2, r1 = 0.01, r2 = 5) is depicted in Fig 4C for different Δt (or r). With increasing bin size (or, equally, with increasing rate) it moves to larger avalanche durations d (i.e., to the right). Likewise, the cutoffs of the resulting PD(d) move from left to right (Fig 4B). The right cutoff is thus prominent at small bin sizes (Δt < 1), whereas the left cutoff sets in at large bin sizes (Δt > 1). For Δt = 1, this example IPP shows a power law that extends over more than two orders of magnitude.

Fig 4. (Color) Superposition of exponential distributions that arise from windows with a fixed Poisson firing rate can combine to power law distributions with a cutoff.

A. The weighting function w(r) that leads to a power law with an exponent of -2 in the duration distribution; the analytical result is shown as a dashed black line, and a specific stochastic realization that we used for panels B-D is shown as a non-broken red line. B. Avalanche duration distributions PD(d) arising from the weighting function in A, using either the red weighting function (solid line) or the analytical expression with the same integration limits, i.e., 0.01 to 5 (dashed lines). Colors indicate different bin sizes Δt (in units of 1/r), and the dashed black line is a reference power law with exponent -2. C. Effect of bin size on the cutoff. The different functions depict the theoretical cutoff function Δγ imposed on the target duration distribution PD(d) ~ d−2 for different bin sizes (see text for details; same color code as in B). D. Avalanche size distributions PS(s) from the same red weighting function as in A also show an approximate power law with an exponent characteristic of branching processes (exponent -1.5 is indicated by the dashed black line).

In branching processes, the characteristic exponent for the duration distribution is -2, whereas for the size distributions it is -1.5. Interestingly, we obtained the same pair of exponents for IPPs by naively applying to the size distributions PS(s|r) the exact weight function w(r|β = 2) that we had derived for PD(d). Thus, by construction, for an IPP that gives rise to PD(d) ≈ d−2/ZD (with cutoff), the corresponding size distribution shows a power law with PS(s) ≈ s−1.5/ZS when applying a bin size of Δt = 1 (Fig 4D). Avalanches extracted from this IPP can thus easily be taken as evidence for criticality. In summary, Poisson neurons with slowly changing finite rates can give rise to approximate power laws with the characteristic exponents -1.5 and -2 for the sizes and durations, respectively, if the different rates occur with probability w(r|β = 2) as derived above. In practice, the generation of a power law from superimposed exponentials can be realized only with a cutoff and requires the weighted contribution of each exponential according to w(r|β).

Non-stationary Poisson processes can give rise to approximate power law distributions for avalanches, but typically differ from critical processes in other measures

As shown above, IPPs can give rise to approximate power laws with a cutoff if their rates change slowly and if they are distributed according to w(r|β) on an interval [r1,r2]. In this section, we show that the rate distribution does not have to be exactly w(r|β) to generate distributions that resemble those obtained from experimental results. However, IPPs and truly critical processes typically differ in other measures. This differentiation allows us to distinguish apparent critical systems from truly critical systems, as described below.

Consider an IPP that assumes one of four equiprobable rates {r1,r2,r3,r4} = {0.1,0.2,0.5,1}/Z. The normalization Z = 5/9 assures 〈r〉 = 1, without loss of generality. Each rate is maintained for a long time window compared to the typical avalanche duration—here for 250,000 time steps (≈ 4 min, assuming a sampling rate of 1 kHz). While each interval separately shows (approximately) exponential avalanche distributions, combining all epochs results in an approximate power law with a cutoff at around s = 80, thus covering almost two orders of magnitude for PS(s) and PD(d) and also for P(IEI), the inter-event interval distribution (Fig 3B, same parameters as Fig 1). Thus, avalanche distributions from this simple non-stationary Poisson process could easily be taken as evidence for criticality, especially since the exponents match those of a critical branching process (-1.5 and -2 for the size and rate distribution, respectively, Fig 3B).

Measures other than avalanche distributions, however, show clear differences between this inhomogeneous Poisson activity (IPP) and a critical branching process (compare Fig 3B and 3C, respectively), as follows: (i)The relationship between mean avalanche size and duration, , exhibits an almost perfect power law for the IPP, but not with the exponent of -2 that is expected from the exponents of the size and duration distributions [23,24]. Instead it shows the trivial exponent of unity, i.e., ~ d. (ii) The inter-event interval distribution P(IEI) is flat for the branching process but constitutes a sum of exponentials, approximating a power law, for the IPP. (iii) The density of events PA(a) approximates a power law for the branching process but not for the IPP. (iv) The estimated branching ratio or spike count ratio Q(Δt) shows a pronounced maximum for the branching process (maxΔt Q(Δt) ≈ 500), whereas for the IPP Q(Δt) is close to unity for all Δt ≥ 1. (v) The Fano factor takes much higher values around Δt = 1 in the branching process than in the IPP (note the different y-axis ranges). (vi) The Fourier spectrum of the population activity A(t) shows a power law spanning more than two orders of magnitude for the branching process, whereas it is flat for the IPP. (vii) Finally, PS(s) and PD(d) for the IPP change markedly with Δt, as predicted analytically, whereas for the branching process they are almost invariant against moderate changes in Δt (Fig 2C–2F). This is because the critical branching process, despite having exactly the same average rate as the IPP, shows a moderate separation of time scales (see Discussion).

Discussion

We have shown that it is not possible to generate a perfect power law for avalanches with an IPP, whereas approximate power laws, extending over several orders of magnitude before cutoff, can be generated by assuming that the rates vary over time across only one or two orders of magnitude. Our findings thus indicate that power law distributions for avalanches may also appear in non-critical systems, given a specific time-varying external drive. For many types of input, an analysis that extends beyond avalanches alone can rule out or provide evidence for the criticality hypothesis. However, for certain types of input (in particular r(t) of the IPP mimicking exactly the A(t) generated by a true critical system), "passive" data analysis, from avalanche size through Fourier spectrum to approaches from equilibrium thermodynamics [26], cannot distinguish between them.

The distinction between a critical-like driven system and a truly critical system ultimately requires manipulation, i.e., the use of “active” causal interventions. Application, for example, of small, controlled perturbations can separate the intrinsic network properties from those imposed by the external input. In critical systems, these perturbations cause avalanches that should follow the predicted power-law distributions. Alternatively, manipulations could directly target the control parameter of the system and assess its impact on the correlation length, susceptibility and specific heat [8,27,28]. Thereby one can establish a second-order phase transition. While such manipulations may be feasible in models, not all experimental preparations allow for well-controlled manipulations, and alternatively manipulating a maximum entropy model fitted to the data may yield spurious results [29]. If direct manipulations cannot be applied, data analyses should make use of the diversity of measures available, including investigating the effects of different temporal bin sizes. Such combined analyses can distinguish between many types of rate-varying drives and truly critical systems. However, again, analyses without manipulations are not sufficient to distinguish between a drive that perfectly mimics the 1/f envelope expected for critical systems and 1/f dynamics generated because of criticality within a network. At the core of these considerations is the fundamental issue of correlative versus causal studies of the underlying system. In general, correlative approaches can be "fooled," and thus the more rigorous, causal analysis is advisable.

We have discussed here the superposition of exponentials as a potential alternative mechanism to criticality that may underlie power law generation. A number of other alternative mechanisms have been proposed, four of them compiled by Newman [19], namely, a combination of exponentials, inverses of quantities, random walks, and the Yule process. There are basically two reasons why it is not possible for these alternative models to explain the power laws observed for neuronal avalanches: either the experimentally observed distributions do not agree with the model functions (e.g., the Yule process shows a power law tail, whereas neuronal avalanches show cutoffs; random walks show an exponent of -2, whereas for avalanche sizes it is typically -1.5), or it is not clear how the generating mechanism would map onto neural networks (all four examples). In contrast, the branching process offers an elegant mechanistic approximation of spike propagation on a network and exhibits the same avalanche distributions as those observed in data [4,30,31].

Schwab et al. [32] and Aitchison et al. [33] have shown that power laws for pattern frequency, i.e., Zipf's law, can emerge from a random external input or field. Their studies are similar to ours in that they used a varying external input, in effect, potentially also leading to a superposition of exponentials. However, avalanches – in contrast to Zipf patterns – are temporally extended, and thus the random external field is not sufficient to generate power law avalanche distributions. The spatio-temporal characteristics of avalanches require a temporally correlated external field. The effects of such a temporally correlated external field have been studied by Touboule & Destexhe [34]. They, in analogy to our study, applied a time-varying external field r(t) to all Poisson neurons. They chose one specific r(t), namely, an Ornstein–Uhlenbeck (OU) process, which they realized with a long correlation time τ compared to the bin size Δt of the avalanche analysis. (They chose τ = 1/α = 1 at simulation steps Δt = 0.0001; this corresponds to τ’ =104 at Δt′ = 1, and implies a very small distance to criticality α′ = 10−4). Thereby, the OU process introduces correlations among neurons and in time, and the resulting avalanche distributions display power laws with a cutoff. Overall, this choice of parameters makes the OU process more similar to our critical branching process than to a HPP [35].

Time varying external input may induce additional correlations not only for neural systems, but also in other collective systems, like the dynamics of flocks, which are subject to wind fluctuations and time varying external cues, or the dynamics of disease propagation that can be influenced seasonally, by weather conditions and by travel patterns. For all such systems, careful analyses are required to disentangle the external input from the internally generated dynamics. A classic example is that of solar flares, which evolve in cycles. Their inter event intervals (IEI) show a heavy tailed distribution. The generation of the heavy tail is derived from superposition of exponential distributions arising from different event rates [36,37], in analogy to the derivations here (Fig 3B).

For the generation of power laws from IPPs, we assumed that some external mechanism, the drive, makes the Poisson neurons fire with a fixed rate for a certain time interval, and then with a different rate for another time interval. For the simulations, the changes in r were assumed to be abrupt to allow for analytical treatment. However, the rate changes can also be slow and continuous. The important constraint is that the rates change slowly compared to the duration of an avalanche. In past studies, avalanches typically lasted a few milliseconds or tens of milliseconds (depending on the rate and bin size) [4,12,13,24,30]. Thus, any change in r of seconds can be considered “slow.” If the rate changed on very fast time scales, much shorter than typical avalanche durations, then the process would resemble an HPP with regard to the avalanche analysis. An example of a slowly varying drive is depicted in Fig 5, where we simulated a simple time-varying input, specifically, a sinusoidal with mean rate 1, amplitude 1, and a slow period of about four minutes. With this naïve choice of parameters, the avalanche size distribution approximated a power law with an exponent of -1.5 over three orders of magnitude (Fig 5A), and the numerical and analytical results still showed a good match (Fig 5B).

Fig 5.

(Color) Avalanche size and duration distributions obtained for a continuously varying IPP are well approximated by power-law distributions with an exponent of -1.5 (A), and are well approximated by the analytical results, shown for bin size 1 (B). The IPP was realized as a sinusoidal with period T=250s and offset 1 (i.e. sin(t/T)+1), as sketched in the inset. The resulting mean rate is unity. Colored lines correspond to different bin sizes, circles depict analytical results, and the dashed black line depicts a reference power law with an exponent of -1.5.

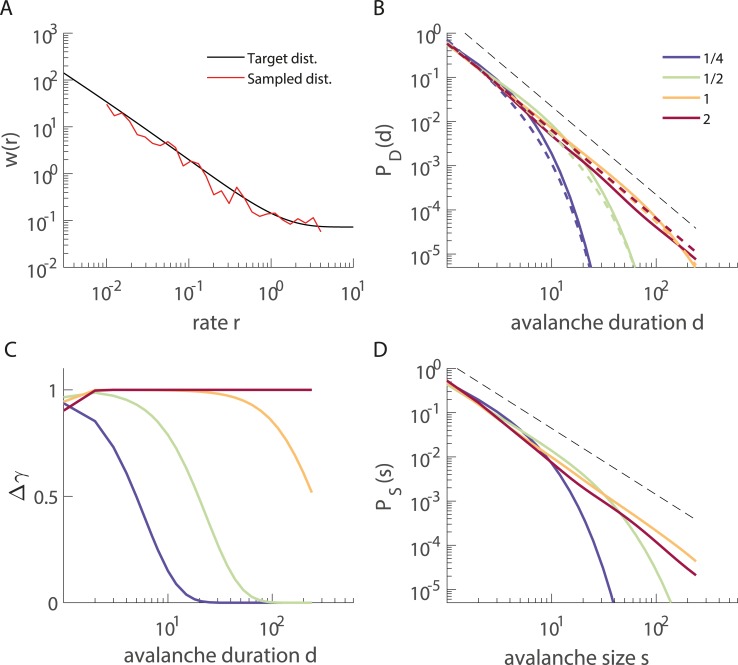

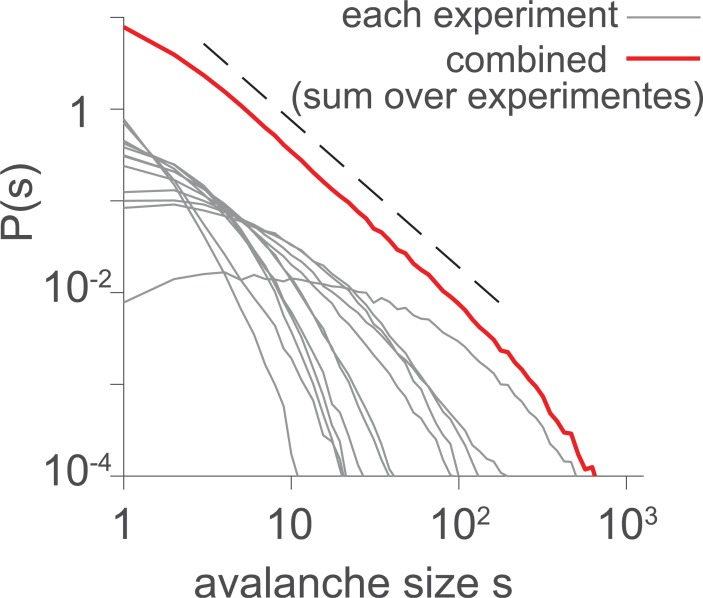

A power law could also arise from combining avalanche distributions from different experiments that differ in the mean event rate. Each recording might show an exponential distribution, but as the rates differ, the decay rates of the exponentials would differ, and adding them could yield approximate power law scaling. This effect is illustrated in Fig 6, where avalanche size distributions from 12 spike recording sessions in macaque monkeys were plotted both individually (gray) and in a combined manner (red). The data sets are precisely the same as those in [30,35]. The size distribution P(s) does not approximate a power law for any of the individual experiments, but combining the data from all twelve recording sessions yields a power law extending over more than two orders of magnitude. This is because each recording shows a different population spike rate, which translates to diverse decay behavior of P(s). Thus, it is evident that avalanche distributions from different experiments should not be combined into distributions by simple averaging. In contrast, an experiment in which the rate diversity lies in the Poisson neurons does not yield approximate power laws: If each neuron spikes with a different, constant Poisson rate, then the overall process is again an HPP with a firing rate equal to the sum of the individual rates.

Fig 6. (Color) This graph illustrates that combining a number of size distributions recorded in different sessions or in different animals can easily yield approximate power laws.

Depicted are the avalanche size distributions PS(s) from 12 spike recording sessions in Macaque monkeys used in [35] (gray; Δt = 4 ms), and from summing over the 12 individual PS(s) (red; plotted with offset). Dashed line: power law with slope -1.6.

Our current study of neural network dynamics using purely phenomenological models led us to ask: What can be achieved by using simple reduced models? We show here that such models offer an alternative explanation for power law generation: Instead of arising from critical networks, power laws can be imposed by a sophisticated drive with long time scales and large rate variations onto a set of unconnected Poisson neurons. Is this a better model for neural population dynamics? In terms of biophysical plausibility, certainly not: Single neuron dynamics are more complex than assumed here, and there is an abundance of connections between neurons and these connections are certainly used. Nonetheless, the phenomenological model allowed to disentangle the neural network dynamics generated within a network, and that imposed by external drive or input. A combination of the two determines the resulting population dynamics. Here we focused on the role of the external drive.

Long time scales have been observed in many studies (e.g., [2,4,38]). One argument for their emergence from within the network, and not from the external world, is that evidence for criticality has been found in isolated systems: in vitro networks clearly lack an external input but show evidence of internally generated criticality [4,10,11,24,39]. In vivo evidence for critical dynamics has also been provided for states with reduced input from the outside world, i.e., anesthesia and sleep in both animals and people [12,13,30]. In such a scenario, the long time scales could be imposed by input from a different part of the brain than the one recorded from, but these, in turn, need to generate the long time scales themselves. Thus, at least some brain areas need to generate the long time scales, e.g., by being close to criticality. In other words, the problem of generating long time scales is shifted only to a different entity than the one investigated, without solving the question about the origin of the long correlations. Importantly, the emergence of long time scales – indicative of near critical dynamics – has also been predicted in a detailed hierarchical model of the primate cortex [40].

A property of critical systems (with finite rate r) is the separation of timescales (STS). The STS imposes that the duration of an avalanche is typically much shorter than the pauses between avalanches. Assuming a certain rate r, a STS emerges in branching processes when approaching criticality. This is because the population rate r and the external input h obey the relation r = h/(1 − σ) = h/ϵ, where ϵ is the distance to the critical point. When approaching criticality (ϵ → 0), the drive rate h has to approach zero to assure a finite rate. Sufficiently close to criticality, the finite rate together with the diverging variance of the activity typically leads to long waiting times before a new avalanche is started and hence to a STS [35]. A STS implies that the avalanche size and duration do not change (much) when the bin size is changed, on condition that the bin size is shorter than the typical pauses (Fig 2E and 2F).

In experimental data, the waiting times or inter avalanche intervals (IAI), which are closely related to the inter event intervals (IEI) across all events, can reveal the nature of the external drive. For branching processes with Poisson drive, p(IEI) is exponentially distributed (Fig 3C). Different drives, however, would induce different IEI distributions. For the IPPs, for example, p(IEI) can resemble a power law (Fig 3B), or a Gamma distribution [36,37]. In experiments, both, approximate power laws [13,41,42], as well as exponential or gamma-like distributions [43,44] were observed. Thus the presence of a power-law distributed p(IEI) cannot prove a critical state, and the absence cannot rule out criticality. Similarly, temporal correlations between avalanche sizes have been observed in experiments and in some critical models, but not in all. Thus these correlations can narrow down the classes of generating models, but do not necessarily imply that the system is not critical.

Inference about the collective dynamics of a network in extended networks is further complicated if only a small fraction of all neurons can be sampled, or alternatively if one has to resort to coarse measures of neural activity such as LFP, EEG or MEG (coarse sampling) [35,39,43–45]. Currently, neural recordings in vivo are constrained by either subsampling or coarse sampling, and the biases that are potentially induced by sampling should be treated with care in any data analysis project. While no panacea exists to date to overcome these limitations, incorporating subsampling or coarse sampling to models, when comparing them to neural activity obtained from experiments is highly advisable. In fact, subsampling effects are already being implemented on a regular basis [13,14,30,35,43–46]. Recent advances have even provided an analytical understanding of subsampling-induced biases, which now allows us to correctly infer aggregated properties of a full system from an observed subset [35,39,47].

In conclusion, a non-critical system that is externally driven by a time-varying input can give rise to power law avalanche distributions resembling empirical distributions. The main requirements are that the rate envelope of the external drive changes sufficiently slowly in time, that it spans a wide enough range of rates, and that each rate contributes approximately for the correct fraction of time, given by w(r). An important question concerns the general mechanisms that could give rise to such slowly varying temporal envelops. Ironically, one potential general mechanism is critical dynamics, which exhibits slow time scales. In other words, a system of non-interacting or weakly-interacting elements that are driven by a critical system may be indistinguishable from a genuine critical system. Thus, from the point of view of Occam's razor, it may well be that an underlying critical system is still the most parsimonious explanation of the data.

Methods

Ethics statement

The experiments were performed according to the German Law for the Protection of Experimental Animals and were approved by the Regierungspräsidium Darmstadt. The procedures also conformed to the regulations issued by the NIH and the Society for Neuroscience. The recordings were used in earlier publications already [30,35,48].

Models

Homogeneous Poisson process

The spiking activity of our neural network model is simulated as a continuous-time, homogeneous (stationary) Poisson point process (HPP) with rate r. For avalanche analysis (see below), the process is transformed into discrete time steps by applying temporal bins Δt. The number of events A(t) = a at each interval [t,t + Δt) is then given by the Poisson distribution . This process depends only on the product r ⋅ Δt, and thus changes in r and changes in Δt – while keeping the other parameter constant – have identical effects. Thus, throughout the manuscript, without loss of generality, we set either Δt = 1 or r = 1 and vary only the other parameter. With Δt = 1, the rate is given in units of 1/Δt, and vice versa. In general, for any HPP, applying the same bin size relative to the rate yields exactly the same results.

For the standard avalanche analysis, which was introduced by Beggs & Plenz 2003 and is based on temporal binning, it is sufficient to generate just one random process A(t) to represent the activity of any N Poisson units, because the avalanche analysis does not require knowledge about the identity of the units (e.g., neuron, electrode, channel, or voxel): It combines the activity of all units into a single population activity vector A(t). To compare the Poisson process A(t) to neural activity, one can assume that Δt = 1 ms and r = 1 kHz represents, for example, N = 100 independent Poisson neurons that each spike at rate 〈ri〉 = 10 Hz, or N = 256 EEG channels with an event rate on each channel of 〈ri〉 ≈ 3.9 Hz. Each of the units or channels can have a different rate; the only relevant parameter for Poisson activity is the rate r across all units: . We note that in addition to the conventional definition of avalanches using temporal binning, there are alternative definitions that assume spatial proximity and thus require knowledge about the identity of the units [49,50], or that make use of thresholding to separate one avalanche from another in the absence of clear pauses [51–53]. Here, we focus only on the classical temporal binning definition.

Inhomogeneous Poisson process

In many systems, such as the brain, it is conceivable that the event rate changes with time, i.e., r = r(t). For the analytical derivations, we assume that the rate changes slowly compared to the actual duration of the avalanches. In the example process that we use here, the rate r(t) assumed for a period of 250,000 time steps (≈ 4 min at Δt = 1 ms) one of four different equiprobable rates, r = {1 2 5 10}/Z, where Z =18 assures that 〈r(t)〉 = 1 without loss of generality.

Critical branching process

In the context of criticality, activity propagation in the brain is commonly simulated using a branching model or branching process (BP) [4,30,31,35,39,43,45,54–56]. In a BP, each active unit i activates with some probability each of its postsynaptic units in the next time step. More precisely, each active unit i activates in the next time step Yt,i = y units (called offsprings), where Y is a non-negative, integer random variable [constraints: P(Y = 0) > 0; P(Y = 0) + P(Y = 1) < 1]. Each of these activated units in the next time step again activates y units, leading to a cascade or avalanche-like propagation of activity. The dynamics of the process is defined by the control parameter σ = ∑yP(y) ⋅ y = 〈Y〉. For σ < 1 (> 1) the process is subcritical (supercritical), and for σ = 1 it is critical. Here, we realize the BP such that each active neuron activates with probability q = σ/k one of its k =2 postsynaptic neurons, i.e., P(y=0) = (1-q)2, P(y=1) = 2 q(1-q), P(y=2) = q2, and P(y>2) = 0. Note that all relevant measures in this paper are independent of the precise choice of the offspring distribution and depend only on σ. The number of events A(t) at each time step t is described as:

| (17) |

The external drive, ht, starts new “avalanches” with mean rate h. Here, we choose ht to be 1 with probability h and zero otherwise. Given h > 0, the BP exhibits stationary dynamics in the subcritical regime (σ < 1), whereas in the supercritical regime it displays on average exponential growth, as expected. At criticality (σ = 1), it grows linearly. We choose a branching process with drive to approximate the dynamics of neural networks at criticality; this choice offers a number of advantages, apart from providing an elegant approximation of neural activity propagation: (a) It does not need to be mapped on a grid and thereby avoids finite size effects, (b) the distance ε to the critical point is well defined as ε = 1 − σ, and (c) the rate r of the stationary (subcritical) processes can be matched to that of empirical data or of other processes by adjusting the drive strength: h = r ⋅ ε.

In contrast to the Poisson processes, the BP is implemented on discrete time. To simulate a BP close to criticality but still in the stationary (subcritical) regime, we chose a fairly small distance to criticality ε = 1 − σ = 0.001. To make the BP comparable to the Poisson processes, we implement it with time steps of 0.25ms, and r = 1 kHz, where r = 〈A(t)〉. That rate is realized by using a drive h = r ⋅ ε = 0.001 per time step. Thus, on average, starting four new avalanches per second leads to an overall rate of 1 kHz. Recall, the units in (kHz) or (ms) are only for comparison to neural recordings and can be neglected.

Experimental data—Spike recordings

The recording sessions are the same as in Priesemann et al. [30] and in Wilting & Priesemann [35]. The relevant details can be found in those articles and in the original publication of Pipa et al. [48]. In brief, spikes were recorded simultaneously from up to 16 single-ended micro-electrodes or tetrodes in the lateral prefrontal cortex of each of three trained macaque monkeys. For each recording, avalanches were extracted as described below, using a bin size of Δt = 4 ms. In this study, we did not acquire new data but re-used data that had previously been recorded for different purposes. All relevant data are presented in this paper and in the Supporting Information files.

Measures

Below we briefly review the definitions of avalanche measures and other time series measures. All definitions follow the standard definitions in the field. Most measures depend on the bin size Δt, and hence Δt introduces the relevant time scale for the time series.

To define avalanches, events of all recorded units are combined into a single time series A(t), which describes the instantaneous population rate (Fig 7). To segment this time series into avalanches, temporal binning is applied. An avalanche is thus defined as a sequence of non-empty time bins, preceded and followed by at least one empty bin [4]. The avalanche size s is the total number of events in the avalanche, and the avalanche duration d is the number of non-empty bins in the sequence. Both quantities are expected to follow power law distributions with characteristic exponents if a system is critical [4,54]. The average avalanche size given a duration d is denoted by . The inter-event intervals (IEI) are defined as the time differences between subsequent events in the population rate vector A(t). The probability of observing A = a events in a time bin is denoted by PA(a|Δt) or simply by PA(a). The Fano factor F is defined as the variance of the binned signal, divided by the mean. Both A and F depend on the bin size. Finally, the spike count ratio, Q, is defined as the ratio of events in the ith bin, A(t = i|Δt), and the previous bin, A(t = i − 1|Δt), averaged over all bins with A(t = i − 1|Δt) > 0:

| (18) |

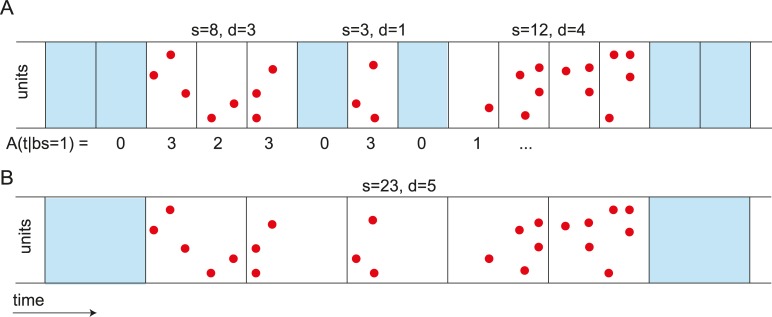

Fig 7. (Color).

Avalanche Definition. A. For the avalanche analyses, events from all channels or units are combined into a single vector of activity per bin, A(t|Δt), which is a function of the bin size (Δt). An avalanche is defined as the set of events in a sequence of non-empty time bins. Empty time bins are denoted in blue, events in red. The avalanche size s is defined as the total number of events in an avalanche, the avalanche duration d is defined as its length in bins (depicted above the raster plot). B. With changing the bin size, avalanche measures can also change (modified from [30]).

The measure Q is equivalent to the so-called “branching parameter” in Beggs & Plenz (2003) and subsequent studies; however, since the measure does not necessarily return the “branching parameter” of a branching process [25,30], we opted to give it a different name to avoid confusion.

Analytical treatment

Derivation of avalanche size and duration distributions for a fixed-rate continuous time Poisson process

We assume that a sequence of independent discrete events is generated by a fixed-rate (homogeneous) Poisson process. The rate of the process is denoted by r.

A cascade or avalanche is defined as a sequence of consecutive time bins in which there was at least one event (Fig 7). The number of time bins in the sequence is the duration, denoted by d, and the total number of events is the size, denoted by s. Our goal is to calculate the size distribution, PS(s).

We first calculate the duration distribution, PD(d):

The probability of an empty bin is p0 = e−rΔt, and the probability of a non-empty bin is p = 1 − e−rΔt.

For simplicity and without loss of generality, we assume a time bin of one time unit, Δt = 1, which gives

Due to the independence of different time bins, the probability of obtaining a sequence of d non-empty bins between two empty bins is proportional to . The normalization factor is the sum of a geometric series

Thus, the duration probability is given by

The number of events in a single bin of a cascade, A, must be 1 or more. Thus, the distribution of the number of events in a single bin, Δt, is a renormalized Poisson distribution, which excludes the possibility of having 0 events:

where the normalization factor is given by

In the last step, we have used the fact that the sum is the Taylor expansion of the exponential function excluding the first term. We thus obtain:

| (19) |

The total number of events in a cascade of duration d is the sum of d independent variables obeying the distribution PA≥1(a):

The distribution of s given the duration d can be calculated from the corresponding generating function. The generating function of PA≥1(a) is given by:

The generating function of a sum of independent random variables is the product of the underlying generating functions, yielding

We define

Taking all this together and using the properties of a probability generating function, we obtain

To obtain the derivatives of f, we note that f is very similar in structure to

the probability generating function of Stirling numbers of the second kind, [57]. These numbers describe the number of surjective ("onto") mappings of a set containing n elements onto a set containing k elements when n ≥ k (i.e., the number of mappings such that each of the k elements contains at least one of the n elements). They can be obtained from the generating function by

Thus, the sth order derivative of f at z = 0 is given by:

where

This gives

The size distribution now can be expressed as

We next calculate the mean avalanche size as a function of the duration, . We first note that the mean number of events in a non-empty bin, a, satisfies

Extracting a and multiplying by the duration, d, yields:

The mean avalanche size across all durations is given by:

The mean avalanche duration is given by:

We note that for a power-law distribution with no cutoff and an exponent larger than -2, the mean avalanche size diverges. However, for a fixed-rate Poisson process, the distribution is not heavy tailed and the mean avalanche size is well defined.

The avalanche rate, i.e., the number of avalanches per time unit at a given rate is:

Exponential approximation

In general, the size distribution is non-monotonic. However, numerical simulations indicate that at large avalanche sizes the size distribution is approximately exponential, PS(s)~e−λs. We are interested in quantifying the dependence of the exponent on the rate of the underlying homogeneous Poisson process. Formally, the exponent can be estimated by evaluating

For a given s, the Stirling numbers obtain a single maximum value [57]. For a large s, the point at which the maximum is obtained and the maximum value itself can be approximated by

and

When summing over all values of d, the dominant contribution comes from d*, and the sum can be replaced by this dominant term. Using the above approximation and the Stirling approximation for factorial log n! ≈ nlog(n) − n, we obtain:

To estimate λ, we need to consider each term in the difference log PS(s) − log PS(s + 1) and evaluate its limit as s → ∞.

The first term vanishes in the difference, and for the second term the difference is −log r. For the third term, the limit of the difference is 0:

For the fourth term, the limit of the difference is -1:

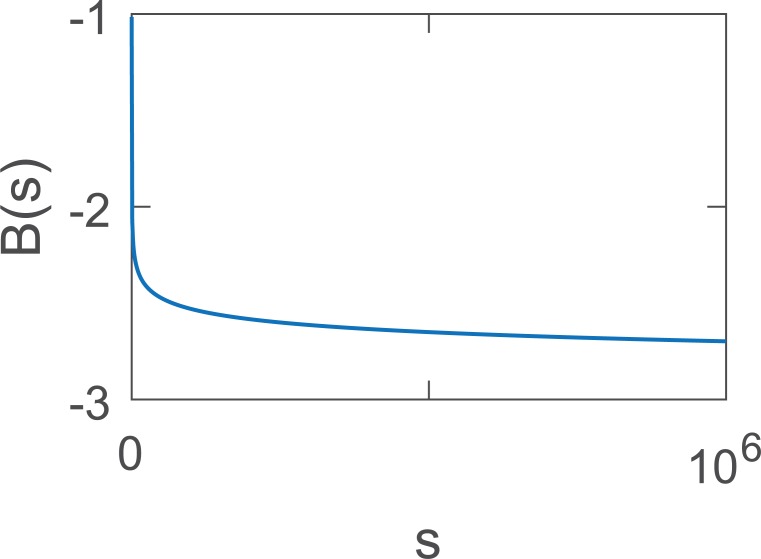

The last term changes very slowly with s due to the double log (Fig 8). Let us define it as:

Fig 8. The function B(s) approaches its limit lims→∞ B(s) = −∞ very slowly, and thus can be approximated by a constant for large intervals of s.

The limit of this term is −∞:

This relation shows that the size distribution is not strictly exponential but rather deviates slowly from a perfect exponential. Nevertheless, in practice one can replace B(s) → B(sc), where sc is a representative value for the relevant range of sizes.

Taking all this together, we obtain the following approximation for the dependence of the exponent on the rate of the underlying Poisson process:

Thus, at large sizes s, the distribution can be approximated by:

To obtain a normalized distribution, λ must be positive. Thus, the following condition must be satisfied:

For sizes around 1000, B ≅ −2, giving r < 0.046. In other words, the exponential approximation is valid only for relatively small rates.

Avalanche size and duration distributions for an inhomogeneous Poisson process

For an inhomogeneous (time-dependent) Poisson process (IPP), the avalanche size distribution can be calculated by summing up the contributions from the different rates involved, assuming that the rate of the IPP changes slowly. To emphasize the dependence on the rate, we now explicitly denote the avalanche size distribution for a fixed-rate Poisson process (HPP) by PS(s|r). Given the temporal envelope of the rate, we denote the probability density function of the rates by w(r). The rate of avalanches during a Poisson process with a fixed-rate r is denoted by ρ(r) and derived above. The full avalanche size distribution of the IPP is given by

This expression represents the portion of avalanches of size s out of the total number of avalanches of all sizes.

The duration distribution can be calculated in a similar way to the size distribution, giving:

The dependence of the mean avalanche size on the duration is given by:

The variance of the number of events A in a single bin is given by

The Fano factor F of the number of events in a single bin is given by

Derivation of the rate distribution that gives rise to a power law duration distribution

As shown above, for an HPP, the duration distribution is an exponential distribution, and the corresponding size distribution is approximately exponential. An IPP can give rise to a power law distribution if the distribution of its underlying rates, w(r), has a specific form. Below we derive the rate distribution that gives rise to a power-law duration distribution.

Using the expressions for ρ(r) and PD(d|r), we obtain:

We next define

Changing the integration variable from r to μ, we obtain

| (20) |

We next show that a superposition of exponential distributions with a power law weighting function can yield a power law distribution. This distribution can be derived from the properties of the gamma function. The lower incomplete gamma function satisfies the following relationship:

where the lower incomplete gamma function is defined as:

Changing the integration variable to μ = t/d, we obtain:

| (21) |

Comparing Eqs (20) and (21), we obtain the following expression for the weighting function:

The resulting duration distribution is then given by:

where r1 and r2 are the lower and upper bounds of the rate distribution, respectively. Note that the lower rate,r1, is associated with the upper μ value and vice versa. Taking the limit r1 → 0, r2 → ∞, would yield a perfect power law. However, in this limit w(r) cannot be normalized. Moreover, in practice, there would be some lower and upper bounds to the rate distribution, and hence the duration distribution would deviate from a perfect power law.

For critical branching processes, the exponent of the duration distribution is β = 2, which can be obtained by using

Derivation of the spike count ratio Q

We here derive the dependence of the spike count ratio Q on the rate r of a Poisson process (which is equivalent to changes in the bin size). Assuming Δt = 1, the general definition of Q is

where Pk,l(k|l) is the probability of having k events in a bin and l events in the previous bin. Note that the sum over l starts only at 1, by definition of Q.

For Poisson processes, the probabilities for obtaining A = k or A = l events in a time bin is independent of the number in the previous bin. Therefore, for Poisson processes QP(r) becomes:

where PA≥0(k) is the regular Poisson distribution and PA≥1(l) is the renormalized Poisson distribution, which appears in Eq (19). Inserting these relations and executing the sums, we obtain:

γ is the Euler-Mascheroni constant (γ ≈ 0.577) and is the exponential integral function.

Note that here, as usual, r can be exchanged with Δt assuming Δt = 1 or r = 1, respectively. Thus QP(r) = QP(r|Δt = 1) = QP(Δt|r = 1). More generally,

Supporting information

Spike avalanche distributions from recordings in prefrontal cortex of macaque monkey. Details on the recordings can be found in Priesemann et al., 2014 [30].

(ZIP)

Acknowledgments

We thank Matthias Munk for sharing in vivo spike recordings of macaque monkeys with us.

Data Availability

All relevant data are within the paper and its Supporting Information files.

Funding Statement

VP received financial support from the Max Planck Society, the German Ministry for Education and Research (BMBF) via the Bernstein Center for Computational Neuroscience (BCCN) Göttingen under Grant No. 01GQ1005B, and by the German-Israel- Foundation (GIF) under grant number G-2391-421.13. OS received financial support from the Israel Science Foundation (Grant No. 754/14). The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

References

- 1.Dunkelmann S, Radons G. Neural Networks and Abelian Sandpile Models of Self-Organized Criticality. Proceedings of International Conference Artificial Neural Networks. 1994.

- 2.Linkenkaer-Hansen K, Nikouline VV, Palva JM, Ilmoniemi RJ. Long-Range Temporal Correlations and Scaling Behavior in Human Brain Oscillations. J Neurosci. 2001;21: 1370–1377. 11160408 [Google Scholar]

- 3.Eurich CW, Herrmann JM, Ernst UA. Finite-size effects of avalanche dynamics. Phys Rev E. 2002;66: 066137. [DOI] [PubMed] [Google Scholar]

- 4.Beggs JM, Plenz D. Neuronal avalanches in neocortical circuits. J Neurosci. 2003;23: 11167–11177. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Levina A, Herrmann JM, Geisel T. Dynamical synapses causing self-organized criticality in neural networks. Nat Phys. 2007;3: 857–860. [Google Scholar]

- 6.Boedecker J, Obst O, Lizier JT, Mayer NM, Asada M. Information processing in echo state networks at the edge of chaos. Theory Biosci. 2012;131: 205–213. doi: 10.1007/s12064-011-0146-8 [DOI] [PubMed] [Google Scholar]

- 7.Shew WL, Plenz D. The functional benefits of criticality in the cortex. The Neuroscientist. 2013;19: 88–100. doi: 10.1177/1073858412445487 [DOI] [PubMed] [Google Scholar]

- 8.Shew WL, Yang H, Yu S, Roy R, Plenz D. Information capacity and transmission are maximized in balanced cortical networks with neuronal avalanches. J Neurosci. 2011;31: 55–63. doi: 10.1523/JNEUROSCI.4637-10.2011 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 9.Shriki O, Yellin D. Optimal Information Representation and Criticality in an Adaptive Sensory Recurrent Neuronal Network. PLoS Comput Biol. 2016;12: e1004698 doi: 10.1371/journal.pcbi.1004698 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 10.Pasquale V, Massobrio P, Bologna LL, Chiappalone M, Martinoia S. Self-organization and neuronal avalanches in networks of dissociated cortical neurons. Neuroscience. 2008;153: 1354–1369. doi: 10.1016/j.neuroscience.2008.03.050 [DOI] [PubMed] [Google Scholar]

- 11.Tetzlaff C, Okujeni S, Egert U, Wörgötter F, Butz M. Self-Organized Criticality in Developing Neuronal Networks. PLoS Comput Biol. 2010;6: e1001013 doi: 10.1371/journal.pcbi.1001013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 12.Hahn G, Petermann T, Havenith MN, Yu S, Singer W, Plenz D, et al. Neuronal avalanches in spontaneous activity in vivo. J Neurophysiol. 2010;104: 3312–3322. doi: 10.1152/jn.00953.2009 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Priesemann V, Valderrama M, Wibral M, Le Van Quyen M. Neuronal Avalanches Differ from Wakefulness to Deep Sleep–Evidence from Intracranial Depth Recordings in Humans. PLoS Comput Biol. 2013;9: e1002985 doi: 10.1371/journal.pcbi.1002985 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Shriki O, Alstott J, Carver F, Holroyd T, Henson RN, Smith ML, et al. Neuronal Avalanches in the resting MEG of the human brain. J Neurosci. 2013;33: 7079–7090. doi: 10.1523/JNEUROSCI.4286-12.2013 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 15.Arviv O, Goldstein A, Shriki O. Near-Critical Dynamics in Stimulus-Evoked Activity of the Human Brain and Its Relation to Spontaneous Resting-State Activity. J Neurosci. 2015;35: 13927–13942. doi: 10.1523/JNEUROSCI.0477-15.2015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 16.Tagliazucchi E, Balenzuela P, Fraiman D, Chialvo DR. Criticality in large-scale brain FMRI dynamics unveiled by a novel point process analysis. Front Physiol. 2012;3: 15 doi: 10.3389/fphys.2012.00015 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Arviv O, Medvedovsky M, Sheintuch L, Goldstein A, Shriki O. Deviations from Critical Dynamics in Interictal Epileptiform Activity. J Neurosci. 2016;36: 12276–12292. doi: 10.1523/JNEUROSCI.0809-16.2016 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Palva JM, Zhigalov A, Hirvonen J, Korhonen O, Linkenkaer-Hansen K, Palva S. Neuronal long-range temporal correlations and avalanche dynamics are correlated with behavioral scaling laws. Proc Natl Acad Sci. 2013;110: 3585–3590. doi: 10.1073/pnas.1216855110 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Newman ME. Power laws, Pareto distributions and Zipf’s law. Contemp Phys. 2005;46: 323–351. [Google Scholar]

- 20.Bak P, Tang C, Wiesenfeld K. Self-organized criticality: An explanation of 1/f noise. Phys Rev Lett. 1987;59: 381–384. doi: 10.1103/PhysRevLett.59.381 [DOI] [PubMed] [Google Scholar]

- 21.Pruessner G. Self-Organised Criticality: Theory, Models and Characterisation. Cambridge University Press; 2012. [Google Scholar]

- 22.Taylor TJ, Hartley C, Simon PL, Kiss IZ, Berthouze L. Identification of criticality in neuronal avalanches: I. A theoretical investigation of the non-driven case. J Math Neurosci JMN. 2013;3: 1–26. doi: 10.1186/2190-8567-3-1 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Sethna JP, Dahmen KA, Myers CR. Crackling noise. Nature. 2001;410: 242–250. doi: 10.1038/35065675 [DOI] [PubMed] [Google Scholar]

- 24.Friedman N, Ito S, Brinkman BA, Shimono M, DeVille RL, Dahmen KA, et al. Universal critical dynamics in high resolution neuronal avalanche data. Phys Rev Lett. 2012;108: 208102 doi: 10.1103/PhysRevLett.108.208102 [DOI] [PubMed] [Google Scholar]

- 25.Priesemann V. Subsampling in Critical Systems. Library of the Johann Wolfgang Goethe-Universitaet, Frankfurt: 2013. [Google Scholar]

- 26.Tkačik G, Marre O, Amodei D, Schneidman E, Bialek W, Berry MJ II. Searching for Collective Behavior in a Large Network of Sensory Neurons. PLoS Comput Biol. 2014;10: e1003408 doi: 10.1371/journal.pcbi.1003408 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 27.Soriano J, Martínez MR, Tlusty T, Moses E. Development of input connections in neural cultures. Proc Natl Acad Sci. 2008;105: 13758–13763. doi: 10.1073/pnas.0707492105 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Gautam SH, Hoang TT, McClanahan K, Grady SK, Shew WL. Maximizing sensory dynamic range by tuning the cortical state to criticality. PLoS Comput Biol. 2015;11: e1004576 doi: 10.1371/journal.pcbi.1004576 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Nonnenmacher M, Behrens C, Berens P, Bethge M, Macke JH. Signatures of criticality arise in simple neural population models with correlations. ArXiv160300097 Q-Bio. 2016; Available: http://arxiv.org/abs/1603.00097

- 30.Priesemann V, Wibral M, Valderrama M, Pröpper R, Le Van Quyen M, Geisel T, et al. Spike avalanches in vivo suggest a driven, slightly subcritical brain state. Front Syst Neurosci. 2014;8: 108 doi: 10.3389/fnsys.2014.00108 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 31.Haldeman C, Beggs JM. Critical branching captures activity in living neural networks and maximizes the number of metastable states. Phys Rev Lett. 2005;94: 058101 doi: 10.1103/PhysRevLett.94.058101 [DOI] [PubMed] [Google Scholar]

- 32.Schwab DJ, Nemenman I, Mehta P. Zipf’s law and criticality in multivariate data without fine-tuning. Phys Rev Lett. 2014;113: 068102 doi: 10.1103/PhysRevLett.113.068102 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 33.Aitchison L, Corradi N, Latham PE. Zipf’s Law Arises Naturally When There Are Underlying, Unobserved Variables. PLOS Comput Biol. 2016;12: e1005110 doi: 10.1371/journal.pcbi.1005110 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 34.Touboul J, Destexhe A. Power-law statistics and universal scaling in the absence of criticality. ArXiv150308033 Q-Bio. 2015; Available: http://arxiv.org/abs/1503.08033 [DOI] [PubMed]

- 35.Wilting J, Priesemann V. Inferring collective dynamical states from subsampled systems. Nat Commun. in press; Available: https://arxiv.org/abs/1608.07035 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 36.Wheatland MS, Sturrock PA, McTiernan JM. The Waiting-Time Distribution of Solar Flare Hard X-Ray Bursts. Astrophys J. 1998;509: 448 doi: 10.1086/306492 [Google Scholar]

- 37.Wheatland MS. The Origin of the Solar Flare Waiting-Time Distribution. Astrophys J Lett. 2000;536: L109 doi: 10.1086/312739 [DOI] [PubMed] [Google Scholar]

- 38.Murray JD, Bernacchia A, Freedman DJ, Romo R, Wallis JD, Cai X, et al. A hierarchy of intrinsic timescales across primate cortex. Nat Neurosci. 2014;17: 1661–1663. doi: 10.1038/nn.3862 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 39.Levina A, Priesemann V. Subsampling scaling. Nat Commun. 2017;8: ncomms15140. doi: 10.1038/ncomms15140 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 40.Chaudhuri R, Knoblauch K, Gariel M-A, Kennedy H, Wang X-J. A large-scale circuit mechanism for hierarchical dynamical processing in the primate cortex. Neuron. 2015;88: 419–431. doi: 10.1016/j.neuron.2015.09.008 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 41.Lombardi F, Herrmann HJ, Perrone-Capano C, Plenz D, De Arcangelis L. Balance between excitation and inhibition controls the temporal organization of neuronal avalanches. Phys Rev Lett. 2012;108: 228703 doi: 10.1103/PhysRevLett.108.228703 [DOI] [PubMed] [Google Scholar]

- 42.Lombardi F, Herrmann HJ, Plenz D, De Arcangelis L. On the temporal organization of neuronal avalanches. Front Syst Neurosci. 2014;8. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 43.Ribeiro TL, Copelli M, Caixeta F, Belchior H, Chialvo DR, Nicolelis MA, et al. Spike avalanches exhibit universal dynamics across the sleep-wake cycle. PLoS One. 2010;5: e14129 doi: 10.1371/journal.pone.0014129 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 44.Priesemann V, Munk MH, Wibral M. Subsampling effects in neuronal avalanche distributions recorded in vivo. BMC Neurosci. 2009;10: 40 doi: 10.1186/1471-2202-10-40 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.Ribeiro TL, Ribeiro S, Belchior H, Caixeta F, Copelli M. Undersampled Critical Branching Processes on Small-World and Random Networks Fail to Reproduce the Statistics of Spike Avalanches. PloS One. 2014;9: e94992 doi: 10.1371/journal.pone.0094992 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 46.Yu S, Klaus A, Yang H, Plenz D. Scale-Invariant Neuronal Avalanche Dynamics and the Cut-Off in Size Distributions. PLoS ONE. 2014;9: e99761 doi: 10.1371/journal.pone.0099761 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 47.Stumpf MP, Wiuf C, May RM. Subnets of scale-free networks are not scale-free: sampling properties of networks. Proc Natl Acad Sci U S A. 2005;102: 4221–4224. doi: 10.1073/pnas.0501179102 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 48.Pipa G, Städtler ES, Rodriguez EF, Waltz JA, Muckli LF, Singer W, et al. Performance-and stimulus-dependent oscillations in monkey prefrontal cortex during short-term memory. Front Integr Neurosci. 2009;3 Available: http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2766269/ [DOI] [PMC free article] [PubMed] [Google Scholar]

- 49.Scott G, Fagerholm ED, Mutoh H, Leech R, Sharp DJ, Shew WL, et al. Voltage imaging of waking mouse cortex reveals emergence of critical neuronal dynamics. J Neurosci. 2014;34: 16611–16620. doi: 10.1523/JNEUROSCI.3474-14.2014 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 50.Fagerholm ED, Scott G, Shew WL, Song C, Leech R, Knöpfel T, et al. Cortical entropy, mutual information and scale-free dynamics in waking mice. Cereb Cortex. 2016;26: 3945–3952. doi: 10.1093/cercor/bhw200 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 51.Poil S-S, Hardstone R, Mansvelder HD, Linkenkaer-Hansen K. Critical-State Dynamics of Avalanches and Oscillations Jointly Emerge from Balanced Excitation/Inhibition in Neuronal Networks. J Neurosci. 2012;32: 9817–9823. doi: 10.1523/JNEUROSCI.5990-11.2012 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 52.Larremore DB, Shew WL, Ott E, Sorrentino F, Restrepo JG. Inhibition causes ceaseless dynamics in networks of excitable nodes. Phys Rev Lett. 2014;112: 138103 doi: 10.1103/PhysRevLett.112.138103 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 53.Papa BD, Priesemann V, Triesch J. Criticality meets learning: Criticality signatures in a self-organizing recurrent neural network. PLOS ONE. 2017;12: e0178683 doi: 10.1371/journal.pone.0178683 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 54.Harris TE. The theory of branching processes Springer Verlag, Berlin; 1963. [Google Scholar]

- 55.Heathcote CR. A branching process allowing immigration. J R Stat Soc Ser B Methodol. 1965; 138–143. [Google Scholar]

- 56.Larremore DB, Carpenter MY, Ott E, Restrepo JG. Statistical Properties of Avalanches in Networks. Phys Rev E. 2012;85 doi: 10.1103/PhysRevE.85.066131 [DOI] [PubMed] [Google Scholar]

- 57.Rennie BC, Dobson AJ. On Stirling numbers of the second kind. J Comb Theory. 1969;7: 116–121. [Google Scholar]

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Spike avalanche distributions from recordings in prefrontal cortex of macaque monkey. Details on the recordings can be found in Priesemann et al., 2014 [30].

(ZIP)

Data Availability Statement

All relevant data are within the paper and its Supporting Information files.