Abstract

Background

In the cognitive-behavioral approach, Functional Behavioural Assessment is one of the most effective methods for identifying the variables that determine a problem behavior. In this context, modern technologies can support the collection and sharing of behavioral patterns, effective intervention strategies, and statistical evidence about the antecedents and consequences of clusters of problem behaviors, thereby supporting the design of function-based interventions.

Objective

The paper describes the development and validation process used to design a specific Functional Behavioural Assessment Ontology (FBA-Ontology). The FBA-Ontology is a semantic representation of the variables involved in a behavioral observation process; it facilitates the systematic collection of behavioral data, the consequent planning of treatment strategies and, indirectly, scientific advancement in this field of study.

Methods

The concepts and relationships of the ontology were derived from a gold standard, and the Functional Behavioural Assessment Ontology was then validated through a machine-based verification and a human-based assessment. These validation and verification processes aimed to determine how well founded the ontology is conceptually and how correct it is semantically and syntactically.

Results

The Pellet reasoner checked the logical consistency and the integrity of the classes and properties defined in the ontology and did not detect any violation of constraints in the ontology definition. To assess whether the ontology definition is coherent with the knowledge domain, a human evaluation was performed by asking 84 people to fill in a questionnaire of 13 questions assessing concepts, relations between concepts, and concepts’ attributes. The response rate for the survey was 29/84 (34.52%). The domain experts confirmed that the concepts, attributes, and relationships between concepts defined in the FBA-Ontology are valid and represent the Functional Behavioural Assessment process well.

Conclusions

The new ontology could be a useful tool for designing new evidence-based systems in Behavioral Intervention practice, encouraging links with other Linked Open Data datasets and repositories to provide users with new eHealth models focused on the management of problem behaviors. Further research is needed to develop and implement innovative strategies to improve the poor reproducibility and translatability of basic research findings in the field of behavioral assessment.

Keywords: ontology, behavioral interventions, functional behavioral assessment, eHealth care, evidence-based practice

Introduction

Background

Several studies and meta-analyses have found Behavioral Interventions (BI) to be effective, evidence-based strategies for reducing problem behaviors in school-age children [1-5]. Among BI, Functional Behavioral Assessment (FBA) is considered one of the most effective methods for identifying the antecedents and consequences that control a problem behavior [6] and for gathering information about the reason, or function, of a behavior [7]. In an FBA, data obtained through indirect measures, direct observation, and experimental manipulation of environmental variables contribute to formulating a functional hypothesis.

This hypothesis can then be used to design effective intervention plans aimed at reducing the reinforcement effect that specific antecedents and consequences could have in triggering and maintaining the problem behavior. For instance, children with Attention Deficit Hyperactivity Disorder (ADHD), one of the most common syndromes associated with behavior disorders, often show disruptive behaviors in class. If teachers do not implement appropriate instructional methodologies, a child with ADHD may have difficulty sustaining attention to a task, and this can trigger challenging classroom behavior.

Examples include calling out, leaving their seat, and frequent rule violations. If an FBA were applied in such a case, health professionals would probably hypothesize that the function “avoidance” motivates the child's behavior, as an attempt to get away from the frustrating task. Accordingly, they would suggest that teachers use an intervention plan composed of strategies aimed at increasing the student's task-oriented behaviors, such as breaking the task into smaller portions, reducing the task duration, using visual cues, and reducing the number of challenging tasks.

Comparing treatment outcomes, Newcomer and Lewis [8] demonstrated that behavior intervention plans based on FBA information (function-based) were more effective than behavior intervention plans not based on FBA information (non-function-based). This confirms the usefulness and importance of conducting an FBA to guide intervention plans based on the conscientious and explicit use of current best evidence [9].

The general tendency of the scientific community to open and share processes and results with anyone interested could be a further opportunity to corroborate the application of FBA as an Evidence-Based Practice (EBP). An EBP is a decision-making process that integrates the best available evidence, clinical expertise, client values, and context [10]. As suggested by Kazdin [11], clinical psychology “would profit enormously from codifying the experiences of the clinician in practice so that the information is accumulated and can be drawn on to generate and test hypotheses”. Transparency, openness, and reproducibility could be the lifeblood of the advancement of psychological science and of the dissemination of a more open research culture [12]. However, Scott and Alter [13] report that only a few scientific papers about FBA with an EBP approach can be found in the literature.

In this direction, Richesson and Andrews [14] explore how computer science could support the digitization and computation of information related to clinical processes, focusing on the representation of knowledge found in clinical studies and, in particular, on the role of ontologies.

In computer science, an ontology is a taxonomic description of the concepts in an application domain and the relationships among them [15], aimed at promoting knowledge generation, organization, reuse, integration, and analysis [16]. Ontologies are a powerful tool for accumulating knowledge in a specific domain, especially when shared terms and procedures are lacking.

Today, the use of ontologies in biomedical research is an established practice. For example, the Gene Ontology [17] provides researchers with extensive knowledge about the functions of genes and gene products. Also, the Open Biomedical Ontologies initiative provides a repository of controlled vocabularies to be used across different biological and medical domains [18]. However, computable information about behavioral disorders and mental illness is still dispersed. The lack of shared definitions and practices makes this information difficult to aggregate, share, and search when needed.

Recently, researchers have started to recognize the important role that ontologies can play in clinical psychology. The most interesting examples include (1) the Mental Disease Ontology [19], (2) the Mental Health Ontology [20], (3) the Mood Disorder Ontology [21], (4) the Autism Phenotype Ontology [22], (5) the Ontology of Schizophrenia [23], and (6) the Ontology to monitor mental retardation rehabilitation process [24].

In the domain of human behavior description, a successful example is the Ontology for human behavior models [25], created to trace what causes a person to take an action, the cognitive state associated with the behavior, and the effects of that action. However, at the time of writing, the authors had not identified, in the main international journals on medical information systems, any ontology specifically focused on behavioral disorders according to FBA methods. From this perspective, a Functional Behavioral Assessment Ontology (FBA-Ontology) could play a key role in the adoption of an evidence-based approach among behavioral experts, helping to fill the gap between research and practice still widely observed in clinical psychology [26]. In fact, data mining algorithms have great potential for identifying patterns in psychological data, facilitating decision-making processes and automatic meta-analysis.

This study presents the description and validation of the FBA-Ontology [27] as a semantic tool to support the systematic collection of behavioral knowledge and the decision-making process based on evidence and gathered data.

Methods

Methodological Approach

The FBA-Ontology was developed following the Uschold and King [28] methodology, which comprises the following guidelines: (1) identifying the ontology purpose, (2) capturing the concepts and the relations between them, (3) coding the ontology in a formal language, and (4) evaluating the ontology from a technical point of view. In addition, the authors of the present contribution added a human-based assessment, surveying 84 domain experts to check the formal structure of the ontology regarding taxonomy, relationships, and axioms. The following subsections describe each of these steps in detail.

Identification of the Ontology Purpose

The purpose of the FBA-Ontology is to describe the structure and semantics of Functional Behavioral Assessment methods. Gresham et al [29] define the FBA as “a collection of methods for gathering information about antecedents, behaviors, and consequences to determine the reason (function) of behavior.” The FBA derives from operant learning theories [30,31] and is commonly used in clinical and educational contexts to design effective intervention plans. These plans aim to reduce the reinforcement effect that specific antecedents and consequences could have in triggering and maintaining the problem behavior.

Capture the Concepts and the Relations Between the Concepts

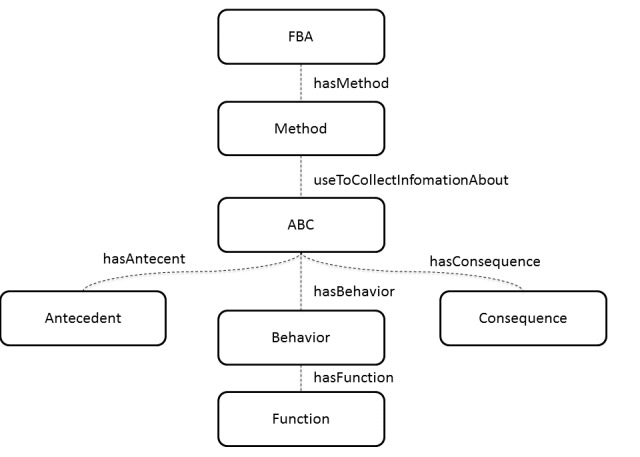

The concepts of the ontology were captured starting from the above-mentioned theoretical assumptions. The FBA-Ontology is, therefore, a collection of classes and properties describing the whole assessment process, which includes the definition of a target behavior, the collection of behavioral data, the hypotheses about the functions of the target behavior, and the planning of a behavioral intervention. In particular, the key concepts of the FBA-Ontology are FBA, Method, Antecedent, Behavior, Consequence, and Function (Figure 1).

Figure 1.

Key concepts at the basis of the FBA-Ontology.

According to Hanley [32], the FBA is a descriptive assessment including indirect and direct observation methods and measurements of a target behavior. The FBA-Ontology includes the Method class to specify the observation methods applied to the target behavior, which is itself specified in the class Behavior. Rating scales, questionnaires, and interviews are examples of indirect methods, because they do not require direct observation of the target behavior. Direct methods are based on descriptive assessments and systematic recordings of observation sessions. Descriptive assessments provide qualitative information about variables that may trigger or maintain a target behavior, while recording methods, such as systematic direct observation, provide quantitative information about the frequency, intensity, and duration of a target behavior during a specific time interval. The property isDirect, defined within the Method class, indicates whether each of the methods used is direct or indirect [33].
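
To make the role of isDirect concrete, the Method class could be mirrored in application code roughly as below. This is a minimal Python sketch, not the ontology's OWL definition: it assumes isDirect is a Boolean, and the class layout, the helper split_by_directness, and the example method names are hypothetical illustrations drawn from the categories named above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Method:
    """Hypothetical application-level mirror of the FBA-Ontology Method class."""
    name: str
    is_direct: bool  # assumed Boolean reading of the isDirect property


def split_by_directness(methods):
    """Return (direct, indirect) method names, preserving input order."""
    direct = [m.name for m in methods if m.is_direct]
    indirect = [m.name for m in methods if not m.is_direct]
    return direct, indirect


# Example methods taken from the categories described in the text.
methods = [
    Method("rating scale", is_direct=False),
    Method("interview", is_direct=False),
    Method("descriptive assessment", is_direct=True),
    Method("systematic direct observation", is_direct=True),
]

direct, indirect = split_by_directness(methods)
print("direct:", direct)
print("indirect:", indirect)
```

Keeping the flag on each method, rather than in two separate lists, lets one FBA mix any number of direct and indirect methods.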

The triad of classes Antecedent, Behavior, and Consequence encompasses the descriptors of the target behavior (Behavior) and the variables that trigger it (Antecedent) or maintain it (Consequence). The class Function defines the purpose that the problem behavior serves for the individual. According to Iwata and colleagues [34], the four behavioral functions are avoiding or escaping difficult tasks, gaining adult and peer attention, accessing a desired object or activity, and sensory stimulation. The FBA-Ontology encodes these functions as enumerated datatypes included in the Function class.
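
Similarly, the enumerated functions of the Function class, together with the is_main_function attribute listed in Table 2, might be represented as follows. This sketch assumes a Python Enum is an adequate stand-in for the OWL enumerated datatype; the member names (ESCAPE, ATTENTION, TANGIBLE, SENSORY), the FunctionAssignment class, and the helper main_functions are hypothetical.

```python
from dataclasses import dataclass
from enum import Enum


class BehaviorFunction(Enum):
    """The four behavioral functions listed by Iwata and colleagues."""
    ESCAPE = "avoid or escape difficult tasks"
    ATTENTION = "gain adult and/or peer attention"
    TANGIBLE = "access to a desired object or activity"
    SENSORY = "sensory stimulation"


@dataclass(frozen=True)
class FunctionAssignment:
    """Hypothetical link between a behavior and one hypothesized function."""
    category: BehaviorFunction
    is_main_function: bool = False


def main_functions(assignments):
    """Return the categories flagged as main functions, in input order."""
    return [a.category for a in assignments if a.is_main_function]


# Hypothetical hypothesis for the ADHD classroom example in the Introduction.
hypothesis = [
    FunctionAssignment(BehaviorFunction.ESCAPE, is_main_function=True),
    FunctionAssignment(BehaviorFunction.ATTENTION),
]
print([f.name for f in main_functions(hypothesis)])  # ['ESCAPE']
```

Restricting the category to an enumeration mirrors the closed list of functions in the ontology: a value outside the four categories cannot be constructed.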

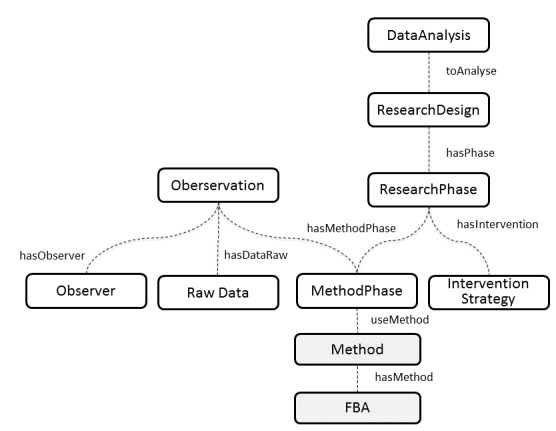

Unlike group experimental designs, in which researchers can randomly assign participants to control and treatment groups, observing a single subject generates data that can change over time or remain steady. To evaluate whether such a time series changes, the preferred and most popular research approach is single-case research design. Single-case research designs are a diverse and powerful set of procedures for demonstrating causal relationships among clinical phenomena [35]. Clinicians use three main research designs: case studies, quasi-experimental designs, and experimental designs. These differ in their increasing level of scientific rigor, ranging from anecdotal data gathered retrospectively to the maximum level of experimental control achievable in a laboratory setting. Dallery and Raiff [36] suggest single-case designs as a method for optimizing behavioral health interventions and for helping practitioners plan suitable interventions for both individuals and groups. The FBA-Ontology assumes that data about an observed behavior are collected according to the constraints of single-case research designs (Figure 2).

Figure 2.

Key concepts related to research methods and data collection.

Generally, single-case designs start with a baseline phase (A) in which the dependent variable is observed as it naturally occurs. Once the baseline is established, observation continues while the intervention (B) is implemented, and the two time series are compared to look for significant changes. This design is named AB. Other examples of single-case designs are ABA (adding a nontreatment condition to the AB design), ABAB (repeating the AB sequence twice), and BAB (implementing the intervention immediately for the safety of the person observed). The type of single-case design used during the FBA process can be specified in the property ResearchType of the ResearchDesign class, while the class ResearchPhase identifies the specific phase of the research design.
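
As an illustration of how ResearchType and ResearchPhase relate, a letter-coded design can be expanded into an ordered list of phases, each carrying the sequence_num attribute listed in Table 2. This is a hypothetical Python sketch: the helper phases_for and the convention that A marks a baseline or nontreatment phase and B an intervention phase are assumptions, and designs such as multiple baseline or changing criterion are rejected because they cannot be written as a plain A/B string.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ResearchPhase:
    """Hypothetical mirror of the ResearchPhase class."""
    sequence_num: int  # 1-based position of the phase within the design
    label: str         # "A" = baseline/nontreatment, "B" = intervention

    @property
    def is_intervention(self) -> bool:
        return self.label == "B"


def phases_for(research_type: str):
    """Expand a letter-coded design such as "ABAB" into ordered phases.

    Only designs written purely as A/B sequences are handled; multiple
    baseline or changing criterion designs need a richer model.
    """
    code = research_type.strip().upper()
    if not code or set(code) - {"A", "B"}:
        raise ValueError(f"not a letter-coded A/B design: {research_type!r}")
    return [ResearchPhase(i, letter) for i, letter in enumerate(code, start=1)]


for design in ("AB", "ABA", "ABAB", "BAB"):
    print(design, [(p.sequence_num, p.label) for p in phases_for(design)])
```

Deriving the phases from the design type keeps ResearchType and the ordered ResearchPhase individuals consistent with each other.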

The Observation class describes the data gathered during a research phase. This class is linked both to the class Observer (the person who carries out the observation) and to RawData (the data collected during the observation session). The class InterventionStrategy defines the set of strategies chosen to increase positive behaviors or decrease negative ones.
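
The links among Observation, Observer, and RawData can be sketched in the same hypothetical style. The sketch assumes that raw data are numeric counts per observation interval and that each observation session records the sequence_num of the phase in which it took place; the class fields and the helper data_by_phase are illustrative, not part of the published ontology.

```python
from collections import defaultdict
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Observer:
    name: str
    role: str  # "role" is the Observer attribute listed in Table 2


@dataclass
class Observation:
    """Hypothetical record of one observation session within a research phase."""
    phase_num: int   # sequence_num of the ResearchPhase being observed
    observer: Observer
    raw_data: list = field(default_factory=list)  # assumed: counts per interval


def data_by_phase(observations):
    """Pool the raw data of all sessions, keyed by phase number."""
    pooled = defaultdict(list)
    for obs in observations:
        pooled[obs.phase_num].extend(obs.raw_data)
    return dict(pooled)


teacher = Observer("teacher 1", role="teacher")
sessions = [
    Observation(1, teacher, [5, 6]),
    Observation(1, teacher, [7]),
    Observation(2, teacher, [2, 3]),
]
print(data_by_phase(sessions))  # {1: [5, 6, 7], 2: [2, 3]}
```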

Once gathered during the single-case design, the RawData can be analyzed to assess the statistical effect of the implemented intervention. Statistical methods such as non-parametric tests and time-series analysis are used to compare the data gathered during the different experimental conditions. The DataAnalysis class of the FBA-Ontology specifies which statistical methods are used to analyze the data.
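
The text does not specify which statistical methods the DataAnalysis class records. As one example of a non-parametric comparison between a baseline phase (A) and an intervention phase (B), the sketch below computes the Mann-Whitney U statistic by counting pairs, together with U divided by the number of pairs, a quantity closely related to the nonoverlap of all pairs (NAP) index used in single-case research. It is a sketch under stated assumptions: the data are hypothetical counts, the direction assumes the intervention aims to reduce the behavior, and it stops at the statistic, since a p-value needs reference tables or a statistics library, and serial dependence in single-case data can violate the test's independence assumption.

```python
def mann_whitney_u(baseline, intervention):
    """Mann-Whitney U for the baseline sample against the intervention sample.

    Counts, over all (baseline, intervention) pairs, how often the baseline
    value exceeds the intervention value; ties count as one half.
    """
    if not baseline or not intervention:
        raise ValueError("both phases need at least one data point")
    u = 0.0
    for a in baseline:
        for b in intervention:
            if a > b:
                u += 1.0
            elif a == b:
                u += 0.5
    return u


def nonoverlap_proportion(baseline, intervention):
    """U divided by the number of pairs: 1.0 means every intervention point
    lies below every baseline point (complete non-overlap for a reduction goal)."""
    return mann_whitney_u(baseline, intervention) / (len(baseline) * len(intervention))


baseline = [5, 6, 7]      # hypothetical counts of calling out, phase A
intervention = [2, 3, 6]  # hypothetical counts during the intervention, phase B
print(mann_whitney_u(baseline, intervention))                   # 7.5
print(round(nonoverlap_proportion(baseline, intervention), 3))  # 0.833
```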

To identify the constraints related to the concepts included in the FBA-Ontology, a set of competency questions was formulated (Table 1). Competency questions are requirements expressed as questions in natural language [37-39]. They play an important role in both ontology creation and validation: they support ontology development by enabling developers to identify the main elements and relationships within the selected domain, and they represent a starting point for a deeper evaluation at a later stage of development [40].

Table 1.

Competency questions formulated for the FBA-Ontology and their constraints.

| Competency question | Constraints |
|---|---|
| Which types of methods can be used to collect information about a behavior? | Direct, indirect |
| How many methods can an FBA^a have? | Unlimited number |
| How many functions can a behavior serve? | At least 1 |
| Which are the functions of a behavior? | Avoid or escape difficult tasks, gain adult and/or peer attention, access to a desired object or activity, or sensory stimulation |
| How many antecedents can a behavior have? | At least 1 |
| How many consequences can a behavior have? | At least 1 |
| How many single-case research designs exist? | Many, for example: AB, ABA, ABAB^b, multiple baseline, changing criterion |
| How many intervention strategies can be applied to reduce the occurrence of a behavior? | Unlimited number (examples: token economy, response cost, shaping, etc) |
| How many observers can a behavior have? | Unlimited number |
| Who gathers data about a behavior? | Only individuals of the class Observer |
| How many statistical methods can be applied to analyze the raw data? | Unlimited number |

^a FBA: Functional Behavioral Assessment.

^b AB is a design with a baseline phase followed by an intervention phase, with repeated measurements. ABA and ABAB are withdrawal designs: the intervention is concluded or stopped for some period of time before it is begun again.
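
Several constraints in Table 1 are cardinalities over the relations listed in Table 3 (hasFunction, hasAntecedent, hasConsequence). In OWL such constraints are typically written as minimum-cardinality restrictions, but because OWL reasoners apply the open-world assumption, a behavior with no recorded antecedent does not by itself make the ontology inconsistent. Applications that must reject incomplete records therefore usually add a closed-world check. The following Python sketch shows one such check; the flat BehaviorRecord class, the short function labels, and the helper constraint_violations are hypothetical.

```python
from dataclasses import dataclass, field

# Short, hypothetical labels for the four function categories in Table 1.
FUNCTION_CATEGORIES = {"escape", "attention", "tangible", "sensory"}


@dataclass
class BehaviorRecord:
    """Hypothetical flat record of one target behavior."""
    description: str
    functions: list = field(default_factory=list)
    antecedents: list = field(default_factory=list)
    consequences: list = field(default_factory=list)


def constraint_violations(record):
    """Return the Table 1 constraints that the record breaks (empty if none)."""
    problems = []
    if len(record.functions) < 1:
        problems.append("a behavior serves at least 1 function")
    unknown = set(record.functions) - FUNCTION_CATEGORIES
    if unknown:
        problems.append(f"unknown function categories: {sorted(unknown)}")
    if len(record.antecedents) < 1:
        problems.append("a behavior has at least 1 antecedent")
    if len(record.consequences) < 1:
        problems.append("a behavior has at least 1 consequence")
    return problems


ok = BehaviorRecord("calling out", ["escape"], ["difficult task"], ["task removed"])
bad = BehaviorRecord("leaving seat", ["boredom"], [], ["peer laughter"])
print(constraint_violations(ok))
print(constraint_violations(bad))
```

The "unlimited number" constraints need no check, since an unbounded list already satisfies them.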

Coding the Ontology Using a Formal Language

The Protégé tool was used to model the ontology and produce an OWL (Web Ontology Language) version of it. Protégé also includes reasoners that can be used to perform inferences and to verify the ontology.

The process of ontology verification is generally performed to check its syntactic quality and the presence of anomalies or pitfalls.

In the metric proposed by Burton-Jones and colleagues [41], syntactic quality is measured by assessing whether the source code is correctly structured and how richly the features of the ontology language are used to model the ontology.

Anomalies or pitfalls may concern the logical consistency of the ontology or modeling issues identified by comparison with well-known best practices [42]. Many automatic tools have been developed to facilitate ontology verification. For instance, XD Analyzer checks whether the ontology satisfies a set of best-practice criteria, showing errors, warnings, and suggestions useful for improving it. XD Analyzer is included in XD Tools [43] and is released as a plugin for Eclipse. Another useful recent tool is OOPS! [42], a Web-based tool aimed at identifying the most common anomalies in ontology development. It scans for 21 pitfalls grouped into 4 dimensions: human understanding, logical consistency, real world representation, and modeling issues. Many other tools are available, but their description is beyond the scope of the present paper. The FBA-Ontology created with Protégé was verified using the Pellet reasoner [44] to check the logical consistency of the ontology and the integrity of the classes and properties defined. The Pellet reasoner did not detect any violation of constraints in the ontology definition.

The Evaluation of the Ontology

Evaluating the quality of an ontology plays a key role throughout the ontology development process. As suggested by Gomez-Perez [45], evaluating an ontology should ensure that it correctly implements the expected requirements and performs correctly in the real world. Low-quality ontologies reduce the ability of intelligent agents to perform intelligent tasks accurately, because of inaccurate, incomplete, or inconsistent information [42]. The quality of an ontology can be assessed by evaluating how well its semantic structure represents the knowledge about a specific domain and the relationships among the identified concepts. Sabou and Fernandez [46] use the term “ontology validation” for comparing the ontology definitions with the frame of reference that the ontology is meant to represent.

According to these researchers, whereas ontology validation evaluates how well founded an ontology is and how accurately it corresponds to the real world, “ontology verification” evaluates whether the ontology has been built correctly.

A wide range of approaches and methodologies can be applied to perform ontology validation. Brank et al [47] grouped the most common methods into four categories: (1) methods comparing the ontology with a gold standard [48-53], (2) methods based on the inductive evaluation of the results obtained by applying the ontology [54-56], (3) methods comparing the ontology with resources specialized in the ontology domain [57,58], and (4) methods based on assessments provided by human experts [59-62].

The evaluation strategy adopted for the FBA-Ontology was based on human evaluation and aimed to assess whether the ontology definition is coherent with the knowledge domain. To this end, domain experts were asked to check the formal structure of the ontology regarding taxonomy, relationships, and axioms.

To let FBA domain experts assess the ontology, the authors created a questionnaire of 13 questions covering three aspects of the ontology. For concepts, experts rated how much they agreed with a set of 12 definitions on a 5-point Likert scale (from strongly agree to strongly disagree). For relations between concepts, experts rated the appropriateness of 6 statements describing the links between the main concepts of the ontology on the same 5-point Likert scale. For concepts’ attributes, experts rated how strongly 6 concepts were related to 9 attributes on a 5-point Likert scale (from strongly related to unrelated).

Moreover, the questionnaire included demographic questions about the sex, age, and level of expertise of the respondents. The following tables report the concepts and attributes (Table 2) and the relationships (Table 3) evaluated by the questionnaire items.

Table 2.

Concepts and attributes of the FBA-Ontology assessed during the human-based evaluation.

| Concept | Attributes |
|---|---|
| FBA^a | description |
| Behavior | description, setting (ie, school, home, etc), place |
| Function of a behavior | function_categories, is_main_function |
| Typologies of behavior’s functions | category (ie, social attention, avoidance, etc) |
| Methods to gather information about behaviors | hasDescription, isDirect |
| Behavioral intervention | type (reactive or proactive) |
| Intervention strategy | interventionType |
| Antecedent | description |
| Consequence | description |
| Observer | role |
| Research design | type (ie, AB, ABA, ABAB, etc)^b |
| Data analysis | statistical method, results |
| ResearchPhase | sequence_num |

^a FBA: Functional Behavioral Assessment.

^b AB is a design with a baseline phase followed by an intervention phase, with repeated measurements. ABA and ABAB are withdrawal designs: the intervention is concluded or stopped for some period of time before it is begun again.

Table 3.

Relationships between concepts of the FBA-Ontology assessed during the human-based evaluation.

| Relation name | Concepts in relation |
|---|---|
| hasPhase | Research Design-Research Phases |
| hasFunction | Behavior-Function |
| hasAntecedent | Behavior-Antecedent |
| hasConsequence | Behavior-Consequence |
| hasObserver | Observer-Observation |
| toAnalise | DataAnalysis-ResearchDesign |
| hasRawData | Observation-RawData |
| hasIntervention | Research Phase-InterventionStrategy |
| hasMethod | FBA^a-Method |
| useToCollectInformationAbout | Method-ABC^b |

^a FBA: Functional Behavioral Assessment.

^b ABC (Antecedent-Behavior-Consequence) is a chart used to collect information about a behavior occurring in a context.

Results

Principal Findings

A total of 29/84 (34.52%) people accessed and completed the survey. The mean age of the respondents was 32 (SD 6.34) years, with a range of 24-57 years. Most respondents were female (26/29, 89.66%). Participants had worked in the FBA domain for a mean of 5 (SD 6.87) years.

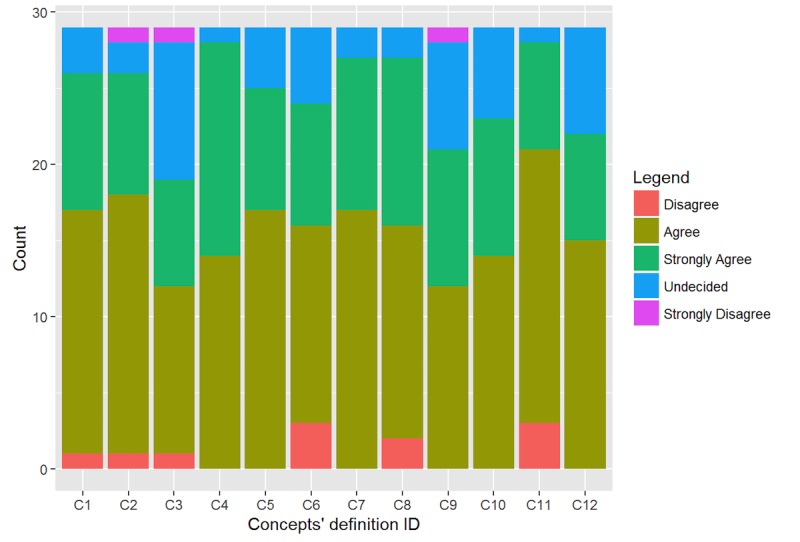

Figure 3 shows how the domain experts assessed the 12 concept definitions provided in the first section of the survey. Most responses confirmed the proposed definitions: across all 348 responses in this section (12 definitions × 29 respondents), the most frequent were “agree” (178/348, 51.15%) and “strongly agree” (107/348, 30.75%). Concept definitions 3 and 9 received the highest rates of undecided responses, 9/29 (31.03%) and 7/29 (24.14%), respectively. In both cases, the items were probably ambiguous and not straightforward for the experts. Definition 3 is: “The function of a behavior is the reason that motivates a behavioral topography.” This sentence seems to wrongly suggest that a behavioral topography depends on its function, whereas the authors intended to confirm that a function determines why a certain behavior occurs. Definition 9 is: “An observer is a person who registers qualitative and quantitative information about a behavior.” This item probably does not provide enough contextual information to respondents; it could be improved by adding information about FBA and the role of the observer in the data collection of single-case research designs.

Figure 3.

Evaluation of FBA-Ontology concepts.

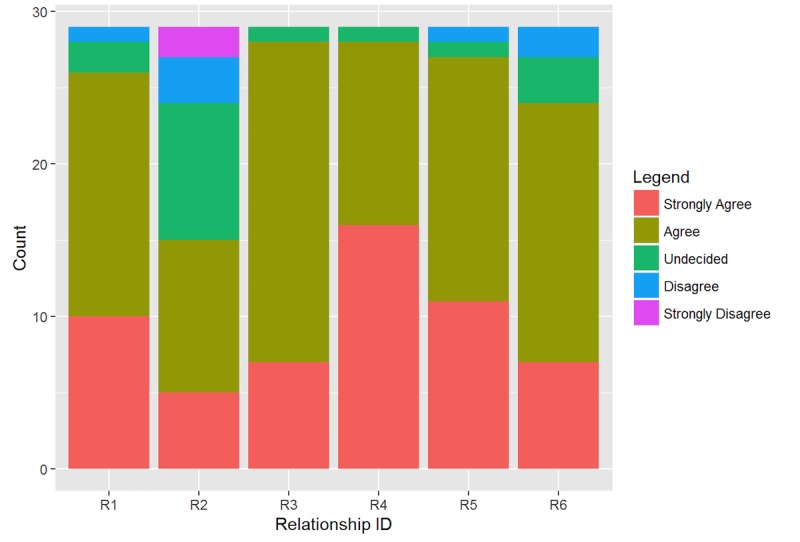

Figure 4 reports the experts’ evaluation of the correctness of the relationships between some of the most relevant concepts of the ontology. Across all 174 responses in this section (6 statements × 29 respondents), most were “agree” (92/174, 52.87%) or “strongly agree” (56/174, 32.18%) with the proposed statements. The most controversial relationship is number 2 (“A research phase must contain a minimum one measure”), which obtained the lowest agreement rate of the section (18/29, 62.07%) and a large share of undecided responses (9/29, 31.03%). Once again, rather than indicating a problem with the ontology structure, this result suggests that the item was not well expressed (the verb “contain” is not self-explanatory) and lacked contextual information about single-case research design.

Figure 4.

Evaluation of the relationships between FBA-Ontology concepts.

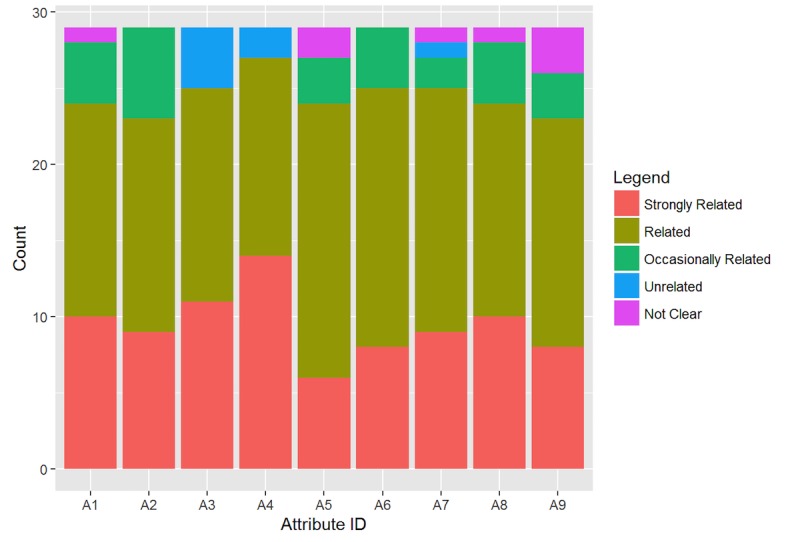

Finally, as shown in Figure 5, the experts consistently confirmed the relationships between the proposed concepts and their attributes. Across all 261 responses in this section (9 attributes × 29 respondents), most (220/261, 84.29%) were “related” (135/261, 51.72%) or “strongly related” (85/261, 32.57%). Only a minority of responses (41/261, 15.71%) did not confirm the proposed attributes.
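
The section-level percentages reported above are pooled over every item in a section rather than over the 29 respondents. Assuming that every respondent rated every item, the denominators are 12 × 29 = 348 responses for the concept definitions, 6 × 29 = 174 for the relationships, and 9 × 29 = 261 for the attributes. The counts in the sketch below are back-calculated from the reported percentages (they are the only whole numbers that reproduce them to two decimals); they are shown only to make the arithmetic checkable and are not additional data. The names sections and pooled_percentages are hypothetical.

```python
RESPONDENTS = 29

# (items in the section, responses per category), back-calculated from the
# reported percentages; items x respondents gives the pooled denominator.
sections = {
    "concepts": (12, {"agree": 178, "strongly agree": 107}),
    "relationships": (6, {"agree": 92, "strongly agree": 56}),
    "attributes": (9, {"related": 135, "strongly related": 85}),
}


def pooled_percentages(n_items, counts, respondents=RESPONDENTS):
    """Percentage of all item responses in a section falling in each category."""
    total = n_items * respondents
    return {label: round(100 * n / total, 2) for label, n in counts.items()}


for name, (n_items, counts) in sections.items():
    print(name, n_items * RESPONDENTS, pooled_percentages(n_items, counts))
```

Reporting a pooled share as x/29 would overstate the precision of per-respondent agreement, which is why the pooled denominator is made explicit here.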

Figure 5.

Evaluation of the FBA-Ontology attributes.

Discussion

Principal Findings

The FBA-Ontology describes the structure and the semantics of the FBA methods supporting the systematic collection of behavioral data, the definition of hypotheses about the function of a behavior, the consequential planning of treatment strategies, and the evidence-based evaluation of the efficacy of the applied treatments.

In the field of behavioral science, terms and labels are frequently mixed; this lack of common terms and shared definitions for interventions makes the aggregation of knowledge difficult [16]. An ontology, which provides a controlled vocabulary of agreed terms and their relationships, enables and facilitates new approaches in behavioral science. Data collected by experts are no longer used only in their own research but can be shared, compared, and integrated across experiments conducted by the whole research community. From this perspective, the FBA-Ontology represents a model able to promote the creation of new repositories and the integration and interlinking of Linked Open Data datasets in the field of BI. It represents an open approach to sharing and exchanging data, making common mechanisms of action explicit, collecting behavioral patterns, classifying contingency variables according to behavioral patterns, and monitoring the statistical evidence of behavioral interventions.

In addition, the FBA-Ontology could favor the development of new applications that support the collection of observational data in different life contexts and facilitate interaction among practitioners and caregivers. In general, the FBA-Ontology supports the integration of several data sources, thus constituting a key element for enhancing the value of the data themselves. In educational settings, innovative applications could improve Lifelong Learning opportunities for teachers, parents, and clinicians, spreading the use of behavioral observation practices, promoting home-school relationships, and reducing the gap between research and practice. The dissemination of a common communication language and the improvement of evidence-based decision-making processes would also be advantageous from this perspective.

Concerning ontology verification, a machine-based approach was applied to check the logical consistency of the FBA-Ontology and the integrity of its classes and properties, and no violation of constraints was detected. Moreover, the results of the questionnaire administered to domain experts confirmed that the concepts, attributes, and relationships between concepts defined in the FBA-Ontology are valid. These findings are particularly important for behavioral science because they contribute to improving class definitions and the comparability of operational definitions, and to enabling automatic and efficient meta-analyses and scientific syntheses, which, in turn, could be translated into clinical guidelines [16].

The FBA-Ontology, developed in the context of the Web Health Application for ADHD Monitoring (WHAAM) [63-65], could be a starting point for the systematic organization of behavioral knowledge and for the development of future eHealth systems devoted to spreading the digital use of evidence-based assessment practices. A limitation of this work is the lack of practical use cases in which the ontology has been adopted. This issue will be addressed in two recently approved European-funded projects in the framework of the Erasmus+ program.

These projects focus, respectively, on the management of social, emotional, and behavioral difficulties and on the promotion of positive behaviors at school, thus offering a suitable setting for further experimentation with the FBA-Ontology in a real environment. Finally, we aim to encourage not only empirical application but also the use of computational tools and psychometric methods to refine the ontology in the future, aware that this field of study needs to be explored in more depth.

Conclusion

In this study, we developed the FBA Ontology to promote knowledge generation, organization, reuse, integration, and analysis of behavioral data. The FBA Ontology is composed of concepts that describe the process of gathering information about behavior to determine its function and design effective intervention plans.

The study presented the assessment of the ontology by a group of domain experts. Results from the human-based evaluation confirmed that the ontology concepts, attributes, and relationships between concepts are valid. Moreover, the analysis performed with automatic tools did not identify anomalies in the ontology definition. Further research involving the creation and interlinking of repositories based on behavioral data would help highlight the importance of aggregating and sharing information in this domain.

Acknowledgments

The authors would like to thank the experts who took part in the validation of the FBA Ontology, as well as everyone who supported the writing of the article.

Abbreviations

- ADHD: attention deficit hyperactivity disorder
- BI: behavioral interventions
- EBP: evidence-based practice
- FBA: functional behavioral assessment
- WHAAM: Web Health Application for ADHD Monitoring

Footnotes

Authors' Contributions: All authors read and approved the final manuscript and have contributed equally to the work.

Conflicts of Interest: None declared.

References

- 1. Pelham WE, Wheeler T, Chronis A. Empirically supported psychosocial treatments for attention deficit hyperactivity disorder. J Clin Child Psychol. 1998 Jun;27(2):190–205. doi: 10.1207/s15374424jccp2702_6.

- 2. Ghuman JK, Arnold LE, Anthony BJ. Psychopharmacological and other treatments in preschool children with attention-deficit/hyperactivity disorder: current evidence and practice. J Child Adolesc Psychopharmacol. 2008 Oct;18(5):413–47. doi: 10.1089/cap.2008.022. http://europepmc.org/abstract/MED/18844482

- 3. Pelham JW, Fabiano G. Evidence-based psychosocial treatments for attention-deficit/hyperactivity disorder. Journal of Clinical Child & Adolescent Psychology. 2008;37(1):184–214. doi: 10.1080/15374410701818681.

- 4. Fabiano GA, Pelham WE, Coles EK, Gnagy EM, Chronis-Tuscano A, O'Connor BC. A meta-analysis of behavioral treatments for attention-deficit/hyperactivity disorder. Clin Psychol Rev. 2009 Mar;29(2):129–40. doi: 10.1016/j.cpr.2008.11.001.

- 5. Rajwan E, Chacko A, Moeller M. Nonpharmacological interventions for preschool ADHD: state of the evidence and implications for practice. Professional Psychology: Research and Practice. 2012;43(5):520.

- 6. Horner RH. Functional assessment: Contributions and future directions. J Appl Behav Anal. 1994;27(2):401–404. doi: 10.1901/jaba.1994.27-401. http://europepmc.org/abstract/MED/16795831

- 7. Witt J, Daly E, Noell G. Functional assessments: A step-by-step guide to solving academic and behavior problems. Longmont: Sopris West; 2000.

- 8. Newcomer L, Lewis T. Functional behavioral assessment: an investigation of assessment reliability and effectiveness of function-based interventions. Journal of Emotional and Behavioral Disorders. 2004;12(3):168–181.

- 9. Sackett DL, Rosenberg WM, Gray JA, Haynes RB, Richardson WS. Evidence based medicine: what it is and what it isn't. BMJ. 1996 Jan 13;312(7023):71–72. doi: 10.1136/bmj.312.7023.71. http://europepmc.org/abstract/MED/8555924

- 10. Slocum TA, Detrich R, Wilczynski SM, Spencer TD, Lewis T, Wolfe K. The Evidence-Based Practice of Applied Behavior Analysis. Behav Anal. 2014 May;37(1):41–56. doi: 10.1007/s40614-014-0005-2. http://europepmc.org/abstract/MED/27274958

- 11. Kazdin AE. Evidence-based treatment and practice: new opportunities to bridge clinical research and practice, enhance the knowledge base, and improve patient care. Am Psychol. 2008 Apr;63(3):146. doi: 10.1037/0003-066X.63.3.146.

- 12. Nosek BA, Alter G, Banks GC, Borsboom D, Bowman SD, Breckler SJ, Buck S, Chambers CD, Chin G, Christensen G, Contestabile M, Dafoe A, Eich E, Freese J, Glennerster R, Goroff D, Green DP, Hesse B, Humphreys M, Ishiyama J, Karlan D, Kraut A, Lupia A, Mabry P, Madon TA, Malhotra N, Mayo-Wilson E, McNutt M, Miguel E, Paluck EL, Simonsohn U, Soderberg C, Spellman BA, Turitto J, VandenBos G, Vazire S, Wagenmakers EJ, Wilson R, Yarkoni T. Scientific Standards. Promoting an open research culture. Science. 2015 Jun 26;348(6242):1422–1425. doi: 10.1126/science.aab2374. http://europepmc.org/abstract/MED/26113702

- 13. Scott T, Alter P. Examining the case for functional behavior assessment as an evidence-based practice for students with emotional and behavioral disorders in general education classrooms. Preventing School Failure: Alternative Education for Children and Youth. 2017;61(1):80–93.

- 14. Richesson RJ. Introduction to Clinical Research Informatics. In: Richesson R, Andrews J, editors. Clinical Research Informatics. 1st ed. London: Springer; 2012. pp. 3–16.

- 15. Musen M. Modern architectures for intelligent systems: reusable ontologies and problem-solving methods. AMIA Symposium; 1998; Stanford. pp. 46–52.

- 16. Larsen KR, Michie S, Hekler EB, Gibson B, Spruijt-Metz D, Ahern D, Cole-Lewis H, Ellis RJB, Hesse B, Moser RP, Yi J. Behavior change interventions: the potential of ontologies for advancing science and practice. J Behav Med. 2017 Feb;40(1):6–22. doi: 10.1007/s10865-016-9768-0.

- 17. What is the Gene Ontology? [2018-05-02]. http://www.geneontology.org/page/introduction-go-resource

- 18. Smith B. Relations in biomedical ontologies. Genome Biology. 2005;6(5):R46. doi: 10.1186/gb-2005-6-5-r46.

- 19. Hastings J. Representing mental functioning: Ontologies for mental health and disease. 3rd International Conference on Biomedical Ontology; July 21-25, 2012; Graz: University of Graz.

- 20. Hadzic M, Chen M, Dillon TS. Towards the mental health ontology. IEEE International Conference on Bioinformatics and Biomedicine; 2008.

- 21. Haghighi M, Koeda M, Takai T, Tanaka H. Development of clinical ontology for mood disorder with combination of psychomedical information. J Med Dent Sci. 2009 Mar;56(1):1–15.

- 22. McCray AT, Trevvett P, Frost HR. Modeling the autism spectrum disorder phenotype. Neuroinformatics. 2014 Apr;12(2):291–305. doi: 10.1007/s12021-013-9211-4. http://europepmc.org/abstract/MED/24163114

- 23. Toyoshima F. Towards an Ontology of Schizophrenia. ICBO/BioCreative; 2016.

- 24. Lovrencic S, Vidacek-Hains V, Kirinic V. Use of ontologies in monitoring mental retardation rehabilitation process. In: Katalinic B, editor. DAAAM International Scientific Book. Vienna: DAAAM International Vienna; 2010. pp. 289–301.

- 25. Napierski D, Young A, Harper K. Towards a common ontology for improved traceability of human behavior models. Conference on Behavior Representations in Modeling and Simulation; 19 May 2004; Arlington, Virginia.

- 26. Hershenberg R, Drabick DAG, Vivian D. An opportunity to bridge the gap between clinical research and clinical practice: implications for clinical training. Psychotherapy (Chic). 2012 Jun;49(2):123–34. doi: 10.1037/a0027648. http://europepmc.org/abstract/MED/22642520

- 27. Taibi D, Chiazzese G, Merlo G, Seta L. An ontology to support evidence-based Functional Behavioral Assessments. 2nd International Conference on Medical Education Informatics; June 18-20, 2015; Thessaloniki. pp. 18–20.

- 28. Uschold M, King M. Towards a methodology for building ontologies. Proceedings of the Workshop on Basic Ontological Issues in Knowledge Sharing held in conjunction with IJCAI; 1995; Montreal, Canada.

- 29. Gresham F, Watson T, Skinner C. Functional behavioral assessment: Principles, procedures, and future direction. School Psychology Review. 2001;30(2):156.

- 30. Skinner B. Science and human behavior. Simon & Schuster; 1953.

- 31. Skinner B. Operant behavior. American Psychologist. 1963;18(8):503.

- 32. Hanley GP. Functional assessment of problem behavior: dispelling myths, overcoming implementation obstacles, and developing new lore. Behav Anal Pract. 2012;5(1):54–72. doi: 10.1007/BF03391818. http://europepmc.org/abstract/MED/23326630

- 33. Kozlowski A, Matson J. Interview and observation methods in functional assessment. In: Matson JL, editor. Functional assessment for challenging behaviors. New York: Springer; 2012. pp. 105–124.

- 34. Iwata BA, Dorsey MF, Slifer KJ, Bauman KE, Richman GS. Toward a functional analysis of self-injury. J Appl Behav Anal. 1994;27(2):197–209. doi: 10.1901/jaba.1994.27-197. http://europepmc.org/abstract/MED/8063622

- 35. Nock M, Michel B, Photos V, McKay D. Single-case research designs. In: Handbook of research methods in abnormal and clinical psychology. 7th ed. United States of America: SAGE Publications Ltd.; 2008. pp. 337–350.

- 36. Dallery J, Raiff BR. Optimizing behavioral health interventions with single-case designs: from development to dissemination. Transl Behav Med. 2014 Sep;4(3):290–303. doi: 10.1007/s13142-014-0258-z. http://europepmc.org/abstract/MED/25264468

- 37. Grüninger M, Fox M. Methodology for the Design and Evaluation of Ontologies. IJCAI 95 Workshop on Basic Ontological Issues in Knowledge Sharing; April 13, 1995; Montreal, Canada. http://citeseer.ist.psu.edu/grninger95methodology.html

- 38. Uschold M. Building ontologies: Towards a unified methodology. Technical Report AIAI-TR. University of Edinburgh, Artificial Intelligence Applications Institute; 1996.

- 39. Fernández-López M, Gómez-Pérez A. Overview and analysis of methodologies for building ontologies. The Knowledge Engineering Review. 2002;17(2):129–156.

- 40. Bezerra C, Freitas F, Santana F. Evaluating ontologies with competency questions. Web Intelligence/IAT Workshops. IEEE Computer Society; 2013. pp. 284–285.

- 41. Burton-Jones A, Storey V, Sugumaran V, Ahluwalia P. A semiotic metrics suite for assessing the quality of ontologies. Data & Knowledge Engineering. 2005;55(1):84–102.

- 42. Poveda-Villalón M, Suárez-Figueroa M, Gómez-Pérez A. Validating ontologies with OOPS! In: ten Teije A, et al., editors. Knowledge Engineering and Knowledge Management. Heidelberg, Germany: Springer Berlin Heidelberg; 2012. pp. 267–281.

- 43. Presutti V, Blomqvist E, Daga E, Gangemi A. Pattern-based ontology design. In: Suárez-Figueroa MC, Gómez-Pérez A, Motta E, Gangemi A, editors. Ontology Engineering in a Networked World. Heidelberg, Germany: Springer Berlin Heidelberg; 2012. pp. 35–64.

- 44. Sirin E, Parsia B, Grau B, Kalyanpur A, Katz Y. Pellet: A practical OWL-DL reasoner. Web Semantics: Science, Services and Agents on the World Wide Web. 2007;5(2):51–53.

- 45. Gómez-Pérez A. Evaluation of ontologies. International Journal of Intelligent Systems. 2001;16(3):391–409.

- 46. Sabou M, Fernandez M. Ontology (network) evaluation. In: Suárez-Figueroa MC, Gómez-Pérez A, Motta E, Gangemi A, editors. Ontology engineering in a networked world. 1st ed. Berlin: Springer Berlin Heidelberg; 2012. pp. 193–212.

- 47. Brank J, Grobelnik M, Mladenic D. A survey of ontology evaluation techniques. In: Proceedings of the Conference on Data Mining and Data Warehouses (SiKDD); 2005; Ljubljana, Slovenia. pp. 166–170.

- 48. Cheatham M, Hitzler P. String similarity metrics for ontology alignment. The Semantic Web - ISWC 2013: 12th International Semantic Web Conference; 21 - 25 October 2013; Sydney, Australia.

- 49. Gan M, Dou X, Jiang R. From ontology to semantic similarity: calculation of ontology-based semantic similarity. The Scientific World Journal. 2013. doi: 10.1155/2013/793091.

- 50. Euzenat J, Shvaiko P. Ontology matching. 2nd ed. Heidelberg: Springer; 2013.

- 51. Maedche A, Staab S. Measuring similarity between ontologies. In: Benjamins VR, editor. Knowledge engineering and knowledge management: Ontologies and the semantic web. 2002. pp. 251–263.

- 52. Shvaiko P, Euzenat J. Ontology matching: state of the art and future challenges. IEEE Transactions on Knowledge and Data Engineering. 2013;25(1):158–176.

- 53. Zavitsanos E, Paliouras G, Vouros G. Gold standard evaluation of ontology learning methods through ontology. IEEE Transactions on Knowledge and Data Engineering. 2011;23(11):1635–1648.

- 54. Fernández M, Overbeeke C, Sabou M, Motta E. What makes a good ontology? A case-study in fine-grained knowledge reuse. In: Gómez-Pérez A, Yu Y, Ding Y, editors. The semantic web. New York: Springer-Verlag; 2009. pp. 61–75.

- 55. Hastings J, Brass A, Caine C, Jay C, Stevens R. Evaluating the Emotion Ontology through use in the self-reporting of emotional responses at an academic conference. Journal of Biomedical Semantics. 2014;5(1):38. doi: 10.1186/2041-1480-5-38.

- 56. Porzel R, Malaka R. A task-based approach for ontology evaluation. ECAI Workshop on Ontology Learning and Population; 22 - 23 August 2004; Valencia, Spain.

- 57. Brewster C, Alani H, Dasmahapatra S, Wilks Y. Data-driven ontology evaluation. In: Proceedings of the Language Resources and Evaluation Conference (LREC); 26 - 28 May 2004; Lisbon, Portugal. pp. 164–168.

- 58. Gordon CL, Weng C. Combining expert knowledge and knowledge automatically acquired from electronic data sources for continued ontology evaluation and improvement. J Biomed Inform. 2015 Oct;57:42–52. doi: 10.1016/j.jbi.2015.07.014. https://linkinghub.elsevier.com/retrieve/pii/S1532-0464(15)00154-9

- 59. Casellas N. Ontology evaluation through usability measures. In: Meersman R, Herrero P, Dillon T, editors. On the Move to Meaningful Internet Systems: OTM 2009 Workshops. Heidelberg, Germany; 2009. pp. 594–603.

- 60. Kim H, Park H, Min YH, Jeon E. Development of an obesity management ontology based on the nursing process for the mobile-device domain. J Med Internet Res. 2013 Jun 28;15(6):e130. doi: 10.2196/jmir.2512. http://www.jmir.org/2013/6/e130/

- 61. Lozano-Tello A, Gómez-Pérez A. Ontometric: A method to choose the appropriate ontology. Journal of Database Management. 2004;15(2):1–18.

- 62. Zemmouchi-Ghomari L, Ghomari A. A New Approach for Human Assessment of Ontologies. Third International Conference of Information Systems and Technologies, ICIST; March 2013; Tangier, Morocco.

- 63. Chifari A, Maia M, Bamidis P, Doherty G, Bilbow A, Rinaldi R. A Project to Foster Behavioural Monitoring in the Field of ADHD. The Future of Education; 13 - 14 June 2013; Firenze, Italy.

- 64. Alves S, Bamidis P, Bilbow A, Callahan A, Chiazzese G, Chifari A, Denaro P, Doherty G, Janegerber S, McGee C, Merlo G, Myttas N, Nikolaidou M, Rinaldi R, Sanches-Ferreira M, Santos M, Sclafani M, Seta L, Silveira-Maia M. Web Health Application for ADHD Monitoring Context-Driven Framework. Palermo, Italy: CNR - Istituto per le Tecnologie Didattiche; 2013. [2018-05-02]. https://www.whaamproject.eu/images/documents/WhaamFrameworkEN.pdf

- 65. Spachos D, Chiazzese G, Merlo G, Doherty G, Chifari A, Bamidis P. WHAAM: A mobile application for ubiquitous monitoring of ADHD behaviors. International Conference on Interactive Mobile Communication Technologies and Learning; 13 - 14 November 2014; Thessaloniki, Greece. pp. 305–309.