Abstract

There has been an increase in the use of web-based training methods to train behavioral health providers in evidence-based practices. This systematic review focuses solely on the efficacy of web-based training methods for training behavioral health providers. A literature search yielded 45 articles meeting inclusion criteria. Results indicated that the serial instruction training method was the most commonly studied web-based training method. While the current review has several notable limitations, findings indicate that participating in a web-based training may result in greater post-training knowledge and skill, in comparison to baseline scores. Implications and recommendations for future research on web-based training methods are discussed.

Keywords: evidence-based practice, implementation, online training, training, web-based training

Increasing the availability of therapeutic interventions that are supported by scientific evidence (commonly termed evidence-based practices or EBPs) has been a priority for behavioral health (e.g., APA Presidential Task Force on Evidence-Based Practice, 2006; National Institute of Mental Health, 1999; U.S. Department of Health and Human Services, 2003). EBPs have been identified for numerous behavioral health concerns, including substance use disorders, mental disorders, and suicidality, for both children and adults (Ayers, Sorrell, Thorp, & Wetherell, 2007; Chorpita et al., 2011; Substance Abuse and Mental Health Services Administration, 2014; Scogin, Welsh, Hanson, Stump, & Coates, 2005). Despite knowledge of these treatments, it takes an estimated 17 years for an EBP to move from research into community settings where many families receive behavioral health services (Morris, Wooding, & Grant, 2011).

A number of reports published in the United States have detailed the lack of EBPs in community settings and outlined recommendations to reduce the research-practice gap (e.g., American Psychological Association, 1993; National Institute of Mental Health, 1998; U.S. Department of Health and Human Services, 2003). Following these reports, efforts surrounding the implementation of EBPs proliferated, including increased funding for EBP implementation (McHugh & Barlow, 2010) and a substantial increase in research on factors related to implementation (Bruns, Kerns, Pullmann, & Hensley, 2015). These efforts are a promising step in improving the implementation of EBPs in community settings; however, it is crucial to continue identifying methods that facilitate implementation.

Training has been identified as a key implementation strategy for improving provider knowledge and skill in an EBP; however, there is a lack of agreement regarding the most effective training methods (Fixsen, Naoom, Blase, Friedman, & Wallace, 2005; Powell et al., 2015; Stuart, Tondora, & Hoge, 2004). A need to understand the effectiveness of various training methods for behavioral health providers prompted several systematic reviews (Beidas & Kendall, 2010; Herschell, Kolko, Baumann, & Davis, 2010). These reviews reached similar conclusions: commonly utilized methods, such as reading treatment manuals and brief workshops, are insufficient for increasing provider knowledge and skill in an intervention (Herschell et al., 2010). These reviews also found evidence for the importance of ongoing support (e.g., consultation, supervision) and contextual factors (e.g., organizational resources) in the effectiveness of a training (Beidas & Kendall, 2010).

Since the completion of these reviews, there has been an increased interest in the use of web-based training methods (McMillen, Hawley, & Proctor, 2015). In contrast to traditional face-to-face training methods, web-based training methods offer a promising way of reaching a large number of providers at a low cost. Despite this promise, web-based training methods remain relatively unstudied in previous reviews (Herschell et al., 2010). A review by Herschell and colleagues (2010) found mixed evidence for web-based training on provider knowledge, although conclusions were drawn from a limited number of studies. Within this review, studies using more rigorous research methodology noted increases in knowledge immediately following the web-based training, whereas studies using weaker methodological designs noted little or no change in knowledge following a web-based training (Herschell et al., 2010). A recent systematic review of web-based training methods for substance abuse counselors by Calder and colleagues (2017) was unable to draw definitive conclusions due to a small number of included studies; however, the authors suggested that web-based training may be effective under certain conditions. These two reviews highlight the need for further research on web-based training.

To date, no review to our knowledge has synthesized the literature on web-based training methods specifically pertaining to community-based behavioral health providers. Behavioral health providers, including individuals such as psychologists, social workers, and counselors, prevent and help treat behavioral health concerns (Substance Abuse and Mental Health Services Administration, 2014). Additionally, previous reviews have not differentiated outcomes according to web-based training method. As there is now a larger base of studies examining web-based training methods, the current review seeks to extend previous reviews by addressing this question. To achieve this goal, web-based training methods commonly used for EBP training will be categorized according to training method and the inclusion of ongoing support. Ultimately, this review will serve as a summary of current knowledge on the effectiveness of web-based training to better understand its potential as an EBP training option.

Method

Search Strategy

The search strategy and terms were developed by CJ, LQ, and AH. An electronic search was conducted between October 11th, 2017 and November 13th, 2017. Studies included in the review were identified through a literature search utilizing the following databases: Cumulative Index to Nursing and Allied Health (CINAHL), Embase, Medline EBSCO, Medline OVID, and Psychological Abstracts (PsycINFO). The search string utilized was (“distance education” OR “distance learning” OR “e-training” OR “online education” OR “online training” OR “web-based education” OR “web-based training”) AND (“counselor” OR “counsellor” OR “behavioral health” OR “behavioural health” OR “mental health” OR “psychologist” OR “substance abuse” OR “substance use”). The grey literature was searched by contacting listservs and authors of included studies for any relevant published or unpublished literature. Citations were exported to Mendeley, and duplicates were removed. Titles and abstracts were then examined to determine inclusion eligibility. If the relevance of an article could not be determined through the title or abstract, full-text versions of the article were obtained and further examined.
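The structure of the search string above (two groups of synonyms, each joined with OR, and the two groups joined with AND) can be sketched in a few lines of Python. This is only an illustration of how the reported string is composed: the function names `or_block` and `build_query` are hypothetical, straight quotes replace the typographic ones, and each database may require its own field tags or syntax that the source does not report.

```python
# Term lists copied from the search string reported in the Search Strategy.
TRAINING_TERMS = [
    "distance education", "distance learning", "e-training",
    "online education", "online training",
    "web-based education", "web-based training",
]
PROVIDER_TERMS = [
    "counselor", "counsellor", "behavioral health", "behavioural health",
    "mental health", "psychologist", "substance abuse", "substance use",
]


def or_block(terms):
    """Quote each term, join the terms with OR, and wrap them in parentheses."""
    return "(" + " OR ".join(f'"{term}"' for term in terms) + ")"


def build_query(*term_groups):
    """Join the OR-blocks with AND (hypothetical helper, for illustration only)."""
    return " AND ".join(or_block(group) for group in term_groups)


query = build_query(TRAINING_TERMS, PROVIDER_TERMS)
print(query)
```

Keeping the terms in lists makes it easy to see that spelling variants (e.g., "counselor" and "counsellor") are covered within the same OR-block.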

Inclusion Criteria

To be included in the review, articles had to be peer-reviewed, be written in English, include a sample of behavioral health clinicians, and address a research question on the effectiveness of web-based training designs (i.e., include an outcome variable such as knowledge, skill, adherence). Dates were not restricted, as web-based training methods are relatively new training designs, particularly in the field of behavioral health. Unpublished dissertations, abstracts, review papers, and studies with graduate students and/or teachers as the primary participants were excluded. Studies with graduate students as the central participants were excluded due to concerns that graduate students may be delivering interventions primarily in university settings, which differ considerably from community settings where children commonly have more comorbid diagnoses and clinicians have less supervision and support (Southam-Gerow, Rodríguez, Chorpita, & Daleiden, 2012). Studies with teachers as participants were also excluded, as the classroom environment and clinical diagnoses targeted may vary substantially from the setting in which behavioral health providers work (Rones & Hoagwood, 2000).

Coding Procedures

Coding procedures were developed by CJ, LQ, and AH. CJ developed a data extraction form that was verified by LQ. Following the initial search, the articles identified as potentially relevant were divided between CJ and LQ, both of whom are doctoral-level graduate students, and excluded if they did not meet inclusion criteria. When the authors were unable to independently determine whether an article met inclusion criteria, decisions were finalized at a consensus meeting. An independent third author, with doctoral-level qualifications, participated in the consensus meeting to lend expertise and decide on any remaining article disagreements. Coding was conducted by CJ and LQ. Although we did not calculate an inter-rater reliability statistic, CJ and LQ met regularly to discuss coding discrepancies and reach consensus.

Due to the heterogeneity of web-based training designs, studies were then classified according to Shapiro’s (2001) definitions of web-based training methods, given that this classification method is parsimonious and common in continuing medical education and the behavioral health field. Web-based training methods included virtual classroom, serial instruction, self-directed learning, and simulation training. The virtual classroom design provides collaborative, group learning in a web-based environment, where a facilitator guides the classroom instruction, communicates with the learners, and provides resources for learning (Shapiro, 2001). Serial instruction designs are linear tutorial models in which users complete learning content administered in the same order to all users (Shapiro, 2001). Self-directed learning designs place the users in charge of determining their individual needs and learning goals; therefore, the users are responsible for choosing appropriate learning materials and modules necessary to achieve these goals (Shapiro, 2001). The final training type, simulation, involves the use of virtual patients with whom the users interact to make diagnoses, medical decisions, and treatment plans (Shapiro, 2001).

The included articles were then coded by training dosage, intended training recipients, type of EBP (e.g., cognitive-behavioral therapy; CBT), condition to treat, ongoing support (i.e., consultation, supervision), research design (e.g., pre-post, post-test only), measurement method (e.g., self-report, behavioral observations), and outcome assessed (e.g., knowledge, skill). Many of the included studies examined a CBT intervention for a specific population (e.g., Trauma-Focused Cognitive Behavioral Therapy); however, these studies have all been categorized as using a CBT intervention, although there may be differences in the description and use of CBT in each study. Previous research has also suggested that ongoing support is a significant component of training effectiveness (Beidas & Kendall, 2010). Therefore, studies that included an element of ongoing support are highlighted to further understand the impact of training design on effectiveness.

Assessment of Study Quality

To evaluate the methodological quality of each article included in the review, the authors categorized studies according to Nathan and Gorman’s (2002, 2007) classification criteria. These criteria are commonly used in review papers (e.g., Silverman, Pina, & Viswesvaran, 2008; Waldron & Turner, 2008) to rate studies on a continuum from most rigorous (Type 1) to least rigorous (Type 6). All studies included in the current review were found to meet criteria for a classification of Type 1, 2, or 3. Considered the most rigorous, Type 1 studies include randomized clinical trials that use comparison groups, blinded assessments, clearly defined inclusion/exclusion criteria, appropriate sample size, and detailed research design and statistical methods (Nathan & Gorman, 2002, 2007). Type 2 studies contain almost all of the design elements of Type 1 studies, but they are missing at least one important component (e.g., adequate sample size; Nathan & Gorman, 2002). Type 3 studies are open treatment studies that aim to collect pilot data (Nathan & Gorman, 2002).

Effect Size Determination

Hedges’ g was utilized as a measure of effect size, as it is a derivation of Cohen’s d that reduces bias for small sample sizes (Borenstein & Cooper, 2009). The information required to calculate an effect size was extracted to determine an effect size for each outcome. This information included sample size, pre- and post-means, standard deviations, and t-statistics. Unless noted in the table, the effect size calculation was based on a pre-post comparison of these statistics.
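As a rough illustration of the calculation described above, the sketch below computes a standardized mean difference from means, standard deviations, and sample sizes, then applies the common small-sample correction factor J = 1 − 3/(4df − 1). The exact variant used in the review is not reported (for example, how pre-post correlation was handled), so the pooled-standard-deviation formula, the function names, and the input values here are assumptions chosen for illustration only.

```python
import math


def small_sample_correction(df):
    """Approximate correction factor J that shrinks Cohen's d toward zero."""
    return 1 - 3 / (4 * df - 1)


def hedges_g(mean_1, sd_1, n_1, mean_2, sd_2, n_2):
    """Hedges' g from two sets of summary statistics (e.g., pre and post).

    Assumes a pooled standard deviation and df = n_1 + n_2 - 2; this is one
    common formulation, not necessarily the one used in the review.
    """
    df = n_1 + n_2 - 2
    pooled_sd = math.sqrt(((n_1 - 1) * sd_1**2 + (n_2 - 1) * sd_2**2) / df)
    cohens_d = (mean_2 - mean_1) / pooled_sd
    return small_sample_correction(df) * cohens_d


def hedges_g_from_t(t_stat, n_1, n_2):
    """Hedges' g recovered from an independent-samples t-statistic."""
    df = n_1 + n_2 - 2
    cohens_d = t_stat * math.sqrt(1 / n_1 + 1 / n_2)
    return small_sample_correction(df) * cohens_d


# Hypothetical values: knowledge score of 10 (SD 2) before and 12 (SD 2) after,
# with 20 scores at each time point.
g = hedges_g(mean_1=10, sd_1=2, n_1=20, mean_2=12, sd_2=2, n_2=20)
print(round(g, 2))  # 0.98
```

With these made-up inputs, Cohen's d is exactly 1.0 and the correction lowers it slightly; the adjustment becomes more noticeable as sample sizes shrink, which is the reason the review gives for preferring g.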

Results

Selection of Studies

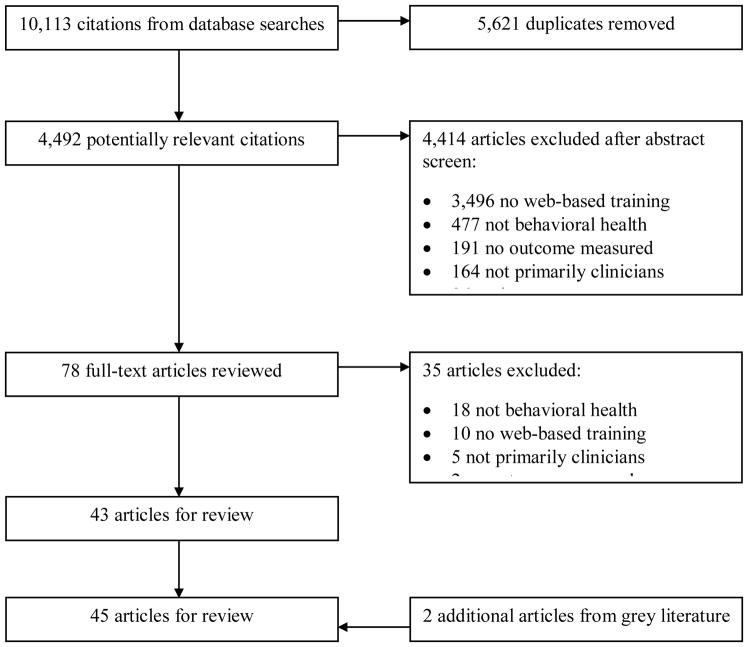

Figure 1 details the study selection process. A literature search of all databases yielded 10,113 citations. After 5,261 duplicates were removed, 4,492 potentially relevant titles and abstracts were screened, of which 78 full-text articles were reviewed. Thirty-five articles were excluded: 18 were not behavioral health, 10 did not include a web-based training method, five did not have behavioral health clinicians as the primary sample, and two did not assess an outcome. This left 43 articles that met inclusion criteria. Corresponding authors of these articles and relevant listservs were contacted to examine the grey literature for relevant published and unpublished literature, resulting in an additional two articles for a total of 45 articles.

Figure 1.

PRISMA diagram depicting systematic search process.
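The full-text stage of the selection process can be tallied in a short Python sketch. It uses only the counts reported in the Selection of Studies paragraph; the variable names are hypothetical, and the earlier screening stages are left out.

```python
# Counts taken from the Selection of Studies paragraph.
full_text_reviewed = 78
exclusions = {
    "not behavioral health": 18,
    "no web-based training method": 10,
    "behavioral health clinicians not the primary sample": 5,
    "no outcome assessed": 2,
}
grey_literature_additions = 2

excluded = sum(exclusions.values())
included_from_databases = full_text_reviewed - excluded
total_included = included_from_databases + grey_literature_additions

print(excluded, included_from_databases, total_included)  # 35 43 45
```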

Classification of Studies

Tables 1, 2, 3, and 4 provide brief summaries of the included studies categorized by web-based training method. If a study included more than one web-based training method, the study was included in the table for each method. Web-based training methods utilized in the 45 studies included 10 virtual classroom designs, 37 serial instruction designs, two self-directed learning designs, and two simulation training designs. Thirteen of the 45 studies included a form of ongoing support, with five of these using supervision and eight using consultation (see Table 5).

Table 1.

Studies Including a Virtual Classroom Training Design

| Nathan & Gorman (2002) Criteria | Authors | Sample | Intervention & Condition to Treat | Intended Trainees | Ongoing Support | Design: Random Assign. | Design: # and Comp. Groups | Design: Training Dosage | Design: Follow-up | Measurement: Domain | Measurement: Type | Measurement: Standardized Measure | Findings & Hedges’ g |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | Kanter et al., 2013 | 16 clinicians | FAP; no specific condition | Clinicians | No | Yes | 2: 1. VC; 2. C | 16 hrs. | None | SA, S | SR, BO | No | Skill: VC > C (g = 1.59) |
| 3 | Kobak et al., 2013 | 39 clinicians | CBT for anxiety disorders | Mental health clinicians | No | No | 2: 1. SI; 2. SI + VC | 10 modules | None | K, S | SR, BO | Yes | Knowledge: SI (g = 4.7); Skill: SI + VC (g = .96) |
| 1 | Leykin et al., 2011 | 149 substance use counselors | CBT for substance use | Substance use counselors | Yes, S | Yes | 2: 1. VC; 2. SI | 8 modules | 6 mos. | B | SR | Yes | Burnout: SI > VC (g = .31) |
| 3 | Puspitasari et al., 2013 | Study 1: n = 8; Study 2: n = 9 mental health providers | Behavioral activation for depression | Community mental health providers | No | No | 0 (pre-post) | 4 online modules and 3 90 min. live online training sessions | Study 1: None; Study 2: 6 weeks | Study 1: U, SA; Study 2: U, S, SA, SE | 1: SR; 2: BO, SR | No | Study 1: Increased use, good satisfaction; Study 2: Increased use, self-efficacy, and skill; very good satisfaction; g = X |
| 1 | Rawson et al., 2013 | 143 clinicians | CBT for stimulant use disorders | Substance use counselors | Yes, C | Yes | 3: 1. FTF; 2. VC; 3. C | 3 day workshop either in person (FTF) or online (VC) | 0, 4, 8, and 12 weeks | K, S, U | SR, BO | Yes | Knowledge: FTF > C (g = .73), VC > C (g = .16); Skill and Use: FTF = VC > C (g = X) |
| 3 | Rees et al., 2001 | 12 mental health practitioners | CBT; no specific condition | Rural mental health practitioners | No | No | 0 (pre-post) | 10 1.5 hr. sessions with auxiliary readings | No | K, SA | SR | No | Knowledge: g = 1.34; Satisfaction: High rates post-training (g = X) |
| 3 | Rees et al., 2009 | 48 mental health practitioners | CBT; no specific condition | Rural mental health practitioners | No | No | 0 (pre-post) | 10 1.5 hr. sessions with auxiliary readings | No | K, SA | SR | No | Knowledge: g = .47; Satisfaction: High rates post-training (g = X) |
| 3 | Reid et al., 2005 | 133 mental health practitioners | Disaster mental health for trauma/disaster survivors | Public health, mental health, and general healthcare providers | No | No | 0 (pre-post) | 5 modules | none | K, S | SR | No | Increase in knowledge and skill (g = X) |
| 3 | Shafer et al., 2004 | 30 substance use therapists | MI for substance use | General and behavioral healthcare providers | No | No | 0 (pre-post) | 5 3-hr. video workshops, once a month | 4 mos. | K, S | SR, BO | Mixed | Knowledge: g = .47; Skill: g = 1.05 |
| 3 | Stone et al., 2005 | 1200 participants | Suicide prevention | General and behavioral healthcare providers | No | No | 2: 1. SI; 2. VC | 3 workshops | none | K | SR | No | Increases in knowledge (g = X); Conclusions not made between groups |

Note. Ongoing Support: C = Consultation, S = Supervision; Comparison Groups: C = Non-Training Control, FTF = Face-To-Face or In-Person, ME = Motivational Enhancement, OS = Ongoing Support, SD = Self Directed, SI = Serial Instruction, SM = Simulation, VC = Virtual Classroom, WM = Written Materials; Measurement Domains: A = Attitudes, AC = Acceptability, B = Burnout, BA = Barriers, C = Training Completion, E = Engagement, F = Fidelity or Adherence, FE = Feasibility, I = Importance, K = Knowledge, P = Preparedness, S = Skill, SA = Satisfaction, SE = Self-Efficacy or Confidence, U = Use, X = Client Outcomes; Measurement Type: BO = Behavioral Observation, SR = Self Report; Effect Size: X: Insufficient information to calculate effect size.

Table 2.

Studies Including a Serial Instruction Training Method

| Nathan & Gorman (2002) Criteria | Authors | Sample | Intervention & Condition to Treat | Intended Trainees | Ongoing Support | Design: Random Assign. | Design: # and Comp. Groups | Design: Training Dosage | Design: Follow-up | Measurement: Domain | Measurement: Type | Measurement: Standardized Measure | Findings & Effect Size |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Bennett-Levy et al., 2012 | 40 mental health professionals | CBT, no specific condition | Counsellors | Yes, C | Yes | 2: 1. SI; 2. SI+OS | 22.5 hours | 4 weeks | K, S, C, SE | SR | No | SI + OS = SI for knowledge (g = .07), skill (g = .07), self-efficacy (g = .04); SI + OS > SI for training completion |
| 3 | Bray et al., 2009 | 62 counselors | Substance use prevention | Counsellors | No | No | 0 (pre-post) | 3-hours | none | I, P, SE | SR | No | Increase in awareness of importance (g = .71), preparedness (g = .33) to deliver the intervention, and self-efficacy (g = .32) |
| 3 | Brose et al., 2012 | 778 stop smoking practitioners | Smoking cessation | Community practitioners | No | No | 0 (pre-post) | 2.5-hours | none | K | N/A | No | Increase in knowledge (g = .89) |
| 3 | Brownlow et al., 2015 | 187 health professionals | Eating disorder treatment | Clinicians in community and medical settings | No | No | 0 (pre-post) | 17.5-hours | none | A, K, SE, S | SR | No | Increase in knowledge (g = .23), skill (g = .23), and self-efficacy (g = X); Decrease in stigmatized beliefs (attitudes) (g = .84) about eating disorders |
| 2 | Chu et al., 2017 | 35 providers | CBT for anxiety | Clinicians in youth service settings | Yes, C | Yes | 3: 1. SI+OS; 2. SI+PC; 3. SI+WM | 6.5 hours | none | K, S, U, SA, SE | SR | No | All conditions: Increases in use (g = .76), decreases in knowledge (g = −1.7) and skill (g = −2.1); SI + PC: Decreases in knowledge (g = −1.1), skill (g = −1.47), and implementation potential (g = −.2.1) compared to SI + WM; SI + OS: Did not differ from SI + PC |
| 1 | Cohen et al., 2016 | 129 mental health practitioners | CBT for trauma | Therapists | Yes, C | Yes | 2: 1. SI+OS; 2. SI+FTF+OS | 10 hours | none | X, E, F | SR | Yes | SI + FTF + OS > SI + OS for engagement (g = X), fidelity (g = .58), and completing treatment with clients (g = .41) |
| 3 | Crawford et al., 2015 | 49 clinicians | Behavioral activation for depression | Cognitive/behavioral clinicians | No | Yes | 2: 1. SI; 2. C | 2–3 hours | 1 week | K, SA, SE | SR | No | SI > C for knowledge (g = .78) and self-efficacy (g = 1.34); High rates of satisfaction (g = X) |
| 2 | Dimeff et al., 2009 | 174 clinicians | DBT for borderline personality disorder | Substance use and mental health providers | No | Yes | 3: 1. WM; 2. SI; 3. FTF | 20 hours online, 2 days in-person | 90 days | K, SA, S, U | SR, BO | No | SI > FTF for knowledge (g = .37), skill (g = .21); FTF, WM > SI for use (g = .46); FTF > SI for satisfaction (g = X) |
| 2 | Dimeff et al., 2011 | 132 providers | DBT for borderline personality disorder | Community behavioral health providers | No | Yes | 3: 1. WM; 2. SI; 3. C | N/A | 2, 7, & 11 weeks | K, SE, SA, U | SR | No | SI > C for knowledge (g = 3.4), self-efficacy (g = .77), and use (g = .05); WM > C for knowledge (g = 2.8), self-efficacy (g = .42), and use (g = .65); SI produced better long-term outcomes |
| 1 | Dimeff et al., 2015 | 172 clinicians | DBT for borderline personality disorder | Clinicians that treat borderline personality disorder | No | Yes | 3: 1. WM; 2. SI; 3. FTF | 8 hours | 30, 60, 90 days | K, S, SA, SE, U | SR, BO | Yes | Self-efficacy: SI > WM (g = .15), FTF > SI (g = .80); Knowledge: SI > WM (g = .49), SI > FTF (g = .49); Satisfaction greater for FTF (g = X); No differences in condition for skill, use |
| 3 | Fairburn et al., 2017 | 139 clinicians | CBT for eating disorders | Mental health providers | No | No | 0 (pre-post) | 9 hours | none | K | SR | Yes | Significant improvements in knowledge (g = X) |
| 3 | German et al., in press | 362 clinicians | CBT, no specific condition | Community clinicians | Yes, C | No | 2: 1. FTF; 2. SI | FTF: 22 hrs.; SI: 6 hrs. | none | K, S | SR | Yes | SI not inferior to FTF with no differences in knowledge; SI clinicians less likely to be competent (g = −.1) than FTF |
| 1 | Ghoncheh et al., 2016 | 190 gatekeepers | Suicide prevention | Gatekeepers (e.g., school staff, police, primary healthcare providers) | No | Yes | 2: 1. SI; 2. C | 8 modules | 3 months | K, SE | SR | No | SI > C for knowledge (g = 1.1) and self-efficacy (g = .56) |
| 3 | Gryglewicz et al., 2017 | 178 mental health providers | Question, Persuade, Refer, Treat for suicidality | Mental health providers | No | No | 0 (pre-post) | 8–12 hours | No | K, SA | SR | No | Significant improvements in knowledge (g = .93); High rates of satisfaction (g = X) |
| 2 | Harned et al., 2011 | 46 mental health providers | Exposure therapy for anxiety disorders | Mental health providers | No | Yes | 3: 1. SI; 2. SI+ME; 3. C | 10 hours | 1 week | A, K, S, SE | SR | No | SI = SI + ME > C for knowledge (g = 2.1; 3.1), self-efficacy (g = 1.41; 1.8), and satisfaction (g = .85; 1.12); SI + ME > SI for attitudes (g = .02) |
| 1 | Harned et al., 2014 | 181 clinicians | Exposure therapy for anxiety disorders | Mental health providers | Yes, C | Yes | 3: 1. SI/SM; 2. SI/SM+ME; 3. SI/SM + ME + OS | 10 hours | 6 & 12 weeks | A, K, SA, S | BO, SR | Mixed | SI/SM + ME + OS showed greatest improvements in knowledge (g = .38), attitudes (g = 43), and skill (g = .39); All conditions equal for satisfaction and use |
| 3 | Heck et al., 2015 | 123,848 trainees | CBT for trauma | Any professional working with child trauma victims | No | No | 0 (pre-post) | 20 hours | none | K | N/A | No | Increase in knowledge (g = .77) |
| 3 | Kobak et al., 2013 | 39 clinicians | CBT for anxiety disorders | Mental health clinicians | No | No | 2: 1. SI; 2. SI+VC | 10 modules | None | K, S | SR, BO | Yes | Knowledge: SI (g = 4.7); Skill: SI + VC (g = .96) |
| 3 | Kobak et al., 2017 | 70 clinicians | CBT for anxiety disorders | Community clinicians | No | No | 0 (pre-post) | 9 modules | No | K, S, U, X | SR | Yes | Improvements in knowledge (g = 3.65), skill (g = 1.53), use (g = 1.15), client outcomes (g = .85) |
| 3 | Larson et al., 2009 | 38 counselors | CBT for substance use | Substance use counselors | No | No | 0 (pre-post) | 8 modules | none | AC, FE, SA | SR | No | High rates of acceptability (g = X), feasibility (g = X), and satisfaction (g = X) |
| 1 | Larson et al., 2013 | 127 counselors from 54 addiction units | CBT for substance use | Substance use counselors | No | Yes | 2: 1. SI; 2. C | 8 modules | 3 months | S | SR, BO | No | Increase in skill (g = X) for SI and C with no differences between groups |
| 1 | Leykin et al., 2011 | 149 substance use counselors | CBT for substance use | Substance use counselors | Yes, S | Yes | 2: 1. VC; 2. SI | 8 modules | 6 months | B | SR | Yes | Burnout: SI > VC (g = .31) |
| 1 | Marshall et al., 2014 | 215 VA providers | Suicide prevention | Behavioral health providers | No | Yes | 3: 1. SI; 2. FTF; 3. C | 4 modules | none | SA, U | SR | No | SI = FTF > C for both satisfaction (g = X) and use (g = X) |
| 3 | Martino et al., 2011 | 26 counselors | Motivational Interviewing for substance use | Substance use counselors | Yes, S | No | 0 (step-wise method) | 4 hours | 24 weeks | SA, F, S | SR, BO | No | SI only led to higher rates of skill (g = X) and fidelity (g = X); Positive satisfaction with course |
| 1 | McPherson et al., 2006 | 192 health promotion professionals | Substance use prevention | Health promotion professionals | No | Yes | 2: 1. SI; 2. WM | 5 modules | none | K, SA, SE | N/A | No | No differences between groups on knowledge or satisfaction; SI > WM for self-efficacy (g = 1.72) |
| 3 | Mignogna et al., 2014 | 9 therapists | CBT for medically ill patients with depressive and anxiety | Primary care therapists | Yes, C | No | 0 (pre-post) | 6 30–45 min. sessions | none | AC, FE, F | BO, SR | No | High rates of fidelity (g = X) and feasibility (g = X); Moderate acceptability (g = X) |
| 3 | Puspitasari et al., 2013 | Study 1: N = 8; Study 2: N = 9 mental health providers | Behavioral activation for depression | Mental health providers | No | No | 0 (pre-post) | 3 modules | Study 1: none; Study 2: 6 weeks | Study 1: U, SA; Study 2: U, S, SA, SE | 1: SR; 2: BO, SR | No | Increases in use (g = X), satisfaction (g = X), and skill (g = X) |
| 1 | Rakovshik et al., 2016 | 61 practicing mental health providers | CBT for anxiety disorders | Therapists | Yes, S | Yes | 3: 1. SI; 2. SI + OS; 3. C | 20 hours | none | S | BO | Yes | SI + OS > SI (g = .95) and C (g = 1.01) for skill |
| 3 | Reid et al., 2005 | 133 public health practitioners | Disaster mental health | Public health providers | No | No | 0 (pre-post) | 5 days/modules | none | K, S | SR | No | Increase in knowledge (g = X) and skill (g = X) |
| 1 | Rheingold et al., 2012 | 188 child care professionals | Child abuse prevention | Child care professionals | No | Yes | 3: 1. FTF; 2. SI; 3. C | 2.5 hours | none | AC | SR | No | SI = FTF for acceptability (g = X) |
| 1 | Ruzek et al., 2014 | 168 VHA mental health clinicians | CBT for trauma | Mental health clinicians | Yes, C | Yes | 3: 1. SI; 2. SI+OS; 3. C | No info. provided | none | K, SE, S | SR | Mixed | Knowledge: SI+OS > C (g = .86), SI > C (g = .41); Self-efficacy: SI+OS > C (g = .91), SI > C (g = .72); Use: No differences between groups |
| 3 | Samuelson et al., 2014 | 73 primary care providers | Psychoed. for PTSD | Primary care providers | No | No | 0 (pre-post) | 4 modules | 30 days | K, SE | SR | No | Increase in knowledge (g = 3.23) and self-efficacy (g = X) |
| 1 | Stein et al., 2015 | 36 clinicians | Interpersonal psychotherapy for bipolar disorder | Clinicians | Yes, S | Yes | 2: 1. FTF; 2. SI | 12 hours | once a month for one year | U | SR | Yes | Increase in use (g = X) for both groups |
| 3 | Stone et al., 2005 | 1200 participants | Suicide prevention | Public officials, service providers, community coalitions | No | No | 2: 1. SI; 2. VC | 3 modules | none | K | SR | No | Increases in knowledge (g = X); Conclusions not made between groups |
| 3 | Vismara et al., 2009 | 10 therapists | Early intervention for autism spectrum disorder | Community-based therapists | Yes, S | No | 2: 1. SI; 2. FTF | 5 months of didactic training, 5 months of parent coaching | none | X, F, SA | BO, SR | Yes | Fidelity: FTF > SI (g = 1.89), SI > baseline (g = 1.65); Satisfaction: FTF > SI (g = 2.46); Improvement in child behavior in both groups (g = X) |
| 1 | Weingardt et al., 2006 | 166 substance abuse counselors | CBT for substance use | Substance use counselors | No | Yes | 3: 1. FTF; 2. SI; 3. C | 60 minutes | none | K | N/A | No | FTF = SI > C for knowledge (g = 1.29) |
| 3 | Weingardt et al., 2009 | 147 substance use counselors | CBT for substance use | Substance use counselors | Yes, S | Yes | 2: 1. High fidelity SI; 2. Low fidelity SI | 8 modules | None | K, SE, B | SR | Yes | No differences in knowledge or self-efficacy; Low-fidelity group had lower burnout (g = .28) than high-fidelity group |

Note. Ongoing Support: C = Consultation, S = Supervision; Comparison Groups: C = Non-Training Control, FTF = Face-To-Face or In-Person, ME = Motivational Enhancement, OS = Ongoing Support, PC = Peer Consultation, SD = Self Directed, SI = Serial Instruction, SM = Simulation, VC = Virtual Classroom, WM = Written Materials; Measurement Domains: A = Attitudes, AC = Acceptability, B = Burnout, BA = Barriers, C = Training Completion, E = Engagement, F = Fidelity or Adherence, FE = Feasibility, I = Importance, K = Knowledge, P = Preparedness, S = Skill, SA = Satisfaction, SE = Self-Efficacy or Confidence, U = Use, X = Client Outcomes; Measurement Type: BO = Behavioral Observation, SR = Self Report; Effect Size: X: Insufficient information to calculate effect size.

Table 3.

Studies Including a Self-Directed Training Design

| Nathan & Gorman (2002) Criteria | Authors | Sample | Intervention & Condition to Treat | Intended Trainees | Ongoing Support | Design: Random Assign. | Design: # and Comp. Groups | Design: Training Dosage | Design: Follow-up | Measurement: Domain | Measurement: Type | Measurement: Standardized Measure | Findings & Hedges’ g |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 3 | Han et al., 2013 | 666 web site users | Toolkit with depression resources | Behavioral health providers | No | No | 0 (pre-post) | N/A | No | U, SA, SE | SR | No | High satisfaction and self-efficacy; use varied by profession type; (g = X) |
| 3 | Sholomskas et al., 2005 | 78 clinicians | CBT for substance use | Substance use clinicians | Yes, S | No | 3: 1. WM; 2. WM+SD; 3. WM+SD+OS | 20 hours online | 3 mos. | K, S | BO | Yes | Knowledge: WM+SD+OS (g = .32) > WM+SD (g = .29) > WM; Skill: WM+SD+OS (g = .69) > WM+SD (g = .22) > WM |

Note. Ongoing Support: C = Consultation, S = Supervision; Comparison Groups: C = Non-Training Control, FTF = Face-To-Face or In-Person, ME = Motivational Enhancement, OS = Ongoing Support, SD = Self Directed, SI = Serial Instruction, SM = Simulation, VC = Virtual Classroom, WM = Written Materials; Measurement Domains: A = Attitudes, AC = Acceptability, B = Burnout, BA = Barriers, C = Training Completion, E = Engagement, F = Fidelity or Adherence, FE = Feasibility, I = Importance, K = Knowledge, P = Preparedness, S = Skill, SA = Satisfaction, SE = Self-Efficacy or Confidence, U = Use, X = Client Outcomes; Measurement Type: BO = Behavioral Observation, SR = Self Report; Effect Size: X: Insufficient information to calculate effect size.

Table 4.

Studies Including a Simulation Training Design

| Nathan & Gorman (2002) Criteria | Authors | Sample | Intervention & Condition to Treat | Intended Trainees | Ongoing Support | Design: Random Assign. | Design: # and Comp. Groups | Design: Training Dosage | Design: Follow-up | Measurement: Domain | Measurement: Type | Measurement: Standardized Measure | Findings |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Harned et al. 2014 | 181 clinicians | Exposure therapy for anxiety disorders | Mental health treatment providers | Yes, C | Yes | 3: 1. SI/SM 2. SI/SM+ME 3. SI/SM+ME+OS | 10 hours | 6 & 12 weeks | A, K, SA, S, U | BO, SR | Mixed | SI/SM + ME + OS showed greatest improvements in knowledge (g = .38), attitudes (g = .43), and skill (g = .39); All conditions equal for satisfaction, use, and attitudes |
| 3 | Kobak et al. 2017 | 70 clinicians | CBT for anxiety disorders | Community clinicians | No | No | 0 (pre-post) | 9 modules | No | K, S, U, X | SR | Yes | Knowledge: g = 3.68; Skill: g = 3.11; High satisfaction, use, and positive client outcomes post-training |

Note. Ongoing Support: C = Consultation, S = Supervision; Comparison Groups: C = Non-Training Control, FTF = Face-To-Face or In-Person, ME = Motivational Enhancement, OS = Ongoing Support, SM = Simulation, SI = Serial Instruction, VC = Virtual Classroom, WM = Written Materials; Measurement Domains: A = Attitudes, AC = Acceptability, B = Burnout, BA = Barriers, C = Training Completion, E = Engagement, F = Fidelity or Adherence, FE = Feasibility, I = Importance, K = Knowledge, P = Preparedness, S = Skill, SA = Satisfaction, SE = Self-Efficacy or Confidence, U = Use, X = Client Outcomes; Measurement Type: BO = Behavioral Observation, SR = Self Report; Effect Size: X: Insufficient information to calculate effect size.

Table 5.

Studies Including an Ongoing Support Component

| Nathan & Gorman (2002) Criteria | Authors | Sample | Intervention & Condition to Treat | Intended Trainees | Ongoing Support | Design: Random Assign. | Design: # and Comp. Groups | Design: Training Dosage | Design: Follow-up | Measurement: Domain | Measurement: Type | Measurement: Standardized Measure | Findings & Effect Size |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Bennett-Levy et al. 2012 | 40 mental health professionals | CBT, no specific condition | Counsellors | Yes, C | Yes | 2: 1. SI 2. SI+OS | 22.5 hours | 4 weeks | K, S, C, SE | SR | No | SI + OS = SI for knowledge (g = .07), skill (g = .07), and self-efficacy (g = .04); SI + OS > SI for training completion |
| 2 | Chu et al. 2017 | 35 providers | CBT for anxiety | Clinicians in youth service settings | Yes, C | Yes | 3: 1. SI+OS 2. SI+PC 3. SI+WM | 6.5 hours | none | K, S, U, SA, SE | SR | No | All conditions: Increases in use (g = .76), decreases in knowledge (g = −1.7) and skill (g = −2.1); SI + PC: Decreases in knowledge (g = −1.1), skill (g = −1.47), and implementation potential (g = −.2.1) compared to SI + WM; SI + OS: Did not differ from SI + PC |
| 1 | Cohen et al. 2016 | 129 mental health practitioners | CBT for trauma | Therapists | Yes, C | Yes | 2: 1. SI+OS 2. SI+FTF+OS | 10 hours | none | X, E, F, C, S | SR | Yes | SI + FTF + OS > SI + OS for engagement (g = X), fidelity (g = .58), and completing treatment with clients (g = .41) |
| 3 | German et al., in press | 362 clinicians | CBT, no specific condition | Community clinicians | Yes, C | No | 2: 1. FTF 2. SI | FTF: 22 hrs. SI: 6 hrs. | none | K, S | SR | Yes | SI not inferior to FTF with no differences in knowledge; SI clinicians less likely to be competent (g = −.1) than FTF |
| 1 | Harned et al. 2014 | 181 clinicians | Exposure therapy for anxiety disorders | Mental health treatment providers | Yes, C | Yes | 3: 1. SI/SM 2. SI/SM+ME 3. SI/SM+ME+OS | 10 hours | 6 & 12 weeks | A, K, SA, S | BO, SR | Mixed | SI/SM + ME + OS showed greatest improvements in knowledge (g = .38), attitudes (g = .43), and skill (g = .39); All conditions equal for satisfaction and use |
| 1 | Leykin et al. 2011 | 149 substance use counselors | CBT for substance use | Substance use counselors | Yes, S | Yes | 2: 1. VC 2. SI | 8 modules | 6 months | B | SR | Yes | Burnout: SI > VC (g = .31) |
| 3 | Martino et al. 2011 | 26 counselors | Motivational Interviewing for substance use | Substance use counselors | Yes, S | No | 0 (step-wise method) | 4 hours | 24 weeks | SA, F, S | SR, BO | No | SI only led to higher rates of skill (g = X) and fidelity (g = X); Positive satisfaction with course |
| 3 | Mignogna et al. 2014 | 9 therapists | CBT for medically ill patients with depressive and anxiety | Primary care therapists | Yes, C | No | 0 (pre-post) | Six 30–45 min. sessions | none | AC, FE, F | BO, SR | No | High rates of fidelity (g = X) and feasibility (g = X); Moderate acceptability (g = X) |
| 1 | Rakovshik et al. 2016 | 61 practicing mental health providers | CBT for anxiety disorders | Therapists | Yes, S | Yes | 3: 1. SI 2. SI+OS 3. C | 20 hours | none | S | BO | Yes | SI + OS > SI (g = .95) and C (g = 1.01) for skill |
| 1 | Rawson et al., 2013 | 143 clinicians | CBT for stimulant use disorders | Substance use counselors | Yes, C | Yes | 3: 1. FTF 2. VC 3. C | 3 day workshop either in person (FTF) or online (VC) | 0, 4, 8, and 12 weeks | K, S, U | SR, BO | Yes | Knowledge: FTF > C (g = .73), VC > C (g = .16); Skill and Use: FTF = VC > C (g = X) |
| 1 | Ruzek et al. 2014 | 168 VHA mental health clinicians | CBT for trauma | Mental health clinicians | Yes, C | Yes | 3: 1. SI 2. SI+OS 3. C | No info. provided | none | K, SE, U | SR | Mixed | Knowledge: SI+OS > C (g = .86), SI > C (g = .41); Self-efficacy: SI+OS > C (g = .91), SI > C (g = .72); Use: No differences between groups |
| 1 | Stein et al. 2015 | 36 clinicians | Interpersonal psychotherapy for bipolar disorder | Clinicians | Yes, S | Yes | 2: 1. FTF 2. SI | 12 hours | once a month for one year | U | SR | Yes | Increase in use (g = X) for both groups |
| 3 | Vismara et al. 2009 | 10 therapists | Early intervention for autism spectrum disorder | Community-based therapists | Yes, S | No | 2: 1. SI 2. FTF | 5 months of didactic training, 5 months of parent coaching | none | X, F, SA | BO, SR | Yes | Fidelity: FTF > SI (g = 1.89), SI > baseline (g = 1.65); Satisfaction: FTF > SI (g = 2.46); Improvement in child behavior in both groups (g = X) |

Note. Ongoing Support: C = Consultation, S = Supervision; Comparison Groups: C = Non-Training Control, FTF = Face-To-Face or In-Person, ME = Motivational Enhancement, OS = Ongoing Support, PC = Peer Consultation, SM = Simulation, SI = Serial Instruction, VC = Virtual Classroom, WM = Written Materials; Measurement Domains: A = Attitudes, AC = Acceptability, B = Burnout, BA = Barriers, C = Training Completion, E = Engagement, F = Fidelity or Adherence, FE = Feasibility, I = Importance, K = Knowledge, P = Preparedness, S = Skill, SA = Satisfaction, SE = Self-Efficacy or Confidence, U = Use, X = Client Outcomes; Measurement Type: BO = Behavioral Observation, SR = Self Report; Effect Size: X: Insufficient information to calculate effect size.

Web-based Training Methods

Virtual classroom

The present review identified 10 studies utilizing the virtual classroom as a web-based training method, which employs a group-based learning design in which a facilitator provides resources for the learners (see Table 1). Nine of these studies (90%) demonstrated a variety of positive outcomes following participation in the virtual classroom training method. Seven studies included sufficient information to calculate effect sizes; the average effect size was g = .61 (range = .16–1.34) for knowledge and g = 1.2 (range = .96–1.59) for skill. In comparison to the serial instruction method, one study found that the virtual classroom method was associated with higher levels of burnout (Leykin, Cucciare, & Weingardt, 2011). When the virtual classroom was compared to face-to-face training, both training methods resulted in increases in knowledge, skills, and use; however, the face-to-face training was associated with a larger effect size for knowledge (g = .73) than the virtual classroom training (g = .16) (Rawson et al., 2013).

Serial instruction

The present review yielded 37 studies meeting inclusion criteria for web-based training with the serial instruction method, which is a linear tutorial model in which each learner proceeds through content in the same order as other learners (Table 2). A majority of included studies (n = 35; 95%) found that the serial instruction training method was associated with positive training outcomes. There were two exceptions to this overall finding, in which trainees participating in the serial instruction condition were less likely to be skillful (Chu et al., 2017; German et al., in press) and demonstrated lower knowledge and implementation potential compared to other conditions. Average effect sizes were g = 1.29 (range = −1.70 – 4.70) for knowledge, g = .15 (range = −2.10 – 1.53) for skill, g = .78 (range = .04 – 1.34) for self-efficacy, and g = .65 (range = .05 – 1.15) for use. Nine studies directly compared the serial instruction training method to a face-to-face training method. Five of these studies found that the serial instruction training method was comparable to face-to-face training (German et al., in press; Marshal et al., 2014; Rheingold et al., 2012; Stein et al., 2015; Weingardt et al., 2006). Two studies found that the face-to-face condition was superior to the serial instruction training method (Cohen et al., 2016; Vismara et al., 2009). The remaining two studies found mixed results, with the effectiveness of conditions differing across outcomes (Dimeff et al., 2011; Dimeff et al., 2015). Across studies that included information on effect size, face-to-face training outcomes were associated with larger effect sizes than serial instruction training (Cohen et al., 2016; Rawson et al., 2013; Vismara et al., 2009).

Self-directed learning

Two studies evaluated the effectiveness of the self-directed learning method, in which the learner chooses the learning modules that fit the individual’s needs and learning objectives (see Table 3). Across studies, participation in the self-directed learning method was associated with increased knowledge and skill (Sholomskas et al., 2005), as well as satisfaction, use, and self-efficacy (Han, Voils, & Williams, 2013). Sholomskas et al. (2005) found that the addition of a self-directed learning component to written materials resulted in an increase in knowledge (g = .29) and skill (g = .22) compared to written materials alone.

Simulation training

Summaries of the two studies that utilized a simulation training method, where training involves virtual patients that the learner interacts with to determine treatment, are displayed in Table 4. Both of the included studies found that the simulation training method was related to positive outcomes in knowledge, skill, attitudes, satisfaction, and use following training (Harned et al., 2014; Kobak, Wolitsky-Taylor, Craske, & Rose, 2017). There was an average effect size for knowledge of g = 2.03 (range = .38 – 3.68) and skill of g = 1.75 (range = .39 – 3.11).
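The averages reported for simulation training are consistent with a simple unweighted mean of the two study-level values listed in Table 4. The short sketch below reproduces that arithmetic; it assumes unweighted averaging, and the variable and function names are illustrative only.

```python
from statistics import mean

# Hedges' g values for the two simulation studies as listed in Table 4
# (Harned et al., 2014; Kobak et al., 2017).
knowledge_g = [0.38, 3.68]
skill_g = [0.39, 3.11]


def summarize(values):
    """Return the unweighted mean (rounded to 2 d.p.) and the (min, max) range."""
    return round(mean(values), 2), (min(values), max(values))


knowledge_summary = summarize(knowledge_g)
skill_summary = summarize(skill_g)

print("knowledge:", knowledge_summary)
print("skill:", skill_summary)
```

With only two studies, the mean sits midway between a small and a very large effect, which is why the range is reported alongside it.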

Ongoing support

Thirteen studies in the current review included a form of ongoing support (i.e., consultation, supervision; Table 5). A majority of studies (n = 12; 92%) found that the inclusion of ongoing support was associated with various positive outcomes, including completion, knowledge, attitudes, skill, and fidelity. Four of the 13 studies made comparisons between participants who received ongoing support and those who did not. Two of these studies found that ongoing support was associated with more positive outcomes than not participating in ongoing support (Harned et al., 2014; Rakovshik et al., 2016). One study by Bennett-Levy et al. (2012) found that participants who received ongoing support did not significantly differ on knowledge, skill, or self-efficacy compared to trainees who did not receive ongoing support. Similarly, a study by Chu et al. (2017) concluded that trainees receiving ongoing support demonstrated decreases in knowledge, skill, and implementation potential. For conditions directly comparing the inclusion of ongoing support to a condition without ongoing support, there was an average effect size of g = −.04 (range = −2.1 – .95).

Discussion

The purpose of this review was to obtain a greater understanding of the outcomes associated with web-based training methods for behavioral health clinicians. Although previous reviews have examined the effectiveness of a variety of training methods, the current review extends these previous reviews by focusing on web-based training methods for behavioral health providers. Following a literature search, 45 articles were identified as meeting inclusion criteria, and were subsequently categorized according to rigor and web-based training method.

One of the primary findings of this review is the heterogeneity of web-based training methods available to behavioral health clinicians. The availability of a variety of training methods is promising in that training methods can be tailored to fit the individual needs of clinicians. It is important to note, though, that the most commonly used training method was serial instruction. Because this method is low-cost and does not require an instructor to lead the web-based training, it can be disseminated to a wide audience of behavioral health clinicians and therefore has the potential for a broad public health impact.

In general, results across these studies indicate that web-based training methods may be an effective way to train behavioral health clinicians. For example, these studies found that participation in web-based training methods was generally associated with increases in knowledge, skill, self-efficacy, and use. This finding is consistent with previous systematic reviews demonstrating that web-based training may result in positive outcomes of a moderate effect size for healthcare professionals (Cook et al., 2008; Roh & Park, 2010). High rates of training completion were also noted, which, combined with increasingly widespread accessibility to the internet, make web-based training more promising than in-person training in terms of its potential to reach a wide range of clinicians. This is particularly important as behavioral health providers have noted the importance of identifying inexpensive training options that may produce similar results to an in-person training (Powell, McMillen, Hawley, & Proctor, 2013).

Although positive outcomes were noted in these studies, it was difficult to draw conclusions on the most effective web-based training design given that there were relatively few studies comparing the various training methods. Several studies also compared web-based training methods to face-to-face training methods. A majority of these studies found that web-based training methods were comparable to face-to-face training methods, but several studies did find that face-to-face training methods produced better outcomes. This finding is similar to other reviews that have noted inconsistencies in the relative effectiveness of web-based training compared to other training methods (Cook et al., 2008). Where effect sizes could be calculated, however, face-to-face training generally was associated with a larger effect size than web-based training methods. A previous systematic review found that web-based training demonstrates larger effect sizes than face-to-face training (Sitzmann, Kraiger, Stewart, & Wisher, 2006), but the current review did not support this finding. The effect sizes for face-to-face training outcomes generally fell within the medium to large range, consistent with a previous meta-analysis on the effectiveness of training personnel in organizations (Arthur, Bennett, Edens, & Bell, 2003). Given these mixed findings, additional research is needed to more fully understand the relative effectiveness of web-based training in comparison to face-to-face training.

This review also examined studies that utilized ongoing support (e.g., consultation, supervision) in addition to a web-based training method. However, these studies demonstrated mixed evidence on the importance of utilizing ongoing support in combination with a web-based training. This may be partially due to the large variation in dosage (e.g., 30 minutes, 1 hour) and duration (e.g., 6 months, 12 months) of ongoing support among studies. The importance of ongoing support as a mechanism for improving clinician knowledge in an EBP and connecting clinicians with peers and trainers has received empirical support within the field of behavioral health (Nadeem, Gleacher, & Beidas, 2013). Within the field of education, a meta-analysis by Zhao and colleagues (2005) found that higher instructor involvement is linked to greater achievement of learning objectives. In the current review, the virtual classroom training type likely had greater instructor involvement than other methods such as serial instruction. Therefore, in situations such as web-based training where there is a lack of face-to-face contact with trainers and peers, the interaction that ongoing support emphasizes may be even more pertinent.

Limitations

Several limitations of the current review should be noted. The first limitation is that the search strategy and inclusion criteria utilized may have restricted the findings of the review. Although the search strategy employed a variety of terms to identify studies of web-based training, there is a lack of consistency in the terms used to define web-based training (e.g., online training, distance education), which may have led to the unintentional omission of studies from the current review. Additionally, the current review excluded articles that were not written in English. While the review did include international articles, we were unable to analyze non-English articles, which may have limited our findings to research primarily from English-speaking countries.

A second limitation of the current review is that we did not pilot our coding framework or calculate an inter-rater reliability statistic to assess reliability between coders. It has been well-documented that inter-rater reliability is an important step in systematic reviews to reduce subjectivity and present an objective synthesis of the review’s findings (Gough, Oliver, & Thomas, 2017). While we did not calculate inter-rater reliability statistics, we met frequently to discuss discrepancies and achieve consensus on coding. Despite our efforts to enhance reliability, the results of this review may be hindered by the subjective interpretations of the coders.

Third, while the current review attempted to calculate effect sizes to determine the effectiveness of web-based training methods, a meta-analysis was not performed and we did not assess risk of bias or heterogeneity of the included studies. This limits the interpretations that can be made following the synthesis of studies. Therefore, we qualify the findings of our results and temper our interpretations of the effectiveness of the various training methods. Additionally, we included studies that did not have sufficient information to calculate effect size, yet we thought it was important to identify all possible articles that may be relevant to this growing area of research.
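For readers less familiar with the metric used in the tables, Hedges' g is a standardized mean difference with a small-sample correction. The sketch below uses the common textbook form for two independent groups; whether the review used exactly this variant is an assumption, and the function name and example numbers are hypothetical rather than drawn from any included study.

```python
import math


def hedges_g(mean1, mean2, sd1, sd2, n1, n2):
    """Standardized mean difference with Hedges' small-sample correction."""
    # Pooled standard deviation of the two groups
    pooled_sd = math.sqrt(
        ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / (n1 + n2 - 2)
    )
    d = (mean1 - mean2) / pooled_sd  # Cohen's d
    # Approximate correction factor J = 1 - 3 / (4 * df - 1), with df = n1 + n2 - 2
    correction = 1 - 3 / (4 * (n1 + n2) - 9)
    return d * correction


# Hypothetical post-training knowledge scores: trained group vs. control group
g = hedges_g(mean1=10.0, mean2=8.0, sd1=2.0, sd2=2.0, n1=20, n2=20)
print(round(g, 3))
```

Because the correction factor approaches 1 as samples grow, g and Cohen's d differ noticeably only in small studies, which are common among the pilot designs summarized here.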

A fourth concern of the review is the inadequate description of web-based training provided in research articles. While several of the articles cited links to access the described web-based training, many of these links were no longer functioning. In addition, when articles did include information about the web-based training platform, this information was often insufficient and only provided general characteristics about the training. Although the present review attempted to categorize these web-based trainings according to method (e.g., serial instruction) and dosage, there is still a high level of heterogeneity within each training type (Shapiro, 2001). These limitations hinder the conclusions that may be made about the overall effectiveness of web-based training methods due to a lack of consistency in the description of training method and dosage.

A Critique of the Literature

The methodological quality of the included studies also should be noted. While many of the limitations identified below are also relevant to face-to-face training, it is important to highlight how they affect the literature on web-based training. Many of the articles included in the review were classified as Type 3 studies, with few studies being classified as either Type 1 or 2. Although the results summarized in this review suggest favorable post-training outcomes, it is important to keep in mind that pilot data are insufficient, as pilot studies commonly do not include a control group or randomization, leading to numerous internal validity threats. Additional studies that directly compare groups of participants receiving different web-based training methods, as well as control groups, are needed to understand the relative effectiveness of each design type.

Similarly, the methodological quality of the studies was hindered by the common use of study-developed outcome measures. The lack of standardized measures is a prevalent issue in the field of implementation science, and the web-based training literature is no exception (Martinez, Lewis, & Weiner, 2014). Although the use of study-developed measures often occurs in fields advancing quickly, a lack of proper instrumentation limits the validity and reliability of interpretations that may be drawn from studies (Martinez et al., 2014). A related concern is that the use of study-developed measures often hinders the ability to compare findings across studies (Martinez et al., 2014). While using study-developed measures is understandable in a field that is rapidly developing, it is important to understand the impact that it has on interpreting outcomes.

Similarly, the majority of studies included in this review used self-report methods to assess clinician knowledge and skill. This is problematic because studies examining the validity of clinician self-report have found that clinicians typically rate themselves as more skillful compared with behavioral observations (Miller & Mount, 2001; Miller, Yahne, Moyers, Martinez, & Pirritano, 2004). Although a number of barriers exist to using behavioral observations (e.g., cost, time, availability of trained observers), it is crucial that researchers begin using them with more regularity to provide an accurate measure of outcomes. Unlike other domains in which web-based training is often used (e.g., to implement new technologies in the workplace, to impart knowledge about new policies), clinicians require change in their skills to produce positive client changes. It is imperative that researchers accurately assess the skills of trainees to ensure that web-based training results in adequate skill and behavior change.

Future Directions

This review provides insight into the growing area of web-based training as well as suggestions for future research on web-based training. Improvements in study design would greatly strengthen the interpretations that can be drawn from these studies. To improve upon the current literature, greater depth is needed in descriptions of the web-based training method utilized and the outcomes measured. As highlighted previously, it is necessary for studies to include an adequate description of the web-based training to understand effective components and web-based training designs. While studies assessing web-based training commonly utilize knowledge and satisfaction as outcome measures, the outcomes of skill and fidelity are even more pertinent to the goals of behavioral health clinicians. Including these outcomes in future studies will provide insight into the utility of web-based training methods for training behavioral health clinicians in EBPs. These improvements in study design are necessary to understanding the efficacy of web-based training.

Additionally, many of the included studies relied heavily on self-report, study-developed measures to evaluate clinician learning. Researchers evaluating web-based training should be conscious of utilizing measures that have established psychometric evidence, and should collect information from a variety of informants (e.g., supervisors, clients) and methods (e.g., behavioral observation, self-report). As an alternative to study-developed measures, future studies may also utilize measures such as the Cognitive Behavioral Therapy Questionnaire (CBT-Q; Myles, Latham, & Ricketts, 2003) or the Provider Efficacy Questionnaire (Ozer et al., 2004), which have demonstrated psychometric evidence. When it is not possible to utilize a measure with established psychometric evidence, researchers should report on the psychometric properties of the study-developed measures.

To understand web-based training, more studies comparing the different web-based training methods are needed. A majority of included studies in the review examined the efficacy of one type of web-based training method; however, further studies should include at least two web-based training methods in the study design. Comparison studies should also be conducted examining the differences in outcomes between face-to-face and web-based training methods. Research has supported the efficacy of face-to-face multi-component training, which typically includes several design components (e.g., face-to-face workshop and consultation), in improving clinician knowledge, skill, and adherence (Herschell et al., 2010; Schoenwald, Chapman, Sheidow, & Carter, 2009). Future research should seek to understand how the multi-component training design compares to web-based training methods.

Web-based training methods have recently garnered a great amount of interest, yet little is known about their efficacy for behavioral health clinicians. Although web-based training has the potential to make a broad public health impact, there is insufficient evidence to conclude whether it can effectively train clinicians. This is important, given that web-based training methods for behavioral health clinicians are consistently being developed and disseminated ahead of supporting empirical evidence. By understanding web-based training, training efforts and guidelines for behavioral health clinicians can further be refined, with the goal of ultimately improving the delivery of EBPs within communities.

Acknowledgments

Funding: This research was funded by a grant from the National Institute of Mental Health (NIMH; R01 MH095750; A Statewide Trial to Compare Three Training Models for Implementing an EBT; PI: Herschell).

Footnotes

Compliance with Ethical Standards:

Conflict of Interest: All authors declare that they have no conflict of interest.

Ethical approval: This article does not contain any studies with human participants performed by any of the authors.

References

- American Psychological Association. Task force on promotion and dissemination of psychological procedures. The meeting of the American Psychological Association.1993. [Google Scholar]

- APA Presidential Task Force on Evidence-Based Practice. Evidence-based practice in psychology. American Psychologist. 2006;61(4):271–285. doi: 10.1037/0003-066X.61.4.271. http://doi.org/10.1037/0003-066X.61.4.271. [DOI] [PubMed] [Google Scholar]

- Arthur W, Jr, Bennett W, Jr, Edens PS, Bell ST. Effectiveness of training in organizations: A meta-analysis of design and evaluation features. Journal of Applied Psychology. 2003;88(2):234–245. doi: 10.1037/0021-9010.88.2.234. http://dx.doi.org/10.1037/0021-9010.88.2.234. [DOI] [PubMed] [Google Scholar]

- Ayers CR, Sorrell JT, Thorp SR, Wetherell JL. Evidence-based psychological treatments for late-life anxiety. Psychology and Aging. 2007;22(1):8–17. doi: 10.1037/0882-7974.22.1.8. http://doi.org/10.1037/0882-7974.22.1.8. [DOI] [PubMed] [Google Scholar]

- Beidas RS, Kendall PC. Training Therapists in Evidence-Based Practice: A Critical Review of Studies From a Systems-Contextual Perspective. Clinical Psychology: Science and Practice. 2010;17(1):1–30. doi: 10.1111/j.1468-2850.2009.01187.x. http://doi.org/10.1111/j.1468-2850.2009.01187.x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bennett-Levy J, Hawkins R, Perry H, Cromarty P, Mills J. Online cognitive behavioural therapy training for therapists: Outcomes, acceptability, and impact of support. Australian Psychologist. 2012;47(3):174–182. http://doi.org/10.1111/j.1742-9544.2012.00089.x. [Google Scholar]

- Bray JW, Bray LM, Lennox R, Mills MJ, McRee B, Goehner D, Higgins-Biddle JC. Evaluating web-based training for employee assistance program counselors on the use of screening and brief intervention for at-risk alcohol use. Journal of Workplace Behavioral Health. 2009;24(3):307–319. [Google Scholar]

- Brose LS, West R, Michie S, Kenyon JAM, McEwen A, LSB, … McEwen A. Effectiveness of an online knowledge training and assessment program for stop smoking practitioners. Nicotine and Tobacco Research. 2012;14(7):794–800. doi: 10.1093/ntr/ntr286. http://doi.org/10.1093/ntr/ntr286. [DOI] [PubMed] [Google Scholar]

- Brownlow RS, Maguire S, O’Dell A, Dias-da-Costa C, Touyz S, Russell J. Evaluation of an online training program in eating disorders for health professionals in Australia. Journal of Eating Disorders. 2015;3(1):37. doi: 10.1186/s40337-015-0078-7. http://doi.org/10.1186/s40337-015-0078-7. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Bruns EJ, Kerns SEU, Pullmann MD, Hensley S. Research, Data, and Evidence-Based Treatment Use in State Behavioral Health Systems, 2001–2012. Psychiatric Services. 2015;67(5):496–503. doi: 10.1176/appi.ps.201500014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Calder R, Ainscough T, Kimergård A, Witton J, Dyer KR. Online training for substance misuse workers: A systematic review. Drugs: Education, Prevention and Policy. 2017:1–13. [Google Scholar]

- Chorpita BF, Daleiden EL, Ebesutani C, Young J, Becker KD, Nakamura BJ, … Starace N. Evidence-based treatment of children and adolescents: An updated review of indicators of efficacy and effectiveness. Clinical Psychology: Science and Practice. 2011;18(2):154–172. [Google Scholar]

- Chu BC, Carpenter AL, Wyszynski CM, Conklin PH, Comer JS. Scalable Options for Extended Skill Building Following Didactic Training in Cognitive-Behavioral Therapy for Anxious Youth: A Pilot Randomized Trial. Journal of Clinical Child and Adolescent Psychology. 2017;46(3):401–410. doi: 10.1080/15374416.2015.1038825. http://doi.org/10.1080/15374416.2015.1038825. [DOI] [PubMed] [Google Scholar]

- Cohen JA, Mannarino AP, Jankowski K, Rosenberg S, Kodya S, Wolford GL. A Randomized Implementation Study of Trauma-Focused Cognitive Behavioral Therapy for Adjudicated Teens in Residential Treatment Facilities. Child Maltreatment. 2016;21(2):156–167. doi: 10.1177/1077559515624775. http://doi.org/10.1177/1077559515624775. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cook DA, Levinson AJ, Garside S, Dupras DM, Erwin PJ, Montori VM. Internet-based learning in the health professions: a meta-analysis. JAMA. 2008;300(10):1181–1196. doi: 10.1001/jama.300.10.1181. [DOI] [PubMed] [Google Scholar]

- Crawford AM, Curran JH. Disseminating Behavioural Activation for Depression via Online Training: Preliminary Steps. Behavioural and Cognitive Psychotherapy. 2015;43:224–238. doi: 10.1017/S1352465813000842. http://doi.org/10.1177/0192513X12437708. [DOI] [PubMed] [Google Scholar]

- Dimeff LA, Koerner K, Woodcock EA, Beadnell B, Brown MZ, Skutch JM, … MSH Which training method works best? A randomized controlled trial comparing three methods of training clinicians in dialectical behavior therapy skills. Behaviour Research and Therapy. 2009;47(11):921–930. doi: 10.1016/j.brat.2009.07.011. http://doi.org/10.1016/j.brat.2009.07.011. [DOI] [PubMed] [Google Scholar]

- Dimeff LA, Harned MS, Woodcock EA, Skutch JM, Koerner K, Linehan MM. Investigating Bang for Your Training Buck: A Randomized Controlled Trial Comparing Three Methods of Training Clinicians in Two Core Strategies of Dialectical Behavior Therapy. Behavior Therapy. 2015;46(3):283–295. doi: 10.1016/j.beth.2015.01.001.

- Dimeff LA, Woodcock EA, Harned MS. Can Dialectical Behavior Therapy Be Learned in Highly Structured Learning Environments? Results From a Randomized Controlled Dissemination Trial. Behavior Therapy. 2011;42(2):263–275. doi: 10.1016/j.beth.2010.06.004.

- Fairburn CG, Allen E, Bailey-Straebler S, O’Connor ME, Cooper Z. Scaling up psychological treatments: A countrywide test of the online training of therapists. Journal of Medical Internet Research. 2017;19(6):319–324. doi: 10.2196/jmir.7864.

- Fixsen DL, Naoom SF, Blase KA, Friedman RM, Wallace F. Implementation Research: A Synthesis of the Literature. 2005.

- German RE, Adler A, Frankel SA, Stirman SW, Pinedo P, Evans AC, … Creed TA. Testing a Web-Based, Trained-Peer Model to Build Capacity for Evidence-Based Practices in Community Mental Health Systems. Psychiatric Services. 2017;(21):appi.ps.2017000. doi: 10.1176/appi.ps.201700029.

- Ghoncheh R, Gould MS, Twisk JW, Kerkhof AJ, Koot HM. Efficacy of Adolescent Suicide Prevention E-Learning Modules for Gatekeepers: A Randomized Controlled Trial. JMIR Mental Health. 2016;3(1):e8. doi: 10.2196/mental.4614.

- Gough D, Oliver S, Thomas J, editors. An introduction to systematic reviews. Sage; 2017.

- Gryglewicz K, Chen JI, Romero GD, Karver MS, Witmeier M. Online suicide risk assessment and management training: Pilot evidence for acceptability and training effects. Crisis: The Journal of Crisis Intervention and Suicide Prevention. 2017;38(3):186–194. doi: 10.1027/0227-5910/a000421.

- Han C, Voils CI, Williams JW Jr. Uptake of Web-based clinical resources from the MacArthur Initiative on Depression and Primary Care. Community Mental Health Journal. 2013;49(2):166–171. doi: 10.1007/s10597-011-9461-2.

- Harned MS, Dimeff LA, Woodcock EA, Skutch JM. Overcoming barriers to disseminating exposure therapies for anxiety disorders: A pilot randomized controlled trial of training methods. Journal of Anxiety Disorders. 2011;25(2):155–163. doi: 10.1016/j.janxdis.2010.08.015.

- Harned MS, Dimeff LA, Woodcock EA, Kelly T, Zavertnik J, Contreras I, Danner SM. Exposing Clinicians to Exposure: A Randomized Controlled Dissemination Trial of Exposure Therapy for Anxiety Disorders. Behavior Therapy. 2014;45(6):731–744. doi: 10.1016/j.beth.2014.04.005.

- Heck NC, Saunders BE, Smith DW. Web-based training for an evidence-supported treatment: Training completion and knowledge acquisition in a global sample of learners. Child Maltreatment. 2015;20(3):183–192. doi: 10.1177/1077559515586569.

- Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010a;30(4):448–466. doi: 10.1016/j.cpr.2010.02.005.

- Herschell AD, Kolko DJ, Baumann BL, Davis AC. The role of therapist training in the implementation of psychosocial treatments: A review and critique with recommendations. Clinical Psychology Review. 2010b;30(4):448–466. doi: 10.1016/j.cpr.2010.02.005.

- Kanter JW, Tsai M, Holman G, Koerner K. Preliminary data from a randomized pilot study of web-based functional analytic psychotherapy therapist training. Psychotherapy. 2013;50(2):248–255. doi: 10.1037/a0029814.

- Kobak KA, Craske MG, Rose RD, Wolitsky-Taylor K. Web-based therapist training on cognitive behavior therapy for anxiety disorders: A pilot study. Psychotherapy. 2013;50(2):235–247. doi: 10.1037/a0030568.

- Kobak KA, Lipsitz JD, Markowitz JC, Bleiberg KL. Web-based therapist training in interpersonal psychotherapy for depression: Pilot study. Journal of Medical Internet Research. 2017;19(7):306–317. doi: 10.2196/jmir.7966.

- Kobak KA, Wolitsky-Taylor K, Craske MG, Rose RD. Therapist Training on Cognitive Behavior Therapy for Anxiety Disorders Using Internet-Based Technologies. Cognitive Therapy and Research. 2017;41(2):252–265. doi: 10.1007/s10608-016-9819-4.

- Larson MJ, Amodeo M, Locastro JS, Muroff J, Smith L, Gerstenberger E. Randomized trial of web-based training to promote counselor use of cognitive behavioral therapy skills in client sessions. Substance Abuse. 2013;34(2):179–187. doi: 10.1080/08897077.2012.746255.

- Leykin Y, Cucciare MA, Weingardt KR. Differential effects of online training on job-related burnout among substance abuse counsellors. Journal of Substance Use. 2011;16(2):127–135. doi: 10.3109/14659891.2010.526168.

- Martinez RG, Lewis CC, Weiner BJ. Instrumentation issues in implementation science. Implementation Science. 2014;9:118. doi: 10.1186/s13012-014-0118-8.

- Martino S, Canning-Ball M, Carroll KM, Rounsaville BJ. A criterion-based stepwise approach for training counselors in motivational interviewing. Journal of Substance Abuse Treatment. 2011;40(4):357–365. doi: 10.1016/j.jsat.2010.12.004.

- McHugh RK, Barlow DH. The dissemination and implementation of evidence-based psychological treatments: A review of current efforts. The American Psychologist. 2010;65(2):73–84. doi: 10.1037/a0018121.

- McMillen JC, Hawley KM, Proctor EK. Mental Health Clinicians’ Participation in Web-Based Training for an Evidence Supported Intervention: Signs of Encouragement and Trouble Ahead. Administration and Policy in Mental Health and Mental Health Services Research. 2015;43(4):592–603. doi: 10.1007/s10488-015-0645-x.

- McPherson TL, Cook RF, Back AS, Hersch RK, Hendrickson A. A field test of a web-based substance abuse prevention training program for health promotion professionals. American Journal of Health Promotion. 2006;20(6):396–400. doi: 10.4278/0890-1171-20.6.396.

- Mignogna J, Hundt NE, Kauth MR, Kunik ME, Sorocco KH, Naik AD, … Cully JA. Implementing brief cognitive behavioral therapy in primary care: A pilot study. Translational Behavioral Medicine. 2014;4(2):175–183. doi: 10.1007/s13142-013-0248-6.

- Miller WR, Mount KA. A small study of training in motivational interviewing: Does one workshop change clinician and client behavior? Behavioural and Cognitive Psychotherapy. 2001;29(4):457–471.

- Miller WR, Yahne CE, Moyers TB, Martinez J, Pirritano M. A randomized trial of methods to help clinicians learn motivational interviewing. Journal of Consulting and Clinical Psychology. 2004;72(6):1050. doi: 10.1037/0022-006X.72.6.1050.

- Morris ZS, Wooding S, Grant J. The answer is 17 years, what is the question: understanding time lags in translational research. Journal of the Royal Society of Medicine. 2011;104(12):510–520. doi: 10.1258/jrsm.2011.110180.

- Myles PJ, Latham M, Ricketts T. The contributions of an expert panel in the development of a new measure of knowledge for the evaluation of training in cognitive behavioural therapy. Paper presented at the British Association of Behavioural and Cognitive Psychotherapies Annual Conference; York. 2003.

- Nadeem E, Gleacher A, Beidas RS. Consultation as an implementation strategy for evidence-based practices across multiple contexts: Unpacking the black box. Administration and Policy in Mental Health and Mental Health Services Research. 2013;40(6):439–450. doi: 10.1007/s10488-013-0502-8.

- Nathan PE, Gorman JM. A Guide to Treatments that Work. 2nd ed. London: Oxford University Press; 2002.

- National Institute of Mental Health. Bridging science and service: A report by the National Advisory Mental Health Council’s Clinical Treatment and Services Work Group. Rockville, MD: 1998.

- Ozer EM, Adams SH, Gardner LR, Mailloux DE, Wibbelsman CJ, Irwin CE Jr. Provider self-efficacy and the screening of adolescents for risky health behaviors. Journal of Adolescent Health. 2004;35:101–107. doi: 10.1016/j.jadohealth.2003.09.016.

- Powell BJ, McMillen JC, Hawley KM, Proctor EK. Mental health clinicians’ motivation to invest in training: Results from a practice-based research network survey. Psychiatric Services. 2013;64(8):816–818. doi: 10.1176/appi.ps.003602012.

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, … Kirchner JE. A refined compilation of implementation strategies: results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10(1):21. doi: 10.1186/s13012-015-0209-1.

- Puspitasari A, Kanter JW, Murphy J, Crowe A, Koerner K. Developing an online, modular, active learning training program for behavioral activation. Psychotherapy. 2013;50(2):256–265. doi: 10.1037/a0030058.

- Rakovshik SG, McManus F, Vazquez-Montes M, Muse K, Ougrin D. Is supervision necessary? Examining the effects of internet-based CBT training with and without supervision. Journal of Consulting and Clinical Psychology. 2016;84(3):191–199. doi: 10.1037/ccp0000079.

- Rawson RA, Rataemane S, Rataemane L, Ntlhe N, Fox RS, McCuller J, Brecht ML. Dissemination and implementation of cognitive behavioral therapy for stimulant dependence: A randomized trial comparison of 3 approaches. Substance Abuse. 2013;34(2):108–117. doi: 10.1080/08897077.2012.691445.

- Rees CS, Gillam D. Training in cognitive-behavioural therapy for mental health professionals: a pilot study of videoconferencing. Journal of Telemedicine and Telecare. 2001;7(5):300–303. doi: 10.1258/1357633011936561.

- Rees CS, Krabbe M, Monaghan BJ. Education in cognitive-behavioural therapy for mental health professionals. Journal of Telemedicine and Telecare. 2009;15(2):59–63. doi: 10.1258/jtt.2008.008005.

- Reid WM, Ruzycki S, Haney ML, Brown LM, Baggerly J, Mescia N, Hyer K. Disaster mental health training in Florida and the response to the 2004 hurricanes. Journal of Public Health Management and Practice. 2005;Suppl:S57–62. doi: 10.1097/00124784-200511001-00010.

- Rheingold AA, Zajac K, Patton M. Feasibility and acceptability of a child sexual abuse prevention program for childcare professionals: Comparison of a web-based and in-person training. Journal of Child Sexual Abuse. 2012;21(4):422–436. doi: 10.1080/10538712.2012.675422.

- Roh KH, Park H. A meta-analysis on the effectiveness of computer-based education in nursing. Healthcare Informatics Research. 2010;16(3):149–157. doi: 10.4258/hir.2010.16.3.149.

- Rones M, Hoagwood K. School-based mental health services: a research review. Clinical Child and Family Psychology Review. 2000;3(4):223–241. doi: 10.1023/a:1026425104386.