We applaud Matz et al. (1) for using field studies on Facebook to investigate the effectiveness of psychologically targeted messages, building on prior, largely laboratory-based research. That said, this comparison of Facebook ad campaigns does not randomly assign users to conditions, which threatens the internal validity of their findings and weakens their conclusions.

The paper uses Facebook’s standard ad platform to compare how different versions of ads perform. However, this process does not create a randomized experiment: users are not randomly assigned to different ads, and individuals may even receive multiple ad types (e.g., both extroverted and introverted ads). Furthermore, ad platforms like Facebook optimize campaign performance by showing ads to users who the platform expects are more likely to fulfill the campaign’s objective (e.g., study 1, online purchases; studies 2 and 3, app installs). This optimization generates differences in the set of users exposed to each ad type, so that differences in responses across ads do not by themselves indicate a causal effect.
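To make the selection mechanism concrete, the following minimal Python sketch computes expected conversion rates for two ads that have no causal effect at all but are delivered to different mixes of users. Every number and name in it (the two user segments, their baseline rates, and the delivery shares) is a hypothetical placeholder chosen for illustration, not a quantity from Matz et al. (1) or from Facebook.

```python
# Hypothetical baseline conversion rates by user segment.
# The rates are identical under both ads, i.e., neither ad has any causal effect.
baseline_rate = {"younger": 0.010, "older": 0.020}

# Hypothetical share of each ad's impressions that a delivery optimizer
# sends to each segment (each ad's shares sum to 1).
delivery_mix = {
    "ad_A": {"younger": 0.8, "older": 0.2},
    "ad_B": {"younger": 0.3, "older": 0.7},
}


def expected_conversion_rate(mix):
    """Average the segment baseline rates, weighted by the ad's delivery mix."""
    return sum(share * baseline_rate[segment] for segment, share in mix.items())


rate_a = expected_conversion_rate(delivery_mix["ad_A"])
rate_b = expected_conversion_rate(delivery_mix["ad_B"])

# Relative gap between the ads that arises purely from who was exposed.
relative_gap = rate_b / rate_a - 1

print(f"ad_A rate: {rate_a:.4f}")
print(f"ad_B rate: {rate_b:.4f}")
print(f"relative gap with zero ad effect: {relative_gap:.1%}")
```

Under these assumed values the two ads show different conversion rates even though neither changes anyone's behavior, which is the sense in which a naive comparison across ads can mislead.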

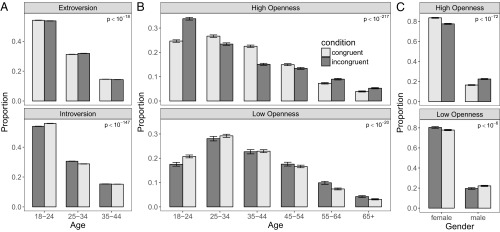

Accordingly, statistical tests comparing all reported preexisting user characteristics reveal differences across ad type conditions. Using the authors’ provided data, in study 1, we find differences in the age distribution (Fig. 1A) among the “extroverted” users exposed to congruent versus incongruent ads [χ²(2) = 84.5, P < 10⁻¹⁸] and also among the “introverted” users (P < 10⁻¹⁴⁷). In study 2, we find similar differences in age (Fig. 1B) among “high-openness” users (P < 10⁻²¹⁷) and “low-openness” users (P < 10⁻²⁰), as well as differences in gender (Fig. 1C) (P < 10⁻⁷² and P < 10⁻⁶, respectively). (Study 3 reports no user characteristics. Code for this analysis is archived at https://osf.io/y8nuk.) More importantly, these differences in observables indicate potential differences in unobserved characteristics between exposed groups.
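For readers unfamiliar with the test, a minimal Python sketch of a Pearson χ² test of independence on a condition-by-age-bracket table follows. It is not the archived analysis code; the function names are hypothetical, and the counts are made-up placeholders rather than the authors' data. The P value helper relies on the fact that, for 2 degrees of freedom, the χ² survival function has the closed form exp(−x/2); applied to the reported statistic of 84.5 it gives roughly 4.5 × 10⁻¹⁹, in line with the reported bound.

```python
import math


def chi2_independence(table):
    """Return (statistic, degrees of freedom) for a Pearson chi-square
    test of independence on a contingency table given as a list of rows."""
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    n = sum(row_totals)
    stat = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            # Expected count if rows and columns were independent.
            expected = row_totals[i] * col_totals[j] / n
            stat += (observed - expected) ** 2 / expected
    df = (len(table) - 1) * (len(col_totals) - 1)
    return stat, df


def chi2_p_value_df2(stat):
    """Upper-tail P value of a chi-square statistic, valid only for df = 2."""
    return math.exp(-stat / 2)


# Placeholder counts: rows are ad conditions (congruent, incongruent),
# columns are three age brackets. These are NOT the study's data.
placeholder_counts = [
    [10, 20, 30],
    [30, 20, 10],
]

stat, df = chi2_independence(placeholder_counts)
assert df == 2  # the closed-form P value below applies only in this case
print(f"chi2({df}) = {stat:.1f}, P = {chi2_p_value_df2(stat):.2e}")

# P value implied by the statistic reported in the text for study 1.
print(f"P for chi2(2) = 84.5: {chi2_p_value_df2(84.5):.2e}")
```

Tables with more than three columns or more than two rows have other degrees of freedom and need a general χ² survival function, for example from a statistics library.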

Fig. 1. Distribution of all reported user characteristics: age in study 1 (A), and age (B) and gender (C) in study 2. Error bars are 95% CIs. Displayed P values are from χ² tests of independence.

Could such confounding affect the results? In studies 1, 2, and 3 by Matz et al. (1), the reported 95% CIs for the relative increase in conversions are [25%, 90%], [9%, 58%], and [5%, 27%], respectively. Thus, biases of 25%, 9%, and 5%, the lower bounds of these intervals, are sufficient to account for these results even without differences in effectiveness. As one comparison point, Gordon et al. (ref. 2, section 5.1) document a Facebook study that similarly compares two ads and produces an incorrectly signed effect estimate relative to the experimental baseline; the implied confounding bias is 6%, 95% CI [2%, 11%]. The degree of confounding in Matz et al.’s (1) studies could be larger or smaller. The intent of this comparison is to highlight that confounds in user characteristics and ad exposure might explain a nonnegligible portion, if not all, of each study’s effects.
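The arithmetic behind this comparison can be laid out in a short Python sketch. It takes the lower bound of each reported interval as the smallest bias that would bring the interval down to zero and checks whether that value falls inside the bias interval implied by Gordon et al. (2). Treating the bias as a simple subtraction from the relative lift is a simplifying assumption made here for illustration, and the variable and function names are hypothetical.

```python
# Reported 95% CIs for the relative increase in conversions (percent),
# as quoted in the text for studies 1-3 of Matz et al.
reported_ci = {
    "study 1": (25, 90),
    "study 2": (9, 58),
    "study 3": (5, 27),
}

# 95% CI for the confounding bias implied by the Gordon et al. comparison (percent).
benchmark_bias_ci = (2, 11)


def bias_needed(ci):
    """Smallest bias that would pull the CI's lower bound down to zero,
    assuming (for illustration) that bias subtracts directly from the lift."""
    return ci[0]


def within(value, interval):
    """True if value lies inside the closed interval."""
    return interval[0] <= value <= interval[1]


summary = {
    study: (bias_needed(ci), within(bias_needed(ci), benchmark_bias_ci))
    for study, ci in reported_ci.items()
}

for study, (needed, in_range) in summary.items():
    note = "inside" if in_range else "outside"
    print(f"{study}: bias needed = {needed}%, {note} the benchmark range "
          f"[{benchmark_bias_ci[0]}%, {benchmark_bias_ci[1]}%]")
```

The benchmark comes from a single different campaign, so this check is only a reference point and says nothing definite about the size of the bias in any of the three studies.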

While suggestive, the Matz et al. (1) studies provide limited new evidence for the efficacy of psychologically targeted advertising. Researchers should be aware of the limitations of testing ad campaigns on platforms that optimize ad delivery and do not randomize users into conditions. Since the Matz et al. (1) studies were conducted, some ad platforms, including Facebook, have introduced tools for advertisers to conduct randomized experiments (3–5), which may aid future work.

Footnotes

Conflict of interest statement: D.E. and G.A.J. have been previously employed by Facebook. B.R.G. is a contractor of Facebook; he donates all resulting income to charity. D.E. and B.R.G. have co-authorship relationships with Facebook employees. D.E. has a significant financial interest in Facebook.

Data deposition: Code for our analysis has been deposited at https://osf.io/y8nuk.

References

- 1. Matz SC, Kosinski M, Nave G, Stillwell DJ. Psychological targeting as an effective approach to digital mass persuasion. Proc Natl Acad Sci USA. 2017;114:12714–12719. doi: 10.1073/pnas.1710966114.

- 2. Gordon BR, Zettelmeyer F, Bhargava N, Chapsky D. A comparison of approaches to advertising measurement: Evidence from big field experiments at Facebook. Whitepaper. 2016. Available at https://www.kellogg.northwestern.edu/faculty/gordon_b/files/kellogg_fb_whitepaper.pdf. Accessed May 7, 2018.

- 3. Johnson GA, Lewis RA, Nubbemeyer EI. Ghost ads: Improving the economics of measuring online ad effectiveness. J Mark Res. 2017;54:867–884.

- 4. Facebook. Conversion Lift. 2018. Available at https://www.facebook.com/business/a/conversion-lift. Accessed May 7, 2018.

- 5. Facebook. Split testing. 2018. Available at https://www.facebook.com/business/measurement/split-testing. Accessed May 7, 2018.