Abstract

Objective:

Understanding the costs to implement Screening, Brief Intervention, and Referral to Treatment (SBIRT) for adolescent substance use in primary care settings is important for providers in planning for services and for decision makers considering dissemination and widespread implementation of SBIRT. We estimated the start-up costs of two models of SBIRT for adolescents in a multisite U.S. Federally Qualified Health Center (FQHC). In both models, screening was performed by a medical assistant, but models differed on delivery of brief intervention, with brief intervention delivered by a primary care provider in the generalist model and a behavioral health specialist in the specialist model.

Method:

SBIRT was implemented at seven clinics in a multisite, cluster randomized trial. SBIRT implementation costs were calculated using an activity-based costing methodology. Start-up activities were defined as (a) planning activities (e.g., changing existing electronic medical record system and tailoring service delivery protocols); and (b) initial staff training. Data collection instruments were developed to collect staff time spent in start-up activities and quantity of nonlabor resources used.

Results:

The estimated average costs to implement SBIRT were $5,182 for the specialist model and $3,920 for the generalist model. Planning activities had the greatest impact on costs for both models. Overall, more resources were devoted to planning and training activities in specialist sites, making the specialist model costlier to implement.

Conclusions:

The initial investment required to implement SBIRT should not be neglected. The level of resources necessary for initial implementation depends on the delivery model and its integration into current practice.

Alcohol and drug problems are serious and costly societal issues affecting adolescents (Substance Abuse and Mental Health Services Administration [SAMHSA], 2013). In 2014, approximately 10% of adolescents in the United States were current users of illicit drugs, and about 12% currently used alcohol, including 6% who reported binge drinking in the past month (Center for Behavioral Health Statistics and Quality, 2015). These substance use patterns are a major threat to long-term public health, as most substance use disorders begin during adolescence and young adulthood, and early onset of substance use is associated with negative health and social outcomes later in life (Chen et al., 2005; Duncan et al., 1997). Unfortunately, the vast majority of adolescents with nascent substance use are not identified, and only 9% of adolescents who need substance abuse treatment actually receive it (SAMHSA, 2014).

In response to the need for early identification of substance abuse problems, the Screening, Brief Intervention, and Referral to Treatment (SBIRT) model was developed. The SBIRT model typically uses a universal screen to identify individuals who are at risk for substance use problems and then offers additional care to those who need it, either through brief intervention (BI) or referral to treatment (Knight et al., 2002, 2003; Reinert & Allen, 2007).

Support for the use of screening and brief intervention (SBI) and SBIRT continues to grow, especially as substance use treatment services are increasingly integrated with general medical care (Buck, 2011). For example, in the United States, the Affordable Care Act established SBI for all adults and alcohol and illicit substance use assessment for adolescents as standard preventive benefits (HealthCare.gov, 2013). In addition, several U.S. national and professional regulatory bodies (e.g., Surgeon General’s Call to Action to Prevent and Reduce Underage Drinking, National Quality Forum, and SAMHSA) have recommended SBI in medical, educational, and criminal justice settings (Padwa et al., 2012). Outside of the United States, national guidelines (National Institute for Health and Care Excellence, 2010), recommendations (World Health Organization, 2012), and policies (Bendtsen et al., 2016) encourage the implementation of SBI.

Despite this support and evidence from clinical trials (Bernstein et al., 2009, 2010; Colby et al., 2005; D’Amico et al., 2008; Heckman et al., 2010; Hollis et al., 2005; Martin & Copeland, 2008; McCambridge & Strang, 2004; Monti et al., 1999; Peterson et al., 2009; Spirito et al., 2004; Tait et al., 2004; Walton et al., 2010; Winters & Leitten, 2007) and meta-analyses (Tait & Hulse, 2003; Tanner-Smith & Lipsey, 2015; Tripodi et al., 2010) demonstrating the effectiveness of SBIRT for adolescents who use alcohol, tobacco, and marijuana, primary care providers have been slow to adopt this evidence-based approach. The lack of research on how best to implement SBIRT services for adolescents in primary care and on the costs involved may be one reason many providers are not implementing SBIRT (Mitchell et al., 2016).

Understanding the costs of SBIRT is important for policy makers and treatment providers when deciding when, how, and where to implement an SBIRT program. A lack of knowledge of the investment required to implement an SBIRT program may pose a barrier to its widespread adoption. A review of 47 studies assessing numerous potential barriers to adopting and sustaining SBI, which is similar in concept to SBIRT but without an explicit referral to treatment component, concluded that the lack of financial resources for SBI is one of the three most important barriers to implementation (Johnson et al., 2011). For decision makers to know whether financial resources for SBIRT are sufficient, they first need detailed estimates on the costs of implementing SBIRT.

This article presents estimates of the initial start-up costs associated with implementing SBIRT in seven clinics operated by a Federally Qualified Health Center (FQHC) that participated in a recent National Institute on Drug Abuse (NIDA)–funded study on SBIRT implementation strategies for adolescents (Mitchell et al., 2016). This large study was a seven-site cluster randomized trial that randomly assigned clinics to implement SBIRT through a generalist or a specialist SBIRT delivery approach for adolescents ages 12–17. In the generalist approach, a medical assistant delivers the initial screening assessment and a primary care provider delivers the BI for substance misuse, if needed. In the specialist approach, a medical assistant delivers the initial screening but a behavioral health counselor delivers the BI. The participating FQHC was a large, urban organization that provides general medical and behavioral health services to adolescents at seven clinics throughout the Baltimore, MD, area. The clinics had an average annual flow of about 800 adolescent patient-visits, with a range from 180 to 1,200. The seven clinics were randomized into generalist (n = 4) and specialist (n = 3) implementation conditions (Mitchell et al., 2016). One of the key implementation outcomes of the study was to understand the cost of SBIRT implementation for adolescents in an FQHC using a generalist versus specialist delivery approach.

With one exception (Zarkin et al., 2003), recent studies that estimated the cost of SBIRT programs (Barbosa et al., 2016; Bray et al., 2014) did not account for the start-up cost component that is critical to the real-world adoption of SBIRT. Zarkin et al. (2003) estimated the cost of alcohol SBI for risky drinking as implemented in four large managed care organizations in the United States. The authors compared SBI costs of generalist and specialist models of implementation and separated costs into start-up and ongoing implementation costs. After adjustments to exclude some less common expenses, the authors reported a start-up cost of $67,640 per clinic assuming each managed care organization implemented the intervention in three clinics, and $76,540 per clinic assuming it did so in two clinics (2016 prices converted from 2011 prices with the Bureau of Labor Statistics Consumer Price Index; all amounts are in U.S. dollars). However, the study did not estimate training and other start-up costs by implementation model (i.e., generalist and specialist models were assumed to incur the same start-up cost).

The current study addresses the gap in the literature on the start-up costs of SBIRT to answer two important research questions: How much does it cost a provider organization to start SBIRT for adolescents in FQHCs, and do start-up costs differ by implementation model—generalist versus specialist?

Method

Start-up costs were computed using an activity-based costing approach from the perspective of the service provider. Start-up costs are the costs associated with setting up and preparing for the implementation (i.e., preparing for service delivery). Start-up activities are typically incurred over a short time frame and include administrative activities to set up and implement SBIRT, staff training, and technical assistance to ensure that SBIRT is fully integrated into the daily routine of each clinic before the ongoing implementation phase.

To maximize the degree to which findings can be generalized, our approach estimates economic rather than accounting costs. Economic costs include both the costs directly paid by the service provider and the monetary value of resources used during the start-up period that the service provider did not pay for directly (Dunlap & French, 1998). Research-related activities carried out as part of the larger grant study (e.g., data collection and analysis) were excluded because these would not be done in real-world practice. Start-up activities spanned a period of 6 months, beginning with the first planning activities for the implementation of SBIRT and ending with the beginning of service delivery, which marked the start of ongoing implementation. Staff training and technical assistance that occurred after the end of the start-up period are not included in the start-up cost estimates presented here; those recurring costs are part of ongoing implementation costs.

Data collection

The first step in our cost data collection was to identify the sites’ start-up activities. We conducted semi-structured interviews with key site staff before the start-up period to gather information on the major start-up activities, and these activities were categorized as either planning or training. Planning activities were meetings to understand the technicalities of each site, information gathering and revision, logistical planning for the SBIRT rollout, dealing with challenges and obstacles, and obtaining buy-in from key stakeholders at the seven sites. Research staff worked with a local consulting firm and the FQHC leadership (the medical director, site managers, and behavioral health supervisors) to develop service delivery protocols tailored to fit the model and the site workflow. The local consulting firm had an existing relationship with the FQHC and topical expertise in SBIRT implementation. The consultants, medical director, and research staff worked with the electronic medical records (EMR) vendor to add SBIRT adolescent items and develop system reports in the EMR. On several occasions, research staff and the FQHC leadership met with other staff at the sites to inform them about the SBIRT implementation and cultivate their buy-in for SBIRT.

Technical assistance and training for the two SBIRT models were provided to clinic staff by the local consulting firm. Working with the research staff, this firm tailored training materials to the two SBIRT models under study. All staff potentially involved in any aspect of SBIRT for adolescents attended the training. Training comprised background information on adolescent substance use; an overview of SBIRT; directions on how to administer, score, and interpret the screening tool (i.e., CRAFFT [Knight et al., 2002, 2003] plus a tobacco question, together termed the “CRAFFT+”); and guidelines for providing BI (generalist sites) or brief advice and a “warm hand-off” to the behavioral health counselor (specialist sites). The initial training session also included an introduction to the research study. For cost estimation purposes, the portion of the session that was related to the research study was excluded. Additional training on providing BIs, including motivational interviewing techniques, was given to the primary care providers at the generalist sites and to behavioral health counselors at specialist sites (Mitchell et al., 2016).

Activity-level cost data were collected for planning and training activities. A standardized cost form was designed to collect information on the amount of time spent by each staff person on specified start-up activities and, for activities that required building space (e.g., meetings), the size of the room where the activity took place. Resource use for planning and training activities was collected separately for each site or by model, when appropriate.

To reduce the burden on clinic staff, the study’s principal investigator and the project manager provided resource use information on the planning activities because they worked closely with the FQHC in all planning steps. The local consulting firm provided resource use information on training activities. Specifically, the firm provided information on the time its trainers spent customizing existing training materials for the FQHC and traveling to the clinics. Information on the training sessions, including the number and type of staff attending, the length of each training session, and the size of the training room, was provided by the project manager.

Because this implementation was done as part of a research study, some of the activities that would normally be performed by a site director were performed by research staff. For these activities, the time spent by research staff members was used as a proxy for the time the FQHC’s director of clinical operations would have spent. The director of clinical operations was identified as the person who would conduct the necessary nonresearch activities that research team members performed during the study. Therefore, the salary of the director of clinical operations was used to value this research staff time.

Employee salary information was provided by the FQHC. The information included the average salary for each staff type and the amount spent on employee benefits and taxes. Information on building space cost was drawn from the 2013 Baltimore office market report published by Newmark Grubb Knight Frank (2013).

Estimating start-up costs

Start-up costs consisted of the site staff labor, building space, and contracted service costs for technical assistance related to planning for the implementation of SBIRT and initial training of the FQHC staff on SBIRT. These are one-time costs and do not include ongoing implementation costs. Consistent with the implementation study design, and because most start-up activities were specific to each site, costs were calculated at the site level and the study presents the average across the sites within each model. Some of the planning meetings were specific to the implementation model but not to each site. For those situations, costs were divided equally among the sites implementing the model. Other planning meetings, such as meetings with the FQHC stakeholders to discuss general implementation, were divided equally across all sites because they were associated with all sites and both models. To show the scalability of the start-up costs, the average cost within each model was divided by the total number of staff trained, producing the start-up cost per staff trained. We did not test for statistically significant differences between the two models of delivery given the small sample size (n = 7). All cost estimates are presented in 2015 U.S. dollars.
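The allocation rules above can be sketched in Python. This is an illustrative sketch under stated assumptions, not the study’s costing tool: the site labels (G1 to S3), the `allocate` and `model_average` helpers, and the meeting costs in the example are hypothetical; only the 4/3 split of clinics between models and the equal-split rules come from the text.

```python
from collections import defaultdict

# Hypothetical site labels; the 4 generalist / 3 specialist split is from the trial.
SITES = {
    "G1": "generalist", "G2": "generalist", "G3": "generalist", "G4": "generalist",
    "S1": "specialist", "S2": "specialist", "S3": "specialist",
}


def allocate(meetings):
    """Allocate planning costs to sites.

    Each meeting is a (cost, scope, target) tuple:
      scope "site"  -> whole cost goes to the target site
      scope "model" -> cost split equally among sites in the target model
      scope "all"   -> cost split equally among all seven sites (target unused)
    """
    per_site = defaultdict(float)
    for cost, scope, target in meetings:
        if scope == "site":
            recipients = [target]
        elif scope == "model":
            recipients = [site for site, model in SITES.items() if model == target]
        elif scope == "all":
            recipients = list(SITES)
        else:
            raise ValueError(f"unknown scope: {scope!r}")
        share = cost / len(recipients)
        for site in recipients:
            per_site[site] += share
    return dict(per_site)


def model_average(per_site, model):
    """Average site-level cost within one delivery model."""
    costs = [per_site.get(site, 0.0) for site, m in SITES.items() if m == model]
    return sum(costs) / len(costs)


# Hypothetical meetings for illustration only.
meetings = [
    (700.0, "all", None),
    (400.0, "model", "generalist"),
    (300.0, "model", "specialist"),
    (50.0, "site", "G1"),
]
per_site = allocate(meetings)
print(model_average(per_site, "generalist"), model_average(per_site, "specialist"))
```

With these hypothetical meetings, `allocate` assigns $250 to G1 and $200 to every other site, so the generalist average is $212.50 and the specialist average is $200. Dividing each model average by the number of staff trained would then give the start-up cost per staff trained.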

Planning costs.

The cost of planning activities comprised labor costs due to the time spent by research staff (proxy of the director of clinical operations) and FQHC staff setting up and implementing SBIRT at the seven sites, and associated nonlabor (space) costs. It also included the cost of technical assistance provided by the local consulting firm. Clinic staff labor cost was calculated by multiplying the total number of hours spent on planning activities by the staff member’s hourly wage. Hourly wage rates were calculated by dividing the annual salary by 2,000 hours and multiplying the result by the FQHC’s fringe rate, which covers the cost of employee benefits (e.g., vacation) and employer taxes (e.g., FICA [Federal Insurance Contributions Act] taxes). Space cost was calculated by multiplying the unit price per square foot per hour ($0.0083) by the size of the room used for each activity and the length of time the room was used.
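A minimal Python sketch of the labor and space cost formulas follows. The $0.0083 per square foot per hour price and the 2,000-hour year come from the text; treating the fringe rate as a multiplier (e.g., 1.25) is an assumption, since the FQHC’s actual rate is not reported, and the salary and room figures in the example are hypothetical.

```python
HOURS_PER_YEAR = 2_000      # annual paid hours used to convert salary to an hourly wage
SPACE_PRICE = 0.0083        # dollars per square foot per hour (value from the text)


def hourly_wage(annual_salary: float, fringe_multiplier: float) -> float:
    """Annual salary / 2,000 hours, loaded for benefits and employer taxes.

    Assumption: the fringe rate is expressed as a multiplier (e.g., 1.25).
    """
    return annual_salary / HOURS_PER_YEAR * fringe_multiplier


def labor_cost(hours: float, annual_salary: float, fringe_multiplier: float) -> float:
    """Hours spent on an activity times the loaded hourly wage."""
    return hours * hourly_wage(annual_salary, fringe_multiplier)


def space_cost(room_sq_ft: float, hours_used: float) -> float:
    """Unit price per sq ft per hour x room size x hours the room was used."""
    return SPACE_PRICE * room_sq_ft * hours_used


# Hypothetical inputs for illustration only.
print(labor_cost(2, 80_000, 1.25))
print(round(space_cost(300, 1.5), 2))
```

For example, a hypothetical staff member earning $80,000 a year with a 1.25 fringe multiplier costs $50 per hour, so 2 hours of planning costs $100, whereas a 300-square-foot room used for 1.5 hours costs less than $4. This is consistent with space being a negligible share of start-up costs.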

The consulting firm hired to provide technical assistance and training during the start-up and implementation phases of the study was paid on a fixed-price contract. The firm recorded the number of hours spent on planning activities, training activities, and research activities. The time was further divided according to whether it was spent on a specific site, on a specific model, or on all sites. The value of the firm’s contract during the 6-month start-up period was then divided among the three types of activities in proportion to the time spent on each one. The contract cost included nonlabor resources, such as pamphlets shared with trainees and office supplies used to prepare training sessions. The total cost of planning activities was calculated by summing labor cost, space cost, and contract cost related to planning activities.
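The proportional split of the fixed-price contract can be sketched as follows. The function name, contract value, and logged hours are hypothetical placeholders; the same proportional rule could be reapplied within each activity to divide costs among site-specific, model-specific, and all-site work.

```python
def allocate_contract(contract_value: float, hours_by_activity: dict[str, float]) -> dict[str, float]:
    """Split a fixed contract value across activities in proportion to logged hours."""
    total_hours = sum(hours_by_activity.values())
    if total_hours <= 0:
        raise ValueError("no hours logged")
    return {
        activity: contract_value * hours / total_hours
        for activity, hours in hours_by_activity.items()
    }


# Hypothetical contract value and consultant hours for the start-up period.
split = allocate_contract(10_000, {"planning": 30, "training": 50, "research": 20})
start_up_share = {k: v for k, v in split.items() if k != "research"}  # research excluded
print(split, start_up_share)
```

With a hypothetical $10,000 contract and 30, 50, and 20 logged hours for planning, training, and research, the split is $3,000, $5,000, and $2,000; the research share is then excluded from start-up costs.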

Training costs.

The cost of training activities comprised labor costs due to the time spent by the FQHC staff attending training sessions and associated nonlabor (space) costs. It also included the cost of training delivery by the local consulting firm. Staff time, space, and consultant costs were calculated in the same manner as for planning activities. Of the 12 training sessions, 5 had missing data on the size of the room used; for those sessions, room size was imputed as the average room size of training sessions at sites within the same model of SBIRT delivery.
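The room-size imputation can be sketched as a group-mean replacement. The record layout and values below are hypothetical; the sketch assumes every model has at least one session with an observed room size (otherwise the lookup raises `KeyError`).

```python
from statistics import mean


def impute_room_size(sessions: list[dict]) -> list[dict]:
    """Replace a missing room size (None) with the mean observed size for the same model."""
    # Collect observed room sizes by delivery model.
    observed: dict[str, list[float]] = {}
    for row in sessions:
        if row["room_sq_ft"] is not None:
            observed.setdefault(row["model"], []).append(row["room_sq_ft"])
    model_means = {model: mean(sizes) for model, sizes in observed.items()}

    # Return copies so the input records are left unchanged.
    imputed = []
    for row in sessions:
        new_row = dict(row)
        if new_row["room_sq_ft"] is None:
            new_row["room_sq_ft"] = model_means[new_row["model"]]
        imputed.append(new_row)
    return imputed


# Hypothetical training sessions; None marks a missing room size.
rows = [
    {"model": "generalist", "room_sq_ft": 200},
    {"model": "generalist", "room_sq_ft": 400},
    {"model": "generalist", "room_sq_ft": None},
    {"model": "specialist", "room_sq_ft": 250},
    {"model": "specialist", "room_sq_ft": None},
]
print(impute_room_size(rows))
```

Here the missing generalist session receives 300 square feet (the mean of 200 and 400) and the missing specialist session receives 250 square feet.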

Results

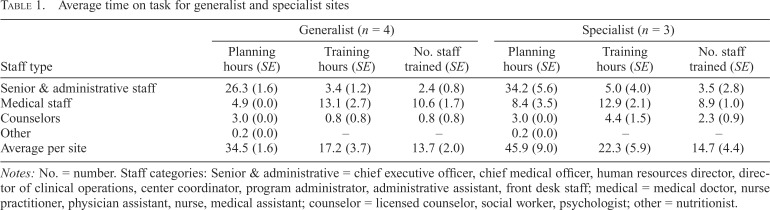

Table 1 presents average time spent on planning and training activities by staff type and intervention model (i.e., generalist and specialist). The total average time spent on planning activities was 34.5 hours and 45.9 hours for the generalist and specialist sites, respectively. Most of the planning time was spent by senior and administrative staff (i.e., the director of clinical operations, the chief medical officer, and chief executive officer).

Table 1.

Average time on task for generalist and specialist sites

| Staff type | Generalist (n = 4): planning hours (SE) | Generalist: training hours (SE) | Generalist: no. staff trained (SE) | Specialist (n = 3): planning hours (SE) | Specialist: training hours (SE) | Specialist: no. staff trained (SE) |
|---|---|---|---|---|---|---|
| Senior & administrative staff | 26.3 (1.6) | 3.4 (1.2) | 2.4 (0.8) | 34.2 (5.6) | 5.0 (4.0) | 3.5 (2.8) |
| Medical staff | 4.9 (0.0) | 13.1 (2.7) | 10.6 (1.7) | 8.4 (3.5) | 12.9 (2.1) | 8.9 (1.0) |
| Counselors | 3.0 (0.0) | 0.8 (0.8) | 0.8 (0.8) | 3.0 (0.0) | 4.4 (1.5) | 2.3 (0.9) |
| Other | 0.2 (0.0) | – | – | 0.2 (0.0) | – | – |
| Average per site | 34.5 (1.6) | 17.2 (3.7) | 13.7 (2.0) | 45.9 (9.0) | 22.3 (5.9) | 14.7 (4.4) |

Notes: No. = number. Staff categories: Senior & administrative = chief executive officer, chief medical officer, human resources director, director of clinical operations, center coordinator, program administrator, administrative assistant, front desk staff; medical = medical doctor, nurse practitioner, physician assistant, nurse, medical assistant; counselor = licensed counselor, social worker, psychologist; other = nutritionist.

Generalist sites, on average, spent approximately 17 hours training 14 staff (about 1.2 hours per staff member), with medical staff receiving 76% of total training time and making up the largest group of staff trained (an average of 11 medical staff trained per site). Similarly, specialist sites, on average, spent 22 hours training 15 staff (about 1.5 hours per staff member), with medical staff receiving 58% of total training time (an average of 13 hours) and making up the largest group of staff trained (an average of 9 medical staff trained per site). The trainings at the specialist sites included more counselors than those at the generalist sites (2.3 vs. 0.8 per site) because the specialist model used counselors to provide BI.

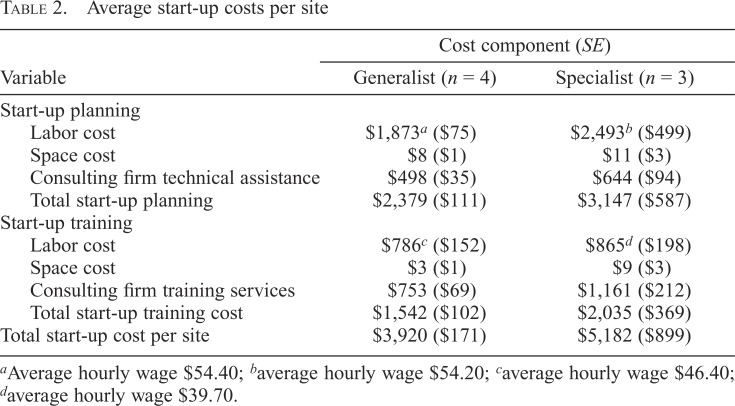

Table 2 presents average start-up costs for the generalist and specialist sites. The average start-up cost for the generalist sites was $3,920, and the average start-up cost for the specialist sites was $5,182. The average cost of start-up planning was $2,379 and $3,147 for generalist and specialist sites, respectively. Overall, staff labor cost related to planning activities accounted for almost 50% of start-up costs in both models. Technical assistance and training delivered by the consulting firm accounted for roughly another third of start-up costs in both models of SBIRT delivery. Space costs accounted for a very small proportion of start-up costs (less than 1% in both models). Labor cost related to planning activities was greater in the specialist model because, on average, specialist sites spent about 11 more hours on planning activities than generalist sites (45.9 vs. 34.5 hours; Table 1). The additional planning time in specialist sites is explained by the greater complexity of implementing BI in a specialist model: service delivery protocols in specialist sites had to incorporate brief advice followed by a warm hand-off to behavioral health counselors without disrupting the current workflow.
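The component shares cited above can be checked directly from the rounded Table 2 averages. This is a simple arithmetic sketch; because the components are rounded, they sum to $3,921 and $5,183, $1 above the reported totals.

```python
# Average start-up cost per site by component, copied from Table 2 (2015 US$).
TABLE_2 = {
    "generalist": {
        "planning_labor": 1873, "planning_space": 8, "planning_consulting": 498,
        "training_labor": 786, "training_space": 3, "training_consulting": 753,
    },
    "specialist": {
        "planning_labor": 2493, "planning_space": 11, "planning_consulting": 644,
        "training_labor": 865, "training_space": 9, "training_consulting": 1161,
    },
}


def component_shares(costs: dict[str, int]) -> dict[str, float]:
    """Share of the summed start-up cost attributable to selected components."""
    total = sum(costs.values())
    return {
        "planning_labor": costs["planning_labor"] / total,
        "consulting_firm": (costs["planning_consulting"] + costs["training_consulting"]) / total,
        "space": (costs["planning_space"] + costs["training_space"]) / total,
        "planning_total": (costs["planning_labor"] + costs["planning_space"]
                           + costs["planning_consulting"]) / total,
    }


for model, costs in TABLE_2.items():
    shares = component_shares(costs)
    print(model, sum(costs.values()), {k: round(v, 3) for k, v in shares.items()})
```

Running it gives planning labor shares of about 48% in both models, consulting firm shares of about 32% (generalist) and 35% (specialist), space shares well under 1%, and total planning shares of about 61%.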

Table 2.

Average start-up costs per site

| Cost component | Generalist (n = 4), mean (SE) | Specialist (n = 3), mean (SE) |
|---|---|---|
| Start-up planning | | |
| Labor cost | $1,873ᵃ ($75) | $2,493ᵇ ($499) |
| Space cost | $8 ($1) | $11 ($3) |
| Consulting firm technical assistance | $498 ($35) | $644 ($94) |
| Total start-up planning | $2,379 ($111) | $3,147 ($587) |
| Start-up training | | |
| Labor cost | $786ᶜ ($152) | $865ᵈ ($198) |
| Space cost | $3 ($1) | $9 ($3) |
| Consulting firm training services | $753 ($69) | $1,161 ($212) |
| Total start-up training cost | $1,542 ($102) | $2,035 ($369) |
| Total start-up cost per site | $3,920 ($171) | $5,182 ($899) |

Notes: ᵃAverage hourly wage $54.40. ᵇAverage hourly wage $54.20. ᶜAverage hourly wage $46.40. ᵈAverage hourly wage $39.70.

The average cost of start-up training activities was $1,542 and $2,035 for generalist and specialist models, respectively. The average labor cost associated with the clinic staff’s time to attend the trainings was very similar between the two models ($786 and $865 for the generalist and specialist models, respectively). The average wage for staff trained on SBIRT was higher in the generalist sites ($46) than in the specialist sites ($40) because more lower paid staff (i.e., counselors) attended the training sessions at the specialist sites. The lower average wage in specialist sites largely offset their greater training time (22.3 vs. 17.2 hours per site), so the clinic staff labor cost of attending training was approximately the same in both models. The overall cost of training was greater for the specialist sites because of greater costs associated with training delivery by the consulting firm. On average, the cost for the consulting firm to provide training was $753 at a generalist site compared with $1,161 at a specialist site. The higher training cost for the specialist sites was due to the additional time required to tailor training materials to account for patient hand-offs. The higher cost of the specialist sites is also reflected in the start-up cost per staff trained. Dividing the average total start-up cost in generalist and specialist clinics ($3,920 and $5,182, respectively) by the average number of staff trained (14 and 15, respectively) gave a cost per staff trained of $280 and $345 at generalist and specialist sites, respectively.
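The per-staff arithmetic, and the offset between training hours and trainee wages, can be reproduced from the reported averages. Multiplying average hours by average wage is an approximation introduced here for illustration: it gives about $798 and $885, close to but not equal to the reported $786 and $865, because an average of site-level products need not equal the product of averages.

```python
# Reported per-site averages (Tables 1 and 2).
AVG_TOTAL_COST = {"generalist": 3920, "specialist": 5182}
AVG_STAFF_TRAINED = {"generalist": 14, "specialist": 15}      # rounded from 13.7 and 14.7
AVG_TRAINING_HOURS = {"generalist": 17.2, "specialist": 22.3}
AVG_TRAINEE_WAGE = {"generalist": 46.40, "specialist": 39.70}


def cost_per_staff_trained(total_cost: float, staff_trained: float) -> float:
    """Average start-up cost divided by the average number of staff trained."""
    return total_cost / staff_trained


def approx_training_labor(hours: float, wage: float) -> float:
    """Product of site averages; only approximates the reported average of
    site-level labor costs ($786 and $865)."""
    return hours * wage


for model in AVG_TOTAL_COST:
    per_staff = cost_per_staff_trained(AVG_TOTAL_COST[model], AVG_STAFF_TRAINED[model])
    labor = approx_training_labor(AVG_TRAINING_HOURS[model], AVG_TRAINEE_WAGE[model])
    print(f"{model}: ${per_staff:.0f} per staff trained, ~${labor:.0f} trainee labor")
```

Note that the text divides by staff counts rounded to 14 and 15; using the unrounded averages (13.7 and 14.7) would give roughly $286 and $353 per staff trained.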

Discussion

This study contributes to the growing field of implementation science by presenting estimates for the start-up costs of two different SBIRT models that were part of a large NIDA-funded multisite implementation study. The study compared the implementation of a generalist and a specialist SBIRT model for adolescents receiving primary care in a multisite FQHC organization located in Baltimore, MD. Understanding the initial cost of starting an SBIRT program is useful for decision makers as they consider whether and how to implement SBIRT. Few studies have researched the resources necessary before SBIRT services can be delivered. Recent studies have focused on the costs incurred after SBIRT is implemented (Barbosa et al., 2016; Bray et al., 2012, 2014; Horn et al., 2017; McCollister et al., 2017). Although the ongoing costs necessary to sustain SBIRT are also important in planning for service delivery, they differ from the one-off start-up costs that have been neglected in the SBIRT literature.

The average start-up cost for the generalist sites was $3,920, and the average start-up cost for the specialist sites was $5,182. The higher average cost for specialist sites (about 32% above the generalist average) was driven by greater labor and contract costs, resulting from the larger amount of time research staff and the consulting firm spent on planning and training activities in specialist sites. Our findings suggest that there might be economically meaningful cost differences in start-up between the more and less complex intervention models studied. Our results challenge the general perception that starting a new evidence-based practice is always costly. We found that starting an evidence-based practice like SBIRT is associated with low costs relative to the operating budget of the FQHC analyzed here, which should encourage a wider implementation of SBIRT in primary care settings.

A direct comparison of our results to the only other study that estimated the start-up costs of SBI (Zarkin et al., 2003) is not appropriate for several reasons. First, our study was conducted at the level of clinics within an FQHC with an average annual flow of adolescent visits ranging from 180 to 1,200, whereas Zarkin et al. (2003) estimated start-up costs in clinics of four large-scale managed care organizations, with an average annual flow of patient visits from 14,600 to 82,500 (Babor et al., 2005). Differences in the scale of the analysis are one of the main reasons why the start-up costs presented in Zarkin et al. (2003) ($67,640 to $76,540 per clinic, 2016 prices) were well above the start-up costs found in our study. Second, technical assistance and training were much more intensive in Zarkin et al. than in our study. The authors noted that substantial technical assistance was provided to ensure adherence to the study protocol. They assumed that the clinics would not need technical assistance after a certain point and included technical assistance costs incurred during ongoing implementation as part of start-up. Third, Zarkin et al. did not estimate start-up costs by implementation model. They hypothesized that start-up costs would be lower in the specialist model because of lower salaries for specialists compared with physicians. However, despite the salary difference for service staff, the specialist model in our analysis had a higher start-up cost because of the greater amount of time devoted to planning and training activities in specialist sites.

The extent to which our results can be translated to other SBIRT programs depends on how similar the implementation strategy and settings are. Our findings pertain to the FQHC and its clinic sites analyzed here and are specific to the characteristics of the clinics in terms of workflow, staff mix, patient population, and existing infrastructure. For example, because all sites operated under a single umbrella FQHC, some of the costs could be divided equally across sites (e.g., meetings with the FQHC stakeholders), which would not happen had each site been affiliated with a different FQHC, with its own set of meetings. Because those costs are not model specific or site specific, they do not need to be incurred again if another clinic under the same FQHC organization decides to implement SBIRT. To help with the translation of our results to other settings, resources were valued at their opportunity cost, quantities of resources and dollar values were presented separately, and research-related costs were excluded, which should facilitate comparison with future studies of SBIRT start-up costs.

The analysis has several limitations. First, the time spent on start-up activities was self-reported by members of the research team. Some activities that occurred less frequently may have been underreported or their time not accurately recorded. Ideally, the economic team would have observed each activity and recorded the time necessary to conduct it (Cowell et al., 2017). However, this would be extremely time-consuming and costly. We attempted to balance data accuracy and burden by collecting weekly data and maintaining close contact with the larger research team. Second, the time spent on the integration of SBIRT screening and scoring in the FQHC’s EMR could not be disentangled from other planning activities, but it was qualitatively reported that EMR integration activities accounted for a large share of the time spent in planning activities. Also, the EMR vendor did not charge separately for the integration of SBIRT in the EMR, as the current contract with the vendor covered any updates to the system, and the same may not apply to other clinics. Ideally, we would have reported a separate cost estimate for the time spent and additional costs related to the health information technology needs associated with SBIRT implementation, because most clinics would probably need to make this effort. Unfortunately, these data were not available separately from other planning activity data.

Third, our cost approach attempted to separate the costs of research from the true costs of SBIRT start-up for clinics serving adolescents. Although some of these costs are easy to distinguish (e.g., planning for data analysis), others involve subtle differences in level of effort and require respondents to allocate, sometimes imprecisely, costs to different activities. We valued the research team’s nonresearch time based on the salary of the director of clinical operations. Absent a research study, it is possible that another staff member would be conducting those activities, which would change the unit costs, and that the time required would be higher (because of less experience with SBIRT requirements) or lower (because of better knowledge of the policies and procedures of the clinic). Last, we presented start-up cost per site within each model of implementation as the unit of analysis because most start-up activities were conducted at the site level. A fundamental limitation of this approach is that the sample size is restricted to the number of clinics (n = 4 in the generalist model and n = 3 in the specialist model) and, therefore, we did not test for statistically significant differences between the two models of delivery. For scalability, we also presented the cost per staff trained. The start-up cost per staff trained in specialist sites was higher than in generalist sites ($345 vs. $280), which is consistent with the higher cost of the specialist model. However, it should be recognized that the estimate of the start-up cost includes costs of a different nature. Although the costs of each additional staff member trained and each involved in planning activities are variable, the cost of the contract for training and planning delivery is quasi-fixed: it stays fixed until clinic size or additional implementation complexity drives an increase in the contract cost.

Despite these limitations, our study sheds light on the actual costs of initial SBIRT implementation for adolescents in primary care clinics using either a generalist or a specialist delivery model. The cost study was conducted alongside a cluster randomized trial, and cost data collection began at study inception. This prospective design enabled complete and accurate collection of important cost data. Cost studies often begin partway through or after completion of the main study, which can lead to lost information because of recall bias and incomplete records. We followed an activity-based costing approach, which allowed us to examine the different staff types involved in planning and training activities, including not only those involved in direct service delivery (e.g., medical doctor, counselor) but also those supporting SBIRT implementation (e.g., trainer, executive staff, administrative staff). Our findings highlight the importance of planning activities, and of their cost relative to training activities, during start-up: ignoring the cost of planning activities would seriously underestimate SBIRT start-up costs. We also found that most start-up costs in both delivery models were labor costs.
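The activity-based costing logic described above can be sketched in Python: each logged block of staff time is valued at a unit cost for that staff type, summed by activity (planning or training), and combined with nonlabor resources. The record layout, role names, hourly rates, and hours below are hypothetical; the study's actual data collection instruments and unit costs are not reproduced here.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class TimeEntry:
    """One logged block of staff time (hypothetical record layout)."""
    activity: str    # e.g., "planning" or "training"
    staff_type: str  # e.g., "medical assistant", "trainer"
    hours: float


def activity_costs(entries: list[TimeEntry],
                   hourly_rate: dict[str, float],
                   nonlabor: dict[str, list[tuple[str, float]]]) -> dict[str, dict[str, float]]:
    """Return labor, nonlabor, and total cost for each activity.

    hourly_rate: staff type -> assumed fully loaded hourly cost
    nonlabor: activity -> list of (item, cost) pairs (assumed input)
    """
    labor: defaultdict[str, float] = defaultdict(float)
    for e in entries:
        # Labor cost = hours logged x unit cost of that staff type.
        labor[e.activity] += e.hours * hourly_rate[e.staff_type]
    result: dict[str, dict[str, float]] = {}
    for activity in set(labor) | set(nonlabor):
        nl = sum((cost for _, cost in nonlabor.get(activity, [])), 0.0)
        result[activity] = {"labor": labor[activity], "nonlabor": nl,
                            "total": labor[activity] + nl}
    return result


if __name__ == "__main__":
    # Hypothetical rates and logs, for illustration only.
    rates = {"medical assistant": 20.0, "physician": 90.0, "trainer": 60.0}
    log = [
        TimeEntry("planning", "physician", 4.0),
        TimeEntry("planning", "medical assistant", 6.0),
        TimeEntry("training", "trainer", 3.0),
        TimeEntry("training", "medical assistant", 3.0),
    ]
    materials = {"training": [("printed manuals", 40.0)]}
    for activity, costs in sorted(activity_costs(log, rates, materials).items()):
        print(activity, costs)
```

In this framing, the valuation choice discussed in the limitations (for example, which salary is used to value coordination time) enters only through `hourly_rate`, so the same time logs can be re-costed under alternative unit-cost assumptions.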

This study also provides guidance to the implementation field more broadly. The implementation of evidence-based practices should account for the economic implications of starting a new intervention or approach. Despite the support for SBIRT for adolescents, and for SBIRT in general, it has not yet been widely adopted as standard practice. One possible reason is that potential SBIRT providers lack information about the one-time costs associated with start-up. Studies in the implementation field have generally focused on outcomes such as penetration, provider acceptability, patient satisfaction, fidelity/adherence, and sustainability, while disregarding economic outcomes. However, decision makers such as third-party payers and health care organizations need to know how implementing an intervention will affect their financial bottom line. The implementation field would benefit from more studies of the costs of starting an intervention, and from economic studies in general. Because start-up costs will depend on the characteristics of the setting and the mode of implementation, future research should examine the costs of starting an SBIRT program in different settings (e.g., emergency department vs. primary care, rural vs. urban), with different delivery modes (computerized vs. in-person), and with different existing infrastructure (e.g., EMR capabilities). The next step in our research agenda is to determine which model delivers services more cost-effectively.

Acknowledgments

The authors wish to thank the FQHC staff who participated in this study.

Footnotes

This study was supported through National Institute on Drug Abuse (NIDA) Grant 1R01DA034258-01 (Shannon Gwin Mitchell, principal investigator). NIDA had no role in the design and conduct of the study; data acquisition, management, analysis, and interpretation of the data; or preparation, review, or approval of the article. This trial is registered with clinicaltrials.gov (NCT01829308).

References

- Babor T. F., Higgins-Biddle J., Dauser D., Higgins P., Burleson J. A. Alcohol screening and brief intervention in primary care settings: Implementation models and predictors. Journal of Studies on Alcohol. 2005;66:361–368. doi:10.15288/jsa.2005.66.361

- Barbosa C., Cowell A. J., Landwehr J., Dowd W., Bray J. W. Cost of screening, brief intervention, and referral to treatment in health care settings. Journal of Substance Abuse Treatment. 2016;60:54–61. doi:10.1016/j.jsat.2015.06.005

- Bendtsen P., Müssener U., Karlsson N., López-Pelayo H., Palacio-Vieira J., Colom J., Anderson P. Implementing referral to an electronic alcohol brief advice website in primary healthcare: Results from the ODHIN implementation trial. BMJ Open. 2016;6:e010271. doi:10.1136/bmjopen-2015-010271

- Bernstein E., Edwards E., Dorfman D., Heeren T., Bliss C., Bernstein J. Screening and brief intervention to reduce marijuana use among youth and young adults in a pediatric emergency department. Academic Emergency Medicine. 2009;16:1174–1185. doi:10.1111/j.1553-2712.2009.00490.x

- Bernstein J., Heeren T., Edward E., Dorfman D., Bliss C., Winter M., Bernstein E. A brief motivational interview in a pediatric emergency department, plus 10-day telephone follow-up, increases attempts to quit drinking among youth and young adults who screen positive for problematic drinking. Academic Emergency Medicine. 2010;17:890–902. doi:10.1111/j.1553-2712.2010.00818.x

- Bray J. W., Mallonee E., Dowd W., Aldridge A., Cowell A. J., Vendetti J. Program- and service-level costs of seven screening, brief intervention, and referral to treatment programs. Substance Abuse and Rehabilitation. 2014;5:63–73. doi:10.2147/SAR.S62127

- Bray J. W., Zarkin G. A., Hinde J. M., Mills M. J. Costs of alcohol screening and brief intervention in medical settings: A review of the literature. Journal of Studies on Alcohol and Drugs. 2012;73:911–919. doi:10.15288/jsad.2012.73.911

- Buck J. A. The looming expansion and transformation of public substance abuse treatment under the Affordable Care Act. Health Affairs. 2011;30:1402–1410. doi:10.1377/hlthaff.2011.0480

- Center for Behavioral Health Statistics and Quality. Behavioral health trends in the United States: Results from the 2014 National Survey on Drug Use and Health (HHS Publication No. SMA 15-4927, NSDUH Series H-50). 2015. Retrieved from http://www.samhsa.gov/data/

- Chen C. Y., O’Brien M. S., Anthony J. C. Who becomes cannabis dependent soon after onset of use? Epidemiological evidence from the United States: 2000–2001. Drug and Alcohol Dependence. 2005;79:11–22. doi:10.1016/j.drugalcdep.2004.11.014

- Colby S. M., Monti P. M., O’Leary Tevyaw T., Barnett N. P., Spirito A., Rohsenow D. J., Lewander W. Brief motivational intervention for adolescent smokers in medical settings. Addictive Behaviors. 2005;30:865–874. doi:10.1016/j.addbeh.2004.10.001

- Cowell A. J., Dowd W. N., Landwehr J., Barbosa C., Bray J. W. A time and motion study of Screening, Brief Intervention, and Referral to Treatment implementation in health-care settings. Addiction. 2017;112(Supplement 2):65–72. doi:10.1111/add.13659

- D’Amico E. J., Miles J. N., Stern S. A., Meredith L. S. Brief motivational interviewing for teens at risk of substance use consequences: A randomized pilot study in a primary care clinic. Journal of Substance Abuse Treatment. 2008;35:53–61. doi:10.1016/j.jsat.2007.08.008

- Duncan S. C., Alpert A., Duncan T. E., Hops H. Adolescent alcohol use development and young adult outcomes. Drug and Alcohol Dependence. 1997;49:39–48. doi:10.1016/S0376-8716(97)00137-3

- Dunlap L. J., French M. T. A comparison of two methods for estimating the costs of substance abuse treatment. Journal of Maintenance in the Addictions. 1998;1:29–44. doi:10.1300/J126v01n03_06

- HealthCare.gov. What are my preventive care benefits? 2013. Retrieved from https://www.healthcare.gov/what-are-my-preventive-care-benefits/

- Heckman C. J., Egleston B. L., Hofmann M. T. Efficacy of motivational interviewing for smoking cessation: A systematic review and meta-analysis. Tobacco Control. 2010;19:410–416. doi:10.1136/tc.2009.033175

- Hollis J. F., Polen M. R., Whitlock E. P., Lichtenstein E., Mullooly J. P., Velicer W. F., Redding C. A. Teen reach: Outcomes from a randomized, controlled trial of a tobacco reduction program for teens seen in primary medical care. Pediatrics. 2005;115:981–989. doi:10.1542/peds.2004-0981

- Horn B. P., Crandall C., Forcehimes A., French M. T., Bogenschutz M. Benefit-cost analysis of SBIRT interventions for substance using patients in emergency departments. Journal of Substance Abuse Treatment. 2017;79:6–11. doi:10.1016/j.jsat.2017.05.003

- Knight J. R., Sherritt L., Harris S. K., Gates E. C., Chang G. Validity of brief alcohol screening tests among adolescents: A comparison of the AUDIT, POSIT, CAGE, and CRAFFT. Alcoholism: Clinical and Experimental Research. 2003;27:67–73. doi:10.1097/01.ALC.0000046598.59317.3A

- Knight J. R., Sherritt L., Shrier L. A., Harris S. K., Chang G. Validity of the CRAFFT substance abuse screening test among adolescent clinic patients. Archives of Pediatrics & Adolescent Medicine. 2002;156:607–614. doi:10.1001/archpedi.156.6.607

- Martin G., Copeland J. The adolescent cannabis check-up: Randomized trial of a brief intervention for young cannabis users. Journal of Substance Abuse Treatment. 2008;34:407–414. doi:10.1016/j.jsat.2007.07.004

- McCambridge J., Strang J. The efficacy of single-session motivational interviewing in reducing drug consumption and perceptions of drug-related risk and harm among young people: Results from a multi-site cluster randomized trial. Addiction. 2004;99:39–52. doi:10.1111/j.1360-0443.2004.00564.x

- McCollister K., Yang X., Sayed B., French M. T., Leff J. A., Schackman B. R. Monetary conversion factors for economic evaluations of substance use disorders. Journal of Substance Abuse Treatment. 2017;81:25–34. doi:10.1016/j.jsat.2017.07.008

- Mitchell S. G., Schwartz R. P., Kirk A. S., Dusek K., Oros M., Hosler C., Brown B. S. SBIRT implementation for adolescents in urban federally qualified health centers. Journal of Substance Abuse Treatment. 2016;60:81–90. doi:10.1016/j.jsat.2015.06.011

- Monti P. M., Colby S. M., Barnett N. P., Spirito A., Rohsenow D. J., Myers M., Lewander W. Brief intervention for harm reduction with alcohol-positive older adolescents in a hospital emergency department. Journal of Consulting and Clinical Psychology. 1999;67:989–994. doi:10.1037/0022-006X.67.6.989

- National Institute for Health and Care Excellence. Alcohol-use disorders: Prevention (NICE public health guidance 24). 2010. Retrieved from https://www.nice.org.uk/guidance/ph24

- Newmark Grubb Knight Frank. New York, NY: Newmark Grubb Knight Frank; 2013. 2Q13 Baltimore Office Market.

- Padwa H., Urada D., Antonini V. P., Ober A., Crèvecoeur-MacPhail D. A., Rawson R. A. Integrating substance use disorder services with primary care: The experience in California. Journal of Psychoactive Drugs. 2012;44:299–306. doi:10.1080/02791072.2012.718643

- Peterson A. V., Jr., Kealey K. A., Mann S. L., Marek P. M., Ludman E. J., Liu J., Bricker J. B. Group-randomized trial of a proactive, personalized telephone counseling intervention for adolescent smoking cessation. Journal of the National Cancer Institute. 2009;101:1378–1392. doi:10.1093/jnci/djp317

- Reinert D. F., Allen J. P. The Alcohol Use Disorders Identification Test: An update of research findings. Alcoholism: Clinical and Experimental Research. 2007;31:185–199. doi:10.1111/j.1530-0277.2006.00295.x

- Spirito A., Monti P. M., Barnett N. P., Colby S. M., Sindelar H., Rohsenow D. J., Myers M. A randomized clinical trial of a brief motivational intervention for alcohol-positive adolescents treated in an emergency department. Journal of Pediatrics. 2004;145:396–402. doi:10.1016/j.jpeds.2004.04.057

- Substance Abuse and Mental Health Services Administration. Rockville, MD: Author; 2013. The NSDUH report: Trends in adolescent substance use and perception of risk from substance use.

- Substance Abuse and Mental Health Services Administration. Rockville, MD: Author; 2014. Results from the 2013 National Survey on Drug Use and Health: Summary of national findings (NSDUH Series H-48, HHS Publication No. (SMA) 14-4863).

- Tait R. J., Hulse G. K. A systematic review of the effectiveness of brief interventions with substance using adolescents by type of drug. Drug and Alcohol Review. 2003;22:337–346. doi:10.1080/0959523031000154481

- Tait R. J., Hulse G. K., Robertson S. I. Effectiveness of a brief-intervention and continuity of care in enhancing attendance for treatment by adolescent substance users. Drug and Alcohol Dependence. 2004;74:289–296. doi:10.1016/j.drugalcdep.2004.01.003

- Tanner-Smith E. E., Lipsey M. W. Brief alcohol interventions for adolescents and young adults: A systematic review and meta-analysis. Journal of Substance Abuse Treatment. 2015;51:1–18. doi:10.1016/j.jsat.2014.09.001

- Tripodi S. J., Bender K., Litschge C., Vaughn M. G. Interventions for reducing adolescent alcohol abuse: A meta-analytic review. Archives of Pediatrics & Adolescent Medicine. 2010;164:85–91. doi:10.1001/archpediatrics.2009.235

- Walton M. A., Chermack S. T., Shope J. T., Bingham C. R., Zimmerman M. A., Blow F. C., Cunningham R. M. Effects of a brief intervention for reducing violence and alcohol misuse among adolescents: A randomized controlled trial. JAMA. 2010;304:527–535. doi:10.1001/jama.2010.1066

- Winters K. C., Leitten W. Brief intervention for drug-abusing adolescents in a school setting. Psychology of Addictive Behaviors. 2007;21:249–254. doi:10.1037/0893-164X.21.2.249

- World Health Organization. Copenhagen, Denmark: World Health Organization Regional Office for Europe; 2012. European action plan to reduce the harmful use of alcohol 2012–2020.

- Zarkin G. A., Bray J. W., Davis K. L., Babor T. F., Higgins-Biddle J. C. The costs of screening and brief intervention for risky alcohol use. Journal of Studies on Alcohol. 2003;64:849–857. doi:10.15288/jsa.2003.64.849