Abstract

Among individuals diagnosed, hospitalized, and treated for schizophrenia, up to 40% of those discharged may relapse within 1 year even with appropriate treatment. Passively collected smartphone behavioral data present a scalable and at present underutilized opportunity to monitor patients in order to identify possible warning signs of relapse. Seventeen patients with schizophrenia in active treatment at a state mental health clinic in Boston used the Beiwe app on their personal smartphone for up to 3 months. By testing for changes in mobility patterns and social behavior over time as measured through smartphone use, we were able to identify statistically significant anomalies in patient behavior in the days prior to relapse. We found that the rate of behavioral anomalies detected in the 2 weeks prior to relapse was 71% higher than the rate of anomalies during other time periods. Our findings show how passive smartphone data, that is, data collected in the background during regular phone use without active input from the subjects, can provide an unprecedented and detailed view into patient behavior outside the clinic. Real-time detection of behavioral anomalies could signal the need for an intervention before an escalation of symptoms and relapse occur, thereby reducing patient suffering and the cost of care.

Introduction

Schizophrenia, impacting up to 1.5% of the world population, remains a devastating psychotic illness in terms of suffering, disability, and global economic burden. Despite advances in the understanding of the biological basis of schizophrenia, the clinical management of psychotic disorders remains challenging. Even with appropriate antipsychotic medication, up to 40% of patients discharged from a hospital may still relapse within 1 year [1]. Relapse is associated with higher risks of self-harm, loss of employment, and strain on personal relationships, as well as biological risk of cognitive decline [2]. Predicting relapse remains challenging given the complexity of schizophrenia and the heterogeneity of presentations [3, 4], as well as the fundamental difficulty of observing patient behavior and symptoms outside of the clinic.

Many have proposed using technology to augment relapse prediction. Over a decade ago, the pioneering ITAREPS study demonstrated feasibility of a mobile phone text-messaging platform to identify early symptoms of relapse in schizophrenia [5]. While the study was only powered to identify self-reported medication non-compliance as a significant risk factor for hospitalization, it also demonstrated feasibility of digital monitoring with only 10% of the 45 subjects dropping out over a 1-year duration. More recently, the advent and rapid advancement of new sensors via wearables and smartphones affords new opportunities and advantages for exploring the potential of technology to augment relapse prediction [6, 7]. Crucially, these technologies make it possible to obtain social and behavioral markers from patients requiring only minimal, if any, active involvement of the subjects.

We have previously defined digital phenotyping as the moment-by-moment quantification of the individual-level human phenotype in situ using data from personal digital devices, in particular smartphones [8]. Smartphones may be an especially useful device for relapse prediction, not only because they afford a wide variety of types of behavioral data that can be automatically recorded by suitable software applications but also because they are increasingly owned and used by those with schizophrenia [9, 10]. Although wearables offer the possibility of collecting physiological data, their ownership is considerably lower than that of smartphones, with currently only 12% of American adults owning and regularly using wearables [11]. While concerns about a digital divide have been raised in the past [12], namely that those with schizophrenia may not own or be interested in using smartphones, recent evidence suggests otherwise [9, 10, 13]. It also appears that the adoption of smartphones, including among those with serious mental illness, will continue to rise as conventional feature phones (phones without smartphone capabilities) become less available from vendors. The ability to use patients’ own smartphones for research in schizophrenia offers the advantage of potentially massive scalability at little or no additional cost, and it limits the confounders associated with monitoring behavior through a study phone, which the subjects may use differently from their own phones [14]. Smartphones also offer the ability to collect two different classes of data, both of which may be useful for mental health research. The first is “active data,” which is similar to ecological momentary assessment in that the phone prompts subjects to report on their current symptoms. The name reflects active user engagement, such as taking a survey, and the data are only generated if the user chooses to complete the survey or task.
Smartphones can also collect “passive data,” which consist of data from built-in sensors, such as the global positioning system (GPS) receiver and accelerometer, as well as phone usage data, such as call logs, text logs, and screen activity. By using a suitable software application, smartphones may be configured to automatically collect passive data without any active user engagement. Recent research suggests that these passive data may serve as a proxy for behaviors in psychiatric disorders; for example, variations in distance traveled measured by GPS may correlate with mood symptoms [15]. The CrossCheck Study found that both active and passive data were strongly associated with, and predictive of, self-reported symptoms [16]. In another study, women at risk for perinatal depression with a larger radius of travel tended to have milder depressive symptoms than those with a smaller radius of travel [17].

An additional advantage of smartphones as a relapse prediction tool in schizophrenia is the potential for these devices to respond to the very data they collect and create customized interventions when they are most needed. Researchers have already demonstrated the ability of smartphone applications to help those with schizophrenia to increase quality of life through enhanced motivation and social connections and to manage symptoms, such as hearing voices [13, 18]. While still nascent, smartphone interventions for schizophrenia offer an immediate target for relapse prediction systems [19]. However, these interventions will be of most use when coupled with accurate prediction of when they are most needed, such as when relapse is predicted to be imminent.

Smartphones and the data they collect are only one element of the platform necessary for technology-augmented relapse prediction. Other critical elements are a back-end system that supports scalable collection, storage, and processing of smartphone data, a suite of suitable analysis and modeling tools, and integration of these components with clinical information systems. Given the volume, variety, and velocity of raw smartphone sensor data, an appropriate data analysis strategy is necessary to protect against the risk of overfitting, which leads to poor generalizability to future data or new subjects [20], a potential pitfall that is amplified as datasets grow ever larger. Finally, no relapse prediction system is useful if it is not integrated into clinical care and actually used by clinicians and patients.

In this study, we explore the potential of active and passive smartphone data to predict relapse in schizophrenia. Specifically, we model time-varying patterns in a variety of data streams, and develop a statistical model for detecting daily behavioral anomalies. Since this is a pilot study, we explore the feasibility and utility of smartphone-based digital phenotyping for relapse prediction and use our results to generate a computational model of relapse that may serve as a foundation for future research efforts.

Materials and methods

Seventeen patients with schizophrenia in active treatment at a state mental health clinic in Boston used the Beiwe app on their personal smartphone for up to 3 months per subject. We used the Beiwe app, which is part of the Beiwe research platform [19], to offer subjects twice-weekly in-app symptom surveys and to continuously collect passive data from GPS, accelerometer, anonymized call and text message logs, screen on/off time, and phone charging status. The subjects were not paid for app use, not given additional support for app use, and not provided with check-in calls or study staff reminders to use the app. We configured Beiwe to use WiFi rather than the cellular network for data uploads in order to eliminate data charges to the subjects. Because 1 of the 17 subjects never connected his phone to WiFi, his data are unfortunately not available for analysis. Of the 16 remaining subjects, 1 had less than a week of follow-up, leaving 15 subjects with sufficient data collected through the smartphone application. These 15 subjects were followed for a total of 1075 person-days, with a median follow-up time of 79 days. We defined clinical relapse as either psychiatric hospitalization or an increase in the level of psychiatric care, such as an increase in the frequency of clinic visits or referral to a partial or outpatient hospital program [21].

In this study, Beiwe was configured to collect GPS data for 1 min every 10 min in order to minimize battery drain. After projecting the collected latitude–longitude data onto a 2D plane, we converted them to mobility trajectories consisting of flights, corresponding to periods of movement at a constant speed in a constant direction, and pauses, corresponding to periods of non-movement [22]. We then imputed the gaps in the mobility trajectories with a mixture of flights and pauses, using our statistical approach based on weighted resampling of observed trajectories [23]. We summarized mobility trajectories using 15 daily mobility features, such as daily distance traveled, daily time spent at home, and the number of significant locations visited each day. Similarly, using SMS text and call logs, we estimated an additional 16 sociability features for each day, such as the total duration of calls, the number of missed calls, and the number of text messages sent. In addition to these passively collected features, patients were prompted with surveys twice per week. These in-app surveys contained questions designed to measure anxiety, depression, sleep quality, psychosis, the warning symptoms scale, and whether or not the subjects were taking their medication. The full set of mobility and sociability summary statistics, as well as the survey question categories, is listed in Table 2, with details on the wording of the survey questions in Table S1 in Supplementary Materials.
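To make the projection and daily summaries concrete, the following is a minimal Python sketch of two of the simpler steps: projecting latitude–longitude fixes onto a local 2D plane and computing a few daily mobility features (distance traveled, radius of gyration, time spent at home) from the projected points. It is not Beiwe's implementation. The equirectangular projection, the 200 m home radius, the input format, and all function names are assumptions made for illustration, and the flight/pause segmentation and the weighted-resampling imputation [23] are not shown.

```python
import math
from dataclasses import dataclass

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius in meters


@dataclass
class Fix:
    t: float    # seconds since midnight, local time
    lat: float  # latitude in degrees
    lon: float  # longitude in degrees


def project_to_plane(fixes, lat0, lon0):
    """Equirectangular projection around a reference point (lat0, lon0).

    Returns (t, x, y) tuples with x, y in meters. Assumed adequate only over
    the few-kilometer scale of typical daily movement.
    """
    k = math.cos(math.radians(lat0))
    return [
        (f.t,
         EARTH_RADIUS_M * math.radians(f.lon - lon0) * k,
         EARTH_RADIUS_M * math.radians(f.lat - lat0))
        for f in fixes
    ]


def distance_traveled(points):
    """Sum of straight-line distances (m) between consecutive projected points."""
    return sum(math.dist(a[1:], b[1:]) for a, b in zip(points, points[1:]))


def radius_of_gyration(points):
    """RMS distance (m) of points from their centroid; not time-weighted here."""
    n = len(points)
    cx = sum(p[1] for p in points) / n
    cy = sum(p[2] for p in points) / n
    return math.sqrt(sum((p[1] - cx) ** 2 + (p[2] - cy) ** 2 for p in points) / n)


def time_at_home(points, home_xy, radius_m=200.0):
    """Seconds at home: each interval between consecutive points counts as home
    if it starts within radius_m of home_xy (hypothetical rule and radius)."""
    total = 0.0
    for a, b in zip(points, points[1:]):
        if math.dist(a[1:], home_xy) <= radius_m:
            total += b[0] - a[0]
    return total


# Toy day: three fixes, the last one roughly 1.1 km north of the first two.
fixes = [Fix(0, 42.3601, -71.0589), Fix(3600, 42.3601, -71.0589), Fix(7200, 42.3700, -71.0589)]
pts = project_to_plane(fixes, lat0=42.3601, lon0=-71.0589)
print(round(distance_traveled(pts)), round(radius_of_gyration(pts)), time_at_home(pts, (0.0, 0.0)))
```

A real pipeline would compute these summaries on the imputed flight/pause trajectory rather than on raw fixes, so that the GPS sampling gaps do not bias time-based features such as time at home.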

Table 2.

Listing of 6 survey question categories, 15 mobility features, and 16 sociability features

| Survey question categories | Mobility features | Sociability features |
|---|---|---|
| 1. Depression | 1. Time spent at home | 1. Number of outgoing texts |
| 2. Sleep quality | 2. Distance traveled | 2. Total outgoing text length |
| 3. Psychosis | 3. Radius of gyration | 3. Texting out-degree |
| 4. Warning symptoms scale | 4. Maximum diameter | 4. Number of incoming texts |
| 5. Taking medication | 5. Maximum distance from home | 5. Total incoming text length |
| 6. Anxiety | 6. Number of significant locations | 6. Texting in-degree |
| | 7. Average flight length | 7. Texting reciprocity |
| | 8. Standard deviation of flight length | 8. Texting responsiveness |
| | 9. Average flight duration | 9. Number of outgoing calls |
| | 10. Standard deviation of flight duration | 10. Total outgoing call duration |
| | 11. Fraction of the day spent stationary | 11. Call out-degree |
| | 12. Significant location entropy | 12. Number of incoming calls |
| | 13. Minutes of GPS data missing | 13. Total incoming call duration |
| | 14. Physical circadian rhythm | 14. Call in-degree |
| | 15. Physical circadian rhythm stratified | 15. Call reciprocity |
| | | 16. Call responsiveness |

Each mobility and sociability feature is calculated each day for each patient. For each survey question category, a category score is produced for each day the surveys were administered by averaging the scores across all questions answered in that category, where each survey question is scored from 0 to 3. Mobility feature 15 is stratified by weekend days vs. weekdays. Detailed descriptions of mobility feature definitions can be found in Canzian and Musolesi [35]. In sociability features, text length is quantified as the number of characters in the text messages; for example, sociability feature 2 is the total number of characters in outgoing text messages sent each day. Further, we use the social network term “degree” to refer to the number of distinct communication partners. For example, sociability feature 6, texting in-degree, corresponds to the number of individuals who sent a text message to the subject on the given day.
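The category-score and degree definitions above can be sketched in Python as follows. The input formats (a list of (category, score) pairs for one survey day, and a list of (direction, partner_id, n_chars) tuples for one day of texts) and the function names are hypothetical, chosen for illustration; they are not Beiwe's data schema. Only the texting features are shown; the call features would follow the same pattern with call durations in place of character counts.

```python
from collections import defaultdict
from statistics import mean


def category_scores(responses):
    """Average the 0-3 scores of all answered questions within each category.

    responses: list of (category, score) pairs for one survey day.
    Categories with no answered questions are absent (treated as missing).
    """
    by_cat = defaultdict(list)
    for cat, score in responses:
        if not 0 <= score <= 3:
            raise ValueError(f"score out of range: {score}")
        by_cat[cat].append(score)
    return {cat: mean(scores) for cat, scores in by_cat.items()}


def texting_features(texts):
    """A subset of the daily sociability features for one subject-day.

    texts: list of (direction, partner_id, n_chars), where direction is
    "in" or "out" and partner_id is an anonymized identifier.
    Degree counts distinct partners, not messages.
    """
    out = [t for t in texts if t[0] == "out"]
    inc = [t for t in texts if t[0] == "in"]
    return {
        "n_outgoing_texts": len(out),
        "outgoing_text_length": sum(t[2] for t in out),
        "texting_out_degree": len({t[1] for t in out}),
        "n_incoming_texts": len(inc),
        "incoming_text_length": sum(t[2] for t in inc),
        "texting_in_degree": len({t[1] for t in inc}),
    }


scores = category_scores([("Psychosis", 3), ("Psychosis", 2), ("Anxiety", 1)])
feats = texting_features([("in", "a", 10), ("in", "a", 5), ("in", "b", 7), ("out", "a", 12)])
print(scores, feats["texting_in_degree"])
```

Here partner "a" sends two messages but contributes only once to the in-degree, which is the distinction between message counts and degree described above.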

With this wide range of mobility features, sociability features, and clinical outcomes recorded on a daily basis for up to 3 months for each patient, trends in patient behavior can be established by treating these features as a multivariate time series. Anomalous breaks from a patient’s usual trend in self-reported outcomes, sociability, or mobility may be indicative of broader behavioral changes and could precede adverse events such as relapse. Therefore, it is important to be able to identify anomalous changes, as they may represent early opportunities for outreach and relapse prevention. While time-series methods for multivariate anomaly detection have been developed, such as methods that transform the multivariate time series into a univariate function or statistic [24, 25] and network-based methods [26–28], these approaches are not equipped to handle the missing observations that frequently arise when collecting active and passive smartphone data from patient populations.

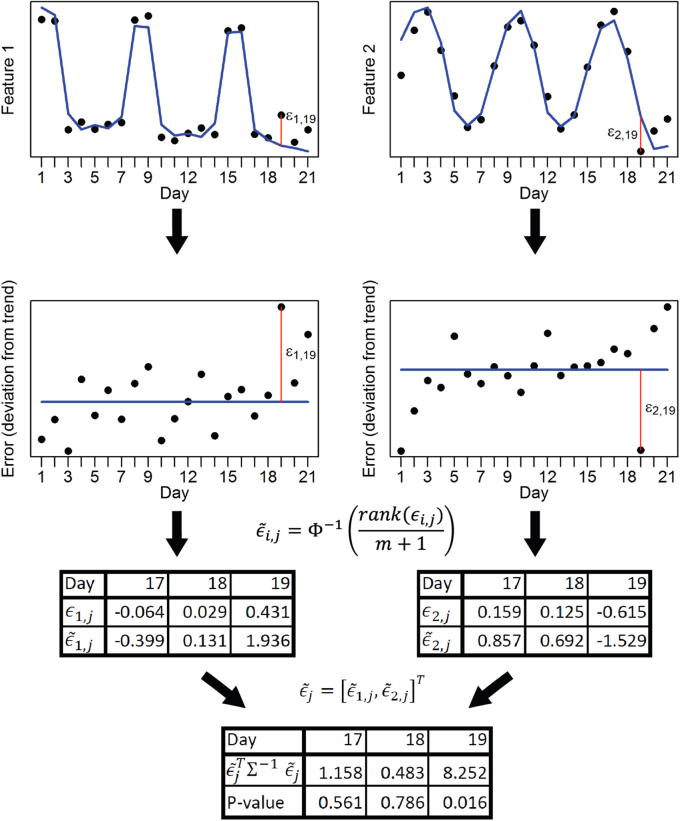

To determine whether anomalies exist in the daily features while accommodating missing observations, we propose a statistical test inspired by Filzmoser’s approach [24]. We first defined expected behavior by decomposing each feature’s time series into an overall trend and a weekly component; the deviations from this expected behavior are called errors. For each feature’s time series, we transformed the errors non-parametrically into Z-scores by ranking them across all days of follow-up with observed data for that feature and then mapping the ranks to the standard normal distribution using the probability integral transform. We then tested each day for aberrant behavior by applying a Hotelling’s T² test jointly to the transformed errors of all features observed on that day. Each of these steps is shown schematically in Fig. 3, and a more detailed description of this approach is left to the Supplementary Materials. This approach is agnostic to the set of features being used, so in a different context this feature set can be augmented or replaced.

Figure 3.

A demonstration of the multivariate time-series anomaly detection method. A bivariate example with toy data is presented to demonstrate how anomaly detection is performed at the daily level. The blue lines represent the sum of the overall trend and weekly components for each time series, and the remainder, ε, is regarded as the error. Red vertical lines show the error on day 19 for each univariate time series. After the rank(·) function ranks the errors and the probability integral transform maps these ranks to normally distributed adjusted errors, the adjusted errors across all features are treated as multivariate normal, and the Hotelling’s T² test is used to test them simultaneously for anomalies. Here, m represents the number of days in the time series and Φ is the standard normal cumulative distribution function.
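The daily test described above can be sketched in Python with NumPy and SciPy. This is not the authors’ implementation (their exact decomposition is given in the Supplementary Materials). The centered 7-day moving-average trend, the day-of-week means used as the weekly component, the rank scaling z = Φ⁻¹(rank/(m + 1)), the pairwise-complete correlation matrix inside the T² statistic, and all function names are assumptions of this sketch. Missing days stay np.nan throughout, and each day’s statistic uses only the features observed on that day.

```python
import numpy as np
from scipy.stats import norm, rankdata


def decompose_errors(x, window=7):
    """Error = observed - (trend + weekly component); np.nan marks missing days.

    Assumed decomposition: trend is a centered moving average of observed values;
    the weekly component is the mean detrended value for each day of the week.
    """
    m = len(x)
    half = window // 2
    trend = np.full(m, np.nan)
    for i in range(m):
        seg = x[max(0, i - half): i + half + 1]
        if np.any(~np.isnan(seg)):
            trend[i] = np.nanmean(seg)
    detrended = x - trend
    weekly = np.zeros(m)
    for dow in range(7):
        idx = np.arange(dow, m, 7)
        vals = detrended[idx]
        vals = vals[~np.isnan(vals)]
        if vals.size:
            weekly[idx] = vals.mean()
    return x - trend - weekly


def rank_normal(e):
    """Probability integral transform of ranks: z = Phi^{-1}(rank / (m_obs + 1))."""
    z = np.full(e.shape, np.nan)
    obs = ~np.isnan(e)
    z[obs] = norm.ppf(rankdata(e[obs]) / (obs.sum() + 1))
    return z


def daily_t2(Z):
    """Per-day statistic z' R^{-1} z over the features observed that day.

    Z: (days x features) rank-normal errors, np.nan for missing values.
    R: pairwise-complete correlation matrix (an assumption of this sketch).
    Returns the statistics and the number of features used on each day.
    """
    days, k = Z.shape
    R = np.eye(k)
    for a in range(k):
        for b in range(a + 1, k):
            both = ~np.isnan(Z[:, a]) & ~np.isnan(Z[:, b])
            za, zb = Z[both, a], Z[both, b]
            if both.sum() > 2 and za.std() > 0 and zb.std() > 0:
                R[a, b] = R[b, a] = np.corrcoef(za, zb)[0, 1]
    t2 = np.full(days, np.nan)
    n_obs = np.zeros(days, dtype=int)
    for d in range(days):
        o = ~np.isnan(Z[d])
        if o.any():
            z = Z[d, o]
            t2[d] = z @ np.linalg.solve(R[np.ix_(o, o)], z)
            n_obs[d] = o.sum()
    return t2, n_obs


# Toy data: 60 days x 3 features of noise, a shared spike on day 19,
# and one day (40) with no observed data at all.
rng = np.random.default_rng(0)
X = rng.normal(size=(60, 3))
X[19] += 10.0
X[40] = np.nan
Z = np.column_stack([rank_normal(decompose_errors(X[:, j])) for j in range(3)])
t2, n_obs = daily_t2(Z)
print(round(t2[19], 1), round(float(np.nanmedian(t2)), 1), n_obs[40])
```

Because the correlation matrix is assembled pairwise, it is not guaranteed to be positive definite when missingness is heavy; a more careful version would need a safeguard, such as projecting R onto the nearest positive definite matrix.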

Only if multiple features are simultaneously and sufficiently anomalous on the same day will the resulting test yield a statistically significant result. To correct for multiple comparisons, we bootstrapped the error components of the time series, assuming stationarity, to generate the null distribution of the largest test statistic across all days of follow-up; the upper α-quantile (that is, the (1 − α) quantile) of the bootstrapped values provided the threshold for significance at the α significance level.
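The multiplicity correction can be sketched as a bootstrap of the maximum daily statistic: because the threshold is taken from the distribution of the largest statistic over all days, a single threshold controls the chance of any false alarm across the whole follow-up period at level α. This sketch is not the authors’ code. It assumes complete data, resamples days independently with replacement (a simplification of the stationarity assumption that ignores serial dependence), and uses hypothetical function names.

```python
import numpy as np


def t2_stats(Z):
    """Per-day z' R^{-1} z for complete (days x features) rank-normal errors,
    with R the sample correlation matrix of the features."""
    R = np.atleast_2d(np.corrcoef(Z, rowvar=False))
    return np.einsum("ij,ij->i", Z, np.linalg.solve(R, Z.T).T)


def max_stat_threshold(Z, alpha=0.05, n_boot=2000, seed=0):
    """(1 - alpha) quantile of the bootstrap null distribution of max_d T^2_d.

    Assumption: days are resampled i.i.d. with replacement; a block bootstrap
    would be a natural refinement if errors are serially dependent.
    """
    rng = np.random.default_rng(seed)
    days = Z.shape[0]
    maxima = np.empty(n_boot)
    for b in range(n_boot):
        Zb = Z[rng.integers(0, days, size=days)]  # resampled days
        maxima[b] = t2_stats(Zb).max()
    return np.quantile(maxima, 1 - alpha)


rng = np.random.default_rng(1)
Z = rng.normal(size=(60, 3))                  # stand-in for 60 days x 3 transformed errors
thr = max_stat_threshold(Z, alpha=0.05)
flagged = np.flatnonzero(t2_stats(Z) > thr)   # days significant after the correction
print(round(float(thr), 2), flagged)
```

Comparing each day’s statistic with this single threshold, rather than with a per-day χ² critical value, is what keeps the number of alerts per patient low over a long follow-up.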

Results

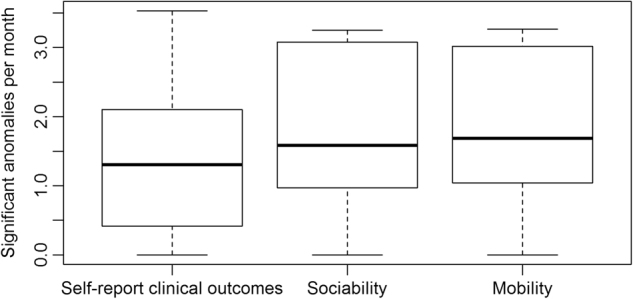

We investigated the rate at which significant anomalies occurred across the 15 patients in the sample (Fig. 1). On average, across the sample, 1.8 significant anomalies in mobility, 1.7 significant anomalies in sociability, and 1.4 significant anomalies in self-reported clinical outcomes were detected per month. In all three cases, this rate varied greatly across subjects, with some subjects having more than twice the anomaly rate of others. If anomaly detection is to be used to prompt interventions in the future, the frequency of detected anomalies should ideally match the frequency of actual relapses without producing too many false positives. Given the rarity of relapse and the small sample size of this pilot study, sensitivity and specificity cannot be accurately estimated from our data.

Figure 1.

Monthly rate of anomalies detected in the cohort of 15 patients with schizophrenia. After anomaly detection was performed for each day of data collection for each patient across the sample, the frequency of anomalies detected at the 0.05 significance level per 30 days was calculated separately for each patient. These numbers should be compared with the expected number of anomalies under the null hypothesis, 30 × 0.05 = 1.5 per 30 days. The distributions of anomaly rates in all data streams tend to be centered near this null rate of 1.5 anomalies per 30 days. As a point of comparison, in the patients who relapsed, the rate of anomalies within 2 weeks of hospitalization was 2.5 anomalies per 30 days.

Of the 15 subjects whose data were analyzed, five experienced clinical relapses, and of those who relapsed, three had sufficient data collected prior to relapse to test for the presence of behavioral anomalies. Of the two subjects who experienced relapse but had insufficient data, one uninstalled the smartphone application 3 weeks prior to hospitalization, and the other uninstalled it 1 week prior to hospitalization. For the three subjects who experienced relapse with sufficient data for anomaly detection analyses, the rate of anomalies detected in the 2 weeks prior to relapse was 71% higher than the rate of anomalies detected on days further from relapse (Table 1). Because relapse events were relatively rare in our sample, a 2-week window was selected instead of a 1-week window to allow sufficient data for calculating anomaly rates.

Table 1.

Comparison of the frequency of anomalies detected close to and far from the date of relapse for patients that were hospitalized

| | No. of anomaly tests performed | No. of combined anomalies | No. of survey anomalies | No. of social anomalies | No. of mobility anomalies |
|---|---|---|---|---|---|
| Within 2 weeks of relapse | 118 | 10 | 2 | 6 | 2 |
| Other days | 161 | 8 | 1 | 6 | 1 |

For each patient, anomaly tests are performed daily and separately for survey responses, social features, and mobility features. Only data collected before relapse and hospitalization are considered. The rate of anomalies within 2 weeks of relapse is 10/118 = 0.085 anomalies per day, while the rate of anomalies on other days is lower, at 8/161 = 0.050 anomalies per day.
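The 71% figure reported in the text follows directly from the counts in Table 1. A minimal Python check (the helper name is hypothetical) that also reproduces the 2.5 anomalies per 30 days quoted in the Fig. 1 caption:

```python
def rate_ratio(events_near, tests_near, events_far, tests_far):
    """Anomaly rate near relapse divided by the rate on other days (per daily test)."""
    return (events_near / tests_near) / (events_far / tests_far)


near = 10 / 118   # combined anomalies per daily test within 2 weeks of relapse
far = 8 / 161     # combined anomalies per daily test on other days
rr = rate_ratio(10, 118, 8, 161)
print(f"{near:.3f} {far:.3f} {rr:.2f} -> {100 * (rr - 1):.0f}% higher; "
      f"{30 * near:.1f} per 30 days near relapse")
```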

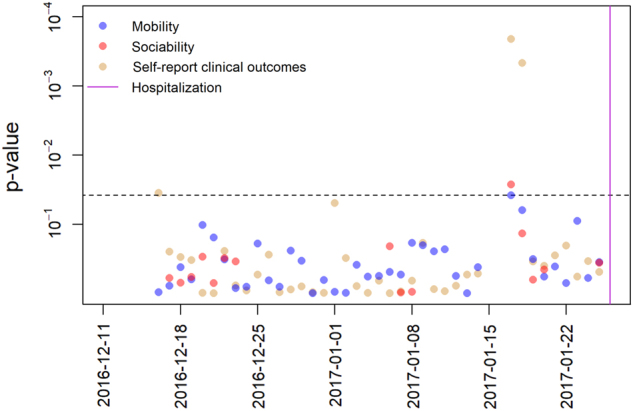

Of the patients with relapse and hospitalization, the first patient had 48 days of follow-up, 37 of which were collected prior to hospitalization; the second patient had 53 days of follow-up, 48 of which were collected prior to hospitalization; and the third patient had 79 days of follow-up, 41 of which were collected prior to hospitalization. In this third patient, significant anomalies in mobility, sociability, and self-reported clinical outcomes occurred in the days leading up to relapse and hospitalization (Fig. 2). Although the patient adhered to the study and smartphone data collection was reliable for the first month, no data were collected during the 2 days preceding the significant behavioral anomalies, which could also be related to the patient’s regression. On the anomalous day, 9 days before hospitalization, the subject spent only 6 h and 51 min of the 24-h period at home, far less time than usual. Also, in contrast with most previous days, on which the subject communicated with no one through phone calls or SMS, the subject made four phone calls to the same phone number on the anomalous day. Additionally, on the anomalous day the patient reported moderately strong anxiety (2 on a 0–3 scale), moderately strong depression (2 on a 0–3 scale), a moderately high Warning Symptoms Scale score (2.25 on a 0–3 scale), extreme sleeping problems (3 on a 0–3 scale), and extreme levels of psychosis (3 on a 0–3 scale). These scores represent an increase in every category compared with the self-report from a week before the anomaly.

Figure 2.

Daily p values for anomaly detection in mobility, sociability, and self-report of clinical outcomes in a patient leading up to relapse and hospitalization. The horizontal dotted line represents the significance level 0.05 after adjusting for multiple comparisons. Each point represents the p value for the test of anomaly detection on that day, with feature category indicated by color. Nine days prior to hospitalization there are significant anomalies in all data streams. These anomalies correspond with an escalation in self-reported symptoms in the days leading up to hospitalization and relapse

Discussion

In this 3-month pilot study, we applied anomaly detection to active and passive smartphone data in 15 subjects with schizophrenia. Of the 15 subjects, five experienced a clinical relapse, and of those, three had sufficient data for anomaly detection. For these individuals, the rate of anomalies detected in the 2 weeks prior to relapse was 71% higher than the rate of anomalies detected on days further from relapse. Our results suggest that passive smartphone data hold potential to identify early warning signs of relapse in schizophrenia.

Our data collection platform and statistical methods were designed to minimize bias and encourage future reproducibility. First, because subjects were not paid for app use, not given additional support for app use, and not provided with check-in calls or study staff reminders to use the app, we minimized confounding variables related to app use. Thus, we aimed, as far as possible while maintaining an ethical research approach, to simulate real-world conditions in this study. Second, because the Beiwe application runs on both Android and Apple smartphones, we did not exclude any subjects based on the type of phone they owned, which is important because phone type may be a proxy for socioeconomic status [29, 30], which is known to be associated with both overall health and the type of health care that is available to individuals [31, 32]. Third, given that the Beiwe platform is open source, others will be able to conduct similar studies and can replicate or disprove our results. Because the Beiwe platform captures all data collection settings for active and passive data in a single configuration file, investigators can replicate the data collection of any study simply by importing that study’s configuration file into a new study. (The Beiwe configuration file, together with the code used for all analyses here, is available for this study.) As the Beiwe platform already maintains data in secure and encrypted formats compatible with federal privacy laws, implementation in a formal clinical setting would not face regulatory hurdles related to data security. While this work represents only a pilot study, the approach could be scaled to a population level at low cost.

Though only a few of our subjects relapsed over the course of the study, our anomaly detection in the data for these individual subjects suggests several interesting hypotheses for future research. The increased rate of anomalies before relapse indicates that anomaly detection can flag potentially predictive changes in mobility and social metrics that would be difficult to capture or quantify with current clinical tools and care methods. Another advantage of the anomaly detection approach is that it provides interpretable results for use in clinical settings. Given the large amount of data that can be collected from smartphones, in our study up to one million data points per day per subject, it will almost always be possible to perfectly classify subjects according to almost any clinical outcome within the study sample, even with simple linear classifiers; with far more features than subjects, such a fit reflects overfitting and will not generalize to new data or new subjects. While it is possible to create complex linear or nonlinear models, the clinical interpretation of such models is nearly impossible. In contrast, anomaly detection provides clinically meaningful and actionable information, and it can form the foundation of various types of future interventions. Anomaly detection also offers the advantage that, by correcting for multiple testing across all days of follow-up, it avoids excessive false positives, which is important for reducing alert fatigue for both patients and clinicians while also economizing the use of healthcare resources. These results also suggest a computational model of relapse prediction for future studies. While the personal triggers and temporal trajectories of relapse remain largely unknown, our results suggest that mobility and social disturbances will be important factors to study in building new models of relapse prediction.
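The overfitting point can be illustrated with a small simulation that is unrelated to the study data: with far more features than subjects, a minimum-norm least-squares linear classifier fits random labels perfectly in sample, yet performs at chance on new subjects. The sample sizes, the seed, and the choice of classifier are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(42)
n_subjects, n_features = 15, 1000                # far more features than subjects
X = rng.normal(size=(n_subjects, n_features))    # pure-noise "behavioral" features
y = rng.choice([-1.0, 1.0], size=n_subjects)     # random outcome labels, unrelated to X

# Minimum-norm least-squares linear classifier: with n_features > n_subjects,
# X has full row rank (almost surely), so X @ w reproduces y exactly.
w, *_ = np.linalg.lstsq(X, y, rcond=None)
train_acc = np.mean(np.sign(X @ w) == y)

# New subjects drawn from the same noise distribution.
X_new = rng.normal(size=(1000, n_features))
y_new = rng.choice([-1.0, 1.0], size=1000)
test_acc = np.mean(np.sign(X_new @ w) == y_new)
print(train_acc, round(float(test_acc), 2))
```

The perfect in-sample accuracy says nothing about the outcome; it only reflects the number of free parameters, which is why out-of-sample validation is essential for high-dimensional smartphone data.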

Our results have broader implications for digital phenotyping and mobile health research. With tools like smartphones, making use of big data from psychiatric patients is no longer primarily limited by technology; the bottleneck is increasingly the lack of sufficient statistical methodology. As the Beiwe platform used in this study is publicly available, it will enable any research team to collect identical data in a secure and low-cost manner. However, determining valid and reproducible methods that are able to transform these data into personal and population-level health insights is perhaps the greatest challenge in this line of research. Additional barriers to clinical implementation of smartphone-based relapse prediction include the current lack of guidance on legal liability, the difficulty of integrating warnings into electronic medical records and clinical workflows, and the need for further collaborative research on patient preferences regarding this technology.

Our results support the feasibility of digital phenotyping in psychotic disorders, in line with other recent research efforts. While the pioneering CrossCheck study used loaner phones, did not focus on relapse, and used different statistical methods, namely generalized estimating equations, it also demonstrated how active and passive smartphone data could be used to predict symptoms in patients with schizophrenia [16]. The MONARCA Project in Europe has also explored the utility of active and passive data in bipolar disorder patients, and it found correlations between call and text messaging patterns and symptom severity [33].

While our anomaly detection model appeared to work well for the patients who relapsed, one limitation of our study is that most subjects did not relapse over the course of the data collection, which provided only a few cases in which anomaly detection prior to relapse could be demonstrated. The nature of relapse quantified in these three subjects may not reflect other potential trajectories and mechanisms that can lead to relapse. The complexity and heterogeneity of all mental illnesses, and especially psychotic illnesses, suggest that finding population-level models of relapse will be a challenge. Building individual computational models of relapse at the personal level may be an appropriate starting point from which to create new theories and test new hypotheses. For schizophrenia, it may be easier to realize the potential of personalized medicine than that of population medicine.

The 3-month duration, although longer than many other mobile health schizophrenia studies [6, 7], also limited the number of relapses that occurred. While we view the use of personal rather than study phones as a strength of the study design (and are convinced that the use of personal phones is the only way to scale these types of studies to larger cohorts and longer follow-up times), it does introduce complications to passive data collection, as the sensors and operating systems are not identical in all phones. The heterogeneity of smartphone devices and operating systems underlines the importance of collecting raw sensor and phone usage data, which makes this heterogeneity explicit, and highlights the need for using and developing methods of inference that are robust to such data heterogeneity. However, it is important to also note that considerably larger national or international studies would be unlikely to confront much greater heterogeneity in devices than that seen here, given that roughly 99% of smartphones globally run either Android or Apple iOS operating systems [34]. No subjects indicated that passive data collection made them feel paranoid or afraid, a concern often raised when using digital phenotyping methodology. However, it is important to acknowledge that this type of monitoring may be unacceptable to some patients, and there is a need for further collaborative research to inform the clinical limitations and benefits of digital phenotyping.

Conclusion

With appropriate software instrumentation, smartphones can be used as research tools across a range of subfields within medicine and public health [6, 7]. Combined with statistical and computational methods like anomaly detection, they offer novel opportunities to explore psychiatric symptoms and functioning. Our results offer an exciting albeit preliminary view into the potential of these data-driven tools for relapse prediction in schizophrenia. They also underscore the need for further efforts in this space that will rely on the continued synergistic efforts of clinicians, patients, and researchers.


Acknowledgements

IB, PS, and J-PO are supported by NIH/NIMH 1DP2MH103909 (PI: J-PO) and the Harvard McLennan Dean’s Challenge Program (PI: J-PO). JT, LS, and MK are supported by the Natalia Mental Health Foundation. JT is also supported by a Dupont-Warren Fellowship from the Harvard Medical School Department of Psychiatry as well as a Young Investigator Grant from the Brain and Behavior Research Foundation.

Competing interests

The authors declare no competing interests.

Footnotes

These authors contributed equally: Ian Barnett, John Torous.

Electronic supplementary material

The online version of this article (10.1038/s41386-018-0030-z) contains supplementary material, which is available to authorized users.

References

1. Emsley R, Chiliza B, Asmal L, Harvey BH. The nature of relapse in schizophrenia. BMC Psychiatry. 2013;13:50. doi: 10.1186/1471-244X-13-50.

2. Almond S, Knapp M, Francois C, Toumi M, Brugha T. Relapse in schizophrenia: costs, clinical outcomes and quality of life. Br J Psychiatry. 2004;184:346–51. doi: 10.1192/bjp.184.4.346.

3. Remington G, Foussias G, Agid O, Fervaha G, Takeuchi H, Hahn M. The neurobiology of relapse in schizophrenia. Schizophr Res. 2014;152:381–90. doi: 10.1016/j.schres.2013.10.009.

4. Tibbo P, Malla A, Manchanda R, Williams R, Joober R. Relapse risk assessment in early phase psychosis: the search for a reliable and valid tool. Can J Psychiatry. 2014;59:655–8. doi: 10.1177/070674371405901207.

5. Španiel F, Vohlídka P, Hrdlička J, Kožený J, Novák T, Motlová L, et al. ITAREPS: information technology aided relapse prevention programme in schizophrenia. Schizophr Res. 2008;98:312–7. doi: 10.1016/j.schres.2007.09.005.

6. Torous J, Firth J, Mueller N, Onnela JP, Baker JT. Methodology and reporting of mobile heath and smartphone application studies for schizophrenia. Harv Rev Psychiatry. 2017;25:146–54. doi: 10.1097/HRP.0000000000000133.

7. Torous J, Onnela JP, Keshavan M. New dimensions and new tools to realize the potential of RDoC: digital phenotyping via smartphones and connected devices. Transl Psychiatry. 2017;7:e1053. doi: 10.1038/tp.2017.25.

8. Onnela JP, Rauch SL. Harnessing smartphone-based digital phenotyping to enhance behavioral and mental health. Neuropsychopharmacology. 2016;41:1691–6. doi: 10.1038/npp.2016.7.

9. Firth J, Cotter J, Torous J, Bucci S, Firth JA, Yung AR. Mobile phone ownership and endorsement of “mHealth” among people with psychosis: a meta-analysis of cross-sectional studies. Schizophr Bull. 2015;42:448–55. doi: 10.1093/schbul/sbv132.

10. Gay K, Torous J, Joseph A, Pandya A, Duckworth K, et al. Digital technology use among individuals with schizophrenia: results of an online survey. JMIR Ment Health. 2016;3:e15. doi: 10.2196/mental.5379.

11. Johnson J. What’s holding fitness wearables back? Brodeur Partners; 2015. https://www.prnewswire.com/news-releases/whats-holding-fitness-wearables-back-300150936.html

12. Ennis L, Rose D, Denis M, Pandit N, Wykes T. Can’t surf, won’t surf: the digital divide in mental health. J Ment Health. 2012;21:395–403. doi: 10.3109/09638237.2012.689437.

13. Schlosser D, Campellone T, Kim D, Truong B, Vergani S, Ward C, et al. Feasibility of PRIME: a cognitive neuroscience-informed mobile app intervention to enhance motivated behavior and improve quality of life in recent onset schizophrenia. JMIR Res Protoc. 2016;5:e77. doi: 10.2196/resprot.5450.

14. Grünerbl A, Muaremi A, Osmani V, Bahle G, Oehler S, Tröster G, et al. Smartphone-based recognition of states and state changes in bipolar disorder patients. IEEE J Biomed Health Inform. 2015;19:140–8. doi: 10.1109/JBHI.2014.2343154.

15. Saeb S, Zhang M, Karr CJ, Schueller SM, Corden ME, Kording KP. Mobile phone sensor correlates of depressive symptom severity in daily-life behavior: an exploratory study. J Med Internet Res. 2015;17:e175. doi: 10.2196/jmir.4273.

16. Wang R, Aung MS, Abdullah S, Brian R, Campbell AT, Choudhury T, et al. CrossCheck: toward passive sensing and detection of mental health changes in people with schizophrenia. Proceedings of the 2016 ACM International Joint Conference on Pervasive and Ubiquitous Computing. ACM; 2016.

17. Faherty LJ, Hantsoo L, Appleby D, Sammel MD, Bennett IM, Wiebe DJ. Movement patterns in women at risk for perinatal depression: use of a mood-monitoring mobile application in pregnancy. J Am Med Inform Assoc. 2017;24:746–53. doi: 10.1093/jamia/ocx005.

18. Ben-Zeev D, Brenner CJ, Begale M, Duffecy J, Mohr DC, Mueser KT. Feasibility, acceptability, and preliminary efficacy of a smartphone intervention for schizophrenia. Schizophr Bull. 2014;40:1244–53. doi: 10.1093/schbul/sbu033.

19. Torous J, Kiang MV, Lorme J, Onnela J-P. New tools for new research in psychiatry: a scalable and customizable platform to empower data driven smartphone research. JMIR Ment Health. 2016;3:e16. doi: 10.2196/mental.5165.

20. Torous J, Staples P, Onnela JP. Realizing the potential of mobile mental health: new methods for new data in psychiatry. Curr Psychiatry Rep. 2015;17:1–7. doi: 10.1007/s11920-015-0602-0.

21. Csernansky JG, Mahmoud R, Brenner R. A comparison of risperidone and haloperidol for the prevention of relapse in patients with schizophrenia. N Engl J Med. 2002;346:16–22. doi: 10.1056/NEJMoa002028.

22. Rhee I, Shin M, Hong S, Lee K, Chong S. Human mobility patterns and their impact on routing in human-driven mobile networks. HotNets-VI; 2007.

23. Barnett I, Onnela J-P. Inferring mobility measures from GPS traces with missing data. arXiv preprint arXiv:1606.06328. 2016.

24. Filzmoser P. A multivariate outlier detection method. Proceedings of the Seventh International Conference on Computer Data Analysis and Modeling, vol. 1; 2004.

25. Li J, Pedrycz W, Jamal I. Multivariate time series anomaly detection: a framework of hidden Markov models. Appl Soft Comput. 2017.

26. Idé T, Papadimitriou S, Vlachos M. Computing correlation anomaly scores using stochastic nearest neighbors. Seventh IEEE International Conference on Data Mining (ICDM 2007). IEEE; 2007.

27. Qiu H, Liu Y, Subrahmanya NA, Li W. Granger causality for time-series anomaly detection. 2012 IEEE 12th International Conference on Data Mining (ICDM). IEEE; 2012.

28. Cheng H, Tan PN, Potter C, Klooster S. Detection and characterization of anomalies in multivariate time series. Proceedings of the 2009 SIAM International Conference on Data Mining. SIAM; 2009.

29. Arora S, Ford K, Terp S, Abramson T, Ruiz R, Camilon M, et al. Describing the evolution of mobile technology usage for Latino patients and comparing findings to national mHealth estimates. J Am Med Inform Assoc. 2016;23:979–83. doi: 10.1093/jamia/ocv203.

30. Blumberg SJ, Luke JV. Wireless substitution: early release of estimates from the National Health Interview Survey, January–June 2016. National Center for Health Statistics. 2016;201:1–17.

31. Adler NE, Newman K. Socioeconomic disparities in health: pathways and policies. Health Aff. 2002;21:60–76. doi: 10.1377/hlthaff.21.2.60.

32. Chen E, Miller GE. Socioeconomic status and health: mediating and moderating factors. Annu Rev Clin Psychol. 2013;9:723–49. doi: 10.1146/annurev-clinpsy-050212-185634.

33. Osmani V. Smartphones in mental health: detecting depressive and manic episodes. IEEE Pervasive Comput. 2015;14:10–13. doi: 10.1109/MPRV.2015.54.

34. Goasduff LF, Forni AA. Gartner says worldwide sales of smartphones grew 7 percent in the fourth quarter of 2016. Stamford: Gartner; 2017. https://www.gartner.com/newsroom/id/3609817.

35. Canzian L, Musolesi M. Trajectories of depression: unobtrusive monitoring of depressive states by means of smartphone mobility traces analysis. Proceedings of the 2015 ACM International Joint Conference on Pervasive and Ubiquitous Computing. ACM; 2015.
