Abstract

Self-generated movement leads to the attenuation of the predicted sensory consequences of that movement. This mechanism ensures that attention is generally not drawn to sensory signals caused by one’s own movement. Such attenuation has been observed across the animal kingdom and in different sensory modalities. In this study, we used novel virtual reality (VR) devices to test the hypothesis that the human brain attenuates visual sensation in the area of the visual field where the subject’s hand is currently moving. We conducted three VR experiments in which we monitored hand position during movement while participants performed a visual search task. In the first two experiments, we measured reaction times (RTs) to salient moving targets and observed that RT is slower for targets that are behind the (invisible) hand. This result provides the first evidence that the visual motion signals generated by the subject’s own hand movement are suppressed. In the third experiment, we observed that RT is also slower for color-changing targets behind the hand. Our findings support the active inference account of sensory attenuation, which posits that attenuation occurs because attention is withdrawn from the sensory consequences of one’s own movement. Furthermore, we demonstrate how modern VR tools could open up exciting new avenues of research on the interplay of action and perception.

Keywords: perception, sensory attenuation, virtual reality, visual attention, active inference

Introduction

From an evolutionary perspective, detecting motion is of critical importance. However, if an agent (e.g. an animal or a robot) is moving, most of the motion it perceives in its environment is caused by its own movement. For example, when one is running, one’s own hands, two relatively large objects, move through the lower part of one’s visual field. If an external object were moving at the same position (a running dog, a moving ball), it would immediately capture one’s attention. Nonetheless, such hand movements are not usually noticed; they appear to be suppressed from our experience. What is the mechanism behind such visual attenuation?

It is generally accepted that the brain predicts the sensory consequences of its own movement and that these predictions result in attenuation of the respective sensory signals (Von Helmholtz, 1867; Sperry, 1950; von Holst and Mittelstaedt, 1950; Frith, 1992; Blakemore et al., 1998; Bays et al., 2006; Friston, 2010; Clark, 2015). In humans, previous studies have suggested that such attenuation of the sensory signal is the reason why, for example, we cannot tickle ourselves (Blakemore et al., 1998). Furthermore, several studies have shown that the perception of external tactile stimuli is suppressed during movement of the subject’s own hand (Juravle et al., 2010; Juravle and Spence, 2011).

Movement of one’s own body also produces visual changes in the external world that have to be attenuated as well. For example, when the hands are moving (e.g. to grab a beer), the visual motion signals of the hand movement might distract us from seeing more relevant external movement (e.g. someone throwing a chair). However, previous work has not investigated the visual attenuation of self-generated limb movement. The basic idea for testing this type of attenuation is simple: present objects that change behind the moving limb and assess whether the perception of these objects is impaired. However, this hypothesis is difficult to test with the conventional tools of experimental psychology, as it requires that participants see the moving objects “behind” the moving limb without seeing the moving limb itself.

We addressed this challenge by taking advantage of modern virtual reality (VR) tools and a novel hand-tracking device. We first conducted two VR experiments in which participants performed a visual search task while raising their hand in front of their eyes. We captured the coordinates of the hand movements without letting the participants see the hand in the VR environment. Critically, in the experimental condition, we used these coordinates to ensure that the targets appeared behind the invisible hand during the hand movement. We measured the reaction time (RT) to notice the targets.

We used a visual search task to assess visual attenuation through its effect on exogenous attention. In other words, we used RT to measure the involuntary capture of attention by an abrupt change of the target. From the perspective of the more classic views of sensory attenuation, this approach might seem unparsimonious, since attention is not thought to play a role in efference copy generation or in the subsequent attenuation of signals. However, our study is based on contemporary theories that explain sensory attenuation through the effect of attention. In particular, according to the active inference or prediction error minimization account (Friston, 2010; Hohwy, 2013; Clark, 2015), "during sensory attenuation, attention is withdrawn from the consequences of movement, so that movement can occur" (Brown et al., 2013).

Active inference theory posits that behaving organisms constantly try to predict upcoming sensory input. These predictions allow intelligent systems to process only the deviations from the predictions (i.e. prediction errors), with the overall aim of minimizing prediction error in the long term. According to this theory, action is not primarily a response to the sensory stream, but rather an efficient way of changing the sensory data to fit the predictions (Friston, 2010; Hohwy, 2013; Clark, 2015). In this framework, movement is caused by predictions of the sensory consequences of the performed movement (Brown et al., 2013). Importantly, these predictions run counter to the current sensory evidence (the movement has not yet reached the predicted final state). Hence, to allow movement, the precision of the current sensory evidence needs to be reduced. The active inference account proposes that sensory precision is reduced by withdrawing attention from the sensory prediction errors (Brown et al., 2013; Hohwy, 2013; Clark, 2015). For the present study, this means that the subject’s own hands are attenuated from vision by withdrawing attention from the area of the visual field through which the hands are predicted to move on their way to the desired destination. In other words, on the basis of active inference theory, we hypothesized that smooth movement of this type is maintained by continuous attenuation of sensory input from the hand's current position throughout the trajectory until the hand returns to its desired final position.
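As a toy illustration of the precision argument (not an implementation of active inference), prediction errors in predictive-coding formulations are commonly scaled by their precision, the inverse of their expected variance. The sketch below uses made-up, unitless positions and a hypothetical function name, `precision_weighted_error`, to show that lowering sensory precision shrinks the weighted error produced by the mismatch between the current and the predicted hand position.

```python
def precision_weighted_error(observed: float, predicted: float, precision: float) -> float:
    """Prediction error scaled by its precision (inverse variance); hypothetical helper."""
    return precision * (observed - predicted)

# Made-up, unitless numbers: the hand is predicted to be at 1.0 but is still at 0.2
observed_position = 0.2
predicted_position = 1.0

# Full sensory precision: the mismatch enters at full strength
attended = precision_weighted_error(observed_position, predicted_position, precision=1.0)
# Reduced sensory precision (attention withdrawn): the same mismatch carries little weight
attenuated = precision_weighted_error(observed_position, predicted_position, precision=0.1)
print(attended, attenuated)
```

With precision 1.0 the mismatch yields an error of -0.8, whereas with precision 0.1 it yields about -0.08; in this caricature, reducing precision is what lets the prediction, rather than the current evidence, dominate.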

In the first two experiments, the targets of the visual search were defined by a change in movement direction. Based on the active inference account, we had a clear hypothesis for these two experiments: as attention is withdrawn from the area of the moving hand, moving targets that are directly behind the (invisible) hand at that moment should be processed more slowly than control targets. In the first experiment, the control targets were in the opposite visual hemifield, whereas in the second experiment additional control targets were on the same side of the visual field as the hand.

Studying visual attenuation through visual attention is advantageous, as the visual search task makes it easy to change the features of the search target. In the third experiment, the target changed color. This is important, as the first two experiments cannot experimentally differentiate the active inference account from the classic efference copy theory (e.g. von Holst and Mittelstaedt, 1950; Blakemore et al., 1998). In the first two experiments, the targets moved in the same direction as the hand. Such targets could, in principle, be attenuated by an efference copy, as their key features (direction and speed of movement) directly coincided with the hand movement. However, according to the efference copy theory, the color change in the third experiment should not be attenuated, as the color of the target is unrelated to the hand movement. In contrast, the active inference account predicts sensory attenuation even in this case, because according to this theory sensory precision in general ought to be downregulated during movement. In this sense, the third experiment directly contrasts the active inference account with the classic efference copy theory.

Methods

Experimental setup

We tested our hypotheses using an Oculus Rift VR headset together with Leap Motion, a novel device for tracking hands in VR (see details below). The VR technology gave us full control over the visual environment the participant perceived. Although the participant’s moving hand was not rendered in the VR environment, the physical parameters of the hand (position, velocity, orientation) were continuously monitored with the Leap tracking system. In this way, we were able to study whether the brain uses knowledge about the hand’s position to suppress the perception of movement in the area of the visual field that would have been covered by the hand. Note, therefore, that wherever we refer to the "target being behind the hand", the hand was actually completely invisible to the participant.

The participants were shown horizontally oscillating spheres (Fig. 1B), and their main task was to react as fast as possible when they noticed a salient target object either moving vertically (Experiments 1 and 2, "E1" and "E2", respectively) or changing its color (Experiment 3, "E3"). The change in the direction of motion or in the color of the target object was triggered by the participant’s hand movement. More specifically, the target was presented while the participant performed a pre-trained hand movement (see below). The RT to the stimulus was registered by a mouse button press, with the mouse held in the hand the participant normally uses to control a mouse. The participants were instructed to keep looking at the fixation point (a cross in the middle of the field of view) at all times.

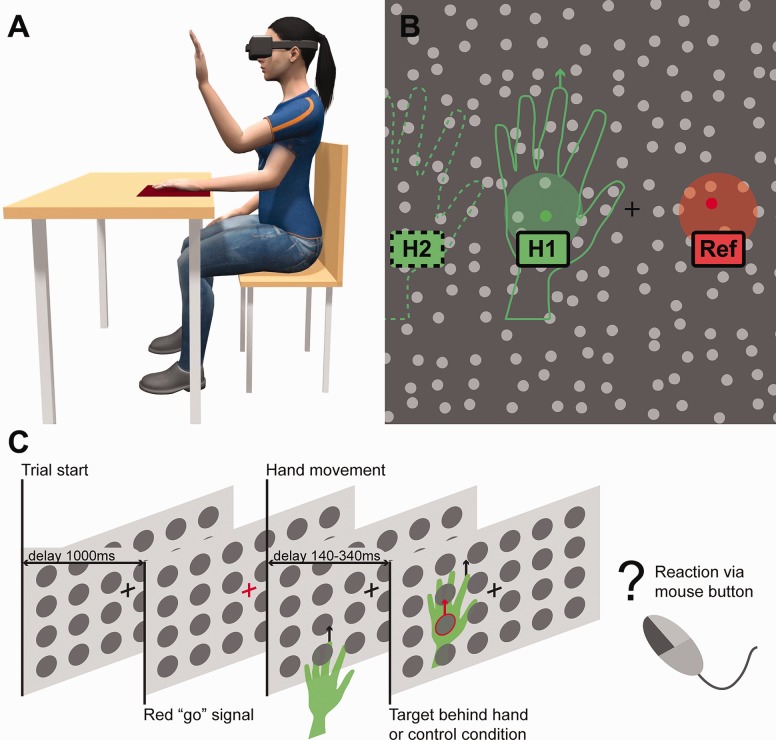

Figure 1.

We conducted three VR experiments in which participants performed a pre-trained hand movement. We monitored the coordinates of the hand using a novel hand-tracking device, while the hand was kept invisible to the participant. A) Illustration of the physical setup of the experiment: the participants sat at a desk and made a pre-trained hand movement while the hand was kept invisible in the VR environment. The coordinates of the hand were monitored, and moving targets were presented behind the (invisible) moving hand. The left hand is shown in two positions. B) An illustrative view of the VR environment. In the critical condition, the targets were chosen from behind the (invisible) moving hand (position “H1”; for clarity, the hand contour, the area behind the hand, and an example random target are illustrated in green). In the control conditions, either the targets were shown in the area reflected about the fixation point (“Ref”), or the hand was moved to the left (position “H2”) while the targets were still presented as if the hand were in position H1. C) Illustrative overview of the experimental design. After a red “go” signal, the participants performed the pre-learned hand movement. The target object was chosen from the area behind the invisible moving hand (shown in green for visual clarity), and the participants had to react as fast as possible when they noticed the target. Note that the figure is illustrative: the objects (except the targets of E3) and the hand were neither colored, visible, nor outlined for the participant, and the targets were much smaller (see Fig. 1B).

In all three experiments, we compared the RTs in the condition where the target was behind the hand with those in a condition where the target was elsewhere, i.e. not behind the hand. The temporal and spatial distributions of the targets in the different conditions were kept constant in all experiments.

Physical setup

The participants were seated at a table with their left hand on the table and their right hand on their lap holding a wired USB mouse (Fig. 1A), and wore an Oculus Rift VR headset (Oculus VR, LLC; "Oculus") with a refresh rate of 90 Hz and a field of view of 100°. The headset features multiple infrared sensors tracked by an infrared camera, which, together with the gyroscope and accelerometer, allow precise, low-latency positional tracking.

The Leap Motion Controller (Leap Motion, Inc.; "Leap"), a novel hand-tracking system, was used with the Orion SDK (version 3.1.2) to track hand movements. The Leap tracks the position, velocity, and orientation of hands and fingers with low latency and an average position accuracy of 1.2 mm (Weichert et al., 2013). The Leap hardware uses two infrared optical sensors and three infrared lights to detect hands, and has a field of view of 150° horizontally and 120° vertically. The experiments were conducted in a windowless room to prevent daylight from degrading the performance of the infrared-based tracking systems.

Stimuli and targets

The virtual environment consisted of horizontally oscillating spheres (the stimuli) covering the whole visual field (Fig. 1B). The stimuli subtended approximately 0.88° of visual angle and were randomly generated on a 2D plane at the beginning of each trial, with a between-sphere distance of approximately 2.6°. The spheres oscillated horizontally along a sinusoidal trajectory with an amplitude approximately equal to half the distance between two spheres. In E1 and E2, the targets moved vertically upward with a velocity approximately equal to the average velocity of the moving hand. The targets moved an average of 0.15° in the direction of the hand movement, a distance of approximately one-fifth of the size of the target, or one-tenth of the distance between two spheres. The targets were triggered 140–340 ms after the hand movement was detected and were chosen randomly from a circular area behind the invisible hand (Fig. 1B). Hence, the targets appeared midway through the hand movement, randomly distributed around either the left or the right side of the fixation point (Fig. 1C).
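The stimulus geometry and target selection described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the software actually used in the study: the regular grid layout, the oscillation frequency (`OSCILLATION_HZ`), the radius of the circular "behind hand" area (`BEHIND_HAND_RADIUS_DEG`), and the uniform distribution of trigger delays are hypothetical, and the hand position is assumed to have already been projected into visual-field coordinates (degrees relative to fixation). Only the 2.6° spacing, the half-spacing amplitude, and the 140–340 ms trigger window come from the text.

```python
import math
import random

# From the text
SPHERE_SPACING_DEG = 2.6
AMPLITUDE_DEG = SPHERE_SPACING_DEG / 2   # oscillation amplitude: half the between-sphere distance
TRIGGER_WINDOW_MS = (140.0, 340.0)

# Hypothetical values (not reported in the text)
OSCILLATION_HZ = 0.5
BEHIND_HAND_RADIUS_DEG = 3.0

def make_spheres(half_width_deg, half_height_deg, rng):
    """Regular grid of (x0, y0, phase) tuples in degrees; the grid layout is an assumption."""
    nx = int(2 * half_width_deg // SPHERE_SPACING_DEG) + 1
    ny = int(2 * half_height_deg // SPHERE_SPACING_DEG) + 1
    return [(-half_width_deg + i * SPHERE_SPACING_DEG,
             -half_height_deg + j * SPHERE_SPACING_DEG,
             rng.uniform(0.0, 2.0 * math.pi))
            for i in range(nx) for j in range(ny)]

def sphere_x(x0_deg, t_s, phase):
    """Horizontal position of a sphere at time t_s on its sinusoidal trajectory."""
    return x0_deg + AMPLITUDE_DEG * math.sin(2.0 * math.pi * OSCILLATION_HZ * t_s + phase)

def trigger_delay_ms(rng):
    """Delay after movement detection; a uniform distribution is assumed."""
    return rng.uniform(*TRIGGER_WINDOW_MS)

def pick_target(spheres, hand_xy_deg, t_s, rng):
    """Index of a random sphere currently inside the circle around the hand, or None."""
    hx, hy = hand_xy_deg
    inside = [i for i, (x0, y0, phase) in enumerate(spheres)
              if math.hypot(sphere_x(x0, t_s, phase) - hx, y0 - hy) <= BEHIND_HAND_RADIUS_DEG]
    return rng.choice(inside) if inside else None

rng = random.Random(0)
spheres = make_spheres(20.0, 15.0, rng)
delay = trigger_delay_ms(rng)
# Simplification: the trigger delay is used as the oscillation time; the hand position
# is assumed to be already expressed in degrees relative to fixation
target = pick_target(spheres, hand_xy_deg=(-8.0, -3.0), t_s=delay / 1000.0, rng=rng)
print(len(spheres), round(delay), target)
```

Selecting among spheres whose *current* position falls inside the circle, rather than among fixed grid positions, reflects that the spheres keep oscillating while the hand moves.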

In E3, the targets changed color instead of moving vertically. In other words, the target kept oscillating horizontally like all other stimuli, but a color change was triggered at the same moment and position at which the target in E1 began to move. The color of the target changed only in the hue channel of the HSL color model, keeping saturation and lightness constant, and the change persisted until the end of the trial. In E1 and E2, the stimuli and targets were light gray. In E3, the stimuli were light green and the target light red (note that we specifically recruited participants without any form of color blindness).
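The hue-only color change can be illustrated with Python's standard `colorsys` module, which implements the same model under the name HLS (note the argument order `h, l, s`). The specific hue, lightness, and saturation values below are assumptions; the text only states that the stimuli were light green, the target light red, and that saturation and lightness were held constant.

```python
import colorsys

def change_hue(rgb, new_hue_deg):
    """Replace the hue of an RGB colour (components in 0-1), keeping HSL lightness and saturation."""
    _, lightness, saturation = colorsys.rgb_to_hls(*rgb)
    return colorsys.hls_to_rgb(new_hue_deg / 360.0, lightness, saturation)

# Assumed values: hue 120 deg (green), lightness 0.75, saturation 0.6
light_green = colorsys.hls_to_rgb(120 / 360.0, 0.75, 0.6)
# Hue 0 deg (red) with the same lightness and saturation
light_red = change_hue(light_green, 0.0)
print(light_green, light_red)
```

Under these assumed values, the stimulus is roughly (0.6, 0.9, 0.6) in RGB and the target roughly (0.9, 0.6, 0.6).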

In the experimental condition, targets were chosen randomly from a circular area behind the hand. In the control conditions of E1 and E3, the targets were chosen from an area at the same vertical position as the hand but reflected horizontally to the other side of the fixation point ("Reflected" condition). In E2, the participants had to move the hand to two different positions (H1 and H2 in Fig. 1B). In the first position, the hand was in the same place as in E1, and the positions of the targets were recorded. In the second position, the hand was shifted to the left, and the prerecorded target positions were used to present targets as if the hand were still in the first position, to the right. In other words, when the hand was in the left position ("Hand-left"), the targets were still presented as if the hand were on the right. Following our hypothesis, we expected no attenuation in the "Hand-left" condition, as the targets were presented in an area not covered by the hand.
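The mapping from a recorded "behind hand" position to the position used in each condition can be written as a small function. This sketch assumes a coordinate system with the fixation point at the origin and x increasing to the right; the function name `target_position` is hypothetical.

```python
from typing import Tuple

# (x, y) in degrees; fixation at (0, 0), x positive to the right (assumed convention)
Point = Tuple[float, float]

def target_position(condition: str, recorded_behind_hand: Point) -> Point:
    """Where to present a target, given a position recorded behind the hand at H1 (hypothetical helper)."""
    x, y = recorded_behind_hand
    if condition in ("Behind hand", "Hand-left"):
        # "Hand-left" reuses the H1 position even though the hand is now at H2
        return (x, y)
    if condition == "Reflected":
        # Mirror about the vertical midline through fixation; the height is unchanged
        return (-x, y)
    raise ValueError(f"unknown condition: {condition!r}")

print(target_position("Reflected", (-6.0, 2.0)))
```

Because "Behind hand" and "Hand-left" share the same target positions, any RT difference between them can only come from where the (invisible) hand is.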

Participants

The participants were recruited from among friends of the authors via personal contact; we specifically did not recruit psychology students. A total of 60 healthy participants with normal or corrected-to-normal vision took part in the three experiments: 16 in the first experiment (8 females, 8 males, aged 19–42 [mean = 25.5]), 15 in the second experiment (8 females, 7 males, aged 18–30 [mean = 24.4]), and 29 in the third experiment (15 females, 14 males, aged 18–30 [mean = 24.1]).

All of the participants read and signed informed consent forms and participated in the experiments voluntarily. The VR experiments conducted in this study were approved by the Ethics Committee of the University of Tartu.

Procedure

Instructions to the participants

The participants were helped to remove all accessories (jewellery, rings, etc.) and to expose their arms up to the elbows to ensure the best hand-tracking performance. As a cover story, the hand-tracking device was described as "a device that detects the direction of hand movements". The participants were guided through a training session consisting of (i) practice of the hand movement, (ii) practice of the RT task without the hand movement, and (iii) practice of the hand movement combined with the RT task.

The hand movement was trained without the headset. The experimenter demonstrated the hand movement and described it as follows: "Move your hand straight up from the start position on the table to the height of your eyes and then straight down, back to the earlier position on the table. The motion ought to be smooth and the hand should not stop at the top. The motion has to be straight upwards to avoid getting too close to the headset. For the best movement direction tracking, the fingers should be pointed upwards and separate from each other." This training method was found to be superior to other methods, e.g. having participants follow a moving ball with a visible hand inside VR.

During the RT training and the experiments, the participants had to focus on the fixation point and react to the target as fast as possible by pressing the left mouse button. The participants were instructed to make the practiced hand movement within 3 s after the red "go" signal. They were informed that the target would be triggered by the upward movement of the hand.

Experiment 1

A total of 150 trials were performed, of which 30% had random targets, 30% had targets behind the hand ("Behind hand"), and 30% had targets reflected about the vertical midline through the fixation point ("Reflected"). In 10% of the trials, no target was present. The conditions were balanced and randomized for each participant, and each participant was exposed to all conditions. A 10 s pause was given after every 40 trials to allow participants to rest. The general design of a single trial, common to all experiments, is illustrated in Fig. 1C.
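One way to produce a balanced, per-participant randomized trial list with the E1 proportions is sketched below. The text does not describe how randomization was implemented, so the shuffling approach and the function name `make_trial_list` are assumptions; the proportions, the trial count, and the 40-trial pause interval come from the text.

```python
import random
from collections import Counter

# Proportions from the text; the labels are descriptive, not identifiers from the original software
E1_PROPORTIONS = {"Random": 0.30, "Behind hand": 0.30, "Reflected": 0.30, "No target": 0.10}

def make_trial_list(n_trials, proportions, seed=None):
    """Shuffled list of condition labels with exact per-condition counts (hypothetical helper)."""
    counts = {cond: round(n_trials * p) for cond, p in proportions.items()}
    if sum(counts.values()) != n_trials:
        raise ValueError("proportions do not split n_trials into whole trials")
    trials = [cond for cond, n in counts.items() for _ in range(n)]
    random.Random(seed).shuffle(trials)  # per-participant seed gives a different order each time
    return trials

trials = make_trial_list(150, E1_PROPORTIONS, seed=1)
pause_after = list(range(40, len(trials), 40))  # 10 s pause after trials 40, 80 and 120
print(Counter(trials), pause_after)
```

Fixing the counts first and then shuffling guarantees exact balance (45/45/45/15) for every participant, which independent random draws per trial would not.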

During the experiment, the participants were shown textual notifications in the VR environment when the Leap could not detect the hand, or when the hand moved too fast for targets to be presented during its upward movement. Because participants reported that these notifications shifted their attention from the RT task to the hand movement, the notifications were excluded from the other experiments.

Experiment 2

As in E1, the participants moved their hand as described above, and the target was presented while the hand was moving upwards. However, the participants had to alternately move the hand to two different positions: the same place as in the previous experiment, and a place shifted to the left of the initial position (the "Behind hand" and "Hand-left" conditions, respectively). Importantly, the position of the target was the same in both of these conditions (see "Stimuli and targets" above). The only difference between these conditions was the position of the hand: in the critical condition ("Behind hand"), the hand was in front of the moving target, whereas in the control condition ("Hand-left"), the hand was shifted horizontally away from the area where the target was presented while, importantly, remaining on the same side of the visual field (Fig. 1B). Because the hand did not move in front of the target in the "Hand-left" condition, we expected RTs similar to those in the "Reflected" condition. The comparison between the trials where the target appeared behind the hand ("Behind hand") and the "Reflected" condition was the same as in E1, and we therefore expected similar findings.

A total of 154 trials per participant were measured, of which 10 trials had no targets and the rest were divided between the "Behind hand", "Hand-left", and "Reflected" conditions. The "Reflected" control condition had twice as many trials as each of the other conditions because the "Behind hand" and "Hand-left" targets both appeared on the same (left) side of the visual field; without this compensation, the participants would have been biased to search for targets on the left side.
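As a check on the E2 design, assume, as the text states, that the "Reflected" condition received twice as many trials as each of the other two conditions, and that the "Behind hand" and "Hand-left" targets fall on the same side of the visual field. The 144 target trials then split as below, which equalizes the number of targets on the two sides. These per-condition counts are derived here, not reported in the text.

```python
N_TRIALS = 154
N_NO_TARGET = 10
# Relative weights: "Reflected" doubled, as stated in the text
WEIGHTS = {"Behind hand": 1, "Hand-left": 1, "Reflected": 2}

n_with_target = N_TRIALS - N_NO_TARGET          # 144 target trials
unit = n_with_target // sum(WEIGHTS.values())   # 36 trials per weight unit
allocation = {cond: w * unit for cond, w in WEIGHTS.items()}

# Assumption: "Behind hand" and "Hand-left" targets share one side, "Reflected" targets the other
left_side = allocation["Behind hand"] + allocation["Hand-left"]
right_side = allocation["Reflected"]
print(allocation, left_side, right_side)
```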

Unlike in E1, most of the textual notifications were not shown to the participants but were instead displayed to the experimenter on a separate screen. When the Leap could not detect the hand for several consecutive trials, the participants were verbally instructed on how to improve their hand movement.

Experiment 3

The experiment followed procedures similar to those of E1, except for the target. To differentiate the efference copy theory from the active inference account, the target now changed color instead of moving vertically (see "Stimuli and targets" above). Altogether, 175 trials per participant were collected, of which 10 trials had no targets and the rest were equally divided between the "Behind hand", "Reflected", and random conditions.

Debriefing

All participants were debriefed after the end of the experiment. No participant reported perceiving any pattern in the distribution of the targets; in other words, none of the participants indicated awareness that some targets were presented behind the hand.

Data preprocessing

Data preprocessing and analysis were performed with R (version 3.1.2; R Core Team, 2015). First, trials with RTs shorter than 100 ms, the minimum time needed for physiological processes such as stimulus perception (Luce, 1986), were eliminated. Next, to remove spurious RTs, we excluded trials whose RT deviated from the median of the participant’s RTs by more than two median absolute deviations.
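The two RT filters can be sketched as a per-participant function. This is an illustrative Python translation, not the authors' R code, and `filter_rts` is a hypothetical name. One detail the text leaves open is whether the median absolute deviation was scaled: R's `mad()` multiplies by 1.4826 by default, so `mad_scale` is exposed as a parameter (1.0 gives the raw MAD).

```python
from statistics import median

def filter_rts(rts_ms, min_rt_ms=100.0, n_mads=2.0, mad_scale=1.0):
    """Apply the two RT filters to one participant's RTs (hypothetical helper)."""
    # Step 1: drop responses faster than 100 ms
    kept = [rt for rt in rts_ms if rt >= min_rt_ms]
    # Step 2: drop RTs more than n_mads median absolute deviations from the participant's median
    med = median(kept)
    mad = mad_scale * median(abs(rt - med) for rt in kept)
    return [rt for rt in kept if abs(rt - med) <= n_mads * mad]

rts = [85, 410, 420, 430, 440, 450, 460, 900]  # made-up RTs in ms
raw_mad = filter_rts(rts)
r_style_mad = filter_rts(rts, mad_scale=1.4826)
print(raw_mad, r_style_mad)
```

In this made-up example, both variants drop the 85 ms anticipation and the 900 ms outlier; with real data the scaled and unscaled MAD can retain different trials.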

Due to the natural variation of hand movements and the horizontal oscillation of the spheres, the targets of different conditions were not guaranteed to lie at equal distances from the fixation point, nor to have equal spatial distributions around the mean target position within each condition.

To ensure that RTs to targets in different conditions were comparable, we included in the further analysis only trials in which the target was located within the largest spatial area common to all conditions. Specifically, across conditions, we took the smallest of the maximum x- and y-coordinates and the largest of the minimum x- and y-coordinates, and included the targets within the resulting rectangular areas on both sides of the fixation point. In other words, the targets of different conditions were trimmed to a maximal common rectangular area to control for the effect of distance from the fixation point.
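The trimming step can be sketched as follows, with hypothetical function names (`common_bounds`, `trim`). The sketch assumes that the left and right sides of the fixation point are compared by taking the absolute horizontal distance from fixation, so that the two mirrored rectangles share the same bounds; the text does not specify this detail. If the conditions do not overlap, the bounds are empty and no targets survive.

```python
def common_bounds(targets_by_condition):
    """Largest rectangle common to all conditions, as (x_lo, x_hi, y_lo, y_hi) in degrees."""
    boxes = []
    for points in targets_by_condition.values():
        xs = [abs(x) for x, _ in points]  # assumption: distance from the vertical midline
        ys = [y for _, y in points]
        boxes.append((min(xs), max(xs), min(ys), max(ys)))
    x_lo = max(b[0] for b in boxes)  # largest of the per-condition minima
    x_hi = min(b[1] for b in boxes)  # smallest of the per-condition maxima
    y_lo = max(b[2] for b in boxes)
    y_hi = min(b[3] for b in boxes)
    return x_lo, x_hi, y_lo, y_hi

def trim(targets_by_condition):
    """Keep only targets inside the common rectangle (mirrored on both sides of fixation)."""
    x_lo, x_hi, y_lo, y_hi = common_bounds(targets_by_condition)
    return {cond: [(x, y) for x, y in points if x_lo <= abs(x) <= x_hi and y_lo <= y <= y_hi]
            for cond, points in targets_by_condition.items()}

# Made-up target positions (degrees relative to fixation)
targets = {
    "Behind hand": [(-4.0, 1.0), (-6.0, 2.0), (-9.0, 5.0)],
    "Reflected": [(3.0, 0.0), (5.0, 2.0), (7.0, 3.0)],
}
trimmed = trim(targets)
print(common_bounds(targets), trimmed)
```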

Taken together, a mean of 24% of trials were rejected in E1 (27% from the experimental condition, 22% from the control condition), a mean of 32% of trials in E2 (30% from the experimental condition, 34% from the "Hand-left" condition, and 32% from the "Reflected" condition), and a mean of 35% of trials in E3 (34% and 35% from the experimental and control conditions, respectively). More than half of the rejected trials were rejected by the trimming procedure. When we repeated the analysis without any trimming, the results remained unchanged.

Finally, participants with fewer than 10 trials in any condition were excluded from the analysis. In addition, in E3 we removed one participant whose average RT was longer than 800 ms (the results were the same when this participant was included in the final analysis). In total, data from one participant each in E1 and E2 and from five participants in E3 were excluded from further analysis; hence, the final analyses included 15, 14, and 24 participants for E1, E2, and E3, respectively.
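The participant-level exclusion rules translate into a short filter. The function name `eligible_participants` and the data layout (a dictionary of retained trial counts per condition) are hypothetical; the thresholds of 10 trials and 800 ms come from the text, and the RT cutoff was applied in E3 only.

```python
MIN_TRIALS_PER_CONDITION = 10  # from the text
MAX_MEAN_RT_MS = 800.0         # from the text (applied in E3 only)

def eligible_participants(trial_counts, mean_rt_ms=None, max_mean_rt_ms=None,
                          min_trials=MIN_TRIALS_PER_CONDITION):
    """IDs of participants who pass the exclusion rules (hypothetical helper)."""
    keep = []
    for pid, counts in trial_counts.items():
        if any(n < min_trials for n in counts.values()):
            continue  # fewer than 10 retained trials in at least one condition
        if max_mean_rt_ms is not None and mean_rt_ms[pid] > max_mean_rt_ms:
            continue  # average RT above the cutoff
        keep.append(pid)
    return keep

# Made-up retained-trial counts and mean RTs
counts = {
    "p1": {"Behind hand": 12, "Reflected": 15},
    "p2": {"Behind hand": 9, "Reflected": 20},
    "p3": {"Behind hand": 14, "Reflected": 13},
}
mean_rts = {"p1": 520.0, "p2": 480.0, "p3": 830.0}
e1_style = eligible_participants(counts)
e3_style = eligible_participants(counts, mean_rts, MAX_MEAN_RT_MS)
print(e1_style, e3_style)
```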

Statistical analysis

For statistical testing, we used one-tailed paired t-tests to test for mean differences in RT between conditions. The tests were one-tailed because we had a specific hypothesis regarding the direction of the effect. As E2 had three conditions, a within-subjects analysis of variance (ANOVA) was first used to test for the effect of condition on RT before proceeding with the t-tests. Welch’s t-test was used to compare the RTs in the control ("Reflected") conditions across experiments.
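The test statistics named above can be computed with the standard library, as sketched below with hypothetical function names and made-up RTs. For P values, SciPy's `scipy.stats.ttest_rel(a, b, alternative="greater")` (SciPy 1.6 or later) gives the one-tailed paired test, and `scipy.stats.ttest_ind(a, b, equal_var=False)` gives Welch's test. The paper does not state how Cohen's d was computed; the reported values are smaller than t/√n (for E1, 2.62/√15 ≈ 0.68 versus d = 0.23), so the sketch assumes a standardizer based on the condition SDs (d_av).

```python
import math
from statistics import mean, stdev

def paired_t(behind, control):
    """Paired t statistic and degrees of freedom for per-participant mean RTs."""
    diffs = [b - c for b, c in zip(behind, control)]
    n = len(diffs)
    t = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t, n - 1

def cohens_d_av(behind, control):
    """Mean difference divided by the average of the two condition SDs (assumed standardizer)."""
    return (mean(behind) - mean(control)) / ((stdev(behind) + stdev(control)) / 2)

def welch_t(a, b):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom for two independent groups."""
    va, vb = stdev(a) ** 2 / len(a), stdev(b) ** 2 / len(b)
    t = (mean(a) - mean(b)) / math.sqrt(va + vb)
    df = (va + vb) ** 2 / (va ** 2 / (len(a) - 1) + vb ** 2 / (len(b) - 1))
    return t, df

# Made-up per-participant mean RTs (ms); not data from this study
behind = [510, 530, 495, 560, 520]
control = [500, 515, 490, 548, 512]
t, df = paired_t(behind, control)  # one-tailed: compare t with the upper-tail critical value
d = cohens_d_av(behind, control)
print(round(t, 2), df, round(d, 2))
```

Welch's version is used for the between-experiment comparison because the control groups come from different participants and need not have equal variances.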

Results

Experiment 1

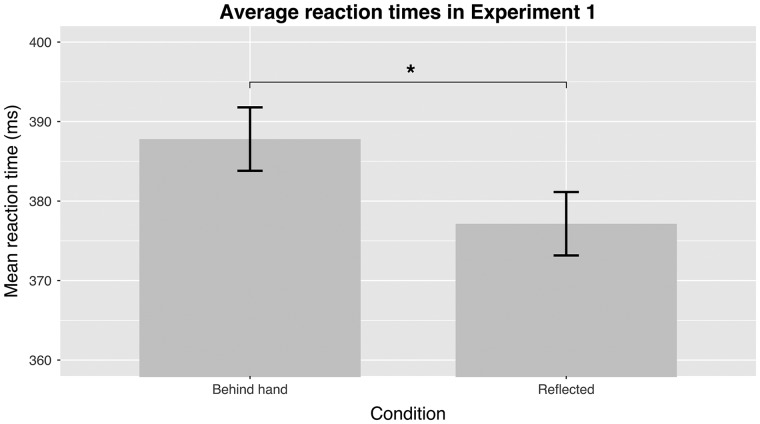

VR technology allowed us to investigate the hypothesis that the brain suppresses movement information in the particular area of the visual field that should have been covered by the hand at that moment. We observed significantly slower RTs for targets presented behind the moving (and invisible) hand compared to the control targets (mean difference 10 ms; t(14) = 2.62, P = 0.010, d = 0.23; Fig. 2).

Figure 2.

The mean RTs in Experiment 1 for both conditions. The participants had to react as fast as possible to moving targets either in an area currently covered by the (invisible) hand (“Behind hand”) or in a symmetric area on the other side of the visual field (“Reflected”). The RTs in the experimental condition (“Behind hand”) were slower than in the control condition (“Reflected”). Error bars in Figs 2–4 show within-participant 95% confidence intervals (Cousineau, 2005).
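The within-participant intervals of Cousineau (2005) remove each participant's overall RT level before the interval is computed. A minimal sketch with hypothetical function names and made-up RTs: each value is shifted by the participant's mean and re-centered on the grand mean, and the interval half-width is then computed per condition. The critical t value must be supplied by the caller, e.g. from `scipy.stats.t.ppf(0.975, n - 1)`.

```python
import math
from statistics import mean, stdev

def cousineau_normalize(rts):
    """rts: dict participant -> dict condition -> mean RT (ms). Returns normalized values."""
    grand_mean = mean(v for conds in rts.values() for v in conds.values())
    normalized = {}
    for pid, conds in rts.items():
        participant_mean = mean(conds.values())
        # Remove the participant's overall offset, keep the grand mean as the common level
        normalized[pid] = {c: v - participant_mean + grand_mean for c, v in conds.items()}
    return normalized

def ci_halfwidth(values, t_crit):
    """Half-width of a t-based confidence interval; t_crit is supplied by the caller."""
    return t_crit * stdev(values) / math.sqrt(len(values))

# Made-up per-participant mean RTs (ms)
rts = {
    "p1": {"Behind hand": 520, "Reflected": 500},
    "p2": {"Behind hand": 615, "Reflected": 600},
    "p3": {"Behind hand": 462, "Reflected": 450},
}
norm = cousineau_normalize(rts)
t_crit = 4.303  # two-sided 95% critical value for df = 2
print(ci_halfwidth([c["Reflected"] for c in norm.values()], t_crit))
```

Because large between-participant differences in overall speed are removed, the normalized intervals are much narrower than raw ones and reflect the within-participant comparison that the paired tests evaluate.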

Experiment 2

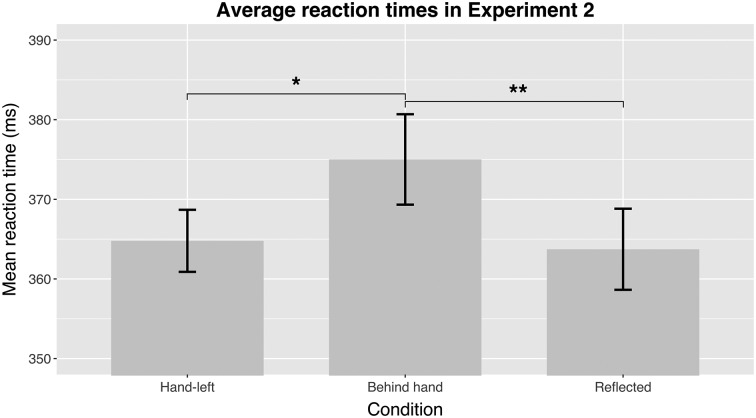

In Experiment 2, we measured an additional control condition to test the spatial specificity of visual attenuation. Whereas in Experiment 1 the targets and the control targets were in different visual hemifields, in Experiment 2 the additional control targets were on the same side of the visual field as the experimental targets. We found a statistically significant effect of condition on RT [F(2, 13) = 4.58, P = 0.02]. Post hoc analysis revealed a significant difference between the RTs to targets behind the hand and to targets in the "Reflected" control condition [mean difference 10 ms; t(13) = 3.65, P = 0.001, d = 0.23], confirming the results of the first experiment. Next, we observed a significant difference between the RTs in the "Behind hand" and "Hand-left" conditions [mean difference 10.4 ms; t(13) = 2.53, P = 0.013, d = 0.20]. We observed no significant difference between the two control conditions [mean difference <1 ms; t(13) = 0.24, P = 0.812, d = 0.03; Fig. 3].

Figure 3.

The mean RTs in Experiment 2 for all conditions. In the experimental condition (“Behind hand”), the moving targets were presented behind the (invisible) hand. In the additional control condition, the hand was shifted to the left, but the target was still presented as if the hand were in the original (right) position (“Hand-left”). The “Reflected” condition is the same as in Experiment 1.

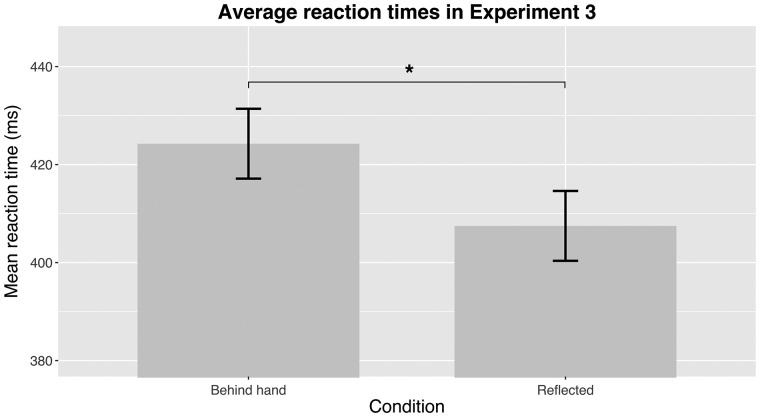

Experiment 3

The first two experiments demonstrated that visual movement signals coinciding with the movement of one’s own hand are attenuated. However, these experiments cannot differentiate between the two explanatory accounts of this effect (see section “Discussion” below). In Experiment 3, the target of the visual search was an object that changed color. We observed significantly slower RTs when color-changing targets were presented behind the hand than in the control condition [mean difference 17 ms; t(23) = 2.15, P = 0.021, d = 0.14; Fig. 4].

Figure 4.

The mean RTs in Experiment 3. The target changed color during the hand movement, and participants were required to press a button when they noticed the change. In the "Behind hand" condition, targets were presented in the area where the (invisible) hand was moving, whereas in the control condition ("Reflected") the targets were presented in an area not covered by the (invisible) hand.

Although RTs in the control ("Reflected") condition were numerically longer in Experiment 3 than in Experiments 1 and 2, the control-condition RTs did not differ significantly between experiments (Welch’s t-tests, all P > 0.19).

Discussion

Our results show that the brain attenuates visual perception in the part of the visual field where the hand movement is predicted to occur. We used novel VR and hand tracking devices to allow the experimental participants to see objects in the area that should have been covered by the moving hand at that moment. We achieved this by making the hand invisible in the virtual environment. We conducted three experiments where we monitored the hand movement and presented targets either behind the hand or away from it. By measuring the RTs in a visual search task, we demonstrated that RTs to the targets behind the (invisible) hand were slower than in the control conditions. Importantly, such attenuation happened even when the target changed color instead of changing the direction of movement (Experiment 3). These findings provide direct support for the active inference account of sensory attenuation.

Sensory attenuation has been observed across the animal kingdom and in different sensory modalities. When the limbs are moving they generate prominent somatosensory, visual, and (less often) auditory consequences. Key studies investigating the attenuation of self-generated sensory effects of limbs have been conducted within the somatosensory modality (e.g. Blakemore et al., 1998; Shergill et al., 2003). This is understandable as it has been technologically feasible to manipulate the predictability of the somatosensory consequences of movement. However, given that primates are highly visual animals, it is also important to study the sensory attenuation of limb movement within the visual modality.

There have been some prior studies of the sensory attenuation of self-generated visual stimuli. In these studies, the self-generated movement is a voluntary button press and the sensory consequence is an arbitrary visual stimulus paired with the button press (Cardoso-Leite et al., 2010; Hughes and Waszak, 2011; Stenner et al., 2014). With such experimental paradigms, it has been shown that the attenuation of the perception of self-generated stimuli is not caused by a response bias of the subjects; rather, the anticipation of the sensory consequences appears to reduce visual sensitivity (Cardoso-Leite et al., 2010). However, none of the previous studies have investigated the attenuation of visual sensation caused by the movement of the subject’s own limbs. The present work extends the investigation of sensory attenuation to self-generated limb movements.

In the first experiment, the experimental targets appeared behind the (invisible) hand on the left side of the field of view, and the RTs were compared with those to targets presented at the symmetrically reflected location in the right field of view. We observed slower RTs to the moving targets behind the (invisible) hand. In the second experiment, which included an additional control condition, we again observed slower RTs to the targets behind the moving hand compared with the control conditions on both the left and the right side of the field of view. These results suggest that the sensorimotor system of the human brain attenuates the visual motion of self-generated limb movements. Because in the first two experiments the features of the target directly coincided with the hand movement (both the hand and the target were moving, in the same direction and at roughly the same speed), these results could, in principle, be explained by the efference copy theory (e.g. von Holst and Mittelstaedt, 1950; Blakemore et al., 1998; Clark, 2015). According to this account, the brain uses a copy of the motor commands ("efference copy") to subtract the predicted sensory consequences from the actual sensory input.

However, as others have noted, this classical explanation based on the efference copy has several shortcomings (Brown et al., 2013; Clark, 2015; van Doorn et al., 2014, 2015). Most importantly, several previous experimental findings conflict with the efference copy theory. For example, the attenuation of self-generated tickle sensations persists even when the movement trajectory is unpredictably perturbed (van Doorn et al., 2015). In addition, several studies have demonstrated sensory attenuation even before the movement has started (Bays et al., 2006; Voss et al., 2008). These results are difficult to reconcile with the efference copy theory (Brown et al., 2013; Clark, 2015). In our third experiment, the target was defined by a color change. According to the efference copy theory, such a color change should not be attenuated, because color is unrelated to the motor commands of the hand movement. Nevertheless, we found a clear sensory attenuation effect: RTs were slower for color changes appearing where the hand was predicted to be.

This result is in line with the active inference account. This framework also predicts attenuation of color processing, because sensory precision in general is reduced during movement (Friston, 2010; Hohwy, 2013; Clark, 2015). According to this explanation, sensory attenuation occurs because attention is withdrawn from the sensory consequences of the subject’s own movement. Within the framework of active inference, this withdrawal of attention is necessary for movement to be elicited (Brown et al., 2013; Hohwy, 2013; Clark, 2015). The visual search paradigm used in the present study allowed us to investigate the relationship between sensory attenuation and attention directly. Because the technologies used are relatively inexpensive, the current experimental setup could also be used to further test the links between attention, sensory attenuation, and agency, even in clinical populations.
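The two accounts make different predictions for the color targets of Experiment 3. The toy model below illustrates this difference. It is our own illustrative simplification, not a model fitted to the data or taken from the cited work. A visual input is represented as two separate feature signals, motion and color. The efference-copy account subtracts a motor-predicted signal from the motion channel only, on the assumption that the motor command carries no information about color. The precision account scales every channel at the hand's predicted location by a single gain below 1. The class and function names, the unit signal strengths, and the gain value of 0.6 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Features:
    motion: float  # strength of the motion signal at one location (arbitrary units)
    color: float   # strength of the color-change signal at the same location


def efference_copy(sensed: Features, predicted_motion: float) -> Features:
    """Toy efference-copy account: subtract the motor-predicted motion signal.

    Color passes through unchanged because (by assumption) the motor command
    predicts nothing about color.
    """
    return Features(motion=max(sensed.motion - predicted_motion, 0.0),
                    color=sensed.color)


def precision_weighting(sensed: Features, gain: float) -> Features:
    """Toy active-inference account: scale every feature channel at the hand's
    predicted location by one precision gain (gain < 1 = attention withdrawn)."""
    return Features(motion=sensed.motion * gain, color=sensed.color * gain)


# Experiments 1-2 caricature: a target moving like the hand.
moving_target = Features(motion=1.0, color=0.0)
ec_moving = efference_copy(moving_target, predicted_motion=1.0)
pw_moving = precision_weighting(moving_target, gain=0.6)

# Experiment 3 caricature: a pure color change behind the hand.
color_target = Features(motion=0.0, color=1.0)
ec_color = efference_copy(color_target, predicted_motion=1.0)
pw_color = precision_weighting(color_target, gain=0.6)
```

Under these assumptions, both accounts weaken a target that moves like the hand. Only precision weighting weakens a pure color change, which is the pattern observed in Experiment 3.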

Limitations of the present study and further questions

The novel VR headsets used in the present study had the drawback that we could not control for the effect of eye movements. In other words, there was no technical guarantee that the participants maintained fixation throughout the experiment. To mitigate problems stemming from eye movements, we always presented the same number of targets on both sides of the visual field, so that participants had no incentive to favor one side. Furthermore, the participants were explicitly instructed to keep their eyes on the fixation point, and during debriefing all of them reported that they had done so. Finally, in Experiment 2 the stimuli for the conditions "behind hand" and "hand left" were presented at the same physical positions. Eye movements therefore cannot explain the significant differences in RTs between these conditions.

In addition, RTs are, in general, not the most informative measure of perception. Future studies should therefore go beyond the visual search paradigm used here and test visual perception directly. For example, one could measure sensitivity to low-contrast stimuli (e.g. Burr et al., 1982) and determine whether stimuli are harder to detect when they are currently behind the invisible hand.

One could argue that the effects of active inference should manifest when an active movement condition is compared with a passive condition in which the participants do not move at all. Slower RTs to visual stimuli in the movement condition than in the no-movement condition might be considered trivial, because such an effect could be attributed to simple dual-task interference (e.g. Pashler, 1994). In principle, however, active movement might always be associated with slower RTs for two reasons. One is dual-task interference. The other is that the brain may indeed need to downregulate visual precision whenever self-generated movement occurs within the visual field. The VR setup used here could be used to address these questions in future work.

Finally, an intriguing line of research shows that visual perception is “altered” near the hands (for review, see Goodhew et al., 2015). Most of these studies have used static hand placements, but some recent experiments (e.g. Festman et al., 2013) have used moving hands, as in our study. A direct comparison with this research tradition is further complicated because in our current work the target was “behind” the invisible hand. Further research will need to investigate directly how our current results relate to this line of research. In any case, it is conceivable that sensory attenuation directly contributes to the altered perception near the hands.

Conclusions

We conducted three novel VR experiments with human participants to test the hypothesis that the brain actively attenuates visual perception in the area of the visual field where hand movement is predicted to occur. In a visual search task, we showed that targets behind the moving hand were processed more slowly than other targets. These results provide the first experimental evidence that the visual attenuation of the subject’s own movement is in line with the active inference account. More generally, the present results demonstrate that novel VR tools open up exciting new avenues of research.

Acknowledgements

We would like to thank R. Vicente for help and discussions. We are indebted to D. Nattkemper, R. Rutiku, C.M. Schwiedrzik, A.W. Tammsaar, and K. Aare for reading and commenting on previous versions of the manuscript. We would also like to thank J. Hohwy and two anonymous reviewers, whose comments substantially improved the manuscript. Data are available upon request.

Funding

The work was supported by PUT438 and IUT20-40 of the Estonian Research Council. The authors declare no competing financial interests.

Conflict of interest statement. None declared.

References

- Bays PM, Flanagan JR, Wolpert DM. Attenuation of self-generated tactile sensations is predictive, not postdictive. PLoS Biol 2006;4:e28.

- Blakemore SJ, Wolpert DM, Frith CD. Central cancellation of self-produced tickle sensation. Nat Neurosci 1998;1:635–40.

- Brown H, Adams RA, Parees I. et al. Active inference, sensory attenuation and illusions. Cogn Process 2013;14:411–27.

- Burr DC, Holt J, Johnstone JR. et al. Selective depression of motion sensitivity during saccades. J Physiol 1982;333:1–15.

- Cardoso-Leite P, Mamassian P, Schütz-Bosbach S. et al. A new look at sensory attenuation. Action-effect anticipation affects sensitivity, not response bias. Psychol Sci 2010;21:1740–45.

- Clark A. Surfing Uncertainty: Prediction, Action, and the Embodied Mind. Oxford: Oxford University Press, 2015.

- Cousineau D. Confidence intervals in within-subject designs: A simpler solution to Loftus and Masson's method. Tutorials in Quantitative Methods for Psychology 2005;1:42–5.

- Festman Y, Adam JJ, Pratt J. et al. Continuous hand movement induces a far-hand bias in attentional priority. Atten Percept Psychophys 2013;75:644–49.

- Friston K. The free-energy principle: a unified brain theory? Nat Rev Neurosci 2010;11:127–38.

- Frith CD. The Cognitive Neuropsychology of Schizophrenia. Hove: Erlbaum, 1992.

- Goodhew SC, Edwards M, Ferber S. et al. Altered visual perception near the hands: a critical review of attentional and neurophysiological models. Neurosci Biobehav Rev 2015;55:223–33.

- Hohwy J. The Predictive Mind. Oxford: Oxford University Press, 2013.

- Holst E, Mittelstaedt H. Das Reafferenzprinzip. Naturwissenschaften 1950;37:464–76.

- Hughes G, Waszak F. ERP correlates of action effect prediction and visual sensory attenuation in voluntary action. Neuroimage 2011;56:1632–40.

- Juravle G, Spence C. Juggling reveals a decisional component to tactile suppression. Exp Brain Res 2011;213:87–97.

- Juravle G, Deubel H, Tan HZ. et al. Changes in tactile sensitivity over the time-course of a goal-directed movement. Behav Brain Res 2010;208:391–401.

- Luce RD. Response Times: Their Role in Inferring Elementary Mental Organization (No. 8). New York: Oxford University Press, 1986.

- Pashler H. Dual-task interference in simple tasks: data and theory. Psychol Bull 1994;116:220–44.

- R Core Team. R: A Language and Environment for Statistical Computing. Vienna, Austria: R Foundation for Statistical Computing, 2015. URL https://www.R-project.org/.

- Shergill SS, Bays PM, Frith CD. et al. Two eyes for an eye: the neuroscience of force escalation. Science 2003;301:187.

- Sperry RW. Neural basis of the spontaneous optokinetic response produced by visual inversion. J Comp Physiol Psychol 1950;43:482–9.

- Stenner MP, Bauer M, Haggard P. et al. Enhanced alpha-oscillations in visual cortex during anticipation of self-generated visual stimulation. J Cogn Neurosci 2014;26:2540–51.

- Van Doorn G, Hohwy J, Symmons M. Can you tickle yourself if you swap bodies with someone else? Conscious Cogn 2014;23:1–11.

- Van Doorn G, Paton B, Howell J. et al. Attenuated self-tickle sensation even under trajectory perturbation. Conscious Cogn 2015;36:147–53.

- Von Helmholtz H. Treatise on Physiological Optics, Vol. III. New York: Dover, 1867.

- Voss M, Ingram JN, Wolpert DM. et al. Mere expectation to move causes attenuation of sensory signals. PLoS One 2008;3:e2866.

- Weichert F, Bachmann D, Rudak B. et al. Analysis of the accuracy and robustness of the leap motion controller. Sensors 2013;13:6380–93.