Abstract

Over the last 30 years, our understanding of the neurocognitive bases of consciousness has improved, mostly through studies employing vision. While studying consciousness in the visual modality presents clear advantages, we believe that a comprehensive scientific account of subjective experience must not neglect other exteroceptive and interoceptive signals as well as the role of multisensory interactions for perceptual and self-consciousness. Here, we briefly review four distinct lines of work which converge in documenting how multisensory signals are processed across several levels and contents of consciousness. Namely, we describe how multisensory interactions occur when consciousness is prevented because of perceptual manipulations (i.e. subliminal stimuli) or because of low vigilance states (i.e. sleep, anesthesia), how interactions between exteroceptive and interoceptive signals give rise to bodily self-consciousness, and how multisensory signals are combined to form metacognitive judgments. By describing the interactions between multisensory signals at the perceptual, cognitive, and metacognitive levels, we illustrate how stepping out of the visual comfort zone may help in deriving refined accounts of consciousness, and may allow cancelling out idiosyncrasies of each sense to delineate supramodal mechanisms involved during consciousness.

Keywords: contents of consciousness, metacognition, unconscious processing, states of consciousness, self

Introduction

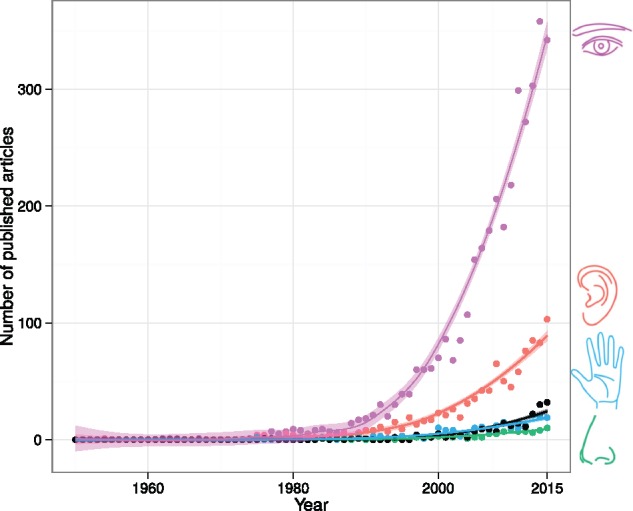

Cognitive neurosciences largely focus on vision, and the field of consciousness studies is no exception. Counting the number of peer-reviewed articles published per year with the keyword “consciousness” or “awareness” in the visual, auditory, tactile, and olfactory modalities, one can note two trends. First and foremost, starting from the 1990s, the publication rate increases exponentially in all modalities, which attests to the growing importance of consciousness studies in cognitive neurosciences over the last 30 years (Fig. 1). In addition, looking at the publication rate across modalities, one cannot but notice the overwhelming dominance of visual studies compared with all other modalities: in 2015, there were three times more studies of visual consciousness compared with auditory consciousness, and around 12 times more compared with studies of olfactory and tactile consciousness put together. We see two main reasons for this “visual bias” in consciousness research. The first one belongs to recent history: the pioneers who initiated the scientific study of subjective experience in the 1990s relied on the relatively comprehensive understanding of the visual system as a starting point to the quest for consciousness, assuming that other forms of consciousness may have the same properties: “We made the plausible assumption that all forms of consciousness (e.g. seeing, thinking, and pain) employ, at bottom, rather similar mechanisms and that if one form were understood, it would be much easier to tackle the others. We then made the personal choice of the mammalian visual system as the most promising one for an experimental attack. This choice means that fascinating aspects of the subject, such as volition, intentionality, and self-consciousness, to say nothing of the problem of qualia, have had to be left on one side” (Crick and Koch 1990b).

Figure 1.

Number of published articles per year containing the keywords “consciousness” or “awareness” together with “vision” or “visual” (purple), “audition” or “auditory” (red), “touch” or “tactile” (blue), “olfaction” or “olfactory” (green), and “multisensory” or “multimodal” (black). Results were obtained from PubMed (www.ncbi.nlm.nih.gov/pubmed). Fitting was obtained using local regression with R (2016) and the ggplot2 package (Wickham 2009).

The second reason is pragmatic: researchers can rely on a vast arsenal of experimental paradigms allowing the display of visual stimuli below perceptual threshold (Kim and Blake 2005; Dubois and Faivre 2014), while this remains an experimental challenge in all other modalities [but see exceptions like D’Amour and Harris (2014) for vibrotactile masking; Gutschalk et al. (2008); Dupoux et al. (2008); Faivre et al. (2014), for auditory masking; Sela and Sobel (2010) for olfactory masking, Stevenson and Mahmut (2013), for olfactory rivalry, or Zhou et al. (2010) for binaral rivalry]. As most research on perceptual consciousness relies on a contrastive approach (Baars 1994), the capacity to present stimuli below the threshold for consciousness is crucial, and the difficulty of doing so in nonvisual domains can be an impediment to research. We acknowledge that this difficulty could simply be due to methodological reasons, with less effort put into the design of auditory or tactile masking compared with visual masking. However, it could also be due to physiological differences between senses. One possibility is that the transition between conscious and unconscious states is gradual in vision, allowing for fine-grained estimations of unconscious processes, and more abrupt in nonvisual senses, so that subtle changes in the stimulation pattern map onto sudden variations in terms of conscious experience. The possibility that the limen of consciousness (i.e. the transition between unconscious and conscious states) is thinner for nonvisual senses warrants empirical exploration, as it may underlie different properties of consciousness for visual vs. nonvisual modalities.

In what follows, we summarize four lines of research focusing on the multisensory nature of consciousness. Namely, we review the evidence showing that signals from distinct sensory modalities interact even when they are not perceived consciously due to psychophysical manipulations affecting the content of consciousness (“Multisensory interactions below the perceptual threshold for consciousness” section), or due to variations in vigilance states decreasing the level of consciousness (“Multisensory interactions at low levels of consciousness” section). Multisensory interactions between subliminal stimuli or during low levels of consciousness challenge the view that consciousness is a prerequisite for multisensory processing. We then address the role of multisensory interactions in the formation of bodily self-consciousness (BSC), defined as the subjective experience of owning a body and perceiving the world from its point of view (“Multisensory interactions for bodily self-consciousness” section). Finally, we review how the metacognitive self, defined as a second-order monitoring of mental states, applies to different sensory modalities, and conjunctions of sensory modalities (“Supramodal properties of metacognition” section). We conclude by arguing that a multisensory study of consciousness may allow deepening our understanding of subjective experience beyond the idiosyncrasies of visual consciousness.

Multisensory Interactions below the Perceptual Threshold for Consciousness

Most researchers consider conscious experience to be multisensory in nature: for instance, it is difficult to appreciate our favorite dish while dissociating the visual, somatosensory, olfactory, auditory, and gustatory sensations evoked by it [this applies both to perception and mental imagery, see Spence and Deroy (2013)]. Despite its apparent tangibility, the actual nature of multisensory consciousness remains a topic of philosophical debate [see O’Callaghan (2017), for six differing ways in which conscious perceptual awareness may be multisensory], and some wonder if consciousness is indeed multisensory or rather a succession of unisensory experiences (Spence and Bayne 2014). As scientists, we need to assess how different sensory signals are bound together into a single unified object (i.e. object-unity), but also more globally to assess whether different objects from different senses fit into a single unified experience [i.e. phenomenal unity, see Bayne and Chalmers (2003); Deroy et al. (2014)]. Based on the apparent multisensory nature of conscious experiences, it has been argued that conscious processing plays a role in merging together inputs from different sensory modalities (Baars 2002). Yet, recent evidence suggests that cross-modal interactions exist in the absence of consciousness [reviewed in Deroy et al. (2016)]. The majority of studies contributing to this recent reevaluation of cross-modal consciousness have focused on cross-modal interactions occurring outside of visual consciousness, as visual stimuli can be easily presented subliminally (Kim and Blake 2005; Dubois and Faivre 2014). For example, several sensory modalities can interact with a visual stimulus rendered invisible by interocular suppression [binocular rivalry: Alais and Blake (2005); or continuous flash suppression: Tsuchiya and Koch (2005)]. Cross-modal interactions were demonstrated between subliminal visual stimuli and audition (Conrad et al. 2010; Guzman-Martinez et al. 2012; Alsius and Munhall 2013; Lunghi et al. 2014; Aller et al. 2015), touch (Lunghi et al. 2010; Lunghi and Morrone 2013; Lunghi and Alais 2013, 2015; Salomon et al. 2015), smell (Zhou et al. 2010), vestibular information (Salomon et al. 2015), and proprioception (Salomon et al. 2013). These studies have shown that cross-modal signals facilitate consciousness of a congruent visual stimulus, which enters consciousness earlier than under either visual-only stimulation or incongruent cross-modal stimulation. Moreover, in order for this “unconscious” cross-modal interaction to occur, the cross-modal signals need to be matched. Thus, when the features of a suppressed visual stimulus (e.g. orientation, spatial frequency, temporal frequency, and spatial location) match those of a suprathreshold stimulus in another modality, this may facilitate visual consciousness, indicating that the cross-modal enhancement of visual consciousness only occurs when the different sensory signals can be interpreted as arising from the same object. This suggests that these unconscious cross-modal interactions reflect a genuine perceptual effect, which is unlikely to be mediated by a cognitive association between the cross-modal signals. In fact, it has been shown (Lunghi and Alais 2013) that the orientation tuning of the interaction between vision and touch is narrower than the subjects’ cognitive categorization: the interaction between visuo-haptic stimuli with a mismatch of only 7° does not occur, despite the fact that observers cannot consciously recognize the visual and haptic stimuli as being different. These results suggest that signals from nonvisual modalities can interact with visual signals at early stages of visual processing, before the emergence of the conscious representation of the visual stimuli (Sterzer et al. 2014). Visual stimuli can also interact with subliminal tactile signals: a study by Arabzadeh et al. (2008) has reported that a dim visual flash is able to improve tactile discrimination thresholds, suggesting that visual signals can enhance subliminal tactile perception. A similar visuo-haptic interaction has been reported in a study showing that congruent tactile stimulation can improve visual discrimination thresholds (Van Der Groen et al. 2013). These studies indicate a tight interplay between visual and tactile consciousness. To our knowledge, genuine sensory cross-modal interactions with nonvisual subliminal auditory and olfactory signals have not yet been reported, even though unconscious integration of auditory and visual stimuli occurs after conscious associative learning (Faivre et al. 2014).

Taken together, these results show that different sensory modalities can contribute to unimodal consciousness, as cross-modal signals can enhance consciousness in a particular modality. What is the function of this cross-modal contribution to consciousness? We suggest that cross-modal interactions might facilitate consciousness of relevant sensory stimuli. Besides facilitating an efficient interaction with the external world under normal circumstances, this mechanism could also be particularly useful in conditions in which the unisensory information is weak or impaired, e.g. during development (when the resolution of the visual system is coarse) or in case of sensory damage.

Multisensory Interactions at Low Levels of Consciousness

The previous section has outlined how unconsciously processed stimuli from different sensory modalities interact, and affect the formation of perceptual consciousness. These findings pertain to situations in which perceptual signals are not consciously experienced during wakefulness, but a few studies show that multisensory interactions may also occur under reduced levels of consciousness. There are several states which are characterized by a decreased level of consciousness: a naturally recurring state occurring during sleep, a pharmacologically induced state under anesthesia, and a pathological state in the case of disorders of consciousness (DOC) (Laureys 2005; Bayne et al. 2016). Humans in low-level states of consciousness have closed eyes most of the time. Therefore, while visual paradigms may be highly appealing for studying consciousness under normal wakefulness, during altered states of consciousness nonvisual signals may be more suitable.

One of the earliest works examining interactions between different sensory modalities in states of diminished consciousness focused on the feasibility of multisensory associations during pharmacologically induced sleep (Beh and Barratt 1965). Specifically, it was shown that a tone can be successfully paired with an electrical pulse during continuous uninterrupted sleep – which as far as we know is the first evidence for multisensory interaction during human sleep. It took approximately 30 years until the study was revisited, this time during natural human sleep (Ikeda and Morotomi 1996). Again, a tone and an electrical stimulus were associated, yet only during discontinuous slow wave sleep (SWS) and not during Stage 2 sleep, implying that multisensory associations might be sleep stage dependent. To disentangle the influence of different sleep stages on multisensory interactions, a more recent study investigated auditory–olfactory trace conditioning during nonrapid eye movement (NREM) and rapid eye movement (REM) sleep (Arzi et al. 2012). Sleeping humans were able to associate a specific tone with a specific odor during both NREM and REM sleep. Intriguingly, although associative learning was evident in both sleep stages, retention of the association during wakefulness was observed only when stimuli were presented during NREM sleep, but was irretrievable when stimuli were presented only during REM sleep. This may suggest that although new links between sensory modalities can be created in natural NREM and REM sleep, access to this new information in a different state of consciousness is limited, and sleep stage dependent.

Multisensory associative learning has also been demonstrated in awake neonates (Stamps and Porges 1975; Crowell et al. 1976; Little et al. 1984) and in sleeping newborns (Fifer et al. 2010). Sleeping infants aged one to two days, presented with an eye movement conditioning procedure, learned to blink in response to a tone that predicted a puff of air (Fifer et al. 2010). Taken together, these findings suggest that despite the different nature of adult and newborn sleep (de Weerd and van den Bossche 2003; Ohayon et al. 2004), sleeping newborns can also rapidly learn to associate information from different sensory modalities.

A similar auditory–eye movement trace conditioning was used as an implicit tool to assess partially preserved conscious processing in DOC patients (Bekinschtein et al. 2009). Vegetative state (VS)/unresponsive wakefulness syndrome (UWS) and minimally conscious state (MCS) are DOC that can be acute and reversible or chronic and irreversible. VS/UWS is characterized by wakefulness without awareness, and no evidence of reproducible behavioral responses. In contrast, MCS is characterized by partial preservation of awareness, and reproducible though inconsistent responsiveness. Accurate diagnosis of consciousness in DOC has significant consequences on treatment and on end-of-life decisions (Giacino et al. 2002; Laureys 2005; Bayne et al. 2016). Some individuals diagnosed with VS or with MCS were able to associate a tone with an air puff – an ability that was found to be a good indicator for recovery of consciousness. Intriguingly, when the same paradigm was applied to subjects under the anesthetic agent propofol, no association between the tone and the air puff was observed (Bekinschtein et al. 2009), suggesting that multisensory trace conditioning may not occur under propofol anesthesia. This result is in line with previous findings showing that multisensory neurons during wakefulness become unimodal under propofol anesthesia (Ishizawa et al. 2016). That said, there is considerable evidence for multisensory interactions at the neuronal level under other anesthetics (Populin 2005; Stein and Stanford 2008; Cohen et al. 2011; Costa et al. 2016).

Put together, the few studies that investigated multisensory interactions at low levels of consciousness confirm that information from different sensory modalities can be associated unconsciously. Nevertheless, such unconscious interactions are only found under specific conditions and states of reduced consciousness, suggesting that the ability to associate stimuli from different modalities is probably state-specific.

Multisensory Interactions for Bodily Self-Consciousness

The studies we reviewed so far manipulated perceptual signals or examined cases in which consciousness was absent due to neurological damage or sleep. Although this line of work yields some important findings about consciousness and its neural correlates, it overlooks an important aspect of consciousness, namely the “Self,” the subject or “I” who is undergoing the perceptual experiences (Blanke et al. 2015; Faivre et al. 2015). Recent research on BSC has uncovered that the implicit and pre-reflective experience of the self is related to processing of bodily signals in multisensory brain systems [for reviews see Blanke (2012); Blanke et al. (2015); Ehrsson (2012); Gallagher (2000)]. Neurological conditions and experimental manipulations revealed that fundamental components of BSC, such as owning and identifying with a body (body ownership), and the experience of the self in space (self-location) are also based on the integration of multisensory signals [e.g. Ehrsson (2007); Lenggenhager et al. (2007)]. For example, tactile stimulation of the real hand/body coupled with spatially and temporally synchronous stroking of the viewed virtual hand/body gives rise to illusory ownership over the virtual hand [rubber hand illusion (RHI)] or body [full body illusion (FBI)] as measured by subjective responses as well as neural and physiological measurements [Botvinick and Cohen (1998); reviewed in Blanke (2012) and Kilteni et al. (2015)]. Such bodily illusions may impact perception, implying a tight link between the subject and the object of consciousness. A recent study based on the RHI revealed that perceptual consciousness and BSC are intricately linked, by showing that visual afterimages projected on a participant’s hand drifted laterally toward a rubber hand placed next to their own hand, but only when the rubber hand was embodied (Faivre et al. 2016).
These results suggest that vision is not only influenced by other sensory modalities, but is also self-grounded, mapped on a self-referential frame which stems from the integration of multisensory signals from the body.

Others have used sensorimotor correlations giving rise to the sense of agency to establish a sense of ownership over a hand (Sanchez-Vives et al. 2010; Tsakiris et al. 2010; Salomon et al. 2016) or a body (Slater et al. 2010; Banakou and Slater 2014). These findings suggest that BSC is a malleable and ongoing process in which multisensory and motor signals interact such that congruent information from different bodily senses forms a current spatio-temporal representation of the self [reviewed in Blanke (2012); Ehrsson (2012); Sanchez-Vives and Slater (2005)]. As BSC is thought to be implicit and pre-reflective, one would expect that it should not require consciousness of the underlying sensory signals. Indeed, it has recently been demonstrated that the FBI, based on visuo-tactile correspondences, can be elicited even when participants are completely unaware of the visual stimuli or the visuo-tactile correspondence (Salomon et al. 2016). This suggests that subliminal stimuli or unattended bodily signals may still contribute to the formation of BSC.

The above studies show that exteroceptive signals are fundamental in establishing the sense of self. In parallel, several theories have suggested a central role for interoceptive signals arising from respiratory and cardiac activity in BSC (Damasio 1999; Craig 2009; Seth 2013; Park and Tallon-Baudry 2014). Indeed, recent experimental work has linked interoceptive signals to BSC. For example, two experiments employed a paradigm similar to the RHI and FBI, but substituted the tactile stimulation with cardio-visual stimulation: when participants saw their hand/body flashing synchronously with their heartbeat, they reported illusory ownership over the seen limb/body compared with an asynchronous condition (Aspell et al. 2013; Suzuki et al. 2013). Furthermore, changes in the neural processing of heartbeats during the FBI (Park et al. 2016), as well as when facial stimuli are modulated by heartbeat (Sel et al. 2016), and learning of interoceptive motor tracking have been reported (Canales-Johnson et al. 2015), suggesting that interoceptive processing is tightly linked to BSC. Beyond their influence on BSC, recent work has shown that cardiac activity and related neural responses also affect visual consciousness, suggesting the possibility of interactions between processing of bodily signals for BSC and perceptual consciousness (Faivre et al. 2015). Notably, Park et al. (2014) have shown that spontaneous fluctuations of neural responses to cardiac events (heartbeat evoked responses) are predictive of stimulus visibility. Furthermore, visual stimuli presented synchronously with the heartbeat were found to be suppressed from consciousness through activity in the anterior insular cortex, suggesting that the perceptual consequences of interoceptive activity are suppressed from consciousness (Salomon et al. 2016). 
Taken together, these studies indicate that internal bodily processes interact with exteroceptive senses and have substantial impact on both our perceptual and bodily consciousness.

In summary, the sense of self is formed through the combination of multisensory information of interoceptive and exteroceptive origins at a pre-reflective level. While often neglected in classical perceptual consciousness studies, this fundamental multisensory representation of the organism is critical to our understanding of both bodily and perceptual consciousness and their associated neural states.

Supramodal Properties of Metacognition

As described above, the sense of self includes the feeling of owning a body, based on the integration of exteroceptive and interoceptive bodily signals. Another crucial aspect of mental life that relates to the self is our capacity to monitor our own percepts, thoughts, or memories (Koriat 2006; Fleming et al. 2012). This capacity, long known as a specific form of introspection and now referred to as metacognition, is measured by asking volunteers to perform a perceptual or memory task (first-order task), followed by a confidence judgment regarding their own performance [second-order task; Fleming and Lau (2014)]. In this operationalization, metacognitive accuracy is quantified as the capacity to adapt confidence according to first-order task performance, so that confidence is on average high after correct responses, and low if an error is detected. Metacognition has a crucial function in vision (e.g. confidence in spotting a flash of light), as well as in other nonvisual modalities (e.g. confidence in hearing a baby crying, or smelling a gas leak). As with the study of perceptual consciousness, the study of perceptual metacognition also suffers from a visual bias, most studies using single, isolated and almost exclusively visual stimuli. As a result, the properties of metacognition across senses remain poorly described. Notably, an open question is whether metacognitive processes involve central, domain-general neural mechanisms that are shared between all sensory modalities, and/or mechanisms that are specific to each sensory modality, such as direct read out from early sensory areas involved in the first-order task. Answering this question requires one to assess metacognition also for nonvisual stimuli. Recently, two studies met this challenge by examining perceptual metacognition in the auditory domain.
First, it was shown that metacognitive performance in an auditory pitch discrimination task correlated with that in a luminance discrimination task (note that correlations between tasks relying on vision or memory were not found, see Ais et al. 2016). These results are supported by another study showing that confidence estimates across a visual orientation discrimination task and an auditory pitch discrimination task can be compared as accurately as within two trials of the same task, suggesting that auditory and visual confidence estimates share a common format (de Gardelle et al. 2016). These similarities between auditory and visual metacognition were further supported by another study, in which metacognitive performances during auditory, visual, as well as tactile laterality discrimination tasks were found to correlate with one another (Faivre et al. 2016). Metacognitive performances in these unimodal conditions also correlated with bimodal metacognitive performance, where confidence estimates were to be made on the congruency between simultaneous auditory and visual signals. Modeling work further showed that confidence in audiovisual signals could be estimated from the joint distributions of auditory and visual signals, or based on a comparison of confidence estimates made on each signal as taken separately. In both cases, these models imply that confidence estimates are encoded with a supramodal format, independent from the sensory signals from which they are built. Jointly, these results suggest that perceptual metacognition involves mechanisms that are shared between modalities [see Deroy et al. (2016) for review].
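
The operationalization of metacognitive accuracy described above (confidence high after correct responses, low after errors) can be illustrated with a minimal Python sketch. It computes the area under the type 2 ROC curve, one common summary of how well confidence discriminates correct from incorrect responses; it is not necessarily the measure used in the studies cited here. The function name `type2_auroc` and the toy trial values are assumptions made for illustration, not data from any study.

```python
def type2_auroc(correct, confidence):
    """Area under the type 2 ROC curve.

    Equals the probability that a randomly chosen correct trial carries
    higher confidence than a randomly chosen error trial (ties count 0.5).
    0.5 means confidence carries no information about accuracy; 1.0 means
    confidence perfectly separates correct responses from errors.
    """
    conf_correct = [c for ok, c in zip(correct, confidence) if ok]
    conf_error = [c for ok, c in zip(correct, confidence) if not ok]
    if not conf_correct or not conf_error:
        raise ValueError("need at least one correct and one incorrect trial")

    wins = 0.0
    for c_ok in conf_correct:
        for c_err in conf_error:
            if c_ok > c_err:
                wins += 1.0
            elif c_ok == c_err:
                wins += 0.5
    return wins / (len(conf_correct) * len(conf_error))


if __name__ == "__main__":
    # Hypothetical trials: first-order accuracy (1 = correct) and a 1-4 confidence rating.
    accuracy = [1, 1, 0, 0]
    rating = [4, 2, 3, 1]
    print(type2_auroc(accuracy, rating))  # 0.75
```

Computing such an index separately for auditory, visual, and tactile tasks in the same participants, and then correlating the indices across tasks, is one way the cross-modal comparisons described above could be set up; note that this index does not correct for differences in first-order performance.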

General Discussion

The dominance of vision in consciousness studies may have led to an inadvertent confounding of what we know about visual consciousness and what consciousness is: subjective experience. Conscious experiences evoked by stimuli from different modalities share common features yet each kind of sensory consciousness also has particular qualities. For instance, while sensory perception appears continuous, the temporal sampling in olfaction is on the scale of seconds dictated by sniffing rate, while it is on the scale of hundreds of milliseconds in vision through eye movement, and is almost continuous in audition or other senses such as proprioception (Sela and Sobel 2010; Van Rullen et al. 2014). Another example among many is the role of attention and its link with consciousness, which is likely to vary across sensory modalities [for a special issue on this topic, see Tsuchiya and van Boxtel (2013)]. At the neural level, most accounts of consciousness assume that visual, tactile, auditory, or olfactory conscious experiences stem from the transduction of sensory signals of completely different nature (i.e. electromagnetic, mechanical, or chemical, respectively), followed by the processing of subsequent neural activity by a common mechanism referred to as a neural correlate of consciousness (NCC: Crick and Koch 1990a; Koch et al. 2016). Considerable progress has been made regarding the description of NCCs, mostly through the measure of brain activity associated with seen vs. unseen visual stimuli. Among the likely NCC candidates, some predominate, like the occurrence of long-range neural feedback from fronto-parietal networks to sensory areas (Dehaene and Changeux 2011), local feedback loops within sensory areas (Lamme and Roelfsema 2000), or the existence of networks that are intrinsically irreducible to independent subnetworks (Tononi et al. 2016).
If one accepts that a common mechanism enables consciousness in different modalities, an NCC candidate should apply to all senses, and not reflect idiosyncratic properties of visual consciousness only. While these limitations are now often recognized (Koch et al. 2016), a clear disambiguation of the processes that are idiosyncratic to visual consciousness from those related to consciousness in other modalities, or from supramodal processes, is central to advancing the field. One step forward in identifying NCCs that are not idiosyncratic to each sensory modality is to rely on a multisensory contrastive approach, whereby one contrasts simultaneously – with the same participants, paradigms, and measures – brain activity associated with conscious vs. unconscious processing in different senses. Within such a framework, a supramodal NCC may be defined as the functional intersection of all unisensory NCCs, and consists of the common mechanism that enables consciousness, irrespective of the specificities inherent to each sensory pathway.

Beyond independent subjective experiences in distinct sensory modalities, one may consider consciousness as a holistic experience made of numerous sensory signals bound together (Nagel 1971; Revonsuo 1999; Bayne and Chalmers 2003; Deroy et al. 2014; O’Callaghan 2017; but see Spence and Bayne 2014). Although often discussed in philosophy, this crucial aspect of phenomenology has remained outside of empirical focus until recently, with notable current theories stressing the importance of consciousness for information integration [reviewed in Mudrik et al. (2014)]. The results reviewed in “Multisensory interactions below the perceptual threshold for consciousness” and “Multisensory interactions at low levels of consciousness” sections clearly show that multisensory interactions occur in the complete absence of consciousness, which challenges the theoretical predictions that consciousness is a prerequisite for multisensory processing. Nevertheless, the existence of interactions between senses does not imply that two signals from distinct modalities are integrated into a bimodal representation. To test if unconscious processing allows bimodal signals to be processed as such, future studies should assess whether bimodal subliminal signals are subject to the classical laws of multisensory integration. In particular, future experiments should assess if the strength of multisensory integration increases as the signal from individual sensory stimuli decreases (i.e. inverse effectiveness), and if the combinations of two unimodal signals produce a bimodal response that is bigger than the summed individual responses (i.e. superadditivity).
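
The two criteria named above can be made concrete with a minimal Python sketch. Superadditivity follows the definition given in the text (a bimodal response larger than the sum of the unimodal responses). For inverse effectiveness, the sketch assumes that the "strength" of integration is measured by the percentage enhancement of the bimodal response over the best unimodal response, which is one widely used index but only one possible choice. All function names and response values are hypothetical and serve as illustration only; they are not data.

```python
def is_superadditive(resp_a, resp_v, resp_av):
    """True if the bimodal response exceeds the sum of the two unimodal responses."""
    return resp_av > resp_a + resp_v


def enhancement_index(resp_a, resp_v, resp_av):
    """Percentage gain of the bimodal response over the best unimodal response.

    Assumed index of integration strength: 100 * (AV - max(A, V)) / max(A, V).
    """
    best_unimodal = max(resp_a, resp_v)
    if best_unimodal <= 0:
        raise ValueError("the best unimodal response must be positive")
    return 100.0 * (resp_av - best_unimodal) / best_unimodal


def shows_inverse_effectiveness(conditions):
    """True if enhancement strictly shrinks as unimodal stimulation gets stronger.

    `conditions` is a list of (resp_a, resp_v, resp_av) tuples ordered from the
    weakest to the strongest unimodal stimulation.
    """
    gains = [enhancement_index(a, v, av) for a, v, av in conditions]
    return all(weaker > stronger for weaker, stronger in zip(gains, gains[1:]))


if __name__ == "__main__":
    # Made-up responses (arbitrary units), weakest stimulation first.
    conditions = [(1, 1, 4), (4, 4, 6), (10, 10, 11)]
    for a, v, av in conditions:
        print(is_superadditive(a, v, av), enhancement_index(a, v, av))
    print(shows_inverse_effectiveness(conditions))
```

In a subliminal paradigm, the response entered into such a computation could be any dependent measure obtained for unimodal and bimodal stimuli under matched conditions; the choice of measure is left open here.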

Besides perceptual consciousness, the results reviewed in “Multisensory interactions for bodily self-consciousness” and “Supramodal properties of metacognition” sections show that in addition to shaping perceptual experiences, multimodal interactions are also central for self-representations, as they give rise to the “I” or subject of experience (i.e. BSC), and can be processed to form a sense of confidence during the monitoring of one’s own mental states (i.e. metacognition). Thus, beyond overcoming the “visual bias” in the current literature, we argue that studying consciousness in different sensory modalities, and most importantly with combinations of sensory modalities, can reveal important aspects of perceptual consciousness and self-consciousness that would otherwise remain obscured.

Conflict of interest statement. None declared.

Acknowledgements

N.F. was an EPFL fellow co-funded by Marie Skłodowska-Curie. C.L. was funded by the project ECSPLAIN (European Research Council under the Seventh Framework Programme, FP7/2007-2013, grant agreement no. 338866). A.A. was a Blavatnik fellow and a Royal Society Kohn International Fellow. We thank the Association for the Scientific Study of Consciousness for travel funding, and we are grateful to Liad Mudrik, Lavi Secundo, Andrés Canales-Johnson, and Ophelia Deroy for valuable discussions and helpful comments on the article.

References

- Ais J, Zylberberg A, Barttfeld P, et al. Individual consistency in the accuracy and distribution of confidence judgments. Cognition 2016; 146:377–86.

- Alais D, Blake R. Binocular Rivalry. Cambridge, MA: MIT Press, 2005.

- Aller M, Giani A, Conrad V, et al. A spatially collocated sound thrusts a flash into awareness. Front Integrat Neurosci 2015; 9:16.

- Alsius A, Munhall K. Detection of audiovisual speech correspondences without visual awareness. Psychol Sci 2013; 24:423–31.

- Arzi A, Shedlesky L, Ben-Shaul M, et al. Humans can learn new information during sleep. Nat Neurosci 2012; 15:1460–5.

- Aspell JE, Heydrich L, Marillier G, et al. Turning body and self inside out: visualized heartbeats alter bodily self-consciousness and tactile perception. Psychol Sci 2013; 24:2445–53.

- Arabzadeh E, Clifford CW, Harris JA. Vision merges with touch in a purely tactile discrimination. Psychol Sci 2008; 19:635–41.

- Baars BJ. A thoroughly empirical approach to consciousness. Psyche 1994; 1.

- Baars BJ. The conscious access hypothesis: origins and recent evidence. Trends Cognit Sci 2002; 6:47–52.

- Banakou D, Slater M. Body ownership causes illusory self-attribution of speaking and influences subsequent real speaking. Proceed Natl Acad Sci USA 2014; 111:17678–83.

- Bayne T, Hohwy J, Owen AM. Are there levels of consciousness? Trends Cognit Sci 2016; doi:10.1016/j.tics.2016.03.009

- Bayne T, Chalmers D. What is the unity of consciousness? In: Cleeremans A. (ed.), The Unity of Consciousness: Binding, Integration, Dissociation. Oxford: Oxford Scholarship Online, 2003.

- Beh HC, Barratt PEH. Discrimination and conditioning during sleep as indicated by the electroencephalogram. Science 1965; 147:1470–1.

- Bekinschtein T, Dehaene S, Rohaut B, et al. Neural signature of the conscious processing of auditory regularities. Proceed Natl Acad Sci USA 2009; 106:1672–7.

- Blanke O. Multisensory brain mechanisms of bodily self-consciousness. Nat Rev Neurosci 2012; 13:556–71.

- Blanke O, Slater M, Serino A. Behavioral, neural, and computational principles of bodily self-consciousness. Neuron 2015; 88:145–66.

- Botvinick M, Cohen J. Rubber hands ‘feel’ touch that eyes see. Nature 1998; 391:756.

- Canales-Johnson A, Silva C, Huepe D, et al. Auditory feedback differentially modulates behavioral and neural markers of objective and subjective performance when tapping to your heartbeat. Cerebral Cortex 2015; 25:4490–503.

- Cohen L, Rothschild G, Mizrahi A. Multisensory integration of natural odors and sounds in the auditory cortex. Neuron 2011; 72:357–69.

- Conrad V, Bartels A, Kleiner M, et al. Audiovisual interactions in binocular rivalry. J Vis 2010; 10:27.

- Costa M, Piché M, Lepore F, et al. Age-related audiovisual interactions in the superior colliculus of the rat. Neuroscience 2016; 320:19–29.

- Craig A. How do you feel—now? The anterior insula and human awareness. Nat Rev Neurosci 2009; 10:59–70.

- Crick F, Koch C. Towards a neurobiological theory of consciousness. Semin Neurosci 1990a; 2:263–75.

- Crick F, Koch C. Some reflections on visual awareness. Cold Spring Harb Symp Quant Biol 1990b; 55:953–62.

- Crowell DH, Blurton LB, Kobayash LR, et al. Studies in early infant learning: classical conditioning of the neonatal heart rate. Dev Psychol 1976; 12:373–97.

- D’Amour S, Harris LR. Contralateral tactile masking between forearms. Exp Brain Res 2014; 232:821–6.

- Damasio AR. The Feeling of What Happens: Body and Emotion in the Making of Consciousness. London: Houghton Mifflin Harcourt, 1999.

- Dehaene S, Changeux J-P. Experimental and theoretical approaches to conscious processing. Neuron 2011; 70:200–27.

- Deroy O, Chen Y, Spence C. Multisensory constraints on awareness. Philos Trans R Soc B Biol Sci 2014; 369.

- Deroy O, Faivre N, Lunghi C, et al. The complex interplay between multisensory integration and perceptual awareness. Multisensory Res 2016; 29:585–606. doi:10.1163/22134808-00002529

- Deroy O, Spence C, Noppeney U. Metacognition in multisensory perception. Trends Cognit Sci 2016; 20:736–47.

- Dubois J, Faivre N. Invisible, but how? The depth of unconscious processing as inferred from different suppression techniques. Front Psychol 2014; 5:1117. doi:10.3389/fpsyg.2014.01117

- Dupoux E, de Gardelle V, Kouider S. Subliminal speech perception and auditory streaming. Cognition 2008; 109:267–73.

- De Gardelle V, Le Corre F, Mamassian P. Confidence as a common currency between vision and audition. PLoS ONE 2016; 11:e0147901. doi:10.1371/journal.pone.0147901

- de Weerd AW, van den Bossche RA. The development of sleep during the first months of life. Sleep Med Rev 2003; 7:179–91.

- Ehrsson HH. The experimental induction of out-of-body experiences. Science 2007; 317:1048.

- Ehrsson HH. The concept of body ownership and its relation to multisensory integration. In: The New Handbook of Multisensory Processing. Cambridge, MA: MIT Press, 2012.

- Faivre N, Doenz J, Scandola M, et al. Self-grounded vision: hand ownership modulates visual location through cortical beta and gamma oscillations. J Neurosci 2016; 37:11–22.

- Faivre N, Filevich E, Solovey G, et al. Behavioural, modeling, and electrophysiological evidence for domain-generality in human metacognition. bioRxiv 2016; doi:10.1101/095950

- Faivre N, Mudrik L, Schwartz N, et al. Multisensory integration in complete unawareness: evidence from audiovisual congruency priming. Psychol Sci 2014; 1–11. doi:10.1177/0956797614547916

- Faivre N, Salomon R, Blanke O. Visual and bodily self-consciousness. Curr Opin Neurol 2015; 28:23–28.

- Fifer WP, Byrd DL, Kaku M, et al. Newborn infants learn during sleep. Proceed Natl Acad Sci USA 2010; 107:10320–3.

- Fleming S, Dolan R, Frith C. Metacognition: computation, biology and function. Philos Trans R Soc B Biol Sci 2012; 367:1280–6.

- Fleming SM, Lau HC. How to measure metacognition. Front Hum Neurosci 2014; 8:443.

- Gallagher S. Philosophical conceptions of the self: implications for cognitive science. Trends Cognit Sci 2000; 4:14–21.

- Giacino JT, Ashwal S, Childs N, et al. The minimally conscious state definition and diagnostic criteria. Neurology 2002; 58:349–53.

- Gutschalk A, Micheyl C, Oxenham AJ. Neural correlates of auditory perceptual awareness under informational masking. PLoS Biol 2008; 6:1156–65.

- Guzman-Martinez E, Ortega L, Grabowecky M, et al. Interactive coding of visual spatial frequency and auditory amplitude-modulation rate. Curr Biol 2012; 22:383–8.

- Ikeda K, Morotomi T. Classical conditioning during human NREM sleep and response transfer to wakefulness. Sleep 1996; 19:72–74.

- Ishizawa Y, Ahmed OJ, Patel SR, et al. Dynamics of propofol-induced loss of consciousness across primate neocortex. J Neurosci 2016; 36:7718–26.

- Kilteni K, Maselli A, Kording KP, et al. Over my fake body: body ownership illusions for studying the multisensory basis of own-body perception. Front Hum Neurosci 2015; 9:141.

- Kim C-Y, Blake R. Psychophysical magic: rendering the visible ‘invisible’. Trends Cognit Sci 2005; 9:381–8.

- Koch C, Massimini M, Boly M, et al. Neural correlates of consciousness: progress and problems. Nat Rev Neurosci 2016; 17:307–21.

- Koriat A. Metacognition and consciousness. In: Zelazo PD, Moscovitch M, Thompson E (eds.), Cambridge Handbook of Consciousness. New York: Cambridge University Press, 2006.

- Lamme VA, Roelfsema PR. The distinct modes of vision offered by feedforward and recurrent processing. Trends Neurosci 2000; 23:571–9.

- Laureys S. The neural correlate of (un)awareness: lessons from the vegetative state. Trends Cognit Sci 2005; 9:556–9.

- Lenggenhager B, Tadi T, Metzinger T, et al. Video ergo sum: manipulating bodily self-consciousness. Science 2007; 317:1096–9.

- Little AH, Lipsitt LP, Rovee-Collier C. Classical conditioning and retention of the infant’s eyelid response: effects of age and interstimulus interval. J Exp Child Psychol 1984; 37:512–24.

- Lunghi C, Alais D. Touch interacts with vision during binocular rivalry with a tight orientation tuning. PLoS ONE 2013; 8:e58754.

- Lunghi C, Alais D. Congruent tactile stimulation reduces the strength of visual suppression during binocular rivalry. Scientific Rep 2015; 5:9413.

- Lunghi C, Binda P, Morrone M. Touch disambiguates rivalrous perception at early stages of visual analysis. Curr Biol 2010.

- Lunghi C, Morrone MC. Early interaction between vision and touch during binocular rivalry. Multisensory Res 2013; 26:291–306.

- Lunghi C, Morrone MC, Alais D. Auditory and tactile signals combine to influence vision during binocular rivalry. J Neurosci 2014; 34:784–92.

- Mudrik L, Faivre N, Koch C. Information integration without awareness. Trends Cognit Sci 2014; 18:488–96.

- Nagel T. Brain bisection and the unity of consciousness. Synthese 1971; 22:396–413.

- O’Callaghan C. Grades of multisensory awareness. Brain & Lang 2017, in press.

- Ohayon MM, Carskadon MA, Guilleminault C, et al. Meta-analysis of quantitative sleep parameters from childhood to old age in healthy individuals: developing normative sleep values across the human lifespan. Sleep 2004; 27:1255–74.

- Park H, Correia S, Ducorps A, et al. Spontaneous fluctuations in neural responses to heartbeats predict visual detection. Nat Neurosci 2014; 17:612–8.

- Park H-D, Tallon-Baudry C. The neural subjective frame: from bodily signals to perceptual consciousness. Philos Trans R Soc Lond B Biol Sci 2014; 369:20130208.

- Park H-D, Bernasconi F, Bello-Ruiz J, et al. Transient modulations of neural responses to heartbeats covary with bodily self-consciousness. J Neurosci 2016; 36:8453–60.

- Populin LC. Anesthetics change the excitation/inhibition balance that governs sensory processing in the cat superior colliculus. J Neurosci 2005; 25:5903–14.

- Revonsuo A. Binding and the phenomenal unity of consciousness. Conscious Cognit 1999; 8:173–85.

- Salomon R, Galli G, Lukowska M, et al. An invisible touch: body-related multisensory conflicts modulate visual consciousness. Neuropsychologia 2016a; 88:131–9.

- Salomon R, Fernandez NB, van Elk M, et al. Changing motor perception by sensorimotor conflicts and body ownership. Scientific Rep 2016b; 6:25847.

- Salomon R, Kaliuzhna M, Herbelin B, et al. Balancing awareness: vestibular signals modulate visual consciousness in the absence of awareness. Conscious Cognit 2015; 36:289–97.

- Salomon R, Lim M, Herbelin B, et al. Posing for awareness: proprioception modulates access to visual consciousness in a continuous flash suppression task. J Vis 2013; 13:2.

- Salomon R, Noel JP, Lukowska M, et al. Unconscious integration of multisensory bodily inputs in the peripersonal space shapes bodily self-consciousness. bioRxiv 2016; 048108.

- Sanchez-Vives MV, Slater M. From presence to consciousness through virtual reality. Nat Rev Neurosci 2005; 6:332–9.

- Sanchez-Vives MV, Spanlang B, Frisoli A, et al. Virtual hand illusion induced by visuomotor correlations. PLoS ONE 2010; 5:e10381.

- Sel A, Azevedo R, Tsakiris M. Heartfelt self: cardio-visual integration affects self-face recognition and interoceptive cortical processing. Cereb Cortex 2016; doi:10.1093/cercor/bhw296

- Sela L, Sobel N. Human olfaction: a constant state of change-blindness. Exp Brain Res 2010; 205:13–29.

- Seth A. Interoceptive inference, emotion, and the embodied self. Trends Cognit Sci 2013; 17:565–73.

- Slater M, Spanlang B, Sanchez-Vives MV, et al. First person experience of body transfer in virtual reality. PLoS ONE 2010; 5:e10564.

- Spence C, Bayne T. Is consciousness multisensory? In: Stokes D, Biggs S, Matthen M (eds.), Perception and its Modalities. New York: Oxford University Press, 2014, 95–132.

- Spence C, Deroy O. Multisensory Imagery. Lacey S, Lawson R (eds.). New York: Springer, 2013, 157–83. doi:10.1007/978-1-4614-5879-1_9

- Stamps LE, Porges SW. Heart rate conditioning in newborn infants: relationships among conditionability, heart rate variability, and sex. Dev Psychol 1975; 11:424–31.

- Stein BE, Stanford TR. Multisensory integration: current issues from the perspective of the single neuron. Nat Rev Neurosci 2008; 9:255–66.

- Sterzer P, Stein T, Ludwig K, et al. Neural processing of visual information under interocular suppression: a critical review. Front Psychol 2014; 5:453. doi:10.3389/fpsyg.2014.00453

- Stevenson RJ, Mahmut MK. Detecting olfactory rivalry. Conscious Cognit 2013; 22:504–16.

- Suzuki K, Garfinkel SN, Critchley HD, et al. Multisensory integration across exteroceptive and interoceptive domains modulates self-experience in the rubber-hand illusion. Neuropsychologia 2013; 51:2909–17.

- Tononi G, Boly M, Massimini M, et al. Integrated information theory: from consciousness to its physical substrate. Nat Rev Neurosci 2016; 17:450–61.

- Tsakiris M, Longo MR, Haggard P. Having a body versus moving your body: neural signatures of agency and body-ownership. Neuropsychologia 2010; 48:2740–9.

- Tsuchiya N, Koch C. Continuous flash suppression reduces negative afterimages. Nat Neurosci 2005; 8:1096–101.

- Tsuchiya N, van Boxtel J. Introduction to research topic: attention and consciousness in different senses. Front Psychol 2013; 4:249. doi:10.3389/fpsyg.2013.00249

- van der Groen O, van der Burg E, Lunghi C, et al. Touch influences visual perception with a tight orientation-tuning. PLoS ONE 2013; 8:e79558.

- Van Rullen R, Zoefel B, Ilhan B. On the cyclic nature of perception in vision versus audition. Philos Trans R Soc Lond B Biol Sci 2014; 369:20130214.

- Zhou W, Jiang Y, He S, et al. Olfaction modulates visual perception in binocular rivalry. Curr Biol 2010; 20:1356–8.