Abstract

This chapter reviews evidence that executing actions can predictively influence performance on cognitive tasks that involve processing visual feedback. The concept of forward modeling of action refers to a process whereby simulated or executed actions evoke a predictive model of the future state and position of the effector. For visually guided tasks, this forward model might include the visual outcome of the action. We describe a series of behavioral experiments suggesting that forward model output generated during action performance can assist in the processing of related visual stimuli. Additional results from a neuroimaging experiment on this “motor-visual priming” indicate that the superior parietal lobule is likely a key structure for processing the relationship between performed movements and visual feedback of those movements, and that this predictive system can be accessed for cognitive tasks.

There has been great interest recently in the potential roles of internal models in sensory-motor control and coordination [1]. Two distinct types of model are possible [2]. One, inverse modeling, covers those neural processes that are necessary to convert the plans and goals of an intended action into motor commands; this process could be achieved within a discrete neural system, where the idea of an “internal model” seems appropriate, but it could also be the functional outcome of even a simple error-correcting feedback system. Inverse modeling translates the difference between the current and desired state of the body into a motor command to reach the desired state (inverse modeling is outside the scope of this chapter and is not discussed further). The other form, forward modeling, describes the opposite process. Forward modeling is a predictive process, which in human motor control is thought to allow the prediction of a future limb state by combining current information about limb position with information about newly issued motor commands. The forward modeling process begins with a current estimate of limb state (in terms of position and dynamics). When a new motor command is executed, a copy of this command (called motor efference copy) is integrated with the existing estimate to produce a predicted future state of the limb. This output can take the form of an estimate of the new limb state, or it can be passed through further modeling to yield an estimate of the action’s sensory consequences [1].
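The predict-then-integrate cycle described above can be sketched in a few lines. This is a minimal illustration under strongly simplifying assumptions that are ours, not the chapter's: a one-dimensional limb whose state is a position and a velocity, a motor command treated directly as an acceleration, a fixed time step `dt`, and a second stage that maps the predicted state to a predicted sensory signal through a simple gain. The names `LimbState`, `predict_next_state` and `predict_sensory_outcome` are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class LimbState:
    position: float  # e.g. a joint angle, arbitrary units
    velocity: float


def predict_next_state(current: LimbState, efference_copy: float,
                       dt: float = 0.01) -> LimbState:
    """Hypothetical forward model: combine the current state estimate with a
    copy of the issued motor command (assumed to act as an acceleration),
    using one semi-implicit Euler step."""
    velocity = current.velocity + efference_copy * dt
    position = current.position + velocity * dt
    return LimbState(position, velocity)


def predict_sensory_outcome(state: LimbState, gain: float = 1.0) -> float:
    """Hypothetical second stage: map the predicted limb state to a predicted
    sensory signal (here simply a scaled position)."""
    return gain * state.position


# Start from a resting estimate and feed in a short sequence of commands.
estimate = LimbState(position=0.0, velocity=0.0)
for command in [1.0, 1.0, 0.0, -1.0]:
    estimate = predict_next_state(estimate, command)

predicted_feedback = predict_sensory_outcome(estimate)
```

The prediction is available as soon as each command is issued, before any visual or proprioceptive feedback could arrive; a biological forward model would of course be learned and would capture far richer, nonlinear limb dynamics.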

This forward modeling process allows the brain to overcome the inherent delays involved in waiting for visual or proprioceptive feedback during movement. It can also be run as an unexecuted simulation to test whether a planned action will achieve its goal. It can be used to compare an action’s actual sensory outcome with the predicted outcome, allowing error detection and/or correction. It can also help keep track of limb state and position during the movement. Further evidence suggests that forward models may be used to distinguish self-induced sensations (e.g., tickling your own hand) from externally induced sensations (e.g., someone else tickling your hand). The predicted sensory outcome can be used to remove or reduce reafferent sensations (those caused by one's own movement) in the somatosensory input, leaving the exafferent inputs (those caused by external events), which are more important for motor control. Such a process has been hypothesized to lie behind the well-known (and frankly disappointing) phenomenon that one cannot tickle oneself [3].
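The cancellation idea can be illustrated with a toy subtraction. The sketch below is hypothetical throughout: it assumes the sensory signal is a short list of intensities, that the forward model's prediction for a self-produced touch is exact, and that the prediction is zero when no motor command was issued. It shows only why subtracting a prediction would leave little residual sensation for self-produced touch.

```python
from __future__ import annotations


def exafferent_component(actual: list[float],
                         predicted: list[float]) -> list[float]:
    """Subtract the forward model's predicted (reafferent) sensation from the
    actual sensory input, leaving the externally caused remainder."""
    if len(actual) != len(predicted):
        raise ValueError("signals must have the same length")
    return [a - p for a, p in zip(actual, predicted)]


def felt_intensity(residual: list[float]) -> float:
    """Hypothetical read-out of perceived intensity: mean absolute residual."""
    return sum(abs(r) for r in residual) / len(residual)


touch = [0.0, 0.5, 1.0, 0.5, 0.0]

# Self-produced touch: the forward model (by assumption) predicts it exactly.
self_tickle = felt_intensity(exafferent_component(touch, touch))

# Externally produced touch: no motor command, so the prediction is zero.
external_tickle = felt_intensity(
    exafferent_component(touch, [0.0] * len(touch)))
```

In this idealized case the self-produced touch is cancelled completely; in practice the prediction would be imperfect, so self-produced sensations would be attenuated rather than abolished.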

The work described here addresses the question of whether the predictive output of such a forward model can be made available to cognitive processes outside the motor system. If so, it may be possible to detect its influence on non-motor cognitive tasks. There is ample evidence that motor-related cognitive tasks make use of sensorimotor systems in the brain. Judging the laterality of a visually presented hand appears to involve the participant mentally rotating his or her own hand into the same position as the viewed hand before making a decision [4,5]. Similarly, deciding whether a target object can be successfully manipulated takes about as long as physically attempting to interact with the object [6]. Of course, this is only indirect evidence for similar neural processing of the mental and physical tasks. What is still missing is evidence that the motor system influences cognitive tasks that are independent of the action being performed (motor-visual priming).

In two sets of experiments, Craighero and colleagues [7,8] and Vogt and colleagues [9] have further tested the interrelationship between performed actions and visual stimuli. The basic paradigm is straightforward. The participant has to reach and grasp a bar (hidden out of sight) that is oriented either +60° or -60° from the vertical: at the start of each trial the participant is informed of the actual orientation of the bar. A visual “go” signal tells the participant to grasp the bar. This stimulus is either congruent or incongruent with the required grasp – congruent stimuli were either pictures of a bar matching the orientation of the actual bar, or a picture of a hand in the correct orientation to grasp the bar; incongruent stimuli were pictures of a bar at the opposite orientation, or an image of a hand oriented at an angle incompatible with grasping the bar. Response initiation was significantly faster when the visual stimulus was congruent with the required response.

Two mechanisms for this effect have been proposed. Firstly, it may be that the preparation of a motor response produces (through forward modeling) a sensory prediction of the action outcome; this allows faster processing of the congruent visual go-stimulus. This is described as motor-visual priming [7]. The reverse scenario, visuo-motor priming, is also possible – the visual go-stimulus primes the production of a congruent hand movement [8]. It is difficult to decide between these two hypotheses on the basis of these data.

Another set of studies by Brass and colleagues [10] required participants to tap either their index or middle finger, on the basis of either a displayed hand tapping that finger or a symbolic cue (a number) indicating an index or middle finger tap. On some trials, both the finger movement and the symbolic cue were presented. If the participant had been instructed to respond on the basis of the symbolic cue, the simultaneous display of a congruent finger movement facilitated response initiation, whereas display of an incongruent movement delayed it. This is not a simple response-compatibility effect: if the participant was responding on the basis of the displayed movement, the congruency of the symbolic cue made no difference to the speed of response initiation. Observing a movement similar to the one required of the participant therefore influenced the response.

Our own studies of a related phenomenon provide less ambiguous evidence for motor-visual priming [11]. In these experiments, the participant performs a continuous hand movement (e.g., slowly opening and closing the hand) while simultaneously observing a series of pictures on a computer screen that show a computer-animated hand performing either a congruent or an incongruent hand movement. The task is to detect oddball hand-position pictures in the visual series and report them vocally (by saying “ta”). Unlike the previously described studies, the oddball response component of the task is distinct from both the motor task and the visual series: participants did not have to produce a response related to the visual stimulus or the performed hand action, whereas in the other studies the dependent variable was tightly linked to the performed hand action. Visuo-motor priming can therefore be discounted as an interpretation of the following results.

We hypothesized that participants would be able to use forward model output about their own hand state to aid this visual discrimination task. During active movement, forward model processes may produce an expectation of the next hand state in the form of a visual representation. If the hand movement and visual series are congruent, the output of this forward model could prime the visual discrimination process. On incongruent trials, forward model output from the hand action would be of no use for the discrimination task, because the internal prediction of hand state would correspond to a different movement from the one observed. A saving in RT to the oddball for congruent compared to incongruent trials would therefore suggest that forward modeling information is involved in the cognitive task.

The rest of this chapter describes a series of five behavioral experiments aimed at investigating whether forward model output could contribute to an ongoing visual discrimination task, and the limits of such contributions. This is followed by the results of a functional imaging study, in which we used functional magnetic resonance imaging (fMRI) to explicitly test whether this task makes use of brain areas proposed to be used by the motor system in forward modeling.

Behavioral evidence for motor-visual priming

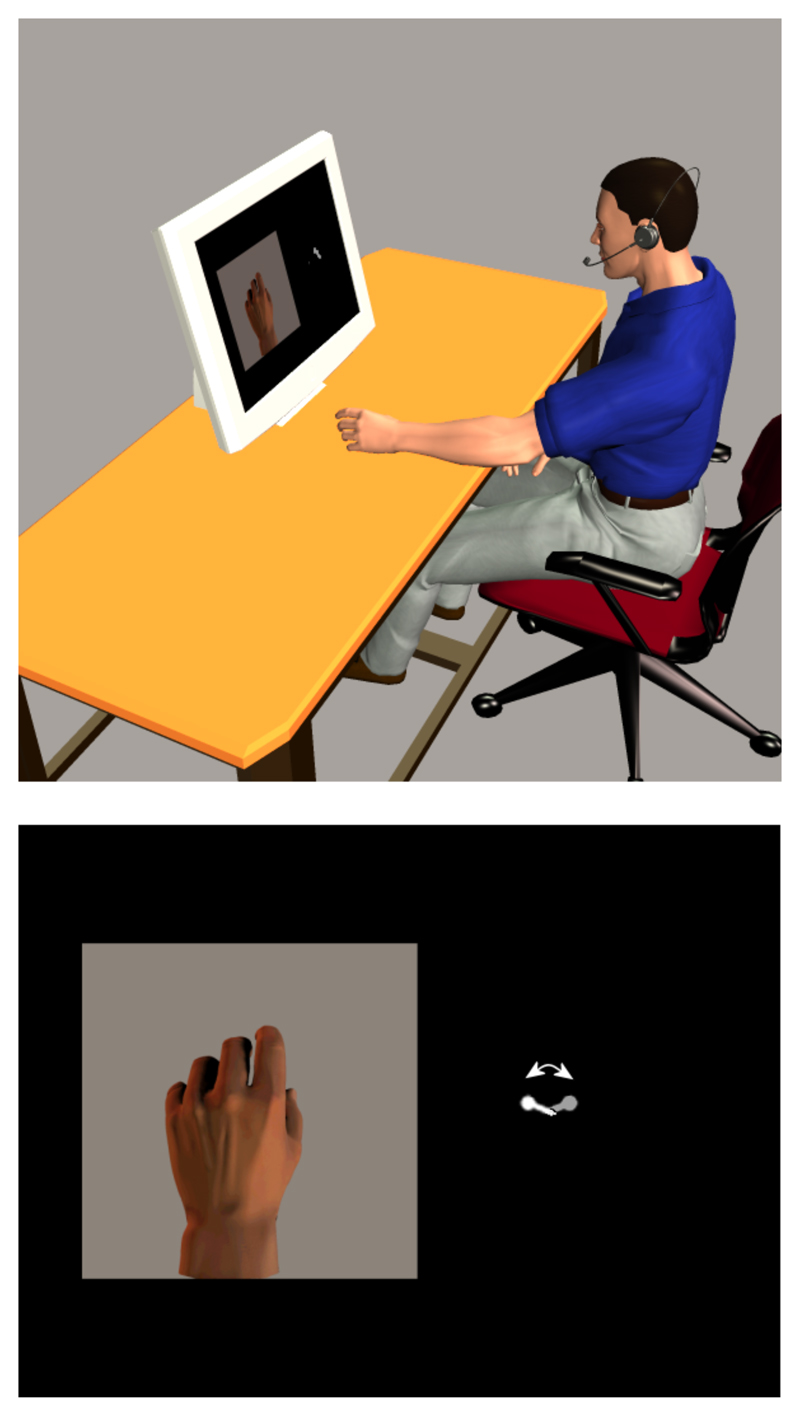

The same basic experimental paradigm was used in all of the experiments described hereafter, with deviations from the standard design as noted. The participant sat in front of a computer monitor (Figure 1; upper panel) where a picture of a hand was displayed on the left of the screen, while on the right a small oscillating pointer acted both as a fixation point and as a metronome for pacing the performed hand movement (Figure 1; lower panel). The display was updated every second. The participant fixated the pointer while a series of pictures was presented showing a hand either opening and closing or rotating at the wrist (from pronation to supination), completing two cycles of movement in 15 s (see Figure 2, panel A). At the same time, the participant continuously performed one of these two hand movements (Figure 2, panel B) in time with the metronome-pointer, thus keeping the performed movement in phase with the visually presented movement. The participant was instructed at the start of each trial which hand movement to perform for the duration of the trial, and which hand movement animation they would view. The metronome ensured that movements were performed at correctly matched speeds across all conditions (even when performed and observed hand movements did not match). This meant that the performed hand movements and observed hand images could be either congruent or incongruent with each other.

Figure 1.

Top panel: Experimental setup for the behavioral experiments. The participant moves his or her hand in time with the visual metronome on the right of the screen, and responds vocally to target stimuli via the microphone. Bottom panel: Example display screen, showing one hand picture from the visual series on the left of the display, and the visual metronome on the right of the display (metronome not to scale).

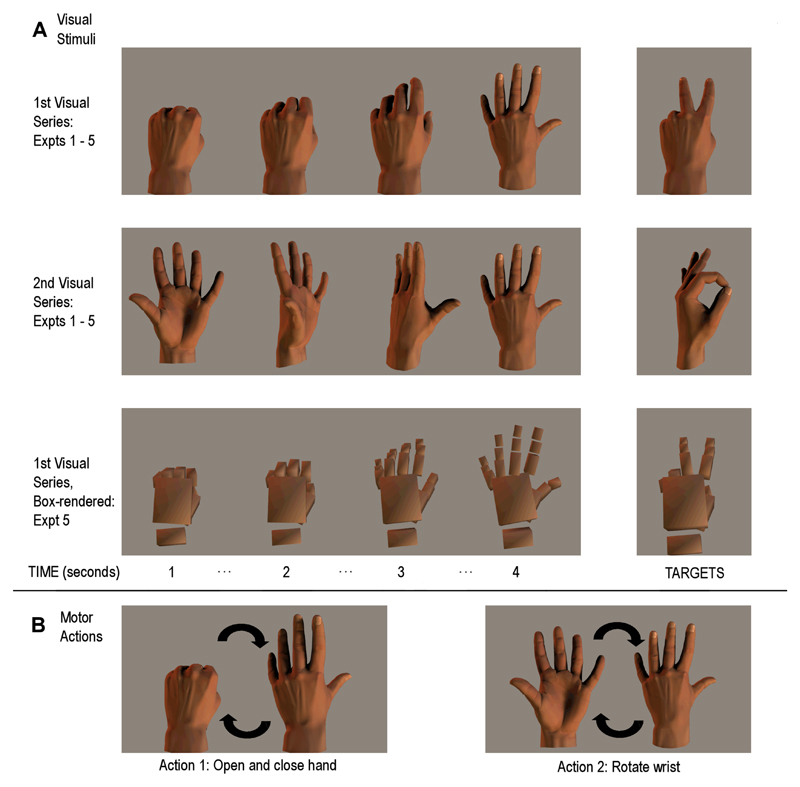

Figure 2.

Panel A: The visual stimuli used in the sequential conditions of Experiments 1–5; the top row indicates the visual images for the hand opening sequence, the second row indicates the wrist rotation sequence. The third row of this panel shows examples of the box-rendered images used in Experiment 5. During the presentation of these visual stimuli, the participant performed hand actions (Panel B) that were either congruent or incongruent with the ongoing visual series, and had to respond vocally when each target stimulus (Panel A, right side) was presented.

The detection part of the task required the participant to respond vocally to target pictures inserted into the ongoing visual series (shown in Figure 2). These target pictures were hand positions that did not fit into the main movement sequence. The participant was not required to imitate this oddball hand position, but instead to respond vocally when it appeared. Reaction time was measured with a microphone connected to a switch that was triggered by the vocal response.

Experiment 1: The effect of congruency between performed and observed action on the prediction of visual images

The initial experiment was conducted as described above, with one additional factor. Participants viewed the visual stimuli as a sequential series in one condition (e.g., a hand opening and closing), and as a random ordering of the same frames in the other condition. In the random condition there is no temporal match between the sequence of observed frames and the instructed action, which was still performed slowly and continuously in time with the oscillating metronome. This condition therefore controlled for the difficulty of the performed movement. We hypothesized that oddball detection would be faster during the congruent than during the incongruent condition only for the sequential visual series.

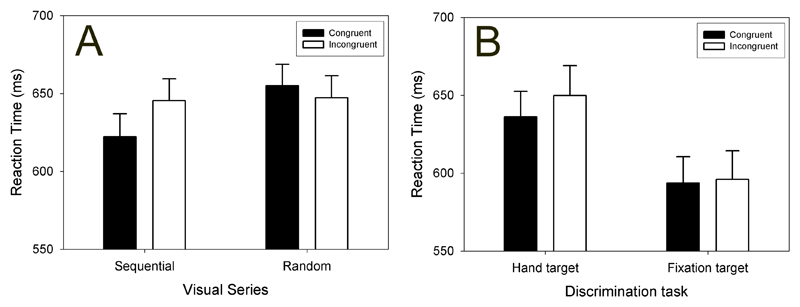

Reaction times to the oddball stimuli were in line with our hypothesis, as displayed in panel A of Figure 3. When viewing the sequential visual series, participants were faster at responding to the target stimuli if performing the congruent hand action than if performing the incongruent action. No such congruency effect was found when viewing the random visual series. While consistent with our hypothesis that forward model output could be used to help determine if the target stimulus belonged in the visual series, several competing interpretations also had to be considered and ruled out.

Figure 3.

Average reaction times (+/- 1 SEM) for discrimination of the target images during congruent (filled bars) and incongruent (empty bars) hand actions.

Panel A: Results of Experiment 1. The displayed visual series was either sequential or random. Panel B: Results of Experiment 2. Participants responded vocally either to the hand-picture targets or the fixation-cross targets.

Experiment 2: Addressing attentional interpretations of the congruency effect

One simple explanation for this phenomenon is that performing a hand movement while observing an incongruent hand movement is a hard task. This interpretation presumes that the RT differences in the sequential visual series are not mediated by sensory-predictive processes, but rather by a general cognitive slowing or interference in the incongruent condition, caused by the task demands of seeing one action while performing another. To test this, we replicated the basic phenomenon of the initial experiment (for the sequential visual images) while introducing a new task on some blocks of trials. In these new trials, the performed hand movements and visual images remained the same as in the basic paradigm, but participants now had to respond vocally to changes of the visual metronome (from a pointer to a cross). If the earlier results were due to a simple attentional difference caused by having to perform incongruent rather than congruent hand movements, we would also expect reaction times in this control condition to differ between congruent and incongruent hand movements.

The basic phenomenon was successfully replicated, with faster RTs to oddball hand targets in the congruent condition than in the incongruent condition. In the attentional control condition, responses to the change in the metronome did not differ between congruent and incongruent hand actions (Figure 3, panel B). This suggested that the congruency effect in the basic paradigm is specific to cognitive tasks related to hand position, rather than being due to a general attentional effect.

Having established that the congruency RT effect on our task was not simply due to attentional differences between the congruent and incongruent movement conditions, in Experiments 3 to 5 we proceeded to investigate more complex aspects of this phenomenon.

Experiment 3: The time course of motor-visual priming

One artificial aspect of the original paradigm is that while the performed movement is a smooth, continuous action, the visual display only changes once a second. This allowed comparison between the sequential and random visual presentations (the latter of which would have been untenable with a constantly updating visual presentation). However, this raises the question of when the predictions of each forthcoming image are generated and/or used: are the predictions generated continuously while participants perform the slow, continuous hand movements, or are they generated or used only at the time of visual presentation?

In previous studies, the visuo-motor priming effect appeared to have a short-lived time course: if the prime stimulus and the go signal are temporally separated (e.g., the prime stimulus is a black and white image of a hand position, and the go signal is a switch from black and white to color display), then with an interstimulus interval longer than 700 milliseconds the prime stimulus no longer affects the speed of response initiation [9].

In order to examine whether motor-visual priming is similarly time-locked, we reduced each picture’s display time to 500 ms, and presented an opaque grey square over the top of this picture for the 500 ms period before the subsequent picture presentation. Introducing this interval between picture presentations removed the congruency priming effect. It seems reasonable that any forward modeling during active hand movement should be a continuous process (although this is still a working hypothesis). It is therefore likely that it is the integration of the two streams of information (forward model output and visual inputs) that is time constrained, and that the contributions of the forward model process to the visual discrimination task are perhaps time-locked to the onset of the visual stimulus. While this result suggests that the timing of this integration is critical, this issue currently remains unresolved.

Experiment 4: First-person and third-person perspective visual stimuli

The perspective of visual stimuli influences visuo-motor priming, with experimental reports of priming advantages for both first-person and third-person perspective stimuli. It has been suggested that a third-person viewpoint advantage might reflect imitative experience, or experience of images seen in mirrors; in contrast, the first-person effect observed for images of hands may reflect the action-relevance of the cue, allowing direct matching of the cue image with hand posture.

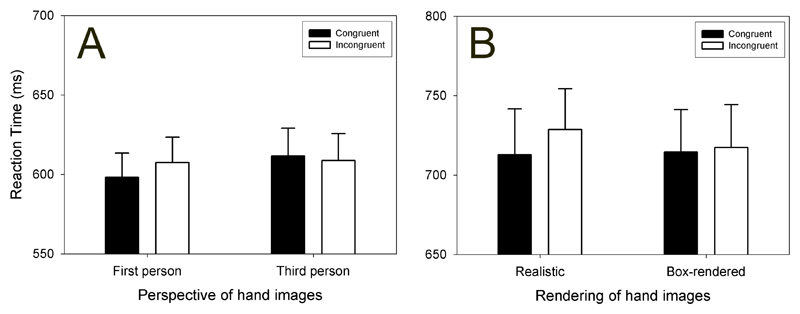

Experiment 4 used the same stimuli as the original experiment for the first-person perspective condition. The third-person stimuli were mirrored versions of the same pictures (now a right hand, presented on the left of the screen). As in Experiments 1 and 2 (both of which used first-person stimuli), there was an advantage for responding to the oddball targets in the congruent relative to the incongruent condition with first-person stimuli. These first-person congruent responses were also significantly faster than the third-person congruent responses; most importantly, third-person congruent and incongruent oddball detection responses did not differ from each other. These data are displayed in Figure 4, panel A.

Figure 4.

Average reaction times (+/- 1 SEM) for discrimination of the target images during congruent (filled bars) and incongruent (empty bars) hand actions.

Panel A: Results of Experiment 4. The displayed visual images were in first-person or third-person perspective. Panel B: Results of Experiment 5. The displayed visual images were either realistically rendered or box-rendered (Figure 2, panel A).

The fact that the congruency effect is limited to first-person perspective hand stimuli provides additional evidence against the hypothesis that the phenomenon simply results from having to perform an action different from the one observed. Given that humans have a preference for specular (mirror-image) imitation from an early age [12,13], we would expect the motor difficulty of any particular action/observation combination to be matched for first-person and third-person stimuli.

More interestingly, this result indicates that it is the relationship between the performed movement and the observed visual series that mediates the congruency effect. If our hypothesis regarding the use of forward models in this task is correct, then it may be that in the first-person, congruent movement condition the visual stimuli are classified as being “the same” as the performed movement, or perhaps are experienced as being the result of the performed movement. All of the other conditions (incongruent movement and/or third-person perspective) are experienced as distinct from the performed movement and hence it may not be possible to use forward model information in these situations.

Experiment 5: Realistic biological rendering

The final experiment in this series of behavioral studies examines whether reducing the gross visual similarity between the participant's hand and the observed hand images affects the congruency effect. Experiment 4 suggested that identification with the observed action in the congruent condition is necessary to produce the congruency RT effect.

We contrasted the congruency effect under the basic paradigm with a second set of visual stimuli: these portrayed the same hand movements, but the hand image was composed of rendered boxes rather than being a realistically-rendered hand (see Figure 2, panel A; bottom row). Behavioral [14] and functional imaging studies [15,16] have shown that we process movement or action differently if the actor is a biological agent (i.e., another person) rather than a non-biological agent (such as a robot), although it is not clear at present whether this effect is due to differences with regard to attribution of agency (dealing with an autonomous agent, rather than a preprogrammed machine) or differences in the kinematics of the movement between these agents. In our study, the kinematics were identical for these two visual series; the only difference was whether the pictures looked like hands or were non-realistic schematics of a hand.

The results showed that the congruency effect did not differ between the realistic and box-rendered visual stimuli (Figure 4, panel B). It therefore appears that the realism of the model hands is not as relevant for this task as is the realism of the observed movement itself.

Summary of behavioral evidence for motor-visual priming

The basic congruency effect – faster detection and response to oddball stimuli when performing a congruent hand action, compared to an incongruent movement – was found in Experiments 1, 2, 4, and 5. We have argued that these results are indicative of motor-visual priming. A visuo-motor interpretation would require the visual stimuli to prime performance of similar hand actions; here, the dependent variable was vocal reaction time to the oddball stimulus, and so any visuo-motor priming would have to operate indirectly by altering the neural resources available to the discrimination task, a possibility which we have refuted (Experiments 2 and 4).

In conclusion, we interpret these results as indicating that the output of an internal forward model of hand state could be applied to a visual discrimination task, when the hand action and visual stimuli are congruent. The next step was to test this explicitly, using functional magnetic resonance imaging (fMRI) to measure brain activity while participants performed this task. We anticipated that our task would differentially activate brain areas hypothesized to be involved in such forward model motor processes, and the next section begins with a review of these areas.

Neural substrates of action imitation and forward modeling

Certain brain areas are involved in processing both the production and the observation of goal-directed movement: in the monkey, neurons with this dual role (e.g., in ventral premotor cortex) are termed mirror neurons [17]. The population of premotor mirror neurons can be subdivided into two categories. Strictly congruent mirror neurons are active during observation of the same part of the motor repertoire that they are responsible for during action execution (e.g., a precision grip or a power grip); broadly congruent mirror neurons are active during observation of movement components different from those they produce during action execution [18]. Some of these neurons also fire if the monkey hears a sound consequent to a particular action, such as paper tearing [19,20], or even if the monkey sees the start of an action and knows that a target object is present but cannot see the interaction with the object [21]. On the basis of this evidence, it has been proposed that such neurons code for the goal of the observed action [22].

In humans, functional neuroimaging has identified ventral premotor cortex, superior parietal cortex, and other motor-related areas as showing mirror-neuron properties [23]. These areas are activated when an action is performed, observed, or even just imagined. Iacoboni [24] provides a framework for how these areas might interact during imitation, starting with a visual representation of the to-be-imitated action in the superior temporal sulcus (STS), an area that is responsive to movement of biological agents and is active during action observation but not execution. Visual information about the observed action passes from the STS to the superior parietal lobule, which codes for the predicted somatosensory outcome of the intended action; this passes to the ventral premotor cortex, where the action’s goal is translated into a motor program; an efference copy of this planned action then returns to the STS, where it is compared to the original visual representation of the observed movement. The final stage of this process is clearly an instance of forward modeling, albeit one driven by an external stimulus.

We hypothesized that one or more components of this system would be differentially activated when participants performed congruent movements in time with a sequential visual series, compared to the other conditions of our motor-visual priming task. We were unsure in which direction this difference would manifest, but given that the sequential congruent condition most closely approximates natural circumstances (hand action and visual feedback agree), we might anticipate greater levels of processing in the incongruent conditions.

Functional activity during a motor-visual priming task

In order to test this hypothesis, we scanned participants’ brain activity using fMRI while they performed the basic version of our paradigm, as described in Experiment 1 (congruent and incongruent hand movements; sequential and random visual series). Due to the restrictions of the scanning environment, participants responded to the oddball targets on a foot pedal rather than vocally. Additionally, movements were performed with the right hand, and the picture displays used in the behavioral studies were reversed accordingly (i.e., a right hand was presented on the right of the projector screen, and the metronome on the left). Responses on the foot pedal were made with the left foot.

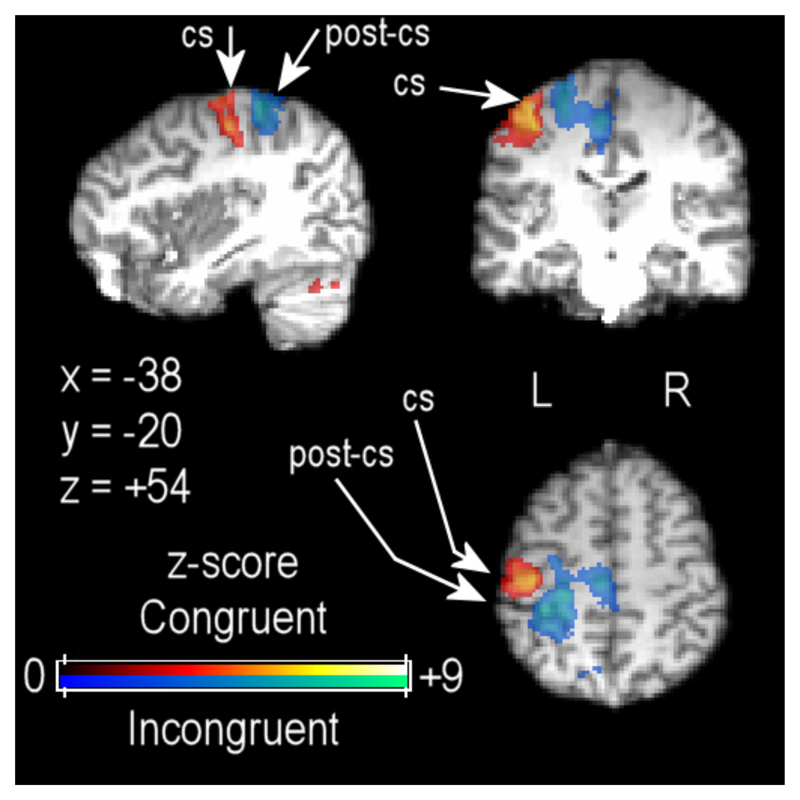

fMRI data were analyzed as a block design, initially comparing brain activity across conditions with a 2 × 2 factorial design: visual series (sequential vs. random) × hand movement (congruent vs. incongruent). As shown in Figure 5, contrasting the congruent and incongruent hand movements (collapsed over sequential and random conditions) confirmed that primary sensorimotor cortex activations differed for these two hand movements, one requiring opening and closing the hand (shown in the red spectrum), the other rotating the wrist with the palm open (shown in the blue spectrum).

Figure 5.

Functional imaging data showing differential sensorimotor cortex activation for congruent (red to yellow shading) and incongruent (blue to pink shading) hand movements, thresholded at Z > 2.6 with cluster thresholding at p < .05. Functional data are projected onto a single participant’s high-resolution structural scan, registered to standard MNI-space coordinates. CS = central sulcus; PCS = postcentral sulcus.

Several motor and visual areas (not displayed in the figures) were more strongly activated for the random visual series compared to the sequential visual series [25]. These included bilateral dorsal premotor areas, right hemisphere ventral premotor cortex, bilateral superior parietal cortex, and bilateral anterior cingulate cortex. Increased activity in bilateral sites for area V5/MT was also significant in this comparison. We propose that performance demands in the random condition were greater than in the sequential condition. Increasing the complexity of motor tasks frequently leads to increased recruitment of ipsilateral motor cortex, including dorsal premotor cortex.

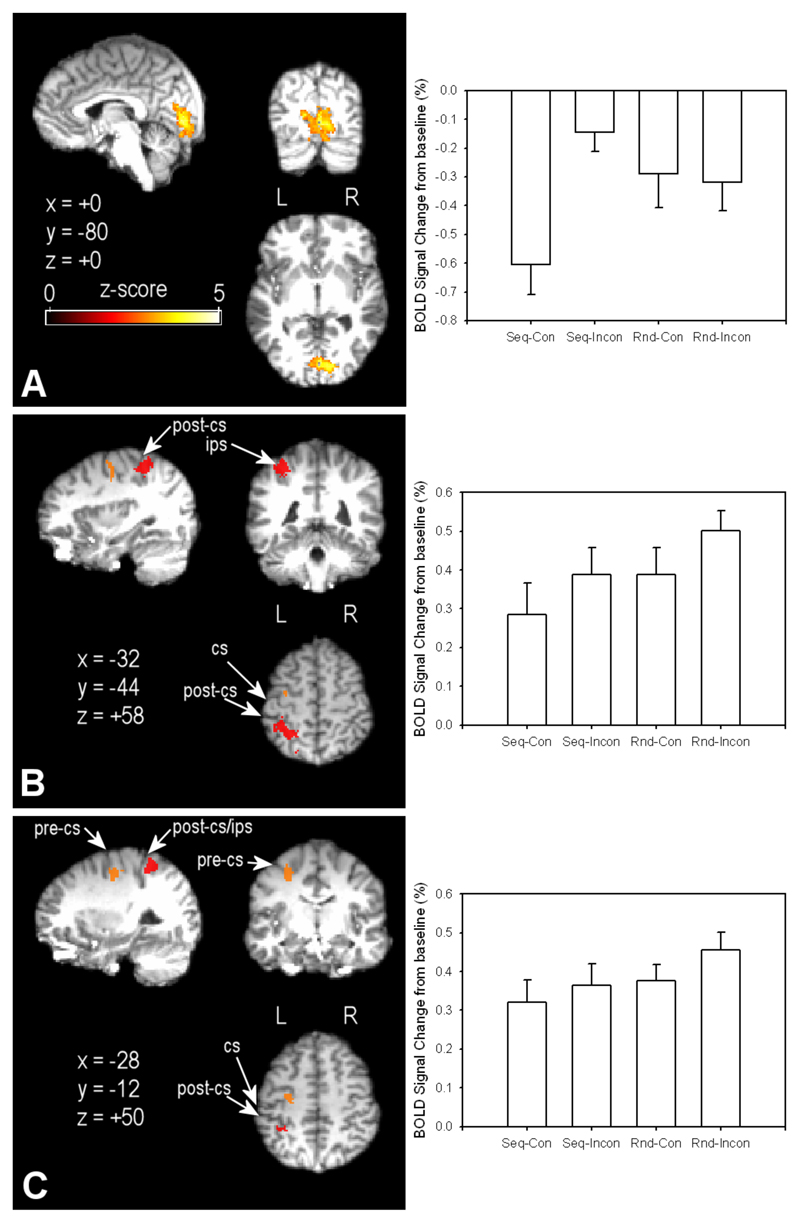

The negative interaction between the visual series and hand movement factors implicated differences in primary visual cortex activation (Figure 6, panel A). This interaction contrast takes the difference in activation between the sequential incongruent and sequential congruent conditions and subtracts from it the corresponding difference between the random incongruent and random congruent conditions, thereby balancing for the effects of hand movement. However, this factorial analysis is somewhat inappropriate at this stage, because the random congruent condition is not truly “congruent”: there is a continuous mismatch between the performed action and the observed visual series.
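Written out with symbols of our own choosing (β for the estimated activation in each of the four block types; S/R for sequential/random visual series and C/I for congruent/incongruent hand movement; this notation does not appear in the original analysis), the interaction contrast described above is:

```latex
\begin{aligned}
\text{Interaction} &= (\beta_{SI} - \beta_{SC}) - (\beta_{RI} - \beta_{RC}) \\
                   &= \beta_{SI} - \beta_{SC} - \beta_{RI} + \beta_{RC}
\end{aligned}
```

This corresponds to contrast weights (+1, −1, −1, +1) over the conditions ordered (SI, SC, RI, RC). Written this way, the problem noted above is visible directly: β_RC enters with a positive weight as if it were a matched baseline, even though action and visual series are continuously mismatched in that condition.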

Figure 6.

Functional data are projected onto a single participant’s high-resolution structural scan, registered to standard MNI-space coordinates. Panel A: Visual cortex activation for the interaction between the Random/Sequential visual display and Incongruent/Congruent hand movement factors. Panel B: Left hemisphere superior parietal cortex activation (in red shading) for conjunction of mismatch conditions compared to sequential congruent. Red highlighted areas were significantly more active in the conjunction of the two main effects (Incongruent > Congruent, Random > Sequential). Panel C: Left hemisphere dorsal premotor cortex activation for the conjunction as noted in Panel B. CS = central sulcus; PCS = postcentral sulcus. Thresholding of contrasts was at Z > 2.6, with clusters thresholded at p < .05. Bar graphs to the right of each map show average percentage signal change in the displayed area for the four experimental conditions (Seq = Sequential; Rnd = Random; Con = Congruent; Inc = Incongruent).

To address this issue, we calculated a conjunction of the areas found to be differentially activated for the two main effect contrasts: (1) Incongruent compared to Congruent, and (2) Random compared to Sequential (looking at the reverse of this conjunction revealed no commonly active areas). This conjunction effectively controls for neural differences in performed hand action (both the sequential and random congruent conditions had the same hand movement) and viewed visual series (the sequential congruent and incongruent conditions had the same visual stimuli). Therefore it is likely that activations revealed by this conjunction are related to the integration of motor efference copy with visual inputs, rather than simple effects due to either visual or motor input on its own.
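The chapter does not state how the conjunction was computed. One common approach, assumed here purely for illustration, is a minimum-statistic conjunction: a voxel is retained only if its Z value exceeds the threshold in both contrasts, which is equivalent to requiring that the smaller of the two Z values exceed it. The sketch uses the voxelwise threshold Z > 2.6 quoted in the figure captions, omits the cluster-level correction, and runs on arbitrary illustrative values rather than study data; `conjunction_mask` is a hypothetical helper.

```python
Z_THRESHOLD = 2.6  # voxelwise threshold quoted in the figure captions


def conjunction_mask(z_incongruent_vs_congruent, z_random_vs_sequential,
                     threshold=Z_THRESHOLD):
    """Minimum-statistic conjunction (an assumed method): a voxel survives
    only if it exceeds the threshold in BOTH main-effect contrasts."""
    if len(z_incongruent_vs_congruent) != len(z_random_vs_sequential):
        raise ValueError("Z maps must cover the same voxels")
    return [min(a, b) > threshold
            for a, b in zip(z_incongruent_vs_congruent, z_random_vs_sequential)]


# Arbitrary illustrative Z values for four voxels (not data from the study).
z_inc_con = [3.1, 2.9, 1.2, 4.0]
z_rnd_seq = [2.8, 1.5, 3.3, 3.0]
mask = conjunction_mask(z_inc_con, z_rnd_seq)
```

Only the first and last voxels survive, because each of the other two falls below threshold in one of the contrasts. The study additionally applied cluster thresholding at p < .05, which this sketch leaves out.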

The results of the conjunction analysis were quite clear. Firstly, they confirmed that primary visual cortex activation was greater when hand action and visual series did not match (as shown for the interaction in Figure 6, panel A). We propose that this activation indicates that the discrimination task relies more heavily on early visual signals when there is no useful forward model information from the performed hand movement to apply to the task. Interestingly, two additional areas of activity appeared in the conjunction analysis that were not apparent in the factorial analysis: the left hemisphere superior parietal lobule (SPL), contralateral to the side of movement and visual presentation (red areas in Figure 6, panel B), and the left hemisphere dorsal premotor cortex (orange areas in Figure 6, panel C; see [25]).

Roles of posterior parietal cortex and primary visual cortex in motor-visual priming

We propose that this SPL activity codes for, or updates, an internal model of the state of the contralateral hand. Several other studies support this interpretation. Firstly, a patient with a cyst occluding her left SPL has been reported to lose track of the position of her right arm when denied visual feedback of this limb [26]. Secondly, disrupting local processing in the superior posterior parietal cortex (PPC) with repetitive transcranial magnetic stimulation (rTMS) interferes with the ability to judge whether visual feedback of a virtual hand is temporally coincident with hand movement. This effect occurred only for active hand movement; no differences were seen for judgments of virtual-reality feedback during passive movement. This was taken as evidence that the superior PPC is involved in integrating motor efference copy (available only during active movement) with visual feedback, so that when processing in this area was disrupted with rTMS, these comparisons became less accurate [27].

Thirdly, a brain imaging study using positron emission tomography has suggested that viewing inaccurate or misleading visual feedback may increase activation in the SPL [28]. Participants performed a bimanual task in which they opened and closed both hands, either in phase with each other or 180° out of phase, always looking toward their left hand. On some trials, however, the view of the left hand was replaced by a mirror, so that participants saw a mirror image of their right hand in its place. Activity in the right hemisphere SPL (contralateral to the observed hand) increased when the hands moved out of phase compared to in phase, and also when participants viewed the mirror image compared to the actual left hand [28]. These results suggest that as visual feedback about the left hand becomes less reliable (replaced by a mirror image of the right hand moving in phase with it, or by a mirror image moving out of phase), the SPL has to work harder, presumably to maintain an accurate representation of the left hand’s actual state.

To summarize our results, we believe that the superior parietal lobule maintains a dynamic estimate of hand state, based on the forward model estimate calculated from motor efference copy and visual feedback. In the mismatch conditions, the observed visual images provide an inaccurate index of hand state. The increase in SPL activation in these conditions probably reflects increased processing to resolve the mismatch between action and visual input, in order to produce a more accurate estimate of hand state. Under these mismatch conditions, however, this estimate would be of no use for the visual discrimination task. We therefore suggest that the increase in primary visual cortex activity, which was mainly in the left hemisphere, contralateral to the side of hand image presentation, represents an increased reliance on early visual processing of these stimuli. This allows the task to be performed correctly, but with a slight delay relative to the sequential congruent condition, in which forward model output can facilitate the discrimination task.
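The account above, in which a forward model prediction is continually compared with visual feedback, can be made concrete with a toy estimator. This is a minimal sketch under stated assumptions, not the authors' model: hand state is reduced to a single number (for example, how far the hand is open), the efference copy is treated as the change in state each command should produce, and the prediction is corrected by visual feedback through a fixed gain (a constant-gain observer, a simplified relative of the Kalman filter). The class and function names, the gain of 0.3, and the command and feedback sequences are hypothetical. The size of the prediction error stands in, loosely, for the extra processing we attribute to the SPL in the mismatch conditions.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class HandStateEstimator:
    """Hypothetical 1-D forward-model observer of hand state."""
    estimate: float = 0.0  # current estimate of hand state (arbitrary units)
    gain: float = 0.3      # assumed weight given to visual feedback (0 to 1)

    def step(self, efference_copy: float, visual_feedback: float) -> float:
        """Advance one time step and return the visual prediction error."""
        # Forward model: predict the next state from the issued command.
        predicted = self.estimate + efference_copy
        # Compare the prediction with what is actually seen.
        error = visual_feedback - predicted
        # Correct the prediction by a fixed fraction of the error.
        self.estimate = predicted + self.gain * error
        return error


def run_trial(commands: List[float], feedback: List[float]) -> List[float]:
    """Return the prediction error at each step of a simulated trial."""
    estimator = HandStateEstimator()
    return [estimator.step(u, y) for u, y in zip(commands, feedback)]


# Open the hand over three steps, then close it over three steps.
commands = [1.0, 1.0, 1.0, -1.0, -1.0, -1.0]
congruent = [1.0, 2.0, 3.0, 2.0, 1.0, 0.0]   # feedback matches the movement
mismatched = [3.0, 1.0, 0.0, 2.0, 1.0, 2.0]  # same postures, shuffled order

print(sum(abs(e) for e in run_trial(commands, congruent)))        # 0.0
print(sum(abs(e) for e in run_trial(commands, mismatched)) > 0)   # True
```

Because the congruent feedback is exactly what the forward model predicts, its errors are zero; with the shuffled sequence the errors are non-zero, so in this toy setting a judgment about the viewed posture could not be read off the prediction and would have to rely on the visual input itself.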

Integrating functional imaging and behavioral results

We have argued that the behavioral effects we observed are primarily related to forward modeling of hand state, and to increased dependency on visual input when this forward model is not reliable. Our functional imaging experiment localized differences in brain activity between the sequential congruent condition and the mismatch conditions to the superior parietal lobule, an area implicated in maintaining forward-model-based estimates of hand state. This localization is also consistent with our finding that the congruency effect is limited to visual judgments of hand state (Experiment 2).

If our interpretation of the changes in brain activity is accurate, one might hypothesize that during third-person perspective trials (as in Experiment 4), primary visual cortex activity would be similarly potentiated relative to first-person sequential congruent trials. Such a result would support the idea that increased dependency on early visual signals mediates the congruency RT effect. Conducting Experiment 4 with fMRI would therefore yield useful information about both the role of the superior parietal lobule and the nature of the processing in third-person perspective trials. If the SPL were not activated during these trials, this might indicate that these stimuli are not treated as visual feedback of the performed movement. Alternatively, these stimuli may be processed in a similar manner to the first-person incongruent stimuli.

Finally, we can speculate on the significance of the null congruency effect in Experiment 3, in which visual feedback was intermittent. In light of the functional imaging data, it seems reasonable to assume that the forward modeling process is engaged by the need to integrate motor efference copy and visual feedback. Switching visual feedback on and off might disengage this process, or the output of this process might persist only for a short time, perhaps in a similar manner to the temporal limits of visuomotor priming [9].

Conclusions

In the introduction, we summarized behavioral experiments that have tested various aspects of the interaction between motor and visual processes. Our own results, which we believe indicate motor-visual priming, show that information derived from performing hand movements can be used to aid judgments in a related visual discrimination task. The functional imaging data localized the neural basis of this effect to the left hemisphere superior parietal lobule, where the integration of motor efference copy and visual feedback is hypothesized to take place, and to primary visual cortex, which appears to be relied on more heavily when forward modeling of actual hand position is not relevant to the visual task.

As a final point, we cannot currently state with any certainty whether this area of the superior parietal lobule is part of the forward modeling process itself, or simply receives the output of this process from elsewhere. We presume that forward modeling occurs throughout the entire duration of the hand movements, regardless of the nature of the visual feedback. The fMRI contrasts may therefore be insensitive to the site of this forward modeling, as this process should occur in all active hand movement conditions. Although we have some data comparing these active conditions with a passive version of the RT task, it is not possible to separate the neural activations involved in forward model processing from more general motor-related brain activity. Future research should address the site of this processing.

Acknowledgements

This work was funded by the Wellcome Trust and the J S McDonnell Foundation. We thank the Oxford FMRIB centre for use of their facilities and their support. We also thank Jonathan Winter for his continuous technical assistance, and Peter Hansen for assistance with preliminary analysis of the fMRI data.

Reference List

Further reading:

We suggest that the interested reader start with three papers from the following reference list: [1,17,24].

1. Miall RC, Wolpert DM. Forward models for physiological motor control. Neural Netw. 1996;9:1265–1279. doi: 10.1016/s0893-6080(96)00035-4.

2. Wolpert DM, Miall RC, Kawato M. Internal models in the cerebellum. Trends Cogn Sci. 1998;2:338–347. doi: 10.1016/s1364-6613(98)01221-2.

3. Blakemore SJ, Wolpert DM, Frith CD. Central cancellation of self-produced tickle sensation. Nat Neurosci. 1998;1:635–640. doi: 10.1038/2870.

4. Parsons LM. Temporal and kinematic properties of motor behavior reflected in mentally simulated action. J Exp Psychol Hum Percept Perform. 1994;20:709–730. doi: 10.1037//0096-1523.20.4.709.

5. Parsons LM, Fox PT, Hunter Downs J, Glass T, Hirsch TB, Martin CC, Jerabek PA, Lancaster JL. Use of implicit motor imagery for visual shape discrimination as revealed by PET. Nature. 1995;375:54–58. doi: 10.1038/375054a0.

6. Frak V, Paulignan Y, Jeannerod M. Orientation of the opposition axis in mentally simulated grasping. Exp Brain Res. 2001;136:120–127. doi: 10.1007/s002210000583.

7. Craighero L, Fadiga L, Rizzolatti G, Umilta C. Action for perception: a motor-visual attentional effect. J Exp Psychol Hum Percept Perform. 1999;25:1673–1692. doi: 10.1037//0096-1523.25.6.1673.

8. Craighero L, Bello A, Fadiga L, Rizzolatti G. Hand action preparation influences the responses to hand pictures. Neuropsychologia. 2002;40:492–502. doi: 10.1016/s0028-3932(01)00134-8.

9. Vogt S, Taylor P, Hopkins B. Visuomotor priming by pictures of hand postures: perspective matters. Neuropsychologia. 2003;41:941–951. doi: 10.1016/s0028-3932(02)00319-6.

10. Brass M, Bekkering H, Wohlschlager A, Prinz W. Compatibility between observed and executed finger movements: comparing symbolic, spatial, and imitative cues. Brain Cogn. 2000;44:124–143. doi: 10.1006/brcg.2000.1225.

11. Miall RC, Stanley J, Todhunter S, Levick C, Lindo S, Miall JD. Performing hand actions assists the visual discrimination of similar hand postures. Neuropsychologia. 2006;44:966–976. doi: 10.1016/j.neuropsychologia.2005.09.006.

12. Schofield WN. Do children find movements which cross body midline difficult? Q J Exp Psychol. 1976;28:571–582.

13. Bekkering H, Wohlschlager A, Gattis M. Imitation of gestures in children is goal-directed. Q J Exp Psychol A. 2000;53:153–164. doi: 10.1080/713755872.

14. Kilner JM, Paulignan Y, Blakemore SJ. An interference effect of observed biological movement on action. Curr Biol. 2003;13:522–525. doi: 10.1016/s0960-9822(03)00165-9.

15. Perani D, Fazio F, Borghese NA, Tettamanti M, Ferrari S, Decety J, Gilardi MC. Different brain correlates for watching real and virtual hand actions. Neuroimage. 2001;14:749–758. doi: 10.1006/nimg.2001.0872.

16. Han S, Jiang Y, Humphreys GW, Zhou T, Cai P. Distinct neural substrates for the perception of real and virtual visual worlds. Neuroimage. 2005;24:928–935. doi: 10.1016/j.neuroimage.2004.09.046.

17. Rizzolatti G, Fadiga L, Gallese V, Fogassi L. Premotor cortex and the recognition of motor actions. Brain Res Cogn Brain Res. 1996;3:131–141. doi: 10.1016/0926-6410(95)00038-0.

18. Gallese V, Fadiga L, Fogassi L, Rizzolatti G. Action recognition in the premotor cortex. Brain. 1996;119(Pt 2):593–609. doi: 10.1093/brain/119.2.593.

19. Kohler E, Keysers C, Umilta MA, Fogassi L, Gallese V, Rizzolatti G. Hearing sounds, understanding actions: action representation in mirror neurons. Science. 2002;297:846–848. doi: 10.1126/science.1070311.

20. Keysers C, Kohler E, Umilta MA, Nanetti L, Fogassi L, Gallese V. Audiovisual mirror neurons and action recognition. Exp Brain Res. 2003;153:628–636. doi: 10.1007/s00221-003-1603-5.

21. Umilta MA, Kohler E, Gallese V, Fogassi L, Fadiga L, Keysers C, Rizzolatti G. I know what you are doing. A neurophysiological study. Neuron. 2001;31:155–165. doi: 10.1016/s0896-6273(01)00337-3.

22. Rizzolatti G, Fogassi L, Gallese V. Neurophysiological mechanisms underlying the understanding and imitation of action. Nat Rev Neurosci. 2001;2:661–670. doi: 10.1038/35090060.

23. Grezes J, Decety J. Functional anatomy of execution, mental simulation, observation, and verb generation of actions: a meta-analysis. Hum Brain Mapp. 2001;12:1–19. doi: 10.1002/1097-0193(200101)12:1<1::AID-HBM10>3.0.CO;2-V.

24. Iacoboni M. Understanding others: imitation, language, and empathy. In: Hurley S, Chater N, editors. Perspectives on Imitation, 1: Mechanisms of Imitation and Imitation in Animals. 2005. pp. 77–100.

25. Stanley J, Miall RC. Functional activation in parieto-premotor and visual areas dependent on congruency between hand movement and visual stimuli during motor-visual priming. Neuroimage. 2006;34:290–299. doi: 10.1016/j.neuroimage.2006.08.043.

26. Wolpert DM, Goodbody SJ, Husain M. Maintaining internal representations: the role of the human superior parietal lobe. Nat Neurosci. 1998;1:529–533. doi: 10.1038/2245.

27. MacDonald PA, Paus T. The role of parietal cortex in awareness of self-generated movements: a transcranial magnetic stimulation study. Cereb Cortex. 2003;13:962–967. doi: 10.1093/cercor/13.9.962.

28. Fink GR, Marshall JC, Halligan PW, Frith CD, Driver J, Frackowiak RS, Dolan RJ. The neural consequences of conflict between intention and the senses. Brain. 1999;122(Pt 3):497–512. doi: 10.1093/brain/122.3.497.