Abstract

Objectives

Despite the importance of verbal learning and memory in speech and language processing, this domain of cognitive functioning has been virtually ignored in clinical studies of hearing loss and cochlear implants in both adults and children. In this paper, we report the results of two studies that used a newly developed visually based version of the California Verbal Learning Test (CVLT-II), a well-known normed neuropsychological measure of verbal learning and memory.

Design

The first study established the validity and feasibility of a computer-controlled visual version of the CVLT-II, which eliminates the effects of audibility of spoken stimuli, in groups of young normal-hearing and older normal-hearing adults. A second study was then carried out using the visual CVLT-II format with a group of older postlingually deaf experienced cochlear implant (ECI) users (N=25) and a group of older normal-hearing (ONH) controls (N=25) who were matched to ECI users for age, socioeconomic status, and non-verbal IQ. In addition to the visual CVLT-II, subjects provided data on demographics, hearing history, non-verbal IQ, reading fluency, vocabulary, and short-term memory span for visually presented digits. ECI participants were also tested for speech recognition in quiet.

Results

The ECI and ONH groups did not differ on most measures of verbal learning and memory obtained with the visual CVLT-II, but deficits were identified for ECI participants that were related to recency recall, the build-up of proactive interference (PI), and retrieval-induced forgetting. Within the ECI group, non-verbal fluid IQ, reading fluency, and resistance to the build-up of PI from the CVLT-II consistently predicted better speech recognition outcomes.

Conclusions

Results from this study suggest that these underlying foundational neurocognitive abilities are related to core speech perception outcomes following implantation in older adults. Implications of these findings for explaining individual differences and variability and predicting speech recognition outcomes following implantation are discussed.

Introduction

Cochlear implants (CIs) work and often work very well for many children and adults with profound sensorineural hearing loss. There is no question in anyone’s mind at this time that CIs are “one of the great success stories of modern medicine,” representing a significant engineering and medical milestone in the treatment of sensorineural hearing loss (Wilson et al. 2011). Unfortunately, while many CI patients display substantial benefits in recognizing speech and biologically significant environmental sounds and understanding spoken language following implantation, a significant number of patients have poor outcomes and display less than optimal benefits following implantation, even after several years of experience with their CIs. The estimates of poor outcomes following cochlear implantation fall in the 25 to 30 percent range, depending on the behavioral criteria that are used to assess benefit and outcomes (Rumeau et al. 2015; Lenarz et al. 2012). Most patients who have received CIs get some benefit from their implants under idealized, highly controlled listening conditions in the audiology clinic or research laboratory, but in everyday real-world conditions they commonly report significant difficulties in listening to speech in noise, especially multi-talker babble, communicating over the telephone, or listening under conditions of high cognitive workload. Recognizing and understanding speech produced by non-native speakers or speakers who have marked unfamiliar regional dialects are also very serious challenges for most patients who have received CIs (see Tamati & Pisoni 2014). CI users’ abilities to rapidly adapt and adjust to highly variable adverse listening conditions are significantly compromised, compared to young normal-hearing listeners.

Understanding and explaining the reasons for poor outcomes following cochlear implantation is a challenging research problem, with significant gaps in our knowledge remaining, despite the pressing clinical significance of this issue (see Moberly et al. 2016a, b). Only a small number of conventional clinical factors have been identified as predictors of speech recognition outcomes, such as amount of residual hearing preoperatively, previous use of a hearing aid, patient age, and duration of hearing loss prior to implantation (Green, Bhatt, Mawman et al., 2007; Kelly, Purdy, & Thorne, 2005; Lazard et al., 2012; Leung et al., 2005). However, it remains unclear why some patients do extremely well with their CIs while other patients struggle and fail to reach optimal levels of speech recognition performance even under quiet conditions in the clinic or laboratory. This issue represents a significant gap in our current knowledge of speech recognition outcomes following cochlear implantation and is an important barrier to progress in developing novel personalized interventions to help patients with suboptimal outcomes improve their speech recognition performance.

Patients with CIs generally have difficulty understanding speech in noise, recognizing music and non-speech biologically significant environmental sounds, and processing acoustic signals under challenging listening conditions (Limb & Roy, 2014; Shafiro, Gygi, Cheng, Vachhani, & Mulvey, 2011; Srinivasan, Padilla, Shannon, & Landsberger, 2013). Part of the difficulty they have stems from the nature and integrity of the electrical signal they receive through their implant, which is spectrally degraded, frequency-shifted, significantly underspecified, and sparsely coded relative to the original unprocessed signal presented at the ear (Friesen, Shannon, Baskent, & Wang, 2001). The critical acoustic-phonetic cues encoded in the signal that support robust speech intelligibility are coarsely coded, and the fine-grained phonetic and indexical details of the original speech signal are significantly compromised during neural encoding and transmission to higher neurocognitive information processing centers of the brain. While some of the elementary acoustic-phonetic cues needed for speech recognition in quiet are encoded and preserved, these minimal phonetic attributes are fragile and poorly specified by the current generation of signal processing algorithms used clinically in CIs today. As a result, subsequent neural coding and memory representations in sensory and working memory are also fragile, lacking important episodic context cues and critical dynamic information about coarticulation that reflects the encoding of the motions of the talker’s vocal tract. These contextual variations in the speech waveform are essential for robust recognition of speech in noise and under challenging listening conditions or under high cognitive load (Mattys et al. 2012).

In addition to these considerations about the degraded nature of the signal a listener receives through a CI, several researchers have argued recently that a much broader based systems-approach should be adopted to begin investigating the underlying basis of the enormous individual differences and variability routinely observed after implantation (Pisoni & Kronenberger 2010). The variability universally observed in conventional speech recognition outcome measures not only reflects the efficiency and quality of early sensory registration and the fidelity of encoding of acoustic signals by the auditory nerve, but it also reflects the foundational contributions of the information processing system as a whole. Robust speech recognition and spoken language comprehension rely heavily on basic foundational cognitive information processing operations such as attention, learning, memory, and inhibitory control processes, which are actively used by listeners to support the initial encoding, processing, storage and retrieval of linguistic information carried by the speech waveform (Altman, 1990; Borden, Harris & Raphael, 1994; Pisoni & Remez, 2005). Speech scientists and acoustical engineers have known for more than 60 years that speech recognition and spoken language processing do not take place in isolation in the ear or at the auditory periphery (Flanagan, 1972; Liberman, 1996; Stevens, 1998). Robust spoken word recognition and speech understanding reflect the final product of a long series of information processing stages that routinely draw on multiple cognitive resources and many different sources of knowledge in long-term memory, based on the listener’s prior experiences and unique developmental histories (Pisoni & McLennan 2015).

In a similar vein, there is a growing consensus among many clinicians and researchers in the field of CIs that although there is enormous variability routinely observed in our current endpoint outcome measures of speech recognition following implantation, these endpoint measures do not help us to understand and explain the underlying foundational basis for these individual differences (Wilson & Dorman, 2012). The current battery of behaviorally based outcome measures which are used universally at all CI centers around the world was never designed to assess or investigate individual differences in speech recognition outcomes. Rather, this test battery was developed to establish efficacy of CIs for FDA approval; none of the individual tests on these assessment batteries were developed to study the effectiveness of CIs in complex real-world environments or to investigate the information processing mechanisms underlying individual differences and variability in speech recognition outcomes (Pisoni et al. 2008).

In this paper, we report the results of a new study undertaken to investigate verbal learning and memory following implantation in a group of post-lingually deaf adults who received a CI for acquired severe-to-profound hearing loss. Verbal learning and memory are defined as encoding, storage, and retrieval processes using words presented over multiple trials. The most distinctive hallmark of spoken language comprehension is rapid adaptation and adjustment to novel input signals in many different listening environments. In the case of a successful CI user, rapid perceptual learning and efficient adaptation processes must compensate and normalize for acoustic-phonetic variability, as well as for the compromised underspecified signals delivered to the brain by the CI. Despite the central importance that learning and memory processes play in the perceptual processing and recognition of novel input signals, basic and clinical research on verbal learning and memory processes has been neglected in the research efforts carried out on patients with CIs, even though these cognitive processes are central to understanding successful adaptive functioning in adverse listening environments. Most clinicians and researchers in the CI field believe that the variability observed in speech recognition outcomes following implantation is simply due to differences in early registration and encoding of sensory information in short-term and working memory. This is true in spite of the critical importance of long-term memory (LTM) in supporting robust adaptive functioning and speech recognition in challenging or adverse listening environments. In these situations, prior linguistic knowledge and experience play compensatory roles when the bottom-up sensory information is significantly degraded (see Rönnberg et al. 2013). 
Thus, the premise of this study was that the use of a non-auditory measure of rapid verbal learning and memory could provide valuable insight into the information-processing mechanisms of CI users. Moreover, we predicted that findings from this measure of learning and memory would be related to speech recognition outcomes.

The present study used a well-known clinical neuropsychological instrument, the California Verbal Learning Test – Second Edition (CVLT-II; Delis et al. 2000), to measure several underlying information processing components and cognitive processes used to encode, store, process, and retrieve lists of familiar words from both short- and long-term memory. The CVLT-II and a variety of other verbal learning and memory tests such as the Rey Auditory Verbal Learning Test (RAVLT; Schmidt 1996), the Hopkins Verbal Learning Test (HVLT; Brandt 1991), and the Wide Range Assessment of Memory and Learning Verbal Learning subtest (WRAML; Sheslow & Adams 1990) are routinely used by neuropsychologists to identify memory weaknesses and impairments in a broad range of clinical populations. In addition to recall and recognition measures, the CVLT-II also provides very detailed information about the control processes and self-generated organizational strategies that an individual patient uses in a well-defined experimental task — free recall of categorized word lists — which has been studied and modeled extensively by cognitive and mathematical psychologists over the last 60 years (see Delis et al. 2000; Atkinson & Shiffrin 1968; Wixted & Rohrer 1993). Moreover, the CVLT-II has been used with several different clinical populations, providing critical behaviorally based benchmarks for comparison to establish strengths, weaknesses, and developmental milestones across a number of core foundational domains underlying verbal learning and memory processes.

The CVLT-II is a multi-trial free recall (MTFR) neuropsychological test that is used to study repetition learning effects and organizational strategies for verbal learning and memory. The test protocol produces a large amount of clinically relevant data in a short period of time. The scores obtained from the CVLT-II provide important diagnostic information about basic core verbal learning and memory processing mechanisms that are related to domains of executive functioning and cognitive control (cognitive processes involved in active recruitment and direction of mental activity in the service of attaining goals), including controlled attention, fluency-speed, abstraction, and self-generated retrieval organization strategies, along with measures of word recognition, encoding, storage, and retrieval strategies. Specifically, the MTFR methodology used in the CVLT-II provides measures of foundational cognitive processes underlying verbal learning and memory, including repetition-based multi-trial free recall, primacy and recency effects, proactive and retroactive interference, memory storage and decay in both immediate and delayed free- and cued-recall, as well as self-generated organizational strategies in memory retrieval such as serial, semantic, and subjective clustering. These organization strategies are routinely used by subjects to increase the strength of memory representations and make the encoding of verbal items in memory more accessible for retrieval during free recall tasks (Delis et al. 2000). Thus, it is reasonable to predict that measures obtained using the CVLT-II would be related to speech recognition performance in CI users.

In the traditional clinical use of the CVLT-II, participants are read a list of 16 familiar words (List A) five times by a trained examiner to assess repetition learning processes and free recall after each study trial. The 16 words on List A come from four semantic categories (animals, vegetables, ways of travelling, furniture). After the list is presented, the participant is asked to recall as many of the words from List A as possible in any order. This free recall procedure is then repeated four additional times using the same List A, with items always presented in the same order, for a total of five repetition learning study trials. After the fifth presentation and recall trial of items from List A, participants are presented with a new list of 16 words from List B, the “interference list,” to measure proactive interference (PI). List B also contains words from four semantic categories. Half of the new words on List B share semantic categories with words on List A (animals, vegetables); the other half of the items are new words from two different semantic categories (musical instruments, rooms of a house). After recall of List B, participants are then asked to recall List A again (short-delay free recall) to measure retroactive interference (RI) effects produced by List B. Following a 20-minute delay period, during which the participant is engaged in a non-verbal distractor task, the participant is asked to recall the words from List A again (long-delay free recall) to measure memory decay after a retention interval. After each of the two List A delayed free recall tasks, the subjects are also given a cued recall task using the four original semantic categories from List A as retrieval cues. Finally, after the long delay recall tests are completed, a Yes/No recognition memory test is administered to assess storage of items from List A without any demands on retrieval.

The present study builds on the results reported in two earlier studies using the CVLT-II carried out with postlingually deaf adults who had severe-to-profound hearing losses (Heydebrand et al. 2007; Holden et al. 2013). Both studies used a non-standard version of the CVLT-II with simultaneous visual (printed words) and live-voice auditory presentation of the test lists. Heydebrand and colleagues (2007) reported that auditory-visual CVLT-II scores based on a composite score of several component measures of free recall performance accounted for 42% of the variance in CNC monosyllabic word recognition scores six months post-implantation. Based on their findings from the CVLT, the authors suggested the potential use of verbal learning and memory tasks in predicting speech recognition outcomes for post-lingually deaf CI users, but they provided few details about precisely what kinds of verbal learning measures would be clinically useful and which specific domains of verbal memory and learning should be investigated.

In a more recent follow-up study with a larger sample size encompassing a broader age range, Holden and her colleagues (2013) also found a significant relation between a similar CVLT-II composite free recall score and speech recognition outcomes, but this correlation was eliminated when they controlled for chronological age. However, despite use of combined visual-auditory presentation of stimuli, both of these studies were also limited in their conclusions because their CVLT-II assessment results may have been influenced by variability in auditory capabilities of the participants. For example, there may have been interference and competition from the auditory modality, or alternatively participants with stronger auditory processing may have received more benefit from the auditory signal resulting in uncontrolled variance in the free recall scores from the CVLT. Moreover, detailed analysis and assessments of the critical process and contrast measures of performance that can be derived from the CVLT-II, which was designed to measure specific capacities of verbal encoding, storage, retrieval, and self-generated organizational strategies used in retrieval, were unfortunately not reported in either of these earlier studies. The process measures obtained from the CVLT-II are highly informative about underlying cognitive information processing strategies, because they provide very detailed information and quantitative measures about what participants are doing with the verbal information they encode, store, and retrieve from memory in this task. 
Without examining the process measures provided by the CVLT-II, such as learning rates, proactive interference (PI) and retroactive interference (RI), retrieval inhibition, release from PI, and organizational strategies such as semantic, serial and subjective clustering of output responses, as well as response repetitions and intrusions in free recall, detailed insights cannot be gained into the possible differences in underlying information processing operations and neurocognitive mechanisms used by participants in carrying out the CVLT-II task protocol. Focusing on only the “first-order” primary free recall measures obtained from the immediate and delayed free recall trials provides only a global overall impression of the foundational information processing operations underlying verbal learning and memory for lists of categorized words in this clinical population. Without detailed information about the process measures on the CVLT-II, we obtain an incomplete picture of the strengths, weaknesses, and milestones in these patients.

Despite these concerns and reservations about the two earlier studies, the findings reported by Heydebrand et al. (2007) and Holden et al. (2013) suggest that measures of verbal memory and learning processes could serve as useful predictors of speech recognition outcomes in adult CI users and therefore might provide new process-based behavioral measures that could help explain the underlying basis of the enormous individual differences and variability observed in outcomes following implantation. The current study used a novel visual presentation version of the CVLT-II (the “V-CVLT”) to assess verbal memory and learning abilities in adults with postlingual severe-to-profound hearing loss who were experienced CI users. The use of the V-CVLT eliminated audibility issues and live-voice presentation by using visual presentation of test stimuli on a computer display screen. This visual-presentation format of the CVLT-II eliminates any concerns about the role of audibility and early auditory sensory processing as possible confounding factors influencing the verbal learning and memory measures obtained. Thus, any differences observed among CI users (or between CI users and normal-hearing control participants) cannot be due to modality-specific sensory effects related to audibility or early sensory processing and encoding. Rather, differences found must reflect levels of information processing that involve modality-general differences associated with verbal coding, phonological and lexical processing, storage, retrieval, and information processing operations that are not compromised by prior hearing loss or differences in audibility.

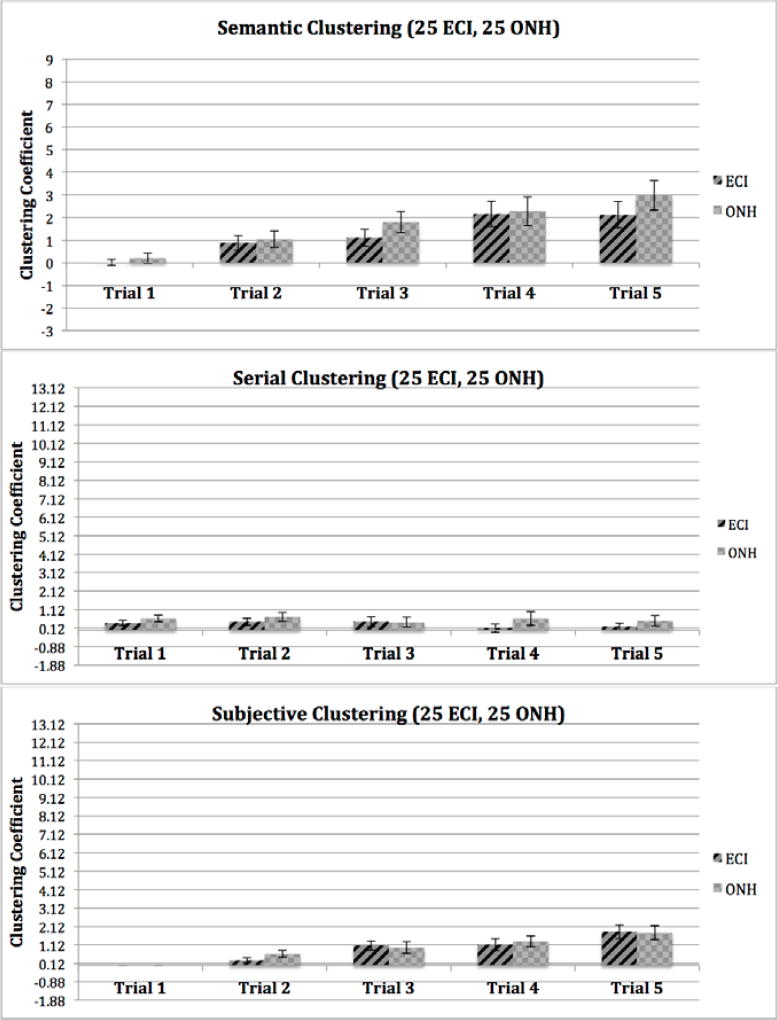

This report presents the results of two studies. Study 1 consisted of two phases (1a and 1b) that were performed to assess the validity and feasibility of the V-CVLT to ensure that the primary and process measures obtained from visual presentation of the CVLT-II would be equivalent to scores obtained from the conventional auditory, live-voice clinical version of the same task. Study 1a was carried out with two groups of young normal-hearing (YNH) adults at Indiana University in Bloomington; Study 1b was carried out with two groups of older normal-hearing (ONH) adults at The Ohio State University in Columbus. In each of these studies, we hypothesized that scores of primary and process measures of the V-CVLT would be equivalent to those obtained using the conventional live-voice auditory version of the CVLT-II, in both YNH (Study 1a) and ONH (Study 1b) adults. Study 2, which was also carried out at Ohio State, was designed to compare performance on the new V-CVLT between adult experienced CI (ECI) users and age-matched ONH control participants, and to investigate the relations between verbal learning and memory and speech recognition outcomes in the ECI users. In Study 2, we hypothesized that ECI participants would demonstrate deficits in some primary and process measures from the V-CVLT, compared with ONH peers, as a result of their experience of prolonged auditory deprivation. In particular, based on recent findings from Pisoni et al. (2016) with prelingually deaf adult CI users, we predicted that ECI participants would recall fewer list items over multiple presentations, as a result of less efficient encoding of verbal information, even using visual presentation.
We also predicted that ECI participants would show less semantic clustering and more serial clustering than ONH peers because semantic clustering requires allocation of additional cognitive resources during verbal learning and memory, which would be too taxing for CI users who already allocated maximum resources for basic recall of list items. Additionally, we hypothesized that several measures from the V-CVLT would correlate with speech recognition outcomes in ECI users. Understanding speech through a CI requires rapid perceptual learning and adaptation to the underspecified degraded auditory signals; thus, ECI participants with good speech recognition outcomes (as compared with ECI users with poor speech recognition) might be expected to demonstrate highly effective learning of word lists over serial presentation on the V-CVLT, and might demonstrate more effective retrieval strategies, such as use of semantic clustering. Finally, in addition to the V-CVLT, several non-auditory visually based neurocognitive measures were also obtained from listeners in both studies to investigate the relations between measures of verbal learning and memory obtained from the V-CVLT and neurocognitive scores from tests of non-verbal fluid reasoning (IQ), reading fluency, immediate memory span, and vocabulary knowledge. These additional measures served to ensure equivalence between groups enrolled in the validation studies (1a and 1b), as well as to relate findings on these more traditional neurocognitive measures with scores on the V-CVLT.

Study 1a – Validation and Feasibility of V-CVLT in Young Normal-Hearing Adults

Methods

Participants and Procedures

Forty participants between the ages of 18 and 54 (mean 23.3 years) were recruited via flyers posted around the Indiana University Bloomington campus, listings on the IU Classifieds online advertisement system, and ads on IU’s paid subject pool website. Participants reported minimal to no history of any speech and hearing problems and received compensation at the rate of $5 per half hour for the time they were in the lab, for a total of $10. The present study consisted of measurement of verbal learning and memory and assessment of several domains of neurocognitive functioning. Participants were randomly assigned (1:1) to either the visual (V-CVLT) or the conventional auditory (A-CVLT) presentation condition. All participants completed the same visually-presented versions of all of the other measures in the study.

Materials

Visual and Auditory CVLT-II. Two new computer-controlled versions of the California Verbal Learning Test – Second Edition (CVLT-II) were developed specifically for this validity and feasibility study. The CVLT-II provides verbal learning and memory scores based on participants’ recall of lists of words using the procedure described in the Introduction. The various measures obtained from the CVLT-II are described in Table 1. The conventional clinical administration of the CVLT-II is carried out using live-voice presentation (see Delis et al. 2000). The current validity and feasibility study used two on-line computer-controlled versions of the CVLT-II that followed the instructions and procedures of the live-voice CVLT-II as closely as possible. The first version, called the “Auditory” condition (A-CVLT), included the same words and followed the same order of trials as the original live-voice CVLT-II, but replaced live-voice presentation with digital audio recordings of the test items that were created off-line by an experienced clinician. The recorded test words were played at a comfortable listening level through a high-quality loudspeaker (Advent, Model AV-570) placed next to a computer display screen, with successive words separated by 650 msec. The second version of the CVLT-II was a “Visual” presentation condition (V-CVLT), in which the test words from both List A and List B were presented visually in printed English on the computer display screen for 1 second each, with the same 650 msec interval between words. For both presentation conditions, a research assistant sitting next to the participant also read aloud the printed instructions for each trial, which were modified slightly from the original CVLT-II instructions to match the computer-controlled versions of the two tasks.
All participants also completed an on-line non-verbal distractor task using the Raven’s Progressive Matrices (Ravens; Raven et al. 1998) for a fixed 15-minute period between the short- and long-delay recall trials to block active rehearsal. Scores on all CVLT-II trials were calculated as raw scores and as percent-correct scores, depending on the comparisons of interest. Several normed scores were also obtained from the CVLT-II scoring software (see Table 1).

Table 1.

CVLT-II Scores and Descriptions

| Score | Description |
|---|---|
| List A Trial N | Number of words recalled following the Nth exposure to List A, where N=1 to 5 |
| List A Trials 1–5 | Total number of words recalled across all 5 List A exposure trials |
| List B | Number of words recalled following exposure to List B, the “interference list” |
| Short-Delay Free Recall (SDFR) | Immediately after List B recall, number of List A words recalled (List A is not read again for this trial or for the remainder of the test) |
| Short-Delay Cued Recall (SDCR) | Immediately after SDFR, number of List A words recalled when the subject is provided with each of the four semantic categories (furniture, vegetables, ways of traveling, animals) for List A as cues |
| Long-Delay Free Recall (LDFR) | Following a 20-minute delay after SDCR, number of List A words recalled |
| Long-Delay Cued Recall (LDCR) | Immediately after LDFR, number of List A words recalled when the subject is provided with each of the four semantic categories (furniture, vegetables, ways of traveling, animals) for List A as cues |
| Long-Delay Recognition | Immediately after LDCR, percentage of words identified accurately as List A words from a list of 48 words (16 List A words, 16 List B words, and 16 distractor words) |
| Primacy Recall | Percentage of the first four words on the list that are recalled on a trial or set of trials (note that this measure differs from the method of calculating Primacy Recall in the CVLT-II manual) |
| Pre-recency Recall | Percentage of the middle eight words on the list that are recalled on a trial or set of trials |
| Recency Recall | Percentage of the last four words on the list that are recalled on a trial or set of trials (note that this measure differs from the method of calculating Recency Recall in the CVLT-II manual) |
| Serial Clustering | Chance-adjusted score for recall clustering based on serial order, obtained by taking the difference between the observed number of words recalled in the same serial order as presented and the number of words that would be recalled in the same serial order as presented by chance alone |
| Semantic Clustering | Chance-adjusted score for recall clustering based on semantic category, obtained by taking the difference between the observed number of words from the same semantic category recalled by the subject in serial contiguity and the expected number of words from the same semantic category that would occur in serial contiguity by chance alone |
| Subjective Clustering | Use of the same idiosyncratic clustering strategy in free recall across trials following each list presentation |
| Intrusions | Words recalled by the subject that were not part of the target list |
| Perseverations (Repetitions) | Words repeated by the subject in response to the same trial |
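
The serial-position, intrusion, and repetition scores in Table 1 can be illustrated with a minimal Python sketch. This is not the CVLT-II scoring software: the function names and the placeholder items (`item01` to `item16`, plus the intrusion `zebra`) are hypothetical, and the sketch assumes that a repeated response counts only as a repetition even when it is also an intrusion. Only the split into the first four, middle eight, and last four list positions is taken from Table 1.

```python
# Hypothetical helpers for illustration only; not the CVLT-II scoring software.

def serial_position_recall(list_words, recalled_words):
    """Percent recalled from the first 4, middle 8, and last 4 items of a 16-word list."""
    if len(list_words) != 16:
        raise ValueError("expected a 16-word list")
    recalled = {w.lower() for w in recalled_words}
    regions = {
        "primacy": list_words[:4],        # first four list positions
        "pre_recency": list_words[4:12],  # middle eight list positions
        "recency": list_words[12:],       # last four list positions
    }
    return {
        name: 100.0 * sum(w.lower() in recalled for w in words) / len(words)
        for name, words in regions.items()
    }


def intrusions_and_repetitions(list_words, recalled_words):
    """Count non-list responses (intrusions) and repeated responses within one trial."""
    targets = {w.lower() for w in list_words}
    seen = set()
    intrusions = 0
    repetitions = 0
    for word in recalled_words:
        word = word.lower()
        if word in seen:
            # Assumption: any repeated response is scored as a repetition only.
            repetitions += 1
            continue
        seen.add(word)
        if word not in targets:
            intrusions += 1
    return intrusions, repetitions


# Placeholder items, NOT the actual CVLT-II word lists.
study_list = [f"item{i:02d}" for i in range(1, 17)]
responses = ["item01", "item02", "item16", "item15", "item08", "item01", "zebra"]

print(serial_position_recall(study_list, responses))
print(intrusions_and_repetitions(study_list, responses))
```

In this toy trial, two of the first four items, one of the middle eight, and two of the last four are recalled, with one intrusion and one repetition.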

Neurocognitive Measures. In addition to the Raven’s Progressive Matrices, which was completed during the 15-minute delay period in the CVLT-II protocol, all participants completed three additional neurocognitive tasks to ensure that both groups of subjects were comparable, not only on non-verbal fluid IQ from the Ravens, but also on reading fluency, vocabulary knowledge, and short-term memory capacity. First, the Test of Word Reading Efficiency, Second Edition (TOWRE-2; Torgesen et al. 2012), a reading assessment, was administered to obtain a measure of reading fluency and speed using visually presented words and non-words. Participants were given 45 seconds to read aloud as many words as accurately as possible from a list of 108 words that increased in difficulty as the list progressed. Next, a list of 66 non-words of increasing difficulty was presented, and participants were again given 45 seconds to read aloud as many non-words as possible. In both conditions of the TOWRE-2 test, all of the stimulus items were presented visually on the computer screen in columns. After the 45-second time period, the screen went blank. Raw scores were the number of words, the number of non-words, and the total number of items read aloud correctly.

Second, a visual word familiarity task, the WordFam-150 test, was administered to measure vocabulary knowledge and lexicon size (Lewellen et al. 1993). One hundred and fifty-two words varying in frequency and subjective familiarity were presented visually on the computer display screen, one at a time, along with a rating scale ranging from one to five on which participants recorded their familiarity with each word. In this task, a rating of 1 indicated low familiarity, whereas a rating of 5 indicated high familiarity. Each participant’s subjective word familiarity score was calculated by averaging his or her familiarity ratings over all words.

Finally, participants completed a forward Visual Digit Span test using visually presented sequences of digits. Random sequences of numbers from 2 to 13 digits in length were presented one digit at a time on the computer display screen. After each test sequence was presented, participants saw a 3 X 3 grid of numbers on the computer display and were instructed to use their mouse to click the numbers that they saw in the same order in which they were presented on the computer screen. An up-down adaptive testing algorithm was used to increase the sequence length if the subject correctly reproduced the test sequence items. Two sequences were presented at each length. If the subject correctly reproduced both sequences at a given list length, the algorithm increased the sequence length on the next trial. If the subject made two errors in a row, the algorithm decreased the length of the next test sequence. The longest span that each participant correctly recalled was recorded, along with the total number of individual digits recalled in the correct order, out of 180.
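The up-down rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the software used in the study: the `respond` callback, the stopping rule (`max_trials`), the use of digits 1 to 9 for the 3 X 3 grid, and the handling of a mixed block (one correct and one incorrect sequence, which here repeats the same length) are all hypothetical choices that the text does not specify. Two sequences at each length from 2 to 13 contain 2 × 90 = 180 digits, which is consistent with the stated maximum of 180.

```python
import random


def run_digit_span(respond, min_len=2, max_len=13, max_trials=24, seed=0):
    """Simulate an up-down adaptive forward digit span session.

    respond(sequence) is a hypothetical callback returning the digits the
    participant clicked, in order. Returns (longest_span, lengths_presented).
    """
    rng = random.Random(seed)
    length = min_len
    longest_span = 0
    trials_at_length = 0    # sequences shown in the current block of two
    correct_at_length = 0   # of those, how many were reproduced exactly
    errors_in_row = 0
    lengths_presented = []

    for _ in range(max_trials):
        # Digits 1-9 are assumed because the response grid is 3 x 3.
        sequence = [rng.randint(1, 9) for _ in range(length)]
        lengths_presented.append(length)
        trials_at_length += 1

        if respond(sequence) == sequence:
            correct_at_length += 1
            errors_in_row = 0
            longest_span = max(longest_span, length)
        else:
            errors_in_row += 1

        if errors_in_row == 2:
            # Two consecutive errors: shorten the next sequence.
            length = max(min_len, length - 1)
            trials_at_length = correct_at_length = errors_in_row = 0
        elif trials_at_length == 2:
            if correct_at_length == 2:
                if length == max_len:
                    break  # ceiling reached
                length += 1  # both sequences correct: lengthen
            # Mixed block (assumed behavior): stay at the same length.
            trials_at_length = correct_at_length = 0

    return longest_span, lengths_presented
```

For example, a simulated participant who reproduces every sequence of five or fewer digits (`lambda s: list(s) if len(s) <= 5 else []`) ends the session with a longest span of 5, oscillating between lengths 5 and 6.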

Data Analyses

Five of the original 40 subjects were removed from the final data analysis because they were considered outliers based on their List A Trials 1–5 T-Score. Only subjects who fell within two SDs from the mean normative score (i.e., T-Scores between 30 and 70) were included, while subjects with higher or lower T-Scores were excluded. This procedure eliminated three subjects from the V-CVLT group who were at ceiling above the normative mean and two subjects from the A-CVLT group who were at the floor below the norm mean, leaving Ns of 17 and 18, respectively, in each group. Independent-samples t-tests were then performed separately to establish equivalency between the two groups on age, Ravens scores, TOWRE-2 scores, Visual Digit Span, and word familiarity ratings. Following these initial analyses, additional t-tests were performed to assess group differences in performance on the primary, process, and contrast measures obtained from the CVLT-II protocol. Relations between the neurocognitive tests and the CVLT-II scores were assessed using correlational analyses. ANOVA and post-hoc paired-comparisons were carried out to test for differences between groups and experimental variables.
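The exclusion rule above amounts to a simple range check on T-scores, which have a normative mean of 50 and an SD of 10. The sketch below is illustrative only: the function names and subject identifiers are hypothetical, and treating the boundaries (30 and 70) as inclusive is an assumption.

```python
def within_normative_range(t_score, mean=50.0, sd=10.0, n_sd=2.0):
    """Return True if a T-score lies within n_sd SDs of the normative mean."""
    return mean - n_sd * sd <= t_score <= mean + n_sd * sd


def split_outliers(t_scores):
    """Partition {subject_id: T-score} into retained and excluded ids."""
    retained = [sid for sid, t in t_scores.items() if within_normative_range(t)]
    excluded = [sid for sid, t in t_scores.items() if not within_normative_range(t)]
    return retained, excluded
```

With hypothetical scores `{"s01": 52, "s02": 74, "s03": 28}`, subject `s01` is retained, while `s02` (at ceiling) and `s03` (at floor) are excluded.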

Results

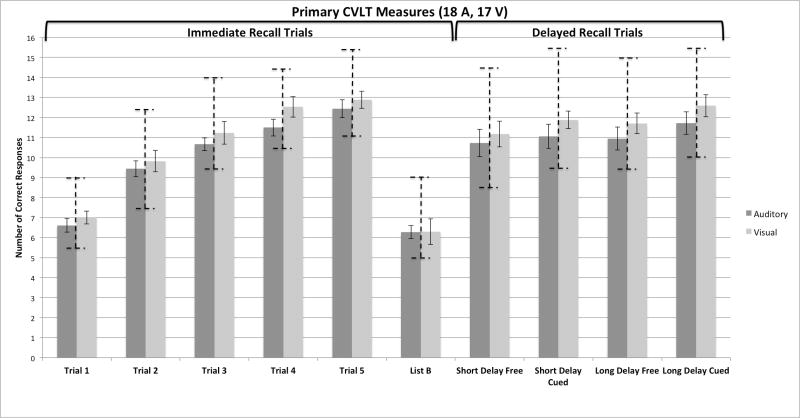

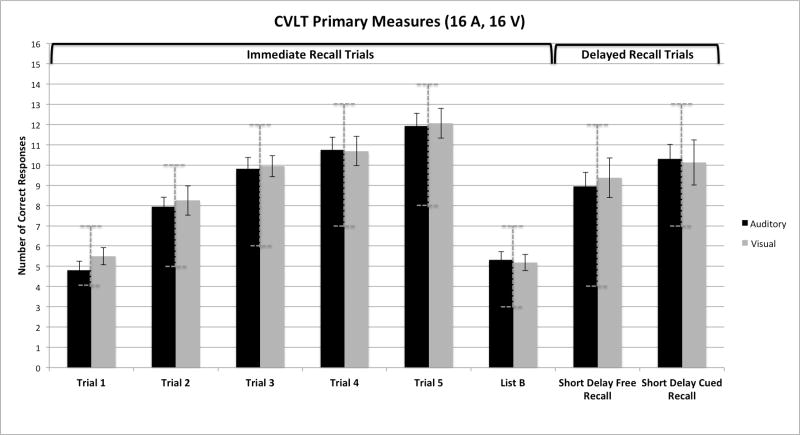

Figure 1 shows a global overall summary of the immediate and delayed free- and cued-recall scores obtained from the CVLT-II protocol. The figure is divided into two panels. On the left are the immediate free recall scores for the five repetitions of List A and the one presentation of List B (the interference list) obtained from the two groups of participants who were tested. The left set of bars in each panel of this figure shows the average free recall raw scores from the group of participants who were assigned to the A-CVLT; the right set of bars shows the scores from the group of participants who were assigned to the V-CVLT. Each bar represents the average number of correct responses out of the 16 items in each presentation of List A and List B. On the right of Figure 1 are the short-delay and long-delay free- and cued-recall scores obtained from both groups of subjects.

Figure 1.

Overall summary of the immediate and delayed free and cued recall scores obtained from the CVLT-II protocol in young normal-hearing adults. On the left are the immediate free recall scores for the five repetitions of List A and the single presentation of List B. On the right are the short-delay and long-delay free- and cued-recall scores obtained from both groups of subjects, who were tested using the auditory CVLT-II (A-CVLT) versus the visual CVLT-II (V-CVLT). The left set of bars in each panel shows the average free recall raw scores from the participants who were assigned to the A-CVLT; the right set of bars shows the scores from the participants who were assigned to the V-CVLT. The vertical dashed bars show the range of normative scores for each of the recall conditions based on age and gender.

In addition to the raw scores for each group, both panels in Figure 1 also display the range of normative scores for each of the recall conditions. These normative bars are shown as vertical dashed error bars superimposed on the raw scores and correspond to the normative range based on the gender and age of the participants included in each group. The normative range plotted here was found by first calculating the average age of males and females in each sample. The averaged ages were then used to look up the normed score for each trial at +/−1 SD from the mean in the CVLT-II manual. As shown in Figure 1, the mean of the two groups fell within +/− 1 SD from the normative means for all of the CVLT-II measures in both panels of the figure.

Inspection of the immediate free recall scores on the left in Figure 1 shows two main findings. First, both groups of subjects displayed robust repetition learning effects over the five presentations of List A. Performance improved continuously from Trial 1 to Trial 5 after each List A repetition (F = 99.29, p < .001). Second, both groups of participants showed comparable levels of immediate free recall performance after each repetition of List A over the five presentations. The main effect of group over the five learning trials was not statistically significant in a 2 × 5 ANOVA followed by a series of post-hoc paired-comparison tests (all p > .05). While the recall performance of both groups declined significantly overall for immediate free recall of the new words from List B compared to recall of words on List A Trial 1 (p < .001), both groups showed comparable free recall scores on this list, too. Although no group differences were found in immediate free recall for items on either List A or List B based on presentation modality, a significant linear trend was observed across the five study trials for List A as a function of repetition learning (F = 258.29, p < .001).

The short- and long-delay scores for the free- and cued-recall conditions of List A items are shown on the right of Figure 1 for both groups of subjects. The first two sets of bars on the right show the results for the short-delay free-recall (SDFR) and short-delay cued-recall (SDCR) conditions; the remaining two sets of bars show the results for the long-delay free-recall (LDFR) and long-delay cued-recall (LDCR) conditions. Examination of the raw scores in the four conditions of this panel, followed by independent-samples t-test comparisons between groups, also revealed no differences in either free or cued recall between the groups based on presentation modality (p>.05).

CVLT-II Process Measures

Looking at the overall average first-order measures of immediate and delayed free and cued recall performance shown in Figure 1 provides only a global superficial picture of the underlying organizational and processing strategies that subjects use in carrying out a multi-trial free recall task with categorized word lists. In addition to providing total recall scores summed across all serial positions following the five repetitions of List A, and the one repetition of List B (the interference list), we also looked at several other more detailed measures of the cognitive processes underlying verbal learning and memory using this task. These measures included examination of serial position curves, primacy and recency effects, proactive and retroactive interference (PI and RI), retrieval inhibition, release from PI, self-generated organizational strategies (semantic, serial, or subjective clustering), recall intrusion errors, and Yes/No recognition memory. There were no significant differences in any of these process measures between the two groups. The results that are most relevant for interpretation of Study 2 (primacy and recency effects, PI, RI, retrieval inhibition, and release from PI) are reported below.

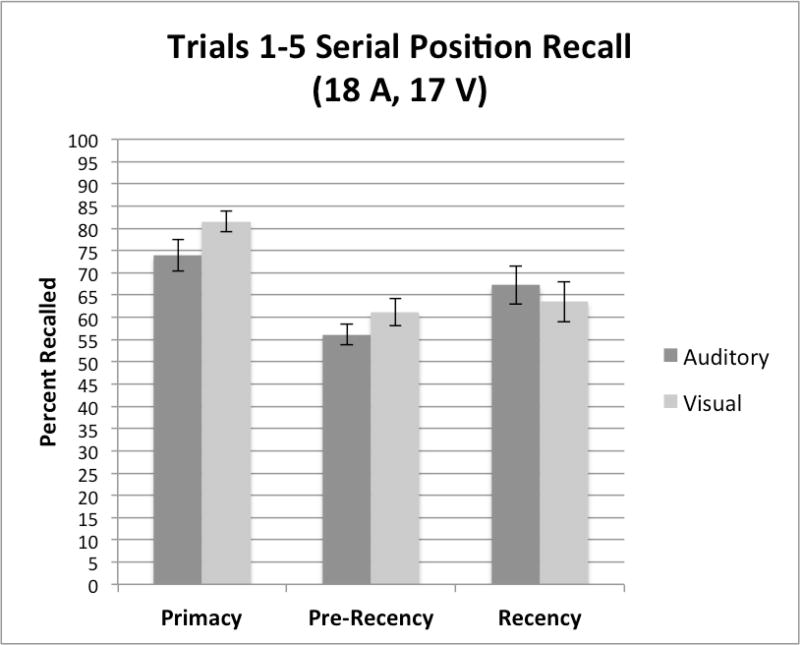

Primacy and Recency Effects

Analyses of recall of words from the primacy (first four items of the list), pre-recency (middle eight items), and recency (final four items) portions of List A were carried out. Recall of words from these three subcomponents of the serial position curve is assumed to reflect fundamentally different storage and retrieval processes used in carrying out free recall tasks (see Atkinson & Shiffrin 1971; Raaijmakers 1990). Figure 2 shows a summary of the free recall scores obtained from the primacy (left panel), pre-recency (center panel), and recency (right panel) components of the serial position curves of List A averaged over the five repetition learning trials for each group of subjects. Examination of this figure shows two patterns in the free recall scores. First, recall is better overall from the primacy than from the recency or pre-recency portions of the serial position curve (p<.005). Second, although there are reliable differences across the three serial positions, no differences in free recall are present in any of the three subcomponents between the two groups based on presentation modality. The absence of any differences due to presentation modality between the two groups in both the primacy and recency portions of the serial position curve suggests that early list items were successfully encoded and stored in memory and retrieved equivalently by both groups of subjects.
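The partition of the 16-item list described above (first four, middle eight, final four positions) can be made concrete with a short sketch. The function name and the placeholder item labels are hypothetical, and the example counts each correctly recalled word once regardless of repetitions, which is an assumption about scoring.

```python
def region_recall(presented, recalled):
    """Count correctly recalled words in each serial position region.

    presented: the 16 list words in presentation order.
    recalled: the words produced on one free recall trial.
    """
    regions = {
        "primacy": presented[:4],        # positions 1-4
        "pre_recency": presented[4:12],  # positions 5-12
        "recency": presented[12:],       # positions 13-16
    }
    recalled_set = set(recalled)  # repetitions and order are ignored here
    return {
        name: sum(word in recalled_set for word in words)
        for name, words in regions.items()
    }
```

Averaging these counts over the five List A trials would give per-subject values of the kind summarized in Figure 2.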

Figure 2.

Summary of free recall scores for the primacy (left panel), pre-recency (center panel), and recency (right panel) subcomponents of the serial position curves for List A averaged over the five repetition learning trials for the subjects, tested using the auditory CVLT-II (A-CVLT) versus the visual CVLT-II (V-CVLT).

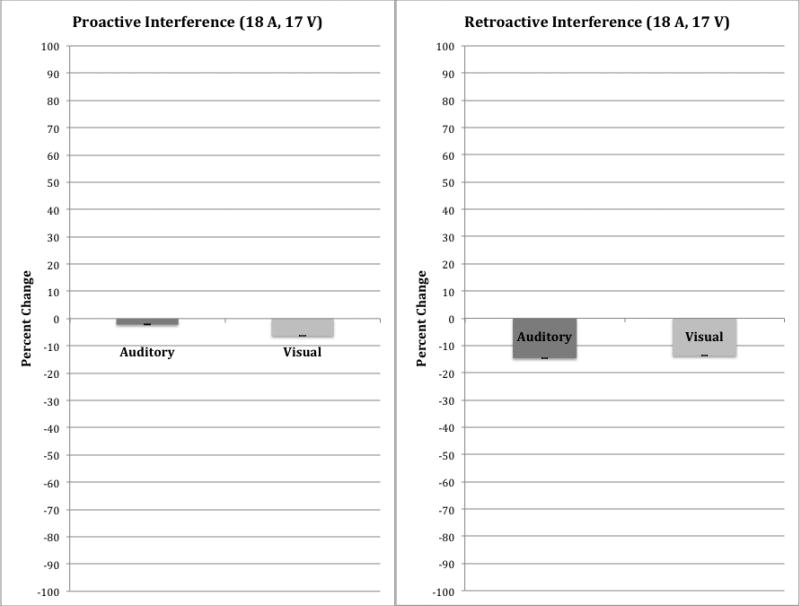

Proactive and Retroactive Interference

The free recall scores from List B, the interference list, are typically used to compute two measures of forgetting: a proactive interference (PI) index and a retroactive interference (RI) index. PI refers to forgetting of newly learned words on List B as a result of interference from previous learning of similar or related words from List A. In contrast, RI refers to the presence of forgetting that occurs when learning of new words on List B reduces the ability to remember words that were previously learned on List A. A measure of PI is obtained by subtracting the recall of words on List B from the immediate free-recall of words from List A Trial 1; a measure of RI is obtained by subtracting recall of short-delay free-recall of List A items from immediate free-recall of items on List A Trial 5. Figure 3 shows a summary of these two interference scores for both presentation conditions. The PI scores for both groups are shown in the left-hand panel while the RI scores are shown in the right-hand panel. Measures of PI (List B free-recall minus List A Trial 1 free-recall) and RI (List A short-delay free-recall minus List A Trial 5 free-recall) were obtained from the response protocols. Although we found slightly greater RI overall than PI, no differences were observed in either the PI or RI scores between the two groups of subjects based on presentation modality.
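As a worked illustration of the two difference scores, the sketch below computes PI and RI from four raw recall counts. The paragraph above states the subtractions in both orders, so the sign convention used here (List A Trial 1 minus List B, and List A Trial 5 minus short-delay free recall, so that larger positive values indicate more interference) is an assumption made for the example, not a statement of how the published scores were signed. The function and argument names are hypothetical.

```python
def interference_scores(list_a_trial1, list_b, list_a_trial5, short_delay_free):
    """Return (PI, RI) as raw-score differences.

    Sign convention (assumed): positive values mean more interference.
    """
    pi = list_a_trial1 - list_b            # proactive interference
    ri = list_a_trial5 - short_delay_free  # retroactive interference
    return pi, ri
```

For a hypothetical subject who recalls 7 words on List A Trial 1, 5 on List B, 13 on List A Trial 5, and 11 at short-delay free recall, both scores equal 2 under this convention.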

Figure 3.

Summary of the proactive interference (PI) and retroactive interference (RI) scores for auditory CVLT-II (A-CVLT) versus visual CVLT-II (V-CVLT). PI scores are shown in the left-hand panel; RI scores are shown in the right-hand panel.

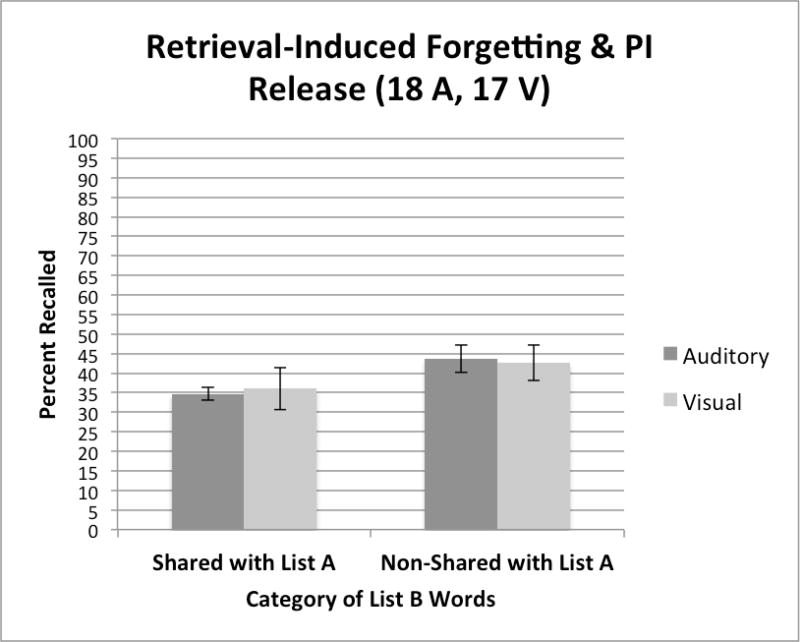

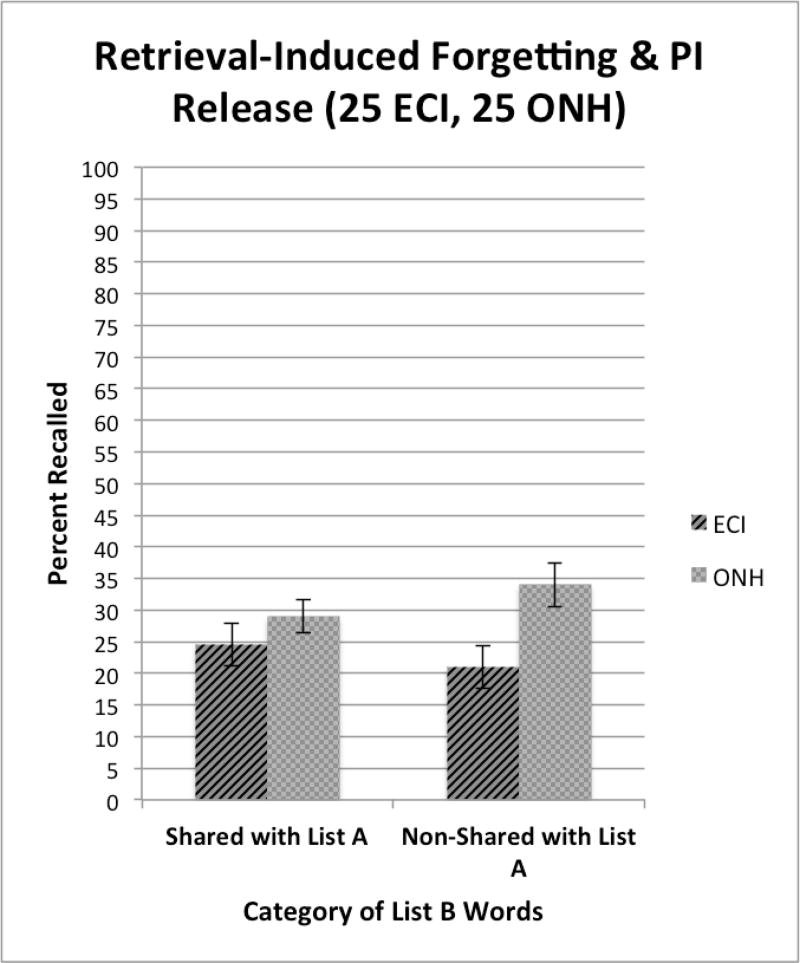

Retrieval Induced Forgetting and Release from PI

The composition of the specific test items used on List B of the CVLT-II, the interference list, was not only designed to measure the presence of PI and RI effects but was also created to assess several more subtle aspects of forgetting due to interference and inhibitory control processes in list learning experiments. Half of the test items on List B were new words that shared semantic categories with words on List A while the other half of the items on List B were new words that did not share any categories with the items on List A. Recall of List B words that share semantic features with words on List A provides a way to measure forgetting of new items due to “retrieval induced forgetting,” a form of forgetting that reflects interference or inhibitory control processes related to the activation of semantically similar items in memory (see Anderson et al. 1994). Retrieval induced forgetting has received a great deal of interest and attention from memory scientists in recent years because it demonstrates that sometimes the very act of remembering information and retrieving a memory trace from long-term memory can cause forgetting and memory loss (see Anderson et al. 1994; Storm et al. 2015). In contrast, recall of non-shared List B items on the CVLT provides a way to measure the improvement in free recall due to a “release from PI,” which reflects the restoration of the capacity to encode and recall studied words from one semantic category after switching the semantic categories of new study words to be recalled (Wickens et al. 1963; Wickens 1970). Thus, an examination of the free recall scores for the shared and non-shared items on List B provides a way to assess both retrieval induced forgetting and release from PI at the same time.

Figure 4 shows the free recall scores for shared and non-shared items on List B for the two groups of subjects. Auditory presentation (A-CVLT) is shown by the solid dark bars on the left of each pair of bars; visual presentation (V-CVLT) is shown by the gray bars on the right of each pair of bars. Although performance was better overall for the non-shared items than the shared items on List B (p<.001), there were no differences in recall between the two groups based on presentation modality. The lower recall scores observed for the shared items in this figure are evidence for retrieval induced forgetting for new items on List B that share the same semantic categories as the items previously studied and retrieved from List A. In contrast, the advantage observed for non-shared items on List B is evidence of a release from PI, the improvement in recall of words from one semantic category after the category of the new materials is shifted to a different semantic category (Wickens et al. 1963).

Figure 4.

Free recall scores for Shared and Non-shared words on List B for the two groups of subjects, tested using the auditory CVLT-II (A-CVLT) versus the visual CVLT-II (V-CVLT). A-CVLT is shown by the solid dark bars on the left of each pair of bars; V-CVLT is shown by the gray bars on the right of each pair of bars.

Neurocognitive Measures

In addition to the measures of verbal learning and memory using the newly developed computer-controlled CVLT protocols, we also administered several neurocognitive measures to assess the strengths, weaknesses, and milestones in these two groups of subjects (see Kronenberger et al. 2016; Kronenberger & Pisoni 2016). The cognitive measures obtained from both groups of subjects included the following: Ravens Progressive Matrices, a non-verbal test of fluid reasoning (Raven et al. 1998), TOWRE-2, a visual word recognition test of reading fluency and speed (Torgesen et al. 2012), a forward Visual Digit Span test to measure verbal short-term memory capacity, and the WordFam-150 test, a visual word familiarity rating scale that has been used to measure vocabulary knowledge and lexicon size (Lewellen et al. 1993; Stallings et al. 2000). Table 2 presents a summary of the scores on these four tests for the two groups of subjects. No differences were observed in any of the neurocognitive scores between the two groups of subjects.

Table 2.

Demographic Variables for Study 1a

| Table 2 (Study 1A) | Auditory (N = 18) | | | Visual (N = 17) | | |
|---|---|---|---|---|---|---|
| Demographics | Mean | SD | Range | Mean | SD | Range |
| Age | 22.61 | 1.95 | 18–54 | 24.00 | 1.73 | 18–42 |
| Gender Breakdown | 4 M, 14 F | -- | -- | 6 M, 11 F | -- | -- |
| Measures | Mean | SD | Range | Mean | SD | Range |
| Raven’s Matrices (# Correct) | 44.94 | 1.30 | 32–55 | 41.41 | 1.70 | 24–52 |
| TOWRE-II Words (# Correct) | 90.28 | 2.38 | 73–108 | 93.00 | 1.71 | 83–107 |
| TOWRE-II Non-Words (# Correct) | 53.56 | 1.74 | 34–64 | 51.47 | 1.84 | 35–60 |
| TOWRE-II Total (# Correct) | 143.83 | 3.51 | 117–169 | 144.47 | 2.64 | 125–157 |
| Word Familiarity Score | 3.12 | 0.12 | 2.22–4.28 | 3.22 | 0.15 | 2.30–4.44 |
| Longest Digit Span | 7.56 | 0.33 | 5–10 | 8.41 | 0.39 | 5–11 |
| Digit Span Points | 104.00 | 4.05 | 55–132 | 104.00 | 4.53 | 70–134 |

We also carried out a series of bivariate correlations to investigate the relations between the neurocognitive scores and a subset of the primary first-order measures obtained from the A-CVLT or V-CVLT (List A Trial 1, List A Trial 5, List A Trial 1–5 total words correctly recalled, List B, and learning slope over the five List A repetition trials). No differences were found between the groups in the pattern of correlations among the measures. These findings provide converging evidence of common associations between measures of verbal learning and memory obtained from the CVLT-II and several verbal and non-verbal neurocognitive measures, suggesting that the same elementary information processing operations are shared by all these sets of measures independently of presentation modality. Again, no differences were observed between the two groups of subjects based on presentation modality of the CVLT-II.

Discussion

The present set of findings comparing auditory and visual presentation modalities using stimulus materials adapted from the conventional live-voice CVLT-II clinical protocol revealed no differences in primary or process measures as a function of presentation modality. Therefore, based on these findings, it may be appropriate to use the alternative V-CVLT when the conventional live-voice auditory presentation format would be inappropriate to study verbal learning and memory processes in some clinical populations, such as adults who have significant hearing loss. In addition to the absence of any significant differences on scores of the CVLT-II between the A-CVLT and V-CVLT groups, the correlations of the CVLT-II scores with the neurocognitive measures were comparable across the two groups of subjects.

Because the new V-CVLT test was developed specifically for postlingually deaf older adults with significant hearing loss who are CI users or potential candidates for CIs, we carried out an additional validity and feasibility study with two groups of older normal-hearing adults. This additional validation study was done because it was possible that the validity and feasibility results obtained with the young normal hearing adults in this study might not generalize robustly to an older population of healthy adults who may have other additional co-morbidities related to cognitive aging. To accomplish this objective, we recruited two new groups of older normal hearing (ONH) participants and compared their performance on the same computer-controlled auditory and visual presentation conditions of the CVLT-II used in Study 1a. Our objective was to establish validity and feasibility of the V-CVLT with a sample of ONH subjects who are representative of the age range of the target clinical population of CI patients we are currently studying.

Study 1b – Validation & Feasibility of V-CVLT in Older NH Adults

Methods

Participants

Thirty-two participants between the ages of 55 and 77 years (mean age of 67.6) were recruited via flyers posted at The Ohio State University Department of Otolaryngology and through a national research recruitment database, ResearchMatch. Participants reported no history of any speech and hearing problems. For compensation, they received $15 for participation. All participants underwent conventional audiological assessment immediately prior to testing. Normal hearing was defined as a four-tone (.5, 1, 2, and 4 kHz) pure-tone average (PTA) of better than 25 dB HL in the better ear. Because many of these participants were elderly adults, this criterion was relaxed to 35 dB HL PTA, although only three participants had a PTA poorer than 25 dB HL. All participants also demonstrated scores within normal limits on the Mini-Mental State Examination (Folstein et al. 1983), a cognitive screening task, with raw scores of 26 or greater out of a possible 30. A summary of the demographics is provided in Table 3.
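The hearing criterion above reduces to averaging four thresholds per ear and comparing the better (lower) average with a cutoff. The following sketch shows that arithmetic; the function names and the dictionary layout (frequency in Hz mapped to threshold in dB HL) are assumptions made for illustration, and the threshold values in the usage note are invented.

```python
PTA_FREQUENCIES_HZ = (500, 1000, 2000, 4000)


def four_tone_pta(thresholds_db_hl):
    """Average the thresholds (dB HL) at .5, 1, 2, and 4 kHz for one ear."""
    return sum(thresholds_db_hl[f] for f in PTA_FREQUENCIES_HZ) / len(PTA_FREQUENCIES_HZ)


def better_ear_pta(left, right):
    """Lower PTA means better hearing, so take the minimum across ears."""
    return min(four_tone_pta(left), four_tone_pta(right))


def meets_nh_criterion(left, right, cutoff_db_hl=35.0):
    """True if the better-ear PTA is better (lower) than the cutoff."""
    return better_ear_pta(left, right) < cutoff_db_hl
```

A hypothetical listener with thresholds of 15, 20, 25, and 40 dB HL in the better ear has a PTA of 25 dB HL, which passes the relaxed 35 dB HL cutoff but not the conventional 25 dB HL cutoff.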

Table 3.

Demographic Variables for Study 1b

| Table 3 (Study 1B) | Auditory (N = 16) | | | Visual (N = 16) | | |
|---|---|---|---|---|---|---|
| Demographics | Mean | SD | Range | Mean | SD | Range |
| Age | 66.00 | 0.70 | 60–71 | 69.00 | 1.40 | 55–77 |
| Gender Breakdown | 8 M, 8 F | -- | -- | 5 M, 11 F | -- | -- |
| MMSE (raw score) | 29.25 | 0.27 | 26–30 | 29.31 | 0.22 | 27–30 |
| Measures | Mean | SD | Range | Mean | SD | Range |
| Raven’s Matrices (# Correct) | 14.50 | 1.55 | 6–26 | 11.44 | 1.22 | 6–22 |
| Longest Digit Span | 6.69 | 0.35 | 4–9 | 5.94 | 0.23 | 5–8 |
| Digit Span Points | 56.19 | 5.08 | 25–100 | 44.75 | 3.09 | 27–69 |
| TOWRE-II Words (# Correct) | 82.94 | 3.30 | 55–108 | 85.25 | 2.06 | 75–107 |
| TOWRE-II Non-Words (# Correct) | 45.88 | 2.78 | 20–59 | 43.44 | 2.70 | 19–57 |
| TOWRE-II Total (# Correct) | 128.81 | 4.82 | 95–164 | 128.69 | 3.89 | 95–150 |
| Word Familiarity Score | 5.36 | 0.24 | 4.12–6.46 | 5.43 | 0.17 | 3.85–6.36 |

Procedures and Materials

Participants were randomized to undergo testing using either the V-CVLT or the A-CVLT. Testing was performed as described above in Study 1a, except that an abridged version of each CVLT task was used, concluding with “Short Delay Cued Recall” followed by the Yes/No recognition task. The 20-minute distractor period and the long-delay free-recall and long-delay cued-recall tests were not used with these subjects to reduce testing time. As in Study 1a, four additional neurocognitive assessments were also performed: the Raven’s Progressive Matrices for a 10-minute time period, the TOWRE-2, visual forward digit span, and the WordFam-150 test. This final test was similar to the earlier WordFam-150 test, except that it was completed on paper by participants at a later time and mailed back in, and participants rated their familiarity with words from 1 (not familiar at all) to 7 (very familiar).

Data Analyses

To evaluate the feasibility and equivalency of the A-CVLT and V-CVLT tests in older NH participants, independent-samples t-tests were performed separately to assess any differences between groups based on age, Ravens, TOWRE-2, Visual Digit Span, and word familiarity ratings. Following these analyses, a series of ANOVAs and post-hoc paired-comparison tests were performed to assess group differences in performance between auditory and visual presentation conditions on the primary and process measures of the CVLT-II.

Results

Figure 5 shows a summary of the immediate and short-delay free- and cued-recall CVLT scores obtained from the two groups of ONH subjects tested. As in Study 1a, the figure is divided into two panels. On the left are the immediate free-recall scores for the five repetitions of List A and the one repetition of List B. The panel on the right presents a summary of the short-delay free- and short-delay cued-recall scores from both groups of subjects. The left set of bars in each panel shows the average recall scores from the group of ONH participants who were assigned to the auditory presentation condition (A-CVLT); the right set of bars shows the scores from the group of ONH participants who were assigned to the visual presentation condition (V-CVLT).

Figure 5.

Overall summary of the immediate and short-delay free- and cued-recall CVLT-II scores obtained from two groups of older normal hearing (ONH) subjects using the auditory CVLT-II (A-CVLT) versus the visual CVLT-II (V-CVLT). On the left are the immediate free-recall scores for the five repetitions of List A and the one repetition of List B. On the right are the short-delay free- and cued-recall scores from both groups of subjects. The left set of bars in each panel shows the average recall scores from ONH participants assigned to the auditory presentation condition (A-CVLT); the right set of bars shows the scores from the group of ONH participants who were assigned to the visual presentation condition (V-CVLT). The vertical dashed bars show the range of normative scores for each of the recall conditions based on age and gender.

As in Study 1a with young adults, the immediate free recall scores displayed on the left in Figure 5 show two main findings. First, both groups of ONH subjects displayed robust repetition learning effects over the five presentations of List A. Using a 2 (modality) × 5 (trials) ANOVA, followed by paired-comparison tests, performance improved continuously for both groups from Trial 1 to Trial 5 after each repetition of List A (F=103.44, p<.001). Second, as in the initial study, both groups of participants showed comparable levels of immediate free recall performance after each repetition of List A over the five presentations. The main effect of presentation modality across the five learning trials was not statistically significant in a 2 × 5 ANOVA (p> .05). As in the first study, performance of both groups was also substantially lower for recall of the new items from List B compared to the items on List A Trial 1 (p<.001). When the free recall scores for both groups were plotted as a function of serial position for List A Trial 1 and List B, no significant differences were observed based on presentation modality. Both groups also showed comparable levels of recall on List B, List A short-delay free recall, and List A short-delay cued recall, replicating the results obtained with the younger subjects in Study 1a.

No differences between the two groups were found in primacy or recency recall, PI or RI, retrieval induced forgetting, or release from PI. Self-generated organizational strategies in free recall (i.e. semantic, serial, or subjective clustering) were also comparable across both groups of ONH subjects, as were recall intrusion errors and scores on Yes/No recognition memory. We also did not find any differences in performance between the two groups on any of the neurocognitive measures. The correlations between CVLT-II primary and process scores and the neurocognitive measures were again similar between A-CVLT and V-CVLT groups.

Discussion

The results of this validity and feasibility study using two groups of ONH controls in Study 1b replicated the initial findings obtained with young college-aged students in Study 1a. No differences were observed in any of the immediate or delayed free- and cued-recall measures or any of the primary or process measures obtained from the CVLT-II based on presentation modality. Taken together with the results obtained from Study 1a, we believe it is appropriate to use the new visual presentation format of the CVLT-II with hearing-impaired older adults who have received CIs. Having a visual presentation format of the CVLT-II available to study foundational underlying processes of verbal learning and memory in this clinical population eliminates concerns about audibility, early auditory sensory processing, and hearing loss as possible confounding factors that might influence the verbal learning and memory measures obtained. The use of a visual CVLT protocol removes hearing and audibility from the equation and permits us to obtain pure measures of any disturbances in basic verbal learning and memory processes without the confounding influence of compromised hearing or speech perception.

Study 2 – Visual CVLT-II in older experienced CI users (ECIs) and older NH controls (ONHs)

After having confirmed the equivalency of the visual CVLT-II with the conventional auditory CVLT-II, a subsequent study was carried out to investigate verbal learning and memory processes in a group of post-lingual experienced CI users (ECI) and to compare their performance to a group of age- and nonverbal IQ-matched older normal-hearing controls (ONH). Our objective was to uncover and identify differences in core verbal learning and memory processes that could serve as reliable predictors of speech recognition outcomes following implantation. We also wanted to investigate the combined contributions to speech recognition outcomes of demographics and hearing history, neurocognitive factors, and several core measures of verbal learning and memory in this clinical population.

It is important to mention here that, except for the two earlier studies by Heydebrand et al. (2007) and Holden et al. (2013), all of the previous research carried out on verbal learning and memory with post-lingual adult CI users has been concerned almost exclusively with short-term and working memory processes, not multi-trial verbal learning or long-term memory (LTM) processes. Research on verbal learning and memory using supra-span lists that exceed the immediate processing capacity of STM has received very little attention from clinicians and researchers in the past despite the critical importance of LTM to speech recognition and spoken language processing. This is not surprising because most clinicians and researchers who are working in the field of CIs believe that the individual differences and variability routinely observed in speech recognition outcomes following implantation simply reflect differences in early registration and encoding of sensory information in short-term and working memory. Research on storage and retrieval processes in LTM and interactions between encoding and retrieval processes has been minimal despite the critical importance of LTM in supporting robust adaptive functioning and speech recognition in challenging or adverse listening environments, situations in which the use of prior linguistic knowledge and experience plays a substantial compensatory role when the bottom-up sensory information is significantly degraded (see Rönnberg et al. 2013).

Participants

Fifty adult participants between the ages of 53 and 81 years (mean age of 68.0) were recruited using flyers posted at The Ohio State University Department of Otolaryngology and through the use of ResearchMatch, a national research recruitment database. Half of the subjects were experienced CI users (ECIs) and half were older normal hearing control participants (ONH). All subjects received $15 as compensation for participation in this study. Socioeconomic status (SES) of participants was quantified using a metric developed by Nittrouer and Burton, consisting of occupational and educational levels each rated from 1 (lowest level) to 8 (highest level) and then multiplied, resulting in scores between 1 and 64 (Nittrouer & Burton, 2006).
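The SES metric described above is the product of two ratings, each from 1 to 8. A minimal sketch of that arithmetic follows; the function name and the range check are illustrative assumptions rather than part of the published metric.

```python
def ses_score(occupation_level, education_level):
    """Multiply occupational and educational ratings (each 1-8) into an SES score."""
    for name, level in (("occupation", occupation_level), ("education", education_level)):
        if not 1 <= level <= 8:
            raise ValueError(f"{name} level must be between 1 and 8, got {level}")
    return occupation_level * education_level
```

The lowest possible score is 1 × 1 = 1 and the highest is 8 × 8 = 64, matching the range given in the text; a hypothetical participant rated 5 on occupation and 6 on education would score 30.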

Inclusion criteria for all participants were as follows: (1) native English speaker; (2) high school diploma or equivalency; (3) vision of 20/40 or better on a basic near-vision test; (4) Mini Mental State Examination (MMSE; Folstein & Folstein, 1975) score greater than or equal to 26, suggesting no evidence of cognitive impairment; (5) Wide Range Achievement Test (WRAT; Wilkinson & Robertson, 2006) word reading standard score ≥ 80; (6) age 50 or older. Inclusion criteria for the ECI sample were (1) onset of severe-to-profound hearing loss no earlier than age 12 years; (2) severe-to-profound hearing loss in both ears prior to implantation; (3) use of at least one cochlear implant; and (4) CI-aided thresholds better than 35 dB HL at .25, .5, 1, and 2 kHz, as measured by clinical audiologists within one year before enrollment in the present study. The inclusion criterion required specifically for the ONH sample was a four-tone (.5, 1, 2, and 4 kHz) pure-tone average (PTA) of less than 35 dB HL in the better ear (the standard 25 dB HL criterion for NH was relaxed to 35 dB HL PTA because the sample consisted of older adults, although only three participants had a PTA poorer than 25 dB HL).

The twenty-five ECI participants were recruited from the patient population of the Otolaryngology department at OSU and had diverse underlying etiologies of hearing loss and different ages of implantation (see Table 4). All but four of the CI users reported onset of hearing loss after age 12 years, meaning they were post-lingually deaf and had normal language development prior to the onset of their hearing loss (suggested by their normal hearing until the time of puberty). The other four CI users reported some degree of congenital hearing loss or onset of hearing loss during childhood but did not meet criteria for severe-to-profound hearing loss until age 12. All ECI participants had experienced early hearing aid intervention and typical auditory-only spoken language development during childhood, were mainstreamed in education, and experienced progressive hearing losses into adulthood. All of the CI users received their CIs after the age of 35 years, with mean age at implantation of 61.5 years (SD 10.4). All ECI participants had used their CIs for at least 2 years prior to testing, with mean duration of CI use 7.5 years (SD 6.7). All of the ECI participants except one used Cochlear Corporation (New South Wales, Australia) devices with an Advanced Combined Encoder processing strategy; one CI user had an Advanced Bionics (Valencia, California) device and used a Hi Res Optima-S processing strategy. Eleven participants had a right CI, four used a left implant, and nine had bilateral implants. Eight participants wore a contralateral hearing aid. During testing with auditory materials, participants wore their devices in their usual everyday modes, including any use of hearing aids, and settings were kept the same throughout the entire testing session. Twenty-five ONH participants were recruited from the same settings and did not differ from the ECI users in chronological age, Raven’s scores (non-verbal fluid IQ), or socioeconomic status (SES) (Table 4).

Table 4.

Demographic Variables for Study 2

| Demographics | ECI (N = 25) Mean | ECI SD | ECI Range | ONH (N = 25) Mean | ONH SD | ONH Range |
|---|---|---|---|---|---|---|
| Age (years) | 67.0 | 1.58 | 55–81 | 69.0 | 1.44 | 53–81 |
| Gender Breakdown | 14 M, 11 F | -- | -- | 6 M, 19 F | -- | -- |
| Education | 6 High school or lower, 2 Technical degree, 14 College degree or some college, 3 Masters/PhD/post-graduate | -- | -- | 4 High school or lower, 0 Technical degree, 10 College degree or some college, 11 Masters/PhD/post-graduate | -- | -- |
| SES | 25.00 | 3.18 | 6–64 | 34.88 | 3.03 | 9–64 |
| Pure-Tone Average (dB) | 101.00 | 3.14 | 70–120 | 21 | 1.61 | 8–34 |
| MMSE | 28.00 | 0.29 | 26–30 | 29.28 | 0.17 | 27–30 |
| Age Hearing Loss Began (years) | 27.00 | 3.96 | 0–61 | -- | -- | -- |
| Etiology of Hearing Loss | 13 Genetic, 1 Menieres, 4 Progressive, 1 Otosclerosis, 1 Sepsis, 1 Chronic ear infections, 4 Unknown | -- | -- | -- | -- | -- |
| Wear Hearing Aids Currently | 6 Y, 19 N | -- | -- | -- | -- | -- |

Procedures

All participants underwent testing using the computer-controlled visual presentation CVLT-II developed for Study 1. Testing was performed as described above in Study 1b, using the abridged version of the original V-CVLT concluding with “Short Delay Free Recall” followed by the Yes/No Recognition test. Participants also completed the Raven’s Progressive Matrices for a fixed 10-minute period, the TOWRE-2 (words and nonwords), and a forward Visual Digit Span task. To decrease testing time, the WordFam-150 test was completed on paper at home and mailed back to the laboratory.

In addition to the V-CVLT and neurocognitive measures, the ECI participants also completed three different speech recognition tests to assess their ability to recognize spoken words in isolation and in sentence contexts. All test signals were presented in quiet at 68 dB SPL over a high-quality loudspeaker one meter in front of the participant at zero degrees azimuth in a sound-attenuated booth. Percent correct keyword recognition scores were computed for both the word and sentence tests. In addition, percent correct whole sentence scores were calculated for the two sentence tests.

To assess open-set recognition of isolated spoken words, one list of 50 CID W-22 words (Hirsh et al. 1952) was used. Test words were presented in the carrier phrase, “Say the word _______.” All test words were recorded by a single male talker who spoke with a Midwestern regional dialect. To assess word recognition in sentences, two measures of sentence recognition in meaningful contexts were obtained using the following materials: (1) Harvard “Standard” test sentences, which are relatively long, complex, and semantically meaningful sentences taken from the IEEE corpus (IEEE 1969; Egan 1948), such as “The wharf could be seen from the opposite shore”; (2) PRESTO (Perceptually Robust English Sentence Test Open-set) sentences, representing perceptually challenging, high-variability listening conditions (Gilbert et al. 2013; Tamati et al. 2013). To increase acoustic-phonetic and indexical variability in sentence recognition, each sentence on a given PRESTO test list was produced by a different talker, and all of the talkers used for a given test list were selected to span a wide range of regional dialects in the US. To reduce perceptual learning and adaptation, no talker was ever repeated in the same test list and each test sentence in a list was always a novel sentence. None of the sentences on any of the lists were ever repeated during the test.
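The distinction between keyword scores and whole-sentence scores on the sentence tests can be illustrated with a brief sketch. The scoring rules shown here are assumptions, not the laboratory's actual protocol: a keyword counts as correct if it appears anywhere in the typed response after lowercasing and removing punctuation, and a sentence counts as correct only if all of its keywords are correct. The keyword set chosen for the example sentence is also hypothetical.

```python
import string


def normalize(text: str) -> list[str]:
    """Lowercase, strip punctuation, and split a response into words."""
    table = str.maketrans("", "", string.punctuation)
    return text.lower().translate(table).split()


def score_trial(keywords: list[str], response: str) -> tuple[int, bool]:
    """Return (number of keywords reported, whether every keyword was reported)."""
    heard = set(normalize(response))
    hits = sum(1 for word in keywords if word.lower() in heard)
    return hits, hits == len(keywords)


def percent_correct(trials: list[tuple[list[str], str]]) -> tuple[float, float]:
    """Return (keyword percent correct, whole-sentence percent correct)."""
    results = [score_trial(keywords, response) for keywords, response in trials]
    total_keywords = sum(len(keywords) for keywords, _ in trials)
    keyword_pct = 100.0 * sum(hits for hits, _ in results) / total_keywords
    sentence_pct = 100.0 * sum(all_ok for _, all_ok in results) / len(trials)
    return keyword_pct, sentence_pct


# Hypothetical keyword set and responses for the example sentence in the text.
keys = ["wharf", "seen", "opposite", "shore"]
trials = [
    (keys, "The wharf could be seen from the opposite shore"),
    (keys, "The wharf was seen from the shore."),
]
keyword_pct, sentence_pct = percent_correct(trials)  # 87.5, 50.0
```

The example shows why whole-sentence scores fall well below keyword scores: a single missed keyword costs one keyword point but the entire sentence point.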

Data Analyses

ANOVAs and t-tests were performed to assess differences between the ECI and ONH groups on the primary and process measures obtained from the CVLT-II protocol. In addition, correlational and regression analyses were performed for the ECI group to assess the relations among V-CVLT primary, process, and contrast measures and the speech recognition scores.

Results

Descriptive statistics comparing the ECI participants and ONH controls on Raven’s, TOWRE-2, visual digit span, and WordFam-150 scores are shown in Table 5. No differences in the group means were found for any of the baseline cognitive test scores except word familiarity ratings, where ECI participants demonstrated a slightly smaller vocabulary size (p<.05).

Table 5.

Summary of Performance Measures for Study 2

| Cognitive Measures | ECI (N = 25) Mean | ECI SD | ECI Range | ONH (N = 25) Mean | ONH SD | ONH Range |
|---|---|---|---|---|---|---|
| Raven’s Correct Matrices | 10.08 | 0.89 | 3–20 | 11.84 | 1.08 | 5–26 |
| TOWRE-II Words (# Correct) | 77.72 | 2.68 | 45–95 | 82.96 | 1.62 | 66–107 |
| TOWRE-II Non-Words (# Correct) | 41.68 | 2.44 | 6–59 | 41.72 | 1.96 | 19–57 |
| TOWRE-II Total (# Correct) | 119.40 | 4.74 | 51–148 | 124.68 | 2.97 | 95–150 |
| Longest Digit Span | 5.68 | 0.26 | 3–8 | 5.8 | 0.20 | 4–8 |
| Digit Span Points | 42.68 | 3.53 | 14–79 | 43.40 | 2.60 | 20–69 |
| Word Familiarity Score | 4.69 | 0.19 | 3–7 | 5.30 | 0.15 | 3.57–6.36 |
| **Speech Recognition** | **Mean** | **SD** | **Range** | **Mean** | **SD** | **Range** |
| CID Phonemes Percent Correct | 78.56 | 3.90 | 28–100 | -- | -- | -- |
| CID Words Percent Correct | 69.04 | 4.63 | 18–96 | -- | -- | -- |
| Harvard Standard Keywords Percent Correct (N=24) | 79.46 | 3.70 | 11–95 | -- | -- | -- |
| Harvard Standard Sentences Percent Correct (N=24) | 48.47 | 4.49 | 0–80 | -- | -- | -- |
| PRESTO Keywords Percent Correct (N=24) | 61.00 | 4.24 | 8–89 | -- | -- | -- |
| PRESTO Sentences Percent Correct (N=24) | 27.78 | 3.75 | 3–63 | -- | -- | -- |

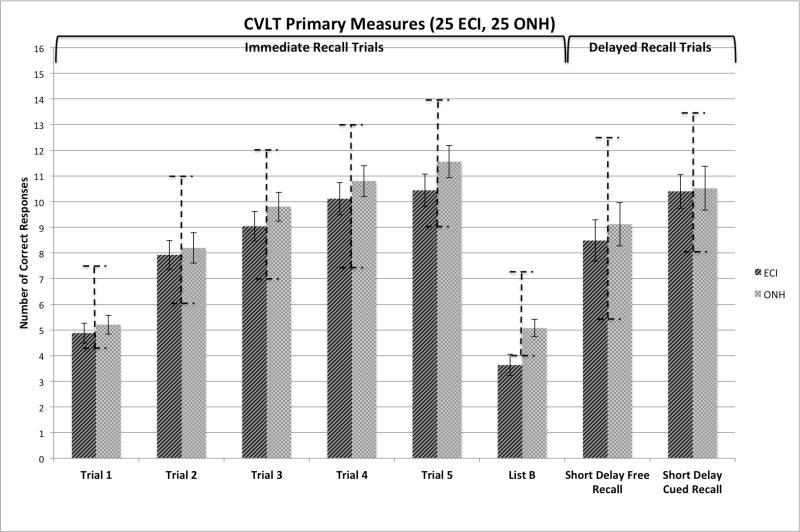

Figure 6 shows a summary of the immediate and short-delay free- and cued-recall scores obtained on the V-CVLT from the ECI and ONH controls. Except for recall of List B items by the ECI group, all of the scores fell within +/−1 SD of the normative range. A 2 × 5 ANOVA followed by a series of post-hoc pair-wise comparisons showed no group differences in recall on any of the List A immediate or short-delay recall measures. However, as shown in Figure 6, the groups did differ significantly in recall of items on List B; the ONH controls recalled significantly more List B words than the ECI group (p=.009). No other group differences were observed on any of the other primary measures from the CVLT.

Figure 6.

Summary of the immediate and short-delay free- and cued-recall scores obtained from the experienced CI users (ECI) and older normal hearing (ONH) controls, tested using the visual CVLT-II (V-CVLT). The panel on the left presents a summary of the immediate free recall scores for the five repetitions of List A and the one presentation of List B for both groups of subjects. The panel on the right presents a summary of the short-delay free- and short-delay cued-recall scores from both groups. The left set of bars in each panel shows the average recall scores from the ECI participants; the right set of bars shows the scores from the ONH controls. Bars representing the norm range scores are plotted on top of each recall condition based on age and gender.

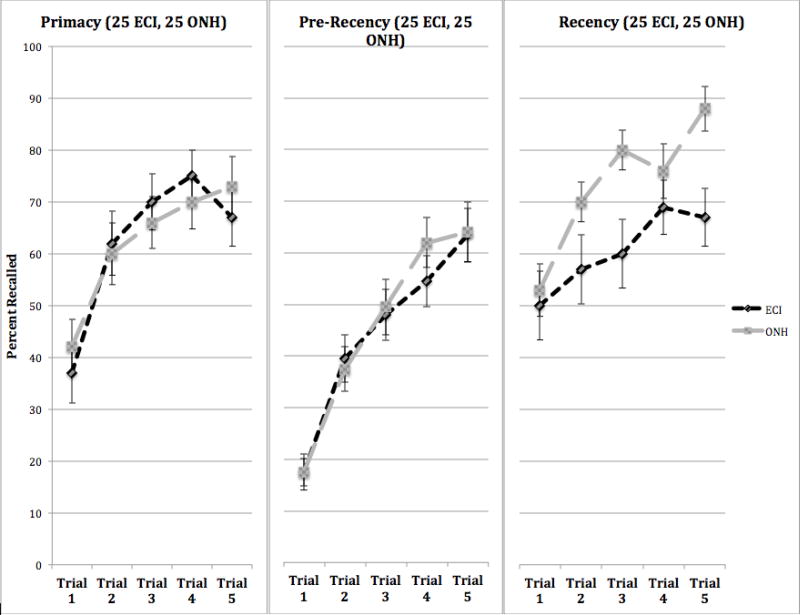

An examination of the process measures from the CVLT revealed that the ONH group recalled more List A words from the recency portion of the serial position curve than the ECI group, and this difference occurred consistently across all five study trials of List A, as shown in the right-hand panel of Figure 7. A 2 × 5 ANOVA on recall of words from the recency portion of List A revealed main effects of group (F = 5.83, p = .02) and repetition learning trial (F = 10.57, p < .001). Both groups showed comparable levels of free recall for words from the primacy (left-hand panel) and pre-recency (middle panel) subcomponents of the serial position curve; in a series of 2 × 5 ANOVAs, the main effects of group and repetition learning trial were not significant for either of these subcomponents.

Figure 7.

Percent correct free recall of words from primacy, pre-recency, and recency sub-components of the serial position curve for the experienced CI users (ECI) and older normal hearing (ONH) groups as a function of the five repetition study trials of List A, using the visual CVLT-II (V-CVLT). The panel on the left shows free recall of words from primacy, the middle panel shows free recall of words from pre-recency, and the right panel shows free recall of words from recency.

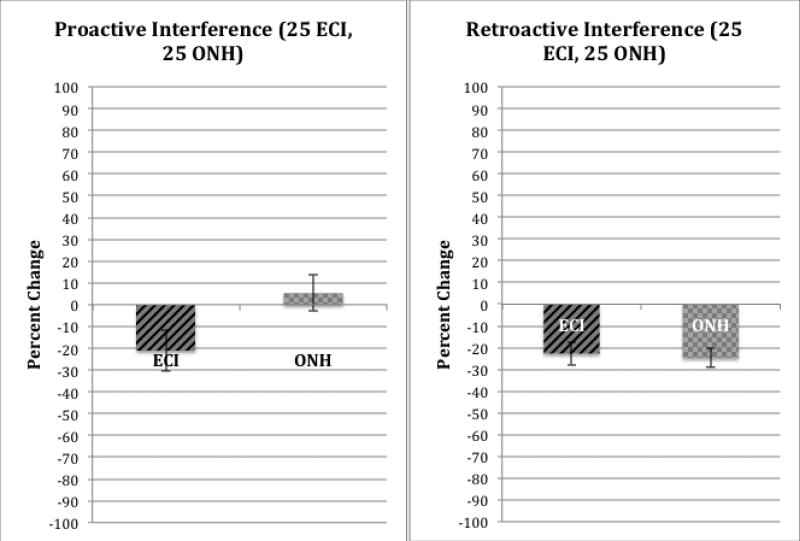

Forgetting Due to Proactive and Retroactive Interference

Figure 8 shows a summary of the secondary process measures of PI in the left-hand panel and RI in the right-hand panel obtained from the CVLT. The measure of PI was obtained by subtracting the recall of words on List B from the recall of words on List A Trial 1; the measure of RI was obtained by subtracting short-delay free recall of List A items from immediate free recall of items on List A Trial 5. Both groups showed comparable RI effects. Although recall performance on short-delay free recall of List A items in both groups was reduced significantly by prior exposure to List B items (p<.001), there was no difference in RI between the two groups. In contrast, a different pattern of results was obtained for PI, as shown in the left-hand panel of Figure 8. While the ECI group showed significantly lower performance on List B items following the five repetitions of List A (p=.025), the ONH group showed a small increase in recall of List B items. A 2 (group) × 2 (List A Trial 1 vs. List B) ANOVA revealed a significant effect of trial (List A Trial 1 vs. List B; p=.02) and a marginal effect of group (ECI vs. ONH; p=.058). There was also a marginally significant interaction between these two factors (p=.053).
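The two interference measures defined above are simple difference scores, which can be sketched as follows. The function and argument names are illustrative, and the recall counts in the example are hypothetical rather than data from either group.

```python
def proactive_interference(list_a_trial1: int, list_b: int) -> int:
    """PI = List A Trial 1 recall minus List B recall.

    Positive values mean fewer List B words were recalled than on the
    first exposure to List A, i.e. a build-up of PI.
    """
    return list_a_trial1 - list_b


def retroactive_interference(list_a_trial5: int, short_delay_free: int) -> int:
    """RI = List A Trial 5 recall minus short-delay free recall of List A.

    Positive values mean recall of List A dropped after exposure to List B.
    """
    return list_a_trial5 - short_delay_free


# Hypothetical participant (number of words recalled).
pi = proactive_interference(list_a_trial1=6, list_b=4)             # 2
ri = retroactive_interference(list_a_trial5=12, short_delay_free=10)  # 2
```

With this sign convention, a negative PI score corresponds to recalling more List B words than List A Trial 1 words, the pattern described for the ONH group.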

Figure 8.

Summary of measures of proactive interference (PI) and retroactive interference (RI) for the experienced CI users (ECI) and older normal hearing (ONH) groups, using the visual CVLT-II (V-CVLT). PI scores are shown in the left-hand panel; RI scores are shown in the right-hand panel.

Retrieval Induced Forgetting and Release from Proactive Interference