Abstract

Visual memory is crucial to navigation in many animals, including insects. Here, we focus on the problem of visual homing, that is, using comparison of the view at a current location with a view stored at the home location to control movement towards home by a novel shortcut. Insects show several visual specializations that appear advantageous for this task, including an almost panoramic field of view and ultraviolet light sensitivity, which enhances the salience of the skyline. We discuss several proposals for subsequent processing of the image to obtain the required motion information, focusing on how each might deal with the problem of yaw rotation of the current view relative to the home view. Possible solutions include tagging of views with information from the celestial compass system, using multiple views pointing towards home, or rotation invariant encoding of the view. We illustrate briefly how a well-known shape description method from computer vision, Zernike moments, could provide a compact and rotation invariant representation of sky shapes to enhance visual homing. We discuss the biological plausibility of this solution, and also a fourth strategy, based on observed behaviour of insects, that involves transfer of information from visual memory matching to the compass system.

Keywords: visual homing, insect navigation, skyline, frequency encoding, rotational invariance, Zernike moments

1. Introduction

Spatial memory can be broadly defined as remembering information about a location that enables return to that location. For many animals, visual information is primary for this task. Potential advantages of visual cues are that they can be sensed passively over large distances, and that the structural information that can be extracted from an image tends to be a relatively stable and identifiable property of space (unlike, for example, olfactory cues). In order of increasing complexity, visual spatial navigation may involve simple recognition when a location is re-encountered, visual servoing towards a beacon (a clear visual cue at the desired location), visual homing that uses the configuration of surrounding cues, and, at a higher level, linking together of multiple recognized locations, beacons or cue configurations into some form of map. Even the simplest function of recognizing a location already raises significant problems for visual processing especially under natural conditions, such as dealing with varying illumination conditions and different viewpoints that will alter the image on the animal's retina. However, in this paper the focus will be on the problem of visual homing, which can be defined as the problem of determining, from comparison of the current view with a stored ‘home’ view, how to move so as to return to the home location [1–3].

This problem can be compared with the classic navigation process of triangulation, that is, using the bearing of landmarks to determine a position [4]. In modern robotics, it forms a key part of visual simultaneous localization and mapping (vSLAM): using the difference in bearing between identified landmarks in the current and previous views to estimate a movement vector. Theoretically, for movement on a plane, if three static landmarks are in view and correctly identified, the bearing difference between those landmarks is sufficient to uniquely identify the current location [4]. In practice using more landmarks increases robustness, but there will always be some point beyond which disappearance of the familiar landmarks due to distance or occlusion will preclude successful homing. The region around the home view from which homing is possible is often referred to as the catchment area, and its size is one way in which different homing algorithms can be compared. It is worth noting here that many algorithms for visual homing (including some of those discussed below) have relaxed the concept of ‘landmark’ to allow dense matching of image features, or even pixel-wise comparison of views, to form the basis of the calculation of the home direction.

In mammals, a classic demonstration of the ability to use surrounding visual cues to return via direct, novel paths to a desired location is the Morris water maze [5]. In this widely used paradigm, a rodent is placed in a pool of opaque liquid and must swim until it discovers the location of a platform concealed under the water surface. On subsequent trials, starting from different locations, it finds the platform more rapidly. If conspicuous surrounding cues in the room are rotated, the search (for a platform that is now missing) will be centred on the position relative to these cues where the animal would experience the same view as it saw from the platform.

Many species of insects need to solve the equivalent task to pinpoint the location of their nest (which can have an inconspicuous entrance) after foraging journeys. For example, ants displaced from their nest to novel locations within the catchment area are able to head directly back towards the nest, but are lost if moved beyond a visual boundary that occludes most of the features visible from the nest [6]. Ants, bees or wasps trained with cues around their nest which are then translated while the animal forages will search for their nest in the appropriate location relative to the visual cues rather than at the actual nest position (for wasps, [7]; for bees, [1]; for ants, [8]). By manipulating landmark size and placement, it appears this search is principally based on obtaining a retinotopic match of the view, i.e. the bearing and size of landmarks [1,8–10], although, in some situations, bees may also rely on the absolute distances of landmarks [11].

Perhaps more surprisingly, several species of insect that are not particularly known for their navigational prowess have been shown to be able to perform visual homing in a direct experimental analogue of the Morris water maze. In this case, the aversive pool of water is replaced by an aversive heated floor, and the platform by a small area of cooler floor, which the animal can only discover by search. This paradigm was first used with cockroaches [12], then with crickets [13] and more recently with fruit flies [14,15]. After several trials, the animals move with increasing directness to the correct location, and, if surrounding visual cues are rotated, they will search in the appropriate relative location.

A number of questions are still open regarding the mechanisms underlying this ability in insects (and, for that matter, in vertebrates). What visual processing does the animal do to extract the information in a visual scene that will support homing, particularly under potentially variable conditions? What is the form of the stored memory? How are the memory and the current scene compared to recover a movement direction? What brain systems could support the required processing, memory storage and direction recovery?

Insects have a number of specializations in the periphery of their visual system that appear pertinent to the first question. Most insects have a near omnidirectional, but low-resolution, view of the world. Their apposition compound eyes are lattice-like structures that consist of many functionally independent yet physically interlocked light-sensitive structures known as ommatidia, each complete with its own lens and photoreceptors. When stacked together these cone-shaped ommatidia form a bulbous outer contour producing an overall wide field of view but lacking focusing capacities [16]. The combination of wide field of view with low resolution has been demonstrated to be beneficial for visual navigation tasks, providing sufficient information while preventing overfitting to details of the environment [17,18]. It is common for insect photoreceptors to be sensitive to ultraviolet light [19], which has been shown to enhance skyline detection capacity [20–23] underpinning skyline-based navigation strategies [24,25] and demonstrated to be advantageous for autonomous navigation [21,26–28]. Further, specially adapted photoreceptors in the dorsal rim (upwards facing) area of the eye show sensitivity to linearly polarized light, allowing insects to derive their compass orientation from the pattern of polarized light present in the sky [29–31].

Clearly, the question of memory representation and recovery of a movement direction are linked, and a range of solutions have been developed (for a review, see [3]). Most earlier theories focused on obtaining a compact representation, e.g. a one-dimensional binary description of surrounding landmarks and gaps between them [1], or a single vector average of the bearings of landmarks [32] or the intensity distribution [33]. Reducing the memory to a vector allows very elegant calculation of the home vector through vector subtraction, but has been found insufficient to account for insect homing in some of the experimental assays described above [34]. More recent work has favoured approaches that assume storage of the raw, or minimally processed, full image [35,36]. In its most straightforward form, the agent stores an (omnidirectional) image at the home location, and uses pixel-wise intensity comparison with the current image in a new location to obtain a difference measure. For natural scenes, the difference tends to increase monotonically with distance from the home location, so a process of gradient descent on the difference surface will result in homing in both natural [35,37,38] and laboratory environments [34].
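The pixel-wise comparison described above can be illustrated with a minimal sketch. The root-mean-square measure, the function name `image_difference` and the toy views are assumptions chosen for illustration; the studies cited use their own difference measures and real panoramic images.

```python
import math


def image_difference(view_a, view_b):
    """Root-mean-square pixel difference between two equally sized greyscale views.

    Views are lists of rows of intensities. RMS is one common choice of
    difference measure and is an assumption here, not the cited method.
    """
    if len(view_a) != len(view_b) or any(
        len(row_a) != len(row_b) for row_a, row_b in zip(view_a, view_b)
    ):
        raise ValueError("views must have the same shape")
    total = 0.0
    count = 0
    for row_a, row_b in zip(view_a, view_b):
        for a, b in zip(row_a, row_b):
            total += (a - b) ** 2
            count += 1
    return math.sqrt(total / count)


# Toy 2 x 4 views (hypothetical data): one stored at home, one taken nearby,
# and one taken far away where every pixel has changed.
home = [[0, 0, 1, 1], [0, 1, 1, 0]]
near = [[0, 0, 1, 1], [0, 1, 1, 1]]
far = [[1, 1, 0, 0], [1, 0, 0, 1]]

print(image_difference(home, near), image_difference(home, far))
```

In this toy case the difference is zero at home and grows as more pixels change, which is the property that gradient descent on the difference surface relies on.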

A problem that all of these approaches need to address is whether and how the animal can carry out a retinotopic image comparison when it is currently facing in a different direction. Most earlier algorithms incorporated the assumption that visual memories would be tagged by the compass system, and thus the first step to discover the heading direction would be to re-align the current image and the home image, through either mental rotation or physical rotation to the desired compass angle [39]. Yet this cannot explain visual homing in the water maze-inspired experiments described above, where insects have no access to celestial compass cues and the arenas are specifically designed to provide no orientation information.

An alternative recent approach has been to use the fact that misaligned images are likely to mismatch as the basis for recovering the correct alignment. By rotating (it is usually assumed physically) while comparing images, a minimum in the image difference will occur when the animal is aligned. In fact, this ‘visual compass’ approach has been used to argue that visual homing (or visual ‘positional matching’ [40]) can be subsumed under a general mechanism for route following by visual ‘alignment matching’ [41–43]. If, rather than storing a single image at the home location, the animal stores a set of images from short displacements in different directions while facing the nest, then the best match it will obtain from a new location will be when aligned in the direction of the nearest stored image, i.e. facing the nest, the direction it needs to move [6,37,44]. This explanation has several attractive features. The animal does not need to store any additional information about the images (the order in which they were experienced, their compass direction, etc.) and the matching process already provides the movement information, rather than requiring some additional mechanism to discover the local gradient of similarity. This lends itself to a neural network solution for memory storage in which all key images are input to a single associative net and the output is a general ‘familiarity’ measure [45], and such processing has been shown to be consistent with the circuit architecture of the insect mushroom body [46]. This approach is also supported by some observations of insect behaviour. Central place foraging insects engage in learning flights (bees, [47]; wasps, [10,48–51]) and walks [52–54]—on departure from the nest they rotate to view the nest from different positions. Ants also exhibit ‘scanning’ behaviours in which they rotate on the spot before moving off in a particular direction, which suggests they may be attempting to physically align views [55,56].
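The ‘visual compass’ idea of rotating while comparing images can be sketched as follows. This is a simplified illustration, assuming a one-row panoramic strip in which a yaw rotation is a circular shift of columns; the names `rotate_view`, `rms_difference` and `best_alignment` are hypothetical and do not come from the cited models.

```python
def rotate_view(view, shift):
    """Yaw-rotate a one-row panoramic view by `shift` columns (circular shift)."""
    shift %= len(view)
    return view[shift:] + view[:shift]


def rms_difference(a, b):
    """Root-mean-square difference between two equally long intensity strips."""
    return (sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)) ** 0.5


def best_alignment(current, stored):
    """Scan all yaw shifts of `current`; return (shift, difference) at the minimum."""
    scores = [
        (rms_difference(rotate_view(current, s), stored), s)
        for s in range(len(current))
    ]
    difference, shift = min(scores)
    return shift, difference


# Hypothetical stored view, and the same scene seen after turning by 3 columns.
stored = [0, 0, 5, 9, 5, 0, 0, 2]
current = rotate_view(stored, 3)

print(best_alignment(current, stored))
```

With a set of nest-facing stored views, the same scan would be repeated for each stored view and the overall minimum taken, which is where the multiple-minima problem discussed in the next paragraph arises.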

Overall, this algorithm explains well the behaviour of ants recapitulating a familiar route [45,57,58] and can also explain homing from novel locations assuming the use of nest-directed views stored during learning walks [6,44]. In the latter case, the recall of a useful nest-directed view requires that the stored views that lie between the agent and the nest match better than any other stored views. This can work over 10–15 m in relatively open natural scenes [6,37]; however, it requires the agent to deal with small differences in mismatch and can be potentially brittle in other environments [57]. In particular, during a scan, there will no longer be a single minimum in the image difference function but rather multiple minima corresponding to each direction in which images were stored (cf. fig. 7 in [6]). This makes the task of finding the ‘correct’ minimum more difficult, and more vulnerable to any factors that may distort the view or prevent regular, accurate alignment. Such factors include incomplete compensation for pitch [59] and roll [60] when moving over uneven terrain, as well as highly variable yaw relative to the direction of travel when dragging heavy food in a backwards motion [61]. Ants in this situation may use forward peeks [62], but neither peeks nor scans are observed as often as has been assumed in most simulated evaluations of this algorithm. Finally, ants displaced from a familiar route do not orient as predicted by a visual alignment strategy (running parallel to the familiar path) but instead move directly towards the familiar route, suggesting they can match and home to the nearest image while misaligned with its direction [57,63,64].

Here we present an alternative solution, exploiting an approach from computer vision for rotationally invariant recognition which is particularly suited to the problem insects need to solve in visual homing.

2. Methods

2.1. Homing by matching sky shapes

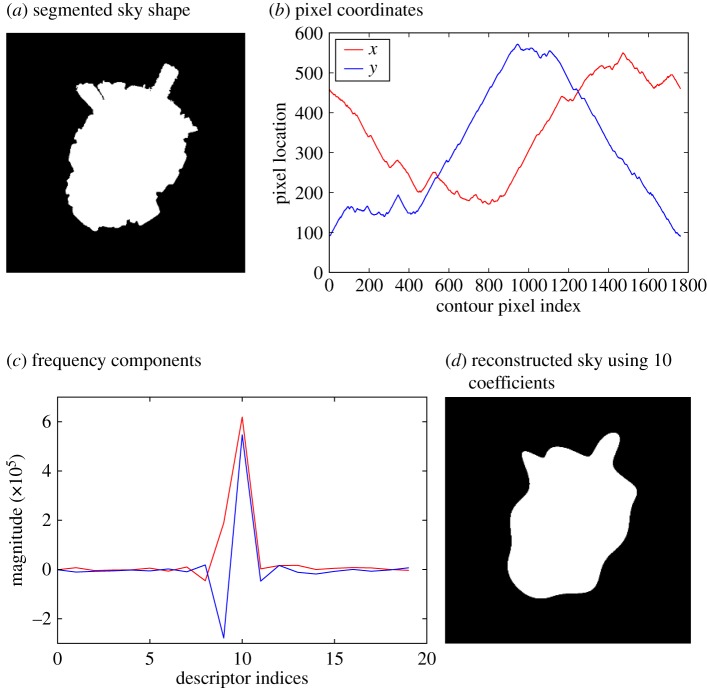

As briefly described above (for more detail, see [21,22]), the visual system of the insect appears specialized to obtain an omnidirectional view that emphasizes the skyline. If sky is segmented from ground and stored as a binary image, the shape of the sky forms a single blob or group of blobs with a characteristic shape (figure 1a). Matching a segmented sky image to another, despite yaw rotations, is essentially a form of shape recognition, a well-established field [65]. Various algorithms exist for matching shapes, despite variation in translation, scale and rotation. Common solutions include capturing properties of the contour [66–68] or the region itself, typically through the use of geometric moments [69,70].

Figure 1.

Fourier descriptors to match skylines. (a) Segmented sky of an urban scene can be thought of as a closed shape. (b) x- and y-coordinates of the shape edge create a continuous signal. (c) The frequency components of this signal can describe the outline of the shape up to an arbitrary amount of detail. (d) Reconstruction of sky shape using only the first 10 Fourier descriptors shows the rough shape signature, allowing high compression of skylines.

The possibility of using frequency coding on a one-dimensional skyline description to do more efficient visual homing was proposed in [71]. This approach extracted a horizontal strip of greyscale values from the horizon pixels in an image from a panoramic camera mounted on a robot, and the array was converted to a frequency representation using a Fourier transform. However, rather than use this for rotation invariant image matching, their approach instead used phase components to recover an estimate of rotation combined with a frequency domain equivalent of the warping method [72] to determine the displacement from home. This was successfully used to home, with catchment areas being more pronounced and narrow for higher frequencies and wider for lower frequencies. Our first approach was to extend frequency coding to a two-dimensional sky shape using Fourier descriptors [66]. The outline of the shape is traced to form a complex signal representing the changing x- and y-coordinates (figure 1b), which then undergoes a Fourier transform to represent it in the frequency domain (figure 1c). Ignoring phase and storing the amplitude provides a shape signature that is invariant to yaw rotation. An additional benefit of this encoding is that the level of detail can be adjusted by only retaining the coefficients representing lower frequencies (figure 1d), allowing for easy scaling of storage and fast look-up of similar skylines. However, this approach fails when the sky does not clearly consist of a single area, such as under foliage.
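The Fourier descriptor encoding described above can be sketched in a few lines. This is an illustrative sketch only: the direct DFT, the ordering of retained coefficients (0, 1, −1, 2, −2, …), the function names and the synthetic contour are all assumptions, not the implementation used for figure 1.

```python
import cmath
import math


def low_frequency_indices(n_keep):
    """Frequency indices ordered from lowest to highest: 0, 1, -1, 2, -2, ..."""
    indices = [0]
    k = 1
    while len(indices) < n_keep:
        indices.append(k)
        if len(indices) < n_keep:
            indices.append(-k)
        k += 1
    return indices


def fourier_descriptor_amplitudes(contour, n_keep):
    """Amplitudes of the n_keep lowest-frequency DFT coefficients of a closed contour.

    The contour is a list of (x, y) points, treated as the complex signal x + iy.
    Phase is discarded, so the result does not change when the shape is rotated
    about the origin or when the trace starts at a different contour point.
    """
    z = [complex(x, y) for x, y in contour]
    n = len(z)
    amplitudes = []
    for k in low_frequency_indices(n_keep):
        coeff = sum(z[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n)) / n
        amplitudes.append(abs(coeff))
    return amplitudes


def rotate_contour(contour, angle):
    """Rotate every contour point about the origin by `angle` radians."""
    c, s = math.cos(angle), math.sin(angle)
    return [(c * x - s * y, s * x + c * y) for x, y in contour]


# Synthetic closed outline (hypothetical): a circle with three bumps.
angles = [2 * math.pi * i / 64 for i in range(64)]
contour = [
    ((1 + 0.3 * math.cos(3 * a)) * math.cos(a), (1 + 0.3 * math.cos(3 * a)) * math.sin(a))
    for a in angles
]

signature = fourier_descriptor_amplitudes(contour, 10)
rotated_signature = fourier_descriptor_amplitudes(rotate_contour(contour, 1.1), 10)
print(max(abs(a - b) for a, b in zip(signature, rotated_signature)))
```

Changing `n_keep` trades detail against storage, which corresponds to the adjustable level of detail noted in the text.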

To mitigate this problem, a region-based shape recognition approach was applied. Zernike moments [70] (ZMs) are the projections of an image onto the Zernike polynomials, a set of orthogonal basis functions defined on the unit disc in polar coordinates, and have been widely used as global image descriptors. Like Fourier descriptors, the amplitudes of the coefficients provide a frequency-based yaw-rotation-invariant representation of an image, because rotating the image about the disc centre changes only the phase of each coefficient. As we are interested in performing homing based on the information in an image that consists of a ‘sky disc’ with a fixed centre and radius to the horizon (hence no translation and scaling), this representation seems especially suitable for our purposes.
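A minimal sketch of a ZM amplitude computation is given here to make the rotation invariance concrete. It uses the standard definition of the Zernike radial polynomials and a plain sum over pixel centres inside the unit disc; the function names, the pixel-grid discretization and the toy binary ‘sky’ are assumptions, and this is not the code used to produce the results in this paper. On a pixel grid the invariance is exact only for rotations that map the grid onto itself (multiples of 90°) and approximate otherwise.

```python
import cmath
import math


def radial_polynomial(n, m, rho):
    """Zernike radial polynomial R_n^|m|(rho), for n - |m| even and |m| <= n."""
    m = abs(m)
    total = 0.0
    for s in range((n - m) // 2 + 1):
        numerator = (-1) ** s * math.factorial(n - s)
        denominator = (
            math.factorial(s)
            * math.factorial((n + m) // 2 - s)
            * math.factorial((n - m) // 2 - s)
        )
        total += numerator / denominator * rho ** (n - 2 * s)
    return total


def zernike_amplitudes(image, max_order):
    """Amplitudes |Z_nm| for all orders n <= max_order and m >= 0 with n - m even.

    `image` is a square list of rows; pixel centres are mapped to [-1, 1]^2 and
    pixels outside the unit disc are ignored.
    """
    size = len(image)
    pixel_area = (2 / size) ** 2
    amplitudes = []
    for n in range(max_order + 1):
        for m in range(n % 2, n + 1, 2):
            acc = 0j
            for i, row in enumerate(image):
                y = (2 * i + 1 - size) / size
                for j, value in enumerate(row):
                    x = (2 * j + 1 - size) / size
                    rho = math.hypot(x, y)
                    if rho <= 1.0:
                        theta = math.atan2(y, x)
                        acc += value * radial_polynomial(n, m, rho) * cmath.exp(-1j * m * theta)
            amplitudes.append(abs(acc) * (n + 1) / math.pi * pixel_area)
    return amplitudes


# Toy binary sky (hypothetical): 1 = sky, 0 = ground, deliberately asymmetric.
sky = [[1.0 if (j > 8 or i < 4) else 0.0 for j in range(16)] for i in range(16)]
rotated = [list(row) for row in zip(*sky[::-1])]  # the same sky turned by 90 degrees

original = zernike_amplitudes(sky, 4)
turned = zernike_amplitudes(rotated, 4)
print(max(abs(a - b) for a, b in zip(original, turned)))
```

Two views can then be compared by the Euclidean distance between their amplitude vectors, regardless of the heading at which each was taken.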

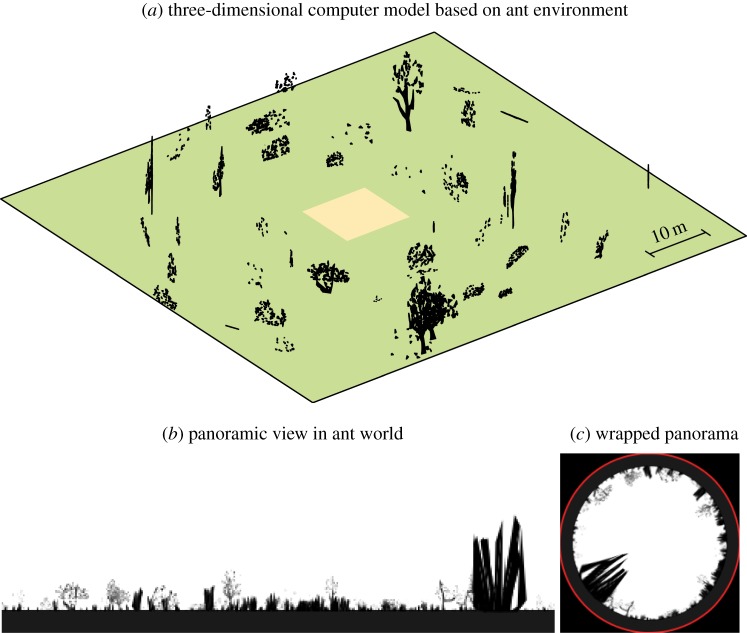

2.2. Simulating visual homing

A virtual ant world previously made by Baddeley et al. [45] was used to create realistic panoramic greyscale views (figure 2b). Views were generated in a 10 × 10 m box at 2 cm intervals (figure 2a). Twenty evenly spaced locations were used as reference positions, with which all other locations within the box were compared. Two methods of comparison were used: retinotopic pixel-wise matching, and matching of the amplitude coefficients of ZMs computed from the panoramic images after remapping to a sky-centred disc (figure 2c).
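One way the remapping of a panoramic image to a sky-centred disc could be done is sketched below. The mapping is an assumption made for illustration: the top row of the panorama is taken to be the zenith and the bottom row the horizon, elevation is mapped linearly to radius, and nearest-neighbour sampling is used. The function name `panorama_to_disc` is hypothetical, and the actual remapping used for figure 2c may differ.

```python
import math


def panorama_to_disc(panorama, size, outside=0.0):
    """Remap a panorama (rows = elevation, columns = azimuth) to a size x size disc.

    Assumed layout: row 0 is the zenith (disc centre) and the last row is the
    horizon (disc edge). Pixels outside the unit disc are set to `outside`.
    """
    rows, cols = len(panorama), len(panorama[0])
    disc = [[outside] * size for _ in range(size)]
    for i in range(size):
        y = (2 * i + 1 - size) / size
        for j in range(size):
            x = (2 * j + 1 - size) / size
            rho = math.hypot(x, y)
            if rho > 1.0:
                continue
            azimuth = math.atan2(y, x) % (2 * math.pi)
            # Clamp indices in case rounding puts a value exactly on the upper edge.
            col = min(int(azimuth / (2 * math.pi) * cols), cols - 1)
            row = min(int(rho * rows), rows - 1)
            disc[i][j] = panorama[row][col]
    return disc


# Toy 4 x 8 panorama (hypothetical): only the row nearest the zenith is bright.
panorama = [[1] * 8, [0] * 8, [0] * 8, [0] * 8]
disc = panorama_to_disc(panorama, 8)
for line in disc:
    print(line)
```

In this layout a yaw rotation of the agent becomes a rotation of the disc about its centre, which is the transformation that the ZM amplitudes are invariant to.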

Figure 2.

Simulated ant world. (a) A simulated world was populated with random vegetation at different densities, creating an ant-like environment in which 360° panoramic views were generated every 2 cm in the test area (light orange) to test homing models. (b) Panoramic view of the world. (c) Images were wrapped before converting to a ZM representation.

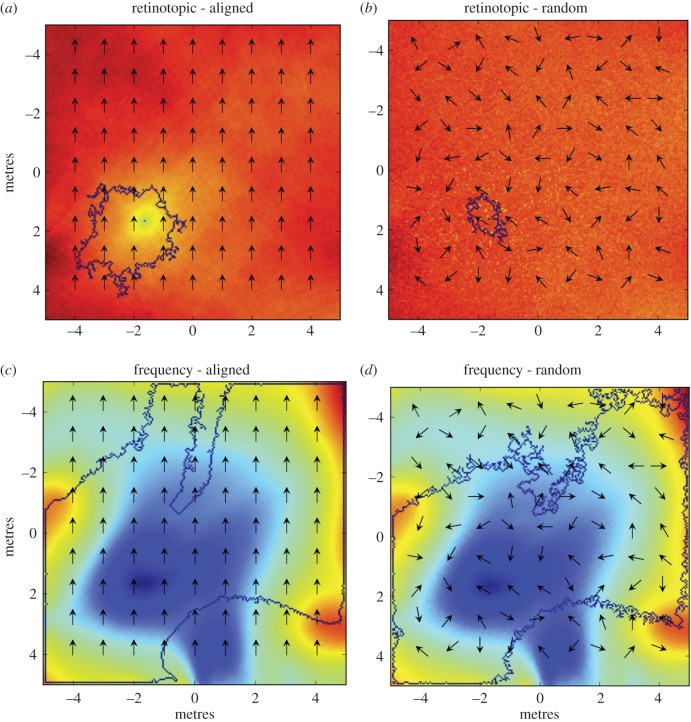

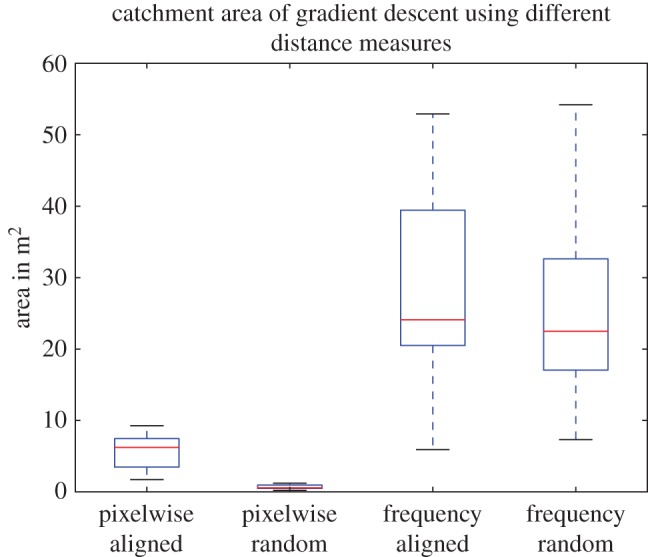

The Euclidean distance between the moments at each location and those at the reference location was plotted as a two-dimensional surface. Gradient descent was then used to determine which points fall within the basin of attraction, by iteratively moving a small step in the direction of steepest descent, with the local gradient inferred using bicubic interpolation. If the path from a starting location eventually led back to the nest, that location was deemed to be within the catchment area (figure 3). As we seek to assess the robustness of each encoding to changes in rotation, it is the change in catchment area size that is most important rather than absolute performance per se, which could be strongly affected by multiple parameters not explored in this study.
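The catchment area test can be illustrated with a deliberately simplified sketch. Instead of small steps along a bicubically interpolated gradient, as described above, it moves on the grid to the lowest of the eight neighbouring cells until no lower neighbour exists; this discrete substitute, the function names and the toy surface are assumptions made to keep the example short.

```python
def descend(surface, start):
    """Follow the steepest-descent neighbour from `start` until a local minimum."""
    rows, cols = len(surface), len(surface[0])
    r, c = start
    while True:
        best = (surface[r][c], r, c)
        for dr in (-1, 0, 1):
            for dc in (-1, 0, 1):
                rr, cc = r + dr, c + dc
                if 0 <= rr < rows and 0 <= cc < cols and surface[rr][cc] < best[0]:
                    best = (surface[rr][cc], rr, cc)
        if (best[1], best[2]) == (r, c):
            return r, c  # no strictly lower neighbour: local minimum reached
        r, c = best[1], best[2]


def catchment_cells(surface, home):
    """All grid cells from which descent on the difference surface ends at `home`."""
    return [
        (r, c)
        for r in range(len(surface))
        for c in range(len(surface[0]))
        if descend(surface, (r, c)) == home
    ]


# Toy one-row difference surface (hypothetical): global minimum at column 4
# (the home location) and a spurious local minimum at column 9.
surface = [[4, 3, 2, 1, 0, 1, 2, 3, 2, 1]]
home = (0, 4)
print(len(catchment_cells(surface, home)))  # 8 of the 10 cells lead home
```

Multiplying the number of cells by the area of one grid cell gives a catchment area estimate. A noisy surface with many local minima, as reported for retinotopic matching in the Results, would trap more starting points and shrink this count.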

Figure 3.

Frequency-based homing shows increased catchment areas. Heatmaps show similarity (highest for blue, lowest for red) to a panoramic image from the same reference location measured at 2 cm intervals in the virtual environment. In (a) and (c), the images are taken facing north; in (b) and (d) with random headings. In (a) and (b), comparison is pixelwise; in (c) and (d) by taking the Euclidean distance between vectors made up by ZM amplitude coefficients. Blue contour lines indicate the border of the catchment area.

3. Results

Figure 3 shows the catchment areas obtained from comparison of images across the environment with a home image captured at one specific location. The images were either aligned with the reference image or taken at random headings. In figure 4, the results for 20 trials with different home image locations are shown.

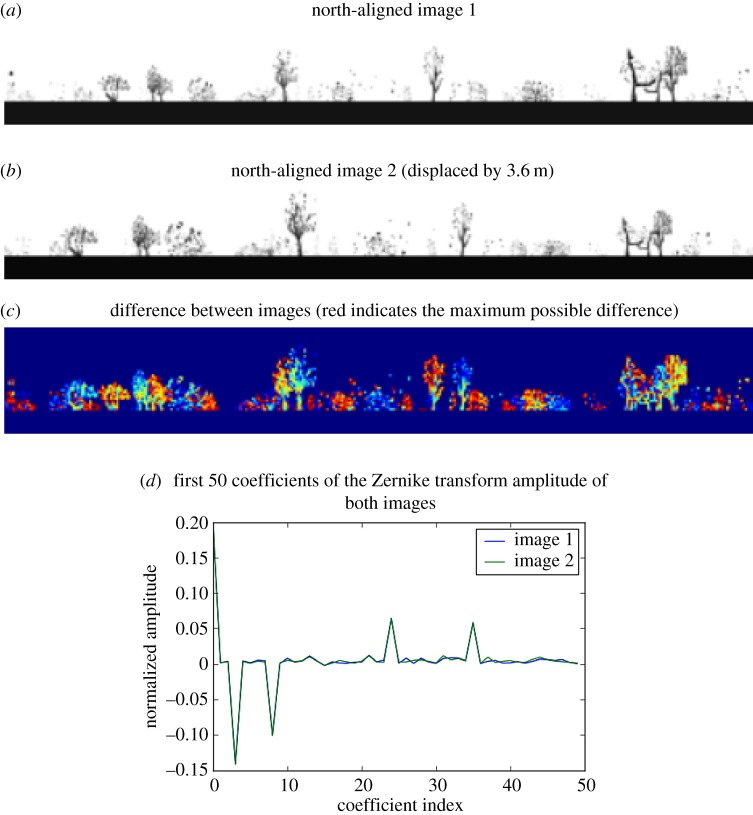

Figure 4.

Homing performance. N = 20. Pixelwise retinotopic matching provides guidance but only when aligned with memory. Frequency encoding performs well, regardless of orientation.

As expected, these results clearly show that the use of random headings completely disrupts homing based on retinotopic matching (figure 3b), whereas the frequency representation is unaffected by the heading direction in which the pictures are perceived (figure 3d). We also found that, with a frequency representation, the catchment areas were considerably larger. The surprisingly poor performance obtained using retinotopic matching even with aligned images (figure 3a) is most likely due to a noisy image difference function surface, causing the agent to get stuck at local minima (similar results were reported by Mangan [73]). Storing multiple snapshots facing nestward from around the nest, which is one proposed solution to deal with random headings, will not fix this limitation. By contrast, the use of frequency representation seems to smooth out the image difference function surface (figure 3c), thus simplifying the problem of finding the global minimum. We note that reducing the resolution of images (using bilinear interpolation) did not improve results for retinotopic matching, nor did introducing smoothing of the images. Nevertheless, we expect parallax changes to cause landmarks across the horizon to be misaligned in moderately distant views, producing a poor retinotopic match (figure 5c) and limiting the catchment area. It appears the frequency approach, especially at low frequencies, is more robust (figure 5d).

Figure 5.

Explanation of poor performance of retinotopic matches between allocentrically aligned images. (a, b) Two panoramic views taken 3.6 m apart in the virtual ant environment shown in figure 2a. (c) Absolute image difference between the two images. Even a relatively small translational displacement in an open area with only distant landmarks can cause the majority of matching skyline features to misalign. (d) Conversely, normalized Zernike amplitude coefficients of each image remain relatively similar.

4. Discussion

Vision plays an important role in spatial navigation for many animals and the image processing that enables setting a course back towards a home location has been widely studied and modelled in insects. We have shown in previous work [21] that an image sensor based on the insect eye can be effective for recognition of previously experienced outdoor locations: combining ultraviolet light filtering and an omnidirectional view results in distinctive, illumination invariant sky shapes. Here, we show that using a frequency-based representation of the sky shape allows rotation invariant comparison of views in the context of visual homing. The increased catchment areas even for unrotated views suggest this encoding is also an effective way to capture global similarity in views in a way that alters smoothly with displacement. We note this contrasts with the results of frequency encoding of one-dimensional horizon intensity used for visual homing in the study by Stürzl & Mallot [71], who report a 20% reduction in the catchment area compared with pixel-based comparison. However, their approach was significantly different, as the aim was to show the efficiency of a frequency-based method for image warping rather than image matching per se. Given this and the different environment used (not representing a natural outdoor scene), there are multiple reasons why a different result could have been observed.

Using ZMs provides rotation invariance but is still vulnerable to factors that might cause the sky disc not to be centred. Specifically, in the insect (or robot) context, there might be additional variation in the image caused by pitch [59] and roll [60]. We have explored the use of spherical harmonics [74] to address this issue. This method similarly uses the summation of basis functions to approximate a function corresponding to an image, but on the surface of a sphere rather than a disc. The results of a robot study applying this method for recognition of sky images are reported in [75]. We note, however, that in contexts where variance is expected to be predominantly around the z-axis (yaw), e.g. for an animal moving on flat terrain or stabilizing its head with respect to the horizon, ZMs will preserve more information about the shape with fewer components.

The use of ZMs (or alternative basis function approaches) provides a potential advantage in terms of low-dimensional storage and simple comparison of images using a small number of coefficients. But this comes at the cost of more complex image processing. However, the long-established analogy between basis function encoding and neural receptive fields [76–78] suggests that such processing may be biologically plausible. A mechanism to extract the amplitude at various frequencies would be through randomly aligned sinusoidal receptive fields, which is not inconsistent with known optics and early visual processing in insects, such as bar-sensitive, orientation-biased cells in insect lobula [79] or lateral triangle [80]. Scene recognition in this manner would resemble the use of GIST descriptors used in computer vision, where the image is convolved with Gabor filters at different frequencies and orientations [81]. However, we note that ZMs would be equivalent to very large receptive fields.

This problem has been approached from the other direction, i.e. from known properties of insect visual receptive fields to navigational behaviour [82–84]. This work uses the receptive fields described in [80], or a generalized version of them, to encode omnidirectional views for an insect navigation task. However, the approach more closely resembles a phase filtering at a single spatial frequency, and as such is shown to be effective for assessing rotational match rather than encoding rotational invariance. The trend for many computer vision problems is towards using convolutional neural networks, where features are learned rather than hand-designed, and thus potentially acquire invariance to many types of distortion including pose and lighting conditions [85]. Previous results for this method in a navigation context can be seen in [86], where images depicting the same place but taken at different times are used to learn localization-specific invariant features. A recent paper applies this approach in the ant navigation problem [87], using an autoencoder to compress 8100 pixel images taken in a forest ant habitat to 64 features that can still suffice for robust image comparison. As above, this work focuses on recovering information for rotational matching. It would be interesting to apply the approach to rotated and tilted views and to visualize how features learned by the network correspond to hand-designed ones. At lower levels, we certainly see the Gabor filter style tuning of units, which indicates some type of frequency approximation [88]. It would be interesting to investigate whether any higher level features that emerge in such an approach approximate skyline contours.

As may be evident from the previous paragraph, the desirability of a rotation invariant encoding depends on the assumed role of image comparison in navigation. In route following tasks, the correct heading along a route can be recovered by rotational alignment with respect to a visual memory, in which case a rotation invariant encoding of view is not desired. This alignment strategy can also be combined with the use of multiple home facing images for direct control of movement in the homing task; this could be sufficient [6,37] but may lack robustness compared with a rotation invariant approach. However, as discussed in the Introduction, a purely alignment based approach does not capture the fact that displaced ants can return directly towards their familiar route by moving in a direction misaligned with stored views [57,63,64]. Our frequency coding can provide an explanation for this behaviour as the gradient of familiarity, which slopes towards the closest familiar location, becomes accessible whatever the current body alignment.

Note that frequency coding could also be used to recover rotation information by using the phase of the components, as was done in [71]. Here we have ignored the phase information to obtain a rotation invariant image similarity measure that (within a catchment area) correlates with displacement distance. Choice of movement direction then requires estimation of the local gradient of familiarity, for which several possible solutions have been suggested. First, the familiarity of neighbour locations could be sampled, randomly or not, enabling different kinds of gradient descent [35]. Local image warping has been suggested as a means to ‘mentally’ sample the consequences of moving to neighbouring locations [36]. Another possibility is to adopt the klinokinesis strategy based on continuous oscillations that has been proposed for other animals negotiating a gradient with only a single-point source measure, e.g. Drosophila larva in an odour gradient [89]. This method, already demonstrated to be sufficient for route following in desert ant habitats [90], involves making regular lateral oscillations with an amplitude mediated by the change in stimulus intensity (here, in image similarity). Ants do appear to display lateral oscillation during visual guidance [91].
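The point made at the start of this paragraph, that phase carries the rotation while amplitude is rotation invariant, can be shown with a small sketch. It assumes a one-row panoramic strip in which yaw is a circular shift of columns, and uses only the first harmonic; the names `harmonic` and `estimate_shift` are hypothetical, and this is not the method of [71], which combines phase with image warping.

```python
import cmath
import math


def harmonic(signal, k):
    """k-th DFT coefficient of a circular one-row view."""
    n = len(signal)
    return sum(v * cmath.exp(-2j * math.pi * k * t / n) for t, v in enumerate(signal))


def estimate_shift(current, stored):
    """Estimate s such that current[t] == stored[(t + s) % n].

    A circular shift by s multiplies the first harmonic by exp(2*pi*i*s/n),
    so s can be read from the phase difference. The first harmonic of the
    stored view must be non-zero for this to work.
    """
    n = len(stored)
    phase = cmath.phase(harmonic(current, 1) / harmonic(stored, 1))
    return round(phase / (2 * math.pi) * n) % n


# Hypothetical stored strip and the same scene after a yaw of 3 columns.
stored = [0, 0, 5, 9, 5, 0, 0, 2]
current = stored[3:] + stored[:3]

print(estimate_shift(current, stored))
print(abs(harmonic(current, 2)), abs(harmonic(stored, 2)))  # amplitudes agree
```

Discarding the phase, as done in this study, therefore removes exactly the information that encodes heading and leaves a signature that depends only on location.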

It also remains plausible that insects are able to implement either strategy, that is, rotation-based alignment or rotation invariant image matching, depending on current conditions [57]. An interesting possibility is that these mechanisms interact in visual homing, in a manner that would be consistent with the observation that ants dragging a large food item backwards are observed to peek forwards, followed by immediate course correction [62]. That is, rather than homing by following the similarity gradient using any of the methods described in the previous paragraph, the animal may use a rotation invariant measure to monitor the visual familiarity of its current location and thus to control when it peeks, but still require physical alignment to obtain an estimate of the direction it needs to move. The direction may then be set relative to the celestial compass, allowing the animal to maintain the desired heading while facing another way when it returns to dragging the food [62]. Whether or not the ant is still monitoring familiarity while facing backwards could be readily tested in experiments that alter the view under these conditions.

As we have reviewed in this paper, visual homing in insects has been a rich field for investigation of the nature of biological image processing, and has been both an influence on, and influenced by, computer vision, especially in the context of robot navigation. A general conclusion that can be drawn is that biological ‘image understanding’ should be investigated within the context of behavioural control—what does the animal actually need to ‘understand’ in the image to solve the ecological task it is faced with? This approach has shifted the emphasis in insect navigational studies away from landmark extraction and towards holistic scene memory, leading to significant new insights into the mechanisms. A similar shift in perspective may be overdue for navigation in other animals.

Acknowledgements

Material in the results section was presented as a poster at the 2016 International Congress of Neuroethology. We thank reviewers for their comments.

Data accessibility

The code used to generate the data in this paper is available at: https://github.com/InsectRobotics/interface-focus-2018.

Authors' contributions

Conception and analysis: T.S., M.M., A.W. and B.W. Simulation and figures: T.S. Writing: B.W., M.M. and A.W.

Competing interests

We declare we have no competing interests.

Funding

This work was supported by EPSRC grant EP/M008479/1 ‘Invisible cues’, and grants EP/F500385/1 and BB/F529254/1 for the University of Edinburgh School of Informatics Doctoral Training Centre in Neuroinformatics and Computational Neuroscience.

References

- 1. Cartwright B, Collett TS. 1983. Landmark learning in bees. J. Comp. Physiol. 151, 521–543. (doi:10.1007/BF00605469)

- 2. Vardy A, Möller R. 2005. Biologically plausible visual homing methods based on optical flow techniques. Conn. Sci. 17, 47–89. (doi:10.1080/09540090500140958)

- 3. Zeil J. 2012. Visual homing: an insect perspective. Curr. Opin. Neurobiol. 22, 285–293. (doi:10.1016/j.conb.2011.12.008)

- 4. Pierlot V, Van Droogenbroeck M. 2014. A new three object triangulation algorithm for mobile robot positioning. IEEE Trans. Robot. 30, 566–577.

- 5. Morris R. 1981. Spatial localization does not require the presence of local cues. Learn. Motiv. 12, 239–260. (doi:10.1016/0023-9690(81)90020-5)

- 6. Narendra A, Gourmaud S, Zeil J. 2013. Mapping the navigational knowledge of individually foraging ants, Myrmecia croslandi. Proc. R. Soc. B 280, 20130683 (doi:10.1098/rspb.2013.0683)

- 7. Tinbergen N. 1951. The study of instinct. Oxford, UK: Clarendon Press/Oxford University Press.

- 8. Wehner R, Räber F. 1979. Visual spatial memory in desert ants, Cataglyphis bicolor (Hymenoptera: Formicidae). Cell. Mol. Life. Sci. 35, 1569–1571. (doi:10.1007/BF01953197)

- 9. Brünnert U, Kelber A, Zeil J. 1994. Ground-nesting bees determine the location of their nest relative to a landmark by other than angular size cues. J. Comp. Physiol. A 175, 363–369. (doi:10.1007/BF00192995)

- 10. Zeil J. 1993. Orientation flights of solitary wasps (Cerceris; Sphecidae; Hymenoptera). J. Comp. Physiol. A 172, 207–222. (doi:10.1007/BF00189397)

- 11. Lehrer M, Collett T. 1994. Approaching and departing bees learn different cues to the distance of a landmark. J. Comp. Physiol. A 175, 171–177. (doi:10.1007/BF00215113)

- 12. Mizunami M, Weibrecht J, Strausfeld N. 1998. Mushroom bodies of the cockroach: their participation in place memory. J. Comp. Neurol. 402, 520–537. (doi:10.1002/(SICI)1096-9861(19981228)402:4<520::AID-CNE6>3.0.CO;2-K)

- 13. Wessnitzer J, Mangan M, Webb B. 2008. Place memory in crickets. Proc. R. Soc. B 275, 915–921. (doi:10.1098/rspb.2007.1647)

- 14. Foucaud J, Burns JG, Mery F. 2010. Use of spatial information and search strategies in a water maze analog in Drosophila melanogaster. PLoS ONE 5, e15231 (doi:10.1371/journal.pone.0015231)

- 15. Ofstad TA, Zuker CS, Reiser MB. 2011. Visual place learning in Drosophila melanogaster. Nature 474, 204–207. (doi:10.1038/nature10131)

- 16. Land MF, Nilsson DE. 2012. Animal eyes. Oxford, UK: Oxford University Press.

- 17. Milford M. 2013. Vision-based place recognition: how low can you go? Int. J. Rob. Res. 32, 766–789. (doi:10.1177/0278364913490323)

- 18. Wystrach A, Dewar A, Philippides A, Graham P. 2016. How do field of view and resolution affect the information content of panoramic scenes for visual navigation? A computational investigation. J. Comp. Physiol. A 202, 87–95. (doi:10.1007/s00359-015-1052-1)

- 19. Menzel R, Ventura DF, Hertel H, De Souza J, Greggers U. 1986. Spectral sensitivity of photoreceptors in insect compound eyes: comparison of species and methods. J. Comp. Physiol. A 158, 165–177. (doi:10.1007/BF01338560)

- 20. Möller R. 2002. Insects could exploit UV–green contrast for landmark navigation. J. Theor. Biol. 214, 619–631. (doi:10.1006/jtbi.2001.2484)

- 21. Stone T, Mangan M, Ardin P, Webb B. 2014. Sky segmentation with ultraviolet images can be used for navigation. In Proceedings of Robotics: Science and Systems. Berkeley, CA: Robotics: Science and Systems Foundation; See http://www.roboticsproceedings.org/rss10/p47.pdf.

- 22. Differt D, Möller R. 2015. Insect models of illumination-invariant skyline extraction from UV and green channels. J. Theor. Biol. 380, 444–462. (doi:10.1016/j.jtbi.2015.06.020)

- 23. Differt D, Möller R. 2016. Spectral skyline separation: extended landmark databases and panoramic imaging. Sensors 16, 1614 (doi:10.3390/s16101614)

- 24. Graham P, Cheng K. 2009. Ants use the panoramic skyline as a visual cue during navigation. Curr. Biol. 19, R935–R937. (doi:10.1016/j.cub.2009.08.015)

- 25. Schultheiss P, Wystrach A, Schwarz S, Tack A, Delor J, Nooten SS, Bibost A-L, Freas CA, Cheng K. 2016. Crucial role of ultraviolet light for desert ants in determining direction from the terrestrial panorama. Anim. Behav. 115, 19–28. (doi:10.1016/j.anbehav.2016.02.027)

- 26. Stein F, Medioni G. 1995. Map-based localization using the panoramic horizon. IEEE Trans. Rob. Autom. 11, 892–896. (doi:10.1109/70.478436)

- 27. Meguro J, Murata T, Amano Y, Hasizume T, Takiguchi J. 2008. Development of a positioning technique for an urban area using omnidirectional infrared camera and aerial survey data. Adv. Robot. 22, 731–747. (doi:10.1163/156855308X305290)

- 28. Ramalingam S, Bouaziz S, Sturm P, Brand M. 2009. Geolocalization using skylines from omni-images. In Proc. 2009 IEEE 12th Int. Conf. on Computer Vision Workshops (ICCV Workshops), Kyoto, Japan, 27 September–4 October 2009, pp. 23–30. New York, NY: IEEE.

- 29. Wehner R, Labhart T. 2006. Polarization vision. In Invertebrate vision (eds E Warrant, D-E Nilsson), pp. 291–348. Cambridge, UK: Cambridge University Press.

- 30. Homberg U, Heinze S, Pfeiffer K, Kinoshita M, El Jundi B. 2011. Central neural coding of sky polarization in insects. Phil. Trans. R. Soc. B 366, 680–687. (doi:10.1098/rstb.2010.0199)

- 31. Heinze S. 2017. Unraveling the neural basis of insect navigation. Curr. Opin. Insect. Sci. 24, 58–67. (doi:10.1016/j.cois.2017.09.001)

- 32. Lambrinos D, Kobayashi H, Pfeifer R, Maris M, Labhart T, Wehner R. 1997. An autonomous agent navigating with a polarized light compass. Adapt. Behav. 6, 131–161. (doi:10.1177/105971239700600104)

- 33. Hafner VV. 2001. Adaptive homing—robotic exploration tours. Adapt. Behav. 9, 131–141. (doi:10.1177/10597123010093002)

- 34. Mangan M, Webb B. 2009. Modelling place memory in crickets. Biol. Cybern. 101, 307–323. (doi:10.1007/s00422-009-0338-1)

- 35. Zeil J, Hofmann MI, Chahl JS. 2003. Catchment areas of panoramic snapshots in outdoor scenes. J. Opt. Soc. Am. A 20, 450–469. (doi:10.1364/JOSAA.20.000450)

- 36. Möller R. 2009. Local visual homing by warping of two-dimensional images. Rob. Auton. Syst. 57, 87–101. (doi:10.1016/j.robot.2008.02.001)

- 37. Stürzl W, Grixa I, Mair E, Narendra A, Zeil J. 2015. Three-dimensional models of natural environments and the mapping of navigational information. J. Comp. Physiol. A 201, 563–584. (doi:10.1007/s00359-015-1002-y)

- 38. Murray T, Zeil J. 2017. Quantifying navigational information: the catchment volumes of panoramic snapshots in outdoor scenes. PLoS ONE 12, e0187226 (doi:10.1371/journal.pone.0187226)

- 39. Möller R. 2012. A model of ant navigation based on visual prediction. J. Theor. Biol. 305, 118–130. (doi:10.1016/j.jtbi.2012.04.022)

- 40. Collett M, Chittka L, Collett TS. 2013. Spatial memory in insect navigation. Curr. Biol. 23, R789–R800. (doi:10.1016/j.cub.2013.07.020)

- 41. Baddeley B, Graham P, Philippides A, Husbands P. 2011. Holistic visual encoding of ant-like routes: navigation without waypoints. Adapt. Behav. 19, 3–15. (doi:10.1177/1059712310395410)

- 42. Wystrach A, Mangan M, Philippides A, Graham P. 2013. Snapshots in ants? New interpretations of paradigmatic experiments. J. Exp. Biol. 216, 1766–1770. (doi:10.1242/jeb.082941)

- 43. Dewar ADM, Philippides A, Graham P. 2014. What is the relationship between visual environment and the form of ant learning-walks? An in silico investigation of insect navigation. Adapt. Behav. 22, 163–179. (doi:10.1177/1059712313516132)

- 44. Graham P, Philippides A, Baddeley B. 2010. Animal cognition: multi-modal interactions in ant learning. Curr. Biol. 20, R639–R640. (doi:10.1016/j.cub.2010.06.018)

- 45. Baddeley B, Graham P, Husbands P, Philippides A. 2012. A model of ant route navigation driven by scene familiarity. PLoS Comput. Biol. 8, e1002336 (doi:10.1371/journal.pcbi.1002336)

- 46. Ardin P, Peng F, Mangan M, Lagogiannis K, Webb B. 2016. Using an insect mushroom body circuit to encode route memory in complex natural environments. PLoS Comput. Biol. 12, e1004683 (doi:10.1371/journal.pcbi.1004683)

- 47. Philippides A, de Ibarra NH, Riabinina O, Collett TS. 2013. Bumblebee calligraphy: the design and control of flight motifs in the learning and return flights of Bombus terrestris. J. Exp. Biol. 216, 1093–1104. (doi:10.1242/jeb.081455)

- 48. Collett T, Lehrer M. 1993. Looking and learning: a spatial pattern in the orientation flight of the wasp Vespula vulgaris. Proc. R. Soc. Lond. B 252, 129–134. (doi:10.1098/rspb.1993.0056)

- 49. Lehrer M. 1993. Why do bees turn back and look? J. Comp. Physiol. A 172, 549–563. (doi:10.1007/BF00213678)

- 50. Collett T. 1995. Making learning easy: the acquisition of visual information during the orientation flights of social wasps. J. Comp. Physiol. A 177, 737–747. (doi:10.1007/BF00187632)

- 51. Stürzl W, Zeil J, Boeddeker N, Hemmi JM. 2016. How wasps acquire and use views for homing. Curr. Biol. 26, 470–482. (doi:10.1016/j.cub.2015.12.052)

- 52. Nicholson D, Judd S, Cartwright B, Collett T. 1999. Learning walks and landmark guidance in wood ants (Formica rufa). J. Exp. Biol. 202, 1831–1838.

- 53. Müller M, Wehner R. 2010. Path integration provides a scaffold for landmark learning in desert ants. Curr. Biol. 20, 1368–1371. (doi:10.1016/j.cub.2010.06.035)

- 54. Fleischmann PN, Christian M, Müller VL, Rössler W, Wehner R. 2016. Ontogeny of learning walks and the acquisition of landmark information in desert ants, Cataglyphis fortis. J. Exp. Biol. 219, 3137–3145. (doi:10.1242/jeb.140459)

- 55. Wystrach A, Philippides A, Aurejac A, Cheng K, Graham P. 2014. Visual scanning behaviours and their role in the navigation of the Australian desert ant Melophorus bagoti. J. Comp. Physiol. A 200, 615–626. (doi:10.1007/s00359-014-0900-8)

- 56. Zeil J, Narendra A, Stürzl W. 2014. Looking and homing: how displaced ants decide where to go. Phil. Trans. R. Soc. B 369, 20130034 (doi:10.1098/rstb.2013.0034)

- 57. Wystrach A, Beugnon G, Cheng K. 2012. Ants might use different view-matching strategies on and off the route. J. Exp. Biol. 215, 44–55. (doi:10.1242/jeb.059584)

- 58. Wystrach A, Schwarz S, Schultheiss P, Beugnon G, Cheng K. 2011. Views, landmarks, and routes: how do desert ants negotiate an obstacle course? J. Comp. Physiol. A 197, 167–179. (doi:10.1007/s00359-010-0597-2)

- 59. Ardin P, Mangan M, Wystrach A, Webb B. 2015. How variation in head pitch could affect image matching algorithms for ant navigation. J. Comp. Physiol. A 201, 585–597. (doi:10.1007/s00359-015-1005-8)

- 60. Raderschall CA, Narendra A, Zeil J. 2016. Head roll stabilisation in the nocturnal bull ant Myrmecia pyriformis: implications for visual navigation. J. Exp. Biol. 219, 1449–1457. (doi:10.1242/jeb.134049)

- 61. Ardin PB, Mangan M, Webb B. 2016. Ant homing ability is not diminished when traveling backwards. Front. Behav. Neurosci. 10, 69 (doi:10.3389/fnbeh.2016.00069)

- 62. Schwarz S, Mangan M, Zeil J, Webb B, Wystrach A. 2017. How ants use vision when homing backward. Curr. Biol. 27, 401–407. (doi:10.1016/j.cub.2016.12.019)

- 63. Kohler M, Wehner R. 2005. Idiosyncratic route-based memories in desert ants, Melophorus bagoti: how do they interact with path-integration vectors? Neurobiol. Learn. Mem. 83, 1–12. (doi:10.1016/j.nlm.2004.05.011)

- 64. Narendra A. 2007. Homing strategies of the Australian desert ant Melophorus bagoti II. Interaction of the path integrator with visual cue information. J. Exp. Biol. 210, 1804–1812. (doi:10.1242/jeb.02769)

- 65. Latecki LJ, Lakamper R, Eckhardt T. 2000. Shape descriptors for non-rigid shapes with a single closed contour. In Proc. of IEEE Conf. on Computer Vision and Pattern Recognition, Hilton Head, SC, 15 June 2000, vol. 1, pp. 424–429. New York, NY: IEEE.

- 66. Persoon E, Fu KS. 1977. Shape discrimination using Fourier descriptors. IEEE Trans. Syst. Man. Cybern. 7, 170–179. (doi:10.1109/TSMC.1977.4309681)

- 67. Kaneko T, Okudaira M. 1985. Encoding of arbitrary curves based on the chain code representation. IEEE Trans. Commun. 33, 697–707. (doi:10.1109/TCOM.1985.1096361)

- 68. Mokhtarian F, Abbasi S, Kittler J. 1997. Efficient and robust retrieval by shape content through curvature scale space. Ser. Softw. Eng. Knowl. Eng. 8, 51–58. (doi:10.1142/9789812797988_0005)

- 69. Hu MK. 1962. Visual pattern recognition by moment invariants. IRE Trans. Inf. Theory 8, 179–187. (doi:10.1109/TIT.1962.1057692)

- 70. Khotanzad A, Hong YH. 1990. Invariant image recognition by Zernike moments. IEEE Trans. Pattern. Anal. Mach. Intell. 12, 489–497. (doi:10.1109/34.55109)

- 71. Stürzl W, Mallot HA. 2006. Efficient visual homing based on Fourier transformed panoramic images. Rob. Auton. Syst. 54, 300–313. (doi:10.1016/j.robot.2005.12.001)

- 72. Franz MO, Schölkopf B, Mallot HA, Bülthoff HH. 1998. Where did I take that snapshot? Scene-based homing by image matching. Biol. Cybern. 79, 191–202. (doi:10.1007/s004220050470)

- 73. Mangan M. 2011. Visual homing in field crickets and desert ants: a comparative behavioural and modelling study. Edinburgh, UK: The University of Edinburgh.

- 74. Kazhdan M, Funkhouser T, Rusinkiewicz S. 2003. Rotation invariant spherical harmonic representation of 3D shape descriptors. In Symposium on geometry processing, Aachen, Germany, 23–25 June 2003, vol. 6, pp. 156–164. Aire-la-Ville, Switzerland: Eurographics Association.

- 75. Stone T, Differt D, Milford M, Webb B. 2016. Skyline-based localisation for aggressively manoeuvring robots using UV sensors and spherical harmonics. In Proc. 2016 IEEE Int. Conf. on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016, pp. 5615–5622. New York, NY: IEEE.

- 76. Maffei L, Fiorentini A. 1973. The visual cortex as a spatial frequency analyser. Vision Res. 13, 1255–1267. (doi:10.1016/0042-6989(73)90201-0)

- 77. Daugman JG. 1985. Uncertainty relation for resolution in space, spatial frequency, and orientation optimized by two-dimensional visual cortical filters. J. Opt. Soc. Am. A 2, 1160–1169. (doi:10.1364/JOSAA.2.001160)

- 78. Bell AJ, Sejnowski TJ. 1997. The ‘independent components’ of natural scenes are edge filters. Vision Res. 37, 3327–3338. (doi:10.1016/S0042-6989(97)00121-1)

- 79. O'Carroll D. 1993. Feature-detecting neurons in dragonflies. Nature 362, 541–543. (doi:10.1038/362541a0)

- 80. Seelig JD, Jayaraman V. 2013. Feature detection and orientation tuning in the Drosophila central complex. Nature 503, 262–266. (doi:10.1038/nature12601)

- 81. Oliva A, Torralba A. 2001. Modeling the shape of the scene: a holistic representation of the spatial envelope. Int. J. Comput. Vis. 42, 145–175. (doi:10.1023/A:1011139631724)

- 82. Wystrach A, Dewar AD, Graham P. 2014. Insect vision: emergence of pattern recognition from coarse encoding. Curr. Biol. 24, R78–R80. (doi:10.1016/j.cub.2013.11.054)

- 83. Dewar AD, Wystrach A, Graham P, Philippides A. 2015. Navigation-specific neural coding in the visual system of Drosophila. Biosystems 136, 120–127. (doi:10.1016/j.biosystems.2015.07.008)

- 84. Dewar AD, Wystrach A, Philippides A, Graham P. 2017. Neural coding in the visual system of Drosophila melanogaster: how do small neural populations support visually guided behaviours? PLoS Comput. Biol. 13, e1005735 (doi:10.1371/journal.pcbi.1005735)

- 85. LeCun Y, Huang FJ, Bottou L. 2004. Learning methods for generic object recognition with invariance to pose and lighting. In Proc. of the 2004 IEEE Computer Society Conf. on Computer Vision and Pattern Recognition, Washington, DC, 27 June–2 July 2004, vol. 2, pp. 97–104. New York, NY: IEEE.

- 86. Arandjelović R, Gronat P, Torii A, Pajdla T, Sivic J. 2015. NetVLAD: CNN architecture for weakly supervised place recognition. (http://arxiv.org/abs/1511.07247)

- 87. Walker C, Graham P, Philippides A. 2017. Using deep autoencoders to investigate image matching in visual navigation. In Biomimetic and biohybrid systems. Living machines 2017 (eds M Mangan, M Cutkosky, A Mura, P Verschure, T Prescott, N Lepora), pp. 465–474. Lecture Notes in Computer Science, vol. 10384. Cham, Switzerland: Springer.

- 88. Krizhevsky A, Sutskever I, Hinton GE. 2012. ImageNet classification with deep convolutional neural networks. In Advances in neural information processing systems (eds F Pereira, CJC Burges, L Bottou, KQ Weinberger), pp. 1097–1105. Red Hook, NY: Curran Associates.

- 89. Wystrach A, Lagogiannis K, Webb B. 2016. Continuous lateral oscillations as a core mechanism for taxis in Drosophila larvae. eLife 5, 417 (doi:10.7554/eLife.15504.001)

- 90. Kodzhabashev A, Mangan M. 2015. Route following without scanning. In Biomimetic and biohybrid systems. Living machines 2015 (eds S Wilson, P Verschure, A Mura, T Prescott), pp. 199–210. Lecture Notes in Computer Science, vol. 9222. Cham, Switzerland: Springer.

- 91. Lent DD, Graham P, Collett TS. 2013. Phase-dependent visual control of the zigzag paths of navigating wood ants. Curr. Biol. 23, 2393–2399. (doi:10.1016/j.cub.2013.10.014)
