Abstract

Abstract words refer to concepts that cannot be directly experienced through our senses (e.g. truth, morality). How we ground the meanings of abstract words is one of the deepest problems in cognitive science today. We investigated this question in an experiment in which 62 participants were asked to communicate the meanings of words (20 abstract nouns, e.g. impulse; 10 concrete nouns, e.g. insect) to a partner without using the words themselves (the taboo task). We analysed the speech and associated gestures that participants used to communicate the meaning of each word in the taboo task. Analysis of verbal and gestural data yielded a number of insights. When communicating about the meanings of abstract words, participants' speech referenced more people and introspections. In contrast, the meanings of concrete words were communicated by referencing more objects and entities. Gesture results showed that when participants spoke about abstract word meanings their speech was accompanied by more metaphorical and beat gestures, and speech about concrete word meanings was accompanied by more iconic gestures. Taken together, the results suggest that abstract meanings are best captured by a model that allows dynamic access to multiple representation systems.

This article is part of the theme issue ‘Varieties of abstract concepts: development, use and representation in the brain’.

Keywords: abstract meaning, concrete meaning, gesture, language production, concepts

1. Introduction

Abstract words allow us to convey important human ideas like scientific (e.g. theory, calculus) and social (e.g. justice) concepts, and extend our capacity to convey ideas beyond the physical reality of the here and now. Despite the fact that abstract words make up the majority of our lexicon [1], empirical studies of word meaning have historically focused on studying concrete words (e.g. truck; [2–4]). There are intuitive differences between concrete and abstract words: our understanding of truck unfolds against the physical reality in which we operate. We can perceive concrete referents through various senses and we can physically interact with them. Their physical existence and our typical perceptual and motor experiences with them provide stable scaffolding. Indeed, there is mounting evidence for the involvement of sensorimotor systems in the processing of concrete words (e.g. [5,6]), and many theories of conceptual representation assume a tight coupling between sensorimotor and conceptual systems (e.g. [7]; for a review see [8]). It is much harder to make the case that processing of abstract words such as truth is aided by these systems. In fact, abstract words are most commonly characterized in the literature by the absence of physical or spatial grounding: ‘Roughly speaking, an abstract concept refers to entities that are neither purely physical nor spatially constrained’ [9, p. 129].

Although challenging, investigating the representation and processing of abstract words is essential if we are to understand this central characteristic of human cognition. In our view, concreteness is a continuum ranging from highly concrete concepts, which are typically external entities that can be perceived by the visual and haptic senses, to highly abstract concepts, which are typically constructs that are learned through language and introspection rather than perception (for a similar definition, see [10]). Thus far, most previous work on abstract word meaning has involved recognition or comprehension tasks. A few studies have used feature listing or property generation tasks to study abstract meaning [1,9,11]. In these studies, participants are asked to list all characteristics of target concepts that they can think of. The assumption is that participant-generated features or properties reflect what people know about those target concepts. For instance, participants tend to list more communicative acts, social actions and cognitive states or feelings as properties for abstract words than for concrete words [1]. One limitation of this approach, however, is that participants tend to list fewer properties for abstract than for concrete concepts [12]. Indeed, the single words or short phrases that participants typically use to convey each property may not capture the more complex relational or social properties that are proposed to be important for abstract concepts (e.g. [11,13]). Abstract concepts may be more difficult to separate from situations or contexts and distil into short words or phrases [9].

Beyond these feature-listing studies, there has been limited examination of abstract meanings using language production tasks, yet investigating the ways people speak about abstract meaning in communicative context could be informative. Further, as Goldin-Meadow & Brentari [14] have argued, speech is in its most natural form when produced with gesture. The words and the gestures that people use to convey word meanings could offer valuable insights into the mechanisms involved in conceptual processing. The present study was motivated by these possibilities. While there is some precedent for the use of verbal utterance data for this purpose [9], our examination of gestures is novel and is particularly well suited to testing the predictions of certain theories, described below (e.g. Conceptual Metaphor Theory, [15]).

(a). Verbal utterances and abstract meanings

Examining the ways that people talk about abstract concepts could test several theoretical proposals about abstract meaning. The first is the situated conceptualization account [9]. Barsalou and Wiemer-Hastings hypothesized that there were both similarities and differences in the representation of abstract and concrete words. That is, while situations are important to both concrete and abstract meanings, the nature of the situations differs. Background situations for concrete meanings tend to be based around objects, while background situations for abstract meanings tend to be based around introspections and events. Further, Barsalou and Wiemer-Hastings proposed that simulation processes could be important even for abstract meaning; for instance, introspective content (drive states, mental states, emotion states, see also [16]) could be simulated in the brain's modality-specific systems. Indeed, the consensus that is beginning to emerge across theories and studies is that abstract word and sentence meanings are, at least in part, simulated in sensorimotor terms and that such simulations are involved in language comprehension [17–20].

To test these claims, Barsalou & Wiemer-Hastings [9] conducted a study in which participants were asked to verbally generate properties of three abstract words, three concrete words and three words of intermediate concreteness. As hypothesized, their results showed that participants tended to describe more event (properties of settings or events) and introspective properties (e.g. mental states, emotions of someone in a situation) for abstract word meanings, and more entity properties (properties of physical objects) for concrete word meanings. Analyses also suggested that person information was important to abstract meanings; Barsalou and Wiemer-Hastings noted that properties generated for abstract words often included social information about people and relationships.

Similarly, Wiemer-Hastings & Xu [21] asked participants to generate properties for words sampled from three different levels of abstractness and three levels of concreteness. Further, property generation was constrained by asking participants to list either item properties, context elements always occurring with the item, or specific associated context. Results showed that, as in the Barsalou and Wiemer-Hastings study, participants generated more introspective properties for abstract words than for concrete words, and this difference was more pronounced when participants were instructed to generate context elements for the target item. Further, the most abstract target words were associated with the most introspective properties and fewest entity properties, while the most concrete target words were associated with the most entity properties and fewest introspective properties. The authors concluded that the meanings of abstract words ‘are anchored in situations and regularly involve subjective experiences, such as cognitive processes and emotion.’ (p. 731). Further, they noted that it was not obvious how such mental processes or emotions could be tied to perceptual simulations, and suggested that the notion of simulation for abstract meaning required further examination. This stands in contrast to Barsalou & Wiemer-Hastings' [9] assertion that introspective content could be simulated in mental images.

Barsalou & Wiemer-Hastings [9] argued that concepts are always experienced within situations, and the content of those situations involves introspective states, along with agents, objects, events, etc. Since that content is perceived when the concept is initially experienced, it can be re-enacted later when the concept is retrieved. As such, according to this grounded, situated view of abstract meaning, these kinds of information should also be prevalent in the verbal utterances produced by participants to describe word meanings in the present study.

The account offered by Barsalou & Wiemer-Hastings [9] was based on the primacy of perceptual simulation for conceptual processing. Several recent proposals offer a broader, multidimensional account, suggesting that conceptual knowledge is represented in multimodal systems [22–24]. That is, word meanings are grounded in sensory, motor, emotion and language systems, and the representations of abstract words can be based in more than perception. For example, Reilly et al. [25] proposed that perceptual and linguistic systems are both important to semantic representation, and that these systems converge on a single semantic store. Reilly et al. further proposed that common dimensions underlie the meanings of abstract and concrete words, including emotion, social interaction, morality and valence. Although the dimensions of representation are common to both abstract and concrete words, such proposals do allow for the notion that different dimensions are relatively more important for the representation of different kinds of meanings. Indeed, empirical work suggested that some dimensions are relatively more important to abstract meaning (e.g. thought, morality) and others more important to concrete meaning (e.g. sensation, ease of teaching; [26]). Similarly, with the Words as Social Tools proposal, Borghi & Binkofski [27] argued that grounding through sensorimotor systems is important for all concepts, and that both linguistic (auditory processing, language production, etc.) and social areas are emphasized to represent the meanings of abstract words. Thus, these accounts predict that introspective, social and person information should be more prevalent in descriptions of abstract word meanings than in descriptions of concrete word meanings.

In contrast, the Qualitatively Different Representational Framework proposes distinct organizing principles for abstract and concrete representations [28,29]. That is, associative information (e.g. death and taxes) is proposed to be particularly important to the meanings of abstract words, whereas categorical information (semantic similarity; e.g. chicken and turkey) is the organizing principle for representations of concrete meanings. As such, associative information will be accessed more readily for abstract words than for concrete words [29]. Thus, by this account, relatively more associative information should be generated in order to communicate abstract meanings than concrete meanings in the present study.

(b). Gestures and abstract meanings

We can also test proposals about abstract meaning by examining the ways that people gesture about abstract concepts. In the present study, our focus is on gestures of the hands. Production of meaningful gestures for abstract words could provide novel insight into the representation of abstract concepts. Gestures are particularly well suited for conveying spatial, relational and motoric information [30]. As Hostetter & Alibali [31] articulated in their Gesture as Simulated Action Theory, gestures can tell us a great deal about the underlying conceptual system: ‘sensorimotor representations that underlie speaking, we argue, are the bases for speech-accompanying gestures’ (p. 499). Hostetter and Alibali argued that language production involves sensorimotor representations. It is these representations, and specifically mental imagery, that give rise to gestures. Gestures that carry representational meaning depict the spatial, physical and configurational information inherent in the simulated mental image. When the simulation is strong enough, it has the potential to exceed the individual's unique gesturing threshold and activate the premotor and motor areas. Because gestures are overwhelmingly observed during language production and not comprehension, Hostetter and Alibali proposed that articulatory movements necessary for speech production cascade or leak out and ‘bring motor movement along’, leading to gesture.

The proposal that Hostetter & Alibali [31] make for abstract meanings is based in Conceptual Metaphor Theory [15] wherein our understandings of abstract ideas are grounded in our knowledge of the physical world (for criticisms of this view, see [32]). In this view, our pervasive use of metaphors suggests that we use metaphors as concrete ‘vehicles’ to structure our understanding of abstract ideas. For abstract concepts, some of the relevant metaphors may be spatial (e.g. good is up, bad is down, as in I am feeling up today; he is really low these days). Since gestures are well suited to convey spatial information, this account suggests that a reliance on metaphor to ground the meanings of abstract words may be reflected in gestures used to convey the meanings of those words. Gestures can serve to convey the metaphoric mapping of (abstract) target domain to (concrete) source domain [33], as when a speaker is describing a theory and makes a framing or cupping gesture, suggesting reliance on the ideas are containers metaphor. If such mappings reflect an important part of representations for abstract meanings, then one would expect higher rates of metaphorical gesture for abstract than for concrete words in the present study.

In contrast, Barsalou & Wiemer-Hastings [9] proposed that while metaphors sometimes augment the meanings of abstract concepts, they are not central to their content. Instead, they argued that what is central to content for abstract concepts are direct internal and external experiences of those concepts. Those experiences give structure to the representation of abstract concepts, and when complementary metaphors are involved they map to those representations that are built from direct experience. By this view, metaphorical gestures might not be more frequent for abstract than for concrete words.

By examining the gestures produced when communicating abstract concepts, the present study will build on the findings of previous research that has examined how abstract concepts are conveyed in sign languages (e.g. [34,35]). For instance, based on their analysis of Italian Sign Language, Borghi et al. [34] concluded that many signs for abstract concepts were based on underlying metaphors, in keeping with Conceptual Metaphor Theory. They argued, further, that metaphors could not explain all abstract signs; some signs conveyed meaning through situations or emotion, consistent with a more multidimensional view of abstract concepts.

(c). The present study

To test these predictions about grounding of abstract meaning, we devised a novel task in which we could observe what participants communicate with their words and their gestures when asked to explicitly convey the meanings of abstract and concrete words to a partner. Since this task had not previously been used to study abstract meaning, we anticipated that participants might show a great deal of variability in their approach to the task and in their responses. To try to measure some of this variance, we included two individual difference measures that we thought might be related to participants' performance in the task: vocabulary and Need for Cognition. Need for Cognition measures ‘the tendency for an individual to engage in and enjoy thinking’ [36, p. 116]. By including these two dimensions in our analyses we hoped to distinguish variability that is best attributed to participants' language and cognitive abilities from that based in conceptual knowledge per se.

We expected that the meanings of abstract words would be more difficult to convey than those of equally frequent concrete words. This would be an extension of the standard concreteness effect, whereby abstract words are more difficult to remember and comprehend than are concrete words [37–40]. Our real interest, however, was not in this accuracy difference, but in differences in the information used to convey the meanings of abstract and concrete words, even after we had accounted for differences in accuracy.

2. Methods

(a). Participants

The participants were 62 undergraduate students (30 female, mean age = 20.58, s.d. = 3.45) recruited in 31 same-gender dyads through the Department of Psychology Research Participation System. Participants received bonus course credit in exchange for participation, and all declared English as their first language. All dyads indicated that they had no prior relationship.

(b). Procedure

Participants completed three tasks: (i) vocabulary assessment (the North American Adult Reading Test, NAART35; [41]), (ii) Need for Cognition scale [36], and (iii) the taboo task. The objective of the taboo task was for a participant (i.e. the clue-giver) to have their partner guess the target word on the clue-giver's card by providing verbal descriptions without saying the target word itself (i.e. the taboo word). During the taboo task participants were seated across from each other, with about 1 m of open space between chairs. The experimenter was also seated in the room, to the side and in range to hand cards to the clue-giver. After each card was presented to the clue-giver it was placed in a small stand so that they could see it but had both hands free during the trial. Participants were given two practice trials in order to ensure that they understood the task. A random draw determined which member of the dyad assumed the clue-giver role on the first trial, and then clue-giver and partner roles were alternated between trials thereafter.

The target words were 20 abstract and 10 concrete nouns, selected from the stimuli used in Zdrazilova & Pexman [19], and listed in the Appendix. The goal was to select abstract and concrete words that were representative of abstract and concrete words more generally. As such, we chose some abstract words that had more valenced meanings and others that had more neutral meanings. As illustrated in table 1, the abstract words had significantly lower concreteness ratings [10] than did the concrete words, but abstract and concrete words had equivalent word frequency (LogSUBTLWF, [42]). Further, comparison of the mean concreteness and valence [43] values of the taboo task words with those of a much larger set (the 5000 abstract and 5000 concrete words presented in the Calgary Semantic Decision Project [44]) suggests reasonable representativeness for the taboo task words, although the taboo task words were somewhat more frequent than those in the larger sets.

Table 1.

Mean item characteristics for abstract and concrete words used in the taboo task, and, for comparison, mean characteristics for larger sets of abstract and concrete words. Note. For valence from Calgary Semantic Decision Project items, n = 2499 for abstract words and n = 2763 for concrete words. (Standard deviations in parentheses.)

| | n | concreteness | valence | frequency (LogSUBTLWF) |
|---|---|---|---|---|
| taboo task stimuli | | | | |
| abstract words | 20 | 2.18 (0.37) | 5.36 (1.41) | 2.75 (0.44) |
| concrete words | 10 | 4.62 (0.39) | 5.33 (0.91) | 2.94 (0.61) |
| p-value for difference test | | <0.001 | 0.96 | 0.39 |
| Calgary Semantic Decision Project stimuli | | | | |
| abstract words | 5000 | 2.02 (0.28) | 5.01 (1.43) | 1.60 (0.77) |
| concrete words | 5000 | 4.28 (0.43) | 5.20 (1.14) | 1.61 (0.77) |

Target words were presented in a blocked order in the taboo task. That is, all abstract words were presented first, in a different random order for each dyad, and all concrete words were presented second, also in random order. We presented the abstract words first since these were of primary interest and we did not want participants' strategies for communicating concrete words to influence their approach to the abstract words (see related discussion of carry-over effects in [9]).
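The blocked, per-dyad randomization described above can be sketched as follows. This is a minimal illustration under stated assumptions, not the procedure the authors actually ran: the helper name `blocked_order`, the per-dyad seed passed to Python's `random.Random`, and the placeholder word lists are all hypothetical (only impulse and insect are named in the text; the full item list is in the Appendix).

```python
import random

def blocked_order(abstract_words, concrete_words, seed):
    """Hypothetical helper: one dyad's trial order, with all abstract words
    first and all concrete words second, each block shuffled independently."""
    rng = random.Random(seed)          # a separate seed per dyad gives each dyad its own shuffle
    abstract_block = list(abstract_words)
    concrete_block = list(concrete_words)
    rng.shuffle(abstract_block)        # random order within the abstract block
    rng.shuffle(concrete_block)        # random order within the concrete block
    return abstract_block + concrete_block

# Placeholder items (hypothetical), padded to 20 abstract and 10 concrete words.
abstract = ["impulse"] + [f"abstract_{i}" for i in range(2, 21)]
concrete = ["insect"] + [f"concrete_{i}" for i in range(2, 11)]

for dyad in range(1, 4):
    order = blocked_order(abstract, concrete, seed=dyad)
    print(dyad, order[:3], "...", order[-2:])
```

Shuffling within each block, rather than across the whole list, is what keeps every concrete trial after every abstract trial while still varying the order from dyad to dyad.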

The taboo task was videotaped with two cameras so that gestures of both members of each dyad were clearly visible for coding. Although participants were not explicitly instructed to gesture, all did so to some degree and so we coded the clue-giver's verbal utterances and also their gestures during communication of abstract and concrete word meanings. All videotaped interactions were transcribed using Elan transcription software (https://tla.mpi.nl/tools/tla-tools/elan/). The transcripts were coded to capture detailed verbal and gestural information. Separate frameworks guided the segmentation and coding process for verbal utterance data and for gesture data; the details for each are presented next.

(i). Verbal utterance coding

The transcribed participant protocols were segmented into 4803 separate utterances so that each segment represented a complete unit of talk bounded by the speaker's silence or by the speaker's shift to a new topic or idea. Next, each segment was assigned the one of 20 category codes (electronic supplementary material) that best captured the type of property the participant generated with that utterance. Coding categories were adapted from Barsalou & Wiemer-Hastings [9] and Recchia & Jones [1]. A primary coder coded all of the data. To check the fidelity of the primary coding, a second coder who was naive to the study purpose independently coded 30% of the data, selected randomly from all transcripts. Agreement between coders for these transcripts was 87%, and disagreements were resolved through consensus.
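Percentage agreement of the kind reported here is the proportion of double-coded segments that received the same code from both coders. A minimal sketch, assuming each coder's output is a list of category labels aligned segment by segment; the function name and the labels below are hypothetical and are not drawn from the study's data.

```python
def percent_agreement(codes_a, codes_b):
    """Proportion of segments given identical codes by two coders."""
    if len(codes_a) != len(codes_b):
        raise ValueError("coders must have coded the same segments")
    if not codes_a:
        raise ValueError("no segments to compare")
    matches = sum(a == b for a, b in zip(codes_a, codes_b))
    return matches / len(codes_a)

# Hypothetical double-coded subset (illustrative labels only).
primary = ["taxonomic", "introspection", "event", "association"]
second = ["taxonomic", "introspection", "association", "association"]
print(f"{percent_agreement(primary, second):.0%}")  # 75% (3 of 4 segments match)
```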

(ii). Gesture coding

We used the framework first proposed by McNeill [45] and refined and validated by Kita et al. [46] to identify and segment 3998 separate gestures. The unit of analysis for the gesture data was the movement unit [46]: hand movement that began when the hand departed from its resting position and ended when the hand returned to its resting position. When gestures were continuous, involving only a partial return to a resting position, segmentation was guided by the presence of a stroke [45]. Once segmented, the gesture data were assigned to one of seven categories (electronic supplementary material). Coding categories were adapted from Cartmill et al. [47] to fit the nature of the taboo task. As with the utterance data, a primary coder coded all of the data and then 30% of the data were selected for second coding. For gesture data, initial agreement between coders was 91%, and disagreements were again resolved through consensus.
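The movement-unit definition (a unit runs from the moment the hand leaves its resting position until it returns to rest) can be expressed as a simple segmentation over per-frame annotations. This sketch assumes a hypothetical boolean "at rest" flag for each video frame; it deliberately does not model the stroke-based splitting applied to continuous gestures with only a partial return to rest, which relied on coder judgement.

```python
def movement_units(at_rest):
    """Segment frames into movement units (hypothetical helper).

    at_rest: sequence of booleans, one per frame (True = hand at rest).
    Returns (start, end) frame index pairs, end exclusive: a unit starts
    when the hand departs from rest and ends when it returns to rest.
    """
    units = []
    start = None
    for i, resting in enumerate(at_rest):
        if not resting and start is None:
            start = i                      # hand departs from rest
        elif resting and start is not None:
            units.append((start, i))       # hand returns to rest
            start = None
    if start is not None:                  # recording ended mid-movement
        units.append((start, len(at_rest)))
    return units

frames = [True, True, False, False, True, False, True, False]
print(movement_units(frames))  # [(2, 4), (5, 6), (7, 8)]
```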

3. Results

The data were analysed using Bayesian mixed effects multinomial logistic regressions. These models were computed using the statistical software R [48] and the package ‘brms’ [49], which fits Bayesian mixed effects models using the Stan programming language. In short, this approach determines the probability that a model's parameters take on different values, given the observed data (viz., the posterior). Following Bayes' theorem, this is proportional to a combination of our prior expectations for those parameter values (viz., the prior) and the likelihood that we would have observed our data given different parameter values (viz., the likelihood). In practice, functions describing the prior and the likelihood are combined to create a posterior density function. This is then sampled from,1 and the resulting sample can be used to establish the 95% credible intervals: the range of values with a 95% probability of containing the true value of a given parameter. When a given parameter's credible interval does not include zero, we considered it significantly different from zero, and worth interpreting. Analyses were run using the default setting of computing four sampling chains, each with 2000 iterations; the first 1000 of these are treated as warmups, resulting in 4000 posterior samples. Because of the lack of previous literature on the topic, models were fit using flat priors for fixed effects, and default weakly informative priors (i.e. half Student's t distribution with three degrees of freedom) for random effects.
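The sampling arithmetic and the decision rule described above can be illustrated with a short sketch. Four chains of 2000 iterations, each discarding its first 1000 as warmup, leave 4 × (2000 − 1000) = 4000 posterior draws. The interval computed below is the equal-tailed (2.5th to 97.5th percentile) interval, the percentile-based form that brms summaries report by default. The helper names are hypothetical, and the draws are simulated from a normal distribution loosely modelled on the object/entity word-type estimate in table 3 (B = 1.34, s.e. = 0.42); they are not output from the study's models.

```python
import random
import statistics

def n_posterior_draws(chains=4, iterations=2000, warmup=1000):
    """Post-warmup draws retained across all chains."""
    return chains * (iterations - warmup)

def credible_interval(draws):
    """Equal-tailed 95% interval: the 2.5th and 97.5th percentiles.

    statistics.quantiles with n=40 returns 39 cut points at 1/40, ..., 39/40,
    so the first and last cut points are the 2.5th and 97.5th percentiles.
    """
    cuts = statistics.quantiles(draws, n=40, method="inclusive")
    return cuts[0], cuts[-1]

def excludes_zero(interval):
    """The rule used in the text: interpret a parameter if its CI excludes 0."""
    lo, hi = interval
    return lo > 0 or hi < 0

rng = random.Random(1)
n = n_posterior_draws()                            # 4 * (2000 - 1000) = 4000
draws = [rng.gauss(1.34, 0.42) for _ in range(n)]  # simulated stand-in posterior
ci = credible_interval(draws)
print(n, [round(x, 2) for x in ci], excludes_zero(ci))
```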

The goal of our analyses was to examine how the types of information conveyed by participants' verbal utterances and gestures differed as a function of word type (abstract versus concrete); thus, word type was included as our main fixed effect of interest. Since participants were less successful at guessing the taboo word for abstract (M = 0.72, s.d. = 0.45) than for concrete words (M = 0.95, s.d. = 0.26, t(895.63) = 9.85, p < 0.001), we also included trial accuracy as a fixed effect in our analyses. Models included random subject intercepts, as well as random subject slopes for word type and trial accuracy. They also included random item intercepts, as well as a random item slope for trial accuracy. Random effects help generalize results beyond a particular set of subjects and items. They accomplish this by accounting for subject and item level variation in the tendency to make utterances and gestures of each type, and in the effects of word type and trial accuracy. Trials on which participants accidentally said the taboo word, or passed, were not included in the analyses (2.68% of abstract trials; 0.65% of concrete trials).
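The fractional degrees of freedom (895.63) suggest a Welch (unequal-variance) t-test, in which the degrees of freedom are estimated with the Welch-Satterthwaite approximation. The sketch below computes that statistic from group summaries; the function name is hypothetical, and because the exact per-condition trial counts entering the reported test are not stated alongside it, the example uses simple made-up inputs rather than attempting to reproduce the reported values.

```python
import math

def welch_t(mean1, sd1, n1, mean2, sd2, n2):
    """Welch's t statistic and Welch-Satterthwaite degrees of freedom."""
    v1 = sd1 ** 2 / n1                      # squared standard error, group 1
    v2 = sd2 ** 2 / n2                      # squared standard error, group 2
    t = (mean1 - mean2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    return t, df

# With equal SDs and equal group sizes the df reduce to n1 + n2 - 2.
t, df = welch_t(1.0, 1.0, 10, 0.0, 1.0, 10)
print(round(t, 3), round(df, 3))   # 2.236 18.0
```

When the two groups differ in both size and variance, as accuracy on abstract and concrete trials does here, the estimated df fall below n1 + n2 − 2 and are generally not whole numbers.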

Initially, we ran two versions of the analyses: one version that included the individual differences variables (NAART and Need for Cognition) and one version that excluded those variables. Since the key results for word types were the same in the two analyses, we report only the version in which the individual difference values were excluded in order to help the reader focus on the main findings.

(a). Verbal utterance analyses

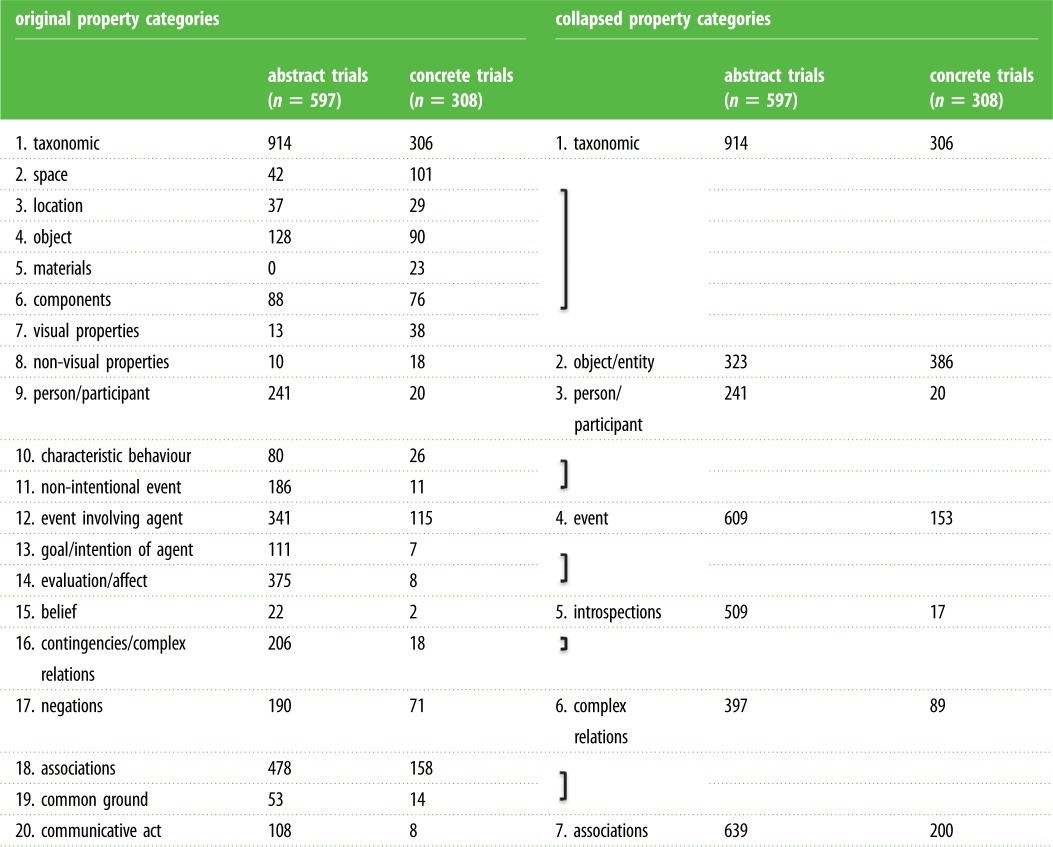

Thirteen of the original 20 verbal utterance categories each accounted for fewer than 5% of total observations, so we combined similar categories, collapsing the original categories into seven broader categories (table 2). The taxonomic category was used as the reference category because it was the most common utterance type and because the proportion of taxonomic utterances did not vary by word type (25.19% of utterances on abstract word trials were taxonomic; 26.15% of utterances on concrete word trials were taxonomic).2 In addition, we had no a priori hypothesis about a relationship between word type and production of taxonomic utterances.

Table 2.

Verbal utterance categories and frequencies, for 20 original and 7 collapsed categories.


All R̂ values were ≤1.01, indicating that the analysis had reached convergence [51] (i.e. additional sampling would be unlikely to change the results). Results (table 3) showed that the odds of producing a person/participant utterance were 7.39 times higher, and the odds of producing an introspection utterance were 11.47 times higher, for abstract compared to concrete words; conversely, the odds of producing an object/entity utterance were 3.82 times higher for concrete compared to abstract words. In addition, the odds of producing an object/entity utterance were 1.45 times higher, and the odds of producing an association utterance were 1.77 times higher, on accurate compared to inaccurate trials.
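Because the coefficients in table 3 are on the log-odds scale, with abstract words as the reference level, the odds ratios reported above follow from exponentiating B, or from exponentiating −B when the comparison is reversed (abstract relative to concrete). A short check using the posterior-mean estimates from table 3; as in the table, these are exponentiated posterior means rather than posterior means of the odds ratios themselves.

```python
import math

# Word-type coefficients (concrete vs. abstract) from table 3, each relative
# to the taxonomic reference category.
word_type_b = {
    "object/entity": 1.34,
    "person/participant": -2.00,
    "introspection": -2.44,
}

for category, b in word_type_b.items():
    concrete_vs_abstract = math.exp(b)    # the Exp(B) column
    abstract_vs_concrete = math.exp(-b)   # reciprocal: abstract relative to concrete
    print(f"{category}: Exp(B) = {concrete_vs_abstract:.2f}, "
          f"abstract/concrete = {abstract_vs_concrete:.2f}")
```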


Table 3.

Results of the multinomial logistic regression predicting verbal utterance type, using taxonomic utterances as the reference category. Note. Leave-one-out information criterion = 16036.75, s.e. = 91.22. Percentage abstract (concrete) refers to the percentage of utterances generated on abstract (concrete) trials that belonged to each utterance category. Abstract words and inaccurate trials were treated as reference categories. B, estimated regression coefficient, based on the mean of the posterior distribution.

| | percentage abstract | percentage concrete | B | s.e. | Exp(B) | 95% CI |
|---|---|---|---|---|---|---|
| taxonomic | 25.19% | 26.15% | | | | |
| object/entity | 8.85% | 32.99% | | | | |
| intercept | | | −1.53 | 0.24 | 0.22 | [−2.01, −1.04]<sup>a</sup> |
| word type | | | 1.34 | 0.42 | 3.82 | [0.49, 2.15]<sup>a</sup> |
| accuracy | | | 0.37 | 0.17 | 1.45 | [0.03, 0.70]<sup>a</sup> |
| person/participant | 6.64% | 1.71% | | | | |
| intercept | | | −1.93 | 0.45 | 0.15 | [−2.82, −1.07]<sup>a</sup> |
| word type | | | −2.00 | 0.89 | 0.14 | [−3.88, −0.36]<sup>a</sup> |
| accuracy | | | −0.36 | 0.28 | 0.70 | [−0.94, 0.17] |
| event | 16.78% | 13.08% | | | | |
| intercept | | | −0.56 | 0.19 | 0.57 | [−0.93, −0.19]<sup>a</sup> |
| word type | | | −0.34 | 0.36 | 0.71 | [−1.06, 0.33] |
| accuracy | | | 0.06 | 0.16 | 1.06 | [−0.26, 0.37] |
| introspection | 14.03% | 1.45% | | | | |
| intercept | | | −0.89 | 0.28 | 0.41 | [−1.47, −0.34]<sup>a</sup> |
| word type | | | −2.44 | 0.59 | 0.09 | [−3.68, −1.35]<sup>a</sup> |
| accuracy | | | −0.07 | 0.16 | 0.93 | [−0.39, 0.24] |
| complex relation | 10.94% | 7.61% | | | | |
| intercept | | | −0.72 | 0.18 | 0.49 | [−1.06, −0.39]<sup>a</sup> |
| word type | | | −0.38 | 0.22 | 0.68 | [−0.82, 0.05] |
| accuracy | | | −0.31 | 0.17 | 0.73 | [−0.64, 0.01] |
| association | 17.58% | 17.01% | | | | |
| intercept | | | −0.72 | 0.15 | 0.49 | [−1.00, −0.43]<sup>a</sup> |
| word type | | | −0.10 | 0.24 | 0.90 | [−0.57, 0.35] |
| accuracy | | | 0.57 | 0.14 | 1.77 | [0.29, 0.84]<sup>a</sup> |

<sup>a</sup>Parameter estimate whose 95% credible interval does not include 0.
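To see how a multinomial logistic model turns these coefficients into category probabilities, the linear predictor for each non-reference category is exponentiated and normalized (a softmax), with the taxonomic reference category fixed at a linear predictor of zero. The sketch below is a plug-in illustration only: it uses the posterior-mean fixed effects from table 3 for inaccurate trials, ignores the random subject and item effects, and does not propagate posterior uncertainty, so its output should not be expected to match the observed percentages.

```python
import math

# Posterior-mean fixed effects from table 3: (intercept, word type).
# The intercept applies to abstract words on inaccurate trials; "word type"
# is the shift for concrete words. Taxonomic is the reference (predictor 0).
coefs = {
    "object/entity": (-1.53, 1.34),
    "person/participant": (-1.93, -2.00),
    "event": (-0.56, -0.34),
    "introspection": (-0.89, -2.44),
    "complex relation": (-0.72, -0.38),
    "association": (-0.72, -0.10),
}

def category_probs(concrete):
    """Softmax over utterance categories with the reference pinned at 0."""
    eta = {"taxonomic": 0.0}
    for cat, (intercept, word_type) in coefs.items():
        eta[cat] = intercept + (word_type if concrete else 0.0)
    denom = sum(math.exp(v) for v in eta.values())
    return {cat: math.exp(v) / denom for cat, v in eta.items()}

abstract_p = category_probs(concrete=False)
concrete_p = category_probs(concrete=True)
for cat in abstract_p:
    print(f"{cat:20s} abstract {abstract_p[cat]:.3f}  concrete {concrete_p[cat]:.3f}")
```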

(b). Gesture analyses

Frequency data for the gesture categories are presented in table 4. For analysis of the gesture data, we used communicative gestures as the reference category because it was the most common gesture type after metaphorical gesture (which was not chosen as a reference category due to its theoretical importance), and because proportions of communicative gestures did not vary by word type (19.30% of gestures on abstract word trials were communicative; 22.55% of gestures on concrete word trials were communicative).3 In addition, we did not have any a priori hypothesis of a relationship between word type and production of communicative gestures.

Table 4.

Gesture categories and frequencies.

| gesture categories | abstract trials (n = 597) | concrete trials (n = 308) |
|---|---|---|
| conventional | 83 | 37 |
| iconic | 221 | 123 |
| metaphorical | 961 | 171 |
| beat | 588 | 86 |
| deictic | 244 | 77 |
| communicative | 614 | 171 |
| holds | 526 | 95 |

All R̂ values were ≤1.01, indicating that the analysis had reached convergence [51]. Results (table 5) showed that the odds of producing a metaphorical gesture were 1.57 times higher, and the odds of producing a beat gesture were 2.01 times higher, for abstract compared to concrete words; conversely, the odds of producing an iconic gesture were 2.61 times higher for concrete compared to abstract words. In addition, the odds of producing a deictic gesture were 1.73 times higher on accurate compared to inaccurate trials; conversely, the odds of producing a metaphorical gesture were 1.62 times higher, the odds of producing a beat gesture were 2.01 times higher, and the odds of producing a holding gesture were 2.69 times higher, on inaccurate compared to accurate trials.


Table 5.

Results of the multinomial logistic regression predicting gesture type, with communicative gestures as the reference category. Note. Leave-one-out information criterion = 12919.75, s.e. = 83.21. Percentage abstract is the percentage of gestures produced on abstract trials that belonged to each gesture category; percentage concrete is the corresponding percentage for concrete trials. Abstract words and inaccurate trials were the reference levels for word type and accuracy, respectively. B, estimated regression coefficient, based on the mean of the posterior distribution; Exp(B), exponentiated coefficient (for word type and accuracy, the multiplicative change in the odds of that gesture category relative to communicative gestures); 95% CI, 95% credible interval.

| category / predictor | percentage abstract | percentage concrete | B | s.e. | Exp(B) | 95% CI |
|---|---|---|---|---|---|---|
| communicative | 19.30% | 22.55% | | | | |
| conventional | 2.56% | 4.77% | | | | |
| intercept | | | −2.54 | 0.31 | 0.08 | [−3.21, −1.99]a |
| word type | | | 0.42 | 0.31 | 1.52 | [−0.20, 1.02] |
| accuracy | | | 0.23 | 0.32 | 1.26 | [−0.36, 0.87] |
| iconic | 6.83% | 16.18% | | | | |
| intercept | | | −1.55 | 0.30 | 0.21 | [−2.18, −0.96]a |
| word type | | | 0.96 | 0.40 | 2.61 | [0.18, 1.73]a |
| accuracy | | | −0.05 | 0.25 | 0.95 | [−0.53, 0.48] |
| metaphorical | 29.69% | 22.68% | | | | |
| intercept | | | 0.67 | 0.15 | 1.95 | [0.37, 0.94]a |
| word type | | | −0.45 | 0.20 | 0.64 | [−0.86, −0.07]a |
| accuracy | | | −0.48 | 0.14 | 0.62 | [−0.76, −0.21]a |
| beat | 17.89% | 11.27% | | | | |
| intercept | | | 0.19 | 0.15 | 1.21 | [−0.10, 0.49] |
| word type | | | −0.70 | 0.26 | 0.50 | [−1.23, −0.22]a |
| accuracy | | | −0.61 | 0.16 | 0.54 | [−0.93, −0.30]a |
| deictic | 7.69% | 10.21% | | | | |
| intercept | | | −1.56 | 0.23 | 0.21 | [−2.02, −1.14]a |
| word type | | | −0.21 | 0.29 | 0.81 | [−0.85, 0.33] |
| accuracy | | | 0.55 | 0.23 | 1.73 | [0.12, 1.01]a |
| holding | 16.03% | 12.33% | | | | |
| intercept | | | 0.16 | 0.15 | 1.17 | [−0.13, 0.46] |
| word type | | | −0.21 | 0.19 | 0.81 | [−0.60, 0.15] |
| accuracy | | | −0.99 | 0.16 | 0.37 | [−1.31, −0.68]a |

aParameter estimate whose 95% credible interval does not include 0.
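Because abstract words and inaccurate trials are the reference levels, each Exp(B) for word type or accuracy in table 5 is the factor by which the odds of a gesture category (relative to communicative gestures) change for concrete words or accurate trials. When B is negative, exp(−B) = 1/Exp(B) states the same effect in the opposite direction, which is how the Results text phrases it. The Python sketch below transcribes the word-type and accuracy rows of table 5, keeps only effects whose 95% credible interval excludes 0, and recomputes the "times higher" statements; the data structure and function names are illustrative assumptions, not the authors' analysis code.

```python
import math

# (B, lower, upper 95% credible bound) transcribed from table 5.
# Reference levels: abstract words and inaccurate trials, so B > 0 means higher
# odds (relative to communicative gestures) for concrete words or accurate trials.
TABLE5 = {
    "conventional": {"word type": (0.42, -0.20, 1.02), "accuracy": (0.23, -0.36, 0.87)},
    "iconic": {"word type": (0.96, 0.18, 1.73), "accuracy": (-0.05, -0.53, 0.48)},
    "metaphorical": {"word type": (-0.45, -0.86, -0.07), "accuracy": (-0.48, -0.76, -0.21)},
    "beat": {"word type": (-0.70, -1.23, -0.22), "accuracy": (-0.61, -0.93, -0.30)},
    "deictic": {"word type": (-0.21, -0.85, 0.33), "accuracy": (0.55, 0.12, 1.01)},
    "holding": {"word type": (-0.21, -0.60, 0.15), "accuracy": (-0.99, -1.31, -0.68)},
}

# predictor -> (level coded 1, reference level coded 0)
LEVELS = {"word type": ("concrete", "abstract"), "accuracy": ("accurate", "inaccurate")}


def excludes_zero(lower, upper):
    """True when the 95% credible interval excludes 0 (flagged 'a' in table 5)."""
    return lower > 0 or upper < 0


def credible_effects(table):
    """Yield (category, favoured level, other level, odds ratio >= 1)."""
    for category, effects in table.items():
        for predictor, (b, lower, upper) in effects.items():
            if not excludes_zero(lower, upper):
                continue
            coded, reference = LEVELS[predictor]
            if b >= 0:
                yield category, coded, reference, math.exp(b)
            else:
                # exp(-B) = 1 / exp(B): the same effect, stated for the reference level
                yield category, reference, coded, math.exp(-b)


if __name__ == "__main__":
    for category, favoured, other, ratio in credible_effects(TABLE5):
        print(f"{category}: odds {ratio:.2f} times higher for {favoured} than {other}")
```

Since B is itself rounded to two decimals, recomputed ratios can differ from ratios based on unrounded posterior means in the last digit.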

4. Discussion

The aim of this study was to provide insight into the kinds of conceptual processes that are engaged during the communication of abstract word meanings. We did so using a novel open-ended production task to examine the types of information participants generated in order to convey word meaning to a communicative partner. In addition to verbal behaviour, we examined gesture behaviour as a further window on word meaning.

When communicating about the meanings of abstract words, participants' utterances referenced more people and introspections. In contrast, the meanings of concrete words were communicated by referencing more objects and entities. These findings suggest that participants' descriptions of abstract words unfolded against the background of the self (or others) situated in specific contexts and experiencing internal states such as intentions, beliefs, emotions and motivations. These verbal utterance results are similar to those reported by Barsalou & Wiemer-Hastings [9], despite the fact that the two studies involved different items, different numbers of items and different task demands. Barsalou and Wiemer-Hastings asked participants to freely generate attributes; in our study participants communicated meaning descriptions to a partner with the specific goal of helping the partner to guess the target word.

The verbal utterance results suggest that participants relied on several different semantic dimensions in order to convey word meaning to a partner. These included internal and introspective information, as well as social information. These findings are not consistent with predictions derived from the Qualitatively Different Representational Framework [28,29], since we did not find that associative utterances were more likely for participants' descriptions of abstract than concrete word meanings.

In a step beyond the previous literature, we also examined the gestures that accompanied participants' verbal utterances about abstract word meanings. Results showed that attempts to convey the meanings of abstract words involved more metaphorical and beat gestures, whereas communication about concrete word meanings involved more iconic gestures. Metaphoric gestures suggested a concrete grounding or frame for meaning; for instance, when communicating decision, one participant gestured with both palms up, one hand moving upward and the other downward like a seesaw, to convey the idea that one has to weigh something carefully. This gesture was metaphoric because it conveyed meaning through the action of weighing, grounding the abstract mental process of decision in something more concrete. Iconic gestures referenced word meaning more directly; for instance, when communicating beverage, participants often depicted the actions of holding a container and drinking from it. According to the Gesture as Simulated Action framework [31], what metaphoric and iconic gestures have in common is that both imply an underlying image schema.

Our results further suggest that such schemas may be even more pervasive than Hostetter & Alibali [31] proposed. For instance, Hostetter and Alibali would probably suggest that likelihood is not understood via image schemas or action simulation, since the word has ‘…no clear relation to the metaphor of physical forces…’ (p. 504). Yet in our data, 22 participants produced 49 separate metaphorical gestures when communicating the meaning of likelihood. Participants often offered vague verbal utterances that the accompanying gesture made more concrete. For example, ‘say something is similar to something’ was accompanied by hands enclosing a space and then moving to enclose a space in a second location (i.e. side-by-side existence or similarity), and ‘something will occur’ was accompanied by palms facing each other (i.e. something) moving away to the right of the body and resting there (i.e. some entity existing in the future). Likelihood may not have an obvious connection to a metaphor of physical forces per se, but participants found ways to depict at least some meaningful physical aspects of it via gesture. The presence of meaningful gestures when communicating the meanings of abstract words provides a novel insight into the representation of abstract meaning: data from gestural behaviour in the present study are consistent with the notion that underlying spatial image schemas were activated along with, or as part of, the linguistic system.

The finding that rates of metaphoric gesture were higher for abstract than for concrete words is consistent with the predictions we derived from Conceptual Metaphor Theory, and inconsistent with Barsalou & Wiemer-Hastings' [9] claim that metaphors are not central to the content of abstract concepts. As Borghi et al. [17] noted, however, it is unlikely that conceptual metaphors can explain the representation of all abstract concepts. Beat gestures were also more likely when participants communicated the meanings of abstract words. This may be because beat gestures fill a gap: they can accompany meanings that cannot readily be conveyed with iconic or metaphoric gestures. It is also possible that beat gestures were more prevalent for abstract meanings because they support verbal production [52,53], and thus reflect participants' efforts to communicate meaning by any means possible.

On balance, the diversity of information coded in our data seems consistent with a model of semantic representation in which several different types of information support word meaning [16,22,27], with reliance on each type of information varying across items and tasks. Participants in our study did not rely on simple shortcuts to convey word meaning; for instance, they rarely offered synonyms or sentence frames (e.g. ‘complete this statement: manifest ______’ for destiny). Instead, when asked to communicate abstract meanings, participants produced relatively rich descriptions that appear to coalesce around an agent. That is, they conveyed meaning by evoking situations focused on person (self, other) and introspective (beliefs, emotions, intentions) information. Their gestural behaviour provided a window on aspects of meaning that were not necessarily revealed by speech; gestures conveyed additional perceptually based information. The fact that participants drew on so many types of information, even though they could have completed the task without doing so, suggests relatively rich and dynamic multidimensional representations. Based on our data, however, we cannot determine whether perceptually based information was essential to semantic processing in this task [54] or, rather, a by-product of symbolic representations [55]. Establishing whether all of these dimensions are central, or even necessary, to abstract semantic processing will be an important issue for future research.

Because our focus was on fundamental differences between the meanings of abstract and concrete words, and not on differences attributable to task difficulty or to small numbers of observations, we adopted several strategies that may have minimized the differences we observed. First, we included trial accuracy as a factor in our analyses to account for variance in responses that could be attributed to more versus less difficult trials. Second, we collapsed or omitted verbal utterance categories with fewer than 5% of observations. It was often the concrete words that had particularly low numbers of observations in certain categories, so collapsing those categories may have minimized some of the differences between the utterances produced on abstract and concrete trials. Given the novel, unconstrained nature of this task, we think these decisions were justified, but as a result our findings may be a conservative estimate of the ways in which abstract and concrete meanings differ.
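The 5% rule for sparse utterance categories can be sketched as a small helper. This is an assumption-laden illustration: the source does not say which rare categories were merged and which were omitted, or whether the threshold was computed over all trials or within each word type, so the hypothetical `collapse_rare` function simply pools every below-threshold category into a single hypothetical `other` bucket, and the category names and counts in the test are invented.

```python
from collections import Counter


def collapse_rare(counts, threshold=0.05, other="other"):
    """Pool categories whose share of all observations is below `threshold`.

    Hypothetical helper: `counts` maps category -> number of observations,
    computed over whichever set of trials the threshold is applied to.
    The pooled bucket is kept even if it is itself below the threshold.
    """
    total = sum(counts.values())
    collapsed = Counter()
    if total == 0:
        return collapsed
    for category, n in counts.items():
        if n / total < threshold:
            collapsed[other] += n
        else:
            collapsed[category] += n
    return collapsed
```

Pooling rather than dropping keeps the total number of observations unchanged, which is one reason a merge of this kind can only shrink, never create, differences between word types in the rare categories.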

We believe that the words presented in the taboo task were reasonably representative of larger populations of abstract and concrete words, but it seems unlikely that they represent all types of abstract and concrete meanings. Given the nature of the taboo task, we had to limit our stimuli to a fairly small set of 20 abstract and 10 concrete nouns, but we think it will be important in future research to examine a greater diversity of abstract meanings. Extending the investigation to other classes of abstract words, including verbs and adjectives, is an important next step. The diversity of abstract meanings could never be adequately captured in a sample of 20 words, but our findings provide important new insights into the deep problem of how we ground the meanings of abstract words.

Supplementary Material


Endnotes

1. In particular, this method uses a No-U-Turn Sampler [50].

2. A logistic regression predicting the likelihood of participants generating a taxonomic utterance found no effect of word type (b = −0.08, 95% CI [−0.63, 0.47]).

3. A logistic regression predicting the likelihood of participants generating a communicative gesture found no effect of word type (b = 0.22, 95% CI [−0.15, 0.58]).

Ethics

This research was approved by the University of Calgary Conjoint Faculties Research Ethics Board. Informed consent was obtained from all participants.

Data accessibility

The datasets supporting this article have been uploaded as part of the electronic supplementary material.

Authors' contributions

L.Z. conceived and designed the study, acquired the data and contributed to data coding, analysis and interpretation. D.M.S. analysed and interpreted the data and contributed to drafting and revising the article. P.M.P. assisted with study conception and design, data acquisition, analysis and interpretation, and drafted the article.

Competing interests

We have no competing interests.

Funding

This research was supported through graduate scholarships to L.Z. and D.M.S., and a Discovery Grant to P.M.P., all from the Natural Sciences and Engineering Research Council (NSERC) of Canada.

References

- 1. Recchia G, Jones MN. 2012. The semantic richness of abstract concepts. Front. Hum. Neurosci. 6. (doi:10.3389/fnhum.2012.00315)

- 2. Amsel BD. 2011. Tracking real-time neural activation of conceptual knowledge using single-trial event-related potentials. Neuropsychologia 49, 970–983. (doi:10.1016/j.neuropsychologia.2011.01.003)

- 3. Pexman PM, Hargreaves IS, Siakaluk PD, Bodner GE, Pope J. 2008. There are many ways to be rich: effects of three measures of semantic richness on visual word recognition. Psychon. Bull. Rev. 15, 161–167. (doi:10.3758/PBR.15.1.161)

- 4. Yap MJ, Tan SE, Pexman PM, Hargreaves IS. 2011. Is more always better? Effects of semantic richness on lexical decision, speeded pronunciation, and semantic classification. Psychon. Bull. Rev. 18, 742–750. (doi:10.3758/s13423-011-0092-y)

- 5. Juhasz BJ, Yap MJ, Dicke J, Taylor SC, Gullick MM. 2011. Tangible words are recognized faster: the grounding of meaning in sensory and perceptual systems. Q. J. Exp. Psychol. 64, 1683–1691. (doi:10.1080/17470218.2011.605150)

- 6. Siakaluk PD, Pexman PM, Aguilera L, Owen WJ, Sears CR. 2008. Evidence for the activation of sensorimotor information during visual word recognition: the body–object interaction effect. Cognition 106, 433–443. (doi:10.1016/j.cognition.2006.12.011)

- 7. Barsalou LW. 1999. Perceptual symbol systems. Behav. Brain Sci. 22, 577–660. (doi:10.1017/S0140525X99002149)

- 8. Meteyard L, Cuadrado SR, Bahrami B, Vigliocco G. 2012. Coming of age: a review of embodiment and the neuroscience of semantics. Cortex 48, 788–804. (doi:10.1016/j.cortex.2010.11.002)

- 9. Barsalou LW, Wiemer-Hastings K. 2005. Situating abstract concepts. In Grounding cognition: the role of perception and action in memory, language, and thought (eds Pecher D, Zwaan R), pp. 129–163. Cambridge, UK: Cambridge University Press.

- 10. Brysbaert M, Warriner AB, Kuperman V. 2014. Concreteness ratings for 40 thousand generally known English word lemmas. Behav. Res. Methods 46, 904–911. (doi:10.3758/s13428-013-0403-5)

- 11. Hampton JA. 1981. An investigation of the nature of abstract concepts. Mem. Cognit. 9, 149–156. (doi:10.3758/BF03202329)

- 12. de Mornay Davies P, Funnell E. 2000. Semantic representation and ease of predication. Brain Lang. 73, 92–119. (doi:10.1006/brln.2000.2299)

- 13. Gentner D. 1981. Some interesting differences between verbs and nouns. Cogn. Brain Theory 4, 161–178.

- 14. Goldin-Meadow S, Brentari D. 2017. Gesture, sign, and language: the coming of age of sign language and gesture studies. Behav. Brain Sci. 40, e46. (doi:10.1017/S0140525X15001247)

- 15. Lakoff G, Johnson M. 1980. Conceptual metaphor in everyday language. J. Philos. 77, 453–486. (doi:10.2307/2025464)

- 16. Kousta S-T, Vigliocco G, Vinson DP, Andrews M, Del Campo E. 2011. The representation of abstract words: why emotion matters. J. Exp. Psychol. Gen. 140, 14–34. (doi:10.1037/a0021446)

- 17. Borghi AM, Binkofski F, Castelfranchi C, Cimatti F, Scorolli C, Tummolini L. 2017. The challenge of abstract concepts. Psychol. Bull. 143, 263–292. (doi:10.1037/bul0000089)

- 18. Wilson NL, Gibbs RW. 2007. Real and imagined body movement primes metaphor comprehension. Cogn. Sci. 31, 721–731. (doi:10.1080/15326900701399962)

- 19. Zdrazilova L, Pexman PM. 2013. Grasping the invisible: semantic processing of abstract words. Psychon. Bull. Rev. 20, 1312–1318. (doi:10.3758/s13423-013-0452-x)

- 20. Zwaan RA. 2016. Situation models, mental simulations, and abstract concepts in discourse comprehension. Psychon. Bull. Rev. 23, 1028–1034. (doi:10.3758/s13423-015-0864-x)

- 21. Wiemer-Hastings K, Xu X. 2005. Content differences for abstract and concrete concepts. Cogn. Sci. 29, 719–736. (doi:10.1207/s15516709cog0000_33)

- 22. Barsalou LW, Santos A, Simmons WK, Wilson CD. 2008. Language and simulation in conceptual processing. In Symbols, embodiment, and meaning (eds De Vega M, Glenberg AM, Graesser A), pp. 245–284. Oxford, UK: Oxford University Press.

- 23. Dove G. 2011. On the need for embodied and dis-embodied cognition. Front. Psychol. 1, 242. (doi:10.3389/fpsyg.2010.00242)

- 24. Thill S, Twomey KE. 2016. What's on the inside counts: a grounded account of concept acquisition and development. Front. Psychol. 7, 402. (doi:10.3389/fpsyg.2016.00402)

- 25. Reilly J, Peelle JE, Garcia A, Crutch SJ. 2016. Linking somatic and symbolic representation in semantic memory: the dynamic multilevel reactivation framework. Psychon. Bull. Rev. 23, 1002–1014. (doi:10.3758/s13423-015-0824-5)

- 26. Troche J, Crutch S, Reilly J. 2014. Clustering, hierarchical organization, and the topography of abstract and concrete nouns. Front. Psychol. 5, 360. (doi:10.3389/fpsyg.2014.00360)

- 27. Borghi AM, Binkofski F. 2014. Words as social tools: an embodied view on abstract concepts. Berlin, Germany: Springer.

- 28. Crutch SJ, Ridha BH, Warrington EK. 2006. The different frameworks underlying abstract and concrete knowledge: evidence from a bilingual patient with a semantic refractory access dysphasia. Neurocase 12, 151–163. (doi:10.1080/13554790600598832)

- 29. Duñabeitia JA, Avilés A, Afonso O, Scheepers C, Carreiras M. 2009. Qualitative differences in the representation of abstract versus concrete words: evidence from the visual-world paradigm. Cognition 110, 284–292. (doi:10.1016/j.cognition.2008.11.012)

- 30. Alibali MW. 2005. Gesture in spatial cognition: expressing, communicating, and thinking about spatial information. Spat. Cogn. Comput. 5, 307–331. (doi:10.1207/s15427633scc0504_2)

- 31. Hostetter AB, Alibali MW. 2008. Visible embodiment: gestures as simulated action. Psychon. Bull. Rev. 15, 495–514. (doi:10.3758/PBR.15.3.495)

- 32. Murphy GL. 1996. On metaphoric representation. Cognition 60, 173–204. (doi:10.1016/0010-0277(96)00711-1)

- 33. Cienki A. 2008. Why study metaphor and gesture? In Metaphor and gesture (eds Cienki A, Müller C), pp. 5–26. Amsterdam, NL: John Benjamins Publishing Co.

- 34. Borghi AM, Capirci O, Gianfreda G, Volterra V. 2014. The body and the fading away of abstract concepts and words: a sign language analysis. Front. Psychol. 5, 811. (doi:10.3389/fpsyg.2014.00811)

- 35. Reilly J, Hung J, Westbury C. 2017. Non-arbitrariness in mapping word form to meaning: cross-linguistic formal markers of word concreteness. Cogn. Sci. 41, 1071–1089. (doi:10.1111/cogs.12361)

- 36. Cacioppo JT, Petty RE. 1982. The need for cognition. J. Pers. Soc. Psychol. 42, 116–131. (doi:10.1037/0022-3514.42.1.116)

- 37. Marschark M, Paivio A. 1977. Integrative processing of concrete and abstract sentences. J. Verbal Learn. Verbal Behav. 16, 217–231. (doi:10.1016/S0022-5371(77)80048-0)

- 38. Nelson DL, Schreiber TA. 1992. Word concreteness and word structure as independent determinants of recall. J. Mem. Lang. 31, 237–260. (doi:10.1016/0749-596X(92)90013-N)

- 39. Paivio A, Walsh M, Bons T. 1994. Concreteness effects on memory: when and why? J. Exp. Psychol. Learn. Mem. Cogn. 20, 1196–1204. (doi:10.1037/0278-7393.20.5.1196)

- 40. Schwanenflugel PJ, Shoben EJ. 1983. Differential context effects in the comprehension of abstract and concrete verbal materials. J. Exp. Psychol. Learn. Mem. Cogn. 9, 82–102. (doi:10.1037/0278-7393.9.1.82)

- 41. Uttl B. 2002. North American adult reading test: age norms, reliability, and validity. J. Clin. Exp. Neuropsychol. 24, 1123–1137. (doi:10.1076/jcen.24.8.1123.8375)

- 42. Brysbaert M, New B. 2009. Moving beyond Kucera & Francis: a critical evaluation of current word frequency norms and the introduction of a new and improved word frequency measure for American English. Behav. Res. Methods 41, 977–990. (doi:10.3758/BRM.41.4.977)

- 43. Warriner AB, Kuperman V, Brysbaert M. 2013. Norms of valence, arousal, and dominance for 13,915 English lemmas. Behav. Res. Methods 45, 1191–1207. (doi:10.3758/s13428-012-0314-x)

- 44. Pexman PM, Heard A, Lloyd E, Yap MJ. 2017. The Calgary semantic decision project: concrete/abstract decision data for 10,000 English words. Behav. Res. Methods 49, 407–417.

- 45. McNeill D. 1992. Hand and mind: what gestures reveal about thought. Chicago, IL: University of Chicago Press.

- 46. Kita S, van Gijn I, van der Hulst H. 1998. Movement phases in signs and co-speech gestures, and their transcription by human coders. In Gesture and sign language in human–computer interaction (eds Wachsmuth I, Fröhlich M), pp. 23–35. Proceedings of the International Gesture Workshop, Bielefeld, Germany.

- 47. Cartmill E, Demir OE, Goldin-Meadow S. 2012. Studying gesture. In The research guide to methods in child language (ed. Hoff E), pp. 209–225. Malden, MA: Wiley-Blackwell.

- 48. R Core Team. 2016. R: a language and environment for statistical computing. Vienna, Austria: R Foundation for Statistical Computing.

- 49. Bürkner P-C. 2017. brms: an R package for Bayesian multilevel models using Stan. J. Stat. Softw. 80, 1–28. (doi:10.18637/jss.v080.i01)

- 50. Hoffman MD, Gelman A. 2014. The No-U-turn sampler: adaptively setting path lengths in Hamiltonian Monte Carlo. J. Mach. Learn. Res. 15, 1593–1623.

- 51. Gelman A, Rubin DB. 1992. Inference from iterative simulation using multiple sequences. Stat. Sci. 7, 457–472. (doi:10.1214/ss/1177011136)

- 52. Lucero C, Zaharchuk H, Casasanto D. 2014. Beat gestures facilitate speech production. In Proceedings of the 36th Annual Conference of the Cognitive Science Society (eds Bello P, Guarini M, McShane M, Scassellati B), pp. 898–903. Austin, TX: Cognitive Science Society.

- 53. McClave E. 1994. Gestural beats: the rhythm hypothesis. J. Psycholinguist. Res. 23, 45–66. (doi:10.1007/BF02143175)

- 54. Glenberg AM. 2015. Few believe the world is flat: how embodiment is changing the scientific understanding of cognition. Can. J. Exp. Psychol. 69, 165–171. (doi:10.1037/cep0000056)

- 55. Mahon BZ. 2015. The burden of embodied cognition. Can. J. Exp. Psychol. 69, 172–178. (doi:10.1037/cep0000060)
