Abstract

Implementation experts suggest that tailoring strategies to the intended context may enhance outcomes. However, it remains unclear which strategies are best suited to address specific barriers to implementation, in part because few measurement methods exist that adhere to recommendations for reporting. In the context of a dynamic cluster randomized trial comparing a standardized to a tailored approach to implementing measurement-based care (MBC), this study aimed to: 1) describe a method for tracking implementation strategies, 2) demonstrate the method by tracking strategies generated by teams tasked with implementing MBC at their clinics in the tailored condition, and 3) conduct preliminary examinations of the relation between strategy use and implementation outcomes (i.e., self-reported fidelity to MBC). The method consisted of a coding form based on Proctor et al.’s (2013) implementation strategy reporting guidelines and Powell et al.’s (2012) taxonomy to facilitate specification of the strategies. A trained research specialist coded digitally recorded implementation team meetings. The method allowed for the following characterization of strategy use. On average, each site generated 39 unique strategies across 6 meetings in 5 months. There was little variability in the use of types of implementation strategies across sites, with the following order of prevalence: Quality Management (50.00%), Restructuring (16.53%), Communication (15.68%), Education (8.90%), Planning (7.20%), and Financing (1.69%). We identified a new category of strategies not captured by the existing taxonomy, labeled “Communication.” There was no evidence that the number of implementation strategies enacted was statistically significantly associated with changes in self-reported fidelity to MBC; however, Financing strategies were associated with increased fidelity. This method has the capacity to yield rich data that will inform investigations into tailored implementation approaches.

Keywords: Implementation Strategy, Implementation Team, Measurement-Based Care

Introduction

Effectively implementing and sustaining evidence-based interventions in community behavioral health service settings requires thoughtful selection and application of implementation strategies, defined as methods or techniques used to enhance the adoption, implementation, or sustainment of a program or practice (Proctor, Powell, & McMillen, 2013). This could include discrete implementation strategies such as educational meetings, computerized reminders, or audit and feedback (Powell et al., 2012, 2015). Addressing the multilevel barriers to implementing change in community settings typically requires more complex, multifaceted and multilevel implementation strategies that combine discrete strategies (Aarons, Hurlburt, & Horwitz, 2011; Mittman, 2012; Weiner, Lewis, Clauser, & Stitzenberg, 2012). Increasingly, scholars have argued that a “one size fits all” approach to implementation is not likely to be effective, and that tailoring implementation strategies to address context-specific barriers to change is warranted (Baker et al., 2015; Bosch, van der Weijden, Wensing, & Grol, 2007; Lewis et al., 2015; Powell et al., 2017; Wensing et al., 2011).

There is emerging evidence of the effectiveness of multifaceted and tailored implementation strategies (Baker et al., 2015; Grimshaw, Eccles, Lavis, Hill, & Squires, 2012; Novins, Green, Legha, & Aarons, 2013; Powell, Proctor, & Glass, 2014). However, strategies are often poorly reported in the published literature, which is a major impediment to those who wish to interpret and apply the evidence from specific implementation studies (Michie, Fixsen, Grimshaw, & Eccles, 2009; Proctor et al., 2013). For example, it is often the case that implementation strategies are labeled with inconsistent terms; specific actions involved are not described in sufficient detail; relations between implementation barriers and strategies are not made explicit; and a theoretical, empirical, or pragmatic justification for the selection of each discrete strategy is not provided (Michie et al., 2009; Proctor et al., 2013). This presents several challenges. First, poor reporting makes it nearly impossible to interpret findings of empirical studies, or to understand mechanisms by which the strategies exerted (or failed to exert) their effect. If the study findings are positive, it is unclear how the positive effects were obtained; conversely, if study findings are negative or mixed, it is unclear how the strategies might be improved. Second, poor reporting impedes replication in science and practice, limiting the accumulation of evidence and precluding applied implementers from immediately benefiting from the scientific literature. Third, it limits the synthesis of evidence through systematic reviews and meta-analyses, as it is difficult to determine which implementation strategies are truly comparable. Finally, poor reporting makes it difficult to parse out the effects of discrete implementation strategies. This is already difficult to do empirically (Alexander & Hearld, 2012), and is further complicated when strategies are inadequately described and justified. 
Poor reporting ultimately renders implementation strategies a “black box.”

Implementation researchers have attempted to improve reporting in the field in at least two ways. First, a number of taxonomies have been developed to promote more consistent use of implementation strategy terms and definitions (Cochrane Effective Practice and Organisation of Care Group, 2002; Kok et al., 2016; Mazza et al., 2013; Michie et al., 2013; Powell et al., 2012, 2015; Walter, Nutley, & Davies, 2003). For example, Powell et al. (2012) developed a compilation of 68 discrete implementation strategies that were grouped into six categories: 1) Plan, 2) Educate, 3) Finance, 4) Restructure, 5) Quality Management, and 6) Attend to Policy Context. These taxonomies can also be used to enhance the level of detail in which implementation strategies are described. For instance, Aarons et al. (2015) used Michie and colleagues’ (2013) taxonomy of behavior change techniques to further describe their Leadership and Organizational Change Intervention. Second, there are a number of reporting guidelines that can be used to improve the quality of implementation strategy descriptions (Albrecht, Archibald, Arseneau, & Scott, 2013; Hoffman et al., 2014; Lokker, McKibbon, Colquhoun, & Hempel, 2015; Pinnock et al., 2017; Proctor et al., 2013; Workgroup for Intervention Development and Evaluation Research, 2008). Proctor et al. (2013) recommended that authors carefully name, define, and specify the: 1) actor (i.e., who enacts the strategy), 2) action(s) (i.e., the specific actions, steps, or processes that need to be enacted), 3) action target (i.e., constructs targeted, unit of analysis), 4) temporality (i.e., when the strategy is used), 5) dose (i.e., intensity), 6) implementation outcome (i.e., implementation outcome(s) likely to be affected by each strategy), and 7) justification (i.e., empirical, theoretical, or pragmatic justification for the choice of implementation strategy).
To date, few studies have applied these recommendations; however, two noteworthy examples include Bunger et al.’s (2016) description of key components of a learning collaborative intended to increase the use of Trauma-Focused Cognitive Behavioral Therapy and Gold et al.’s (2016) report of a strategy to implement a diabetes quality improvement intervention within community health centers. Unfortunately, these studies do not provide a methodology for tracking implementation strategies as they are generated in an ongoing implementation effort. Bunger and colleagues (2017) put forth the only methodology, to our knowledge, for achieving this goal of prospective tracking. They instituted collection of monthly activity logs from leadership and implementation teams. This methodology allows for tracking of strategies generated and used outside of formal implementation meetings. However, it relies on stakeholders to report accurately on strategies, which may undermine the rigor necessary to build the initial evidence base, and it places the tracking burden directly on stakeholders.

The current study fills this gap by offering a rigorous approach to tracking strategies applied to six behavioral health clinic sites engaged in a tailored implementation of measurement-based care (MBC). MBC is an evidence-based intervention that involves systematically evaluating patient-reported outcomes prior to or during a clinical encounter to inform treatment (Scott & Lewis, 2015). MBC provides direct benefits to patients (e.g., symptom improvement), providers (e.g., accurate and timely information to guide clinical decision making), organizations (e.g., aggregate data can guide quality improvement efforts), and systems (e.g., assessing population health) (Bickman, Kelley, Breda, de Andrade, & Riemer, 2011; Carlier et al., 2012; Jackson, 2016; Kearney, Wray, Dollar, & King, 2015). Despite these broad benefits, fewer than 20% of providers in the United States use MBC (17.9% of psychiatrists, 11.1% of psychologists, 13.9% of master’s-level clinicians; Jensen-Doss et al., 2016). The parent study addresses a priority in implementation science by conducting a rigorous comparative trial of a tailored implementation approach versus a “standard” multifaceted protocol (Baker et al., 2015; Powell et al., 2017; Wensing, 2017; Wensing et al., 2011). The current study addressed three aims: 1) describe a method for tracking implementation strategies, 2) demonstrate the method, and 3) conduct preliminary examinations of the relation between strategy use and implementation outcomes (self-reported fidelity to MBC).

Methods

Study Context

The method for tracking implementation strategy utilization was developed in the context of a cluster randomized controlled trial comparing a standardized versus tailored approach to implementing MBC across 12 clinics of the nation’s largest not-for-profit behavioral health provider; see Lewis et al. (2015) for the full study protocol. The standardized condition was conceptualized as a “best practices” one-size-fits-all approach that sought to achieve MBC implementation with fidelity. The tailored condition was conceptualized as a “collaborative and customized” approach that sought to target the unique contextual factors that might serve as barriers with implementation strategies tailored to each site. In both conditions, clinicians participated in a four-hour training session on MBC that offered continuing education units and focused on the use of the Patient Health Questionnaire 9-item (PHQ-9; Kroenke et al., 2001) to monitor symptom change and inform care for depressed adult (age 18 and over) clients. Following training, clinicians in the standardized condition participated in tri-weekly consultation meetings led by a clinical psychologist on the research team. In the tailored condition, clinicians and clinic managers were strategically invited to participate in tri-weekly implementation team meetings based on their degree of social influence at their clinic, as measured by peer nominations (Hlebec & Ferligoj, 2002), and their positive attitudes toward MBC, as measured by a self-report measure (Jensen-Doss et al., 2016). A clinical psychologist facilitated the tri-weekly implementation team meetings, the focus of which was engaging members in addressing the unique barriers to implementing MBC at their clinic. Because of the explicit aim of implementation team meetings to plan and enact implementation strategies, the present study focused on the six sites in the tailored condition.

Implementation Team Meetings

Implementation team meetings occurred on an approximately tri-weekly basis for the five-month active implementation period. Because implementation team meetings constituted the only time clinic personnel came together to formally discuss the implementation of MBC, these meetings were the focus of strategy tracking. On average, teams met six times (range of five to seven), with a total of 36 meetings across the six sites. While the content and focus of meetings differed due to the unique barriers addressed by each team, there were several structural components that were common across all teams. First, all teams assigned at least three specific roles: chair, secretary, and evaluation specialist. The chair was responsible for creating an agenda and leading each meeting. The secretary was responsible for taking notes and distributing these notes to other members with the goal of keeping track of strategies, targets, and progress made. The evaluation specialist was responsible for reviewing, interpreting, and discussing penetration data (defined below). Most teams also created a “communication specialist” who was responsible for sharing team decisions with other clinicians in the clinic.

Second, implementation teams were offered data from the needs assessment that was conducted prior to MBC training and that revealed unique barriers at their clinic. The needs assessment consisted of a battery of self-report measures administered to clinicians at baseline. Measures mapped onto the domains of the Framework for Dissemination (Mendel et al., 2008) and included: Norms & Attitudes: EBPAS (Aarons, 2004); Resources: Survey of Organizational Functioning (Broome, Flynn, Knight, & Simpson, 2007); Policies & Incentives (assessed qualitatively; Lewis et al., 2015); Networks & Linkages: Sociometric Questionnaire (Valente et al., 2007); Structure & Process: Barriers & Facilitators (Salyers, Rollins, McGuire, & Gearhart, 2009); and Media & Change Agents: Opinion Leadership Scale (Childers, 1986). See Lewis et al. (2015) for a full description of measures included in the needs assessment.

Third, teams were given the option to tailor the guideline for PHQ-9 administration. While the facilitator made clear that the literature supported administering the PHQ-9 every session with adult depressed clients, the implementation team was encouraged to generate a tailored guideline for their clinic. For example, several teams decided to expand use of PHQ-9 to clients as young as twelve. Another team decided to pilot MBC by identifying ten clients on each clinician’s caseload for which the PHQ-9 should be completed and to then expand to their entire caseload once they were comfortable with the process (practically and clinically).

Finally, all teams received penetration data from a member of the research team prior to each meeting. Penetration data consisted of information regarding rates of PHQ-9 use by clinician as pulled from the electronic health record (EHR), such as the percent of clinicians who completed PHQ-9s with eligible clients and the percent of clinicians who reviewed the PHQ-9 score trajectory graphs. However, implementation teams could request data aggregated at different levels to suit their specific PHQ-9 guidelines or to explore hypothesized barriers to PHQ-9 use. For example, one team member surmised that fewer PHQ-9s were completed when the volume of services at the clinic was high. Data were provided that allowed the team to compare the volume of services to PHQ-9 use at the clinic. Penetration data differed from MBC itself in that they did not include data regarding client symptom change. The evaluation specialist was encouraged to review the data prior to the team meeting to guide a discussion.

Implementation Team Members

Six behavioral health clinics in the Midwest were included in the study. A total of 22 clinicians, 13 clinic managers, and five office professionals participated in at least one meeting. Implementation team members were predominantly white (97.50%), female (85.00%), and on average 41.59 years old. Additionally, 70% were licensed mental health care providers and 52% supervised other clinicians. Implementation teams varied with respect to number of team members, ranging from 4–9, which typically reflected clinic size. Implementation team member attendance ranged from 58% to 95% at each meeting. Over the course of team meetings, 5 members left the clinic for other positions. Of those, 4 members found other people to take over their roles on the team. Clinics varied in size based on number of clinicians and number of clients served as well as urban versus rural status (see Table 1).

Table 1.

Characteristics of clinics

| Site # | Number of team members | Urban | Clinic Size |
|---|---|---|---|
| 1 | 6 | No | small |
| 2 | 4 | Yes | large |
| 3 | 5 | Yes | small |
| 4 | 9 | Yes | large |
| 5 | 7 | No | medium |
| 6 | 9 | Yes | large |

Note: Clinic size based on number of clinicians employed at the time of cohort assignment. Small <15, Medium 15–20, Large >20

Developing the coding system

An initial document for recording and coding implementation strategies was created to map onto the recommendations for reporting proposed by Proctor et al. (2013). Recommendations included details across two levels: (1) naming and defining strategies by drawing upon existing taxonomies and (2) operationalizing each strategy by reporting on the actor performing the implementation strategy, the action being performed, the target of the action, temporality, dose, outcome affected, and justification/rationale for the selected strategy. A research specialist piloted the coding document with a subset of five implementation team meetings. The coding system was then operationalized and refined based on discussion with two implementation scientists (coauthors of this paper). Based on observations in the meetings, constructs were added to the coding system to capture more nuanced information about each strategy. For example, constructs were added to capture if a strategy was “planned” (i.e., team members intended to engage the strategy but had not yet done so) or “enacted” (i.e., team members had executed the strategy). Codes were added to track “ongoing strategies” (i.e., strategies that members utilized habitually such as weekly check-ins with other clinicians about PHQ-9 use) versus “time-limited” (i.e., strategies that members utilized once such as conduct a booster MBC training). See the appendix for all constructs, definitions, and examples.
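As a rough illustration of what one row of such a coding form might hold, the sketch below arranges the reporting elements described above into a single record. The class name, field names, and example values are hypothetical and are not taken from the study's coding form or appendix; only the list of elements (name, definition, actor, action, target, temporality, dose, outcome, justification, planned versus enacted, ongoing versus time-limited) comes from the text.

```python
from dataclasses import dataclass
from enum import Enum


class Status(Enum):
    PLANNED = "planned"   # team intended to use the strategy but had not yet done so
    ENACTED = "enacted"   # team reported having executed the strategy


class Duration(Enum):
    ONGOING = "ongoing"            # used habitually, e.g. weekly check-ins
    TIME_LIMITED = "time-limited"  # used once, e.g. a single booster training


@dataclass
class CodedStrategy:
    """One coded strategy (hypothetical layout, not the study's actual form)."""
    # Level 1: name and define the strategy using an existing taxonomy
    name: str
    category: str
    definition: str
    # Level 2: operationalize the strategy
    actor: str
    action: str
    action_target: str
    temporality: str
    dose: str
    outcome: str
    justification: str
    # Constructs added after piloting
    duration: Duration
    status: Status = Status.PLANNED  # coded as planned unless stated otherwise


# Illustrative values only; none of these strings are quoted from the study data.
example = CodedStrategy(
    name="Booster MBC training (illustrative label)",
    category="Educate",
    definition="Short follow-up training on PHQ-9 use",
    actor="entire team",
    action="coordinate and deliver a booster training",
    action_target="clinicians at the clinic",
    temporality="before the next meeting",
    dose="one session",
    outcome="fidelity",
    justification="pragmatic",
    duration=Duration.TIME_LIMITED,
)
```

Keeping planned versus enacted and ongoing versus time-limited as explicit fields, rather than free text, is one way to make the later frequency counts straightforward.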

Coding

Implementation team meetings were recorded using a HIPAA-compliant telehealth platform and then transcribed. The research specialist listened to each meeting in its entirety, marking areas in the transcript where strategy discussion occurred, and then returned to the transcript to code the strategies, extracting as much detail as possible for each. This approach was taken because it was often the case that members of the implementation team would discuss a strategy and then return to it later in the meeting, adding more specific details about who would perform the strategy and when. Discussion of any activity that appeared to advance implementation of MBC at the clinic was coded as a strategy. This included emails and informal conversations initiated by team members with other clinicians to remind or encourage PHQ-9 use, data requests made by team members to better understand PHQ-9 use at the clinic, and distribution of physical copies of the PHQ-9 to office professionals and clinicians. Unless members explicitly stated that they enacted a strategy, strategies were coded as being planned (not yet enacted). If members did not explicitly state when a strategy would be enacted (temporality), it was assumed that they intended to enact the strategy before the next meeting. If it was unclear who the intended actor of the strategy was, then the person who discussed the strategy was assumed to be the actor of the strategy. A small number of strategies did not directly align with any of the strategies in the established taxonomy (Powell et al., 2012) and further did not align with any of the additional strategies proposed in the later version of the taxonomy (Powell et al., 2015). The investigative team reviewed these and proposed a new code, “Communication,” that captured these strategies. These strategies generally consisted of communication in meetings, in the break room, or via email regarding guidelines for PHQ-9 use, encouragement for PHQ-9 use, reminders for incorporating PHQ-9 into workflow, etc. For full instructions for coding, see the appendix.
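The three default coding rules described above (planned unless explicitly enacted, timing assumed to be before the next meeting, and the speaker assumed to be the actor) can be sketched as a small function. This is only an illustration under assumed names: the function `apply_coding_defaults` and the dictionary keys are hypothetical and do not come from the study's coding instructions.

```python
def apply_coding_defaults(raw: dict, speaker: str) -> dict:
    """Fill in unstated details of a strategy using the default rules in the text.

    `raw` holds whatever the coder extracted from the transcript; the keys
    used here ("explicitly_enacted", "temporality", "actor") are hypothetical.
    """
    coded = dict(raw)  # copy so the extracted record is left untouched

    # Rule 1: planned unless members explicitly stated that they enacted it.
    coded["status"] = "enacted" if raw.get("explicitly_enacted") else "planned"

    # Rule 2: if timing was not stated, assume enactment before the next meeting.
    if not raw.get("temporality"):
        coded["temporality"] = "before next meeting"

    # Rule 3: if the actor is unclear, assume the person who raised the strategy.
    if not raw.get("actor"):
        coded["actor"] = speaker

    return coded


# Illustrative input; the description is not a quote from any meeting.
record = apply_coding_defaults(
    {"description": "remind clinicians about PHQ-9 use by email"},
    speaker="communication specialist",
)
```

Writing the defaults down in one place makes it easier to see that every coded strategy receives a status, a timing, and an actor even when the discussion left them implicit.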

Strategies were first described using quotes from meeting participants extracted from the transcripts and later coded by the first author using an established taxonomy of implementation strategies (Powell et al., 2012). The second author provided feedback on approximately ten percent of coded strategies, which was integrated throughout the coding process. The sixth clinic had missing video recordings for four of the six meetings, so strategies were coded based on detailed meeting minutes taken by the secretary of the implementation team as well as emails sent between meeting participants, the research specialist, and the facilitator.

Implementation Outcome: Measurement Based Care Fidelity

An adapted version of the Monitoring As Usual measure (MAU; Lyon, Dorsey, Pullman, Martin, Grigore, et al., 2017) served as the primary outcome variable in this study. The MAU was revised to reflect the three core components of MBC fidelity: (1) administer patient-reported outcome measure(s), (2) review score trajectory, and (3) discuss scores with client. In response to these items, clinicians selected “never,” “every 90 days,” “every month,” or “every one to two sessions.” For the purposes of analyses, these categories were treated as a four-point scale, with “never” being assigned “1” and “every one to two sessions” being assigned “4.” The MAU was administered to clinicians (N=68) prior to implementation team meetings (baseline) and five months later (end of active implementation). The clinicians who completed the MAU were primarily female (86.76%), white (95.59%), and on average 40.04 years old. They represented all participating clinicians at each of the six sites and included, but were not limited to, members of the implementation team.
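The scoring described above can be written as a simple lookup. The text states only the endpoints (“never” = 1, “every one to two sessions” = 4); assigning 2 and 3 to the middle responses in the order listed is an assumption of this sketch, and the names `MAU_SCALE` and `score_mau` are hypothetical.

```python
# Response options in the order given in the text. The values for the two
# middle options are assumed from that ordering, not stated in the source.
MAU_SCALE = {
    "never": 1,
    "every 90 days": 2,
    "every month": 3,
    "every one to two sessions": 4,
}


def score_mau(responses: dict) -> dict:
    """Convert one clinician's responses on the three MAU items to 1-4 scores."""
    return {item: MAU_SCALE[answer] for item, answer in responses.items()}


# Illustrative responses for a single hypothetical clinician.
scores = score_mau({
    "MAU1": "every one to two sessions",  # administer the measure
    "MAU2": "every month",                # review score trajectory
    "MAU3": "never",                      # discuss scores with client
})
```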

Analytic Plan

Frequencies and descriptive statistics of strategies, actors, and temporality were calculated in Excel 2011. To test the effects of strategies on MBC fidelity, several ordered logistic regressions were employed. Because the MAU was administered at only two time points, we could not analyze the effect of strategies enacted in each meeting on fidelity. Instead, enacted strategies were averaged across each site’s total number of meetings. Values of the dependent variables differed across clinicians and sites; however, values of the independent variables were the same for every clinician within a site. In other words, there was no variation among the strategy variables within sites, and the models relied on variation between sites to estimate the effects of strategies. We also included the clinicians’ baseline responses to the MAU as an independent variable. We could not include other variables to account for possible unobserved differences in clinicians and sites. The inclusion of fixed effects for either site or time (first or second round) resulted in the terms being omitted due to multicollinearity. Models did not converge when a random effect for each clinician was included. One set of analyses explored the effect of strategy number on clinician fidelity to MBC; the other explored the effect of strategy type.
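To make the data structure behind these models concrete, the sketch below builds the site-level predictors (enacted strategies per meeting, by category) and attaches them to clinician records, which shows why every clinician within a site shares the same predictor values. The function names and all numbers are hypothetical illustrations, not study data, and the sketch stops short of fitting the ordered logistic regression itself.

```python
from collections import Counter


def site_level_predictors(enacted, meetings_per_site, categories):
    """Average enacted strategies per meeting, by site and category.

    enacted: list of (site, category) pairs, one per enacted strategy.
    meetings_per_site: dict mapping site -> number of meetings held.
    """
    counts = Counter(enacted)  # (site, category) -> number of enacted strategies
    return {
        site: {cat: counts[(site, cat)] / n_meetings for cat in categories}
        for site, n_meetings in meetings_per_site.items()
    }


def attach_to_clinicians(clinicians, predictors):
    """Copy each site's predictor values onto every clinician at that site."""
    return [{**c, **predictors[c["site"]]} for c in clinicians]


# Hypothetical inputs for two sites.
enacted = [(1, "QM"), (1, "QM"), (1, "Finance"), (2, "QM")]
meetings = {1: 6, 2: 5}
predictors = site_level_predictors(enacted, meetings, ["QM", "Finance"])

clinicians = [
    {"id": "a", "site": 1, "baseline_mau1": 1, "post_mau1": 4},
    {"id": "b", "site": 1, "baseline_mau1": 2, "post_mau1": 3},
    {"id": "c", "site": 2, "baseline_mau1": 1, "post_mau1": 2},
]
rows = attach_to_clinicians(clinicians, predictors)
```

In the resulting rows, clinicians "a" and "b" carry identical strategy values, so only differences between sites can inform the strategy coefficients.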

Results

Report of Implementation Strategies

A total of 233 strategies were generated by implementation members across all sites with 55.36% of these reported by team members in meetings as being explicitly enacted (though it is possible that strategies were enacted and not explicitly labeled as such by members in a meeting). On average, each site generated 39 unique strategies (ranging from 36 to 44 strategies). See the supplemental file for strategy use by each site.

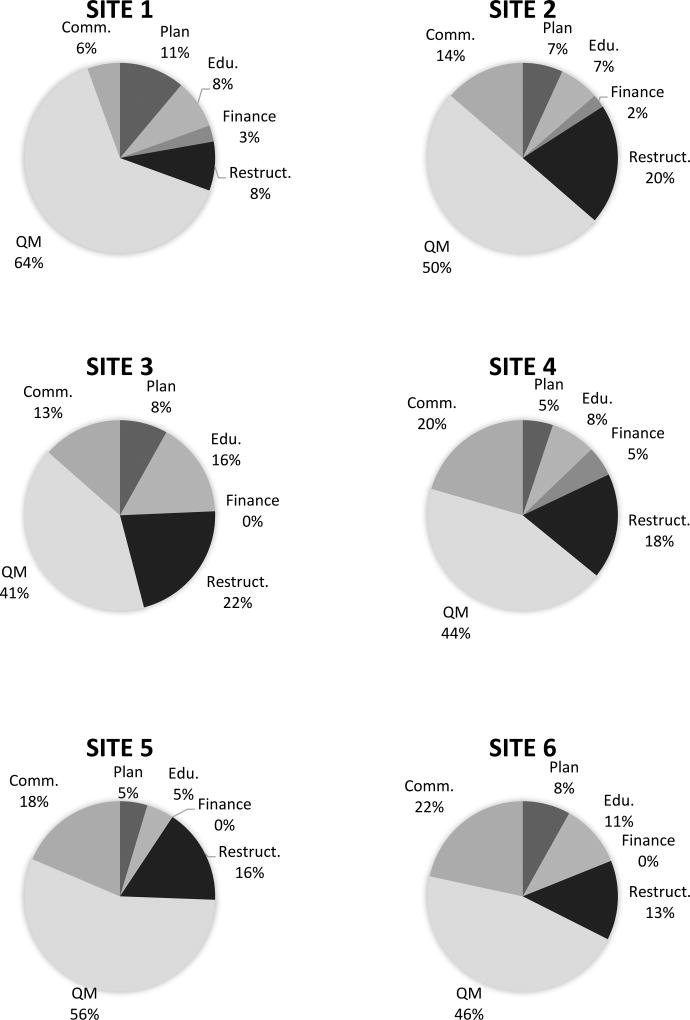

Members used 26 of the 68 strategies identified by Powell et al. (2012). There was little variability in the use of types of implementation strategies across sites, with the following order of prevalence: Quality Management (50.00%), Restructuring (16.53%), Communication (15.68%), Education (8.90%), Planning (7.20%), and Financing (1.69%); see Figure 1.

Figure 1. Percentage of Implementation Strategies Generated by Category.

Figure Description: This figure depicts strategies generated by each site broken down by categories specified by Powell et al., 2012. Planned and enacted strategies were summed to calculate the percentage. The Policy category was not included because no sites engaged in strategies within this category. Edu- Education Category, Restruct.-Restructuring Category, QM-Quality Management Category, Comm.-Communication Category

Report of Implementation Actors

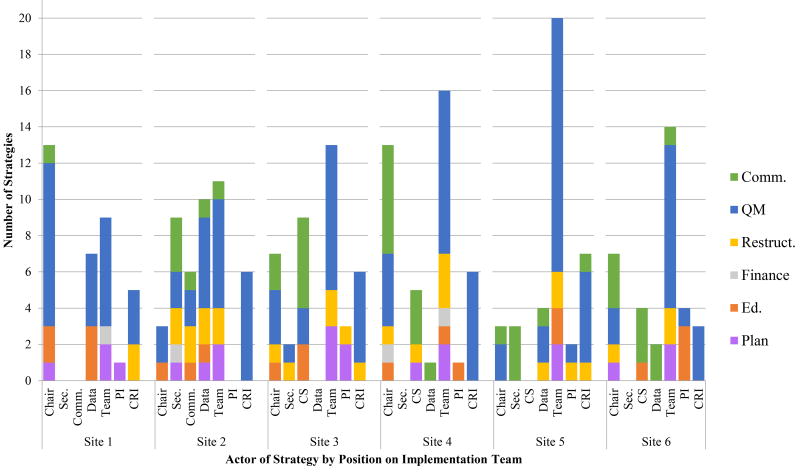

The chair was generally the most active position of the meeting, enacting (or planning to enact) an average of 7.65 strategies across meetings followed by the communication specialist enacting (or planning to enact) an average of 6 strategies across all meetings. At every site, the majority of strategies (average of 13.83) were planned or enacted with contributions from the entire team. For example, at one site, the entire team contributed to the coordination of a short booster training for the clinic. See Figure 2 for strategies affiliated with implementation team members by category.

Figure 2. Strategies Generated by Implementation Team Members by Category.

Figure Description: This figure depicts strategies planned or enacted by implementation team members broken down by categories specified by Powell et al., 2012. Planned and enacted strategies were summed. Edu.-Education Category, Restruct.-Restructuring Category, QM-Quality Management Category, Comm.-Communication Category, Sec.-Secretary Position, CS-Communication Specialist, CRI-Research personnel, PI-Principal Investigator, Team-contribution of entire team to enact (or plan to enact) strategy

Temporality of Strategy Use

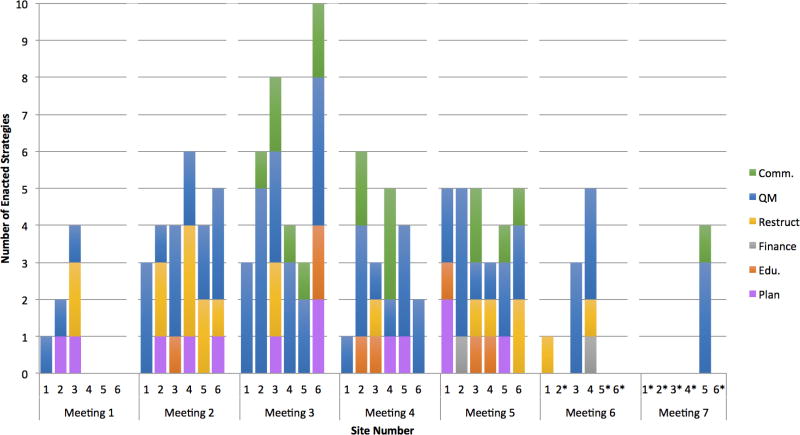

Three of the six clinics did not enact any strategies in the first meeting. Restructuring strategies were most common in the first two meetings as members decided on team positions (i.e., revise professional roles) and worked with office professionals to encourage the completion of the PHQ-9 in the waiting room (i.e., facilitate the relay of clinical data to providers). On average, the number of enacted strategies peaked at the third meeting. Only two teams enacted Finance strategies, and not until the fifth and sixth meetings, possibly because these strategies are more complex than others. See Figure 3 for strategy use across all implementation team meetings by site.

Figure 3. Strategies Enacted by Implementation Teams across Meetings.

Figure Description: This figure depicts strategies enacted by implementation teams across meetings. *indicates that the team did not host a meeting, Edu.-Education Category, Restruct.-Restructuring Category, QM-Quality Management Category, Comm.-Communication Category

Clinician Fidelity to MBC

Number of strategies did not have a significant effect on fidelity to MBC. However, in analyses of the effect of strategy type, we found that Finance strategies had a significant positive effect on two of the three components of fidelity to MBC (see Table 2). Interpretations come from calculating the odds ratios. With respect to the first component of fidelity, for a standard deviation increase in the average number of Finance strategies per meeting (about 0.09), the odds of a clinician administering the PHQ-9 increased by a factor of 2.5, holding other variables constant (p<0.05, two-tailed test). With respect to the second component of fidelity, for a standard deviation increase in the average number of Finance strategies per meeting, the odds of a clinician reviewing the score trajectory increased by a factor of 3, holding other variables constant (p<0.05, two-tailed test). Communication strategies were excluded from this analysis because of multicollinearity issues, and we suspect multicollinearity also influenced the lack of significance for other strategy types. Across all clinics and all components of fidelity, MAU scores improved from before to after the implementation team meetings (see Table 3).
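For readers who want to see how a coefficient from an ordered logistic regression translates into the multiplicative change in odds described above, the conversion is exp(coefficient × standard deviation). The sketch below applies it to the Finance coefficients in Table 2 using the standard deviation as rounded in the text (0.09); applying that same value to the second component is an assumption. With the rounded value the factors come out at roughly 2.7 and 3.2, close to but not identical to the reported 2.5 and 3, so the unrounded standard deviation (not reported) presumably differs slightly. The function name is hypothetical.

```python
import math


def odds_factor(coef: float, sd: float) -> float:
    """Multiplicative change in odds for a one-SD increase in the predictor."""
    return math.exp(coef * sd)


SD_FINANCE = 0.09  # "about 0.09" in the text; the exact value is not reported

# Finance coefficients for MAU1 and MAU2 as listed in Table 2.
factor_mau1 = odds_factor(11.224, SD_FINANCE)
factor_mau2 = odds_factor(12.893, SD_FINANCE)

print(f"MAU1: {factor_mau1:.2f}, MAU2: {factor_mau2:.2f}")
```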

Table 2.

Results from ordered logistic regressions for the effect of types of strategies on self report fidelity to MBC

| DV: | MAU1 | | MAU2 | | MAU3 | |
|---|---|---|---|---|---|---|
| | Coef. | Std. Err. | Coef. | Std. Err. | Coef. | Std. Err. |
| Plan | −3.496 | 4.451 | −4.602 | 4.459 | −3.882 | 4.752 |
| Educate | 1.485 | 3.873 | 5.429 | 3.752 | −0.157 | 3.696 |
| Finance | 11.224** | 4.753 | 12.893** | 4.429 | 7.021 | 4.379 |
| Restructure | −1.207 | 2.032 | −3.211 | 2.034 | 0.874 | 1.842 |
| QM | −0.595 | 1.066 | −1.034 | 0.995 | 0.531 | 0.958 |
| Baseline score | | | | | | |
| 2 | 0.31 | 0.578 | 0.616 | 0.543 | 0.535 | 0.574 |
| 3 | 1.274 | 0.939 | 1.561* | 0.904 | 1.11 | 0.869 |
| 4 | 0.497 | 0.846 | 0.59 | 0.756 | 0.094 | 0.76 |
| /cut1 | −4.749 | 2.645 | −5.438 | 2.734 | −1.311 | 2.397 |
| /cut2 | −3.379 | 2.609 | −4.09 | 2.711 | −0.629 | 2.379 |
| /cut3 | −2.03 | 2.585 | −2.3 | 2.687 | 1.147 | 2.388 |

Note: Reference category for baseline score is a value of 1. The MAU has three indicator items: MAU1-administration of standardized progress measures, MAU2-review of client symptom score trajectory, MAU3-discussion of scores with clients; QM-Quality Management Category. * p < 0.1, ** p < 0.05

Table 3.

MAU fidelity scores pre and post implementation team meetings

| | Pre | | Post | |
|---|---|---|---|---|
| Variable | M | SD | M | SD |
| MAU1 | 1.912 | 1.004 | 3.235 | 0.964 |
| MAU2 | 1.735 | 0.956 | 2.897 | 0.995 |
| MAU3 | 1.662 | 0.874 | 3.074 | 1.027 |

Note: N=68, MAU=Monitoring as Usual. The MAU has three indicator items: MAU1-administration of standardized progress measures, MAU2-review of client symptom score trajectory, MAU3-discussion of scores with clients
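The size of the pre-to-post improvement on each item can be read off Table 3 by subtracting the means. The short sketch below does that arithmetic with the tabled values; it is a descriptive illustration only, not a significance test, and the variable names are hypothetical.

```python
# (pre mean, post mean) for each MAU item, copied from Table 3.
TABLE3_MEANS = {
    "MAU1": (1.912, 3.235),
    "MAU2": (1.735, 2.897),
    "MAU3": (1.662, 3.074),
}

# Raw change in mean score on the 1-4 scale, rounded to three decimals.
mean_change = {
    item: round(post - pre, 3) for item, (pre, post) in TABLE3_MEANS.items()
}

for item, change in mean_change.items():
    print(f"{item}: +{change:.3f}")
```

On the four-point scale, each item's mean rose by more than one response category.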

Discussion

Few studies, with the exception of Bunger et al. (2016) and Gold et al. (2016), have systematically described the use of implementation strategies according to recently published reporting recommendations (Proctor et al., 2013), and none have done so in the context of a tailored approach to implementation. This manuscript builds upon recent work (Bunger et al., 2017) to present a systematic method for tracking strategies in line with reporting recommendations (Proctor et al., 2013) that allowed for a rich, detailed depiction of one agency’s attempt to implement MBC in six sites using a tailored approach. Although preliminary, our analyses revealed that Finance-related strategies were significantly associated with a greater likelihood that clinicians would use MBC with fidelity, whereas the sheer number of strategies employed was not related to MBC fidelity. A discussion of the usability of this method follows.

Tracking Implementation Strategies: The Methodology

While the reporting standards put forth by Proctor et al. (2013) are relatively comprehensive, we identified several nuances for capturing strategy use in the context of an ongoing implementation effort in which strategies are not specified a priori. For instance, we tracked whether strategies generated by the teams were planned versus explicitly enacted, as indicated by follow-up confirmation from team members, and found that nearly half (44.64%) were planned without any follow-up in future meetings. While this gap may suggest a need for additional follow-up with team members similar to the method described by Bunger et al. (2017), comparing rates of planned versus enacted strategies could provide insight into the effectiveness of implementation teams. A lack of follow-up on strategies discussed may have undermined implementation team progress. Without information on the impact of applying a strategy, the team had no way of knowing whether the strategy had its intended effect. Our team tried to support systematic planning and evaluation by encouraging the chair to set an agenda, the secretary to take notes (using a template that would support tracking strategies and intended targets), and the evaluation specialist to monitor impacts, but in the absence of quality improvement training these roles were often under-performed. As our method is intended to inform the efforts and impacts of tailored approaches to implementation, we recommend that researchers report on whether strategies are planned versus enacted until we are able to discern their differential impact on implementation.

To best capture implementation strategy reporting recommendations related to dose, we tracked whether a strategy was intended to be used once or multiple times. Certain strategies may inherently lend themselves to time-limited use, such as training to engender knowledge about an intervention before a clinician can develop skill. However, the same strategy may also be used on an ongoing basis: a clinic may choose to offer training in MBC monthly to support sustainment (e.g., because turnover is a threat in community behavioral health, ongoing training would allow new clinicians to become knowledgeable). The dose is likely to have a substantively different impact on implementation outcomes and thus should be formally tracked and evaluated.

Finally, Communication was a category of strategies that emerged with relatively high frequency (15.68% of all strategies) across all of the implementation teams. In fact, communication was thought to be so important that four of the six sites elected to nominate a communication specialist to formally report out to the larger clinic about the implementation team activities. This observation is consistent with Bunger et al.’s (2017) work in which they acknowledged their activity log approach likely did not capture informal communications that have been identified as important for building buy-in and shared understanding (Ditty, Landes, Doyle, & Beidas, 2015; Powell, Hausmann-Stabile, & McMillen, 2013; Tenkasi & Chesmore, 2003). Given the prevalence of communication in this study and its role as a key mechanism for diffusing evidence-based practices among individuals within a network (Rogers, 2003), we recommend routinely tracking this category of strategies. Importantly, it may be the case that communication is heavily relied upon by implementation teams because of its ease of use, but it may be less potent than other strategies; this remains an empirical question. Further, certain types of communication may be more effective than others. Mass media communication (e.g., emails from clinic director to entire staff) may be less effective in persuading individuals to try an innovation when compared to individual face-to-face conversations (Rogers, 2003). Further specification of the types of strategies that fall into the category of Communication will help address these important empirical questions.

There were several constructs included in Proctor et al.’s (2013) recommendations for reporting implementation strategies that were difficult to capture in the course of an ongoing implementation effort. “Action target,” defined as the “conceptual target” of the strategy, was challenging to discern due to the way that clinicians talk about strategy use. Clinicians do not necessarily have insight into theories of implementation or evidence-based implementation strategies; thus, they do not clearly indicate the intended target of their strategy. For the same reason, it was also difficult to determine a theoretically or empirically informed justification, because such justification was simply not given. This raises the question of how much an implementation team should be driven by stakeholders at the site of implementation versus a facilitator with implementation expertise. While stakeholders have invaluable insight into the unique context of their site, an implementation expert has insight into relevant theories, research, and evidence-based implementation strategies. It may be the case that an implementation expert can teach teams to work “smarter” by clearly articulating the target of their chosen strategies and evaluating progress. A balance of contributions is likely critical, but the empirical literature is sparse and only recently has external facilitation been more carefully described and delineated (Kirchner et al., 2014). “Dose” was also difficult to determine. Strategies were coded as being “time-limited” or “ongoing” and, if “ongoing,” were further specified by how often they occurred (daily, weekly, biweekly), which provided some details related to dose. However, this method was not able to capture nuanced information related to person hours, suggesting a possible need for triangulation of coded information with self-report from team members as outlined by Bunger et al. (2017).
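To illustrate how the “time-limited” versus “ongoing” codes and the frequency codes relate to dose, the sketch below converts them into an approximate number of occurrences over a period. It is not part of the study's method. The function name, the five-day clinic week, and the reading of “biweekly” as every two weeks are all assumptions, and the sketch deliberately ignores person hours, which the coding could not capture.

```python
from typing import Optional

# Approximate occurrences per week for each coded frequency.
# "daily" assumes a five-day clinic week; "biweekly" is read as every two weeks.
# Both are assumptions of this sketch.
OCCURRENCES_PER_WEEK = {"daily": 5.0, "weekly": 1.0, "biweekly": 0.5}


def approximate_occurrences(duration: str, frequency: Optional[str], weeks: int) -> float:
    """Rough count of times a strategy would occur over `weeks` weeks."""
    if duration == "time-limited":
        return 1.0  # treated here as a single use
    if duration != "ongoing":
        raise ValueError(f"unknown duration code: {duration!r}")
    if frequency not in OCCURRENCES_PER_WEEK:
        raise ValueError(f"unknown frequency code: {frequency!r}")
    return OCCURRENCES_PER_WEEK[frequency] * weeks


# A one-off training versus a weekly activity over a hypothetical 20-week period.
print(approximate_occurrences("time-limited", None, 20))
print(approximate_occurrences("ongoing", "weekly", 20))
```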

Reporting implementation strategies based on Proctor et al.’s (2013) recommendations may be more feasible when designing an experiment to test a strategy than in an ongoing implementation effort. There is a need to strike a balance between feasibility and comprehensiveness when recording strategies under such circumstances. While certain information may be important for researchers seeking to determine the effectiveness of implementation strategies or mechanisms of strategies, different information may be of greater interest to stakeholders looking to learn from and replicate implementation efforts at their own site. For example, researchers may have an interest in the theoretical justification of the use of a specific strategy to gain insight into potential mechanisms of implementation. However, to stakeholders, clear, step-by-step instructions (i.e., the temporality construct of Proctor et al.’s recommendations) for carrying out combinations of effective strategies may be of most interest.

Limitations

There are several noteworthy limitations to this tracking methodology and the study in which it was applied. First, like many methods (notably intervention fidelity monitoring; e.g., Essock et al., 2015), the fact that our tracking methodology was quite rigorous means that it was also very laborious. To confidently code the strategies, the meetings were recorded, transcriptions were obtained, and the research specialist watched the recordings and read the transcriptions of the meetings (sometimes multiple times). This methodology is manageable and recommended for work in a research context, but pragmatic tracking methods are needed if we are to support stakeholders in monitoring the impact of implementation strategies used. However, the method of coding outlined in this paper allows for data to be aggregated in a large number of ways (e.g., across time, by category, by actor), lending itself to a detailed record of what occurred in the course of an implementation effort that could be useful both to researchers and stakeholders seeking to understand implementation efforts in different ways. For example, we further explored the Finance strategies used by sites given the significant association of this category of strategies with clinician fidelity. The detailed record produced by this method revealed that one site created a competition among clinicians to incentivize PHQ-9 use, whereas the other penalized office professionals with a write-up when they ignored clinician requests to hand out the PHQ-9 in the waiting room.
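The kinds of aggregation mentioned above (across time, by category, by actor) can be sketched in a few lines. The example below is illustrative only: the dictionary keys, helper names, and toy rows are assumptions, not the study's data or coding form.

```python
from collections import Counter
from typing import Dict, List

# Toy coded strategies, invented for illustration only.
coded: List[Dict[str, object]] = [
    {"site": "Site A", "meeting": 1, "category": "Quality Management", "actor": "chair"},
    {"site": "Site A", "meeting": 2, "category": "Communication", "actor": "communication specialist"},
    {"site": "Site B", "meeting": 1, "category": "Quality Management", "actor": "evaluation specialist"},
    {"site": "Site B", "meeting": 2, "category": "Financing", "actor": "chair"},
]


def tally(rows: List[Dict[str, object]], key: str) -> Counter:
    """Count coded strategies by any recorded attribute."""
    return Counter(row[key] for row in rows)


def as_percentages(counts: Counter) -> Dict[object, float]:
    """Express counts as percentages of the total, rounded to two decimals."""
    total = sum(counts.values())
    if total == 0:
        return {}
    return {k: round(100 * n / total, 2) for k, n in counts.items()}


by_category = tally(coded, "category")  # prevalence of each strategy type
by_actor = tally(coded, "actor")        # who is carrying the strategies
by_meeting = tally(coded, "meeting")    # a crude view across time

print(as_percentages(by_category))
```

Because every strategy is stored as one row with the same attributes, the same `tally` helper serves each of the views described in the text.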

Second, given the laborious nature of this work, we opted for one research specialist to code the strategy content and had an expert check 10% of the strategies. A more rigorous approach would have been to have all 36 meetings double coded. Third, we were unable to code four of the six videos from one of the sites due to an administrative error in which recording was not enabled. Although we had relatively rich meeting minutes and between-meeting summaries, it is likely that the number of strategies reported for this site is an underestimate. However, given that coding meeting notes rather than recordings and transcripts is less labor intensive, a comparison of these methods is needed. Fourth, because reporting on enactment was not a required part of meeting discussion, it is unknown whether any (or all) of the planned strategies were actually enacted or what informal (or formal) strategies were enacted outside of the implementation meetings. It is also possible that subtle but influential strategies such as leadership praise were missed. These limitations suggest it may be important to train sites to recognize and track their own strategies so they can glean insights as to how and why their implementation efforts are (or are not) succeeding, in real time. Alternatively, they may point to a need to combine the methodology outlined by Bunger et al. (2017) with the methodology presented in this paper when possible for the most complete record of implementation strategies. This would ensure that implementation strategies generated outside of meetings were captured as well.
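A partial check such as the 10% expert review could be organized as in the sketch below. This is an assumption-laden illustration rather than the procedure used here: the sampling helper, the seed, and the use of Cohen's kappa as an agreement statistic are introduced for the example, and the category labels are toy data.

```python
import random
from collections import Counter
from typing import List, Sequence


def sample_for_checking(n_items: int, fraction: float = 0.10, seed: int = 0) -> List[int]:
    """Pick the indices of a random subset of coded strategies for a second coder."""
    if n_items <= 0:
        raise ValueError("n_items must be positive")
    k = max(1, round(n_items * fraction))
    return sorted(random.Random(seed).sample(range(n_items), k))


def percent_agreement(a: Sequence[str], b: Sequence[str]) -> float:
    """Share of items on which two coders assigned the same category."""
    if len(a) != len(b) or not a:
        raise ValueError("both coders must rate the same non-empty set of items")
    return sum(x == y for x, y in zip(a, b)) / len(a)


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Chance-corrected agreement between two coders."""
    n = len(a)
    p_observed = percent_agreement(a, b)
    counts_a, counts_b = Counter(a), Counter(b)
    p_expected = sum(counts_a[c] * counts_b[c] for c in set(counts_a) | set(counts_b)) / (n * n)
    if p_expected == 1.0:
        return 1.0  # both coders used a single identical category throughout
    return (p_observed - p_expected) / (1 - p_expected)


# Toy category codes from a research specialist and an expert checker.
specialist = ["Quality Management", "Quality Management", "Communication", "Education"]
expert = ["Quality Management", "Quality Management", "Communication", "Communication"]

print(sample_for_checking(200))
print(percent_agreement(specialist, expert), cohens_kappa(specialist, expert))
```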

Fifth, it is unclear how appropriate the strategies enacted were based on the unique needs, barriers, and facilitators of each clinic. The implementation strategy target was almost ubiquitously implicit and it may be the case that the majority of those enacted were misaligned with the needs of the clinic, which would be consistent with previous research (Baker et al., 2015; Bosch et al., 2007). There is emerging research on the importance of mechanisms (Williams, 2015; Lewis et al., 2016) to streamline and focus implementation approaches, but the field is far from systematically supporting this kind of work. Until the science progresses, stakeholders will be making decisions about implementation strategy targets based on common sense and previous experience. When the field better understands the mechanisms by which strategies achieve their effects, stakeholders will be able to weigh various strategies that target similar mechanisms in order to choose the most feasible approach for their setting and time constraints. Sixth, the results reported herein reflect the experience of six clinics within the same behavioral health organization and with similar demographic characteristics (i.e., team members were predominantly white females). It will be important to test this tracking methodology in other contexts to ensure its utility and generalizability and to connect the strategy information (number, type, dose, temporality), in a causal fashion, to both implementation and clinical outcomes.

Seventh, we were unable to control for site differences when assessing the effect of strategies on fidelity to MBC. Further, there were issues of multicollinearity among strategy types. Disentangling the effect of specific implementation strategies on key implementation outcomes was not a primary aim of the larger project. Researchers seeking to understand the effects of strategies on implementation outcomes may consider the use of interrupted time-series research designs, which allow for the experimental manipulation of strategy use without necessitating large sample sizes that are often infeasible in community-based research conducted at the level of the organization (Biglan et al., 2000).
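For readers unfamiliar with interrupted time-series designs, one common analytic formulation is segmented regression. The model below is a generic sketch and was not fitted in this study. The symbols are assumptions introduced here: $Y_t$ is the outcome (e.g., fidelity to MBC) at time $t$, $T_0$ is the time at which a strategy is introduced, and $D_t$ is an indicator equal to 1 when $t \ge T_0$ and 0 otherwise.

```latex
Y_t = \beta_0 + \beta_1 t + \beta_2 D_t + \beta_3 (t - T_0) D_t + \varepsilon_t
```

Under this formulation, $\beta_1$ is the pre-existing trend, $\beta_2$ is the immediate change in level when the strategy is introduced, and $\beta_3$ is the change in slope afterward.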

Conclusion

The current study offered a rigorous approach for specifying and reporting implementation strategies based on the recommendations of Proctor et al. (2013). Preliminary results of this methodology revealed that behavioral health clinics tasked with implementing MBC generated a great number and variety of implementation strategies in similar proportions, despite differences with respect to size, rural/urban status, and likely contextual barriers. Although preliminary, our results suggest that the quality and not the quantity of strategies may influence implementation outcomes, but more research is needed to explore this relation. A rigorous method for tracking strategies will enable researchers to determine how specific strategies (enacted by whom, how often, on what target, etc.) impact critical determinants such as turnover and burnout and outcomes such as fidelity and sustainability, and to replicate strategies’ effects across studies (Proctor et al., 2013). Further, tracking strategies and outcomes longitudinally serves as a critical step toward disentangling the mechanisms of implementation strategies that will ultimately inform and optimize tailored approaches to implementation.

Supplementary Material

Highlights.

Developed method to track implementation strategies systematically.

Described implementation strategy use by teams implementing measurement-based care.

Results suggest type rather than quantity of strategies key to implementation.

Acknowledgments

The research reported in this publication was supported by the National Institute of Mental Health of the National Institutes of Health under Award Number R01MH103310.

Abbreviations

- MBC: measurement-based care

- PHQ-9: Patient Health Questionnaire, 9-item

- EHR: electronic health record


References

- Aarons GA. Mental health provider attitudes toward adoption of evidence-based practice: The Evidence-Based Practice Attitude Scale (EBPAS). Mental Health Services Research. 2004;6(2):61–74. https://doi.org/10.1023/B:MHSR.0000024351.12294.65

- Aarons GA, Ehrhart MG, Farahnak LR, Hurlburt MS. Leadership and organizational change for implementation (LOCI): a randomized mixed method pilot study of a leadership and organization development intervention for evidence-based practice implementation. Implementation Science. 2015;10(11):1–12. https://doi.org/10.1186/s13012-014-0192-y

- Aarons GA, Hurlburt M, Horwitz SM. Advancing a conceptual model of evidence-based practice implementation in public service sectors. Administration and Policy in Mental Health and Mental Health Services Research. 2011;38:4–23. https://doi.org/10.1007/s10488-010-0327-7

- Albrecht L, Archibald M, Arseneau D, Scott SD. Development of a checklist to assess the quality of reporting of knowledge translation interventions using the Workgroup for Intervention Development and Evaluation Research (WIDER) recommendations. Implementation Science. 2013;8(52):1–5. https://doi.org/10.1186/1748-5908-8-52

- Alexander JA, Hearld LR. Methods and metrics challenges of delivery-systems research. Implementation Science. 2012;7(15):1–11. https://doi.org/10.1186/1748-5908-7-15

- Baker R, Camosso-Stefinovic J, Gillies C, Shaw EJ, Cheater F, Flottorp S, Jäger C. Tailored interventions to address determinants of practice. Cochrane Database of Systematic Reviews. 2015;4(CD005470):1–118. https://doi.org/10.1002/14651858.CD005470.pub3

- Bickman L, Kelley SD, Breda C, de Andrade AR, Riemer M. Effects of routine feedback to clinicians on mental health outcomes of youths: results of a randomized trial. Psychiatric Services. 2011;62(12):1423–1429. https://doi.org/10.1176/appi.ps.002052011

- Biglan A, Ary D, Wagenaar AC. The value of interrupted time-series experiments for community intervention research. Prevention Science. 2000;1(1):31–49. https://doi.org/10.1023/a:1010024016308

- Bosch M, van der Weijden T, Wensing M, Grol R. Tailoring quality improvement interventions to identified barriers: A multiple case analysis. Journal of Evaluation in Clinical Practice. 2007;13:161–168. https://doi.org/10.1111/j.1365-2753.2006.00660.x

- Broome KM, Flynn PM, Knight DK, Simpson DD. Program structure, staff perceptions, and client engagement in treatment. Journal of Substance Abuse Treatment. 2007;33(2):149–158. https://doi.org/10.1016/j.jsat.2006.12.030

- Bunger AC, Hanson RF, Doogan NJ, Powell BJ, Cao Y, Dunn J. Can learning collaboratives support implementation by rewiring professional networks? Administration and Policy in Mental Health and Mental Health Services Research. 2016;43(1):79–92. https://doi.org/10.1007/s10488-014-0621-x

- Bunger AC, Powell BJ, Robertson HA, MacDowell H, Birken SA, Shea C. Tracking implementation strategies: A description of a practical approach and early findings. Health Research Policy and Systems. 2017;15(15):1–12. https://doi.org/10.1186/s12961-017-0175-y

- Carlier IV, Meuldijk D, van Vliet IM, van Fenema EM, van der Wee NJ, Zitman FG. Empirische evidence voor de effectiviteit van routine outcome monitoring; een literatuuronderzoek [Empirical evidence for the effectiveness of Routine Outcome Monitoring. A study of the literature]. Tijdschrift voor Psychiatrie. 2012;54(2):121–128.

- Childers T. Assessment of the psychometric properties of an Opinion Leadership Scale. Journal of Marketing Research. 1986;23(2):184–188. https://doi.org/10.2307/3151666

- Cochrane Effective Practice and Organisation of Care Group. Data collection checklist. 2002:1–30. Retrieved from http://epoc.cochrane.org/sites/epoc.cochrane.org/files/uploads/datacollectionchecklist.pdf.

- Ditty MS, Landes SJ, Doyle A, Beidas RS. It takes a village: A mixed method analysis of inner setting variables and dialectical behavior therapy implementation. Administration and Policy in Mental Health and Mental Health Services Research. 2015;42(6):672–81. https://doi.org/10.1007/s10488-014-0602-0

- Essock SM, Nossel IR, McNamara K, Bennett ME, Buchanan RW, Kreyenbuhl JA, Dixon LB. Practical monitoring of treatment fidelity: Examples from a team-based intervention for people with early psychosis. Psychiatric Services. 2015;66(7):674–676. https://doi.org/10.1176/appi.ps.201400531

- Gold R, Bunce AE, Cohen DJ, Hollombe C, Nelson CA, Proctor EK, DeVoe JE. Reporting on the strategies needed to implement proven interventions: An example from a “real world” cross-setting implementation study. Mayo Clinic Proceedings. 2016. https://doi.org/10.1016/j.mayocp.2016.03.014

- Grimshaw JM, Eccles MP, Lavis JN, Hill SJ, Squires JE. Knowledge translation of research findings. Implementation Science. 2012;7(50):1–17. https://doi.org/10.1186/1748-5908-7-50

- Hlebec V, Ferligoj A. Reliability of social network measurement instruments. Field Methods. 2002;14(3):288–306.

- Hoffmann TC, Glasziou PP, Boutron I, Milne R, Perera R, Moher D, Michie S. Better reporting of interventions: Template for intervention description and replication (TIDieR) checklist and guide. BMJ. 2014;348(g1687):1–12. https://doi.org/10.1136/bmj.g1687

- Jackson WC. The benefits of measurement-based care for primary care patients with depression. Journal of Clinical Psychiatry. 2016;77(3):e318. https://doi.org/10.4088/JCP.14061tx4c

- Jensen-Doss A, Haimes EMB, Smith AM, Lyon AR, Lewis CC, Stanick CF, Hawley KM. Monitoring treatment progress and providing feedback is viewed favorably but rarely used in practice. Administration and Policy in Mental Health and Mental Health Services Research. 2016:1–14. Advance online publication. https://doi.org/10.1007/s10488-016-0763-0

- Kearney LK, Wray LO, Dollar KM, King PR. Establishing Measurement-based Care in Integrated Primary Care: Monitoring Clinical Outcomes Over Time. Journal of Clinical Psychology in Medical Settings. 2015;22(4):213–227. https://doi.org/10.1007/s10880-015-9443-6

- Kirchner JE, Ritchie MJ, Pitcock JA, Parker LE, Curran GM, Fortney JC. Outcomes of a partnered facilitation strategy to implement primary care–mental health. Journal of General Internal Medicine. 2014;29(4):904–912. https://doi.org/10.1007/s11606-014-3027-2

- Kok G, Gottlieb NH, Peters GY, Mullen PD, Parcel GS, Ruiter RAC, Bartholomew LK. A taxonomy of behaviour change methods: An Intervention Mapping approach. Health Psychology Review. 2016;10(3):297–312. https://doi.org/10.1080/17437199.2015.1077155

- Kroenke K, Spitzer RL, Williams JB. The PHQ-9. Journal of General Internal Medicine. 2001;16(9):606–613. https://doi.org/10.1046/j.1525-1497.2001.016009606.x

- Lewis CC, Scott K, Marti CN, Marriott BR, Kroenke K, Putz JW, Rutkowski D. Implementing measurement-based care (iMBC) for depression in community mental health: A dynamic cluster randomized trial study protocol. Implementation Science. 2015;10(127):1–14. https://doi.org/10.1186/s13012-015-0313-2

- Lewis CC, Boyd M, Kassab H, Beidas R, Lyon A, Chambers D, Mittman B. A research agenda for studying mechanisms of dissemination & implementation science. Poster presented at the Annual Convention of the Association for Behavioral and Cognitive Therapies; New York, NY. 2016, October.

- Lokker C, McKibbon KA, Colquhoun H, Hempel S. A scoping review of classification schemes of interventions to promote and integrate evidence into practice in healthcare. Implementation Science. 2015;10(27):1–12. https://doi.org/10.1186/s13012-015-0220-6

- Lyon AR, Dorsey S, Pullmann MD, Martin P, Grigore AA, Becker EM, Jensen-Doss A. Reliability, Validity, and Factor Structure of the Current Assessment Practice Evaluation-Revised (CAPER) in a National Sample. 2017. Manuscript under review. https://doi.org/10.1007/s11414-018-9621-z

- Mazza D, Bairstow P, Buchan H, Chakraborty SP, Van Hecke O, Grech C, Kunnamo I. Refining a taxonomy for guideline implementation: Results of an exercise in abstract classification. Implementation Science. 2013;8(32):1–10. https://doi.org/10.1186/1748-5908-8-32

- Mendel P, Meredith LS, Schoenbaum M, Sherbourne CD, Wells KB. Interventions in organizational and community context: a framework for building evidence on dissemination and implementation in health services research. Administration and Policy in Mental Health and Mental Health Services Research. 2008;35(1–2):21–37. https://doi.org/10.1007/s10488-007-0144-9

- Michie S, Fixsen DL, Grimshaw JM, Eccles MP. Specifying and reporting complex behaviour change interventions: the need for a scientific method. Implementation Science. 2009;4(40):1–6. https://doi.org/10.1186/1748-5908-4-40

- Michie S, Richardson M, Johnston M, Abraham C, Francis J, Hardeman W, Wood CE. The behavior change technique taxonomy (v1) of 93 hierarchically clustered techniques: Building an international consensus for the reporting of behavior change interventions. Annals of Behavioral Medicine. 2013;46(1):81–95. https://doi.org/10.1007/s12160-013-9486-6

- Mittman BS. Implementation science in health care. In: Brownson RC, Colditz GA, Proctor EK, editors. Dissemination and implementation research in health: Translating science to practice. New York: Oxford University Press; 2012. pp. 400–418.

- Novins DK, Green AE, Legha RK, Aarons GA. Dissemination and implementation of evidence-based practices for child and adolescent mental health: A systematic review. Journal of the American Academy of Child and Adolescent Psychiatry. 2013;52(10):1009–1025. https://doi.org/10.1016/j.jaac.2013.07.012

- Pinnock H, Barwick M, Carpenter CR, Eldridge S, Grandes G, Griffiths CJ, Taylor SJC. Standards for Reporting Implementation Studies (StaRI) statement. BMJ. 2017;356(i6795). https://doi.org/10.1136/bmj.i6795

- Powell BJ, Beidas RS, Lewis CC, Aarons GA, McMillen JC, Proctor EK, Mandell DS. Methods to improve the selection and tailoring of implementation strategies. Journal of Behavioral Health Services & Research. 2017;44(2):177–194. https://doi.org/10.1007/s11414-015-9475-6

- Powell BJ, Hausmann-Stabile C, McMillen JC. Mental health clinicians’ experiences of implementing evidence-based treatments. Journal of Evidence-Based Social Work. 2013;10(5):396–409. https://doi.org/10.1080/15433714.2012.664062

- Powell BJ, McMillen JC, Proctor EK, Carpenter CR, Griffey RT, Bunger AC, York JL. A compilation of strategies for implementing clinical innovations in health and mental health. Medical Care Research and Review. 2012;69(2):123–157. https://doi.org/10.1177/1077558711430690

- Powell BJ, Proctor EK, Glass JE. A systematic review of strategies for implementing empirically supported mental health interventions. Research on Social Work Practice. 2014;24(2):192–212. https://doi.org/10.1177/1049731513505778

- Powell BJ, Waltz TJ, Chinman MJ, Damschroder LJ, Smith JL, Matthieu MM, Kirchner JE. A refined compilation of implementation strategies: Results from the Expert Recommendations for Implementing Change (ERIC) project. Implementation Science. 2015;10(21):1–14. https://doi.org/10.1186/s13012-015-0209-1

- Proctor EK, Powell BJ, McMillen JC. Implementation strategies: Recommendations for specifying and reporting. Implementation Science. 2013;8(139):1–11. https://doi.org/10.1186/1748-5908-8-139

- Rogers EM. Diffusion of innovations. New York, NY: Free Press; 2003. pp. 204–208.

- Salyers MP, Rollins AL, McGuire AB, Gearhart T. Barriers and facilitators in implementing illness management and recovery for consumers with severe mental illness: trainee perspectives. Administration and Policy in Mental Health and Mental Health Services Research. 2009;36(2):102–111. https://doi.org/10.1007/s10488-008-0200-0

- Scott K, Lewis CC. Using Measurement-Based Care to Enhance Any Treatment. Cognitive and Behavioral Practice. 2015;22(1):49–59. https://doi.org/10.1016/j.cbpra.2014.01.010

- Tenkasi RV, Chesmore MC. Social networks and planned organizational change: the impact of strong network ties on effective change implementation and use. Journal of Applied Behavioral Science. 2003;39(3):281–300. https://doi.org/10.1177/0021886303258338

- Valente TW, Ritt-Olson A, Stacy A, Unger JB, Okamoto J, Sussman S. Peer acceleration: effects of a social network tailored substance abuse prevention program among high-risk adolescents. Addiction. 2007;102(11):1804–1815. https://doi.org/10.1111/j.1360-0443.2007.01992.x

- Walter I, Nutley S, Davies H. Developing a taxonomy of interventions used to increase the impact of research. Research Unit for Research Utilisation, Department of Management; University of St. Andrews: 2003.

- Weiner BJ, Lewis MA, Clauser SB, Stitzenberg KB. In search of synergy: Strategies for combining interventions at multiple levels. JNCI Monographs. 2012;44:34–41. https://doi.org/10.1093/jncimonographs/lgs001

- Wensing M. The Tailored Implementation in Chronic Diseases (TICD) project: Introduction and main findings. Implementation Science. 2017;12(5):1–4. https://doi.org/10.1186/s13012-016-0536-x

- Wensing M, Oxman A, Baker R, Godycki-Cwirko M, Flottorp S, Szecsenyi J, Eccles M. Tailored implementation for chronic diseases (TICD): A project protocol. Implementation Science. 2011;6(103):1–8. https://doi.org/10.1186/1748-5908-6-103

- Williams NJ. Multilevel mechanisms of implementation strategies in mental health: Integrating theory, research, and practice. Administration and Policy in Mental Health and Mental Health Services Research. 2015;43(5):783–798. https://doi.org/10.1007/s10488-015-0693-2

- Workgroup for Intervention Development and Evaluation Research. WIDER recommendations to improve reporting of the content of behaviour change interventions. 2008. Retrieved from http://interventiondesign.co.uk/wp-content/uploads/2009/02/wider-recommendations.pdf.
