Abstract

In this paper, we investigate state estimation for systems whose measurement noise is non-Gaussian with unknown covariance. A novel improved Gaussian filter (GF) is proposed, in which the maximum correntropy criterion (MCC) is used to suppress the influence of the non-Gaussian measurement noise, and the noise covariance is estimated online through the variational Bayes (VB) approximation. MCC and VB are integrated through a fixed-point iteration that modifies the estimated measurement noise covariance. As a general framework, the proposed algorithm is applicable to both linear and nonlinear systems, with different rules being used to calculate the Gaussian integrals. A target tracking simulation and the field test of an INS/DVL integrated navigation system show that the proposed algorithm has better estimation accuracy than related robust and adaptive algorithms.

Keywords: Gaussian filter, maximum correntropy criterion, variational Bayes, Kalman filter

1. Introduction

As the benchmark in state estimation, the linear recursive Kalman filter (KF) has been used in various applications, such as information fusion, system control, integrated navigation, target tracking, and GPS solutions [1,2,3,4]. It has since been extended to nonlinear systems by approximating the nonlinear functions or the filtering distributions in different ways. Using a Taylor series to linearize the nonlinear functions yields the popular extended Kalman filter (EKF) [5]. To further improve on the estimation accuracy of the EKF, several sigma-point-based nonlinear filters have been proposed in recent decades, such as the unscented Kalman filter (UKF) using the unscented transform [6], the cubature Kalman filter (CKF) based on cubature rules [7], and the divided difference Kalman filter (DDKF) adopting polynomial approximations [8]. All these filters can be regarded as special cases of the Gaussian filter (GF) [9,10,11], where the noise distribution is assumed to be Gaussian.

However, when the measurements are polluted by non-Gaussian noise, such as impulsive interference or outliers, the GF produces worse estimates and may even break down [12,13]. Besides computationally intensive methods, including the particle filter [14,15], the Gaussian sum filter [16], and multiple model filters [17], robust filters such as Huber’s KF (HKF, also known as M-estimation) [18,19,20] and the H∞ filter [21] are also intended for contaminated measurements. Although the H∞ filter can guarantee a bounded estimation error, it does not perform well under Gaussian noise [22]. Huber’s M-estimation is a combined $l_1$ and $l_2$ norm filter that can effectively suppress non-Gaussian noise [18,19,20]. Recently, the information-theoretic measure correntropy has been used to handle non-Gaussian noise [23,24,25,26,27,28]. According to the maximum correntropy criterion (MCC), a new robust filter known as the maximum correntropy Kalman filter (MCKF) was proposed in [24], and it has also been extended to nonlinear systems using the EKF [27] and the UKF [25,26,28]. Simulation results show that an MCC based GF (MCGF) may obtain better estimation accuracy than M-estimation when a proper kernel bandwidth is chosen [23,24,25,26]. Even so, both MCGF and M-estimation still require the nominal measurement noise covariance, which may be unknown or time varying in some applications. In this situation, the performance of MCGF degrades, as shown in our simulation examples.

Traditionally, unknown noise statistics can be estimated by adaptive filters, such as the Sage–Husa filter [29] and the fading memory filter [30]. One drawback of these recursive adaptive filters is that the statistics estimated at the previous time instant influence the current estimate, which makes them unsuitable when the measurement noise statistics change frequently [31]. The recently proposed variational Bayes (VB) based adaptive filter avoids this limitation through the VB approximation, and VB based GFs (VBGFs) have been proposed for both linear [32] and nonlinear systems [9,33].

In this paper, we propose an adaptive MCGF based on the VB approximation, which is especially useful for estimating the system state from measurements corrupted by non-Gaussian noise with unknown covariance. Typical applications include low-cost INS/GPS integrated navigation systems [32] and maneuvering target tracking [33]. To overcome the limitation of MCGF under unknown, time-varying measurement noise covariance, the VB method is used to improve the adaptivity of MCGF within a fixed-point iteration framework. As demonstrated in our simulation results, the proposed method has better estimation accuracy than related algorithms. Furthermore, various filters can be obtained by using different ways to calculate the Gaussian integrals.

The rest of this paper is organized as follows. In Section 2, after briefly introducing the concept of correntropy, we give the general MCGF algorithm. In Section 3, we explain the main idea of the VB method and the procedure of embedding it into MCGF to obtain our proposed adaptive MCGF. Section 4 gives the experimental results for a typical target tracking model and an INS/DVL navigation system, in comparison with several related algorithms. Conclusions are drawn in the final section.

2. Gaussian Filter Based on the Maximum Correntropy Criterion

2.1. Correntropy

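As a similarity measure, the correntropy of two random variables X and Y is defined as [24,25,26]

$$V(X,Y) = \mathrm{E}\left[\kappa(X,Y)\right] = \iint \kappa(x,y)\, f_{XY}(x,y)\, dx\, dy, \tag{1}$$

where $\mathrm{E}[\cdot]$ denotes expectation, $f_{XY}(x,y)$ is the joint density function, and $\kappa(\cdot,\cdot)$ represents the Mercer kernel. The most popular choice, the Gaussian kernel, is given by

$$\kappa(x,y) = G_{\sigma}(e) = \exp\left(-\frac{e^{2}}{2\sigma^{2}}\right), \tag{2}$$

where $e = x - y$, and $\sigma > 0$ is the kernel bandwidth.

Then, taking the Taylor series expansion of the Gaussian correntropy, we obtain

$$V(X,Y) = \sum_{n=0}^{\infty} \frac{(-1)^{n}}{2^{n}\sigma^{2n}\, n!}\, \mathrm{E}\left[(X-Y)^{2n}\right]. \tag{3}$$

The correntropy therefore contains all the even moments of $X - Y$, weighted by the kernel bandwidth $\sigma$. This enables us to capture high-order information when applying the correntropy in signal processing. In practice, we can use sampled data to estimate the correntropy, since the joint density function is usually unavailable.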
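The sample-based estimate mentioned above can be sketched as follows. This is a minimal illustration, assuming paired scalar samples and the unnormalised Gaussian kernel $\exp(-e^2/(2\sigma^2))$; the function names `gaussian_kernel` and `sample_correntropy` are hypothetical and do not come from the cited works.

```python
import numpy as np


def gaussian_kernel(e, sigma):
    """Unnormalised Gaussian kernel exp(-e^2 / (2 sigma^2)), applied elementwise."""
    e = np.asarray(e, dtype=float)
    return np.exp(-e**2 / (2.0 * sigma**2))


def sample_correntropy(x, y, sigma):
    """Sample-mean estimate of the correntropy from paired samples x[k], y[k]."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    return float(np.mean(gaussian_kernel(x - y, sigma)))
```

Because the kernel is bounded by one, a single very large difference between a pair of samples can lower the estimate by at most 1/N for N samples, which is the property that robust filters built on correntropy exploit.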

2.2. Maximum Correntropy Gaussian Filter

In this paper, we consider the following nonlinear system with additive noise

| (4) |

where is the system state at time i and denotes the measurement. and represent the known nonlinear functions. In standard GF, the process noise and the measurement noise are assumed to be zero mean Gaussian noise sequences with known covariance and . The initial state has known mean and covariance .

In both GF and MCGF, the one step estimation and its estimation covariance are obtained through:

| (5) |

| (6) |

To further improve the robustness of GF, MCC is applied to the derivation of the measurement update of MCGF. Consider the following regression model based on Equations (4)–(6) [25]:

| (7) |

where . The covariance of is

| (8) |

Multiplying on both sides of Equation (7), we obtain

| (9) |

where and .

Then, the optimal estimation under the MCC can be obtained through the following optimization problem:

| (10) |

where is the k-th element of .

Equation (10) can be solved by:

| (11) |

where

| (12) |

| (13) |

| (14) |

Note that $\mathrm{diag}(\cdot)$ denotes a diagonal matrix.

Based on the above equation and using to replace the contained in Equation (9), MCGF can be written in a similar way as GF except a modified measurement noise covariance [25]:

| (15) |

Then, and its covariance can be obtained through

| (16) |

| (17) |

where

| (18) |

| (19) |

| (20) |

| (21) |

The main difference between MCGF and GF is thus the modified measurement noise covariance. MCGF shows excellent estimation performance when the measurement is polluted by outliers or shot noise [24,25,26]. However, it still requires knowledge of the measurement noise covariance. When the covariance changes over time, so that the true covariance differs from the nominal one, the MCGF algorithm does not perform well. Therefore, we adopt an adaptive method to further improve the performance of MCGF in this case.
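To make the role of the modified measurement noise covariance concrete, the following sketch inflates the nominal covariance according to the Gaussian kernel weights of the whitened innovation. It is a minimal sketch under stated assumptions: the modified covariance is taken to have the form $S_r C^{-1} S_r^{T}$, where $S_r$ is the Cholesky factor of the nominal covariance and $C$ is the diagonal matrix of kernel weights; the function name, argument names, and the small floor on the weights are hypothetical.

```python
import numpy as np


def mcc_modified_noise_cov(R, residual, sigma):
    """Inflate R with maximum-correntropy weights (assumed form S_r C^{-1} S_r^T)."""
    R = np.atleast_2d(np.asarray(R, dtype=float))
    residual = np.atleast_1d(np.asarray(residual, dtype=float))
    S_r = np.linalg.cholesky(R)                 # R = S_r S_r^T, lower triangular
    e = np.linalg.solve(S_r, residual)          # whitened measurement residual
    weights = np.exp(-e**2 / (2.0 * sigma**2))  # Gaussian kernel weight per channel
    weights = np.maximum(weights, 1e-12)        # floor avoids division by zero
    return S_r @ np.diag(1.0 / weights) @ S_r.T
```

A residual close to zero leaves the covariance unchanged, while a large residual in one channel inflates the corresponding entry, so that channel contributes little to the gain in the subsequent update.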

3. Variational Bayesian Maximum Correntropy Gaussian Filter

The main idea under state estimation is to obtain the posterior probability density function . For GF, we obtain it through the Gaussian approximation . However, if the measurement noise covariance is unavailable, we need to estimate the joint posterior distribution . This distribution can be solved by the free form VB approximation [9,32]:

| (22) |

where and are unknown approximation densities, which can be calculated by minimizing the Kullback–Leibler (KL) divergence between the true one and its corresponding approximation [9,32]:

| (23) |

| (24) |

According to the VB method, can be approximated as a product of Gaussian distribution and inverse Wishart (IW) distribution [9,32]:

| (25) |

where

| (26) |

| (27) |

where is the trace of a matrix, and and are the degree of freedom parameter and the inverse scale matrix, respectively.

The integrals in (22) and (23) can be computed as follows [9,32]:

| (28) |

| (29) |

where , , and , are some constants. Due to the fact that , we obtain

| (30) |

Besides this, the expectation can be rewritten as

| (31) |

Substituting (30) and (31) into (28) and (29), and matching the parameters in (26) and (27), we can obtain the following results:

| (32) |

| (33) |

| (34) |

| (35) |

| (36) |

| (37) |

where is the estimated measurement covariance.

The VB based GF works well for unknown measurement noise covariance. However, when the measurements contain outliers or shot noise, its estimation accuracy degrades, as will be shown in our simulation results. To overcome the shortcomings of MCGF and VBGF, we combine the advantages of VB and MCC through the fixed-point iteration method and design the so-called VBMCGF algorithm, which is summarized as follows:

- Step 1: Predict:

- Step 2: Update:

First, set , , , and . Calculate and by (19) and (21).

For , iterate the following equations:(40) (41) (42) (43) (44) (45) (46) (47) (48) End For. In addition, set , , and .

The main difference between the proposed VBMCGF and existing GFs lies in the modified estimation error covariance: VB iterations are used to estimate its value, and MCC is used to modify it in the presence of non-Gaussian noise. The kernel bandwidth $\sigma$ plays an important role in reducing the effect of non-Gaussian noise or outliers. A smaller $\sigma$ down-weights large residuals more strongly, which makes the filter react more sharply to outliers, but it may impair convergence. Conversely, too large a $\sigma$ makes the VBMCGF behave more like VBGF (it can be proved that, as $\sigma \to \infty$, the proposed VBMCGF reduces to VBGF). One possible way to select $\sigma$ is by trial and error [24,25,26]. Another important issue is the number of fixed-point iterations; in practice, only a few iterations (e.g., 2 or 3) are enough [31,32].
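The interplay between the VB estimate, the MCC modification, and the bandwidth can be illustrated for a linear measurement model. The sketch below is not a transcription of the algorithm above but one plausible arrangement under stated assumptions: the measurement is $z = Hx + v$, the noise covariance has an inverse Wishart approximation with degree of freedom `nu` and inverse scale matrix `V`, its point estimate is taken as `V / (nu - m - 1)`, and the kernel weights are evaluated on the whitened residual of the current iterate. The function name `vbmc_kf_update` and all argument names are hypothetical.

```python
import numpy as np


def vbmc_kf_update(x_pred, P_pred, z, H, nu_pred, V_pred, sigma, n_iter=3):
    """One measurement update combining a VB noise estimate with MCC weighting."""
    x_pred = np.asarray(x_pred, dtype=float)
    P_pred = np.asarray(P_pred, dtype=float)
    z = np.asarray(z, dtype=float)
    H = np.asarray(H, dtype=float)
    V_pred = np.asarray(V_pred, dtype=float)
    m = z.size
    nu = nu_pred + 1.0                      # one new measurement is absorbed
    x, P, V = x_pred.copy(), P_pred.copy(), V_pred.copy()
    for _ in range(n_iter):                 # fixed-point iteration
        R_hat = V / (nu - m - 1.0)          # VB point estimate of the noise covariance
        S_r = np.linalg.cholesky(R_hat)
        e = np.linalg.solve(S_r, z - H @ x)  # whitened residual at current iterate
        w = np.maximum(np.exp(-e**2 / (2.0 * sigma**2)), 1e-12)
        R_tilde = S_r @ np.diag(1.0 / w) @ S_r.T  # MCC-modified covariance
        S = H @ P_pred @ H.T + R_tilde
        K = P_pred @ H.T @ np.linalg.inv(S)
        x = x_pred + K @ (z - H @ x_pred)
        P = P_pred - K @ S @ K.T
        r = z - H @ x
        V = V_pred + np.outer(r, r) + H @ P @ H.T  # refresh inverse scale matrix
    return x, P, nu, V
```

Passing `sigma=np.inf` sets every kernel weight to one, so the sketch then performs a purely VB-based update; this mirrors the limiting behaviour described in the preceding paragraph.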

As a general framework, our filter can be easily implemented according to practical requirements. For linear systems that are described by and , the prediction update in the VBMCKF is the same as in the KF:

| (49) |

| (50) |

In addition, the , , , and that appeared in VBMCKF will reduce to the following equations:

| (51) |

| (52) |

| (53) |

| (54) |

while other steps are the same as the general framework.

When it comes to the nonlinear systems, the Gaussian integrals contained in , , , , , and can be calculated according to Taylor series, unscented transform, or cubature rules, and the corresponding filters are called VBMCEKF, VBMCUKF, and VBMCCKF, respectively.
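As an illustration of how such Gaussian integrals can be approximated, the following sketch implements the third-degree spherical-radial cubature rule, which uses 2n equally weighted points for an n-dimensional state. The function names `cubature_points` and `gaussian_expectation` are hypothetical, and the sketch covers only the integration rule, not a full filter.

```python
import numpy as np


def cubature_points(mean, cov):
    """Return the 2n cubature points of N(mean, cov) as columns of an (n, 2n) array."""
    mean = np.asarray(mean, dtype=float)
    cov = np.asarray(cov, dtype=float)
    n = mean.size
    L = np.linalg.cholesky(cov)                                   # cov = L L^T
    unit = np.sqrt(n) * np.hstack([np.eye(n), -np.eye(n)])        # +/- sqrt(n) e_i
    return mean[:, None] + L @ unit


def gaussian_expectation(func, mean, cov):
    """Approximate E[func(x)] for x ~ N(mean, cov) with equal weights 1/(2n)."""
    pts = cubature_points(mean, cov)
    vals = np.array([func(pts[:, k]) for k in range(pts.shape[1])])
    return vals.mean(axis=0)
```

The rule reproduces the mean and covariance of the Gaussian exactly and is exact for polynomials up to degree three, which is why the same point set can be reused for the predicted state, the predicted measurement, and the associated covariances.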

4. Experimental Results

4.1. Simulation Results of the Target Tracking Model

To illustrate the performance of the proposed algorithm, we first give the simulation results using a typical target tracking model, where cubature rules are used to calculate the integrals. We compare the estimation accuracy of seven filters: CKF [7], MCCKF-1 [26], MCCKF-2 [25], VBCKF [9], HCKF [18], VBHCKF (which adopts Huber’s function) and the proposed VBMCCKF under various kinds of measurement noise. The target tracking example is modeled as [2]:

| (55) |

| (56) |

where is the system state with and being the position and velocity in x and y directions. The position of radar is set as = (−100 m, −100 m). We set the sampling period , . The initial state is and the covariance is . The root mean square error (RMSE) and average RMSE (ARMSE) in position or velocity are used to describe the estimation accuracy—for example, the RMSE and ARMSE in position are defined as [2]:

| (57) |

| (58) |

where and are the true and estimated position in the cth Monte Carlo experiment, respectively. The RMSE and ARMSE of velocity are similar.
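These error measures can be computed from stored Monte Carlo results as sketched below. The sketch assumes that positions are stored in arrays of shape (M, T, 2) for M Monte Carlo runs, T time steps, and the x and y coordinates, and that ARMSE is the root of the squared position error averaged over both runs and time steps; the array layout and the function names are hypothetical.

```python
import numpy as np


def position_rmse(true_pos, est_pos):
    """RMSE in position at each time step, averaged over Monte Carlo runs."""
    true_pos = np.asarray(true_pos, dtype=float)
    est_pos = np.asarray(est_pos, dtype=float)
    sq_err = np.sum((true_pos - est_pos) ** 2, axis=-1)  # shape (M, T)
    return np.sqrt(sq_err.mean(axis=0))                  # shape (T,)


def position_armse(true_pos, est_pos):
    """Single ARMSE value, averaged over Monte Carlo runs and time steps."""
    true_pos = np.asarray(true_pos, dtype=float)
    est_pos = np.asarray(est_pos, dtype=float)
    sq_err = np.sum((true_pos - est_pos) ** 2, axis=-1)
    return float(np.sqrt(sq_err.mean()))
```

The velocity measures follow by passing the velocity components in the same layout.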

We consider the following five kinds of measurement noise:

Case A: Gaussian distribution

| (59) |

Case B: Time varying measurement noise covariance

| (60) |

Case C: Gaussian mixture noise with time varying measurement noise covariance

| (61) |

Case D: Time varying measurement noise covariance and shot noise

| (62) |

Case E: Gaussian mixture noise with time varying measurement noise covariance and shot noise

| (63) |

where the parameters , , and are given in Figure 1.
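For readers who wish to reproduce noise of this kind, the following sketch draws a Gaussian mixture sequence with optional shot noise. All numerical settings are left to the caller, since the sketch does not reproduce the specific parameters of Cases A to E: the mixture is assumed to have two zero-mean components with covariances R and `mix_scale` times R, the covariance is held fixed over time for brevity, and shot noise is modelled as a constant offset added at chosen steps. The function name and arguments are hypothetical.

```python
import numpy as np


def sample_measurement_noise(rng, R, n_steps, mix_prob=0.0, mix_scale=1.0,
                             shot_steps=(), shot_value=0.0):
    """Draw n_steps noise vectors: Gaussian mixture plus optional shot noise."""
    R = np.atleast_2d(np.asarray(R, dtype=float))
    m = R.shape[0]
    L = np.linalg.cholesky(R)
    base = rng.standard_normal((n_steps, m)) @ L.T     # rows distributed as N(0, R)
    wide = rng.random(n_steps) < mix_prob              # steps using the wide component
    scale = np.where(wide, np.sqrt(mix_scale), 1.0)
    noise = base * scale[:, None]
    idx = np.asarray(list(shot_steps), dtype=int)
    noise[idx] += shot_value                           # inject shot noise
    return noise
```

A time-varying covariance can be emulated by calling the function segment by segment with a different R for each segment.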

Figure 1.

The time varying parameters. (a) ; (b) and .

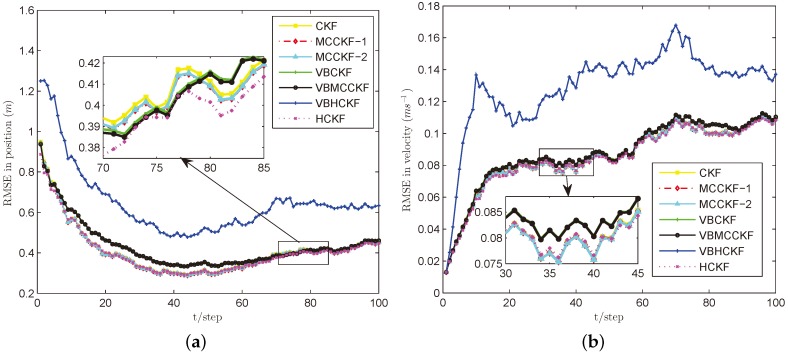

In these simulations, we use for MCC based algorithms, the commonly used threshold for Huber’s function, and we set and for VB approximations. We perform steps and run Monte Carlo experiments for each case. The simulation results are plotted in Figure 2, Figure 3, Figure 4, Figure 5 and Figure 6.

Figure 2.

RMSE performances of different filters under Case A. (a) position; (b) velocity.

Figure 3.

RMSE performances of different filters under Case B. (a) position; (b) velocity.

Figure 4.

RMSE performances of different filters under Case C. (a) position; (b) velocity.

Figure 5.

RMSE performances of different filters under Case D. (a) position; (b) velocity.

Figure 6.

RMSE performances of different filters under Case E. (a) position; (b) velocity.

Under Gaussian measurement noise with known covariance, as shown in Figure 2, both MCCKF and HCKF have nearly the same estimation accuracy as CKF, since they reduce to CKF for suitable free parameters (e.g., when $\sigma$ and h tend to infinity). VBCKF and VBMCCKF perform slightly worse than CKF because they use their online estimate of the measurement noise covariance instead of the true one. In particular, VBHCKF has the worst performance, since the commonly used parameter for Huber’s function does not fit the Gaussian noise situation when the inaccurate online estimate of the measurement noise covariance is used. The proposed VBMCCKF works well with the same kernel bandwidth under both Gaussian and non-Gaussian noise, as will be shown in the following cases.

Figures 3–6 show the estimation performance of the different algorithms under Cases B–E. CKF clearly has the worst estimation accuracy, since it requires Gaussian measurement noise with known covariance, which is violated in these situations. MCCKF-1 and MCCKF-2 have similar estimation performance but are slightly worse than HCKF with this kernel bandwidth. As demonstrated in [23,24,25,26,27], MCCKF is able to obtain better estimation accuracy than HCKF with a suitable kernel bandwidth. The estimation results of MCCKF and HCKF do not change much when Gaussian mixture noise or shot noise is added, since they are robust filters. VBHCKF has better velocity estimates but worse position accuracy than HCKF. VBCKF performs much better in Cases B and C, as it is able to estimate the time-varying measurement noise covariance online. However, its performance degrades once shot noise is injected in Cases D and E. Among these algorithms, the proposed VBMCCKF has the best estimation accuracy under Cases B–E. It shows adaptivity to the unknown, changing measurement noise covariance and robustness to Gaussian mixture noise and shot noise. Its estimation results are also much better than those of VBHCKF, since MCC has the potential to capture higher-order information than Huber’s function. The ARMSEs of these filters under the different noise cases are also given in Table 1 to show the differences clearly.

Table 1.

ARMSEs of different filters under five cases.

| Algorithms | Case A Pos. | Case A Vel. | Case B Pos. | Case B Vel. | Case C Pos. | Case C Vel. | Case D Pos. | Case D Vel. | Case E Pos. | Case E Vel. |
|---|---|---|---|---|---|---|---|---|---|---|
| CKF | 0.4097 | 0.0855 | 2.2930 | 0.3121 | 2.2050 | 0.3053 | 3.7720 | 0.7809 | 3.2960 | 0.6923 |
| MCCKF-1 | 0.4077 | 0.0854 | 1.4960 | 0.1959 | 1.5680 | 0.2052 | 1.4090 | 0.2077 | 1.5680 | 0.2100 |
| MCCKF-2 | 0.4079 | 0.0853 | 1.5280 | 0.1989 | 1.5990 | 0.2058 | 1.4500 | 0.2098 | 1.6010 | 0.2114 |
| HCKF | 0.4045 | 0.0847 | 1.0990 | 0.1304 | 1.1360 | 0.1390 | 1.0100 | 0.1384 | 1.1030 | 0.1365 |
| VBHCKF | 0.6500 | 0.1296 | 1.7180 | 0.1185 | 1.6360 | 0.1260 | 1.6460 | 0.1207 | 1.7590 | 0.1251 |
| VBCKF | 0.4362 | 0.0880 | 0.8761 | 0.0845 | 0.7828 | 0.0914 | 1.1240 | 0.0977 | 1.3100 | 0.1000 |
| VBMCCKF | 0.4350 | 0.0878 | 0.8633 | 0.0843 | 0.7752 | 0.0909 | 0.7424 | 0.0933 | 0.8236 | 0.0931 |

4.2. Field Results of Integrated Navigation

To further illustrate the effectiveness of the proposed algorithm, we compare it with existing related methods using real data collected by a self-made fiber optic gyroscope inertial navigation system (INS) together with a Doppler velocity log (DVL). The integrated navigation results of a photonics inertial navigation system (PHINS) and GPS are used as the reference. We adopt the loosely coupled method to fuse the information of the INS and DVL. The state vector is chosen as , where and are the latitude and longitude errors, are the velocity error, attitude error, accelerometer bias, and gyroscope constant drift, respectively. j denotes the subscript , where e and n represent the east and north directions in the local-level frame, and x, y, and z are the directions of the three axes in the body frame. Then, the continuous system model is given as follows:

| (64) |

where t is the continuous system time, and is the process noise, which contains the Gaussian noise of both accelerometers and gyroscopes. The detailed elements of the matrices and can be found in [34]. The measurement equation is

| (65) |

where , , and . Then, the discretization process is performed before running filtering algorithms.

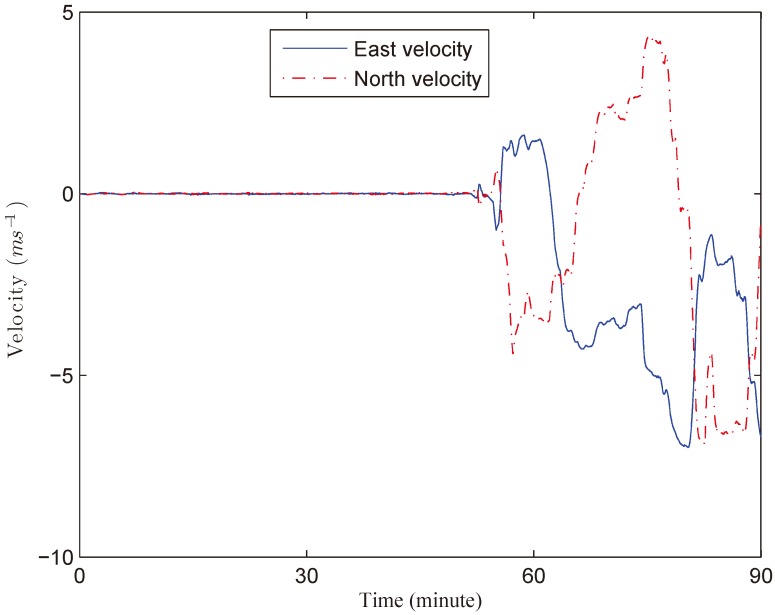

We compare the estimation results of KF, VBKF, HKF, MCKF, and VBMCKF, where we choose , , , and . We set , and the covariance is P0 = diag( 0.5°, 0.5°, 3°, $1 \times 10^{-4}$ g, $1 \times 10^{-4}$ g, 0.01°/h, 0.01°/h, 0.01°/h), where is the radius of the Earth, and g is the gravitational acceleration. The process noise covariance and measurement noise covariance are set as = diag(0, 0, $1 \times 10^{-3}$ g, $1 \times 10^{-3}$ g, 0.025°/h, 0.025°/h, 0.025°/h, 0, 0, 0, 0, 0) and = diag(0.1 m/s, 0.1 m/s) according to the parameters of the INS and DVL.

In this experiment, the system is first tested at anchor for about 50 min, and then the ship starts to move. The real velocities of the ship, provided by the commercial INS/GPS integrated navigation system, are shown in Figure 7. The collected data are processed using MATLAB (R2014a by MathWorks, Inc., Natick, MA, USA) on a computer with a 2.50 GHz Intel Core i5-7300HQ CPU and 8 GB of memory. The total computational times of KF, VBKF, HKF, MCKF, and VBMCKF are 0.1900 s, 0.4800 s, 0.2640 s, 0.2310 s, and 0.6340 s, respectively. The position and velocity errors of the different filters are given in Figure 8 and Figure 9. The attitude and heading errors of the different filters are quite similar, so we omit them.

Figure 7.

Real velocities of this field experiment.

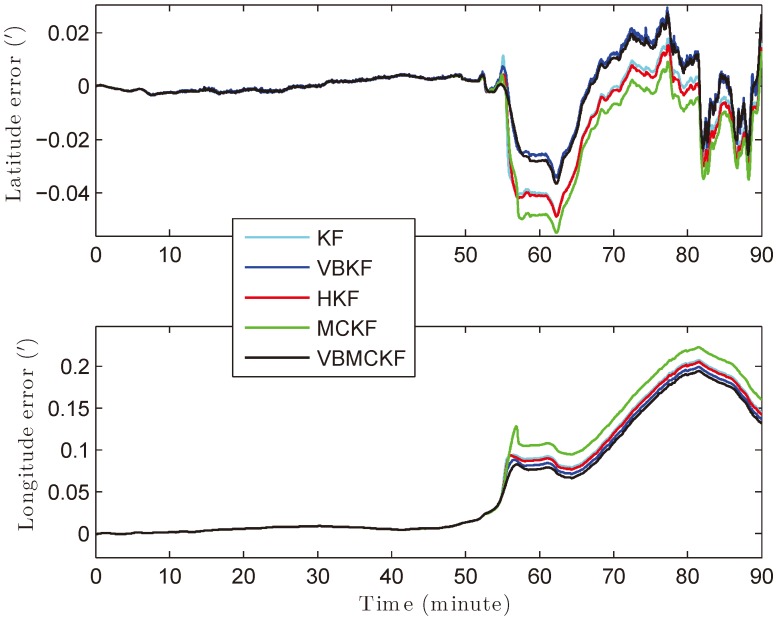

Figure 8.

Position errors of different filters.

Figure 9.

Velocity errors of different filters.

It can be seen from Figure 8 and Figure 9 that, when the motion state changes sharply, the proposed VBMCKF algorithm has the smallest estimation errors among the compared methods, at the cost of a longer computational time (0.6340 s in total, compared with 0.1900 s for KF).

5. Conclusions

In this paper, a novel adaptive MCGF based on VB approximation is proposed. The MCC is used to reduce the effect of non-Gaussian measurement noise and outliers, while we use VB to estimate the unknown measurement noise covariance. Experimental results based on simulation examples and real data show that the proposed algorithm has better estimation accuracy than related robust and adaptive filters.

Author Contributions

W.G. and G.Z. conceived and designed the experiments; W.G. and Z.Y. performed the experiments and analyzed the data; M.B. and Z.Y. contributed experiment tools; W.G. and G.Z. wrote the paper.

Funding

This research was funded by the National Natural Science Foundation of China under grant numbers 61773133 and 61633008, the Natural Science Foundation of Heilongjiang Province under grant number F2016008, the Fundamental Research Funds for the Central Universities under grant number HEUCFM180401, the China Scholarship Council Foundation, and the Ph.D. Student Research and Innovation Foundation of the Fundamental Research Funds for the Central Universities under grant number HEUGIP201807.

Conflicts of Interest

The authors declare no conflict of interest.

References

- 1. Auger F., Hilairet M., Guerrero J.M., Monmasson E., Orlowska-Kowalska T., Katsura S. Industrial Applications of the Kalman Filter: A Review. IEEE Trans. Ind. Electron. 2013;60:5458–5471. doi: 10.1109/TIE.2012.2236994.

- 2. Wang G., Li N., Zhang Y. An Event based Multi-sensor Fusion Algorithm with Deadzone Like Measurements. Inf. Fusion. 2018;42:111–118. doi: 10.1016/j.inffus.2017.10.004.

- 3. Wang G., Li N., Zhang Y. Diffusion Distributed Kalman Filter over Sensor Networks without Exchanging Raw Measurements. Signal Process. 2017;132:1–7. doi: 10.1016/j.sigpro.2016.07.033.

- 4. Huang Y., Zhang Y., Xu B., Wu Z., Chambers J. A New Outlier-Robust Student’s t Based Gaussian Approximate Filter for Cooperative Localization. IEEE ASME Trans. Mechatron. 2017;22:2380–2386. doi: 10.1109/TMECH.2017.2744651.

- 5. Reif K., Günther S., Yaz E., Unbehauen R. Stochastic Stability of the Discrete-time Extended Kalman Filter. IEEE Trans. Autom. Control. 1999;44:714–728. doi: 10.1109/9.754809.

- 6. Julier S.J., Uhlmann J.K. Unscented Filtering and Nonlinear Estimation. Proc. IEEE. 2004;92:401–422. doi: 10.1109/JPROC.2003.823141.

- 7. Arasaratnam I., Haykin S. Cubature Kalman Filters. IEEE Trans. Autom. Control. 2009;54:1254–1269. doi: 10.1109/TAC.2009.2019800.

- 8. Nørgaard M., Poulsen N.K., Ravn O. New Developments in State Estimation for Nonlinear Systems. Automatica. 2000;36:1627–1638. doi: 10.1016/S0005-1098(00)00089-3.

- 9. Särkkä S., Hartikainen J. Non-linear Noise Adaptive Kalman Filtering via Variational Bayes; Proceedings of the 2013 IEEE International Workshop on Machine Learning for Signal Processing; Southampton, UK. 14 November 2013; pp. 1–6.

- 10. Huang Y., Zhang Y., Wang X., Zhao L. Gaussian Filter for Nonlinear Systems with Correlated Noises at the Same Epoch. Automatica. 2015;60:122–126. doi: 10.1016/j.automatica.2015.06.035.

- 11. Wang G., Li N., Zhang Y. Hybrid Consensus Sigma Point Approximation Nonlinear Filter Using Statistical Linearization. Trans. Inst. Meas. Control. 2018;40:2517–2525. doi: 10.1177/0142331217691758.

- 12. Shmaliy Y.S., Zhao S., Ahn C.K. Unbiased Finite Impulse Response Filtering: An Iterative Alternative to Kalman Filtering Ignoring Noise and Initial Conditions. IEEE Control Syst. Mag. 2017;37:70–89. doi: 10.1109/MCS.2017.2718830.

- 13. Zhao S., Shmaliy Y.S., Shi P., Ahn C.K. Fusion Kalman/UFIR Filter for State Estimation with Uncertain Parameters and Noise Statistics. IEEE Trans. Ind. Electron. 2017;64:3075–3083. doi: 10.1109/TIE.2016.2636814.

- 14. Kotecha J.H., Djuric P.M. Gaussian Particle Filtering. IEEE Trans. Signal Process. 2003;51:2592–2601. doi: 10.1109/TSP.2003.816758.

- 15. Pak J.M., Ahn C.K., Shmaliy Y.S., Lim M.T. Improving Reliability of Particle Filter-Based Localization in Wireless Sensor Networks via Hybrid Particle/FIR Filtering. IEEE Trans. Ind. Inform. 2015;11:1089–1098. doi: 10.1109/TII.2015.2462771.

- 16. Alspach D., Sorenson H. Nonlinear Bayesian Estimation Using Gaussian Sum Approximations. IEEE Trans. Autom. Control. 1972;17:439–448. doi: 10.1109/TAC.1972.1100034.

- 17. Li X.R., Jilkov V.P. Survey of Maneuvering Target Tracking. Part V. Multiple-model Methods. IEEE Trans. Aerosp. Electron. Syst. 2005;41:1255–1321.

- 18. Chang L., Hu B., Chang G., Li A. Multiple Outliers Suppression Derivative-Free Filter Based on Unscented Transformation. J. Guid. Control Dyn. 2012;35:1902–1907. doi: 10.2514/1.57576.

- 19. Karlgaard C.D. Nonlinear Regression Huber-Kalman Filtering and Fixed-Interval Smoothing. J. Guid. Control Dyn. 2014;38:322–330. doi: 10.2514/1.G000799.

- 20. Wu H., Chen S., Yang B., Chen K. Robust Derivative-Free Cubature Kalman Filter for Bearings-Only Tracking. J. Guid. Control Dyn. 2016;39:1866–1871. doi: 10.2514/1.G001686.

- 21. Hur H., Ahn H.S. Discrete-Time H∞ Filtering for Mobile Robot Localization Using Wireless Sensor Network. IEEE Sens. J. 2013;13:245–252. doi: 10.1109/JSEN.2012.2213337.

- 22. Graham M.C., How J.P., Gustafson D.E. Robust State Estimation with Sparse Outliers. J. Guid. Control Dyn. 2015;38:1229–1240. doi: 10.2514/1.G000350.

- 23. Wang Y., Zheng W., Sun S., Li L. Robust Information Filter Based on Maximum Correntropy Criterion. J. Guid. Control Dyn. 2016;39:1126–1131. doi: 10.2514/1.G001576.

- 24. Chen B., Liu X., Zhao H., Principe J.C. Maximum Correntropy Kalman Filter. Automatica. 2017;76:70–77. doi: 10.1016/j.automatica.2016.10.004.

- 25. Liu X., Qu H., Zhao J., Yue P., Wang M. Maximum Correntropy Unscented Kalman Filter for Spacecraft Relative State Estimation. Sensors. 2016;16:1530. doi: 10.3390/s16091530.

- 26. Liu X., Chen B.D., Xu B., Wu Z.Z., Honeine P. Maximum Correntropy Unscented Filter. Int. J. Syst. Sci. 2017;48:1607–1615. doi: 10.1080/00207721.2016.1277407.

- 27. Liu X., Qu H., Zhao J., Chen B. Extended Kalman Filter under Maximum Correntropy Criterion; Proceedings of the 2016 International Joint Conference on Neural Networks; Vancouver, BC, Canada. 24–29 July 2016; pp. 1733–1737.

- 28. Wang G., Li N., Zhang Y. Maximum Correntropy Unscented Kalman and Information Filters for Non-Gaussian Measurement Noise. J. Frankl. Inst. 2017;354:8659–8677. doi: 10.1016/j.jfranklin.2017.10.023.

- 29. Narasimhappa M., Rangababu P., Sabat S.L., Nayak J. A modified Sage–Husa Adaptive Kalman Filter for Denoising Fiber Optic Gyroscope Signal; Proceedings of the 2012 Annual IEEE India Conference; Kochi, India. 7–9 December 2012; pp. 1266–1271.

- 30. Wang Y., Sun S., Li L. Adaptively Robust Unscented Kalman Filter for Tracking a Maneuvering Vehicle. J. Guid. Control Dyn. 2014;37:1696–1701. doi: 10.2514/1.G000257.

- 31. Li K., Chang L., Hu B. A Variational Bayesian-Based Unscented Kalman Filter with Both Adaptivity and Robustness. IEEE Sens. J. 2016;16:6966–6976. doi: 10.1109/JSEN.2016.2591260.

- 32. Särkkä S., Nummenmaa A. Recursive Noise Adaptive Kalman Filtering by Variational Bayesian Approximations. IEEE Trans. Autom. Control. 2009;54:596–600. doi: 10.1109/TAC.2008.2008348.

- 33. Mbalawata I.S., Särkkä S., Vihola M., Haario H. Adaptive Metropolis Algorithm using Variational Bayesian Adaptive Kalman Filter. Comput. Stat. Data Anal. 2015;83:101–115. doi: 10.1016/j.csda.2014.10.006.

- 34. Gao W., Li J., Zhou G., Li Q. Adaptive Kalman Filtering with Recursive Noise Estimator for Integrated SINS/DVL Systems. J. Navig. 2015;68:142–161. doi: 10.1017/S0373463314000484.