Version Changes

Revised. Amendments from Version 1

The data extraction tool for impact evaluations was edited to clarify the study designs to be included in the evidence gap map protocol. It now includes (1) a broad list of eligible study designs; (2) eligible types of analytical approaches; and (3) improved instructions for coders when extracting equity and outcome data in full-text review.

Abstract

Background. For the last two decades there has been growing interest among governmental and global health stakeholders in the role that performance measurement and management systems can play in the production of high-quality and safely delivered primary care services. Despite this recognition and interest, the gaps in evidence in this field of research and practice in low- and middle-income countries remain poorly characterized. This study will develop an evidence gap map in the area of performance management in primary care delivery systems in low- and middle-income countries.

Methods. The evidence gap map will follow the methodology developed by 3ie, the International Initiative for Impact Evaluation, to systematically map evidence and research gaps. The process starts with the development of the scope by creating an evidence-informed framework that helps identify the interventions and outcomes of relevance and helps define inclusion and exclusion criteria. A search strategy is then developed to guide the systematic search of the literature, covering the following databases: Medline (Ovid), Embase (Ovid), CAB Global Health (Ovid), CINAHL (Ebsco), Cochrane Library, Scopus (Elsevier), and Econlit (Ovid). Sources of grey literature are also searched. Studies that meet the inclusion criteria are systematically coded, extracting data on intervention, outcome, measures, context, geography, equity, and study design. Systematic reviews are also critically appraised using an existing standard checklist. Impact evaluations are not appraised but will be coded according to study design. The process of map-building ends with the creation of an evidence gap map graphic that displays the available evidence according to the intervention and outcome framework of interest.

Discussion. Implications arising from the evidence map will be discussed in a separate paper that will summarize findings and make recommendations for the development of a prioritized research agenda.

Keywords: Accountability, Evidence gap maps, Implementation strategies, Low- and middle-income countries, Performance measurement and management systems, Primary Care delivery systems, Quality of care, Safety

Background

The critical role that primary care delivery systems can play in helping achieve desirable societal goals in low- and middle-income countries (LMIC) has been widely recognized. Given their potential to serve as first points of contact for continuous, coordinated, comprehensive and people-centered health services, high-performing primary care systems are a necessary element for the achievement of the sustainable development goals, the operationalization of calls for universal health coverage, and the management of global pandemics 1– 3. While considerable research is available on primary health care and its constitutive elements, it is not clear which approaches are most effective to ensure that primary care systems consistently deliver safe and quality services, that harmful services are not delivered, that primary care delivery systems acquire the capabilities required for continuous improvement, and that all of the above add up to improved population health and other socially valued outcomes.

The objective of this study is to identify and describe existing evidence on the effects of interventions in the area of performance measurement and management in primary care delivery systems in LMICs and, also, to provide easy access to such evidence for relevant decision makers. The resulting evidence gap map (EGM) will inform the development of a prioritized research agenda for primary care delivery systems in LMIC.

Why is this study relevant to research, policy and practice in LMICs?

There are multiple approaches, frameworks, and conceptualizations for characterizing health systems, measuring and managing their performance, and typifying health system interventions. The study uses a multidisciplinary approach to identify and characterize the relevant literature from different fields and disciplines such as organizational science, development economics, behavioural science, health systems research, and public-sector management.

Organizational performance refers to the results generated by an organization and measured against its intended aims. In private sector organizations, performance can be a function of profits, organizational efficiency and effectiveness, quality of goods and services, market share, and customer satisfaction. In public administration, the definition of organizational performance has evolved with the changing framings for the role of the State in the production and delivery of public goods and services 4. Historically, governments initially emphasized aspects of performance such as the control of inputs and the compliance with standards. Subsequent framings shifted, first, towards a focus on the quantity and quality of outputs, productivity, and efficiency and, in recent years, to outcomes and policy impacts and, in the case of the health sector, to social values like patient- or people-centered health services and equity 4– 7.

On the research side, the theories of organizational performance have followed, in general, the evolution of the practice of performance management in high-income countries. According to Talbot 8, an initial set of theories and frameworks focused on characterizing associations between individual elements of performance and organizational effectiveness. Afterwards, researchers focused on excellence, quality and organizational culture, which led to the development of a first wave of models of performance measurement and management. These models did not account for differences between public and private sector dimensions of performance but were nonetheless adopted by governments around the world. In the 1990s, the focus shifted from theoretical perspectives about organizational performance to interest in how to measure goal achievement in public and private sector organizations using performance models such as the Balanced Scorecard and others 9– 11. Interest in performance measurement and management spread around the world, and international comparisons and benchmarking of performance flourished in various sectors such as governance 12, 13, health 14, and education 15.

During the last 40 years, innovations in performance measurement and management in the health sector have been prevalent in the United Kingdom, Canada, Australia, New Zealand, Sweden and the US, among other countries 16– 19. Amplified by multilateral finance organizations and some bilateral agencies, performance measurement and management systems have spread among LMIC, sometimes as central aspects of large-scale public-sector reforms and sometimes as stand-alone health sector reforms. Some elements of performance measurement and management have spread more than others, particularly performance-based financing, pay-for-performance, performance budgeting and contracting, and the use of financial incentives (defined below).

The spread of the practice and research of performance measurement and management has also affected the global health architecture and its governance. In the late 1990s, the interest of donor governments, multilateral finance institutions, bilateral agencies, and global philanthropies began shifting from a focus on funding inputs towards an interest in the production of measurable results, aid effectiveness, and accountability. Such shifts in preferences contributed to the emergence of new global organizational forms and partnerships such as the Global Fund to Fight HIV/AIDS, Tuberculosis and Malaria, GAVI the Vaccine Alliance, the Global Finance Facility, and the Mesoamerican Health Facility, to name a few.

In terms of effectiveness, 40 years of research on performance measurement and management have shown that, despite many challenges, such systems can be effective 20– 25. There is evidence, also, of the generation of unintended effects in the public and private sectors 22, 26– 29.

In the area of health systems research, the Cochrane Collaboration has to date generated approximately 200 systematic reviews addressing the effective organization of health services. While the majority of these have focused on issues of relevance to research and policy in high-income countries, there is a growing portfolio of reviews focused on delivery and financial arrangements, as well as implementation strategies, in LMIC 30– 38.

Study objectives

This evidence gap map aims to identify and describe the existing evidence on the effects of interventions in the area of performance measurement and management in primary care delivery systems in LMICs. It also aims to identify evidence gaps where new primary studies or systematic reviews could add value, and to provide easy access to the best available existing evidence on intervention effects in this area. The resulting EGM will inform the development of a prioritized research agenda for primary care delivery systems in LMICs.

How performance measurement and management may work in primary care delivery systems

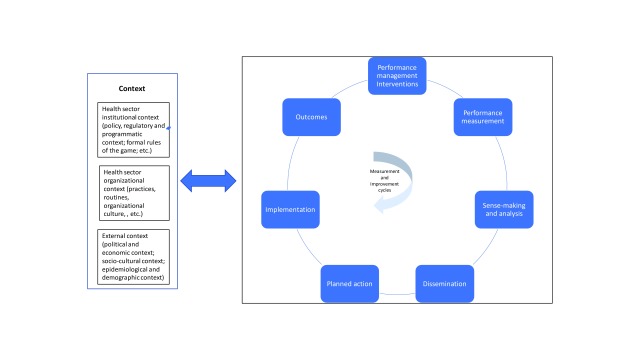

The components of a generic performance management system are delineated in Figure 1 and represent an adaptation of two frameworks. The first is a generic framework of public management in public sector organizations developed by Pollitt 27, and the second is the result of ongoing research on primary care performance in Mesoamerica led by one of this study’s authors (WM) 39. Framework components include: 1) a context in which various policies, organizations, programs and health interventions coexist with system actors and stakeholders; 2) one or more performance management interventions; 3) activities for measuring the results from the implementation of primary care policies and programs, and the ensuing data; 4) a process through which raw performance data is made sense of and transformed into performance information; 5) dissemination of performance information among system actors and stakeholders with the intent of making it actionable; 6) performance information use, misuse or non-use; 7) implementation of planned action based on the use of performance information; and, 8) the effects from the implementation of planned action and clinical and managerial improvements (proximal processes, outputs and outcomes, and distal, societal and population-level outcomes).

Figure 1. Generic performance measurement and management system.

However, the production of actual, measurable performance is a complex, dynamic phenomenon. Real performance can be very hard, if at all possible, to observe. Its measurement is characterized by lags between the introduction of interventions, the production of effects, and their measurement. These delays can also create a disconnect between action, measurement and results. Once measurement occurs and performance information is available, system actors and stakeholders can respond to the perceived performance gap by using, not using, or misusing such information 40, 41. To be effective, performance information needs to inform subsequent organizational action. Changes in strategic direction or operational tactics would also have to be effectively implemented for outcomes to be generated.

Based on the above theoretical and practice-oriented considerations, the study defines performance measurement and management in a primary care delivery system as the introduction of management systems focused on measuring organizational processes, outputs and outcomes with the proximal aim of informing the introduction of clinical, managerial, programmatic and policy changes and the ultimate goal of contributing to socially valued, population level health outcomes.

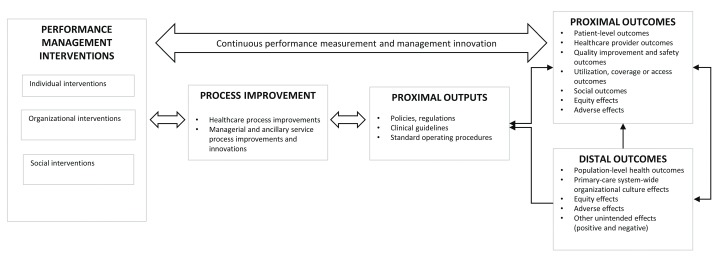

Figure 2 provides a framework which maps out three broad classes of performance measurement and management interventions, the assumed associated process improvements and outputs, and proximal and distal outcomes. The framework highlights some of the dynamic and complex relationships between interventions and outcomes and characterizes a process of multi-level change in a primary care delivery system. The process of change described in this framework adheres to the following logic:

Figure 2. Performance measurement and management framework in primary care delivery systems.

- Performance management interventions operating at individual, organizational, and social levels can initially trigger short-term changes in healthcare as well as in managerial and ancillary service process improvements. Such changes would be the result of short cycles of experimentation with technological, managerial and clinical innovations 42, 43.

- The repetition of these cycles through time, and the utilization of the information derived from performance measurement by system actors, would lead to the generation of proximal outputs such as policies, regulations, clinical guidelines, and standard operating procedures, as well as negative or unintended outcomes.

- If effectively implemented, these new routines and processes would lead to intended and unintended proximal outcomes including changes in the behaviors of healthcare providers, primary care managers, and policy-makers. Proximal health effects could include the adoption of improved clinical behaviors by providers; quality improvement and safety outcomes at the patient and facility levels; increased service utilization and effective coverage; positive and negative equity effects; and adverse or unintended effects. Examples of negative or unintended effects of performance management interventions have been reported in the literature, including gaming, shirking and cream-skimming 25, 44– 47.

- If sustained through time and effectively implemented, additional desirable outcomes from iterative cycles of innovation, measurement and improvement may include increased retention of the workforce; increased productivity and efficiency; or improved equity, among others.

- Continuous cycles of performance measurement and management would also lead to the emergence or reinforcement of organizational-level capabilities and resources that could sustain performance improvements at higher levels within the primary care system, leading, in some but not all instances, to reinforcing cycles of improvement and organizational learning.

- The reiteration of these reinforcing cycles would be a necessary condition for the sustained generation of organizational-level outcomes such as improved quality, patient safety, customer satisfaction and, distantly, population-level health outcomes. Outcomes for private sector organizations may include profits, market share, efficiency and productivity gains, and customer satisfaction, among others, but these have been excluded from this study.

The framework contains three additional elements that would generate interdependence and non-linearities in the behavior of a primary care performance measurement and management system and that would help explain how performance measurement and management systems could work or not, and why. These include 1) the recursive linkages among system elements, described in Figure 1 as bidirectional arrows which will likely generate feedback effects; 2) the dynamic interaction between context and system actors, which will likely introduce context-specific variations in the outcomes from performance management interventions; and, 3) the repetition of performance measurement and management cycles as a precondition for the generation of sustained change/improvement through time.

Also, given the well-known limits to the adoption and use of evidence by healthcare system actors at all levels 48– 53, the use of performance information is a critical, intermediate factor in the process of production of downstream outcomes. In ways that are similar to how the results of evaluation studies may or may not be used 54– 59, system actors’ use of performance information is oftentimes implicit in assumptions about how performance measurement and management systems are supposed to generate multi-level outcomes. Performance information use can be defined as “the assessments, decisions, or attitudes that primary care system actors and stakeholders hold towards the interventions that are the object of the PM system” 27.

Primary care system actors’ assessments, decisions or attitudes can be triggered or not in response to 1) the performance measurement and management interventions in use; and, 2) the contextual conditions in which they are embedded 41, 60– 64. For performance measurement and management systems to achieve desirable effects, the supply of performance information needs to be accompanied by individual and organizational decisions to act upon it. Unfortunately, production of the former does not always guarantee the achievement of the latter 40, 65. The assumption that adopting performance information will only have positive effects has also been shown not to hold in all cases 66– 68.

Context factors, or the environment or setting in which the proposed process of change is to be implemented, can exert influence through interactions that occur at multiple levels (individual, interpersonal, organizational, community and societal) within the primary care system. Such factors can facilitate or inhibit the effects of performance measurement and management systems and are exemplified by the composition and dynamics of the institutional primary care setting (policies, legislation, and sector-specific reforms, among others); the degree of autonomy or flexibility granted to primary care delivery actors to innovate and implement organizational changes; and by social and political pressures for transparency, accountability or social control, among others. System antecedents, such as experiences with previous institutional reforms, and the readiness for change in the primary care system, have also been shown to have effects on the acceptance and assimilation of performance improvements 69, 70. Finally, ancillary components like technical assistance, monitoring and evaluation, and training, among others, should also be considered as relevant factors that can contribute to or create obstacles to the generation of performance improvements 25, 37, 44.

Specific interventions and outcomes of interest

The conceptual frameworks outlined above inform the scope of this EGM. To define and describe the specific interventions and outcomes considered for inclusion, this study uses an adapted version of the taxonomy developed by the Effective Practice and Organization of Care (EPOC) 71. Within the general categories described in this taxonomy, the study will focus on three: 1) Implementation strategies; 2) Accountability arrangements; and, 3) Some types of financial arrangements.

Implementation strategies are defined as interventions designed to bring about changes in healthcare organization, the behavior of healthcare professionals or the use of health services by recipients 37. Financial arrangements refer to changes in how funds are collected, insurance schemes, how services are purchased, and the use of targeted financial incentives or disincentives 71. These two categories of interventions can operate at individual- (providers, managers, etc.) or organizational-levels (facilities, networks of care, local health systems, etc.).

Accountability interventions at individual, organizational, and community levels were also included as a separate category. Given the growing interest in values like people-centered care and the confluence of the latter with long-standing interest in community participation and citizen engagement, there has been an increase in the availability of evidence surrounding the policy relevance of social accountability interventions as a system of external control that can drive performance improvements in primary care delivery systems 72– 77. Government-driven performance reforms have also long focused on inducing accountability among healthcare providers using internal accountability interventions such as audit and feedback, supervision, and others. For the purposes of this study, accountability arrangements are defined as the organizational and institutional arrangements used by system actors within governments to steward the delivery of public services towards increased performance.

Within the three intervention categories of implementation strategies, accountability arrangements and financial arrangements, we identified fourteen different types of interventions. Table 1 summarizes these interventions and also indicates the level at which the interventions take place. We describe each intervention category in more detail below.

Table 1. Interventions of relevance to the evidence gap map.

| Intervention categories | Individual-level provider interventions | Organizational-level interventions | Societal, community-level interventions |
|---|---|---|---|
| Implementation strategies | Clinical practice guidelines; Reminders; In-service training; Continuous education; Supervision | Clinical incident reporting; Clinical practice guidelines in PHC facilities; Local opinion leaders; Continuous quality improvement (including lean management) | Not applicable |
| Accountability arrangements | Audit and feedback | Public release of performance information; Social accountability | |
| Financial arrangements | Performance-based financing (includes supply-side Results-Based Financing, Pay for Performance, and other provider incentives and rewards) | Performance-based financing (includes supply-side Results-Based Financing, Pay for Performance, and other facility-based incentives and rewards) | Not applicable |

Implementation strategies

In this category, we identified eight interventions of relevance: 1) In-service training, a form of positive behavior support aimed at increasing the capabilities of individual primary care system actors 78; 2) Reminders, manual or computerized interventions that prompt individual providers to perform an action during a clinical exchange and can include, among others, job aids, paper reminders, checklists, and computer decision support systems 71, 79– 83; 3) Clinical practice guidelines, or systematically developed statements to assist healthcare providers and patients to decide on appropriate health care for specific circumstances 71, 84– 88; 4) Continuous education, referring to courses, workshops, or other educational meetings aimed at increasing the technical competencies of primary care providers; 5) Clinical incident reporting, or systems for reporting critical incidents and adverse or undesirable effects as a means of improving the safety of healthcare delivery 33; 6) Local opinion leaders, referring to the identification and use of local opinion leaders to promote good clinical practices 31, 89; 7) Continuous quality improvement, defined as the iterative process to review and improve care that includes involvement of healthcare teams, analysis of a process or system, a structured process improvement method or problem-solving approach, and use of data analysis to assess changes 71. This category will include lean management as one of the approaches used to improve efficiency and quality in service provider organizations 90– 94; and, 8) Supervision, defined as routine control visits by senior primary care staff to providers and facilities 95– 101.

Accountability arrangements

In this category we included the following three interventions: 1) Audit and feedback, defined as a summary of primary care provider or facility performance over a specified period of time, given in a written, electronic, or verbal format; such interventions can occur at individual provider as well as at organizational, facility level 102– 107; 2) Public release of performance data, defined as arrangements to inform the public about the performance of primary care providers or facilities in written or electronic formats; and, 3) Social accountability interventions, defined as an accountability arrangement in which community members and/or civil society organizations are involved in the monitoring of performance of primary care providers or facilities 77.

Financial arrangements

There are many variations of this type of intervention, and their definitions are contested. The interventions of interest to this study fall under the general heading of Performance-Based Financing (PBF) but can also include Results-Based Financing (RBF), Pay-for-Performance (PFP), and the use of provider rewards and incentives. For precision, we include the definitions developed by Musgrove 108 for these terms:

- Results-based financing refers to any program that rewards the delivery of one or more outputs or outcomes by one or more incentives, financial or otherwise, upon verification that the agreed-upon result has actually been delivered. Incentives may be directed to service providers (supply side), program beneficiaries (demand side) or both. Payments or other rewards are not used for recurrent inputs, although there may be supplemental investment financing of some inputs, including training and equipment to enhance capacity or quality; and they are not made unless and until results or performance are satisfactory.

- Performance-based financing is a form of RBF distinguished by three conditions. Incentives are directed only to providers, not beneficiaries; awards are purely financial--payment is by fee for service for specified services; and payment depends explicitly on the degree to which services are of approved quality, as specified by protocols for processes or outcomes.

- Pay-for-performance, performance-based payment and performance-based incentives can all be considered synonyms for RBF. Performance in these labels means the same thing as results, and payment means the same thing as financing.

Outcomes included in the evidence gap map

Outcomes were categorized following the guidelines developed for EPOC systematic reviews and adapted for research on performance management in primary care systems in LMIC. Relevant outcomes are therefore those that can be actionable for the intended users: research groups, funding agencies, and performance measurement and management practitioners in primary care systems in LMIC. Based on these considerations outcomes of interest will be wide in scope; can occur across short- and long-term timeframes; can be observable at various levels within a system (individual, organizational, social); and, can include desirable as well as undesirable, adverse effects. The priority-setting exercise that will follow the completion of this EGM may result in the identification of primary and secondary outcomes; at this stage, however, the study aims to scope the largest number of relevant outcomes within available operational constraints.

The main outcome categories included in this EGM are: 1) provider and managerial outputs and outcomes, defined as individual, provider and managerial staff effects, and exemplified by changes in workload, work morale, stress, burnout, sick leave, and staff turnover; 2) patient outcomes, defined as changes in health status or in patient health behaviours; 3) organizational outcomes, defined as organizational-level effects within and across facilities and networks of primary care such as quality of care process improvements, patient satisfaction, perceived quality of care, workforce retention, organizational culture, and unintended outcomes (gaming, shirking, shaming, data falsification, etc.); 4) population-level outputs and outcomes, defined as aggregate health and equity effects accruing to defined populations, including utilization of specific primary care services (for instance, number of antenatal care visits, institutional deliveries, etc.), coverage of services (such as the proportion of pregnant women receiving antenatal care, the proportion of pregnant women delivering in facilities, or the coverage rate of specific vaccines), access to primary care services (for instance, waiting times), adverse health effects or harm, health equity effects, and unintended health effects; and, 5) social outcomes, defined as non-health, social, economic, or cultural effects affecting defined populations, such as changes in community participation, non-health equity effects, non-health adverse effects or harm, and other unintended social outcomes. Table 2 lists each outcome category and provides examples of specific types of results within each category.

Table 2. Outcomes of relevance to users of the evidence gap map.

| Provider and managerial outputs and outcomes | Patient outcomes | Organizational outcomes | Population health outputs and outcomes | Social outcomes |
|---|---|---|---|---|
| Workload; Work morale; Stress; Burnout; Sick leave; Staff turnover | Health status outcomes: a) Physical health and treatment outcomes such as mortality and morbidity; b) Psychological health and wellbeing; c) Psychosocial outcomes such as quality of life, social activities. Health behaviors: adherence by patients to treatment or care plans and/or health-seeking behaviors. Unintended patient outcomes | Quality of care process improvements; Adherence to recommended practice or guidelines; Patient satisfaction; Perceived quality of care; Workforce retention; Changes in organizational culture; Unintended organizational outcomes | Utilization of specific services (example: number of antenatal visits); Coverage of specific services or interventions (example: proportion of pregnant women receiving antenatal care; proportion of pregnant women delivering in facilities; coverage rate of specific vaccines); Access to primary care services (example: waiting times); Health equity effects; Adverse health effects or harm; Unintended population outcomes | Community participation; Other equity effects; Unintended social outcomes |

Adapted from: Cochrane Effective Practice and Organization of Care (EPOC). What outcomes should be reported in EPOC reviews? EPOC resources for review authors, 2017.

Methods

Overall approach

The team will follow the methodology to produce evidence gap maps developed by 3ie 109, 110. The methodology was developed as a tool to systematically map evidence and research gaps on intervention effects for a broad topic area. In doing so, EGMs can help inform strategic use of resources for new research by identifying ‘absolute gaps’ where there are few or no available impact evaluations, and ‘synthesis gaps’ where there are clusters of impact evaluations but no available high-quality systematic reviews. By making existing studies easily available to researchers and describing the broad characteristics of the evidence base, the EGM can also inform the methods and design of future studies. EGMs may also facilitate the use of evidence to inform decisions by providing collections of systematic reviews that are critically appraised and ready for use by various decision makers. The methods used to develop EGMs are informed by systematic approaches to evidence synthesis and review and include key characteristics such as explicit inclusion/exclusion criteria and a systematic and transparent approach to study identification, data extraction and analysis. We describe our methods in more detail below.
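The distinction between ‘absolute gaps’ and ‘synthesis gaps’ can be illustrated with a minimal sketch. It is not part of the 3ie methodology: the record layout, the function names and, in particular, the cut-off `few` used to operationalize "few or no" impact evaluations are all assumptions made for illustration, since the protocol does not fix a number.

```python
from collections import Counter

# Hypothetical coded records: (intervention, outcome category, study type).
# "IE" = impact evaluation, "SR_HQ" = high-quality systematic review.
records = [
    ("Audit and feedback", "Organizational outcomes", "IE"),
    ("Audit and feedback", "Organizational outcomes", "IE"),
    ("Audit and feedback", "Organizational outcomes", "IE"),
    ("Supervision", "Patient outcomes", "IE"),
    ("Performance-based financing", "Population health outputs and outcomes", "IE"),
    ("Performance-based financing", "Population health outputs and outcomes", "IE"),
    ("Performance-based financing", "Population health outputs and outcomes", "IE"),
    ("Performance-based financing", "Population health outputs and outcomes", "SR_HQ"),
]


def classify_cell(n_ie, n_sr_hq, few=1):
    """Label one intervention-outcome cell of the map.

    `few` is an assumed cut-off for "few or no" impact evaluations.
    """
    if n_ie <= few:
        return "absolute gap"
    if n_sr_hq == 0:
        # A cluster of impact evaluations with no high-quality review.
        return "synthesis gap"
    return "evidence available"


def build_map(records, few=1):
    """Tally records per cell and classify every cell that has any record."""
    counts = Counter(records)
    cells = {(intervention, outcome) for intervention, outcome, _ in records}
    return {
        (i, o): classify_cell(counts[(i, o, "IE")], counts[(i, o, "SR_HQ")], few)
        for (i, o) in cells
    }


gap_map = build_map(records)
```

Only cells with at least one coded record appear in `gap_map`; completely empty cells of the intervention and outcome framework would have to be added from the full grid and would count as absolute gaps under this rule.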

Criteria for including and excluding studies

The process starts with developing the scope for the EGM by creating an evidence-informed framework that serves for the identification of the interventions and outcomes that are relevant for the domains under study. To do so we drew on several existing frameworks (cited above) and adapted these according to the scope of our work, which had been broadly defined to focus on performance management and measurement in a primary care setting.

Table 1 and Table 2 above define the final intervention and outcome inclusion criteria. To be included, studies have to assess the effect of at least one of these interventions on one of the outcomes.

Performance management and measurement interventions are of relevance across the health sector. To make our study manageable within operational constraints, we will focus on the supply side of primary care service delivery. We will exclude demand-side health interventions, such as conditional cash transfers, communication for behavior change, and social marketing, among others. We will also exclude public health interventions such as epidemiological surveillance. Finally, services delivered in hospitals will also be excluded.

Types of included study designs

We will include studies designed to assess the effects of interventions, and systematic reviews of such studies, as defined below:

- Systematic reviews: reviews explicitly described as systematic reviews, and reviews that describe the methods used for search, data collection and synthesis, as per the protocol for the 3ie database of systematic reviews (Snilstveit et al., 2018).

- Impact evaluations, defined as program evaluations or field experiments that use experimental or observational data to measure the effect of a program relative to a counterfactual representing what would have happened to the same group in the absence of the program. Specifically, we will include the following impact evaluation designs: Randomized controlled trials (RCTs) in which the intervention is randomly allocated at the individual or cluster level; Regression discontinuity designs (RDD); Controlled before-and-after studies using appropriate methods to control for selection bias and confounding, such as Propensity Score Matching (PSM) or other matching methods; Instrumental Variables Estimation or other methods using an instrumental variable, such as the Heckman Two Step approach; Difference-in-Differences (DD) or a fixed- or random-effects model with an interaction term between time and intervention for baseline and follow-up observations; Cross-sectional or panel studies with an intervention and comparison group using methods to control for selection bias and confounding as described above; and Interrupted time series (ITS), a type of study that uses observations at multiple time points before and after an intervention (the ‘interruption’). We will only include ITS studies that use at least three observations before and three observations after the intervention.
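For readers less familiar with the DD design listed above, the "interaction term between time and intervention" can be illustrated with the standard two-group, two-period specification. This is a textbook form given for orientation only, not a model prescribed by the protocol; the symbols are illustrative assumptions: $Y_{it}$ is the outcome for unit $i$ at time $t$, $T_i$ indicates the intervention group, $P_t$ indicates the follow-up period, and $\beta_3$ is the DD estimate of the intervention effect.

```latex
% Illustrative two-group, two-period difference-in-differences model
Y_{it} = \beta_0 + \beta_1 T_i + \beta_2 P_t + \beta_3 \,(T_i \times P_t) + \varepsilon_{it}

% beta_3 equals the change in the intervention group minus the change in the comparison group
\beta_3 = \bigl(\bar{Y}_{T=1,P=1} - \bar{Y}_{T=1,P=0}\bigr) - \bigl(\bar{Y}_{T=0,P=1} - \bar{Y}_{T=0,P=0}\bigr)
```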

Efficacy trials and systematic reviews of efficacy trials will be excluded. Broadly, efficacy trials determine whether an intervention produces the expected result under ideal or controlled circumstances, whereas effectiveness trials measure the degree of beneficial effect in “real world” settings. However, the distinction between these two types of studies is generally considered a continuum rather than a clear dichotomy, and in practice it can be difficult to categorize a trial clearly as either effectiveness or efficacy 111. We will therefore draw on the criteria developed by Snilstveit et al. 112 to aid the identification of efficacy trials for exclusion from the EGM. The adapted criteria are as follows:

- Research objective: Is the study primarily designed to determine to what extent a specific technique, technology, treatment, procedure or service works under ideal conditions, rather than to answer a question relevant to the roll-out of a large program?

- Providers: Is the intervention primarily delivered by the research study team rather than by primary health care personnel or trained laypersons who do not have extensive expertise?

- Delivery of intervention: Is the intervention delivered with a high degree of assurance of delivery of the treatment? (Is the delivery tightly monitored or supervised by the researcher following specific protocols? Is adherence to the treatment monitored closely with frequent follow-ups?)

Other inclusion and exclusion criteria

In addition, studies have to be conducted in a low- or middle-income country as defined by the World Bank. We will exclude studies exclusively focused on high-income countries, as well as systematic reviews focusing on a single country. Moreover, studies have to be published in 2000 or later, in any language. Studies published before 2000 will be excluded. We will include studies regardless of status (ongoing or completed) and type of publication, whether published (e.g. journal article, book chapter) or unpublished (e.g. report or working paper).

Search strategy

We have developed a systematic search strategy in collaboration with two information specialists, including a detailed search string for searching bibliographic databases and relevant portals. A sample strategy was developed for Medline (see Supplementary File 1). It includes a detailed explanation of the search terms used, which are based on an initial set of English search terms relevant to the main concepts of our inclusion criteria, including intervention, study design and population (low- and middle-income countries). These were combined using appropriate Boolean operators. All search strategies used in the study will be published along with study results.

We will identify potential studies using three strategies as listed below:

- Advanced search of the following bibliographic databases: Medline (Ovid), Embase (Ovid), CAB Global Health (Ovid), CINAHL (Ebsco), Cochrane Library, Scopus (Elsevier), and Econlit (Ovid);

- Search of key institutional databases, repositories of impact evaluations and systematic reviews, and other sources of grey literature, such as the International Initiative for Impact Evaluation Impact Evaluation and Systematic Review repositories; Cochrane Effective Practice and Organization of Care (EPOC); the Abdul Latif Jameel Poverty Action Lab (J-PAL); the World Bank’s Independent Evaluation Group; the Inter-American Development Bank repository; and the American Economic Association Register;

- Snowballing the references in appraised systematic reviews, citation tracking of included studies using Scopus, and contacting authors when required.

Procedures for screening and data extraction

Following the search, we will import all records into EPPI-Reviewer 4. After removing duplicates, we will combine manual screening and text mining to assess studies for inclusion at the title and abstract stage. To ensure consistent application of screening criteria, all screeners will assess the same random sample of 100 abstracts. Any discrepancies will be discussed within the team and inclusion criteria will be clarified if necessary. Following this initial set of 100, we will move to single screening with a “safety first” approach, whereby there is an option to mark unclear studies for review by a second reviewer 113.

Once all screeners have been trained, we will screen a random sample of 500 abstracts to train EPPI-Reviewer’s priority screening function. The priority screening function can be used at the title/abstract screening stage to prioritize the items most likely to be categorized as ‘to be included’ based on previously included documents. Using priority screening in this way allows includable records to be identified at an earlier stage in the review process, so that work can begin earlier on full-text screening and data extraction.

Depending on the number of search hits, we may also use EPPI-Reviewer’s auto-exclude function to exclude studies that have less than a ten percent probability score of inclusion. This function classifies unscreened studies into ten-percent intervals of probability of inclusion, based on keywords found in previously included and excluded studies.
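The banding and cut-off logic described above can be sketched in a few lines of Python. This is only an illustration of the rule as stated in the text; it does not reproduce EPPI-Reviewer's internal classifier or its interface, and the function names, record identifiers and score values are hypothetical.

```python
# Illustrative sketch only: the scores would come from the screening software's
# classifier; here they are hypothetical values between 0 and 1.

def probability_band(score: float) -> int:
    """Return the ten-percent band (0 to 9) that a probability score falls into."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be between 0 and 1")
    # A score of exactly 1.0 is placed in the top band (90 to 100 percent).
    return min(int(score * 10), 9)


def split_auto_exclude(scores: dict[str, float], threshold: float = 0.10):
    """Split record ids into (kept for manual screening, auto-excluded)."""
    kept, excluded = [], []
    for record_id, score in scores.items():
        if score < threshold:
            excluded.append(record_id)
        else:
            kept.append(record_id)
    return kept, excluded


if __name__ == "__main__":
    hypothetical_scores = {"rec-1": 0.04, "rec-2": 0.10, "rec-3": 0.55}
    kept, excluded = split_auto_exclude(hypothetical_scores)
    print("screen manually:", kept)
    print("auto-excluded:", excluded)
```

Records scoring exactly at the threshold are kept for manual screening in this sketch, which matches the "less than" wording of the rule.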

Because of time and resource constraints we will not conduct independent double screening of all studies that will be considered at full text. To minimize bias and human error we will however double screen a sample of studies at the beginning to ensure inter-rater reliability between screeners, as described for the title/abstract stage above. In addition, we will take a “safety first” approach at the title and abstract stage, whereby any studies where the first screener is uncertain about inclusion/exclusion will be screened by a second person 113. All studies identified for inclusion will be effectively screened by a second/third person during data extraction.

We will use a standardized data extraction form in Microsoft Excel to systematically extract meta-data from all included studies, including bibliographic details, intervention type and description, outcome type and definition, study design, and geographical location. We will also assess the extent to which studies incorporate a consideration of equity, and extract information about whether and how studies consider vulnerable and marginalized groups. To do so we will draw on the PROGRESS-Plus framework 114, which outlines dimensions that may give rise to inequity in either access to services or final health outcomes. In particular, we will consider the following dimensions: place of residence (location of household, such as distance from health facility, or rural/urban), ethnicity, culture and language, gender, socioeconomic status, and other vulnerable groups (an open category to be used iteratively to record details of any vulnerable groups identified during coding).

For each study we will assess if they consider equity for any of these dimensions, and if so how, giving the following options: 1) Contains equity-sensitive analytical frameworks/theory of change; 2) Uses equity-sensitive research questions; 3) Follows equity-sensitive methodologies (sub-group analysis); 4) Contains equity-sensitive methodologies: additional study components to assess how and why (including mixed and qualitative methods); 5) Uses any other methodology that is equity sensitive that is not covered by the other options; 6) Uses equity-informed research processes (who are the respondents, who collects data, when, where etc.); 7) Addresses interventions targeting specific vulnerable groups - Looks at the impact of an intervention that targets specific population groups; and/or, 8) Measures effects on an inequality outcome.

For multi-arm trials testing different interventions, each comparison arm will be treated as an individual study for the coding of interventions. We will report both number of studies and number of papers identified. In addition, we will report on the number of linked studies. Studies will be considered linked if there are multiple papers by the same study team on the same impact evaluation reporting different outcomes or different follow-up periods. If they report the same information, the study will be excluded as a duplicate.
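One way to operationalize the counting rules above is sketched below. It is a minimal illustration under stated assumptions: the field names (`paper_id`, `evaluation_id`, `reports`) are hypothetical and not taken from the coding tools, "same information" is interpreted as an identical set of outcome and follow-up pairs, and the treatment of multi-arm trials is left out for brevity.

```python
from collections import defaultdict

# Hypothetical record layout: each paper names the impact evaluation it belongs
# to and the set of (outcome, follow-up) pairs it reports.

def summarize(papers):
    """Count studies, papers and linked studies, dropping duplicate reports."""
    by_evaluation = defaultdict(list)
    duplicates = []
    for paper in papers:
        kept = by_evaluation[paper["evaluation_id"]]
        # A paper repeating exactly what an earlier paper on the same
        # evaluation reports is treated as a duplicate and excluded.
        if any(k["reports"] == paper["reports"] for k in kept):
            duplicates.append(paper["paper_id"])
        else:
            kept.append(paper)
    return {
        "studies": len(by_evaluation),
        "papers": sum(len(group) for group in by_evaluation.values()),
        # An evaluation with more than one distinct paper counts as linked.
        "linked_studies": sum(1 for group in by_evaluation.values() if len(group) > 1),
        "duplicates": duplicates,
    }


if __name__ == "__main__":
    example = [
        {"paper_id": "P1", "evaluation_id": "E1", "reports": frozenset({("coverage", "12m")})},
        {"paper_id": "P2", "evaluation_id": "E1", "reports": frozenset({("coverage", "24m")})},
        {"paper_id": "P3", "evaluation_id": "E1", "reports": frozenset({("coverage", "12m")})},
        {"paper_id": "P4", "evaluation_id": "E2", "reports": frozenset({("waiting times", "6m")})},
    ]
    print(summarize(example))
```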

A full list of descriptive data to be extracted is included in the coding tools in Supplementary File 2. We will begin the coding process with a training session for the whole research team. This training will involve coding one included systematic review and one included impact evaluation as a group to familiarize all coders with the coding tools. The entire research team will then independently pilot the coding tool on the same small subset of studies to ensure consistency in coding and to resolve any issues or ambiguities. We will start this process with two systematic reviews and two impact evaluations, and test an additional small subset if issues or discrepancies remain in the application of the tool. Data extraction will then be completed by a single coder. To minimize bias and human error, however, we will review the data extraction for a sample of studies.

For the appraisal of systematic reviews, we will follow the adapted SURE checklist available in the 3ie systematic review database protocol. This checklist is based on the SURE Collaboration checklist for deciding how much confidence to place in the findings of a systematic review, and gives each systematic review a rating of high, medium or low confidence (Supplementary File 3). All systematic reviews will be appraised by at least two people; a user-friendly summary will be generated for all reviews of high confidence, and the results will be shared with study authors before publication of the EGM results.

Statistical analysis plan and EGM visualization

Upon completion of the data collection, findings will be initially presented in a visual interactive format using 3ie’s custom-built platform and accompanied by a detailed report.

The visual, online EGM will be built by first transforming the intervention-outcome framework into a matrix and then uploading CSV files with data for all the studies included in the map (intervention, outcome, study type, impact evaluation study design, systematic review confidence level, geographical location and equity focus). These data will automatically populate the framework matrix to indicate the relative availability of evidence. The populated matrix will be used to identify and describe absolute evidence gaps (no studies) and synthesis gaps (sizeable impact evaluation literature, but no high-confidence systematic review). In addition, the map will contain descriptions of the characteristics of the evidence using graphs, figures and descriptive statistics.
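The gap definitions in the preceding paragraph can be illustrated with a short Python sketch that populates an intervention-outcome matrix from CSV rows and labels each cell. It is not the 3ie platform's implementation: the column names, the sample rows and, in particular, the numeric threshold for a "sizeable" impact evaluation literature are assumptions made for the example, since the protocol does not specify one.

```python
import csv
import io
from collections import Counter

# Assumption: the protocol does not define "sizeable"; 5 is a placeholder.
SIZEABLE_IE_CLUSTER = 5

# Hypothetical CSV layout and rows, for illustration only.
SAMPLE_CSV = """intervention,outcome,study_type,sr_confidence
audit and feedback,coverage,IE,
audit and feedback,coverage,IE,
audit and feedback,waiting times,IE,
audit and feedback,waiting times,SR,high
supervision,waiting times,SR,low
"""


def classify_cells(rows, interventions, outcomes, sizeable=SIZEABLE_IE_CLUSTER):
    """Label every intervention-outcome cell of the framework matrix."""
    all_studies, impact_evals, high_conf_reviews = Counter(), Counter(), Counter()
    for row in rows:
        cell = (row["intervention"], row["outcome"])
        all_studies[cell] += 1
        if row["study_type"] == "IE":
            impact_evals[cell] += 1
        elif row["study_type"] == "SR" and row["sr_confidence"] == "high":
            high_conf_reviews[cell] += 1

    labels = {}
    for intervention in interventions:
        for outcome in outcomes:
            cell = (intervention, outcome)
            if all_studies[cell] == 0:
                labels[cell] = "absolute gap"
            elif impact_evals[cell] >= sizeable and high_conf_reviews[cell] == 0:
                labels[cell] = "synthesis gap"
            else:
                labels[cell] = "evidence available"
    return labels


if __name__ == "__main__":
    rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
    labels = classify_cells(
        rows,
        interventions=["audit and feedback", "supervision"],
        outcomes=["coverage", "waiting times"],
        sizeable=2,  # lowered so the tiny sample shows a synthesis gap
    )
    for cell, label in labels.items():
        print(cell, "->", label)
```

Iterating over the full framework rather than over the rows is a deliberate choice: cells with no studies never appear in the data, so they can only be found by walking the matrix itself.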

Discussion

Evidence gap maps provide collections of evidence in specific development sectors or thematic areas 110. They adopt systematic methods to identify and map evidence from systematic reviews and impact evaluations. They are structured around a framework of interventions and outcomes of relevance to any given sector, and this framework is used to provide a graphical display of the volume of ongoing and completed impact evaluations and systematic reviews, with a rating of the confidence in the findings from systematic reviews. EGMs can be used to identify areas where new or more rigorous research evidence is needed, and to inform the decisions of policymakers and development practitioners as policies and programs are designed.

Current best practice in the design of evidence gap maps recommends that EGMs have a pre-specified protocol, have a systematic search strategy, contain precise and clear criteria for inclusion and exclusion, and systematically report all eligible studies. This protocol is the first step in ensuring compliance with such practices. As the visual interface is built, a final report will be submitted for peer-reviewed publication and will include a summary of the findings from the evidence gap analysis and recommendations for a prioritized research agenda on performance measurement and management in LMICs.

This evidence gap map aims to identify and describe the existing evidence on the effects of interventions in the area of performance measurement and management in primary care delivery systems in LMICs and, also, to provide easy access to the best available existing evidence on intervention effects in this area. As a result, the EGM will inform the development of a prioritized research agenda for primary care delivery systems in LMICs.

Dissemination of findings

Findings will be presented at the 5th Global Health System Symposium in October 2018, after which a paper will be submitted for peer-reviewed publication. We will also publish the results in the form of an interactive Evidence Gap Map, which will be made freely available from the 3ie website. We will use our institutional channels to disseminate our findings as widely as possible, including via our websites, social media platforms and events beyond the Global Health System Symposium.

Study status

At the time of submission of this paper, the framework, search strategies and data extraction tools included in this protocol have been completed. Data collection, analysis and development of the graphical interface will be completed by September 2018. A paper summarizing study results and implications will be submitted by December 2018.

Data availability

No data is associated with this article.

Funding Statement

Bill and Melinda Gates Foundation [OPP1154415, OPP1149078]. This work was supported by the Bill & Melinda Gates Foundation through grants to George Washington University [OPP1154415], and Ariadne Labs through Brigham and Women's Hospital [OPP1149078].

The funders had no role in study design, data collection and analysis, decision to publish, or preparation of the manuscript.

[version 2; peer review: 2 approved]

Supplementary material

Supplementary File 1 – Medline search strategy

Supplementary File 2 – Coding tools containing full list of descriptive data to be extracted

Supplementary File 3 – Systematic Reviews critical appraisal checklist

References

- 1. Bitton A, Ratcliffe HL, Veillard JH, et al.: Primary Health Care as a Foundation for Strengthening Health Systems in Low- and Middle-Income Countries. J Gen Intern Med. 2017;32(5):566–71. doi: 10.1007/s11606-016-3898-5

- 2. Kruk ME, Porignon D, Rockers PC, et al.: The contribution of primary care to health and health systems in low- and middle-income countries: a critical review of major primary care initiatives. Soc Sci Med. 2010;70(6):904–11. doi: 10.1016/j.socscimed.2009.11.025

- 3. Gates B: The next epidemic--lessons from Ebola. N Engl J Med. 2015;372(15):1381–4. doi: 10.1056/NEJMp1502918

- 4. Borgonovi E, Anessi-Pessina E, Bianchi C: Outcome-Based Performance Management in the Public Sector. Bianchi C, editor. Cham, Switzerland: Springer; 2017. doi: 10.1007/978-3-319-57018-1

- 5. Rajala T, Laihonen H, Vakkuri J: Shifting from Output to Outcome Measurement in Public Administration-Arguments Revisited. In: Borgonovi E, Anessi-Pessina E, Bianchi C, editors. Outcome-Based Performance Management in the Public Sector. System Dynamics for Performance Management. Cham, Switzerland: Springer; 2018;3–23. doi: 10.1007/978-3-319-57018-1_1

- 6. Mohammed K, Nolan MB, Rajjo T, et al.: Creating a Patient-Centered Health Care Delivery System: A Systematic Review of Health Care Quality From the Patient Perspective. Am J Med Qual. 2016;31(1):12–21. doi: 10.1177/1062860614545124

- 7. World Health Organization: People-Centered Health Care: A Policy Framework. Manila, Philippines: World Health Organization; 2007;20.

- 8. Talbot C: Theories of performance: Organizational and service improvement in the public domain. Oxford University Press; 2010.

- 9. Neely AD, Adams C, Kennerley M: The performance prism: The scorecard for measuring and managing business success. London: Financial Times/Prentice Hall; 2002.

- 10. Burke WW, Litwin GH: A causal model of organizational performance and change. J Manage. 1992;18(3):523–45. doi: 10.1177/014920639201800306

- 11. Kaplan RS, Norton DP: The balanced scorecard: translating strategy into action. Boston MA: Harvard Business Press; 1996.

- 12. World Bank: World Bank Approach to Public Sector Management 2011–2020. Better results from public sector institutions. Working draft Policy dialogue document. Washington DC: The World Bank; 2011.

- 13. World Bank: World development indicators. Washington DC: World Bank; 1999.

- 14. World Health Organization: The world health report 2000. Health systems: improving performance. Geneva, Switzerland: World Health Organization; 2000.

- 15. Program for International Student Assessment: Knowledge and skills for life: First results from the OECD Programme for International Student Assessment (PISA) 2000. Paris, France: Organisation for Economic Co-operation and Development; 2001.

- 16. Behn RD: Why measure performance? Different purposes require different measures. Public Admin Rev. 2003;63(5):586–606. doi: 10.1111/1540-6210.00322

- 17. Smith PC: Measuring outcome in the public sector. Taylor & Francis; 1996.

- 18. Smith PC, Mossialos E, Papanicolas I: Performance measurement for health system improvement: experiences, challenges and prospects. Geneva: WHO; 2008.

- 19. Moynihan DP: Explaining the Implementation of Performance Management Reforms. The Dynamics of Performance Management. Washington DC: Georgetown University Press; 2008.

- 20. Kelman S, Friedman JN: Performance improvement and performance dysfunction: an empirical examination of distortionary impacts of the emergency room wait-time target in the English National Health Service. J Public Adm Res Theory. 2009;19(4):917–46. doi: 10.1093/jopart/mun028

- 21. Bevan G: Setting targets for health care performance: lessons from a case study of the English NHS. Natl Inst Econ Rev. 2006;197(1):67–79. doi: 10.1177/0027950106070036

- 22. Bevan G, Hood C: What’s measured is what matters: targets and gaming in the English public health care system. Public Admin. 2006;84(3):517–38. doi: 10.1111/j.1467-9299.2006.00600.x

- 23. Bevan G, Wilson D: Does ‘naming and shaming’ work for schools and hospitals? Lessons from natural experiments following devolution in England and Wales. Public Money Manage. 2013;33(4):245–52. doi: 10.1080/09540962.2013.799801

- 24. Suthar AB, Nagata JM, Nsanzimana S, et al.: Performance-based financing for improving HIV/AIDS service delivery: a systematic review. BMC Health Serv Res. 2017;17(1):6. doi: 10.1186/s12913-016-1962-9

- 25. Witter S, Fretheim A, Kessy FL, et al.: Paying for performance to improve the delivery of health interventions in low- and middle-income countries. Cochrane Database Syst Rev. 2012;(2):CD007899. doi: 10.1002/14651858.CD007899.pub2

- 26. Pollitt C: Performance management 40 years on: a review. Some key decisions and consequences. Public Money Manage. 2018;38(3):167–74. doi: 10.1080/09540962.2017.1407129

- 27. Pollitt C: The logics of performance management. Evaluation. 2013;19(4):346–63. doi: 10.1177/1356389013505040

- 28. Cepiku D, Hinna A, Scarozza D, et al.: Performance information use in public administration: an exploratory study of determinants and effects. Journal of Management & Governance. 2017;21(4):963–91. doi: 10.1007/s10997-016-9371-3

- 29. Belle N, Cantarelli P: What Causes Unethical Behavior? A Meta-Analysis to Set an Agenda for Public Administration Research. Public Admin Rev. 2017;77(3):327–39. doi: 10.1111/puar.12714

- 30. Parmelli E, Flodgren G, Beyer F, et al.: The effectiveness of strategies to change organisational culture to improve healthcare performance: a systematic review. Implement Sci. 2011;6(1):33. doi: 10.1186/1748-5908-6-33

- 31. Flodgren G, Parmelli E, Doumit G, et al.: Local opinion leaders: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2011;(8):CD000125. doi: 10.1002/14651858.CD000125.pub4

- 32. Ivers N, Jamtvedt G, Flottorp S, et al.: Audit and feedback: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2012;(6):CD000259. doi: 10.1002/14651858.CD000259.pub3

- 33. Parmelli E, Flodgren G, Fraser SG, et al.: Interventions to increase clinical incident reporting in health care. Cochrane Database Syst Rev. 2012;(8):CD005609. doi: 10.1002/14651858.CD005609.pub2

- 34. Imamura M, Kanguru L, Penfold S, et al.: A systematic review of implementation strategies to deliver guidelines on obstetric care practice in low- and middle-income countries. Int J Gynaecol Obstet. 2017;136(1):19–28. doi: 10.1002/ijgo.12005

- 35. Pantoja T, Opiyo N, Ciapponi A, et al.: Implementation strategies for health systems in low-income countries: an overview of systematic reviews (Protocol). Cochrane Database Syst Rev. 2014;(5):CD011086. doi: 10.1002/14651858.CD011086

- 36. Ciapponi A, Lewin S, Herrera CA, et al.: Delivery arrangements for health systems in low-income countries: an overview of systematic reviews. Cochrane Database Syst Rev. 2017;(9):CD011083. doi: 10.1002/14651858.CD011083.pub2

- 37. Pantoja T, Opiyo N, Lewin S, et al.: Implementation strategies for health systems in low-income countries: an overview of systematic reviews. Cochrane Database Syst Rev. 2017;9:CD011086. doi: 10.1002/14651858.CD011086.pub2

- 38. Wiysonge CS, Paulsen E, Lewin S, et al.: Financial arrangements for health systems in low-income countries: an overview of systematic reviews. Cochrane Database Syst Rev. 2017;9:CD011084. doi: 10.1002/14651858.CD011084.pub2

- 39. Munar W, Wahid SS, Curry L: Characterizing performance improvement in primary care systems in Mesoamerica: A realist evaluation protocol [version 1; referees: 2 approved, 1 approved with reservations]. Gates Open Res. 2018;2:1. doi: 10.12688/gatesopenres.12782.1

- 40. Kroll A: Drivers of Performance Information Use: Systematic Literature Review and Directions for Future Research. Public Perform Manag. 2015;38(3):459–86. doi: 10.1080/15309576.2015.1006469

- 41. Kroll A: Exploring the link between performance information use and organizational performance: A contingency approach. Public Perform Manag. 2015;39(1):7–32. doi: 10.1080/15309576.2016.1071159

- 42. Berwick DM: The science of improvement. JAMA. 2008;299(10):1182–4. doi: 10.1001/jama.299.10.1182

- 43. Lemire S, Christie CA, Inkelas M: The Methods and Tools of Improvement Science. New Directions for Evaluation. 2017;2017(153):23–33. doi: 10.1002/ev.20235

- 44. Witter S, Toonen J, Meessen B, et al.: Performance-based financing as a health system reform: mapping the key dimensions for monitoring and evaluation. BMC Health Serv Res. 2013;13(1):367. doi: 10.1186/1472-6963-13-367

- 45. Fretheim A, Witter S, Lindahl AK, et al.: Performance-based financing in low- and middle-income countries: still more questions than answers. Bull World Health Organ. 2012;90(8):559–559A. doi: 10.2471/BLT.12.106468

- 46. Renmans D, Paul E, Dujardin B: Analysing Performance-Based Financing through the Lenses of the Principal-Agent Theory. Antwerp, Belgium: Universiteit Antwerpen, Institute of Development Policy and Management (IOB); 2016. Contract No.: 2016.14.

- 47. Paul E, Lamine Dramé M, Kashala JP, et al.: Performance-Based Financing to Strengthen the Health System in Benin: Challenging the Mainstream Approach. Int J Health Policy Manag. 2017;7(1):35–47. doi: 10.15171/ijhpm.2017.42

- 48. Punton M, Hagerman K, Brown C, et al.: How can capacity-development promote evidence-informed policy making? Literature review for the Building Capacity to Use Research Evidence (BCURE) Programme. Brighton, UK: ITAD Ltd.; 2016.

- 49. Oxman AD, Lavis JN, Lewin S, et al.: SUPPORT Tools for evidence-informed health Policymaking (STP) 1: What is evidence-informed policymaking? Health Res Policy Syst. 2009;7 Suppl 1:S1. doi: 10.1186/1478-4505-7-S1-S1

- 50. Gonzales JJ, Ringeisen HL, Chambers DA: The tangled and thorny path of science to practice: Tensions in interpreting and applying “evidence”. Clinical Psychology: Science and Practice. 2002;9(2):204–9. doi: 10.1093/clipsy.9.2.204

- 51. Wickremasinghe D, Hashmi IE, Schellenberg J, et al.: District decision-making for health in low-income settings: a systematic literature review. Health Policy Plan. 2016;31 suppl 2:ii12–ii24. doi: 10.1093/heapol/czv124

- 52. McCormack B, Kitson A, Harvey G, et al.: Getting evidence into practice: the meaning of 'context'. J Adv Nurs. 2002;38(1):94–104. doi: 10.1046/j.1365-2648.2002.02150.x

- 53. Murthy L, Shepperd S, Clarke MJ, et al.: Interventions to improve the use of systematic reviews in decision-making by health system managers, policy makers and clinicians. Cochrane Database Syst Rev. 2012;(9):CD009401. doi: 10.1002/14651858.CD009401.pub2

- 54. Christie CA: Reported influence of evaluation data on decision makers’ actions: An empirical examination. Am J Eval. 2007;28(1):8–25. doi: 10.1177/1098214006298065

- 55. Weiss CH: Have we learned anything new about the use of evaluation? Am J Eval. 1998;19(1):21–33. doi: 10.1016/S1098-2140(99)80178-7

- 56. Henry GT, Mark MM: Beyond use: Understanding evaluation’s influence on attitudes and actions. Am J Eval. 2003;24(3):293–314. doi: 10.1177/109821400302400302

- 57. Mark MM, Henry GT: The Mechanisms and Outcomes of Evaluation Influence. Evaluation. 2004;10(1):35–57. doi: 10.1177/1356389004042326

- 58. Cousins JB, Goh SC, Elliott CJ, et al. : Framing the capacity to do and use evaluation. New Directions for Evaluation. 2014;141(141):7–23. 10.1002/ev.20076 [DOI] [Google Scholar]

- 59. Cousins JB, Goh SC, Elliott C, et al. : Government and voluntary sector differences in organizational capacity to do and use evaluation. Eval Program Plann. 2014;44(1):1–13. 10.1016/j.evalprogplan.2013.12.001 [DOI] [PubMed] [Google Scholar]

- 60. Kok MC, Kane SS, Tulloch O, et al. : How does context influence performance of community health workers in low- and middle-income countries? Evidence from the literature. Health Res Policy Syst. 2015;13:13. 10.1186/s12961-015-0001-3 [DOI] [PMC free article] [PubMed] [Google Scholar]

- 61. Pollitt C, editor: Context in public policy and management: The missing link?Cheltenham, UK: Edward Elgar Publishing;2013. Reference Source [Google Scholar]

- 62. Kaplan HC, Brady PW, Dritz MC, et al. : The influence of context on quality improvement success in health care: a systematic review of the literature. Milbank Q. 2010;88(4):500–59. 10.1111/j.1468-0009.2010.00611.x

- 63. Lamarche P, Maillet L: The performance of primary health care organizations depends on interdependences with the local environment. J Health Organ Manag. 2016;30(6):836–54. 10.1108/JHOM-09-2015-0150

- 64. Mafuta EM, Hogema L, Mambu TN, et al. : Understanding the local context and its possible influences on shaping, implementing and running social accountability initiatives for maternal health services in rural Democratic Republic of the Congo: a contextual factor analysis. BMC Health Serv Res. 2016;16(1):640. 10.1186/s12913-016-1895-3

- 65. Moynihan DP, Pandey SK: The big question for performance management: Why do managers use performance information? J Public Adm Res Theory. 2010;20(4):849–66. 10.1093/jopart/muq004

- 66. Sterman JD, Repenning NP, Kofman F: Unanticipated side effects of successful quality programs: Exploring a paradox of organizational improvement. Manage Sci. 1997;43(4):503–21. 10.1287/mnsc.43.4.503

- 67. Repenning NP, Sterman JD: Capability Traps and Self-Confirming Attribution Errors in the Dynamics of Process Improvement. Adm Sci Q. 2002;47(2):265–95. 10.2307/3094806

- 68. Hovmand PS, Gillespie DF: Implementation of Evidence-Based Practice and Organizational Performance. J Behav Health Serv Res. 2010;37(1):79–94. 10.1007/s11414-008-9154-y

- 69. Greenhalgh T, Robert G, MacFarlane F, et al. : Diffusion of Innovations in Health Service Organisations: A Systematic Literature Review. Malden MA: Blackwell Publishing; 2005.

- 70. Greenhalgh T, Robert G, Macfarlane F, et al. : Diffusion of innovations in service organizations: systematic review and recommendations. Milbank Q. 2004;82(4):581–629. 10.1111/j.0887-378X.2004.00325.x

- 71. EPOC: EPOC Taxonomy. The Cochrane Collaboration;2015.

- 72. Bauhoff S, Rabinovich L, Mayer LA: Developing citizen report cards for primary health care in low and middle-income countries: Results from cognitive interviews in rural Tajikistan. PLoS One. 2017;12(10):e0186745. 10.1371/journal.pone.0186745

- 73. Besley T, Persson T: Pillars of prosperity: The political economics of development clusters. Princeton University Press;2011.

- 74. Björkman M, Svensson J: Power to the people: evidence from a randomized field experiment on community-based monitoring in Uganda. Q J Econ. 2009;124(2):735–69. 10.1162/qjec.2009.124.2.735

- 75. Danhoundo G, Nasiri K, Wiktorowicz ME: Improving social accountability processes in the health sector in sub-Saharan Africa: a systematic review. BMC Public Health. 2018;18(1):497. 10.1186/s12889-018-5407-8

- 76. Gullo S, Galavotti C, Sebert Kuhlmann A, et al. : Effects of a social accountability approach, CARE's Community Score Card, on reproductive health-related outcomes in Malawi: A cluster-randomized controlled evaluation. PLoS One. 2017;12(2):e0171316. 10.1371/journal.pone.0171316

- 77. Molina E, Carella L, Pacheco A, et al. : Community monitoring interventions to curb corruption and increase access and quality in service delivery: a systematic review. J Dev Effect. 2017;9(4):462–99. 10.1080/19439342.2017.1378243

- 78. Dunlap G, Hieneman M, Knoster T, et al. : Essential elements of inservice training in positive behavior support. J Posit Behav Interv. 2000;2(1):22–32. 10.1177/109830070000200104

- 79. Arditi C, Rège-Walther M, Durieux P, et al. : Computer-generated reminders delivered on paper to healthcare professionals: effects on professional practice and healthcare outcomes. Cochrane Database Syst Rev. 2017;7: CD001175. 10.1002/14651858.CD001175.pub4

- 80. Pantoja T, Romero A, Green ME, et al. : Manual paper reminders: effects on professional practice and health care outcomes. Cochrane Database Syst Rev. 2004; (2). 10.1002/14651858.CD001174.pub2

- 81. Shojania KG, Jennings A, Mayhew A, et al. : The effects of on-screen, point of care computer reminders on processes and outcomes of care. Cochrane Database Syst Rev. 2009; (3): CD001096. 10.1002/14651858.CD001096.pub2

- 82. Josephson E, Gergen J, Coe M, et al. : How do performance-based financing programmes measure quality of care? A descriptive analysis of 68 quality checklists from 28 low- and middle-income countries. Health Policy Plan. 2017;32(8):1120–1126. 10.1093/heapol/czx053

- 83. McConnell M, Ettenger A, Rothschild CW, et al. : Can a community health worker administered postnatal checklist increase health-seeking behaviors and knowledge?: evidence from a randomized trial with a private maternity facility in Kiambu County, Kenya. BMC Pregnancy Childbirth. 2016;16(1):136. 10.1186/s12884-016-0914-z

- 84. Institute of Medicine (IOM): Crossing the Quality Chasm: A New Health System for the 21st Century. Washington, DC: Institute of Medicine;2001. 10.17226/10027

- 85. Lau R, Stevenson F, Ong BN, et al. : Achieving change in primary care--effectiveness of strategies for improving implementation of complex interventions: systematic review of reviews. BMJ Open. 2015;5(12):e009993. 10.1136/bmjopen-2015-009993

- 86. Rowe SY, Kelly JM, Olewe MA, et al. : Effect of multiple interventions on community health workers' adherence to clinical guidelines in Siaya district, Kenya. Trans R Soc Trop Med Hyg. 2007;101(2):188–202. 10.1016/j.trstmh.2006.02.023

- 87. Stanback J, Griffey S, Lynam P, et al. : Improving adherence to family planning guidelines in Kenya: an experiment. Int J Qual Health Care. 2007;19(2):68–73. 10.1093/intqhc/mzl072

- 88. Rusa L, Ngirabega Jde D, Janssen W, et al. : Performance-based financing for better quality of services in Rwandan health centres: 3-year experience. Trop Med Int Health. 2009;14(7):830–7. 10.1111/j.1365-3156.2009.02292.x

- 89. Salam RA, Lassi ZS, Das JK, et al. : Evidence from district level inputs to improve quality of care for maternal and newborn health: interventions and findings. Reprod Health. 2014;11 Suppl 2:S3. 10.1186/1742-4755-11-S2-S3

- 90. Hung D, Martinez M, Yakir M, et al. : Implementing a Lean Management System in Primary Care: Facilitators and Barriers From the Front Lines. Qual Manag Health Care. 2015;24(3):103–8. 10.1097/QMH.0000000000000062

- 91. Hung DY, Harrison MI, Martinez MC, et al. : Scaling Lean in primary care: impacts on system performance. Am J Manag Care. 2017;23(3):161–8.

- 92. Lawal AK, Rotter T, Kinsman L, et al. : Lean management in health care: definition, concepts, methodology and effects reported (systematic review protocol). Syst Rev. 2014;3(1):103. 10.1186/2046-4053-3-103

- 93. Poksinska BB, Fialkowska-Filipek M, Engström J: Does Lean healthcare improve patient satisfaction? A mixed-method investigation into primary care. BMJ Qual Saf. 2017;26(2):95–103. 10.1136/bmjqs-2015-004290

- 94. Rotter T, Plishka CT, Adegboyega L, et al. : Lean management in health care: effects on patient outcomes, professional practice, and healthcare systems. Cochrane Database Syst Rev. 2017; (11). 10.1002/14651858.CD012831

- 95. Leonard KL, Masatu MC: Changing health care provider performance through measurement. Soc Sci Med. 2017;181:54–65. 10.1016/j.socscimed.2017.03.041

- 96. Singh D, Negin J, Orach CG, et al. : Supportive supervision for volunteers to deliver reproductive health education: a cluster randomized trial. Reprod Health. 2016;13(1):126. 10.1186/s12978-016-0244-7

- 97. Magge H, Anatole M, Cyamatare FR, et al. : Mentoring and quality improvement strengthen integrated management of childhood illness implementation in rural Rwanda. Arch Dis Child. 2015;100(6):565–70. 10.1136/archdischild-2013-305863

- 98. Hill Z, Dumbaugh M, Benton L, et al. : Supervising community health workers in low-income countries--a review of impact and implementation issues. Glob Health Action. 2014;7(1): 24085. 10.3402/gha.v7.24085

- 99. Bosch-Capblanch X, Liaqat S, Garner P: Managerial supervision to improve primary health care in low- and middle-income countries. Cochrane Database Syst Rev. 2011; (9):CD006413. 10.1002/14651858.CD006413.pub2

- 100. Uys LR, Minnaar A, Simpson B, et al. : The effect of two models of supervision on selected outcomes. J Nurs Scholarsh. 2005;37(3):282–8. 10.1111/j.1547-5069.2005.00048.x

- 101. Trap B, Todd CH, Moore H, et al. : The impact of supervision on stock management and adherence to treatment guidelines: a randomized controlled trial. Health Policy Plan. 2001;16(3):273–80. 10.1093/heapol/16.3.273

- 102. Willcox ML, Nicholson BD, Price J, et al. : Death audits and reviews for reducing maternal, perinatal and child mortality. Cochrane Database Syst Rev. 2018; (3): CD012982. 10.1002/14651858.CD012982

- 103. Gude WT, van Engen-Verheul MM, van der Veer SN, et al. : How does audit and feedback influence intentions of health professionals to improve practice? A laboratory experiment and field study in cardiac rehabilitation. BMJ Qual Saf. 2017;26(4):279–87. 10.1136/bmjqs-2015-004795

- 104. Irwin R, Stokes T, Marshall T: Practice-level quality improvement interventions in primary care: a review of systematic reviews. Prim Health Care Res Dev. 2015;16(6):556–77. 10.1017/S1463423615000274

- 105. Ivers NM, Sales A, Colquhoun H, et al. : No more 'business as usual' with audit and feedback interventions: towards an agenda for a reinvigorated intervention. Implement Sci. 2014;9:14. 10.1186/1748-5908-9-14

- 106. Ivers NM, Grimshaw JM, Jamtvedt G, et al. : Growing literature, stagnant science? Systematic review, meta-regression and cumulative analysis of audit and feedback interventions in health care. J Gen Intern Med. 2014;29(11):1534–41. 10.1007/s11606-014-2913-y

- 107. Ndabarora E, Chipps JA, Uys L: Systematic review of health data quality management and best practices at community and district levels in LMIC. Inf Dev. 2014;30(2):103–20. 10.1177/0266666913477430

- 108. Musgrove P: Rewards for good performance or results: A short glossary. Washington, DC: The World Bank;2011.

- 109. Snilstveit B, Vojtkova M, Bhavsar A, et al. : Evidence gap maps--a tool for promoting evidence-informed policy and prioritizing future research. Washington DC: The World Bank;2013; Contract No.: 6725.

- 110. Snilstveit B, Bhatia R, Rankin K, et al. : 3ie evidence gap maps. A starting point for strategic evidence production and use. New Delhi, India: International Initiative for Impact Evaluation (3ie);2017; Contract No.: Working Paper 28.

- 111. Thorpe KE, Zwarenstein M, Oxman AD, et al. : A pragmatic-explanatory continuum indicator summary (PRECIS): a tool to help trial designers. J Clin Epidemiol. 2009;62(5):464–75. 10.1016/j.jclinepi.2008.12.011

- 112. Snilstveit B, Stevenson J, Phillips D, et al. : Interventions for improving learning outcomes and access to education in low-and middle-income countries: a systematic review. The Campbell Collaboration;2015.

- 113. Shemilt I, Khan N, Park S, et al. : Use of cost-effectiveness analysis to compare the efficiency of study identification methods in systematic reviews. Syst Rev. 2016;5(1):140. 10.1186/s13643-016-0315-4

- 114. O'Neill J, Tabish H, Welch V, et al. : Applying an equity lens to interventions: using PROGRESS ensures consideration of socially stratifying factors to illuminate inequities in health. J Clin Epidemiol. 2014;67(1):56–64. 10.1016/j.jclinepi.2013.08.005