Abstract

Behavioral neuroscience research demands the same meticulous methodology and exacting attention to detail as all other scientific disciplines. To achieve maximal rigor and reproducibility of findings, well-trained investigators employ a variety of established best practices. Here we explicate some of the requirements for rigorous experimental design and accurate data analysis in conducting mouse and rat behavioral tests. Novel object recognition is used as an example of a cognitive assay that has been conducted successfully with a range of methods, all based on common principles of appropriate procedures, controls, and statistics. Directors of Rodent Core facilities within Intellectual and Developmental Disabilities Research Centers contribute key aspects of their own novel object recognition protocols, offering insights into essential similarities and less critical differences. Literature cited in this review article will lead the interested reader to source papers that provide step-by-step protocols illustrating optimized methods for many standard rodent behavioral assays. Adhering to best practices in behavioral neuroscience will enhance the value of animal models for the multiple goals of understanding biological mechanisms, evaluating consequences of genetic mutations, and discovering efficacious therapeutics.

Keywords: Behavior, mice, rats, behavioral assays, experimental design, statistical analysis, cognitive, novel object recognition, best practices, replication, rigor, reproducibility

Introduction

Scientists seek the truth. As neuroscientists, we design our experiments to maximize the chances of discovering fundamental biological principles. Decades of trial and error have yielded ever-improving strategies for effective experimental designs. Unbiased data collection, the correct control groups, sufficient sample sizes, randomized sampling, methodological rigor using gold-standard techniques, blinded data collection, stringent statistical analyses, and the replication of each finding are issues taught to graduate students and recommended by journal reviewers. Grant proposals to the National Institutes of Health in the United States are now required to explicate research strategies that promote unbiased rigor, transparency and reproducibility for generating replicable findings. Rigorous approaches to ensure sufficient statistical power are a priority recently described in the National Institute of Mental Health Request for Information Notice NOT-MH-17-036 (https://grants.nih.gov/grants/guide/notice-files/NOT-MH-17-036.html). Yet many published discoveries are not subsequently reproduced (Collaboration, 2015; Gilmore et al., 2017; Jarvis et al., 2016; Landis et al., 2012). What are the reasons for the apparent failures of the scientific enterprise to ensure robustness and reproducibility of every discovery?

Principles of high relevance to rodent behavioral research include (a) well-validated assay methods; (b) sufficient sample sizes of randomly selected subject animals; (c) consideration of sex differences as detected by male-female comparisons; (d) consideration of age factors, especially for rodent models of neurodevelopmental disorders, along with using age-matched controls; (e) consideration of background strain phenotypes to optimize the choice of parental inbred strain for breeding a new mutant line of mice; and (f) using wildtype littermates as the most appropriate controls for genotype comparisons. Full reporting of the test environment and testing apparatus is key to accurately interpreting results. Employing well-established assays from the extensive behavioral neuroscience literature enables meaningful replication studies across laboratories. Conducting a battery of behavioral assays in a defined sequence that proceeds from the least stressful to the most stressful will further enhance replicability across labs. Beginning with measures of general health can help rule out physical disabilities that impair procedural requirements. Health confounds could introduce artifacts and invalidate the interpretation of results from complex behavioral tests, e.g. a mouse that cannot walk will score poorly on behavioral tests that require locomotion. Conducting two or more assays within the same behavioral domain, e.g. two corroborative social tests or three corroborative learning and memory tasks, may increase the reliability of findings. Approaches to maximally confirm a positive finding include (a) repeating studies with a second independent cohort of mice or rats in the researcher’s lab; (b) employing another closely related but non-identical experimental manipulation, such as another drug from the same pharmacological class; and (c) replicating findings in other labs (Crawley, 2008; Crawley et al., 1997).

In this review, we focus on replicability issues in analyzing rodent behaviors. Behavioral assays have a reputation for high variability, as discussed below. Ideally, highly standardized testing protocols will become widely used. In practice, reaching consensus on methods has proven difficult because of the variety of available equipment and varying local conditions. Importantly, the innate behavioral repertoire of mice and rats is influenced by a broad range of environmental factors, including aspects of parenting received from birth through weaning, dominance hierarchies in the home cage, amount of human handling prior to testing, previous testing experiences, olfactory cues from the investigators, and physical properties of the vivarium and laboratory such as lighting, temperature and noises (Crabbe et al., 1999; Sorge et al., 2014; Voelkl et al., 2016; Wahlsten et al., 2003). Many small but essential details affect the success of each rodent behavioral assay, and it is most helpful to learn these details from an expert behavioral neuroscience laboratory to avoid common novice mistakes. Innate biological variability is not unique to behavior, however; it is equally a property of rodent anatomy, physiology, biochemistry, and genetics. Analogous considerations apply to other fields of neuroscience, including imaging, electrophysiology, neurochemistry, optogenetics, induced pluripotent stem cell phenotypes, and gene expression studies (Gilmore et al., 2017; Marton et al., 2016; Peixoto et al., 2015; Wang et al., 2010; Young-Pearse et al., 2016). Many of the principles presented in this article are applicable across neuroscience research disciplines.

Relevance to the Goals of the Special Issue on Behavioral Analyses of Animal Models of Intellectual and Developmental Disabilities

In this Special Issue, Directors of Rodent Behavior Cores at NICHD-supported Intellectual and Developmental Disabilities Research Centers (IDDRCs) and other behavioral core facilities offer their expertise for conducting rigorous mouse and rat behavioral assays. Behavioral neuroscientists routinely use a variety of standardized rodent behavioral assays, as described in many reviews (Bussey et al., 2012; Crabbe et al., 2010; Crawley, 2007; Cryan et al., 2005; Kazdoba et al., 2016a; Kazdoba et al., 2016b; Moser, 2011; Puzzo et al., 2014; Wohr et al., 2013). Rodent behavioral assays fall into four general categories of standardized scoring methods. (1) Fully automated mouse and rat behavioral assays, such as open field, acoustic startle, prepulse inhibition, contextual and cued fear conditioning, and operant learning. (2) Semi-automated video tracking assays, such as Morris water maze, novel object recognition, and 3-chambered social approach, which depend on sensitive parameter settings and appropriate statistical interpretation. (3) Observer-scored assays, such as non-automated elevated plus-maze, forced swim, spontaneous alternation, intradimensional/extradimensional set-shift using olfactory substrates, reciprocal social interactions, and repetitive self-grooming, which are potentially subject to unconscious rater bias and require evidence of high inter-rater reliability. (4) Automated assays that generate very large and complex data sets, such as Intellicage (Krackow et al., 2010; Robinson et al., 2014) and interesting new machine learning approaches (Hong et al., 2015; Lorbach et al., 2017; Wiltschko et al., 2015), whose massive data acquisition may introduce ambiguities that limit the interpretation of results. In the present opinion article, we suggest strategies for standardizing rodent behavioral assays and ensuring reproducibility.
Examples presented focus on novel object recognition, one of the most methodologically variable of the cognitive assays that are commonly used by behavioral neuroscientists to investigate the neurobiology of learning and memory.

Strategies to Ensure Reproducibility

The importance of identifying and rigorously assessing functional behavioral outcomes is often underestimated. In many cases, functional and behavioral outcomes are still the primary, and sometimes the only, means of diagnosing intellectual disabilities in humans, as there are many syndromes without unequivocal biomarkers or pathophysiology. Furthermore, the true goal of any search for novel therapeutics or underlying mechanisms is fundamentally the amelioration and/or prevention of the negative behavioral sequelae of disease, including such symptoms as pain, depression and cognitive or sensorimotor impairment. Thus, it is essential that functional assays conducted in laboratory animals (as well as in humans) are valid, reliable and reproducible.

In theory, the strategies to ensure reproducibility and reliability in behavioral assays are no different from those necessary to ensure rigor in other fields, and they follow the guidelines of good laboratory practice. These include thoroughly researching the field, meticulous record keeping, identifying and minimizing extraneous sources of variability and confounds, limiting experimental error, ensuring validity, having sufficient sample size, optimizing the assay so that neither ceiling nor floor effects limit its sensitivity, and having well-trained personnel perform the assay. However, logistically and practically, many factors that have no effect on biochemical assays, for example time of day of testing or test order effects, can have profound effects on functional and behavioral outcomes in laboratory animals. This is further complicated by the fact that some behavioral domains may be more susceptible to particular types of artifacts than others.

Example of One Widely Used Cognitive Task: Novel Object Recognition

The novel object recognition assay was developed in rats by Ennaceur and colleagues in 1988 (Ennaceur et al., 1989; Ennaceur et al., 1988; Ennaceur et al., 1992) and relies on the innate tendency of rodents to preferentially explore novel objects. It is most commonly conducted with one exposure to two identical objects in an open field (sample trial, Trial 1, familiarization or training trial), followed by a retention interval and subsequent testing (Trial 2, testing trial, retention trial) in which one of the familiar objects has been replaced with a novel object. Cognitively intact animals should explore the novel (new) object more than the familiar (old) object. At first glance, the task is deceptively simple and can be implemented without a large financial investment in specialized equipment. In practice, there are many variations in coding parameters, methodological parameters, and methods of calculating cognitive performance (Antunes et al., 2012; Bertaina-Anglade et al., 2006; Bevins et al., 2006; Ennaceur, 2010; Leger et al., 2013). These include whether the animals are habituated to the test arena prior to the assay, how many habituation sessions are conducted and for how long, the shape and nature of the arena, properties of the object pairs, the duration of the familiarization (training, sample) and novel object recognition (test) trials, and the duration of the retention interval. Other critical variables include lighting conditions, handling of the subjects, and nocturnal or diurnal testing.

Table 1 describes methodological parameters that have been used by several IDDRC Rodent Cores in successfully conducting novel object recognition testing of rodent models of human neurodevelopmental disorders with intellectual disabilities. Figure 1 illustrates object pairs that have proven useful in novel object recognition assays across IDDRC facilities.

Table 1.

Representative examples of methods used for novel object recognition in mice. Column 1 lists the NICHD-supported Intellectual and Developmental Disabilities Rodent Core facilities that contributed information about standard methods that have been effective in their studies. Column 2 confirms that object pairs were pre-tested to ensure that the two objects, presented simultaneously, evoked equal amounts of exploration by control mice. Column 3 summarizes various protocols used for habituating subject mice to the test arena without objects present. Habituation is designed to reduce the time spent exploring arena surfaces during the novel object test phases. Procedures for habituation vary across facilities for many reasons, including properties of the testing environment and background strain of the subject mice. Column 4 displays the retention interval, i.e. the length of time between familiarization with the two identical objects and the choice between the familiar and the novel object. Retention intervals are usually selected to evaluate aspects of short-term and long-term memory. Column 5 lists the statistical tests used to determine whether novel object recognition was significant, i.e. whether more time was spent exploring the novel object than the familiar object, for each genotype or each treatment group. Choice of statistical test varies according to experimental design. Column 6 provides references for obtaining more detailed information about novel object recognition procedures used by reputable behavioral laboratories. Further descriptions of standard protocols and caveats for conducting novel object recognition assays appear in the text sections under “Strategies to Ensure Reproducibility.”

| IDDRC site, Rodent Core Manager and/or Director | Were the two objects previously confirmed for equal valence? (Yes/No) | Prior habituation to the empty test chamber (# days, # minutes/day) | Interval between familiarization and recognition sessions (minutes, hours or days) | Data presentation and statistical analyses used | Reference(s) |
|---|---|---|---|---|---|
| UC Davis MIND Institute, Crawley | Yes; 6 objects in use across studies, object pairs counterbalanced | 30 minutes/day, 1–4 days, varies by strain | 1 hour or 30 minutes for short-term memory, 24 hours for long-term memory | Repeated Measures ANOVA; One-Way ANOVA with Tukey’s post hoc for preference and discrimination indices; paired t-test for sniff times within group | Gompers et al. (2017); Silverman et al. (2013); Stoppel et al. (2017); Yang et al. (2015) |
| Albert Einstein College of Medicine, Gulinello | Yes; 2 objects used, counterbalanced, validated | Usually 6 minutes, sometimes no habituation | 1 hour–24 hours | ANOVA; Chi Square; % preference and pass/fail rate | Dai et al. (2010); Vijayanathan et al. (2011) |
| UW Madison Waisman Center, Mitchell/Chang | Yes; 4 objects used, counterbalanced | Mice pre-handled by experimenter for at least 3 days; habituation to chamber is one 6-minute session | 3 minutes | ANOVA | Hullinger et al. (2016) |
| UPenn, CHOP, O’Brien/Abel | Yes | 5 minutes/day, 3–5 days, varies by strain | 1 hour for short-term memory, 24 hours for long-term memory | Two-Way ANOVA for gene effect, drug effect, and gene × drug interaction; Tukey post hoc | Oliveira et al. (2010); Patel et al. (2014) |
| Children’s National Medical Center, Wang/Corbin | Yes | 15 minutes/day, 4 consecutive days | 15 minutes, 6-hour interval | Two-Way Repeated Measures ANOVA; Newman-Keuls post hoc | Wang et al. (2011) |
| Baylor College of Medicine, Veeraragavan/Samaco | Yes | 5 minutes/day, 1–4 days | 24 hours | One-Way ANOVA with LSD or Tukey’s post hoc; paired t-test | Lu et al. (2017) |
| Boston Children’s Hospital, Andrews/Fagiolini | Yes | Typically 5 minutes on the day prior to the first trial with the same objects; sometimes no habituation | 10 minutes or 24 hours, depending on mouse model | ANOVA, genotype × object (between/within) | Arque et al. (2008) |
| University of Washington, Cole/Burbacher | Yes | Habituation to room > 1 week; pre-handling 3–5 days; habituation to chamber 15–30 minutes, 1 day prior to testing | 1 hour, 48 hours | One-Way ANOVA or Two-Way ANOVA with Bonferroni’s post hoc | Choi et al. (2017); Pan et al. (2012); Wang et al. (2014) |
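Several cores in Table 1 note that object pairs are counterbalanced. One way to do this is sketched below in Python, assuming a pair already validated for equal valence (Column 2). Each subject is assigned which object of the pair serves as the familiar object, which as the novel object, and on which side the novel object appears, balanced within each genotype or treatment group. The function name, the seeded shuffle, and the left/right coding are illustrative assumptions, not any core's actual procedure.

```python
import random
from itertools import cycle, product


def counterbalance(subjects_by_group: dict[str, list[str]],
                   object_pair: tuple[str, str],
                   seed: int = 0) -> dict[str, tuple[str, str, str]]:
    """Assign (familiar object, novel object, side of novel object) to each subject.

    Within each group, subjects are shuffled and then cycled through the four
    combinations of which object is novel and on which side it appears, so each
    combination is used equally often when the group size is a multiple of 4.
    """
    a, b = object_pair
    conditions = [(familiar, novel, side)
                  for (familiar, novel), side in product([(a, b), (b, a)], ["left", "right"])]
    rng = random.Random(seed)  # seeded so the schedule can be reproduced and archived
    schedule: dict[str, tuple[str, str, str]] = {}
    for subjects in subjects_by_group.values():
        order = list(subjects)
        rng.shuffle(order)  # random order within group before cycling conditions
        for subject, condition in zip(order, cycle(conditions)):
            schedule[subject] = condition
    return schedule
```

For example, `counterbalance({"WT": wt_ids, "KO": ko_ids}, ("cone", "magnet"))` with eight mice per group gives each of the four conditions to exactly two mice in each group; the object names here are placeholders.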

Figure 1.

Examples of object pairs used in novel object recognition testing by IDDRC Rodent Behavior Cores. A) Left: Orange traffic cone, 7 cm high × 5 cm wide (source unknown), and green cylindrical magnet, 7 cm high × 3 cm wide (source: Magneatos, Guide Craft, Amazon.com); photo by Michael Pride, UC Davis MIND Institute Rodent Behavior Core. B) Premium Big Briks, 3 staggered reclining bricks, ~ 6 cm high × 3 cm wide (source: Amazon.com # B01N5FGUHB), coral, 5 cm high × 3 cm wide (source unknown), and treasure chest, 2 cm high × 4 cm wide (source unknown); photo by Melanie Schaffler, UC Davis MIND Institute. Unpublished photos in A and B were contributed by Jacqueline Crawley, UC Davis MIND Institute IDDRC Rodent Behavior Core. C) Binder clip (source: Office Depot, Washington DC, USA), open field chamber (source: Accuscan, Columbus, OH, USA); decorated binder clip. Unpublished photos contributed by Li Wang, Children’s National Health System (CNHS) Center for Neuroscience IDDRC Neurobehavioral Core. D) R2D2 toy, plastic Easter egg, gold oval, metal toy car (source: Target), and Lego block (source unknown). Unpublished photos contributed by Heather Mitchell, University of Wisconsin-Madison, Waisman Center, IDD Models Core. E) Upper left: Plastic shapes (source: Toys“R”Us); photo by Brett Mommer and Zhengui Xia. Upper right: Gloss-painted wooden blocks (source unknown); photo by Christine Cheah and William Catterall. Lower left: small sand-filled and water-filled glass jars (source unknown); photo by Brett Mommer and Zhengui Xia. Lower right: Plastic train whistles and mini color 3×3 cube puzzles (source: Amazon.com); photos provided by Melissa Barker-Haliski and H. Steve White. Assembled photos contributed by Toby Cole, University of Washington IDDRC Mouse Behavior Laboratory. F) Left: child’s sippy cup; right: baby bottle; middle: ruler for scale. Unpublished photos contributed by Maria Gulinello, Albert Einstein College of Medicine IDDRC Animal Phenotyping Core. G) Plastic pipe fittings (source: ACE hardware, Porter Square, Cambridge, MA), 7 cm × 2 cm, and glass jar, 10 cm × 3.5 cm. Unpublished photo contributed by Nick Andrews, Boston Children’s Hospital IDDRC Neurodevelopmental Behavior Core. H) LEGO Classic Medium Creative Brick Box and LEGO Duplo Deluxe Box (source: Amazon.com #10696 and #10580). Orange, black and yellow tower dimensions: 15 cm tall × 6.5 cm wide × 6.5 cm long. White, red, blue, yellow tower dimensions: 15.5 cm × 10 cm at the base, 7.5 cm wide along the rest of the body. Brown, yellow, green, orange tower dimensions: 16.5 cm tall × 6.5 cm wide × 9.5 cm long. Unpublished photo contributed by Surabi Veeraragavan, Baylor College of Medicine IDDRC Neurobehavioral Core. I) Bottle, 4.5 cm diam × 16.5 cm tall, clear, filled with white sand and capped (source: Michaels.com). Metal bar, 3.75 cm × 3.75 cm × 15 cm tall, thin aluminum sheet cut and fabricated with glue, filled with white sand (source: in-house fabrications shop). Objects are mounted on a 6.5 cm × 6.5 cm base. Unpublished photos contributed by Tim O’Brien, University of Pennsylvania, Children’s Hospital of Philadelphia IDDRC Neurobehavior Testing Core.

Methods for measuring and analyzing performance are also diverse (Antunes et al., 2012; Bevins et al., 2006; Leger et al., 2013; Vogel-Ciernia et al., 2014). Definitions of exploratory sniffing vary according to the scoring system. Automated videotracking systems usually define a zone around the object, e.g. a 2 cm annulus. More sophisticated videotracking systems, which detect body shape, further require that the triangular nose shape point in the direction of the object. When scoring manually, sniffing is generally defined as the nose pointing toward the object and within 1–2 cm of it. As shown in Figure 2, several outcome measures are in use (Antunes et al., 2012; Bevins et al., 2006; Leger et al., 2013; Vogel-Ciernia et al., 2014), including the following. (1) Time spent exploring each object. The absolute time spent exploring the novel object and the familiar object, in seconds, during the recognition test phase provides the most transparent presentation of the raw data. (2) Preference score. The preference score for the recognition test is calculated as [novel object exploration time/(novel object + familiar object exploration time)] × 100%. (3) Difference score. The difference score is calculated as the time spent exploring the novel object minus the time spent exploring the familiar object during the recognition test. (4) Discrimination index. The discrimination index is calculated as (time exploring the novel object − time exploring the familiar object)/(total time exploring both the novel and familiar objects). Derived index scores, such as the preference score and discrimination index, correct for individual differences in total exploration. The preference score also provides an objective value for “failure,” as a 50% preference score reflects equal exploration of the novel and familiar objects. However, the effect size can be small: the average preference score of healthy subjects is typically 60–70%, whereas the mean score if all animals fail will be about 50%. These derived indices can yield different outcomes, and often different variability, than absolute measures of exploration (Akkerman et al., 2012a; Akkerman et al., 2012b). (5) Pass/fail rate. Because there is currently no convincing evidence that the absolute magnitude of the preference for the novel object is related to the inherent strength of the memory or the extent of cognitive dysfunction, an additional possibility is to treat the data as categorical (binary), and to illustrate and analyze the percentage of subjects passing and failing. The pass rate is calculated as the percentage of subjects exploring the novel object more than the familiar object.
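The recognition-phase measures listed above can all be derived from the two raw exploration times. The Python sketch below is illustrative only: the function and field names are not taken from any scoring package, and the default pass criterion (preference above 50%, i.e. more time on the novel object) follows the definition in the text, while the optional cutoff lets a stricter threshold such as the 55% used in Figure 2E be applied instead.

```python
from dataclasses import dataclass


@dataclass
class RecognitionScores:
    preference_pct: float  # novel / (novel + familiar) x 100; 50 = no preference
    difference_s: float    # novel - familiar, in seconds; 0 = no preference
    discrimination: float  # (novel - familiar) / (novel + familiar); 0 = no preference
    passed: bool           # preference score above the chosen cutoff


def score_test_trial(novel_s: float, familiar_s: float,
                     pass_cutoff_pct: float = 50.0) -> RecognitionScores:
    """Derive the recognition-phase measures from raw exploration times (seconds)."""
    total = novel_s + familiar_s
    if total <= 0:
        raise ValueError("no object exploration recorded; indices are undefined")
    diff = novel_s - familiar_s
    preference = 100.0 * novel_s / total
    return RecognitionScores(
        preference_pct=preference,
        difference_s=diff,
        discrimination=diff / total,
        passed=preference > pass_cutoff_pct,
    )


def pass_rate(scores: list[RecognitionScores]) -> float:
    """Percentage of subjects classified as passing."""
    return 100.0 * sum(s.passed for s in scores) / len(scores)
```

For a mouse that explores the novel object for 21 s and the familiar object for 9 s, `score_test_trial(21.0, 9.0)` gives a preference score of 70%, a difference score of 12 s, and a discrimination index of 0.4. Keeping the raw times alongside the derived indices preserves the transparent presentation recommended above.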

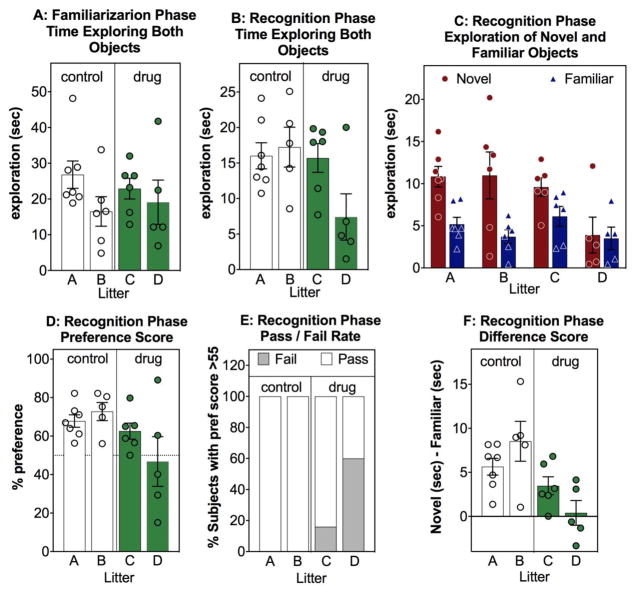

Figure 2.

Common methods for analyzing and illustrating novel object recognition data. (A) Total exploration of both objects (in seconds) during the familiarization phase (training, Trial 1, sample phase), scored and illustrated separately for each of four litters (X axis, designated A, B, C and D). (B) Total exploration of both objects (in seconds) during the novel object recognition phase (Test, Trial 2). (C) Absolute exploration of the new (novel) object (red, circle) and the old (familiar) object (blue, triangle), in seconds, during the novel object recognition test phase. (D) Preference score = [(exploration of novel object in sec)/(exploration of novel + exploration of familiar)] × 100 during the novel object recognition test phase. A 50% preference score indicates equal exploration of the novel (new) and the familiar (old) object. (E) Pass/fail rate: the % of subjects with and without a preference for the novel object, with a preference defined as a preference score > 55% during the novel object recognition test phase. (F) Difference score = (exploration of novel object in sec) − (exploration of familiar object in sec) during the novel object recognition test phase. A difference score of 0 indicates equal exploration of the novel and the familiar object. Data shown are from 4 individual litters of C57BL/6 mice whose dams were treated during pregnancy with either normal drinking water or a drug in the drinking water. Offspring were tested at 5 months of age. Litters A and B are control treated (open bars) and litters C and D are drug treated (green bars). Unpublished data by Gulinello, Einstein, IDDRC core.

Figure 2 is an illustrative exemplar that demonstrates these several ways of presenting the data, highlights some critical issues, and familiarizes the reader with the advantages and problems associated with each way of presenting and analyzing the data. These data also graphically express a potential source of variability, specifically variability that could be due to litter effects. The various methods of analysis are discussed in detail in the statistics section below.

No single way of illustrating and analyzing the data is superior or intrinsically correct, but some methods illustrate important patterns in the data more accurately than others. Data should be presented in the way that most honestly reflects the true outcome of the experiment and any aspect of the data that would affect interpretation, including individual data points in addition to means and error bars. Thus, the standard bar chart with error bars in Figure 2D would suggest that only one drug-treated litter tends to perform worse than the control litters, whereas Figure 2F illustrates more dramatically that both litters C and D potentially show lower exploration of the novel object relative to the familiar object. The pass/fail mosaic plot (Fig 2E) also indicates that a greater proportion of litter D than of litter C lacks a preference for the novel object, but that both drug-treated litters tend to perform worse than control litters. This figure also illustrates the critical need for sufficient sample size, as in these data there is insufficient statistical power either to justify combining litters or to detect litter effects should they be present.
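Pass/fail rates such as those in Figure 2E are counts, so they are compared between groups with tests for categorical data; Table 1 lists a chi-square analysis for this purpose. The Python sketch below computes a Pearson chi-square on a 2×2 table of pass/fail counts for two groups, using only the standard library. It relies on the fact that, with 1 degree of freedom, the chi-square statistic is the square of a standard normal deviate. The function name is hypothetical, and no continuity correction is applied (an assumption; some software applies Yates' correction by default).

```python
import math


def pass_fail_chi_square(pass_a: int, fail_a: int,
                         pass_b: int, fail_b: int) -> tuple[float, float]:
    """Pearson chi-square test on a 2x2 pass/fail table (1 df, no continuity correction).

    Rows are the two groups (e.g. control vs treated); columns are pass vs fail.
    Returns (chi-square statistic, p-value).
    """
    table = [[pass_a, fail_a], [pass_b, fail_b]]
    n = pass_a + fail_a + pass_b + fail_b
    row_totals = [pass_a + fail_a, pass_b + fail_b]
    col_totals = [pass_a + pass_b, fail_a + fail_b]
    if 0 in row_totals or 0 in col_totals:
        raise ValueError("a row or column total is zero; the test is undefined")
    chi2 = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            chi2 += (table[i][j] - expected) ** 2 / expected
    # With 1 degree of freedom, chi2 is the square of a standard normal deviate,
    # so the upper-tail probability is erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value
```

For instance, 8 of 10 control mice passing versus 3 of 10 treated mice gives chi-square ≈ 5.05 and p ≈ 0.025. When any expected count falls below about 5, as it would for single small litters, Fisher's exact test is generally preferred over this approximation.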

It is also essential first to establish that the control group exhibits significant novel object recognition, to confirm that the methods are working correctly and that the task parameters are feasible for the specific age, species, and strain (Akkerman et al., 2012a; Akkerman et al., 2012b). Furthermore, the absolute levels of exploration during the habituation and familiarization phases (Fig 2A), preceding the novel object recognition phase of the assay (Fig 2B), should be reported as an internal control measure of general exploration, to avoid artifacts and misinterpretations due to motor dysfunction or abnormalities in spontaneous exploration of the environment and objects.
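Confirming that the control group itself shows recognition is a within-group question: do subjects explore the novel object more than the familiar one? Table 1 lists paired t-tests for this. As a swapped-in nonparametric alternative that needs only the Python standard library, the sketch below runs an exact one-sided sign-flip permutation test on per-subject difference scores (novel minus familiar, in seconds). The function name and the 20-subject limit on exact enumeration are assumptions made for illustration.

```python
from itertools import product


def sign_flip_p_value(differences: list[float]) -> float:
    """Exact one-sided sign-flip permutation test for within-group recognition.

    differences: per-subject (novel - familiar) exploration times in seconds.
    H0: differences are symmetric around zero (no preference for either object).
    H1: subjects explore the novel object more than the familiar object.
    Returns the fraction of all 2**n sign assignments whose summed difference
    is at least as large as the observed sum.
    """
    n = len(differences)
    if n == 0:
        raise ValueError("need at least one subject")
    if n > 20:
        raise ValueError("2**n assignments is too many; use random sign flips instead")
    observed = sum(differences)
    tolerance = 1e-9  # guards against floating-point ties
    extreme = 0
    for signs in product((1, -1), repeat=n):
        flipped = sum(s * d for s, d in zip(signs, differences))
        if flipped >= observed - tolerance:
            extreme += 1
    return extreme / 2 ** n
```

The smallest attainable p-value is 1/2**n, so with only four control mice no result can fall below 0.0625. This is one concrete way in which an undersized control group cannot demonstrate that the assay is working.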

Successful novel object recognition methods – What works, what doesn’t, and what factors influence the reproducibility of your cognitive tests

So, did the experiment in Figure 2 “work”? Did the controls display novel object recognition? Did any patterns appear during the familiarization phase that would indicate exploratory confounds? Was there an effect of the drug? More importantly, if there was an effect, how likely is it that the investigator could precisely replicate the drug effects, and that others could also replicate these data? The answer in this case is equivocal, as it appears plausible that one litter is more affected by the drug than the other. The sample size in this exemplar is insufficient to draw a statistical conclusion either way. This example highlights the many ways in which an apparently simple task can be difficult to replicate, and some of the reasons why published work is so often difficult to replicate.
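To make “insufficient sample size” concrete, the sketch below applies the standard normal-approximation formula for the number of subjects per group needed in a two-sided, two-group comparison of means: n = 2 × ((z₁₋α/₂ + z_power) / d)². This is a planning approximation, not the authors' procedure. The standardized effect size d (difference in group means divided by the pooled standard deviation) must be assumed, ideally from pilot or published data, and the function name is hypothetical. Exact t-test calculations give slightly larger numbers.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d: float, alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate subjects per group for a two-sided, two-sample comparison of means.

    Uses the normal approximation n = 2 * ((z_(1 - alpha/2) + z_power) / d) ** 2,
    which slightly underestimates the exact t-test requirement for small n.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    z = NormalDist()  # standard normal distribution
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_beta = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_beta) / effect_size_d) ** 2)
```

With α = 0.05 and 80% power, `n_per_group(0.8)` returns 25 and `n_per_group(0.5)` returns 63 subjects per group. When treatment is given to the dam, as in Figure 2, the litter rather than the individual pup is often treated as the independent unit, in which case n counts litters.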

A range of variables can affect behavioral and physiological assays in rodents. We therefore turn next to the critical parameters that can affect outcomes in behavioral assays, with emphasis on the novel object recognition assay. A summary of some of the main factors is listed below, and these factors are then discussed in more detail.

- Empirical Factors
  - Training of staff and logistics of measurement
  - Investigator Factors
  - Object validations
  - Habituation, Cleaning the arena and objects, olfaction
  - Exclusion Criteria
  - Retention Interval and Test Duration
- Animal Factors
  - Housing Conditions
  - Sex
  - Age
  - Breeding strategy
  - Circadian and seasonal
  - Vendor source
- Experimental Design Factors
  - Blinding
  - Matching and Circadian factors
  - Sample sizes

Empirical Factors

Accuracy of scoring and reliability of scoring

Training and inter-rater reliability

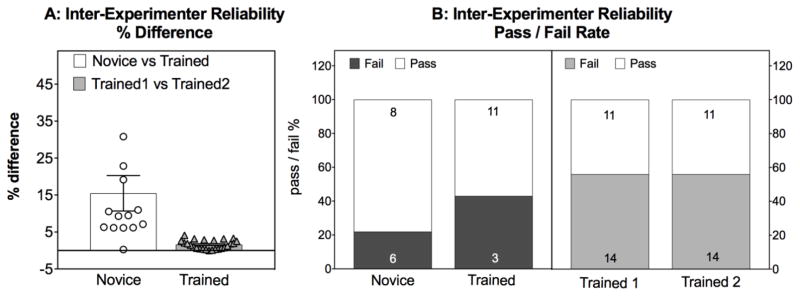

A “novice” experimenter who has been well trained by an expert and has practiced on 10 subjects may still produce unreliable data compared with an experienced and practiced investigator, resulting in differences in the apparent percentage of subjects passing or failing (Fig 3). In contrast, two trained experimenters show a high degree of concordance with each other (Fig 3). This should not be surprising to anyone who has tried to pipette perfect triplicates, insert a good cannula or perform any bench work: there are many ways to make mistakes. Training, expertise and independent validation of scores should be required, as correct and accurate criteria for conducting and scoring behavioral assessments are critical.

Figure 3.

A high degree of inter-experimenter reliability depends on sufficient training. (A) The % difference in total exploration (in seconds) between a novice and a trained experimenter (circles, open bars) scoring identical movies of the same subjects, vs the % difference between 2 trained experimenters also scoring identical movies (triangles, closed bars). % difference = |trained − novice| / trained × 100, or |trained1 − trained2| / trained2 × 100. (B) The pass/fail rate for a novice vs a trained experimenter (left panel) and for 2 trained experimenters (right panel). N for each condition is shown as individual points in panel A and within the bars in panel B. Note: the trained and novice experimenters in panel A viewed the same movies, as did the two trained experimenters. The two trained experimenters in panel B viewed identical movies for each comparison, but these experiments were conducted separately, hence the different sample size. Unpublished data by Gulinello, Einstein, IDDRC core.
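The inter-rater comparison in Figure 3 can be scripted. The Python sketch below implements the per-subject % difference defined in the Figure 3 legend. It also adds Cohen's kappa for two raters' pass/fail calls on the same videos. Kappa is a standard chance-corrected agreement statistic but is an addition here, not the analysis used in Figure 3, and both function names are hypothetical.

```python
def percent_difference(reference_s: float, other_s: float) -> float:
    """% difference in total exploration relative to the reference scorer (Figure 3A)."""
    if reference_s <= 0:
        raise ValueError("reference exploration time must be positive")
    return abs(reference_s - other_s) / reference_s * 100.0


def cohens_kappa(rater1: list[bool], rater2: list[bool]) -> float:
    """Chance-corrected agreement between two raters' pass (True) / fail (False) calls."""
    if len(rater1) != len(rater2) or not rater1:
        raise ValueError("raters must score the same, non-empty set of subjects")
    n = len(rater1)
    observed = sum(a == b for a, b in zip(rater1, rater2)) / n
    p1 = sum(rater1) / n  # proportion scored "pass" by rater 1
    p2 = sum(rater2) / n  # proportion scored "pass" by rater 2
    expected = p1 * p2 + (1 - p1) * (1 - p2)  # agreement expected by chance
    if expected == 1:
        # Both raters gave the same single call to every subject; kappa is
        # formally undefined, so report perfect agreement by convention here.
        return 1.0
    return (observed - expected) / (1 - expected)
```

If a trained scorer records 40 s of total exploration and a novice records 30 s for the same video, `percent_difference(40.0, 30.0)` returns 25.0. A kappa near 1 indicates agreement well beyond chance, whereas a kappa near 0 means that the raters agree no more often than chance alone would predict, even if their raw pass rates look similar.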

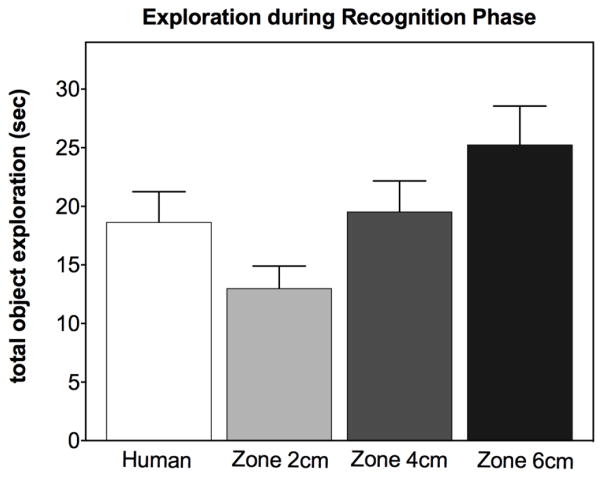

Manual vs Automated Scoring

Automated scoring with a tracking system can be a reliable alternative, but still requires extensive training in setting up the automated parameters. For example, a small difference (2 cm) in the size of the zones used to score exploration can result in very large differences in calculated exploration of each novel object (Fig 4) (Silvers et al., 2007). Thus, automated scoring is not necessarily free from experimenter error as it also requires substantial training to achieve valid and reliable scores. It is not really possible to just “set it and forget it.” Body size, activity levels and even anxiety levels affect the approach patterns of the subjects and thus the appropriate zone size must be set by a trained experimenter (Rutten et al., 2008) and must furthermore be validated.

Figure 4.

The size of the zones (X axis) in automated tracking software can affect apparent object exploration scores. Very small differences of 2 cm in the size of the zone around the object can result in significant differences in recorded exploration (F(2,71) = 5.4, p < 0.01). N = 24. Results obtained by a trained human scorer are provided for comparison only. Unpublished data by Gulinello, Einstein, IDDRC core.
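The sensitivity to zone size shown in Figure 4 follows directly from how a zone-based score is computed. The Python sketch below assumes per-frame nose coordinates in centimeters and a circular zone extending a chosen width beyond the object's edge. It ignores nose direction, which more sophisticated systems also check. It is not the algorithm of any particular tracking product; the names and the circular-object assumption are illustrative.

```python
import math


def time_in_zone(nose_xy: list[tuple[float, float]],
                 object_xy: tuple[float, float],
                 object_radius_cm: float,
                 zone_width_cm: float,
                 fps: float) -> float:
    """Seconds during which the tracked nose point lies inside the object zone.

    The zone is modeled as a circle around the object center whose radius is the
    object's radius plus an annulus of zone_width_cm. Nose direction is ignored.
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    limit = object_radius_cm + zone_width_cm
    ox, oy = object_xy
    frames_inside = sum(1 for x, y in nose_xy if math.hypot(x - ox, y - oy) <= limit)
    return frames_inside / fps
```

For a toy track of five frames (at 1 frame/s) with the nose 3, 4, 5, 6 and 7 cm from the center of an object of 2.5 cm radius, a 2 cm zone scores 2 s of exploration and a 4 cm zone scores 4 s. The same behavior yields a doubled score, which is why zone settings must be validated against a trained human scorer.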

Although automated tests are appealing to many researchers and funding agencies, automation is not necessarily more reliable or more valid, nor less dependent on the researcher's experience. Most “automated” assays, including video tracking software, infrared beam detection systems, acoustic startle systems, etc., require proper calibration and an understanding of complex software and hardware. Issues include implementing the correct internal controls, substantial datasets requiring extensive manipulation, large numbers of parameters for which small variations drastically change the results, and the need for knowledge of behavioral psychology principles that, when violated, confound or invalidate the results.

Another source of variation is the parameter reported. Some experimenters report the number of entries into the object zones; others report the duration of exploration. Although a correlation between the number of entries into the zones and the duration of exploration is expected, enough subjects deviate substantially from this pattern that reporting only one parameter can potentially invalidate the results.
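The difference between the two parameters is easy to see from a frame-by-frame in-zone record. The sketch below is a minimal illustration, assuming a list of per-frame booleans (True when the tracked point is inside the object zone); the function name and both example records are hypothetical.

```python
def entries_and_duration(in_zone, fps):
    """Return (number of zone entries, seconds inside the zone).

    in_zone: per-frame booleans, True when the tracked point is in the object zone.
    An entry is counted at each outside-to-inside transition; a subject already
    inside the zone on the first frame counts as one entry.
    """
    entries = 0
    previous = False
    for inside in in_zone:
        if inside and not previous:
            entries += 1
        previous = inside
    return entries, sum(in_zone) / fps


fps = 5
subject_a = [True, False] * 5            # five brief visits
subject_b = [False] * 5 + [True] * 25    # one long visit
print(entries_and_duration(subject_a, fps))  # (5, 1.0)
print(entries_and_duration(subject_b, fps))  # (1, 5.0)
```

Subject A makes five brief visits totaling 1 s, while subject B makes a single 5 s visit: ranking the two subjects by entries or by duration gives opposite answers.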

Investigator factors

Many behavioral procedures rely on the test subject responding to novelty in its environment. An often overlooked variable in behavioral testing is the investigator performing the procedure. To avoid distracting the subject, the appearance and scent of the investigator should be considered. Animal facilities often use personal protective equipment to protect rodent colonies and to limit investigators' exposure to allergens. Consistent use of scrubs or gowns, gloves, face masks, bonnets, etc. imparts a day-to-day "sameness" to the appearance of the investigator, which may minimize the rodent's reaction to the investigator. Likewise, changes in perfumes, shampoos, soaps, etc. by an investigator across the days of a procedure might affect behavioral scores in the rodents being tested. Mice appear to respond differently depending on the sex of the investigator, particularly on procedures related to anxiety and pain sensitivity (Sorge et al., 2014), which may be related to odors or handling differences. However, this effect, which has not been demonstrated in the novel object recognition test, lasts only a short time (10–60 min) and can be controlled for by sufficiently habituating the subject to both the testing room and the experimenter (Hanstein et al., 2010). Thus, implementing a standard acclimatization period before testing is essential.

Object Validations, training period and retention interval

The most critical factors that affect the outcome of the novel object recognition test, once reliable and valid scoring, appropriate experimental design and adequate sample size have been established, are (1) validation of the object pairs (no intrinsic preference for either object) and (2) the duration of the retention interval (Gulinello et al., 2006; Ozawa et al., 2011). A third factor, (3) the total duration of the test, can also be an issue.

Object Validations

The premise of the novel object recognition assay is that subjects with intact cognitive function will preferentially explore the novel object. It is therefore assumed that there is no intrinsic preference for either of the objects. The problem is that a highly preferred object will be explored more, regardless of whether it is familiar or novel. Thus, before the test can be conducted reliably, the objects must be validated. If the objects are equally attractive, then animals should explore both for about the same time during familiarization (training, Trial 1, sample trial), and the pattern of performance (whether subjects pass or fail) will be independent of which object is novel and which is familiar. Further, objects should be counterbalanced during an experiment such that half of each group gets object A as the familiar object and object B as the novel object, and the other half of each group gets B as the familiar object and A as the novel object. Using unvalidated pairs in which a clear preference exists will obscure significant differences that may otherwise exist (Zhang et al., 2012). This step is also critical to ensure that the two objects are readily distinguishable by the subjects. Behavioral neuroscience laboratories traditionally conduct a series of trial-and-error validation tests to identify object pairs that are sufficiently different yet equally attractive. Notably, the IDDRC Rodent Core at Washington University in St. Louis uses 3D printing to generate highly standardized object pairs (David Wozniak, personal communication). Figure 1 illustrates some object pairs that have been successfully used by IDDRC Rodent Cores.
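A minimal Python sketch of the counterbalancing scheme described above, assuming each subject receives an ordered (familiar, novel) pair drawn from two validated objects labeled "A" and "B". The function name, the group labels, and the fixed seed are hypothetical; alternating the assignment after a random shuffle keeps the two orders balanced to within one subject per group.

```python
import random


def counterbalance(subjects_by_group, seed=None):
    """Return {subject: (familiar_object, novel_object)}.

    Within every group, half of the subjects get A as familiar / B as novel and
    the other half get the reverse (differing by at most one for odd group sizes).
    """
    rng = random.Random(seed)
    assignment = {}
    for subjects in subjects_by_group.values():
        shuffled = list(subjects)
        rng.shuffle(shuffled)
        for i, subject in enumerate(shuffled):
            assignment[subject] = ("A", "B") if i % 2 == 0 else ("B", "A")
    return assignment


groups = {"WT": ["wt1", "wt2", "wt3", "wt4"], "KO": ["ko1", "ko2", "ko3", "ko4"]}
for subject, (familiar, novel) in sorted(counterbalance(groups, seed=7).items()):
    print(subject, "familiar:", familiar, "novel:", novel)
```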

The exact stimuli (objects) are not a critical factor in the novel object recognition assay (Busch et al., 2006), and subjects can be re-tested with new object pairs as long as all pairs have been validated. High within-subject and between-cohort concordance has been reported for repeated testing of the same subjects with sequential object pairs (Dai et al., 2010; Silverman et al., 2013; Vijayanathan et al., 2011; Yang et al., 2015).

Habituation

Various laboratories use different methods for habituation and familiarization in the novel object recognition task. Whether the subjects are habituated to the testing arena before the familiarization phase (training, sample phase, Trial 1), and for how long, seems to be idiosyncratic to each successful IDDRC Rodent Core (Table 1). Experiments specifically assessing the effect of habituation on subsequent memory performance indicate that prior habituation has little effect on cognitive performance or on general levels of object exploration during the familiarization phase (Leger et al., 2013). However, there may be practical benefits to habituation, particularly in juvenile or highly active subjects. Specifically, habituation may reduce unwanted behaviors such as climbing or leaping that make the assay difficult to score and interpret.

Cleaning and the influence of olfaction

Rodents and lagomorphs have exceptional olfactory acuity and use this sensory modality to a greater extent than do humans. Mice and rats also scent mark, depositing urinary pheromones in the test arena. Thus, the olfactory environment can potentially confound the test. It is worth noting that although many assume that mice and rats rely on vision to perform this assay, rodents have poor visual acuity and limited color vision, as they lack a fovea and have relatively few cone cells. Olfactory, whisker and tactile modalities provide at least as much information as the visual modality, and rodents can reliably perform novel object preference tests in the dark (Albasser et al., 2010). Objects that once contained scented contents should generally be avoided, as residual scent can alter preference for or avoidance of the object. Whether a given scent is attractive or aversive must be determined empirically; many scents that appeal to humans, including citrus and perfumes, appear to be aversive to rodents. If the hypothesis requires that brain regions processing olfactory information not be engaged, then it is also critical to establish parameters that prevent the subjects from using olfactory information. Standard practice is to clean the arena with 70% ethanol between trials, and to thoroughly clean the arena and objects after each day's testing. Various dilutions of ethanol (10–70%), isopropyl alcohol, and antimicrobial cleaning solutions such as Vimoba have been used. It is possible that strongly scented cleaning solutions could influence the novel object recognition results, particularly if insufficient time is allowed for the liquid to evaporate, or if room ventilation is insufficient to clear odors quickly. However, there is in fact little evidence that the subjects use olfactory cues in the novel object recognition assay (Chan et al., 2017; Hoffman et al., 2013).

Criteria for exclusion

For some mutant lines of mice and rats, and in older rodents, exploratory locomotion and total exploration scores may be consistently low. Care should be taken to ensure that there has been adequate exploration of the objects during the familiarization phase (Trial 1, training, sample phase). Preferences calculated from less than 2–3 sec of exploration are not accurate. Such low scores cannot be quantified reproducibly with a stopwatch when scoring manually, are more susceptible to anomalous entries into a zone when scoring automatically, and do not reflect an adequate sampling of the subject's behavior. Furthermore, when general exploration is low, there is insufficient exploration during familiarization for the original objects to actually become familiar, making the test inadequate for accurately assessing a preference for the novel object. Subjects with very low exploration also tend to sit close to a single object, so the behavioral sampling may not reflect an active preference for the proximal object. Data sets can be unreliable if they include many data points with very low preference scores that result from inadequate exploration rather than true cognitive deficits. Subjects with very low (<3–5 sec) exploration "fail" more consistently than subjects with higher exploration. In contrast, in subjects with more than 3–5 sec of exploration during familiarization (Trial 1, training or sample phase), the extent of exploration during familiarization is not correlated with subsequent performance in the novel object recognition phase (Trial 2, test). A requirement for a minimum duration of total object exploration during the familiarization phase, and/or during the test phase, has not been standardized across behavioral neuroscience labs or IDDRCs.
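Exclusion logic of this kind can be written down explicitly so that it is applied identically to every group. The Python sketch below assumes a preference index defined as the percentage of test-phase exploration directed at the novel object (one common definition, assumed here) and a familiarization threshold of 5 s, chosen from the 3–5 sec range above purely for illustration; any threshold should be fixed before the code is broken. Function names and example records are hypothetical.

```python
def preference_index(novel_s, familiar_s):
    """Percent of test-phase exploration directed at the novel object (50 = no preference)."""
    total = novel_s + familiar_s
    return 100.0 * novel_s / total if total > 0 else None


def screen_subjects(records, min_familiarization_s=5.0, min_test_s=0.0):
    """Apply minimum-exploration criteria before computing preference scores.

    records: {subject: (familiarization_total_s, test_novel_s, test_familiar_s)}
    Returns ({subject: preference_index}, [excluded subjects]).
    """
    kept, excluded = {}, []
    for subject, (fam_total, novel, familiar) in records.items():
        if fam_total < min_familiarization_s or novel + familiar < min_test_s:
            excluded.append(subject)
        else:
            kept[subject] = preference_index(novel, familiar)
    return kept, excluded


records = {"m1": (12.0, 9.0, 6.0), "m2": (2.0, 1.5, 0.5), "m3": (8.0, 4.0, 4.0)}
print(screen_subjects(records))  # ({'m1': 60.0, 'm3': 50.0}, ['m2'])
```

Reporting the excluded subjects per group, not just the kept ones, lets readers see whether the criterion removed more animals from one genotype or treatment.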

Retention Interval

The sensitivity of the test, and therefore how likely an effect will be significant and how likely that effect will be reproducible, is also greatly dependent on the retention interval. Generally, longer retention intervals, e.g. 24 hours for long-term memory testing, are more “difficult” than shorter retention intervals, e.g. 1 hour for short-term memory testing (Gulinello et al., 2006; Leger et al., 2013; Ozawa et al., 2011; Sik et al., 2003). The retention interval should be defined and optimized to address the scientific hypothesis about the mutation or treatment. Failing to optimize the retention interval can result in a loss of effect through floor effects (too great a proportion of controls failing) or lack of sensitivity from ceiling effects. Variations in retention intervals between experimenters and different labs can result in an inability to replicate studies through loss of sensitivity.

Test Duration

The duration of the test is another parameter that varies between labs and can affect the outcome of the assay. Eventually, the "new" object also becomes familiar, and both exploration and novel object preference decline over time (Dix et al., 1999). The optimum test duration is 3–5 minutes in most cases, but can depend on the level of exploration of the subjects, particularly in older, ill or stressed subjects. Thus, test duration is another parameter of this apparently simple assay that can greatly affect the performance of the subjects, must be validated empirically, and is often not reported in the methods section.
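One way to choose a test duration empirically is to recompute the preference index using only exploration that occurred before successive cutoffs. This Python sketch assumes exploration has been scored as bouts of (start time in s from the start of the test trial, duration in s, object); the function name and example bouts are hypothetical, and the preference index is again assumed to be the percentage of exploration directed at the novel object.

```python
def cumulative_preference(bouts, cutoffs_s):
    """Preference index (% novel) using only exploration that occurred before each cutoff.

    bouts: iterable of (start_s, duration_s, object) with object "novel" or "familiar".
    """
    results = {}
    for cutoff in cutoffs_s:
        novel = familiar = 0.0
        for start, duration, obj in bouts:
            # Count only the part of each bout that falls before the cutoff.
            overlap = max(0.0, min(start + duration, cutoff) - start)
            if obj == "novel":
                novel += overlap
            else:
                familiar += overlap
        total = novel + familiar
        results[cutoff] = 100.0 * novel / total if total > 0 else None
    return results


bouts = [(10, 6, "novel"), (30, 2, "familiar"), (70, 4, "novel"), (100, 4, "familiar"),
         (150, 3, "familiar"), (200, 3, "novel"), (250, 4, "familiar")]
prefs = cumulative_preference(bouts, [60, 120, 180, 300])
print({c: round(p, 1) for c, p in prefs.items()})
# {60: 75.0, 120: 62.5, 180: 52.6, 300: 50.0}
```

In this hypothetical subject, the preference is 75% after 1 min but declines to 50% by 5 min as the novel object becomes familiar, illustrating how an overly long test can dilute a genuine preference.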

Animal Factors

In addition to variations in the exact methods of conducting and scoring the assay, several animal factors can affect the reliability and reproducibility of the novel object recognition test. These include housing and vivarium conditions, handling, litter effects, and carryover effects of prior tests or experimental manipulations.

Housing Conditions

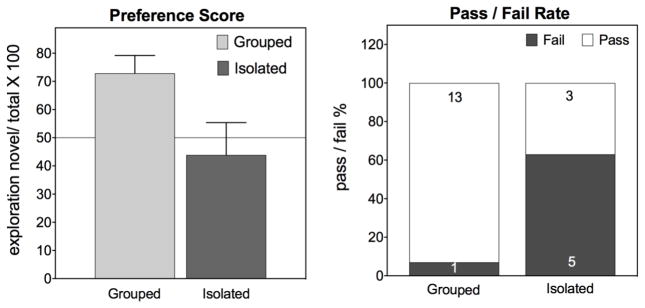

Housing rodents in isolation can have profound effects on the subjects' physiology, health and behavior (Chang et al., 2015; Chida et al., 2005; Douglas et al., 2003; Huang et al., 2011; Ibi et al., 2008; Kwak et al., 2009; Lander et al., 2017; Makinodan et al., 2016; McLean et al., 2010; Pietropaolo et al., 2008; Pyter et al., 2014; Sakakibara et al., 2012; Siuda et al., 2014; Talani et al., 2016; Varty et al., 2006); however, see van Goethem et al. (2012). Female rats housed in isolation have significantly worse preference scores in the novel object recognition test (Figure 5), whether assessed with a parametric test (F(1,20) = 8.7; p<0.008) or with a Wilcoxon test (Z = −2.4; p<0.015). Fisher's exact test of the pass/fail ratio also indicates that a higher proportion of isolated rats fail (p<0.05). In addition to social housing, other husbandry factors (such as food and water restriction, and enrichment) and exposure to anesthetics, drugs, chronic injections, other stressors, or surgical manipulations may also alter behavior and apparent performance in the novel object recognition test (Beck et al., 1999; Elizalde et al., 2008; Huang et al., 2017a; Kawano et al., 2015; Orsini et al., 2004; Weiss et al., 2012; Xiao et al., 2016).
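Fisher's exact test on a 2×2 pass/fail table can be computed directly from the hypergeometric distribution. The Python sketch below is a minimal standard-library implementation of the two-sided test (summing the probabilities of all tables with the same margins that are no more probable than the observed one); the counts in the usage line are hypothetical and are not the data in Figure 5. In practice, a tested library routine such as `scipy.stats.fisher_exact` is preferable.

```python
from math import comb


def fisher_exact_two_sided(a, b, c, d):
    """Two-sided Fisher's exact test for the 2x2 table [[a, b], [c, d]].

    Rows: housing condition (e.g., grouped, isolated); columns: pass, fail.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2

    def prob(x):
        # Hypergeometric probability of x passes in row 1, given fixed margins.
        return comb(row1, x) * comb(row2, col1 - x) / comb(n, col1)

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # Small relative tolerance so tables tied with the observed one are included.
    return sum(p for p in (prob(x) for x in range(lo, hi + 1)) if p <= p_obs * (1 + 1e-7))


# Hypothetical counts: grouped 10 pass / 2 fail, isolated 3 pass / 7 fail.
print(round(fisher_exact_two_sided(10, 2, 3, 7), 4))
```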

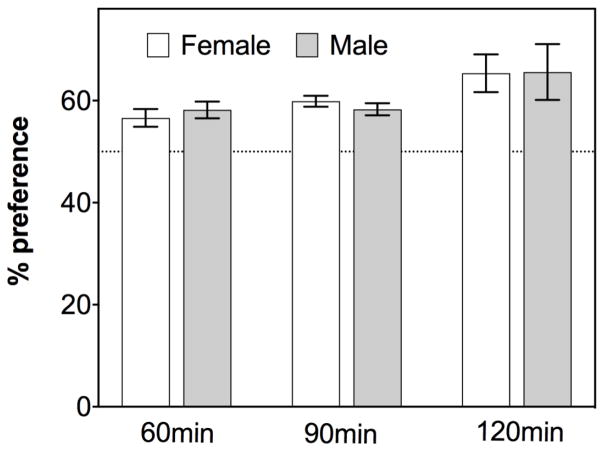

Sex differences

Sex differences in novel object recognition have not routinely been seen in control subjects (Figure 6); however, see Bettis et al. (2012) and Ghi et al. (1999). Females tend to have higher levels of exploration, and some groups have found sex differences when absolute levels of exploration (in seconds) are used as the measure of cognitive performance (Bettis et al., 2012; Ghi et al., 1999). Furthermore, sex differences in baseline performance and in treatment effects have been reported on some behavioral assays but not on others. In 1993, the NIH Revitalization Act required the inclusion of women in NIH-funded clinical research. Sex as a biological variable must now be included in NIH grant proposals. Preclinical studies have traditionally lagged behind this policy, as the great majority of rodent studies either use males exclusively, do not report sex, or provide no analysis of similarities and differences when both sexes are used (Zucker et al., 2010), despite urging by the NIH to include data from each sex (Clayton et al., 2014).
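A small worked example shows why absolute exploration times can produce apparent sex differences that a ratio does not. The Python sketch below uses two hypothetical subjects with the same relative preference but different total exploration; the preference index is assumed to be the percentage of exploration directed at the novel object.

```python
def absolute_difference(novel_s, familiar_s):
    """Novel minus familiar exploration, in seconds."""
    return novel_s - familiar_s


def preference_index(novel_s, familiar_s):
    """Percent of exploration directed at the novel object."""
    return 100.0 * novel_s / (novel_s + familiar_s)


# Two hypothetical subjects: same relative preference, different total exploration.
subjects = {"high explorer": (30.0, 20.0), "low explorer": (12.0, 8.0)}
for name, (novel, familiar) in subjects.items():
    print(name, absolute_difference(novel, familiar), preference_index(novel, familiar))
# high explorer 10.0 60.0
# low explorer 4.0 60.0
```

The absolute difference is 2.5 times larger for the high explorer, although both subjects direct 60% of their exploration to the novel object; a group that simply explores more can therefore appear to remember better on an absolute measure.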

Figure 6.

Absence of sex differences in the novel object recognition test. The graph depicts the performance of male and female mice (total n=551) on a C57BL/6 background, tested at 3–10 months of age. Sex differences in performance were not detected at any of the retention intervals. Unpublished data by Gulinello, Einstein, IDDRC core

Failure to address sex differences can result in a loss of both reproducibility and translational power. First, single-sex studies, or underpowered studies that cannot detect sex differences should they occur, lose translational power for half of the human population. Second, data can be skewed when the sexes are not equally represented in all treatment groups. Despite the lack of sex differences in the novel object recognition assay, sex differences are evident in many other behavioral domains. The number of slips on the balance beam, a measure of sensorimotor function and motor coordination, differs significantly between males and females in 7-month-old C57 mice (mean number of slips: females = 11 ± 3.1, males = 24 ± 3.0; F(1,19) = 9.09, p<0.007; unpublished data by Maria Gulinello, Einstein, IDDRC core). Sex differences were significant in the visible platform trials of the Morris water maze (Gulinello et al., 2009), though not in the spatial memory trials (Fritz et al., 2017; Gulinello et al., 2009). Baseline differences between sexes have been reported for other assays, and mutations, treatments and housing conditions may differentially affect either sex (Chitu et al., 2015; Gulinello et al., 2003; Hanstein et al., 2010; Huang et al., 2017b).
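One way to ensure that both sexes are equally represented in every treatment group is to randomize separately within each sex (stratified randomization). The Python sketch below is a minimal illustration under that assumption; the function name, subject IDs, and treatment labels are hypothetical. With a number of subjects in a stratum that is not a multiple of the number of treatments, group sizes within that sex differ by at most one.

```python
import random


def stratified_assignment(subjects, treatments, seed=None):
    """Randomize subjects to treatments separately within each sex.

    subjects: iterable of (subject_id, sex) pairs.
    Returns {subject_id: treatment}.
    """
    rng = random.Random(seed)
    by_sex = {}
    for subject_id, sex in subjects:
        by_sex.setdefault(sex, []).append(subject_id)
    assignment = {}
    for ids in by_sex.values():
        rng.shuffle(ids)
        for i, subject_id in enumerate(ids):
            # Cycling through the treatment list balances each sex across groups.
            assignment[subject_id] = treatments[i % len(treatments)]
    return assignment


cohort = [("f1", "F"), ("f2", "F"), ("f3", "F"), ("f4", "F"),
          ("m1", "M"), ("m2", "M"), ("m3", "M"), ("m4", "M")]
print(stratified_assignment(cohort, ["vehicle", "drug"], seed=3))
```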

Age

Scores for novel object recognition seem to be stable in mice tested between 3–4 months of age. Older subjects (>12 months) tend to explore less and do not perform as well as younger subjects at the same retention interval. When testing older subjects, the duration of the familiarization trial, the duration of the recognition test, and the retention interval must be optimized for the age of the subjects. However, the pattern recognition, or spatial, version of this assay is significantly affected even in 12-month-old subjects (Benice et al., 2006; Cavoy et al., 1993; Haley et al., 2012; Li et al., 2015). Pre-weanling pups and mice younger than 2 months may also have poorer and less reliable scores on novel object recognition than adults (Anderson et al., 2004). Onset of puberty varies within and between strains, ranging from about 25–43 days in female mice (Pinter et al., 2007; Yuan et al., 2012). Concomitant hormone fluctuations and developmental changes can contribute to behavioral differences. Younger mice tend to have generally higher activity levels in a novel environment, approach novel objects more rapidly and spend more time with a novel object relative to adults (Anderson et al., 2004; Silvers et al., 2007).

Breeding strategy, circadian and seasonal effects, vendors

Many additional animal factors require consideration. These include the number of generations of backcrossing or heterozygote breeding which are conducted for a genetic mutation model, diurnal and circadian effects, and seasonal effects of minor variations in room temperature and humidity during the summer versus winter months. In addition, different commercial vendors supply different substrains of C57BL mice, and may breed mutant lines onto different genetic backgrounds. As mentioned above, the background strain of mice incorporates phenotypes that can directly affect the consequences of a targeted gene mutation.

Experimental Design Factors

Blinding

Unconscious bias in scoring behavioral data is unavoidable. The solution is to ensure that the investigator does not know the genotype of the subject animal, and/or which subject received which treatment. This is usually done by coding the animal genotypes and/or videos with uninformative identification numbers, and/or coding the drug vials with uninformative letters such as A, B, C. Coding is done by another lab member. The rater remains uninformed until after the scoring is completed and the code is broken. Blinding of the experimenter scoring the test is a critical factor as the assessment of behavioral criteria for exploring requires split-second judgments that must be free from unconscious bias. However, in some cases this is logistically difficult to achieve as subjects may have observable phenotypic differences (body weight, fur condition etc.). It is thus useful to have another experimenter independently conduct scoring to confirm scores and observations when the condition of the subjects is grossly visible.
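The coding step can be made routine with a short script run by the lab member who is not scoring. This Python sketch is a minimal illustration, assuming random three-digit video codes prefixed with "V"; the function names are hypothetical, and the key returned by `make_blind_codes` would be stored away from the scorer until scoring is complete.

```python
import random


def make_blind_codes(subject_ids, seed=None):
    """Return a key {code: subject_id} using random, uninformative three-digit codes."""
    if len(subject_ids) > 900:
        raise ValueError("too many subjects for three-digit codes")
    rng = random.Random(seed)
    codes = rng.sample(range(100, 1000), len(subject_ids))  # unique codes
    return {f"V{code}": subject for code, subject in zip(codes, subject_ids)}


def unblind(scores_by_code, key):
    """After scoring is complete, map coded scores back to subject IDs."""
    return {key[code]: score for code, score in scores_by_code.items()}


key = make_blind_codes(["WT-01", "WT-02", "KO-01", "KO-02"])
# The scorer sees only the codes; sorted order carries no genotype information.
print(sorted(key))
```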

Matching and Circadian Effects

Other important experimental design factors include matching testing blocks. For example, it may take about 8 hours to test 20–25 subjects in a typical 2 trial novel object recognition paradigm with a 1 hour retention interval. This would mean that some subjects are tested in the beginning of the day and some are tested at the end of the day, introducing potentially confounding circadian effects. Each block of tests should include equivalent numbers of each treatment group, keeping in mind that a treatment group is a single sex, genotype, and/or drug, using Ns that can be tested in about 2 hours. Circadian confounds due to time of day of testing or sleep disruption can potentially affect performance in the novel object recognition test (Muller et al., 2015; Ruby et al., 2013). Recognition memory may be less susceptible to circadian influences than pattern recognition or object placement memory (Takahashi et al., 2013).
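A simple way to build matched testing blocks is to draw the same number of subjects from every treatment group into each block and interleave them within the block. The Python sketch below assumes subjects are already listed in random order within each group; the function name, group labels, and block size are hypothetical. Subjects that cannot fill a complete block are returned separately rather than silently dropped.

```python
def build_blocks(subjects_by_group, per_group_per_block):
    """Split subjects into test blocks with equal numbers from every group.

    Within a block, subjects are interleaved across groups (round-robin) so that
    no group is tested systematically earlier in the block than another.
    Returns (blocks, leftovers); each entry is a (group, subject) pair.
    """
    groups = list(subjects_by_group)
    n_blocks = min(len(subjects_by_group[g]) for g in groups) // per_group_per_block
    blocks = []
    for b in range(n_blocks):
        start, stop = b * per_group_per_block, (b + 1) * per_group_per_block
        slices = [subjects_by_group[g][start:stop] for g in groups]
        # zip(*slices) yields one subject per group at a time: round-robin order.
        blocks.append([(g, s) for row in zip(*slices) for g, s in zip(groups, row)])
    used = n_blocks * per_group_per_block
    leftovers = [(g, s) for g in groups for s in subjects_by_group[g][used:]]
    return blocks, leftovers


groups = {"WT": ["w1", "w2", "w3", "w4", "w5", "w6", "w7"],
          "KO": ["k1", "k2", "k3", "k4", "k5", "k6"]}
blocks, leftovers = build_blocks(groups, per_group_per_block=3)
for i, block in enumerate(blocks, start=1):
    print(i, block)
print("not yet scheduled:", leftovers)
```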

Sample Size

A critical factor in reproducibility and reliability is sample size. Behavioral testing can be time consuming. It is tempting for researchers to "test until significance", i.e. to keep testing more subjects until an effect is found. This incorrect strategy inflates the false-positive rate and can yield either a small sample size, insufficient for a truly robust data set, or an unreasonably large one. In some cases, it is impractical to complete the entire behavioral assay with the sample size as originally designed. It is then preferable to combine smaller, manageable subsets to obtain a sufficient sample size to define a cohort. Data from the two or three subsets are compared (e.g., scores in the control subgroups should be similar to each other, as should scores in the treatment subgroups) to confirm that the subsets can be combined to form the full cohort with the required N (Gulinello et al., 2009; Hanstein et al., 2010; Yang et al., 2015). In practice, this requires that all animals be subjected to exactly the same conditions, i.e. all previous tests in the same order, identical objects, and identical test parameters. Good management of lab notes and databases is required to track all relevant factors and thereby prevent substantial intra-cohort variability.
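The alternative to "testing until significance" is to fix the sample size in advance. The Python sketch below uses the standard normal approximation for a two-sided, two-sample comparison of means; the effect size (Cohen's d) is a hypothetical input that would in practice come from pilot data or earlier cohorts, and the function name is illustrative.

```python
import math
from statistics import NormalDist


def n_per_group(effect_size_d, alpha=0.05, power=0.80):
    """Approximate subjects per group for a two-sided, two-sample comparison of means.

    Normal approximation: n = 2 * ((z_{1 - alpha/2} + z_{power}) / d) ** 2,
    rounded up. It slightly underestimates the N required by an exact t-test.
    """
    if effect_size_d <= 0:
        raise ValueError("effect size must be positive")
    z = NormalDist()
    z_alpha = z.inv_cdf(1 - alpha / 2)
    z_power = z.inv_cdf(power)
    return math.ceil(2 * ((z_alpha + z_power) / effect_size_d) ** 2)


for d in (1.0, 0.8):
    print(d, n_per_group(d))
# 1.0 16
# 0.8 25
```

With these defaults, d = 1.0 gives 16 subjects per group and d = 0.8 gives 25; power software that uses the t distribution gives slightly larger values, and any planned exclusions should be added on top.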

Importance of reporting all details in the Methods section of publications

Perfunctory methods sections with inadequate details and insufficient citations are a recipe for irreproducible results. There are clearly numerous factors, including specifics of the testing parameters, scoring methods, housing conditions, and age of the subjects, that affect the results obtained and how robust and reliable those results are. Unfortunately, behavioral methods sections are often perfunctory, especially in publications whose primary focus is not behavioral. Suggestions to improve the transparency, robustness, and reproducibility of behavioral publications are offered below.

Cite relevant publications from the investigator’s lab and others. If the experiment was conducted previously and similar data were obtained, cite the paper, to let other researchers know that the findings have been replicated.

Methods should be sufficiently detailed to address the major issues affecting the outcomes of the test.

Housing conditions and animal factors should be detailed (barrier or conventional, group housed or isolated).

Citations to a method should focus on papers containing detailed methods and validation. It is best to avoid “ghost” citations that cite a paper that cites a paper that cites a paper that eventually contains the method.

If you haven’t read the methods, you haven’t read the paper. If you haven’t read the paper, please don’t cite the paper.

The best advice for investigators and authors whose experience is more molecular or physiological is to confer with behavioral experts before conducting behavioral assays and before publishing the data.

We encourage journal editors to recruit behavioral neuroscientists with appropriate expertise as reviewers of manuscripts in which the behavioral results impact the conclusions.

Appropriate statistical tests

Both underpowered studies, from a sample size that is too small, and inappropriately applied statistical tests, can affect the interpretation of the data and thus the likelihood that it will be reproducible. Experiments in live subjects conducted with small sample sizes are prone to artifacts, covariance and confounds that can neither be assessed nor controlled for.

All statistical tests make certain assumptions about the data which, when violated, can affect the apparent significance. These include assumptions about the distribution, the variability, and the sample size. Nonparametric tests are sometimes called distribution-free statistics because they do not require that the data fit a normal distribution. In fact, they are not free of assumptions about the data, and parametric tests offer many advantages. Within their assumptions, parametric tests are robust and have greater power efficiency, i.e. higher statistical power for a given sample size. Parametric tests are also more flexible and can provide unique information, specifically about interactions between factors and interactions of factors over time. Parametric tests are also arguably relatively resistant to violations of the assumption of normality (due to the Central Limit Theorem), provided the sample size is adequate, such as N=20–30 (Kwak et al., 2017).
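The Central Limit Theorem argument can be checked with a short simulation. The Python sketch below draws samples from a strongly right-skewed (exponential) distribution, chosen arbitrarily as a stand-in for a skewed behavioral score, and reports the skewness of the sample means. As N grows, the skewness shrinks toward 0 (theoretically 2/√N for this distribution), i.e. the sampling distribution of the mean becomes approximately normal. Function names, the number of replicates, and the seed are assumptions for illustration.

```python
import random
import statistics


def skewness(values):
    """Sample skewness (third standardized moment)."""
    m = statistics.fmean(values)
    s = statistics.pstdev(values)
    return sum((v - m) ** 3 for v in values) / (len(values) * s ** 3)


def skew_of_sample_means(n, reps=5000, seed=0):
    """Skewness of the distribution of means of n draws from an exponential distribution."""
    rng = random.Random(seed)
    means = [statistics.fmean(rng.expovariate(1.0) for _ in range(n)) for _ in range(reps)]
    return skewness(means)


for n in (2, 5, 25):
    print(n, round(skew_of_sample_means(n), 2))
```

The simulation speaks only to the shape of the sampling distribution of the mean; it does not address unequal variances or outliers, which still need to be checked.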

Here we summarize some general guidelines and strategies for obtaining the highest quality and most rigorous behavioral data.

The importance of core facilities and expert advice in conducting these assays

Very few researchers would embark on creating a new mutant mouse or conducting HPLC analysis of samples without appropriate training, literature searches, pilot studies, optimization, appropriate validation studies, internal and external controls and consultation with experts. In contrast, experimenters sometimes think that behavioral assays do not require a high level of expertise. Core facilities and research centers are designed to prevent “re-inventing the wheel”, with all the attendant pitfalls that this entails. Core facilities are uniquely able to validate existing methods and equipment and maintain databases over long periods of time, which are conducive to analyses of replications, and can examine meta-data in a manner usually inaccessible to a single researcher or lab. Capabilities of core facilities include assessment of internal controls such as locomotor activity confounds which may impact total object exploration, and retention intervals that set the level of challenge for cognitive tests. Behavioral neuroscience experts routinely evaluate procedural control measures in a new line of mice, since performance on the procedures of a cognitive task can directly affect scores.

In contrast to analytical chemistry methods in which internal and external standards are included within the assay, internal and external standards for behavioral research generally rely on consistency of data from control groups across time, using the maintained databases and meta-data analyses. For the novel object recognition test, internal controls may include (a) assessment of performance with no retention interval, to distinguish non-specific sensorimotor deficits from cognitive dysfunction (Gulinello et al., 2006; Li et al., 2010); (b) analysis of counterbalanced object data, to ensure that no object bias exists, even after objects have been previously validated; (c) analysis of exploration levels during the familiarization trial; (d) inclusion of more than one retention interval (Gulinello et al., 2006); and (e) assessment of general locomotor activity, rearing, grooming, etc. External controls include (a) evaluation of the consistency of data from control groups across time, (b) between-cohort analyses, and (c) comparison to other assays in analogous behavioral domains (Gulinello et al., 2009). Does the subject have cognitive impairment, depression-like behavior or tactile insensitivity? Or is that subject simply moving less? A single test can seldom answer that question. Molecular biologists, physiologists and biochemists use internal controls and multiple methods, each of which has limitations. Behavioral investigators can and should similarly differentiate between procedural performance and cognitive abilities, and detect likely confounds.
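Internal control (b) can be run as a routine check on every experiment. The Python sketch below is a minimal illustration, assuming each subject's result is recorded as the label of the object that served as novel plus that subject's preference index; the function name and example values are hypothetical. A large gap between the two means suggests residual object bias, which could then be tested formally.

```python
import statistics


def preference_by_novel_object(results):
    """Mean preference index split by which object served as the novel one.

    results: iterable of (novel_object_label, preference_index) pairs.
    """
    by_object = {}
    for novel_obj, pi in results:
        by_object.setdefault(novel_obj, []).append(pi)
    return {obj: statistics.fmean(values) for obj, values in by_object.items()}


# Hypothetical control-group data: object B appears to be preferred when novel.
results = [("A", 62.0), ("A", 58.0), ("B", 70.0), ("B", 74.0)]
print(preference_by_novel_object(results))  # {'A': 60.0, 'B': 72.0}
```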

Honest illustration of the data set

Just as scientists have been admonished for less than honest Western blots and IHC images (Neill, 2006), so must behavioral neuroscientists strive to illustrate and analyze their data with scrupulous integrity. In the words of physicist Richard Feynman, “We’ve learned from experience that the truth will come out. Other experimenters will repeat your experiment and find out whether you were wrong or right. Nature’s phenomena will agree or they’ll disagree with your theory. And, although you may gain some temporary fame and excitement, you will not gain a good reputation as a scientist if you haven’t tried to be very careful in this kind of work. And it’s this type of integrity, this kind of care not to fool yourself, that is missing to a large extent” (Feynman et al., 1985).

Empirical data to test the critical parameters

To what extent should rodents be habituated to the arena/test environment? Should the test session last 5 minutes or 20 minutes? Numerous methodological variations appear in the literature, so investigators should use appropriate caution when setting up a new experiment based on a single paper. These are empirical questions, with empirical answers. While JoVE and various protocol journals can provide a "quick start guide" that can be invaluable for setting up an unfamiliar paradigm, there is no substitute for testing, researching and validating it.

Conclusions

Ultimately the success of a rigorous experimental design will be judged by the replicability of its findings. Especially when the rodent behavioral phenotype is applied as a translational tool to evaluate potential clinical treatments, well-replicated and highly robust phenotypes are necessary to detect drug responses, over and above innate biological variability (Begley et al., 2012; Cole et al., 2013; Drucker, 2016; Schulz et al., 2016; Silverman et al., 2012). One reasonable progression of replications to confirm the universal strength of a finding is: (1) Replication within a lab, repeating the same procedures in two independent cohorts of animals; (2) Evaluations of a range of related behavioral assays, e.g. four learning and memory tasks, or three sociability tests; (3) Replication across labs, each repeating approximately the same experiments in mice or rats with the same mutation or treatment. In cases where results replicate well within one laboratory but not universally, it is reasonable to assume that methods specific to one lab will require modifications in other labs, to validate the assay. It is useful for labs to look carefully at the experimental parameters and conditions originally reported, and even to take the time to contact the authors, to understand if there are any crucial differences that may determine whether an effect is consistently detected; (4) Comparisons across mutant lines, e.g. using mice with different mutations in the same gene, or mutations in functionally related genes, or a different drug in the same pharmacological class; (5) Comparisons across species, e.g. mice, rats, and non-human primates. Findings that replicate across these stringent criteria would provide high confidence that the animal data are strong enough to inform consideration of a clinical trial.

Issues surrounding the translational value of preclinical animal models as predictive translational tools for clinical trials have been extensively discussed (Belin et al., 2016; Flier, 2017; Geyer, 2008; Jablonski et al., 2013; Kas et al., 2014; Kazdoba et al., 2016b; Lynch et al., 2011; McGraw et al., 2017; Robbins, 2017; Sarter, 2006; Schulz et al., 2016; Snyder et al., 2016; Spooren et al., 2012).

Although non-predictive animal studies are one factor, clinical trials fail for many other reasons. These include toxicity and other human safety concerns, dose-response pharmacokinetic variability across human subjects, brain bioavailability of the drug, inappropriate aspects of study design such as age of subjects at treatment onset, characteristics selected for patient stratification such as IQ and language abilities, and choice of primary outcome measures (Insel et al., 2013; Kola et al., 2004; Lythgoe et al., 2016).

In addition, rodents and humans may have innate differences in critical pharmacodynamic, pharmacokinetic, metabolic, immune, and lifespan parameters. Sanchez et al. (2015) provide a comprehensive example of pharmacokinetic and pharmacodynamic differences in drug action between rodents and humans. Vortioxetine, a novel, multimodal antidepressant, displays a binding affinity profile to specific serotonin receptors which differs considerably between rodents and humans, as do its absolute oral availability and elimination half-life (Sanchez et al., 2015).

“It is better (and cheaper) that potential targets be discarded at the preclinical level should they prove ineffective.” (Perry et al., 2017). In terms of financial investment in drug development, conducting rigorous animal studies may be cost-effective. The highest quality of preclinical rodent data will maximize predictive validity. Conversely, findings of no replicable preclinical efficacy may be sufficient to disprove the hypothesized target mechanism, thereby saving future expenditures. However, from the point of view of academic researchers dependent on limited grant funding, well-designed replication studies are expensive, time consuming, and difficult to support within current NIH grant budgets.

Of course, we recognize the conundrum. Research is a costly, time-intensive endeavor. On the other hand, public and private support for financing scientific research depends on confidence that results are trustworthy. In principle, the scientific method is self-correcting. Successful scientists maintain a high level of motivation for the long, hard slog to generate important, pristine findings. Enthusiasm can wane when experiments consistently yield negative findings, which are difficult to publish in good journals. Particularly discouraging is the common scenario in which careful researchers are scooped by competing labs that publish less rigorous data. A major issue is the difficulty of publishing negative data, particularly failures to replicate, especially when the initial paper is from a prominent laboratory and appears in a high-profile journal (Button and Munafo, 2014; Macleod et al., 2015).

It is heartening to see the reproducibility issue at the forefront of debate in journals and at major funding agencies (Baker, 2017; Landis et al., 2012; McNutt, 2014a; b). Approaches to improve rigor, transparency and reproducibility of data are now in place in many venues (Collins et al., 2014; Lithgow et al., 2017; McNutt, 2014a; b; Moher et al., 2008). Researchers can certainly be incentivized to invest their limited funding in rigorous experimental designs and replication studies. Labs will be more motivated to replicate positive findings in a second experiment before submitting for publication when their funding agency supports the replication study and when editors of high-profile journals prioritize manuscripts that incorporate replication studies. Such strategies may ameliorate the “reproducibility crisis” to a great extent. Promoting reproducibility goals could go a long way towards maximizing our discoveries of biological truths.

Figure 5.

The effect of isolation on performance in the novel object recognition test (3 min familiarization, 3 min recognition, 30 min retention interval). Female Long-Evans rats were housed in either grouped (2–3 per cage) or isolated conditions for 4–6 weeks and then assessed in the novel object recognition test. Sample sizes are shown in the bars of the pass/fail graph at right. Unpublished data from Gulinello, Einstein IDDRC core.
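The caption does not state how the pass/fail proportions of grouped and isolated rats were compared. For a 2 x 2 table of small counts, Fisher's exact test is one common choice. The sketch below is illustrative only and is not the authors' analysis: it assumes the widely used discrimination index (novel minus familiar exploration time, divided by total exploration time), leaves the pass criterion to the investigator, and its function names are hypothetical.

```python
from math import comb


def discrimination_index(t_novel: float, t_familiar: float) -> float:
    """(novel - familiar) / (novel + familiar); ranges from -1 to +1.

    Assumed definition; the caption does not state which index was used.
    """
    total = t_novel + t_familiar
    if total <= 0:
        raise ValueError("no object exploration recorded")
    return (t_novel - t_familiar) / total


def fisher_exact_two_sided(a: int, b: int, c: int, d: int) -> float:
    """Two-sided Fisher's exact test for the 2 x 2 table

        [[a, b],   # group 1: animals passing, failing
         [c, d]]   # group 2: animals passing, failing

    With row and column totals fixed, the top-left cell follows a
    hypergeometric distribution; the p-value sums the probabilities of
    every table that is no more probable than the observed one.
    """
    row1, row2 = a + b, c + d
    col1 = a + c
    n = row1 + row2
    denom = comb(n, col1)

    def prob(x: int) -> float:
        # P(top-left cell == x) given the fixed margins
        return comb(row1, x) * comb(row2, col1 - x) / denom

    p_obs = prob(a)
    lo, hi = max(0, col1 - row2), min(row1, col1)
    # A small relative tolerance keeps tables tied with the observed one
    # from being dropped by floating-point rounding.
    p = sum(prob(x) for x in range(lo, hi + 1) if prob(x) <= p_obs * (1 + 1e-7))
    return min(1.0, p)
```

In use, each animal would be classified as passing or failing with a criterion fixed before unblinding (for example, a discrimination index above a predetermined threshold), and the four counts passed as fisher_exact_two_sided(pass_grouped, fail_grouped, pass_isolated, fail_isolated). The Figure 5 counts are not reproduced here. Where SciPy is available, scipy.stats.fisher_exact should give the same two-sided p-value.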

Highlights.

Strategies to maximize rigor and reproducibility in rodent behavioral assays

Replicability of translational rodent models as preclinical research tools

Key methods for one example, the novel object recognition cognitive test

Extensive cited literature directs the reader to detailed, appropriate methods

Acknowledgments

We thank Dr. Tracy King, NICHD, for her leadership and encouragement of the cross-IDDRC comparisons described in Table 1 and Figure 1. Supported by U54HD090260 (MG); U54HD090256 (AM, QC); U54HD086984 (WTO, ZZ, TA); U54HD090257 (LW, JGC); U54HD083092 (SV, RCS); U54HD090255 (NAA, MF); U54HD083091 (TBC, TMB); U54HD079125 (JNC).


References

- Akkerman S, Blokland A, Reneerkens O, van Goethem NP, Bollen E, Gijselaers HJ, Lieben CK, Steinbusch HW, Prickaerts J. Object recognition testing: methodological considerations on exploration and discrimination measures. Behav Brain Res. 2012a;232:335–347. doi: 10.1016/j.bbr.2012.03.022.

- Akkerman S, Prickaerts J, Steinbusch HW, Blokland A. Object recognition testing: statistical considerations. Behav Brain Res. 2012b;232:317–322. doi: 10.1016/j.bbr.2012.03.024.

- Albasser MM, Chapman RJ, Amin E, Iordanova MD, Vann SD, Aggleton JP. New behavioral protocols to extend our knowledge of rodent object recognition memory. Learn Mem. 2010;17:407–419. doi: 10.1101/lm.1879610.

- Anderson MJ, Barnes GW, Briggs JF, Ashton KM, Moody EW, Joynes RL, Riccio DC. Effects of ontogeny on performance of rats in a novel object-recognition task. Psychol Rep. 2004;94:437–443. doi: 10.2466/pr0.94.2.437-443.

- Antunes M, Biala G. The novel object recognition memory: neurobiology, test procedure, and its modifications. Cogn Process. 2012;13:93–110. doi: 10.1007/s10339-011-0430-z.

- Arque G, Fotaki V, Fernandez D, Martinez de Lagran M, Arbones ML, Dierssen M. Impaired spatial learning strategies and novel object recognition in mice haploinsufficient for the dual specificity tyrosine-regulated kinase-1A (Dyrk1A). PLoS One. 2008;3:e2575. doi: 10.1371/journal.pone.0002575.

- Baker M. Reproducibility: Check your chemistry. Nature. 2017;548:485–488. doi: 10.1038/548485a.

- Beck KD, Luine VN. Food deprivation modulates chronic stress effects on object recognition in male rats: role of monoamines and amino acids. Brain Res. 1999;830:56–71. doi: 10.1016/s0006-8993(99)01380-3.

- Begley CG, Ellis LM. Drug development: Raise standards for preclinical cancer research. Nature. 2012;483:531–533. doi: 10.1038/483531a.

- Belin D, Belin-Rauscent A, Everitt BJ, Dalley JW. In search of predictive endophenotypes in addiction: insights from preclinical research. Genes Brain Behav. 2016;15:74–88. doi: 10.1111/gbb.12265.

- Benice TS, Rizk A, Kohama S, Pfankuch T, Raber J. Sex-differences in age-related cognitive decline in C57BL/6J mice associated with increased brain microtubule-associated protein 2 and synaptophysin immunoreactivity. Neuroscience. 2006;137:413–423. doi: 10.1016/j.neuroscience.2005.08.029.

- Bertaina-Anglade V, Enjuanes E, Morillon D, Drieu la Rochelle C. The object recognition task in rats and mice: a simple and rapid model in safety pharmacology to detect amnesic properties of a new chemical entity. J Pharmacol Toxicol Methods. 2006;54:99–105. doi: 10.1016/j.vascn.2006.04.001.

- Bettis T, Jacobs LF. Sex differences in object recognition are modulated by object similarity. Behav Brain Res. 2012;233:288–292. doi: 10.1016/j.bbr.2012.04.028.

- Bevins RA, Besheer J. Object recognition in rats and mice: a one-trial non-matching-to-sample learning task to study ‘recognition memory’. Nat Protoc. 2006;1:1306–1311. doi: 10.1038/nprot.2006.205.

- Busch NA, Herrmann CS, Muller MM, Lenz D, Gruber T. A cross-laboratory study of event-related gamma activity in a standard object recognition paradigm. Neuroimage. 2006;33:1169–1177. doi: 10.1016/j.neuroimage.2006.07.034.

- Bussey TJ, Holmes A, Lyon L, Mar AC, McAllister KA, Nithianantharajah J, Oomen CA, Saksida LM. New translational assays for preclinical modelling of cognition in schizophrenia: the touchscreen testing method for mice and rats. Neuropharmacology. 2012;62:1191–1203. doi: 10.1016/j.neuropharm.2011.04.011.

- Button KS, Munafo MR. Incentivising reproducible research. Cortex. 2014;51:107–108. doi: 10.1016/j.cortex.2013.09.011.

- Cavoy A, Delacour J. Spatial but not object recognition is impaired by aging in rats. Physiol Behav. 1993;53:527–530. doi: 10.1016/0031-9384(93)90148-9.

- Chan W, Singh S, Keshav T, Dewan R, Eberly C, Maurer R, Nunez-Parra A, Araneda RC. Mice Lacking M1 and M3 Muscarinic Acetylcholine Receptors Have Impaired Odor Discrimination and Learning. Front Synaptic Neurosci. 2017;9:4. doi: 10.3389/fnsyn.2017.00004.

- Chang CH, Hsiao YH, Chen YW, Yu YJ, Gean PW. Social isolation-induced increase in NMDA receptors in the hippocampus exacerbates emotional dysregulation in mice. Hippocampus. 2015;25:474–485. doi: 10.1002/hipo.22384.

- Chida Y, Sudo N, Kubo C. Social isolation stress exacerbates autoimmune disease in MRL/lpr mice. J Neuroimmunol. 2005;158:138–144. doi: 10.1016/j.jneuroim.2004.09.002.

- Chitu V, Gokhan S, Gulinello M, Branch CA, Patil M, Basu R, Stoddart C, Mehler MF, Stanley ER. Phenotypic characterization of a Csf1r haploinsufficient mouse model of adult-onset leukodystrophy with axonal spheroids and pigmented glia (ALSP). Neurobiol Dis. 2015;74:219–228. doi: 10.1016/j.nbd.2014.12.001.

- Choi WS, Kim HW, Tronche F, Palmiter RD, Storm DR, Xia Z. Conditional deletion of Ndufs4 in dopaminergic neurons promotes Parkinson’s disease-like non-motor symptoms without loss of dopamine neurons. Sci Rep. 2017;7:44989. doi: 10.1038/srep44989.

- Clayton JA, Collins FS. NIH to balance sex in cell and animal studies. Nature. 2014;509:282–283. doi: 10.1038/509282a.

- Cole PD, Vijayanathan V, Ali NF, Wagshul ME, Tanenbaum EJ, Price J, Dalal V, Gulinello M. Memantine protects rats treated with intrathecal methotrexate from developing spatial memory deficits. Clin Cancer Res. 2013;19:4446–4454. doi: 10.1158/1078-0432.CCR-13-1179.

- Collaboration, O. S. Psychology. Estimating the reproducibility of psychological science. Science. 2015;349:aac4716. doi: 10.1126/science.aac4716.

- Collins FS, Tabak LA. Policy: NIH plans to enhance reproducibility. Nature. 2014;505:612–613. doi: 10.1038/505612a.

- Crabbe JC, Phillips TJ, Belknap JK. The complexity of alcohol drinking: studies in rodent genetic models. Behav Genet. 2010;40:737–750. doi: 10.1007/s10519-010-9371-z.

- Crabbe JC, Wahlsten D, Dudek BC. Genetics of mouse behavior: interactions with laboratory environment. Science. 1999;284:1670–1672. doi: 10.1126/science.284.5420.1670.

- Crawley JN. What’s Wrong With My Mouse? Behavioral Phenotyping of Transgenic and Knockout Mice. Hoboken, New Jersey: John Wiley & Sons, Inc; 2007.

- Crawley JN. Behavioral phenotyping strategies for mutant mice. Neuron. 2008;57:809–818. doi: 10.1016/j.neuron.2008.03.001.

- Crawley JN, Paylor R. A proposed test battery and constellations of specific behavioral paradigms to investigate the behavioral phenotypes of transgenic and knockout mice. Horm Behav. 1997;31:197–211. doi: 10.1006/hbeh.1997.1382.

- Cryan JF, Holmes A. The ascent of mouse: advances in modelling human depression and anxiety. Nat Rev Drug Discov. 2005;4:775–790. doi: 10.1038/nrd1825.

- Dai M, Reznik SE, Spray DC, Weiss LM, Tanowitz HB, Gulinello M, Desruisseaux MS. Persistent cognitive and motor deficits after successful antimalarial treatment in murine cerebral malaria. Microbes Infect. 2010;12:1198–1207. doi: 10.1016/j.micinf.2010.08.006.

- Dix SL, Aggleton JP. Extending the spontaneous preference test of recognition: evidence of object-location and object-context recognition. Behav Brain Res. 1999;99:191–200. doi: 10.1016/s0166-4328(98)00079-5.

- Douglas LA, Varlinskaya EI, Spear LP. Novel-object place conditioning in adolescent and adult male and female rats: effects of social isolation. Physiol Behav. 2003;80:317–325. doi: 10.1016/j.physbeh.2003.08.003.

- Drucker DJ. Never Waste a Good Crisis: Confronting Reproducibility in Translational Research. Cell Metab. 2016;24:348–360. doi: 10.1016/j.cmet.2016.08.006.

- Elizalde N, Gil-Bea FJ, Ramirez MJ, Aisa B, Lasheras B, Del Rio J, Tordera RM. Long-lasting behavioral effects and recognition memory deficit induced by chronic mild stress in mice: effect of antidepressant treatment. Psychopharmacology (Berl). 2008;199:1–14. doi: 10.1007/s00213-007-1035-1.

- Ennaceur A. One-trial object recognition in rats and mice: methodological and theoretical issues. Behav Brain Res. 2010;215:244–254. doi: 10.1016/j.bbr.2009.12.036.

- Ennaceur A, Cavoy A, Costa JC, Delacour J. A new one-trial test for neurobiological studies of memory in rats. II: Effects of piracetam and pramiracetam. Behav Brain Res. 1989;33:197–207. doi: 10.1016/s0166-4328(89)80051-8.

- Ennaceur A, Delacour J. A new one-trial test for neurobiological studies of memory in rats. 1: Behavioral data. Behav Brain Res. 1988;31:47–59. doi: 10.1016/0166-4328(88)90157-x.

- Ennaceur A, Meliani K. A new one-trial test for neurobiological studies of memory in rats. III. Spatial vs. non-spatial working memory. Behav Brain Res. 1992;51:83–92. doi: 10.1016/s0166-4328(05)80315-8.

- Feynman R, Leighton R. Surely You’re Joking, Mr. Feynman!: Adventures of a Curious Character. USA: W.W. Norton; 1985.

- Flier JS. Irreproducibility of published bioscience research: Diagnosis, pathogenesis and therapy. Mol Metab. 2017;6:2–9. doi: 10.1016/j.molmet.2016.11.006.

- Fritz AK, Amrein I, Wolfer DP. Similar reliability and equivalent performance of female and male mice in the open field and water-maze place navigation task. Am J Med Genet C Semin Med Genet. 2017;175:380–391. doi: 10.1002/ajmg.c.31565.

- Geyer MA. Developing translational animal models for symptoms of schizophrenia or bipolar mania. Neurotox Res. 2008;14:71–78. doi: 10.1007/BF03033576.