Abstract

From the perspective of constructivist theories, emotion results from learning assemblies of relevant perceptual, cognitive, interoceptive, and motor processes in specific situations. Across emotional experiences over time, learned assemblies of processes accumulate in memory that later underlie emotional experiences in similar situations. A neuroimaging experiment guided participants to experience (and thus learn) situated forms of emotion, and then assessed whether participants tended to experience situated forms of the emotion later. During the initial learning phase, some participants immersed themselves in vividly imagined fear and anger experiences involving physical harm, whereas other participants immersed themselves in vividly imagined fear and anger experiences involving negative social evaluation. In the subsequent testing phase, both learning groups experienced fear and anger while their neural activity was assessed with functional magnetic resonance imaging (fMRI). A variety of results indicated that the physical and social learning groups incidentally learned different situated forms of a given emotion. Consistent with constructivist theories, these findings suggest that learning plays a central role in emotion, with emotion adapted to the situations in which it is experienced.

Keywords: emotion, learning, situated cognition, situated conceptualization, constructivist theories

According to constructivist theories, emotions take situation-specific forms (e.g., Barrett, 2006a, 2006b, 2012, 2013, 2014, 2017; Gendron & Barrett, 2009; Wilson-Mendenhall, Barrett, Simmons, & Barsalou, 2011; Wilson-Mendenhall & Barsalou, 2016). In a situation that affords emotion, an emotional state is assembled from perceptual, cognitive, interoceptive, and motor processes relevant for interpreting and coordinating both physical and cognitive responses to the situation. Imagine, for example, stepping into a crosswalk as a speeding car running a red light approaches suddenly from the left. The fear experienced might engage perceptual processes for sensing physical threat, cognitive processes for imagining bodily harm, interoceptive processes for mobilizing action, and motor processes for avoiding the approaching vehicle. Alternatively, imagine being at a dinner party, failing to read the social milieu properly, and impulsively saying something offensive, such that an angry silence ensues. The fear experienced in this situation might engage perceptual processes for sensing social threat, cognitive processes for imagining social exclusion, interoceptive processes for inhibiting further impulsive comments, and motor processes for expressing regret facially and verbally.

From the constructivist perspective, different forms of an emotion are constructed dynamically in specific situations, with each form producing an emotional experience adapted to current conditions. Fear, for example, takes still other forms during mechanical difficulties on a plane, losing one’s job, choking on food, losing one’s spouse, and so on. We further assume that as different forms of an emotion are experienced, they become established in long-term memory as situated memories, which later influence emotional experiences in similar situations. When perceiving another rapidly approaching car on a subsequent occasion, the situated memory from the previous occasion becomes active, implicitly and rapidly, coordinating the cognitive, interoceptive, and motor processes that produce fear in the situation. The current study was designed to assess whether experiencing emotions such as fear and anger repeatedly in specific kinds of situations induces situation-specific emotional experiences when experiencing these situations again later.

Situated conceptualization

We utilize the construct of situated conceptualization to explain how situated forms of emotion are learned (Barsalou, 2003b, 2009, 2013, 2016a,b; Barsalou, Niedenthal, Barbey, & Ruppert, 2003; Yeh & Barsalou, 2006). According to this account, the brain is a situation processing architecture, with multiple networks simultaneously using concepts in memory to interpret various elements of the current situation, including the setting, agents, objects, actions, events, mental states, and self-relevance. As these individual elements are each conceptualized, a global conceptualization of the situation assembles them into a coherent interpretation of what is occurring across the situation as a whole (e.g., how an event bears on one’s self interests, how various coping actions might regulate the situation and one’s bodily responses to it; cf. Lazarus, 1991). Together, these elemental and global conceptualizations establish a situated conceptualization that represents and interprets the situation at multiple levels. While consuming a croissant at a coffee house, for example, a situated conceptualization is constructed that includes conceptualizations of the coffee house, the croissant, its goal relevance, eating, and the emotion experienced.

As a situated conceptualization becomes assembled to interpret a situation, it is superimposed on memory via associative mechanisms. Once stored, it can later be reactivated when a similar situation is encountered again, or just part of the original situation. Once reactivated, the situated conceptualization reinstates itself in the brain and body, reproducing a state similar to the original experience, which may then be further adapted to the current situation via executive processing. Because the reactivated conceptualization is grounded in perceptual, cognitive, interoceptive, and motor systems, it does not simply describe the situation symbolically, but instead activates perceptions, cognitions, bodily states, actions, and emotions associated with the original situation. To the extent that the reinstated memory is appropriate for the current situation, it provides useful pattern completion inferences about it. When returning to the coffee house, for example, the situated conceptualization constructed previously in it might become active, simulating the positive emotion of eating the croissant, which then motivates consuming another.

Over time, large populations of situated conceptualizations become increasingly established in memory for an individual. Because different people store different populations of situated conceptualizations from different life experiences, individual differences result in applying these memories to current situations. To the extent that individuals have different emotional experiences of the same coffee house, for example, they store different situated conceptualizations that later produce different anticipatory emotions via pattern completion inference.

Emotions as categories of exemplar memories

From this perspective, the development of emotion categories results from constructing situated conceptualizations in emotional situations and organizing them into categories that become increasingly established in memory. We assume that this account applies to emotion categories that are both ‘basic’ (e.g., fear, anger, sadness, disgust, happiness) and ‘non-basic’ (e.g., dread, guilt, hope, love, peacefulness). We further assume that a variety of sociocultural mechanisms, especially language, are responsible for organizing and differentiating emotional experiences over the course of development. As a child feels anger across different situations, for example, hearing the word “anger” associated with these experiences causes the respective situated conceptualizations to become organized together (reflecting the culture’s conventions for what constitutes anger). Additionally, as the child experiences new anger situations similar to earlier ones, situated conceptualizations for the new situations become integrated into the anger category, as its situated conceptualizations become active to guide current emotion via pattern completion inferences. As situated conceptualizations accumulate for different emotions, guided by the sociocultural and linguistic regularities that scaffold learning, the brain constructs differentiated emotion in relevant situations with increasing ease and efficiency.

To the extent this account is correct, it follows that learning emotion categories should have much in common with learning non-emotion categories, especially when viewing learning from the perspective of exemplar theories (Wilson-Mendenhall et al., 2011). If one views situated conceptualizations in an emotion category as the category’s exemplars, then learning an emotion category, such as fear, should proceed similarly to learning a natural category, such as apple. Similar to how learning populations of exemplars underlies the acquisition of natural and artifact categories (e.g., Murphy, 2002; Nosofsky, 2011), learning populations of exemplars underlies the acquisition of emotion categories. Analogous to how prototypical animals and artifacts emerge from acquired populations of animal and artifact exemplars (Hintzman, 1986; Medin & Schaffer, 1978), prototypical emotions emerge from acquired populations of emotion exemplars (Wilson-Mendenhall, Barrett, & Barsalou, 2015). In each case, prototypes are exemplars that are, on average, most frequent and most similar relative to other category exemplars, and that are most ideal with respect to goals associated with using the category (Barsalou, 1985; Hampton, 1979; Rosch & Mervis, 1975).1
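The similarity component of this exemplar account can be illustrated with a minimal sketch. It is not a model used in the study: the feature dimensions, the feature values, the exponential similarity function, and all names below are hypothetical, and the frequency and ideals components of typicality are left out. Each exemplar is scored by its mean similarity to the other exemplars in its category, and the highest-scoring exemplar is treated as the most prototypical.

```python
import math

def similarity(a, b, c=1.0):
    """Exponential-decay similarity over city-block distance (an assumed form)."""
    distance = sum(abs(x - y) for x, y in zip(a, b))
    return math.exp(-c * distance)

def typicality(exemplars):
    """Mean similarity of each exemplar to every other exemplar in the category."""
    scores = {}
    for name, features in exemplars.items():
        others = [f for n, f in exemplars.items() if n != name]
        scores[name] = sum(similarity(features, o) for o in others) / len(others)
    return scores

# Hypothetical fear exemplars coded as (physical threat, social threat, arousal).
fear_exemplars = {
    "speeding_car": (1.0, 0.0, 0.9),
    "choking": (0.9, 0.1, 0.8),
    "dinner_party": (0.0, 1.0, 0.6),
}

scores = typicality(fear_exemplars)
most_typical = max(scores, key=scores.get)
```

With these toy values, the two physical exemplars resemble each other far more than either resembles the social exemplar, so a physical exemplar emerges as most typical. A learner whose stored population was dominated by social exemplars would show the reverse pattern.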

Also similar to other categories, emotion categories are relatively unique. Just as any other important kind of category assembles a unique collection of features and processes (e.g., tools, foods, animals, people), so do emotion categories. For example, emotion categories typically assemble biologically-based processes for arousal, valence, reward, action, and cognitive control (e.g., Barrett & Bliss-Moreau, 2009). Importantly, these biologically-based processes appear to underlie emotional states across all emotion categories (Barrett & Satpute, 2013; Lindquist et al., 2012; Wilson-Mendenhall, Barrett, & Barsalou, 2013a). As a consequence, emotions, as a whole, constitute a special category, assembling somewhat unique processes, many of which have strong biological origins.

Within the broad category of emotions, emotion categories develop that reflect statistical regularities in the specific processes assembled to construct situated conceptualizations. Fear, anger, and disgust, for example, exhibit different statistical regularities in the perceptual, cognitive, interoceptive, and motor processes assembled for them.

Emotion as categorization and inference

Once the situated conceptualizations that constitute an emotion category become established in memory, emotion typically results from the process of categorization, namely, from conceptual acts (e.g., Barrett, 2006b, 2009, 2012, 2013, in press). From this theoretical perspective, emotion categorization operates much like categorization in general (e.g., for artifact and animal categories). On perceiving an affective stimulus or situation, the emotion category whose situated conceptualizations provide the best fit categorizes it. On meeting with one’s boss, for example, situated conceptualizations stored from previous experiences become active and begin to elicit the emotion stored in the reactivated memories as pattern completion inferences. Elements of the situated conceptualization not (yet) present in the situation are simulated or enacted, including perceptual anticipations, assessments of self-relevance, appropriate bodily states, and preparation for action (both cognitive and motoric). Thus, the conceptual act not only categorizes the situation as an instance of a particular emotion but also contributes to embodied experiences of the emotion.

To the extent that individuals have different emotional experiences in a given situation (e.g., meetings with one’s boss), they categorize the situation differently, with different emotions resulting. Even when individuals activate the same emotion category in the situation (e.g., fear), the specific form produced may vary as a function of their previous situational experience (e.g., fear involving an unreasonable work request vs. job loss).

Explaining distributional properties of emotion categories

Reviews and meta-analyses of emotion document three distributional properties of emotion categories: (1) statistical regularities, (2) non-homogeneity, and (3) non-selectivity (e.g., Barrett, 2006a,b; Kober et al., 2008; Lindquist et al., 2012; Vytal & Hamann, 2010). As described next, viewing emotions as learned categories of situated conceptualizations explains these distributional properties naturally.

First, for a given emotion such as fear, statistical regularities typically occur for facial expression, action, subjective experience, peripheral physiology, and neural activity (e.g., Kober et al., 2008; Lindquist et al., 2012; Vytal & Hamann, 2010). Certain facial expressions, for example, are somewhat more likely for fear than for other emotions; similarly, certain brain activations are somewhat more likely for fear, as are certain forms of peripheral physiology. From the constructivist perspective, these regularities result because assembling processes to produce emotional states is not random. Because different emotions differ systematically in the processes they assemble, regularities result in the forms they take.

Nevertheless, as reviews document, these regularities are relatively weak, reflecting the distributional properties of non-homogeneity and non-selectivity (e.g., Barrett, 2006a,b; Kober et al., 2008; Lindquist et al., 2012). Non-homogeneity results because the processes that compose different exemplars of the same emotion vary widely across exemplars. A specific facial expression, for example, does not occur for all exemplars of fear, but only for some, with a wide variety of different facial expressions occurring across exemplars. Similarly, a particular cardiovascular response does not occur for all fear experiences, nor does the activation of a particular brain area, nor the elicitation of a particular coping response. Instead, each emotional situation produces a specific emotional response adapted to current situational constraints. As a result, no emotional process is common across all instances of the same emotion.

Non-selectivity results because the processes used to construct exemplars of one emotion are often used frequently to construct exemplars of other emotions as well. The action of retreating, for example, may be useful for coping in some instances of fear, but may also be useful for coping with some instances of disgust, anger, and even happiness (e.g., when being happy about something might offend someone; Barrett et al., 2007). Similarly, the utilization of a particular process may be relevant across many emotions, not just one (e.g., the amygdala signaling attentional relevance; the insula providing interoceptive feedback; Lindquist et al., 2012).

Emotion coherence and communication

People often have the sense that emotions constitute coherent categories, namely, that each emotion shares a well-defined set of core features across its instances. Furthermore, because emotions appear to have conceptual cores, people can communicate clearly and effectively about the emotion that they or someone else is experiencing. How are coherence and communication possible if emotions result from categories of exemplar memories that are non-homogeneous and non-selective? How could an emotion, such as fear, appear coherent? How could two people talking about a fearful experience converge on a similar understanding?

The problems of non-homogeneity and non-selectivity apply to categories in general, not just to emotion categories (e.g., Wittgenstein, 1953). In general, most categories do not have core features common across category members that determine category membership (e.g., Hampton, 1979; Rosch & Mervis, 1975). Instead of coherence within categories resulting from core features, coherence results from statistical regularities associated with family resemblance structures (Rosch & Mervis, 1975) and radial category structures (Lakoff, 1987). Furthermore, only a small subset of a category’s exemplars may be relevant for representing, understanding, and/or using a category on a given occasion, such that core features are neither necessary nor relevant (e.g., Medin & Ross, 1989; Spalding & Ross, 1994; cf. Barsalou, 2003a).

Even when categories do not have core features, they nevertheless appear coherent to people. For various reasons, people may create the illusion that core features exist for a category (e.g., Brooks & Hannah, 2006), or they may create the fiction that a category has an essence (e.g., Gelman, 2003). In each case, cognitive structure added to exemplars creates an illusion of coherence. Another possibility is that using the same word (e.g., “fear”) when referring to the diverse non-homogeneous exemplars of a category creates the illusion that the underlying features of the category are as stable as its name (e.g., Barsalou, 1989; James, 1950/1890).

Experiment Overview and Predictions

As just described, we assume that an individual possesses a large population of situated conceptualizations (exemplars) in memory for a given emotion category, based on previous emotional experience. Furthermore, when a new situation similar to one of these situated conceptualizations is encountered, the previous situated conceptualization becomes active and produces a similar emotional state in the current moment. It follows that if a person experiences an emotion multiple times in a new kind of situation, then new situated conceptualizations for the emotion become increasingly established. Furthermore, these situated conceptualizations are likely to become active later in related situations, producing similar emotional states. Emotion learning should therefore occur that affects how the person experiences emotion in this new kind of situation.

To assess whether people learn situation-specific forms of an emotion in this manner, we manipulated the situational experience that two participant groups had with the same emotion, and then assessed whether these different learning experiences affected subsequent experiences of the emotion. We describe the learning and testing phases next, along with relevant predictions for each.

Learning phase

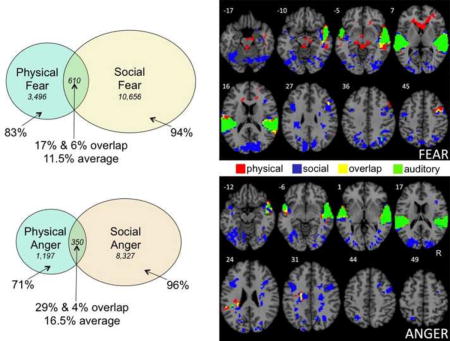

As Figure 1 illustrates, two learning groups consisting of different participants participated in the experiment. Across sessions, one group of participants experienced fear and anger only in physical harm situations (e.g., being run over by a car while walking in a crosswalk). Conversely, a second participant group experienced fear and anger only in social threat situations (e.g., being ridiculed after saying something unpopular at a dinner party).

Figure 1.

Overview of the two learning sessions and the scanning session.

To implement an effective situational manipulation, the physical and social situations were constructed to be distinctly different, having different statistical regularities. In physical danger situations, the immersed participant was the only person present in an outdoor setting, and was responsible for creating a threat of bodily harm. As a result, anger was directed toward the self, and fear involved imminent physical danger. Conversely, in social evaluation situations, other people were present in an indoor setting who were responsible for putting the immersed participant in a risky or difficult social situation. As a result, anger was directed toward someone else, and fear involved negative evaluation by others. Although the specific situations within each situation type varied considerably, they were nevertheless designed to share the situational regularities just described.

Across the two learning sessions illustrated in Figure 1, participants were asked to generate either fear or anger (not both) while immersed in a physical or social situation. Once immersed in the situation, participants performed memory, imagery, being there, and typicality judgments at different points in the learning procedure (Figure 1). As later results indicate, participants were generally successful at immersing themselves in the physical and social situations as instructed.

As much research demonstrates, situation immersion is a powerful method for evoking emotion in laboratory environments (e.g., Corradi-Dell’Acqua, Hofstetter, & Vuilleumier, 2014; Lench, Flores, & Bench, 2011; Wilson-Mendenhall et al., 2011, 2013a, 2013b). The fact that people spend much time each day experiencing emotions in response to imagined events also attests to the power of this method (Killingsworth & Gilbert, 2010). Furthermore, many studies demonstrate that the neural activity associated with imagining events overlaps significantly with the neural activity associated with actually experiencing them (Barsalou, 2008). Finally, we found elsewhere that the physical and social situations used here induce immersion in the respective situations (Wilson-Mendenhall et al., 2013b). Whereas the physical situations induce action planning associated with handling a physical threat, the social situations induce social inference and mentalizing associated with being evaluated negatively.

According to the constructivist perspective, participants should assemble a situated conceptualization on each trial to represent each learning situation and to feel emotion in it, perhaps utilizing related situated conceptualizations already in memory. Thus, participants who experienced emotion in physical harm situations should have typically assembled processes relevant for perceiving a physical threat in an outdoor setting, anticipating bodily harm, and preparing motoric actions to remain safe. Conversely, participants who experienced emotion in social evaluation situations should have typically assembled processes relevant for perceiving a social threat in an indoor setting, anticipating a decrease in social value, and preparing interpersonal actions to minimize social damage. Across learning trials, each group should have increasingly established assemblies of processes relevant for processing the situational regularities encountered repeatedly. As a result, each group should have implicitly learned to experience fear and anger differently within the experimental context.

Along with fear and anger, two non-emotional mental states—plan and observe—were also included during the learning phase. As for fear and anger, plan and observe were each experienced multiple times in either physical or social situations, but not both (mixed randomly with fear and anger within a learning group). Besides functioning as fillers, plan and observe provided an opportunity to assess effects of situational learning on non-emotional mental states. From hereon, “mental state” will refer to fear, anger, plan, and observe, so that all four can be referred to as a group.

Test phase

Following the second learning session, participants produced experiences of fear, anger, plan, and observe while undergoing functional magnetic resonance imaging (fMRI). At the start of each test trial, participants were cued with the word for fear, anger, plan, or observe, and asked to produce the associated experience for 3 seconds. As described for the learning phase, participants had extensive practice earlier producing experiences of fear, anger, plan, and observe upon hearing the respective words. Cuing experiences with words in this manner has been used effectively in many related paradigms (e.g., Addis, Wong, & Schacter, 2007; Lench et al., 2011; Rubin, 1982).

After generating an experience of fear, anger, plan, or observe, participants then listened to one of the situations that they had experienced earlier during the learning phase. As they listened to the situation, they were asked to embed their previously cued experience of fear, anger, plan, or observe into the developing situation. Finally, participants judged how typical it was to experience the previously cued state in the situation. The situation was always one that had been experienced earlier during the learning phase. Participants who received physical situations during learning only received the same physical situations again during testing; participants who received social situations during learning only received the same social situations again. Thus, the testing context reinstated the learning context, such that participants were likely to experience emotional states during testing similar to those they had experienced during learning earlier.

In the imaging analysis, the brain activations occurring during the 9 sec situation period were separated from the activations initiated during the 3 sec before the situation (i.e., to the initial mental state word that cued participants to experience fear, anger, plan, or observe). Of primary interest was whether the initial 3 sec activations for fear and anger, in particular, differed between learning groups as a function of the different situations experienced during the learning phase. By focusing on activations during this initial phase, we were able to assess the neural activity associated with the same physical stimulus (e.g., the cue word “fear”) prior to a situation being presented. In previous work, we found that presenting concepts initially, prior to subsequent task-relevant material, provided an effective means of establishing the neural systems used to process the initial concepts (Wilson-Mendenhall, Simmons, Martin, & Barsalou, 2013).

Our analyses focused on voxel activations significantly active above the resting state baseline for the following reasons. First, we wanted to remove activations associated with the auditory processing of mental state cues during the first 3 sec. By removing voxels significantly active above baseline across all four mental states in a given learning condition, we assumed that we would primarily be removing activations associated with perceptual stimulus processing peripheral to our hypotheses. Because higher-level cognitive and affective processing is likely to vary considerably across the four mental states, we assumed that only neural activations associated with perceptual stimulus processing would be shared across them. If so, then only auditory processing areas should become active, not other areas associated with cognitive and affective processing.2
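The masking logic described above can be illustrated with a minimal sketch. It is not the analysis pipeline used in the study: voxels are represented as integer indices in plain Python sets, the function name is hypothetical, and the toy data are invented. For one learning condition, the voxels active above baseline for all four mental states are identified and removed from each state's activation set.

```python
def remove_shared_voxels(active_by_state):
    """Drop voxels that are active above baseline for every mental state.

    active_by_state maps a mental-state name to the set of voxel indices
    significantly active above baseline for that state (toy representation).
    Returns the per-state sets with shared voxels removed, plus the shared set.
    """
    shared = set.intersection(*active_by_state.values())
    remaining = {state: voxels - shared for state, voxels in active_by_state.items()}
    return remaining, shared

# Invented voxel indices for one learning condition.
active = {
    "fear": {1, 2, 3, 10},
    "anger": {1, 2, 4},
    "plan": {1, 2, 5, 10},
    "observe": {1, 2, 6},
}

remaining, shared = remove_shared_voxels(active)
```

In this toy case voxels 1 and 2 are common to all four states and are removed, whereas voxel 10 is retained because only fear and plan share it.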

Assessing the remaining voxels significantly active above the resting state baseline allowed us to test hypotheses that follow from constructivist theories of emotion. In general, if producing emotional and non-emotional mental states in different situations assembles different cognitive, interoceptive, and motor processes during the learning phase, then participants should activate different neural areas for the same mental state later during the test phase. Three specific predictions follow.

First, the number of voxels that become active above baseline to represent a situated emotional experience should depend on the specific collection of processes assembled. Depending on the situation, different processes could become relevant for the same emotion, such that the total amount of neural activity above baseline varies. Rather than a constant number of voxels becoming active across physical and social situations to represent a mental state, large situational differences in the voxels active above baseline could result. Furthermore, these situational effects could vary considerably, with some mental states assembling more processes in social situations, and with other mental states assembling more processes in physical situations.

Second, if the physical and social learning groups assembled different neural processes for the same mental state during the learning phase, they should activate different neural areas when later cued to produce mental states during the test phase. If so, then the neural areas active above baseline for the same mental state across the physical and social learning groups should differ significantly (analogous to the non-overlapping activations observed for mental states when primed in physical vs. social situations; Wilson-Mendenhall et al., 2011). To test this prediction, we used conjunction analysis to assess the overlap in voxels active above baseline for the same mental state across the two learning conditions.
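The overlap measure behind this prediction can be sketched as follows. This is only an illustration under simplifying assumptions: voxels are integer indices in Python sets, the function name and the proportion-of-union summary are hypothetical choices, and the data are invented. The study's actual conjunction analysis operated on thresholded statistical maps.

```python
def conjunction(physical_voxels, social_voxels):
    """Summarize overlap in above-baseline voxels for one mental state
    across the physical and social learning groups (toy representation)."""
    shared = physical_voxels & social_voxels
    union = physical_voxels | social_voxels
    return {
        "shared": len(shared),
        "physical_only": len(physical_voxels - social_voxels),
        "social_only": len(social_voxels - physical_voxels),
        # Proportion of all active voxels that both groups have in common.
        "proportion_shared": len(shared) / len(union) if union else 0.0,
    }

# Invented voxel indices for the cue word "fear" in each group.
fear_physical = {1, 2, 3, 4}
fear_social = {3, 4, 5}

fear_overlap = conjunction(fear_physical, fear_social)
```

A low shared proportion for a mental state would be consistent with the prediction that the two groups learned different situated forms of it.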

Third, if the same mental state assembles different processes in the physical and social conditions, different intrinsic networks should become active. To assess this hypothesis, we assessed the number of voxels active above baseline in Yeo et al.’s (2011) intrinsic network masks. If different sets of neural processes are assembled for the same mental state in different learning situations, then different distributions of activations across neural networks should be observed.
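The network tally can be sketched in the same toy representation. The mapping from voxel index to network label below is invented for illustration and does not reproduce the Yeo et al. (2011) masks; the function name is likewise hypothetical. The function counts how many above-baseline voxels fall within each network, ignoring voxels that lie outside every mask.

```python
from collections import Counter

def count_by_network(active_voxels, network_of_voxel):
    """Count active voxels per network; voxels outside all masks are skipped."""
    return Counter(
        network_of_voxel[v] for v in active_voxels if v in network_of_voxel
    )

# Invented voxel-to-network assignment (stand-in for real network masks).
network_of_voxel = {1: "Default", 2: "Default", 3: "Limbic", 4: "Somatomotor"}

# Invented above-baseline voxels for "fear" in each learning group.
fear_physical = {1, 3, 4, 9}   # voxel 9 lies outside every mask
fear_social = {1, 2, 3}

physical_counts = count_by_network(fear_physical, network_of_voxel)
social_counts = count_by_network(fear_social, network_of_voxel)
```

Comparing the two resulting distributions, for example with a test of independence, is one way to ask whether the same mental state engages different networks after different learning histories.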

Finally, two additional analyses assessed other issues of interest. First, it follows from constructivist views that the neural activations for two emotions, such as fear and anger, could vary in similarity as a function of situation. In some situations, fear and anger might assemble more similar sets of processes than in others. If so, then the amount of overlap in activations for fear and anger above baseline should vary between the physical and social learning conditions. Second, a final analysis assessed the possibility that instead of generating situated mental states to the cue words in the test phase, participants simply anticipated the situations to follow. If so, then activations above baseline for all four mental states should be the same within each learning condition.

Methods

Participants

Thirty right-handed, native-English speakers from the Emory University community, ranging in age from 20 to 50 (average 28.17), participated in the experiment. Fifteen participants were randomly assigned to each of the two situation groups (with 7 women in the physical situations group, and 8 women in the social situations group). Two additional participants were dropped due to excessive head motion in the scanner, and two more were dropped due to low temporal signal-to-noise ratios in their BOLD data. During the first learning session, participants provided informed consent and were screened for any potential problems that could arise during an MRI scan. Participants had no history of psychiatric illness and were not currently taking any psychotropic medication. Participants received $100 in compensation, along with anatomical images of their brain.

Materials

Four mental-state words were used throughout both the learning and scanning phases: fear, anger, plan, and observe. Plan and observe provided filler trials that served to: (1) create diversity during the learning and scanning sessions (i.e., not just two emotions); (2) help establish baselines used in scanning analyses; (3) provide an opportunity to assess situational learning effects on non-emotional mental states.

The 50 situations used in this experiment were a subset of the 66 situations developed by Wilson-Mendenhall et al. (2011), and included 25 physical danger situations and 25 social evaluation situations. The scanning session used 20 situations of each type; the practice session just before the scanning session used the 5 other situations of each type. Each situation was designed so that each of the four mental state words would elicit a mental state that could be experienced in it plausibly. A broad range of real-world situations served as the content of the experimental situations. The physical situations involved vehicles, pedestrians, water, eating, wildlife, fire, power tools, and theft. The social situations involved friends, family, neighbors, love, work, courses, public events, and service.

A full and core form of each situation was constructed, with the latter being a subset of the former. Each full and core situation described an emotional situation from the first-person perspective, so that the participant could immerse him- or herself in it. The full form served to provide a rich, detailed, and affectively compelling description of a situation. The core form was a distilled version of the full form that only included its essential aspects. The purpose of the core form was to minimize presentation time in the scanner, so that the number of necessary trials could be completed in the time available. As described for the Learning Procedure in the Supplemental Materials, participants practiced reinstating the full form of a situation when receiving its core form during the learning phase, so that they would be prepared to also reinstate the full form during the scanning phase when receiving its core form. Table 1 presents examples of the full and core situations.

Table 1.

Examples of physical danger and social evaluation situations in the template format used to construct them.

| Examples of Physical Danger Situations |

| Full Version |

| (P1) You step off the curb to cross a busy street without looking. (S1) Suddenly you see traffic coming toward you from the corner of your eye. |

| (P2A) You leap to avoid an approaching car. (P2C) It hits you and sweeps your legs off the ground. (S2) You tumble onto the hood. (S3) You feel the car skidding to a stop. |

| Core Version |

| (P1) You step off the curb to cross a busy street without looking. |

| (P2) You leap to avoid an approaching car, but it hits you and sweeps your legs off the ground. |

| Full Version |

| (P1) You’re standing by a very shallow swimming pool. (S1) Because you can see that the bottom is so close to the surface of the water, you realize that diving in could be dangerous. (P2A) You dive in anyway. (P2C) Your head bangs hard on the concrete bottom. (S2) You put out your hands to push away. (S3) You feel yourself swallowing water. |

| Core Version |

| (P1) You’re standing by a very shallow swimming pool. |

| (P2) You dive in anyway, and your head bangs hard on the concrete bottom. |

| Examples of Social Evaluation Situations |

| Full Version |

| (P1) You’re at a dinner party with friends. (S1) A debate about a contentious issue arises that gets everyone at the table talking. (P2A) You alone bravely defend the unpopular view. (P2C) Your comments are met with sudden uncomfortable silence. (S2) Your friends are looking down at their plates, avoiding eye contact with you. (S3) You feel your chest tighten. |

| Core Version |

| (P1) You’re at a dinner party with friends. |

| (P2) You alone bravely defend the unpopular view, and your comments are met with sudden uncomfortable silence. |

| Full Version |

| (P1) You’re checking e-mail during your morning routine. (S1) You hear a familiar ping, indicating that a new e-mail has arrived. (P2A) A friend has posted a blatantly false message about you on Facebook. (P2C) It’s about your love life. (S2) The lower right corner of the website shows 1,000 hits already. (S3) You feel yourself finally exhale after holding in a breath. |

| Core Version |

| (P1) You’re checking e-mail during your morning routine. |

| (P2) A friend has posted a blatantly false message on Facebook about your love life. |

Note. The label preceding each sentence (e.g., P1) designates its role in the template, as described in the materials section.

As Table 1 illustrates, situation templates were used to construct the full and core situations. Each template for the full situations specified a sequence of six sentences: three primary sentences (Pi) also used in the related core situation, and three secondary sentences (Si) not used in the core situation that provided additional relevant detail. The two sentences in each core situation were created by using P1 as the first sentence and a conjunction of P2A and P2C as the second sentence.

For the physical situations, the template specified the following six sentences in order: P1 described a setting and activity performed by the immersed participant in the setting, along with relevant personal attributes; S1 provided visual detail about the setting; P2A described an action (A) of the immersed participant; P2C described the consequence (C) of that action; S2 described the participant’s action in response to the consequence; S3 described the participant’s resulting external somatosensory experience (on the body surface). The templates for the social situations were similar, except that S1 provided auditory detail about the setting (instead of visual detail), S2 described another person’s action in response to the consequence (not action by the immersed participant), and S3 described the participant’s resulting internal bodily experience (not on the body surface). Different secondary sentences were used for the physical and social threat situations to assess issues addressed elsewhere on activations during the situations.

High-quality audio recordings were created for the full and core versions of each situation, spoken by an adult American woman. The prosody in the recordings expressed slight emotion, so that the situations did not seem strangely neutral. The four mental state words were recorded similarly. Each core situation lasted about 8 sec or slightly less.

Procedure

Figure 1 provides an overview of the procedure described in detail below.

Learning procedure

During the first learning session on Day 1, participants performed two tasks (memory judgments, imagery judgments) designed to produce implicit learning of each mental state (fear, anger, plan, observe) in either physical or social situations. On each learning trial, participants heard a mental state word first, followed immediately by either the full or core version of a situation, and were asked to imagine experiencing the mental state in the situation over the course of listening to it. Participants were further asked to experience the situation from the first-person perspective, to construct mental imagery of the situation as if it were actually happening, and to experience the situation in as much vivid detail as possible.

In the memory task, participants received each mental state word with the full version of each physical or social situation, with the 25 trials for each of the 4 mental states randomly intermixed across the 100 trials. On each trial, participants judged how familiar they were with experiencing the mental state in the situation, whether they had actually experienced it, and how recently (if ever) they had experienced it.

In the subsequent 100 trials for the imagery task, participants received each mental state word with the core version of each physical or social situation, and were asked to practice reinstating the full version heard in the previous task. On each trial, participants rated the vividness of the imagery that they experienced for the mental state in the situation on four modalities: vision, audition, body, and thought (affect was not mentioned explicitly for thought).

One to three days later (typically two), participants returned for a second learning session and the scanning session. During the second learning session, participants again received each mental state word with the full version of each physical or social situation and judged how much they experienced “being there” in it. The full versions were used again to refresh participants’ memories of the full situations, prior to the scanning session when they would receive the core versions. The Supplemental Materials provide further details about the three learning tasks.

Practice run

Immediately following the final learning task, participants were introduced to the task that they would perform in the scanner, shown how to use the button box, and told that both complete and catch trials would be randomly intermixed (details provided in the next section). Participants then practiced the task for the equivalent of one scanner run outside the scanner, using 5 of the 25 situations received during the learning task (not used in the critical scanning runs).

Scanning procedure

On each complete trial of the scanning task, participants heard a mental state word (fear, anger, plan, observe) for 3 sec, followed by a core version of a situation studied earlier during learning for 9 sec. Participants then judged how typical it would be to experience the mental state in the situation, responding on a button box with 3 (very typical), 2 (somewhat typical), or 1 (not typical). Participants were reminded to immerse themselves in the mental state and situation while listening to them, and to experience them as vividly as possible. To facilitate immersion, participants were asked to perform the task with their eyes closed. Each mental state was followed once by each relevant situation, for a total of 80 complete trials (4 mental states each followed by the same 20 situations heard during learning but not during practice). The physical learning group only received physical situations, and the social group only received social situations.

Besides receiving complete trials that contained both a mental state and a situation, participants also received catch trials containing only a mental state, which enabled separation of BOLD activations for the mental states and situations on the complete trials (Ollinger, Corbetta, & Shulman, 2001; Ollinger, Shulman, & Corbetta, 2001). Each of the 4 mental states occurred 12 times as a catch trial, for a total of 48 catch trials, constituting 37.5% of the total trials, a proportion in the recommended range for an effective catch trial design (Ollinger et al., 2001a,b).

In each of 4 functional runs lasting 7 min 40 sec, participants received 20 complete trials and 12 catch trials (5 complete trials and 3 catch trials for each of the 4 mental states). All trial types were randomly intermixed in a fast event-related design, separated by random jitter that ranged from 3 to 15 sec in increments of 3 sec (obtained from the optseq2 program). On a given trial, participants could not predict whether they would receive a complete or catch trial, nor the mental state or situation presented. Although 5 situations repeated within the practice run, none of the 20 remaining situations ever repeated within a critical scanner run. Instead, the 4 presentations of each of the 20 critical situations were distributed randomly across the four runs, once following each of the 4 mental states.
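The trial counts reported above can be checked with a few lines of arithmetic. The following is only an illustrative sketch: the variable names are ours, and the stimulus-time figure covers the spoken word and situation periods alone (3 sec and 9 sec, as stated for the scanning task), not jitter or any other intervals.

```python
# Trial bookkeeping for the scanning design, using only counts stated in the text.
MENTAL_STATES = ["fear", "anger", "plan", "observe"]
N_RUNS = 4
COMPLETE_PER_STATE_PER_RUN = 5
CATCH_PER_STATE_PER_RUN = 3

complete_per_run = COMPLETE_PER_STATE_PER_RUN * len(MENTAL_STATES)
catch_per_run = CATCH_PER_STATE_PER_RUN * len(MENTAL_STATES)
total_complete = complete_per_run * N_RUNS
total_catch = catch_per_run * N_RUNS
catch_proportion = total_catch / (total_complete + total_catch)

# Stimulus time per run, before jitter:
# a complete trial has a 3 s word plus a 9 s situation; a catch trial has the word only.
WORD_SEC, SITUATION_SEC = 3, 9
stimulus_sec_per_run = (
    complete_per_run * (WORD_SEC + SITUATION_SEC) + catch_per_run * WORD_SEC
)

print(f"complete trials: {complete_per_run} per run, {total_complete} in total")
print(f"catch trials:    {catch_per_run} per run, {total_catch} in total")
print(f"catch proportion: {catch_proportion:.1%}")
print(f"stimulus time per run (no jitter): {stimulus_sec_per_run} s")
```

The remaining time in each 7 min 40 sec run is then available for the jittered intervals between trials.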

Participants received two anatomical scans, one before the first run, and one after the last run. Participants took a short break between scans and runs. Total time in the scanner was around 1 hr.

Image acquisition

Functional and structural MRI scans were collected in a 3T Siemens Trio scanner at Emory University, using a 12-channel head coil and a functional scan sequence designed to minimize susceptibility artifacts (56 contiguous 2 mm slices in the axial plane, interleaved slice acquisition, TR=3000ms, TE=30ms, flip angle=90°, bandwidth=2442Hz/Px, FOV=220mm, matrix=64, iPAT=2, voxel size=3.44×3.44×2mm). This scanning sequence was selected after testing a variety of sequences for susceptibility artifacts in orbitofrontal cortex, the temporal poles, and medial temporal cortex. We selected this sequence, not only because it minimized susceptibility artifacts by using thin slices and parallel imaging, but also because using 3.44 mm in the X-Y dimensions yielded a voxel volume large enough to produce good temporal signal-to-noise ratios.
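As a rough check on the voxel geometry just described, the in-plane resolution and voxel volume follow directly from the reported field of view, matrix, and slice thickness. This sketch assumes a square 64 × 64 in-plane matrix (the text gives only "matrix=64"), and the variable names are illustrative.

```python
# Acquisition parameters as reported in the text.
FOV_MM = 220.0    # field of view
MATRIX = 64       # assumption: square 64 x 64 in-plane matrix
SLICE_MM = 2.0    # slice thickness
N_SLICES = 56     # contiguous axial slices

in_plane_mm = FOV_MM / MATRIX                      # reported as 3.44 mm
acquired_volume_mm3 = in_plane_mm ** 2 * SLICE_MM  # volume of one acquired voxel
coverage_mm = N_SLICES * SLICE_MM                  # slab thickness for contiguous slices
resampled_volume_mm3 = 2.0 ** 3                    # 2 x 2 x 2 mm voxels after resampling

print(f"in-plane resolution: {in_plane_mm:.4f} mm")
print(f"acquired voxel volume: {acquired_volume_mm3:.2f} mm^3")
print(f"axial coverage: {coverage_mm:.0f} mm")
print(f"resampled voxel volume: {resampled_volume_mm3:.0f} mm^3")
```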

In each of the two anatomical runs, a T1 weighted volume was collected using a high resolution MPRAGE scan sequence that had the following parameters: 192 contiguous slices in the sagittal plane, single-shot acquisition, TR = 2300 ms, TE = 4 ms, flip angle = 8°, FOV = 256 mm, matrix = 256, bandwidth = 130 Hz/Px, voxel size = 1 mm × 1 mm × 1 mm.

Preprocessing and analysis

Image processing using the AFNI platform included standard preprocessing steps, along with resampling to 2×2×2mm voxels and smoothing with a 6 mm kernel. Regression analysis was performed on individual participants, using a Gamma function that modeled the mental state and situation periods as blocks. The 11 regressors included 4 for fear, anger, plan, and observe, 1 for the situation period, and 6 for motion parameters. One random-effects ANOVA was performed on each learning group to establish significant activations for each of the 4 mental state periods, relative to fixation baseline (using an individual voxel significance threshold of p<.005 and a cluster threshold of 221 voxels, yielding a whole brain threshold of p<.05 corrected for multiple comparisons). Results are also shown at lower cluster thresholds of 110 and 60 voxels to assess the robustness of the results observed at the 221-voxel threshold. Of interest was whether including smaller clusters at lower thresholds would alter the results for the critical analyses. Finally, pairs of individual significance maps were entered into conjunction analyses to test hypotheses of interest, as described later. The Supplemental Materials provide further details for all analyses.

Results

Behavioral Data

Learning phase

Table 2 presents the behavioral data from the two learning sessions. As the memory measures illustrate, participants were moderately familiar with the situations used throughout the experiment. Participants showed a general tendency to have experienced the situations either themselves or with another (an average 59% of the time), and to have experienced the situations within the past 5 years. As the imagery measures illustrate, participants generated moderate to strong imagery for the situations used in the experiment, and exhibited a moderate to strong ability to imagine being there when experiencing the situations. Together, the imagery and being there judgments indicate that participants were able to immerse themselves effectively in the situations.

Table 2.

Means (standard errors) for the behavioral data collected during training.

| Measure (Scale) | Physical Training: Fear | Physical Training: Anger | Physical Training: Plan | Physical Training: Observe | Social Training: Fear | Social Training: Anger | Social Training: Plan | Social Training: Observe |
|---|---|---|---|---|---|---|---|---|
| Memory Measures | | | | | | | | |
| Familiarity (1-7) | 3.57 (.11) | 3.37 (.10) | 3.24 (.10) | 3.27 (.10) | 4.18 (.10) | 4.29 (.10) | 4.21 (.09) | 4.18 (.10) |
| Experience (0-1) | .47 (.03) | .44 (.03) | .47 (.03) | .46 (.03) | .71 (.02) | .74 (.02) | .73 (.02) | .71 (.02) |
| Last time (1-5) | 1.94 (.06) | 1.87 (.06) | 1.94 (.06) | 1.93 (.06) | 2.72 (.07) | 2.77 (.07) | 2.75 (.07) | 2.76 (.07) |
| Imagery Measures | | | | | | | | |
| Vision (1-7) | 5.05 (.06) | 4.92 (.07) | 4.92 (.07) | 4.93 (.06) | 5.55 (.07) | 5.64 (.06) | 5.46 (.07) | 5.49 (.07) |
| Audition (1-7) | 3.27 (.09) | 3.17 (.08) | 3.11 (.08) | 3.24 (.09) | 4.84 (.08) | 4.78 (.08) | 4.67 (.08) | 4.66 (.08) |
| Body (1-7) | 4.75 (.07) | 4.69 (.07) | 4.67 (.08) | 4.73 (.08) | 5.28 (.07) | 5.29 (.07) | 4.84 (.07) | 4.97 (.07) |
| Thought (1-7) | 4.93 (.08) | 4.79 (.08) | 4.75 (.08) | 4.70 (.08) | 5.23 (.07) | 5.36 (.07) | 5.19 (.07) | 5.10 (.07) |
| Being There (1-7) | 5.13 (.06) | 4.78 (.07) | 4.69 (.07) | 4.71 (.07) | 5.27 (.07) | 5.41 (.06) | 5.08 (.07) | 5.11 (.06) |

Note. All measures assessed the experience of a mental state (fear, anger, plan, observe) in a situation (Physical, Social). For the familiarity measure (actual and vicarious experience): 1 = no familiarity, 4 = average familiarity, 7 = high familiarity. For the experience measure (actually experienced by oneself or with another): 1 = experienced, 0 = not experienced. For the last-time-experienced measure: 5 = past month, 4 = within the past year, 3 = within the past five years, 2 = any other earlier time, 1 = never. For the four measures of imagery vividness (visual, auditory, bodily, thought): 1 = no imagery, 4 = moderate imagery, 7 = highly vivid imagery. For the being there measure (immersion in the mental state and situation): 1 = not experiencing being there at all, 4 = experiencing being there a moderate amount, 7 = experiencing very much as if actually being there. See the Supplemental Materials for additional task details.

Scanning phase

Table 3 shows the average typicality data from the scanning session. As these data illustrate, participants found the mental states to range from being somewhat typical in the situations to being very typical (an average typicality of 2.13, where 1 = not typical, 2 = somewhat typical, 3 = very typical). For the emotions, participants found physical fear (2.66), social fear (2.37), and social anger (2.57) all to be relatively typical in the situations. In contrast, participants found physical anger (2.01) to be somewhat less typical. Physical anger may have exhibited somewhat less typicality for two reasons. First, fear may have been a stronger emotion in the physical situations than anger. Participants might have primarily focused on how to avoid physical harm, and may not have had sufficient time for feeling angry toward themselves about getting into dangerous situations. Anger may have appeared secondary to the primary goal of remaining safe. Second, participants may have had some difficulty feeling anger towards themselves, not feeling comfortable about directing blame at themselves in these situations. Wilson-Mendenhall et al. (2011) observed a similar pattern of results in their data.

Table 3.

Means (standard errors) for the behavioral data collected during the scanner task.

| Measure (Scale) | Physical Training: Fear | Physical Training: Anger | Physical Training: Plan | Physical Training: Observe | Social Training: Fear | Social Training: Anger | Social Training: Plan | Social Training: Observe |
|---|---|---|---|---|---|---|---|---|
| Typicality (1-3) | 2.66 (.04) | 2.01 (.05) | 1.82 (.05) | 1.73 (.04) | 2.37 (.05) | 2.57 (.04) | 1.90 (.05) | 2.00 (.05) |

Note. For the measure of how typical it would be to experience the mental state in the situation, 1 = not typical, 2 = somewhat typical, 3 = very typical. See the Supplemental Materials for additional task details.

Additionally, participants found it more typical to experience the two emotions in the situations (2.40) than the two non-affective mental states (1.86). Participants also found the mental states, overall, to be more typical in the social situations (2.21) than in the physical situations (2.05).
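The summary values in this section can be recomputed from the cell means in Table 3. The sketch below takes unweighted averages of the eight reported cell means, which is an assumption on our part; the original summaries may have been computed from trial-level data, so small rounding differences are possible.

```python
# Cell means for typicality (1-3 scale), copied from Table 3.
typicality = {
    "physical": {"fear": 2.66, "anger": 2.01, "plan": 1.82, "observe": 1.73},
    "social": {"fear": 2.37, "anger": 2.57, "plan": 1.90, "observe": 2.00},
}


def mean(values):
    """Unweighted arithmetic mean of an iterable of numbers."""
    values = list(values)
    return sum(values) / len(values)


overall = mean(v for group in typicality.values() for v in group.values())
emotions = mean(typicality[g][s] for g in typicality for s in ("fear", "anger"))
non_affective = mean(typicality[g][s] for g in typicality for s in ("plan", "observe"))
physical = mean(typicality["physical"].values())
social = mean(typicality["social"].values())

for label, value in [
    ("overall", overall),
    ("emotions (fear, anger)", emotions),
    ("non-affective (plan, observe)", non_affective),
    ("physical situations", physical),
    ("social situations", social),
]:
    print(f"{label}: {value:.3f}")
```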

Assessing Neural Activity for Situated Emotions and Their Overlap

The hypothesis of primary interest in this experiment was that the activations above the resting state baseline for a given emotion—fear or anger—would differ between the physical and social learning groups. Because each group experienced different situational regularities for the same emotion during the learning phase, they would learn to assemble different processes when experiencing it for the same critical stimulus.

Overview of the analysis procedure

Figure S1 and the associated text in the Supplemental Materials describe the three steps of the analysis used to assess this hypothesis in detail. The earlier section, Experiment Overview and Predictions, presented the rationale and logic of this analysis pipeline in detail. We summarize these three steps briefly before proceeding here. Again, the results presented only included activations during the initial 3 sec mental state phase of each trial, excluding activations from the subsequent 9 sec situation phase.

Within these initial 3 sec activations, we first removed shared activations across the four mental states most likely associated with auditory processing of the cues, so that we could focus on semantic activations. To establish shared perceptual activations, two conjunction analyses were performed across the four mental state conditions, one for physical situations, and one for social situations. In each conjunction analysis, activations were only included in the final conjunction if significantly active in all four conditions at the corrected p < .05 significance level. We will refer to these two sets of auditory-processing activations as the “physical baseline” and the “social baseline.”

Second, we established activations important for each mental state in each situation, excluding activations associated with auditory stimulus processing. Thus, the physical baseline was removed from the four activation maps for fear, anger, plan, and observe in the physical situations condition, and the social baseline was removed from the four activation maps for fear, anger, plan, and observe in the social situations condition. By removing common activations across both emotional and non-emotional mental states, subsequent analyses focused on activations only important for mental state processing, excluding shared activations associated with auditory processing. After establishing each of these eight maps, we computed the overall number of voxels in it across the brain and in each of Yeo et al.’s (2011) intrinsic network masks, enabling tests of hypotheses presented earlier.

Third, we established how much the resultant maps for each mental state overlapped across the physical and social learning situations. Specifically, the two activation maps for each mental state in the physical and social learning conditions were submitted to a conjunction analysis that assessed the overlap in their activations. In each analysis, three classes of voxels were identified: (1) voxels active only in the physical learning group, (2) voxels active only in the social learning group, and (3) voxels active in both the physical and social learning groups. By establishing these three voxel classes for each mental state, we were again able to assess how much the situation learning manipulation affected the generation of mental states in the test phase. The following sections present these steps in greater detail, together with related analyses and findings.
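The logic of the three steps can be illustrated with boolean voxel masks. This is a minimal sketch on a toy eight-voxel "brain", not the AFNI pipeline used in the study: the maps are hypothetical, the function names are ours, and each mask is assumed to mark voxels that already survived the voxel-level and cluster-level thresholds.

```python
import numpy as np


def shared_baseline(state_maps):
    """Step 1: voxels significantly active in all mental-state maps of one group."""
    return np.stack(list(state_maps.values())).all(axis=0)


def remove_baseline(state_map, baseline):
    """Step 2: drop baseline (auditory-processing) voxels from one mental-state map."""
    return state_map & ~baseline


def conjunction(physical_map, social_map):
    """Step 3: classify voxels as unique to one learning group or shared by both."""
    return {
        "physical_only": physical_map & ~social_map,
        "social_only": social_map & ~physical_map,
        "shared": physical_map & social_map,
    }


def as_mask(bits):
    return np.array(bits, dtype=bool)


# Hypothetical thresholded maps (True = significantly active voxel).
physical = {
    "fear": as_mask([1, 1, 1, 1, 0, 0, 0, 0]),
    "anger": as_mask([1, 1, 0, 0, 1, 0, 0, 0]),
    "plan": as_mask([1, 1, 0, 0, 0, 1, 0, 0]),
    "observe": as_mask([1, 1, 0, 0, 0, 0, 0, 0]),
}
social = {
    "fear": as_mask([1, 1, 0, 1, 1, 0, 0, 1]),
    "anger": as_mask([1, 1, 0, 0, 0, 0, 1, 0]),
    "plan": as_mask([1, 1, 0, 0, 0, 0, 0, 0]),
    "observe": as_mask([1, 1, 1, 0, 0, 0, 0, 0]),
}

physical_fear = remove_baseline(physical["fear"], shared_baseline(physical))
social_fear = remove_baseline(social["fear"], shared_baseline(social))

classes = conjunction(physical_fear, social_fear)
counts = {name: int(mask.sum()) for name, mask in classes.items()}
print(counts)
```

The voxel counts per class are what percentages of unique and shared activation would be computed from.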

Common auditory processing in the physical and social baselines

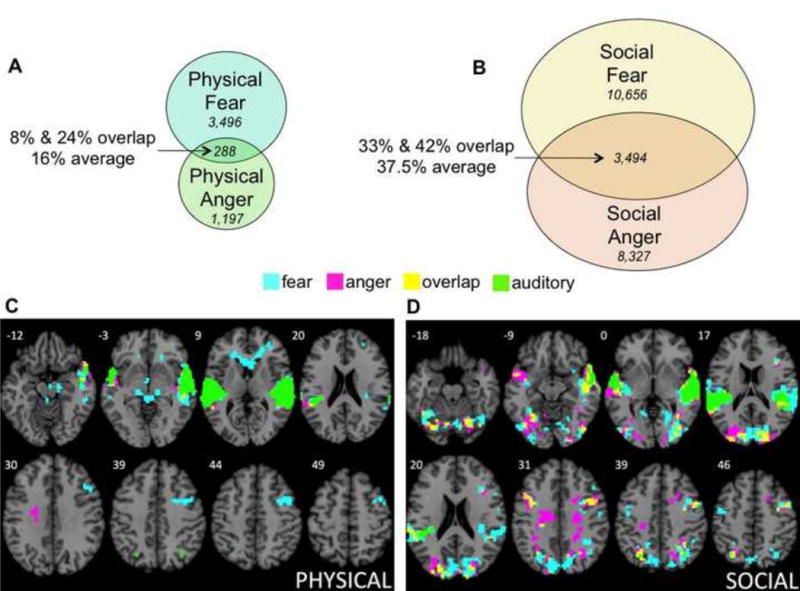

As just defined, the physical baseline included activations common across all four mental states in the physical learning condition, whereas the social baseline included activations common across all four mental states in the social learning condition. As Supplemental Table S1 shows, each baseline contained two very large clusters, one in each hemisphere, containing voxels in superior temporal gyrus and posterior insula. As much research documents, both the temporal and insular activations in these baselines are highly associated with auditory processing (e.g., Bamiou, Musiek, & Luxon, 2003; Nazimek et al., 2013). Figure 2 shows the auditory activations common to the physical and social baselines (in green). Supplemental Figure S2 shows the small unique activations in these clusters in the physical and social learning conditions, along with the much larger common activations shared between them.

Figure 2.

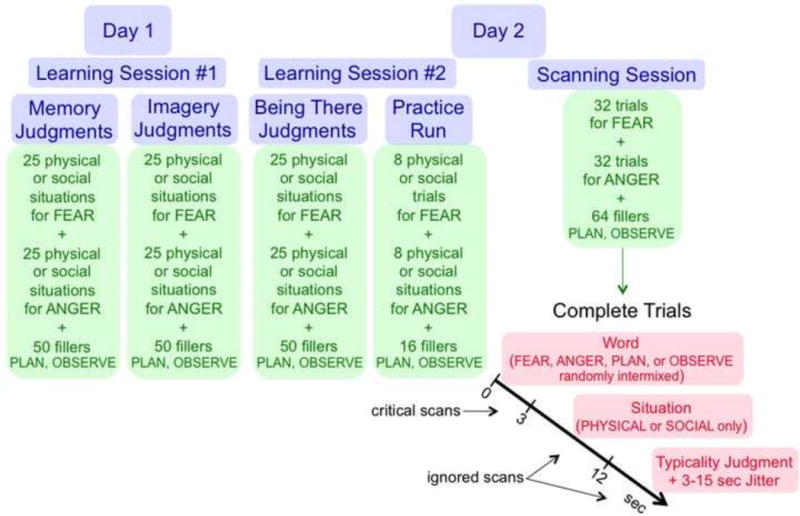

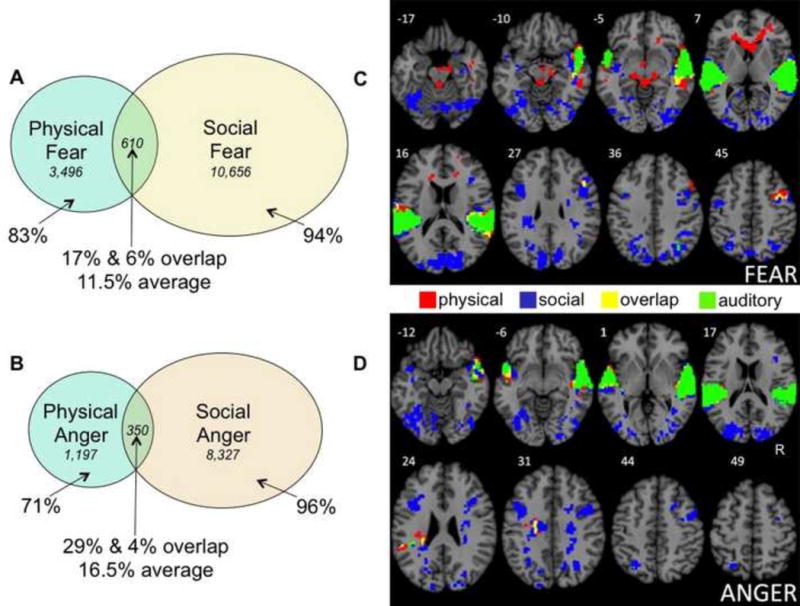

Percentages of situationally unique and shared voxels for fear (A) and anger (B) across the physical and social learning groups from conjunction analyses (voxel frequencies are shown in italics). Unique activations in the physical learning group (red), unique activations in the social learning group (blue), shared activations across both groups (yellow), and activations for auditory processing (green) are shown for fear (C) and anger (D). Voxel percentages and frequencies in Panels A and B do not include shared voxels for auditory processing. Tables 4 and 5 provide full listings of activations, and Table 6 provides full listings of the voxel counts. The two activation maps entered into each conjunction analysis were obtained in random effects analyses of the 3 sec mental state phase (excluding activations from the subsequent situation phase), using an independent voxel threshold of p<.005 and cluster extent threshold of 221 voxels (corrected significance, p<.05).

Because the activated areas in the auditory baselines were most likely associated with auditory processing of input stimuli, we removed them from the critical analyses to follow. These regions were also removed because they were active for the non-affective mental states (plan and observe), not just for fear and anger. By removing these activations, we focused the critical analyses of fear and anger on neural activity associated with emotion per se.

Overall voxel counts

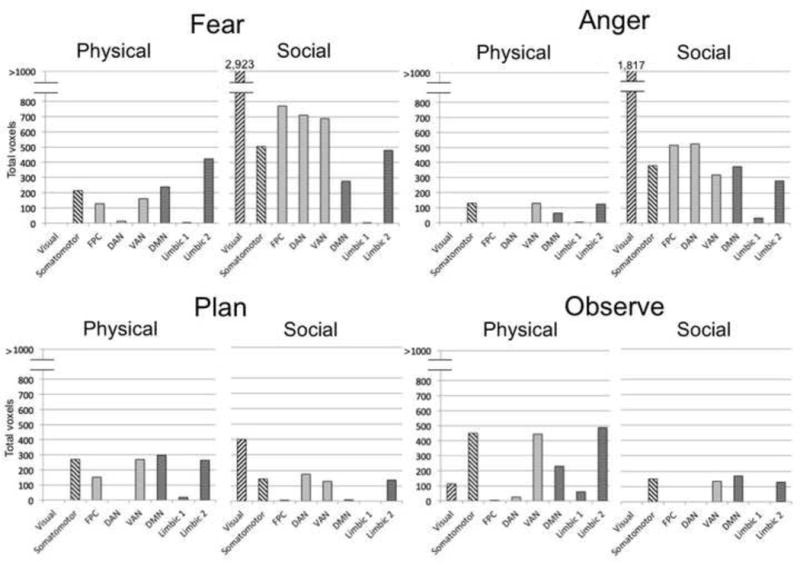

From the constructivist perspective, a given emotion, such as fear, assembles different processes in different situations. It follows that the total amount of processes assembled for an emotion could be relatively large in one situation but smaller in another. To assess this hypothesis, we established the overall amount of neural activity for each mental state in the physical and social learning groups above the resting state baseline, after removing shared activations for auditory processing as just described. As Figure 3 illustrates, the results of this analysis support the hypothesis that a given emotion assembles different processes in different situations. Three times as many voxels were active for fear after social learning than after physical learning, and seven times more voxels were active for anger.

Figure 3.

Total voxels significantly active for fear, anger, plan, and observe in physical vs. social situations, after removing voxels associated with auditory processing. Tables 4 and 5 provide full listings of activations, and Table 6 provides full listings of the voxel counts. The two activation maps entered into each conjunction analysis were obtained in random effects analyses of the 3 sec mental state phase (excluding activations from the subsequent situation phase), using an independent voxel threshold of p<.005 and cluster extent threshold of 221 voxels (corrected significance, p<.05).

One possibility is that constructing an emotion in some situations requires more complex processing than constructing it in others. For example, our social situations may have tended to be more complex than our physical situations, given that other people were always involved in the social situations but never in the physical ones. Another possibility is that greater experience with social emotion situations establishes richer processes in memory, thereby producing more neural activity when social emotions are generated. Consistent with this possibility, participants reported during the learning phase that they had more experience with fear and anger in social situations than in physical situations (as the higher ratings of familiarity, actual experience, and recency for social situations in Table 2 indicate).

Two other findings related to the overall voxel counts are also of potential interest. First, as Figure 3 illustrates, physical anger produced the lowest number of significantly active voxels (1,197) relative to the other seven conditions. As discussed earlier for the behavioral data from the scanning session (Table 3), participants in physical situations may have experienced difficulty in generating anger towards themselves for various reasons. Thus, the relatively low voxel counts for physical anger may have reflected difficulty assembling processes to produce this specific emotion.

Second, the overall voxel counts for plan and observe demonstrated a very different distributional pattern across physical vs. social situations than did the voxel counts for fear and anger (χ2(3) = 100,347, p < .001). As we just saw, more voxels were active in social situations than in physical situations for both fear (10,656 social voxels vs. 3,496 physical voxels) and anger (8,327 social voxels vs. 1,197 physical voxels). Interestingly, the opposite pattern occurred for plan (2,038 social voxels vs. 2,914 physical voxels) and observe (1,340 social voxels vs. 3,121 physical voxels). This finding indicates that there was not something about the social learning condition that induced greater overall processing of all four mental states. Instead, fear and anger induced more neural activity in the social condition, whereas plan and observe induced more in the physical condition.
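The crossover pattern in these counts is easy to see as social-to-physical ratios. The sketch below uses only the voxel counts reported in this section; the variable names are illustrative, and it does not attempt to reproduce the reported chi-square test.

```python
# Significantly active voxels per mental state and learning group, as reported in the text.
voxels = {
    "fear": {"social": 10656, "physical": 3496},
    "anger": {"social": 8327, "physical": 1197},
    "plan": {"social": 2038, "physical": 2914},
    "observe": {"social": 1340, "physical": 3121},
}

# Ratio above 1 means more active voxels after social learning than after physical learning.
ratios = {
    state: counts["social"] / counts["physical"] for state, counts in voxels.items()
}

for state, ratio in ratios.items():
    direction = "social > physical" if ratio > 1 else "physical > social"
    print(f"{state:8s} social/physical = {ratio:.2f} ({direction})")

# The condition with the fewest active voxels overall.
smallest = min(
    ((state, group, n) for state, counts in voxels.items() for group, n in counts.items()),
    key=lambda item: item[2],
)
print("fewest voxels:", smallest)
```

Ratios near 3 for fear and near 7 for anger correspond to the "three times" and "seven times" figures given earlier, while plan and observe fall below 1.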

Situational overlap

If a given emotion, such as fear, assembles different processes in different situations, then processes assembled for it should differ across situations. To test this hypothesis, we used conjunction analyses to assess how much the neural activity for the physical and social learning groups overlapped for each mental state (after removing auditory processing areas). Figure 2 summarizes the conjunction analyses for fear and anger, with Tables 4 and 5 quantifying the patterns of activity.

Table 4.

Unique and shared activations for fear from a conjunction analysis across activations in the physical and social training groups.

| Brain Region | Brodmann Area | Cluster Volume | Max Intensity t | Voxel x | Voxel y | Voxel z |
|---|---|---|---|---|---|---|
| Unique Activations in the Physical Training Group | | | | | | |
| B caudate head | | 770 | 9.72 | −1 | 21 | 10 |
| B hypothalamus/pons/thalamus/parahippocampus/cerebellum/culmen | | 552 | 8.63 | −1 | −31 | −6 |
| R mid-temporal | 21 | 460 | 7.71 | 49 | −35 | −6 |
| L mid-temporal | 22 | 247 | 6.93 | −45 | −31 | 4 |
| R mid-frontal (eye fields) | 6 | 211 | 6.23 | 31 | 3 | 42 |
| R posterior insula/ | 7 | 203 | 8.51 | 45 | 1 | −8 |
| R STG | 21 | | | | | |
| L posterior occipital | 17/18 | 193 | 5.00 | −19 | −97 | −2 |
| R lOFC | 47 | 142 | 5.77 | 53 | 19 | −10 |
| L mid-cingulate gyrus | 24 | 111 | 6.34 | −17 | −11 | 34 |
| L med frontal gyrus | 6 | 105 | 5.76 | −9 | 7 | 54 |
| L precuneus | 7 | 90 | 6.00 | −3 | −77 | 42 |
| L mid-frontal (eye fields) | 6 | 72 | 5.22 | −39 | −1 | 48 |
| L cerebellum/culmen | | 65 | 5.21 | −9 | −45 | −6 |
| L parahippocampal gyrus/culmen | | 61 | 6.56 | −29 | −55 | 2 |
| L OFC/STG | 47/38 | 55 | 5.18 | −45 | 13 | −6 |
| L insula | 13 | 51 | 4.59 | −41 | −7 | 0 |
| L postcentral gyrus | 43 | 38 | 4.80 | −51 | −17 | 16 |
| R insula | 13 | 24 | 5.68 | 37 | −23 | 16 |
| L inferior parietal | 40 | 20 | 5.16 | −41 | −37 | 22 |
| Unique Activations in the Social Training Group | | | | | | |
| R supramarginal gyrus | 40 | 6,143 | 7.36 | 13 | −73 | 34 |
| B precuneus | 7 | | | | | |
| angular gyrus | 39 | | | | | |
| occipital lobe | 18/19 | | | | | |
| fusiform gyrus cerebellum (declive, culmen) | 37 | | | | | |
| R posterior insula | 13 | 1,538 | 8.39 | 45 | −17 | −10 |
| STG | 21/22 | | | | | |
| L posterior insula | 13 | 1,051 | 8.16 | −63 | −41 | 18 |
| STG parahippocampus | 21/22 | | | | | |
| R IFC | 6/9 | 911 | 5.95 | 39 | 5 | 30 |
| L precentral gyrus | 6 | 331 | 5.41 | −43 | −9 | 42 |
| R thalamus (red nucleus, medial geniculum) | | 131 | 5.37 | 9 | −23 | −4 |
| L dlPFC | 10 | 94 | 8.53 | −35 | 55 | 24 |
| L mid-cingulate gyrus | 24 | 92 | 6.62 | −23 | −7 | 28 |
| R dlPFC | 10 | 89 | 5.64 | 35 | 43 | 14 |
| B precuneus | 7 | 81 | 5.37 | −3 | −49 | 52 |
| R mid-cingulate gyrus | 24 | 77 | 5.25 | 13 | 7 | 38 |
| B PCC | 23 | 72 | 5.09 | −5 | −31 | 28 |
| Shared Activations Between the Physical and Social Training Groups | | | | | | |
| R mid-temporal | 22 | 125 | 6.24 (7.38) | 43 (43) | −23 (−23) | −4 (−4) |
| R mid-frontal (eye fields) | 6 | 77 | 7.52 (4.90) | 31 (43) | 1 (1) | 42 (48) |
| L mid-temporal | 22 | 70 | 6.77 (5.67) | −47 (−47) | −31 (−25) | 4 (2) |
| L cingulate gyrus | 24 | 68 | 6.83 (8.13) | −23 (−23) | −15 (−5) | 32 (28) |
| R insula | 13 | 50 | 6.02 (7.18) | 47 (35) | −23 (−23) | 16 (16) |
| L precentral gyrus | 43 | 47 | 6.72 (4.66) | −59 (−59) | −7 (−23) | 12 (18) |
| R thalamus | | 40 | 5.06 (5.43) | 13 (11) | −25 (−25) | −4 (−2) |
| L ACC | 32 | 38 | 7.66 (5.14) | −19 (−19) | 31 (31) | 4 (6) |
| R dlPFC | 9 | 38 | 4.31 (5.38) | 47 (41) | 17 (15) | 30 (26) |
| R supramarginal gyrus | 40 | 31 | 4.57 (5.40) | 55 (53) | −47 (−39) | 18 (16) |
| L insula | 13 | 21 | 4.36 (6.69) | −47 (−51) | −33 (−39) | 20 (18) |

Note. Activations were obtained using an independent voxel threshold of p < .005 and a cluster threshold of 60 voxels, in each of the two situation training groups. Clusters having 221 voxels or larger are significant at p < .05. Smaller clusters are shown to provide a sense of weaker activations. Clusters smaller than 60 voxels resulted from the conjunction analysis producing cluster fragments, when different parts of a cluster were shared vs. unique. Cluster fragments smaller than 20 voxels are not shown.

indicates a shared activation significant at the p<.05 extent threshold (221 voxels). R is right, L is left, B is bilateral, dlPFC is dorsolateral prefrontal cortex, STG is superior temporal gyrus, lOFC is lateral orbitofrontal cortex, and ACC is anterior cingulate cortex.

Table 5.

Unique and shared activations for anger from a conjunction analysis across activations in the physical and social training groups.

| Brain Region | Brodmann Area | Cluster Volume | Max Intensity t | Voxel x | Voxel y | Voxel z |

|---|---|---|---|---|---|---|

| Unique Activations in the Physical Training Group | ||||||

| L superior temporal pole | 38 | 205 | 6.87 | −55 | 7 | 0 |

| R posterior insula | 13 | 160 | 5.63 | 33 | −23 | 6 |

| R superior temporal | 22 | 146 | 5.34 | 67 | −39 | 12 |

| L posterior insula/caudate | 13 | 133 | 6.44 | −29 | −23 | 26 |

| R mid-frontal (eye fields) | 6 | 129 | 7.45 | 37 | 5 | 46 |

| L supramarginal gyrus | 40 | 120 | 5.19 | −43 | −37 | 22 |

| R superior temporal | 22 | 116 | 6.03 | 65 | 1 | −2 |

| L mid-frontal (eye fields) | 6 | 84 | 4.94 | −43 | 1 | 54 |

| R dlPFC | | 80 | 5.08 | 39 | 21 | 30 |

| R precentral gyrus | 6 | 53 | 5.14 | 27 | 1 | 26 |

| R insula | 13 | 47 | 5.63 | 25 | −29 | 22 |

| R mid-temporal gyrus | 21 | 24 | 5.69 | 53 | −21 | −6 |

| R temporal pole | 38 | 21 | 5.38 | 53 | 15 | −12 |

| Unique Activations in the Social Training Group | ||||||

| L mid-cingulate | 23 | 3,690 | 7.25 | −11 | −13 | 32 |

| supramarginal gyrus | 40 | |||||

| precuneus | 7 | |||||

| angular gyrus | 39 | |||||

| posterior occipital | 17/18/19 | |||||

| temporal | 21/22 | |||||

| temporal | 38 | |||||

| posterior insula | 13 | |||||

| fusiform gyrus | 37 | |||||

| cerebellum/declive | ||||||

| R posterior occipital | 17/18/19 | 1,786 | 7.72 | 37 | −53 | 2 |

| fusiform gyrus | 37 | |||||

| R temporal gyrus | 22/38 | 994 | 10.94 | 49 | −5 | −6 |

| R precentral gyrus | 6 | 647 | 5.89 | 41 | 1 | 38 |

| R mid-posterior cingulate | 24/31 | 408 | 7.78 | 23 | −19 | 34 |

| L premotor gyrus | 6 | 375 | 8.30 | −35 | 3 | 28 |

| L medial frontal gyrus | 6 | 111 | 6.63 | −15 | −3 | 48 |

| B precuneus | 7 | 87 | 4.97 | −3 | −49 | 50 |

| L PCC | 29 | 68 | 6.27 | −13 | −41 | 10 |

| L substantia nigra/thalamus (medial geniculum) | | 61 | 6.30 | −13 | −25 | −2 |

| Shared Activations Between the Physical and Social Training Groups | ||||||

| L mid-temporal | 21 | 67 | 4.82 (5.14) | −49 (−53) | −45 (−41) | 8 (18) |

| L mid-cingulate | 24/31 | 61 | 5.38 (5.61) | −25 (−19) | −29 (−9) | 24 (32) |

| L STG | 22 | 35 | 6.20 (5.34) | −49 (−55) | −3 (−1) | −6 (−6) |

| R temporal pole | 38 | 33 | 9.16 (5.47) | 51 (49) | 15 (11) | −10 (−12) |

| R insula | 13 | 33 | 6.74 (4.92) | 43 (43) | −15 (−13) | −6 (−6) |

| R dlPFC | 9 | 27 | 4.29 (4.79) | 43 (37) | 17 (19) | 28 (26) |

Note. Activations were obtained using an independent voxel threshold of p < .005 and a cluster threshold of 60 voxels, in each of the two situation training groups. Clusters having 221 voxels or larger are significant at p < .05. Smaller clusters are shown to provide a sense of weaker activations. Clusters smaller than 60 voxels resulted from the conjunction analysis producing cluster fragments, when different parts of a cluster were shared vs. unique. Cluster fragments smaller than 20 voxels are not shown.

indicates a shared activation significant at the p<.05 extent threshold (221 voxels). R is right, L is left, B is bilateral, dlPFC is dorsolateral prefrontal cortex, PCC is posterior cingulate cortex, and STG is superior temporal gyrus.

As can be seen, the two learning groups activated very different neural assemblies for the same emotion. For fear, only 11.5% of active brain voxels, on average, were shared across participants in the physical and social learning groups. Similarly, for anger, only 16.5% of active voxels, on average, were shared across the learning groups. Although each learning group generated an emotion to the same critical stimulus, the two groups activated largely non-overlapping brain areas. This result remained virtually unchanged upon adopting more liberal cluster thresholds. When the cluster threshold was set to 110 voxels, average overlap between the physical and social conditions was 13% for fear and 15% for anger. Similarly, at the cluster threshold of 60 voxels, fear exhibited an average overlap of 13%, and anger 14%. Thus, the pattern of overlap remained robust across a wide range of cluster thresholds.

As Supplemental Tables S2 and S3 show, similar results occurred for plan and observe. At the 221-voxel threshold, the average overlap between physical and social learning was 12% for plan and 16.5% for observe (with similar patterns again occurring at lower cluster thresholds). Table 6 provides summary voxel counts across all four conjunction analyses. The section on Monte Carlo Simulations to Assess Random Overlap in the Supplemental Materials indicates that the low overlap between the physical and social learning situations resulted from systematic differences between conditions, not from random activations within them.

Table 6.

Proportions of shared (non-baseline) voxels for fear, anger, plan, and observe in the physical and social learning groups, together with the relevant voxel frequencies.

| | Physical Learning | | | | Social Learning | | | |

|---|---|---|---|---|---|---|---|---|

| Mental State | Proportion Shared Voxels | Shared Non-Baseline Voxels | Unique Voxels | Total Voxels | Proportion Shared Voxels | Shared Non-Baseline Voxels | Unique Voxels | Total Voxels |

| Cluster Threshold = 221 Voxels | ||||||||

| Fear | .17 | 610 | 2,886 | 3,496 | .06 | 610 | 10,046 | 10,656 |

| Anger | .29 | 350 | 847 | 1,197 | .04 | 350 | 7,977 | 8,327 |

| Plan | .10 | 292 | 2,622 | 2,914 | .14 | 292 | 1,746 | 2,038 |

| Observe | .10 | 306 | 2,815 | 3,121 | .23 | 306 | 1,034 | 1,340 |

| Average | .13 | 302 | 2,081 | 2,383 | .05 | 302 | 5,194 | 5,496 |

| Cluster Threshold = 110 Voxels | ||||||||

| Fear | .19 | 734 | 3,157 | 3,891 | .07 | 734 | 10,254 | 10,988 |

| Anger | .25 | 370 | 1,122 | 1,492 | .04 | 370 | 8,068 | 8,438 |

| Plan | .10 | 318 | 2,922 | 3,240 | .11 | 318 | 2,527 | 2,845 |

| Observe | .10 | 345 | 3,104 | 3,449 | .19 | 345 | 1,489 | 1,834 |

| Average | .15 | 442 | 2,576 | 3,018 | .07 | 442 | 5,585 | 6,026 |

| Cluster Threshold = 60 Voxels | ||||||||

| Fear | .19 | 818 | 3,573 | 4,391 | .07 | 818 | 10,650 | 11,468 |

| Anger | .23 | 419 | 1,388 | 1,807 | .05 | 419 | 8,235 | 8,654 |

| Plan | .09 | 331 | 3,170 | 3,501 | .10 | 331 | 2,969 | 3,300 |

| Observe | .10 | 373 | 3,332 | 3,705 | .19 | 373 | 1,623 | 1,996 |

| Average | .15 | 485 | 2,866 | 3,351 | .08 | 485 | 5,869 | 6,355 |

Note. All voxels from the physical and social baselines for the mental states have been removed from this analysis (5,265 voxels from the physical baseline, 4,899 voxels from the social baseline). Only non-baseline voxels are included. Voxel totals in Tables 4, 5, S2, and S3 do not add up to the totals here, because fragments smaller than 20 voxels from the conjunction analysis were not included in the earlier tables, but are included here (see the Supplemental Materials for details).
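The overlap percentages reported in the text can be recovered from the voxel counts in Table 6. The sketch below uses the counts at the 221-voxel threshold. The function name and the choice to round each proportion to two decimals before averaging are assumptions made for illustration, not a description of the original analysis scripts.

```python
# Shared and total non-baseline voxel counts at the 221-voxel cluster
# threshold, copied from Table 6:
# mental state -> (shared, physical total, social total).
TABLE_6_221 = {
    "fear": (610, 3496, 10656),
    "anger": (350, 1197, 8327),
    "plan": (292, 2914, 2038),
    "observe": (306, 3121, 1340),
}


def overlap_summary(shared, physical_total, social_total):
    """Return (physical proportion, social proportion, their mean).

    Each proportion is shared / total for one learning group. Rounding to
    two decimals before averaging is an assumption about how the
    percentages in the text were derived.
    """
    physical = round(shared / physical_total, 2)
    social = round(shared / social_total, 2)
    return physical, social, (physical + social) / 2


for state, counts in TABLE_6_221.items():
    physical, social, mean = overlap_summary(*counts)
    print(f"{state}: physical={physical:.2f}, social={social:.2f}, mean={mean:.1%}")
```

Under these assumptions, the mean overlaps come out at approximately 11.5% for fear, 16.5% for anger, 12% for plan, and 16.5% for observe, in line with the values given in the text.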

The specific brain areas active for fear (Table 4) and for anger (Table 5) offer post hoc insight into the situated emotions that the two learning groups constructed. Across physical situations, fear activated brain areas associated with motoric action in environmental settings to handle physical threat (e.g., thalamus, caudate, cerebellum, frontal eye fields, parahippocampal gyrus), along with areas that could potentially track the affective significance of threatening entities (lateral orbitofrontal cortex), and interoceptive responses to them (insula). In contrast, for fear in social situations, much more brain activity was associated with visual processing of people and social cues in the environment (e.g., angular gyrus, fusiform face area, occipital lobe, precuneus, supramarginal gyrus), executive control (lateral prefrontal cortex), and interoceptive states (larger insula activations). In our previous work on priming different forms of fear in physical vs. social situations (Wilson-Mendenhall et al., 2011; cf. Wilson-Mendenhall et al., 2013b), analogous differences in patterns of neural activity were observed, with physical fear oriented toward motoric action in the physical environment, and social fear oriented toward visuospatial processing of the social environment.

Across physical situations, anger (similar to fear) activated brain areas associated with controlling action in the environment toward a physical threat (frontal eye fields, precentral gyrus, caudate, dorsolateral prefrontal cortex, supramarginal gyrus), along with areas that could potentially track the salience of the threatening entities (insula). Across social situations, anger (similar to fear) activated brain areas associated with processing people and social cues in the environment (e.g., medial prefrontal cortex, angular gyrus, fusiform gyrus, posterior cingulate, precuneus, occipital cortex). Unlike social fear, social anger was associated with action, perhaps taking the form of imagined engagement with someone responsible for a social transgression (precentral gyrus, middle cingulate, supramarginal gyrus, cerebellum, thalamus).

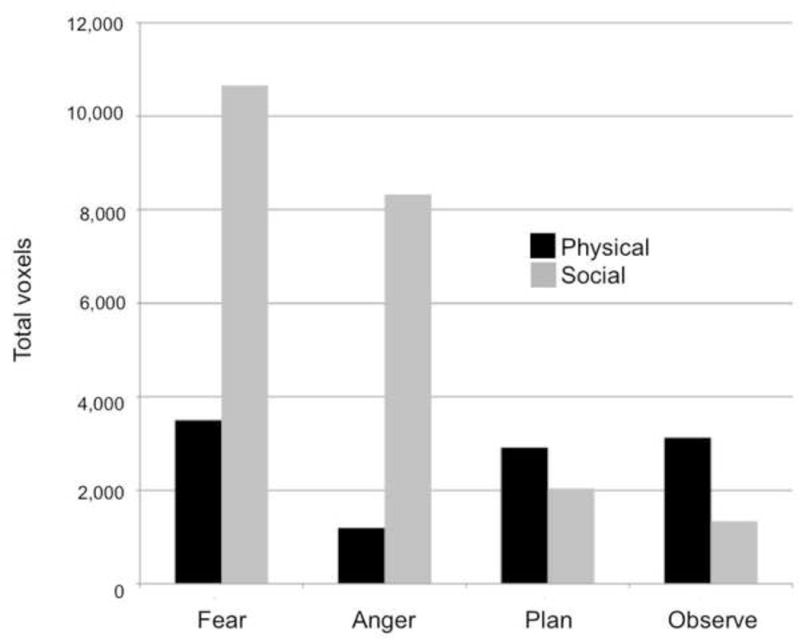

Network profiles

To further assess the processes assembled for each situated emotion (e.g., physical fear), we established its profile of activation across the brain’s intrinsic networks. From the constructivist perspective, the same emotion should be likely to activate different intrinsic networks in different situations, depending on the processes assembled. A given emotion such as fear, for example, should exhibit different profiles of network activation in physical and social situations.

To perform this analysis, we used the network masks developed by Yeo et al. (2011) for seven intrinsic brain networks observed during the resting state: visual, somatomotor, frontoparietal control (FPC), dorsal attention (DAN), ventral attention (VAN), default mode network (DMN), and limbic (Limbic 1). Because Yeo et al.’s limbic network (Limbic 1) omitted many classic limbic areas (allocated instead to their other networks), we also present results for a second limbic mask (Limbic 2) developed by the Barrett lab that represents limbic areas more completely. The Supplemental Materials provide a list of the brain areas that each of these limbic masks contains.

For each situated emotion, we took the unique voxels significantly active for it in the conjunction analysis at the 221-voxel cluster threshold and established the number that fell within each network mask. Because some voxels did not fall in any mask, the total number of voxels across masks does not sum to the total unique voxels significantly active. Figure 4 displays the results of this analysis.
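The counting step described above can be sketched as follows. This is a minimal illustration under stated assumptions: voxels and masks are represented as sets of (x, y, z) coordinates, the function name `network_profile` is hypothetical, and the toy coordinates are invented. Allowing one voxel to be counted in two masks (as could happen with two alternative limbic masks) is also an assumption.

```python
def network_profile(unique_voxels, network_masks):
    """Count how many voxels fall inside each network mask.

    unique_voxels: iterable of (x, y, z) coordinate tuples.
    network_masks: dict mapping network name -> set of (x, y, z) tuples.
    Returns (counts per network, number of voxels in no mask).
    A voxel is counted once for every mask that contains it.
    """
    counts = {name: 0 for name in network_masks}
    unassigned = 0
    for voxel in unique_voxels:
        hits = [name for name, mask in network_masks.items() if voxel in mask]
        if not hits:
            unassigned += 1
        for name in hits:
            counts[name] += 1
    return counts, unassigned


# Toy data (hypothetical coordinates, for illustration only).
masks = {
    "visual": {(0, 0, 0), (1, 0, 0)},
    "DMN": {(2, 0, 0)},
    "Limbic 1": {(3, 0, 0)},
    "Limbic 2": {(3, 0, 0), (4, 0, 0)},
}
voxels = [(0, 0, 0), (2, 0, 0), (3, 0, 0), (9, 9, 9)]

profile, outside = network_profile(voxels, masks)
print(profile)
print("voxels outside every mask:", outside)
```

In the toy data, one voxel lies outside every mask, which illustrates why the per-network counts need not sum to the total number of unique voxels.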

Figure 4.

Within the unique voxels for each situated mental state at the 221-voxel threshold (Table 6), the total voxels active in Yeo et al.’s (2011) visual network, somatomotor network, frontoparietal control (FPC), dorsal attention (DAN), ventral attention (VAN), default mode network (DMN), limbic network (Limbic 1), and in a more complete limbic network (Limbic 2).

As predicted, fear and anger each activated a different distributional profile of networks in physical vs. social situations, as did plan and observe (fear, χ²(7) = 2,154, p < .001; anger, χ²(7) = 1,081, p < .001; plan, χ²(7) = 1,216, p < .001; observe, χ²(7) = 2,040, p < .001). These varying profiles suggest post hoc interpretations of the processes that the different situated emotions tended to assemble. Although physical fear and social fear utilized the DMN and limbic networks comparably, social fear drew much more heavily on networks associated with mental simulation (visual, somatomotor) and with attention and control (FPC, DAN, VAN). One possible interpretation is that social fear required more construction and control of mental simulations than did physical fear. Analogously, social anger appeared to rely more on mental simulation than did physical anger, while also being associated with more mentalizing (DMN) and affect (Limbic 2). Consistent with the earlier conclusion from the behavioral data that physical anger was difficult to construct, neural activity was low across all networks for this situated emotion. Future work could aim to better understand these different distributional profiles.
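The reported 7 degrees of freedom are consistent with a Pearson chi-square test of independence on a 2 × 8 table (two situations by eight network masks), although the text does not spell out the computation. The sketch below shows that calculation. The function name is hypothetical and the voxel counts are invented for illustration; they are not the values plotted in Figure 4.

```python
def chi_square_independence(table):
    """Pearson chi-square statistic and degrees of freedom for an r x c table.

    table: list of rows, each a list of non-negative observed counts.
    Expected counts are (row total * column total) / grand total.
    """
    row_totals = [sum(row) for row in table]
    col_totals = [sum(col) for col in zip(*table)]
    grand_total = sum(row_totals)
    statistic = 0.0
    for i, row in enumerate(table):
        for j, observed in enumerate(row):
            expected = row_totals[i] * col_totals[j] / grand_total
            statistic += (observed - expected) ** 2 / expected
    dof = (len(table) - 1) * (len(table[0]) - 1)
    return statistic, dof


# Hypothetical voxel counts per network for one emotion, in the order:
# visual, somatomotor, FPC, DAN, VAN, DMN, Limbic 1, Limbic 2.
physical = [120, 300, 150, 200, 180, 400, 60, 250]
social = [900, 800, 600, 700, 500, 450, 70, 300]

statistic, dof = chi_square_independence([physical, social])
print(f"chi-square = {statistic:.1f}, df = {dof}")
```

With two rows and eight columns, the degrees of freedom are (2 − 1) × (8 − 1) = 7, matching the values reported for each mental state.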

Further Evidence for Situated Emotion Learning

Varying similarity of fear and anger across learning groups

From the constructivist perspective, the neural activity of an emotion varies across situations. Because the perceptual, cognitive, interoceptive, and motor processes assembled for the same emotion vary across situations, the similarity of two different emotions to one another can vary as well. If, for example, the processes assembled for fear and anger are more similar to each other across social situations than across physical situations, then the neural assemblies that implement fear and anger should overlap more in social situations.