Abstract

In everyday acoustic environments, we navigate through a maze of sounds that possess a complex spectrotemporal structure, spanning many frequencies and exhibiting temporal modulations that differ within frequency bands. Our auditory system needs to efficiently encode the same sounds in a variety of different contexts, while preserving the ability to separate complex sounds within an acoustic scene. Recent work in auditory neuroscience has made substantial progress in studying how sounds are represented in the auditory system under different contexts, demonstrating that auditory processing of seemingly simple acoustic features, such as frequency and time, is highly dependent on co-occurring acoustic and behavioral stimuli. Through a combination of electrophysiological recordings, computational analysis and behavioral techniques, recent research has identified interactions between the external spectral and temporal context of stimuli and the internal behavioral state.

At the first stage in the auditory processing cascade, the cochlea decomposes the incoming sound waveform into electrical signals for distinct frequency bands, creating a frequency-delimited organization that persists throughout the central auditory processing centers. The inferior colliculus, the auditory thalamus and the auditory cortex all exhibit tonotopic organization through a systematic neuronal best frequency gradient across space. Therefore, tonotopy has been considered a fundamental feature of auditory processing and, historically, auditory neuroscientists used pure frequency tones to systematically characterize the response properties of the auditory system. However, while pure tones are useful for determining the tuning properties of individual cells and the tonotopic arrangement of different brain regions, they ultimately do not capture the complex spectral profile of many natural sounds. Natural acoustic stimuli, like speech, conspecific vocalizations, and environmental sounds, are composed of signals with power across multiple frequency bands. Encoding complicated spectral profiles is behaviorally important, as these types of sounds provide cues for identifying different speakers and call types and for sound localization. However, how a complex sound is encoded is not immediately evident from the responses to individual frequency components: rather, responses to distinct spectral components of sounds interact with each other in frequency and time. From moment to moment, a neuron’s response does not necessarily reflect only the frequency band it is best tuned to, but also depends on nonlinear integration of stimulus power across the spectral and temporal bands. Furthermore, behavioral engagement or task can modify this representation. Here, we review recent investigations on how spectral, temporal and behavioral contexts affect sound representation in the auditory cortex (Figure 1).

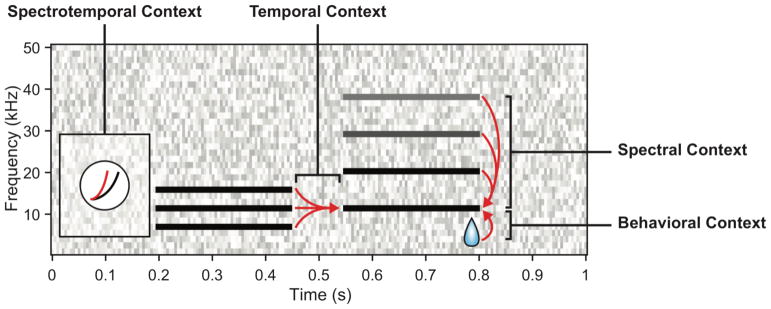

Figure 1.

Schematic of auditory context effects. Spectral context. The effects of spectral energy in near and distant frequency bands on characteristic frequency responses, as demonstrated with two-tone suppression and harmonic facilitation. Temporal context. The effects of preceding tones on a probe stimulus, as demonstrated by forward suppression and related to SSA. Spectrotemporal context. The joint effects of energy distributed across frequency and time, often resulting in adaptation of nonlinear response properties to suit persistent environmental statistics. Behavioral context. The effects of reward contingency on auditory responses.

Modulation of Auditory Processing by Spectral Context

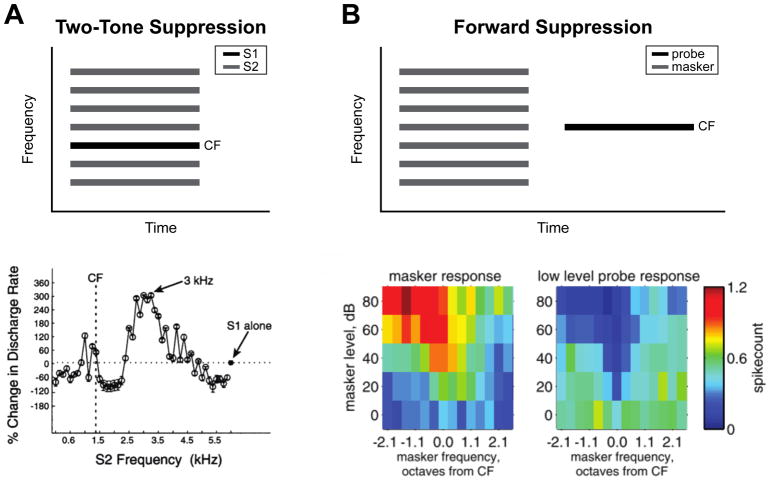

Indeed, in the central auditory pathway, neural response properties to spectrally complicated stimuli are not well predicted by their tuning to pure tones. In the periphery, auditory nerve fibers typically transmit a linear, narrow-band representation of pure tone stimuli that is determined by their frequency tuning [1]. However, when presented with pairs of pure tones, auditory nerve fiber responses at best frequency are often suppressed by the presence of a second tone, a well-studied phenomenon called two-tone suppression (Figures 1 and 2A; for review, see [2]), which arises from nonlinearities in the mechanics of the basilar membrane of the cochlea [3–5]. Many cortical neurons also nonlinearly integrate spectral components, showing multi-peaked tuning [6–9], two-tone suppression and facilitation (Figure 2A) [10–12], or combination sensitivity [13,14] when presented with sounds composed of multiple frequencies. This selectivity for complex spectral stimuli is thought to arise from a combination of excitation and lateral inhibition, as revealed by the suppressive effects of multiband stimuli on single-peaked neuronal responses [10,15]. Thus, rather than combining responses to inputs at different frequencies in an additive fashion, the auditory system facilitates nonlinear interactions across spectral bands.
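The firing-rate comparison behind two-tone suppression and facilitation can be sketched in a few lines. This is only an illustration: the function names, the 20% classification threshold, and all firing rates below are hypothetical and are not taken from the cited studies.

```python
def two_tone_modulation(rate_s1_alone, rates_with_s2):
    """Percent change in firing rate for each S2 frequency, relative to S1 alone.

    rate_s1_alone: firing rate (spikes/s) to the reference tone S1 by itself.
    rates_with_s2: dict mapping S2 frequency (kHz) to the rate for S1 + S2.
    """
    if rate_s1_alone <= 0:
        raise ValueError("rate_s1_alone must be positive")
    return {
        freq: 100.0 * (rate - rate_s1_alone) / rate_s1_alone
        for freq, rate in rates_with_s2.items()
    }


def classify_interaction(percent_change, threshold=20.0):
    """Label a two-tone interaction; the threshold is an arbitrary assumption."""
    if percent_change <= -threshold:
        return "suppression"
    if percent_change >= threshold:
        return "facilitation"
    return "no clear effect"


# Hypothetical rates (spikes/s) for S1 at CF combined with S2 at three frequencies (kHz).
example_rates = {1.2: 8.0, 2.0: 19.0, 2.94: 30.0}
modulation = two_tone_modulation(20.0, example_rates)
labels = {freq: classify_interaction(change) for freq, change in modulation.items()}
```

A purely additive neuron would show no systematic dependence of this measure on the spacing between S1 and S2; frequency-dependent negative and positive values are the signature of the nonlinear interactions described above.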

Figure 2.

Examples of spectral and temporal context. A) Two-tone suppression. Top: schematic of stimuli used in two-tone suppression experiments, consisting of a reference tone at characteristic frequency (CF; S1, black bar) presented alone and in the presence of a competing tone (S2, grey bars). Bottom: Change in firing rate of an example neuron when S1 (1.47 kHz, 50 dB SPL) is presented with S2 stimuli of varying frequencies (S2: 0.12–5.88 kHz, 70 dB SPL), relative to S1 presented alone. Note suppressive effects at nearby frequencies, but facilitative effects near the first harmonic (3 kHz). Dotted and dashed lines represent the response to S1 alone, and the characteristic frequency, respectively. Figure adapted from Kadia and Wang, 2003 [12]. B) Forward suppression. Top: schematic of stimuli used in forward suppression experiments, consisting of masker tones of varying frequency (grey bars) followed by a probe tone typically presented at CF (black bar) at variable delays. Bottom: Frequency response areas (FRAs) of an example neuron in response to the masker (left) and the probe (right) as a function of masker frequency relative to CF and masker level. Note the suppression of spiking in response to the probe when preceded by masker tones that elicited strong responses, such that forward suppression roughly resembles the FRA of the neuron. Figure adapted from Scholl et al., 2008 [30].

Sensitivity to spectral context is useful for encoding sounds composed of several distinct frequencies, a feature common to many mammalian vocalizations [12,13]. Many communication sounds contain harmonic components, a broadband acoustic feature that is highly perceptible by many mammalian species [16–18]. Indeed, harmonic features are perceptually useful, and can be used to discriminate between different sound sources or speakers [19] or to hear vocalizations in noisy environments [20], indicating that harmonicity is a prominent acoustic feature for auditory processing. In auditory cortex, single- and multi-peaked neurons are often suppressed or facilitated by harmonically-spaced tone pairs (Figure 2A) [12] and can be selective for higher order harmonic sounds [21–23] demonstrating that auditory cortex is highly sensitive to the harmonic content of natural stimuli, possibly through a harmonic arrangement of alternating excitatory and inhibitory inputs [23]. These studies demonstrate that spectral processing in the auditory system combines a linear, tonotopic representation of frequency with a nonlinear representation, which creates sensitivity to features of the spectral context outside of a neuron’s best frequency.

Modulation of Auditory Processing by Temporal Context

Just as the spectral context outside of the best frequency is integrated in cortical neurons, the temporal history of an acoustic waveform also impacts neural responses to subsequent stimuli. Sensitivity to temporal context is important for identifying auditory objects, allowing sequences of auditory stimuli to be perceptually grouped or separated based on their temporal properties [24,25] or for detecting novel or rare sounds by decreasing responsivity to redundant sounds [26,27].

In the auditory cortex, responses to a probe tone are suppressed by a preceding masking tone, a phenomenon known as forward suppression (Figure 2B) [11,28–31]. The magnitude of forward suppression depends on the frequency and intensity of the masker, creating a suppressive area that matches the frequency response area (FRA) of the neuron, and decays at long delays between the masker and probe (approximately 250 ms after masker onset) [11,28,29]. The suppressive effect of the masker is released with increasing probe intensities, suggesting a competitive interaction between excitatory responses to the probe and delayed inhibitory responses to the masker (Figure 2B) [30]. Whole-cell recordings show that inhibitory conductances elicited by the masker last only 50 to 100 ms, indicating the involvement of GABA-mediated synaptic inhibition at short timescales, but also suggesting that long-term synaptic depression may underlie suppression observed at longer delays [32]. Notably, there is considerable diversity in forward masking in awake mice, with mixtures of suppression and facilitation by the masker, and nonlinear relationships between responses to the masker and the probe, further implicating synaptic inhibition mediated by cortical interneurons as a potential mechanism for temporal context sensitivity [31].

However, prolonged stimulus history also affects neural sensitivity. Stimulus-specific adaptation (SSA) is one such phenomenon, in which neurons reduce their response to a tone frequently repeated over several seconds, but do not suppress their response to a rarely presented tone of a different frequency [27,33–37]. SSA typically occurs over the course of several seconds [35–37] and can be elicited by tones whose frequency difference is an order of magnitude smaller than typical cortical and auditory nerve tuning widths [33,36]. Curiously, this phenomenon results in frequency hyperacuity in single neurons which matches the psychophysical acuity of untrained humans in a frequency discrimination task [38]. Converging evidence suggests that SSA is mediated through a combination of feedforward synaptic depression and intra-cortical inhibition [27,39]. Parvalbumin-positive (PV) and somatostatin-positive (SOM) interneurons differentially contribute to SSA, with PVs providing non-specific inhibition to the frequent and rare tones, while SOMs selectively inhibit frequent tones in a manner that increases over time [27]. Taken together, these findings outline a key role for interneuron-mediated synaptic inhibition in adaptation to temporal context, providing a neural mechanism by which sound sequences and temporal events are encoded in auditory cortex.
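As a rough illustration of how SSA can be quantified, the sketch below computes a normalized contrast between responses to the rare (deviant) and frequent (standard) tone, an index of the kind commonly used in the SSA literature, and applies it to a toy oddball sequence. The toy model is an assumption made for illustration: it includes only a frequency-specific depressing input and omits the interneuron-mediated inhibition discussed above. The parameters `depletion` and `recovery` are hypothetical.

```python
def ssa_index(deviant_response, standard_response):
    """Normalized contrast between deviant and standard responses (range -1 to 1)."""
    total = deviant_response + standard_response
    if total == 0:
        raise ValueError("responses must not sum to zero")
    return (deviant_response - standard_response) / total


def mean(values):
    return sum(values) / len(values)


def simulate_oddball(n_tones=500, deviant_every=10, depletion=0.5, recovery=0.2):
    """Toy oddball sequence with one depressing input channel per tone frequency."""
    resources = {"standard": 1.0, "deviant": 1.0}
    responses = {"standard": [], "deviant": []}
    for i in range(n_tones):
        tone = "deviant" if (i + 1) % deviant_every == 0 else "standard"
        # Both channels recover toward 1 during the inter-tone interval.
        for name in resources:
            resources[name] += recovery * (1.0 - resources[name])
        # The response equals the resource available in the stimulated channel,
        # a fraction of which is then depleted by the tone.
        responses[tone].append(resources[tone])
        resources[tone] *= 1.0 - depletion
    return mean(responses["deviant"]), mean(responses["standard"])


deviant_mean, standard_mean = simulate_oddball()
si = ssa_index(deviant_mean, standard_mean)
```

In this sketch the index is positive because the frequently stimulated channel never fully recovers between presentations, whereas the rarely stimulated channel does.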

Spectrotemporal Context: Adapting to Noisy Environments

So far in this review, spectral and temporal context have been treated separately, although there is a clear interaction between frequency and time in forward suppression [11,28–31]. This interaction is well documented, and can be made explicit by quantifying spectrotemporal receptive fields (STRFs), in which neural responses are characterized by their sensitivity to sound intensity over both time and frequency [40,41]. Notably, incorporating local temporal and spectral stimulus context into an STRF-based model of neuronal responses to sounds not only improves predictions of activity, but suggests that context-specific changes in gain may underlie previously observed context effects such as two-tone or forward suppression [42,43]. This sensitivity to the local effects of frequency and time likely facilitates responses to combinations of spectrotemporal features [13,14]. Indeed, in nearly all natural soundscapes, the auditory background contains high order temporal and spectral characteristics [44,45]. To hear in these complex natural environments, it is necessary to encode the spectrotemporal statistics of the acoustic context to separate auditory streams, or, similarly, hear in the presence of background noise.
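To make the notion of an STRF concrete, the following sketch estimates one by spike-triggered averaging, a simple reverse-correlation estimator that is appropriate when the stimulus is zero-mean and uncorrelated across time and frequency. It is one possible estimator and not necessarily the procedure used in the cited studies. The simulated neuron, the stimulus dimensions, and all names are hypothetical.

```python
import random


def spike_triggered_average(spectrogram, response, n_lags):
    """Estimate an STRF as the response-weighted average of the preceding stimulus.

    spectrogram: list of time bins, each a list of zero-mean intensities per frequency.
    response: firing rate or spike count in each time bin.
    Returns strf[lag][frequency], where lag counts time bins into the past.
    """
    n_freq = len(spectrogram[0])
    strf = [[0.0] * n_freq for _ in range(n_lags)]
    total = 0.0
    for t in range(n_lags - 1, len(response)):
        if response[t] == 0:
            continue
        total += response[t]
        for lag in range(n_lags):
            frame = spectrogram[t - lag]
            for f in range(n_freq):
                strf[lag][f] += response[t] * frame[f]
    if total == 0:
        raise ValueError("response contains no activity")
    return [[weight / total for weight in row] for row in strf]


def peak_location(strf):
    """Return (lag, frequency_index) of the largest STRF weight."""
    indices = [(lag, f) for lag in range(len(strf)) for f in range(len(strf[0]))]
    return max(indices, key=lambda idx: strf[idx[0]][idx[1]])


# Simulated data: Gaussian noise stimulus and a hypothetical neuron that responds,
# with rectification, to frequency channel 2 presented one time bin earlier.
rng = random.Random(0)
n_time, n_freq = 2000, 4
stimulus = [[rng.gauss(0.0, 1.0) for _ in range(n_freq)] for _ in range(n_time)]
response = [0.0] + [max(0.0, stimulus[t - 1][2]) for t in range(1, n_time)]
strf = spike_triggered_average(stimulus, response, n_lags=3)
```

For this simulated neuron, the estimated STRF has its largest weight at a lag of one bin in frequency channel 2, recovering the dependence that was built into the simulation.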

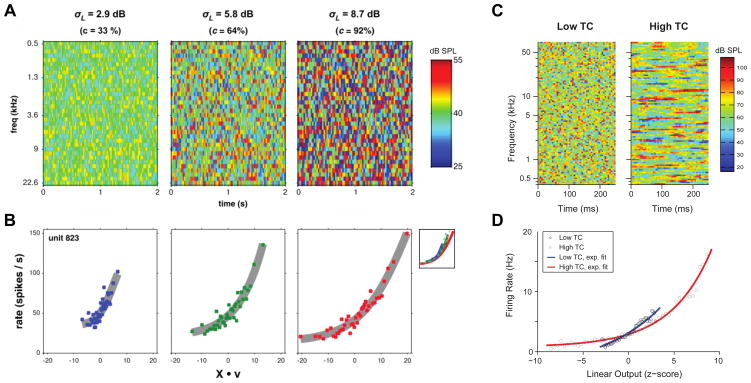

As discussed previously, stimulus-specific adaptation allows auditory cortex to reduce sensitivity to redundant stimuli [27,33,36]. Adaptive mechanisms are not limited to pure frequency, but can also account for broadband stimulus statistics over time, including stimulus volume [46] and contrast [47,48]. When presented with stimuli distributed at different volumes, neurons in the inferior colliculus (IC) adjust their rate-level functions to maximize sensitivity to sounds at the presented volume. On a population level, this is reflected by shifts in the mutual information contained in the rate-level responses towards intensities that are most commonly presented [46]. In auditory cortex, neurons adapt not only to stimulus level, but also to the dynamic range, or contrast, of the stimulus spectra over time (Figure 3A). To do this, neurons adjust the dynamic range of their response gain to nearly match the dynamic range of the stimulus (Figure 3B) [47,48]. Notably, gain adaptation accounts not only for contrast over spectral space, but also for contrast over time, in broadband stimuli with varied temporal correlations (Figure 3C, D) [49], creating invariance to changes in temporal modulation rate, commonly seen in speech and other natural sounds. Through these adaptive mechanisms, the auditory system adjusts nonlinearities in its response properties to account for consistent spectrotemporal statistics in broadband stimuli.

Figure 3.

Gain adaptation to spectrotemporal context. A) Spectrograms of dynamic random chord (DRC) stimuli used to manipulate stimulus contrast. σL and c denote the width of the uniform distributions from which tone levels were sampled, analogous to the spectrotemporal contrast in each DRC stimulus. Contrast increases from left to right, and the level of each tone in dB SPL is denoted by the color bar on the right. B) Nonlinear fits (grey lines) to predicted linear responses versus the observed rate responses to stimuli presented at low (blue), medium (green), and high (red) contrast levels. X·v denotes the convolution of the corresponding stimulus spectrogram (X) above with the normalized STRF (v) of the neuron. Note that the slopes of the nonlinear fits scale to maximize sensitivity in the range of intensity values present at each contrast level, demonstrating adaptation of neural dynamic range to account for the stimulus dynamic range. C) Spectrograms of DRCs generated with different levels of temporal correlation (TC) across adjacent chords. D) Nonlinear fits (solid lines) to predicted versus observed responses in low (blue) and high TC conditions (red). Response gain is high for stimuli with low TC, and low for stimuli with high TC. A and B adapted from Rabinowitz et al., 2011 [47], C and D adapted from Natan et al., 2017 [49].
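The idea of contrast gain adaptation can be sketched with a linear-nonlinear (LN) model whose output gain depends on stimulus contrast. In the sketch below, `linear_drive` stands in for the linear prediction X·v, and the sigmoid shape, the power-law gain rule, and the `compensation` parameter are illustrative assumptions rather than the fitted model of the cited work. Setting `compensation` to 1 gives complete contrast invariance, whereas the studies above report that neurons only nearly match the stimulus dynamic range.

```python
import math


def adaptive_gain(contrast, reference_contrast=1.0, compensation=1.0):
    """Gain that falls as contrast rises (assumed power-law rule).

    compensation = 1 fully offsets contrast changes; 0 means no adaptation.
    """
    if contrast <= 0 or reference_contrast <= 0:
        raise ValueError("contrast values must be positive")
    return (reference_contrast / contrast) ** compensation


def ln_response(linear_drive, contrast, max_rate=50.0, compensation=1.0):
    """Sigmoidal output nonlinearity applied to a gain-scaled linear drive."""
    gain = adaptive_gain(contrast, compensation=compensation)
    return max_rate / (1.0 + math.exp(-gain * linear_drive))


# The same relative stimulus fluctuation (z = 0.5) presented at low and high contrast:
# the linear drive scales with contrast, but the adapted gain scales inversely.
z = 0.5
low = ln_response(z * 1.0, contrast=1.0)
high = ln_response(z * 4.0, contrast=4.0)
high_no_adapt = ln_response(z * 4.0, contrast=4.0, compensation=0.0)
```

With full compensation, `low` and `high` coincide, so the neuron spends its output range on the intensities actually present. Without adaptation, the high-contrast stimulus pushes the response toward saturation.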

Gain adaptation is useful in generating responses that are invariant to persistent statistics of auditory environments, a processing feature which may underlie our ability to hear in the presence of noise [48,50,51]. In the anesthetized ferret, responses to speech in noise become increasingly noise invariant from IC to AC, a phenomenon that correlates with estimates of level and contrast adaptation in each region [48]. Further work explicitly modeling these adaptive mechanisms in terms of subtractive synaptic depression and divisive normalization significantly improves AC response predictions and stimulus reconstructions from population responses to noise-corrupted stimuli compared to static LN models [50,52,53]. These results indicate that, in environments with persistent background statistics, neural adaptation reduces responses to the background to optimally encode stimuli with different spectrotemporal profiles.

It is worth noting that many natural environments, such as a cocktail party or loud crowd, have noise backgrounds that fluctuate, or are a superposition of stimuli with statistics similar to the signal of interest. For complicated sounds, such as vocalizations, sensory representations are modified between primary and secondary auditory areas, generating invariance to acoustic distortions of these complex signals [54,55]. Similarly, in high-level human and avian auditory areas, responses to vocalizations embedded in multi-speaker choruses are background invariant, and strongly reflect behavioral detection of the attended speaker [56,57]. These mixtures of complex background sounds often contain coherently modulated power across different frequency bands [44]. This general property of auditory scenes may underlie co-modulation masking release (CMR), a psychophysical phenomenon in which co-modulated background noise facilitates the detection of embedded signals [58]. When presented with tones in co-modulated noise, auditory cortex strongly locks to the noise modulation envelope, but increases sensitivity to embedded tones by suppressing noise locking during the tone, providing a potential neural substrate for CMR, and behavioral detection of sounds in complex environments [44,59,60].

Modulation of Auditory Processing by Behavioral Context

So far, we have discussed how external stimulus context affects auditory coding; however, what we hear in an acoustic scene depends not only on the spectrotemporal properties of the sound reaching our ears, but also on how our movements, attentional state and behavioral goals relate to those auditory inputs. An optimal auditory system generates stimulus representations that facilitate adaptive behaviors; as such, how coding of acoustic stimuli is modulated by behavior is of fundamental importance in our understanding of auditory processing.

The auditory cortex is highly sensitive to behavioral state, showing suppression mediated by PV interneurons during locomotion [61,62], an effect which is likely involved in auditory-motor learning [63] and the suppression of self-generated sounds [64,65]. During auditory tasks, intermediate, but not low or high, arousal levels (as assayed by pupillometry, locomotion and hippocampal activity) improve the signal-to-noise ratio of sound-evoked responses [66], providing a neural substrate for the inverted-U relationship between arousal and task performance [67]. These findings suggest that auditory responses rely not only on the external sounds reaching the ear, but also on the behavioral and internal state of the subject.

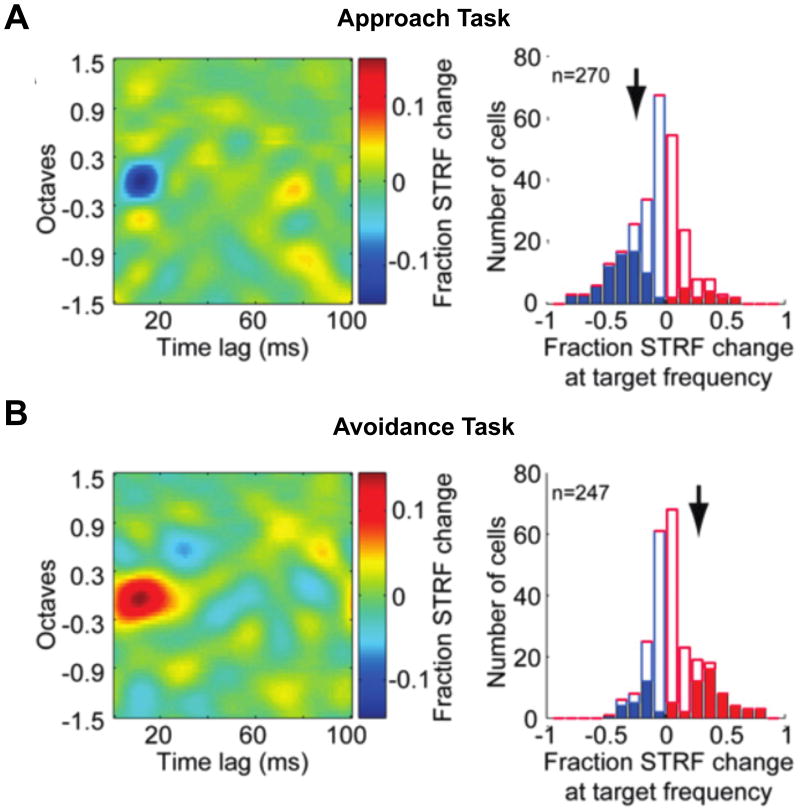

Recent findings demonstrate that populations of neurons in auditory cortex maintain relatively stable receptive field parameters in response to a wide array of acoustic stimuli [68]. However, during active engagement in a behavioral task, cortical receptive fields change to maximize behavioral outcomes by improving encoding of relevant stimulus features. A popular paradigm to assay the neural effects of task engagement is to record stimulus responses during active (rewarded) and passive (non-rewarded or randomly rewarded) behavioral contexts. Using a trained tone detection task, Fritz and colleagues manipulated reward contingencies between passive and active behavioral contexts while recording from single units in auditory cortex. Estimating STRFs in each context revealed plasticity in the cortical representation of the target stimulus, such that responses to the target frequency were greatly elevated in the active context [69] while responses to non-target stimuli were depressed [70]. These behaviorally-driven changes in auditory coding also generalize to more complex sounds, such as tone sequences [71] and distorted speech [72]. Notably, these effects depend on the reward contingency, with enhanced responses to targets requiring behavioral avoidance, but suppressed responses to targets requiring approach behaviors [71,73], demonstrating considerable cortical adaptability to task demands while conserving neural discrimination of task-relevant frequency information.

While reward contingencies have a clear effect on tuning during frequency discrimination tasks, it was not clear whether these coding properties would hold for more complex stimuli. Using amplitude modulation (AM) discrimination tasks, Niwa and colleagues found that neural responses in AC increase both their firing rate and phase-locking to AM stimuli during the active behavioral context, thus increasing the amount of information about the attended amplitude modulations. While changes in rate and phase-locking are both correlated with behavioral performance, changes in rate are more affected by task engagement and are more correlated with behavior, implicating a multiplexed temporal- and rate-code that is dominated by firing rate changes during behavior (Figure 4) [74,75]. On a population level, neurons with similar AM tuning decrease their noise correlations during active engagement, while noise correlations in neurons with dissimilar AM tuning are unaffected, suggesting that AC selectively modulates population variability to maximize sensory discrimination [76]. Taken together, these results demonstrate that auditory cortex is highly plastic, rapidly adjusting its single-unit and population response properties to optimally encode stimulus features that are relevant to the current behavioral task.
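Two of the quantities discussed above, phase-locking to an amplitude modulation and pairwise noise correlation, can be computed as in the sketch below. Vector strength is a standard measure of phase-locking, and noise correlation is taken here as the Pearson correlation of trial-by-trial spike counts from two neurons across repeats of the same stimulus. The function names and example values are hypothetical, and the cited studies may have used different estimators or normalization.

```python
import cmath
import math


def vector_strength(spike_times, modulation_freq):
    """Phase-locking of spikes (times in s) to a modulation frequency (Hz).

    Returns 1 when every spike falls at the same modulation phase and
    approaches 0 when spikes are spread evenly over the modulation cycle.
    """
    if not spike_times:
        raise ValueError("spike_times must not be empty")
    phasors = [cmath.exp(2j * math.pi * modulation_freq * t) for t in spike_times]
    return abs(sum(phasors)) / len(spike_times)


def noise_correlation(counts_a, counts_b):
    """Pearson correlation of spike counts across repeats of one stimulus."""
    n = len(counts_a)
    if n != len(counts_b) or n < 2:
        raise ValueError("need two equal-length sequences with at least 2 trials")
    mean_a = sum(counts_a) / n
    mean_b = sum(counts_b) / n
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(counts_a, counts_b))
    var_a = sum((a - mean_a) ** 2 for a in counts_a)
    var_b = sum((b - mean_b) ** 2 for b in counts_b)
    if var_a == 0 or var_b == 0:
        raise ValueError("spike counts must vary across trials")
    return cov / math.sqrt(var_a * var_b)


# Hypothetical spike trains relative to a 10 Hz amplitude modulation.
locked = vector_strength([0.0, 0.1, 0.2, 0.3], 10.0)       # one spike per cycle
spread = vector_strength([0.0, 0.025, 0.05, 0.075], 10.0)  # four evenly spaced phases

# Hypothetical spike counts of two neurons over four repeats of one stimulus.
shared = noise_correlation([1, 2, 3, 4], [2, 4, 6, 8])
opposed = noise_correlation([1, 2, 3, 4], [8, 6, 4, 2])
```

Comparing these measures between passive and active conditions, for the same neurons and stimuli, is one way to express the changes in temporal coding and shared variability described above.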

Figure 4.

STRF plasticity during behavior. A) Approach task. During behavior, animals received rewards when licking during a target tone. Left: Population averaged STRF changes between active and passive conditions. STRFs were aligned to the frequency of the target tone. Blue indicates a suppression relative to the passive condition, while red indicates excitation. Note that during the approach task, there is a prominent suppression at the target frequency. Right: Cell counts indicating the population level STRF change at the target frequency. Blue bars indicate suppressed responses while red bars indicate enhanced responses. Filled bars indicate units showing significant modulation by behavioral context. Arrows denote the median change in significant units. B) Avoidance task. During behavior, animals received rewards when licking during reference noise bursts, but not during the target stimulus. Plots as in A). Note that during the approach task, there is significant suppression at the target, while during the avoidance task, there is significant enhancement at the target. Figures adapted from David et al., 2012 [73].

Conclusion

Natural acoustic scenes are highly complex, consisting of stimuli with complex spectral and temporal profiles that can occur in noisy environments and have different behavioral meanings. To handle this complexity, our auditory system has evolved considerable sensitivity to spectrotemporal and behavioral context. Beyond a simple spectral representation, the auditory system demonstrates nonlinear sensitivity to temporal and spectral context, often employing network-level mechanisms, such as cross-band and temporally adaptive inhibition, to modulate stimulus responses across time and frequency. Notably, as neuroscience employs more experiments in awake and behaving animals, it is important to consider the effects of behavioral context; arousal state and reward contingency have substantial effects on sensory responses, revealing highly plastic stimulus representations that optimize sensory discrimination depending on the task demands. On a circuit level, it is not yet known how the auditory system modulates responses to sensory and behavioral contexts, providing a rich avenue for future investigation.

Acknowledgments

This work was supported by National Institutes of Health (Grant numbers NIH R03DC013660, NIH R01DC014700, NIH R01DC015527), Klingenstein Award in Neuroscience, Human Frontier in Science Foundation Young Investigator Award and the Pennsylvania Lions Club Hearing Research Fellowship to MNG. MNG is the recipient of the Burroughs Wellcome Award at the Scientific Interface. CA is supported by 1F31DC016524. We thank the members of the Geffen laboratory and the Hearing Research Center at the University of Pennsylvania, including Dr. Steve Eliades, Dr. Yale Cohen, Dr. Mark Aizenberg, Dr. Katherine Wood, and Jennifer Blackwell for helpful discussions.

Footnotes

The authors declare that they have no conflicts of interest with respect to the work described in the manuscript.

References

- 1.von Békésy G. Experiments in hearing. McGraw-Hill; 1960. [Google Scholar]

- 2.Ruggero MA. The Mammalian Auditory Pathway: Neurophysiology. Springer; New York, NY: 1992. Physiology and Coding of Sound in the Auditory Nerve; pp. 34–93. [Google Scholar]

- 3.Rhode WS. Some observations on two-tone interaction measured with the Mössbauer effect. Psychophys Physiol Hear. 1977 [Google Scholar]

- 4.Robles L, Ruggero MA, Rich NC. Cochlear Mechanisms: Structure, Function, and Models. Springer; US: 1989. Nonlinear Interactions in the Mechanical Response of the Cochlea to Two-Tone Stimuli; pp. 369–375. [Google Scholar]

- 5.Ruggero MA, Robles L, Rich NC. Two-Tone Suppression in the Basilar Membrane of the Cochlea: Mechanical Basis of Auditory-Nerve Rate Suppression. JOURNALOF Neurophysiol. 1992:68. doi: 10.1152/jn.1992.68.4.1087. [DOI] [PubMed] [Google Scholar]

- 6.Phillips DP, Irvine DR. Responses of single neurons in physiologically defined primary auditory cortex (AI) of the cat: frequency tuning and responses to intensity. J Neurophysiol. 1981;45:48–58. doi: 10.1152/jn.1981.45.1.48. [DOI] [PubMed] [Google Scholar]

- 7.Phillips DP, Cynader MS. Some neural mechanisms in the cat’s auditory cortex underlying sensitivity to combined tone and wide-spectrum noise stimuli. Hear Res. 1985;18:87–102. doi: 10.1016/0378-5955(85)90112-1. [DOI] [PubMed] [Google Scholar]

- 8.Sutter ML, Schreiner CE. Physiology and topography of neurons with multipeaked tuning curves in cat primary auditory cortex. J Neurophysiol. 1991;65:1207–26. doi: 10.1152/jn.1991.65.5.1207. [DOI] [PubMed] [Google Scholar]

- 9.Kanwal JS, Matsumura S, Ohlemiller K, Suga N. Analysis of acoustic elements and syntax in communication sounds emitted by mustached bats. J Acoust Soc Am. 1994;96:1229–1254. doi: 10.1121/1.410273. [DOI] [PubMed] [Google Scholar]

- 10.Shamma SA, Fleshman JW, Wiser PR, Versnel H. Organization of response areas in ferret primary auditory cortex. J Neurophysiol. 1993;69:367–383. doi: 10.1152/jn.1993.69.2.367. [DOI] [PubMed] [Google Scholar]

- 11.Calford MB, Semple MN. Monaural inhibition in cat auditory cortex. J Neurophysiol. 1995;73:1876–1891. doi: 10.1152/jn.1995.73.5.1876. [DOI] [PubMed] [Google Scholar]

- 12.Kadia SC, Wang X. Spectral integration in A1 of awake primates: neurons with single- and multipeaked tuning characteristics. J Neurophysiol. 2003;89:1603–22. doi: 10.1152/jn.00271.2001. [DOI] [PubMed] [Google Scholar]

- 13.Suga N, O’Neill WE, Kujirai K, Manabe T. Specificity of combination-sensitive neurons for processing of complex biosonar signals in auditory cortex of the mustached bat. J Neurophysiol. 1983;49:1573–1626. doi: 10.1152/jn.1983.49.6.1573. [DOI] [PubMed] [Google Scholar]

- 14.Suga N, Simmons JA, Jen PH, O’Neill WE, Manabe T. Cortical neurons sensitive to combinations of information-bearing elements of biosonar signals in the mustache bat. Science. 1978;200:778–81. doi: 10.1126/science.644320. [DOI] [PubMed] [Google Scholar]

- 15.Phillips DP, Orman SS, Musicant AD, Wilson GF. Neurons in the cat’s primary auditory cortex distinguished by their responses to tones and wide-spectrum noise. Hewing Res. 1985;18:73–86. doi: 10.1016/0378-5955(85)90111-x. [DOI] [PubMed] [Google Scholar]

- 16.Sommers MS, Moody DB, Prosen CA, Stebbins WC. Formant frequency discrimination by Japanese macaques (Macaca fuscata) J Acoust Soc Am. 1992;91:3499–510. doi: 10.1121/1.402839. [DOI] [PubMed] [Google Scholar]

- 17.Fitch WT, Neubauer J, Herzel H. Calls out of chaos: the adaptive significance of nonlinear phenomena in mammalian vocal production. Anim Behav. 2002;63:407–418. [Google Scholar]

- 18.Ehret G, Riecke S. Mice and humans perceive multiharmonic communication sounds in the same way. Proc Natl Acad Sci U S A. 2002;99:479–482. doi: 10.1073/pnas.012361999. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 19.Houtsma AJM, Smurzynski J. Pitch identification and discrimination for complex tones with many harmonics. J Acoust Soc Am. 1990;87:304–310. [Google Scholar]

- 20.de Cheveigné A, Mcadams S, Laroche J, Rosenberg M. Identification of concurrent harmonic and inharmonic vowels: A test of the theory of harmonic cancellation and enhancement. J Acoust Soc Am. 1995;97:3736–3748. doi: 10.1121/1.412389. [DOI] [PubMed] [Google Scholar]

- 21.Bendor D, Wang X. The neuronal representation of pitch in primate auditory cortex. Nature. 2005;436:1161–1165. doi: 10.1038/nature03867. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Bizley JK, Walker KMM, Nodal FR, King AJ, Schnupp JWH. Auditory Cortex Represents Both Pitch Judgments and the Corresponding Acoustic Cues. 2013 doi: 10.1016/j.cub.2013.03.003. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Feng L, Wang X. Harmonic template neurons in primate auditory cortex underlying complex sound processing. Proc Natl Acad Sci U S A. 2017 doi: 10.1073/pnas.1607519114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 24.Fishman YI, Reser DH, Arezzo JC, Steinschneider M. Neural correlates of auditory stream segregation in primary auditory cortex of the awake monkey. Hear Res. 2001;151:167–187. doi: 10.1016/s0378-5955(00)00224-0. [DOI] [PubMed] [Google Scholar]

- 25.Fishman YI, Arezzo JC, Steinschneider M. Auditory stream segregation in monkey auditory cortex: effects of frequency separation, presentation rate, and tone duration. J Acoust Soc Am. 2004;116:1656–1670. doi: 10.1121/1.1778903. [DOI] [PubMed] [Google Scholar]

- 26.Nelken I. Stimulus-specific adaptation and deviance detection in the auditory system: experiments and models. Biol Cybern. 2014;108:655–663. doi: 10.1007/s00422-014-0585-7. [DOI] [PubMed] [Google Scholar]

- 27.Natan RG, Briguglio JJ, Mwilambwe-Tshilobo L, Jones SI, Aizenberg M, Goldberg EM, Geffen MN. Complementary control of sensory adaptation by two types of cortical interneurons. Elife. 2015;4:163–174. doi: 10.7554/eLife.09868. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 28.Brosch M, Schreiner CE. Time course of forward masking tuning curves in cat primary auditory cortex. J Neurophysiol. 1997;77:923–43. doi: 10.1152/jn.1997.77.2.923. [DOI] [PubMed] [Google Scholar]

- 29.Brosch M, Schulz A, Scheich H. Processing of sound sequences in macaque auditory cortex: response enhancement. J Neurophysiol. 1999;82:1542–1559. doi: 10.1152/jn.1999.82.3.1542. [DOI] [PubMed] [Google Scholar]

- 30.Scholl B, Gao X, Wehr M. Level dependence of contextual modulation in auditory cortex. J Neurophysiol. 2008;99:1616–1627. doi: 10.1152/jn.01172.2007. [DOI] [PubMed] [Google Scholar]

- 31.Phillips EAK, Schreiner CE, Hasenstaub AR. Diverse effects of stimulus history in waking mouse auditory cortex. J Neurophysiol. 2017 doi: 10.1152/jn.00094.2017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 32.Wehr MS, Zador AM. Balanced inhibition underlies tuning and sharpens spike timing in auditory cortex. Nature. 2003;426:442–6. doi: 10.1038/nature02116. [DOI] [PubMed] [Google Scholar]

- 33.Condon CD, Weinberger NM. Habituation produces frequency-specific plasticity of receptive fields in the auditory cortex. Behav Neurosci. 1991;105:416–430. doi: 10.1037//0735-7044.105.3.416. [DOI] [PubMed] [Google Scholar]

- 34.Sanes DH, Malone BJ, Semple MN. Role of synaptic inhibition in processing of dynamic binaural level stimuli. J Neurosci. 1998;18:794–803. doi: 10.1523/JNEUROSCI.18-02-00794.1998. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Malone BJ, Semple MN. Effects of auditory stimulus context on the representation of frequency in the gerbil inferior colliculus. J Neurophysiol. 2001;86:1113–30. doi: 10.1152/jn.2001.86.3.1113. [DOI] [PubMed] [Google Scholar]

- 36.Ulanovsky N, Las L, Nelken I. Processing of low-probability sounds by cortical neurons. Nat Neurosci. 2003;6:391–398. doi: 10.1038/nn1032.

- 37.Ulanovsky N, Las L, Farkas D, Nelken I. Multiple Time Scales of Adaptation in Auditory Cortex Neurons. J Neurosci. 2004;24:10440–10453. doi: 10.1523/JNEUROSCI.1905-04.2004.

- 38.Ahissar M, Protopapas A, Reid M, Merzenich MM. Auditory processing parallels reading abilities in adults. Proc Natl Acad Sci U S A. 2000;97:6832–6837. doi: 10.1073/pnas.97.12.6832.

- 39.Chen I-W, Helmchen F, Lutcke H. Specific Early and Late Oddball-Evoked Responses in Excitatory and Inhibitory Neurons of Mouse Auditory Cortex. J Neurosci. 2015;35:12560–12573. doi: 10.1523/JNEUROSCI.2240-15.2015.

- 40.DeCharms RC, Blake DT, Merzenich MM. Optimizing sound features for cortical neurons. Science. 1998;280:1439–1443. doi: 10.1126/science.280.5368.1439.

- 41.Klein DJ, Depireux DA, Simon JZ, Shamma SA. Robust spectrotemporal reverse correlation for the auditory system: Optimizing stimulus design. J Comput Neurosci. 2000;9:85–111. doi: 10.1023/a:1008990412183.

- 42.Ahrens MB, Linden JF, Sahani M. Nonlinearities and contextual influences in auditory cortical responses modeled with multilinear spectrotemporal methods. J Neurosci. 2008;28:1929–1942. doi: 10.1523/JNEUROSCI.3377-07.2008.

- **43.Williamson RS, Ahrens MB, Linden JF, Sahani M. Input-Specific Gain Modulation by Local Sensory Context Shapes Cortical and Thalamic Responses to Complex Sounds. Neuron. 2016;91:467–81. doi: 10.1016/j.neuron.2016.05.041. This paper demonstrates through an elegant computational model that gain modulation improves response predictions of neurons in the auditory pathway.

- 44.Nelken I, Rotman Y, Bar Yosef O. Responses of auditory-cortex neurons to structural features of natural sounds. Nature. 1999;397:154–7. doi: 10.1038/16456.

- 45.Rodriguez FA, Chen C, Read HL, Escabi MA. Neural Modulation Tuning Characteristics Scale to Efficiently Encode Natural Sound Statistics. J Neurosci. 2010;30:15969–15980. doi: 10.1523/JNEUROSCI.0966-10.2010.

- *46.Dean I, Harper NS, McAlpine D. Neural population coding of sound level adapts to stimulus statistics. Nat Neurosci. 2005;8:1684–1689. doi: 10.1038/nn1541. This paper finds adaptation to level and contrast in the auditory midbrain.

- *47.Rabinowitz NC, Willmore BDB, Schnupp JWH, King AJ. Contrast Gain Control in Auditory Cortex. Neuron. 2011;70:1178–1191. doi: 10.1016/j.neuron.2011.04.030. This paper finds that neurons in auditory cortex exhibit gain control to sound contrast.

- 48.Rabinowitz NC, Willmore BDB, King AJ, Schnupp JWH. Constructing Noise-Invariant Representations of Sound in the Auditory Pathway. PLoS Biol. 2013;11:e1001710. doi: 10.1371/journal.pbio.1001710.

- **49.Natan RG, Carruthers IM, Mwilambwe-Tshilobo L, Geffen MN. Gain Control in the Auditory Cortex Evoked by Changing Temporal Correlation of Sounds. Cereb Cortex. 2017;27:2385–2402. doi: 10.1093/cercor/bhw083. This paper finds that neurons in the auditory cortex exhibit gain control not only to stimulus contrast, but also to the temporal correlation structure.

- 50.Mesgarani N, David SV, Fritz JB, Shamma SA. Mechanisms of noise robust representation of speech in primary auditory cortex. Proc Natl Acad Sci U S A. 2014;111:6792–7. doi: 10.1073/pnas.1318017111.

- 51.Willmore BDB, Cooke JE, King AJ. Hearing in noisy environments: noise invariance and contrast gain control. J Physiol. 2014;592:3371–3381. doi: 10.1113/jphysiol.2014.274886.

- 52.Rabinowitz NC, Willmore BDB, Schnupp JWH, King AJ. Spectrotemporal Contrast Kernels for Neurons in Primary Auditory Cortex. J Neurosci. 2012;32:11271–11284. doi: 10.1523/JNEUROSCI.1715-12.2012.

- 53.Willmore BDB, Schoppe O, King AJ, Schnupp JWH, Harper NS. Incorporating Midbrain Adaptation to Mean Sound Level Improves Models of Auditory Cortical Processing. J Neurosci. 2016;36:280–289. doi: 10.1523/JNEUROSCI.2441-15.2016.

- *54.Carruthers IM, Laplagne DA, Jaegle A, Briguglio J, Mwilambwe-Tshilobo L, Natan RG, Geffen MN. Emergence of invariant representation of vocalizations in the auditory cortex. J Neurophysiol. 2015 doi: 10.1152/jn.00095.2015. This paper identifies a transformation toward a more invariant code in the representation of vocalizations between the primary and non-primary auditory cortex.

- 55.Carruthers IM, Natan RG, Geffen MN. Encoding of ultrasonic vocalizations in the auditory cortex. J Neurophysiol. 2013;109:1912–1927. doi: 10.1152/jn.00483.2012.

- 56.Mesgarani N, Chang EF. Selective cortical representation of attended speaker in multi-talker speech perception. Nature. 2012;485:233–6. doi: 10.1038/nature11020.

- *57.Schneider D, Woolley SMN. Sparse and Background-Invariant Coding of Vocalizations in Auditory Scenes. Neuron. 2013;79:141–152. doi: 10.1016/j.neuron.2013.04.038. This paper identifies neurons in the songbird auditory pathway that exhibit responses to vocalizations that are robust to background noise.

- 58.Hall JW, Haggard MP, Fernandes MA. Detection in noise by spectro-temporal pattern analysis. J Acoust Soc Am. 1984;76:50–6. doi: 10.1121/1.391005.

- 59.Las L, Stern EA, Nelken I. Representation of Tone in Fluctuating Maskers in the Ascending Auditory System. J Neurosci. 2005;25:1503–1513. doi: 10.1523/JNEUROSCI.4007-04.2005.

- 60.Hershenhoren I, Nelken I. Detection of Tones Masked by Fluctuating Noise in Rat Auditory Cortex. Cereb Cortex. 2016 doi: 10.1093/cercor/bhw295.

- 61.Nelson A, Schneider DM, Takatoh J, Sakurai K, Wang F, Mooney R. A Circuit for Motor Cortical Modulation of Auditory Cortical Activity. J Neurosci. 2013;33:14342–14353. doi: 10.1523/JNEUROSCI.2275-13.2013.

- **62.Schneider DM, Nelson A, Mooney R. A synaptic and circuit basis for corollary discharge in the auditory cortex. Nature. 2014;513:189–94. doi: 10.1038/nature13724. This paper identifies the neuronal circuit for motor-evoked modulation of auditory responses in A1.

- 63.Schneider DM, Mooney R. Motor-related signals in the auditory system for listening and learning. Curr Opin Neurobiol. 2015;33:78–84. doi: 10.1016/j.conb.2015.03.004.

- 64.Eliades SJ, Wang X. Contributions of sensory tuning to auditory-vocal interactions in marmoset auditory cortex. Hear Res. 2017 doi: 10.1016/j.heares.2017.03.001.

- 65.Eliades SJ, Wang X. Neural substrates of vocalization feedback monitoring in primate auditory cortex. Nature. 2008;453:1102–1106. doi: 10.1038/nature06910.

- 66.McGinley MJ, David SV, McCormick DA. Cortical Membrane Potential Signature of Optimal States for Sensory Signal Detection. Neuron. 2015;87:179–192. doi: 10.1016/j.neuron.2015.05.038.

- 67.McGinley MJ, Vinck M, Reimer J, Batista-Brito R, Zagha E, Cadwell CR, Tolias AS, Cardin JA, McCormick DA. Waking State: Rapid Variations Modulate Neural and Behavioral Responses. Neuron. 2015;87:1143–1161. doi: 10.1016/j.neuron.2015.09.012.

- 68.Blackwell JM, Taillefumier TO, Natan RG, Carruthers IM, Magnasco MO, Geffen MN. Stable encoding of sounds over a broad range of statistical parameters in the auditory cortex. Eur J Neurosci. 2016;43:751–764. doi: 10.1111/ejn.13144.

- 69.Fritz J, Shamma S, Elhilali M, Klein D. Rapid task-related plasticity of spectrotemporal receptive fields in primary auditory cortex. Nat Neurosci. 2003;6:1216–1223. doi: 10.1038/nn1141.

- 70.Fritz JB, Elhilali M, Shamma SA. Differential dynamic plasticity of A1 receptive fields during multiple spectral tasks. J Neurosci. 2005;25:7623–35. doi: 10.1523/JNEUROSCI.1318-05.2005.

- 71.Yin P, Fritz JB, Shamma SA. Rapid spectrotemporal plasticity in primary auditory cortex during behavior. J Neurosci. 2014;34:4396–408. doi: 10.1523/JNEUROSCI.2799-13.2014.

- 72.Holdgraf CR, de Heer W, Pasley B, Rieger J, Crone N, Lin JJ, Knight RT, Theunissen FE. Rapid tuning shifts in human auditory cortex enhance speech intelligibility. Nat Commun. 2016;7:13654. doi: 10.1038/ncomms13654.

- **73.David SV, Fritz JB, Shamma SA. Task reward structure shapes rapid receptive field plasticity in auditory cortex. Proc Natl Acad Sci U S A. 2012;109:2144–9. doi: 10.1073/pnas.1117717109. This paper identifies bidirectional, task-driven changes in the receptive fields of neurons in the primary auditory cortex.

- 74.Niwa M, Johnson JS, O’Connor KN, Sutter ML. Activity Related to Perceptual Judgment and Action in Primary Auditory Cortex. J Neurosci. 2012;32:3193–3210. doi: 10.1523/JNEUROSCI.0767-11.2012.

- 75.Niwa M, Johnson JS, O’Connor KN, Sutter ML. Active Engagement Improves Primary Auditory Cortical Neurons’ Ability to Discriminate Temporal Modulation. J Neurosci. 2012;32:9323–9334. doi: 10.1523/JNEUROSCI.5832-11.2012.

- *76.Downer JD, Niwa M, Sutter ML. Task engagement selectively modulates neural correlations in primary auditory cortex. J Neurosci. 2015;35:7565–74. doi: 10.1523/JNEUROSCI.4094-14.2015. This paper finds that correlations in neuronal responses are affected by engagement in an auditory task.