Abstract

Pathology images capture tumor histomorphological details in high resolution. However, manual detection and characterization of tumor regions in pathology images is labor intensive and subjective. Using a deep convolutional neural network (CNN), we developed an automated tumor region recognition system for lung cancer pathology images. From the identified tumor regions, we extracted 22 well-defined shape and boundary features and found that 15 of them were significantly associated with patient survival outcome in lung adenocarcinoma patients from the National Lung Screening Trial. A tumor region shape-based prognostic model was developed and validated in an independent patient cohort (n = 389). The predicted high-risk group had significantly worse survival than the low-risk group (p value = 0.0029). Predicted risk group serves as an independent prognostic factor (high-risk vs. low-risk, hazard ratio = 2.25, 95% CI 1.34–3.77, p value = 0.0022) after adjusting for age, gender, smoking status, and stage. This study provides new insights into the relationship between tumor shape and patient prognosis.

Introduction

Lung cancer is the leading cause of death from cancer, with about half of all cases comprised of lung adenocarcinoma (ADC), which is remarkably heterogeneous in morphological features1,2 and highly variable in prognosis. Through sophisticated visual inspection of tumor pathology images, ADC can be further classified into different subtypes with drastically different prognoses. Some contributing morphological features have been recognized, such as tumor size or vascular invasion in lung ADC. However, there is a lack of systematic studies on the relationship between tumor shape in pathology images and patient prognosis.

Tumor tissue image scanning is becoming part of routine clinical practice for the acquisition of high resolution tumor histological details. In recent years, several computer algorithms for hematoxylin and eosin (H&E) stained pathology image analysis have been developed to aid pathologists in objective clinical diagnosis and prognosis3–7. Examples include an algorithm to extract stromal features8 and an algorithm to assess cellular heterogeneity6 as a prognostic factor in breast cancer. More recently, studies have shown that morphological features are associated with patient prognosis in lung cancer as well4,5,7. Deep learning methods, such as convolutional neural networks (CNNs), have been widely used in image segmentation, object classification and recognition9–11 and are now being adapted in biomedical image analysis to facilitate cancer diagnosis. To some extent, the performances of deep learning algorithms are similar to, or sometimes even better than, those of humans12,13. For analysis of H&E-stained pathology images, deep learning methods have been developed to distinguish tumor regions14, detect metastasis15, predict mutation status16, and classify tumors17 in breast cancer as well as in other cancers. However, due to the complexity of lung cancer tissue structures (such as microscopic alveoli and micro-vessel), deep learning methods for automatic lung cancer region detection from H&E-stained pathology images are not currently available.

Automatic tumor region detection allows for tumor size calculation and tumor shape estimation. Tumor size is a well-established lung cancer prognostic factor18–20; the effect of tumor shape has also been investigated in regard to its relationship with drug delivery21,22 and prognosis prediction23–28. In X-Ray and computer tomography (CT) image studies, the rough tumor boundary has been reported as a marker for malignant tumor in breast cancer29, and found to be associated with local tumor progression and worse prognosis in lung cancer patients24,28. Compared with CT images, which are most commonly used to evaluate tumor shape, pathology images have much higher spatial resolution30. Thus, automatic tumor region detection in pathology images allows us to better characterize tumor region boundaries and extract tumor shape and boundary-based features.

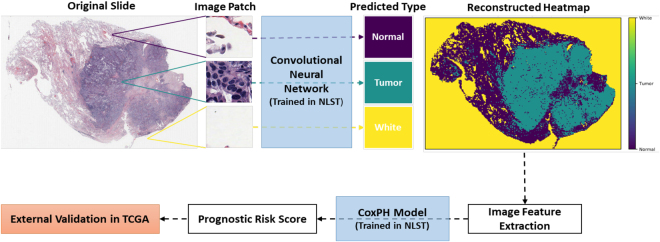

In this study, we developed a deep CNN model to automatically recognize tumor regions for lung ADC from H&E pathology images. More importantly, based on a systematic study of the detected tumor regions of lung ADC patients from the National Lung Screening Trial (NLST) cohort (n = 150), we found that many features that characterize the shape of the tumors are significantly associated with tumor prognosis. Finally, we developed a risk-prediction model for lung cancer prognosis using the tumor shape-related features from the NLST lung ADC patient cohort. The prognostic model was then validated in lung ADC patients from The Cancer Genome Atlas (TCGA) dataset. The design of this study is summarized in a flow chart (Fig. 1).

Figure 1.

Flow chart of analysis process. CNN, convolutional neural network; NLST, the National Lung Screening Trial; TCGA, The Cancer Genome Atlas.

Results

CNN model distinguishes tumor patches from non-malignant and white (empty region) patches

5344 tumor, non-malignant, and white image patches were extracted from 27 lung ADC H&E pathology images (Supplemental Fig. 1). The image patches were split into training, validation, and testing datasets (see Methods section). The CNN model was trained on the training set. The training process stopped at the 28th epoch after validation accuracy failed to improve after 10 epochs. The learning curves for the CNN model in the training and validation sets are shown in Supplemental Fig. 2. The overall prediction accuracy of the CNN model in the testing set was 89.8%; the accuracy was 88.1% for tumor patches and 93.5% for non-malignant patches (Supplemental Table 1).

Tumor region recognition for pathology images

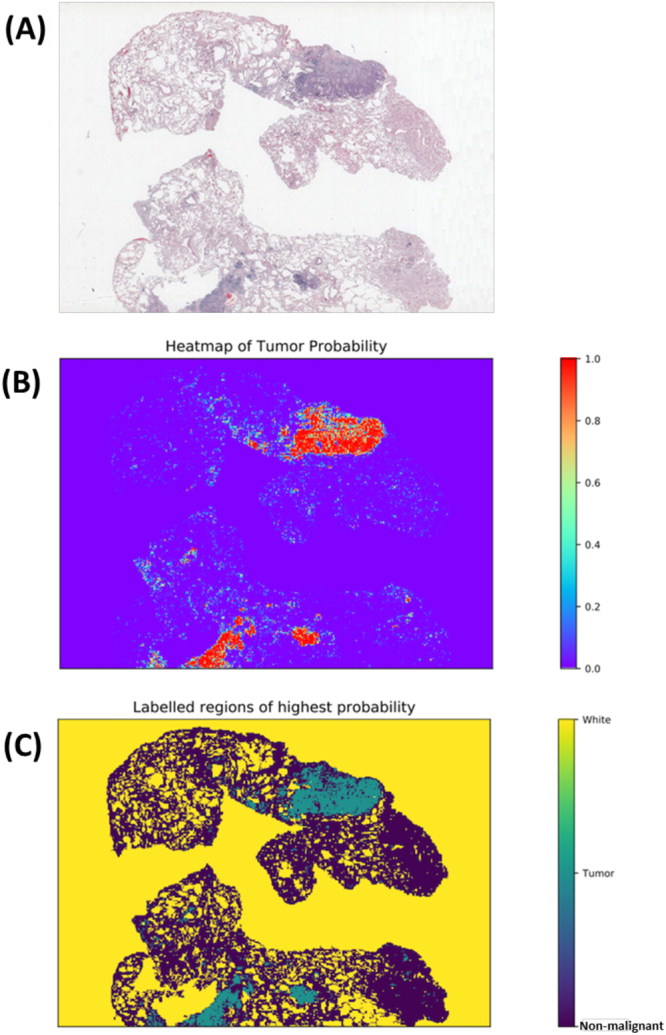

In the NLST dataset, the pathology images have sizes ranging from 5280 × 4459 pixels to 36966 × 22344 pixels (median 24244 × 19261 pixels). To identify tumor regions, each image was partitioned into 300 × 300 image patches. To speed up prediction, tissue regions were first identified (see Methods section) and only the image patches within the tissue regions were predicted by the CNN model (Supplemental Fig. 3). The predicted probabilities of the image patches were summarized into heatmaps of tumor probability (Fig. 2). An example of a tumor probability heatmap is shown in Fig. 2B. The tumor region heatmap, predicted as the category with highest probability, is shown in Fig. 2C. Each pixel in the heatmaps corresponds to a 300 × 300 pixel image patch in the original 40X pathology image.

Figure 2.

Example results of image-level tumor region detection. (A) Original image. (B) Predicted tumor probability. Each point in the heatmap corresponds to a 300 × 300 pixel image patch in original 40x image. (C) Predicted region labels. Yellow: white (empty) region; green: tumor region; blue: non-malignant region.

Shape and boundary-based features from predicted tumor regions correlate with survival outcome

Based on the predicted tumor region heatmap, tissue samples were identified (Supplemental Fig. 4) and 22 shape and boundary-based features were extracted for each tissue sample (see Methods section). For each patient, the image features from multiple tissue samples of the same patient were averaged. The associations between tumor region features and prognostic outcome are summarized in Table 2 in the NLST dataset. It shows that many features were associated with survival outcome. Most tumor area-related features, including area, perimeter, convex area, filled area, major axis length, and minor axis length, both for all tumor regions and for the main tumor region, were associated with poor survival outcome. Interestingly, the number of holes and the perimeter2 to area ratio (an estimation of circularity and boundary roughness), were also associated with poor survival outcome (for all tumor regions: per 100 number of holes, hazard ratio [HR] = 1.087, p value = 0.033; per 1000 perimeter2 to area ratio, HR = 1.15, p value = 0.016; similar results for main tumor region; Table 2). Examples comparing tumor regions with high and low values of eccentricity and perimeter2 to area ratio of main tumor region are illustrated in Fig. 3. As expected, the angle between the X-axis and the major axis of the main region was not correlated with survival, which serves as a negative control of the feature extraction process.

Table 2.

Univariate analysis of tumor region features in NLST training dataset.

| HR (95% CI) | p-value | |

|---|---|---|

| Number of regions (per 1000) | 1.29 (0.64–2.58) | 0.48 |

| Area sum of all regions (per 1000 pixels*) | 1.030 (1.010–1.050) | 0.0033 |

| Perimeter sum of all regions (per 1000 pixels) | 1.088 (1.028–1.151) | 0.0034 |

| Sum of convex area for all regions (per 1000 pixels) | 1.020 (1.006–1.033) | 0.0047 |

| Sum of filled area for all regions (per 1000 pixels) | 1.027 (1.009–1.045) | 0.0029 |

| Sum of hole numbers of all regions (per 100) | 1.087 (1.031–1.16) | 0.0033 |

| Sum of major axis length of all regions (per 1000 pixels) | 1.40 (1.00–1.96) | 0.051 |

| Sum of minor axis length of all regions (per 1000 pixels) | 2.65 (1.10–6.40) | 0.030 |

| Perimeter2/area of all regions (per 1000) | 1.18 (1.03–1.35) | 0.019 |

| Area of main region (per 1000 pixels) | 1.027 (1.007–1.048) | 0.0093 |

| Convex area of main region (per 1000 pixels) | 1.018 (1.004–1.032) | 0.010 |

| Eccentricity of main region | 6.37 (0.57–71.56) | 0.13 |

| Hole number of main region (per 100) | 1.087 (1.020–1.15) | 0.0060 |

| Extent of main region | 4.90 (0.19–126.30) | 0.34 |

| Filled area for main region (per 1000 pixels) | 1.025 (1.007–1.043) | 0.0072 |

| Major axis length for main region (per 100 pixels) | 1.57 (1.11–2.21) | 0.0099 |

| Minor axis length for main region (per 100 pixels) | 1.73 (1.05–2.83) | 0.031 |

| Angle between the X-axis and the major axis of main region | 0.98 (0.64–1.50) | 0.92 |

| Perimeter of main region (per 1000 pixels) | 1.087 (1.023–1.15) | 0.0068 |

| Solidity of main region | 7.24 (0.45–117.40) | 0.16 |

| Average tumor probability of the main region (per 0.10) | 1.11 (0.53–2.24) | 0.78 |

| Perimeter2/area for main region (per 1000) | 1.21 (1.03–1.42) | 0.021 |

NLST, the National Lung Screening Trial.

*1 pixel in heatmap = 1 patch in 40 X pathological image. Patch size: 300 pixels * 300 pixels.

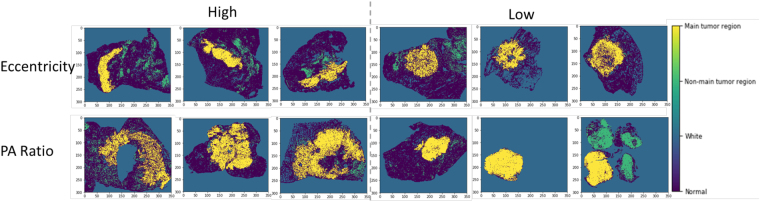

Figure 3.

Comparison of tumor shapes with high or low values of eccentricity and PA ratio of main tumor region. Original heatmaps are cropped to the same size with the same image scale. Yellow, main tumor region; green, non-main tumor region; dark blue, non-malignant tissue; blue, blank part of pathology image. PA ratio, perimeter2 to area ratio.

Development and validation of prognostic model

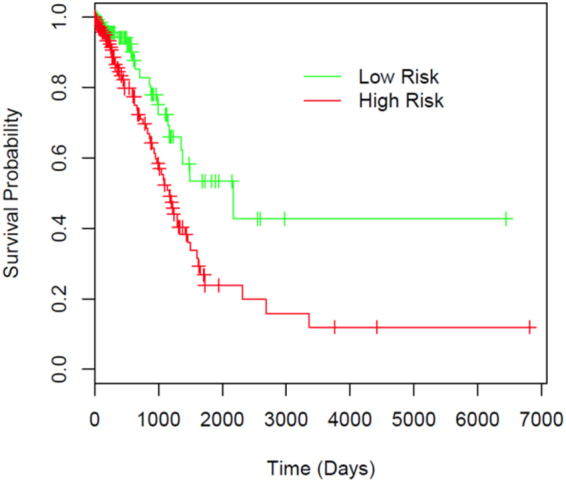

Utilizing the tumor shape features extracted from the pathology images in the NLST dataset, we developed a prognostic model to predict patient survival outcome. The model was then independently validated in the TCGA cohort. Each patient was assigned into a predicted high- or low-risk group based on the extracted tumor shape and boundary features of the patient (see Methods section). The survival curves for the predicted high- and low-risk groups are shown in Fig. 4. The patients in the predicted high-risk group had significantly worse survival outcome than those in the predicted low-risk group (log rank test, p value = 0.0029). The multivariate analysis shows that the predicted risk groups independently predicted survival outcome (high- vs. low-risk, HR = 2.25, 95% CI 1.34–3.77, p value = 0.0022, Table 3) after adjusting for age, gender, smoking status and stage. This indicates the risk group defined by tumor shape features is an independent prognostic factor, in addition to other clinical variables.

Figure 4.

Prognostic performance in TCGA validation dataset illustrated by Kaplan-Meier plot. Patients are dichotomized according to median predicted risk score. Difference between the two risk groups: log-rank test, p value = 0.0029.

Table 3.

Multivariate analysis of predicted risk and clinical variables in TCGA.

| Variable | HR (95% CI) | p-value |

|---|---|---|

| High risk vs. low risk | 2.25 (1.34–3.77) | 0.0022 |

| Age | 1.02 (1.00–1.04) | 0.12 |

| Male vs. female | 0.71 (0.43–1.16) | 0.17 |

| Smoker vs. non-smoker | 0.95 (0.59–1.54) | 0.85 |

| Stage II vs. stage I | 2.58 (1.47–4.51) | <0.001 |

| Stage III vs. stage I | 5.23 (2.85–9.59) | <0.001 |

| Stage IV vs. stage I | 2.69 (1.19–6.09) | 0.017 |

Discussion

In this study, we developed image processing, tumor region recognition, image feature extraction, and risk prediction algorithms for pathology images of lung ADC. The algorithms successfully visualized the tumor region from the pathology images, and serve as a prognostic method independent of other clinical variables. The patient prognostic model was trained in the NLST cohort and independently validated in the TCGA cohort, which indicates the generalizability of the model to other lung ADC patient cohorts.

For tumor region detection, the pathology image was divided into 300 × 300 pixel image patches, which were then classified into tumor, non-malignant, or white categories using a CNN model. The CNN model was trained on 3,848 image patches and tested on 1,068 patches, with 89.8% accuracy in the testing sets. Within the 109 incorrectly predicted patches, 27 contained an insufficient number of cells, which caused confusion between the tissue and background. For the other 82 cases where non-malignant patches were misclassified as tumor or vice versa, the cause seems to be interference from red blood cells, stroma, macrophages, and necrosis (Supplemental Table 1). The prediction errors related to out-of-focus tissues (such as macrophages and stroma cells) could be reduced by improved image scanning quality and training set labelling. A similar problem has also been reported in breast cancer recognition14.

The patch-level tumor prediction results were then arranged to generate tumor region heatmaps (Fig. 2). In total, 22 well-defined image features were extracted for each tissue region, and averaged to generate patient-level features (Supplemental Fig. 3). 15 of the 22 features were significantly correlated with survival outcome in the NLST dataset (Table 2). The features related to tumor shape, boundary and perimeter were associated with worse prognosis.

Interestingly, both for all tumor regions and for the main tumor region, the perimeter2 to area ratio was negatively correlated with survival outcome. The perimeter2 to tumor area ratio is a quantification of the smoothness of the tumor boundary; a large perimeter2 to tumor area ratio indicates a large tumor surface and thus a rough tumor boundary. The negative correlation between perimeter2 to area ratio and survival outcome is consistent with studies conducted on lung cancer CT images, which reported that a more irregular shape predicted worse survival24,28. To date, new biomarkers have been identified and several genes have been reported to be associated with tumor shape31,32. Since cancer hallmark-associated genes can boost patient survival prediction performance33,34, incorporating pathological image features into the cancer hallmark framework and understanding the relationship among gene expression, tumor shape, and survival outcome can provide insight into tumor development and guide therapeutic decision35,36.

This is the first study to quantify tumor shape-related features using a CNN-based model in lung cancer. In addition, both the main tumor body and the tumor spread through air spaces (STAS, sometimes referred as aerogenous spread with floating cancer cell clusters [ASFC]) can be easily detected in the heatmaps37,38. Since the median size of 40X pathology images is 24244 × 19261 pixels and the STASs usually only occupy 1 image patch (300 × 300 pixels) in the NLST dataset, it is labor intensive for human pathologists to circle accurate tumor boundaries and indicate all the tumor STASs. Thus, automatically generating the tumor region heatmap will facilitate pathologists in finding tumor regions and quantifying STASs. More importantly, our study has developed a computation-based method to quantify tumor shape, circularity, irregularity and surface smoothness, which can be an essential tool to study the underlying biological mechanisms. Although tumor size is a well-known prognostic factor, quantifications of the tumor area and perimeter-related features from pathology images are challenging and time-consuming for human pathologists. Thus, it is a natural step to extract image features directly from the predicted tumor heatmaps, thereby avoiding a subjective assessment by a human pathologist.

There are several limitations to our tumor region detection and image feature extraction pipeline. First, pathology images only capture the characteristics of part of the tumor, and may not be representative of the whole tumor, in terms of size, shape, and other characteristics. Furthermore, pathological images are 2-dimensional, which loses the 3-dimensional spatial information. Combining the tumor prediction and feature extraction algorithms with other imaging techniques, such as CT or X-Ray, may produce more comprehensive descriptions of the tumor region and improve the performance of the current risk prediction model. Second, as mentioned before, our CNN model is sensitive to out-of-focus tissue such as red blood cells, macrophages, and stroma cells. Better pathology image scanning quality and more comprehensive labeling of the training set will help solve the problem. Third, the image features can be affected by image preparation artifacts, such as artificially damaged tumor tissues and failure to select the images that faithfully represent the tumor. Thus, to ensure the representability of the predicted risk score, a representative tumor image is required.

Conclusion

Our pipeline for tumor region recognition and risk-score prediction based on tumor shape features serves as an objective prognostic method independent of other clinical variables, including age, gender, smoking status and stage. The tumor region heatmaps generated by our model can help pathologists locate tumor regions in pathology tissue images swiftly and accurately. The model development pipeline can also be used in other cancer types, such as breast and kidney cancer.

Methods

Datasets

The pathology images together with the corresponding clinical data were obtained from two independent datasets: 267 40X images for 150 lung ADC patients were acquired from the NLST dataset; 457 40X images for 389 lung ADC patients were acquired from the TCGA dataset. In the NLST dataset, the H&E stained images were sampled from lung tumor tissues that were resected during diagnosis and treatment of lung cancer; more information can be found on the NLST website, https://biometry.nci.nih.gov/cdas/learn/nlst. Clinical characteristics of patients in this study are presented in Table 1. The prognostic model was trained on the NLST dataset and independently validated on the TCGA dataset.

Table 1.

Patient characteristics of training and validation datasets.

| NLST (training)* | TCGA (validation) | p-value | |

|---|---|---|---|

| No. of patients | 150 | 389 | |

| Age | 64.03 ± 5.12 | 64.98 ± 10.33 | 0.16 |

| Gender | 0.055 | ||

| Male | 82 (54.7) | 175 (45.0) | |

| Female | 68 (45.3) | 214 (55.0) | |

| Smoking status | 0.0020 | ||

| Yes | 81 (54.0) | 267 (68.6) | |

| No | 69 (46.0) | 122 (31.4) | |

| Stage | 0.0048 | ||

| I | 101 (67.3) | 222 (57.1) | |

| II | 16 (10.7) | 96 (24.7) | |

| III | 23 (15.3) | 49 (12.6) | |

| IV | 10 (6.7) | 22 (5.7) |

NLST, the National Lung Screening Trial; TCGA, the Cancer Genome Atlas.

*Values are either mean ± standard deviation, or number (percentage).

Image patch generation

A CNN model was trained to classify non-malignant tissues, tumor tissues, and white regions based on image patches of H&E stained pathology images. The patch size was determined as 300 × 300 pixels under 40X magnification, to ensure at least 20 cells within one patch (Supplemental Fig. 1). Tumor and non-malignant patches were randomly extracted from tumor regions and non-malignant regions labeled by a pathologist, respectively. The patches were classified as white if the mean intensity of all pixel values was larger than a threshold determined from sample images. Examples of each patch class are shown in Supplemental Fig. 1. 2139 non-malignant, 2475 tumor and 730 white patches were generated in total. Images were scaled to the range [0, 1] by dividing by 255 before being fed into the model.

CNN training process

The Inception (V3) architecture39 with input size 300 × 300 and weights pre-trained on ImageNet was used to train our CNN model. The network was trained with stochastic gradient descent algorithms in Keras with TensorFlow backend40. The batch size was set to 32, the learning rate was set to 0.0001 without decay, and the momentum was set to 0.9. From the extracted 5,344 image patches, 3,848 patches (72%) were allocated to the training set, 428 patches (8%) to the validation set, and the remaining 1,068 patches (20%) to the testing set, with equal proportions among the three classes. Keras Image Generators were used to normalize and flip the images, both horizontally and vertically, to augment the training and validation datasets. The maximum number of epochs to train was set to 50. To avoid overfitting, the training process automatically stopped after validation accuracy failed to improve for 10 epochs.

Prediction heatmap generation

To avoid prediction on a large empty image area and to speed up the prediction process, the Otsu thresholding method followed by morphological operations such as dilation and erosion was first applied to pathology images to generate the tissue region mask (Supplemental Fig. 2)41,42. A 300 × 300 pixel window was then slid over the entire mask without overlapping between any two windows. The image patches were predicted with batch size 32, and one image patch was predicted only once without rotation or flipping. For each image patch, probabilities of being in each of the three classes were predicted, and a heatmap of the predicted probability was generated for each pathology image (Fig. 2). For each image patch, the class with the highest probability was determined as the predicted class.

Image feature extraction

In a pathology image, sometimes there are multiple tissue samples. To distinguish different tissue samples in the same image, disconnected tissue regions were first identified by morphological operations on heatmaps of predicted classes (Supplemental Fig. 3A–C)42. To remove the effects of some very small tissue samples, the tissue regions with area smaller than half of the largest tissue region in the same image were removed from analysis. Within each tissue region, the tumor region with the largest area was regarded as the “main tumor region” (Supplemental Fig. 3D). The following features of tumor regions were estimated for each tissue sample: number of regions, area, convex area, filled area, perimeter, major axis length, minor axis length, number of holes, and perimeter2 to area ratio for all tumor regions and the main tumor region separately; eccentricity, extent, solidity, and angle between the X-axis and the major axis for the main tumor region (22 features in total)43. Here, 8-connectivity was used to determine disconnected tumor regions and disconnected holes43. When multiple tissue regions were available for one patient, either due to multiple tissues within one image or multiple images for one patient, the 22 image features were averaged to generate patient-level image features.

Prognostic model development

A univariate Cox proportional hazard model was used to study the association between the 22 tumor shape features and patient survival outcome in the NLST dataset. The image features that were significantly associated with survival outcome were selected to build the prediction model for patient prognosis. To avoid overfitting, a Cox proportional hazard model with an elastic-net penalty44 was used; the penalty coefficient λ was determined through 10-fold cross-validation in the NLST cohort.

Model validation in an independent cohort

The model developed from the NLST cohort was then independently validated in the TCGA cohort (n = 389) for prognostic performance. First, the tumor region(s) in each pathology image from the TCGA dataset were detected using the CNN model, and the tumor shape features were extracted based on the detected tumor region(s) in each image and summarized to patient level. Finally a risk score was calculated based on the extracted tumor features. The patients were dichotomized into high- and low-risk groups based on their predicted risk score, using the median as the cutoff. A log-rank test was used to compare survival difference between predicted high- and low-risk groups. The survival curves were estimated using the Kaplan-Meier method. A multivariate Cox proportional hazard model was used to test the prognostic value of the predicted risk score after adjusting for other clinical factors, including age, gender, tobacco history, and stage. Overall survival, defined as the period from diagnosis until death or last contact, was used as response. Survival analysis was performed with R software, version 3.3.245. R packages “survival” (version 2.40-1) and “glmnet” (version 2.0-5) were used44,46. The results were considered significant if the two-tailed p value ≤ 0.05.

Data availability

Pathology images that support the findings of this study are available online in NLST (https://biometry.nci.nih.gov/cdas/nlst/) and The Cancer Genome Atlas Lung Adenocarcinoma (TCGA-LUAD, https://wiki.cancerimagingarchive.net/display/Public/TCGA-LUAD).

Code availability

The codes are available upon request. We will share the codes through Github following manuscript acceptance.

Electronic supplementary material

Acknowledgements

This work was partially supported by the National Institutes of Health [5R01CA152301, P50CA70907, 5P30CA142543, 1R01GM115473, and 1R01CA172211], and the Cancer Prevention and Research Institute of Texas [RP120732].

Author Contributions

S.W. and G.X. designed the study. S.W., A.C., L.C., Y.X., J.F., A.G., and G.X. analyzed the results and wrote the manuscript. S.W. and A.C. implemented the algorithm. L.Y. labeled the data. All authors commented on the manuscript.

Competing Interests

The authors declare no competing interests.

Footnotes

Shidan Wang and Alyssa Chen contributed equally to this work.

Electronic supplementary material

Supplementary information accompanies this paper at 10.1038/s41598-018-27707-4.

Publisher's note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

References

- 1.Howlader, N. et al. SEER cancer statistics review, 1975–2008. Bethesda, MD: National Cancer Institute 19 (2011).

- 2.Matsuda T, Machii R. Morphological distribution of lung cancer from Cancer Incidence in Five Continents Vol. X. Jpn J Clin Oncol. 2015;45:404. doi: 10.1093/jjco/hyv041. [DOI] [PubMed] [Google Scholar]

- 3.Tabesh A, et al. Multifeature prostate cancer diagnosis and Gleason grading of histological images. Medical Imaging, IEEE Transactions on. 2007;26:1366–1378. doi: 10.1109/TMI.2007.898536. [DOI] [PubMed] [Google Scholar]

- 4.Wang H, Xing F, Su H, Stromberg A, Yang L. Novel image markers for non-small cell lung cancer classification and survival prediction. BMC Bioinformatics. 2014;15:310. doi: 10.1186/1471-2105-15-310. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 5.Luo X, et al. Comprehensive Computational Pathological Image Analysis Predicts Lung Cancer Prognosis. J Thorac Oncol. 2017;12:501–509. doi: 10.1016/j.jtho.2016.10.017. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 6.Yuan, Y. et al. Quantitative image analysis of cellular heterogeneity in breast tumors complements genomic profiling. Sci Transl Med4, 157ra143, 10.1126/scitranslmed.3004330 (2012). [DOI] [PubMed]

- 7.Yu KH, et al. Predicting non-small cell lung cancer prognosis by fully automated microscopic pathology image features. Nat Commun. 2016;7:12474. doi: 10.1038/ncomms12474. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 8.Beck, A. H. et al. Systematic analysis of breast cancer morphology uncovers stromal features associated with survival. Sci Transl Med3, 108ra113, 10.1126/scitranslmed.3002564 (2011). [DOI] [PubMed]

- 9.LeCun Y, Bengio Y, Hinton G. Deep learning. Nature. 2015;521:436–444. doi: 10.1038/nature14539. [DOI] [PubMed] [Google Scholar]

- 10.Krizhevsky A, Sutskever I, Hinton GE. ImageNet Classification with Deep Convolutional Neural Networks. Commun Acm. 2017;60:84–90. doi: 10.1145/3065386. [DOI] [Google Scholar]

- 11.Schmidhuber J. Deep learning in neural networks: an overview. Neural Netw. 2015;61:85–117. doi: 10.1016/j.neunet.2014.09.003. [DOI] [PubMed] [Google Scholar]

- 12.Esteva A, et al. Dermatologist-level classification of skin cancer with deep neural networks. Nature. 2017;542:115–118. doi: 10.1038/nature21056. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 13.Li H, Giger ML, Huynh BQ, Antropova NO. Deep learning in breast cancer risk assessment: evaluation of convolutional neural networks on a clinical dataset of full-field digital mammograms. Journal of medical imaging. 2017;4:041304. doi: 10.1117/1.JMI.4.4.041304. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 14.Liu, Y. et al. Detecting cancer metastases on gigapixel pathology images. arXiv preprint arXiv:1703.02442 (2017).

- 15.Wang, D., Khosla, A., Gargeya, R., Irshad, H. & Beck, A. H. Deep Learning for Identifying MetastaticBreast Cancer. https://arxiv.org/abs/1606.05718 (2016).

- 16.Vandenberghe ME, et al. Relevance of deep learning to facilitate the diagnosis of HER2 status in breast cancer. Scientific reports. 2017;7:45938. doi: 10.1038/srep45938. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 17.Cruz-Roa A, et al. Accurate and reproducible invasive breast cancer detection in whole-slide images: A Deep Learning approach for quantifying tumor extent. Scientific reports. 2017;7:46450. doi: 10.1038/srep46450. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 18.Goldstraw P, et al. The IASLC Lung Cancer Staging Project: proposals for the revision of the TNM stage groupings in the forthcoming (seventh) edition of the TNM Classification of malignant tumours. J Thorac Oncol. 2007;2:706–714. doi: 10.1097/JTO.0b013e31812f3c1a. [DOI] [PubMed] [Google Scholar]

- 19.Balagurunathan Y, et al. Test-retest reproducibility analysis of lung CT image features. J Digit Imaging. 2014;27:805–823. doi: 10.1007/s10278-014-9716-x. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 20.Goldstraw P, et al. The IASLC Lung Cancer Staging Project: Proposals for Revision of the TNM Stage Groupings in the Forthcoming (Eighth) Edition of the TNM Classification for Lung Cancer. J Thorac Oncol. 2016;11:39–51. doi: 10.1016/j.jtho.2015.09.009. [DOI] [PubMed] [Google Scholar]

- 21.Soltani M, Chen P. Effect of tumor shape and size on drug delivery to solid tumors. J Biol Eng. 2012;6:4. doi: 10.1186/1754-1611-6-4. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 22.Sefidgar M, et al. Effect of tumor shape, size, and tissue transport properties on drug delivery to solid tumors. J Biol Eng. 2014;8:12. doi: 10.1186/1754-1611-8-12. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 23.Chatzistamou I, et al. Prognostic significance of tumor shape and stromal chronic inflammatory infiltration in squamous cell carcinomas of the oral tongue. J Oral Pathol Med. 2010;39:667–671. doi: 10.1111/j.1600-0714.2010.00911.x. [DOI] [PubMed] [Google Scholar]

- 24.Vogl TJ, et al. Factors influencing local tumor control in patients with neoplastic pulmonary nodules treated with microwave ablation: a risk-factor analysis. AJR Am J Roentgenol. 2013;200:665–672. doi: 10.2214/AJR.12.8721. [DOI] [PubMed] [Google Scholar]

- 25.Hashiba T, et al. Scoring radiologic characteristics to predict proliferative potential in meningiomas. Brain Tumor Pathol. 2006;23:49–54. doi: 10.1007/s10014-006-0199-4. [DOI] [PubMed] [Google Scholar]

- 26.Miller TR, Pinkus E, Dehdashti F, Grigsby PW. Improved prognostic value of 18F-FDG PET using a simple visual analysis of tumor characteristics in patients with cervical cancer. J Nucl Med. 2003;44:192–197. [PubMed] [Google Scholar]

- 27.Yokoyama I, et al. Clinicopathologic factors affecting patient survival and tumor recurrence after orthotopic liver transplantation for hepatocellular carcinoma. Transplant Proc. 1991;23:2194–2196. [PubMed] [Google Scholar]

- 28.Grove O, et al. Quantitative computed tomographic descriptors associate tumor shape complexity and intratumor heterogeneity with prognosis in lung adenocarcinoma. PLoS One. 2015;10:e0118261. doi: 10.1371/journal.pone.0118261. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 29.Rangayyan RM, El-Faramawy NM, Desautels JE, Alim OA. Measures of acutance and shape for classification of breast tumors. IEEE Trans Med Imaging. 1997;16:799–810. doi: 10.1109/42.650876. [DOI] [PubMed] [Google Scholar]

- 30.Farahani N, Parwani AV, Pantanowitz L. Whole slide imaging in pathology: advantages, limitations, and emerging perspectives. Pathol Lab Med Int. 2015;7:23–33. [Google Scholar]

- 31.Tripodis N, Demant P. Genetic analysis of three-dimensional shape of mouse lung tumors reveals eight lung tumor shape-determining (Ltsd) loci that are associated with tumor heterogeneity and symmetry. Cancer Res. 2003;63:125–131. [PubMed] [Google Scholar]

- 32.Kida H, et al. A single nucleotide polymorphism in fibronectin 1 determines tumor shape in colorectal cancer. Oncol Rep. 2014;32:548–552. doi: 10.3892/or.2014.3251. [DOI] [PubMed] [Google Scholar]

- 33.Gao S, et al. Identification and Construction of Combinatory Cancer Hallmark-Based Gene Signature Sets to Predict Recurrence and Chemotherapy Benefit in Stage II Colorectal Cancer. JAMA Oncol. 2016;2:37–45. doi: 10.1001/jamaoncol.2015.3413. [DOI] [PubMed] [Google Scholar]

- 34.McGee SR, Tibiche C, Trifiro M, Wang E. Network Analysis Reveals A Signaling Regulatory Loop in the PIK3CA-mutated Breast Cancer Predicting Survival Outcome. Genomics Proteomics Bioinformatics. 2017;15:121–129. doi: 10.1016/j.gpb.2017.02.002. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 35.Wang E, et al. Predictive genomics: a cancer hallmark network framework for predicting tumor clinical phenotypes using genome sequencing data. Semin Cancer Biol. 2015;30:4–12. doi: 10.1016/j.semcancer.2014.04.002. [DOI] [PubMed] [Google Scholar]

- 36.Li J, et al. Identification of high-quality cancer prognostic markers and metastasis network modules. Nat Commun. 2010;1:34. doi: 10.1038/ncomms1033. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 37.Kadota K, et al. Tumor Spread through Air Spaces is an Important Pattern of Invasion and Impacts the Frequency and Location of Recurrences after Limited Resection for Small Stage I Lung Adenocarcinomas. J Thorac Oncol. 2015;10:806–814. doi: 10.1097/JTO.0000000000000486. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 38.Shiono, S. et al. Histopathologic prognostic factors in resected colorectal lung metastases. Ann Thorac Surg79, 278–282; discussion 283, 10.1016/j.athoracsur.2004.06.096 (2005). [DOI] [PubMed]

- 39.Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the Inception Architecture for Computer Vision. 2016 Ieee Conference on Computer Vision and Pattern Recognition (Cpvr), 2818–2826, 10.1109/Cvpr.2016.308 (2016).

- 40.Chollet, F. et al. Keras. GitHub, https://github.com/fchollet/keras (2015).

- 41.Otsu N. Threshold Selection Method from Gray-Level Histograms. Ieee T Syst Man Cyb. 1979;9:62–66. doi: 10.1109/TSMC.1979.4310076. [DOI] [Google Scholar]

- 42.Vincent L. Morphological grayscale reconstruction in image analysis: applications and efficient algorithms. IEEE Trans Image Process. 1993;2:176–201. doi: 10.1109/83.217222. [DOI] [PubMed] [Google Scholar]

- 43.Gonzalez, R. C. & Woods, R. Digital Image Processing. (Pearson Education, 2002).

- 44.Simon N, Friedman J, Hastie T, Tibshirani R. Regularization Paths for Cox’s Proportional Hazards Model via Coordinate Descent. J Stat Softw. 2011;39:1–13. doi: 10.18637/jss.v039.i05. [DOI] [PMC free article] [PubMed] [Google Scholar]

- 45.R Core Team R: A language and environment for statistical computing., R Foundation for Statistical Computing, Vienna, Austria., https://www.R-project.org/ (2016).

- 46.Therneau, T. A Package for Survival Analysis in S. https://CRAN.R-project.org/package=survival (2015).

Associated Data

This section collects any data citations, data availability statements, or supplementary materials included in this article.

Supplementary Materials

Data Availability Statement

Pathology images that support the findings of this study are available online in NLST (https://biometry.nci.nih.gov/cdas/nlst/) and The Cancer Genome Atlas Lung Adenocarcinoma (TCGA-LUAD, https://wiki.cancerimagingarchive.net/display/Public/TCGA-LUAD).