Abstract

Collaborative Virtual Environments (CVEs), which allow naturalistic communication between two or more individuals in a shared virtual environment, hold promise as a tool for measuring and promoting social communication between peers. In this work, a CVE platform and a set of CVE-based collaborative games were designed for children with Autism Spectrum Disorder (ASD). Two groups (7 ASD/typically developing [TD] pairs; 7 TD/TD pairs) participated in a pilot study to establish system feasibility and tolerability. We also designed a methodology for capturing meaningful metrics of social communication. Based on these metrics, we found improved game performance and changes in participants’ communication over the course of the session. Although preliminary, these results provide important insights into CVE-based interaction for ASD intervention.

Keywords: Autism Spectrum Disorders, Collaborative Virtual Environment, Social Computing, Peer-mediated Learning

Introduction

Given recent rapid developments in technology, it has been argued that computer and Virtual Reality (VR) based applications could be harnessed to provide effective and innovative clinical tools for meaningfully measuring, and perhaps intervening on, social communicative impairments in individuals with Autism Spectrum Disorder (ASD) (Ploog et al. 2013; Parsons and Mitchell 2002; Mitchell et al. 2007; Lahiri et al. 2013; Murray 1997). In terms of potential application to ASD measurement and intervention, VR technology possesses several strengths. Specifically, VR technology can realize platforms that mimic real-world social communication tasks while simultaneously providing quantitative, objective, and reliable measures of performance and processing. Given naturalistic social constraints and resource challenges, VR can also depict scenarios that may not be feasible in a “real world” therapeutic setting. As such, VR appears well suited for creating interactive platforms for assessing specific aspects of social interaction.

In spite of these potential advantages, many studies have developed and utilized technologies that require rate-limiting confederate participation or control. Most VR studies have used preprogrammed and/or confederate-controlled avatars to communicate and interact with individuals with ASD (Pennington 2010; Ploog et al. 2013). This rigid structure, which is highly dependent on predefined paradigms, limits the flexibility of interaction and communication compared with the dynamic real world (M. Schmidt et al. 2011). Further, most existing autonomous VR and technological environments for ASD have been designed primarily (1) to measure learning via aspects of performance alone (i.e., correct or incorrect) within simplistic interaction paradigms (e.g., “What is this person feeling?”) or (2) to measure visual processing during social tasks (e.g., how much time a user spent on non-social versus social targets) (Scahill et al. 2015; Anagnostou et al. 2015). Such approaches, while promising, may not generalize to complex, dynamic real-world social interactions.

In contrast to these approaches, a Collaborative Virtual Environment (CVE) is a computer-based, distributed virtual space in which multiple players interact with one another and/or with virtual items (Benford et al. 2001). A CVE may address these limitations of conventional VR by enabling players to practice social skills fluidly with peers. Compared with existing studies on interactions between children with ASD and computer-controlled virtual avatars (Moore et al. 2005; Cheng et al. 2010), CVEs allow dynamic user-to-user interaction and communication in a shared virtual environment, which may help foster social relationships among children with ASD and their Typically Developing (TD) peers (Leman 2015; Reynolds et al. 2011).

Several recent studies have examined the use of CVEs for ASD intervention. Millen et al. explored CVEs to promote collaboration among children with ASD, and the results of a self-report questionnaire showed improved engagement of children with ASD in CVEs (Millen et al. 2011). iSocial is a CVE application aimed at developing the social competence of children with ASD (M. Schmidt et al. 2011). It has been used to explore cooperative interactions, such as reading facial expressions and predicting others’ thoughts (C. Schmidt et al. 2011). Wallace et al. designed and developed a CVE system to teach greeting behaviors to children with ASD in a virtual gallery (Wallace et al. 2015). They found that children with ASD, compared with their TD peers, were less sensitive to a negative greeting when interacting with each other through virtual avatars. Finally, Cheng et al. designed a CVE-based virtual restaurant to study empathy in children with ASD (Cheng et al. 2010). They found that children with ASD could appropriately answer more empathy-related questions after using the CVE.

Although existing CVEs have been successfully utilized as a way for individuals with ASD to practice specific social skills, most of these studies were designed to measure learning via performance alone. Technological systems that not only gauge performance of users in specified tasks within the CVEs, but also automatically detect, respond to and adjust task characteristics based on the users’ communication and coordinated aspects of interaction, may hold more promise for creating increasingly powerful metrics of change in social communication, which is often a primary intervention target for individuals on the autism spectrum.

Although designing an intelligent system that cannot be distinguished from a human in unrestricted naturalistic conversation (i.e., one that passes the Turing test) remains an unsolved challenge, designing paradigms for controlling, indexing, and altering aspects of interactions may represent an extremely valuable and much more viable methodology (Kopp et al. 2005; Cassell et al. 2000). It is much more feasible to design controlled game scenarios in CVEs in which partners are required to perform certain actions or communications in order to accomplish the task (e.g., moving a piece in concert with the partner’s movement, communicating within a certain time window, or responding to a prompt from the partner) (Leman 2015; Benford et al. 2001). The rules of the game create a context-appropriate, rate-limiting step that allows for realistic embodiment of dynamic interaction without the artificially preprogrammed and limited conversation and communication trees often deployed in traditional VR “conversation formats.” In this way, a CVE with carefully designed games can facilitate, control, and constrain dynamic interaction between users. Such an environment can also be endowed with capacities for quantifying aspects of participant and peer interaction. Ultimately, such a system has the potential to index both performance within the tasks and the components contributing to task performance (e.g., communication bids and responses, collaborative actions). We hypothesize that such a two-fold measurement strategy, applied during tasks that embody real-world social interactions (e.g., collaborative goal attainment), could yield rich objective data potentially sensitive to meaningful short-term and long-term change related to social information processing and, subsequently, day-to-day functioning.

In the current work, we designed and preliminarily tested (i) the feasibility and performance characteristics of a CVE and (ii) an associated strategy for quantifying aspects of social communication and interaction during peer game play. Participants and their partners (children with ASD and TD peers) played goal-directed puzzle games requiring a combination of sequential and simultaneous interactions. We hypothesized that the CVE would (1) enable children with and without ASD to interact collaboratively with each other across the internet and (2) meaningfully index aspects of interaction within the system (e.g., communication efficiency, collaborative actions) that might represent important targets for future measurement and intervention tools.

Methods

Participants

Participants included 28 children (7 with ASD, 21 TD) recruited from an existing clinical research registry (see Table 1 for participant characteristics). Fourteen age-matched pairs (7 ASD/TD, 7 TD/TD) were formed. In Table 1, TD1 denotes the TD children in the ASD/TD pairs, and TD2 denotes the TD children in the TD/TD pairs.

Table 1.

Participant characteristics

| Group | Age (years), mean (SD) | Gender (female/male) | SRS-2 total raw score, mean (SD) | SCQ current total score, mean (SD) |
|---|---|---|---|---|
| ASD (N = 7) | 13.71 (2.70) | 1/6 | 107 (22.35) | 19 (9.40) |
| TD1 (N = 7) | 13.89 (3.14) | 1/6 | 13.71 (16.06) | 1.29 (1.38) |
| TD2 (N = 14) | 10.59 (2.00) | 2/12 | 18.14 (16.60) | 2.14 (3.53) |

Participants with ASD had received diagnoses from licensed clinical psychologists based on DSM-5 criteria and Autism Diagnostic Observation Schedule-2 scores (Pruette 2013). Additional inclusion criteria were the use of spontaneous phrase speech and an IQ score above 70 as recorded in the registry (assessed with the Differential Ability Scales [DAS; Elliott et al. 1990] or the Wechsler Intelligence Scale for Children [WISC; Wechsler 1949]). The IQ criterion served as a rough proxy for the estimated 5th-grade reading level needed to understand and complete the instructions of the CVE tasks. Participants in the TD group were recruited through an electronic recruitment registry accessible to community families. To index initial autism symptoms and screen for autism risk among the TD participants, parents of all participants completed the Social Responsiveness Scale, Second Edition (SRS-2) (Constantino and Gruber 2002) and the Social Communication Questionnaire Lifetime (SCQ) (Rutter et al. 2003). Participant characteristics are outlined in Table 1.

A CVE environment

Within the CVE, two geographically distributed users can interact and communicate with each other in a shared environment. Puzzle games were selected as the interactive activities because such games have been shown to foster collaborative and communicative skills in children with ASD (Battocchi et al. 2010). The CVE was designed with four elements: a game engine module, a network communication module, a data logging module, and an audio communication module.

In the game engine module, a Finite State Machine (FSM) (Clarke et al. 1986) with hierarchy and concurrency was developed to govern the game logic of all tasks. An FSM is a mathematical framework for representing and controlling the execution flow of a process as a finite number of states. In a basic FSM, only one state is active at a given time, so the FSM must transition from one state to another to perform different actions; transitions are triggered by external inputs. We selected the FSM as the modeling method because it can represent human actions as inputs that switch states (Moreno-Ger et al. 2008). Our system responds differently to the users in different states, and these different responses give rise to different types of games. Concurrent states extend the FSM so that multiple states can be active at the same time; with concurrent states, the two users can occupy different states and act individually at the same time. The details of the FSM can be found in our previous paper (Zhang et al. 2016). The different CVE games were designed using this FSM.
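The hierarchical, concurrent FSM itself is described in Zhang et al. (2016) and is not reproduced here. As a rough illustration only, the Python sketch below shows the core idea under our own assumptions: each player owns a concurrent region whose single active state changes in response to that player’s actions, while a parent machine tracks the game as a whole. All state and event names (`idle`, `dragging`, `grab`, `drop`, `place`) are hypothetical.

```python
from dataclasses import dataclass, field

# Hypothetical transitions for one player's region: (state, event) -> next state.
PLAYER_TRANSITIONS = {
    ("idle", "grab"): "dragging",
    ("dragging", "drop"): "idle",
    ("dragging", "place"): "idle",
}


@dataclass
class PlayerRegion:
    """One concurrent region: exactly one of its states is active at any time."""
    name: str
    state: str = "idle"

    def handle(self, event: str) -> bool:
        nxt = PLAYER_TRANSITIONS.get((self.state, event))
        if nxt is None:
            return False  # event is not valid in the current state, so it is ignored
        self.state = nxt
        return True


@dataclass
class GameFSM:
    """Parent machine ("playing" -> "completed") containing two concurrent player regions."""
    pieces_left: int
    state: str = "playing"
    regions: dict = field(default_factory=lambda: {"P1": PlayerRegion("P1"),
                                                   "P2": PlayerRegion("P2")})

    def dispatch(self, player: str, event: str) -> None:
        """Route a user action to that player's region; update the parent on placements."""
        if self.state != "playing":
            return
        accepted = self.regions[player].handle(event)
        if accepted and event == "place":
            self.pieces_left -= 1
            if self.pieces_left == 0:
                self.state = "completed"

    def snapshot(self):
        return self.state, {p: r.state for p, r in self.regions.items()}


game = GameFSM(pieces_left=2)
game.dispatch("P1", "grab")   # P1 starts dragging...
game.dispatch("P2", "grab")   # ...while P2 acts in its own region at the same time
print(game.snapshot())        # ('playing', {'P1': 'dragging', 'P2': 'dragging'})
game.dispatch("P1", "place")
game.dispatch("P2", "place")
print(game.snapshot())        # ('completed', {'P1': 'idle', 'P2': 'idle'})
```

Keeping one region per player is what lets the two users be in different states simultaneously; game variants could then differ only in their transition tables.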

The network communication module provided network-based interaction between the players using a server-client architecture. Although an external server is ideal for a large number of players in a virtual environment, it was far more cost-effective and efficient to assign one of the two CVE nodes as the server and the other as the client. The network communication module also handled all data transmission for offline analysis, including game information data, human behavior data, and audio data.
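The following sketch illustrates, on a single machine, the design choice of letting one of the two CVE nodes also act as the server. The newline-delimited JSON messages, the acknowledgement scheme, and all function names are assumptions made for illustration; they are not the system’s actual protocol.

```python
import json
import socket
import threading


def send_msg(sock: socket.socket, msg: dict) -> None:
    """Send one message as a single line of JSON."""
    sock.sendall((json.dumps(msg) + "\n").encode("utf-8"))


def recv_msgs(sock: socket.socket):
    """Yield decoded messages until the peer closes its sending side."""
    with sock.makefile("r", encoding="utf-8") as stream:
        for line in stream:
            yield json.loads(line)


def host_node(ready: threading.Event, port_box: list, received: list) -> None:
    """CVE node that doubles as the server: it accepts exactly one peer."""
    with socket.create_server(("127.0.0.1", 0)) as server:  # port 0: the OS picks a free port
        port_box.append(server.getsockname()[1])
        ready.set()
        conn, _ = server.accept()
        with conn:
            for msg in recv_msgs(conn):
                received.append(msg)
                send_msg(conn, {"type": "ack", "seq": msg["seq"]})


def client_node(port: int, events: list) -> list:
    """The other CVE node: stream game events to the host, then collect acknowledgements."""
    with socket.create_connection(("127.0.0.1", port)) as sock:
        for seq, event in enumerate(events):
            send_msg(sock, {"seq": seq, **event})
        sock.shutdown(socket.SHUT_WR)  # tell the host no more events are coming
        return [m["seq"] for m in recv_msgs(sock)]


ready, port_box, received = threading.Event(), [], []
host = threading.Thread(target=host_node, args=(ready, port_box, received), daemon=True)
host.start()
ready.wait(timeout=5)
acks = client_node(port_box[0], [{"type": "move", "piece": 3, "x": 120, "y": 40},
                                 {"type": "place", "piece": 3}])
host.join(timeout=5)
print(acks)         # [0, 1]
print(received[1])  # {'seq': 1, 'type': 'place', 'piece': 3}
```

Because this toy client sends all of its events before reading acknowledgements, a real deployment would read on a separate thread so that large message volumes cannot fill both socket buffers.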

Storage of these data was managed by a data logging module. Data were stored locally to minimize internet data transmission, while multiple threads were used to store audio data to help minimize the risk of freezing the central audio thread. The audio communication module facilitated conversation between both players during game play and linked to both the network communication and data logging modules to catalogue real-time, time-stamped audio recordings. Additionally, the audio communication module was linked to the game engine module in order to generate timestamps at the start and at the completion of each task. This provided an efficient and consistent means of synchronizing audio files of both players for each type of collaborative game.
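The logging design described above (local storage, a separate writer thread so that time-critical threads never wait on disk, and task start/completion timestamps for synchronization) can be sketched as follows. The class, the tab-separated file format, and `task_segment` are hypothetical, and the sketch logs only events, not audio.

```python
import json
import os
import queue
import tempfile
import threading
import time


class EventLogger:
    """Hypothetical logger: producers enqueue records and one background thread writes them,
    so the game or audio loop never blocks on disk I/O."""

    def __init__(self, path: str):
        self._queue: queue.Queue = queue.Queue()
        self._path = path
        self._worker = threading.Thread(target=self._run, daemon=True)
        self._worker.start()

    def log(self, kind: str, **fields) -> None:
        self._queue.put((time.time(), kind, fields))  # timestamp taken in the caller's thread

    def close(self) -> None:
        self._queue.put(None)  # sentinel: write everything queued so far, then stop
        self._worker.join()

    def _run(self) -> None:
        with open(self._path, "a", encoding="utf-8") as f:
            while (item := self._queue.get()) is not None:
                ts, kind, fields = item
                f.write(f"{ts:.3f}\t{kind}\t{json.dumps(fields)}\n")


def task_segment(recording_start: float, task_start: float, task_end: float):
    """(offset, duration) in seconds of one task inside one player's local recording.

    All three timestamps come from the same machine, so the two players' clocks
    never need to agree: each node cuts its own audio at its own task markers.
    """
    return task_start - recording_start, task_end - task_start


path = os.path.join(tempfile.mkdtemp(), "session_log.tsv")
logger = EventLogger(path)
logger.log("task_start", game="T1")
logger.log("task_end", game="T1")
logger.close()
with open(path, encoding="utf-8") as f:
    kinds = [line.split("\t")[1] for line in f]
print(kinds)                              # ['task_start', 'task_end']
print(task_segment(100.0, 112.5, 142.5))  # (12.5, 30.0)
```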

CVE Games

We designed several puzzle games capable of eliciting and indexing two distinct aspects of interaction: (1) collaborative communication, in which participants must coordinate turn-taking or share information to achieve a common goal (e.g., solving puzzles where information is available to only one partner); and (2) collaborative action, in which participants must dynamically coordinate movement to optimize performance (e.g., games where participants must move a piece simultaneously to achieve game objectives). These puzzle games included a castle game and a set of tangram games. Although the castle game and the tangram games differ in content, both (i) provide feedback to help users complete the games and (ii) support three collaborative strategies (turn-taking, information-sharing, and enforced collaboration) to elicit specific collaborative interactions.

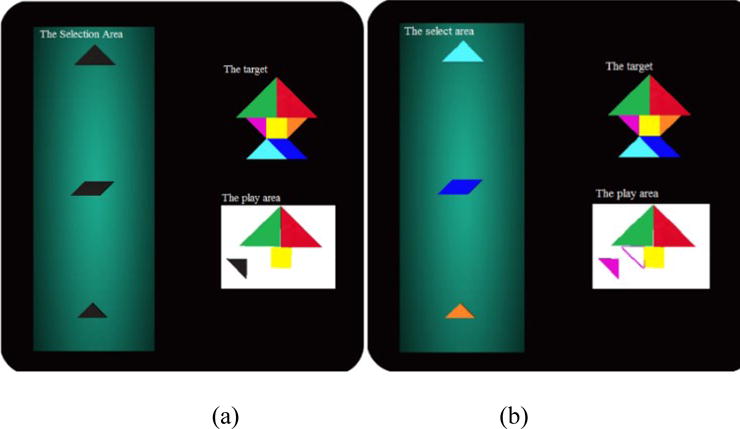

One example of the tangram games is shown in Figure 1. Each tangram game required users to assemble a specific shape from seven flat pieces available on screen. Users moved through a set of games without receiving specific instructions, such as rules about piece colors and about who could move the pieces. This required communicating with partners and discovering the rules of each new game by trial and error. When the users failed to move any piece within a certain time, the game could reveal part of the hidden instructions as feedback.

Figure 1.

Screenshot of a tangram game; Figure 1 (a) and Figure 1 (b) show the views of two users.
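The inactivity feedback described for the tangram games could work roughly as follows. This is a minimal sketch under assumed details: the source does not state the idle threshold or the wording of the hints, so `idle_limit=20.0` and both hint strings are invented for illustration.

```python
from dataclasses import dataclass


@dataclass
class HintScheduler:
    """Hypothetical feedback rule: reveal the next hidden rule after `idle_limit`
    seconds in which neither user has moved a piece."""
    hidden_rules: list
    idle_limit: float
    last_move: float = 0.0
    revealed: int = 0

    def on_move(self, now: float) -> None:
        self.last_move = now

    def poll(self, now: float):
        """Return the next hint if the pair has been idle too long, otherwise None."""
        if self.revealed >= len(self.hidden_rules):
            return None  # every rule has already been revealed
        if now - self.last_move < self.idle_limit:
            return None
        hint = self.hidden_rules[self.revealed]
        self.revealed += 1
        self.last_move = now  # restart the idle clock after each hint
        return hint


hints = HintScheduler(["Only your partner can see the colors.",
                       "You both have to drag the same piece."], idle_limit=20.0)
print(hints.poll(now=10.0))  # None
print(hints.poll(now=25.0))  # Only your partner can see the colors.
hints.on_move(now=30.0)
print(hints.poll(now=45.0))  # None
print(hints.poll(now=50.0))  # You both have to drag the same piece.
```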

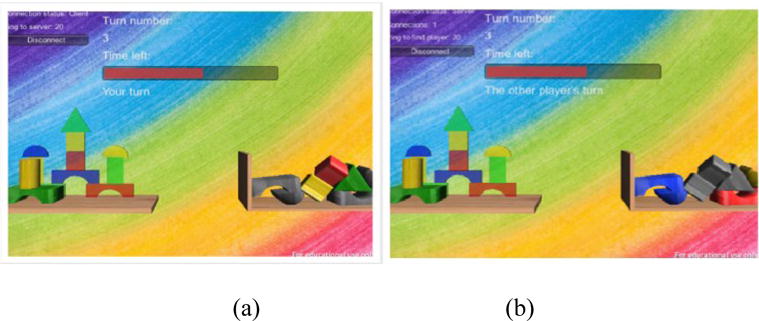

In addition, a castle game (see Figure 2) was created to trigger and evaluate communication and collaboration. Each user could move one of their own puzzle pieces per turn within a time limit set by the system. Pieces had specific shapes and colors that mapped onto an example presented on the screen (left side of Figure 2 (a) and Figure 2 (b)). Each puzzle piece was also subject to “gravity,” which required pieces to be placed in order. Users had to communicate with each other to determine which piece should be moved in order to construct a castle like the one displayed on the screen. Because of these ordering constraints, a user might have no movable piece during his or her turn. In that case, feedback (a button asking whether the user wanted to skip the current turn) allowed the game to continue. The feedback strategy of the castle game was implemented by searching a graph that captured the constraints of the game.

Figure 2.

Screenshot of the castle game; Figure 2 (a) and Figure 2 (b) represent the views of two users. The target castle to be built is shown in the left of each figure.
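One plausible reading of the castle game’s graph-based feedback is sketched below: pieces form a dependency graph in which each piece lists the pieces it must rest on, and a player’s legal moves are their own unplaced pieces whose supports are already placed. When that set is empty, the skip button is offered. The piece names, ownership, and dependencies are hypothetical, and the actual graph search used in the system may differ.

```python
# Hypothetical castle: piece -> pieces it must rest on ("gravity" constraints).
SUPPORTS = {
    "base_left": set(),
    "base_right": set(),
    "wall": {"base_left", "base_right"},
    "tower": {"wall"},
    "flag": {"tower"},
}
# Hypothetical split of pieces between the two players.
OWNER = {"base_left": "P1", "base_right": "P2", "wall": "P1", "tower": "P2", "flag": "P1"}


def placeable(player, placed, supports=SUPPORTS, owner=OWNER):
    """Pieces `player` may place now: unplaced, owned by the player, and fully supported."""
    return sorted(piece for piece, below in supports.items()
                  if piece not in placed and owner[piece] == player and below <= placed)


def next_action(player, placed):
    """Either the list of legal moves or, if there are none, an offer to skip the turn."""
    moves = placeable(player, placed)
    return ("move", moves) if moves else ("offer_skip", [])


print(next_action("P1", set()))                        # ('move', ['base_left'])
print(next_action("P2", {"base_left"}))                # ('move', ['base_right'])
print(next_action("P2", {"base_left", "base_right"}))  # ('offer_skip', [])
```

In the last call P2’s only remaining piece, the tower, still needs the wall, which belongs to P1, so the system would offer the skip button.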

Each game (the castle game and each tangram game) embedded a specific strategy requiring communication for successful assembly of the pieces. Game strategies were chosen to elicit key collaborative behaviors, i.e., turn-taking (Rummel and Spada 2005), information-sharing (Johnson and Johnson 1996), or simultaneous work (Gal et al. 2005). These collaborative behaviors were targeted because they may relate to broader social skills. Turn-taking is a necessary element of social success in all environments (Rao et al. 2008; Bernard-Opitz et al. 2001). To build friendships with others, children with ASD need to share information with their peers (Rao et al. 2008). Simultaneous work, which requires members of a group to act at the same time, is an important aspect of group work (Gal et al. 2005). To elicit these collaborative behaviors, three categories of games were created: (1) turn-taking games, (2) information-sharing games, and (3) enforced-collaboration games.

In the turn-taking games, users had full control over their puzzle pieces during their own turns but no control during their partner’s turns. Information-sharing games hid the colors of a user’s puzzle pieces from that user but made them visible to the partner. Because successful piece placement depended on color, users had to ask their partners about the colors of the pieces. Finally, enforced-collaboration games required both users to simultaneously select and drag a puzzle piece in the same direction; effective communication was therefore required to choose the desired piece and move it in unison to the appropriate location. These game strategies were implemented by defining several configuration features: (1) who could see the colored pieces; (2) who could move the pieces; (3) who could rotate the pieces; and (4) the time duration of each step. The configuration features of each game are listed in Table 2. For example, in game T1 the two users, P1 and P2, play in alternation: P1 controls the pieces in turns 1, 3, 5, and 7, while P2 controls them in turns 2, 4, and 6. T1 is therefore a turn-taking game.

Table 2.

Configuration features for each game

| Game name | Who sees the colored pieces? | Who can move pieces? | Who can rotate pieces? | Step duration (s) | Type of game |
|---|---|---|---|---|---|
| T1 | P1 and P2 | P1 in turns 1, 3, 5, 7; P2 in turns 2, 4, 6 | Auto | 30 | turn-taking |
| T2 | P1 and P2 | P1 in turns 3, 4, 7; P2 in turns 1, 2, 5, 6 | Auto | 30 | turn-taking |
| T3 | P1 and P2 | P1 and P2 in all turns | Auto | 45 | enforced collaboration |
| T4 | P1 | P2 in all turns | P2 | 40 | information-sharing |
| T5 | P2 | P1 in all turns | P1 | 40 | information-sharing |
| T6 | P1 | P1 and P2 in all turns | P2 | 50 | information-sharing and enforced collaboration |
| T7 | P2 | P1 and P2 in all turns | P1 | 50 | information-sharing and enforced collaboration |
| Castle game | Half for P1, half for P2 | P1 in turns 1, 3, 5, 7, 9; P2 in turns 2, 4, 6, 8, 10 | Auto | 30 | turn-taking and information-sharing |

Note: T = Tangram. P1 and P2 represent two users.
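The configuration features in Table 2 can be captured as plain data, and the “Type of game” column then follows from simple rules: turn-taking when exactly one player moves per turn and both players get turns; information-sharing when some player who moves pieces cannot see all of their colors; and enforced collaboration when both players must move in every turn. The sketch below encodes the color-visibility and movement columns (rotation is left out) under two assumptions of ours: tangram games with “all turns” have seven turns (one per piece, as in T1), and the castle game’s half-and-half color split means that neither player sees every color. The rules are our reading of the game descriptions, not code from the system.

```python
from dataclasses import dataclass

P1, P2, BOTH = frozenset({"P1"}), frozenset({"P2"}), frozenset({"P1", "P2"})
NOBODY = frozenset()


@dataclass(frozen=True)
class GameConfig:
    name: str
    sees_all_colors: frozenset  # players who can see the color of every piece
    movers_per_turn: tuple      # for each turn, the set of players who must move
    step_seconds: int           # "Step duration" column of Table 2


def game_types(cfg: GameConfig) -> list:
    """Derive the 'Type of game' column from the configuration features."""
    movers = set().union(*cfg.movers_per_turn)
    types = []
    # Turn-taking: one mover per turn, and both players get turns.
    if all(len(m) == 1 for m in cfg.movers_per_turn) and len(movers) == 2:
        types.append("turn-taking")
    # Information-sharing: someone who moves pieces cannot see all of their colors.
    if any(p not in cfg.sees_all_colors for p in movers):
        types.append("information-sharing")
    # Enforced collaboration: both players must drag together in every turn.
    if all(m == BOTH for m in cfg.movers_per_turn):
        types.append("enforced collaboration")
    return types


CONFIGS = [
    GameConfig("T1", BOTH, (P1, P2, P1, P2, P1, P2, P1), 30),
    GameConfig("T2", BOTH, (P2, P2, P1, P1, P2, P2, P1), 30),
    GameConfig("T3", BOTH, (BOTH,) * 7, 45),
    GameConfig("T4", P1, (P2,) * 7, 40),
    GameConfig("T5", P2, (P1,) * 7, 40),
    GameConfig("T6", P1, (BOTH,) * 7, 50),
    GameConfig("T7", P2, (BOTH,) * 7, 50),
    GameConfig("Castle", NOBODY, (P1, P2) * 5, 30),
]

for cfg in CONFIGS:
    print(cfg.name, "->", " and ".join(game_types(cfg)))  # first line: T1 -> turn-taking
```

Under these assumptions the loop reproduces the “Type of game” column for all eight rows, which makes the encoding a quick consistency check on the table.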

Measurement strategy

To assess potential targets for future system development, we conducted both offline coding of aspects of interactions as well as within-system measures of performance and interaction.

Communication efficiency

We measured communication via offline coding of participant speech. Each participant’s speech was classified into nine communication variables reflecting the primary types of utterances made during experimental sessions (see Table 3). These variables were defined by consensus among five members of the research team, based on previous studies of peer-peer interaction in both ASD intervention and TD learning (Teasley 1995; Van Boxtel et al. 2000; Curtis and Lawson 2001).

Table 3.

Communication variables (used for communication coding) and their descriptions

| Communication variable | Description |
|---|---|
| Frequency of words | The number of words participants speak per minute |
| Frequency of question asking | The number of task-related questions participants ask per minute |
| Frequency of information sharing-response | How often participants respond to partners |
| Frequency of information sharing-spontaneous | How often participants spontaneously provide information |
| Frequency of social reinforcement-positive | How often participants give positive social feedback (e.g., “Good job”) |
| Frequency of social reinforcement-negative | How often participants give negative social feedback (e.g., “That was stupid”) |
| Frequency of directives | How often participants give partners instructions |
| Frequency of socially oriented vocalizations | How often participants make a socially oriented utterance (e.g., “What’s your name?”) |
| Frequency of speaker changes | How often participants and their partners take turns talking |

The offline coding proceeded as follows. First, the audio recorded for each participant in real time was transcribed using Dragon NaturallySpeaking (www.nuance.com). The transcriptions were then corrected by two native speakers of English. Finally, a member of the research team labeled each utterance with one of the communication variables listed in Table 3. Manual coding was necessary because of the preliminary design of the system and the lack of available software that could capture not only the number of words spoken but also the qualitative nature of those utterances.
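Once each utterance has been transcribed and labeled, the per-minute variables in Table 3 reduce to counting. The sketch assumes chronologically ordered utterances, a known game duration in minutes, and short label codes that we made up for the Table 3 categories; counting a speaker change at every switch between consecutive utterances is likewise our assumption.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Utterance:
    speaker: str  # "P1" or "P2"
    text: str     # corrected transcript of the utterance
    label: str    # hypothetical short code for a Table 3 category, e.g. "question"


def communication_rates(utterances, minutes: float, speaker: str) -> dict:
    """Per-minute rates for one speaker in one game, plus the pair's speaker changes.

    `utterances` must be in chronological order and cover the whole game.
    """
    own = [u for u in utterances if u.speaker == speaker]
    rates = {"words": sum(len(u.text.split()) for u in own) / minutes}
    for label, count in Counter(u.label for u in own).items():
        rates[label] = count / minutes
    # A speaker change occurs whenever two consecutive utterances come from different people.
    changes = sum(a.speaker != b.speaker for a, b in zip(utterances, utterances[1:]))
    rates["speaker_changes"] = changes / minutes
    return rates


session = [
    Utterance("P1", "what color is the big triangle", "question"),
    Utterance("P2", "it is red", "info_response"),
    Utterance("P2", "move it to the top", "directive"),
    Utterance("P1", "good job", "positive_reinforcement"),
]
print(communication_rates(session, minutes=2.0, speaker="P1"))
# {'words': 4.0, 'question': 0.5, 'positive_reinforcement': 0.5, 'speaker_changes': 1.0}
```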

Performance efficiency

As seen in Table 4, we measured performance based on four factors necessary for game play: frequency of success, game duration, duration of collaborative actions, and collaborative movement ratio.

Table 4.

Performance variables and their description

| Performance variable | Description |
|---|---|
| Success frequency | How many times participants succeeded in placing a puzzle piece |
| Game duration | How long a game lasted |
| Collaborative action duration | How long the two participants moved a puzzle piece together during enforced-collaboration games |
| Collaborative movement ratio | The ratio of how much game time is spent with one participant moving a puzzle piece compared to how much time is spent in collaborative movement by both participants together |
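Collaborative action duration and the collaborative movement ratio can be derived from each player’s drag intervals. The sketch below assumes logged `(piece, start, end)` intervals and counts collaborative action only when both players drag the same piece at once. Because the wording in Table 4 can be read in either direction, the ratio here is taken as collaborative time divided by single-player movement time, the orientation under which a higher value means more joint movement, as interpreted in the Results; this is an assumption.

```python
def overlap(a_start, a_end, b_start, b_end):
    """Length of the intersection of two time intervals (0 if they are disjoint)."""
    return max(0.0, min(a_end, b_end) - max(a_start, b_start))


def movement_summary(p1_drags, p2_drags):
    """Summarize dragging in an enforced-collaboration game.

    p1_drags / p2_drags: lists of (piece, start_s, end_s). Assumes each player drags
    at most one piece at a time, so a player's own intervals never overlap.
    """
    # Collaborative action: both players dragging the same piece at the same time.
    joint = sum(overlap(s1, e1, s2, e2)
                for piece1, s1, e1 in p1_drags
                for piece2, s2, e2 in p2_drags
                if piece1 == piece2)
    total1 = sum(e - s for _, s, e in p1_drags)
    total2 = sum(e - s for _, s, e in p2_drags)
    # Single-player movement: dragging time not matched by the partner on the same piece.
    single = (total1 - joint) + (total2 - joint)
    ratio = joint / single if single > 0 else None  # assumed orientation: higher = more joint
    return {"collaborative_s": joint, "single_s": single, "ratio": ratio}


p1 = [("square", 0.0, 4.0), ("triangle", 10.0, 13.0)]
p2 = [("square", 1.0, 5.0), ("parallelogram", 10.0, 12.0)]
summary = movement_summary(p1, p2)
print(summary["collaborative_s"], summary["single_s"])  # 3.0 7.0
```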

Game play experience

At the completion of the session, we asked the participants to self-report on several factors related to the quality of game play experience, including: enjoyment, difficulty, appraisal of own performance, ease of communicating with partner to accomplish goals, and perceived improvement in communication over game tasks. Each item was scored according to a 5-point Likert scale, with 1 reflecting more critical responses (e.g., disliked the game, found it very difficult, felt I played very badly) and 5 reflecting more positive responses (e.g., liked the game very much, I think I played very well).

Procedures

All pairs completed a single session that lasted approximately 60 minutes. Informed consent and assent were obtained from the participants and their guardians prior to the experiment. Participants sat at computers in separate rooms but within the same building. They could not see each other. Video recordings of the participants’ faces and their respective computer screens were made. All procedures were approved by the university’s governing Institutional Review Board.

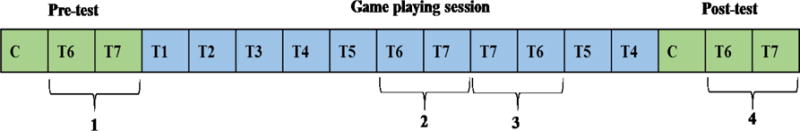

The session consisted of three parts: a pre-test, a series of collaborative games, and a post-test. Before game play began, participants were given text- and audio-based instructions about the three types of games and how to play them, including a prompt to talk to their partners. Table 2 shows the configurations of all the designed games, and Table 5 lists the games in the order in which they were played; all games were played by the two partners together. The pre-test consisted of three games (one castle game and two tangram games), took approximately five minutes, and served to collect baseline data on communication and puzzle-completion skills. After the pre-test, each pair played a series of eleven tangram games, which took approximately thirty minutes to complete. The session ended with a post-test consisting of the same three games as the pre-test. Note that games T6_1, T6_2, and T6_3 are variants of T6 (whose configuration features are shown in Table 2), each with a different target shape to complete. The same is true for T7_1, T7_2, and T7_3.

Table 5.

All the games and their order during experiment

| Phase | Games (in order of play) |
|---|---|
| Pre-test | Castle game, T6_1, T7_1 |
| Game playing | T1, T2, T3, T4, T5, T6_2, T7_2, T7_3, T6_3, T5, T4 |
| Post-test | Castle game, T6_1, T7_1 |

Results

Our primary goal was to evaluate whether the system could be feasibly implemented and well tolerated by children with ASD and their TD peers. We also examined the capability of the system to index important aspects of partner interactions. We analyzed the feasibility and the tolerability of the system as well as participants’ opinions about their performance and game play experience using a self-report questionnaire. In order to evaluate the system capability to index important aspects of partner interactions, we analyzed pre- to post-test changes in performance and communication variables (shown in Table 3 and Table 4), and documented trends in important aspects of utterances across the course of game play.

Feasibility results

Overall, the system software and hardware worked as designed. All participants completed the session (a zero dropout rate), indicating that children with and without ASD were able to tolerate the CVE and the protocol. The system successfully logged performance data in all cases and 99.8% of the audio data in real time; the remaining 0.2% of the audio was manually extracted offline from the recorded video. These results indicate that the system has the potential to facilitate and record aspects of interactions between children with and without ASD across the internet.

Self-report engagement

Results from the self-report questionnaire revealed high overall satisfaction with the game play experience (Table 6). Specifically, participants reported high enjoyment of partner play in the ASD group (4.86/5), the TD1 group (4.43/5), and the TD2 group (4.71/5). Question 5 assessed self-perceived improvement in talking with the partner, and participants reported high levels of perceived improvement (ASD group: 4.57/5; TD1 group: 4.0/5; TD2 group: 4.57/5). The Kruskal-Wallis test, a non-parametric method for testing whether two or more independent samples originate from the same distribution (Billard 2005), indicated no significant between-group differences in responses on the self-report questionnaire.
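For reference, the Kruskal-Wallis H statistic, with the usual correction for tied ranks (which matters for Likert data), is simple to compute by hand. The sketch below is restricted to three groups, as with ASD, TD1, and TD2, because the chi-square approximation with k - 1 = 2 degrees of freedom then has the closed-form survival function exp(-H/2). With only seven participants per group this approximation is rough, and a statistics package’s implementation would normally be used instead. The example values are illustrative, not study data.

```python
import math


def kruskal_wallis_3(*groups):
    """Tie-corrected Kruskal-Wallis H and its chi-square p-value, for exactly three groups."""
    if len(groups) != 3:
        raise ValueError("the closed-form p-value below assumes three groups (df = 2)")
    pooled = sorted(v for g in groups for v in g)
    n = len(pooled)
    rank_of, tie_term, i = {}, 0, 0
    while i < n:
        j = i
        while j < n and pooled[j] == pooled[i]:
            j += 1
        rank_of[pooled[i]] = (i + 1 + j) / 2  # tied values share the mean of ranks i+1..j
        t = j - i
        tie_term += t ** 3 - t
        i = j
    h = 12 / (n * (n + 1)) * sum(
        sum(rank_of[v] for v in g) ** 2 / len(g) for g in groups) - 3 * (n + 1)
    h /= 1 - tie_term / (n ** 3 - n)  # correction for tied ranks
    return h, math.exp(-h / 2)  # chi-square survival function with 2 degrees of freedom


h, p = kruskal_wallis_3([1, 1], [2, 2], [3, 3])  # toy values, not study data
print(round(h, 6), round(p, 4))  # 5.0 0.0821
```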

Table 6.

Numerical results of all the self-report questions

| Question | Item | ASD, mean (SD) | TD1, mean (SD) | TD2, mean (SD) |
|---|---|---|---|---|
| Question 1 | How much did you like playing the games with your partner? | 4.86 (0.38) | 4.43 (0.79) | 4.71 (0.61) |
| Question 2 | Overall, how would you rate the difficulty of the games? | 3.14 (1.35) | 3.57 (0.96) | 3.29 (0.91) |
| Question 3 | Overall, how do you think you played? | 4 (1) | 4.28 (0.49) | 3.71 (0.61) |
| Question 4 | Was it easy to talk to your partner in order to figure out how to complete the puzzle? | 4.29 (1.11) | 4.29 (1.11) | 4.29 (0.73) |
| Question 5 | Did you get better at talking with your partner the longer you played? | 4.57 (0.79) | 4 (0.58) | 4.57 (0.65) |
| Question 6 | Did your performance change at all by the end of today’s visit? That is, as you played more games, did your performance get better or worse? | 4.29 (0.76) | 4.43 (0.79) | 4.57 (0.51) |

Note: SD=Standard deviation

Measuring collaborative performance and communication

To determine the system’s capability to index important aspects of within-CVE interactions, we analyzed pre- to post-test changes in the collaborative performance and communication variables by statistically comparing the pre- and post-test values of these predefined variables for all groups. The Wilcoxon signed-rank test was used with α = 0.05. We also report effect sizes using Spearman’s rank correlation (ρ; 0.1 = small, 0.3 = medium, and 0.5 = large effect). All variables that showed significant pre-post differences are listed in Table 7.
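With paired samples as small as the seven pairs per group here, the Wilcoxon signed-rank p-value can be computed exactly by enumerating every sign pattern of the ranked differences. The sketch below is a generic two-sided exact version (zero differences dropped, average ranks for ties); it is not necessarily the variant or software used in the study, and it does not compute the effect size.

```python
from itertools import product


def wilcoxon_exact(pre, post):
    """Two-sided exact Wilcoxon signed-rank test for small paired samples.

    Zero differences are dropped; tied |differences| receive average ranks.
    Returns (W+, p). Enumerates all 2**n sign patterns, so keep n small.
    """
    diffs = [b - a for a, b in zip(pre, post) if b != a]
    n = len(diffs)
    order = sorted(range(n), key=lambda k: abs(diffs[k]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j < n and abs(diffs[order[j]]) == abs(diffs[order[i]]):
            j += 1
        for k in order[i:j]:
            ranks[k] = (i + 1 + j) / 2  # average of ranks i+1 .. j
        i = j
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    total = sum(ranks)
    observed = max(w_plus, total - w_plus)
    # Under H0 each difference is equally likely to be positive or negative.
    extreme = 0
    for signs in product((False, True), repeat=n):
        s = sum(r for r, positive in zip(ranks, signs) if positive)
        if max(s, total - s) >= observed:
            extreme += 1
    return w_plus, extreme / 2 ** n


print(wilcoxon_exact([3, 2, 4, 1, 2, 5], [5, 6, 7, 4, 3, 9]))  # (21.0, 0.03125)
```

For orientation: when all n non-zero differences share the same sign, this exact test reaches its smallest possible two-sided p-value, 2/2^n, which is 0.03125 for n = 6 and about 0.016 for n = 7.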

Table 7.

All the variables with significant differences from pre-test to post-test

| Index | Group | Game | Variable | Increased/Decreased | p value | ρ |
|---|---|---|---|---|---|---|
| 1 | ASD/TD pair | Castle game | Success frequency | Increased | 0.031 | 0.95 |
| 2 | ASD/TD pair | Castle game | Time duration | Decreased | 0.031 | 1.00 |
| 3 | ASD/TD pair | T6_1 and T7_1 | Success frequency | Increased | 0.016 | 0.19 |
| 4 | ASD/TD pair | T6_1 and T7_1 | Time duration | Decreased | 0.016 | 0.36 |
| 5 | ASD/TD pair | T6_1 and T7_1 | Collaborative ratio | Increased | 0.0001 | 0.67 |
| 6 | TD/TD pair | Castle game | Success frequency | Increased | 0.031 | 0.73 |
| 7 | TD/TD pair | Castle game | Time duration | Decreased | 0.016 | 0.89 |
| 8 | ASD/TD pair | Castle game | Frequency of spontaneous (task-oriented) utterances | Increased | 0.031 | 0.93 |
| 9 | ASD/TD pair | Castle game | Frequency of speaker changes | Increased | 0.031 | 0.39 |
| 10 | TD/TD pair | Castle game | Frequency of question (task-oriented) utterances | Increased | 0.031 | 0.72 |
| 11 | TD/TD pair | Castle game | Frequency of speaker changes | Increased | 0.005 | 0.86 |

Regarding performance measures, ASD/TD pairs had more successful piece placements and took less time to finish both the castle game (p < .05, ρ = 0.95) and the tangram games (p < .05, ρ = 0.19). TD/TD pairs had more successful piece placements and took less time on the castle game (p < .05, ρ = 0.73), but not on the tangram games. The collaborative movement ratio, which reflects how much the two participants move pieces together, provides a quantitative measure of collaborative interaction. ASD/TD pairs had a higher collaborative movement ratio in the post-test than in the pre-test (p < .001, ρ = 0.67).

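Regarding communication measures, both ASD/TD and TD/TD pairs produced more task-oriented utterances and more speaker changes in the post-test castle game than in the pre-test castle game. ASD/TD pairs increased their frequency of spontaneous task-oriented utterances (p < .05, ρ = 0.93), and TD/TD pairs increased their frequency of task-oriented questions (p < .05, ρ = 0.72). TD/TD pairs showed an increase in the frequency of speaker changes (p < .01, ρ = 0.86), as did ASD/TD pairs (p < .05, ρ = 0.39). No communication differences emerged for the tangram games.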

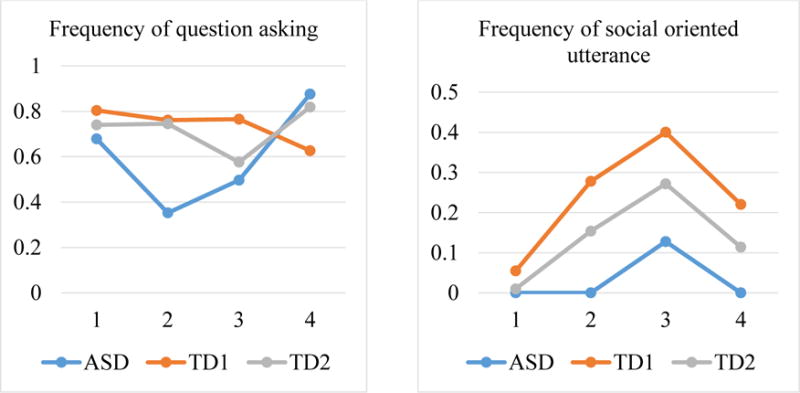

Communication trajectories

Plotting communication trajectories revealed notable patterns in two important communication variables (frequency of question asking and frequency of socially oriented utterances) for each individual group (ASD, TD1, and TD2) across the course of the experimental session. To compare trajectories, we examined pairs of tangram games administered at four time points: during the pre-test (1), back-to-back in mid-session (2, 3), and during the post-test (4), as shown in Figure 3. The two tangram games at each time point were analyzed together because, for each player, one game required asking for color information while the other required sharing it, so together they involved both asking and answering questions. Participant rates on the two communication variables are plotted in Figure 4. The question-asking frequency of children with ASD decreased from 0.77 questions per minute in the pre-test to 0.35 questions per minute at the first mid-session time point, and then increased (from 0.35 to 0.50, and then to 0.88). Children with ASD produced no socially oriented utterances in the pre-test or post-test; however, they produced 0.13 socially oriented utterances per minute at the second mid-session time point.

Figure 3.

Experimental procedure. Each experiment includes a pre-test, a game-playing session, and a post-test; numbers 1, 2, 3, and 4 denote the four pairs of enforced-collaboration games.

Figure 4.

Changes in communication variables across the experimental session; numbers 1, 2, 3, and 4 denote the four pairs of enforced-collaboration games.

Discussion

We designed and tested a CVE system capable of enabling collaborative interactions and communication between children with ASD and their TD peers. We specifically investigated how well participants with and without ASD tolerated the system by analyzing system performance, the drop-out rate, and self-reports of game-play experience. We also piloted a coding methodology, explored preliminary pre- to post-test differences in game-performance and communication variables, and documented trends in utterances across the course of game play.

Evaluating the tolerability of a CVE system is an important and promising area of ASD intervention science because a successful system could open new avenues for teaching and measuring social communication skills. A substantial body of literature has evaluated the feasibility of computer-mediated systems for ASD intervention (Fletcher-Watson 2014). Some studies reported significant drop-out rates when using computer-mediated systems for ASD intervention (Silver and Oakes 2001; Golan and Baron-Cohen 2006). Other studies found that some children with ASD lacked the skills necessary to access the technology (Whalen et al. 2010). With our system, all participants successfully completed the CVE-based interaction, and data capture was robust. In addition, both children with ASD and TD children reported enjoying the games and reported self-perceived improvements in performance and communication.

In terms of the system's capability to index participants' interactions, we analyzed data from several predefined performance and communication measures. Using these measures, we observed statistically significant increases from the pre-test to the post-test in several collaborative measures (e.g., collaborative movement ratio) and communication measures (e.g., frequency of spontaneous utterances and frequency of speaker changes). Importantly, this system was not designed or studied as an intervention within the current methodology (i.e., single-session use with a small sample). Rather, we aimed to index the system's measurement capacity during brief, dynamic interaction periods. Our ability to measure and document change within these limited interaction and observation periods therefore provides important preliminary evidence that the system may not only measure, but also influence and promote, interactions within the CVE.

Regarding the measurement results, several potential interaction differences were observed. ASD/TD pairs had significantly higher collaborative movement ratios from pre- to post-test, suggesting a potential positive effect of the CVE system on their collaborative performance within the CVE. We also saw trends in more complex social communication variables over the course of the experimental session, in line with the results of other studies in this area. Specifically, we saw low levels of question asking in our ASD group at the very beginning of the experiments. Schmidt et al. also reported that children with ASD may make fewer initiations, including question asking, than their TD peers (M. Schmidt et al. 2011). We also observed increases in such questioning over the course of game play. Owen-DeSchryver et al. reported that, when interacting with TD peers in a peer-mediated training intervention, children with ASD may show an increased frequency of initiation (Owen-DeSchryver et al. 2008). However, the effects of the CVE on these children need to be evaluated further in long-term, multi-session experiments.

Our successful design and implementation of this protocol paves the way for future investigations into how technology can not only provide opportunities for, but also potentially structure and encourage, collaborative interactions for children with ASD. Future research will develop and systematically deploy highly engaging CVE systems in which children with ASD and TD peers interact. These systems may be designed explicitly to be intrinsically motivating for children with ASD and TD children alike. Specifically, the games will be constructed so that ultimate success and improvement require effective collaboration (e.g., participants must move pieces at the same time, or must give their partner instructions about portions of the environment the partner cannot see). Because the interaction partner is a real peer rather than a preprogrammed agent, CVEs may in this manner overcome the traditional, and to date insurmountable, programming burdens associated with attempting to model dynamic conversation within intelligent systems.

While such future applications may be promising, the current work represents only a first step in this direction. It has a number of limitations, including: a) a small sample size, b) a restricted range of abilities among included participants, c) reliance on offline coding and indices, d) a limited window of observation of skills (i.e., single-session, non-intervention-oriented interactions), and e) a lack of matching of participants on motor and cognitive functioning.

Considering the impact of participants' cognitive and motor functioning on task performance is an important goal for future work. Individuals with more severe ASD symptoms or with difficulties in fine motor or visual-spatial skills would likely face more challenges interacting with the CVE system. Matching participants on these abilities and on IQ in future studies will help clarify not only which children with ASD this type of technology is best suited for, but also how the task demands of our activities might be adjusted to accommodate a broader range of skill sets.

Despite these substantial limitations and the need for larger, extended studies of its applications, our CVE system was well tolerated and appeared engaging and enjoyable to participants, who reported perceived interactive benefits. Our results also suggest that important aspects of social interaction and communication could potentially be measured and altered within the environment.

Acknowledgments

This work was supported in part by the National Institutes of Health (1R01MH091102-01A1 and 1R21MH111548-01), the National Science Foundation (0967170), and the Hobbs Society Grant from the Vanderbilt Kennedy Center. The authors also express their great appreciation to the participants and their families for assisting in this research. Although the manuscript text is wholly original, the work discussed here has been presented in a peer-reviewed engineering journal, ACM Transactions on Accessible Computing.

Footnotes

Author Note

Lian Zhang, Department of Electrical Engineering and Computer Science, Vanderbilt University, Nashville, TN, USA. Zachary Warren, Department of Pediatrics and Psychiatry, Vanderbilt University, Nashville, TN, USA. Amy Swanson, Treatment and Research Institute for Autism Spectrum Disorders, Vanderbilt University, Nashville, TN, USA. Amy Weitlauf, Treatment and Research Institute for Autism Spectrum Disorders, Vanderbilt University, Nashville, TN, USA. Nilanjan Sarkar, Department of Mechanical Engineering, Vanderbilt University, Nashville, TN, USA.

Author Contributions: LZ implemented the software used in the study, oversaw all experiments, conducted all data analyses, and drafted the technical portions of the manuscript. AS and AW provided major design considerations for the study from a clinical perspective, managed recruitment of participants, drafted portions of the manuscript, and aided in several rounds of editing and revision. NS and ZW conceived of the study, crafted the experimental design, and revised the manuscript. All authors read and approved the final manuscript.

Conflict of Interest: Each author declares that he/she has no conflict of interest.

References

- Anagnostou E, Jones N, Huerta M, Halladay AK, Wang P, Scahill L, et al. Measuring social communication behaviors as a treatment endpoint in individuals with autism spectrum disorder. Autism. 2015;19(5):622–636. doi: 10.1177/1362361314542955.

- Battocchi A, Ben-Sasson A, Esposito G, Gal E, Pianesi F, Tomasini D, et al. Collaborative puzzle game: a tabletop interface for fostering collaborative skills in children with autism spectrum disorders. Journal of Assistive Technologies. 2010;4(1):4–13.

- Benford S, Greenhalgh C, Rodden T, Pycock J. Collaborative virtual environments. Communications of the ACM. 2001;44(7):79–85.

- Bernard-Opitz V, Sriram N, Nakhoda-Sapuan S. Enhancing social problem solving in children with autism and normal children through computer-assisted instruction. Journal of Autism and Developmental Disorders. 2001;31(4):377–384. doi: 10.1023/a:1010660502130.

- Billard L. Journal of The American Statistical Association. Encyclopedia of Biostatistics. 2005.

- Cassell J, Bickmore T, Campbell L, Vilhjálmsson H. Designing embodied conversational agents. Embodied Conversational Agents. 2000:29–63.

- Cheng Y, Chiang H-C, Ye J, Cheng L-h. Enhancing empathy instruction using a collaborative virtual learning environment for children with autistic spectrum conditions. Computers & Education. 2010;55(4):1449–1458.

- Clarke EM, Emerson EA, Sistla AP. Automatic verification of finite-state concurrent systems using temporal logic specifications. ACM Transactions on Programming Languages and Systems (TOPLAS). 1986;8(2):244–263.

- Constantino JN, Gruber CP. The Social Responsiveness Scale. Los Angeles: Western Psychological Services; 2002.

- Curtis DD, Lawson MJ. Exploring collaborative online learning. Journal of Asynchronous Learning Networks. 2001;5(1):21–34.

- Elliott CD, Murray G, Pearson L. Differential Ability Scales. San Antonio, Texas: 1990.

- Fletcher-Watson S. A targeted review of computer-assisted learning for people with autism spectrum disorder: Towards a consistent methodology. Review Journal of Autism and Developmental Disorders. 2014;1(2):87–100.

- Gal E, Goren-Bar D, Gazit E, Bauminger N, Cappelletti A, Pianesi F, et al. International Conference on Intelligent Technologies for Interactive Entertainment. Springer; 2005. Enhancing social communication through story-telling among high-functioning children with autism; pp. 320–323.

- Golan O, Baron-Cohen S. Systemizing empathy: Teaching adults with Asperger syndrome or high-functioning autism to recognize complex emotions using interactive multimedia. Development and Psychopathology. 2006;18(2):591–617. doi: 10.1017/S0954579406060305.

- Johnson DW, Johnson RT. Cooperation and the use of technology. Handbook of Research for Educational Communications and Technology: A Project of the Association for Educational Communications and Technology. 1996:1017–1044.

- Kopp S, Gesellensetter L, Krämer NC, Wachsmuth I. International Workshop on Intelligent Virtual Agents. Springer; 2005. A conversational agent as museum guide: design and evaluation of a real-world application; pp. 329–343.

- Lahiri U, Bekele E, Dohrmann E, Warren Z, Sarkar N. Design of a virtual reality based adaptive response technology for children with autism. IEEE Transactions on Neural Systems and Rehabilitation Engineering. 2013;21(1):55–64. doi: 10.1109/TNSRE.2012.2218618.

- Leman PJ. How do groups work? Age differences in performance and the social outcomes of peer collaboration. Cognitive Science. 2015;39(4):804–820. doi: 10.1111/cogs.12172.

- Millen L, Hawkins T, Cobb S, Zancanaro M, Glover T, Weiss PL, et al. Proceedings of the 10th International Conference on Interaction Design and Children. ACM; 2011. Collaborative technologies for children with autism; pp. 246–249.

- Mitchell P, Parsons S, Leonard A. Using virtual environments for teaching social understanding to 6 adolescents with autistic spectrum disorders. Journal of Autism and Developmental Disorders. 2007;37(3):589–600. doi: 10.1007/s10803-006-0189-8.

- Moore D, Cheng Y, McGrath P, Powell NJ. Collaborative virtual environment technology for people with autism. Focus on Autism and Other Developmental Disabilities. 2005;20(4):231–243.

- Moreno-Ger P, Burgos D, Martínez-Ortiz I, Sierra JL, Fernández-Manjón B. Educational game design for online education. Computers in Human Behavior. 2008;24(6):2530–2540.

- Murray D. Autism and information technology: therapy with computers. Autism and Learning: A Guide to Good Practice. 1997:100–117.

- Owen-DeSchryver JS, Carr EG, Cale SI, Blakeley-Smith A. Promoting social interactions between students with autism spectrum disorders and their peers in inclusive school settings. Focus on Autism and Other Developmental Disabilities. 2008;23(1):15–28.

- Parsons S, Mitchell P. The potential of virtual reality in social skills training for people with autistic spectrum disorders. Journal of Intellectual Disability Research. 2002;46(5):430–443. doi: 10.1046/j.1365-2788.2002.00425.x.

- Pennington RC. Computer-assisted instruction for teaching academic skills to students with autism spectrum disorders: A review of literature. Focus on Autism and Other Developmental Disabilities. 2010;25(4):239–248.

- Ploog BO, Scharf A, Nelson D, Brooks PJ. Use of computer-assisted technologies (CAT) to enhance social, communicative, and language development in children with autism spectrum disorders. Journal of Autism and Developmental Disorders. 2013;43(2):301–322. doi: 10.1007/s10803-012-1571-3.

- Pruette JR. Autism Diagnostic Observation Schedule-2 (ADOS-2). 2013.

- Rao PA, Beidel DC, Murray MJ. Social skills interventions for children with Asperger's syndrome or high-functioning autism: A review and recommendations. Journal of Autism and Developmental Disorders. 2008;38(2):353–361. doi: 10.1007/s10803-007-0402-4.

- Reynolds S, Bendixen RM, Lawrence T, Lane SJ. A pilot study examining activity participation, sensory responsiveness, and competence in children with high functioning autism spectrum disorder. Journal of Autism and Developmental Disorders. 2011;41(11):1496–1506. doi: 10.1007/s10803-010-1173-x.

- Rummel N, Spada H. Learning to collaborate: An instructional approach to promoting collaborative problem solving in computer-mediated settings. The Journal of the Learning Sciences. 2005;14(2):201–241.

- Rutter M, Bailey A, Lord C. The Social Communication Questionnaire: Manual. Western Psychological Services; 2003.

- Scahill L, Aman MG, Lecavalier L, Halladay AK, Bishop SL, Bodfish JW, et al. Measuring repetitive behaviors as a treatment endpoint in youth with autism spectrum disorder. Autism. 2015;19(1):38–52. doi: 10.1177/1362361313510069.

- Schmidt C, Stichter JP, Lierheimer K, McGhee S, O'Connor KV. An initial investigation of the generalization of a school-based social competence intervention for youth with high-functioning autism. Autism Research and Treatment. 2011;2011:589539. doi: 10.1155/2011/589539.

- Schmidt M, Laffey J, Stichter J. Virtual social competence instruction for individuals with autism spectrum disorders: Beyond the single-user experience. Proceedings of CSCL. 2011:816–820.

- Silver M, Oakes P. Evaluation of a new computer intervention to teach people with autism or Asperger syndrome to recognize and predict emotions in others. Autism. 2001;5(3):299–316. doi: 10.1177/1362361301005003007.

- Teasley SD. The role of talk in children's peer collaborations. Developmental Psychology. 1995;31(2):207.

- Van Boxtel C, Van der Linden J, Kanselaar G. Collaborative learning tasks and the elaboration of conceptual knowledge. Learning and Instruction. 2000;10(4):311–330.

- Wallace S, Parsons S, Bailey A. Self-reported sense of presence and responses to social stimuli by adolescents with ASD in a collaborative virtual reality environment. Journal of Intellectual and Developmental Disability. 2015.

- Wechsler D. Wechsler Intelligence Scale for Children. 1949.

- Whalen C, Moss D, Ilan AB, Vaupel M, Fielding P, Macdonald K, et al. Efficacy of TeachTown: Basics computer-assisted intervention for the intensive comprehensive autism program in Los Angeles unified school district. Autism. 2010;14(3):179–197. doi: 10.1177/1362361310363282.

- Zhang L, Gabriel-King M, Armento Z, Baer M, Fu Q, Zhao H, et al. International Conference on Universal Access in Human-Computer Interaction. Springer; 2016. Design of a mobile collaborative virtual environment for autism intervention; pp. 265–275.