Abstract

During sentence processing, areas of the left superior temporal sulcus, inferior frontal gyrus and left basal ganglia exhibit a systematic increase in brain activity as a function of constituent size, suggesting their involvement in the computation of syntactic and semantic structures. Here, we asked whether these areas play a universal role in language and therefore contribute to the processing of non-spoken sign language. Congenitally deaf adults who acquired French sign language as a first language and written French as a second language were scanned while watching sequences of signs in which the size of syntactic constituents was manipulated. An effect of constituent size was found in the basal ganglia, including the head of the caudate and the putamen. A smaller effect was also detected in temporal and frontal regions previously shown to be sensitive to constituent size in written language in hearing French subjects (Pallier et al., 2011). When the deaf participants read sentences versus word lists, the same network of language areas was observed. While reading and sign language processing yielded identical effects of linguistic structure in the basal ganglia, the effect of structure was stronger in all cortical language areas for written language relative to sign language. Furthermore, cortical activity was partially modulated by age of acquisition and reading proficiency. Our results stress the important role of the basal ganglia, within the language network, in the representation of the constituent structure of language, regardless of the input modality.

Keywords: fMRI, Sign language, Deaf, Language comprehension, Constituent structure

Introduction

Does language processing recruit a universal set of brain mechanisms, regardless of culture and education? In the past twenty years, this important question, initially posed solely through linguistic and behavioral studies in the context of Chomsky's Universal Grammar hypothesis, has begun to be investigated at the brain level (Moro, 2008). Several brain-imaging studies have homed in on a consistent network of brain regions in the superior temporal sulcus and inferior frontal gyrus, lateralized to the left hemisphere, which are systematically activated whenever subjects process sentences in their native language (Mazoyer et al., 1993; Marslen-Wilson and Tyler, 2007; Pallier et al., 2011; Friederici, 2012). Neuroimaging studies of language comprehension suggest that essentially the same left-lateralized perisylvian network is engaged by the processing of spoken or written language (Vagharchakian et al., 2012). Those regions respond to manipulations of syntactic complexity (Marslen-Wilson and Tyler, 2007; Pallier et al., 2011; Shetreet and Friedmann, 2014) and activate to natural but not unnatural linguistic constructions (Musso et al., 2003). Intracranial recordings in adults indicate that their activation varies monotonically with the number of words that can be integrated in a phrase or sentence (Fedorenko et al., 2016; Nelson et al., 2017). Remarkably, those regions are already active when 2-month-old babies listen to their native language (Dehaene-Lambertz and Spelke, 2015), and already exhibit hemispheric asymmetries that are unique to the human species (Leroy et al., 2015), further supporting the hypothesis that they may host a specific and universal mechanism for language acquisition.

The existence of sign languages presents a significant challenge for this hypothesis. Several researchers have presented data supporting the idea that, even though sign languages are based on an entirely distinct output modality, they are full-fledged natural languages and are governed by the same linguistic constraints as spoken languages (Klima and Bellugi, 1979; Sallandre and Cuxac, 2002; Sandler, 2003, 2010; Cuxac and Sallandre, 2007; Brentari, 2010; Pfau et al., 2012; Börstell et al., 2015). The acquisition of sign language also follows a time course similar to that of spoken language, with deaf babies undergoing an early stage of sign overproduction and “babbling” in the first year of life (Petitto and Marentette, 1991; Cheek et al., 1998; Cormier et al., 1998; Petitto et al., 2001, 2004). Moreover, consistent with neuroimaging studies of language comprehension, neuropsychological studies have revealed classical patterns of aphasia for sign language, due to similar brain lesions (Hickok et al., 2002; Pickell et al., 2005; Hickok and Bellugi, 2010; Rogalsky et al., 2013). Finally, neuroimaging studies of sign language (SL), when disregarding the low-level differences due to input modalities, have also converged on a classical network of left-hemispheric regions similar to spoken language (for reviews, see Campbell et al. (2007), Rogalsky et al. (2013), Corina et al. (2013b)). Nevertheless, a few studies have reported stronger responses to sign language in the right, or left, parietal regions, which have been hypothesized to reflect the spatial content of sign languages (Emmorey et al., 2002; Newman et al., 2002, 2010a; MacSweeney et al., 2008; Courtin et al., 2010; Newman et al., 2010b; Emmorey et al., 2013; Newman et al., 2015).

In summary, in the present state of knowledge, it seems plausible that sign language should rely on the same brain areas as spoken language, but the data are not fully convergent. Furthermore, most neuroimaging studies of sign language have only mapped the entire language system, from lexical to morphological, syntactic, semantic and pragmatic components, by using basic contrasts such as viewing full-fledged movies of people signing versus “backward layered” movies, i.e. 3 different semi-transparent ASL sentence video clips superimposed and played backwards (Newman et al., 2010a, 2010b, 2015). Moreover, the comparison of the brain areas activated by sign language and by spoken or written language has typically been performed at the group level, by comparing a group of signers with another group of non-signers (Corina et al., 2013a). In the present work, our goals were to go beyond this state of knowledge in two distinct ways. First, our experimental design attempted to specifically isolate the cortical representation of the constituent structure of sign language. Second, we aimed to compare sign language with written language processing within the same subjects, by scanning deaf subjects who were native signers and who could also read written sentences fluently.

Supplementary video related to this article can be found at https://doi.org/10.1016/j.neuroimage.2017.11.040.

To achieve those goals, we adapted, to sign language, a paradigm previously developed to study the constituent structure of written language (Pallier et al., 2011), and in which the stimuli were lists of twelve words that ranged in complexity from a list of 12 unrelated words to phrases of 2 words, 3 words, 4 words, 6 words, or a full sentence of 12 words. Here, similarly, we presented deaf participants with sequences of 8 signs in which the size of syntactic constituents was systematically manipulated, from unrelated signs to phrases of 2, 4 or 8 signs. All participants were congenitally deaf adults who had acquired French Sign Language (LSF: Langue des Signes Française) as a first language and written French as a second language. With this design, our aim was to identify the brain regions involved in compositional processes in sign language comprehension (see Makuuchi et al. (2009), Goucha and Friederici (2015), Zaccarella et al. (2015), or Nelson et al. (2017) for similar approaches in written sentence processing). We also included a reading condition where participants watched sequences of words that formed either fully well-formed sentences or plain lists of words that could not merge into larger constituents, thus partially replicating the Pallier et al. (2011) experiment within the same subjects. Our main experimental questions were the following: (1) are the brain responses to the constituent size manipulation similar in sign language and in reading within deaf signers? (2) how do these responses compare to the ones in the native French speakers tested in Pallier et al. (2011)?

Materials and methods

Participants

Twenty signers of French sign language (8 men and 12 women, all right-handed) took part in the study (see Table 1 for details). All the participants had a binaural hearing loss of 75 dB or more. They were all born deaf except one, who lost hearing when she was 3 months old. All participants declared that sign language was their dominant language. Sixteen of them had started to learn sign language before age 5 (11 of them since birth). The remaining four participants started at the age of 5.5, 6, 8 and 16 years. Nineteen of them had been “oralized” (that is, they learned to lip read French and articulate it) so that they had all received linguistic input early in life. Moreover, all had received an education in French, and reached various degrees of proficiency. Our initial criterion for inclusion was to be native in sign language (age of acquisition = 0). However, in the course of recruiting participants we relaxed this criterion because, in France, it is very difficult to find true native signers as the education system still favors oralization.

Table 1.

Participants’ profiles and behavioral data. The laterality quotient was obtained from the Edinburgh questionnaire (Oldfield, 1971) with one additional question about the dominant hand when signing. The participants self-reported the ages at which they started learning sign language and French (age of start of acquisition), the ages at which they considered having mastered sign language and French (age of mastering), their fluencies in sign language and French reading, on a scale from 1 (not good at all) to 7 (very good). The last rows describe the performance on three short behavioral tests of reading ability: semantic decision, lexical decision, and detection of grammatical anomalies. SD: standard deviation; Sign language: French sign language. * indicates significant values at pFWE=0.05.

| Deaf subjects (n = 20) | Measure | Mean | SD | Min–Max |
|---|---|---|---|---|
| Age (years old) | | 30.2 | 6.7 | 19.5–43 |
| Laterality quotient (%) | | 93.6 | 8.6 | 64–100 |
| Age of start of acquisition (years) | Sign language* | 2.5 | 4.0 | 0–16* |
| | French | 6.3 | 2.9 | 2.5–16 |
| Age of mastering (years) | Sign language | 12.8 | 6.2 | 4.5–25 |
| | French | 13.9 | 4.2 | 8–23 |
| Self-rated fluency (1–7) | Sign language | 6.5 | 0.6 | 5.5–7 |
| | French reading | 5.3 | 0.9 | 3.5–7 |
| Semantic decision on French words | Time per word (s) | 1.4 | 0.3 | 0.9–2.1 |
| | Accuracy (%) | 98.5 | 2.2 | 92.5–100 |
| Lexical decision on French words | Time per word (s) | 1.6 | 0.5 | 1–3 |
| | Accuracy (%) | 82 | 10.8 | 55–95 |
| Detection of grammatical errors in French sentences | Accuracy (%) | 80.5 | 8 | 62.5–95 |

In addition to their “age of acquisition”, i.e. the age of start of exposure, we also asked the participants about the age at which they thought that they had reached proficiency in each language (sign language and French). We expected more variability in the amount of exposure to sign and written languages across participants than is typically the case when the question of age of acquisition is asked to native speakers of an oral language. Thus, our questionnaire aimed to capture this variability in the ages of reaching high proficiency, while acknowledging that this is a highly subjective judgment.

Background information on the participants is provided in Table 1. The experiment was approved by the regional ethical committee, and all subjects gave written informed consent and received 80 euros for their participation.

Behavioral data

Three short behavioral tests were administered to assess reading ability: semantic decision, lexical decision, and detection of grammatical anomalies. For the semantic decision task, participants were presented with a printed list of forty nouns and asked to classify each of them as quickly as possible as ‘artificial’ or ‘natural’. All words were five letters long and had a lexical frequency above 10 ppm according to Lexique3 (see http://www.lexique.org); half of them represented man-made objects (such as radio, train) and half were natural objects (such as fruits). Accuracy and time to complete the test were recorded. For the lexical decision task, forty stimuli were used. Half were French nouns with a relatively low frequency (lower than 1 ppm) (such as tonus, fluor) and half were pseudowords (such as vorle). The stimuli were printed on a sheet and the task of the participant was to classify each stimulus as a word or a nonword. Accuracy and time to complete the full test were recorded. Finally, for the grammatical anomaly detection test, the participants were asked to read forty sentences and to indicate if they contained a grammatical mistake or not (these materials were provided courtesy of Dr. Dehaene-Lambertz). Global accuracy was measured.

Stimuli for fMRI

Sign language

Signs with a low iconicity were selected, to avoid spatially organized discourse or highly iconic structures (Courtin et al., 2010). Our goal was to create sign language sentences that were most “comparable” to French sentences, where written words entertain a fully arbitrary relationship with the corresponding meanings. Twenty-five 8-sign sentences were produced by a native signer (F. Limousin). Each sign was produced in isolation and recorded in a high-quality black-and-white video. In each sign clip, the signer started and ended with her hands on her lap. The signs were then strung together in order to create the condition c08 (full sentence). In order to ensure a smooth transition from one clip to another, the two clips were digitally overlaid for 0.75 s (see supplementary materials for examples). The resulting streams were judged to be easily interpretable by native signers.

The same signs used in condition c08 were also recombined into smaller syntactic constituents comprising 4 signs. Two of these 4-sign constituents were then randomly concatenated to construct sequences of 8 signs comprising two unrelated groups of 4, corresponding to condition c04. The same signs were also recombined in order to create the stimuli for condition c02, which consisted of four unrelated syntactic constituents of size 2. For condition c01, finally, single signs from the original sentences were randomly concatenated to create a list of 8 unrelated signs. The stimuli were manually verified and, if necessary, reshuffled to ensure that they did not contain, by chance, constituents of greater size than intended. For instance, in the c01 condition, two consecutive signs never formed a constituent.
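The random concatenation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `build_sequence`, the placeholder sign labels and the fixed seed are hypothetical, and the manual verification for accidental larger constituents is not automated here.

```python
import random

def build_sequence(pool, n_signs=8, seed=None):
    """Randomly concatenate distinct constituents from `pool` into n_signs signs."""
    rng = random.Random(seed)
    size = len(pool[0])
    if any(len(c) != size for c in pool) or n_signs % size:
        raise ValueError("constituents must share one size that divides n_signs")
    # Draw without replacement so no constituent appears twice in a sequence.
    chosen = rng.sample(pool, n_signs // size)
    return [sign for constituent in chosen for sign in constituent]

# Hypothetical placeholder labels standing in for the clips of individual signs.
pool_c04 = [tuple(f"s{i}_{j}" for j in range(4)) for i in range(6)]
sequence = build_sequence(pool_c04, seed=0)
print(sequence)
```

The same function would produce c02-like or c01-like sequences when given pools of 2-sign constituents or single signs; each output would still need the manual check described in the text.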

In order to check the absence of any low-level difference between the conditions, we conducted a small experiment with 10 non-signers. We presented them with the 25 stimuli of type “sentence” (c08) and the 25 stimuli “list of signs” (c01) in random order and asked them to classify them as sentences versus lists. The average performance was 56% correct (95% C.I. = [49.8, 62.2]). A Student t-test yielded a marginal p-value p = 0.057. We thus conclude that the two classes of stimuli are not easily distinguishable by non-signers.
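The reported p-value can be cross-checked against the reported mean and confidence interval. The sketch below assumes, without confirmation from the text, that the interval is a t-based interval across the 10 non-signers and that the test is a one-sample t-test against the 50% chance level; the helper names are illustrative, and 2.262 is the standard tabulated 97.5th percentile of the t distribution with 9 degrees of freedom.

```python
import math

def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    c = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return c * (1 + x * x / df) ** (-(df + 1) / 2)

def two_sided_p(t, df, steps=2000):
    """Two-sided p-value via Simpson integration of the density on [0, |t|]."""
    t = abs(t)
    h = t / steps
    total = t_pdf(0, df) + t_pdf(t, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_half = total * h / 3  # P(0 < T < |t|)
    return 2 * (0.5 - central_half)

n = 10                 # number of non-signers
df = n - 1
T_CRIT_975 = 2.262     # tabulated critical value for df = 9 (assumption: t-based CI)
mean, ci_low, ci_high = 56.0, 49.8, 62.2

sem = (ci_high - ci_low) / 2 / T_CRIT_975  # standard error implied by the CI
t_stat = (mean - 50.0) / sem               # distance from chance in SEM units
p_value = two_sided_p(t_stat, df)
print(round(t_stat, 2), round(p_value, 3))
```

Under these assumptions the implied p-value falls just above 0.05, in line with the marginal value reported; small differences are expected from rounding of the published interval.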

Following the same procedure as for c08 stimuli, several 8-sign probe sentences were recorded. These probe sentences explicitly requested the participants (in sign language) to press a button and served to ensure that they were paying attention to the stimuli.

Written French

Twenty-five 12-word sentences were first generated to create the condition c12 (full sentence). Single words from these sentences were randomly concatenated to create sequences for the c01 condition (word list). The stimuli were manually verified and, if necessary, reshuffled to ensure that two consecutive words never formed a constituent. Several probe 12-word sentences were also generated. These probe sentences explicitly requested the participants to press a button and served to ensure that they were paying attention to the stimuli.

Procedure

Before entering the scanner, the participants were familiarized with examples of both sign language and written French stimuli, from each condition and with the probe detection task. In the scanner, an anatomical image was first acquired, followed by the functional scans: 4 sessions for the sign language paradigm and 2 sessions for the written French stimuli. Instructions were displayed in sign language at the beginning of each session (not included in the scanning period).

Sign language

Each subject took 4 fMRI sessions containing 22 stimuli each (stimulus duration = 12 s; interstimulus interval = 8 s; see Fig. 1). Each session contained five stimuli belonging to each of the four experimental conditions c01, c02, c04 and c08 and two probe stimuli. The order of conditions was randomized within each session.

Fig. 1.

Time course of stimulus presentation for French sign language stimuli (top) and written French stimuli (bottom).

Written French

The 12-word sequences were presented using rapid serial visual presentation (600 ms per word) in white characters on a black background. Each subject took 2 fMRI sessions containing 27 stimuli each (stimulus duration = 7.2 s; interstimulus interval = 7.8 s; see Fig. 1). Each session contained ten stimuli belonging to each of the two experimental conditions c01 and c12 and seven probe sentences. The order of conditions was randomized.

Imaging and data analysis

The acquisition was performed on a 3 T Siemens Tim Trio system equipped with a 12-channel coil. For each participant, an anatomical image was first taken, using a 3D gradient-echo sequence and voxel size of 1 × 1 × 1.1 mm. Then, functional scans were acquired during 4 sessions of 180 scans each for the sign language paradigm and 2 sessions of 170 scans each for the written French stimuli, using an Echo-Planar sequence sensitized to the BOLD effect (TR = 2.4 s, TE = 30 ms, matrix = 64 × 64; voxel size = 3 × 3 × 3 mm; 40 slices in ascending order).
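As a quick consistency check, the scan counts can be compared with the stimulus timing given in the Procedure section. The sketch below is illustrative only: the function name is hypothetical, and whether an interstimulus interval followed the last stimulus of a session is not stated in the text, so both options are exposed as a parameter.

```python
TR_MS = 2400  # repetition time reported in the text (2.4 s), in milliseconds

def stimulation_ms(n_stimuli, stim_ms, isi_ms, trailing_isi=False):
    """Duration of one session's stimulus train in milliseconds."""
    n_gaps = n_stimuli if trailing_isi else n_stimuli - 1
    return n_stimuli * stim_ms + n_gaps * isi_ms

# Sign language: 22 stimuli of 12 s with 8 s intervals, 180 scans per session.
sign_scan_ms = 180 * TR_MS
sign_train_ms = stimulation_ms(22, 12000, 8000)

# Written French: 27 stimuli of 7.2 s with 7.8 s intervals, 170 scans per session.
french_scan_ms = 170 * TR_MS
french_train_ms = stimulation_ms(27, 7200, 7800, trailing_isi=True)

print(sign_scan_ms, sign_train_ms)      # 432000 432000
print(french_scan_ms, french_train_ms)  # 408000 405000
```

With these assumptions, the sign language figures match exactly when no interval follows the final stimulus, and the written French sessions fit within the scanned period even with a trailing interval.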

Data processing was performed with SPM8 (Wellcome Department of Cognitive Neurology, software available at http://www.fil.ion.ucl.ac.uk/spm/). The anatomical scan was spatially normalized to the avg152 T1-weighted brain template of the Montreal Neurological Institute using the default parameters (including nonlinear transformations and trilinear interpolation). Functional volumes were corrected for slice timing differences (first slice as reference), realigned to correct for motion (registered to the mean using 2nd degree B-Splines), coregistered to the anatomy (using Normalized Mutual Information), spatially normalized using the parameters obtained from the normalization of the anatomy, and smoothed with an isotropic Gaussian kernel (FWHM = 5 mm).

For the sign language paradigm, experimental effects at each voxel were estimated using a multi-session design matrix modeling the 5 conditions (c01, c02, c04, c08, probes) and including the 6 movement parameters computed at the realignment stage. Each stimulus was modeled as an epoch lasting 12 s, convolved by the standard SPM hemodynamic response function. Similarly, for the written French paradigm, experimental effects at each voxel were estimated using a multi-session design matrix modeling the 3 conditions (c01, c12, probes) and including the 6 movement parameters computed at the realignment stage. Each stimulus was modeled as an epoch lasting 7.2 s, convolved by the standard SPM hemodynamic response function.
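To make the epoch model concrete, the following Python sketch builds one regressor by convolving 12 s boxcar epochs with a double-gamma response function. It is a simplified illustration, not a reproduction of the SPM implementation: the gamma shape parameters (6 and 16) and the 1/6 undershoot ratio are commonly quoted values taken here as assumptions, and the function names, the 0.1 s time grid and the example onsets are hypothetical.

```python
import math

TR = 2.4  # seconds, as reported in the text

def gamma_pdf(t, shape):
    """Gamma density with unit scale; zero for non-positive times."""
    if t <= 0:
        return 0.0
    return t ** (shape - 1) * math.exp(-t) / math.gamma(shape)

def double_gamma_hrf(t):
    """Double-gamma response: positive lobe minus a scaled undershoot (assumed parameters)."""
    return gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0

def epoch_regressor(onsets, duration, n_scans, dt=0.1):
    """Boxcar epochs convolved with the response function, sampled once per scan."""
    n_fine = int(round(n_scans * TR / dt))
    boxcar = [0.0] * n_fine
    for onset in onsets:
        start = int(round(onset / dt))
        stop = int(round((onset + duration) / dt))
        for i in range(start, min(stop, n_fine)):
            boxcar[i] = 1.0
    # Discretized kernel covering 32 s of the response.
    kernel = [double_gamma_hrf(k * dt) * dt for k in range(int(32 / dt))]
    step = int(round(TR / dt))
    regressor = []
    for scan in range(n_scans):
        i = scan * step
        n_terms = min(i + 1, len(kernel))
        regressor.append(sum(kernel[k] * boxcar[i - k] for k in range(n_terms)))
    return regressor

# Hypothetical onsets spaced 20 s apart (12 s stimulus + 8 s interval).
reg = epoch_regressor(onsets=[0.0, 20.0, 40.0], duration=12.0, n_scans=30)
print([round(v, 2) for v in reg[:10]])
```

In a full design matrix, one such column per condition would sit alongside the six movement parameters; the written French model would use the same construction with a 7.2 s duration.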

Contrasts averaging the regression coefficients associated with each condition were computed and smoothed with an 8 × 8 × 8 mm Gaussian kernel. These estimates of the individual effects were entered in a second-level one-way analysis of variance model with one regressor per experimental condition, as well as one regressor per subject.

To search for areas where activation increased with degree of structure, we used a linear contrast with coefficients normalized so that an increase of one unit from the less structured to the more structured condition would yield an effect size of 1. For written French, the contrast is simply (−1, 1) applied to the coefficients for lists and sentences respectively. For sign language, there are four conditions: c01, c02, c04 and c08. A linear increase of 1 between c01 and c08 implies an increase of 1/3 for c02 and 2/3 for c04; that is, the vector of activations would be (0, 1/3, 2/3, 1), plus a constant. To find the normalizing constant α for the linear contrast (−1, −1/3, 1/3, 1), we must solve α × (−1 × 0 − 1/3 × 1/3 + 1/3 × 2/3 + 1 × 1) = 1. The sum in parentheses equals 10/9, so the solution is α = 0.9, yielding the contrast (−0.9, −0.3, 0.3, 0.9).
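The normalization can be verified with exact arithmetic. The short Python sketch below (the function name is illustrative and not taken from the analysis scripts) scales centered linear weights so that a unit increase between the extreme conditions yields an effect size of 1.

```python
from fractions import Fraction

def normalized_linear_contrast(n_conditions):
    """Return (alpha, weights) for a linear contrast scaled so that a unit
    increase from the first to the last condition gives an effect size of 1."""
    # Idealized activation profile: 0, 1/(n-1), ..., 1 (up to a constant).
    profile = [Fraction(i, n_conditions - 1) for i in range(n_conditions)]
    # Centered linear weights, e.g. (-1, -1/3, 1/3, 1) for four conditions.
    raw = [2 * p - 1 for p in profile]
    # alpha solves alpha * sum(raw_i * profile_i) = 1.
    alpha = 1 / sum(w * p for w, p in zip(raw, profile))
    return alpha, [alpha * w for w in raw]

alpha, weights = normalized_linear_contrast(4)
print(float(alpha), [float(w) for w in weights])  # 0.9 [-0.9, -0.3, 0.3, 0.9]
```

Because the weights sum to zero, the additive constant in the activation profile does not affect the result; with two conditions the same function returns (−1, 1), the contrast used for written French.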

Regions of interest (ROI) analyses were also performed on the data. The ROIs we used were the following (see also the Region of Interest analyses section, Fig. 4, and Pallier et al. (2011)): inferior frontal gyrus pars orbitalis (IFGorb), inferior frontal gyrus pars triangularis (IFGtri), inferior frontal gyrus pars opercularis (IFGoper), putamen, temporal pole (TP), anterior superior temporal sulcus (aSTS), posterior superior temporal sulcus (pSTS), temporo-parietal junction (TPJ). As described by Pallier et al. (2011) (supplementary material, section 4), those ROIs were defined as the intersections of spheres of 10 mm radius with the clusters identified in the data by Pallier et al. (2011) by the linear contrasts for constituent-size thresholded at voxel-based p < 0.001. The centers of the regions were defined from their results for the normal prose group for TP, aSTS and TPJ and for the Jabberwocky group for IFGorb, IFGtri, pSTS. We also added a region corresponding to the inferior frontal gyrus pars opercularis (IFGoper), which is often activated in language studies but was not found in Pallier et al. (2011) at the above thresholds. For the center of this ROI, we used the coordinates of the peak found by Goucha and Friederici (2015) (Table 3, contrast JP2-JRO2, coordinates (−51, 8, 15)). Using a similar method as for the other ROIs, this region was defined as the intersection of a sphere of 10 mm radius with the grey matter template furnished with SPM8. For the ROIs in the right hemisphere, we used the symmetrical regions of the ROIs in the left hemisphere.

Fig. 4.

Amplitude of activations across conditions in the regions of interest (error bars represent ± 1 SEM): IFGorb, IFGtri, IFGoper, putamen, TP, aSTS, pSTS, TPJ. Sign language activations are in black, and activations to written French in grey. Three statistical tests are represented on the figure, assessing the effect of coherence, that is, the increase of activity between the extreme conditions (c01 to c08 in sign language; c01 and c12 in written French), and the difference between the two (interaction between coherence and modality). Significance levels: (***) p ≤ 0.001, (**) p ≤ 0.01, and (*) p ≤ 0.05.

Table 3.

Brain regions showing a larger effect of coherence in written French than in sign language, in MNI space. A p = 0.001 voxelwise threshold, uncorrected for multiple comparisons, was applied. An exclusive mask was used to exclude regions showing a decreasing activation as a function of coherence in sign language, at voxelwise p < 0.05. * indicates significant values at pFWE = 0.05.

| Contrast | Anatomical labels (AAL) | Cluster size (mm3) | Peak Z | x | y | z |
|---|---|---|---|---|---|---|
| Interaction French-Sign language | | | | | | |
| | L Frontal Inf Tri | 1458 | 5.19* | −51 | 35 | 4 |
| | L Temporal Mid | 1134 | 4.90* | −54 | −10 | −11 |
| | R Temporal Sup | 1485 | 4.74* | 51 | −10 | −11 |
| | R Temporal Sup | | 3.31 | 63 | −4 | −8 |
| | L Temporal Mid | 324 | 4.34 | −63 | −34 | 1 |
| | L Precentral | 540 | 4.08 | −48 | 2 | 49 |
| | L Frontal Mid | | 3.24 | −36 | 5 | 49 |
| | L Frontal Inf Oper | 594 | 3.90 | −36 | 11 | 31 |

BOLD response amplitudes were computed from the SPM model, which used a standard hemodynamic response function (hrf). The parameter estimates from the hrf model for each condition and each subject were averaged across all voxels in the region of interest, and were entered in a one-way within-subjects ANOVA model with the 6 conditions (c01, c02, c04, c08 for sign language and c01 and c12 for written French). A priori contrasts were computed to detect effects of the size of constituent structures (hereafter called “coherence”) within each language, and the interaction between coherence and language. The significance of these contrasts (uncorrected for multiple comparisons) is displayed on the figures showing the activation patterns in the ROIs (Fig. 4 and Fig. S1).

Results

Whole brain analyses

We first looked at the overall networks involved in processing sign language versus written French, by contrasting the periods of stimulation with the periods of rest. The results are shown in Fig. 2A and B. Broadly similar patterns of activations were obtained for the processing of sign language and written French. In both cases, occipital regions, temporal regions around the superior temporal sulcus (STS), the inferior, middle and superior frontal gyri showed bilateral activations (see the intersection in Fig. 2C). Still, the activation patterns were not strictly identical. Contrasting sign language to written French revealed that sign language elicited stronger activations in occipital and posterior temporal regions bilaterally (Fig. 2D). The effect (Sign language > French) extended to the right superior temporal sulcus. Stronger activations to sign language were also observed in the fusiform gyri, with a larger effect in the right hemisphere, in the thalamus bilaterally, and in the right inferior frontal gyrus.

Fig. 2.

Brain regions activated by sign language (A) and by written French (B) relative to rest; regions activated by sign language and French (intersection, C); regions more activated by sign language stimuli than by written French (D) and regions more activated by written French than by sign language (E). Maps thresholded at T > 3.2, p < 0.001 uncorrected.

The opposite contrast (French > Sign language), shown in Fig. 2E, revealed a bilateral pattern of activations in the inferior parietal lobules, the fusiform gyri, the cingulate gyri and the SMA (supplementary motor area), the postcentral gyri, the insulas, the inferior frontal gyri (Brodmann area 44, pars opercularis), and precentral gyri. Activations in the calcarine and in the fusiform gyrus, at the classical location of the visual word form area (VWFA), were lateralized to the left hemisphere.

Constituent-size effects

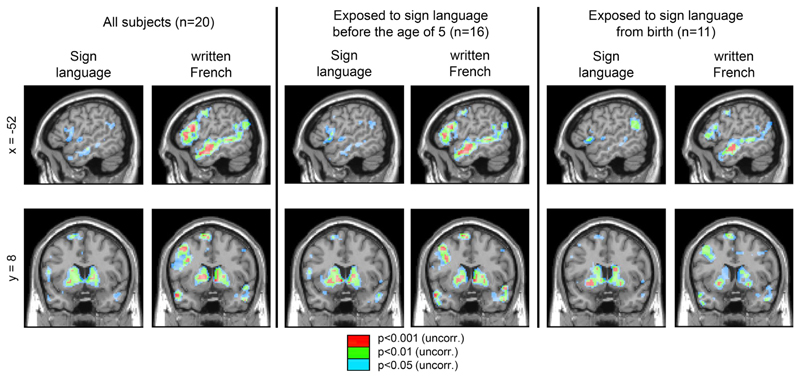

A linear contrast was used to search for regions where activation increased with the size of constituent structures (hereafter called “coherence”). At the p < 0.001 voxel-wise threshold, only the caudate nuclei showed a significant increase with coherence in sign language; patches of activations were detected at lower thresholds in the left inferior frontal and in the left middle temporal lobes (see Fig. 3 and Table 2). Contrasting the two extreme conditions in sign language (c08 > c01) yielded a similar outcome (Table 2).

Fig. 3.

Brain regions with a significant increase in activation with constituent size for sign language and for written French. Maps thresholded at three different values: p < 0.05 (unc.) in blue, p < 0.01 (unc.) in green, and p < 0.001 (unc.) in red.

Table 2.

Regions showing significant effects of coherence in sign language and in written French. Coordinates are in MNI space. A p = 0.001 voxelwise threshold, uncorrected for multiple comparisons, was applied. * indicates significant values at pFWE = 0.05. Anatomical labels were obtained with the Anatomical Automatic Labeling toolbox (Tzourio-Mazoyer et al., 2002).

| Contrast | Anatomical labels (AAL) | Cluster size (mm3) | Peak Z | x | y | z |
|---|---|---|---|---|---|---|
| Sign language linear contrast | | | | | | |
| | R Caudate | 4239 | 4.51 | 9 | 14 | 4 |
| | L Caudate | | 4.12 | −18 | 11 | 13 |
| | | | 3.74 | −6 | 11 | 4 |
| | L Insula | 648 | 4.10 | −24 | 23 | 1 |
| | R Frontal Inf Orb | 270 | 3.91 | 33 | 17 | −23 |
| Sign language c08 > c01 | | | | | | |
| | L Insula | 4995 | 4.34 | −24 | 23 | −2 |
| | L Caudate | | 4.29 | −18 | 11 | 13 |
| | L Putamen | | 4.28 | −21 | 11 | −2 |
| | R Caudate | 2673 | 4.20 | 9 | 11 | 1 |
| | R Putamen | | 3.68 | 15 | 14 | −5 |
| | R Caudate | | 3.47 | 21 | 23 | −2 |
| | L Fusiform | 135 | 4.07 | −33 | −31 | −14 |
| | L SMA | 189 | 3.89 | −6 | 17 | 64 |
| | R Insula | 243 | 3.77 | 30 | 14 | −20 |
| | L Frontal Mid | 351 | 3.65 | −21 | 50 | 16 |
| Written French c12 > c01 | | | | | | |
| | R Temporal Mid | 4671 | 6.19* | 54 | −7 | −17 |
| | L Temporal Mid | 6426 | 5.94* | −54 | −10 | −11 |
| | L Temporal Mid | | 5.05* | −60 | 2 | −23 |
| | L Temporal Mid | | 4.35 | −54 | 8 | −23 |
| | L Frontal Inf Tri | 11043 | 5.92* | −51 | 32 | 4 |
| | L Frontal Inf Tri | | 4.93* | −57 | 17 | 16 |
| | L Precentral | | 5.46* | −48 | 2 | 49 |
| | L Frontal Sup | 4239 | 5.09* | −12 | 56 | 31 |
| | L Frontal Sup Medial | | 4.91* | −6 | 56 | 37 |
| | L Frontal Sup Medial | | 4.48 | −3 | 47 | 40 |
| | L Temporal Mid | 3132 | 5.09* | −63 | −34 | 1 |
| | L Temporal Mid | | 4.77* | −57 | −43 | 1 |
| | L Angular | 2241 | 4.92* | −48 | −70 | 28 |
| | L SMA | 999 | 4.62 | −9 | 8 | 70 |
| | L Rectus (ventromedial PFC) | 864 | 4.56 | 0 | 44 | −17 |
| | L Precuneus | 1107 | 4.48 | −3 | −55 | 16 |
| | R Caudate | 1782 | 4.19 | 9 | 8 | 10 |
| | R Caudate | | 3.98 | 9 | 8 | 1 |
| | L Putamen | 2349 | 4.18 | −21 | 2 | 7 |
| | L Caudate | | 3.94 | −9 | 8 | 7 |
| | L Occipital Inf | 324 | 4.06 | −18 | −91 | −5 |
| | L Amygdala | 378 | 4.00 | −18 | −1 | −11 |

For written French, we compared sentences (c12) to lists of words (c01). The results are displayed in Fig. 3 and reported in Table 2 and Table S1. The largest clusters were detected in the left temporal, inferior and middle frontal regions, as well as in the caudate nucleus and putamen. Homologous regions of the right hemisphere, in the right middle and inferior frontal gyri and the middle temporal gyri, were activated to a smaller extent. The IFG activations encompassed the pars triangularis and pars opercularis, and extended to the middle frontal gyrus, including the precentral region.

In summary, as shown in Fig. 3, coherence modulated activation in the basal ganglia, both in sign language and in written French. The cortical language network located in frontal and temporal areas was also sensitive to coherence, albeit in a more statistically reliable manner in written French than in sign language (see Table 3). Note that all of our subjects acquired sign language as a first language, but a few acquired it during childhood, a factor that was shown to affect behavioral language abilities (Friedmann and Szterman, 2006, 2011; Lieberman et al., 2015) and brain activity (Mayberry et al., 2011). To examine whether the weak cortical activations with sign language could be due to variability in age of acquisition, we looked at the coherence effects in subsets of subjects who acquired sign language before the age of 5 (middle panel of Fig. 3) or from birth (right panel). The results were virtually identical, with the coherence effect in sign language mainly found in the basal ganglia, and sparse activation at p < 0.01 in temporal and inferior frontal cortices.

Region of interest analyses

To complement the whole-brain analyses, and for greater statistical sensitivity, we performed focused analyses within a priori regions of interest derived from Pallier et al. (2011), as described in the Materials and Methods section. Activations in these regions, as a function of experimental conditions, are displayed in Fig. 4 (left hemisphere) and S1 (right hemisphere). For written French, all ROIs responded more strongly to sentences than to lists of words, replicating in deaf subjects the result previously observed in hearing subjects (Pallier et al., 2011). An effect of coherence was also detected in some homologous regions of the right hemisphere, namely aSTS, putamen, TP and pSTS.

The effect of coherence was also significant for sign language in all of the left-hemisphere ROIs, except IFGorb. In the right hemisphere, the effect also reached significance in the right putamen and the right TP. As attested by significant language by condition interactions, the effect of coherence was significantly stronger for written French than for sign language in several of these regions, namely, the left IFGtri, aSTS and pSTS, and the right aSTS.
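As an illustration only, a language by condition interaction of this kind can be tested within one ROI by computing, for each subject, the coherence effect in each language and then comparing the two sets of effects with a paired t-test. The sketch below is not the analysis pipeline used in the study: the function name `paired_interaction_t` and all numerical values are hypothetical, and only the t statistic and its degrees of freedom are returned (the p-value would be read from a t distribution).

```python
import math
import statistics


def paired_interaction_t(effect_written, effect_sign):
    """Paired t statistic for the difference between two within-subject effects.

    Each list holds one coherence effect per subject (e.g. activation for
    sentences minus activation for lists) in a single ROI; both lists must
    follow the same subject order.
    """
    if len(effect_written) != len(effect_sign):
        raise ValueError("both lists must contain one value per subject")
    # Per-subject difference of effects: this is the interaction term.
    diffs = [w - s for w, s in zip(effect_written, effect_sign)]
    n = len(diffs)
    if n < 2:
        raise ValueError("at least two subjects are required")
    sd_diff = statistics.stdev(diffs)  # sample standard deviation (n - 1)
    if sd_diff == 0:
        raise ValueError("differences have zero variance; t is undefined")
    t_stat = statistics.mean(diffs) / (sd_diff / math.sqrt(n))
    return t_stat, n - 1


# Hypothetical per-subject coherence effects (arbitrary units), NOT study data.
written_french = [1.2, 0.9, 1.5, 1.1, 0.8, 1.3]
sign_language = [0.4, 0.5, 0.6, 0.2, 0.5, 0.3]

t_stat, dof = paired_interaction_t(written_french, sign_language)
print(f"interaction t({dof}) = {t_stat:.2f}")
```

A positive t value in this sketch would indicate a larger coherence effect for written French than for sign language in the ROI under consideration.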

Correlations between individual subjects’ characteristics and brain activity

The deaf population necessarily exhibits important variability in factors that could affect language processing, including age of acquisition and reading fluency. We examined the effects of the variables presented in Table 1 on brain activity. More precisely, we searched for correlations between these variables and our two main contrasts within each modality, namely the activation to sentences relative to rest, and the effect of coherence.

In whole-brain analyses, age of acquisition of sign language correlated negatively with the linear contrast of coherence in sign language (p < 0.001 voxelwise uncorrected) in the left frontal region (middle frontal gyrus, orbital part: volume = 2133 mm³; peak = −27, 56, −8, Z = 4.08). The ROI analysis did not reveal any significant effect of age of acquisition of sign language. However, as this variable has a large cluster of participants in the 0–1 range, these results should be taken with caution. Nevertheless, activity correlated with another variable, more broadly distributed across participants, namely age of mastering sign language. On the one hand, the age of mastering sign language correlated positively with the activation for full sentences in sign language in the left temporal pole (TP) and, on the other hand, it correlated negatively with the coherence effect in sign language in pars orbitalis (see Table S2).

In addition, in whole-brain analyses, lexical decision scores yielded significant correlations with activations in the left temporal pole (volume = 7857 mm³; peak = −54, −4, −35, Z = 5.89) during reading relative to rest (at the p < 0.05 FWE corrected threshold). This result is displayed in Fig. 5 (top panel). Unsurprisingly perhaps, the subjects with the best scores in the lexical decision task, i.e. the better readers, showed the strongest activations when exposed to written stimuli. In ROI analyses (Table S2), the main outcome was that the activation in the language network during French reading was modulated positively by the accuracy in lexical decision, probably reflecting the general understanding of the sentences by the subjects (Fig. 5, bottom).
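For readers who wish to reproduce this type of ROI-level analysis on their own data, the relation between a behavioral score and the activation in one ROI can be summarized by a Pearson correlation across subjects. The following is a minimal sketch under stated assumptions, not the code used in the study: the helper names `pearson_r` and `t_from_r` and the example values are hypothetical.

```python
import math


def pearson_r(x, y):
    """Pearson correlation between two equal-length lists (one value per subject)."""
    n = len(x)
    if n != len(y) or n < 3:
        raise ValueError("need two lists of equal length with at least 3 subjects")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for a constant variable")
    return sxy / math.sqrt(sxx * syy)


def t_from_r(r, n):
    """t statistic (n - 2 degrees of freedom) for testing r against zero."""
    if abs(r) >= 1:
        return math.copysign(math.inf, r)
    return r * math.sqrt((n - 2) / (1 - r * r))


# Hypothetical data, NOT taken from the study: lexical decision accuracy (%)
# and mean ROI activation for sentences versus rest (arbitrary units).
lexical_decision = [72.0, 80.0, 85.0, 90.0, 95.0, 97.0]
roi_activation = [0.3, 0.5, 0.4, 0.9, 1.1, 1.0]

r = pearson_r(lexical_decision, roi_activation)
t = t_from_r(r, len(lexical_decision))
print(f"r = {r:.2f}, t({len(lexical_decision) - 2}) = {t:.2f}")
```

With small samples, a few extreme subjects can dominate such a correlation, so inspecting the scatter plot of individual subjects remains advisable.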

Fig. 5.

Correlation of activations in French sentences and individual lexical decision performance in several ROIs (IFGorb, IFGtri, IFGoper, putamen, TP, aSTS, pSTS, TPJ). Each dot represents one subject. Significance levels: (***) p ≤ 0.001, (**) p ≤ 0.01, and (*) p ≤ 0.05. Top figure: Brain regions showing a significant correlation between French sentences activation (c12) and lexical decision scores. Maps thresholded at T > 3.6, p < 0.001 uncorrected.

Discussion

In order to evaluate the universality of linguistic networks, we scanned deaf participants who acquired sign language as a first language and written French as a second language, while they processed stimuli that varied from random lists of words or signs to full-fledged sentences in both languages. The manipulation of constituent size during reading yielded the same result as Pallier et al. (2011), i.e., robust activations all along the left superior temporal sulcus, the inferior frontal gyrus, and the left basal ganglia. In sign language, however, the manipulation of constituent size only robustly modulated the activation in the basal ganglia, while its effect was still present but with a significantly smaller amplitude in cortical language areas. The region-of-interest analyses confirmed a weaker effect of constituent size in cortical regions in sign-language processing than in reading.

Our sign-language stimuli were clearly successful in manipulating syntactic and semantic coherence, as attested by robust and essentially monotonic effects in the basal ganglia. It is therefore not entirely clear why their impact on cortical activation was so reduced. It should be remembered, however, that in order to equalize all conditions, sign-language sentences were artificially constructed: signs were first recorded in isolation, with hands always returning to an intermediate resting position, and then they were strung together to form well-matched lists, phrases or full-length sentences. In such an artificial context, the loss of continuity, of prosodic cues, and of the facial cues which typically provide a global prosodic context for sign language (e.g. specifying whether the sentence is an affirmation or a question) could all explain the reduced amount of cortical activity. It is important to note that studies that have used a more natural contrast for sign language, such as forward versus backward-layered movies of sentences, have observed highly significant activations in cortical language areas (Newman et al., 2010a, 2010b; 2015).

A study that addressed an issue similar to the one investigated here, albeit with a different experimental paradigm, is that of Inubushi and Sakai (2013). The authors presented deaf participants with sign language stimuli forming a dialog. The participants were asked to spot lexical, syntactic or contextual errors in sentences. The exact same sentences were reused for the different tasks and the participants were instructed at the start of each block which level they would have to attend to. The baseline consisted of blocks in which sentences were presented backwards and the task was to detect a stimulus repetition. The authors found that activity in frontal language areas gradually increased and expanded ventrally with higher linguistic levels. Paying attention to the word level yielded activations in the premotor cortex and the SMA, which extended to pars opercularis and triangularis when detecting syntactic errors, and then to pars orbitalis when detecting contextual anomalies. In addition, activations were detected in the STS and the basal ganglia for the sentence level and discourse level tasks but not for the word level task. One important difference between Inubushi and Sakai’s study and the current one is that they used constant stimuli and explicitly required participants to perform tasks at different linguistic levels, while in our paradigm, the participants just watched or read sequences varying in degree of coherence.

Our results point to the basal ganglia as a key region for the constituent structure of language, whose activation is identical regardless of the modality of language presentation (written or sign). The basal ganglia have been traditionally associated with motor sequence learning, notably chunk formation (Graybiel, 1995), but also with higher levels of cognition, such as language processing (Ullman, 2001; Lehéricy et al., 2005). For example, the putamen and caudate nuclei have been suggested to be implicated in morpho-syntactic processing by Teichmann (2005), who observed that patients with Huntington's disease and damage to the striatum were impaired in their ability to conjugate verbs using inflections (but see Longworth (2005)). In healthy subjects, increased activations in the striatum were also reported when subjects had to pay attention to syntax (Moro et al., 2001) or when constituent size increased (Pallier et al., 2011).

In two studies of sign language comprehension, Newman and collaborators also found converging evidence for an implication of the basal ganglia. Newman et al. (2010a) reported increasing activation in the basal ganglia when the sentences contained signs with inflectional morphology, while Newman et al. (2010b) observed stronger activation in the basal ganglia when deaf participants had to rely on grammatical information to comprehend sentences lacking in prosodic cues. In addition, as indicated above, Inubushi and Sakai observed activations in the STS and the basal ganglia for the sentence level and discourse level tasks but not for the word level task.

More speculatively, studies of the FOXP2 gene also provide evidence linking the basal ganglia and language. Alterations of this gene have been famously associated with language deficits, although primarily at the articulatory level (see Marcus and Fisher (2003) and Vargha-Khadem et al. (2005) for reviews). Electrophysiological research in mice demonstrated that FOXP2 specifically modulates the anatomical and functional organization of cortico-striatal circuits (Enard et al., 2009; Reimers-Kipping et al., 2011; Chen et al., 2016). Mutant mice with the human version of FOXP2 exhibited enhanced long-term synaptic plasticity and learned stimulus-response associations faster than normal mice (Schreiweis et al., 2014). Remarkably, the ultrasonic vocalizations of these mice were also affected (Enard et al., 2009). Overall, basal ganglia circuits appear to be involved, in all mammals, in the efficient learning of routinized procedures via a mechanism that identifies reproducible chunks within repeated sequences (Smith and Graybiel, 2013), in close interaction with prefrontal cortex (Fujii and Graybiel, 2003). In the human brain, this mechanism might have extended to the representation of the cognitively more complex tree-structures underlying sentences (Dehaene et al., 2015), thus providing a tentative explanation for why this region appears so prominently in the present work as a shared substrate for the constituent structure of written and sign languages.

Beyond the identification of regions implicated in constituent structure building, the design of our study allowed us to compare the regions involved in sign language processing versus reading. We observed that the pSTS and TPJ regions, bilaterally, responded more strongly, relative to a rest baseline, to sign language than to written French (Fig. 4 and Fig. S1). These regions are known to be involved in the perception of biological motion (Grossman et al., 2000) and also in language comprehension both in reading (Pallier et al., 2011) and in sign language (Newman et al., 2015). Both factors may have contributed to their greater recruitment during sign language processing. Some researchers have proposed that there may be common processes shared by biological motion and language perception, notably the integration of information over time and space (Redcay, 2008; Bachrach et al., 2016). If activations in the pSTS and TPJ reflect the integration of words into a larger context, the fact that these regions respond more strongly to sign language in all conditions of our study suggests that integration occurs in sign language even at the level of a single sign. This would be consistent with the fact that information in sign language is perceived in parallel compared to oral or written language, where the constituents are produced (and perceived) in a more linear (serial) way (Sallandre, 2007). Another possibility is that the perceivers try harder to find coherence between the stimuli in a sequence of signs than in a sequence of written words.

It must be noted that the comparison between sign language processing and reading should be interpreted with caution. Although both languages rely on the visual modality, they differ along many dimensions. For example, sign language sentences typically exhibit a high degree of iconicity (Cuxac and Sallandre, 2007; Sallandre and Cuxac, 2002). This issue has been investigated by Courtin et al. (2010) in hearing native signers of French Sign Language. As explained in the Materials section, when creating the sign language stimuli for our experiment, we did our best to avoid highly iconic structures, but one could still argue that our sign language stimuli were more iconic than written sentences. It would be worthwhile to pursue the work of Courtin et al. by explicitly manipulating iconicity within the sign language stimuli in deaf participants.

Turning now to inter-individual differences, we observed a negative correlation between age of acquisition (mastering) of sign language and activation to coherence in sign language in pars orbitalis. This is consistent with the study of Mayberry et al. (2011) who reported a negative correlation between age of acquisition and responses to sign language in anterior language regions. Thus, our results add to the evidence that frontal regions become less involved when sign language is mastered later in life, and concur with behavioral findings suggesting that acquisition of the first language must occur before the age of ~18 months for full behavioral mastery (Friedmann and Szterman, 2006, 2011; Lieberman et al., 2015).

Another observation is that reading performance, as attested by lexical decision scores, correlated with activation during sentence reading in the left temporal pole. This result can be likened to that of Emmorey et al. (2016), who observed a positive correlation in the left anterior temporal lobe between neural activity and task accuracy in a semantic decision task (consisting of deciding whether a word denoted a concrete concept). These authors proposed that this activation reflects the “depth of semantic processing” in deaf readers. Both their data and ours fit with the conception that the anterior temporal lobe contains amodal semantic representations and forms part of the “deep” reading route (Hodges et al., 1992; Wilson et al., 2008; Mion et al., 2010; Bouhali et al., 2014; Collins et al., 2016).

The same correlation analysis detected two additional regions, namely the IFG pars opercularis in the frontal lobe and the supramarginal gyri in the parietal lobe (correlations were significant in both hemispheres, but were more robust on the left side). In young hearing participants, Monzalvo López (2011) and Monzalvo et al. (2012) reported correlations between a measure of vocabulary scores (DEN 48) and the activations to written words in pars opercularis and in the supramarginal gyrus.

It is important to underline that the lexical decision score that we used mixes vocabulary knowledge and reading abilities. It would be interesting in future studies to disentangle the effects of these variables using tests estimating vocabulary size (e.g. picture naming) and reading speed (e.g. number of words per minute).

With respect to written French, it is interesting to compare the results obtained here in deaf participants with those of hearing French participants (Pallier et al., 2011). Note that in the present case, written French was the second language of the deaf participants, while it was the first language of the hearing participants. Nevertheless, the regions modulated by constituent size in written French were remarkably similar in the two groups. This replicability is all the more striking given that differences in global reading networks were previously reported in other brain-imaging studies of deaf and hearing subjects. For example, Hirshorn et al. (2014) contrasted sentences to false font strings in three populations: hearing, oral deaf participants and deaf native signers. They found that the bilateral superior temporal cortices, including the left primary auditory cortex, showed greater recruitment for deaf individuals, signers or oral, than for hearing participants, and that the left fusiform gyrus activated more for hearing and oral deaf participants than for deaf native signers. By contrast, our study suggests that the network involved in the combinatorial process of constituent building during reading is independent of deafness. This result is remarkable inasmuch as deaf people learn to read in a much less standardized way than hearing people, due to important differences in education among the deaf. It is probably relevant that our participants, on average, judged that they were relatively good readers (see Table 1). A minimal level of reading fluency is probably necessary to activate the syntactic-coherence network when reading written French sentences.

In sum, two main conclusions can be drawn from the present study. First, it stresses, within the language network, the important role of the basal ganglia in the representation of the constituent structure of language, regardless of input modality. Secondly, it highlights the similarity between the reading networks in the hearing and the deaf. In accordance with previous proposals (Mazoyer et al., 1993; Musso et al., 2003; Marslen-Wilson and Tyler, 2007; Pallier et al., 2011; Friederici, 2012; Vagharchakian et al., 2012; Shetreet and Friedmann, 2014; Fedorenko et al., 2016; Nelson et al., 2017), it confirms that the language network, comprising the left superior temporal sulcus, inferior frontal gyrus, and basal ganglia, is systematically involved in combinatorial language operations. Note that, contrary to our previous study (Pallier et al., 2011), the present design did not attempt to disentangle effects of semantic and syntactic combinatorial processes: for practical reasons, including limits on scanning time, we could not include Jabberwocky stimuli with pseudo signs. It would nevertheless be interesting to run a new experiment with such stimuli, and see if a focus on syntactic processing would restrict the responses to a subset of cortical areas (IFG and pSTS) previously observed by Pallier et al. (2011). It would also be interesting to run a similar study in bilingual hearing signers in order to see if the early acquisition of oral language has an impact on the syntactic processing of sign language. Lastly, it would be highly desirable to study the impact of the amount of education of deaf children (either bilingual sign language and oral + written French, or “monolingual” oral + written French) on the language comprehension network. Nevertheless, in the present state of knowledge, it appears that the absence of hearing experience does not prevent the deployment of a universal cortical and subcortical network for language processing.

Supplementary Material

Supplementary data related to this article can be found at https://doi.org/10.1016/j.neuroimage.2017.11.040.

Acknowledgements

We are grateful to Carlo Geraci and Mirko Santoro who helped us design the experiment, and to the doctors, nurses, technicians and colleagues at NeuroSpin who helped us in data acquisition. We kindly thank all the deaf participants. This work was supported by INSERM, CEA, Collège de France, the Bettencourt-Schueller Foundation, an ERC “neurosyntax” grant to S.D., and the ANR program Blanc 2010 (project “construct”, 140301). It was performed on a platform of the France Life Imaging network partly funded by grant ANR-11-INBS-0006.

References

- Bachrach A, Jola C, Pallier C. Neuronal bases of structural coherence in contemporary dance observation. NeuroImage. 2016;124:464–472. doi: 10.1016/j.neuroimage.2015.08.072. [DOI] [PubMed] [Google Scholar]

- Börstell C, Sandler W, Aronoff M. Sign Language Linguistics. Oxford Bibliographies online; 2015. [Google Scholar]

- Bouhali F, Thiebaut de Schotten M, Pinel P, Poupon C, Mangin J-F, Dehaene S, Cohen L. Anatomical connections of the visual word form area. J Neurosci. 2014;34:15402–15414. doi: 10.1523/JNEUROSCI.4918-13.2014. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Brentari D, editor. Cambridge Language Surveys. Cambridge University Press; Cambridge, UK and New York: 2010. Sign Languages. [Google Scholar]

- Campbell R, MacSweeney M, Waters D. Sign language and the brain: a review. J Deaf Stud Deaf Educ. 2007;13:3–20. doi: 10.1093/deafed/enm035. [DOI] [PubMed] [Google Scholar]

- Cheek A, Cormier K, Rathmann C, Repp A, Meier R. Motoric constraints link manual babbling and early signs. Infant Behav Dev. 1998;21:340. [Google Scholar]

- Chen Y-C, Kuo H-Y, Bornschein U, Takahashi H, Chen S-Y, Lu K-M, Yang H-Y, Chen G-M, Lin J-R, Lee Y-H, Chou Y-C, et al. Foxp2 controls synaptic wiring of corticostriatal circuits and vocal communication by opposing Mef2c. Nat Neurosci. 2016;19:1513–1522. doi: 10.1038/nn.4380. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Collins JA, Montal V, Hochberg D, Quimby M, Mandelli ML, Makris N, Seeley WW, Gorno-Tempini ML, Dickerson BC. Focal temporal pole atrophy and network degeneration in semantic variant primary progressive aphasia. Brain. 2016 doi: 10.1093/brain/aww313. aww 313. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina DP, Lawyer LA, Cates D. Cross-linguistic differences in the neural representation of human language: evidence from users of signed languages. Front Psychol. 2013a;3 doi: 10.3389/fpsyg.2012.00587. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Corina DP, Lawyer LA, Hauser P, Hirshorn E. Lexical processing in deaf readers: an fMRI investigation of reading proficiency. PLoS ONE. 2013b;8:e54696. doi: 10.1371/journal.pone.0054696. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Cormier K, Mauk C, Repp A. Proceedings of the Child Language Research Forum. 1998. Manual babbling in deaf and hearing infants: a longitudinal study; pp. 55–61. [Google Scholar]

- Courtin C, Hervé P-Y, Petit L, Zago L, Vigneau M, Beaucousin V, Jobard G, Mazoyer B, Mellet E, Tzourio-Mazoyer N. The neural correlates of highly iconic structures and topographic discourse in French Sign Language as observed in six hearing native signers. Brain Lang. 2010;114:180–192. doi: 10.1016/j.bandl.2010.05.003. [DOI] [PubMed] [Google Scholar]

- Cuxac C, Sallandre M-A. Iconicity and arbitrariness in French sign language: highly iconic structures, degenerated iconicity and diagrammatic iconicity. Verbal and signed languages: comparing structures. Constr Methodol. 2007:13–33. [Google Scholar]

- Dehaene S, Meyniel F, Wacongne C, Wang L, Pallier C. The neural representation of sequences: from transition probabilities to algebraic patterns and linguistic trees. Neuron. 2015;88:2–19. doi: 10.1016/j.neuron.2015.09.019. [DOI] [PubMed] [Google Scholar]

- Dehaene-Lambertz G, Spelke ES. The infancy of the human brain. Neuron. 2015;88:93–109. doi: 10.1016/j.neuron.2015.09.026. [DOI] [PubMed] [Google Scholar]

- Emmorey K, Damasio H, McCullough S, Grabowski T, Ponto LLB, Hichwa RD, Bellugi U. Neural systems underlying spatial language in American sign language. NeuroImage. 2002;17:812–824. doi: 10.1006/nimg.2002.1187. [DOI] [PubMed] [Google Scholar]

- Emmorey K, McCullough S, Mehta S, Ponto LL, Grabowski TJ. The biology of linguistic expression impacts neural correlates for spatial language. J Cognitive Neurosci. 2013;25:517–533. doi: 10.1162/jocn_a_00339. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Emmorey K, McCullough S, Weisberg J. The neural underpinnings of reading skill in deaf adults. Brain Lang. 2016;160:11–20. doi: 10.1016/j.bandl.2016.06.007. [DOI] [PubMed] [Google Scholar]

- Enard W, Gehre S, Hammerschmidt K, Hölter SM, Blass T, Somel M, Brückner MK, Schreiweis C, Winter C, Sohr R, Becker L, et al. A humanized version of Foxp2 affects cortico-basal ganglia circuits in mice. Cell. 2009;137:961–971. doi: 10.1016/j.cell.2009.03.041. [DOI] [PubMed] [Google Scholar]

- Fedorenko E, Scott TL, Brunner P, Coon WG, Pritchett B, Schalk G, Kanwisher N. Neural correlate of the construction of sentence meaning. Proc Natl Acad Sci. 2016;113:E6256–E6262. doi: 10.1073/pnas.1612132113. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Friederici AD. The cortical language circuit: from auditory perception to sentence comprehension. Trends Cognitive Sci. 2012;16:262–268. doi: 10.1016/j.tics.2012.04.001. [DOI] [PubMed] [Google Scholar]

- Friedmann N, Szterman R. Syntactic movement in orally trained children with hearing impairment. J Deaf Stud Deaf Educ. 2006;11:56–75. doi: 10.1093/deafed/enj002. [DOI] [PubMed] [Google Scholar]

- Friedmann N, Szterman R. The comprehension and production of Wh-questions in deaf and hard-of-hearing children. J Deaf Stud Deaf Educ. 2011;16:212–235. doi: 10.1093/deafed/enq052. [DOI] [PubMed] [Google Scholar]

- Fujii N, Graybiel AM. Representation of action sequence boundaries by macaque prefrontal cortical neurons. Science. 2003;301:1246–1249. doi: 10.1126/science.1086872. [DOI] [PubMed] [Google Scholar]

- Goucha T, Friederici AD. The language skeleton after dissecting meaning: a functional segregation within Broca's area. NeuroImage. 2015;114:294–302. doi: 10.1016/j.neuroimage.2015.04.011. [DOI] [PubMed] [Google Scholar]

- Graybiel AM. Building action repertoires: memory and learning functions of the basal ganglia. Curr Opin Neurobiol. 1995;5:733–741. doi: 10.1016/0959-4388(95)80100-6. [DOI] [PubMed] [Google Scholar]

- Grossman E, Donnelly M, Price R, Pickens D, Morgan V, Neighbor G, Blake R. Brain areas involved in perception of biological motion. J Cognitive Neurosci. 2000;12:711–720. doi: 10.1162/089892900562417. [DOI] [PubMed] [Google Scholar]

- Hickok G, Bellugi U. Neural organization of language: clues from sign language aphasia. In: Guendouzi J, Loncke F, Williams M, editors. The Handbook of Psycholinguistic & Cognitive Processes: Perspectives in Communication Disorders. Taylor & Francis; 2010. pp. 685–706. [Google Scholar]

- Hickok G, Bellugi U, Klima ES. Sign language in the brain. Sci Am. 2002;12:46–53. doi: 10.1038/scientificamerican0601-58. [DOI] [PubMed] [Google Scholar]

- Hirshorn EA, Dye MWG, Hauser PC, Supalla TR, Bavelier D. Neural networks mediating sentence reading in the deaf. Front Hum Neurosci. 2014;8 doi: 10.3389/fnhum.2014.00394. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Hodges JR, Patterson K, Oxbury S, Funnell E. Semantic dementia. Brain. 1992;115:1783–1806. doi: 10.1093/brain/115.6.1783. [DOI] [PubMed] [Google Scholar]

- Inubushi T, Sakai KL. Functional and anatomical correlates of word-, sentence-, and discourse-level integration in sign language. Front Hum Neurosci. 2013;7 doi: 10.3389/fnhum.2013.00681. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Klima ES, Bellugi U, editors. The Signs of Language. Harvard University Press; Cambridge, MA: 1979. [Google Scholar]

- Lehéricy S, Benali H, Van de Moortele P-F, Pélégrini-Issac M, Waechter T, Ugurbil K, Doyon J. Distinct basal ganglia territories are engaged in early and advanced motor sequence learning. Proc Natl Acad Sci U S A. 2005;102:12566–12571. doi: 10.1073/pnas.0502762102. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Leroy F, Cai Q, Bogart SL, Dubois J, Coulon O, Monzalvo K, Fischer C, Glasel H, Van der Haegen L, Bénézit A, Lin C-P, et al. New human-specific brain landmark: the depth asymmetry of superior temporal sulcus. Proc Natl Acad Sci. 2015;112:1208–1213. doi: 10.1073/pnas.1412389112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Lieberman AM, Borovsky A, Hatrak M, Mayberry RI. Real-time processing of ASL signs: delayed first language acquisition affects organization of the mental lexicon. J Exp Psychol Learn Mem Cognition. 2015;41:1130–1139. doi: 10.1037/xlm0000088. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Longworth CE. The basal ganglia and rule-governed language use: evidence from vascular and degenerative conditions. Brain. 2005;128:584–596. doi: 10.1093/brain/awh387. [DOI] [PubMed] [Google Scholar]

- MacSweeney M, Capek CM, Campbell R, Woll B. The signing brain: the neurobiology of sign language. Trends Cognitive Sci. 2008;12:432–440. doi: 10.1016/j.tics.2008.07.010. [DOI] [PubMed] [Google Scholar]

- Makuuchi M, Bahlmann J, Anwander A, Friederici AD. Segregating the core computational faculty of human language from working memory. Proc Natl Acad Sci. 2009;106:8362–8367. doi: 10.1073/pnas.0810928106. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Marcus GF, Fisher SE. FOXP2 in focus: what can genes tell us about speech and language? Trends Cognitive Sci. 2003;7:257–262. doi: 10.1016/S1364-6613(03)00104-9. [DOI] [PubMed] [Google Scholar]

- Marslen-Wilson WD, Tyler LK. Morphology, language and the brain: the decompositional substrate for language comprehension. Philosophical Trans R Soc B Biol Sci. 2007;362:823–836. doi: 10.1098/rstb.2007.2091. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Mayberry RI, Chen J-K, Witcher P, Klein D. Age of acquisition effects on the functional organization of language in the adult brain. Brain Lang. 2011;119:16–29. doi: 10.1016/j.bandl.2011.05.007. [DOI] [PubMed] [Google Scholar]

- Mazoyer BM, Dehaene S, Tzourio N, Frak V, Murayama N, Cohen L, Lévrier O, Salamon G, Syrota A, Mehler J. The cortical representation of speech. J Cognitive Neurosci. 1993;5:467–479. doi: 10.1162/jocn.1993.5.4.467. [DOI] [PubMed] [Google Scholar]

- Mion M, Patterson K, Acosta-Cabronero J, Pengas G, Izquierdo-Garcia D, Hong YT, Fryer TD, Williams GB, Hodges JR, Nestor PJ. What the left and right anterior fusiform gyri tell us about semantic memory. Brain. 2010;133:3256–3268. doi: 10.1093/brain/awq272. [DOI] [PubMed] [Google Scholar]

- Monzalvo K, Fluss J, Billard C, Dehaene S, Dehaene-Lambertz G. Cortical networks for vision and language in dyslexic and normal children of variable socio-economic status. NeuroImage. 2012;61:258–274. doi: 10.1016/j.neuroimage.2012.02.035. [DOI] [PubMed] [Google Scholar]

- Monzalvo López AK. Etude chez l’enfant normal et dyslexique de l’impact sur les réseaux corticaux visuel et linguistique d’une activité culturelle: la lecture. Université Pierre et Marie Curie; Paris 6.: 2011. Ecole Doctorale Cerveau, Cognition et Comportement (3C) (Spécialité: Sciences Cognitives) [Google Scholar]

- Moro A. The Boundaries of Babel: the Brain and the Enigma of Impossible Languages. MIT Press; 2008. [Google Scholar]

- Moro A, Tettamanti M, Perani D, Donati C, Cappa SF, Fazio F. Syntax and the brain: disentangling grammar by selective anomalies. NeuroImage. 2001;13:110–118. doi: 10.1006/nimg.2000.0668. [DOI] [PubMed] [Google Scholar]

- Musso M, Moro A, Glauche V, Rijntjes M, Reichenbach J, Büchel C, Weiller C. Broca's area and the language instinct. Nat Neurosci. 2003;6:774–781. doi: 10.1038/nn1077. [DOI] [PubMed] [Google Scholar]

- Nelson MJ, El Karoui I, Giber K, Yang X, Cohen L, Koopman H, Cash SS, Naccache L, Hale JT, Pallier C, Dehaene S. Neurophysiological dynamics of phrase-structure building during sentence processing. Proc Natl Acad Sci. 2017 doi: 10.1073/pnas.1701590114. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Newman AJ, Bavelier D, Corina D, Jezzard P, Neville HJ. A critical period for right hemisphere recruitment in American Sign Language processing. Nat Neurosci. 2002;5:76–80. doi: 10.1038/nn775. [DOI] [PubMed] [Google Scholar]

- Newman AJ, Supalla T, Hauser PC, Newport EL, Bavelier D. Dissociating neural subsystems for grammar by contrasting word order and inflection. Proc Natl Acad Sci. 2010a;107:7539–7544. doi: 10.1073/pnas.1003174107. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Newman AJ, Supalla T, Hauser PC, Newport EL, Bavelier D. Prosodic and narrative processing in American Sign Language: an fMRI study. NeuroImage. 2010b;52:669–676. doi: 10.1016/j.neuroimage.2010.03.055. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Newman AJ, Supalla T, Fernandez N, Newport EL, Bavelier D. Neural systems supporting linguistic structure, linguistic experience, and symbolic communication in sign language and gesture. Proc Natl Acad Sci. 2015;112:11684–11689. doi: 10.1073/pnas.1510527112. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Oldfield RC. The assessment and analysis of handedness: the Edinburgh inventory. Neuropsychologia. 1971;9:97–113. doi: 10.1016/0028-3932(71)90067-4. [DOI] [PubMed] [Google Scholar]

- Pallier C, Devauchelle A-D, Dehaene S. Cortical representation of the constituent structure of sentences. Proc Natl Acad Sci. 2011;108:2522–2527. doi: 10.1073/pnas.1018711108. [DOI] [PMC free article] [PubMed] [Google Scholar]

- Petitto LA, Holowka S, Sergio LE, Levy B, Ostry DJ. Baby hands that move to the rhythm of language: hearing babies acquiring sign languages babble silently on the hands. Cognition. 2004;93:43–73. doi: 10.1016/j.cognition.2003.10.007. [DOI] [PubMed] [Google Scholar]

- Petitto LA, Holowka S, Sergio LE, Ostry D. Language rhythms in baby hand movements. Nature. 2001;413:35–36. doi: 10.1038/35092613.

- Petitto LA, Marentette PF. Babbling in the manual mode: evidence for the ontogeny of language. Science. 1991;251:1493–1496. doi: 10.1126/science.2006424.

- Pfau R, Steinbach M, Woll B, editors. Sign Language: an International Handbook. Walter de Gruyter; Boston: 2012.

- Pickell H, Klima E, Love T, Kritchevsky M, Bellugi U, Hickok G. Sign language aphasia following right hemisphere damage in a left-hander: a case of reversed cerebral dominance in a deaf signer? Neurocase. 2005;11:194–203. doi: 10.1080/13554790590944717.

- Redcay E. The superior temporal sulcus performs a common function for social and speech perception: implications for the emergence of autism. Neurosci Biobehav Rev. 2008;32:123–142. doi: 10.1016/j.neubiorev.2007.06.004.

- Reimers-Kipping S, Hevers W, Pääbo S, Enard W. Humanized Foxp2 specifically affects cortico-basal ganglia circuits. Neuroscience. 2011;175:75–84. doi: 10.1016/j.neuroscience.2010.11.042.

- Rogalsky C, Raphel K, Tomkovicz V, O'Grady L, Damasio H, Bellugi U, Hickok G. Neural basis of action understanding: evidence from sign language aphasia. Aphasiology. 2013;27:1147–1158. doi: 10.1080/02687038.2013.812779.

- Sallandre M-A, Cuxac C. Iconicity in Sign Language: a theoretical and methodological point of view. In: Wachsmuth I, Sowa T, editors. Proceedings of the International Gesture Workshop, GW'2001, LNAI 2298. Springer-Verlag; Berlin, London: 2002. pp. 171–180.

- Sallandre M-A. Simultaneity in French sign language discourse. In: Vermeerbergen M, Leeson L, Crasborn O, editors. Simultaneity in Signed Languages: Form and Function. John Benjamins; Amsterdam/Philadelphia: 2007. pp. 103–125.

- Sandler W. The uniformity and diversity of language: evidence from sign language. Lingua. 2010;120:2727–2732. doi: 10.1016/j.lingua.2010.03.015.

- Sandler W. On the Complementarity of Signed and Spoken Languages. Language Competence Across Populations: Towards a Definition of SLI. 2003:383–409.

- Schreiweis C, Bornschein U, Burguière E, Kerimoglu C, Schreiter S, Dannemann M, Goyal S, Rea E, French CA, Puliyadi R, et al. Humanized Foxp2 accelerates learning by enhancing transitions from declarative to procedural performance. Proc Natl Acad Sci. 2014;111:14253–14258. doi: 10.1073/pnas.1414542111.

- Shetreet E, Friedmann N. The processing of different syntactic structures: fMRI investigation of the linguistic distinction between wh-movement and verb movement. J Neurolinguistics. 2014;27:1–17. doi: 10.1016/j.jneuroling.2013.06.003.

- Smith KS, Graybiel AM. A dual operator view of habitual behavior reflecting cortical and striatal dynamics. Neuron. 2013;79:361–374. doi: 10.1016/j.neuron.2013.05.038.

- Teichmann M. The role of the striatum in rule application: the model of Huntington's disease at early stage. Brain. 2005;128:1155–1167. doi: 10.1093/brain/awh472.

- Tzourio-Mazoyer N, Landeau B, Papathanassiou D, Crivello F, Etard O, Delcroix N, Mazoyer B, Joliot M. Automated anatomical labeling of activations in SPM using a macroscopic anatomical parcellation of the MNI MRI single-subject brain. NeuroImage. 2002;15:273–289. doi: 10.1006/nimg.2001.0978.

- Ullman MT. A neurocognitive perspective on language: the declarative/procedural model. Nat Rev Neurosci. 2001;2:717–726. doi: 10.1038/35094573.

- Vagharchakian L, Dehaene-Lambertz G, Pallier C, Dehaene S. A temporal bottleneck in the language comprehension network. J Neurosci. 2012;32:9089–9102. doi: 10.1523/JNEUROSCI.5685-11.2012.

- Vargha-Khadem F, Gadian DG, Copp A, Mishkin M. FOXP2 and the neuroanatomy of speech and language. Nat Rev Neurosci. 2005;6:131–138. doi: 10.1038/nrn1605.

- Wilson SM, Brambati SM, Henry RG, Handwerker DA, Agosta F, Miller BL, Wilkins DP, Ogar JM, Gorno-Tempini ML. The neural basis of surface dyslexia in semantic dementia. Brain. 2008;132:71–86. doi: 10.1093/brain/awn300.

- Zaccarella E, Meyer L, Makuuchi M, Friederici AD. Building by syntax: the neural basis of minimal linguistic structures. Cereb Cortex. 2015:bhv234. doi: 10.1093/cercor/bhv234.

Associated Data
