Abstract

Objective:

Inattentional blindness (IB) refers to an observer’s failure to notice an unexpected stimulus. The aim of this study was to investigate the effects of priming type (perceptual, conceptual, and no priming) and emotional context (positive, negative, and neutral) on IB using behavioral (IB, non-IB) and eye-tracking measures (latency of first fixation, total fixation time, and total fixation count on the unexpected stimulus).

Methods:

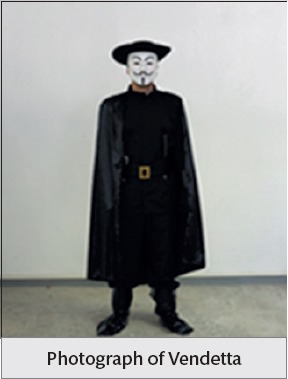

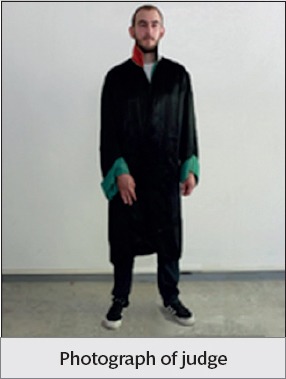

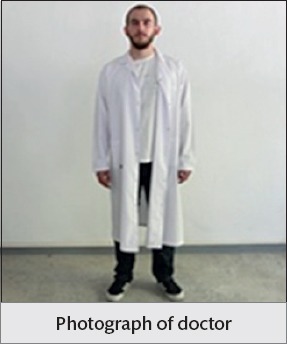

A total of 193 male university students volunteered to take part in the study. Three thematic videos (positive, negative, and neutral) were created to elicit IB. In the first stage, five pictures of the same model in different costumes (Zorro, which served as the unexpected stimulus, Vendetta, a judge, a doctor, and a worker) were shown to participants for priming. In the second stage, a distractor task consisting of 30 simple arithmetic operations was given. In the third stage, one of the thematic IB videos was shown, and participants then answered questions about it. Participants were randomly assigned to nine experimental conditions of a 3 (Priming Type: Perceptual, Conceptual, No Priming) × 3 (Emotional Context: Positive, Negative, Neutral) factorial design. Behavioral and eye-tracking measurements were collected.

Results:

The main and interaction effects of priming type and emotional context on the behavioral measures were not significant. Nor were there significant differences between priming types in the eye-tracking measures. However, emotional context had a significant effect on all eye-tracking measures. In the negative context, participants made fewer fixations on the unexpected stimulus and looked at it for a shorter time than in the positive and neutral contexts. In addition, non-notifiers made fewer fixations on the unexpected stimulus and looked at it for a shorter time than notifiers.

Conclusion:

The phenomenon of “looking without seeing” was demonstrated experimentally once again. Priming and emotional context did not affect the behavioral data, but eye movements were affected by the emotional context. The current findings point to a relation between emotion and attention.

Keywords: Inattentional blindness, priming effect, emotional context, eye movements

INTRODUCTION

People have limited visual perceptual capacity, and the visual field contains more stimuli than people are able to identify and recall (1). When perceptual load is high and consumes perceptual processing capacity, people may miss objects that are incongruent with the perceptual task (2). Inattentional blindness (IB) is the failure to see an unexpected stimulus in the visual field while attention is focused on another object (3, 4). In IB studies, an unexpected stimulus enters the visual field, remains for a while, and then leaves, and participants performing a cognitive task may fail to notice it (5–7).

IB frequencies are affected by various factors, such as the difficulty of the cognitive task, the features of the unexpected stimulus, the similarity between the unexpected stimulus and the task objects, and participant characteristics. When the unexpected stimuli were human faces, IB rates were lower than in studies that used other objects as unexpected stimuli (8, 9). Furthermore, participants notice their own name as an unexpected stimulus more easily than other names (9).

Studies examining the similarity between the unexpected stimulus and the task objects suggest that shared visual properties make an unexpected stimulus more likely to be seen. When task objects are black and distractors are white, a white unexpected stimulus is hard to detect (6, 10); likewise, when the task requires participants to attend to white objects, a black unexpected stimulus produces IB. A series of studies has also shown that, for both simple shapes such as geometrical figures and complex shapes such as human faces, similarity in form and in colour or tone helps participants notice the unexpected stimulus (11). In summary, substantial physical similarity between the unexpected stimulus and the task objects lowers IB rates, whereas a lack of physical similarity raises them.

Even though IB is not a new phenomenon, there is little research on IB in psychiatric patients. Participants with Attention Deficit and Hyperactivity Disorder (ADHD), who performed worse than participants without ADHD on a selective attention task (the MOXO task), noticed the unexpected stimulus in the IB task more frequently (12). Patients with schizophrenia, by contrast, had difficulty noticing unexpected objects, and controls showed a stronger task-related increase in theta power than patients (13).

Early studies of the relation between emotion and IB were conducted by Mack and Rock (9). While participants were performing a task that involved judging which arm of an asymmetrical cross was longer, one of three unexpected stimuli (a happy face, an angry face, or a scrambled face) was shown to them. Participants noticed an unexpected happy face more easily than an angry or a scrambled face. Becker and Leinenger (14) also studied emotion and IB. Before the IB phase, participants wrote about an emotional event (a positive, negative, or neutral event). In the IB task, circles and scrambled faces were used as target and distractor, and the unexpected stimulus was a smiling face on half of the trials and an angry face on the other half. Mood-congruent stimuli were noticed more easily and protected participants from IB.

Priming refers to the facilitated processing of a stimulus on repeated exposure, a phenomenon that does not require conscious awareness. Its negative form, negative priming, appears as slowed processing of a new stimulus because of a previous one (15). Only a few studies have examined the relation between priming and IB, an early example being that of Mack and Rock (9). In a series of experiments, words were used as unexpected stimuli. After the IB procedure, participants completed a word stem completion task, and they tended to complete the stems with the words that had been presented as unexpected stimuli.

Slavich and Zimbardo (16) used a short story about suicide as a prime and, as the unexpected stimulus, a photo showing a woman falling from an upper floor of a building. Participants who had read the story about suicide saw the woman more often than those who had not. The prime thus created a priming effect, and whether participants noticed the unexpected stimulus depended on the prime. Similarly, in the present study the unexpected stimulus itself served as the prime.

Use of the Eye Tracking Method in Inattentional Blindness Studies

Most researchers agree that eye movements reflect visual attention, and eye-tracking measurements have made important contributions to understanding the relation between emotion and attention (17, 18). Most eye-tracking studies of IB have found that the frequency of fixations and gazes on distractors is related to noticing the unexpected stimulus: spending more time on distractors causes IB (19). Koivisto, Hyönä and Revonsuo (20) reported that participants who fixated the centre of the screen missed the unexpected stimuli more often than those who did not. On the other hand, Kuhn and Findlay (21) compared the eye-movement patterns of participants who did and did not notice the unexpected event while watching a magic trick video. There was no significant difference between the two groups’ fixation positions, although noticing the illusion (seeing the lighter fall) made eye movements faster. Memmert (22) reported similar results: there was no significant difference in fixation time on the unexpected stimulus between participants who noticed it and those who did not. Moreover, non-notifiers looked at the unexpected stimulus for a considerable time (on average about one second, or 20% of its total display time).

The aim of this study was to explore the effects of priming type (perceptual, conceptual, or control) and emotional context (positive, negative, or neutral) on IB, using behavioral (whether IB occurs) and eye-tracking measurements (latency of first fixation, total fixation time, and total fixation count on the unexpected stimulus). We hypothesized that IB rates for the videos in this study would be at least 45%, a value based on the IB literature (6, 7, 20, 23), in which IB rates ranged between 42% and 53%. We also expected IB rates to be higher under no priming (control) than under the priming conditions (conceptual and perceptual), because repeated processing of a prime stimulus after first exposure should lower IB rates. Another hypothesis was that IB rates would be highest for the negative video, because a negative background attracts attention (24). Finally, we hypothesized that notifiers would show longer total fixation times and more fixations on the unexpected stimulus than non-notifiers, but that the two groups would not differ in latency of first fixation on the unexpected stimulus.

METHODS

Participants

A total of 201 participants took part, of whom eight (six with colour blindness and two with health problems) were excluded. The final sample consisted of 193 volunteers, all male university students aged 18 to 26 years (X̄=21.12, SD=1.92) with normal or corrected-to-normal vision. Colour blindness and neurological or psychiatric illness were exclusion criteria. All participants were informed about the experiment and asked to sign an informed consent form, and they were randomly assigned to the experimental conditions. The study was conducted in accordance with recognized ethical principles and the code of conduct for psychologists, and with the World Medical Association’s Declaration of Helsinki. Ethics committee approval was obtained from the Hacettepe University Ethics Commission.

Stimuli and Apparatus

Ishihara Test for Colour Blindness: This test was developed by Ishihara in 1917 and is the most widely used test for detecting colour blindness. It is especially sensitive to red-green colour blindness.

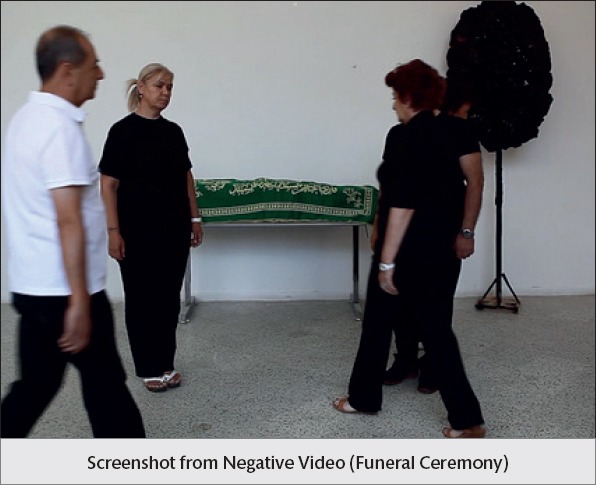

Videos of Inattentional Blindness: Three 30-second videos representing different emotional contexts were created for this research. An engagement ceremony background (a garland and decorations) was used for the positive video, a funeral ceremony background (a black garland and a coffin) for the negative video, and an unfurnished background for the neutral video. All videos were recorded in the same place, with the same volunteer performers and the same sequence of events.

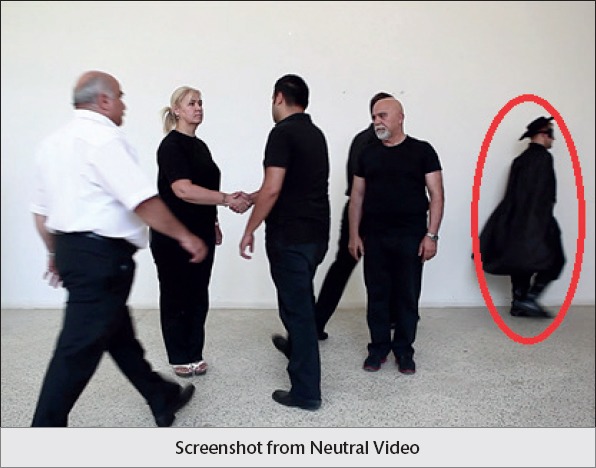

All videos start with two stationary adults in black shirts, standing at the same distance from the centre of the screen. Other performers (half dressed in white and half in black) enter from the left or right, walk towards the stationary person waiting on the opposite side, continue without stopping, and exit from view. Some of the performers shake hands with the stationary person on the side opposite their entrance, and the others do not (the same people shake hands in all videos, and the total number of handshakes is seven). After approximately 10 seconds, a person dressed in a black Zorro costume (the unexpected stimulus) enters from the left side of the screen, walks to the midpoint between the two stationary persons, waits for one second, and then leaves from the right side of the screen (see Appendix A).

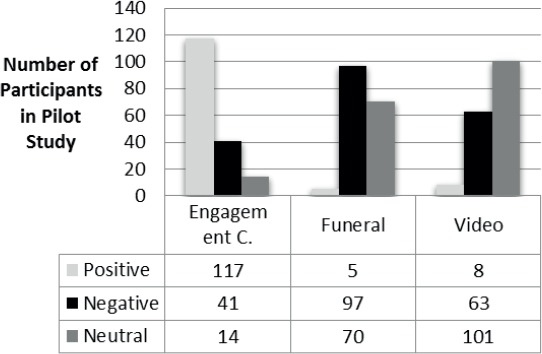

The emotional value of the videos was determined in a pilot study with 172 students who did not take part in the main study (Figure 1).

Figure 1.

Results of the pilot study on the emotional value of the videos.

Photo Set for Priming Task: This photo set was used in the first stage of the experiment. To prevent any bias towards the unexpected stimulus, five photos of the same model dressed in different costumes (Zorro, Vendetta, a judge, a doctor, and a worker) were taken (see Appendix B). Each photo contained a different number of figures. However, only Zorro was used as the prime, and only Zorro appeared in the videos.

Distractor Task: Thirty simple arithmetic operations served as a distractor task. Participants wrote down their answers, which were not included in the analysis. This task lasted approximately 10 minutes.

Eye movements were recorded with a Tobii T120 eye tracker on a 17” TFT monitor with a resolution of 1280×1024 pixels. Tracking was binocular with a sampling rate of 120 Hz, and the maximum recording error of the device was 0.5°. Any period in which gaze remained stationary for 100 ms or longer was defined as a fixation. Tasks were programmed in E-Prime 2.0 Professional (Psychology Software Tools, USA). VirtualDub (free for non-commercial use) was used to extract frames from the videos at 40 ms intervals so that the videos could be analysed frame by frame.
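The fixation criterion above (gaze stationary for at least 100 ms, sampled at 120 Hz) can be illustrated with a dispersion-threshold (I-DT) filter. This is a minimal sketch, not the filter applied by the Tobii software: the text does not name the algorithm, and the dispersion limit `MAX_DISPERSION_PX` is a hypothetical value. At 120 Hz, 100 ms corresponds to 12 consecutive samples.

```python
from dataclasses import dataclass

SAMPLE_RATE_HZ = 120     # Tobii T120 sampling rate (from the text)
MIN_FIXATION_MS = 100    # fixation criterion (from the text)
MAX_DISPERSION_PX = 35   # HYPOTHETICAL dispersion limit; not reported in the study


@dataclass(frozen=True)
class Fixation:
    start_ms: float
    duration_ms: float
    x: float
    y: float


def dispersion(points):
    """Horizontal plus vertical extent of a set of (x, y) gaze points."""
    xs = [p[0] for p in points]
    ys = [p[1] for p in points]
    return (max(xs) - min(xs)) + (max(ys) - min(ys))


def detect_fixations(samples, rate_hz=SAMPLE_RATE_HZ,
                     min_ms=MIN_FIXATION_MS, max_disp=MAX_DISPERSION_PX):
    """Dispersion-threshold (I-DT) fixation detection.

    samples: (x, y) gaze positions in pixels, one per tracker sample.
    Returns fixations lasting at least min_ms.
    """
    sample_ms = 1000.0 / rate_hz
    window = max(1, round(min_ms / sample_ms))  # 12 samples at 120 Hz
    fixations = []
    i, n = 0, len(samples)
    while i + window <= n:
        j = i + window
        if dispersion(samples[i:j]) <= max_disp:
            # Extend the window while gaze stays within the dispersion limit.
            while j < n and dispersion(samples[i:j + 1]) <= max_disp:
                j += 1
            pts = samples[i:j]
            fixations.append(Fixation(
                start_ms=i * sample_ms,
                duration_ms=(j - i) * sample_ms,
                x=sum(p[0] for p in pts) / len(pts),
                y=sum(p[1] for p in pts) / len(pts),
            ))
            i = j  # continue after the detected fixation
        else:
            i += 1  # first sample belongs to a saccade; slide the window on
    return fixations


# Example: 24 stable samples, a 5-sample saccade, then 15 stable samples.
gaze = ([(100, 100)] * 24
        + [(300 + 200 * k, 300 + 200 * k) for k in range(5)]
        + [(1200, 900)] * 15)
for fx in detect_fixations(gaze):
    print(f"{fx.start_ms:.1f} ms  {fx.duration_ms:.1f} ms  ({fx.x:.0f}, {fx.y:.0f})")
```

With these inputs the first 24 samples form one 200 ms fixation, the scattered samples are treated as a saccade, and the final 15 samples form a 125 ms fixation; a stable run shorter than 12 samples would not count as a fixation.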

Procedure

All participants were informed about the experiment, and the Ishihara Test for Colour Blindness was used to identify colour-blind participants. The distance between the screen and participants was set to approximately 60–65 cm, and the eye tracker was calibrated individually for each participant. The visual angle was 30°×27°. Participants were randomly assigned to one of nine experimental conditions of a 3 (Priming Type: Perceptual, Conceptual, No Priming) × 3 (Emotional Context: Positive, Negative, Neutral) factorial design for the behavioral and eye-tracking measurements (Table 1). The experiment consisted of three stages. In the first stage, the photo set was shown to participants as the priming task: in the perceptual priming condition, participants reported the number of characters on the screen; in the conceptual priming condition, they rated the pleasantness of the characters; and in the no priming condition, this task was skipped. In the second stage, the 30 arithmetic operations were given as a distractor task. In the third and final stage, one of the three IB videos was shown, and the researcher recorded participants’ answers to questions about the video. Participants were first asked, in a multiple-choice question, how many handshakes had occurred, and then, in a yes/no question, whether they had seen anything unusual in the video. Participants who answered “yes” were asked to describe the unusual event in an open-ended question.
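The viewing geometry above follows the standard relation θ = 2·arctan(S / 2D) between an object's extent S, the viewing distance D, and the visual angle θ. The sketch below converts in both directions (the function names are illustrative) and shows how large the tracker's 0.5° maximum error is on screen across the 60–65 cm viewing range.

```python
import math


def visual_angle_deg(size_cm: float, distance_cm: float) -> float:
    """Visual angle (degrees) subtended by an object of size_cm at distance_cm."""
    return math.degrees(2 * math.atan(size_cm / (2 * distance_cm)))


def size_for_angle_cm(angle_deg: float, distance_cm: float) -> float:
    """Inverse relation: on-screen extent (cm) that subtends angle_deg at distance_cm."""
    return 2 * distance_cm * math.tan(math.radians(angle_deg) / 2)


# On-screen extent of the tracker's 0.5 degree maximum error at both ends of the range.
for d in (60, 65):
    print(f"{d} cm: 0.5 deg ~ {size_for_angle_cm(0.5, d):.2f} cm on screen")
```

At 60 cm the 0.5° error corresponds to about 0.52 cm on screen and at 65 cm to about 0.57 cm, which is worth keeping in mind when drawing areas of interest around a moving figure.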

Table 1.

Experimental design and number of participants in different experimental conditions

| Priming Type (N=193) | Positive Context | Negative Context | Neutral Context |
|---|---|---|---|
| Perceptual Priming | n=20 (7/13) | n=26 (10/16) | n=17 (9/8) |
| Conceptual Priming | n=24 (15/9) | n=29 (10/19) | n=19 (12/7) |
| No Priming | n=18 (3/15) | n=24 (13/11) | n=16 (6/10) |

Values in parentheses are frequencies of notifiers/non-notifiers.

Statistical Analysis

A 3 (Priming Type: Perceptual, Conceptual, No Priming) × 3 (Emotional Context: Positive, Negative, Neutral) ANOVA was used, with both independent variables manipulated between groups. IB frequencies were compared with Fisher’s exact test, and notifiers and non-notifiers were compared with independent-samples t-tests. IBM SPSS 20.0 was used for statistical analysis. The behavioral and eye-tracking data sets were analysed with different numbers of participants (193 and 135, respectively). The eye-tracking data of 58 participants were excluded from analysis because their data quality was below the 70% threshold, indicating inadequate recording quality; their behavioral data were retained. To compensate for this loss, 58 additional participants were tested. In sum, the behavioral analysis was based on 193 participants (135 + 58) and the eye-tracking analysis on 135 participants across the nine experimental conditions.
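The exclusion rule can be expressed as a simple filter. In this sketch the `Participant` record and its fields are hypothetical, and `tracking_quality` is assumed to be the proportion of valid gaze samples in a recording (the text does not define the quality metric). Everyone keeps their behavioral data, whereas only recordings at or above 70% enter the eye-tracking analysis, which in the study comprised 15 participants per cell (9 × 15 = 135).

```python
from collections import Counter
from dataclasses import dataclass

QUALITY_THRESHOLD = 0.70  # eye-tracking data-quality cut-off (from the text)


@dataclass(frozen=True)
class Participant:
    pid: str                 # hypothetical participant identifier
    priming: str             # "perceptual", "conceptual" or "none"
    context: str             # "positive", "negative" or "neutral"
    noticed: bool            # True = notifier (reported seeing Zorro)
    tracking_quality: float  # ASSUMED: proportion of valid gaze samples, 0-1


def split_datasets(participants, threshold=QUALITY_THRESHOLD):
    """All participants contribute behavioral data; only recordings at or
    above the quality threshold enter the eye-tracking analysis."""
    behavioral = list(participants)
    eye_tracking = [p for p in participants if p.tracking_quality >= threshold]
    return behavioral, eye_tracking


def cell_sizes(participants):
    """Number of participants per (priming, context) cell of the 3 x 3 design."""
    return Counter((p.priming, p.context) for p in participants)


ps = [
    Participant("p1", "perceptual", "positive", True, 0.92),
    Participant("p2", "perceptual", "positive", False, 0.55),  # below threshold
    Participant("p3", "none", "neutral", False, 0.70),
]
behavioral, eye_tracking = split_datasets(ps)
print(len(behavioral), len(eye_tracking), cell_sizes(eye_tracking))
```

Counting cell sizes on the eye-tracking subset is what tells the experimenter how many additional participants each cell still needs.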

RESULTS

The behavioral data (193 participants) and the eye-tracking data (135 participants) were analysed separately.

Behavioral Data Analysis

IB was defined as failing to notice the unexpected stimulus (Zorro) on the computer screen. The highest IB rate was in the no priming/positive video condition (83%), and the lowest in the conceptual priming/neutral video condition (37%). The behavioral data are summarized in Table 2. IB frequencies did not differ significantly across the nine conditions (Fisher’s exact test, p>0.05).

Table 2.

Inattentional Blindness (IB) rates and frequencies in different experimental conditions

| Priming Type | Measure | Positive Video | Negative Video | Neutral Video |
|---|---|---|---|---|
| Perceptual | IB rate | 65% | 62% | 47% |
| | Notifiers/non-notifiers | 7/13 | 10/16 | 9/8 |
| Conceptual | IB rate | 38% | 66% | 37%b |
| | Notifiers/non-notifiers | 15/9 | 10/19 | 12/7 |
| No Priming | IB rate | 83%a | 46% | 63% |
| | Notifiers/non-notifiers | 3/15 | 13/11 | 6/10 |

a Highest value.

b Lowest value.

IB rates were also compared across emotional contexts. The highest IB rate was observed for the positive video (60%) and the lowest for the neutral video (48%). IB rates and frequencies by emotional context are given in Table 3. IB frequencies did not differ significantly between emotional contexts (Fisher’s exact test, p>0.05).

Table 3.

Inattentional Blindness (IB) rates and frequencies in different emotional videos

| Measure (N=193) | Positive Video | Negative Video | Neutral Video | Total |
|---|---|---|---|---|
| IB rate | 60%a | 58% | 48%b | 56% |
| Notifiers/non-notifiers | 25/37 | 33/46 | 27/25 | 85/108 |

a Highest IB rate.

b Lowest IB rate.

IB rates were also compared across priming conditions. The highest IB rate was in the no priming condition (62%) and the lowest in the conceptual priming condition (49%). IB rates and frequencies by priming type are given in Table 4. IB frequencies did not differ significantly between priming types (Fisher’s exact test, p>0.05), indicating that the priming manipulation did not produce a priming effect in this study.

Table 4.

Inattentional Blindness (IB) rates and frequencies in different priming types

| Measure (N=193) | Perceptual | Conceptual | No Priming | Total |
|---|---|---|---|---|
| IB rate | 59% | 49%b | 62%a | 56% |
| Notifiers/non-notifiers | 26/37 | 37/35 | 22/36 | 85/108 |

a Highest IB rate.

b Lowest IB rate.
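Because the IB rate is simply the share of non-notifiers, the percentages in Tables 2–4 can be recomputed from the notifier/non-notifier counts of Table 2. The sketch below assumes rounding half up, which reproduces the published values; Python's built-in `round()` rounds half to even and would report 62% instead of 63% for the no priming/neutral cell (10 of 16). It does not reproduce the Fisher's exact tests, which were run in SPSS.

```python
import math

# Notifiers / non-notifiers per (priming type, emotional context) cell, from Table 2.
COUNTS = {
    ("Perceptual", "Positive"): (7, 13),
    ("Perceptual", "Negative"): (10, 16),
    ("Perceptual", "Neutral"): (9, 8),
    ("Conceptual", "Positive"): (15, 9),
    ("Conceptual", "Negative"): (10, 19),
    ("Conceptual", "Neutral"): (12, 7),
    ("No Priming", "Positive"): (3, 15),
    ("No Priming", "Negative"): (13, 11),
    ("No Priming", "Neutral"): (6, 10),
}


def ib_rate_percent(notifiers: int, non_notifiers: int) -> int:
    """IB rate = share of participants who did NOT notice the unexpected
    stimulus, rounded half up to a whole percent."""
    return math.floor(100 * non_notifiers / (notifiers + non_notifiers) + 0.5)


def pooled(factor: int, level: str):
    """Sum counts over the other factor (0 = priming type, 1 = emotional context)."""
    cells = [c for key, c in COUNTS.items() if key[factor] == level]
    return sum(c[0] for c in cells), sum(c[1] for c in cells)


for context in ("Positive", "Negative", "Neutral"):
    print(context, ib_rate_percent(*pooled(1, context)))   # Table 3: 60, 58, 48
for priming in ("Perceptual", "Conceptual", "No Priming"):
    print(priming, ib_rate_percent(*pooled(0, priming)))   # Table 4: 59, 49, 62
```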

Eye Tracking Data Analysis

Three separate 3 (Priming Type: Perceptual, Conceptual, No Priming) × 3 (Emotional Context: Positive, Negative, Neutral) ANOVAs were conducted on the eye-tracking measures for the unexpected stimulus: total fixation time, total fixation count, and latency of first fixation (n=15 per experimental condition; Table 5).
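The three eye-tracking measures can be derived from a participant's fixation list and an area of interest (AOI) around the unexpected stimulus. In this sketch the AOI is a per-frame bounding box keyed by the 40 ms frame index, in line with the frame-by-frame video analysis described in the Methods. The annotation format, the rule that a fixation counts when it starts inside the AOI, and measuring latency from the stimulus's first frame are all assumptions, since the text does not state how the AOI was defined.

```python
from typing import Dict, List, NamedTuple, Optional, Tuple

FRAME_MS = 40  # frame interval of the frame-by-frame video analysis (from the text)

Rect = Tuple[float, float, float, float]  # left, top, right, bottom in screen pixels


class Fixation(NamedTuple):
    start_ms: float  # fixation onset relative to video start
    duration_ms: float
    x: float
    y: float


class AOIMetrics(NamedTuple):
    latency_first_fixation_ms: Optional[float]  # None if the AOI was never fixated
    total_fixation_time_ms: float
    total_fixation_count: int


def aoi_metrics(fixations: List[Fixation], aoi_by_frame: Dict[int, Rect]) -> AOIMetrics:
    """Compute the three measures for a dynamic AOI around the unexpected stimulus.

    aoi_by_frame maps a 40 ms frame index to the stimulus bounding box on that
    frame (HYPOTHETICAL annotation format); frames without an entry have no AOI.
    """
    onset_ms = min(aoi_by_frame) * FRAME_MS
    hits = []
    for f in sorted(fixations, key=lambda fx: fx.start_ms):
        rect = aoi_by_frame.get(int(f.start_ms // FRAME_MS))
        if rect is not None and rect[0] <= f.x <= rect[2] and rect[1] <= f.y <= rect[3]:
            hits.append(f)
    latency = hits[0].start_ms - onset_ms if hits else None
    return AOIMetrics(latency, sum(f.duration_ms for f in hits), len(hits))


# Hypothetical example: a fixed box on frames 250-274 (10.00-10.96 s).
zorro = {frame: (600, 400, 700, 600) for frame in range(250, 275)}
fixations = [
    Fixation(9000, 200, 650, 500),    # before the stimulus appears
    Fixation(10120, 180, 650, 500),   # inside the AOI
    Fixation(10400, 300, 100, 100),   # elsewhere on screen
    Fixation(10800, 220, 690, 590),   # inside the AOI
]
print(aoi_metrics(fixations, zorro))
```

In the example two of the four fixations fall inside the box, giving a latency of 120 ms, a total fixation time of 400 ms, and a fixation count of 2. A real AOI for Zorro would move across the screen from frame to frame, which the per-frame dictionary accommodates.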

Table 5.

Means and standard deviations of eye-tracking measurements for the unexpected stimulus

| Measure | Priming Type | Statistic | Positive Context | Negative Context | Neutral Context |
|---|---|---|---|---|---|
| Total fixation time (ms) | Perceptual | X̄ | 413.20 | 293.20b | 764.60a |
| | | SD | 222.50 | 237.52 | 332.39 |
| | | Notifiers/non-notifiers | 10/5 | 10/5 | 8/7 |
| | Conceptual | X̄ | 502.33 | 302.13 | 644.27 |
| | | SD | 315.44 | 262.81 | 413.33 |
| | | Notifiers/non-notifiers | 10/5 | 8/7 | 12/3 |
| | No Priming | X̄ | 329.07 | 306.60 | 683.13 |
| | | SD | 305.09 | 288.07 | 422.95 |
| | | Notifiers/non-notifiers | 5/10 | 8/7 | 7/8 |
| Total fixation count | Perceptual | X̄ | 1.73 | 1.07 | 2.13a |
| | | SD | 1.10 | 0.88 | 0.99 |
| | | Notifiers/non-notifiers | 10/5 | 10/5 | 8/7 |
| | Conceptual | X̄ | 1.73 | 0.80b | 1.80 |
| | | SD | 1.22 | 0.68 | 1.01 |
| | | Notifiers/non-notifiers | 10/5 | 8/7 | 12/3 |
| | No Priming | X̄ | 1.60 | 0.93 | 1.80 |
| | | SD | 1.30 | 0.80 | 1.08 |
| | | Notifiers/non-notifiers | 5/10 | 8/7 | 7/8 |
| Latency of first fixation (ms) | Perceptual | X̄ | 3083.00a | 1248.93 | 2115.53 |
| | | SD | 832.73 | 991.96 | 623.64 |
| | | Notifiers/non-notifiers | 10/5 | 10/5 | 8/7 |
| | Conceptual | X̄ | 2373.27 | 1240.70 | 1853.47 |
| | | SD | 1350.31 | 929.93 | 860.15 |
| | | Notifiers/non-notifiers | 10/5 | 8/7 | 12/3 |
| | No Priming | X̄ | 1857.87 | 1124.47b | 2065.27 |
| | | SD | 1430.36 | 873.56 | 799.23 |
| | | Notifiers/non-notifiers | 5/10 | 8/7 | 7/8 |

a Highest value.

b Lowest value.

For total fixation time, the main effect of emotional context was significant (F(2, 126)=18.56, p<0.001, ηp²=0.23), whereas the main effect of priming type and the priming type × emotional context interaction were not. Post-hoc pairwise comparisons (Tukey test) showed significant differences between the neutral (X̄=697.33, SE=47.50) and positive (X̄=414.87, SE=47.40) contexts (mean difference=282.47, p<0.001) and between the neutral (X̄=697.33, SE=47.50) and negative (X̄=300.64, SE=47.50) contexts (mean difference=396.69, p<0.001).

Similarly, for total fixation count, the main effect of emotional context was significant (F(2, 126)=11.26, p<0.001, ηp²=0.15), whereas the main effect of priming type and the priming type × emotional context interaction were not. Post-hoc pairwise comparisons (Tukey test) showed significant differences between the positive (X̄=1.69, SE=0.15) and negative (X̄=0.93, SE=0.15) contexts (mean difference=0.76, p=0.002) and between the neutral (X̄=1.91, SE=0.15) and negative (X̄=0.93, SE=0.15) contexts (mean difference=0.98, p<0.001).

Finally, for latency of first fixation, the main effect of emotional context was significant (F(2, 126)=2.60, p<0.001, ηp²=0.22), whereas the main effect of priming type and the priming type × emotional context interaction were not. Post-hoc pairwise comparisons (Tukey test) showed significant differences between the positive (X̄=2438.04, SE=148.59) and negative (X̄=1204.49, SE=148.59) contexts (mean difference=1233.56, p<0.001) and between the neutral (X̄=2011.42, SE=148.59) and negative (X̄=1204.49, SE=148.59) contexts (mean difference=806.93, p=0.001).
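Because each cell of the eye-tracking analysis contained 15 participants, the emotional-context means used in the post-hoc comparisons equal the unweighted averages of the corresponding cell means in Table 5, and partial eta squared can be recovered from each F ratio as ηp² = F·df_effect / (F·df_effect + df_error). The sketch below checks both against the reported values.

```python
# Cell means from Table 5, ordered Perceptual, Conceptual, No Priming.
TOTAL_FIXATION_TIME = {
    "Positive": [413.20, 502.33, 329.07],
    "Negative": [293.20, 302.13, 306.60],
    "Neutral": [764.60, 644.27, 683.13],
}
TOTAL_FIXATION_COUNT = {
    "Positive": [1.73, 1.73, 1.60],
    "Negative": [1.07, 0.80, 0.93],
    "Neutral": [2.13, 1.80, 1.80],
}


def marginal_means(cells):
    """Emotional-context marginal means; with equal n per cell (n=15) the
    unweighted mean of the cell means equals the mean over participants."""
    return {ctx: sum(ms) / len(ms) for ctx, ms in cells.items()}


def partial_eta_squared(f: float, df_effect: int, df_error: int) -> float:
    """Partial eta squared recovered from an F ratio: F*df1 / (F*df1 + df2)."""
    return f * df_effect / (f * df_effect + df_error)


m = marginal_means(TOTAL_FIXATION_TIME)
print({k: round(v, 2) for k, v in m.items()})
print(round(m["Neutral"] - m["Positive"], 2), round(m["Neutral"] - m["Negative"], 2))
print(round(partial_eta_squared(18.56, 2, 126), 2))  # total fixation time: 0.23
print(round(partial_eta_squared(11.26, 2, 126), 2))  # total fixation count: 0.15
```

Applied to the latency result, the same formula gives ηp² ≈ 0.04 for F(2, 126)=2.60, not the reported 0.22 (which would instead correspond to F ≈ 17.8), so one of these two reported values is probably a transcription error.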

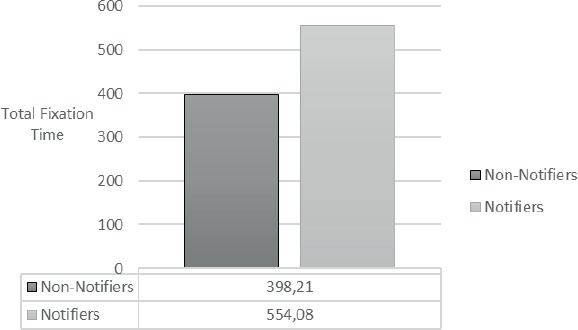

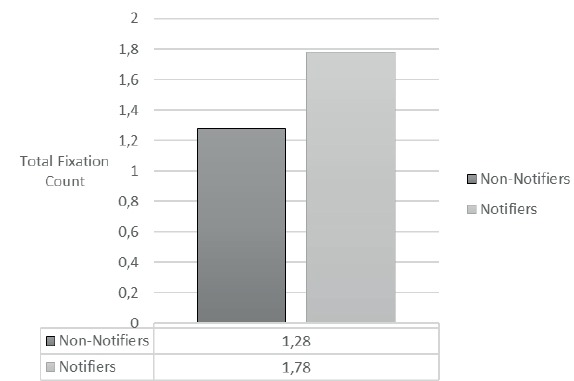

Eye-tracking data of notifiers (participants who reported seeing the unexpected stimulus) and non-notifiers were compared with independent-samples t-tests. Notifiers’ total fixation time (X̄=554.08, SE=49.25) was significantly longer than that of non-notifiers (X̄=398.21, SE=35.78) (t(133)=-2.60, p<0.05, r=0.22). Similarly, notifiers’ total fixation count (X̄=1.78, SE=0.14) was significantly greater than that of non-notifiers (X̄=1.28, SE=0.12) (t(133)=-2.74, p<0.05, r=0.23) (Figure 2 and Figure 3). In contrast, notifiers (X̄=1892.46, SE=128.51) and non-notifiers (X̄=1877.82, SE=145.40) did not differ significantly in latency of first fixation.
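The effect sizes reported for these t-tests follow the usual conversion r = √(t² / (t² + df)), and df = 133 follows from the 78 notifiers and 57 non-notifiers in the eye-tracking sample (Table 5). A minimal check:

```python
import math


def r_from_t(t: float, df: int) -> float:
    """Effect size r for an independent-samples t test: sqrt(t^2 / (t^2 + df))."""
    return math.sqrt(t * t / (t * t + df))


# Group sizes from the notifier/non-notifier rows of Table 5.
NOTIFIERS = 10 + 10 + 8 + 10 + 8 + 12 + 5 + 8 + 7      # 78
NON_NOTIFIERS = 5 + 5 + 7 + 5 + 7 + 3 + 10 + 7 + 8     # 57
df = NOTIFIERS + NON_NOTIFIERS - 2                      # 133

print(df, round(r_from_t(-2.60, df), 2), round(r_from_t(-2.74, df), 2))  # 133 0.22 0.23
```

The sign of t only reflects the order in which the groups were entered, so r is reported as a positive magnitude.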

Figure 2.

Comparison of notifiers and non-notifiers for total fixation time.

Figure 3.

Comparison of notifiers and non-notifiers for total fixation count.

DISCUSSION

Three videos with different emotional contexts were created for this experiment, and IB occurred in all of them (60% for the positive, 58% for the negative, and 48% for the neutral video). The phenomenon of “looking without seeing” was thus demonstrated again. These IB rates are important because they show that the videos are capable of eliciting IB. Had the rates been close to 0%, the ability of the videos to elicit IB would be questionable. Had they been close to 100%, there would be two possible explanations: either the unexpected stimulus was too well hidden for participants to see it, or the cognitive load of the task was so high that participants had to allocate all their resources to the task and therefore missed the unexpected stimulus. Either explanation would indicate a problem in the procedure.

To sum up, the behavioral analysis showed no significant main or interaction effects of priming type and emotional context. The eye-tracking analysis likewise showed no significant main effect of priming type or interaction effect, but the main effect of emotional context was significant. We hypothesized that, because priming facilitates cognitive processing, the priming tasks would decrease IB rates; the results did not support this hypothesis. There are three possible reasons for this unexpected finding. First, although no significant difference was found, IB rates across priming conditions followed the expected pattern, so the non-significant result may reflect the number of participants, which could be increased in future studies. Second, the priming paradigm used here differed from the traditional implicit memory paradigm; it was adapted for the IB experiment, and this adaptation may not have elicited a priming effect on IB. Third, traditional implicit memory tasks commonly use static stimuli (such as drawings and words) without distractors or background details, unlike the decorations and moving performers in the present videos. These distractors and background details may have caused the priming process to fail.

The behavioral results showed no significant difference in IB rates between emotional contexts. While an empty background was used in the neutral video, decorative materials were used in the positive and negative videos, so the neutral video contained less clutter than the other two. Because cognitive capacity is limited, these materials may have led participants to allocate more attentional resources to them and less to the unexpected stimulus. Thus, although the difference was not significant, the lower IB rate for the neutral video could be a consequence of its lack of decorative materials.

On the other hand, the use of decorative materials had to be limited to avoid different levels of crowding between the videos: only two items were used in the negative video and three in the positive video. Despite the pilot study findings, this limited decoration may have weakened the manipulation of emotional context, which could explain the non-significant behavioral results across emotional contexts.

In this study, three eye-movement measures were analysed for the unexpected stimulus: latency of first fixation, total fixation time, and total fixation count. For all three, the main effect of emotional context was significant, whereas the main effect of priming and the priming × emotional context interaction were not.

Other studies have shown that emotional stimuli cause an attentional bias (25–28) and receive longer total fixation times than neutral stimuli (29–31). In the present data, however, the total fixation time on Zorro (the unexpected stimulus) was longest in the neutral video (notifiers=27, non-notifiers=25). In light of these findings, the emotional backgrounds of the positive and negative videos (such as flowers, ornaments, and happy faces in the positive video, or the coffin, black garland, and despondent faces in the negative video) would be expected to attract attention and draw more fixations to the distractors. This would explain why the total fixation time on Zorro was shorter in the positive and negative videos.

The analysis indicates that the total fixation count on Zorro was higher in the positive and neutral videos than in the negative one. Other studies have reached different conclusions. For example, Megias et al. (30) found that emotional photos elicited more fixations, whereas Zhu et al. (32) found a tendency to fixate positive rather than neutral and negative stimuli in both patients with schizophrenia and healthy controls. Our results are similar to those of Zhu et al. (32).

Latency of first fixation to the unexpected stimulus was longer in the positive and neutral videos than in the negative video. Research on latency of first fixation to emotional stimuli is limited (31, 33); these studies found that latency of first fixation was shorter for emotional than for neutral stimuli. Their results are not wholly consistent with ours. One possible reason is that in those studies the targets were emotional or neutral stimuli, whereas in this study the emotional context and details were distractors rather than targets.

Participants who noticed Zorro had a longer total fixation time and a greater total fixation count than non-notifiers. Memmert (22) found no significant difference between notifiers and non-notifiers in total fixation time on the unexpected stimulus, which is inconsistent with the present findings. On the other hand, Richards, Hannon and Vitkovic (19) reported that notifiers had a greater total fixation count and a shorter latency of first fixation on the unexpected stimulus. Our total fixation count results are consistent with theirs, but we found no significant difference in latency of first fixation. This suggests that latency of first fixation does not predict IB.

To sum up, in the eye-movement data, total fixation count on the unexpected stimulus was highest in the positive and neutral videos, and latency of first fixation was shortest in the negative video. Despite this early first fixation, total fixation time was shorter in the emotional videos than in the neutral video. In contrast to previous experiments, this experiment manipulated the emotional valence of the context rather than that of the unexpected stimulus. The emotional context thus appears to cause attentional narrowing, which prevents participants from noticing unexpected stimuli. These eye-tracking results support the attentional narrowing hypothesis, which holds that attention narrows in response to negative emotional stimuli or events (34).

However, the main limitation of our study was the unsuccessful priming manipulation. The study had two further limitations: the neutral and negative videos were not clearly discriminated, and the numbers of notifiers and non-notifiers were unequal across experimental conditions.

Previous research on the relation between IB and emotion has manipulated either participants’ mood, by having them write about an emotional event (14), or the emotional load of the unexpected stimulus (9, 35). Only a limited number of studies have examined priming and IB. This is the first experimental study to investigate the effects of both emotional context (using videos) and priming on IB.

Acknowledgements

We are deeply grateful to our research assistants (Arcan Tiğrak, Aylin Koçak, Burcu Korkmaz, Funda Salman, Gamze Şen, and Zülal İşcanoğlu) and to the 2015 graduates of the Hacettepe University Department of Psychology who appeared as extras in the inattentional blindness videos, for their kind help.

Appendix A

Appendix B

Footnotes

Ethics Committee Approval: Ethics committee approval was obtained from the Hacettepe University Ethics Commission.

Informed Consent: Informed consent was obtained from the volunteers who participated in this study.

Peer-review: Externally peer-reviewed.

Author Contributions: Concept - BC, BO; Design - BO; Supervision - BC; Resource - BO; Materials - BO; Data Collection and/or Processing - BO; Analysis and/or Interpretation - BO; Literature Search - BO; Writing - BO; Critical Reviews - BC.

Conflict of Interest: No conflict of interest was declared by the authors.

Financial Disclosure: The authors declared that this study has received no financial support.

REFERENCES

- 1. Cater K, Chalmers A, Ledda P. Selective quality rendering by exploiting human inattentional blindness: looking but not seeing. In: Shi J, Hodges LF, Sun H, Peng Q, editors. Proceedings of the ACM Symposium on Virtual Reality Software and Technology, VRST. 2002:17–24.

- 2. Cosman DJ, Vecera SP. Object-based attention overrides perceptual load to modulate visual distraction. J Exp Psychol Hum Percept Perform. 2012;38:576–579. doi: 10.1037/a0027406.

- 3. Apfelbaum HL, Gambacorta C, Woods RL, Peli E. Inattentional blindness with the same scene at different scales. Ophthalmic Physiol Opt. 2010;30:124–131. doi: 10.1111/j.1475-1313.2009.00702.x.

- 4. Mack A. Inattentional blindness: looking without seeing. Curr Dir Psychol Sci. 2003;12:180–184.

- 5. Cartwright-Finch U, Lavie N. The role of perceptual load in inattentional blindness. Cognition. 2007;102:321–340. doi: 10.1016/j.cognition.2006.01.002.

- 6. Most SB, Simons DJ, Scholl BJ, Jimenez R, Clifford E, Chabris CF. How not to be seen: the contribution of similarity and selective ignoring to sustained inattentional blindness. Psychol Sci. 2001;12:9–17. doi: 10.1111/1467-9280.00303.

- 7. Simons DJ, Chabris CF. Gorillas in our midst: sustained inattentional blindness for dynamic events. Perception. 1999;28:1059–1074. doi: 10.1068/p281059.

- 8. Devue C, Laloyaux C, Feyers D, Theeuwes J, Brédart S. Do pictures of faces, and which ones, capture attention in the inattentional-blindness paradigm? Perception. 2009;38:552–568. doi: 10.1068/p6049.

- 9. Mack A, Rock I. Inattentional Blindness. 1st ed. Cambridge MA: The MIT Press; 1998.

- 10. Aimola Davies AM, Waterman S, White RC, Davies M. When you fail to see what you were told to look for: inattentional blindness and task instructions. Conscious Cogn. 2013;22:221–230. doi: 10.1016/j.concog.2012.11.015.

- 11. Most SB, Scholl BJ, Clifford ER, Simons DJ. What you see is what you set: sustained inattentional blindness and the capture of awareness. Psychol Rev. 2005;112:217–242. doi: 10.1037/0033-295X.112.1.217.

- 12. Grossman ES, Hoffman YS, Berger I, Zivotofsky AZ. Beating their chests: University students with ADHD demonstrate greater attentional abilities on an inattentional blindness paradigm. Neuropsychology. 2015;29:882–887. doi: 10.1037/neu0000189.

- 13. Hanslmayr S, Backes H, Straub S, Popov T, Langguth B, Hajak G, Bauml KH, Landgrebe M. Enhanced resting-state oscillations in schizophrenia are associated with decreased synchronization during inattentional blindness. Hum Brain Mapp. 2013;34:2266–2275. doi: 10.1002/hbm.22064.

- 14. Becker MW, Leinenger M. Attentional selection is biased toward mood-congruent stimuli. Emotion. 2011;11:1248–1254. doi: 10.1037/a0023524.

- 15. Tulving E, Schacter DL. Priming and human memory systems. Science. 1990;247:301–306. doi: 10.1126/science.2296719.

- 16. Slavich GM, Zimbardo PG. Out of Mind, Out of Sight: Unexpected Scene Elements Frequently Go Unnoticed Until Primed. Curr Psychol. 2013;32. doi: 10.1007/s12144-013-9184-3.

- 17. Christianson SÅ, Loftus EF. Remembering emotional events: The fate of detailed information. Cognition & Emotion. 1991;5:81–108.

- 18. Loftus EF, Loftus GR, Messo J. Some facts about “weapon focus”. Law Hum Behav. 1987;11:55–62.

- 19. Richards A, Hannon EM, Derakshan N. Predicting and manipulating the incidence of inattentional blindness. Psychol Res. 2010;74:513–523. doi: 10.1007/s00426-009-0273-8.

- 20. Koivisto M, Hyönä J, Revonsuo A. The effects of eye movements, spatial attention, and stimulus features on inattentional blindness. Vision Res. 2004;44:3211–3221. doi: 10.1016/j.visres.2004.07.026.

- 21. Kuhn G, Findlay JM. Misdirection, attention and awareness: inattentional blindness reveals temporal relationship between eye movements and visual awareness. Q J Exp Psychol (Hove). 2010;63:136–146. doi: 10.1080/17470210902846757.

- 22. Memmert D. The effects of eye movements, age, and expertise on inattentional blindness. Conscious Cogn. 2006;15:620–627. doi: 10.1016/j.concog.2006.01.001.

- 23. Simons DJ, Jensen MS. The effects of individual differences and task difficulty on inattentional blindness. Psychon Bull Rev. 2009;16:398–403. doi: 10.3758/PBR.16.2.398.

- 24. Gülçay Ç, Cangöz B. Effects of emotion and perspective on remembering events: An eye-tracking study. Journal of Eye Movement Research. 2016;9(4):1–19.

- 25. Calvo MG, Lang PJ. Gaze patterns when looking at emotional pictures: motivationally biased attention. Motivation and Emotion. 2004;28:221–243.

- 26. Kaspar K, Hloucal TM, Kriz J, Canzler S, Gameiro RR, Krapp V, König P. Emotions' impact on viewing behaviour under natural conditions. PloS One. 2013;8:e52737. doi: 10.1371/journal.pone.0052737.

- 27. Kousta ST, Vinson DP, Vigliocco G. Emotion words regardless of polarity have a processing advantage over neutral words. Cognition. 2009;112:473–481. doi: 10.1016/j.cognition.2009.06.007.

- 28. Scott GG, O'Donnell PJ, Sereno SC. Emotion words affect eye fixations during reading. J Exp Psychol Learn Mem Cogn. 2012;38:783–792. doi: 10.1037/a0027209.

- 29. Chipchase SY, Chapman P. Trade-offs in visual attention and the enhancement of memory specificity for positive and negative emotional stimuli. Q J Exp Psychol (Hove). 2013;66:277–298. doi: 10.1080/17470218.2012.707664.

- 30. Megías A, Lopez A, Catena A, Di Stasi L, Serrano J, Cándido A. Modulation of attention and urgent decisions by affect-laden roadside advertisement in risky driving scenarios. Safety Science. 2012;49:1388–1393.

- 31. Nummenmaa L, Hyönä J, Calvo MG. Eye movement assessment of selective attentional capture by emotional pictures. Emotion. 2006;6:257–268. doi: 10.1037/1528-3542.6.2.257.

- 32. Zhu XL, Tan SP, De Yang F, Sun W, Song CS, Cui JF, Zao YL, Fan FM, Li YJ, Tan YL, Zou YZ. Visual scanning of emotional faces in schizophrenia. Neurosci Lett. 2013;552:46–51. doi: 10.1016/j.neulet.2013.07.046.

- 33. Shechner T, Jarcho JM, Britton JC, Leibenluft E, Pine DS, Nelson EE. Attention bias of anxious youth during extended exposure of emotional face pairs: an eye-tracking study. Depress Anxiety. 2013;30:14–21. doi: 10.1002/da.21986.

- 34. Christianson SÅ. Emotional stress and eyewitness memory: a critical review. Psychol Bull. 1992;112:284–309. doi: 10.1037/0033-2909.112.2.284.

- 35. Brailsford R, Catherwood D, Tyson PJ, Edgar G. Noticing spiders on the left: Evidence on attentional bias and spider fear in the inattentional blindness paradigm. Laterality. 2014;19:201–218. doi: 10.1080/1357650X.2013.791306.