Abstract

Blinded sample size reassessment is a popular means to control the power in clinical trials if no reliable information on nuisance parameters is available in the planning phase. We investigate how sample size reassessment based on blinded interim data affects the properties of point estimates and confidence intervals for parallel group superiority trials comparing the means of a normal endpoint. We evaluate the properties of two standard reassessment rules that are based on the sample size formula of the z-test, derive the worst case reassessment rule that maximizes the absolute mean bias and obtain an upper bound for the mean bias of the treatment effect estimate.

Keywords: Adaptive design, interim analysis, internal pilot study, point estimate, sample size reassessment

1 Introduction

In clinical trials for the comparison of means of normally distributed observations, the sample size to achieve a specific target power depends on the true effect size and variance. For the purpose of sample size planning, the effect size is usually assumed to be equal to a minimally clinically relevant effect while the variance is often estimated from historical data. For situations where little prior knowledge on the variance is available, clinical trial designs with sample size reassessment based on interim estimates of this nuisance parameter have been proposed. Stein1 developed a two-stage procedure, where the second stage sample size is calculated based on a first stage variance estimate aiming to achieve a pre-specified target power. In the context of clinical trials, several extensions of Stein's two-stage procedure have been considered2–8 that all require an unblinding of the interim data for the computation of the variance estimate. However, regulatory agencies generally prefer blinded interim analyses as they entail less potential for bias.9–13

Gould and Shih14 proposed instead to estimate the variance from the blinded interim data by computing it from the total sample (pooling the observations from both groups). Although this interim estimate is not consistent15 and has a positive bias if the alternative hypothesis holds, the bias is negligible for effect sizes typically observed in clinical trials.16 Furthermore, sample size reassessment based on the total variance has no relevant impact on the type I error rate in parallel group superiority trials17 (see also the literature18–21) and achieves the target power well. Similar results on sample size reassessment based on blinded nuisance parameter estimates were obtained for binary data,22 count data,23–25 longitudinal data,26 and for fully sequential sample size reassessment.27 For non-inferiority trials with normal endpoints, a minor inflation of the type I error rate for small sample sizes has been observed.28,29 However, if the sample size reassessment rule is not only based on the primary endpoint but also on blinded secondary or safety endpoint data, the type I error rate for the test of the primary endpoint may be substantially inflated.30

While it is well known that, for adaptive clinical trials with sample size reassessment based on the unblinded interim treatment effect estimate, unadjusted point estimates of the effect size and confidence intervals may be biased,31,32 the properties of point estimates and confidence intervals computed at the end of an adaptive clinical trial with blinded sample size reassessment have received less attention. In this paper, we investigate the bias and standard error of the treatment effect point estimate and compare the absolute mean bias due to blinded sample size reassessment to upper bounds derived for adaptive designs with unblinded sample size reassessment.33 We also investigate the bias of the final variance estimate under sample size reassessment rules that are based on the blinded interim variance estimate. Previously, this bias had been investigated for a corresponding unblinded reassessment rule (which had no upper sample size bound); sharp bounds for the bias had been derived and an additive bias correction had been suggested.8 Here, we derive corresponding bounds for the bias of the final variance estimate in the blinded case. Furthermore, we assess the coverage probability of one- and two-sided confidence intervals.

In Section 2, we introduce adaptive designs with blinded sample size reassessment. Theoretical results on the bias of estimates are presented in Section 3. In Section 4, we report a simulation study quantifying the bias and coverage probabilities in a variety of scenarios. The impact of the results is discussed in the context of a case study in Section 5. Section 6 concludes the paper with a discussion and recommendations. Technical proofs are given in Appendix 1.

2 Blinded sample size reassessment

Consider a parallel group comparison of the means of a normally distributed endpoint with common unknown variance $\sigma^2$. The one-sided null hypothesis $H_0: \delta \le 0$ is tested against $H_1: \delta > 0$ at level α, where $\delta = \mu_1 - \mu_2$ denotes the true effect size. Let $\delta_0 > 0$ denote the alternative for which the trial is powered and assume that in the planning phase a first stage per group sample size $n_1$ is chosen based on an a priori variance estimate $\sigma_0^2$. Note, however, that all results below depend only on the chosen n1 and not on the way it has been determined. As a consequence, for given n1, they also apply if it has been determined with other justifications. After the endpoints of the first stage subjects are observed, in a blinded interim analysis, the one-sample variance estimate $S^2_{1,OS}$ is computed, given by

$$S^2_{1,OS} = \frac{1}{2n_1 - 1} \sum_{i=1}^{2} \sum_{k=1}^{n_1} \left(X_{i1k} - \bar X_{\cdot 1}\right)^2,$$

where Xijk is the observation $k$ in stage j = 1, 2 for treatment $i = 1, 2$ and $\bar X_{\cdot 1}$ is the mean of the pooled first stage samples. Based on $S^2_{1,OS}$, the second stage sample size is chosen with a pre-specified sample size function $n_2(S^2_{1,OS})$. Below we drop the argument of the function n2 for convenience if the meaning is clear from the context. Then, the overall sample size is $n = n_1 + n_2$ per group. After the study is completed and unblinded, we assume that the point estimates for the mean difference and the variance

$$\hat\delta = \bar X_{1} - \bar X_{2}, \qquad S^2 = \frac{1}{2n - 2} \sum_{i=1}^{2} \sum_{j=1}^{2} \sum_{k=1}^{n_j} \left(X_{ijk} - \bar X_{i}\right)^2$$

are computed, where $\bar X_{i} = \frac{1}{n} \sum_{j=1}^{2} \sum_{k=1}^{n_j} X_{ijk}$ is the overall mean of treatment group $i$. Furthermore, (i) the standard fixed sample lower confidence bound corresponding to the one-sided null hypothesis H0, given by $\hat\delta - t_{2n-2,1-\alpha} S \sqrt{2/n}$, where $t_{2n-2,1-\alpha}$ denotes the $(1-\alpha)$ quantile of the central t-distribution with $2n-2$ degrees of freedom, (ii) the upper confidence bound (corresponding to the complementary null hypothesis $H_0': \delta \ge 0$), given by $\hat\delta + t_{2n-2,1-\alpha} S \sqrt{2/n}$, and (iii) the two-sided confidence interval given by $\hat\delta \pm t_{2n-2,1-\alpha} S \sqrt{2/n}$ are determined.

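As an example, consider a sample size reassessment rule aiming to control the power under a pre-specified absolute treatment effect $\delta_0$. It is derived from the standard sample size formula for the comparison of means of normally distributed observations with a one-sided z-test using the variance estimate $S^2_{1,OS}$:

$$n_2^u(S^2_{1,OS}) = \min\left\{ n_{2\max},\ \max\left[ n_{2\min},\ \frac{2 (z_{1-\alpha} + z_{1-\beta})^2}{\delta_0^2}\, S^2_{1,OS} - n_1 + 1 \right] \right\} \qquad (1)$$

where $1-\beta$ is the desired power, $z_\gamma$ denotes the $\gamma$-quantile of the standard normal distribution, and $n_{2\min}$ and $n_{2\max}$ are pre-specified minimal and maximal second stage sample sizes with $0 \le n_{2\min} \le n_{2\max}$. The +1 in formula (1) was added for mathematical convenience to derive the lower bound for the bias of the variance estimate in Theorem 4 below. Given that we have to round the sample size to an integer anyway, this modification is of minor practical importance. While $S^2_{1,OS}$ is an unbiased variance estimator for $\sigma^2$ when the true effect size is zero, it is positively biased by $\delta^2 n_1 / (2(2n_1 - 1))$ for effect sizes $\delta \ne 0$.5 A sample size reassessment rule that is based on an adjusted variance estimate which is unbiased under the effect size δ0 for which the trial is powered is obtained from equation (1) by replacing $S^2_{1,OS}$ by $S^2_{1,OS} - \delta_0^2 n_1 / (2(2n_1 - 1))$ and is given by

$$n_2^a(S^2_{1,OS}) = \min\left\{ n_{2\max},\ \max\left[ n_{2\min},\ \frac{2 (z_{1-\alpha} + z_{1-\beta})^2}{\delta_0^2} \left( S^2_{1,OS} - \frac{n_1}{2(2n_1 - 1)}\, \delta_0^2 \right) - n_1 + 1 \right] \right\} \qquad (2)$$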
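The two reassessment rules can be sketched in a few lines of Python. This is a minimal illustration, not the authors' implementation: it assumes that rule (1) has the usual z-test form min{n2max, max[n2min, 2(z_{1-α} + z_{1-β})² S²/δ0² − n1 + 1]}, that the adjusted rule subtracts δ0² n1 / (2(2n1 − 1)) from the blinded variance, and that the result is rounded up to an integer. The function names and the numerical inputs are hypothetical.

```python
import math
from statistics import NormalDist


def n2_unadjusted(s2_os, n1, delta0, alpha=0.025, beta=0.2,
                  n2_min=0, n2_max=math.inf):
    """Second stage per group sample size from the blinded variance s2_os."""
    z = NormalDist().inv_cdf(1 - alpha) + NormalDist().inv_cdf(1 - beta)
    raw = 2 * z**2 * s2_os / delta0**2 - n1 + 1
    # Rounding up to the next integer is an assumption of this sketch.
    return min(n2_max, max(n2_min, math.ceil(raw)))


def n2_adjusted(s2_os, n1, delta0, **kwargs):
    """Same rule after removing the bias of s2_os that arises if delta = delta0."""
    s2_adj = s2_os - delta0**2 * n1 / (2 * (2 * n1 - 1))
    return n2_unadjusted(s2_adj, n1, delta0, **kwargs)


# Hypothetical interim result: n1 = 20 per group, delta0 = 1, blinded variance 1.5
print(n2_unadjusted(1.5, 20, 1.0), n2_adjusted(1.5, 20, 1.0))
```

Because the adjustment only lowers the variance that enters the formula, the adjusted rule never asks for more patients than the unadjusted rule for the same interim data.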

3 Theoretical results on the bias of the final mean and variance estimators

In this section, we consider adaptive two-stage designs with general sample size reassessment rules where the second stage sample size is given as some non-constant function of the blinded interim variance estimate $S^2_{1,OS}$. We derive the bias of the final effect size and variance estimators $\hat\delta$ and $S^2$.

Theorem 1 —

Consider an adaptive two-stage design with a general sample size reassessment rule $n_2(S^2_{1,OS})$.

where

(b) Under the null hypothesis δ = 0, the final mean estimator $\hat\delta$ is unbiased.

(c) If $n_2$ is increasing and $\delta > 0$ ($\delta < 0$), the estimator $\hat\delta$ is negatively (positively) biased, respectively. Furthermore, the absolute bias is symmetric in δ around 0.

(d) If the sample size reassessment rule is increasing and $n_2(s) \to \infty$ for $s \to \infty$, the bias of $\hat\delta$ converges to 0 for $|\delta| \to \infty$.

Note that the sample size functions $n_2^u$ and $n_2^a$ are increasing such that property (c) applies. If $n_{2\max} = \infty$, both $n_2^u$ and $n_2^a$ tend to infinity for $S^2_{1,OS} \to \infty$ such that property (d) applies.
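The sign pattern stated in Theorem 1 can be checked with a small Monte Carlo sketch. It relies on the identity that the bias of the final mean estimate equals E[n1/(n1 + n2) (δ̂1 − δ)], because the second stage estimate is unbiased given the first stage, so only the first stage needs to be simulated. The sketch assumes the unadjusted z-test rule with n2min = 0, no upper bound and rounding up; the function name, the seed and the parameter values are hypothetical choices for illustration.

```python
import math
import random
from statistics import NormalDist, fmean


def mean_bias(delta, sigma, n1, delta0, alpha=0.025, beta=0.2,
              reps=100_000, seed=1):
    """Monte Carlo estimate of E[n1/(n1+n2) * (d1 - delta)]."""
    rng = random.Random(seed)
    z2 = (NormalDist().inv_cdf(1 - alpha) + NormalDist().inv_cdf(1 - beta)) ** 2
    df = 2 * n1 - 2
    terms = []
    for _ in range(reps):
        # Unblinded first stage mean difference and pooled variance
        d1 = rng.gauss(delta, sigma * math.sqrt(2 / n1))
        s2_1 = sigma**2 * rng.gammavariate(df / 2, 2) / df
        # Blinded one-sample variance obtained from the two unblinded quantities
        s2_os = (df * s2_1 + n1 * d1**2 / 2) / (2 * n1 - 1)
        # Unadjusted rule with n2min = 0 and n2max = infinity (assumed form)
        n2 = max(0, math.ceil(2 * z2 * s2_os / delta0**2 - n1 + 1))
        terms.append(n1 / (n1 + n2) * (d1 - delta))
    return fmean(terms)
```

Calling `mean_bias(1.0, 1.0, 5, 1.0)` and `mean_bias(-1.0, 1.0, 5, 1.0)` should give estimates of opposite sign, while `mean_bias(0.0, 1.0, 5, 1.0)` should be close to zero up to simulation error.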

In Theorem 2, we give an upper bound for the bias of the mean in adaptive two-stage designs with a general sample size reassessment rule $n_2(S^2_{1,OS})$ such that $n_{2\min} \le n_2 \le n_{2\max}$, for lower and upper bounds $n_{2\min}$ and $n_{2\max}$ with $0 \le n_{2\min} \le n_{2\max}$, where $n_{2\max}$ may be infinite (corresponding to the case of unrestricted sample size reassessment). For general unblinded sample size reassessment rules that may depend on the fully unblinded interim data, the upper bound for the bias is33

$$\left( \frac{n_1}{n_1 + n_{2\min}} - \frac{n_1}{n_1 + n_{2\max}} \right) \frac{\sigma}{\sqrt{\pi n_1}} \qquad (3)$$

and is realized for the sample size reassessment rule that sets $n_2 = n_{2\min}$ if $\hat\delta_1 > \delta$ and $n_2 = n_{2\max}$ otherwise, where $\hat\delta_1$ denotes the unblinded first stage estimate of the treatment effect. This upper bound obviously also applies to blinded sample size reassessment based on the total variance estimate, because the set of unblinded sample size reassessment rules, for which n2 may depend on the unblinded interim data in any way, includes all blinded sample size reassessment rules. However, the bound is not sharp if the sample size depends on $S^2_{1,OS}$ only. In the theorem below, we derive the sample size reassessment rule that maximizes the bias and compute the corresponding maximal bias, which is a sharp upper bound for the bias that can occur under any sample size reassessment rule of the form $n_2(S^2_{1,OS})$. We show that for increasing effect sizes, this bias approaches the bias of unblinded sample size reassessment.
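As a numerical illustration, the unblinded worst-case bound can be evaluated directly. The sketch below assumes the bound has the form (n1/(n1 + n2min) − n1/(n1 + n2max)) · σ/√(π n1), which follows from choosing n2min whenever the first stage estimate exceeds δ and n2max otherwise, together with E[(δ̂1 − δ)⁺] = σ/√(π n1) for a normal first stage estimate with variance 2σ²/n1. The function name is hypothetical.

```python
import math


def max_bias_unblinded(sigma, n1, n2_min=0, n2_max=math.inf):
    """Worst-case mean bias over all rules with n2_min <= n2 <= n2_max."""
    w_min = n1 / (n1 + n2_min)  # weight of stage 1 when n2 = n2_min
    w_max = 0.0 if math.isinf(n2_max) else n1 / (n1 + n2_max)
    # E[(d1 - delta)^+] for d1 ~ N(delta, 2 * sigma^2 / n1)
    expected_positive_part = sigma / math.sqrt(math.pi * n1)
    return (w_min - w_max) * expected_positive_part


# Unrestricted reassessment with sigma = 1 and n1 = 16 per group
print(round(max_bias_unblinded(1.0, 16), 4))
```

Restricting the range of n2 shrinks only the first factor, so a narrow interval [n2min, n2max] directly limits the worst-case bias.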

Theorem 2 —

Consider an adaptive two-stage design with a general sample size reassessment rule $n_2(S^2_{1,OS})$, such that $n_{2\min} \le n_2 \le n_{2\max}$, where $n_{2\max}$ may be infinite. Then,

where $\hat\delta_1$ denotes the unblinded first stage estimate of the mean treatment effect, and

(4)

(5)

(d) For $\delta \to \infty$ and σ fixed, the maximum bias of $\hat\delta$ caused by blinded sample size reassessment rules based on $S^2_{1,OS}$ converges to the maximum bias caused by unblinded sample size reassessment rules given by equation (3).

Note that, by symmetry, the negative bias of $\hat\delta$ is maximized by the sample size rule equation (4) and is given by equation (5) with the inequality signs reversed. If $n_{2\min} = 0$ and $n_{2\max} = \infty$, the first factor in equation (5) reduces to 1.

In Theorem 3, we derive the bias of the variance estimator computed at the end of the trial for general sample size reassessment rules and show that it is symmetric in the true treatment effect δ and converges to 0 for increasing δ under suitable conditions (which are satisfied for $n_2^u$ and $n_2^a$ if $n_{2\max} = \infty$).

Theorem 3 —

Consider an adaptive two-stage design with a general sample size reassessment rule $n_2(S^2_{1,OS})$. Then,

where and r is defined in Theorem 1;

(b) the bias of $S^2$ is symmetric in δ around 0;

(c) if $n_2(s) \to \infty$ for $s \to \infty$, the bias of $S^2$ converges to 0 for $|\delta| \to \infty$.

In Theorem 4, we derive a lower bound for the bias of the variance estimator $S^2$ (which is negative) in an adaptive two-stage design with sample size reassessment rule $n_2^u$.

Theorem 4 —

Consider a design with blinded sample size reassessment based on $S^2_{1,OS}$, where the second stage sample size is set to $n_2^u(S^2_{1,OS})$. Under the null hypothesis δ = 0, the bias of the variance estimator $S^2$ at the end of the study has the following bounds

where . If (no upper restriction for the sample size), the bias converges to the lower bound for large variances

Note that this lower bound has a similar form to the bound derived for the bias in adaptive two-stage designs with sample size reassessment based on the unblinded variance estimate.8 In the latter setting, the bound is given by . The bound derived in Theorem 4 does not apply to designs where the adjusted rule $n_2^a$ is applied. The numerical results below suggest that for the adjusted rule the absolute bias is maximized for a finite σ and not for $\sigma \to \infty$ as for the unadjusted rule.

4 Simulation study of the properties of point estimates and confidence intervals

To quantify the bias of mean and variance estimates as well as the actual coverage of corresponding one- and two-sided confidence intervals in adaptive two-stage designs with blinded sample size reassessment, a simulation study was performed. We assumed that a trial is planned for an effect size and a priori variance estimate σ0 at a one-sided significance level . The preplanned sample size of a two-armed parallel group trial was chosen to provide 80% power (based on the normal approximation) for . The first stage sample size was set to half of the preplanned total sample size leading to first stage per group sample sizes of . For each first stage sample size, first stage data were simulated from normal distributions for effect sizes δ ranging from −2 to 2 (in steps of 0.1) and common standard deviations σ, ranging from 0.5 to 2 in steps of 0.5. The second stage sample sizes were then reassessed to (with ). Below we refer to the latter reassessment rules as the unadjusted and adjusted sample size reassessment rules. For each scenario , trials were simulated with R.34 The source code is available in the R-package blindConfidence which can be downloaded and installed from github. Details on the implementation and additional results are presented in the Supplementary Material. Figures 1 and 2 show the mean bias of the final estimates of the mean and variance based on the total sample. If the alternative holds, the estimates may be substantially biased for small first stage sample sizes.
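The structure of such a simulation can be sketched as follows. This is not the code of the blindConfidence package, which is written in R; it is a simplified Python illustration that draws sufficient statistics (group means and within-group sums of squares) instead of individual observations and uses the unadjusted z-test rule with n2min = 0, no upper bound and rounding up. The function names, the seed, the number of replications and the parameter values are hypothetical.

```python
import math
import random
from statistics import NormalDist, fmean


def chi2(rng, df):
    """Chi-square draw; zero degrees of freedom give 0."""
    return rng.gammavariate(df / 2, 2) if df > 0 else 0.0


def simulate_bias(delta, sigma, n1, delta0, alpha=0.025, beta=0.2,
                  reps=50_000, seed=1):
    """Return (bias of final mean estimate, bias of final variance estimate)."""
    rng = random.Random(seed)
    z2 = (NormalDist().inv_cdf(1 - alpha) + NormalDist().inv_cdf(1 - beta)) ** 2
    mu = (delta, 0.0)
    d_hat, s2_hat = [], []
    for _ in range(reps):
        # Stage 1: group means and total within-group sum of squares
        m1 = [rng.gauss(m, sigma / math.sqrt(n1)) for m in mu]
        ss1 = sigma**2 * chi2(rng, 2 * n1 - 2)
        d1 = m1[0] - m1[1]
        s2_os = (ss1 + n1 * d1**2 / 2) / (2 * n1 - 1)  # blinded variance
        n2 = max(0, math.ceil(2 * z2 * s2_os / delta0**2 - n1 + 1))
        n = n1 + n2
        # Stage 2: same statistics with the reassessed sample size
        if n2 > 0:
            m2 = [rng.gauss(m, sigma / math.sqrt(n2)) for m in mu]
            ss2 = sigma**2 * chi2(rng, 2 * n2 - 2)
        else:
            m2, ss2 = m1, 0.0
        means = [(n1 * a + n2 * b) / n for a, b in zip(m1, m2)]
        # Sum of squares between the stage means within each group
        between = sum(n1 * n2 / n * (a - b) ** 2 for a, b in zip(m1, m2))
        d_hat.append(means[0] - means[1])
        s2_hat.append((ss1 + ss2 + between) / (2 * n - 2))
    return fmean(d_hat) - delta, fmean(s2_hat) - sigma**2
```

For example, `simulate_bias(1.0, 1.0, 5, 1.0)` returns the estimated bias of the mean and of the variance for a hypothetical trial with five patients per group in the first stage. Working with sufficient statistics keeps the run time independent of the reassessed sample size.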

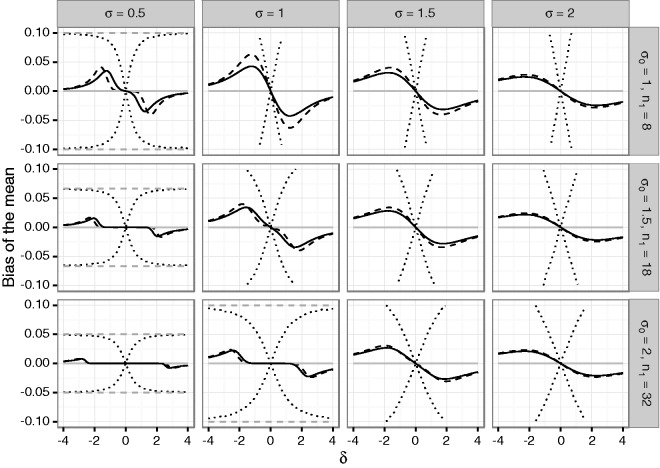

Figure 1.

Bias of the mean under blinded sample size reassessment using the unadjusted (solid line) and adjusted (dashed line) interim variance estimate. The dotted lines show maximum negative and positive bias that can be attained under any blinded sample size reassessment rule according to Theorem 2. The dashed gray line shows the maximum bias that can be attained under any (unblinded) sample size reassessment rule. The treatment effect used for planning is set to . Rows refer to the a priori assumed standard deviations σ0 determining the first stage sample size n1. The columns correspond to actual standard deviations. The x-axis in each graph denotes the true treatment effect δ, the y-axis shows the bias.

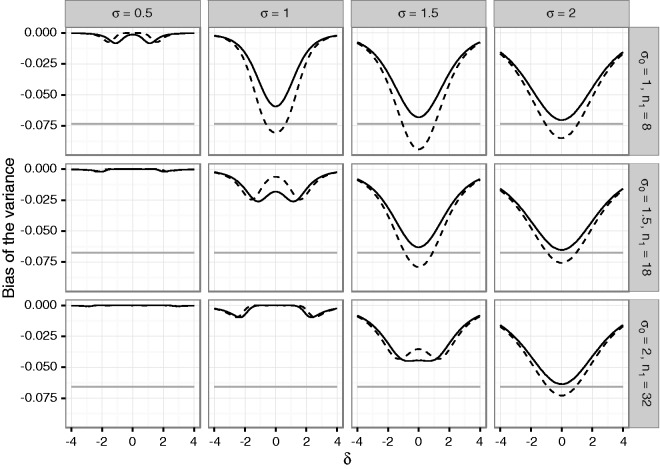

Figure 2.

Bias of the variance under blinded sample size reassessment using the unadjusted (solid line) and adjusted (dashed line) interim variance estimate. The gray line gives the lower bound from Theorem 4 for the bias under sample size reassessment based on the unadjusted variance estimate. The treatment effect used for planning is set to . Rows refer to the a priori assumed standard deviations σ0 determining the first stage sample size n1. The columns correspond to actual standard deviations. The x-axis in each graph denotes the true treatment effect δ, the y-axis shows the bias.

For both sample size reassessment rules, the bias of the mean is zero under the null hypothesis and otherwise has the opposite sign to the true effect size (in accordance with Theorem 1). For very large positive and negative effect sizes, the bias is close to zero. This is due to the fact that a large effect size results in a large positive bias of the blinded interim variance estimates, which in turn results in very large second stage sample sizes. As a consequence, the overall estimates are essentially equal to the (unbiased) second stage estimates, and the bias becomes negligible. In the considered scenarios, the absolute bias is decreasing in the first stage sample size. This also holds for the upper bound of the bias for general sample size reassessment rules. However, while the bias for the adjusted and unadjusted reassessment rules is small for larger sample sizes, the upper bound for general sample size reassessment rules still exceeds 10% in the considered scenarios even in the case where subjects per group are recruited in the first stage. In the considered scenarios, we observe that the upper bound for the bias of the mean estimate increases in the true effect size δ and the true standard deviation σ. The numerical results confirm that for increasing δ the maximum bias under blinded sample size reassessment approaches the maximum bias under unblinded sample size reassessment, as shown in Theorem 2.

The variance estimate is negatively biased for both considered sample size reassessment rules. For increasing variances, the absolute bias under the null hypothesis δ = 0 for the rule $n_2^u$ approaches the lower bound derived in Theorem 4. For large positive and negative effect sizes, the bias approaches zero, again because the overall estimate becomes essentially equal to the (unbiased) second stage estimate. In general, we observe that using the adjusted interim variance estimate for sample size reassessment results in a larger absolute bias of mean and variance estimates.

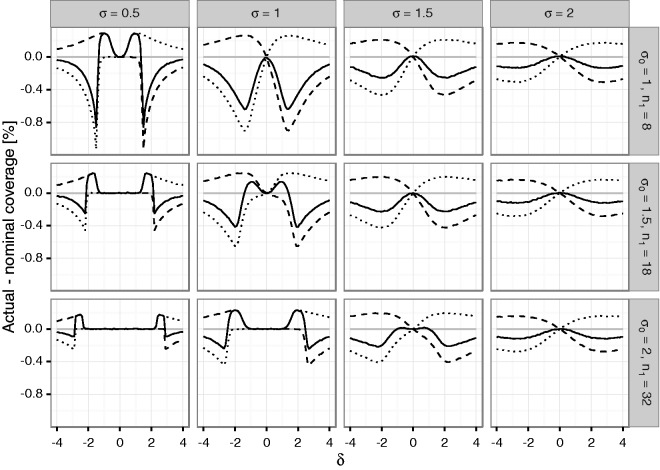

The difference between the coverage probabilities of the confidence intervals and the nominal coverage probabilities (i.e., 0.975 for the one-sided, 0.95 for the two-sided confidence interval) is shown in Figure 3 for the unadjusted sample size reassessment rule (see Figure 1 of the Supplementary Material for corresponding results for the adjusted sample size reassessment rule). For positive δ, the lower confidence bound is conservative (with coverage probability larger than 0.975) while the upper bound is anti-conservative, and vice versa for negative δ. In fact, due to symmetry, the coverage probability for the upper confidence bound at a certain true effect size δ is the same as the coverage probability of the lower confidence bound at $-\delta$. If the true δ and the true σ are small, the trial will stop with minimal sample size almost with probability 1. Then, the sample size is essentially fixed, no bias occurs and coverage is as desired. This is, e.g., the case for and and explains the exact coverage there. Similar cases occur for small in the three pictures for as well.

Figure 3.

Difference between actual and nominal coverage probabilities in percentage points under blinded sample size reassessment using the unadjusted interim variance estimate $S^2_{1,OS}$: upper confidence bound (dashed line), lower confidence bound (dotted line) and two-sided interval (solid line). Rows refer to the a priori assumed standard deviations σ0 determining the first stage sample size n1. The columns correspond to actual standard deviations. The x-axis in each graph denotes the true treatment effect δ; the y-axis shows the difference of actual to nominal coverage probability such that negative values indicate settings where the confidence bound is not valid.

The two-sided coverage probability (which is given by one minus the sum of the non-coverage probabilities of the lower and upper bounds) is not controlled over a large range of δ. However, for δ = 0 (i.e., under the null hypothesis), the inflation of the non-coverage probability, which corresponds to the Type I error rate of the corresponding test, is minor for small sample sizes (0.5 to 0.01 percentage points) and essentially controlled for larger sample sizes. (See Figure 15 of the Supplementary Material for a simulation study that aims to identify the σ where the Type I error rate of the original hypothesis test is maximized.) The good coverage under the null hypothesis is at first sight surprising, given that the variance estimate is negatively biased and the mean estimate is unbiased. We therefore computed the actual variance of the mean estimate in the simulation study. To compare the actual variance of the mean to the estimated variance, we computed for each simulated trial the variance estimate $2S^2/n$ as well as the actual variance $2\sigma^2/n$ of a design with fixed sample sizes n1, n2 (thus ignoring that n2 depends on the first stage sample). Both quantities were then averaged over the Monte Carlo samples. The results show that the true variance of the mean estimate of an adaptive design with blinded sample size reassessment is smaller than the average variance of a fixed sample design with the same sample sizes. The bias of the estimate of the variance of the mean estimate is close to zero around the null hypothesis. This holds both for designs using the adjusted and unadjusted interim variance estimates to reassess the sample size and gives some intuition why there is no relevant inflation of the non-coverage probability of confidence intervals under the null hypothesis, even though the variance estimate has a considerable negative bias (see Figures 5 and 6 of the Supplementary Material for results that show the variance of the mean estimate for designs that use the unadjusted and adjusted sample size rule).

While Figures 1 to 3 demonstrate the dependence of the bias and coverage probabilities on the true mean and standard deviation, in an actual trial these parameters are typically unknown. Therefore, we computed the maximum absolute bias of the mean and variance and the maximum difference between nominal and actual coverage probabilities for fixed first stage sample sizes over a range of values for the true mean and variance (see Figure 4). The optimization was performed in several steps based on simulations across an increasingly fine grid of true mean differences and standard deviations, using increasingly large numbers of up to $10^8$ simulation runs (see Section 2 of the Supplementary Material for computational details of this simulation).

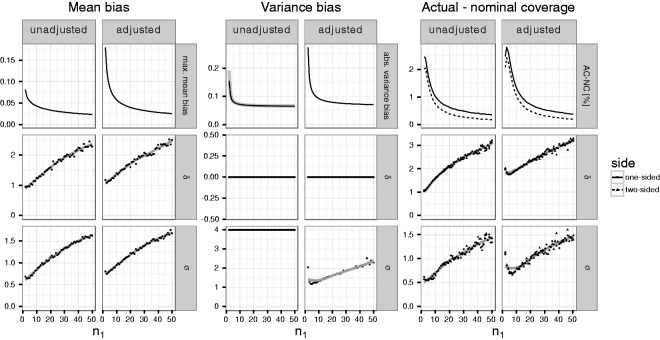

Figure 4.

Maximum absolute mean and variance bias as well as maximum negative difference between actual and nominal coverage probabilities (in percentage points) for given per group first stage sample sizes n1 between 2 and 50; values of δ between 0 and 4; σ between 0.5 and 4. Left columns show the results for the unadjusted sample size reassessment rule, right columns for the adjusted sample size reassessment rule. The first row shows the value for bias and non-coverage, the second shows the effect size δ and the third shows the standard deviation σ at which the specific value is attained. The gray line denotes a Loess smoothed estimate. For the maximum absolute variance bias, based on the unadjusted rule, the theoretical bound is shown using the slightly thicker gray line.

Overall, the maximum bias of the mean and variance estimates as well as the maximum difference between nominal and actual coverage probabilities using the adjusted sample size reassessment rule exceed those of the unadjusted rule. The maximum absolute bias of the mean (Columns 1 and 2 of Figure 4) drops from 0.18 (0.08) for trials with a per group first stage sample size of to 0.02 (0.02) for trials with a per group first stage sample size of if the adjusted (unadjusted) interim variance estimate is used to reassess the sample size. For increasing values of the first stage sample size, the maximum of the absolute mean bias is found for larger values of the true mean and variance.

For the unadjusted rule, the simulations suggest that the absolute variance bias is maximized for δ = 0 and $\sigma \to \infty$ (see Figure 2 and the additional simulations in Section 2 of the Supplementary Material). Thus, Column 3 of Figure 4 shows the absolute bias for δ = 0 and σ = 4 (the largest value of σ considered in the parameter grid). For the adjusted rule, Column 4 of Figure 4 shows the maximum absolute bias for δ = 0 and σ maximized over the grid. For the adjusted rule, the maximum bias is attained for a finite σ. The maximum absolute bias of the variance estimate drops from 0.27 (0.19) for trials with a per group first stage sample size of to 0.07 (0.06) for trials with a per group first stage sample size of if the adjusted (unadjusted) interim variance estimate is used to reassess the sample size. In the simulation, the maximum of the absolute variance bias under the unadjusted reassessment rule is practically identical to the theoretical bound derived in Theorem 4 (see the gray line in the first graph of Column 3 of Figure 4). If the adjusted rule is used, the maximum is attained for values of σ ranging from 1 to 2, increasing with the first stage sample size.

The maximum negative difference between actual and nominal coverage probabilities (“AC-NC,” in Columns 5 and 6 of Figure 4) of the one-sided confidence intervals ranges from about 2.8 (2.4) to 0.4 (0.3) percentage points if the adjusted (unadjusted) variance estimate is used to reassess the sample size. For the two-sided confidence intervals, differences between actual and nominal coverage are slightly lower ranging from 2.4 (2) to 0.2 (0.2) percentage points, respectively. For increasing first stage sample sizes, the maximum is attained for increasing values of both σ and δ where the former ranges between 1 and 3 and the latter between 0.5 and 1.5.

In Section 4 of the Supplementary Material, the corresponding results are given for restricted sample size rules that limit the maximum second stage sample size to twice the preplanned second stage sample size. We observe that for scenarios where , limiting the second stage sample size results in a reduction of the (absolute) bias and higher coverage probabilities. This, however, comes at the price of limited control in terms of power. When we observe that the (absolute) bias of the mean estimate (in line with the theoretical results in Theorem 2) is reduced by a factor . The bias of the variance estimate as well as the difference between actual and nominal coverage probabilities are less affected by restricting the second stage sample size. When σ is substantially smaller than σ0, bias and coverage probabilities under the restricted and unrestricted sample size reassessment rules are nearly identical.

5 Case study

We illustrate the estimation of treatment effects with a randomized placebo controlled trial to demonstrate the efficacy of the kava-kava special extract “WS® 1490” for the treatment of anxiety35 that was used to illustrate procedures for blinded sample size adjustment.17 The primary endpoint of the study was the change in the Hamilton Anxiety Scale (HAMA) between baseline and end of treatment. Assuming a mean difference between treatment and control of and a standard deviation of (i.e., a variance of ) results in a sample size of 34 patients per group to provide a power of 80%.

As in the previous case study,17 we assume that sample size reassessment based on blinded data is performed after 15 patients per group, that the sample size reassessment rules were applied with and and consider that the interim estimate of the standard deviation is . Consequently, we get $n_2^u = 5$ and $n_2^a = 1$, such that in the second stage five patients per group would have been recruited based on the unadjusted sample size reassessment rule $n_2^u$, and only one patient per group based on the adjusted rule $n_2^a$.

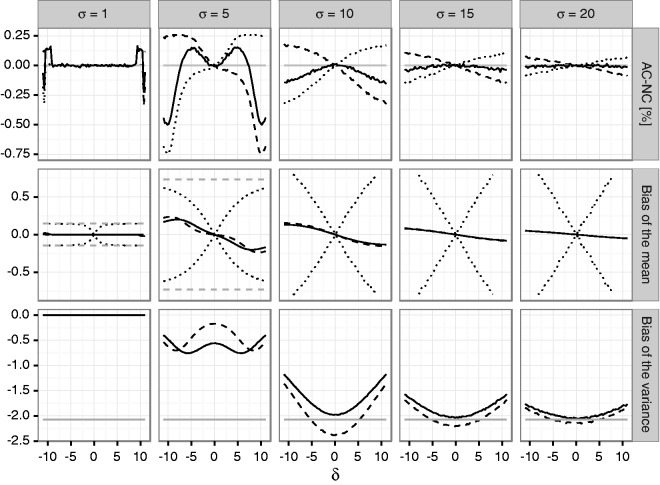

To estimate the bias of the mean and variance estimate as well as the coverage probabilities of confidence intervals, we performed a simulation study for true effect sizes δ ranging from −11 to 11 in steps of 0.05, and σ from 1 to 20 in steps of 1 (see Figure 5).

Figure 5.

Coverage probabilities and bias of mean and variance estimates for the case study. The first row of panels shows actual-nominal coverage probabilities (AC-NC) for the 97.5% upper (dashed line), lower (dotted line) and the 95% two-sided confidence intervals, for the unadjusted reassessment rule $n_2^u$. The second row shows the bias of the mean estimate if $n_2^u$ (solid line) or $n_2^a$ (dashed line) is used. The dotted line shows upper and lower bounds for the bias for general blinded sample size reassessment rules based on $S^2_{1,OS}$. The dashed gray line shows the bounds for the bias for a general unblinded sample size reassessment rule. The third row shows the bias of the variance estimate if either $n_2^u$ (solid line) or $n_2^a$ (dashed line) is used. The red line shows the theoretical bound for the bias given in Theorem 4.

5.1 Unadjusted sample size rule

For fixed δ, the absolute bias of the variance estimate is increasing in σ. For σ = 20, the bias of the variance becomes which is within simulation error of the theoretical lower bound. The absolute mean bias takes its maximum (minimum) 0.2 (−0.2) for the effect sizes , respectively, and a standard deviation of . The maximum inflation of the non-coverage probabilities of one-sided 97.5% and two-sided 95% confidence intervals is 0.7 and 0.5 percentage points, respectively. The actual coverage probabilities are smallest for large absolute values of the true mean difference and standard deviations of around 5.

5.2 Adjusted sample size rule

If the sample size reassessment is based on the adjusted variance estimate, the absolute bias of the variance and mean estimate will be even larger taking values up to 2.49 for the variance and up to 0.25 for the mean, respectively. The inflation of the non-coverage probabilities goes up to 0.9 percentage points for the one-sided confidence intervals and 0.6 percentage points for the two-sided intervals.

6 Discussion

We investigated the properties of point estimates and confidence intervals in adaptive two-stage clinical trials where the sample size is reassessed based on blinded interim variance estimates. Such adaptive designs are in accordance with current regulatory guidance that proposes blinded sample size reassessment procedures based on aggregated interim data.9–11 We showed that such blinded sample size reassessment may lead to biased effect size estimates, biased variance estimates and may have an impact on the coverage probability of confidence intervals. The extent of the biases depends on the specific sample size reassessment rule, the first stage sample size, the true effect size and the variance.

We showed that for the unadjusted and the adjusted sample size reassessment rules that aim to control the power at the pre-specified level, the bias of confidence intervals may be large for very small first stage sample sizes but is small otherwise. Under the null hypothesis, even for first stage sample sizes as low as 16, the confidence intervals do not exhibit a relevant inflation of the non-coverage probability. For positive effect sizes, inflations (even though minor) are observed also for somewhat larger sample sizes. This corresponds to previous findings that the type I error rate of superiority as well as non-inferiority tests is hardly affected by blinded sample size reassessment.17,28 In addition, we show that for positive treatment effects the lower confidence interval (which is often the most relevant because it gives a lower bound of the treatment effect) is strictly conservative in all considered simulation scenarios. The upper bound in contrast shows an inflation of the non-coverage probability, which is however only of relevant size if sample sizes are small.

An approach to obtain conservative confidence intervals even for trials with small first stage sample sizes is to apply methods proposed for flexible designs with unblinded interim analyses32,33,36–39 which are based on combination tests or the conditional error rate principle. Such approaches allow the construction of confidence intervals which control the coverage probabilities. However, they are based on test statistics that do not weigh outcomes from patients recruited in the first and second stage equally. A hybrid approach that strictly controls the coverage probability could be based on confidence intervals that are the union of the fixed sample confidence interval and the confidence interval obtained from the flexible design methodology. Similar approaches have been proposed for hypothesis testing in adaptive designs.40,41

We demonstrated that the treatment effect estimates are negatively (positively) biased under the adjusted and unadjusted sample size reassessment rules for all positive (negative) effect sizes. The bias decreases with the first stage sample size, but for worst case parameter constellations it remains noticeable also for larger sample sizes. In addition, for the worst case sample size reassessment rule based on blinded variance estimates, the bias may be substantial even for large first stage sample sizes: as the effect size increases, the maximum bias of the blinded sample size reassessment rule approaches the bias of unblinded sample size reassessment. As a consequence, to maintain the integrity of confirmatory clinical trials with blinded sample size reassessment, binding sample size adaptation rules must be pre-specified, and it is important to verify on a case-by-case basis that the reassessment rules used do not have a substantial impact on the properties of estimators.

Supplementary Material

Appendix 1: Proofs of the Theorems

Note that the blinded first stage variance estimate can be written as17

| (6) |

where denotes the unblinded first stage estimates of the mean treatment effect and the (unblinded) pooled variance estimate. Thus, every second stage sample size rule can be written as function of and which is symmetric in around 0. We can then write Below we switch between both parameterizations of n2.
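The decomposition of the blinded variance into an unblinded pooled variance and a squared mean difference can be checked numerically. The Python sketch below assumes equal first stage group sizes of `n1` patients per group and uses the standard sum-of-squares identity (total sum of squares equals within-group plus between-group sum of squares). The function names and the per-group convention for `n1` are assumptions made for illustration and may differ from the notation of the paper.

```python
import random
import statistics


def blinded_variance(x, y):
    """One-sample variance of the pooled first stage data, ignoring group labels."""
    return statistics.variance(x + y)


def decomposed_variance(x, y):
    """Same quantity rebuilt from the unblinded mean difference and pooled variance."""
    n1 = len(x)  # per-group first stage size; equal allocation is assumed
    if len(y) != n1:
        raise ValueError("equal group sizes are assumed")
    delta_hat = statistics.fmean(x) - statistics.fmean(y)
    s2_pooled = (statistics.variance(x) + statistics.variance(y)) / 2
    # (2*n1 - 1) * S_blinded^2 = (2*n1 - 2) * S_pooled^2 + (n1 / 2) * delta_hat^2
    return ((2 * n1 - 2) * s2_pooled + n1 / 2 * delta_hat ** 2) / (2 * n1 - 1)


rng = random.Random(1)
x = [rng.gauss(0.5, 1.0) for _ in range(10)]
y = [rng.gauss(0.0, 1.0) for _ in range(10)]
print(blinded_variance(x, y), decomposed_variance(x, y))
```

Because the blinded variance depends on the mean difference only through its square, any rule based on it is symmetric in the mean difference around 0, which is the property used in the proofs.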

Proof of Theorem 1

Proof —

(a) Let the true effect size δ be fixed. The bias of is given by , see Brannath et al.,33 which can be computed by

(7) where .

(b) and (c) The sign of the integral is determined by the sign of . Note that r(q, y) is symmetric in y around 0 and increasing for y > 0 since this is true for . Thus, the integral is zero for δ = 0, negative for positive δ and positive for negative δ. Also the symmetry of the absolute bias follows by the symmetry of in y around 0. (d) The first factor in equation (7) tends to 0, and the proof follows with the dominated convergence theorem. □

Proof of Theorem 2

Proof —

(a) By equation (6), the joint density of and is given by

for and and otherwise. Therefore, the conditional distribution of given is and (a) follows.

(b) Note that the bias is given by

| (8) |

| (9) |

For fixed , the term within the outer conditional expectation in equation (8) is deterministic and decreases in n2 if and increases if . Therefore, the sample size rule equation (4) maximizes the bias.

(c) We plug-in the worst case sample size rule equation (4) into equation (9) and obtain

Because is unbiased such that we get equation (5).

(d) Let denote a set of sample size reassessment rules that may depend on . Given a sample size rule , the bias can be written as

| (10) |

The set of integrands of the outer integral in equation (10) for second stage sample size rules in is uniformly integrable because the fraction and the -density is bounded (since ).

The unblinded sample size reassessment rule that maximizes the bias for given δ is33

Consider the blinded sample size rules

We show that for fixed σ and the second stage sample sizes of the blinded sample size rule , almost surely. Because, as shown above, the corresponding integrands in equation (10) are uniformly integrable, and it follows that also the respective biases converge and (d) follows.

Let . Then, there exists an , such that for all δ we have . Furthermore, there exists a such that for all we have and . Thus, for the event we have . However, on the set A we have : If then and on A also . Therefore, by equation (6)

and . On the other hand, if then and on A also . Therefore, by equation (6), because and and on A we have

where the last inequality follows because and . □

Proof of Theorem 3

Proof —

Let be the observed mean difference between the treatments in stage j = 1 and (if ) in stage j = 2.

We consider first the case that ; the following considerations are under the condition of and such that they lead to . Let be the observed mean difference between the stages for treatment . Let further be the (unblinded) pooled variance estimate of the second stage (for , we define ), be twice of the overall difference between the stages and . With this notation, we can split the total variance estimate S2 into parts

| (11) |

Obviously, for all n. As and are mutually independent (and consequently n and ), and as and are independent under the condition of n, is conditionally on n distributed as .

Under the condition of n, is distributed as . Further, under the condition of and has a non-central distribution with 1 degree of freedom and non-centrality parameter . Therefore, it follows

| (12) |

For cases and leading to , we can go directly from the first to the last row in the above derivation.

Using and , the bias can therefore be written as

The integral can again as in Theorem 1 be divided into and . By replacing t with −t in the first integral, it can be written as one integral over in the same way as in Theorem 1.

One can conclude that the bias is symmetric around 0 for δ since .

The asymptotic unbiasedness for follows from the integral expression with the dominated convergence theorem. □

Proof of Theorem 4

For the proof of Theorem 4, we show the following lemmas:

Lemma 1 —

Under the null hypothesis δ = 0

Proof —

is -distributed with degrees of freedom and by equation (6) can be written as a sum of independent -distributed random variables such that and . All are identically distributed, and all Qj appear in the same way in the condition . Therefore, the Qj are exchangeable in the conditional distribution without changing its value: we have for and

□

Lemma 2 —

Let c, d be arbitrary with . For , the following inequality is fulfilled

Proof —

Note that a proof for and for instead of is in Miller.8 To include the possibility of , we show

As and are monotonically decreasing functions of x, the second and third covariances above are ; moreover, as c > 0, the second covariance is negative.42 This completes the proof. □

Proof of Theorem 4 —

From equation (12) in the proof of Theorem 3 follows with Lemma 1 under the null hypothesis δ = 0

Since and as function of is a monotonically decreasing weight function, the bias of S2 is non-positive. Since there is no constant c such that , the bias is negative and the upper bound is shown. To show the lower bound, we calculate (writing and )

| (13) |

| (14) |

| (15) |

| (16) |

| (17) |

The last expected value in equation (14) is negative since as function of is monotonically decreasing; therefore, the total expression becomes smaller when this term is removed in equation (15). Further, we used in equation (15) the sample size formula . For the inequality in equation (16), we applied Lemma 2. For the equality in equation (17), we have used where X is a chi-squared distributed random variable with degrees of freedom. The expected value of can be derived, for example, from Johnson et al.,43 p. 421.

When , the last expected value in equation (14) converges to 0 which implies that the difference between the expressions in equations (14) and (15) converges to 0. Further, as the probability for converges to 1 in the case , also the difference between equations (15) and (16) converges to 0. Therefore, the lower bound becomes sharp when . □

We note that if the adjusted sample size rule is used instead of , we cannot proceed in a similar way from equation (15) to equation (16). Our simulation results in Figure 2 and in the lower panel of Figure 5 show that the bias for the adjusted sample size rule can be below the lower bound of Theorem 4.
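The behaviour of the final variance estimate under the null hypothesis can also be explored by simulation. The Python sketch below is an illustration under stated assumptions, not the authors' code: `n1` is the per-group first stage size, the unadjusted rule plugs the blinded one-sample variance into the z-test formula, the adjusted rule first subtracts `n1 / (2 * (2 * n1 - 1)) * delta0**2` (an assumed form of the adjustment), and `n2_min`, the one-sided level 0.025 and the power 0.8 are hypothetical defaults. The final estimate is the unblinded pooled variance over both stages.

```python
import math
import random
from statistics import NormalDist


def sum_of_squares(values):
    """Sum of squared deviations from the sample mean."""
    m = sum(values) / len(values)
    return sum((v - m) ** 2 for v in values)


def second_stage_size(x, y, delta0, adjusted, alpha=0.025, beta=0.2, n2_min=2):
    """Per-group second stage size based on blinded first stage data."""
    n1 = len(x)
    s2 = sum_of_squares(x + y) / (2 * n1 - 1)  # blinded one-sample variance
    if adjusted:
        # Assumed adjustment: remove the contribution of the planning effect delta0.
        s2 -= n1 / (2 * (2 * n1 - 1)) * delta0 ** 2
    z = NormalDist().inv_cdf(1 - alpha) + NormalDist().inv_cdf(1 - beta)
    n_total = math.ceil(2 * z ** 2 * s2 / delta0 ** 2)
    return max(n2_min, n_total - n1)


def variance_bias(adjusted, sigma=1.0, n1=10, delta0=1.0, reps=10000, seed=1):
    """Monte Carlo bias of the final pooled variance estimate under delta = 0."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(reps):
        x = [rng.gauss(0.0, sigma) for _ in range(n1)]
        y = [rng.gauss(0.0, sigma) for _ in range(n1)]
        n2 = second_stage_size(x, y, delta0, adjusted)
        x += [rng.gauss(0.0, sigma) for _ in range(n2)]
        y += [rng.gauss(0.0, sigma) for _ in range(n2)]
        n = n1 + n2
        s2_final = (sum_of_squares(x) + sum_of_squares(y)) / (2 * n - 2)
        total += s2_final - sigma ** 2
    return total / reps


if __name__ == "__main__":
    print("unadjusted:", round(variance_bias(adjusted=False), 4))
    print("adjusted:  ", round(variance_bias(adjusted=True), 4))
```

Comparing the two simulated values with the bounds of Theorem 4 requires enough replications for the Monte Carlo error to be small relative to the bias.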

Declaration of conflicting interests

The author(s) declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Funding

The author(s) disclosed receipt of the following financial support for the research, authorship, and/or publication of this article: Martin Posch and Franz Koenig were supported by Austrian Science Fund Grant P23167. Florian Klinglmueller was supported by Austrian Science Fund Grants Number P23167 and J3721.

Supplementary material

Supplementary figures and additional details on the simulation study are presented in an additional document, which is distributed with the paper as Supplementary Material.

The R code used to compute the results of the simulation study and the case study is available as an R package named blindConfidence, which can be obtained from GitHub or on request from the authors.

References

- 1. Stein C. A two-sample test for a linear hypothesis whose power is independent of the variance. Ann Math Stat 1945; 16: 243–258.

- 2. Wittes J, Brittain E. The role of internal pilot studies in increasing the efficiency of clinical trials. Stat Med 1990; 9: 65–72.

- 3. Betensky RA, Tierney C. An examination of methods for sample size recalculation during an experiment. Stat Med 1997; 16: 2587–2598.

- 4. Wittes J, Schabenberger O, Zucker D, et al. Internal pilot studies I: type I error rate of the naive t-test. Stat Med 1999; 18: 3481–3491.

- 5. Zucker DM, Wittes JT, Schabenberger O, et al. Internal pilot studies II: comparison of various procedures. Stat Med 1999; 18: 3493–3509.

- 6. Denne JS, Jennison C. Estimating the sample size for a t-test using an internal pilot. Stat Med 1999; 18: 1575–1585.

- 7. Proschan MA, Wittes J. An improved double sampling procedure based on the variance. Biometrics 2000; 56: 1183–1187.

- 8. Miller F. Variance estimation in clinical studies with interim sample size reestimation. Biometrics 2005; 61: 355–361.

- 9. EMEA. Reflection paper on methodological issues in confirmatory clinical trials planned with an adaptive design, London, www.ema.europa.eu/ema/pages/includes/document/open_document.jsp?webContentId=WC500003616 (2007, accessed 8 September 2016).

- 10. FDA, CDER. Guidance for industry: adaptive design clinical trials for drugs and biologics (Draft Guidance), Rockville: Food and Drug Administration, 2010.

- 11. FDA, CDRH. Draft guidance: adaptive designs for medical device clinical studies, Rockville: Food and Drug Administration, 2015.

- 12. Elsäßer A, Regnstrom J, Vetter T, et al. Adaptive clinical trial designs for European marketing authorization: a survey of scientific advice letters from the European Medicines Agency. Trials 2014; 15: 383–383.

- 13. Pritchett YL, Menon S, Marchenko O, et al. Sample size re-estimation designs in confirmatory clinical trials: current state, statistical considerations, and practical guidance. Stat Biopharm Res 2015; 7: 309–321.

- 14. Gould LA, Shih WJ. Sample size re-estimation without unblinding for normally distributed outcomes with unknown variance. Comm Stat Theor Meth 1992; 21: 2833–2853.

- 15. Govindarajulu Z. Robustness of sample size re-estimation procedure in clinical trials (arbitrary populations). Stat Med 2003; 22: 1819–1828.

- 16. Friede T, Kieser M. Blinded sample size re-estimation in superiority and noninferiority trials: bias versus variance in variance estimation. Pharmaceut Stat 2013; 12: 141–146.

- 17. Kieser M, Friede T. Simple procedures for blinded sample size adjustment that do not affect the type I error rate. Stat Med 2003; 22: 3571–3581.

- 18. Proschan MA. Two-stage sample size re-estimation based on a nuisance parameter: a review. J Biopharm Stat 2005; 15: 559–574.

- 19. Proschan MA, Wittes JT, Lan K. Statistical monitoring of clinical trials, New York: Springer, 2006.

- 20. Posch M, Proschan MA. Unplanned adaptations before breaking the blind. Stat Med 2012; 31: 4146–4153.

- 21. Proschan M, Glimm E, Posch M. Connections between permutation and t-tests: relevance to adaptive methods. Stat Med 2014; 33: 4734–4742.

- 22. Friede T, Kieser M. Sample size recalculation for binary data in internal pilot study designs. Pharmaceut Stat 2004; 3: 269–279.

- 23. Friede T, Schmidli H. Blinded sample size reestimation with count data: methods and applications in multiple sclerosis. Stat Med 2010; 29: 1145–1156.

- 24. Schneider S, Schmidli H, Friede T. Blinded and unblinded internal pilot study designs for clinical trials with count data. Biometrical J 2013; 55: 617–633.

- 25. Stucke K, Kieser M. Sample size calculations for noninferiority trials with Poisson distributed count data. Biometrical J 2013; 55: 203–216.

- 26. Wachtlin D, Kieser M. Blinded sample size recalculation in longitudinal clinical trials using generalized estimating equations. Ther Innov Regul Sci 2013; 47: 460–467.

- 27. Friede T, Miller F. Blinded continuous monitoring of nuisance parameters in clinical trials. J R Stat Soc Series C (Appl Stat) 2012; 61: 601–618.

- 28. Friede T, Kieser M. Blinded sample size reassessment in non-inferiority and equivalence trials. Stat Med 2003; 22: 995–1007.

- 29. Lu K. Distribution of the two-sample t-test statistic following blinded sample size re-estimation. Pharm Stat 2016; 15: 208–215.

- 30. Żebrowska M, Posch M, Magirr D. Maximum type I error rate inflation from sample size reassessment when investigators are blind to treatment labels. Stat Med 2015; 35: 1972–1984.

- 31. Bretz F, Koenig F, Brannath W, et al. Adaptive designs for confirmatory clinical trials. Stat Med 2009; 28: 1181–1217.

- 32. Brannath W, Mehta CR, Posch M. Exact confidence bounds following adaptive group sequential tests. Biometrics 2009; 65: 539–546.

- 33. Brannath W, König F, Bauer P. Estimation in flexible two stage designs. Stat Med 2006; 25: 3366–3381.

- 34. R Core Team. R: A language and environment for statistical computing. Vienna: R Foundation for Statistical Computing, www.R-project.org (2014, accessed 8 September 2016).

- 35. Malsch U, Kieser M. Efficacy of kava-kava in the treatment of non-psychotic anxiety, following pretreatment with benzodiazepines. Psychopharmacology 2001; 157: 277–283.

- 36. Brannath W, Posch M, Bauer P. Recursive combination tests. J Am Stat Assoc 2002; 97: 236–244.

- 37. Lawrence J, Hung H. Estimation and confidence intervals after adjusting the maximum information. Biometrical J 2003; 45: 143–152.

- 38. Mehta CR, Bauer P, Posch M, et al. Repeated confidence intervals for adaptive group sequential trials. Stat Med 2007; 26: 5422–5433.

- 39. Posch M, Koenig F, Branson M, et al. Testing and estimation in flexible group sequential designs with adaptive treatment selection. Stat Med 2005; 24: 3697–3714.

- 40. Posch M, Bauer P, Brannath W. Issues in designing flexible trials. Stat Med 2003; 22: 953–969.

- 41. Burman CF, Sonesson C. Are flexible designs sound? Biometrics 2006; 62: 664–669.

- 42. Behboodian J. Covariance inequality and its application. Int J Math Educ Sci Technol 1994; 25: 643–647.

- 43. Johnson NL, Kotz S, Balakrishnan N. Continuous univariate distributions. Vol. 1. Hoboken, NJ, USA: John Wiley & Sons, 1994.
